Abstract

Tools to monitor implementation progress could facilitate scale-up of effective treatments. Most treatment for depression, a common and disabling condition, is provided in primary care settings. Collaborative Care Management (CoCM) is an evidence-based model for treating common mental health conditions, including depression, in this setting; yet, it is not widely implemented. The Stages of Implementation Completion (SIC) was adapted for CoCM and piloted in eight rural primary care clinics serving adults challenged by low-income status. The CoCM-SIC accurately assessed implementation effectiveness and detected site variations in performance, suggesting key implementation activities to aid future scale-ups of CoCM for diverse populations.

Keywords: Collaborative Care, CoCM, depression, adaptation, implementation, SIC

1. Introduction

Great advances have been made in the field of Implementation Science over the last decade to bridge the gap between research and practice. Even so, significant challenges continue to prevent the most effective and efficient clinical interventions from reaching the people who need them (http://implementationscience.biomedcentral.com/about). This is particularly true for populations suffering from mental health conditions, such as depression, for which evidence-based treatments are available but continue to be underutilized. Translation of interventions from science to bedside is hampered by the dearth of methods available to support the implementation of existing, evidence-based treatments.

A significant gap in the field of implementation science is the lack of measurement tools to monitor and assess implementation processes and achievement of key milestones (Saldana, 2014). Recently, the Stages of Implementation Completion (SIC; described in detail below) was developed to address this gap, along with an adaptation process to tailor the tool to specific intervention approaches (Saldana, 2014). This paper describes this adaptation process, within the context of an implementation pilot of Collaborative Care (or Collaborative Care Management; CoCM) for depression in eight rural primary care clinics serving adults with low-income status. The utility of the SIC for highlighting variations in implementation approaches, and the impact on implementation outcomes, will be described.

1.1. Access to Treatment for Depression

Worldwide, depression is the leading cause of years lived with disability (Whiteford et al., 2013). On average 10% of adults across the United States grapple with depression at any given time. The majority of depression care in the U.S. is delivered in primary care settings, which have been called the “de facto U.S. mental health system” (Regier et al., 1993; Wang et al., 2006), particularly for patients from low-income and rural settings who face significant access barriers to both primary care and mental health treatment, and who are less likely to receive evidence-based treatments when they do receive care (Wang et al., 2005; Olfson et al., 2002).

Poor quality of identification and treatment of depression in vulnerable populations is well documented. Primary care providers (PCPs), especially those practicing in rural and otherwise underserved areas, report serious lack of access to mental health specialists (Cunningham, 2009). PCPs lack the support necessary to actively track treatment outcomes, make proactive adjustments when symptoms are not improving, offer non-pharmacologic treatments, and problem-solve when first and second line treatments fail. Consequently, only 20% of patients receiving usual primary care for depression show substantial clinical improvement after one year of treatment (Rush et al., 2004; Unützer et al., 2002). Similarly, patients referred for psychotherapy often receive inadequate trials and/or ineffective therapies resulting in treatment response as low as 20% in usual specialty mental health care (Hansen, Lambert, & Forman, 2002).

1.1.a. Evidence for Collaborative Care Management (CoCM)

Over the past 20 years, more than 80 randomized controlled trials have established a robust evidence base for a solution to these problems with usual care (Gilbody, Bower, Fletcher, et al., 2006; Gilbody, Bower, & Whitty, 2006; Katon, Unützer, Wells, & Jones, 2010). Collaborative Care, more recently also called Collaborative Care Management (CoCM), applies the principles of effective chronic disease management to common mental health conditions like depression and anxiety, including measurement-based care, treatment-to-target, and stepped care (Von Korff & Tiemens, 2000; Trivedi, 2009). Adjusting the treatment plan based on whether or not symptoms are improving is one of the most important components of CoCM.

In the largest depression treatment trial to date, patients receiving CoCM were more than twice as likely compared with those in usual care to experience substantial improvement in depression symptoms over 12 months (Unützer et al., 2002). Both patients and primary care providers strongly endorsed CoCM as better than usual care (Levine et al., 2005). CoCM also was significantly more effective than usual care for all patients, including those who identified as ethnic minority (Areán et al., 2005) or who came from low income backgrounds (Areán, Gum, Tang, & Unützer, 2007). Because CoCM benefits populations that are challenged by socioeconomic vulnerability, it presents an opportunity to reduce disparities in mental health care delivery and outcomes (Wells et al., 2002).

Despite strong and consistent evidence for the significantly superior effectiveness of CoCM, implementation success is highly variable in scale-up efforts (Solberg, 2014; Solberg et al., 2013; Bauer, Azzone, & Goldman, 2011). Anecdotal evidence from coaches suggests that prior to 2017, clinics failed primarily due to lack of leadership, lack of psychiatrist commitment, and insufficient reimbursement or financial challenges (Solberg et al., 2013). The latter barrier has since been substantially reduced with the creation of CoCM billing codes by the Centers for Medicare and Medicaid Services. Despite reduction of reimbursement barriers, success of CoCM implementation remains highly variable (Solberg, Crain, Maciosek & Unützer, 2015; Rossom et al., 2017), and little is known about the key indicators for implementation success or failure.

1.1.b. Guiding Implementation of CoCM

In an effort to optimize successful implementation of CoCM, including fidelity to key components proven to drive improved patient outcomes (Bao, Druss, Jung, Chan, & Unützer, 2016; Coventry et al., 2014), the developers of CoCM created a purveyor organization to provide training, technical assistance, and support to implementing clinics: the AIMS Center (Advancing Integrated Mental Health Solutions; http://uwaims.org) in the Department of Psychiatry and Behavioral Sciences at the University of Washington.

Many studies across the US and worldwide have confirmed the evidence base for CoCM in diverse patient populations and clinical settings and for a broader array of common behavioral health conditions (Blasinsky, Goldman, and Unützer, 2006; Vannoy et al., 2011; Huang et al., 2012; Bauer, Chan, Huang, Vannoy, & Unützer, 2013). These health services efficacy and effectiveness studies (with a focus on patient outcomes rather than implementation), in combination with accumulated experience with implementation practice, have informed an evolved and standardized approach to implementation by the AIMS Center. For example, because of the need for substantial CoCM team building, clinics now participate in 3–6 months of pre-launch activities (based on the progress of each site) spanning two stages, followed by a 2-day intensive in-person training, based on adult learning principles. General practice facilitators are used to support health center implementation team formation and dynamics (Grumbach, Bainbridge, and Bodenheimer, 2012). This AIMS Center approach to supporting implementation of CoCM is accepted and funded by diverse payers, including state and federal agencies, private foundations, and individual healthcare delivery organizations. To date, CoCM implementations have focused on settings serving a variety of patients including general adult, geriatric, adolescent, and perinatal populations and addressing a range of behavioral health conditions including depression, anxiety, post-traumatic stress disorder, and substance abuse.

1.2. The Stages of Implementation Completion

The Stages of Implementation Completion (SIC; Saldana, Chamberlain, Wang, & Brown, 2011) is a measure of implementation process and milestones first developed as part of a randomized controlled implementation trial (Chamberlain, Saldana, Brown, & Leve, 2010). That trial compared two implementation strategies for the adoption of an evidence-based practice (EBP) for youth referred to out-of-home care with severe behavior problems, by non-early adopting counties (Brown et al., 2014). Thus, the measure was originally developed to be flexible enough to assess varying implementation strategies, yet standardized so as to capture comparable implementation process information across strategies.

As shown in Table 1, the SIC defines the completion of implementation activities across eight stages (Engagement through Competency) that span three phases of implementation: pre-implementation, implementation, and sustainability. The goal of the measure is to provide a low-burden observation tool for developer/purveyor organizations (e.g., the AIMS Center) to record the progress of clinics/sites that are attempting to adopt an EBP. The SIC is a date-driven measure: each item response is the date on which an implementation activity was completed by a newly adopting site (as rated by the EBP developer or purveyor). The SIC yields three scores: (1) Duration: time taken for completion of implementation activities; (2) Proportion: percentage of activities completed; and (3) Final Stage: the furthest point in the implementation process achieved. Originally developed as a research tool, the SIC successfully predicts implementation outcomes, including successful program start-up (Saldana, Chamberlain, Wang, & Brown, 2011) and implementation costs and resources (COINS; Saldana, Chamberlain, Bradford, Campbell, & Landsverk, 2013).

Table 1.

SIC Stages with Example Activities

| Stages | Activities |
|---|---|
| 1. Engagement | Date Interest Indicated |
| 2. Consideration of Feasibility | Date First Feasibility Call Conducted |
| | Date of Feasibility Site Visit |
| 3. Readiness Planning | Date Reviewed Site Requirements |
| | Date Referral Process Approved |
| 4. Staff Hired and Trained | Date of First Staff Hired/Assigned |
| | Date of Supervisor Training |
| 5. Fidelity Monitoring in Place | Date Team Registered on Portal |
| | Date Recording Equipment Tested |
| 6. Services and Consultation Begin | Date of First Case Screened |
| | Date of First Client Session |
| 7. Ongoing Services, Consultation, Fidelity, Feedback | Date First Case Submitted for Full Review |
| 8. Competency (Certification) | Date of Certification Approval |

Note. SIC = Stages of Implementation Completion.

Recently, to fill the gap of limited tools available to assess implementation process, the SIC has been adapted for a range of practices including mental health, school prevention programs, primary care interventions, substance abuse treatments, and large state system initiatives. SIC results repeatedly demonstrate that when there is variability among sites attempting to implement (i.e., when there is not a prescribed timing of implementation activities), implementation behavior predicts successful program start-up and the achievement of competency in program delivery (Saldana, Schaper, Campbell, & Chapman, 2015). Thus, using the SIC to assess and understand the implementation process that optimizes the chance of success in delivering Collaborative Care was a logical step toward examining how the CoCM implementation process might be enhanced. The methods used to adapt the SIC into the CoCM-SIC are described next.

2. Method

The SIC can be tailored to meet the needs of particular EBPs, with implementation activities adapted to describe the steps necessary to achieve sustainable adoption of the practice (Saldana, 2014). To evaluate generalizability of SIC validity, reliability, and predictability, Saldana and colleagues defined an adaptation protocol that was utilized to operationalize the AIMS Center implementation strategy to yield a CoCM version of the SIC. This tool then was pilot tested in eight rural primary care clinics implementing CoCM with patients from low-income backgrounds who were experiencing depression.

SIC Adaptation: Operationalizing the AIMS Center Implementation Strategy

Following the standard protocol for SIC adaptation, the first author met with the AIMS Center purveyor team for an intensive day-long meeting. The meeting involved all key AIMS Center staff who provide training, coaching, and implementation assistance to organizations attempting to adopt CoCM.

Defining the implementation process

As shown in Table 1, the implementation process includes a range of activities from Engagement with the EBP purveyor (e.g., the AIMS Center) to establishment of the Competency necessary to sustain the program. Through a series of qualitative prompts (e.g., “define the process by which sites first contact the AIMS Center”; “describe the process new clinics go through to assess if the EBP is a good fit”), the AIMS team described the step-by-step process sites undergo to successfully implement CoCM. During this facilitated discussion, purveyor staff described variations in implementation behavior that they had experienced and reached consensus regarding the activities that are essential for quality implementation (i.e., those that are always recommended or that they agree should be recommended). Attention was given to identifying implementation activities that always must be completed (e.g., training), that are encouraged but not always completed (e.g., setting program goals), and that are not required to be completed (e.g., supplemental training). Finally, the AIMS team came to consensus on the criteria to be used to determine when defined activities should be considered “completed” (e.g., which stakeholders are considered “key” for participation).

One of the challenges of the SIC adaptation process is to help developer/purveyor groups identify the full range of implementation activities while not becoming too focused on overly “micro activities,” thereby limiting the ability to capture the associated data. For example, although there might be multiple steps that are required to assess a clinic’s financial readiness to adopt a new program, the total financial analysis process might be captured using a cost calculator. In this example, the date that the cost calculator is completed would be recorded. Thus, the goal is to concretely define the implementation process with enough precision to distinguish different implementation activities at a consistently observable level, but with low burden on the data collector (typically the developer/purveyor).

Missing data designations

Adding to the complexity of defining an implementation process is understanding why implementation activities are not completed by a newly adopting site. The SIC includes four missing data type designations: (1) the activity was known to be completed, but the date on which it was completed is unknown; (2) the activity was completed, but for the adoption of a different practice; (3) the activity is not applicable to the site’s implementation; or (4) the activity was truly not completed. As part of the adaptation process, it is necessary to clearly define when and how to assess missing data. Consistent treatment of missing data is critical for accurate SIC scoring.
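As an illustration only, the four designations can be written as a small enumeration. The names below are hypothetical labels chosen for this sketch and are not the codes used by the SIC web tool; the rule that only truly not completed activities count against a site's score is taken from the scoring description in this paper.

```python
from enum import Enum


class MissingType(Enum):
    """Hypothetical labels for the four SIC missing data designations."""
    DATE_UNKNOWN = 1         # completed, but the completion date is unknown
    COMPLETED_OTHER_EBP = 2  # completed, but for adoption of a different practice
    NOT_APPLICABLE = 3       # not applicable to this site's implementation
    NOT_COMPLETED = 4        # truly not completed


def counts_against_score(designation: MissingType) -> bool:
    """Return True only for activities that were truly not completed."""
    return designation is MissingType.NOT_COMPLETED
```

Keeping the designations distinct, rather than collapsing them into a single "missing" flag, is what later allows a site that skipped an unnecessary activity to be separated from one that omitted a needed activity.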

Defining contextual factors

The SIC adaptation process also involves defining site demographics expected to influence variation in the implementation process. Collaborative Care is implemented in a wide array of primary care settings, and the AIMS Center was interested in using the SIC to assess a diverse range of scale-up efforts. To facilitate this, an extensive list of demographics was developed (Table 2), and a coding scheme and data source for each was created.

Table 2.

Contextual Site Demographic Characteristics

| Characteristic |
|---|
| Population (a) |
| Site Type (b) |
| Number of Unique Patients Per Year |
| Care Manager(s) FTE |
| Consulting Psychiatrist(s) FTE |
| Primary Care Provider(s) FTE |
| Organization contacted AIMS Center directly or through a third party intermediary? |
| Is site receiving grant funding for this initiative? |
| Medically Underserved (MUA)? |
| Healthcare Provider Shortage area - Primary Care? |
| Healthcare Provider Shortage area - Behavioral Health? |
| Pre-existing behavioral health program? |
| If yes: What was the pre-existing BH staffing FTE? |
| What is the current BH staffing FTE? |
| Previous experience implementing a quality improvement effort? |
| If yes: Was it related to mental health? |
| Agreed to consider implementation? |
| If no: Reason declined? |

Note. FTE = full-time equivalent.

(a) < 25,000; 25,000 – 99,999; 100,000 – 250,000; > 250,000

(b) Federally Qualified Health Center/Community Clinic; Other Primary Care Clinic; Specialty Behavioral Health; Community Based Organization

CoCM-SIC Refinement

Following the day-long, in-person meeting with the AIMS Center, further iterative adaptation occurred over multiple phone calls, email exchanges, and document modifications. Once consensus was obtained among key AIMS Center team members, the adapted SIC was completed using retrospective data from a clinical site that previously adopted CoCM. Through this retrospective data collection, additional modifications were identified as being necessary to accurately capture all of the implementation activities. Once this final step was concluded and the team had confidence in the accuracy of the CoCM-SIC, the measure was programmed into the web-based SIC data collection tool (https://sic.oslc.org/SIC/auth/login). The final tool has 89 implementation activities across the eight SIC Stages, and 18 site demographic characteristics.

Pilot Testing the CoCM-SIC

The AIMS Center obtained funding to pilot test the CoCM-SIC in eight rural primary care clinics serving patients with challenges of low-income status, in four states (Alaska, Washington, Montana, Wyoming). These clinics already had been selected to participate in a grant-funded initiative to implement CoCM for depression with training and implementation support provided by the AIMS Center. This allowed for piloting the CoCM-SIC with eight independent, non-early adopting, primary care clinics recruited to implement CoCM. Primary pilot questions included an assessment of: (a) the CoCM-SIC’s potential to accurately assess implementation behavior of rural primary care clinics; (b) the proportion of activities completed by sites; and (c) the duration necessary to complete the implementation phases.

Participants

Data collected from eight primary care clinics were used in the CoCM-SIC pilot. All eight sites are Federally Qualified Health Centers (FQHCs) located in areas defined as “medically underserved” and/or “health provider shortage areas” by the Health Resources and Services Administration (HRSA). These clinics serve communities with populations between 25,000 and 250,000, and the average number of unique patients served annually per clinic was 11,537.

Sites implemented in two cohorts. Cohort 1 included five clinics and provided initial testing and refinement of the adapted SIC. Cohort 2 included three clinics and provided preliminary reliability support for the CoCM-SIC.

Data collection

Data were gathered and entered into the SIC website by an AIMS Center staff member. Implementation activities were observed for completion and, when appropriate, date-stamped documents were submitted to demonstrate completion of questionnaires. As sites completed implementation activities, the dates on which the activities were completed were recorded and entered.

Scoring

SIC data are scored across implementation phases. As noted previously, Stage 8 defines activities toward establishing competency to begin sustainability (i.e., phase 3). SIC scoring currently is limited to assessment of pre-implementation and implementation phases (future research will examine the more complex sustainability phase).

Phase Proportion is scored by calculating the number of activities completed within a phase compared to the total number of activities available to complete in the phase. Phase Duration is calculated by comparing the dates of the first and last activities completed within each phase. Because implementation is non-linear in nature, the first and last activities observed might not be the first and last activities listed within each stage on the SIC. The Final Stage score documents the Stage in which each site discontinues or achieves competency. Missing data types are factored into scoring such that only activities truly not completed are counted against the score. Given the limited sample size and pilot nature of this measurement development project, analyses are descriptive in nature including average SIC scores.
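The scoring rules above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the scoring code of the SIC web tool: the `Activity` record and the status labels are hypothetical, not-applicable activities are assumed to be excluded from the denominator, and the other two completed-type missing designations are assumed to count as completed, so that only truly not completed activities lower the score. Final Stage is approximated here as the furthest stage with any completed activity.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Hypothetical status labels; the SIC tool's actual codes are not given in the text.
COMPLETED = "completed"              # completed with a known date
DATE_UNKNOWN = "date_unknown"        # completed, date not known
COMPLETED_OTHER = "completed_other"  # completed for a different practice
NOT_APPLICABLE = "not_applicable"
NOT_COMPLETED = "not_completed"      # truly not completed


@dataclass
class Activity:
    stage: int                       # SIC stage, 1 through 8
    status: str
    completed_on: Optional[date] = None


def phase_proportion(activities):
    """Completed activities divided by activities available to complete.

    Assumption: not-applicable items leave the denominator, and only
    truly-not-completed items are excluded from the numerator.
    """
    available = [a for a in activities if a.status != NOT_APPLICABLE]
    if not available:
        return None
    done = [a for a in available if a.status != NOT_COMPLETED]
    return len(done) / len(available)


def phase_duration_days(activities):
    """Days between the earliest and latest dated activities in a phase.

    Uses min and max dates, not list order, because implementation is non-linear.
    """
    dates = [a.completed_on for a in activities if a.completed_on is not None]
    if not dates:
        return None
    return (max(dates) - min(dates)).days


def final_stage(activities):
    """Furthest stage with at least one completed activity (an assumption)."""
    reached = [a.stage for a in activities
               if a.status in (COMPLETED, DATE_UNKNOWN, COMPLETED_OTHER)]
    return max(reached) if reached else None


# Made-up example data for one site's pre-implementation phase.
pre_implementation = [
    Activity(1, COMPLETED, date(2015, 1, 5)),
    Activity(2, COMPLETED, date(2015, 3, 1)),
    Activity(2, NOT_APPLICABLE),
    Activity(3, NOT_COMPLETED),
    Activity(3, DATE_UNKNOWN),
]
```

With this made-up site, one of the five activities is not applicable, so three of the four available activities count as completed, and the duration is measured between the two dated activities.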

Results

Overall results suggest that the SIC adaptation for CoCM yielded a face-valid observational measure of the AIMS Center implementation strategy for CoCM. AIMS Center staff reported no difficulty mapping the processes in which they were involved with the organizations onto the measure. Due to the strong operationalization of the implementation process, AIMS Center staff were able to easily collect the necessary SIC data based on observation of sites through the natural purveyor–site technical assistance relationship. Data collectors self-reported minimal difficulty identifying, defining, and entering appropriate data into the web-based data entry site. This suggests the CoCM-SIC is a low-burden, practical tool. Outcomes are presented first for Cohort 1, followed by reliability outcomes from Cohort 2.

Cohort 1

One site discontinued, and the other four reached sustainment. Although the site that discontinued did successfully launch a program and entered Stage 8, the program discontinued during this stage, prior to achieving competency. The SIC data, as well as independent purveyor report, suggested that this site did not engage in the implementation process to the same degree as other sites. Although the clinic reported that its decision to discontinue was based on lack of continued external funding, observation of its implementation behavior as assessed by the SIC identified clearly defined implementation behaviors that were missing throughout the process: (1) selection of a program champion; (2) on-site coaching; (3) achievement of recommended caseload; (4) completion of a financial sustainability plan; and (5) refresher training participation.

SIC Proportion

The data obtained with the CoCM-SIC are consistent with other EBP implementations guided by developer/purveyor organizations. On average, sites completed a high proportion of implementation activities in both pre-implementation and implementation phases (87% and 97%, respectively). It is noteworthy that data collection for the five sites utilized all four of the missing data designations, with only those activities that were truly not completed counted as missing. In so doing, it was possible to uncover that the one discontinued site had a higher number of implementation activities truly not completed, as opposed to the other possible reasons for missing data, than the other sites (Table 3).

Table 3.

Incidence of Missing Data Types for Eight Rural Clinics Implementing Collaborative Care (CoCM), as Assessed by the Stages of Implementation Completion (SIC)

| Clinic | Date Missing but Confirmed as Completed | Completed with Previous Implementation | Not Applicable | Truly Not Completed |
|---|---|---|---|---|
| A | 6 | 0 | 2 | 1 |
| B | 7 | 0 | 1 | 9 |
| C | 11 | 0 | 3 | 1 |
| D | 7 | 10 | 5 | 1 |
| E | 12 | 10 | 5 | 2 |
| F | 7 | 0 | 3 | 2 |
| G | 6 | 0 | 5 | 3 |
| H | 7 | 0 | 3 | 1 |

Note. Clinics A, C-F, and H achieved sustainment; Clinic G did not achieve competency by the end of the study, but was still active; Clinic B discontinued during the competency stage.
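A short tabulation of the Table 3 counts illustrates why the missing data type matters. The sketch below only re-enters the published counts; the variable and function names are hypothetical. It compares the clinic with the most activities truly not completed against the clinic with the most missing entries of any type.

```python
# Counts transcribed from Table 3, in column order: date missing but completed,
# completed with previous implementation, not applicable, truly not completed.
TABLE_3 = {
    "A": (6, 0, 2, 1),
    "B": (7, 0, 1, 9),
    "C": (11, 0, 3, 1),
    "D": (7, 10, 5, 1),
    "E": (12, 10, 5, 2),
    "F": (7, 0, 3, 2),
    "G": (6, 0, 5, 3),
    "H": (7, 0, 3, 1),
}


def truly_not_completed(counts):
    """Last column of Table 3."""
    return counts[3]


def total_missing(counts):
    """All missing entries, regardless of the reason."""
    return sum(counts)


most_omissions = max(TABLE_3, key=lambda c: truly_not_completed(TABLE_3[c]))
most_missing_overall = max(TABLE_3, key=lambda c: total_missing(TABLE_3[c]))
```

Ranking by true omissions singles out Clinic B, the site that discontinued, whereas ranking by undifferentiated missing entries would instead single out Clinic E, which achieved sustainment.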

SIC Duration

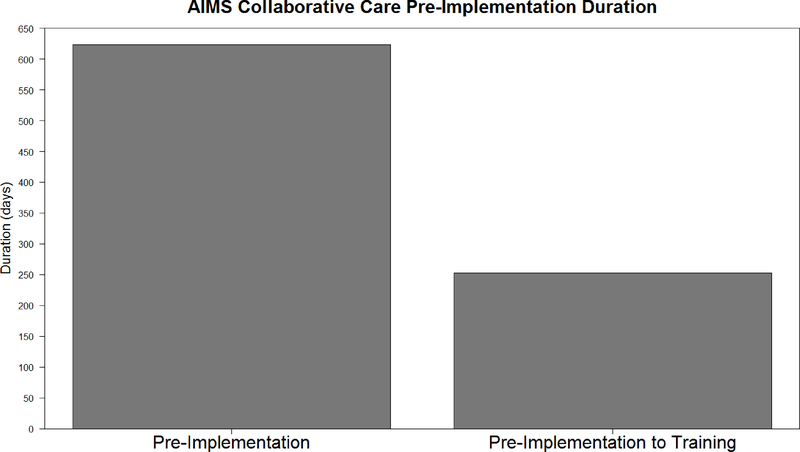

The average duration for pre-implementation was 623 days, with no variation among sites, whereas the average duration for the implementation phase was 768 days, with a range between 706 and 1,015 days. The lack of variation and exceedingly long pre-implementation duration compared to durations of other EBPs (Saldana, Schaper, Campbell, & Chapman, 2015) raised questions. In discussion with the AIMS Center, it was discovered that the operationalization process used to adapt the SIC to describe the implementation process (i.e., a necessary step in the SIC adaptation process) included newly defined activities as well as time lags dictated by the grant-making portion of the initiative that were not part of routine pre-implementation processes. When these artificially imposed time lags were removed, the average pre-implementation duration was reduced to 250 days (Figure 1), which is consistent with pre-implementation durations of other EBPs. Thus, SIC scores accurately reflected the impact of changes made to the implementation process by the grant-making portion of the initiative.

Figure 1.

Comparison of newly adopting sites’ pre-implementation duration with and without grant imposed time-lags.

Cohort 2

Three additional rural clinics implemented CoCM with the support of the AIMS Center. These clinics implemented under the approach refined with Cohort 1. Two of the three entered into sustainment by the end of the study period; one remained active but not yet competently implementing.

Unlike Cohort 1, the second cohort implemented at a pace and rate of activity completion consistent with other EBPs. Across the three clinics, the average pre-implementation duration was 315 days to complete an average of 90% of activities. Average implementation duration was 782 days to complete an average of 95% of activities. Observing patterns of implementation behavior among the three clinics, the only distinguishing feature identified for the not-yet-competent clinic was the inability to achieve the recommended caseload. Thus, Cohort 2 demonstrated the reliability of the CoCM-SIC in assessing the CoCM implementation process. Successful clinics followed patterns similar to those of successful Cohort 1 clinics. The clinic that was not a failure, but also not yet competent, was assessed as implementing with behavior similar to successful clinics, but with one key omission that prevented it from moving into sustainment (caseload size). Thus, the SIC successfully distinguished varying levels of implementation success.

Discussion

The SIC adaptation process resulted in successful measure development. Although the resulting CoCM-SIC was detailed enough to accurately capture the complex and recursive implementation process in a pilot of eight rural primary care clinics, the AIMS Center reported low burden in using the tool. The ability to observe implementation behavior and assess reasons for missing steps in the implementation process allowed for improved monitoring by the purveyor organization and also allowed for better understanding of why one of the clinics did not sustain the implementation and why another was not competently prepared for sustainment.

Outcomes suggest the CoCM-SIC is a reliable tool, with strong face validity, for assessing the CoCM implementation process. Given the limited pilot sample, predictive validity was not assessed; however, the consistency with which CoCM-SIC scores compare to other EBPs that have been measured with the SIC, and the information available from the discontinued and not yet competent site, suggest the CoCM-SIC has the potential to accurately predict successful implementation.

The Value of Understanding Missingness

As noted, one of the clinics discontinued implementation during Stage 8. The SIC tool assesses the reason for missing data to provide insights regarding why certain implementation activities were not completed. Although all sites had some missing data for various reasons (e.g., an activity was not applicable to their implementation, an activity had previously been completed as part of a different program) the discontinued site truly did not complete several implementation activities. Two of these activities are key to creating sustainment: (1) achievement of recommended caseload sizes and (2) completion of a financial sustainability plan. Both of these activities speak to the organization’s potential to obtain a return on investment. Subsequently, this organization’s reported reason for discontinuing was lack of resources to continue once their initial funding ran out. If the CoCM-SIC had not captured the reason for missing data, it would not be possible to disentangle the differences between a site that failed to complete an implementation activity because it was not necessary (and thus they were behaving efficiently) versus one that failed to complete an implementation activity because they truly did not complete it. Such nuances are critical to understanding successful implementation behavior.

Adaptation as an Opportunity for Definition

An unexpected consequence of the adaptation process was the opportunity it provided for the AIMS team to revisit their implementation strategy. In so doing, they more clearly defined amongst themselves expectations for a quality implementation. As a result, existing technical assistance protocols were enhanced. It is expected that the standard inclusion of these implementation activities will bolster newly adopting sites’ preparedness for a successful implementation. Thus, a secondary benefit of the adaptation process was improving the AIMS Center strategy and, subsequently, the implementation process for future CoCM scale-ups. This development work was used for preparation of a new proposal focusing on studying strategies to support the implementation of Collaborative Care for perinatal depression by members of the research team.

Conclusion

Depression and other behavioral health conditions are common and significantly impact health and functioning, especially among patients from low-income settings. Collaborative Care is an evidence-based approach that increases both access and quality in primary care, but there is wide variation in success of implementation in scale-up efforts. The recently announced availability of CMS (Centers for Medicare and Medicaid Services) payment codes for CoCM services increases the urgency for developing a tool to support wide scale effective implementation.

This pilot, carried out in primary care settings serving vulnerable populations, demonstrated the potential for the SIC to help assess the implementation process for Collaborative Care. The overarching goal of understanding and defining implementation activities necessary to yield a successful and sustainable CoCM program within rural primary care settings serving patients from low-income settings was accomplished. As developers of evidence-based practices gain a stronger understanding of the steps necessary for sites to adopt the interventions with fidelity, with an eye toward sustainment, a greater number of individuals will have access to quality care. Measures like the SIC have the potential to facilitate this progression in the field, thereby realizing a significant public health impact.

Acknowledgments

Compliance with Ethical Standards

Funding: The preparation of this article was supported in part by the Implementation Research Institute (IRI), at the George Warren Brown School of Social Work, Washington University in St. Louis; through an award from the National Institute of Mental Health (R25 MH080916–01A2) and the Department of Veterans Affairs, Health Services Research & Development Service, Quality Enhancement Research Initiative (QUERI); funding from the National Institute of Mental Health (R01 MH097748, Saldana; R01 MH108548, Bennett); and through an award from The John A. Hartford Foundation (2012–0213, Unützer) that includes support from the Corporation for National and Community Service Social Innovation Fund.

Conflict of interest: The authors declare that they have no conflict of interest.

Ethical approval: This article does not contain any studies with human participants performed by any of the authors.

Contributor Information

Lisa Saldana, Oregon Social Learning Center.

Ian Bennett, University of Washington.

Diane Powers, University of Washington.

Mindy Vredevoogd, University of Washington.

Tess Grover, University of Washington.

Holle Schaper, Oregon Social Learning Center.

Mark Campbell, Oregon Social Learning Center.

References

- Areán PA, Ayalon L, Hunkeler E, Lin EH, Tang L, Harpole L, ... & Unützer J (2005). Improving depression care for older, minority patients in primary care. Medical Care, 43(4), 381–90. doi: 10.1097/01.mlr.0000156852.09920.b1

- Areán PA, Gum AM, Tang L, & Unützer J (2007). Service use and outcomes among elderly persons with low incomes being treated for depression. Psychiatric Services, 58(8), 1057–64. doi: 10.1176/ps.2007.58.8.1057

- Bao Y, Druss BG, Jung HY, Chan YF, & Unützer J (2016). Unpacking collaborative care for depression: Examining two essential tasks for implementation. Psychiatric Services, 67(4), 418–424. doi: 10.1176/appi.ps.201400577

- Bauer AM, Azzone V, & Goldman HH (2011). Implementation of collaborative depression management at community-based primary care clinics: An evaluation. Psychiatric Services, 62, 1047–1053. doi: 10.1176/ps.62.9.pss6209_1047

- Bauer AM, Chan YF, Huang H, Vannoy S, & Unützer J (2013). Characteristics, management, and depression outcomes of primary care patients who endorse thoughts of death or suicide on the PHQ-9. Journal of General Internal Medicine, 28, 363–369. doi: 10.1007/s11606-012-2194-2

- Blasinsky M, Goldman HH, & Unützer J (2006). Project IMPACT: A report on barriers and facilitators to sustainability. Administration and Policy in Mental Health and Mental Health Services Research, 33, 718–729. doi: 10.1007/s10488-006-0086-7

- Brown CH, Chamberlain P, Saldana L, Padgett C, Wang W, & Cruden G (2014). Evaluation of two implementation strategies in 51 child county public service systems in two states: Results of a cluster randomized head-to-head implementation trial. Implementation Science, 9, 134. doi: 10.1186/s13012-014-0134-8

- Chamberlain P, Saldana L, Brown CH, & Leve L (2010). Implementation of Multidimensional Treatment Foster Care in California: A randomized control trial of an evidence-based practice. In Roberts-DeGennaro M & Fogel S (Eds.), Using Evidence to Inform Practice for Community and Organizational Change (pp. 218–234). Chicago, IL: Lyceum Books.

- Coventry PA, Hudson JL, Kontopantelis E, Archer J, Richards DA, Gilbody S, ... & Bower P (2014). Characteristics of effective collaborative care for treatment of depression: A systematic review and meta-regression of 74 randomised controlled trials. PLoS One, 9, e108114. doi: 10.1371/journal.pone.0108114

- Cunningham PJ (2009). Beyond parity: Primary care physicians’ perspectives on access to mental health care. Health Affairs, 28(3), w490–w501. doi: 10.1377/hlthaff.28.3.w490

- Gilbody S, Bower P, Fletcher J, Richards D, & Sutton AJ (2006). Collaborative care for depression: A cumulative meta-analysis and review of longer-term outcomes. Archives of Internal Medicine, 166, 2314–2321. doi: 10.1001/archinte.166.21.2314

- Gilbody S, Bower P, & Whitty P (2006). Costs and consequences of enhanced primary care for depression: Systematic review of randomised economic evaluations. British Journal of Psychiatry, 189, 297–308. doi: 10.1192/bjp.bp.105.016006

- Grumbach K, Bainbridge E, & Bodenheimer T (2012). Facilitating Improvement in Primary Care: The Promise of Practice Coaching. The Commonwealth Fund.

- Hansen NB, Lambert MJ, & Forman EM (2002). The psychotherapy dose-response effect and its implications for treatment delivery services. Clinical Psychology: Science and Practice, 9(3), 329–343. doi: 10.1093/clipsy.9.3.329

- Huang H, Chan YF, Katon W, Tabb K, Sieu N, Bauer AM, ... & Unützer J (2012). Variations in depression care and outcomes among high-risk mothers from different racial/ethnic groups. Family Practice, 29(4), 394–400. doi: 10.1093/fampra/cmr108

- Katon W, Unützer J, Wells K, & Jones L (2010). Collaborative depression care: History, evolution and ways to enhance dissemination and sustainability. General Hospital Psychiatry, 32, 456–464. doi: 10.1016/j.genhosppsych.2010.04.001

- Levine S, Unützer J, Yip JY, Hoffing M, Leung M, Fan MY, ... & Langston CA (2005). Physicians’ satisfaction with a collaborative disease management program for late-life depression in primary care. General Hospital Psychiatry, 27(6), 383–391. doi: 10.1016/j.genhosppsych.2005.06.001

- Olfson M, Marcus SC, Druss B, Elinson L, Tanielian T, & Pincus HA (2002). National trends in the outpatient treatment of depression. JAMA, 287(2), 203–209. doi: 10.1001/jama.287.2.203

- Regier DA, Narrow WE, Rae DS, Manderscheid RW, Locke BZ, & Goodwin FK (1993). The de facto US mental and addictive disorders service system. Archives of General Psychiatry, 50, 85–94. doi: 10.1001/archpsyc.1993.01820140007001

- Rossom RC, Solberg LI, Magnan S, Crain AL, Beck A, Coleman KJ, ... & Unützer J (2017). Impact of a national collaborative care initiative for patients with depression and diabetes or cardiovascular disease. General Hospital Psychiatry, 44, 77–85. doi: 10.1016/j.genhosppsych.2016.05.006

- Rush AJ, Trivedi M, Carmody TJ, Biggs MM, Shores-Wilson K, Ibrahim H, & Crismon ML (2004). One-year clinical outcomes of depressed public sector outpatients: A benchmark for subsequent studies. Biological Psychiatry, 56(1), 46–53. doi: 10.1016/j.biopsych.2004.04.005

- Saldana L (2014). The Stages of Implementation Completion for evidence-based practice: Protocol for a mixed methods study. Implementation Science, 9, 43. doi: 10.1186/1748-5908-9-43

- Saldana L, Chamberlain P, Bradford WD, Campbell M, & Landsverk J (2013). The Cost of Implementing New Strategies (COINS): A method for mapping implementation resources using the Stages of Implementation Completion. Children and Youth Services Review, 39, 177–182. doi: 10.1016/j.childyouth.2013.10.006

- Saldana L, Chamberlain P, Wang W, & Brown H (2011). Predicting program start-up using the Stages of Implementation measure. Administration and Policy in Mental Health and Mental Health Services Research, 39, 419–425. doi: 10.1007/s10488-011-0363-y

- Saldana L, Schaper H, Campbell M, & Chapman J (2015). Standardized Measurement of Implementation: The Universal SIC. 7th Annual Conference on the Science of the Dissemination and Implementation in Health. Implementation Science, 10(S1), A73. doi: 10.1186/1748-5908-10-S1-A73

- Solberg LI (2014). Impact of a Learning Health Care Network on Depression Care: The DIAMOND Initiative. Paper presented at the 22nd NIMH Conference on Mental Health Services Research, Bethesda, MD.

- Solberg LI, Crain AL, Jaeckels N, Ohnsorg KA, Margolis KL, Beck A, ... & Van de Ven AH (2013). The DIAMOND initiative: Implementing collaborative care for depression in 75 primary care clinics. Implementation Science, 8. doi: 10.1186/1748-5908-8-135

- Solberg LI, Crain AL, Maciosek MV, Unützer J, Ohnsorg KA, Beck A, ... & Glasgow RE (2015). A stepped-wedge evaluation of an initiative to spread the collaborative care model for depression in primary care. The Annals of Family Medicine, 13(5), 412–420. doi: 10.1370/afm.1842

- Trivedi MH (2009). Treating depression to full remission. The Journal of Clinical Psychiatry, 70(1), e01. doi: 10.4088/JCP.8017br6c.e01

- Unützer J, Katon W, Callahan CM, Williams JW Jr, Hunkeler E, Harpole L, ... & Areán PA (2002). Collaborative care management of late-life depression in the primary care setting. JAMA, 288(22), 2836–2845. doi: 10.1001/jama.288.22.2836

- Vannoy SD, Mauer B, Kern J, Girn K, Ingoglia C, Campbell J, ... & Unützer J (2011). A learning collaborative of CMHCs and CHCs to support integration of behavioral health and general medical care. Psychiatric Services, 62, 753–758. doi: 10.1176/ps.62.7.pss6207_0753

- Von Korff M, & Tiemens B (2000). Individualized stepped care of chronic illness. Western Journal of Medicine, 172(2), 133–7. doi: 10.1136/ewjm.172.2.133

- Wang PS, Demler O, Olfson M, Pincus HA, Wells KB, & Kessler RC (2006). Changing profiles of service sectors used for mental health care in the United States. American Journal of Psychiatry, 163(7), 1187–1198. doi: 10.1176/appi.ajp.163.7.1187

- Wang PS, Lane M, Olfson M, Pincus HA, Wells KB, & Kessler RC (2005). Twelve-month use of mental health services in the United States: Results from the National Comorbidity Survey Replication. Archives of General Psychiatry, 62(6), 629–640. doi: 10.1001/archpsyc.62.6.629

- Wells KB, Miranda J, Bauer MS, Bruce ML, Durham M, Escobar J, ... & Unützer J (2002). Overcoming barriers to reducing the burden of affective disorders. Biological Psychiatry, 52, 655–675. doi: 10.1016/S0006-3223(02)01403-8

- Whiteford HA, Degenhardt L, Rehm J, Baxter AJ, Ferrari AJ, Erskine HE, ... & Burstein R (2013). Global burden of disease attributable to mental and substance use disorders: Findings from the Global Burden of Disease Study 2010. Lancet, 382(9904), 1575–1586. doi: 10.1016/S0140-6736(13)61611-6