Abstract

Vector autoregressive models characterize a variety of time series in which linear combinations of current and past observations can be used to accurately predict future observations. For instance, each element of an observation vector could correspond to a different node in a network, and the parameters of an autoregressive model would correspond to the impact of the network structure on the time series evolution. Often these models are used successfully in practice to learn the structure of social, epidemiological, financial, or biological neural networks. However, little is known about statistical guarantees on estimates of such models in non-Gaussian settings. This paper addresses the inference of the autoregressive parameters and associated network structure within a generalized linear model framework that includes Poisson and Bernoulli autoregressive processes. At the heart of this analysis is a sparsity-regularized maximum likelihood estimator. While sparsity-regularization is well-studied in the statistics and machine learning communities, those analysis methods cannot be applied to autoregressive generalized linear models because of the correlations and potential heteroscedasticity inherent in the observations. Sample complexity bounds are derived using a combination of martingale concentration inequalities and modern empirical process techniques for dependent random variables. These bounds, which are supported by several simulation studies, characterize the impact of various network parameters on estimator performance.

Keywords: Autoregressive processes, Generalized linear models, Statistical learning, Structured learning

I. Autoregressive Processes in High Dimensions

Imagine recording the times at which each neuron in a biological neural network fires or “spikes”. Neuron spikes can trigger or inhibit spikes in neighboring neurons, and understanding excitation and inhibition among neurons provides key insight into the structure and operation of the underlying neural network [1], [2], [3], [4], [5], [6], [7]. A central question in the design of this experiment is “for how long must I collect data before I can be confident that my inference of the network is accurate?” Clearly the answer to this question will depend not only on the number of neurons being recorded, but also on what we may assume a priori about the network. Unfortunately, existing statistical and machine learning theory gives little insight into this problem.

Neural spike recordings are just one example of a non-Gaussian, high-dimensional autoregressive process, where the autoregressive parameters correspond to the structure of the underlying network. This paper examines a broad class of such processes, in which each observation vector is modeled using an exponential family distribution. In general, autoregressive models are a widely-used mechanism for studying time series in which each observation depends on the past sequence of observations. Inferring these dependencies is a key challenge in many settings, including finance, neuroscience, epidemiology, and sociology. A precise understanding of these dependencies facilitates more accurate predictions and interpretable models of the forces that determine the distribution of each new observation.

Much of the autoregressive modeling literature focuses on linear autoregressive models, especially with independent Gaussian noise innovations (see e.g. [8], [9], [10], [11]). However, in many settings linear Gaussian models with signal-independent noise are restrictive and fail to capture the data at hand. This challenge arises, for instance, when observations correspond to count data, e.g., when we collect data by counting individual events such as neurons spiking. Another example arises in epidemiology, where a common model involves infection traveling stochastically from one node in a network to another based on the underlying network structure in a process known as an “epidemic cascade” [12], [13], [14], [15]. These models are used to infer network structure based on the observations of infection time, which is closely related to the Bernoulli autoregressive model studied in this paper. Further examples arise in a variety of applications, including vehicular traffic analysis [16], [17], finance [18], [19], [20], [21], social network analysis [22], [23], [24], [25], [26], biological neural networks [1], [2], [3], [4], [5], [6], [7], power systems analysis [27], and seismology [28], [29].

Because of their prevalence across application domains, time series count data (cf. [30], [31], [32], [33], [34]) and other non-Gaussian autoregressive processes (cf. [35], [36], [37]) have been studied for decades. Although a substantial fraction of this literature is focused on univariate time series, this paper focuses on multivariate settings, particularly where the vector observed at each time is high-dimensional relative to the duration of the time series. In the above examples, the dimension of each observation vector would be the number of neurons in a neural network, the number of people in a social network, or the number of interacting financial instruments.

This paper focuses on estimating the parameters of a particular family of time series that we call the vector generalized linear autoregressive (GLAR) model, which incorporates both non-linear dynamics and signal-dependent noise innovations. We adopt a regularized likelihood estimation approach that extends and generalizes our previous work on Poisson inverse problems (cf. [38], [39], [40], [41]). While similar algorithms have been proposed in the above-mentioned literature, little is known about their sample complexity or how inference accuracy scales with key parameters such as the size of the network or number of entities observed, the time spent collecting observations, and the density of edges within the network or dependencies among entities.

In this paper, we conduct a detailed investigation of the GLAR model. In addition, we examine our results for two members of this family: the Bernoulli autoregressive and the log-linear Poisson autoregressive (PAR) model. The PAR model has been explicitly studied in [42], [43], [44] and is closely related to the continuous-time Hawkes point process model [45], [46], [47], [48], [49] and the discrete-time INGARCH model [50], [51], [52], [53]. However, that literature does not contain the sample complexity results presented here. The INGARCH literature is focused on low-dimensional settings, typically univariate, whereas we are focused on the high-dimensional setting where the number of nodes or channels is high relative to the number of observations. Additionally, existing sample complexity bounds for Hawkes processes [48] focus on a linear (as opposed to log-linear) model with samples collected after reaching the stationary distribution. The log-linear model is largely used in practice both for numerical reasons and modeling efficacy for real world data. We note that linear models can predict inadmissible negative event rates, whereas the log-linear model enforces the feasibility of the predicted model. The log-linear and linear models exhibit very different behaviors in their properties and stationary distributions, making this work a significant step forward from the analysis of linear models. The extension of these prior investigations to the high-dimensional, non-stationary setting is non-trivial and requires the development of new theory and methods.

In this paper, we develop performance guarantees for the vector GLAR model that provide sample complexity guarantees in the high-dimensional setting under low-dimensional structural assumptions such as sparsity of the underlying autoregressive parameters. In particular, our main contributions are the following:

Formulation of a maximum penalized likelihood estimator for vector GLAR models in high-dimensional settings with sparse structure.

Mean-squared-error (MSE) bounds on the proposed estimator as a function of the problem dimension, sparsity, and the number of observations in time for general GLAR models. These mean-squared error bounds are identical to the bounds in the linear Gaussian setting (see e.g. [8], [54]) up to log and constant factors.

Application of our general result to obtain sample complexity bounds for Bernoulli and Poisson GLAR models.

Analysis techniques for generalized linear models that adapt to signal-dependent noise that simultaneously leverage martingale concentration inequalities, empirical risk minimization analysis, and covering arguments for high-dimensional regression.

A. Comparison to Gaussian Analysis

There has been a large body of work providing theoretical results for certain high-dimensional models under low-dimensional structural constraints (see e.g. [55], [41], [56], [57], [58], [59], [60], [61], [49]). The majority of prior work has focused on the setting where samples are independent and/or follow a Gaussian distribution. In the GLAR setting, however, non-Gaussianity and temporal dependence among observations can make such analyses particularly challenging and beyond the scope of much current research in high-dimensional statistical inference (see [62] for an overview).

This problem is substantially harder than the Gaussian case from a technical perspective because we have signal-dependent noise and cannot exploit the linearity and spectral properties of linear Gaussian time series. One of the important steps is to prove a restricted eigenvalue/restricted strong convexity condition for high-dimensional models (see e.g. [8], [63], [10]). In the Gaussian linear autoregressive setting, the restricted eigenvalue/strong convexity condition reduces to studying the covariance structure of Gaussian design matrices. The greatest technical challenge associated with non-linear time series models with signal-dependent noise is proving a restricted eigenvalue/strong convexity condition. Much of the technical work in this manuscript focuses on proving strong convexity of the objective function over the domain of Xt for all t.

To further expand on this point, consider momentarily a LASSO estimator of the autoregressive parameters. In the classical LASSO setting, the accuracy of the estimate depends on characteristics of the Gram matrix associated with the design or sensing matrix. This matrix may be stochastic, but it is usually considered independent of the observations and performance guarantees for the estimator depend on the assumption that the matrix obeys certain properties (e.g., the restricted eigenvalue condition [8], [63], [10]). In our setting, however, the “design” matrix is a function of the observed data, which in turn depends on the true underlying network or autoregressive model parameters. Thus a key challenge in the analysis of a LASSO-like estimator in the GLAR setting involves showing that the data- and network-dependent Gram matrix exhibits properties that ensure reliable estimates.

Perhaps the most closely related prior work in the high-dimensional setting is [8]. In [8], several performance guarantees are provided for different linear Gaussian problems with dependent samples including the Gaussian autoregressive model. Since [8] deals exclusively with linear Gaussian models, they exploit many properties of linear systems and Gaussian random variables that cannot be applied to non-Gaussian and nonlinear autoregressive models. In particular, compared to standard autoregressive processes with Gaussian noise, in the GLAR setting the conditional variance of each observation is dependent on previous data instead of being a constant equal to the noise variance. Works such as [41], [55], [64] provide results for non-Gaussian models but still rely on independent observations. Weighted LASSO estimators for Hawkes processes address some of these challenges in a continuous-time setting [48]. As we show after stating the main results, we achieve the same mean-squared error bounds for non-linear time series up to log and constant factors whilst avoiding the restrictive linear Gaussian assumptions.

The remainder of the paper is structured as follows: Section II introduces the generalized linear autoregressive model and Section III presents the novel risk bounds associated with the regularized maximum likelihood estimator of the process. We then use our theory to examine two special cases (the Poisson and Bernoulli models) in Sections III–A and III–B, respectively. The main proofs are provided in Section IV, while supplementary lemmas are deferred to the appendix. Finally, Section V contains a discussion of our results, their implications in different settings, and potential avenues for future work.

II. Problem Formulation

In this paper we consider the generalized linear autoregressive model:

$$X_{t+1,m} \mid X_t \sim p\left(\nu_m + a_m^{*\top} X_t\right) \qquad (1)$$

where $X_{t+1,m}$ is the $m$th variate of $X_{t+1}$ for 1 ≤ m ≤ M, the observations $X_t$ are M-variate vectors, $a_m^* \in [\alpha_{\min}, \alpha_{\max}]^M$ is an unknown parameter vector, $\nu \in [\nu_{\min}, \nu_{\max}]^M$ is a known, constant offset parameter, and p is an exponential family probability distribution. Specifically, X ~ p(θ) means that the distribution of the scalar X is associated with the density p(x|θ) = h(x) exp[ϕ(x)θ − Z(θ)], where Z(θ) is the so-called log-partition function, ϕ(x) is the sufficient statistic of the data, and h(x) is the base measure of the distribution. Distributions that fit such assumptions include the Poisson, Bernoulli, binomial, negative binomial and exponential. According to this model, conditioned on the previous data, the elements of $X_t$ are independent of one another and each has a scalar natural parameter. The input of the function p in (1) is the natural parameter for the distribution, i.e., $\nu_m + a_m^{*\top} X_t$ is the natural parameter of the conditional distribution at time t + 1 for observation m. A similar, but low-dimensional, model appears in [44], but that work focuses on maximum likelihood and weighted least squares estimators in univariate settings, which are known to perform poorly in the high-dimensional settings that are our focus. For these distributions it is straightforward to show when they have strongly convex log-partition functions, which will be crucial to our analysis. Note that this distribution has $\mathbb{E}[\phi(X_{t+1,m}) \mid X_t] = Z'(\nu_m + a_m^{*\top} X_t)$ and $\mathrm{Var}(\phi(X_{t+1,m}) \mid X_t) = Z''(\nu_m + a_m^{*\top} X_t)$, where Z′ and Z″ are the first and second derivatives of the log-partition function, respectively. Compared to standard autoregressive processes with Gaussian noise, the conditional variance is now dependent on previous data instead of being a constant equal to the noise variance.

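We can state the conditional distribution explicitly as:

$$p(X_{t+1,m} \mid X_t) = h(X_{t+1,m}) \exp\left[\phi(X_{t+1,m})\left(\nu_m + a_m^{*\top} X_t\right) - Z\left(\nu_m + a_m^{*\top} X_t\right)\right],$$

where h is the base measure of the distribution p. Using this equation and the observations, we can find an estimate of the network A*, which is constructed row-wise from the vectors $a_m^*$ (i.e., $a_m^{*\top}$ is the mth row of A*).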
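To make the generative model concrete, the following is a minimal simulation sketch, not the authors' code. It assumes the natural parameter at each step is ν + A·X_t, as described above, and uses the standard links for two members of the family: rate exp(θ) for the Poisson case and success probability 1/(1 + exp(−θ)) for the Bernoulli case. The function name `simulate_glar`, the zero initial state, and the example matrix are illustrative choices.

```python
import numpy as np

def simulate_glar(A, nu, T, family="poisson", seed=0):
    """Draw X_0, ..., X_T where X_{t+1,m} | X_t has natural parameter nu_m + a_m^T X_t."""
    rng = np.random.default_rng(seed)
    M = A.shape[0]
    X = np.zeros((T + 1, M))  # X[0] = 0 is an arbitrary (assumed) initial state
    for t in range(T):
        theta = nu + A @ X[t]  # one scalar natural parameter per node m
        if family == "poisson":
            X[t + 1] = rng.poisson(np.exp(theta))  # Poisson rate = exp(theta)
        elif family == "bernoulli":
            X[t + 1] = rng.binomial(1, 1.0 / (1.0 + np.exp(-theta)))  # logistic link
        else:
            raise ValueError("family must be 'poisson' or 'bernoulli'")
    return X

# Hypothetical 3-node network with non-positive (inhibitory) weights.
A = np.array([[-0.5, 0.0, 0.0],
              [0.0, -0.2, -0.3],
              [0.0, 0.0, 0.0]])
nu = np.zeros(3)
X_pois = simulate_glar(A, nu, T=200, family="poisson")
X_bern = simulate_glar(A, nu, T=200, family="bernoulli")
```

Given X_t, the M coordinates of X_{t+1} are drawn independently, which mirrors the conditional independence stated in the model.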

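In general, we observe T + 1 samples and our goal is to infer the matrix A*. In the setting where M is large, we need to impose structural assumptions on A* in order to have strong performance guarantees. Let

$$S := \{(\ell, m) : A^*_{\ell,m} \neq 0\}$$

denote the support of A*. In this paper we assume that the matrix A* is s-sparse, meaning that A* belongs to the following class:

$$\mathcal{A}_s := \left\{A \in [\alpha_{\min}, \alpha_{\max}]^{M \times M} : \|A\|_0 \le s\right\},$$

where $\|A\|_0 = \sum_{\ell=1}^{M}\sum_{m=1}^{M} \mathbf{1}(A_{\ell,m} \neq 0)$ and 1(·) is the indicator function. That is, we assume $|S| \le s$. Furthermore, we define

$$\rho_m := \|a_m^*\|_0, \qquad \rho := \max_{1 \le m \le M} \rho_m,$$

so ρ is the maximum number of non-zero elements in a row of A*.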

We might like to estimate A* via a constrained maximum likelihood estimator by solving the following optimization problem:

| (2) |

or its Lagrangian form

| (3) |

However, these are difficult optimization problems due to the non-convexity of the ℓ0 norm. Therefore, we instead find an estimator using the element-wise ℓ1 regularizer, the convex relaxation of the ℓ0 function, along with the negative log-likelihood to create the following estimator:

| (4) |

where ∥·∥1 is the element-wise ℓ1 norm, $\|A\|_1 = \sum_{\ell,m} |A_{\ell,m}|$. The above is the regularized maximum likelihood estimator (RMLE) for the problem, which seeks an estimate of A* that fits the empirical distribution of the data while also having many zero-valued elements. Notice that we assume the elements of A* are bounded and we use these bounds in the estimator definition. One reason for this is that bounds on the elements of A* can enforce stability. If the elements of A* are allowed to be arbitrarily large, the system may become unstable, making reliable estimation impossible. Knowing loose bounds facilitates our analysis but in practice does not appear to be necessary. In the experiment section we discuss choosing these bounds in the estimation process.
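As an illustration of how an ℓ1-regularized likelihood estimator of this type can be computed, here is a sketch for the Poisson case (Z(θ) = exp(θ), ϕ(x) = x). Several points are assumptions rather than statements about the paper: the negative log-likelihood is averaged over the T transitions, the base-measure term log h is dropped because it does not depend on A, and the solver is a generic proximal gradient method with backtracking, which is one standard choice and not necessarily what the authors used. The names `smooth_loss`, `soft_threshold`, and `rmle_poisson` are hypothetical.

```python
import numpy as np

def soft_threshold(A, tau):
    """Proximal operator of tau * ||A||_1 (element-wise shrinkage)."""
    return np.sign(A) * np.maximum(np.abs(A) - tau, 0.0)

def smooth_loss(A, X, nu):
    """Averaged Poisson negative log-likelihood, dropping terms constant in A."""
    Xp, Y = X[:-1], X[1:]
    Theta = nu + Xp @ A.T                      # natural parameters, shape (T, M)
    with np.errstate(over="ignore"):           # overflow just rejects a step below
        return np.sum(np.exp(Theta) - Y * Theta) / len(Xp)

def rmle_poisson(X, nu, lam, a_min=-np.inf, a_max=np.inf, n_iter=200):
    """Proximal gradient with backtracking for the l1-penalized objective."""
    Xp, Y = X[:-1], X[1:]
    M = X.shape[1]
    A, step = np.zeros((M, M)), 1.0
    for _ in range(n_iter):
        grad = (np.exp(nu + Xp @ A.T) - Y).T @ Xp / len(Xp)
        f_old = smooth_loss(A, X, nu)
        while True:
            # Shrink, then clip to the box [a_min, a_max]; for this separable
            # penalty, clipping the shrunk value gives the exact prox.
            A_new = np.clip(soft_threshold(A - step * grad, step * lam), a_min, a_max)
            D = A_new - A
            bound = f_old + np.sum(grad * D) + np.sum(D ** 2) / (2.0 * step)
            if smooth_loss(A_new, X, nu) <= bound:
                break                           # sufficient decrease reached
            step *= 0.5
        A = A_new
    return A
```

With `a_min=-np.inf` and `a_max=np.inf` the box constraint is inactive, which corresponds to running the estimator without enforcing the bounds on the entries of A.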

We note that while we assume that ν is a known constant vector, an unknown constant offset can be folded into the estimation of A. For instance, consider appending ν as an extra column of the matrix A*, and appending a 1 to the end of each observation Xt. Then for indices 1, …, M the observation model becomes $X_{t+1,m} \mid \tilde{X}_t \sim p(\tilde{a}_m^{*\top} \tilde{X}_t)$, where $\tilde{a}_m^* = [a_m^{*\top}, \nu_m]^\top$ and $\tilde{X}_t = [X_t^\top, 1]^\top$ are the appended versions. We can then compute the RMLE for this model to estimate both A* and ν, but for clarity of exposition we assume a known ν.
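The augmentation described above can be checked numerically. The sketch below uses arbitrary illustrative values for A, ν, and X_t, and verifies that the augmented product reproduces the original natural parameter ν + A·X_t.

```python
import numpy as np

rng = np.random.default_rng(0)
M = 3
A = rng.uniform(-1.0, 0.0, size=(M, M))      # illustrative network weights
nu = rng.uniform(-0.5, 0.5, size=M)          # illustrative offsets
x_t = rng.poisson(1.0, size=M).astype(float)

A_tilde = np.hstack([A, nu[:, None]])        # append nu as an extra column: M x (M + 1)
x_tilde = np.append(x_t, 1.0)                # append a 1 to the observation

theta_original = nu + A @ x_t
theta_augmented = A_tilde @ x_tilde          # same natural parameters, no separate offset
```

One design question this raises, which the text does not settle, is whether the appended column should be included in the ℓ1 penalty. Leaving it unpenalized is a common choice when the offsets are not expected to be sparse.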

Estimating the network parameters in the autoregressive setting with Gaussian observations can be formulated as a sparse inverse problem with connections to the well-known LASSO estimator. Consider the problem of estimating the . Define

and

where ym is the time series of observed counts associated with the mth node and X is a matrix of the observed counts associated with all nodes. Then , where is noise, and we could consider the LASSO estimator for each m:

However, there are two key challenges associated with the LASSO estimator in this context: (a) the squared residual term does not account for the non-Gaussian statistics of the observations and (b) the “design matrix” is data-dependent and hence a function of the unknown underlying network. In classical LASSO analyses, performance bounds depend on the design matrix satisfying the restricted eigenvalue condition, the restricted isometry property, or some related condition; it is relatively straightforward to ensure such a condition is satisfied when the design matrix is independent of the data, but much more challenging in the current context. As a result, despite the fact that we face a sparse inverse problem, the existing LASSO literature does not address the subject of this paper.

III. Main results

In this section, we turn our attention to deriving bounds for $\|\hat{A} - A^*\|_F^2$, the squared Frobenius-norm difference between the regularized maximum likelihood estimator, $\hat{A}$, and the true generating network, A*, under the assumption that the true network is s-sparse. Recall that ρ is the maximum number of non-zero elements in a row of A*. First we define a family of autoregressive processes, generated by Equation 1, that permits low approximation errors in the sparse regime. The definition of this class involves several sufficient conditions concerning stability and convexity of the autoregressive process that allow the underlying network to be estimated successfully. Without stability of the process it would be impossible to learn about the underlying model; this is similar to the assumption that the maximum eigenvalue of a Gaussian autoregressive process is bounded by 1. The convexity conditions are similarly sufficient for learning the underlying network and are generally more easily satisfied in the Gaussian case due to the form of the distribution function. For general exponential family distributions, one must prove which distributions fit into this family. After the definition of this class of autoregressive processes and the statement of the main theorem, we prove that both the Bernoulli and Poisson distributions fit in this family.

Definition III.1.

We define a class of autoregressive processes as any process generated by Equation 1 such that for any realization there exists a subset of observations for that satisfies the conditions:

There exists a constant U such that where U is independent of T.

- Z(·) is σ-strongly convex on a domain determined by U:

for all where , and , where σ is independent of T. The smallest eigenvalue of is lower bounded by ω > 0, which is independent of T.

We define the constant ξ as a constant such that , which will be determined in part by the constant U, and can be set such that ξ is very close to 1.

For ξ ≈ 1, membership in this class means most of the observed data is bounded independently of T. The condition allows us to analyze time series in which the maximum of a series of iid random variables can grow with T, but any percentile is bounded by a constant. Our analysis will then be conducted on the bounded subset of observations. We prove that these conditions hold with high probability for the Bernoulli and Poisson cases in Sections III–A and III–B, respectively, and the corresponding values of U, σ, ξ, and ω are computed explicitly. Other exponential family distributions and their associated autoregressive processes likely belong to this class as well, but proving the conditions and parameters of their inclusion remains an open problem beyond the scope of this paper.

Theorem 1.

Assume λ ≥ and let be the RMLE for a process which belongs to as defined in Definition III.1. For any row of the estimator and for any δ ∈ (0,1), with probability at least 1 — δ,

for where c is independent of M, T, ρ and s. Furthermore,

with probability greater than 1 – δ for .

To apply Theorem 1 to specific GLAR models, we need to provide bounds on λ, as well as σ, ω, U and ξ for inclusion in the class of Definition III.1. We do this in the next section for Bernoulli and Poisson GLAR models.

We can compare the results of Theorem 1 to the related results of [8]. In that work they arrive at rates for the Gaussian autoregressive process that are equivalent with respect to the sparsity parameter, number of observations and regularization parameter. However, we incur slightly different dependencies on ξ, σ and ω. These are due mainly to the fact that our bounds hold for a wide family of distributions and not just the Gaussian case, which has nice properties related to restricted strong convexity and specialized concentration inequalities. Additionally, the way λ is defined is very similar, but bounding λ for a non-Gaussian distribution will result in extra log factors. It is an open question whether this bound is rate optimal in the general setting.

A. Result 1: Bernoulli Distribution

For the Bernoulli distribution we have the following autoregressive model:

| (5) |

The first observation about this model is that the sufficient statistic is ϕ(x) = x and the log-partition function is Z(θ) = log(1 + exp(θ)), which is strongly convex when the absolute value of θ is bounded. One advantage of this model is that the observations are inherently bounded due to the nature of the Bernoulli distribution, so U = 1 and ξ = 1. Using this observation, we derive the strong convexity parameter σ of Z on the bounded range.

To derive rates from Theorem 1, we must prove that this process belongs to the class defined in Definition III.1; this is shown with high probability by Theorem 2.
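The claim that the Bernoulli log-partition function is strongly convex on a bounded range can be checked numerically. The sketch below uses the standard identity Z″(θ) = p(1 − p) with p = 1/(1 + exp(−θ)), and confirms that on [−B, B] the curvature is smallest at the endpoints. The bound B = 3 is an arbitrary illustrative value, and the resulting number is not claimed to be the constant σ used in the paper.

```python
import numpy as np

def Z(theta):
    """Bernoulli log-partition function log(1 + exp(theta)), computed stably."""
    return np.logaddexp(0.0, theta)

def Z_second(theta):
    """Second derivative of Z: p * (1 - p) with p the logistic function of theta."""
    p = 1.0 / (1.0 + np.exp(-theta))
    return p * (1.0 - p)

B = 3.0                                   # illustrative bound on |theta|
grid = np.linspace(-B, B, 2001)           # includes both endpoints
min_curvature = Z_second(grid).min()      # smallest curvature on [-B, B]
endpoint_curvature = Z_second(B)          # equals exp(B) / (1 + exp(B))**2
```

Because Z″ is symmetric and decreasing in |θ|, any bound on the magnitude of the natural parameter translates directly into a positive lower bound on the curvature.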

Theorem 2.

For a sequence Xt generated from the Bernoulli autoregressive process with the matrix A* and the vector ν, we have the following properties:

The smallest eigenvalue of the matrix is lower bounded by .

- Assuming 1 ≤ t ≤ T and that T ≥ 2 and log(MT) ≥ 1, then

with probability at least at least .

Using these results we get the final sample error bounds for the Bernoulli autoregressive process.

Corollary 1.

The RMLE for the Bernoulli autoregressive process defined by Equation 5, and setting has error bounded by

with probability at least 1 − δ for for constants C, c > 0 which are independent of M, T, s and ρ.

The lower bound on the number of observations T comes from needing to satisfy the conditions of both parts of Theorems 1 and 2. In order to get this statement we use a union bound over the high probability statements of Theorem 1 described in (9) and Theorem 2 which holds with probability greater than .

B. Result 2: Poisson Distribution

In this section, we derive the relevant values to get error bounds for the vector autoregressive Poisson distribution. Under this model we have

We assume that αmax = 0 for stability purposes, thus we are only modeling inhibitory relationships in the network. Deriving the sufficient statistic and log-partition function yields ϕ(x) = x and Z(θ) = exp(θ). The next important values are the bounds on the magnitude of the observations, which will both ensure the strong convexity of Z and the stability of the process.

Lemma 1.

For the Poisson autoregressive process generated with A* ∈ [αmin, 0]M×M and constant vector ν ∈ [νmin, νmax]:

If log(MT) ≥ 1, there exist constants C and c which depend on the value νmax, but are independent of T, M, s and ρ, such that 0 ≤ Xt, m ≤ C log(MT) with probability at least 1 − exp(−c log(MT)) for all 1 ≤ t ≤ T and 1 ≤ m ≤ M.

For any α ∈ (0, 1) such that αMT is an integer, there exist constants U and c which depend on the values of νmax and α, but independent of T, M, s and ρ, such that with probability at least 1−e−cMT, 0 ≤ Xt, m ≤ U for at least αMT of the indices. We define to be these αMT indices.

As a consequence of Lemma 1, we have ∥Xt∥∞ ≤ U for at least ξT values of t ∈ {1, 2, …, T}, where ξ = 1 − (1 − α)M. We additionally assume that U is large enough such that (1 − α)M < 1 and therefore ξ ∈ (0,1).
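The counting step behind ξ = 1 − (1 − α)M can be spelled out as follows. This is a reconstruction of the argument from the statement of Lemma 1, not a quotation of the paper's proof: each entry that exceeds U spoils at most one time index.

```latex
\begin{align*}
\#\{(t,m) : X_{t,m} > U\} &\le (1-\alpha)\,M T
  && \text{(Lemma 1: at least } \alpha M T \text{ entries are bounded by } U\text{)} \\
\#\{t : \|X_t\|_\infty > U\} &\le \#\{(t,m) : X_{t,m} > U\} \le (1-\alpha)\,M T \\
\#\{t : \|X_t\|_\infty \le U\} &\ge T - (1-\alpha)\,M T
  = \bigl(1 - (1-\alpha) M\bigr)\,T = \xi T .
\end{align*}
```

For example, with M = 20 and α = 0.99, this gives ξ = 1 − 0.01 · 20 = 0.8, so at least 80% of the time indices have all coordinates bounded by U. The bound is only informative when (1 − α)M < 1, i.e. when α > 1 − 1/M.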

Using this lemma, we prove that this process belongs to the class of Definition III.1 with high probability by deriving the strong convexity parameter of Z and a lower bound on the smallest eigenvalue of Γt. In the Poisson case, Z(·) = exp(·), so Z″(·) = exp(·) and the strong convexity parameter σ is the minimum of exp(θ) over the bounded domain of natural parameters.
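A worked version of this curvature bound is sketched below. It assumes that rows have at most ρ non-zero entries, each in [α_min, 0], and that the observations satisfy 0 ≤ X_{t,k} ≤ U. The resulting constant is one admissible choice under these assumptions and may differ from the exact expression in the paper, which is not recoverable here.

```latex
\begin{align*}
Z(\theta) = e^{\theta} \quad &\Longrightarrow \quad Z''(\theta) = e^{\theta}, \\
\theta_{t,m} = \nu_m + a_m^{\top} X_t
  &\ge \nu_{\min} + \sum_{k \,:\, a_{m,k} \neq 0} a_{m,k} X_{t,k}
  \ge \nu_{\min} + \rho\,\alpha_{\min}\,U, \\
Z''(\theta_{t,m}) &\ge \exp\bigl(\nu_{\min} + \rho\,\alpha_{\min}\,U\bigr) > 0 .
\end{align*}
```

Since α_min ≤ 0, the bound degrades exponentially as ρ, U, or |α_min| grows, which is one way to see why bounded observations and bounded parameters matter for the analysis.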

Theorem 3.

For a sequence Xt generated from the Poisson autoregressive process with the matrix A*, with all non-positive elements, and the vector ν, we have the following properties

The smallest eigenvalue of the matrix , for consecutive indices and in as defined in the definition of , is lower bounded by .

- Assuming Xt, m ≤ C log(MT) for all 1 ≤ m ≤ M and 1 ≤ t ≤ T and that T ≥ 2 and log (MT) ≥ 1, then

with probability at least 1 − exp(−c log(MT)) for some c > 0 independent of ρ, s, M and T.

Using Theorem 3, we can find the error bounds for the PAR process by using the result of Theorem 1.

Corollary 2.

Using the results of Theorem 1 and using the Poisson autoregressive model with A* with all nonpositive values, the RMLE admits the overall error rate of

with probability at least 1 − δ for for constants C, c > 0 which are independent of M, T, s and ρ

Again, the lower bound on the number of observations comes from combining the high probability statements of each of the constituent parts of the corollary in the same way as was done in the Bernoulli case. In this case all of Theorem 1, both parts of Lemma 1 and Theorem 3 need to hold.

Remark:

It is worthwhile to compare the theoretical results for the Bernoulli and Poisson processes with the results for Gaussian processes in [8], [10]. Our mean-squared error bounds for Bernoulli, Poisson, and Gaussian random variables match the Gaussian-case bounds of [8], [10] up to log and constant factors. The additional log factors arise because our analysis is more general and does not exploit specific properties of the linear Gaussian process. Hence our analysis is not optimal in the linear Gaussian setting, since additional log factors are incurred to ensure the process satisfies Definition III.1 using our more general analysis, which applies to non-linear models with signal-dependent noise.

C. Experimental Results

We validate our theoretical results with experiments performed on synthetically generated data using the Poisson autoregressive process. We generate many trials of synthetic data with known underlying parameters and then compare the estimated values. For all trials the constant offset vector ν is set identically to 0, and the 20×20 matrices A* are set such that s randomly assigned values are in the range [−1,0], with constant ρ = 5. Data is then generated according to the process described in Equation 1 with the Poisson distribution. X0 is chosen as a 20-dimensional vector drawn randomly from Poisson(1), then T observations are used to perform the estimation. The parameters s and T are then varied over a wide range of values. For each (s, T) pair 100 trials are performed, the regularized maximum likelihood estimate is calculated, and the MSE is recorded. The MSE curves are shown in Figure 1. Notice that the true values of A* are bounded by −1 and 0, but in our implementation we do not enforce these bounds (we set αmin = −∞ and αmax = ∞ in Equation 4). While αmin = −∞ would cause the theoretical bounds to be poor, the theory can be applied with the smallest and largest elements of the matrix estimated from the unconstrained optimization. In other words, the theory depends on having an upper and lower bound on the rates, but mostly as a theoretical convenience, while the estimator can be computed in an unconstrained way.
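The data-generation part of this setup can be sketched as follows. The way the support is sampled (a random permutation of cells, accepted greedily subject to at most ρ non-zeros per row) and the use of the squared Frobenius error as the recorded error are assumptions, since the text does not specify them. The names `random_sparse_network`, `simulate_poisson_ar`, and `squared_error` are hypothetical.

```python
import numpy as np

def random_sparse_network(M, s, rho, rng):
    """M x M matrix with s non-zeros in [-1, 0) and at most rho non-zeros per row."""
    if s > M * rho or rho > M:
        raise ValueError("need s <= M * rho and rho <= M")
    row_counts = np.zeros(M, dtype=int)
    support = []
    for cell in rng.permutation(M * M):       # visit cells in random order
        i = cell // M
        if row_counts[i] < rho:               # respect the per-row cap
            support.append(cell)
            row_counts[i] += 1
        if len(support) == s:
            break
    rows, cols = np.divmod(np.array(support), M)
    A = np.zeros((M, M))
    A[rows, cols] = rng.uniform(-1.0, 0.0, size=s)
    return A

def simulate_poisson_ar(A, nu, T, rng):
    """X_0 ~ Poisson(1), then X_{t+1} ~ Poisson(exp(nu + A X_t)) for T steps."""
    X = np.zeros((T + 1, A.shape[0]))
    X[0] = rng.poisson(1.0, size=A.shape[0])
    for t in range(T):
        X[t + 1] = rng.poisson(np.exp(nu + A @ X[t]))
    return X

def squared_error(A_hat, A_star):
    """Squared Frobenius error between an estimate and the true network."""
    return float(np.sum((A_hat - A_star) ** 2))

rng = np.random.default_rng(1)
A_star = random_sparse_network(M=20, s=40, rho=5, rng=rng)
X = simulate_poisson_ar(A_star, nu=np.zeros(20), T=100, rng=rng)
```

Because all entries of A* are non-positive and ν = 0, every conditional rate is at most exp(0) = 1, so the simulated counts stay small without any clipping.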

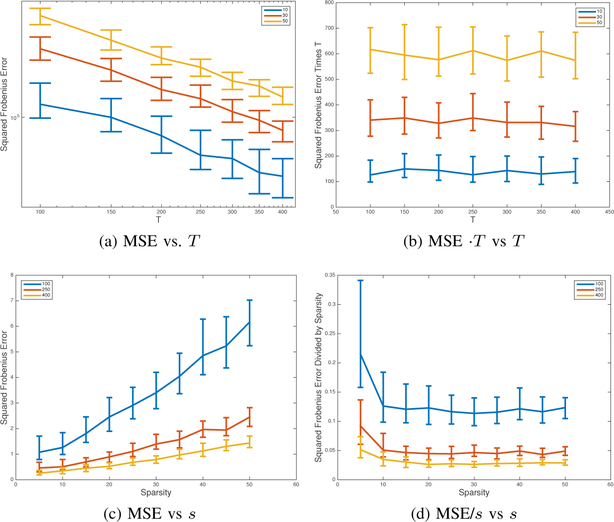

Fig. 1:

A series of plots showing how the MSE of the RMLE for the Poisson autoregressive process scales with the time horizon, T, and the sparsity, s. Our theoretical analysis states that the error should decay as 1/T and grow like s, both up to constants and log factors. The top row of plots shows the MSE behavior over a range of T values from 100 to 400, all less than or equal to M² = 400, where (a) is the MSE and (b) is the MSE multiplied by T to show that the MSE scales as 1/T. The bottom row shows the MSE behavior over a range of s values, where (c) shows the MSE and (d) shows the MSE divided by s to show that the MSE is linear in s. Plots (b) and (d) are included to show the values which are expected to scale as constants, which is confirmed. In all plots the median value of 100 trials is shown, with error bars denoting the middle 50 percent.

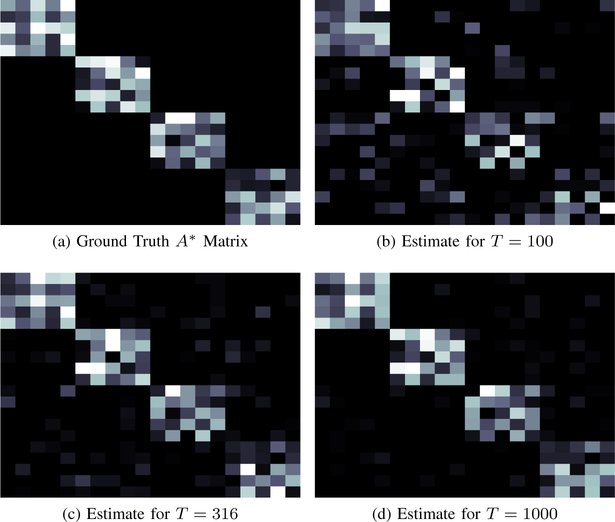

We show a series of plots which compare the MSE versus increasing behavior of T and s, as well as comparing the behavior of MSE·T and of MSE/s. Plotted in each figure is the median of 100 trials for each (s, T) pair, with error bars denoting the middle 50 percentile. These plots show that setting λ proportional to T−1 gives us the desired T−1 error decay rate. Additionally, we see that the error increases approximately linearly in the sparsity level s, as predicted by the theory. Finally, Figure 2 shows one specific example process and the estimates produced. The first image is the ground truth matrix, generated to be block diagonal, in order to more easily visualize support structure whereas in the first experiment the support is chosen at random. One set of data is generated using this matrix, and then estimates are constructed using the first T = 100, 316 and 1000 data points. The figure shows how with more data, the estimates become closer to the original, where much of the error comes from including elements off the support of the true matrix.

Fig. 2:

These images show the ground truth A* matrix (a) and 3 different estimates of the matrix created using increasing amounts of data. We observe that even for a relatively low amount of data we have picked out most of the support but with several spurious artifacts. As the amount of data increases, fewer of the erroneous elements are estimated. All images are scaled from 0 (dark) to −1 (bright).

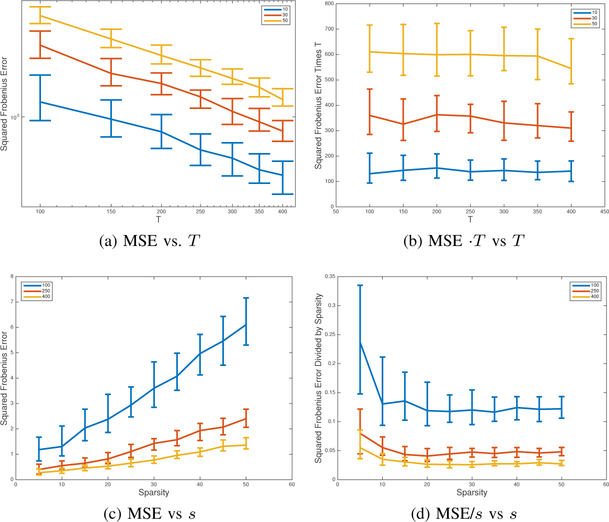

One important characteristic of our results is that they do not depend on any assumptions about the stationarity or the mixing time of the process. To show that this is truly a property of the system and not just our proof technique, we repeat the experimental process described above, but for each set of observations of length T, we first generate 10,000 observations to allow the process to mix. In other words, for every matrix A we generate T + 10,000 observations, but only use the last T to find the RMLE. The plots in Figure 3 show the results of this experiment. The important observation is that the results both scale the same way and have approximately the same magnitude as in the experiment when no mixing was done.
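A minimal sketch of the burn-in variant is given below, assuming the Poisson model with ν + A·X_t as the natural parameter. The function name `simulate_with_burn_in` is hypothetical, and keeping T + 1 consecutive states (so that T transitions are available to the estimator) is an implementation choice rather than something stated in the text.

```python
import numpy as np

def simulate_with_burn_in(A, nu, T, burn_in, rng):
    """Run the Poisson AR process for burn_in steps, then record T further transitions."""
    M = A.shape[0]
    x = rng.poisson(1.0, size=M).astype(float)   # initial state X_0 ~ Poisson(1)
    for _ in range(burn_in):                     # discarded "mixing" steps
        x = rng.poisson(np.exp(nu + A @ x)).astype(float)
    X = np.zeros((T + 1, M))
    X[0] = x                                     # first retained state
    for t in range(T):
        X[t + 1] = rng.poisson(np.exp(nu + A @ X[t]))
    return X

rng = np.random.default_rng(2)
A = -0.3 * np.eye(4)                             # illustrative inhibitory network
X_mixed = simulate_with_burn_in(A, np.zeros(4), T=30, burn_in=50, rng=rng)
```

Setting `burn_in=0` recovers the original experiment, so the two settings can be compared with the same estimator and the same random seed policy.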

Fig. 3:

Repeat of the experimental setup from Figure 1, but now allowing for mixing. The top row of plots shows the MSE behavior over a widely varying range of T values, from 100 to 400, where (a) is the MSE and (b) is the MSE multiplied by T to show that the MSE is behaving as 1/T. The bottom row shows the MSE behavior over a range of s values, where (c) shows MSE and (d) shows MSE divided by s to show that the MSE is linear in s. Plots (b) and (d) are included to show the values which are expected to scale as constants, independent of mixing time, which is confirmed. In all plots the median value of 100 trials is shown, with error bars denoting the middle 50 percent of trials. Most importantly, the behavior and magnitude of errors in this plot match the results with no mixing.

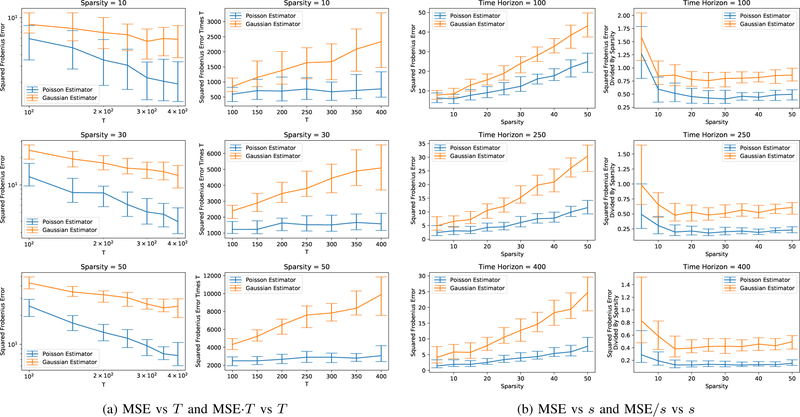
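The mixing experiment amounts to discarding an initial stretch of the simulated process before estimation. A minimal sketch of that data-generation step is given below, under the same assumed log-linear Poisson model; the helper names and the 2 × 2 example matrix are hypothetical, and the starting state X = 0 is an assumption.

```python
import math
import random


def sample_poisson(rate, rng):
    """Draw one Poisson(rate) sample with Knuth's method (fine for small rates)."""
    limit, count, prod = math.exp(-rate), 0, rng.random()
    while prod > limit:
        count += 1
        prod *= rng.random()
    return count


def simulate_after_burn_in(A, nu, T, burn_in, rng):
    """Run the chain for burn_in + T steps from X = 0 and keep only the last T vectors."""
    x, kept = [0] * len(A), []
    for t in range(burn_in + T):
        x = [sample_poisson(math.exp(nu + sum(a * v for a, v in zip(row, x))), rng)
             for row in A]
        if t >= burn_in:
            kept.append(x)
    return kept


A_star = [[-0.5, 0.0], [-0.25, -0.5]]  # illustrative inhibitory network
observations = simulate_after_burn_in(A_star, nu=0.0, T=100, burn_in=10_000,
                                      rng=random.Random(1))
```

Setting `burn_in=0` recovers the setup without mixing, so the two experiments differ only in this one argument.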

Up to this point we have shown the results of estimating the underlying network when the data was generated using a Poisson autoregressive process and estimated using the associated RMLE. We have shown these results in order to demonstrate that the rates predicted by our theoretical error bounds match the rates seen empirically. A natural question is how well a Gaussian estimator of the underlying network would perform when the data generation process is Poisson autoregressive. Gaussian estimators have many nice properties and their error rates have been studied extensively, so using one would seem like a logical choice if the approximation error on non-Gaussian data were relatively small. To test this hypothesis, data was generated in much the same way as in the previous experimental setups, except that values in the A* matrix were allowed to vary from 0 to −2.5, meaning even more inhibition was possible. We then estimated the underlying network using both the Poisson AR loss function and the more traditional Gaussian loss, using the same regularization parameter for each. The results of these experiments are shown in Figure 4. These plots show a few key characteristics, the most important being that the Poisson-based objective function soundly outperforms the Gaussian. We also see that the gap between the two increases as the time horizon gets larger, meaning more observations are available, and as sparsity decreases. Both of these make intuitive sense: as more observations are revealed, the Poisson objective function can more closely narrow in on the underlying network, whereas the Gaussian objective function is still stuck with some amount of approximation error that will not decrease with increasing data. Additionally, as more elements of the matrix A* are non-zero, the Gaussian estimate will get comparatively worse because it will be wrong in more locations. With a very sparse true matrix the Gaussian estimate could set lots of elements to zero and be “accidentally” correct, which will not happen with a less sparse true matrix. For all of these reasons, we find it convincing that using the objective function which matches the generative process is extremely important for accurate estimation in the autoregressive regime.
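A toy calculation can illustrate why a mismatched objective may carry an error that does not shrink with more data. This is not the experiment reported here. It assumes an intercept-only model, and it assumes that the "Gaussian" objective is squared error applied to the same parameter θ that the Poisson model treats as a log-rate; the exact Gaussian estimator used for Figure 4 may differ. Under those assumptions the Poisson objective is minimized at θ = log(mean count) and the squared-error objective at θ = mean count, and these two values never coincide.

```python
import math


def poisson_grad(theta, counts):
    """Derivative in theta of the average of exp(theta) - y * theta."""
    return math.exp(theta) - sum(counts) / len(counts)


def squared_error_grad(theta, counts):
    """Derivative in theta of the average of 0.5 * (y - theta) ** 2."""
    return theta - sum(counts) / len(counts)


counts = [0, 1, 3, 2, 4, 2]                        # toy data with sample mean 2
theta_poisson = math.log(sum(counts) / len(counts))  # stationary point of the Poisson loss
theta_gaussian = sum(counts) / len(counts)           # stationary point of squared error
print(theta_poisson, theta_gaussian)
```

Both objectives are convex in θ, so the stationary points are the minimizers, and the distance between them depends on the mean count rather than on the number of samples.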

Fig. 4:

Repeat of the experimental setup from Figure 1, but with the true A* matrix allowed to have elements ranging from 0 to −2.5. This time we compute both the Poisson autoregressive RMLE and an estimator based on the Gaussian approximation to the Poisson distribution. We see that the Poisson-based estimator consistently and significantly outperforms the Gaussian estimator and that the gap increases with increasing T and decreasing sparsity. In all plots the median value of 100 trials is shown, with error bars denoting the middle 50 percent of trials.

IV. Proofs

A. Proof of Theorem 1

Proof: We start the proof by making an important observation about the estimator defined in Equation 4: this loss function decouples completely into a sum of functions of the individual rows. Therefore we can bound the error of a single row of the RMLE and add the errors to get the final bound. For each row we use a standard method in empirical risk minimization and the definition of the minimizer of the regularized likelihood for each row:

We define , which is a conditionally zero-mean random variable. By using a moment generating function argument, we know that , and therefore . Hence

Now we use the definition of a Bregman divergence to lower bound the left hand side. An important property of Bregman divergences is that if they are induced by a strongly convex function, then the Bregman divergence can be lower bounded by a scaled squared ℓ2 distance between its arguments. This is where our squared error term will come from.

where denotes the Bregman divergence induced by Z. Hence
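The strong-convexity property can be checked numerically in the scalar case. The sketch below assumes the Poisson log-partition function Z(θ) = exp(θ) restricted to an illustrative interval [−3, 0], on which Z is σ-strongly convex with σ = exp(−3), and compares it with the Gaussian case Z(θ) = θ²/2, where the Bregman divergence equals half the squared difference exactly. The interval and the grid are arbitrary choices made for illustration.

```python
import math


def bregman(Z, dZ, a, b):
    """Bregman divergence B_Z(a || b) = Z(a) - Z(b) - Z'(b) * (a - b) for scalars."""
    return Z(a) - Z(b) - dZ(b) * (a - b)


def min_ratio(Z, dZ, lo, hi, n=50):
    """Smallest value of B_Z(a || b) / (0.5 * (a - b)^2) over a grid on [lo, hi]."""
    grid = [lo + (hi - lo) * i / n for i in range(n + 1)]
    return min(bregman(Z, dZ, a, b) / (0.5 * (a - b) ** 2)
               for a in grid for b in grid if a != b)


lo, hi = -3.0, 0.0
sigma = math.exp(lo)  # strong convexity constant of exp on [lo, hi]
poisson_ratio = min_ratio(math.exp, math.exp, lo, hi)
gaussian_ratio = min_ratio(lambda t: 0.5 * t * t, lambda t: t, lo, hi)
print(sigma, poisson_ratio, gaussian_ratio)
```

The ratio for the exponential stays at or above σ over the whole grid, which is the scalar version of B_Z(a‖b) ≥ (σ/2)(a − b)², while the Gaussian ratio is identically 1.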

First we upper bound the right-hand side of the inequality as follows:

In the above, we use the definition of as the true support of A* and have used the decomposability of ∥·∥1. The decomposability of the norm means that we have the property .

Note that . Under the assumption that and by the non-negativity of the Bregman divergence on the left hand side of the inequality, we have that

Using the decomposability of the ℓ1 norm, this inequality implies that for all rows 1 ≤ m ≤ M, we have that . Since , and consequently

where the final inequality follows since for all j. Using this inequality and the fact that implies that , and therefore for all the range of both and are in [].

Now to lower bound the Bregman divergence in terms of the Frobenius norm, we use the first condition of membership in . Inherently, the RMLE will admit estimates which should converge to the true matrix A* under a Bregman divergence induced by the log-partition function, but we are interested in convergence of the Frobenius norm. Therefore, to convert from one to the other, we require the log-partition function to be strongly convex. This issue is side-stepped in the Gaussian noise case, due to the fact that the Bregman in question would identically be the Frobenius norm. By Definition III.1, Z is σ-strongly convex, and therefore on it is true that and on the rest of the time indices.

Therefore

Implies

Define for any , then we have the bound:

Therefore we can define the cone on which the vector Δm must be defined:

and restrict ourselves to studying properties of vectors in that set. Since where ρm is the number of non-zeros of , we have that

| (6) |

where Now we consider three cases: if ∥Δm∥T ≥ ∥Δm∥2, then max(∥Δm∥T, ∥Δm∥2) ≤ δm. On the other hand if ∥Δm∥T ≤ ∥Δm∥2 and ∥Δm∥2 ≤ δm, then max(∥Δm∥T, ∥Δm∥2) ≤ δm.

Hence the final case we need to consider is ∥Δm∥T ≤ ∥Δm∥2 and ∥Δm∥2 ≥ δm. Now we follow a similar proof technique to that used in Raskutti et al. [60] adapted to dependent sequences, to understand this final scenario. Let us define the following set:

| (7) |

Further, let us define the alternative set:

| (8) |

We wish to show that for , we have for some κ ∈ (0, 1) with high probability, and therefore Equation 6 would imply that max(∥Δm∥T, ∥Δm∥2) ≤ δm/κ. We claim that it suffices to show that is true on (δm) with high probability. In particular, given an arbitrary non-zero , consider the re-scaled vector . Since , we have and by construction. Together, these facts imply . Furthermore, if is true, then is also true. Alternatively if we define the random variable , then it suffices to show that .

For this step we use some recent concentration bounds [65] and empirical process techniques [66] for martingale random variables. Recall that the empirical norm is . Further let denote the indices in . Next we define the conditional expectation

Then we have

To bound the first quantity, , we first note that

by the definition of and the fact that since . Thus

Now we focus on bounding sup . First, we use a martingale version of the bounded difference inequality using Theorem 2.6 in [65] (see Appendix VII–D):

with high probability. Recall that on , we have Because , it is true that . We then use the relationship between the ℓ1 and ℓ2 norms to say where ρm is the number of non-zeros in the mth row of the true matrix A*. Putting these together means . In particular, we apply Theorem 4 in Appendix VII–D with , , and , and therefore . Therefore, applying Theorem 4

with probability at least . Since , the above statement holds with probability at least . Hence

Now we bound . Here we use a recent symmetrization technique adapted for martingales in [66]. To do this, we introduce the so-called sequential Rademacher complexity defined in [66]. Let be independent Rademacher random variables, that is . For a function class , the sequential Rademacher complexity is:

Note here that Xt is a function of the previous independent random variables (ϵ1, ϵ2, …, ϵt−1). Using Theorem 2 in [66] (also stated Appendix VII–D) with and noting that even though we use the index set , is still a martingale, it follows that:

Additionally since by the argument above and using the symmetry of Rademacher random variables

The final step is to upper bound the sequential Rademacher complexity where Xti is a function of (ϵ1, ϵ2, …, ϵti−1). Clearly:

Because we have and .

Finally, we use Lemma 6 applied to the index set :

with probability at least . Now if we set ,

with probability 1 – (MT)−2.

Overall this tells us that on the set we have that with high probability. Now we return to the main proof. After considering all three cases that can follow from Equation 6, we have

with probability at least , which bounds the error accrued on any single row as a function of the sparsity of the true row. Combining the row-wise bounds to get an overall error yields

with probability at least

| (9) |

B. Proof of Theorem 2

1). Part 1:

Proof: The matrix Γt can be expanded as

Thus Γt has two parts, one is the outer product of a vector with itself, and the second is a diagonal matrix. Therefore, the smallest eigenvalue will be lower bounded by the smallest element of the diagonal matrix, because the outer product matrix will always be positive semi-definite with smallest eigenvalue equal to 0. Using properties of the Bernoulli distribution, the conditional variance is explicitly given as (2 + exp(ν + A*Xt−1) + exp(−ν−A*Xt−1))−1 and therefore the smallest eigenvalue of Γt is lower bounded by . ■
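The closed form for the Bernoulli conditional variance used above can be verified directly. In the sketch below, θ stands for one coordinate of ν + A*Xt−1, and the success probability is taken to be the logistic function of θ, which is the standard Bernoulli GLM parameterization; the function names are illustrative.

```python
import math


def bernoulli_variance(theta):
    """Variance p * (1 - p) with p = 1 / (1 + exp(-theta))."""
    p = 1.0 / (1.0 + math.exp(-theta))
    return p * (1.0 - p)


def closed_form(theta):
    """The expression (2 + exp(theta) + exp(-theta))^(-1)."""
    return 1.0 / (2.0 + math.exp(theta) + math.exp(-theta))


for theta in [-4.0, -1.5, 0.0, 0.7, 3.0]:
    print(theta, bernoulli_variance(theta), closed_form(theta))
```

The two columns agree, the maximum value 1/4 is attained at θ = 0, and the variance shrinks as |θ| grows, which is why a bound on the range of ν + A*Xt−1 translates into a lower bound on the smallest eigenvalue.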

2). Part 2:

Proof: In order to prove this part of the Theorem, we make use of Markov’s inequality and Lemma 5 in the case of the Bernoulli autoregressive process. Define the sequence as

Notice the following values:

The first value shows that and therefore Yn (and the negative of the sequence, −Yn) is a martingale. Additionally, we know and

where the last step follows because Bernoulli random variables are bounded by one, and the variance is bounded by ¼. We also need to bound as follows:

We use these values to get a bound on the summation term used in Lemma 5.

In the above corresponds to the sum corresponding to the negative sequence −Y0, −Y1, … which we also need to obtain the desired bound. Now we use a variant of Markov’s inequality to get a bound on the desired quantity.

The final inequality comes from the use of Lemma 5, which states that the given terms are supermartingales with initial term equal to 1, so the entire expectation is less than or equal to 1. The final step of the proof is to find the optimal value of η to minimize this upper bound.

Setting yields the lowest such bound, giving

where H(x) = (1 + x) log(1 + x) − x. We use the fact that to further simplify the bound.
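The specific inequality invoked at this step did not survive in the text above. A standard choice in arguments that pass from a Bennett-type bound to a Bernstein-type bound, which may or may not be the one intended here, is H(x) ≥ 3x²/(2(x + 3)) for x ≥ 0. The sketch below checks that inequality on a grid; the grid range is arbitrary.

```python
import math


def H(x):
    """H(x) = (1 + x) log(1 + x) - x, computed with log1p for accuracy near zero."""
    return (1.0 + x) * math.log1p(x) - x


def bernstein_lower_bound(x):
    """Candidate lower bound 3 x^2 / (2 (x + 3)); assumed, not taken from the text."""
    return 3.0 * x * x / (2.0 * (x + 3.0))


grid = [0.01 * k for k in range(1, 10001)]  # x from 0.01 to 100
smallest_gap = min(H(x) - bernstein_lower_bound(x) for x in grid)
print(smallest_gap)
```

A non-negative gap across the grid is consistent with the inequality, and replacing H by this rational function is what turns the exponent into the familiar sub-Gaussian-plus-sub-exponential form.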

To complete the proof, we set n = T and take a union bound over all indices because YT considered specific indices m and ℓ, which gives the bound

Here we have additionally assumed that T ≥ 2 and that log(MT) ≥ 1. ■

C. Proof of Theorem 3

1). Part 1:

Proof: We start with the following observation:

Thus Γt has two parts, one is the outer product of a vector with itself, and the second is a diagonal matrix. Therefore, the smallest eigenvalue will be lower bounded by the smallest element of the diagonal matrix. In order to lower bound this variance, we must consider two cases: one where the previous term in the sequence is the previous term in the overall sequence, and the other where the previous term is not in the sequence . The variance of can be characterized based on these two possible situations:

where p is the probability that . Because variances are lower bounded by 0, we can lower bound this entire term by the first part of the sum, where . For this term, we know that is drawn from a Poisson distribution, with the added information that each element is bounded above by U because it is an element of the sequence , …. Thus using Lemma 3 we know that the variance of each value is lower bounded by which can in turn be lower bounded by exp(νmin + ραminU). Finally, since there are at least ξT elements of 1, 2, …, T which are in the bounded set of observations, then the worst case distribution of the observations with elements greater than U is that they are never consecutive. This maximizes the number of times there is a break in the sequence , …, which means there would be a total of T − ξT times when there was a break. Thus the probability that consecutive elements are in the set is at least ξ, meaning that the minimum eigenvalue of is lower bounded by .

2). Part 2:

Proof: To prove this part of the Theorem, we make use of Markov’s inequality and Lemma 5 as they pertain specifically to our problem. Define the sequence as

Notice the following values:

The first value shows that and therefore Yn (and the negative of the sequence, −Yn) is a martingale. Additionally, we have assumed that log MT for 1 ≤ m ≤ M and 1 ≤ i ≤ T, so it is true that . Additionally:

where the last step follows because ~ Poisson () and the mean and variance of a Poisson random variable are equal. The final line uses the fact that Xt is bounded. We will also need to bound as follows:

We need to use these values to get a bound on the summation term used in Lemma 5.

In the above corresponds to the sum corresponding to the negative sequence −Y0, −Y1, … which we will also need to obtain the desired bound. Now we are able to use a variant of Markov’s inequality to get a bound on the desired quantity.

The final inequality comes from the use of Lemma 5, which states that the given terms are supermartingales with initial term equal to 1, so the entire expectation is less than or equal to 1. The final step of the proof is to find the optimal value of η to minimize this upper bound.

Setting yields the lowest such bound, giving

where H(x) = (1 + x) log(1 + x) − x. We can use the fact that for x ≥ 0 to further simplify the bound.

To complete the proof, we set n = T and take a union bound over all indices because YT considered specific indices m and ℓ, which gives the bound

where which is positive for sufficiently large C. Here we have additionally assumed that T ≥ 2 and that log(MT) ≥ 1.

V. Discussion

Corollaries 1 and 2 provide several important facts about the inference process. Primarily, if ρ is fixed as a constant for increasing M (suggesting that the maximum degree of a node does not increase with the number of nodes in a network), then the error scales inversely with T, linearly with the sparsity level s and only logarithmically with the dimension M in order to estimate M² parameters. These parameters will dictate how much data needs to be collected to achieve a desired accuracy level. This rate illustrates the idea that doing inference in sparse settings can greatly reduce the needed amount of sensing time, especially when s ≪ M². Another quantity to notice is that we require T ≥ ω⁻⁴ρ³ log(M). If ρ is fixed as a constant for increasing M, this tells us that T needs to be on the order of log(M), which is significantly less than the total M² parameters being estimated, and therefore including the sparsity assumption has led to a significant gain. One final observation from the risk bound is that it provides guidance in the setting of the regularization parameter. We see that we would like to set λ generally as small as possible, since the error scales approximately like λ², but we also require λ at least as large as for the bounds to hold. This balance mirrors the usual trade-off in regularization: λ must be large enough to take effect, but not so large that it causes over-smoothing.

A. Dense rows of A*

The exponential scaling in Corollaries 1 and 2 with the maximum number of non-zeros in a row, ρ, at first seems unsatisfying. However, we can imagine a worst-case scenario where a large ρ relative to s and M would actually lead to very poor estimation. Consider the case of a large star-shaped network, where every node in the network influences and is influenced by a single node, and there are no other edges in the network. This would correspond to a matrix with a single, dense row and corresponding column. Therefore, we would have ρ = M and s = 2M − 1. In the Poisson setting, this network would have M − 1 independently and identically distributed Poisson random variables at every time with mean ν, but the central node of the network would be constantly inhibited, almost completely. In a large network, it would be very difficult to know if this inhibition was coming from a few strong connections or from the cumulative effect of all the inhibitions. Additionally, since the central node would almost never have a positive count, it would also be difficult to learn about the influence that node has on the rest of the network. Because of networks like this, it is important that not only is the overall network sparse, but each row also needs to be sparse. This requirement might seem restrictive, but it has been shown in many real world networks that the degree of a node in the network follows a power-law which is independent of the overall size of the network [67], and ρ would grow slowly with growing M.
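The counts ρ = M and s = 2M − 1 for the star-shaped network can be confirmed by constructing the matrix explicitly. In the sketch below the edge weight and the choice of node 0 as the center are arbitrary, and the center's self-connection is included so that its row is fully dense, which is what the count s = 2M − 1 implies.

```python
def star_network(M, weight=-1.0, center=0):
    """M x M matrix whose only non-zeros are the center's row and column."""
    A = [[0.0] * M for _ in range(M)]
    for j in range(M):
        A[center][j] = weight  # the center is influenced by every node
        A[j][center] = weight  # every node is influenced by the center
    return A


def sparsity_stats(A):
    """Return (s, rho): total non-zeros and the largest number of non-zeros in a row."""
    row_counts = [sum(1 for v in row if v != 0.0) for row in A]
    return sum(row_counts), max(row_counts)


s, rho = sparsity_stats(star_network(50))
print(s, rho)
```

For M = 50 this gives s = 99 out of 2500 possible entries, so the network is sparse overall even though one row is completely dense.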

B. Bounded observations and higher-order autoregressive processes

Recall that the definition of ensures that most observations are bounded. Bounded observations are important to our analysis because we use martingale concentration inequalities [68] which depend on bounded conditional means and conditional variances, the latter condition being equivalent to Z being strongly convex. Since the conditional means and variances are data-dependent, bounded data (at least with high probability) is a sufficient condition for bounded conditional means and conditional variances. In some settings (e.g., Bernoulli), bounded observations are natural and ξ = 1. In other settings (e.g., Poisson) there is no constant U independent of T that is an upper bound for all observations with high probability. Furthermore, if we allow U to increase with T in violation of in Definition III.1, we derive a bound on that increases polynomially with T. To avoid this and get the far better bound in Theorem 1, our proof focuses on characterizing the error on the set defined in the definition of .

Thus far we have focused on the case where , a first order autoregressive process. However, we could imagine a simple, higher-order version where for some known sequence αi. This process could be reformulated as a process where , and much of the same proof techniques would still hold, especially in the case of the Bernoulli autoregressive process, where is easily defined. However, in the more general GLAR case finding the right analogy to in the higher space is not an obvious extension. A true order-q autoregressive process where could also be formulated as an order-1 process by properly stacking vectors and matrices, however, in this case proving the key lemmas and showing that the process belongs to is also an open question.
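The stacking construction mentioned for an order-q process can be sketched as follows. The example assumes that the linear predictor of an order-2 process is A1 Xt−1 + A2 Xt−2 (the offset ν is omitted) and builds the block matrix that acts on the stacked vector (Xt−1, Xt−2). The matrices and vectors are made-up values, and the sketch only verifies the bookkeeping of the linear predictor, not any statistical property of the stacked process.

```python
def stack_coefficients(A_list):
    """Block companion matrix for lag matrices A_1, ..., A_q (each M x M)."""
    q, M = len(A_list), len(A_list[0])
    big = [[0.0] * (q * M) for _ in range(q * M)]
    for i, A in enumerate(A_list):      # top block row: [A_1 A_2 ... A_q]
        for r in range(M):
            for c in range(M):
                big[r][i * M + c] = A[r][c]
    for k in range((q - 1) * M):        # identity blocks shift the history down
        big[M + k][k] = 1.0
    return big


def matvec(A, x):
    return [sum(a * v for a, v in zip(row, x)) for row in A]


A1 = [[-0.5, 0.0], [0.2, -0.1]]
A2 = [[0.0, -0.3], [0.0, 0.0]]
x_prev, x_prev2 = [1.0, 2.0], [3.0, 1.0]  # X_{t-1} and X_{t-2}
direct = [u + v for u, v in zip(matvec(A1, x_prev), matvec(A2, x_prev2))]
stacked = matvec(stack_coefficients([A1, A2]), x_prev + x_prev2)
print(direct, stacked)
```

The first M entries of the stacked product reproduce the order-2 predictor, and the remaining entries simply copy Xt−1 forward. Those copy rows are deterministic rather than draws from the GLM, so the stacked process is not itself an instance of the first-order model analyzed above.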

C. Stationarity

As stated in the problem formulation, we restrict our attention to bounded matrices A* ∈ [αmin, αmax]M×M; in the specific context of the log-linear Poisson autoregressive model, we use αmax = 0, corresponding to a model that only accounts for inhibitory interactions. One might ask whether these constraints could be relaxed and whether the Poisson model could also account for stimulatory interactions.

These boundedness constraints are sufficient to ensure that the observed process has a stationary distribution. The stationarity of processes is heavily studied; once a process has reached its stationary distribution, then data can be approximated as independent samples from this distribution and temporal dependencies can be ignored. While stationarity does not play an explicit role in our analysis, we can identify several sufficient conditions to ensure the vector GLAR processes of interest are stationary. In particular we assume that A* = A*⊤ which ensures reversibility of the Markov chain described by the process defined by . We derive the stationary distribution π(x), and then establish bounds on the mixing time. Note that this is a Markov chain with transition kernel:

If we further assume that the entries of Xt take on values on a countable domain to ensure a countable Markov chain, we can derive bounds on the mixing time.

Lemma 2.

Assume A* = A*⊤, then the Markov chain is a reversible Markov chain with stationary distribution:

Further, if , αmax = 0 and Z(·) is an increasing function, then for any , if νm ≤ νmax < ∞ for all 1 ≤ m ≤ M and αmin ≤ 0 we have that

Notice that for large M, the chain will mix very slowly, and additionally this bound has no dependence on the sparsity of the true matrix A*. Conversely, our results require T to be greater than a value that scales roughly like ρ3 log(M), which has a much milder dependence on M, and varies based on the sparsity of A*. What we can conclude from these observations is that while the RMLE needs a certain amount of observations to yield good results, we do not necessarily need enough data to reach the stationary distribution. Additionally, under conditions where mixing time guarantees are not given (i.e. non-symmetric A*, uncountable domain), we still have guarantees on the performance of the RMLE.

VI. Conclusions

Instances of the generalized linear autoregressive process have been used successfully in many settings to learn network structure. However, this model is often used without rigorous non-asymptotic guarantees of accuracy. In this paper we have shown important properties of the Regularized Maximum Likelihood Estimator of the GLAR process under a sparsity assumption. We have proven bounds on the error of the estimator as a function of sparsity, maximum degree of a node, ambient dimension and time, and shown how these bounds look for the specific examples of the Bernoulli and Poisson autoregressive processes. In order to prove this risk bound, we have incorporated many recently developed tools of statistical learning, including concentration bounds for dependent random variables. Our results show that by incorporating sparsity the amount of data needed is on the order of ρ³ log(M) for bounded degree networks, which is a significant gain compared to the M² parameters being estimated.

While this paper has focused on generalized linear models, we believe that the extension of these ideas to other models is possible. Specifically, for modeling firing rates of neurons in the brain, we are interested in settings in which we observe

and exploring possible functions g beyond the exponential function considered here. Such analysis would allow our results to apply to stimulatory effects in addition to inhibitory effects, but key challenges include ensuring that the process is stable and, with high probability, bounded. Another direction would be settings where the counts are drawn from more complicated higher-order or autoregressive moving average (ARMA) models which would better model real-world point processes.

VII. Appendix

A. Supplementary Lemmas

First we present supplementary Lemmas which we use throughout the proofs of the main Theorems.

Lemma 3.

Let X be a Poisson random variable, with the following probability density function:

and let X′ be a random variable defined by the following pdf:

Where . Roughly speaking, X′ is generated by taking a Poisson pdf, and removing the tail probability, and scaling the remaining density so that it is a valid pdf. For this random variable, assuming U ≥ max(6, 1.5eλ, λ + 5) then

Proof: Define the error terms and . We know

| (10) |

Our strategy will be to show ϵ1, ϵ2 are small relative to λ, which will tell us Var(X’) ≈ Var(X) = λ. Intuitively, the error terms should be small relative to λ because X’ differs from X only by cutting off the extreme edge of the pdf, given the assumptions on the size of U relative to λ.
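The claim that Var(X′) ≈ Var(X) = λ under the stated conditions on U can be checked numerically. The sketch below assumes that X′ is supported on {0, …, U} and that its probabilities are the Poisson probabilities renormalized over that range; whether the endpoint U itself is included is an assumption. The value λ = 2 is an arbitrary example.

```python
import math


def truncated_poisson_moments(lam, U):
    """Mean and variance of a Poisson(lam) variable conditioned on {0, ..., U}."""
    weights = [math.exp(k * math.log(lam) - lam - math.lgamma(k + 1))
               for k in range(U + 1)]
    total = sum(weights)
    mean = sum(k * w for k, w in enumerate(weights)) / total
    var = sum((k - mean) ** 2 * w for k, w in enumerate(weights)) / total
    return mean, var


lam = 2.0
U = math.ceil(max(6, 1.5 * math.e * lam, lam + 5))  # smallest integer meeting the condition
mean, var = truncated_poisson_moments(lam, U)
print(U, mean, var)
```

With these values the truncated variance differs from λ by well under one percent, in line with the intuition that only the extreme tail is removed.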

First, we bound ϵ1. We have

Since , the first term is bounded by . To bound the second term, we note that the pdf for X’ is given explicitly as

where . And therefore

Using this fact to bound gives us

Note is the remainder term of the degree U − 1 Taylor Polynomial for eλ. We can bound this using Taylor’s Remainder theorem:

and so

where the second inequality comes from the assumption that U ≥ 1.5eλ. Here, the second fraction is small by Stirling’s approximation formula. Formally, Stirling tells us

and therefore

Combining the two terms tells us

since U ≥ 6.
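Two standard facts drive the bound on ϵ1: the Lagrange form of the Taylor remainder for the exponential, and a Stirling-type lower bound on the factorial. Since the displayed inequalities are not reproduced above, the sketch below checks the textbook versions, namely Σ_{k ≥ U} λ^k/k! ≤ e^λ λ^U/U! and U! ≥ √(2πU)(U/e)^U. These may differ in constants from the exact forms used in the proof, and the tested ranges of λ and U are arbitrary.

```python
import math


def exp_tail(lam, U, terms=200):
    """Remainder sum_{k >= U} lam^k / k! of the degree U - 1 Taylor polynomial of e^lam."""
    return sum(math.exp(k * math.log(lam) - math.lgamma(k + 1))
               for k in range(U, U + terms))


def lagrange_bound(lam, U):
    """Taylor remainder bound e^lam * lam^U / U!."""
    return math.exp(lam + U * math.log(lam) - math.lgamma(U + 1))


def stirling_lower(U):
    """Stirling-type lower bound sqrt(2 pi U) * (U / e)^U on U!."""
    return math.sqrt(2 * math.pi * U) * (U / math.e) ** U


for lam in (0.5, 1.0, 2.0):
    for U in range(6, 20):
        print(lam, U, exp_tail(lam, U) <= lagrange_bound(lam, U),
              stirling_lower(U) <= math.factorial(U))
```

Combining the two facts shows that the remainder decays faster than geometrically in U once U exceeds a constant multiple of eλ.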

and therefore

where the last inequality is due to the fact that for all k ≥ U +1. Here is the remainder term for the degree U − 2 Taylor Polynomial approximation to eλ. By the Taylor’s remainder formula, we can bound this by

and so

and since , it follows from Stirling’s approximation that

since U ≥ 6.

Putting the bounds for ϵ1 and ϵ2 back into Equation 10 gives the final form of the Lemma:

We next present a one-sided concentration bound for Poisson random variables due to Bobkov and Ledoux [69].

Lemma 4

(Proposition 10 in [69]). If X ~ Poisson(λ):

Lemma 5

(Lemma 3.3 in [68]). Let be a martingale. For all k ≥ 2, let

Then for all integers n ≥ 1 and for all η such that for all i ≤ n, ,

is a super-martingale. Additionally, if Y0 = 0, then .

Lemma 6.

Let (ϵt) be i.i.d. Rademacher random variables (i.e., ℙ(ϵt = +1) = ℙ(ϵt = −1) = 1/2), and let (Xt) be a sequence of random variables, where Xt ∈ [0, U]^M and Xt = Xt(ϵ1, ϵ2, …, ϵt−1) is a function of (ϵ1, ϵ2, …, ϵt−1). Then

with probability at least

Proof: To prove this Lemma, we once again use Markov’s inequality and Lemma 5. For a fixed m ∈ {1, …, M}, define the sequence () as

Notice the following values:

The first value shows that and therefore Yn (and the negative of the sequence, −Yn) is a martingale. Additionally, we have assumed that 0 ≤ Xm, i ≤ U for 1 ≤ m ≤ M and 1 ≤ i ≤ T, so it is true that . Additionally:

We will also need to bound as follows:

We need to use these values to get a bound on the summation term used in Lemma 5.

In the above corresponds to the sum corresponding to the negative sequence −Y0, −Y1, … which we will also need to obtain the desired bound. Now we are able to use a variant of Markov’s inequality to get a bound on the desired quantity.

The final inequality comes from the use of Lemma 5, which states that the given terms are supermartingales with initial term equal to 1, so the entire expectation is less than or equal to 1. The final step of the proof is to find the optimal value of η to minimize this upper bound.

Setting yields the lowest such bound, giving

where H(x) = (1 + x) log(1 + x) − x. We can use the fact that to further simplify the bound.

To complete the proof, we set n = T and take a union bound over all indices because YT considered specific indices m, which gives the bound

B. Proof of Lemma 1

1). Part 1:

Proof: For all 1 ≤ t ≤ T and 1 ≤ m ≤ M, Xt, m|Xt−1 is drawn from a Poisson distribution with mean for some . Because of the range of values can take, we know that where νm ≤ νmax for some νmax < ∞ for all m. Therefore, we know that

where Y is a Poisson random variable with mean eνmax. To bound this quantity we use the result of Lemma 4,

Setting ,

Here, we have assumed that C ≥ eνmax (2e − 1) and log MT ≥ 1. This upper bound is not dependent on the value of Xt−1, so this quantity is also an upper bound for the unconditional probability of Xt,m ≥ C log MT. Using this for a single index t, m of our data X, and taking a union bound over all possible indices 1 ≤ m ≤ M, 1 < t ≤ T gives

for . Thus if C > max(eνmax (2e − 1), 4 + eνmax), then c > 0, and the bound is valid. ■
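The conclusion of Part 1, that all observations fall below C log(MT) with high probability, can be illustrated by summing the Poisson tail directly instead of using the analytic bound. The sketch below takes νmax = 0 and picks C one unit above the threshold stated in the proof; both choices, as well as the (M, T) pairs, are illustrative assumptions. It evaluates the union bound MT · P(Y ≥ C log(MT)) for Y ~ Poisson(e^νmax).

```python
import math


def poisson_upper_tail(rate, threshold, terms=400):
    """P(Y >= threshold) for Y ~ Poisson(rate), summed term by term (truncated sum)."""
    k0 = math.ceil(threshold)
    return sum(math.exp(k * math.log(rate) - rate - math.lgamma(k + 1))
               for k in range(k0, k0 + terms))


nu_max = 0.0
rate = math.exp(nu_max)
C = 1.0 + max(rate * (2 * math.e - 1), 4 + rate)  # satisfies the stated condition on C
union_bounds = []
for M, T in [(10, 100), (100, 1000)]:
    threshold = C * math.log(M * T)
    union_bounds.append(M * T * poisson_upper_tail(rate, threshold))
print(union_bounds)
```

Even after multiplying by the MT terms of the union bound, the failure probability is negligible for these sizes, which matches the polynomial decay in MT claimed by the lemma.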

2). Part 2:

Proof: We are interested in bounding the number of observations Xt,m for 1 ≤ m ≤ M and 1 ≤ t ≤ T that are above the value U. Saying at least observations are less than a certain value is equivalent to saying that the jth smallest observation is less than that value. Therefore,

(jth smallest observation Xt,m > U)

Here we define , and . We then condition the values of Yt on all previous values of Y and then understand this as a marginal of the joint distribution over Yt and Xt−1. Below we use the notation Y1:t to denote all the time indices of Y from 1 to t, and similarly for y.

In the last line we use the fact that conditioned on Xt−1, Yt is independent across dimensions m, and independent of previous values Y1:t−1. We now make the observation that is exactly the probability that a Poisson random variable with rate exp() is greater than U, which can be upper-bounded by the probability that a Poisson random variable with rate exp(νmax) is greater than U because we have assumed all values of a are non-positive. Call this probability pνmax. Thus we have and therefore,

The second inequality is from the application of Taylor’s Remainder Theorem, and the third is from the fact that . Now use the fact that j = αMT as stated in the Lemma, to give

By using Lemma 4 in a similar way as was used in the proof of Lemma 1 part 1, pνmax can be controlled by U in the following way,

when U ≥ eνmax(2e − 1). Plugging the result back into the bound gives

When and additionally greater than eνmax (2e − 1) the condition from above, then the probability of this event is decaying in M and T. Therefore, for , we have the inequality

(at least αMT observations Xt,m ≤ U) ≥ 1 – e−cMT ■

C. Proof of Lemma 2

Proof: To prove the form of the stationary distribution we show that

where

Plugging in π(x) as specified,

The second to last equality uses the definition of Z as the log partition function, and the third uses the assumption that A* = A*⊤.
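The detailed balance argument can be checked by brute force on a small Bernoulli chain. Because the displayed form of π(x) is not reproduced above, the sketch uses a candidate derived under stated assumptions: for the Bernoulli model with log-partition function Z(θ) = log(1 + e^θ) and a symmetric A, the weight π(x) ∝ exp(ν·x + Σm Z(νm + am·x)) makes π(x)P(x, y) symmetric in x and y. The matrices and offsets below are made-up values, and the lemma's own expression for π may be written differently.

```python
import itertools
import math


def transition_prob(x, y, A, nu):
    """P(x, y) for the Bernoulli chain: coordinates of y are independent given x."""
    prob = 1.0
    for m, row in enumerate(A):
        theta = nu[m] + sum(a * v for a, v in zip(row, x))
        p_one = 1.0 / (1.0 + math.exp(-theta))
        prob *= p_one if y[m] == 1 else 1.0 - p_one
    return prob


def candidate_weight(x, A, nu):
    """Unnormalized candidate pi(x) = exp(nu . x + sum_m log(1 + exp(nu_m + a_m . x)))."""
    log_w = sum(n * v for n, v in zip(nu, x))
    for m, row in enumerate(A):
        log_w += math.log1p(math.exp(nu[m] + sum(a * v for a, v in zip(row, x))))
    return math.exp(log_w)


def detailed_balance_violation(A, nu):
    """Largest |pi(x) P(x, y) - pi(y) P(y, x)| over all pairs of binary states."""
    states = list(itertools.product([0, 1], repeat=len(A)))
    return max(abs(candidate_weight(x, A, nu) * transition_prob(x, y, A, nu)
                   - candidate_weight(y, A, nu) * transition_prob(y, x, A, nu))
               for x in states for y in states)


nu = [0.2, -0.1, 0.4]
A_sym = [[-0.5, -1.0, 0.0], [-1.0, 0.0, -0.3], [0.0, -0.3, -0.8]]
A_asym = [[0.0, -1.0, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
print(detailed_balance_violation(A_sym, nu), detailed_balance_violation(A_asym, nu))
```

The violation vanishes up to rounding for the symmetric matrix and is clearly non-zero for the asymmetric one, which shows where the assumption A* = A*⊤ enters.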

To prove the upper bound on total variation distance for Markov chains on countable domains, we define two chains: one chain Yt begins at the stationary distribution, and the other, independent chain Xt begins at some arbitrary random state x, both with transition kernel P. These two chains are said to be coupled if they are run independently until the first time where the states are equal, then are equal for the rest of the trial. The notation Pt(x, y) denotes the probability of transitioning from state y to state x in exactly t steps. Theorem 5.2 of [70] asserts that:

where . Note first that . Since the chains are independent until . Note also that:

where the first inequality is due to the fact that Z is an increasing function, and from the assumption that Ai,j ≥ 0. Hence. . ■

D. Empirical processes for martingale sequences

To concretely define the martingale, let (Xt)t≥1 be a sequence of random variables adapted to the filtration . First we present a bounded difference inequality for martingales developed by van de Geer [65].

Theorem 4

(Theorem 2.6 in [65]). Fix T ≥ 1 and let ZT be an -measurable random variable, satisfying for each t = 1, 2, …, T,

almost surely where Lt < Ut are constants. Define . Then for all α > 0,

The second important result we need is a notion of sequential Rademacher complexity for martingales that allows us to do symmetrization, an important step in empirical process theory (see e.g. [71]). To do this we use machinery developed in [66]. Recall that (Xt)t≥1 is a martingale and let χ be the range of each Xt. Let be a function class where for all .

To define the notion of sequential Rademacher complexity, we first let (ϵt) be a sequence of independent Rademacher random variables (i.e. ℙ(ϵt = +1) = ℙ(ϵt = −1) = 1/2). Next we define a tree process as a function of these independent Rademacher random variables.

A χ-valued tree x of depth T is a rooted complete binary tree with nodes labelled by elements of χ. We identify the tree x with the sequence (x1, x2, …, xT) of labeling functions which provide the labels for each node. Here x1 ∈ χ is the label for the root of the tree, while xt for t > 1 is the label of the node obtained by following the path of length t − 1 from the root, with +1 indicating “right” and −1 indicating “left.” Based on this tree, xt is a function of (ϵ1, ϵ2, …, ϵt−1).
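One way to make the tree concrete is to store it as a list of labeling functions, each of which reads only the signs that precede its level. The sketch below is illustrative only: `make_tree`, `labels_along_path`, and the toy labeling rule are hypothetical names and choices, and χ is taken to be the real line.

```python
from itertools import product

def make_tree(depth, label):
    """Build a tree of the given depth as a list of labeling functions.

    `label` is a hypothetical user-supplied rule mapping a sign prefix
    (a tuple of +1/-1 values, possibly empty) to a node label.
    """
    # x_t depends only on the first t - 1 signs of the path.
    return [lambda eps, t=t: label(tuple(eps[:t - 1]))
            for t in range(1, depth + 1)]

def labels_along_path(tree, eps):
    """Return [x_1, x_2(eps_1), ..., x_T(eps_1, ..., eps_{T-1})] for one path."""
    return [x_t(eps) for x_t in tree]

# Toy example: the root is labelled 0, and each step to the right (+1)
# or to the left (-1) adds that sign to the running label.
tree = make_tree(3, lambda prefix: float(sum(prefix)))
for eps in product([+1, -1], repeat=3):
    print(eps, labels_along_path(tree, eps))
```

Two sign sequences that differ only in their last entry produce the same labels, reflecting the fact that xt depends on the first t − 1 signs only.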

Based on this, we define the sequential Rademacher complexity of a function class .

Definition 1

(Definition 3 in [66]). The sequential Rademacher complexity of a function class on a χ-valued tree x is defined as

where the outer supremum is taken over all χ-valued trees. Importantly, note that (ϵt f(xt(ϵ1, ϵ2, …, ϵt−1)))t≥1 is a martingale. Now we are in a position to state the main result which allows us to do symmetrization for functions of martingales.
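For intuition, the inner expectation in this definition can be evaluated exactly for a fixed tree and a finite function class by enumerating all 2^T sign sequences. The sketch below assumes the 1/T normalization commonly used for sequential Rademacher complexity, which may differ from the normalization in the definition above; it does not attempt the outer supremum over trees. The names `seq_rademacher_on_tree` and `tree_label` are hypothetical.

```python
from itertools import product

def seq_rademacher_on_tree(functions, tree_label, T):
    """Exact value of E[ sup_f (1/T) * sum_t eps_t * f(x_t(eps_1..eps_{t-1})) ]
    for one fixed tree, by enumerating all 2**T sign sequences.

    functions  : finite list of callables f(x) -> float (the function class)
    tree_label : hypothetical rule mapping a sign prefix (tuple) to a label
    """
    total = 0.0
    for eps in product([+1, -1], repeat=T):
        # eps[t] plays the role of eps_{t+1}; its node label uses eps[:t] only.
        best = max(
            sum(eps[t] * f(tree_label(eps[:t])) for t in range(T))
            for f in functions
        )
        total += best / T
    return total / 2 ** T

# Toy class {x -> x, x -> -x} on the constant tree with every label equal to 1.
value = seq_rademacher_on_tree([lambda x: x, lambda x: -x], lambda prefix: 1.0, T=2)
print(value)

# A class with a single function: the sum is a martingale, so the mean is zero.
single = seq_rademacher_on_tree([lambda x: x], lambda prefix: float(sum(prefix)) + 1.0, T=3)
print(single)
```

With a single function the supremum is vacuous and the expectation vanishes by the martingale property noted above; the complexity becomes positive only once the class is rich enough to correlate with the signs.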

Theorem 5

(Theorem 2 in [66]).

For further details refer to [66].

Acknowledgments

We gratefully acknowledge the support of the awards NSF CCF-1418976, NSF IIS-1447449, NIH 1 U54 AI117924–01, ARO W911NF-17-1-0357, and NSF DMS-1407028.

Contributor Information

Eric C. Hall, Wisconsin Institute of Discovery, University of Wisconsin-Madison, Madison, WI 53706, USA. eric.hall87@gmail.com.

Garvesh Raskutti, Department of Statistics and the Wisconsin Institute of Discovery, University of Wisconsin-Madison, Madison, WI 53706, USA. raskutti@stat.wisc.edu.

Rebecca M. Willett, Professor of Statistics and Computer Science at the University of Chicago, Chicago, IL 60637, USA. willett@uchicago.edu.

References

- [1].Brown EN, Kass RE, and Mitra PP, “Multiple neural spike train data analysis: state-of-the-art and future challenges,” Nature neuroscience, vol. 7, no. 5, pp. 456–461, 2004.

- [2].Coleman TP and Sarma S, “Using convex optimization for nonparametric statistical analysis of point processes,” in Proc. ISIT, 2007.

- [3].Smith AC and Brown EN, “Estimating a state-space model from point process observations,” Neural Computation, vol. 15, pp. 965–991, 2003.

- [4].Hinne M, Heskes T, and van Gerven MAJ, “Bayesian inference of whole-brain networks,” arXiv:1202.1696 [q-bio.NC], 2012.

- [5].Ding M, Schroeder CE, and Wen X, “Analyzing coherent brain networks with Granger causality,” in Conf. Proc. IEEE Eng. Med. Biol. Soc, 2011, pp. 5916–8.

- [6].Pillow JW, Shlens J, Paninski L, Sher A, Litke AM, Chichilnisky EJ, and Simoncelli EP, “Spatio-temporal correlations and visual signalling in a complete neuronal population,” Nature, vol. 454, pp. 995–999, 2008.

- [7].Masud MS and Borisyuk R, “Statistical technique for analysing functional connectivity of multiple spike trains,” Journal of Neuroscience Methods, vol. 196, no. 1, pp. 201–219, 2011.

- [8].Basu S and Michailidis G, “Regularized estimation in sparse high-dimensional time series models,” Annals of Statistics, vol. 43, no. 4, pp. 1535–1567, 2015.

- [9].Han F and Liu H, “Transition matrix estimation in high dimensional time series,” in Proc. Machine Learning Research, 2013, vol. 28(2), pp. 172–180.

- [10].Kock A and Callot L, “Oracle inequalities for high dimensional vector autoregressions,” Journal of Econometrics, vol. 186(2), pp. 325–344, 2015.

- [11].Song S and Bickel PJ, “Large vector auto regressions,” Tech. Rep, UC Berkeley, 2011.

- [12].Netrapalli Praneeth and Sanghavi Sujay, “Learning the graph of epidemic cascades,” in ACM SIGMETRICS Performance Evaluation Review. ACM, 2012, vol. 40, pp. 211–222.

- [13].Altarelli Fabrizio, Braunstein Alfredo, Dall’Asta Luca, Ingrosso Alessandro, and Zecchina Riccardo, “The patient-zero problem with noisy observations,” Journal of Statistical Mechanics: Theory and Experiment, vol. 2014, no. 10, pp. P10016, 2014.

- [14].Kempe David, Kleinberg Jon, and Tardos Éva, “Maximizing the spread of influence through a social network,” in Proceedings of the ninth ACM SIGKDD international conference on Knowledge discovery and data mining. ACM, 2003, pp. 137–146.

- [15].Kuperman M and Abramson G, “Small world effect in an epidemiological model,” Physical Review Letters, vol. 86, no. 13, pp. 2909, 2001.

- [16].Johansson Per, “Speed limitation and motorway casualties: a time series count data regression approach,” Accident Analysis & Prevention, vol. 28, no. 1, pp. 73–87, 1996.

- [17].Matteson David S, McLean Mathew W, Woodard Dawn B, and Henderson Shane G, “Forecasting emergency medical service call arrival rates,” The Annals of Applied Statistics, pp. 1379–1406, 2011.

- [18].Rydberg Tina Hviid and Shephard Neil, “A modelling framework for the prices and times of trades made on the New York Stock Exchange,” Tech. Rep, Nuffield College, 1999, Working Paper W99–14.

- [19].Aït-Sahalia Y, Cacho-Diaz J, and Laeven RJA, “Modeling financial contagion using mutually exciting jump processes,” Tech. Rep, National Bureau of Economic Research, 2010.

- [20].Chavez-Demoulin V and McGill JA, “High-frequency financial data modeling using Hawkes processes,” Journal of Banking & Finance, vol. 36, no. 12, pp. 3415–3426, 2012.

- [21].Cameron A Colin and Trivedi Pravin K, Regression analysis of count data, vol. 53, Cambridge University Press, 2013.

- [22].Raginsky M, Willett R, Horn C, Silva J, and Marcia R, “Sequential anomaly detection in the presence of noise and limited feedback,” IEEE Transactions on Information Theory, vol. 58, no. 8, pp. 5544–5562, 2012.

- [23].Silva J and Willett R, “Hypergraph-based anomaly detection in very large networks,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 31, no. 3, pp. 563–569, 2009, doi: 10.1109/TPAMI.2008.232.

- [24].Stomakhin A, Short MB, and Bertozzi A, “Reconstruction of missing data in social networks based on temporal patterns of interactions,” Inverse Problems, vol. 27, no. 11, 2011.

- [25].Blundell C, Heller KA, and Beck JM, “Modelling reciprocating relationships with Hawkes processes,” in Proc. NIPS, 2012.

- [26].Zhou K, Zha H, and Song L, “Learning social infectivity in sparse low-rank networks using multi-dimensional Hawkes processes,” in Proceedings of the 16th International Conference on Artificial Intelligence and Statistics (AISTATS), 2013.

- [27].Huang Shyh-Jier and Shih Kuang-Rong, “Short-term load forecasting via ARMA model identification including non-Gaussian process considerations,” Power Systems, IEEE Transactions on, vol. 18, no. 2, pp. 673–679, 2003.

- [28].Vere-Jones D and Ozaki T, “Some examples of statistical estimation applied to earthquake data,” Ann. Inst. Statist. Math, vol. 34, pp. 189–207, 1982.

- [29].Ogata Y, “Seismicity analysis through point-process modeling: A review,” Pure and Applied Geophysics, vol. 155, no. 2–4, pp. 471–507, 1999.

- [30].Brännäs Kurt and Johansson Per, “Time series count data regression,” Communications in Statistics-Theory and Methods, vol. 23, no. 10, pp. 2907–2925, 1994.

- [31].MacDonald Iain L and Zucchini Walter, Hidden Markov and other models for discrete-valued time series, vol. 110, CRC Press, 1997.

- [32].Zeger Scott L, “A regression model for time series of counts,” Biometrika, vol. 75, no. 4, pp. 621–629, 1988.

- [33].Jørgensen Bent, Lundbye-Christensen Soren, Song PX-K, and Sun Li, “A state space model for multivariate longitudinal count data,” Biometrika, vol. 86, no. 1, pp. 169–181, 1999.

- [34].Fahrmeir Ludwig and Tutz Gerhard, Multivariate statistical modelling based on generalized linear models, Springer Science & Business Media, 2013.

- [35].Grunwald Gary K, Hyndman Rob J, Tedesco Leanna, and Tweedie Richard L, “Theory & methods: Non-Gaussian conditional linear AR (1) models,” Australian & New Zealand Journal of Statistics, vol. 42, no. 4, pp. 479–495, 2000.

- [36].Benjamin Michael A, Rigby Robert A, and Stasinopoulos D Mikis, “Generalized autoregressive moving average models,” Journal of the American Statistical Association, vol. 98, no. 461, pp. 214–223, 2003.

- [37].Gouriéroux Christian and Jasiak Joann, “Autoregressive gamma processes,” Les Cahiers du CREF of HEC Montréal Working Paper, no. 05–03, 2005.

- [38].Willett R and Nowak R, “Multiscale Poisson intensity and density estimation,” IEEE Transactions on Information Theory, vol. 53, no. 9, pp. 3171–3187, 2007, doi: 10.1109/TIT.2007.903139.

- [39].Raginsky M, Willett R, Harmany Z, and Marcia R, “Compressed sensing performance bounds under Poisson noise,” IEEE Transactions on Signal Processing, vol. 58, no. 8, pp. 3990–4002, 2010, arXiv:0910.5146.

- [40].Raginsky M, Jafarpour S, Harmany Z, Marcia R, Willett R, and Calderbank R, “Performance bounds for expander-based compressed sensing in Poisson noise,” IEEE Transactions on Signal Processing, vol. 59, no. 9, 2011, arXiv:1007.2377.

- [41].Jiang X, Raskutti G, and Willett R, “Minimax optimal rates for Poisson inverse problems with physical constraints,” IEEE Transactions on Information Theory, vol. 61, pp. 4458–4474, 2015.

- [42].Fokianos Konstantinos, Rahbek Anders, and Tjøstheim Dag, “Poisson autoregression,” Journal of the American Statistical Association, vol. 104, no. 488, pp. 1430–1439, 2009.

- [43].Zhu Fukang and Wang Dehui, “Estimation and testing for a Poisson autoregressive model,” Metrika, vol. 73, no. 2, pp. 211–230, 2011.

- [44].Fokianos Konstantinos and Tjøstheim Dag, “Log-linear Poisson autoregression,” Journal of Multivariate Analysis, vol. 102, no. 3, pp. 563–578, 2011.

- [45].Hawkes AG, “Spectra of some self-exciting and mutually exciting point processes,” Biometrika, vol. 58, no. 1, pp. 83–90, 1971.

- [46].Hawkes AG, “Point spectra of some mutually-exciting point processes,” Journal of the Royal Statistical Society. Series B (Methodological), vol. 33, pp. 438–443, 1971.

- [47].Daley DJ and Vere-Jones D, An introduction to the theory of point processes, Vol. I: Probability and its Applications, Springer-Verlag, New York, second edition, 2003.

- [48].Hansen Niels Richard, Reynaud-Bouret Patricia, and Rivoirard Vincent, “LASSO and probabilistic inequalities for multivariate point processes,” Bernoulli, vol. 21, no. 1, pp. 83–143, February 2015.

- [49].Bacry, Muzy, and Gaiffas, “A generalization error bound for sparse and low-rank multivariate Hawkes processes,” arXiv:1501.00725, 2015.

- [50].Heinen Andréas, “Modeling time series count data: an autoregressive conditional Poisson model,” Available at SSRN 1117187, 2003.

- [51].Zhu Fukang, “A negative binomial integer-valued GARCH model,” Journal of Time Series Analysis, vol. 32, no. 1, pp. 54–67, 2011.

- [52].Zhu Fukang, “Modeling time series of counts with COM-Poisson INGARCH models,” Mathematical and Computer Modelling, vol. 56, no. 9, pp. 191–203, 2012.

- [53].Zhu Fukang, “Modeling overdispersed or underdispersed count data with generalized Poisson integer-valued GARCH models,” Journal of Mathematical Analysis and Applications, vol. 389, no. 1, pp. 58–71, 2012.

- [54].Achlioptas D and McSherry F, “On spectral learning of mixtures of distributions,” in 18th Annual Conference on Learning Theory (COLT), July 2005.

- [55].van de Geer S, “High-dimensional generalized linear models and the LASSO,” Annals of Statistics, vol. 36, pp. 614–636, 2008.

- [56].Koltchinskii V and Yuan M, “Sparse recovery in large ensembles of kernel machines,” in Proceedings of COLT, 2008.

- [57].Meier L, van de Geer S, and Bühlmann P, “High-dimensional additive modeling,” Annals of Statistics, vol. 37, pp. 3779–3821, 2009.

- [58].Negahban S, Ravikumar P, Wainwright MJ, and Yu B, “A unified framework for high-dimensional analysis of M-estimators with decomposable regularizers,” Statistical Science, vol. 27, no. 4, pp. 538–557, 2010.

- [59].Raskutti G, Wainwright MJ, and Yu B, “Minimax rates of estimation for high-dimensional linear regression over ℓq-balls,” IEEE Transactions on Information Theory, vol. 57, pp. 6976–6994, 2011.

- [60].Raskutti G, Wainwright MJ, and Yu B, “Minimax-optimal rates for sparse additive models over kernel classes via convex programming,” Journal of Machine Learning Research, vol. 13, pp. 398–427, 2012.

- [61].Zhao P and Yu B, “On model selection consistency of LASSO,” Journal of Machine Learning Research, vol. 7, pp. 2541–2567, 2006.

- [62].Bühlmann P and van de Geer S, Statistics for High-Dimensional Data: Methods, Theory and Applications, Springer, 2011.

- [63].Bickel P, Ritov Y, and Tsybakov A, “Simultaneous analysis of Lasso and Dantzig selector,” Annals of Statistics, vol. 37, no. 4, pp. 1705–1732, 2009.

- [64].Jiang X, Reynaud-Bouret P, Rivoirard V, Sansonnet L, and Willett R, “A data-dependent weighted LASSO under Poisson noise,” arXiv preprint arXiv:1509.08892, 2015.