Abstract

Background:

Adults with cochlear implants (CIs) are believed to rely more heavily on visual cues during speech recognition tasks than their normal-hearing peers. However, the relationship between auditory and visual reliance during audiovisual (AV) speech recognition is unclear and may depend on an individual’s auditory proficiency, duration of hearing loss (HL), age, and other factors.

Purpose:

The primary purpose of this study was to examine whether visual reliance during AV speech recognition depends on auditory function for adult CI candidates (CICs) and adult experienced CI users (ECIs).

Study Sample:

Participants included 44 ECIs and 23 CICs. All participants were postlingually deafened and had met clinical candidacy requirements for cochlear implantation.

Data Collection and Analysis:

Participants completed City University of New York (CUNY) sentence recognition testing. Three separate lists of twelve sentences each were presented: the first in the auditory-only (A-only) condition, the second in the visual-only (V-only) condition, and the third in the combined AV condition. Each participant’s “visual enhancement” (VE) and “auditory enhancement” (AE) were computed (i.e., the benefit to AV speech recognition of adding visual or auditory information, respectively, relative to what could potentially be gained). The relative reliance on visual versus auditory information was also computed as a VE/AE ratio.

Results:

VE/AE ratio was predicted inversely by A-only performance. Visual reliance was not significantly different between ECIs and CICs. Duration of HL and age did not account for additional variance in the VE/AE ratio.

Conclusions:

A shift toward visual reliance may be driven by poor auditory performance in ECIs and CICs. The restoration of auditory input through a CI does not necessarily facilitate a shift back toward auditory reliance. Findings suggest that individual listeners with HL may rely on both auditory and visual information during AV speech recognition, to varying degrees based on their own performance and experience, to optimize communication performance in real-world listening situations.

Keywords: audiovisual speech recognition, cochlear implants, multimodal integration, speech perception

INTRODUCTION

It is well-known that both normal-hearing (NH) listeners and individuals with hearing loss (HL) rely on visual cues during speech recognition, especially when noise is introduced into the listening environment, when the speech signal is degraded, or when incongruent audiovisual (AV) stimuli are presented in laboratory settings (Sumby and Pollack, 1954; Grant et al, 1998; Rouger et al, 2007). Traditionally, as a result of their degraded auditory input, individuals with severe-to-profound HL have been thought to shift their reliance more heavily to visual cues (and away from auditory cues) during AV speech recognition tasks, relative to NH peers (Desai et al, 2008; Rouger et al, 2008; Leybaert and LaSasso, 2010; Moradi et al, 2016). It is reasonable to assume that this shift occurs to optimize communicative performance during combined AV sensory input, which is the most common communication mode experienced by listeners with HL (Dorman et al, 2016). However, the details of how visual reliance changes as a result of cochlear implantation have not been examined explicitly.

Several previous studies of AV speech recognition in clinical populations of patients with HL have focused on experienced users of cochlear implants (CIs), devices that restore auditory sensation to the listener (Rabinowitz et al, 1992; Kaiser et al, 2003; Hay-McCutcheon et al, 2005; Rouger et al, 2007; Desai et al, 2008; Strelnikov et al, 2009; Altieri et al, 2011; Stevenson et al, 2017; Schreitmüller et al, 2018). For example, several investigators have found that experienced CI users (ECIs) demonstrated stronger reliance on visual cues than NH peers (Desai et al, 2008; Rouger et al, 2008; Leybaert and LaSasso, 2010). Moreover, there is evidence that ECIs perform better than NH controls in visual-only (V-only) (i.e., speech reading) conditions of speech recognition, suggesting a shift to reliance on visual cues and away from auditory cues (Goh et al, 2001; Kaiser et al, 2003; Rouger et al, 2007; Strelnikov et al, 2009). On the other hand, Tremblay et al (2010) found no differences in speech reading abilities between groups of ECIs and NH peers, although nonproficient CI users relied on visual information more heavily than proficient CI users and NH peers when presented with an AV conflict.

One limitation of studies examining AV speech recognition in ECIs compared with NH controls is a lack of control for ECI participant variability within groups. For example, age has been found to correlate negatively with speech reading ability in adults (Hay-McCutcheon et al, 2005; Sommers et al, 2005; Tye-Murray et al, 2007; 2010; Schreitmüller et al, 2018) and may contribute to outcome variability in groups of ECIs. In addition, the degree of visual reliance appears to relate to the duration of HL (i.e., greater visual dominance with longer experience of HL) (Giraud et al, 2001; Giraud and Lee, 2007), but it is unclear how this reliance changes with prolonged use of a CI. Although speech reading enhancement has been found to persist in the years after implantation (Rouger et al, 2007), it has also been reported that visual reliance decreases in direct relation to duration of CI use (Desai et al, 2008). None of these studies, however, explicitly examined whether the degree of visual reliance by individual listeners was related to the severity of their HL or their auditory-only (A-only) performance.

The first aim of this study was to answer the following question: For individual listeners with HL, does the magnitude of reliance on visual input during AV speech recognition depend on the quality of that individual’s auditory function? We hypothesized that to optimize AV speech recognition, individuals with HL would show reliance on visual information, with the magnitude of this visual reliance being inversely related to the quality of their A-only speech recognition. That is, individual listeners with relatively poorer auditory function would demonstrate greater reliance on visual input during AV speech recognition. We predicted that once this auditory function was accounted for, other audiologic factors previously found to contribute to variability in visual reliance, such as duration of HL prior to CI (Giraud and Lee, 2007), would not independently provide additional predictive power to explain the magnitude of visual reliance. In addition to auditory function, age was included as a predictor because it has previously been found to impact visual reliance (Tye-Murray et al, 2007; 2010; Schreitmüller et al, 2018).

In addition to those limitations, previous studies of ECIs were not able to determine whether changes in AV speech recognition abilities could be attributed to the experience of prolonged severe-to-profound HL before implantation, or whether these changes were a result of the restoration of auditory input through a CI. The present study was designed to disentangle the effects of prolonged HL and CI intervention on AV speech recognition skills by testing both a group of ECIs and a group of CI candidates (CICs) with severe-to-profound HL. Our second aim was to answer the following question: Does restoration of auditory input through a CI result in relatively greater reliance on auditory input during AV speech recognition? We hypothesized that, as a result of restoration of auditory input, ECIs would demonstrate greater reliance on auditory cues and less reliance on visual cues during AV speech recognition than CIC listeners.

To test the hypotheses, participants with HL were asked to repeat sentences presented in A-only, V-only, and combined AV fashion. To perform analyses, it was necessary to compute appropriate metrics of relative visual and auditory gains during multimodal AV speech recognition by comparing AV performance with performance in either unimodal condition (A-only or V-only) alone. Several measures related to AV speech recognition can be computed, based on recommendations in previous work (Sommers et al, 2005; Tye-Murray et al, 2007). The simplest measure of visual gain is the difference score between AV performance and A-only performance; however, this metric is biased because high A-only scores result in artificially low visual gain scores (Grant et al, 1998). A better approach therefore takes the listener’s A-only performance into account. In this case, “visual enhancement” (VE) (Grant et al, 1998; Grant and Seitz, 1998; Sommers et al, 2005; Rouger et al, 2007; Tye-Murray et al, 2007; 2010; Schreitmüller et al, 2018) expresses the amount of visual gain observed for a given individual relative to what could possibly be gained over A-only performance. When AV and A-only scores are represented as percent words correct, VE is computed as follows:

$$\mathrm{VE} = \frac{\mathrm{AV} - \text{A-only}}{100 - \text{A-only}}$$

A second measure, “auditory enhancement” (AE) (Rabinowitz et al, 1992; Hay-McCutcheon et al, 2005; Sommers et al, 2005; Tye-Murray et al, 2007; 2010; Desai et al, 2008; Schreitmüller et al, 2018), represents the benefit of adding auditory information relative to what potentially can be gained over V-only performance (also represented as percent words correct). AE is computed as follows:

$$\mathrm{AE} = \frac{\mathrm{AV} - \text{V-only}}{100 - \text{V-only}}$$

Finally, in the present study, we computed the ratio of VE to AE (VE/AE), representing the relative reliance on visual versus auditory gain during AV speech recognition. Our first hypothesis was that, as a result of their experience of HL, individual listeners with HL would demonstrate a VE/AE ratio that would be inversely related to that listener’s auditory ability (A-only performance). Our second hypothesis was that, as a result of restoration of auditory input through a CI, ECIs would demonstrate greater reliance on auditory input (and less reliance on visual input) and, thus, smaller VE/AE ratios than their CIC counterparts.
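To illustrate how the ratio behaves, the worked example below applies VE = (AV − A-only)/(100 − A-only) and AE = (AV − V-only)/(100 − V-only) to two hypothetical listeners. The scores are invented for illustration and are not data from this study.

```latex
\begin{aligned}
&\text{Listener 1: A-only} = 40,\ \text{V-only} = 10,\ \mathrm{AV} = 70\\
&\mathrm{VE} = \frac{70 - 40}{100 - 40} = 0.50,\quad
 \mathrm{AE} = \frac{70 - 10}{100 - 10} = \tfrac{2}{3} \approx 0.67,\quad
 \frac{\mathrm{VE}}{\mathrm{AE}} = \frac{0.50}{2/3} = 0.75\\[4pt]
&\text{Listener 2: A-only} = 80,\ \text{V-only} = 10,\ \mathrm{AV} = 90\\
&\mathrm{VE} = \frac{90 - 80}{100 - 80} = 0.50,\quad
 \mathrm{AE} = \frac{90 - 10}{100 - 10} = \tfrac{8}{9} \approx 0.89,\quad
 \frac{\mathrm{VE}}{\mathrm{AE}} = \frac{0.50}{8/9} \approx 0.56
\end{aligned}
```

Both hypothetical listeners recover the same proportion of their A-only headroom (VE = 0.50), but the listener with better A-only performance has the larger AE and therefore the smaller VE/AE ratio, which is the direction of the inverse relation the first hypothesis predicts.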

By testing these two hypotheses, this study aimed to examine more explicitly the visual reliance demonstrated during speech recognition by listeners with HL and to investigate how this reliance relates to auditory processing abilities. The study also sought to investigate the effects of restoring auditory input through a CI on AV speech recognition.

METHODS

Participants

Participants were 67 native English speakers with at least a high school diploma or equivalent. They were recruited from the adult patient population of a single tertiary care clinical CI program. The ECIs were established CI users recruited from the pool of clinical patients, either in person during a follow-up audiology visit or by letter of invitation. The CICs were patients who had recently been determined clinically to be CI candidates and were invited in person to participate. Cognitive impairment was screened using a visually presented version of the Mini-Mental State Examination (MMSE) (Folstein et al, 1975), with an MMSE raw score ≥26 required; all participants met this criterion, suggesting no evidence of cognitive impairment. Basic word reading was screened using the Wide Range Achievement Test (Wilkinson and Robertson, 2006); participants were required to have a word reading standard score ≥75, suggesting reasonably normal general language proficiency. Because tasks were presented visually, all participants were screened for vision using a basic near-vision test and were required to have near vision better than 20/40. Two participants had vision scores of 20/50 but displayed normal reading scores, suggesting sufficient visual abilities to include their data in analyses. Socioeconomic status (SES) was also collected because it may serve as a proxy for speech and language abilities. SES was quantified using a metric developed by Nittrouer and Burton (2005), with separate scales for occupational and educational levels, each ranging from 1 to 8 (8 being the highest level). The two scores were then multiplied, yielding SES scores between 1 and 64.
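The SES arithmetic described above can be sketched as a small Python function. Only the two 1–8 scales and their multiplication come from the text; the function name and the range check are illustrative assumptions, and the definitions of the individual scale levels (Nittrouer and Burton, 2005) are not reproduced.

```python
def ses_score(occupation: int, education: int) -> int:
    """Hypothetical helper: SES as the product of occupation and education levels.

    Both inputs are assumed to be integer levels on 1-8 scales (8 = highest),
    so the product falls between 1 and 64.
    """
    for name, level in (("occupation", occupation), ("education", education)):
        if not 1 <= level <= 8:
            raise ValueError(f"{name} level must be between 1 and 8, got {level}")
    return occupation * education


print(ses_score(4, 6))  # 24
print(ses_score(8, 8))  # 64, the maximum possible score
```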

All 44 ECIs and 23 CICs had met clinical candidacy requirements for cochlear implantation, including severe-to-profound HL in both ears and best-aided sentence recognition scores of <60% words correct using AzBio (Spahr et al, 2012) or Hearing in Noise Test sentences (Nilsson et al, 1994). Both groups were recruited from the patient population of our neurotology tertiary care center. All ECIs and CICs were postlingually deafened, meaning they should have developed reasonably proficient language skills before losing their hearing. Thirty-four (77.3%) ECIs and 21 (91.3%) CICs reported onset of HL no earlier than age 12 years (i.e., NH until puberty). The remaining ten (22.7%) ECIs and two (8.7%) CICs reported some degree of congenital HL or onset of HL during childhood. However, all participants self-reported that they had received early hearing aid intervention and experienced typical A-only spoken language development during childhood, had been mainstreamed in conventional schools with spoken language instruction, and had experienced progressive HL into adulthood. All ECIs received their implants at or after age 35 years.

ECIs were between the ages of 50 and 83 years. All ECIs demonstrated clinical CI-aided warble-tone thresholds better than 35 dB HL across the speech frequencies. Duration of HL ranged from 4 to 76 years, and duration of CI use ranged from 18 months to 27 years. CICs were between the ages of 49 and 94 years. Duration of HL ranged from 11 to 53.5 years, and duration of hearing aid use ranged from 2 to 46 years.

Equipment and Materials

Speech recognition and audiometric testing took place in a sound-treated booth, and screening measures were collected in an acoustically insulated testing room. Tasks requiring verbal responses from participants were audio-visually recorded for later scoring. Participants wore FM transmitters in specially designed vests, which fed their responses directly into the camera and permitted later off-line scoring of tasks.

Visual stimuli were presented on a computer monitor placed two feet in front of the participant. Auditory stimuli were presented via a Roland MA-12C speaker placed one meter in front of the participant at 0° azimuth. Before the testing session, the speaker was calibrated to 68 dB SPL using a sound level meter positioned at the location of the participant’s head.

After the screening measures were completed, City University of New York (CUNY) sentences were administered (Boothroyd et al, 1985a). Three separate lists of twelve CUNY sentences were presented, and each list contained 102 words. One list was presented in the A-only condition, one in the V-only condition, and the third in combined AV fashion. Condition order was always A-only, V-only, then AV, but sentence lists were randomized across participants. Sentences were presented via computer monitor and/or loudspeaker, and participants were asked to repeat as much of each sentence as they could. The sentences were spoken by a single female talker and varied in length and subject matter. An example sentence is: “The forecast for tomorrow is clear skies, low humidity, and mild temperatures.” Scores for each measure were the percentage of total words repeated correctly.

General Approach

The study protocol was approved by the local Institutional Review Board. All participants provided informed, written consent and were reimbursed $15 per hour for participation. Testing was completed over a single 30-min session, with breaks between lists to prevent fatigue. During testing, ECI participants and CICs used their typical hearing prostheses, including any hearing aids.

Data Analyses

As described previously, the following values were computed for each individual listener in their best-aided condition:

VE = (AV – A-only)/(100 – A-only);

AE = (AV – V-only)/(100 – V-only);

VE/AE ratio, computed as the main outcome measure for analyses.
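A minimal Python sketch of the per-listener computations listed above follows. The 102-word list length comes from the Methods; the function names, and the choice to return NaN when a denominator is zero (a unimodal score of 100%, or AE = 0), are assumptions, since the text does not say how such cases were handled.

```python
import math

WORDS_PER_LIST = 102  # each CUNY list used in this study contained 102 words


def percent_correct(words_correct: int, total_words: int = WORDS_PER_LIST) -> float:
    """Score one list as percent words correct."""
    return 100.0 * words_correct / total_words


def enhancement(av: float, unimodal: float) -> float:
    """Gain in AV over a unimodal score, relative to that score's headroom.

    enhancement(AV, A-only) is VE; enhancement(AV, V-only) is AE.
    Returns NaN when the unimodal score is 100% (no headroom left).
    """
    headroom = 100.0 - unimodal
    if headroom == 0:
        return math.nan
    return (av - unimodal) / headroom


def ve_ae(a_only: float, v_only: float, av: float) -> tuple:
    """Return (VE, AE, VE/AE) for one listener; all scores are percent words correct."""
    ve = enhancement(av, a_only)
    ae = enhancement(av, v_only)
    # The ratio is undefined when AE is 0 (AV equals V-only) or AE itself is undefined.
    ratio = math.nan if math.isnan(ae) or ae == 0 else ve / ae
    return ve, ae, ratio


# Hypothetical listener (not study data): 40% A-only, 10% V-only, 70% AV
print(ve_ae(40, 10, 70))  # approximately (0.5, 0.667, 0.75)
```

Note that VE, and therefore the ratio, becomes negative whenever a listener scores lower in AV than in A-only.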

Our first hypothesis was that individual listeners with HL would demonstrate a VE/AE ratio that would be inversely related to that listener’s auditory ability (A-only performance), and our second hypothesis was that ECIs would demonstrate greater reliance on auditory input (and less reliance on visual input) and, thus, smaller VE/AE ratios than their CIC counterparts. To test these two hypotheses, a blockwise (hierarchical) multiple linear regression analysis was performed with VE/AE ratio as the outcome. In the first block, predictors were A-only performance (to test the first hypothesis) and group (ECI versus CIC, to test the second hypothesis). In the second block, participant duration of HL before CI and age were entered as additional predictors.
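The blockwise procedure can be sketched with NumPy ordinary least squares: fit the Block 1 predictors, add the Block 2 predictors, and compare R² with an F-change test. The study does not name its statistical software, and the data below are simulated rather than study data; the variable names, the 0/1 group coding, and the simulated effect sizes are assumptions for illustration.

```python
import numpy as np


def r_squared(X: np.ndarray, y: np.ndarray) -> float:
    """R^2 of an ordinary least-squares fit of y on X; an intercept column is added."""
    design = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    residuals = y - design @ coef
    ss_res = float(residuals @ residuals)
    ss_tot = float(((y - y.mean()) ** 2).sum())
    return 1.0 - ss_res / ss_tot


def f_from_r2(r2: float, k: int, n: int) -> float:
    """Overall F statistic for k predictors (plus intercept) fitted to n cases."""
    return (r2 / k) / ((1.0 - r2) / (n - k - 1))


def blockwise(block1: np.ndarray, block2: np.ndarray, y: np.ndarray) -> dict:
    """Enter block1, then add block2; return R^2 at each step, F-change, and overall F."""
    n = len(y)
    k1 = block1.shape[1]
    k2 = k1 + block2.shape[1]
    r2_1 = r_squared(block1, y)
    r2_2 = r_squared(np.column_stack([block1, block2]), y)
    f_change = ((r2_2 - r2_1) / (k2 - k1)) / ((1.0 - r2_2) / (n - k2 - 1))
    return {
        "r2_block1": r2_1,
        "r2_block2": r2_2,
        "f_change": f_change,
        "f_overall": f_from_r2(r2_2, k2, n),
        "df": (k2, n - k2 - 1),
    }


# Simulated data only (not study data): 65 listeners, four predictors in total.
rng = np.random.default_rng(0)
n = 65
a_only = rng.uniform(0, 100, n)       # A-only % words correct
group = rng.integers(0, 2, n)         # hypothetical coding: 1 = ECI, 0 = CIC
duration_hl = rng.uniform(4, 76, n)   # years of HL before CI
age = rng.uniform(49, 94, n)          # years
ve_ae_ratio = 0.9 - 0.005 * a_only + rng.normal(0, 0.3, n)

result = blockwise(
    np.column_stack([a_only, group]),
    np.column_stack([duration_hl, age]),
    ve_ae_ratio,
)
print(result["df"], round(result["r2_block1"], 3), round(result["r2_block2"], 3))
```

With 65 cases and four predictors the full model has df = (4, 60). As a consistency check, `f_from_r2(0.150, 4, 65)` gives about 2.65, in line with the full-model R² and F(4, 60) reported in the Results.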

RESULTS

For analyses, an alpha of 0.05 was set. When p > 0.05, outcomes are reported as not significant. For the ECI group, side of implantation (left, right, or bilateral) did not influence any speech recognition scores. Also, no differences in any scores were found for ECIs who wore only CIs versus a CI plus hearing aid. Therefore, the data were collapsed across all ECIs in all subsequent analyses reported in the following paragraphs.

Reliability

Interscorer reliability was assessed for tests that involved AV recording and off-line scoring of responses. All responses were scored by one trained scorer, and responses from 25% of participants (n = 17) were scored again by a second scorer. Because interscorer reliability exceeded 90% (range: 93–100%) for the MMSE, word reading, and CUNY sentence recognition, scores from the initial scorer were used in analyses.

Group Data

Group mean demographic and screening measures for the ECIs and CICs are shown in Table 1. No significant differences were found on independent-samples t-tests between groups for age, duration of HL before CI, word reading ability, MMSE score, or SES.

Table 1.

Participant Demographics for ECI and CIC Groups

| Measure | ECI (N = 44), Mean (SD) | CIC (N = 23), Mean (SD) | t Value | p Value |
|---|---|---|---|---|
| **Demographics** | | | | |
| Age (years) | 67.2 (10.2) | 68.2 (10.9) | 0.37 | 0.708 |
| Duration of HL before CI (years) | 33.9 (19.2) | 30.0 (12.7) | 0.85 | 0.400 |
| Duration of CI use (years) | 4.8 (6.4) | | | |
| Reading (standard score) | 98.4 (12.0) | 97.3 (11.0) | 0.38 | 0.715 |
| SES | 26.7 (14.5) | 29.7 (13.7) | 0.84 | 0.404 |
| MMSE (raw score) | 28.8 (1.3) | 28.6 (1.3) | 0.43 | 0.668 |

Notes: t values and p values for independent-samples t-tests are shown. Reported degrees of freedom for t-tests are 65.
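The t values in Table 1 can be approximately reproduced from the tabled means and SDs. The sketch below assumes a pooled-variance (Student’s) independent-samples t-test; the reported df of 65 (44 + 23 − 2) is consistent with that choice, but the text does not name the exact test variant. Small discrepancies from the tabled values can arise because the means and SDs are rounded.

```python
import math


def pooled_t(mean1: float, sd1: float, n1: int, mean2: float, sd2: float, n2: int):
    """Student's independent-samples t from summary statistics (equal variances assumed).

    Returns (t, df); t is positive when mean2 > mean1.
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean2 - mean1) / se, df


# Age row of Table 1: ECI 67.2 (10.2), N = 44; CIC 68.2 (10.9), N = 23
t, df = pooled_t(67.2, 10.2, 44, 68.2, 10.9, 23)
print(f"t({df}) = {t:.2f}")  # t(65) = 0.37
```

A two-sided p value could then be obtained from the t distribution, for example with `2 * scipy.stats.t.sf(abs(t), df)`.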

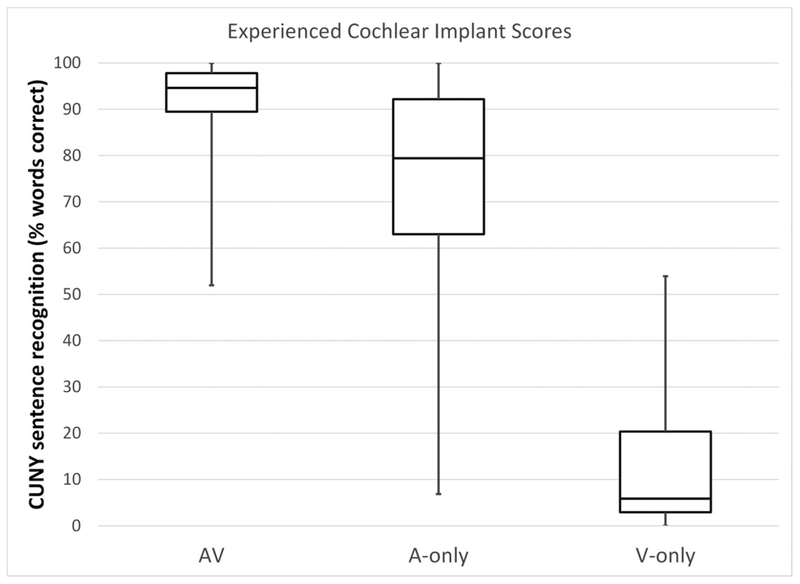

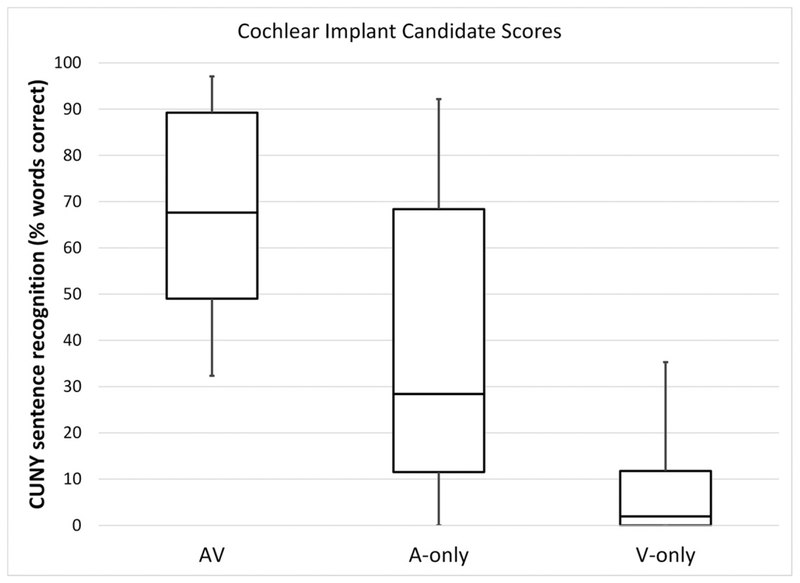

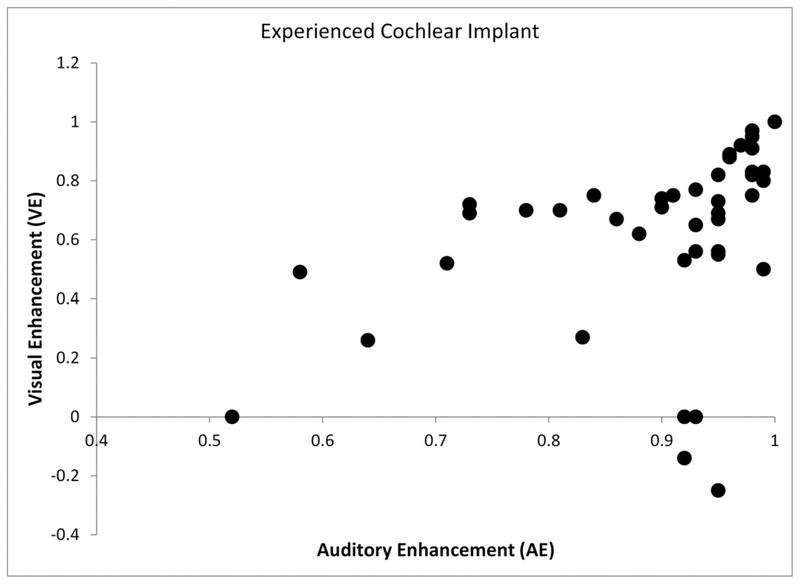

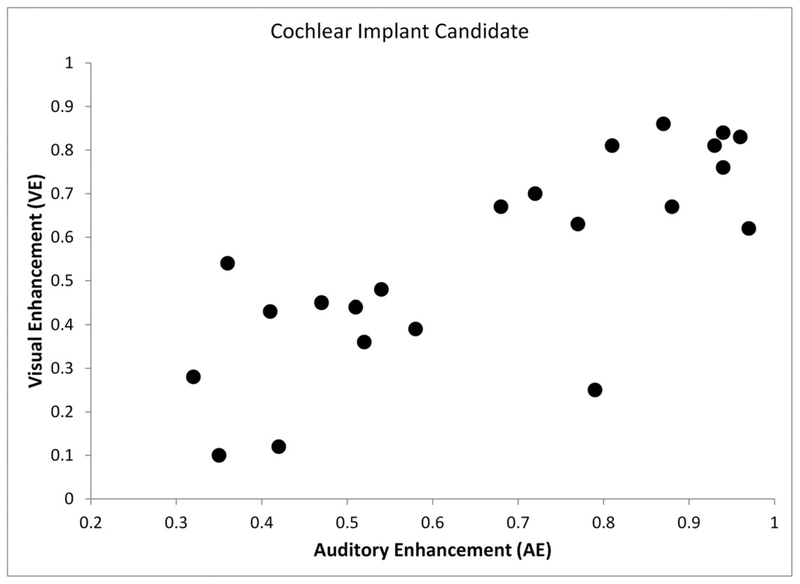

Results for ECIs and CICs on CUNY sentence recognition measures are shown in Table 2, along with computed VE, AE, and VE/AE scores. Boxplots of the data are shown in Figure 1 (ECIs) and Figure 2 (CICs), and scatterplots of VE versus AE are shown in Figure 3 (ECIs) and Figure 4 (CICs). One ECI participant demonstrated a VE/AE ratio more than 2 standard deviations (SDs) below the mean (VE/AE = −1.58), so data for this participant were excluded from further analyses. Our first hypothesis was that individual listeners with HL would demonstrate a VE/AE ratio that would be inversely related to that listener’s auditory ability (A-only performance). Our second hypothesis was that, as a result of restoration of auditory input through a CI, ECIs would demonstrate smaller VE/AE ratios than their CIC counterparts. A blockwise multiple linear regression analysis was performed with VE/AE ratio as the outcome, A-only performance and group (ECI versus CIC) as predictors in the first block, and duration of HL before implantation and age as predictors in the second block. The full model was significant [F(4, 60) = 2.65, p = 0.042]; results are reported in Table 3. VE/AE ratio was predicted negatively and significantly by A-only performance. In contrast, group did not significantly predict VE/AE ratio, although the mean VE/AE ratio was 0.79 for CICs and 0.68 for ECIs. Duration of HL before implantation and participant age also did not account for additional variance in VE/AE ratio.
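The exclusion rule described above can be sketched as a one-sided screen. The text does not state whether the SD was computed with or without the candidate outlier or whether a sample or population SD was used; this sketch assumes the sample SD over all values, including the candidate. Apart from echoing the −1.58 value, the ratios are made up for illustration.

```python
import statistics


def flag_low_outliers(values, k=2.0):
    """Return indices of values more than k sample SDs below the mean (one-sided screen)."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample SD (n - 1 denominator)
    return [i for i, v in enumerate(values) if v < mean - k * sd]


# Made-up VE/AE ratios for ten hypothetical listeners
ratios = [0.70, 0.60, 0.80, 0.65, 0.75, 0.70, 0.55, 0.90, 0.68, -1.58]
print(flag_low_outliers(ratios))  # [9]
```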

Table 2.

Speech Recognition Scores for ECI and CIC Groups

| Measure | ECI (N = 42), Mean (SD) | CIC (N = 23), Mean (SD) |
|---|---|---|
| **CUNY Sentences** | | |
| A-only (% words correct) | 73.2 (21.6) | 35.5 (29.1) |
| V-only (% words correct) | 12.9 (14.3) | 6.9 (9.4) |
| AV (% words correct) | 90.5 (10.7) | 67.6 (22.3) |
| VE score | 0.61 (0.31) | 0.53 (0.25) |
| AE score | 0.89 (0.12) | 0.66 (0.23) |
| VE/AE ratio | 0.68 (0.32) | 0.79 (0.29) |

Figure 1.

Boxplots of scores for ECI users on CUNY sentence recognition in AV, A-only, and V-only conditions. For each condition, the median is represented by the horizontal line that divides the box into two parts. The upper limit of the box represents the 75th percentile and the lower limit of the box represents the 25th percentile. Upper and lower whiskers represent maximum and minimum scores, respectively.

Figure 2.

Boxplots of scores for CICs on CUNY sentence recognition in AV, A-only, and V-only conditions. For each condition, the median is represented by the horizontal line that divides the box into two parts. The upper limit of the box represents the 75th percentile and the lower limit of the box represents the 25th percentile. Upper and lower whiskers represent maximum and minimum scores, respectively.

Figure 3.

Scatterplot of VE versus AE scores for ECI users on CUNY sentence recognition.

Figure 4.

Scatterplot of VE versus AE scores for CICs on CUNY sentence recognition.

Table 3.

Results of Linear Regression Analyses for VE/AE Ratio

| Predictor | B | SE (B) | β | t | p | R | R² |
|---|---|---|---|---|---|---|---|
| Full model | | | | | | 0.387 | 0.150 |
| **Block 1** | | | | | | | |
| A-only performance (% words correct) | −0.005 | 0.002 | −0.382 | −2.58 | **0.012** | | |
| Group (ECI vs. CIC) | −0.030 | 0.064 | −0.070 | −0.47 | 0.637 | | |
| **Block 2** | | | | | | | |
| Duration of HL before CI (years) | 0.004 | 0.003 | 0.183 | 1.48 | 0.144 | | |
| Age (years) | 0.000 | 0.005 | 0.007 | 0.057 | 0.954 | | |

Note: Dependent measure: VE/AE ratio (ratio of VE score to AE score). A-only performance and group were entered in Block 1; duration of HL before CI and age were entered in Block 2. R and R² are for the full model. Values shown in bold for p < 0.05.

DISCUSSION

The objective of this study was twofold: to determine whether the magnitude of visual reliance during AV speech recognition in listeners with HL was inversely related to their auditory performance, and to examine the effects of restoring auditory input through a CI on this visual reliance. Results supported our first hypothesis: A-only performance was a negative predictor of visual reliance as measured by the VE/AE ratio, and duration of HL and participant age did not account for additional variance in visual reliance. Although this finding does not prove a causal relationship between poorer A-only performance and greater reliance on visual information during AV speech recognition, it is consistent with that concept. However, our findings cannot inform us about the time course of the shift toward visual reliance during AV speech recognition. We suspect that this shift occurs as the listener adapts over a prolonged period to chronically degraded auditory input. Alternatively, the shift could be rapid and automatic, with the listener quickly redirecting attention to visual cues under degraded listening conditions. This latter hypothesis could be tested by presenting listeners with AV speech stimuli across a range of noise levels, or by using speech materials of varying difficulty, and determining whether and how visual reliance shifts. Additional studies will be required to establish the time course of this shift toward visual reliance.

An alternative explanation for our findings may be that participants who rely more on visual information simply pay less attention during A-only speech recognition. This explanation is made less likely by the demands of our task: participants were specifically asked to repeat as many words in each sentence as possible, including in the A-only condition, which should have directed their attention toward optimizing speech recognition in that condition. It should also be noted that our task may not be representative of everyday speech recognition demands, in which the listener may focus more on comprehension of the message and less on recognition of individual words. In addition, our tasks included only CUNY sentence materials, which are relatively high in context and were produced by a single talker. It is unclear how well our findings translate to speech materials with variable context and multiple talkers. Moreover, only a single list of CUNY sentences was tested in each condition (A-only, V-only, and AV), which could have limited our ability to identify a significant group effect. However, the differences in mean scores among lists for each group were relatively large (i.e., greater than the 16-percentage-point 95% confidence interval determined by Boothroyd et al [1985b]), suggesting that our findings were reliable overall.

Our results demonstrated that restoration of auditory input through a CI did not lead to a statistically significant difference in visual reliance between ECIs and CICs. CICs did show a larger mean VE/AE ratio (0.79) than ECIs (0.68), but this difference was not significant. It is possible that, because of the broad variability in VE/AE ratios among participants, this study was underpowered to detect a group difference. In addition, a better way to assess the effects of cochlear implantation on visual reliance would be to test a group of CICs preoperatively and then retest the same group longitudinally 6 or 12 months after CI activation. Such a study is currently in progress and will shed light on shifts in visual reliance that may occur within participants as a result of implantation, rather than comparing visual reliance between two separate groups of listeners.

Findings from this study have clinical implications. First, results are consistent with the anecdotal experience of many clinicians that the more severe a patient’s prolonged HL, the more that patient tends to rely on visual cues (i.e., speech reading) during AV speech recognition. Our findings, although not conclusive, may provide additional support for the idea that the auditory performance of the listener drives a shift toward visual reliance. This could suggest that optimizing amplification through a hearing aid before cochlear implantation might prevent (at least to some degree) the shift to visual reliance that would otherwise occur. Preventing that shift may have a positive impact on the patient’s ultimate speech recognition outcome after receiving a CI. For example, a recent study using positron emission tomography imaging in adult CI users showed that less activation of auditory cortical regions during a V-only speech reading task, which we posit may have reflected less of a shift to visual reliance, was associated with better speech recognition outcomes in the A-only listening condition (Strelnikov et al, 2013). A second clinical implication is that restoration of auditory input through a CI may not necessarily shift a listener’s reliance back toward auditory processing and away from visual processing during AV speech recognition. This would be consistent with Rouger et al (2007), who demonstrated in CI users that enhanced visual reliance (in that study, speech reading ability alone) persisted in the years after cochlear implantation. Results of our ongoing longitudinal study of AV speech recognition before and after CI in a single group of participants will help clarify the changes in visual reliance that may occur after implantation.

There are several additional limitations of this study that should be acknowledged. First, twelve participants were included whose HL started before the age of 12 years, meaning they might be considered “peri-lingual” rather than post-lingual. It is possible that this impacted their performance during CUNY sentence testing. Second, it is possible, although unlikely, that the findings from this study are specific to CUNY sentence materials, and not more broadly applicable to phoneme, word, or sentence recognition using other materials. Third, although significant differences were identified at the group level between AV speech recognition and A-only speech recognition, these differences may not be clinically significant at the individual level, which limits our ability to establish from the present study whether AV measures are clinically valuable above A-only measures. Last, some ECI users demonstrated AV scores that were at or near ceiling performance, and this may have restricted the magnitude of the VE/AE ratio computed for those individuals. In theory, then, the mean VE/AE ratio for the ECIs would actually be larger and closer to the value for the CICs, still counter to our hypothesis. Thus, this limitation most likely did not impact the results of the study.

CONCLUSION

Findings from this study suggest that the degree to which listeners with HL rely on visual information during AV speech recognition depends on the quality of their A-only speech recognition. ECIs do not necessarily demonstrate a shift away from visual reliance as a result of using their CIs, as shown by their visual reliance being similar to that of CICs. Additional studies will be needed to investigate whether longitudinal changes in hearing status result in shifts in sensory reliance during AV speech recognition.

Acknowledgments

This work was supported by the American Otological Society Clinician-Scientist Award and the National Institutes of Health and National Institute on Deafness and Other Communication Disorders (NIDCD) Career Development Award 5K23DC015539-02 to Aaron Moberly. Research reported in this paper received IRB approval from The Ohio State University.

A.C.M. receives grant funding support from Cochlear Americas for an unrelated investigator-initiated research study.

Abbreviations:

- AE: auditory enhancement
- A-only: auditory-only
- AV: audiovisual
- CI: cochlear implant
- CICs: cochlear implant candidates
- CUNY: City University of New York
- ECIs: experienced cochlear implant users
- HL: hearing loss
- MMSE: Mini-Mental State Examination
- NH: normal-hearing
- SD: standard deviation
- SES: socioeconomic status
- VE: visual enhancement
- V-only: visual-only

Footnotes

Data from this manuscript were presented at the AAA 2018 annual conference of the American Academy of Audiology, Nashville, TN, April 18–21, 2018.

REFERENCES

- Altieri NA, Pisoni DB, Townsend JT. (2011) Some normative data on lip-reading skills. J Acoust Soc Am 130(1):1–4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Boothroyd A, Hanin L, Hnath T. (1985a) CUNY Laser Videodisk of Everyday Sentences. New York, NY: Speech and Hearing Sciences Research Center; City University of New York. [Google Scholar]

- Boothroyd A, Hanin L, Hnath T. (1985b) A sentence Test of Speech Perception: Reliability, Set Equivalence, and Short-Term Learning Internal Report RCI 10. New York, NY: Speech and Hearing Sciences Research Center; City University of New York. [Google Scholar]

- Desai S, Stickney G, Zeng FG. (2008) Auditory-visual speech perception in normal-hearing and cochlear-implant listeners. J Acoust Soc Am 123(1):428–440. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dorman MF, Liss J, Wang S, Berisha V, Ludwig C, Natale SC. (2016) Experiments on auditory-visual perception of sentences by users of unilateral, bimodal, and bilateral cochlear implants. J Speech Lang Hear Res 59(6):1505–1519.

- Folstein MF, Folstein SE, McHugh PR. (1975) “Mini-mental state”: a practical method for grading the cognitive state of patients for the clinician. J Psychiatr Res 12(3):189–198.

- Giraud AL, Lee HJ. (2007) Predicting cochlear implant outcome from brain organisation in the deaf. Restor Neurol Neurosci 25(3–4):381–390.

- Giraud AL, Truy E, Frackowiak R. (2001) Imaging plasticity in cochlear implant patients. Audiol Neurootol 6(6):381–393.

- Goh WD, Pisoni DB, Kirk KI, Remez RE. (2001) Audio-visual perception of sinewave speech in an adult cochlear implant user: a case study. Ear Hear 22(5):412.

- Grant KW, Seitz PF. (1998) Measures of auditory–visual integration in nonsense syllables and sentences. J Acoust Soc Am 104(4):2438–2450.

- Grant KW, Walden BE, Seitz PF. (1998) Auditory-visual speech recognition by hearing-impaired subjects: consonant recognition, sentence recognition, and auditory-visual integration. J Acoust Soc Am 103:2677–2690.

- Hay-McCutcheon MJ, Pisoni DB, Kirk KI. (2005) Audiovisual speech perception in elderly cochlear implant recipients. Laryngoscope 115(10):1887–1894.

- Kaiser AR, Kirk KI, Lachs L, Pisoni DB. (2003) Talker and lexical effects on audiovisual word recognition by adults with cochlear implants. J Speech Lang Hear Res 46(2):390–404.

- Leybaert J, LaSasso CJ. (2010) Cued speech for enhancing speech perception and first language development of children with cochlear implants. Trends Amplif 14(2):96–112.

- Moradi S, Lidestam B, Rönnberg J. (2016) Comparison of gated audiovisual speech identification in elderly hearing aid users and elderly normal-hearing individuals: effects of adding visual cues to auditory speech stimuli. Trends Hear 20:2331216516653355.

- Nilsson M, Soli SD, Sullivan JA. (1994) Development of the Hearing in Noise Test for the measurement of speech reception thresholds in quiet and in noise. J Acoust Soc Am 95(2):1085–1099.

- Nittrouer S, Burton LT. (2005) The role of early language experience in the development of speech perception and phonological processing abilities: evidence from 5-year-olds with histories of otitis media with effusion and low socioeconomic status. J Commun Disord 38(1):29–63.

- Rabinowitz WM, Eddington DK, Delhorne LA, Cuneo PA. (1992) Relations among different measures of speech reception in subjects using a cochlear implant. J Acoust Soc Am 92(4):1869–1881.

- Rouger J, Fraysse B, Deguine O, Barone P. (2008) McGurk effects in cochlear-implanted deaf subjects. Brain Res 1188:87–99.

- Rouger J, Lagleyre S, Fraysse B, Deneve S, Deguine O, Barone P. (2007) Evidence that cochlear-implanted deaf patients are better multisensory integrators. Proc Natl Acad Sci USA 104(17):7295–7300.

- Schreitmüller S, Frenken M, Bentz L, Ortmann M, Walger M, Meister H. (2018) Validating a method to assess lipreading, audio-visual gain, and integration during speech reception with cochlear-implanted and normal-hearing subjects using a talking head. Ear Hear 39(3):503–516.

- Sommers MS, Tye-Murray N, Spehar B. (2005) Auditory-visual speech perception and auditory-visual enhancement in normal-hearing younger and older adults. Ear Hear 26(3):263–275.

- Spahr AJ, Dorman MF, Litvak LM, Van Wie S, Gifford RH, Loizou PC, Loiselle LM, Oakes T, Cook S. (2012) Development and validation of the AzBio sentence lists. Ear Hear 33(1):112.

- Stevenson RA, Sheffield SW, Butera IM, Gifford RH, Wallace MT. (2017) Multisensory integration in cochlear implant recipients. Ear Hear 38(5):521–538.

- Strelnikov K, Rouger J, Barone P, Deguine O. (2009) Role of speechreading in audiovisual interactions during the recovery of speech comprehension in deaf adults with cochlear implants. Scand J Psychol 50(5):437–444.

- Strelnikov K, Rouger J, Demonet JF, Lagleyre S, Fraysse B, Deguine O, Barone P. (2013) Visual activity predicts auditory recovery from deafness after adult cochlear implantation. Brain 136(12):3682–3695.

- Sumby WH, Pollack I. (1954) Visual contributions to speech intelligibility in noise. J Acoust Soc Am 26:212–215.

- Tremblay C, Champoux F, Lepore F, Théoret H. (2010) Audiovisual fusion and cochlear implant proficiency. Restor Neurol Neurosci 28(2):283–291.

- Tye-Murray N, Sommers MS, Spehar B. (2007) Audiovisual integration and lipreading abilities of older adults with normal and impaired hearing. Ear Hear 28(5):656–668.

- Tye-Murray N, Sommers M, Spehar B, Myerson J, Hale S. (2010) Aging, audiovisual integration, and the principle of inverse effectiveness. Ear Hear 31(5):636.

- Wilkinson GS, Robertson GJ. (2006) Wide Range Achievement Test 4 (WRAT4). Lutz, FL: Psychological Assessment Resources.