Abstract

This trial examined whether a stepped care program for depression, which initiated treatment with internet cognitive behavioral therapy, including telephone and messaging support, and stepped up non-responders to telephone-administered cognitive behavioral therapy (tCBT), was noninferior to, less costly to deliver than, and as acceptable to patients as tCBT alone. Adults with a diagnosis of major depressive episode (MDE) were randomized to receive up to 20 weeks of stepped care or tCBT. Stepped care (n=134) was noninferior to tCBT (n=136) with an end-of-treatment effect size of d=0.03 and a 6-month post-treatment effect size of d=−0.07 [90% CI −0.29 to 0.14]. Therapist time in stepped care was 5.26 (SD=3.08) hours versus 10.16 (SD=4.01) hours for tCBT (p<0.0001), with a delivery cost difference of $−364.32 [95% CI $−423.68 to $−304.96]. There was no significant difference in pre-treatment preferences (p=0.10) or treatment dropout (39 in stepped care; 27 in tCBT; p=0.14). tCBT patients were significantly more satisfied than stepped care patients with the treatment they received (p<0.0001). These findings indicate that stepped care was less costly to deliver, but no less effective, than tCBT. There was no significant difference in treatment preference or completion; however, satisfaction with treatment was higher in tCBT than in stepped care.

Trial Registration

clinicaltrials.gov Identifier: .

Keywords: Depression, Non-inferiority, CBT, Internet, Psychotherapy

INTRODUCTION

Depression is prevalent and imposes a very high societal burden in cost, morbidity, quality of life, and mortality (Greenberg, Fournier, Sisitsky, Pike, & Kessler, 2015; Wells, et al., 2002). While psychotherapy is both effective and acceptable to patients, a variety of barriers exist both to initiating and completing psychotherapy (Bedi, et al., 2000; Mohr, et al., 2010). Psychotherapy delivered remotely via telephone has been shown to increase treatment retention while being no less effective than face-to-face psychotherapy (Mohr, et al., 2012; Mohr, Vella, Hart, Heckman, & Simon, 2008). Indeed, telephone psychotherapy is increasingly being used to overcome access barriers (Godleski, Darkins, & Peters, 2012; Turner, Brown, & Carpenter, 2018). However, increased treatment retention resulting from telephone psychotherapy may also increase treatment costs to healthcare organizations and payers.

Internet interventions, which combine digital tools with brief support from a coach or therapist, are another form of remote treatment. A large number of trials have consistently shown them to be effective at reducing depression (Andrews, et al., 2018). As the evidence base for internet interventions accumulates, care systems are trying to understand how to integrate them into existing mental health services. The Dutch healthcare system, for example, has begun using blended care models, in which therapists can use internet treatments in combination with standard psychotherapy delivered in person or remotely. However, preliminary results suggest that the use of internet interventions in blended care may actually increase therapist time and costs without improving outcomes (Kenter, et al., 2015).

Stepped care has been proposed as a solution for integrating technology-based treatments into existing care resources. However, the benefits of internet therapy as a low-intensity first step for treatment of depression have not been tested (Cuijpers, Beekman, & Reynolds, 2012). Stepped care models typically have two features (Bower & Gilbody, 2005): they should be “least restrictive” in terms of cost and personal inconvenience, and they should be self-correcting. Thus, stepped care programs should initiate care with a low-intensity, less costly treatment, monitor outcomes systematically, and step patients up to more intensive and more costly treatments if criteria for improvement are not met. For example, stepped care protocols have initiated treatment for depression with low-intensity psychoeducation, stepping up to a more intensive psychotherapy if a criterion, such as dropping below a clinical threshold on a symptom severity measure, is not met after a defined period (Araya, et al., 2003; Bot, Pouwer, Ormel, Slaets, & de Jonge, 2010).

The aim of this study was to compare a remotely delivered stepped care program to telephone-administered cognitive behavioral therapy (tCBT) for the treatment of depression. The stepped care program initiated treatment using guided internet CBT (iCBT), stepping up non-responsive patients to tCBT. The use of tCBT as the comparison treatment, rather than face-to-face CBT (Mohr, et al., 2012; Mohr, et al., 2008), also improves internal validity, as lower retention in face-to-face treatment could bias our findings in favor of our hypotheses. We evaluated three sets of hypotheses recommended for evaluating stepped care models (Bower & Gilbody, 2005): stepped care would 1) be noninferior to tCBT for depression outcomes; 2) have lower therapist costs; and 3) be acceptable to patients.

METHODS

Participants

Participants were recruited from February 23, 2015 through May 4, 2017 via online sources (e.g. ResearchMatch, Reddit) and healthcare systems in the United States (Group Health and Northwestern Medicine). Those recruited through healthcare systems were identified through electronic medical records. These recruitment methods and procedures, their effectiveness, and costs have been described in detail in two recent publications (Lattie, et al., 2018; Palac, et al., 2018).

Participants were included if they met criteria for major depressive episode (MDE) using the Mini International Neuropsychiatric Interview (Sheehan, et al., 1998); had a score of 12 or greater on the Quick Inventory of Depressive Symptomatology – Clinician Rated (Rush, et al., 2006); were aged 18 years or older; could speak and read English; and had a computer or tablet that would allow them to access a web intervention. Participants were excluded if they met diagnostic criteria for a severe psychiatric disorder for which these treatments would be inappropriate, including psychotic disorders, bipolar disorder, or eating disorders; showed imminent risk of suicide, in which both a plan and intent were reported using the Columbia-Suicide Severity Rating Scale (Posner, et al., 2011); had visual or hearing impairments that would prevent participation; were receiving or planning to receive individual psychotherapy; or had initiated or modified antidepressant pharmacotherapy in the previous 14 days, to eliminate the effects of placebo response to medications. Participants receiving pharmacotherapy were permitted to continue their care and receive dose or medication adjustments. While alcohol or drug abuse or dependence were not exclusionary, potential participants who screened positive were further interviewed to assess their level of impairment. Audio recordings of these interviews were reviewed by the assessment supervisor; those whose impairment was judged to be severe enough that treatment for depression alone would be inappropriate were excluded. Excluded prospective participants were referred for appropriate treatment. All participants provided informed consent by signing an online consent form in accordance with trial procedures that were approved by the Northwestern University institutional review board. The trial was monitored by an independent Data Safety Monitoring Board.

Treatments

Stepped care initiated treatment with guided iCBT and stepped up those participants who did not meet criteria for improvement to tCBT. The comparison condition was tCBT. Participants in both conditions were treated until they reached full remission, defined as a PHQ-9<5 over two consecutive weeks (Kroenke, Spitzer, & Williams, 2001), or for a maximum of 20 weeks, whichever came first. All patients completed a weekly PHQ-9 as part of their treatment, which was used to guide treatment decisions.
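The stopping rule above can be illustrated with a minimal sketch. The function and constant names are hypothetical, and the sketch assumes that weekly PHQ-9 scores are available as a list whose first element is the week 1 score; it is not the trial's actual software.

```python
from typing import Optional, Sequence

REMISSION_CUTOFF = 5  # full remission requires PHQ-9 < 5 ...
MAX_WEEKS = 20        # ... otherwise treatment ends at 20 weeks


def treatment_end_week(weekly_phq9: Sequence[int]) -> Optional[int]:
    """Return the 1-based week at which treatment would end, or None if ongoing.

    Assumption: "two consecutive weeks" means two adjacent weekly scores
    that are both below the cutoff.
    """
    last_week = min(len(weekly_phq9), MAX_WEEKS)
    for week in range(2, last_week + 1):
        previous, current = weekly_phq9[week - 2], weekly_phq9[week - 1]
        if previous < REMISSION_CUTOFF and current < REMISSION_CUTOFF:
            return week
    if len(weekly_phq9) >= MAX_WEEKS:
        return MAX_WEEKS
    return None
```

For example, a patient scoring 12, 8, 4, 3 over the first four weeks would finish at week 4, while a patient who never scores below 5 would finish at week 20.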

iCBT

iCBT used a highly interactive web-based intervention called ThinkFeelDo, which teaches basic CBT skills and has been piloted in several trials (Lattie, et al., 2017; Schueller & Mohr, 2015; Tomasino, Lattie, Ho, et al., 2017). Didactic material was presented in “lessons” released 4 times per week. Two of the weekly lessons required approximately 10 minutes and included text and short animated video clips. The other 2 weekly lessons were brief summaries of previous material requiring 1–2 minutes to read. ThinkFeelDo included interactive tools supporting CBT skills (e.g., a calendaring tool for scheduling and monitoring pleasant events or a cognitive restructuring tool) and a coach interface that allowed the coach to see when and what the patient had accessed, the content of work with tools, and a secure messaging feature.

The iCBT coaching protocol was manualized (Tomasino, Lattie, Wilson, & Mohr, 2017) and based on the supportive accountability and efficiency models (Mohr, Cuijpers, & Lehman, 2011; Schueller, Tomasino, & Mohr, 2016). Coaches began with a 30–40 minute engagement phone call, followed by weekly 10–15 minute calls and 2–3 secure messages per week through the ThinkFeelDo site. After 3 weeks, patients could elect to move to messaging only or to continue using telephone coaching on an as-needed basis.

Participants in iCBT were stepped up to tCBT if they met criteria that predict nonresponse to iCBT and psychotherapy: PHQ-9≥17 from weeks 4–8, PHQ-9≥13 from weeks 9–13, or PHQ-9≥9 after week 13 (Schueller, Kwasny, Dear, Titov, & Mohr, 2015).
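The stepping criteria can be written as a small decision function. This is only an illustrative sketch: the function names are hypothetical, and it assumes that a single weekly PHQ-9 score at or above the week-specific threshold triggers stepping, which the text does not specify.

```python
from typing import Optional


def step_up_threshold(week: int) -> Optional[int]:
    """Return the PHQ-9 threshold for stepping up in a given treatment week."""
    if 4 <= week <= 8:
        return 17
    if 9 <= week <= 13:
        return 13
    if week > 13:
        return 9
    return None  # no stepping criterion applies before week 4


def should_step_up(week: int, phq9: int) -> bool:
    """True if the weekly PHQ-9 score meets the nonresponse criterion."""
    threshold = step_up_threshold(week)
    return threshold is not None and phq9 >= threshold
```

The thresholds tighten over time, so a score of 13 would not trigger stepping at week 6 but would at week 10.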

tCBT

tCBT used our manualized treatment based on a standard CBT approach, validated in numerous randomized trials and shown to be noninferior to face-to-face CBT (Mohr, et al., 2012). Patients received a workbook, Beating Depression (Mohr, Ho, Duffecy, & Glazer-Baron, 2007), and spoke weekly with their assigned therapist for 45–50 minutes.

Therapists, training, and fidelity monitoring

Therapists included 5 PhD level clinical psychologists, 2 licensed clinical social workers, and a master’s level therapist, all of whom were trained CBT therapists. Therapists provided both iCBT and tCBT and remained with the participant throughout treatment in both study arms.

tCBT training consisted of readings, 1 day of didactic training, and weekly supervision on at least 3 cases until criteria for competence were met; these criteria included a score of ≥40 on the Cognitive Therapy Scale (CTS) (Vallis, Shaw, & Dobson, 1986) and the supervisor’s determination of competence. iCBT training consisted of listening to exemplary audiotaped coach calls and reviewing messaging logs, an additional 3–5 hours of didactic training, review of the patient ThinkFeelDo site, and weekly individual supervision until the supervisor determined proficiency.

Supervision for tCBT and iCBT occurred separately. All phone therapy sessions in both iCBT and tCBT were audio-recorded. tCBT supervisors listened to at least one recording for each supervision session and rated it on the CTS. Supervision for therapists began weekly and was gradually reduced to not less than once monthly. The mean of the 112 therapist CTS rating scores was 50.8 (range=40–61). iCBT supervisors listened to call recordings and reviewed message logs. iCBT supervision occurred in a weekly group format, where at least one therapist’s interactions with a participant were reviewed by reading messages and listening to the phone call if it occurred. As therapists began iCBT, they also received individual weekly supervision until the supervisor determined that they were competently following the protocol. Fidelity to iCBT was not monitored quantitatively. When iCBT participants stepped up to tCBT, supervision for the therapist also transferred to the tCBT supervisor.

Assessment

The primary clinical outcome was depressive symptom severity measured using the Quick Inventory of Depressive Symptomatology-Clinician version (QIDS) (Rush, et al., 2006), an interviewer-based measure, administered by masked clinical evaluators. Comorbid diagnoses and MDE diagnosis, a secondary outcome, were assessed using the MINI structured interview (Sheehan, et al., 1998). All measures were administered at baseline, end of treatment (EOT), and at 3 and 6 months post-treatment. A mid-treatment assessment was also scheduled at week 10; however, participants who remitted before week 10 did not have this mid-treatment assessment.

Bachelor-level research assistants, trained and supervised by a PhD-level psychologist, administered the MINI and QIDS by phone. Training included assigned readings from selected portions of the DSM-5 (American Psychiatric Association, 2013), a 4-hour presentation on psychopathology, didactic training on MINI and QIDS administration, review of previously recorded assessments, and practice administrations. All interview assessments were audio-recorded. When an evaluator began assessing participants, the supervisor reviewed all recordings and provided individual feedback until competence was established (usually 5–10 assessments). Evaluators were supervised weekly as a group.

Time to remission (PHQ-9<5) was measured in weeks. Because the PHQ-9 was used to determine treatment decisions such as stepping and cessation of treatment, it was not used as an outcome measure in trial evaluation.

The primary delivery cost outcome was therapist cost. Therapists logged all time spent with a patient on phone sessions, messaging (iCBT), review of the dashboard and notes, outreach and call scheduling, and preparation. As these costs are intended to estimate the cost that might be expected in real-world implementation, we did not include time spent in training, supervision, and fidelity monitoring, as these are typically far more intensive in trials than in real-world care settings, where supervision is variable and often minimal. Therapist costs were calculated using an annualized psychologist wage of $80,510, based on the US Bureau of Labor Statistics 2017 estimates for Chicago. Consistent with previous work (Simon, Ludman, & Rutter, 2009), we added 28% fringe and 30% overhead, for a total annualized cost of $133,969, or an hourly cost of $74.43 based on 1800 work hours yearly.
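The hourly rate can be reproduced with a short calculation. The sketch below assumes that fringe and overhead are applied multiplicatively (overhead on top of wage plus fringe); the text does not state this explicitly, but this assumption reproduces the reported totals. Variable and function names are illustrative.

```python
ANNUAL_WAGE = 80_510      # annualized psychologist wage (USD)
FRINGE_RATE = 0.28        # fringe benefits
OVERHEAD_RATE = 0.30      # overhead
WORK_HOURS_PER_YEAR = 1_800


def loaded_annual_cost(wage: float, fringe: float, overhead: float) -> float:
    """Wage plus fringe, with overhead applied on top (assumed compounding)."""
    return wage * (1 + fringe) * (1 + overhead)


annual_cost = loaded_annual_cost(ANNUAL_WAGE, FRINGE_RATE, OVERHEAD_RATE)
hourly_cost = annual_cost / WORK_HOURS_PER_YEAR

print(f"annual: ${annual_cost:,.0f}, hourly: ${hourly_cost:.2f}")
```

Had the two percentages been added rather than compounded, the annual total would be about $127,206, which does not match the reported $133,969.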

Acceptability was explored at three times: pre-treatment preferences, adherence during treatment, and post-treatment satisfaction. Preference was evaluated using a previously employed method that provided a brief description of the treatments followed by a preference rating (Mohr, et al., 2012). Non-adherence was defined as dropping out of treatment. Satisfaction was measured at the end of treatment using the Satisfaction Index for Mental Health Treatment (SIMH) measure, which has a possible range of 0–72, and a mean of 40 (SD=13), based on a previous study of psychometric properties (Nabati, Shea, McBride, Gavin, & Bauer, 1998).

Baseline patient medication status was characterized by self-report. Dosing was categorized based on FDA recommendations (Education Medicaid Integrity Contractor, 2013).

Randomization and Masking

Prior to the beginning of recruitment, a statistician produced a sequentially masked randomization scheme, based on a 1:1 ratio, stratified by antidepressant medication status and therapist, with a block size of 4 within each stratum. To prevent allocation bias, all study personnel were masked to the randomization scheme. Clinical evaluators, who were masked to treatment assignment, enrolled and evaluated participants. Evaluators began each interview by asking participants not to say anything about the treatment they were receiving. After each assessment, evaluators reported whether they had become unmasked, which occurred in 40 (1.7%) of the 2422 assessments. These participants were reassigned to another masked evaluator.
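A stratified permuted-block scheme of this kind can be sketched as follows. This is a generic illustration rather than the statistician's actual procedure: the stratum labels, seed, and function names are hypothetical, and only the 1:1 ratio, the stratification factors, and the block size of 4 come from the text.

```python
import random
from typing import Dict, Iterator, List, Sequence, Tuple

ARMS = ("stepped_care", "tCBT")  # 1:1 allocation
BLOCK_SIZE = 4                   # two assignments per arm in every block

Stratum = Tuple[str, str]        # (medication status, therapist)


def permuted_blocks(rng: random.Random) -> Iterator[str]:
    """Yield assignments from an endless sequence of shuffled blocks."""
    while True:
        block = list(ARMS) * (BLOCK_SIZE // len(ARMS))
        rng.shuffle(block)
        yield from block


def make_schedule(strata: Sequence[Stratum], n_per_stratum: int,
                  seed: int = 0) -> Dict[Stratum, List[str]]:
    """Build an independent assignment list for each stratum."""
    rng = random.Random(seed)
    schedule = {}
    for stratum in strata:
        blocks = permuted_blocks(rng)
        schedule[stratum] = [next(blocks) for _ in range(n_per_stratum)]
    return schedule


# Hypothetical strata for illustration only.
schedule = make_schedule(
    [("on_medication", "therapist_A"), ("no_medication", "therapist_A")],
    n_per_stratum=8,
)
```

Because each block contains every arm equally often, allocation within a stratum can never be unbalanced by more than 2 participants.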

Statistical Analyses

Noninferiority is established by showing that the true difference between two treatment arms is likely to be smaller than a prespecified noninferiority margin that separates clinically important from clinically negligible (acceptable) differences (D’Agostino, Massaro, & Sullivan, 2003; Nutt, Allgulander, Lecrubier, Peters, & Wittchen, 2008). Noninferiority trials of pharmaceuticals have used 30% to 50% of the difference between treatment and control conditions to define noninferiority margins (Jones, Jarvis, Lewis, & Ebbutt, 1996; Nutt, et al., 2008). A meta-analysis of CBT found an overall effect size of d=0.82 (Cuijpers, Smit, Bohlmeijer, Hollon, & Andersson). We used the effect size for face-to-face CBT rather than for tCBT because this literature is far larger and therefore more reliable, and because there is no reason to believe that the effects of tCBT differ from those of face-to-face CBT (Mohr, et al., 2012). Using the midpoint of 40% for the acceptable criterion, we set d=0.33 as the noninferiority criterion. A 1-sided test at a type I error rate of 5%, testing whether the difference between treatment groups is less than the noninferiority margin, is equivalent to testing whether a 2-sided 90% CI for the difference does not contain the noninferiority margin (Walker & Nowacki, 2011).
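The margin and the decision rule can be expressed in a few lines. The sketch below assumes that a positive d means worse depression outcomes in stepped care, so that noninferiority requires the upper bound of the 2-sided 90% CI to fall below the margin; the sign convention and names are assumptions made for illustration.

```python
CBT_EFFECT_SIZE = 0.82   # overall effect size from the CBT meta-analysis
MARGIN_FRACTION = 0.40   # midpoint of the 30% to 50% range

# 0.82 * 0.40 = 0.328, which rounds to the stated margin of d = 0.33
margin = round(CBT_EFFECT_SIZE * MARGIN_FRACTION, 2)


def is_noninferior(ci_upper: float, noninferiority_margin: float) -> bool:
    """True if the upper 90% CI bound excludes the noninferiority margin.

    Assumes positive differences favor the comparison treatment.
    """
    return ci_upper < noninferiority_margin
```

Under this convention, an upper bound of 0.24 or 0.14 (the bounds reported for the QIDS at end of treatment and 6 months) would satisfy the criterion, whereas an upper bound of 0.35 would not.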

A sample of 115 per group provided 80% power to establish noninferiority using a one-sided confidence interval for the difference in two independent means, with a type I error rate of 5% and a standardized noninferiority margin of 0.33. Assuming a 25% dropout rate, the study was designed to enroll 155 per treatment group.
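As a rough check on these figures, the standard normal-approximation formula for a noninferiority comparison of two means can be sketched as below. It assumes a true difference of zero and equal group sizes; the trial's power software is not named in the text and may have used a slightly different (for example, t-based) method, so small discrepancies are expected.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(margin: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Per-group n for a one-sided noninferiority test on standardized means.

    Normal approximation, assuming the true difference between arms is zero.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha)
    z_beta = z.inv_cdf(power)
    return ceil(2 * (z_alpha + z_beta) ** 2 / margin ** 2)


n_required = n_per_group(0.33)            # normal-approximation estimate
n_enrolled = ceil(115 / (1 - 0.25))       # inflate 115 for 25% dropout
```

The approximation gives 114 per group, close to the stated 115, and inflating 115 for 25% dropout gives 154, close to the planned 155.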

Current literature suggests not directly imputing missing data for noninferiority analyses, but instead using mixed-effects models with repeated measures (Wiens & Rosenkranz, 2013). This method can estimate the difference between treatments at each time point while accounting for observations that may be missing and adjusting for covariates. Hence, we used these models to estimate differences in the primary outcome (QIDS) between treatment allocations, assuming a random intercept and an unstructured covariance structure. Additionally, we parameterized time as a nominal variable so as not to impose any structure on the trajectory of the outcome over time. We provide the difference and 90% CI for treatment differences on the QIDS scale for interpretability. MDE, a secondary outcome, is binary and was therefore evaluated using the effect size h. All clinical outcome models were fit in SAS v.9.04. To verify that each arm produced reductions in symptoms, we evaluated the mean QIDS over time using mixed models with random intercepts and categorical indexing of time. Analyses for the primary clinical outcome were conducted using modified intention-to-treat (ITT), in which all participants with at least one QIDS assessment after baseline were included. Additionally, as ITT analyses may increase the likelihood of falsely concluding noninferiority, we performed a secondary analysis using a hybrid ITT/per-protocol (PP) approach, in which participants who did not complete treatment were excluded. Non-trivial missing data were addressed using MLE-based ITT analysis via mixed models, as in our primary analysis (Matilde Sanchez & Chen, 2006).

Therapist cost differences and satisfaction across treatments were analyzed using t-tests. We calculated confidence intervals using bootstrapping and Fieller’s theorem (a probabilistic model) (Chaudhary & Stearns, 1996; Willan & O’Brien, 1996). Logistic regression was used to determine whether dropout differed between groups, and linear regression was used to determine whether satisfaction differed across groups after adjusting for covariates. Acceptability (treatment preferences, dropout, and satisfaction) and time to remission were compared using t-tests and chi-squared tests. These analyses were done in R v3.4.3.

RESULTS

Participants

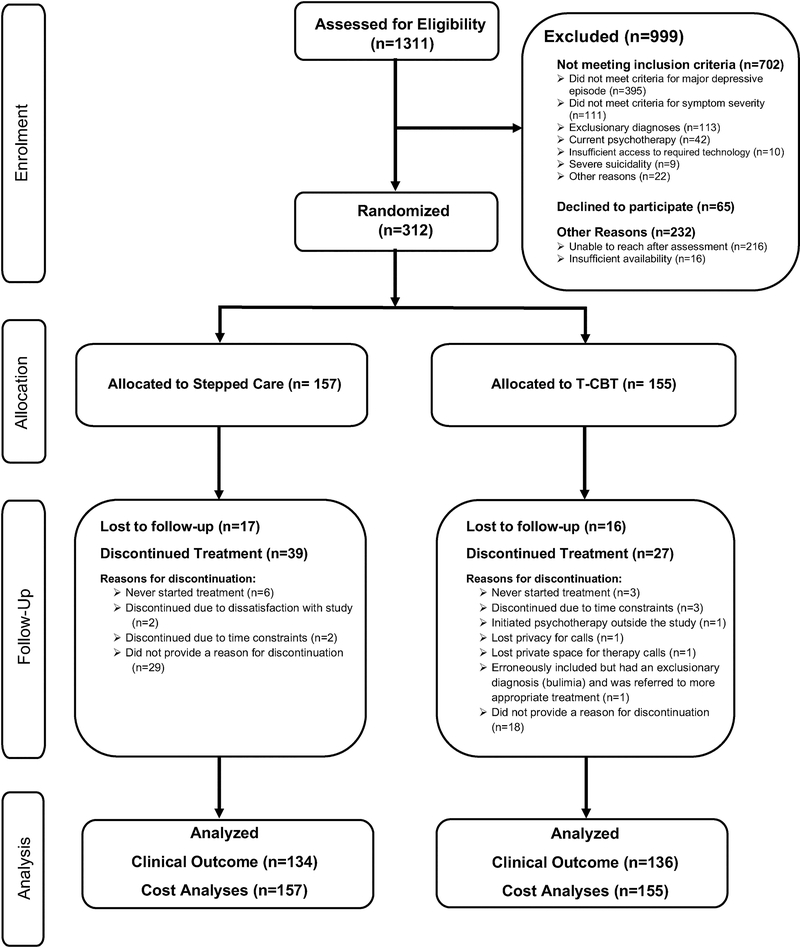

The flow of patients through the study is depicted in Figure 1. A total of 270 (86.5%) of the 312 randomized had at least one QIDS assessment post baseline. There were no significant differences across treatment arms in lost-to-follow-up rates (p=0.99). Table 1 summarizes the baseline demographics and characteristics of the randomized participants.

Fig. 1.

Flow of participants through the study.

Table 1.

Baseline characteristics of the intention-to-treat population

| | Stepped Care (n=157) | tCBT (n=155) |
|---|---|---|
| Age (Years) (median [IQR]) | 33.0 [27.0, 49.0] | 32.0 [26.0, 45.5] |
| Sex (%) | | |
| Female | 118 (75.2%) | 111 (71.6%) |
| Male | 37 (23.6%) | 44 (28.4%) |
| Missing | 2 (1.3%) | 0 (0.0%) |
| Race | | |
| White | 142 (90.4%) | 133 (85.8%) |
| Black | 8 (5.1%) | 13 (8.4%) |
| Asian | 6 (3.8%) | 8 (5.2%) |
| Other | 3 (1.9%) | 5 (3.2%) |
| Ethnicity | | |
| Non-Latino | 141 (89.8%) | 134 (86.5%) |
| Latino | 12 (7.6%) | 20 (12.9%) |
| Missing | 4 (2.5%) | 1 (0.6%) |
| Marital Status (%) | | |
| Married/Partnered | 70 (44.6%) | 68 (43.9%) |
| Single | 58 (36.9%) | 67 (43.2%) |
| Separated/Divorced/Widowed | 29 (18.5%) | 20 (12.9%) |
| College | | |
| High School or less | 8 (5.1%) | 7 (4.5%) |
| Some college | 36 (22.9%) | 42 (27.1%) |
| College degree | 113 (72.0%) | 106 (68.4%) |
| Household Income (median [IQR]) | $53,500 [$30,500, $80,000] | $50,000 [$30,000, $82,000] |
| Antidepressant Use | 68 (43.3%) | 67 (43.2%) |
| Below minimal dosage | 13 (19.4%)¹ | 6 (9.0%)¹ |
| Minimal dosage | 6 (9.0%)¹ | 13 (19.4%)¹ |
| >Minimal to <2x minimal dosage | 9 (12.0%)¹ | 7 (10.5%)¹ |
| ≥2x minimal dosage | 40 (59.7%)¹ | 41 (61.2%)¹ |
| Baseline QIDS | | |
| Mean (SD) | 14.81 (2.42) | 14.95 (2.57) |
| Median (IQR) | 14.0 (13.0, 16.0) | 14.0 (13.0, 17.0) |
| Baseline PHQ-9 | | |
| Mean (SD) | 16.54 (3.66) | 16.61 (3.97) |
| Median (IQR) | 16.0 (14.0, 19.0) | 17.0 (14.0, 19.0) |
| Comorbid Diagnoses (%) | | |
| Generalized Anxiety Disorder | 75 (47.8) | 58 (37.4) |
| Social Anxiety Disorder | 32 (20.4) | 32 (20.6) |
| Panic Disorder | 19 (12.3) | 14 (8.9) |
| Obsessive-compulsive Disorder | 12 (7.7) | 5 (3.2) |
| PTSD | 19 (12.1) | 12 (7.7) |
| Agoraphobia | 36 (22.9) | 31 (20.0) |
| Alcohol Abuse/Dependence | 9 (5.7) | 5 (3.2) |
| Drug Abuse/Dependence | 4 (2.5) | 2 (1.3) |
| Number of comorbid diagnoses (%) | | |
| 0 | 46 (29.3) | 54 (34.8) |
| 1 | 60 (38.2) | 53 (34.2) |
| 2 | 31 (19.7) | 30 (19.4) |
| 3 | 11 (7.0) | 10 (6.5) |
| ≥4 | 9 (5.7) | 8 (5.1) |
| Treatment Preferences | | |
| Stepped Care | 48 (30.6%) | 36 (23.2%) |
| Telephone Cognitive Behavioral Therapy | 53 (33.8%) | 62 (40.0%) |
| No preference | 56 (35.7%) | 57 (36.8%) |

¹ Percentages for dosage are among those receiving antidepressant medications.

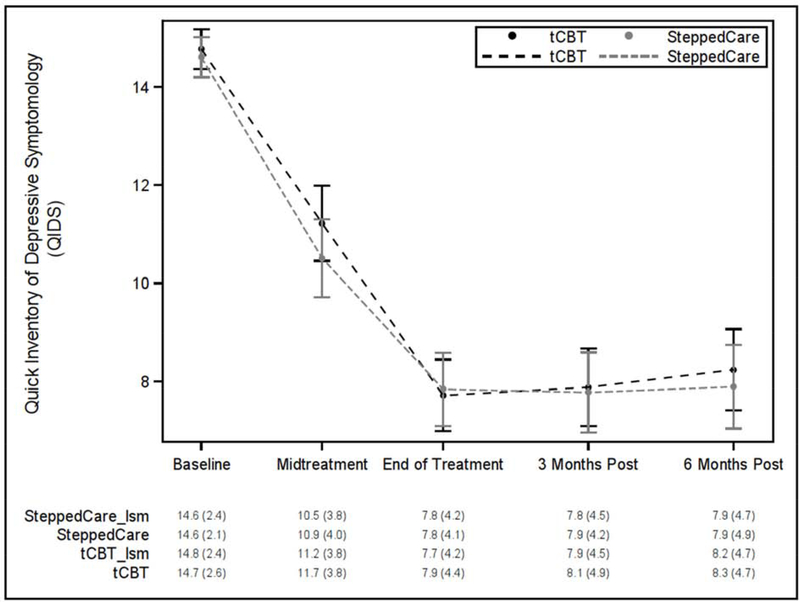

Depression Outcomes

Group ratings of audiotaped QIDS interviews by all interviewers yielded an interrater reliability of 0.82. Figure 2 displays the QIDS outcomes over time. Participants demonstrated clinically meaningful reductions in the QIDS at EOT in both stepped care (Δ=−6.8 [95%CI=−7.6, −6.0]; p<0.0001) and tCBT (Δ=−7.1 [95%CI=−7.9, −6.2]). Participants remained improved at 6-month follow-up relative to baseline (ΔQIDS=−6.7 [95%CI=−7.6, −5.8] for stepped care; −6.5 [95%CI=−7.4, −5.7] for tCBT).

Figure 2. QIDS over time by randomized group with least squares estimated and actual means (SDs).

QIDS = Quick Inventory of Depressive Symptomatology

tCBT = Telephone administered cognitive behavioral therapy

lsm = least squares mean

MDE also showed substantial reductions, with MDE at EOT of 15% in stepped care and 11% in tCBT, and 16% and 15%, respectively, at 6-month follow-up.

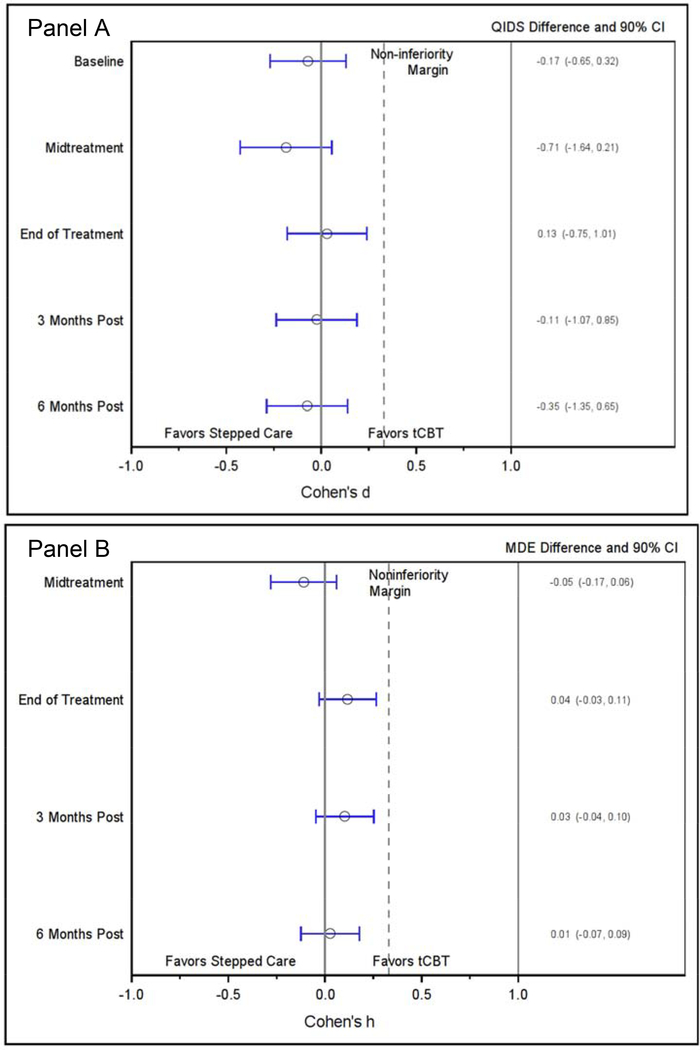

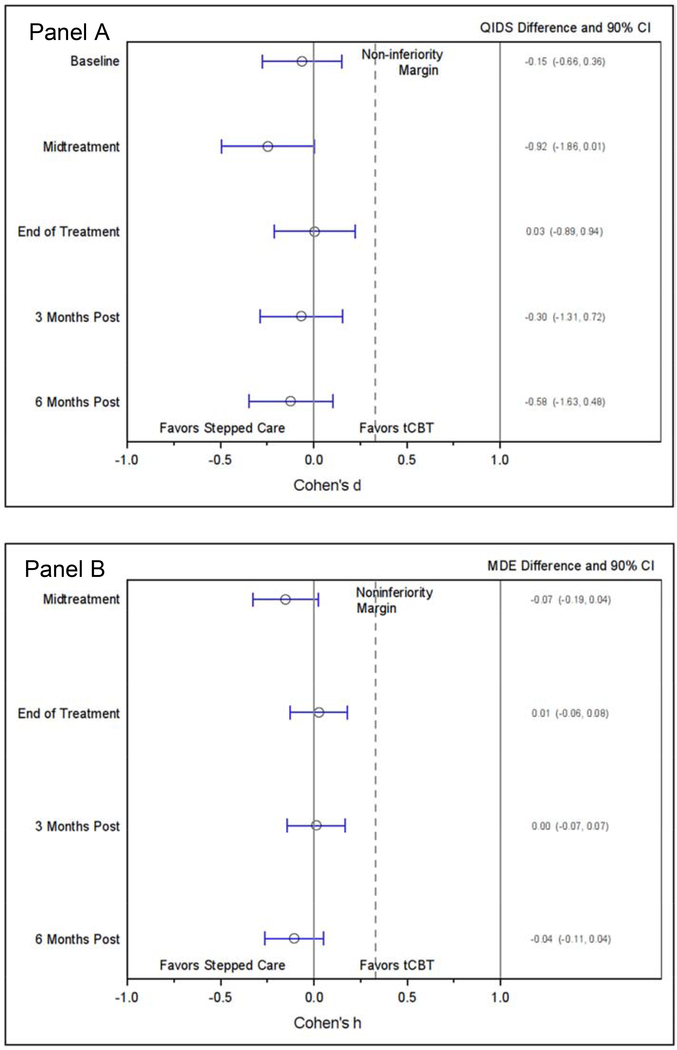

The primary outcome of QIDS showed little difference between groups (Figure 3, Panel A). The between-group effect size for QIDS at EOT was d=0.03 (90%CI=−0.18, 0.24) with an absolute QIDS difference of 0.13 (90%CI=−0.75, 1.01). The effect size at 6 months post-treatment was d=−0.07 (90%CI=−0.29, 0.14) with an absolute QIDS difference of −0.35 (90%CI=−1.35, 0.65). In the hybrid ITT/PP analysis, we excluded the 66 (24%) of the 270 participants who discontinued treatment (Figure 4, Panel A). The EOT effect size was d=0.01 (90%CI=−0.21, 0.22) with an absolute QIDS difference of 0.03 (90%CI=−0.89, 0.94). The effect size at 6 months post-treatment was d=−0.12 (90%CI=−0.34, 0.10) with an absolute QIDS difference of −0.58 (90%CI=−1.63, 0.48). Model coefficients are provided in an online supplemental table.

Figure 3. Modified intention to treat effect size (Cohen’s d & h) differences and actual differences between groups. Panel A: QIDS; Panel B: MDE.

MDE = Major Depressive Episode

QIDS = Quick Inventory of Depressive Symptomatology

tCBT = Telephone administered cognitive behavioral therapy

Figure 4. Per protocol effect size (Cohen’s d & h) differences and actual differences between groups. Panel A: QIDS; Panel B: MDE.

MDE = Major Depressive Episode

QIDS = Quick Inventory of Depressive Symptomatology

tCBT = Telephone administered cognitive behavioral therapy

The EOT effect size for MDE (Figure 3, Panel B) was h=0.12 (90%CI=−0.03, 0.27) with an absolute difference in rates of 3.9% (90%CI=−3.1, 10.9). At 6 months post-treatment, the effect size for the difference in MDE was h=0.03 (90%CI=−0.12, 0.18) with an absolute difference in rates of 1.0% (90%CI=−6.7, 8.6). In the hybrid ITT/PP analysis (Figure 4, Panel B), the EOT effect size was h=0.03 (90%CI=−0.13, 0.18) with an absolute difference in rates of 0.8% (90%CI=−6.0, 7.6). At 6 months post-treatment, the effect size for the difference in MDE was h=−0.11 (90%CI=−0.27, 0.05) with an absolute difference in rates of −3.7% (90%CI=−11.4, 4.0). Model coefficients are provided in an online supplemental table.
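For readers less familiar with Cohen's h, the following sketch shows how it relates two proportions through the arcsine transformation. It uses the rounded MDE rates reported in the text (15% versus 11% at EOT; 16% versus 15% at 6 months) and assumes the difference is taken as stepped care minus tCBT. The reported values come from model-based estimates, so this simple calculation is only an approximate check.

```python
from math import asin, sqrt


def cohens_h(p1: float, p2: float) -> float:
    """Cohen's h: difference between arcsine-transformed proportions."""
    return 2 * asin(sqrt(p1)) - 2 * asin(sqrt(p2))


# Rounded MDE rates from the text (stepped care, tCBT).
h_eot = cohens_h(0.15, 0.11)
h_6_months = cohens_h(0.16, 0.15)

print(f"EOT: h={h_eot:.2f}; 6 months: h={h_6_months:.2f}")
```

With these rounded inputs, the calculation gives values that round to 0.12 and 0.03, in line with the modified intention-to-treat estimates.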

Stepping

Within the stepped care arm, a total of 36 (23%) stepped up to tCBT; 19 (53% of steppers) in weeks 4–8, 15 (42%) in weeks 9–13, and 2 (5%) after week 13.

Time to Remission

There was no difference in the number of participants completing treatment prior to 20 weeks due to reaching the criterion for full remission [56 (36%) for stepped care; 56 (36%) for tCBT; 95% CI=−0.10, 0.11; p=1.0] and no significant difference in the average number of weeks to completion due to full remission [9.5 (SD=3.5) weeks for stepped care; 10.5 (SD=3.5) for tCBT; 95% CI=−0.04, 0.18; p=0.10].

Therapist Cost

The average total therapist time per stepped care patient was 5.26 (SD=3.08) hours compared to 10.16 (SD=4.01) hours for tCBT (p<0.001). Using the average hourly rate of $74.43, this translated into an average cost of $391.81 (SD=$229.06) per stepped care patient and $756.13 (SD=$298.64) per tCBT patient. Using probabilistic methods, the average difference between the cost to deliver stepped care and tCBT was $−364.32 [95% CI $−423.68, $−304.96]. Bootstrapping gave similar results, with an average difference of $−364.47 [95% CI $−422.89, $−305.07].
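A quick plausibility check of the cost difference can be made from the summary statistics alone. The sketch below substitutes a normal-approximation interval with unpooled variances for the Fieller and bootstrap methods actually used, and it assumes the randomized group sizes (157 and 155) as denominators, which the text does not confirm for the cost analysis.

```python
from math import sqrt
from statistics import NormalDist
from typing import Tuple


def mean_difference_ci(m1: float, sd1: float, n1: int,
                       m2: float, sd2: float, n2: int,
                       level: float = 0.95) -> Tuple[float, float, float]:
    """Difference in means (group 1 minus group 2) with a normal-approximation CI."""
    diff = m1 - m2
    se = sqrt(sd1 ** 2 / n1 + sd2 ** 2 / n2)   # unpooled standard error
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return diff, diff - z * se, diff + z * se


# Reported per-patient costs; group sizes are an assumption (see above).
diff, lower, upper = mean_difference_ci(391.81, 229.06, 157,
                                        756.13, 298.64, 155)
print(f"difference ${diff:.2f} [95% CI ${lower:.2f}, ${upper:.2f}]")
```

Under these assumptions the interval is roughly $−423 to $−305, within a dollar of both reported intervals.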

Acceptability

There was no significant difference in pre-randomization treatment preferences (Table 1; p=0.10). There was no evidence that receiving or not receiving one’s preferred treatment was significantly associated with change from baseline on any outcome (ps<0.61).

In stepped care, 39 (25%) participants dropped out from treatment, compared to 27 (17%; p=0.14) in tCBT. We note that among the 36 participants (23%) who stepped up, only 1 participant dropped out at that point, failing to make the transition from iCBT to tCBT.
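The dropout comparison can be approximated from the counts alone. The sketch below uses a two-proportion test with Yates' continuity correction, which is equivalent to a continuity-corrected chi-squared test on the 2x2 table; the use of the correction and of the randomized group sizes (157 and 155) as denominators are assumptions, chosen because they are consistent with the reported p-value.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p(x1: int, n1: int, x2: int, n2: int,
                     yates: bool = True) -> float:
    """Two-sided p-value for a difference in proportions (pooled variance)."""
    pooled = (x1 + x2) / (n1 + n2)
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    diff = abs(x1 / n1 - x2 / n2)
    if yates:
        # Continuity correction, equivalent to Yates' corrected chi-squared.
        diff = max(0.0, diff - 0.5 * (1 / n1 + 1 / n2))
    z = diff / se
    return 2 * (1 - NormalDist().cdf(z))


p_corrected = two_proportion_p(39, 157, 27, 155)
p_uncorrected = two_proportion_p(39, 157, 27, 155, yates=False)
```

With the correction the p-value rounds to 0.14; without it, the p-value is about 0.11.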

Participants receiving tCBT had significantly higher satisfaction scores (M=62.3, SD=7.58; N=124) compared to stepped care (M=57.4, SD=10.61, N=130; p<0.0001).

Safety

One tCBT participant was briefly hospitalized for panic symptoms, which was determined by the data and safety monitoring board to be unrelated to treatment. There were no other adverse events.

DISCUSSION

This is the first study to examine the integration of an internet intervention into a stepped care model for the treatment of depression. Stepped care processes using standard, non-technology-based treatments have generally been shown to be effective for depression (van Straten, Hill, Richards, & Cuijpers, 2015). This study extends this body of work, demonstrating that a stepped care program for depression, in which patients begin with therapist-supported iCBT and move to tCBT if not meeting criteria for improvement, can be as effective as initiating treatment with tCBT. Both stepped care and tCBT achieved a reduction of approximately 7 points on the QIDS, which is equivalent to 7–8 points on the Hamilton Rating Scale for Depression and 10 points on the Beck Depression Inventory, indicating a substantial and meaningful reduction in symptom severity (Rush, et al., 2003). Stepped care achieved these outcomes with approximately half the therapist time and costs as tCBT.

Stepped care was initially acceptable to patients, with no difference across treatment arms in pre-treatment preferences. Treatment completion rates were high and generally equivalent. This is notable, given that adherence rates for iCBT, even when accompanied by therapist support, can be lower than is commonly seen in tCBT (van Ballegooijen, et al., 2014). It is possible that the comparatively high rates of adherence to iCBT in this trial were due to the website design (which utilized briefer more frequent interactions), the use of the telephone as a therapist contact medium (which was similar across treatment arms), or reassurance that participants would receive more standard care if iCBT did not work for them. However, while patients’ satisfaction ratings with stepped care were good, they were still significantly lower than for patients who received tCBT. Care organizations may want to consider this finding in any stepped care implementation.

There have been two other trials that have used an internet treatment as part of a stepped care protocol. One trial examining stepped care for depression in primary care used a complex procedure that included multiple steps, with multiple options within each step. Treatment was initiated with active monitoring. The second step allowed patients to choose between bibliotherapy, an uncoached iCBT program, or telephone-administered psychotherapy, while the third step included outpatient psychotherapy or pharmacotherapy, and a fourth step combined psych- and pharmacotherapy in out- or inpatient settings (Harter, et al., 2018). While there was a significant effect for stepped care relative to treatment as usual in primary care, no data are presented on the proportion of patients who received any specific treatment (including iCBT or telephone-therapy), making it difficult to compare to the present study.

The other stepped care trial, which compared iCBT and face-to-face CBT to face-to-face CBT alone for panic or social anxiety disorders, found no significant difference between the two treatment arms (Nordgreen, et al., 2016). There were some notable differences in methodology. It was neither designed nor analyzed as a noninferiority trial. The study used an additive stepped care design (2 weeks of psychoeducation, stepping up non-responders to 9 weeks of iCBT followed by 10 weeks of CBT) for a potential total of 21 weeks, while the CBT condition was 12 weeks, confounding treatment arm with potential length of treatment. Nonetheless, similar to our study, the differences in outcomes were small, the stepped care program required substantially less therapist time, and treatment dropout was not high.

While our findings indicate that a stepped care approach to integrating iCBT may reduce therapist costs in a telemental health service, we offer some considerations in extending these findings to stepping from iCBT to face-to-face CBT. The stepping process is a sensitive period in which dropouts can occur (van Straten, et al., 2015). We saw very little dropout at stepping. We expect that stepping from remotely delivered iCBT to face-to-face treatment would result in a higher dropout rate, consistent with the Nordgreen study (Nordgreen, et al., 2016). Thus, we urge some caution in generalizing these findings to face-to-face treatment settings.

While this study was not integrated into a healthcare setting, our findings may have relevance for implementation. Stepped care utilized approximately half the amount of therapist time required by tCBT due to the use of iCBT, and thus may extend the capacity of mental health professionals. However, by using less time, iCBT increases the number of patients a therapist must manage through briefer interactions. In our experience this resulted in a few unique challenges. During brief calls, therapists had less time to reorient to a patient’s problems and issues. Therapists reported that voice quality was often a helpful cue in orienting the therapist to the patient. Messaging, which does not provide non-verbal cues, generally required some review of logged messages and notes to remind the therapist of the patient’s issues. These issues notwithstanding, therapists did not find the caseload or patient management burdensome in this trial.
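The "approximately half" figure can be checked against the mean therapist hours and delivery cost difference reported in the abstract. The sketch below is only an arithmetic illustration, not the trial's cost analysis: the function names are hypothetical, the capacity calculation assumes therapist hours are the only constraint on caseload, and the implied hourly cost assumes the reported cost difference reflects therapist time alone at a uniform rate.

```python
# Mean therapist hours per patient, as reported in the abstract.
STEPPED_CARE_HOURS = 5.26
TCBT_HOURS = 10.16
# Reported delivery cost difference (stepped care minus tCBT), in US dollars.
COST_DIFFERENCE_USD = -364.32


def time_ratio(stepped: float, comparator: float) -> float:
    """Fraction of the comparator's therapist time used by stepped care."""
    return stepped / comparator


def capacity_multiplier(stepped: float, comparator: float) -> float:
    """Patients served in stepped care per patient served in the comparator,
    for a fixed pool of therapist hours (assumption: hours are the only limit)."""
    return comparator / stepped


def implied_hourly_cost(cost_difference: float, stepped: float, comparator: float) -> float:
    """Cost per therapist hour implied by the cost and time differences
    (assumption: the cost difference is driven by therapist time alone)."""
    return cost_difference / (stepped - comparator)


if __name__ == "__main__":
    print(f"Time ratio: {time_ratio(STEPPED_CARE_HOURS, TCBT_HOURS):.2f}")
    print(f"Capacity multiplier: {capacity_multiplier(STEPPED_CARE_HOURS, TCBT_HOURS):.2f}")
    rate = implied_hourly_cost(COST_DIFFERENCE_USD, STEPPED_CARE_HOURS, TCBT_HOURS)
    print(f"Implied cost per therapist hour: ${rate:.2f}")
```

Under these assumptions, stepped care used about 52% of the therapist time of tCBT, so a fixed pool of therapist hours could in principle cover roughly 1.9 times as many patients. This does not account for the added burden of managing more patients through briefer contacts, which is described above.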

iCBT has been successfully implemented as standalone eHealth clinics in Australia, Sweden, Denmark, Norway, and Canada, funded by national or regional healthcare systems (Titov, et al., 2018). There have been numerous attempts at integrating digital mental health into value-based care systems in the United States; however, to the best of our knowledge, these have not resulted in successful, sustainable implementations (Bertagnoli, 2018; Bertagnoli, et al., 2015; Mohr, Weingardt, Reddy, & Schueller, 2017). Problems have included difficulty integrating digital services into existing services, low compliance by healthcare workers, and low patient uptake. A growing number of studies have shown more positive expectations about the value of digital mental health among patients and providers, suggesting that education and marketing may improve uptake by both groups (Mira, et al., 2019; Vis, et al., 2018). However, greater efforts on the design of the services, technologies, and implementation plans to integrate digital mental health into care systems and patients’ lives will also likely be required (Mohr, Riper, & Schueller, 2018).

This trial has several strengths. It kept the treatment time consistent across treatment arms, eliminating the biases that can occur when stepped care programs add treatments sequentially, which can extend time in treatment and the trial for stepped care relative to the comparator. We were able to retain a large portion of treatment dropouts in follow-up assessments, reducing the likelihood of bias associated with dropout.

There are also several limitations and caveats that should be considered in interpreting these data. First, there are a number of caveats in generalizing these findings to real-world settings. Patients in this sample may not be representative of real-world patients as research processes such as recruitment methods, screening, and consenting may select for a more motivated sample. This selection bias may have produced stronger adherence and engagement than would be seen in real-world settings. Second, as this was a noninferiority trial, we cannot rule out the possibility that the improvements seen in this sample were due to factors other than the treatments, such as measurement drift, regression to the mean, the natural course of the disorder, patient expectations and placebo effects, or any pharmacotherapy or adjustments to their pharmacotherapy they may have received. Third, we only looked at cost of therapist time, and not at a broad range of costs, such as medical costs or patient costs. Potential software costs were not included in the cost analysis, as there is no consistent pricing model. Fourth, the iCBT program was supported by telephone and messaging contact with a therapist; these findings should not be generalized to uncoached iCBT, which likely has much lower adherence and effectiveness (Karyotaki, et al., 2017). Fifth, while we monitored fidelity through supervision, we did not conduct quantitative monitoring for iCBT. We note that since this study was conducted, an iCBT fidelity monitoring tool has been developed and validated (Hadjistavropoulos, Schneider, Klassen, Dear, & Titov, 2018). Finally, we did not observe any evidence that iCBT prior to stepping was any less effective than tCBT, raising the question as to whether iCBT alone would have performed as well. Given the relatively high rates of treatment dropout commonly seen in iCBT alone, and the 23% of patients who stepped up, we believe this is unlikely. However, we cannot rule out this possibility in the present study.

As internet treatments continue to demonstrate efficacy in a growing number of trials (Andrews, et al., 2018), healthcare organizations are trying to understand how to integrate them into processes of care. This study indicates that use of a guided iCBT program, coupled with measurement-based stepping procedures to trigger transition to a standard manual-based tCBT program, can be a less costly, but no less effective method of providing treatment for depression than tCBT alone.

Highlights.

Stepped care used internet cognitive behavioral therapy (iCBT) + telephone CBT (tCBT).

Stepped care was noninferior to tCBT for depression at both post-treatment and 6-month follow-up.

Stepped care cost approximately half as much to administer as tCBT.

Treatment dropout for stepped care was not significantly different from tCBT.

Acknowledgments

FUNDING

This study was supported by the US National Institute of Mental Health grant number R01 MH095753 to DCM. REDCap is funded by the Northwestern University Clinical and Translational Science (NUCATS) Institute and by the National Institutes of Health’s National Center for Advancing Translational Sciences, grant number UL1TR001422. EGL was supported by an award (K08 MH112878) from the National Institute of Mental Health. SMS was supported through an award from the National Institute of Mental Health (R25 MH08091607).

Footnotes

Conflict of Interest

Dr. Mohr has accepted speaking fees from Apple Inc. and the American Psychological Association and has an ownership interest in Actualize Therapy. Dr. Schueller serves as a scientific advisor to Joyable, Inc. and receives stock options in Joyable. None of the other authors have any conflicts of interest to disclose.

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

REFERENCES

- American Psychiatric Association D-TF (2013). Diagnostic and statistical manual of mental disorders: DSM-5™ (5th ed.). Arlington, VA, US: American Psychiatric Publishing, Inc.

- Andrews G, Basu A, Cuijpers P, Craske MG, McEvoy P, English CL, & Newby JM (2018). Computer therapy for the anxiety and depression disorders is effective, acceptable and practical health care: An updated meta-analysis. Journal of Anxiety Disorders, 55, 70–78.

- Araya R, Rojas G, Fritsch R, Gaete J, Rojas M, Simon G, & Peters TJ (2003). Treating depression in primary care in low-income women in Santiago, Chile: a randomized controlled trial. Lancet, 361, 995–1000.

- Bedi N, Chilvers C, Churchill R, Dewey M, Duggan C, Fielding K, Gretton V, Miller P, Harrison G, Lee A, & Williams I (2000). Assessing effectiveness of treatment of depression in primary care. Partially randomised preference trial. British Journal of Psychiatry, 177, 312–318.

- Bertagnoli A (2018). Digital mental health: Challenges in Implementation. In American Psychiatric Association; New York, NY.

- Bertagnoli A, Trangle M, Marx L, Lacoutture E, Rukanonchai D, & Schuster J (2015). Behavioral Health. In Annual Meeting of the Alliance of Community Health Plans Washington, DC.

- Bot M, Pouwer F, Ormel J, Slaets JP, & de Jonge P (2010). Predictors of incident major depression in diabetic outpatients with subthreshold depression. Diabetic Medicine, 27, 1295–1301.

- Bower P, & Gilbody S (2005). Stepped care in psychological therapies: access, effectiveness and efficiency. Narrative literature review. British Journal of Psychiatry, 186, 11–17.

- Chambers DA, Glasgow RE, & Stange KC (2013). The dynamic sustainability framework: addressing the paradox of sustainment amid ongoing change. Implement Sci, 8, 117.

- Chaudhary MA, & Stearns SC (1996). Estimating confidence intervals for cost-effectiveness ratios: an example from a randomized trial. Statistics in Medicine, 15, 1447–1458.

- Cuijpers P, Beekman AT, & Reynolds CF 3rd. (2012). Preventing depression: a global priority. JAMA, 307, 1033–1034.

- Cuijpers P, Smit F, Bohlmeijer E, Hollon SD, & Andersson G (2010). Efficacy of cognitive-behavioural therapy and other psychological treatments for adult depression: meta-analytic study of publication bias. British Journal of Psychiatry, 196, 173–178.

- D’Agostino RB Sr., Massaro JM, & Sullivan LM (2003). Non-inferiority trials: design concepts and issues - the encounters of academic consultants in statistics. Statistics in Medicine, 22, 169–186.

- Education Medicaid Integrity Contractor. (2013). Antidepressant medications: U.S. Food and Drug Administration-approved indications and dosages for use in adults. In: CMS Medicaid Integrity Program.

- Godleski L, Darkins A, & Peters J (2012). Outcomes of 98,609 U.S. Department of Veterans Affairs patients enrolled in telemental health services, 2006–2010. Psychiatric Services, 63, 383–385.

- Greenberg PE, Fournier AA, Sisitsky T, Pike CT, & Kessler RC (2015). The economic burden of adults with major depressive disorder in the United States (2005 and 2010). Journal of Clinical Psychiatry, 76, 155–162.

- Hadjistavropoulos HD, Schneider LH, Klassen K, Dear BF, & Titov N (2018). Development and evaluation of a scale assessing therapist fidelity to guidelines for delivering therapist-assisted Internet-delivered cognitive behaviour therapy. Cogn Behav Ther, 47, 447–461.

- Harter M, Watzke B, Daubmann A, Wegscheider K, Konig HH, Brettschneider C, Liebherz S, Heddaeus D, & Steinmann M (2018). Guideline-based stepped and collaborative care for patients with depression in a cluster-randomised trial. Sci Rep, 8, 9389.

- Jones B, Jarvis P, Lewis JA, & Ebbutt AF (1996). Trials to assess equivalence: the importance of rigorous methods. BMJ, 313, 36–39.

- Karyotaki E, Riper H, Twisk J, Hoogendoorn A, Kleiboer A, Mira A, Mackinnon A, Meyer B, Botella C, Littlewood E, Andersson G, Christensen H, Klein JP, Schroder J, Breton-Lopez J, Scheider J, Griffiths K, Farrer L, Huibers MJ, Phillips R, Gilbody S, Moritz S, Berger T, Pop V, Spek V, & Cuijpers P (2017). Efficacy of Self-guided Internet-Based Cognitive Behavioral Therapy in the Treatment of Depressive Symptoms: A Meta-analysis of Individual Participant Data. JAMA Psychiatry, 74, 351–359.

- Kenter RF, van de Ven PM, Cuijpers P, Koole G, Niamat S, Gerrits RS, Willems M, & van Straten A (2015). Costs and effects of Internet cognitive behavioral treatment blended with face-to-face treatment: Results from a naturalistic study. Internet Interventions, 2, 77–83.

- Kroenke K, Spitzer RL, & Williams JB (2001). The PHQ-9: validity of a brief depression severity measure. Journal of General Internal Medicine, 16, 606–613.

- Lattie EG, Ho J, Sargent E, Tomasino KN, Smith JD, Brown CH, & Mohr DC (2017). Teens engaged in collaborative health: The feasibility and acceptability of an online skill-building intervention for adolescents at risk for depression. Internet Interventions, 8, 15–26.

- Lattie EG, Kaiser SM, Alam N, Tomasino KN, Sargent E, Rubanovich CK, Palac HL, & Mohr DC (2018). A Practical Do-It-Yourself Recruitment Framework for Concurrent eHealth Clinical Trials: Identification of Efficient and Cost-Effective Methods for Decision Making (Part 2). J Med Internet Res, 20, e11050.

- Matilde Sanchez M, & Chen X (2006). Choosing the analysis population in non-inferiority studies: per protocol or intent-to-treat. Statistics in Medicine, 25, 1169–1181.

- Mira A, Soler C, Alda M, Banos R, Castilla D, Castro A, Garcia-Campayo J, Garcia-Palacios A, Gili M, Hurtado M, Mayoral F, Montero-Marin J, & Botella C (2019). Exploring the Relationship Between the Acceptability of an Internet-Based Intervention for Depression in Primary Care and Clinical Outcomes: Secondary Analysis of a Randomized Controlled Trial. Front Psychiatry, 10, 325.

- Mohr DC, Cuijpers P, & Lehman K (2011). Supportive Accountability: A Model for Providing Human Support to Enhance Adherence to eHealth Interventions. J Med Internet Res, 13, e30.

- Mohr DC, Ho J, Duffecy J, Baron KG, Lehman KA, Jin L, & Reifler D (2010). Perceived barriers to psychological treatments and their relationship to depression. Journal of Clinical Psychology, 66, 394–409.

- Mohr DC, Ho J, Duffecy J, Reifler D, Sokol L, Burns MN, Jin L, & Siddique J (2012). Effect of telephone-administered vs face-to-face cognitive behavioral therapy on adherence to therapy and depression outcomes among primary care patients: a randomized trial. JAMA, 307, 2278–2285.

- Mohr DC, Ho J, Duffecy J, & Glazer-Baron K (2007). Beating Depression: Cognitive Behavioral Therapy Patient Workbook. In. Northwestern University: Northwestern University.

- Mohr DC, Riper H, & Schueller SM (2018). A Solution-Focused Research Approach to Achieve an Implementable Revolution in Digital Mental Health. JAMA Psychiatry, 75, 113–114.

- Mohr DC, Vella L, Hart S, Heckman T, & Simon G (2008). The effect of telephone-administered psychotherapy on symptoms of depression and attrition: A meta-analysis. Clinical Psychology: Science and Practice, 15, 243–253.

- Mohr DC, Weingardt KR, Reddy M, & Schueller SM (2017). Three Problems With Current Digital Mental Health Research … and Three Things We Can Do About Them. Psychiatric Services, 68, 427–429.

- Nabati L, Shea N, McBride L, Gavin C, & Bauer MS (1998). Adaptation of a simple patient satisfaction instrument to mental health: psychometric properties. Psychiatry Res, 77, 51–56.

- Nordgreen T, Haug T, Ost LG, Andersson G, Carlbring P, Kvale G, Tangen T, Heiervang E, & Havik OE (2016). Stepped Care Versus Direct Face-to-Face Cognitive Behavior Therapy for Social Anxiety Disorder and Panic Disorder: A Randomized Effectiveness Trial. Behav Ther, 47, 166–183.

- Nutt D, Allgulander C, Lecrubier Y, Peters T, & Wittchen U (2008). Establishing non-inferiority in treatment trials in psychiatry: guidelines from an Expert Consensus Meeting. J Psychopharmacol, 22, 409–416.

- Palac HL, Alam N, Kaiser SM, Ciolino JD, Lattie EG, & Mohr DC (2018). A Practical Do-It-Yourself Recruitment Framework for Concurrent eHealth Clinical Trials: Simple Architecture (Part 1). J Med Internet Res, 20, e11049.

- Posner K, Brown GK, Stanley B, Brent DA, Yershova KV, Oquendo MA, Currier GW, Melvin GA, Greenhill L, Shen S, & Mann JJ (2011). The Columbia-Suicide Severity Rating Scale: initial validity and internal consistency findings from three multisite studies with adolescents and adults. American Journal of Psychiatry, 168, 1266–1277.

- Rush AJ, Bernstein IH, Trivedi MH, Carmody TJ, Wisniewski S, Mundt JC, Shores-Wilson K, Biggs MM, Woo A, Nierenberg AA, & Fava M (2006). An evaluation of the quick inventory of depressive symptomatology and the hamilton rating scale for depression: a sequenced treatment alternatives to relieve depression trial report. Biological Psychiatry, 59, 493–501.

- Rush AJ, Trivedi MH, Ibrahim HM, Carmody TJ, Arnow B, Klein DN, Markowitz JC, Ninan PT, Kornstein S, Manber R, Thase ME, Kocsis JH, & Keller MB (2003). The 16-Item Quick Inventory of Depressive Symptomatology (QIDS), clinician rating (QIDS-C), and self-report (QIDS-SR): a psychometric evaluation in patients with chronic major depression. Biological Psychiatry, 54, 573–583.

- Schueller SM, Kwasny MJ, Dear BF, Titov N, & Mohr DC (2015). Cut points on the Patient Health Questionnaire (PHQ-9) that predict response to cognitive-behavioral treatments for depression. General Hospital Psychiatry, 37, 470–475.

- Schueller SM, & Mohr DC (2015). Initial field trial of a coach-supported web-based depression treatment In The Proceedings of Pervasive Health ‘15. IEEE; Istanbul, Turkey.

- Schueller SM, Tomasino KN, & Mohr DC (2016). Integrating Human Support into Behavioral Intervention Technologies: The Efficiency Model of Support. Clinical Psychology: Science and Practice, 27–45.

- Sheehan DV, Lecrubier Y, Sheehan KH, Amorim P, Janavs J, Weiller E, Hergueta T, Baker R, & Dunbar GC (1998). The Mini-International Neuropsychiatric Interview (M.I.N.I.): the development and validation of a structured diagnostic psychiatric interview for DSM-IV and ICD-10. Journal of Clinical Psychiatry, 59 Suppl 20, 22–33;quiz 34–57.

- Simon GE, Ludman EJ, & Rutter CM (2009). Incremental benefit and cost of telephone care management and telephone psychotherapy for depression in primary care. Archives of General Psychiatry, 66, 1081–1089.

- Titov N, Dear B, Nielssen O, Staples L, Hadjistavropoulos H, Nugent M, Adlam K, Nordgreen T, Bruvik KH, Hovland A, Repal A, Mathiasen K, Kraepelien M, Blom K, Svanborg C, Lindefors N, & Kaldo V (2018). ICBT in routine care: A descriptive analysis of successful clinics in five countries. Internet Interv, 13, 108–115.

- Tomasino KN, Lattie EG, Ho J, Palac HL, Kaiser SM, & Mohr DC (2017). Harnessing Peer Support in an Online Intervention for Older Adults with Depression. American Journal of Geriatric Psychiatry, 25, 1109–1119.

- Tomasino KN, Lattie EG, Wilson RE, & Mohr DC (2017). Coaching Manual for the ThinkFeelDo Internet Intervention Program In. Chicago, IL: Northwestern University.

- Turner J, Brown JC, & Carpenter DT (2018). Telephone-based CBT and the therapeutic relationship: The views and experiences of IAPT practitioners in a low-intensity service. Journal of Psychiatric and Mental Health Nursing, 25, 285–296.

- Vallis TM, Shaw BF, & Dobson KS (1986). The cognitive therapy scale: psychometric properties. Journal of Consulting and Clinical Psychology, 54, 381–385.

- van Ballegooijen W, Cuijpers P, van Straten A, Karyotaki E, Andersson G, Smit JH, & Riper H (2014). Adherence to Internet-based and face-to-face cognitive behavioural therapy for depression: a meta-analysis. PLoS One, 9, e100674.

- van Straten A, Hill J, Richards DA, & Cuijpers P (2015). Stepped care treatment delivery for depression: a systematic review and meta-analysis. Psychological Medicine, 45, 231–246.

- Vis C, Mol M, Kleiboer A, Buhrmann L, Finch T, Smit J, & Riper H (2018). Improving Implementation of eMental Health for Mood Disorders in Routine Practice: Systematic Review of Barriers and Facilitating Factors. JMIR Ment Health, 5, e20.

- Walker E, & Nowacki AS (2011). Understanding equivalence and noninferiority testing. Journal of General Internal Medicine, 26, 192–196.

- Wells K, Miranda J, Bauer M, Bruce M, Durham M, Escobar J, Ford D, Gonzalez J, Hoagwood K, Horwitz S, Lawson W, Lewis L, McGuire T, Pincus H, Scheffler R, Smith W, & Unutzer J (2002). Overcoming barriers to reducing the burden of affective disorders. Biological Psychiatry, 52, 655.

- Wiens BL, & Rosenkranz GK (2013). Missing data in noninferiority trials. Statistics in Biopharmaceutical Research, 5, 383–393.

- Willan AR, & O’Brien BJ (1996). Confidence intervals for cost-effectiveness ratios: an application of Fieller’s theorem. Health Economics, 5, 297–305.
