Abstract

The identification of interactions between drugs/compounds and their targets is crucial for the development of new drugs. In vitro screening experiments (i.e. bioassays) are frequently used for this purpose; however, experimental approaches are insufficient to explore novel drug-target interactions, mainly because of feasibility problems, as they are labour intensive, costly and time consuming. A computational field known as ‘virtual screening’ (VS) has emerged in the past decades to aid experimental drug discovery studies by statistically estimating unknown bio-interactions between compounds and biological targets. These methods use the physico-chemical and structural properties of compounds and/or target proteins along with the experimentally verified bio-interaction information to generate predictive models. Lately, sophisticated machine learning techniques are applied in VS to elevate the predictive performance.

The objective of this study is to examine and discuss the recent applications of machine learning techniques in VS, including deep learning, which became highly popular after giving rise to epochal developments in the fields of computer vision and natural language processing. The past 3 years have witnessed an unprecedented amount of research studies considering the application of deep learning in biomedicine, including computational drug discovery. In this review, we first describe the main instruments of VS methods, including compound and protein features (i.e. representations and descriptors), frequently used libraries and toolkits for VS, bioactivity databases and gold-standard data sets for system training and benchmarking. We subsequently review recent VS studies with a strong emphasis on deep learning applications. Finally, we discuss the present state of the field, including the current challenges and suggest future directions. We believe that this survey will provide insight to the researchers working in the field of computational drug discovery in terms of comprehending and developing novel bio-prediction methods.

Keywords: virtual screening, drug-target interactions, ligand-based VS and proteochemometric modelling, machine learning, deep learning, compound and bioactivity databases, gold-standard data sets

Introduction

The development of new drugs remains the key problem and challenge to improve the current field of biomedicine. Computational methods have been used in bioinformatics and cheminformatics studies for nearly three decades, to aid understanding the molecular mechanisms and propose novel treatment options for several diseases. Recent advances in computational power (e.g. massively parallel and computing on graphical processing units (GPU)) and in data analysis and inference techniques (e.g. artificial intelligence, machine learning and deep learning) provide opportunities for various fields of data science, including biomedicine.

In this study, our objective is to provide an overview of recent applications of computational drug discovery methods, called virtual screening (VS), where the aim is to predict the bio-interactions between drug-like small molecules (i.e. compounds) and potential target proteins for the identification of novel drugs, using structural and physico-chemical properties of compounds and targets along with the experimentally known (i.e. validated) bioactivities. In this review, we explored various data resources that provide vast amount of information, which is essential for conducting VS studies. We also investigated novel machine learning approaches with recent applications to drug-target interaction (DTI) prediction. In this framework, we discussed in detail the recent applications of deep learning techniques, which outperformed state-of-the-art VS methods. Finally, we stated our observations and comments about the current status of the field of VS.

We divided the text in six main chapters. The first chapter, introduction, defines the basic terminology, provide statistics regarding the relevant information stored in source biological databases, summarizes the experimental procedures along with computational approaches in drug discovery. The second chapter, descriptors and features for VS, lists and explains in detail molecular representations and descriptors for both compounds and targets. The third chapter, libraries and toolkits for VS, expresses the available computational tools and libraries to generate these descriptors/representations. The fourth chapter, compound and bioactivity databases and gold-standard data sets, explains the available repositories for bioactivity data. The fifth chapter, machine learning approaches in VS, provides an overview of the recent machine learning and data mining applications, including the deep learning for drug discovery, together with the explanations of performance evaluation metrics and a predictive performance comparison between the machine learning-based VS methods. The sixth and the last chapter, discussion and conclusion, summarizes the field and briefly discusses the future directions together with challenges.

The terminology used in this survey is given below:

A ligand is a molecular structure that physically binds another molecular structure and modulates its function.

A compound is a chemical structure that is formed by the combination of two or more atoms that are connected by chemical bonds.

Some of the compounds, bioactive compounds, modulate the functions of bio-molecules such as proteins.

A drug is an approved [by Food and Drug Administration (FDA), for example] bioactive compound that acts on protein targets to cure/decelerate a specific disease or to promote the health of a living being.

A target protein (or just a target) is a naturally occurring bio-molecule of an organism that is bound by a ligand and has its function modulated, which results in a physiological change in the body of the organism.

The Anatomical Therapeutic Chemical (ATC) Classification System is a controlled vocabulary to classify drugs hierarchically based on their therapeutic, pharmacological and chemical properties. There are five levels in each ATC code and each level of an ATC code represents a different property of drugs. The first level represents anatomical groups; the second level shows a therapeutic main group; the third level represents a therapeutic and pharmacological subgroup; the fourth level represents a chemical, therapeutic and pharmacological subgroup; and the fifth level shows the indicated chemical substance.

Cheminformatics is the application of computational techniques to the field of chemistry. Most of the VS methods are considered to be cheminformatics based.

It is important to note that, in this article, the terms: ‘small molecule’ and ‘compound’ are used synonymously to refer to the ‘chemical substances’. The term ‘bioactive compound’ corresponds to chemical substances with biological activities. The term ‘ligand’ represents a chemical substance that interacts with a target biomolecule to accomplish a biological purpose. The term ‘drug’ is used to represent approved bioactive compounds, which are currently being used in the clinics. ‘Active pharmaceutical ingredients’ (APIs) refers to the biologically active ingredient in a drug and is responsible for the interactions with cellular polymeric macromolecules as well as small secondary messenger molecules. The terms ‘biomolecule’, ‘receptor’, ‘target’ and ‘protein’ refer to the cellular biological molecules targeted by APIs and/or bioactive compounds.

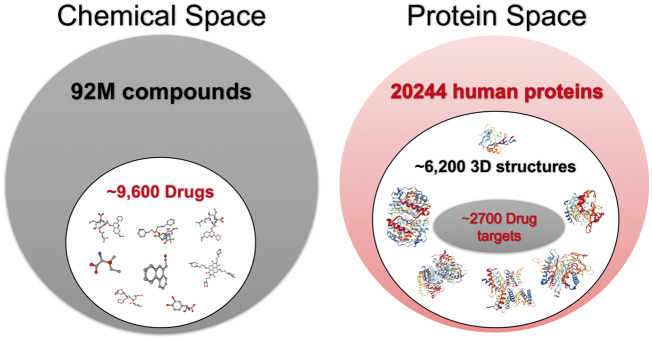

In terms of the statistics, there are tens of millions of compounds available in compound and bioactivity databases [1–4]. There are about 9000 FDA-approved small molecule drugs (approved + experimental) [5], roughly 550 000 reviewed protein records available (20 244 of which are human proteins) in protein sequence and annotations resources (e.g. UniProtKB/Swiss-Prot) and nearly 2700 of human proteins are known to be targeted by either approved or experimental drugs [1, 6]. The 3D structure information of proteins and compounds provide important qualities of these molecules to determine their functions and bioactivities. However, 3D structures of a relatively small subset of compounds (i.e. around 24 000) and human proteins (i.e. about 6200) are experimentally known (partly or completely) and currently available in Protein Data Bank–PDB (Figure 1) [5].

Figure 1.

Statistics of current chemical and protein spaces in open access chemical and biological data repositories.

The main role of drugs, which are bioactive compounds, is the alteration of cellular events involved in disease conditions for treatment purposes. The following two problems are of importance for the hit discovery, one of the initial steps in the development of new drugs:

Identification of novel bioactive compounds for a target protein; and

identification of new targets for known bioactive compounds.

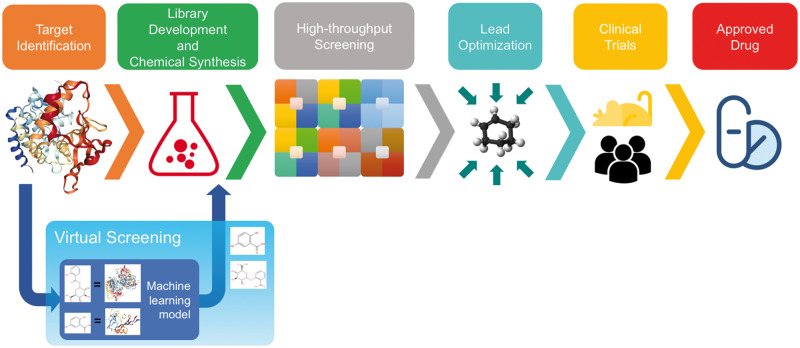

Drug discovery is defined as the process of identifying the roles of bioactive compounds to develop new drugs, and it is usually one of the initial steps in a drug development pipeline. Traditionally, drug research and development starts with the identification of the biomolecular targets for an intended treatment and proceeds with the high-throughput screening experiments to identify bioactive compounds for the defined targets, together with the corresponding bioactivity levels. The aim of high-throughput screening is to find suitable drug candidates. With the advancement of high-throughput screening technology, it is now possible to conduct experiments to scan thousands of different compounds and detect their bioactivity levels on selected target proteins [7]. However, designing high-throughput screening experiments is expensive, it is a time-consuming process, and it requires advanced laboratories having chemical and biological libraries. Furthermore, it is not feasible to conduct high-throughput screening experiments for all expressed proteins in the human genome and for all known compounds [8]. Another problem with high-throughput screening is its high failure rates, which limits the identification of novel drugs [9]. The problem escalates when we consider the process of drug development. The term drug development refers to the whole process to bring a drug to the market, starting with the drug discovery and ending with clinical trial phases. In Figure 2, main phases of the drug development procedure are shown. Most of the drug candidates fail to become an approved drug in the late phases of clinical trials because of the unexpected side effects and toxicity problems. In 2010, the cost of developing a single drug was estimated about 1.8 billion US dollars, and the process requires about 13 years the on average [8].

Figure 2.

A broad overview of drug development and the place of virtual screening in this process.

To address the abovementioned challenges and problems, computational methods have been developed and used in the past decades. The field of in silico estimation of unknown drug-target pairs using statistical models is called ‘virtual screening’–VS–(i.e. DTI prediction). In drug development pipelines, VS methods are mostly placed just before the high-throughput screening, so that the unlikely drug-target pairs are eliminated; as a result, only potentially active combinations are run through the experimental screening procedure (Figure 2). In this sense, VS has the potential to greatly reduce the cost and time required for high-throughput screening [10]. Although the main purpose of VS is to identify new drug candidates for specified targets, it also has other applications such as finding beneficial drug pairs [11] and the prediction of ATC codes for known drugs [12, 13]. In addition, the computational approaches mainly employed in VS can also be used for drug repurposing and off target effect identification, where the aim is to find new uses for the already approved drugs [14]. Drug repurposing is an important research area since the approved drugs are already tested for safety issues; therefore, the cost and the required time for marketing repurposed drugs is much less than discovering and marketing novel drugs [15]. There are various examples of repurposed drugs in the market, most of which are being used for treatments of multiple diseases [16].

There have been several successful applications of VS in detecting compounds with high affinities against pre-specified targets [17]. Some of these drug candidate compounds have also passed the clinical trials and became marketed drugs [18–22]. Doman et al. showed that their VS approach substantially improved the rate of identified drug candidates against protein tyrosine phosphatase-1B enzyme. The authors experimentally showed that the hit rate of their method was 34.8%, whereas the hit rate of the high-throughput screening experiment was only 0.021% [23]. Another successful application of VS was proposed by Powers et al., which led to the discovery of a novel inhibitor of AmpC ß-lactamase [24].

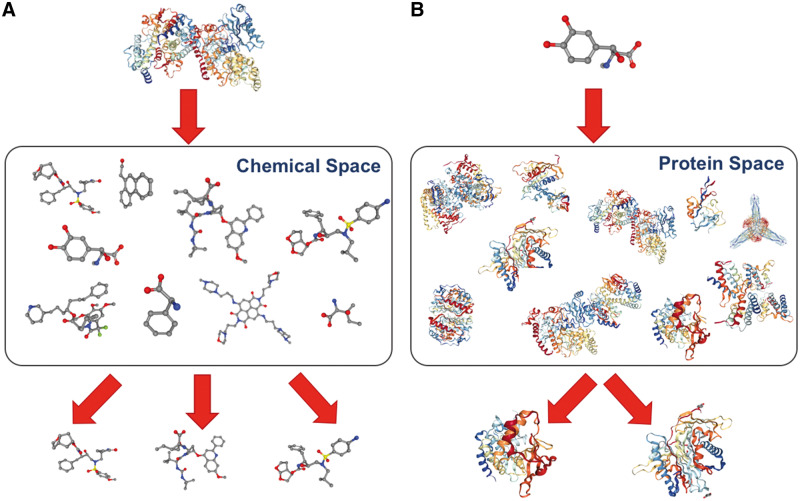

Both in high-throughput screening experiments and in conventional VS approaches, the aim is to identify whether a given set of compounds is bound to a pre-specified target protein or not. In these applications, off-target effects are generally overlooked and other possible targets of the compounds cannot be identified. However, it is known that most of the bioactive compounds act on multiple targets (which causes these off target effects); in fact, the cases where a compound interacts with only a one-target protein are considered as exceptional [25, 26]. The identification of the off-target effects is crucial to obtain potential side effect and toxicity information of the test compounds. For this purpose, another type of computational approach, target prediction (also known as the reverse VS), was proposed [27, 28]. In target prediction, a compound is screened against a large set of proteins with the aim of identifying all possible targets of the corresponding compound (Figure 3). Generally speaking, the goal of both approaches is the prediction of unknown interactions between various compound–protein pairs.

Figure 3.

(A) In conventional virtual screening, multiple compounds are screened against a pre-specified target, and candidate interacting compounds (i.e. ligands) are identified, whereas (B) in target prediction (i.e. reverse virtual screening), a compound is searched against multiple proteins and candidate targets are identified.

Most of the VS methods make use of biological, topological and physico-chemical properties of compounds and/or targets along with the experimentally validated bioactivity values of compound-target pairs to predict the unknown activities [29, 30]. For this, it is required to computationally record the compounds and targets as quantitative vectors (i.e. representations and descriptors) according to their molecular features. VS methods use these feature vectors as input to model the interactions between compounds and target molecules. VS methods can be divided into three groups based on the employed input features:

Structure-based VS employs 3D structure of targets and compounds to model the interactions [31, 32],

Ligand-based VS uses the molecular properties of compounds (mostly non-structural) to model the interactions with targets [29, 33, 34],

Proteochemometric modeling (PCM) approach models the interactions by combining non-structural descriptors of both compounds and targets at the input level [35–38].

Previously, VS was mainly divided in two groups (i.e. structure-based and ligand-based methods) [39, 40]; however, recent advances in PCM have put this field forward to be considered as a third group [37]. Both ligand-based and PCM methods can be considered as non-structure-based VS methods. The field of ligand-based VS has been extensively reviewed by Geppert et al. and Lavecchia and Di Giovanni [33, 34]. In another study, Glaab reviewed the recent developments in both ligand- and structure-based VS approaches. The author defined a comprehensive pipeline for VS over a target protein of interest and overviewed workflow management systems. The whole process was divided into four main steps, namely, data collection, pre-processing, screening, selectivity and ADMETox (i.e. absorption, distribution, metabolism, excretion and toxicity) filtering, and explained each step with a focus on relevant open-access software and databases. The author also implemented a downloadable cross-platform software by integrating open-access screening tools using the Docker platform [41]. Qiu et al. introduced the emergence of PCM and mentioned its advantages by referring to studies in which PCM models outperform conventional quantitative structure-activity relationship (QSAR) models in DTI modelling. The authors focused on the recent progress in PCM modelling in terms of target descriptors, cross-term descriptors and application scope of PCM, including protein-small molecule and protein-macro molecule interactions. The authors reported that, with further advancements in molecular representations, machine learning techniques and the available bioactivity data, it may be possible to generate PCM models for more complicated systems such as ligand-catalyst-target reactions, which could provide help to identify biochemical reactions more accurately [37]. The field of PCM was also reviewed by van Westen et al. and Cortés-Ciriano et al. [36, 38].

Structure-based VS methods can only be applied when the 3D structure of both targets and the candidate compounds are available, which are either experimentally determined by X-ray crystallography or Nuclear magnetic resonance (NMR), or predicted by computational approaches such as the homology modelling. Once the 3D structural information is obtained, docking can be applied to find interactions between a compound and a target, which predicts compound conformations in the binding site of the target using search algorithms and ranks them via scoring functions representing estimated binding affinities [23, 27]. Some of the most commonly used docking tools are AutoDock [42], DOCK [43], Glide [44], GOLD [45], FlexX [46] and Fred [47]. These methods rely on the conformations of atoms in 3D space; as a result, they are computationally intensive since the number of possible conformations of proteins and compounds increase exponentially with the increasing number of rotatable bonds. Moreover, the calculation of binding energies is a problematic issue [17]. In addition to these traditional methods, there are also similarity-based docking approaches such as HomDock [48], eSimDock [49] and fkcombu [50] that use structural similarities of compounds to predict their protein-bound states by aligning them on the experimentally determined 3D structure of a reference compound that is in complex with a target protein or evolutionarily related structures of that target protein [49]. Therefore, they do not require searching for low energy conformations of compounds contrary to conventional methods, which reduces the computational cost and makes them faster than traditional docking methods [48]. Both approaches can achieve high performance in estimating the interactions; however, their applicability is limited since the structural information is not available for the majority of the proteins and compounds, and the experimental identification of the 3D structures is challenging [8]. Although homology models of proteins can be used as templates for docking, it is not possible to obtain a reliable model for all proteins because of the lack of a reference protein structure that is evolutionarily close to the target protein to be modelled. Even if similarity-based docking approaches are less sensitive to weakly homologous protein models [49], they are not feasible in the absence of similar compounds to the reference compound. Therefore, non-structure-based VS methods are more preferable if a reliable target structure is not available [51]. It was reported in the literature that the non-structure-based methods have a similar potential to detect drug targets as the structure-based methods [52]. In addition, several studies showed that structure and non-structure-based methods often provide complementary results [28, 53–55]. There are also hybrid-type methods that combine 3D structure information along with the ligand-based information in the literature [51]. Structure-based VS methods are out of the scope of this study, and information about this field can be obtained from the literature [31, 32, 52, 56, 57].

Descriptors and features for VS

Compounds and biological target molecules are required to be quantized to be used in VS models. Molecular representations and descriptors are employed for this purpose. A descriptor should reflect the intrinsic physical and chemical properties of the corresponding molecule, so that the statistical model can learn and generalize the shared properties among the molecules that lead to the interaction between compounds and targets. After the models are constructed using the descriptors of known ligand-receptor pairs, interaction predictions are produced for the unknown ligand-receptor couples, by providing query descriptors as input to the model.

There are various types of descriptors both for small molecule compounds and target proteins, each have strengths and weaknesses in terms of the power of representation of molecular properties. The descriptors, which are highly used in the literature, are explained in this chapter, which is further divided into two subsections: compound and target descriptors. In the first subsection, we first describe the line representations that are used to store and search compounds in data repositories. Subsequently, several types of numerical descriptors for compounds are explained. Finally, target descriptors are investigated.

Compound descriptors

Line notations have been proposed to express the 2D structures of compounds as a string of characters [58–60], to be able to computationally store and search them in chemical databases. Line notations are also used by cheminformatics libraries and toolkits to generate molecular descriptors. Each line notation uses a distinct algorithm to represent structures and chemical properties (i.e. atoms, bonds and aromaticity) of compounds. The most popular line notations are SMILES [58] and InChI [59] notations (for detailed information, please refer to the supplementary material). Graphical representations are drawings of compounds to display the positions of its atoms and bonds in 2D- or 3D. Most chemical databases (e.g. PubChem and ChEMBL) provide both line and graphical representations for the recorded compounds. Table 1 includes example graph and line notation representations for a sample compound.

Table 1.

Chemical formula, 2D/3D graphical representation, SMILES and InChI notations of aspirin

| Category | Representation | |

|---|---|---|

| Compound name | Aspirin | |

| Chemical formula | C9H8O4 | |

| 3D/2D structure |

|

|

| SMILES | CC(=O)OC1 = CC=CC=C1C(=O)O | |

| InChI | InChI = 1S/C9H8O4/c1-6(10)13-8-5-3-2-4-7 (8)9(11)12/h2-5H, 1H3, (H, 11, 12) | |

Molecular descriptors are representative numerical vectors (i.e. feature vectors) for compounds that are generated by algorithms based on the geometrical, structural and physiochemical properties. There are more than a thousand different types of molecular descriptors in the literature [61]. Molecular descriptors are categorized based on the dimensionality of the included information. A popular sub-group of molecular descriptors are fingerprints (i.e. binary vectors), where each dimension of the vector represents presence (1) or absence (0) of a particular property. Fingerprints are used to represent compounds by their chemical bonds, structural fragments, functional groups and connectivity pathways. Several studies have been performed to investigate the effects of choice of fingerprints on prediction performance in VS [62–66]. These studies showed that each fingerprint type represents different aspects of compounds; therefore, selection of fingerprints is crucial for VS [62]. Sawada et al. trained several models using 18 different compound descriptors to compare prediction performance of these descriptors [67]. They showed that KEGG chemical function and substructures (KCF-S) fingerprints performed best among 18 different individual fingerprints based on multiple criteria. However, the dimensionality of the KCF-S vector is considerably higher compared with the conventional compound fingerprints [i.e. 63 891 as opposed to 1024 for extended connectivity fingerprints (ECFP4)], which significantly increases the computational complexity, and it is debatable if the obtained performance increase worth the significant increase in computational requirements. The authors also showed that integrating multiple descriptors usually improve the predictive performance; nevertheless, the performance gain was not significant in most cases. In another study, Cano et al. used several compound descriptors with random forest algorithm for automatic selection and ranking of molecular descriptors based on relevancy [68]. Their report indicated that automatically selected and combined features significantly enhanced the prediction accuracy. Duan et al. reported that no fingerprint method could outperform the others considering all targets and that different types of fingerprints are effective on different targets [65]. Bender et al. compared 37 different fingerprints that belong to four classes of molecular descriptors (i.e. circular fingerprints, circular fingerprints considering counts, path-based and keyed fingerprints and pharmacophoric descriptors) [69]. They reported that different fingerprints retrieved different active compounds, and combination of multiple fingerprints provided the best performance. Their evaluation results showed that ECFP4 performed best, when the fingerprints were evaluated individually. Soufan et al. created several types of compound features and used a wrapper method (please see supplementary material for details regarding feature selection methods) to create the most representative features for training [70, 71]. The authors showed that combining several features with a classifier performance aware system enhanced the prediction results.

To sum up, it can be said that each conventional molecular descriptor is capable of representing different properties of compounds. For example, substructure keys-based fingerprints are created based on the presence or absence of predefined substructures in compounds (e.g. MACCs [72]); circular fingerprints can be used to represent structural properties of compounds, independent of a pre-defined key set (e.g. ECFPs [73]). On the other hand, pharmacophore descriptors can represent complex physico-chemical properties of compounds. Therefore, combining different molecular descriptors is frequently preferred in the literature. Compound descriptors and their properties are listed in Table 2. Citations column in this table references the studies from the literature that used the corresponding descriptors in their methods.

Table 2.

Compound descriptors: categories, properties and fingerprints

| Descriptor category | Properties | Fingerprints | Citations |

|---|---|---|---|

| 0D descriptors |

|

[76] | |

|

| |||

| 1D descriptors |

|

[61] | |

|

| |||

| 2D descriptors |

|

|

[65, 67, 73] |

|

| |||

| 3D descriptors |

|

|

[61, 77] |

|

| |||

| Non-structure-based molecular descriptors |

|

|

[78, 79] |

Calculation of pairwise similarities of compounds based on fingerprints is another important issue in VS. Various types of measures have been proposed for this purpose such as the Dice coefficient [74] or the Tanimoto coefficient, which currently is the most popular similarity measure for compounds [62, 75]. Bajusz et al. performed statistical analysis and ranking of eight different similarity metrics using the sum of ranking differences and the analysis of variance methods [62]. They used ECFP4 and Chemaxon Chemical Fingerprints to represent compounds. The authors first showed that all the similarity metrics had significantly better performance compared with the random selection. They reported that Cosine, Dice, Tanimoto and Soergel metrics performed better than the others. For more details about fingerprints and similarity measures, please refer to the first section in the supplementary material.

Target protein descriptors

In proteochemometrics, both ligand and target spaces are modelled to accurately predict DTIs in a large scale. Hence, target protein descriptors are employed along with compound molecular descriptors in PCM [35, 38]. Considering the type of protein properties used for the feature generation, target descriptors are mainly categorized as sequence- and structure-based descriptors. While sequence-based target descriptors use the amino acid sequence of proteins, which can be retrieved from UniProt Knowledgebase (http://www.uniprot.org) [80], structure-based descriptors use 3D atomic coordinates of proteins retrieved from Protein Databank–PDB– (http://www.rcsb.org) [81]. In terms of the properties they describe, target descriptors can roughly be divided into six groups, as briefly explained below and shown in Table 3. Citations column in this table references the studies from the literature, which employed the corresponding descriptors in their methods.

Table 3.

Categories of target descriptors based on the properties they describe

| Descriptor category | Descriptor type | Citations | |

|---|---|---|---|

| Sequence composition |

|

[82–85] | |

|

| |||

| Physico-chemical properties |

|

[36, 82–84, 86] | |

|

| |||

| Similarity measures |

|

[36, 86–91] | |

|

| |||

| Topological properties |

|

[36, 84] | |

|

| |||

| Geometrical characteristics |

|

[86, 88, 92, 93] | |

|

| |||

| Functional sites |

|

[83, 84, 94, 95] | |

Descriptors based on sequence composition reflect the occurrence frequencies of different amino acid combinations on a protein sequence [96]. Descriptors based on physico-chemical properties describe protein sequences in terms of a combination of physical and chemical properties of amino acids such as hydrophobicity, van der Waals volume, polarity, polarizability, charge, secondary structure and solvent accessibility [36, 82, 96–109]. Descriptors based on similarity measures use similarities between proteins via sequence or structural alignments, based on the idea that similar targets may interact with similar compounds [110–118]. Descriptors based on topological properties characterize amino acids according to atom-connectivity indices generated from molecular graphs [119, 120]. Descriptors based on geometrical characteristics reflect structural characteristics of proteins related to shape, size, atomic positions in space, etc., mainly including residue–residue contacts, bond lengths, bond angles and torsion angles between atoms of residues, secondary structures, flexibility and solvent accessibility of proteins [92, 121–124]. Descriptors based on functional sites describe certain functional characteristics of proteins that can be responsible for the interactions with other molecules such as proteins, small molecules and nucleic acids [38, 94, 95, 125, 126]. For detailed information about different types of target descriptors, please refer to the supplementary material.

The selection of descriptor sets is important to be able to generate high-performance predictive models using PCM. There are a few studies on benchmarking of target descriptors. In 2007, Ong et al. evaluated the effectiveness of 10 commonly used descriptor sets (i.e. amino acid composition (AAC); dipeptide composition (DC); three types of autocorrelation; Composition, transition and distribution (CTD); Quasi-sequence-order descriptors (QSO); Pse-AAC; combination of AAC and DC; and combination of the first eight descriptors) for the prediction of protein functional families using support vector machines. The authors reported that the selected descriptors were effective in general, and their performance did not significantly differ from each other although combined sets of descriptors provided better results [82]. In another study, van Westen and colleagues [36] compared the performances of 13 different types of amino acid descriptors (i.e. three variants of z-scales, BLOSUM, FASGAI, MSWHIM, T-scales, ST-scales, VHSE and four variants of ProtFP as a novel descriptor set) and their combined versions, for bioactivity modelling using random forest classifiers. According to their findings, z-scale descriptors and combined sets were consistently better than the others while ProtFP and ST-scales descriptors consistently performed worse. Furthermore, they showed that the generated PCM models outperformed QSAR model that uses only compound descriptors. Shaikh et al. also developed PCM models for the prediction of DTIs using sequence- and structure-based descriptors, employing different machine learning techniques. The authors reported that, while models generated using random forests and support vector machines outperformed the others, there was no significant difference between the two types of descriptor sets in terms of the model performance. As a result, the authors stated that using sequence-based descriptors was more advantageous as it comprised a larger set of proteins [83]. Apart from these studies, Sun et al. performed an analysis for the prediction of RNA-binding protein residues using the random forest algorithm. They developed different predictive models based on five types of protein features, including similarity measures, geometrical characteristics and physico-chemical properties of amino acids as well as two newly developed structural features. Among all models generated using these features separately, and in different combinations, they found that the model with the highest performance was the one combining all these five features [86]. Based on these studies, it can be inferred that there is no outstanding descriptor type that represents the proteins to achieve a significantly higher predictive performance. Therefore, we suggest researchers to select protein descriptors specific to the problem at hand by carrying out performance comparison tests. Also, combinations of different protein features should be considered in these tests to be able to capture distinct aspects of proteins in one model.

Libraries and toolkits for VS

One issue in VS field is finding a convenient resource (i.e. a computational tool or a programming library) to accomplish specific tasks such as the construction of molecular descriptors, interconversion between two different representations, calculation of pairwise molecular similarities or the applications of various statistical and machine learning algorithms for DTI prediction. Several libraries and toolkits have been developed for these purposes, each supported by different operating system(s) and programming language(s). In this chapter, we describe these libraries and toolkits.

Table 4 provides information on tools, their features and computational dependencies for compounds. Further information about compound-specific toolkits and libraries can be found in the supplementary material. Target descriptors are valuable sources to be used in predictive models not only for DTI prediction but also for protein structure and function prediction and estimation of protein-protein interactions. To facilitate the retrieval of protein data and calculation of protein features, a vast number tools and data services have been constructed. Some of currently available tools and libraries are shown in Table 5 and explained in detail in the supplementary material.

Table 4.

Libraries and toolkits for cheminformatics

| Tools and libraries | Basic properties and included descriptors | Operating systems | Programming languages |

|---|---|---|---|

| RDKit [127] |

|

Microsoft Windows, Linux, Mac OSX | Python; wrappers are available for Java and C# |

|

| |||

| OpenBabel [128] |

|

Microsoft Windows, Linux, Mac OSX | C++, Perl, Python interfaces |

|

| |||

| Dragon [129] |

|

Microsoft Windows, Linux | Stand-alone application |

|

| |||

| DayLight Tookit [130] |

|

Microsoft Windows, Linux, Solaris | C, Fortran; Wrappers are available for Java and C++ |

|

| |||

| Chemistry Development Tookit [131] |

|

Microsoft Windows, Linux, MacOSX | Java |

|

| |||

| Open Eye Toolkit [132] |

|

Microsoft Windows, Linux, MacOSX | C++; Wrappers are available for Python, Java, and.NET |

|

| |||

| ChemmineR [133] |

|

Microsoft Windows, Linux, MacOSX | R |

|

| |||

| Indigo [134] |

|

Microsoft Windows, Linux, MacOSX | C++; Java, Python, Wrapper is available for .NET |

Table 5.

Libraries and toolkits for protein analysis (including VS)

| Tools and libraries | Basic properties and included descriptorsa | Operating systems | Programming languages |

|---|---|---|---|

| PROFEAT [95], ProPy [135], PyDPI [136] | AAC, DC, TC (ProPy and PyDPI), autocorrelation, CTD, CTriad (only PyDPI), SOCN, QSO, Pse-AAC, Am-Pse-AAC, topological descriptors (only PROFEAT), total amino acid properties (only PROFEAT) | Microsoft Windows, Linux | PROFEAT:

|

|

| |||

| protr/ProtrWebb [137], Rcpi [138] | AAC, DC, TC, autocorrelation, CTD, CTriad, SOCN, QSO, Pse-AAC, Am-Pse-AAC, scales-based descriptors derived by PCA, factor analysis, and multidimensional scaling, BLOSUM/PAM matrix derived descriptors, PSSM profiles, similarity measures based on sequence alignment and GO annotation semantic similarity | Microsoft Windows, Linux, MacOSX | protr/ProtrWeb:

|

|

| |||

| camb [139] | AAC, DC, TC, autocorrelation, CTD, CTriad, SOCN, QSO, Pse-AAC, Am-Pse-AAC, Z-scales, T-scales, ST-scales, VHSE, MSWHIM, FASGAI, ProtFP8, BLOSUM62 | Linux, Mac OS | C++, Java, Python, R |

|

| |||

| ProFET [140] | Various features based on biophysical quantitative properties, letter-based features, local potential features, information-based statistics, AA scale-based features, and transformed CTD features | Linux | Python |

|

| |||

| BLAST [141], ClustalWc [142] | Heuristic pairwise sequence alignments/database search (BLAST), multiple sequence alignments (ClustalW) | Microsoft Windows, Linux, MacOSX | Web server, C, C++ |

|

| |||

| DALI [143], MultiProt [144], TM-align [145], RCSB PDB Comparison Tool [146] | Protein global structure alignments |

|

All: Web server,TM-align:Fortran, C++ |

|

| |||

| SiteEngine [147], APoc [148], eMatchSite [149], G-LosA [150] | Protein local structure alignmentsd | Linux |

|

|

| |||

| POSSUM [151] | PSSM profile-based feature descriptors | Microsoft Windows, Linux, MacOSX | Web server, Perl, Python |

|

| |||

| GOSemSim [152] | Gene Ontology annotation semantic similarity | Microsoft Windows, Linux, MacOSX | R |

|

| |||

| FragHMMent [153] | Prediction of residue-residue contacts | Linux | Java |

|

| |||

| PSIPRED [154] | Secondary structure prediction | Linux | Web server, C |

|

| |||

| Naccess [155], POPS [156] | Solvent accessible surface area | Naccess: Linux, POPS: Microsoft Windows, Linux, MacOSX | Naccess: Fortran, POPS: Java |

|

| |||

| PocketPicker [157] | Prediction of protein binding pockets | Linux | PyMol plugin |

|

| |||

| SCREENc [158], trj_cavity [159] | Identification of protein cavities | trj_cavity: Linux | SCREEN: Web server, trj_cavity: C++ |

Descriptor names are abbreviated according to information in Section 2.B Target Protein Descriptors.

ProtrWeb only provides AAC, DC, TC, Autocorrelation, CTD, CTriad, SOCN, QSO, Pse-AAC and Am-Pse-AAC descriptors.

ClustalW has been retired and replaced with Clustal Omega. The original SCREEN tool is also replaced with SCREEN2.

These tools can also be included in ‘prediction of protein binding pockets’ part, which are mainly used for this purpose.

Open access web applications, online tools, data sets and source codes for VS, provided in the websites or in the supplementary material of the reviewed studies, are given in Table 6. Most of the VS studies in the literature describe methodologies and test them on various data sets, without providing open access web-services or tools that researchers can use to carry out their own analysis. The underlying reason is that, successful VS tools have potential to be employed in the pharmaceutical industry; as a result, the researchers often choose to develop commercial products with their methods. There are several commercial VS services and tools on the market. We did not provide any information regarding these commercial products, as they are out of scope of this study.

Table 6.

Open access web services, online tools and data sets provided in the reviewed VS studies

| Article | Method/tool name | Website | Resource type |

|---|---|---|---|

| Gfeller et al.[160] | SwissTargetPrediction | http://www.swisstargetprediction.ch | Web service |

|

| |||

| Shi et al. [161] | – | http://www.bmlnwpu.org/us/tools/PredictingDTI_S2 /METHODS.html | Source Code/Data set |

|

| |||

| Yabuuchi et al. [162] | – | http://msb.embopress.org/content/7/1/472 | Data set (Supplementary) |

|

| |||

| Iwata et al. [11] | – | https://pubs.acs.org/doi/abs/10.1021/acs.jcim.5b00444 | Data set (Supplementary) |

|

| |||

| Liu et al. [12] | SPACE | http://www.bprc.ac.cn/space | Web tool |

|

| |||

| Ma et al. [163] | – | https://pubs.acs.org/doi/abs/10.1021/ci500747n | Data set (Supplementary) |

|

| |||

| Koutsoukas et al. [164] | – | https://jcheminf.springeropen.com/articles/10.1186/s13321 -017-0226-y | Source Code/Data set (Supplementary) |

|

| |||

| Wen et al. [85] | DeepDTIs | https://github.com/Bjoux2/DeepDTIs_DBN | Source Code/Data set |

|

| |||

| Wallach et al. [165] | AtomNet | – | Commercial |

|

| |||

| Altae-Tran et al. [166] | DeepChem | https://github.com/deepchem/deepchem | Source Code/Data set |

|

| |||

| Soufan et al. [70] | DRABAL | https://figshare.com/articles/ DRABAL/3309562 | Source Code/Data set |

Databases and gold-standard data sets

The aim of this chapter is to provide a brief overview of the open access chemical and biological data repositories and the available gold-standard data sets that are widely used in VS. Compound and target databases, together with the tools that they provide, are crucial for the development of novel VS methods. The databases for compounds, bioactivities and proteins and their statistics are given in Table 7.

Table 7.

Databases of chemicals/compounds, bioactivities and target proteins, statistics and links

| Compound and bioactivity databases | Statisticsa |

Website | Version | ||

|---|---|---|---|---|---|

| Compounds | Targets | Interactions | |||

| PubChem [1] | 93 977 773 (C) 235 653 627 (S) | 10 341 (P) | 233 799 255 (I) 1 252 820 (E) | https://pubchem.ncbi.nlm.nih.gov | 03.12.2017 |

| ChEMBL [2] | 1 735 442 (C) | 11 538 (P) | 14 675 320 (I) 1 302 147 (E) | https://www.ebi.ac.uk/chembl | v23 |

| DrugBank [5] | 9591 (D) | 4270 (P) | 16 748 (I) | http://www.drugbank.ca | v5.0 |

| STITCH [167] | ∼500 000 (C) | 9 643 763 (P) | ∼1.6 billion (I) | http://stitch-beta.embl.de | v5.0 |

| BindingDB [168] | 635 301 (C) | 7000 (P) | 1 419 347 (I) | http://www.bindingdb.org | 03.12.2017 |

| BindingMoad [169] | 12 440 (C) | 7599 (F) | 25769 (I) | http://bindingmoad.org | Rel. 2014 |

| KEGG [170] | 18 211 (C) 10 484 (D) | 976 (P) | 6502 (I) | http://www.kegg.jp | Rel. 84.1 |

| DCDB [171] | 904 (D) 1363 (DC) | 805 (P) | – | http://www.cls.zju.edu.cn/dcdb/index.jsf | v2.0 |

| T3DB [172] | 3673 (T) | 2087 (P) | 42471 (I) | http://www.t3db.ca/ | v2.0 |

|

| |||||

| Side effect databases | Statisticsa | Website | Version | ||

|

| |||||

| SIDER [173] | 1430 (D), 5868 (SE), 139 756 (A) | http://sideeffects.embl.de | v4.1 | ||

|

| |||||

| Metabolome databases | Statisticsa | Website | Version | ||

|

| |||||

| HMDB [174] | 114 089 (M) | http://www.hmdb.ca/ | v4.0 | ||

|

| |||||

|

Chemical databases |

Compounds |

|

|

Website |

Version |

| ChemSpider [3] | ∼62 000 000 (C) | http://www.chemspider.com | 03.12.2017 | ||

| ChEBI [4] | 53 495 (C) | https://www.ebi.ac.uk/chebi | Rel. 158 | ||

| ZINC [175] | ∼100 000 000 (C) | http://zinc15.docking.org/ | ZINC 15 | ||

|

| |||||

| Target databases | Statisticsa | Website | Version | ||

|

| |||||

| AAindex [176] | AAindex1:566 indices, AAindex2:94 matrices, AAindex3:47 contact potential matrices | http://www.genome.jp/aaindex | Rel. 9.2 | ||

| UniProtKB [80] | Swiss-Prot: 556 196 (P), TrEMBL: 98 705 220 (P) | http://www.uniprot.org | v2017_11 | ||

| InterPro [177] | 2128 (SF), 20 410 (F), 8840 (DM) | https://www.ebi.ac.uk/interpro/ | v66 | ||

| Pfam [178] | 16 712 (F) | http://pfam.xfam.org/ | v31.0 | ||

| RCSB PDB [81] | 125 799 (P) | https://www.rcsb.org/ | 28.11.2017 | ||

| sc-PDB [179] | 6326 (C), 4782 (P), 16 034 (I) | http://bioinfo-pharma.u-strasbg.fr/scPDB/ | Rel. 2017 | ||

| CATH [180] | 6119 (SF), 434 857 (DM) | http://www.cathdb.info | v4.2 | ||

| SCOPe [181] | 2008 (SF), 4851 (F), 244 326 (DM) | https://scop.berkeley.edu | v2.06 | ||

Abbreviations in the statistic column: compound (C), substance (S), drug (D) protein (P), protein family (F), interaction (I), experiments (E), associations (A), toxin (T), side effects (SE), drug combination (DC), metabolite (M), domain (DM), superfamily (SF).

Compound, bioactivity and target protein databases

With the improvements in the drug screening technologies and VS methods, the amount of both the experimental bioassay data and computationally produced DTI data are increasing. Therefore, researchers require structured chemical and biological databases to store and publish this vast amount of data in a well-organized way. A chemical database of bioactive molecules (i.e. a compound database) is a resource that contains several properties of chemical substances such as 2D and 3D structures, physical and chemical attributes, molecular descriptors, side effects and clinical information, as well as targets and activity measurements. The public release of large-scale experimental bioactivity data, mostly from high throughput screening (HTS) assays, has started a new era in computational biomedical research. Research groups from all around the world have started to access and analyse the data, which boost the field of computational drug discovery (specifically VS) in the past decade. In this sense, the prominent bioactivity and compound data resources can be listed as PubChem [1], ChEMBL [2], DrugBank [5], STITCH [167], BindingDB [168], BindingMoad [169], KEGG [170], SIDER [173], DCDB [171], HMDB [174] and T3DB [172]. Although the discussed databases have common properties, they also complement each other by providing different features. For example, PubChem contains the largest bioactivity data for compounds—mainly retrieved from HTS experiments—and the other databases generally import data from PubChem. ChEMBL is also a large-scale compound and bioactivity database. However, one of the most significant differences of ChEMBL from the other large-scale sources is that the provided data are manually curated by experts from the literature in a comprehensive manner, making ChEMBL a more reliable resource, whereas the PubChem data are non-curated. ChEMBL also categorizes targets as ‘Single Protein’, ‘Protein Family’ and ‘Protein Complex’ and assigns a confidence score to state the specificity of compound activity. The main advantage of using PubChem over the other resources is its unmatched high volume (i.e. in terms of the number of bioassays, bioactivities, compounds and targets). Another bioactivity database BindingDB contains only experimentally validated bioactivity values of compound-target complexes without considering other functional assay results. BindingDB directly provides validation data sets for computational drug design studies. In contrary to PubChem, ChEMBL and BindingDB, BindingMoad is a small-scale bioactivity database, which includes high-resolution 3D structures of proteins and their ligand annotations for related protein-ligand interactions. In this sense, BindingMoad is especially convenient to be employed for the structure-based VS approaches. As an extensive network of biological systems, KEGG is a valuable resource for understanding functional hierarchies of biological events involving molecular interactions, pathways and disease mechanisms from molecular-level information of genes and genomes extracted from large-scale data sets of genome sequencing or other high-throughput experimental techniques. DrugBank database includes information regarding the approved and experimental drugs along with their target associations; hence, it is a small-scale database. However, DrugBank covers almost all aspects of drugs as a manually curated biomedical resource with high-quality standards. The data obtained from DrugBank is often used in test sets for novel large-scale VS methods. SIDER and STITCH are sister projects, where the former focuses on side effect information, and the latter focuses on the compound-target interactions under biological networks point of view. Therefore, it is quite common to combine complementary features from these databases, when applicable. In addition to the abovementioned resources, there are also useful databases such as DCDB, HMDB and T3DB, which focus on drug combinations, human metabolites and toxic substances, respectively. Considering these bioactivity databases, PubChem, ChEMBL, Binding MOAD and BindingDB represent activity data with quantitative measurements such as the IC50, EC50, Ki and potency, while DrugBank, STITCH, KEGG, DCDB, HMDB and T3DB only provide the information regarding presence of an activity/interaction between the corresponding drug-target pairs.

A protein information database includes protein sequences as well as their physico-chemical and biochemical properties, together with detailed functional annotation and structural information to provide data that can be used for various purposes, including function prediction and drug discovery. Many compound and target databases were constructed with the manual curation of the literature by expert scientists. Most of the databases also incorporate data from third-party resources and provide cross-references. UniProt is the main resource of protein sequence and annotation [80]. It presents a comprehensive protein repository, a central hub, importing and organizing vast amount of information from third-party protein resources as well. The PDB includes experimental protein structure information [81], which is crucial for structure-based VS studies, as well as for PCM. AAindex is a database of physico-chemical and biochemical properties of amino acids and a highly used resource for VS [176]. InterPro, Pfam, CATH and SCOP resources classify proteins in structural and functional groups/families, using pre-defined curated sequence motifs and structural domains [177, 178, 180, 181]. Further information about these data resources can be obtained from the supplementary material.

Gold-standard data sets for VS

In machine learning, the term ‘gold-standard data sets’ refers to reliable sets of information created to address a particular problem, which can be used for the following purposes:

development (i.e. training and testing) of computational methods;

adjustment of the parameters of computational methods;

evaluation of the performance of trained models; and

benchmarking to compare the performances of various prediction models.

In VS, gold-standard data sets generally comprise manually curated compound-target pairs and their bioactivity values. The abovementioned data repositories provide data that can be used for model training and benchmarking; however, it is not easy to understand which database to employ at which step, to obtain the required data. Therefore, data set construction is one of the critical steps in VS studies. Although these databases provide cross-references to each other to some extent, the data are mostly disconnected, and it is often non-trivial to carry out data integration operations on different resources, which requires expert-level knowledge. As a result, expert curated gold-standard data sets are extremely valuable for the community.

Because of the lack of adequate experimental data and publicly available data repositories, it was a significant problem to define a suitable gold-standard data set for benchmark studies until 10 years ago. The early data sets were either too small or proprietary. For example, a data set generated in 1988 for comparative molecular field analysis included only 21 varied steroid structures for the analysis of their binding affinities to human corticosteroid- and testosterone-binding globulins [182]. In 2001, Hert et al. generated a data set for the comparison of different types of 2D fingerprints used in similarity-based VS with a total of 11 activity class, each of which was involving active compounds in a range of approximately 300–1200. However, this data set was derived from MDL Drug Data Report database, which is licensed and not publicly available [183].

As one of the first gold-standard data sets that is large enough and freely accessible, Yamanishi et al. created a data set with four classes (i.e. families) of targets that are enzymes, ion channels, G-protein coupled receptors (GPCRs) and nuclear receptors [90]. These target families are explained in the supplementary material. The data set by Yamanishi et al. involves only human proteins and was constructed using KEGG BRITE, BRENDA, SuperTarget and DrugBank databases and generated mainly for evaluating and training of their own VS method. This data set can be reached via: http://web.kuicr.kyoto-u.ac.jp/supp/yoshi/drugtarget/. The numbers of targets in these data sets are 664, 204, 95 and 26, whereas the numbers of DTIs are 2926, 1476, 635 and 90, respectively, for each class. An updated version of the data set was later created again by Yamanishi et al., including the same target classes [184]; this time using the JAPIC database (http://www.japic.or.jp/). The numbers of the targets in the updated set are the same as previous data set, and the numbers of the interactions are 1515, 776, 314 and 44, respectively, for each class. Yamanishi’s sets were generated to train and test the performances of network/graph-based DTI prediction methods; thus, they are among the most used benchmarking data sets for network/graph-based approaches. However, they usually are not suitable for machine learning approaches, which require large training data sets. Yamanishi’s gold-standard sets can be downloaded from http://cbio.mines-paristech.fr/∼yyamanishi/pharmaco/.

Huang, Irwin and Shoichet have generated a benchmarking data set called directory of useful decoys (DUD) for testing VS methods, by curating challenging decoys that have a low probability of interacting with the selected targets. The DUD data set contained active compounds for the selected targets together with 50 decoys for each active compound, which have similar physico-chemical properties but different topology [185]. As an updated and enhanced version of DUD (DUD-E) with more diverse target classes such as GPCRs and ion channels (along with enzymes and nuclear receptors), DUD-E contains 22 886 ligands and their affinities against 102 targets retrieved from the ChEMBL database, together with property-matched decoys obtained from the ZINC database. The data set is freely available at http://dude.docking.org [186].

Another benchmark data set designed for VS is maximum unbiased validation (MUV), which was generated from PubChem bioactivity data by topological optimization based on a refined nearest neighbour analysis. MUV provides randomly distributed sets of active compounds—selected from potential actives—and inactive compounds—selected from potential decoys—that minimizes the influence of data set bias on validation results. The workflow used for the generation of optimized MUV data set is also freely available as a software package that can be applied on other activity data sets for optimization. The data set and the software package can be accessed via https://www.tu-braunschweig.de/pharmchem/forschung/baumann/muv [187].

In 2012, Merck sponsored a drug target interaction challenge over Kaggle data competition service (https://www.kaggle.com/c/MerckActivity). They provided 164 024 compounds for 15 biologically relevant targets. For each activity, they provided a list of chemicals along with their molecular descriptors and bioactivity measurement values. The participating teams tried to predict the experimentally known held-out interactions among the overall data set. The evaluation mechanism and the performance results of the teams are available in the competition page. Following the end of the competition, the held-out evaluation sets were released, which can now be used as benchmarking data sets for different VS approaches. The data sets are explained in the publication by Ma et al. [163] and available at https://www.kaggle.com/c/MerckActivity/data.

Another data set called Tox21 is also commonly used in machine learning-based computational drug discovery applications. This data set has been generated by The Tox21 Data Challenge community in 2014 to evaluate the performances of different computational methods in terms of toxicity prediction. The data set comprises approximately 12 000 environmental chemicals and approved drugs screened in 12 different bioassays related to nuclear receptor signalling and stress response pathways to reveal their toxic effects based on the disruption of these processes [188].

There are also novel approaches for generating gold-standard data sets, especially for deep learning applications in DTI prediction. Wu et al. developed a platform, MoleculeNet, as a benchmark collection for machine learning methods used in molecular systems. The curated data set of MoleculeNet contains nearly 700 000 compounds retrieved from publicly available databases such as QM7/QM7b, QM8, QM9, ESOL, FreeSolv, Lipophilicity and PDBbind for regression data sets and PCBA, MUV, HIV, BACE, BBBP, Tox21, ToxCast, ClinTox and SIDER for classification data sets. The data were split into training/validation/test subsets and tested on a range of categories, such as quantum mechanics, physical chemistry, biophysics and physiology. Furthermore, MoleculeNet provided evaluation metrics and open-source implementations of several well-known molecular featurization methods and machine learning algorithms. All parts of MoleculeNet have also been integrated into DeepChem open-source framework (https://github.com/deepchem/deepchem) [189]. Apart from these gold-standard sets, there has also been efforts to generate purpose specific data sets [190], often using the ZINC database [175] as their resource. With the increased volume of open access experimental data in repositories such as PubChem, ChEMBL and ZINC the data resources for VS studies has been significantly changed, compared with 10 years ago. Novel data sets derived from these resources such as the DUD and MUV, together with the new algorithmic approaches, are highly promising in terms of developing the field of computational drug discovery. The field of generating and utilizing gold-standard/benchmarking data sets for VS has been extensively discussed in the recent works by Lagarde et al. and Xia et al. [190, 191].

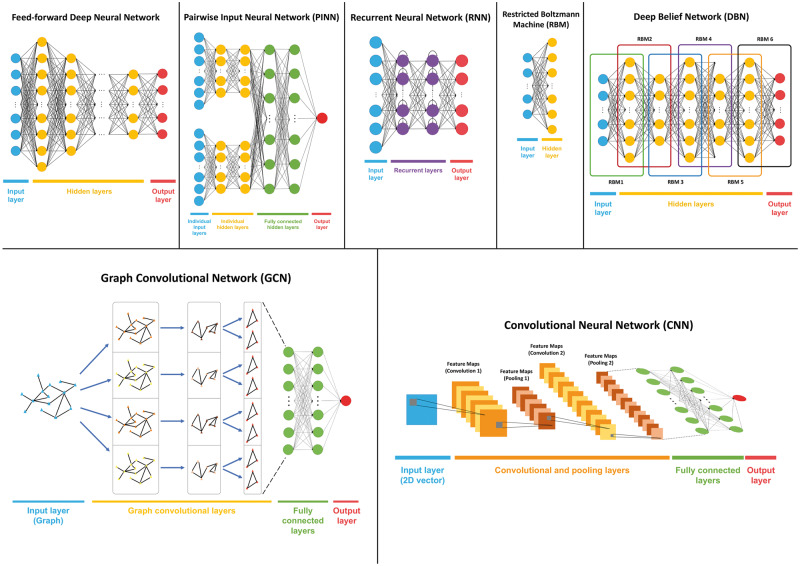

Machine learning applications in VS

The field of machine learning has been extensively reviewed and discussed in several books [192]. There are two main approaches in machine learning literature in terms of how the learning process is carried out, supervised learning and unsupervised learning. In supervised learning, the objective is to infer a function that maps the input data to the output class labels [193], whereas the aim in unsupervised learning is to learn the hidden structure of input data without having class labels. Unsupervised learning algorithms employ techniques to discover relationships among the non-labeled input samples. The most popular applications of unsupervised learning are clustering and dimensionality reduction. Once the groups and clusters are obtained with the application of an unsupervised learning method, each group can be inspected to assign semantic meanings by experts [194]. Both supervised and unsupervised machine learning techniques are used in cheminformatics on a wide range of topics, including VS [195–206], yet most of the methods so far assumed the supervised approach. The subject covered in this chapter is mostly the supervised learning applications in VS. A plethora of methods has been proposed for VS purposes in the past decade. These VS methods use experimentally validated compound-target pairs and their features along with the bioactivity information to create predictive models for future predictions of activities.

In terms of the methodological utilization of the input properties, VS methods can be divided into similarity-based and feature-based methods, although there is no such technical classification in the machine learning literature [192, 193, 201, 207]. In the following sections, similarity-based and feature-based VS methods are investigated, which is followed by the recently popularized deep learning-based applications in VS. For this, we have mostly focused on the studies published in the past 3 years, some of which have aims beyond DTI prediction (e.g. estimation of beneficial drug-drug combinations or ATC code prediction). There are numerous examples of especially ligand-based DTI prediction methods that are highly cited in the literature. We chose to leave these articles out of this review because of they were published more than 5 years ago and were the subject of previous VS field review papers.

Similarity-based approach

Similarity-based methods rely on the assumption that biologically, topologically and chemically similar compounds have similar functions and bioactivities and, therefore, they have similar targets [160, 161, 197, 208]. In the similarity-based approach, the target associations of similar compounds (or the compound associations of similar target proteins) are transferred between each other. Therefore, transfer approach is a term used interchangeably to define similarity-based methods. In chemical space, similarities are calculated by searching molecular substructure and isomorphism based on the representations of molecules such as SMILES and InChI. In target space, similarities are mainly calculated by sequence alignment methods. The methods under this approach construct similarity matrices either for compounds or targets, or for both of them [207]. Subsequently, constructed similarity matrices are used by the machine learning models. Below, we provided reviews for three similarity-based VS methods, which were published in the past few years.

With the aim of identifying biologically and structurally similar clusters of compounds, weighted clustering was proposed by integrating multiple similarity matrices [197]. Two data sets were used: the epidermal growth factor receptor (EGFR) and the fibroblast growth factor receptor (FGFR) data sets. EGFR data set contained bioactivity assay readouts and gene expression profiles for 35 compounds and 3595 genes. In FGFR data set, the chemical structure information, gene expression data and bioactivity assay readouts were available for 94 compounds and 1056 genes. Two similarity matrices were generated based on the structural and the phenotypic properties. Structural properties of compounds were represented by ECFP6 fingerprints, and similarities of compounds were calculated using the Tanimoto coefficient. For the phenotypic similarity matrix calculation, bioactivity readouts were used. The Euclidean distance was employed to calculate the phenotypic similarities between two compounds based on their bioactivity results on the same assays. Subsequently, generated similarity matrices were used to perform clustering using a weighted clustering algorithm. The weighted clustering technique was shown to be more efficient in terms of identifying structurally and biologically similar compounds compared with the individual clustering methods.

A supervised similarity-based PCM method was described for the detection of: (i) interactions between new drug candidates and known targets and (ii) interactions between new drug candidates and new targets [161]. The similarity between two compounds was measured by a combination of non-structure-based score (ATC-based semantic similarity score) and 2D graph structure-based score. ATC-based semantic similarity score was calculated by counting the common subgroups between ATC code annotations of two compounds. 2D structure-based similarity calculation was performed by aligning graph structures of compounds. The similarity score for a pair of targets was computed using a combination of a functional-similarity-based (using Enzyme Commission -EC- numbers) score and a sequence-based similarity score. Functional similarity-based score was calculated by counting the number of common EC number annotations. For sequence-based similarity score calculation, subsequences in the ligand-binding domains were extracted, and they aligned the extracted subsequences to calculate similarity scores between targets. The data sets constructed by Yamanishi et al. [90] for four classes of targets (i.e. GPCRs, ion channels, enzymes and nuclear receptors) were employed for the tests. A concept called ‘super-target’ was proposed to overcome the problem of the scarcity of training instances in terms of targets. Similar targets were clustered, and it was assumed that if the drug interacted with a target, it would also interact with the other targets in the same super-target cluster. For the prediction of new drug candidates for a known target, the following methodology was pursued: When a new compound was given as input to the system, for each known target tx, a confidence score was calculated between the query compound and the super-target cluster that tx belonged to, based on the drug associations of the targets in that super-target cluster. Subsequently, another confidence score was calculated between query compound and tx based only on the drug associations of tx. Finally, these two scores were combined as a single prediction score. For the prediction of new drug candidates for a new target, a similar procedure was followed. In this case, the new target was considered as a member of most similar super-target cluster based on its functional and sequence similarities.

SwissTargetPrediction is a supervised similarity-based method that combines 2D similarity and 3D similarity of compounds with the aim of identifying new targets for query compounds [160]. ChEMBL database was employed to obtain known compound–target pairs. The training data set consisted of 280 381 small compounds for 2686 targets. When a compound was given as input to the system, a combination of 2D and 3D similarity scores were calculated between the query compound and all compounds with known targets. To obtain 2D similarity score, a compound was represented by FP2 fingerprints and the 2D similarity scores between the query compound and other compounds were calculated by the Tanimoto coefficient. For the 3D similarity score, 20 different conformations of compounds were generated, and the Manhattan distance was used to calculate distances among all conformations of two compounds. The smallest distance was then chosen among the 20×20 distance scores, and it was converted into a 3D similarity score. 2D and 3D similarity scores were combined as a single prediction score for targets. Finally, the system outputs a ranked list of targets based on the combined similarity scores. Users can get predictions for a compound using SMILES string of the query compound or by drawing 2D structure of compounds using the web tool provided. SwissTargetPrediction is available at http://www.swisstargetprediction.ch.

Feature-based approach

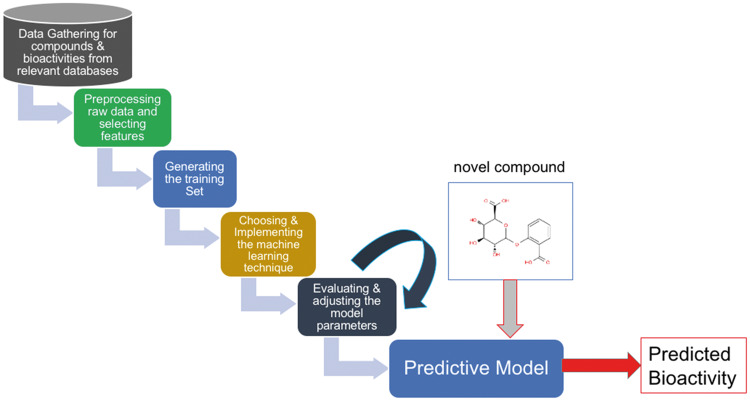

In Feature-based VS methods, each instance (i.e. compound and/or target) is represented by a numerical feature vector, which reflects various types of physico-chemical and molecular properties of the corresponding molecules. Targets are usually modelled using their physical and chemical properties and subsequence distributions or functional attributes, whereas the compounds are usually modelled using structural properties. In a typical feature-based VS application, a set of compounds that is known to interact with a specific target is extracted from compound and bioactivity databases. Subsequently, feature vectors are generated for each compound. Finally, the constructed feature vectors are fed to a machine learning algorithm to create a predictive model for the interaction with the corresponding target. When a new query compound’s feature vector is given to the trained model as input, the output of the predictive model is either active or inactive against the corresponding target protein (Figure 4). This is the so-called ligand-based approach in terms of the incorporated input feature types (i.e. compound features). PCM methods also assume a similar methodology, but they jointly model the target properties at the input level along with the compounds, so that the query can be a compound–protein pair, and the model predicts the presence of that specific interaction. Examples of feature-based VS methods are given below.

Figure 4.

The steps of a typical feature-based virtual screening method for training a predictive model.

A supervised machine learning methodology was proposed by Liu et al. [12] using a combination of both similarity and feature-based approaches to predict drug–ATC code associations. DrugBank database was employed to create their positive and negative training data sets. The total set was composed of 1333 small molecule drugs and their ATC codes at various levels. ATC code prediction problem was described as a binary classification problem. Therefore, for each ATC code, a positive training data set and a negative training data set were constructed. Known drug-ATC code pairs were retrieved to construct the positive training data sets. To construct a negative training data set for each ATC code, they first removed the positive drug–ATC code pairs from all possible drug–ATC code pairs and randomly selected samples from the remaining set. Then six scores were defined to calculate drug–drug similarities, which are based on chemical structures, functional groups, target proteins, drug-induced gene expression profiles, side-effects and chemical–chemical associations. Each drug was represented as a six-dimensional feature vector. The value of a certain feature was determined by taking the largest similarity score between the input drug and the drugs associated with the corresponding ATC code. Once the drugs were converted into feature vectors, the logistic regression method was used to train predictive models for each ATC code. When a new query compound is given to the system, first, it is converted to the feature vector based on the similarity values; then, it is given to the predictive models as input to predict the candidate ATC codes. The method, SPACE, is available at http://www.bprc.ac.cn/space.

In the work by Cano et al. [68], the main objective was the inherent selection/ranking of features (see wrappers in feature selection section of supplementary material) and training a DTI prediction classifier using random forest. Directory of Useful Decoys (DUD) was used to create their training data set, which was composed of kinases, nuclear hormone receptors and other proteins. The constitutional, charged partial surface area and fingerprint-based descriptors were the input to the system. The performance of the model was compared with support vector machine (SVM) and neural network classifier-based models, and the random forest classifier was successful to select and rank most representative features, given a large set of input features. In this setting, it was also observed that a reduced number of features drastically decreased the computational complexity of DTI prediction models.

For drug repurposing, a combination of similarity and feature-based supervised method was proposed by integrating drug/compound, target protein, phenotypic effect and disease association data from several sources [54]. The chemical structures of drugs and compounds were retrieved from the ChEMBL database. Three different molecular descriptors were used to represent compounds, which are ECFP4), Chemistry Development Kit (CDK) Fingerprints, and KEGG Chemical FunKCF-S. The compounds were, thus, represented by 1024, 1024 and 475 692 dimensional fingerprints. The obtained feature vectors were referred to as the ‘chemical profile’ of the compounds. Phenotypic effects of drugs were obtained from FDA Adverse Event Reporting System, and each of the 2594 drugs were represented as a 16 075-dimensional feature vector, where each dimension represents the presence or absence of a phenotypic effect. This data set was named as the ‘phenotypic profile’ of a drug. Compound–target interactions and the bioactivity values were obtained from seven different databases. Their total activity set comprised 1 287 404 interactions involving 519 061 compounds and 3736 targets. This data set was referred as the ‘chemical protein interactome data set’. Molecular features of diseases were obtained from the International Classification of Diseases (ICD10) and the KEGG DISEASE database. The diseases were represented as 6342-dimensional binary feature vectors, where each dimension represents presence or absence of a molecular feature. Drug–disease associations were obtained from medical books and from the KEGG DRUG database. This data set comprised 5830 drug-disease associations involving 2271 drugs and 463 diseases. Disease–target associations were obtained from the KEGG DRUG database. They created a data set consisting of 2062 disease-target associations for 250 diseases and 462 therapeutic target proteins, and this data set was named as the ‘disease-target association template’. Their prediction method was composed of three parts, which were called as the Target Estimation with Similarity Search (TESS), Indication Prediction by Template Matching (IPTM) and Indication Prediction by Supervised Classification (IPSC). In TESS, the aim was to predict potential targets of a given drug based on similarity search. Each compound was represented by a 3736-dimensional target interaction profile. The similarity search was performed against the compounds in the chemical–protein interactome data set based on the chemical and phenotypic profiles of the compounds. Subsequently, for each target, the compounds that were associated with the corresponding target were retrieved, and the drug-target similarity score was assigned using the similarity score between query drug and the most similar compound that were associated with the corresponding target. In IPTM, the aim was to predict novel drug indications for the query drugs. First, target proteins of the query drug were retrieved. For each target, the diseases that were associated with the corresponding target were obtained from the disease target association template. This way, the query drug was linked to the diseases based on their target associations. In IPSC, the aim was to predict novel drug indications using a supervised classification method. In this method, target proteins of the query drug and molecular features of diseases were used. Each drug–disease pair was represented by a feature vector, and drug indication prediction was formulized as a binary classification problem, where the output of the regression-based classifier shows if the drug could be applicable to the paired disease. The cross-validation results showed that IPTM and IPSC methods outperformed the previous methods from the literature.

A supervised feature-based PCM method was proposed for GPCR and protein kinase targets [162]. The positive training data set was generated using the GLIDA database by extracting experimental compound-target interactions, containing 5207 interactions for 317 targets and 866 compounds [209]. Negative training samples were generated among the unknown compounds-target pairs. Compounds were converted into 929-dimensional molecular descriptors. Descriptors for targets were generated using a string kernel, resulting in 400-dimensional feature vectors. Two vectors, that is, compound and target descriptors, were then concatenated for each positive and negative interaction. Finally, the generated feature vectors were fed to an SVM classifier to train predictive models for each target family. Selected novel drug predictions were also experimentally validated for both GPCR and protein kinase families.

A supervised feature-based PCM method for the identification of novel drug combinations was described by Iwata et al. [11]. Orange Book and the KEGG databases were proposed to extract beneficial drug–drug combinations [170, 210]. Interacting drug–target pairs were collected from seven different databases. Furthermore, 4007 DTIs were incorporated for 588 drugs and 930 targets. Each drug was represented by a 1078-dimensional binary feature vector, where 930 of them represent the presence or absence of each target, and 148 of them represent the presence or absence of ATC code annotations. Subsequently, each drug–drug pair was represented as a binary feature vector by combining individual feature vectors of the corresponding drug pairs. Finally, the obtained feature vectors were fed to a logistic regression classifier. When a new drug–drug pair is given as a query to the system, the output was calculated as potentially beneficial or not.