Abstract

What emotions do the face and body express? Guided by new conceptual and quantitative approaches (Cowen, Elfenbein, Laukka, & Keltner, 2018; Cowen & Keltner, 2017, 2018), we explore the taxonomy of emotion recognized in facial-bodily expression. Participants (N = 1,794; 940 female, ages 18–76 years) judged the emotions captured in 1,500 photographs of facial-bodily expression in terms of emotion categories, appraisals, free response, and ecological validity. We find that facial-bodily expressions can reliably signal at least 28 distinct categories of emotion that occur in everyday life. Emotion categories, more so than appraisals such as valence and arousal, organize emotion recognition. However, categories of emotion recognized in naturalistic facial and bodily behavior are not discrete but bridged by smooth gradients that correspond to continuous variations in meaning. Our results support a novel view that emotions occupy a high-dimensional space of categories bridged by smooth gradients of meaning. They offer an approximation of a taxonomy of facial-bodily expressions, visualized within an online interactive map.

Keywords: emotion, expression, faces, semantic space, dimensions

As novelists, poets, and painters have long known, the dramas that make up human social life—flirtations, courtships, family affection and conflict, status dynamics, grieving, forgiveness, and personal epiphanies—are rich with emotional expression. The science of emotion is revealing this to be true as well: From the first moments of life the recognition of expressive behavior is integral to how the individual forms relationships and adapts to the environment. Infants as young as 6 months old identify expressions of anger, fear, joy, and interest (Peltola, Hietanen, Forssman, & Leppänen, 2013) that guide behaviors from approaching strangers to exploring new sources of reward (Hertenstein & Campos, 2004; Sorce, Emde, Campos, & Klinnert, 1985). Adults signal love and desire in subtle facial movements (Gonzaga, Keltner, Londahl, & Smith, 2001). We more often forgive those who express embarrassment (Feinberg, Willer, & Keltner, 2012), conform to the actions of those who express pride (Martens & Tracy, 2013), and concede in negotiations to those who express anger (Reed, DeScioli, & Pinker, 2014). Even shareholders’ confidence in a company depends on a CEO’s facial expression following corporate transgressions (ten Brinke & Adams, 2015). The recognition of fleeting expressive behavior structures the interactions that make up social life.

The study of emotion recognition from facial-bodily expression (configurations of the facial muscles, head, torso, limbs, and eye gaze) has been profoundly influenced by the seminal work of Ekman, Sorenson, and Friesen (1969) in New Guinea. In that work, children and adults labeled, with words or situations, prototypical facial expressions of anger, fear, disgust, happiness, sadness, and surprise. Enduring in its influence, that research, as we will detail, established methods that preclude investigating the kinds of emotion people recognize from expressive behavior, the degree to which categories (e.g., fear) and appraisals (e.g., valence) organize emotion recognition, and whether people recognize expressions as discrete clusters or continuous gradients, all issues central to theoretical debate in the field. Based on new conceptual and quantitative approaches, we test competing predictions concerning the number of emotions people recognize, whether emotion recognition is guided by categories or domain-general appraisals, and whether the boundaries between emotion categories are discrete.

The Three Properties of an Emotion Taxonomy

Emotions are dynamic states that are initiated by meaning-based appraisals and involve multiple response modalities—experience, expression, and physiology—that enable the individual to respond to ongoing events in the environment (Keltner, Sauter, Tracy, & Cowen, 2019). Within each modality, empirical study (and theoretical controversy) has centered upon the number of emotions with distinct responses, how people represent these responses with language, and how emotions overlap or differ from one another (Barrett, 2006; Keltner, Sauter, et al., 2019; Keltner, Tracy, Sauter, & Cowen, 2019; Lench, Flores, & Bench, 2011; Nummenmaa & Saarimäki, 2019).

To provide mathematical precision to these lines of inquiry, we have developed what we call a semantic space approach to emotion (Cowen & Keltner, 2017, 2018). As emotions unfold, people rely on hundreds, even thousands, of words to describe the specific response, be it a subjective feeling, a physical sensation, a memory from the past, or—the focus of this investigation—the expressive behavior of other people. How people describe emotion-related responses (e.g., experience, expression, physiology) manifests in what we call a semantic space of that response modality. A semantic space is defined by three properties. The first property is its dimensionality, or the number of distinct varieties of emotion that people represent within the semantic space. In the present investigation, we ask, how many emotions do people recognize in facial-bodily expressions? The second property is what we call conceptualization: To what extent do emotion categories and appraisals of valence, arousal, dominance, and other themes capture how people ascribe meaning to emotional responses within a specific response modality? The third property is the distribution of the emotional states within the space. In the present investigation, we ask how distinct the boundaries are between emotion categories recognized in expressive behavior.

Approximating a semantic space of emotion within a response modality requires judgments of emotion categories and appraisals of a vast array of stimuli that spans a wide range of emotions and includes variations within categories of emotion. In previous investigations guided by this framework, we examined the semantic space of emotional experience (Cowen & Keltner, 2017) and vocal expression (Cowen, Elfenbein, Laukka, & Keltner, 2018; Cowen, Laukka, Elfenbein, Liu, & Keltner, 2019). In these studies, 2,185 emotionally evocative short videos, 2,032 nonverbal vocalizations (e.g., laughs, screams), and 2,519 speech samples varying only in prosody (tune, rhythm, and timbre) were rated by participants in terms of emotion categories and domain-general appraisals (e.g., valence, dominance, certainty), and new statistical techniques were applied to participants’ judgments of experience, vocal bursts, and prosody. With respect to the dimensionality of emotion, we documented 27 kinds of emotional experience, 24 kinds of emotion recognized in nonverbal vocalizations, and 12 kinds of emotion recognized in the nuanced variations in prosody that occur during speech. With respect to the conceptualization of emotional experience, we found that emotion categories (e.g., awe, love, disgust), more so than broad domain-general appraisals such as valence and arousal, explained how people use language to report upon their experiences and perceptions of emotion. Finally, in terms of the distribution of emotional experiences and expressions, we documented little evidence of sharp, discrete boundaries between kinds of emotion. Instead, different kinds of emotion—for example, love and sympathy—were linked by continuous gradients of meaning.

In the present investigation, we apply this conceptual framework to investigate a more traditionally studied modality of expression: facial-bodily expressions. We do so because questions regarding the number of emotions signaled in the face and body, how emotions recognized in the face and body are best conceptualized, and the structure of the emotions we recognize in the face and body are central to the science of emotion and a central focus of theoretical debate (Barrett, 2006; Keltner, Sauter, et al., 2019; Keltner, Tracy, et al., 2019; Shuman, Clark-Polner, Meuleman, Sander, & Scherer, 2017). A semantic space approach has the potential to yield new advances in these controversial areas of inquiry.

Beyond Impoverished Methods: The Study of the Dimensionality, Conceptualization, and Distribution of Emotion Recognition

The classic work of Ekman and colleagues (1969) established prototypical facial-bodily expressions of anger, disgust, fear, sadness, surprise, and happiness (Elfenbein & Ambady, 2002). The expressions documented in that work have enabled discoveries concerning the developmental emergence of emotional expression and recognition (Walle, Reschke, Camras, & Campos, 2017), the neural processes engaged in emotion recognition (Schirmer & Adolphs, 2017), and cultural variation in the meaning of expressive behavior (e.g., Elfenbein, Beaupré, Lévesque, & Hess, 2007; Matsumoto & Hwang, 2017). The photos of those prototypical expressions may be the most widely used expression stimuli in the science of emotion—and the source of the most theoretically contested data.

Critiques of the methods used by Ekman and colleagues (1969) can often be reframed as critiques of how a narrow range of stimuli and constrained recognition formats fail to capture the richness of the semantic space of emotion recognition (Keltner, Sauter, et al., 2019; Keltner, Tracy, et al., 2019; Russell, 1994). First, the focus on prototypical expressions of anger, disgust, fear, sadness, happiness, and surprise fails to capture the dimensionality, or variety of emotions, signaled in facial-bodily expression (see Cordaro et al., in press; Keltner, Sauter, et al., 2019; Keltner, Tracy, et al., 2019). Recent empirical work finds that humans are capable of producing a far richer variety of facial-bodily signals than traditionally studied, using our specially adapted facial morphology with 30 to 40 facial muscles, pronounced brow ridges, accentuated sclera, and the emotional information conveyed in subtle movements of the head, torso, and gaze activity (Godinho, Spikins, & O’Higgins, 2018; Jessen & Grossmann, 2014; Reed et al., 2014; Schmidt & Cohn, 2001). Studies guided by claims concerning the role of emotion in attachment, hierarchies, and knowledge acquisition point to candidate facial-bodily expressions for over 20 states, including amusement (Keltner, 1995), awe (Shiota et al., 2017), contempt (Matsumoto & Ekman, 2004), contentment (Cordaro, Brackett, Glass, & Anderson, 2016), desire (Gonzaga, Turner, Keltner, Campos, & Altemus, 2006), distress (Perkins, Inchley-Mort, Pickering, Corr, & Burgess, 2012), embarrassment (Keltner, 1995), interest (Reeve, 1993), love (Gonzaga et al., 2001), pain (Prkachin, 1992), pride (Tracy & Robins, 2004), shame (Keltner, 1995; Tracy & Matsumoto, 2008), sympathy (Goetz, Keltner, & Simon-Thomas, 2010), and triumph (Tracy & Matsumoto, 2008). In this investigation, we seek to account for the wide range of emotions for which facial-bodily signals have been proposed, among other candidate states, although future studies could very well introduce further displays. With this caveat in mind, we, for the first time in one study, investigate how many emotions can be distinguished by facial-bodily expressions.

Second, beginning with Ekman and colleagues (1969), it has been typical in emotion recognition studies to use either reports of emotion categories or ratings of valence, arousal, and other appraisals to capture how people recognize emotion (for a recent exception, see Shuman et al., 2017). These disjoint methodological approaches—that measure emotion categories or appraisals but not both—have failed to capture the degree to which emotion categories and domain-general appraisals such as valence, arousal, and dominance organize emotion recognition.

Finally, the focus on expression prototypes fails to capture how people recognize variations of expression within an emotion category (see Keltner, 1995, on embarrassment, and Tracy & Robins, 2004, on pride), leading to potentially erroneous notions of discrete boundaries between categories (Barrett, 2006). Guided by these critiques, we adopt methods that allow for a fuller examination of the semantic space of emotion recognition from facial-bodily behavior.

Basic and Constructivist Approaches to the Semantic Space of Emotion Recognition

Two theories have emerged that produce competing predictions concerning the three properties of the semantic space of emotion recognition. Basic emotion theory starts from the assumption that different emotions are associated with distinct patterns of expressive behavior (see Keltner, Sauter, et al., 2019; Keltner, Tracy, et al., 2019; Lench et al., 2011). Recent evidence suggests that people may recognize upward of 20 states (e.g., Cordaro et al., in press). It is asserted that emotion categories, more so than appraisals of valence, arousal, or other broad processes, organize emotion recognition (e.g., Ekman, 1992; Nummenmaa & Saarimäki, 2019; Scarantino, 2017) and that the boundaries between emotions are discrete (Ekman & Cordaro, 2011; Nummenmaa & Saarimäki, 2019).

Constructivist approaches, by contrast, typically presuppose that what is most core to all emotions are domain-general appraisals of valence and arousal, which are together referred to as core affect (Russell, 2009; Watson & Tellegen, 1985). Layered on top of core affect are context-specific interpretations that give rise to the constructed meaning of expressive behavior (Barrett, 2017; Coppin & Sander, 2016; Russell, 2009). This framework posits that the recognition of emotion is based on the perception of a limited number of broader features such as valence and arousal (Barrett, 2017), and that there are no clear boundaries between emotion categories.

Although often treated as two contrasting possibilities, basic emotion theory and constructivism are each associated with separable claims about the dimensionality, conceptualization, and distribution of emotion. In Table 1, we summarize these claims, which remain largely untested given the empirical focus on prototypical expressions of six emotions.

Table 1.

Past Predictions Regarding the Semantic Space of Emotion Recognition

| Theory | Dimensionality: How many emotions are signaled in facial-bodily behavior? | Conceptualization: What concepts capture the recognition of emotion in expression? | Distribution: Are emotions perceived as discrete clusters or continuous gradients? |
|---|---|---|---|
| Basic emotion theory | Perceptual judgments rely on a variety of distinct emotions, ranging from six in early theorizing to upwards of 20 in more recent theorizing. | Emotion categories capture the underlying organization of emotion recognition. Ekman (1992): “Each emotion has unique features … [that] distinguish emotions from other affective phenomena.” | Boundaries between categories are discrete. Ekman (1992): “Each of the basic emotions is … a family of related states. … I do not propose that the boundaries between [them] are fuzzy.” |
| Constructivist theory | Perceptual judgments of expressions will be reducible to a small set of dimensions. Barrett (2017): “Emotional phenomena can be understood as low dimensional features.” | Valence, arousal (and perhaps a few other appraisals) will organize the recognition of emotion. Russell (2003): “Facial, vocal … changes … are accounted for by core affect [valence and arousal] and … instrumental action.” | Categories, constructed from broader properties that vary smoothly and independently, will be bridged by continuous gradients. Barrett (2006): “evidence … is inconsistent with the view that there are kinds of emotion with boundaries that are carved in nature.” |
| Methods | Multidimensional reliability assessment. | Statistical modeling applied to large, diverse data sets. | Visualization techniques, direct analysis of clusters, and continuous gradients. |

The Present Investigation

To test these competing predictions about the semantic space of emotion recognition, we applied large-scale data analytic techniques (Cowen et al., 2018; Cowen & Keltner, 2017; Cowen et al., 2019) to the recognition of emotion from facial-bodily behavior. Departing from the field’s typical focus on prototypical expressions of six emotions, we (a) compiled a rich stimulus set incorporating numerous, highly variable expressions of dozens of recently studied emotional states (addressing pervasive limitations of low sample sizes and the reliance on prototypical expressions of a limited number of emotions); (b) collected hundreds of thousands of judgments of the emotion categories and dimensions of appraisals perceived in these expressions; (c) applied model comparison and multidimensional reliability techniques to determine the dimensions (or kinds of emotion categories) that explain the reliable recognition of emotion from facial-bodily expression (moving beyond the limitations of traditional factor analytic approaches; see Supplemental Discussion section in the online supplemental materials); (d) investigated the distribution of states along the distinct dimensions people recognize, including the boundaries between emotion categories; and finally, (e) created an online interactive map to provide a widely accessible window into the taxonomy of emotional expression in its full complexity.

Guided by findings of candidate expressions for a wide range of emotions, along with recent studies of the semantic space of subjective experience and vocal expression, we tested the following predictions.

Hypothesis 1: With respect to the dimensionality of emotion recognition, people will be found to recognize a much wider array of distinct emotions in expressive behavior than typically thought (i.e., greater than 20 distinct dimensions of emotion, as opposed to fewer than 10).

Hypothesis 2: With respect to the conceptualization of emotion recognition, emotion categories, more so than domain-general appraisals, will capture the emotions people recognize in facial-bodily expressions.

Hypothesis 3: With respect to the distribution of emotion recognition, emotion categories will not be discrete but joined by continuous gradients of meaning. These gradients in the categories of expression people recognize in facial-bodily expressions will correspond to smooth variations in appraisals of affective features.

We also answered more exploratory questions about the influence of context and ecological validity on emotion recognition relevant to recent debate (Aviezer et al., 2008; Barrett, 2017; Matsumoto & Hwang, 2017).

Exploratory Question 1: Features of the context—what the person is wearing, clues to the social nature of the situation, and other expressive actions—can strongly influence the recognition of facial-bodily expression. For instance, a person holding a soiled cloth is more likely to be perceived as disgusted than a person poised to punch with a clenched fist (Aviezer et al., 2008). However, the extent to which context is required for the recognition of emotion more generally from facial-bodily expression is not well understood. Here, we determine whether context is necessary for the recognition of a wide array of emotions.

Exploratory Question 2: A longstanding concern in the study of facial-bodily expression has been the ecological validity of experimental stimuli (e.g., Russell, 1994). Are people only adept at recognizing facial-bodily expressions that are extreme and caricature-like (e.g., the photos used by Ekman and colleagues [1969])? Do people encounter a more limited variety of expressions in everyday life (Matsumoto & Hwang, 2017)? We will ascertain how often people report having perceived and produced the expressions investigated here in their everyday lives.

Method

To address the aforementioned hypotheses and questions, we created a diverse stimulus set of 1,500 facial-bodily expressions and gathered ratings of each expression in four response formats: emotion categories, affective scales, free response, and assessments of ecological validity.

Participants

Judgments of expressions were obtained using Mechanical Turk. Based on effect sizes observed in a similarly designed study of reported emotional experiences (Cowen & Keltner, 2017), we recruited 1,794 U.S.-English-speaking raters aged 18 to 76 years (940 female, M_age = 34.8 years). Four separate surveys were used: one for categorical judgments, one for appraisal judgments, one for free-response judgments, and one for judgments of ecological validity. The experimental procedures were approved by the Institutional Review Board at the University of California, Berkeley (see the online supplemental materials for details regarding surveys and payment).

Creation of Stimulus Set of 1,500 Naturalistic Expressions

Departing from past studies’ focus on a narrow range of prototypical expressions, and informed by the crucial dependence of multidimensional analytic results on sample size (MacCallum, Widaman, Zhang, & Hong, 1999), we collected widely varying stimuli (1,500 naturalistic photographs in total) centered on the face and including nearby hand and body movements. We did so by querying search engines with contexts and phrases (e.g., “roller coaster ride,” “flirting”) that range in their affect- and appraisal-related qualities and their associations with a rich array of emotion categories. The appraisal properties were culled from theories of the appraisal/construction of emotion. The categories were derived from taxonomies of prominent theorists (see Keltner, Sauter, et al., 2019), along with studies of positive emotions such as amusement, awe, love, desire, elation, and sympathy (Campos, Shiota, Keltner, Gonzaga, & Goetz, 2013); states found to occur in daily interactions, such as confusion, concentration, doubt, and interest (Benitez-Quiroz, Wilbur, & Martinez, 2016; Rozin & Cohen, 2003); and more nuanced states such as distress, disappointment, and shame (Cordaro et al., 2016; Perkins et al., 2012; see also Table S2 of the online supplemental materials for references). These categories by no means represent a complete list of emotion categories, but instead those categories of expressive behavior that have been studied thus far.1 Given large imbalances in the rates of expressions that appear online (e.g., posed smiles), we selected expressions for apparent authenticity and diversity of expression but not for resemblance to category prototypes. Nevertheless, given potential selection bias and the scope of online images, we do not claim that the expressions studied here are exhaustive of facial-bodily signaling.

Judgment Formats

In each of four different judgment formats, we obtained repeated (18–20) judgments from separate participants of each expression. This was estimated to be sufficient to capture the reliable variance in judgments of each image based on findings from past studies (Cowen et al., 2018; Cowen & Keltner, 2017). One set of participants (n = 364; 190 female; M_age = 34.3 years; mean of 82.4 faces judged) rated each image in terms of 28 emotion categories derived from an extensive review of past studies of emotional expression (e.g., Benitez-Quiroz et al., 2016; Campos et al., 2013; Cordaro et al., 2016; Rozin & Cohen, 2003; see Keltner, Sauter, et al., 2019, for review). The categories included amusement, anger, awe, concentration, confusion, contemplation, contempt, contentment, desire, disappointment, disgust, distress, doubt, ecstasy, elation, embarrassment, fear, interest, love, pain, pride, realization, relief, sadness, shame, surprise, sympathy, and triumph, along with synonyms (see Table S2 of the online supplemental materials). To our knowledge, this list encompasses the emotions for which facial-bodily expressive signals have previously been proposed (and more), but we do not claim that it is exhaustive of all facial-bodily expression. A second set of participants (n = 1,116; 584 female; M_age = 34.3 years; mean of 24.2 faces judged) rated each expression in terms of 13 appraisals derived from constructivist and appraisal theories (Osgood, 1966; Smith & Ellsworth, 1985; Table S3 of the online supplemental materials). Given the potential limitations of forced-choice recognition paradigms (DiGirolamo & Russell, 2017), a third set of participants (n = 193; 110 female; M_age = 37.1 years; mean of 69.9 faces judged) provided free-response descriptions of each expression. In this method, as participants typed, a drop-down menu appeared displaying items from a corpus of 600 emotion terms containing the currently typed substring. For example, typing the substring “lov-” caused the following terms to be displayed: love, brotherly love, feeling loved, loving sympathy, maternal love, romantic love, and self-love. Participants selected as many emotion terms as desired. To address potential concerns regarding ecological validity (Matsumoto & Hwang, 2017), a final group of participants (n = 121; 56 female; M_age = 36.8 years; mean of 37.2 faces judged) rated each expression in terms of its perceived authenticity, how often they have seen the same expression used in real life, how often they themselves have produced the expression, and whether it has reflected their inner feelings. Questions from each survey are shown in Figure S1 of the online supplemental materials.
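The free-response drop-down described above amounts to a substring filter over the term corpus. The following is a minimal sketch of that behavior, not the survey's actual code: the function name `suggest_terms` and the eleven-term `corpus_excerpt` are hypothetical, and only the seven "lov" terms come from the text.

```python
def suggest_terms(typed, corpus):
    """Return the corpus terms that contain the typed substring (case-insensitive)."""
    needle = typed.strip().lower().rstrip("-")
    if not needle:
        return []
    return [term for term in corpus if needle in term.lower()]


# Hypothetical excerpt; the study's corpus contained 600 emotion terms.
corpus_excerpt = [
    "anger", "awe", "brotherly love", "feeling loved", "love",
    "loving sympathy", "maternal love", "romantic love", "self-love",
    "shock", "wonder",
]

matches = suggest_terms("lov-", corpus_excerpt)
print(matches)
```

With this excerpt, the filter returns exactly the seven terms listed in the text and leaves out unrelated terms such as "shock".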

All told, this amounted to 412,500 individual judgments (30,000 forced-choice categorical judgments, 351,000 9-point appraisal scale judgments, 13,500 free-response judgments, and 18,000 ecological validity judgments). These methods capture 87.6% and 88.0% of the variance in population mean category and appraisal judgments, respectively (see the Supplemental Methods section of the online supplemental materials), indicating that they accurately characterized people’s responses to each expression.
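As a quick consistency check, the reported counts can be summed directly. The sketch below uses only figures stated in the text; the dictionary keys are labels chosen for illustration, and the two per-image factorizations in the final lines are one possible reading of the design (an assumption), not something the text states.

```python
# Counts as reported in the Participants and Judgment Formats sections.
participants = {"category": 364, "appraisal": 1116, "free_response": 193, "ecological": 121}
female = {"category": 190, "appraisal": 584, "free_response": 110, "ecological": 56}
judgments = {"category": 30_000, "appraisal": 351_000, "free_response": 13_500, "ecological": 18_000}

total_participants = sum(participants.values())
total_female = sum(female.values())
total_judgments = sum(judgments.values())
print(total_participants, total_female, total_judgments)

# Assumption: 1,500 images x 20 raters, and 1,500 images x 13 appraisals x 18 raters.
implied_category = 1_500 * 20
implied_appraisal = 1_500 * 13 * 18
```

The sums match the reported totals of 1,794 participants, 940 female participants, and 412,500 judgments.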

Overview of Statistical Analyses

Hypothesis 1: Dimensionality.

Four methods were used to assess how many distinct varieties of emotion were required to explain participants’ recognition judgments: (a) interrater agreement levels, (b) split-half canonical correlations analysis (SH-CCA) applied to the category judgments, (c) partial correlations between individual judgments and the population average, and (d) canonical correlations analysis (CCA) between category and free-response judgments.

Hypothesis 2: Conceptualization.

Linear and nonlinear predictive models (linear regression and nearest neighbors) were applied to determine how much of the explainable variance in the categorical judgments can be explained by the domain-general appraisals, and vice versa.
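To make the logic of this comparison concrete, the sketch below predicts one set of judgments from the other with ordinary least squares and with k-nearest-neighbors regression, then scores held-out variance explained. It is an illustration on simulated data, assuming NumPy is available; the function names, the train/test split, `k=5`, and the simulated linear relationship are all assumptions, and the published analysis (including its correction for explainable variance) is described in the supplemental materials.

```python
import numpy as np


def r_squared(y_true, y_pred):
    """Proportion of variance explained, pooled over all output columns."""
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean(axis=0)) ** 2)
    return 1.0 - ss_res / ss_tot


def ols_predict(x_train, y_train, x_test):
    """Least-squares linear regression with an intercept."""
    design = np.column_stack([np.ones(len(x_train)), x_train])
    coef, *_ = np.linalg.lstsq(design, y_train, rcond=None)
    return np.column_stack([np.ones(len(x_test)), x_test]) @ coef


def knn_predict(x_train, y_train, x_test, k=5):
    """k-nearest-neighbors regression: average the k closest training rows."""
    dists = np.linalg.norm(x_test[:, None, :] - x_train[None, :, :], axis=2)
    nearest = np.argsort(dists, axis=1)[:, :k]
    return y_train[nearest].mean(axis=1)


# Simulated stand-ins: 300 expressions, 3 appraisal scales, 5 category scores.
rng = np.random.default_rng(0)
appraisals = rng.normal(size=(300, 3))
weights = rng.normal(size=(3, 5))
categories = appraisals @ weights + 0.1 * rng.normal(size=(300, 5))

train, test = slice(0, 200), slice(200, 300)
ols_r2 = r_squared(categories[test], ols_predict(appraisals[train], categories[train], appraisals[test]))
knn_r2 = r_squared(categories[test], knn_predict(appraisals[train], categories[train], appraisals[test]))
print(round(ols_r2, 3), round(knn_r2, 3))
```

Swapping the roles of `appraisals` and `categories` gives the reverse direction ("and vice versa"). Because the simulated relationship here is linear by construction, the numbers say nothing about the asymmetry reported in the study.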

Hypothesis 3: Distribution.

Visualization techniques were applied, and we assessed whether gradients between categories of emotion corresponded to discrete shifts or smooth, recognizable transitions in the meaning of expressions by interrogating their correspondence with changes in more continuous appraisals.

Exploratory Question 1: Context.

Judgments of context-free versions of each expression were compared with judgments of the original 1,500 images. We estimated correlations between the population average judgments of expressions with and without context, and performed CCA between the original and context-free judgments to determine the number of preserved dimensions.
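The first of these comparisons, a correlation between population-average judgments with and without context, can be sketched for a single category as follows. The values are hypothetical proportions invented for illustration, and `pearson_r` is a plain textbook implementation rather than the authors' code.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Hypothetical share of raters choosing "fear" for five expressions.
with_context = [0.60, 0.10, 0.35, 0.05, 0.80]
without_context = [0.55, 0.15, 0.30, 0.10, 0.70]

r_fear = pearson_r(with_context, without_context)
print(round(r_fear, 3))
```

A value near 1 for a category would indicate that its recognition is largely preserved when context is removed.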

Exploratory Question 2: Ecological validity.

We examined how often perceivers rated expressions from each category of emotion as authentic and how often they reported having seen them in real life, produced them in the past, and produced them in reflection of internal feelings.

Results

Distinguishing 28 Distinct Categories of Emotion From Naturalistic Expressions

We first analyzed how many emotions participants recognized at above-chance levels in the 1,500 expressions. Most typically, emotion recognition has been assessed in the degree to which participants’ judgments conform to experimenters’ expectations regarding the emotions conveyed by each expression (Cordaro et al., 2016; Elfenbein & Ambady, 2002). We depart from this prescriptive approach, as we lack special knowledge of the emotions conveyed by our naturalistic stimuli. To capture emotion recognition, we instead focus on the reliability of judgments of each expression, operationalized as interrater agreement (see Jack, Sun, Delis, Garrod, & Schyns, 2016).

We first examined whether the 28 categories of emotion were recognized by traditional standards, that is, with significant interrater agreement rates across participants. We found that the 28 emotions used in our categorical judgment task were recognized at above-chance rates, each category reliably being used to label between three and 125 expressions (M = 65.6 significant expressions per category; see Figure S2 of the online supplemental materials). Ninety-two percent of the 1,500 expressions were reliably identified with at least one category (25%+ interrater agreement, false discovery rate [FDR] < .05, simulation test; see Supplemental Methods section of the online supplemental materials) and over a third of the expressions elicited interrater agreement levels of 50% or greater (FDR < 10⁻⁶, simulation test; see Figure S2 of the online supplemental materials for full distribution of ratings). These interrater agreement levels are comparable with those documented in meta-analyses of past studies of the recognition of emotion in prototypical expressions (Elfenbein & Ambady, 2002), even though the present study included a larger set of emotion categories and variations of expressions within categories. Thus, the 28 categories serve as fitting descriptors for facial-bodily expressions (see Figure S3 of the online supplemental materials for other metrics of importance of each category).
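Interrater agreement in this sense is the share of raters who chose the same category for an expression. The sketch below computes it for hypothetical ratings and applies the 25% threshold mentioned above; the image identifiers and labels are invented, and the simulation-based significance test with FDR correction is not reproduced here.

```python
from collections import Counter


def modal_agreement(labels):
    """Return (most frequent label, share of raters who chose it)."""
    label, count = Counter(labels).most_common(1)[0]
    return label, count / len(labels)


# Hypothetical forced-choice labels from 20 raters per expression.
ratings = {
    "img_001": ["fear"] * 12 + ["surprise"] * 6 + ["awe"] * 2,
    "img_002": ["love"] * 6 + ["sympathy"] * 5 + ["contentment"] * 4
               + ["desire"] * 3 + ["relief"] * 2,
    "img_003": ["doubt"] * 4 + ["confusion"] * 4 + ["interest"] * 4
               + ["contemplation"] * 4 + ["concentration"] * 4,
}

agreement = {image: modal_agreement(labels) for image, labels in ratings.items()}
# Keep expressions whose top category reaches at least 25% agreement.
reliable = {image: pair for image, pair in agreement.items() if pair[1] >= 0.25}
print(reliable)
```

Here the first two expressions pass the threshold (60% and 30% agreement), while the third, with votes spread evenly over five categories, does not.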

Although we find that the 28 emotion categories were meaningful descriptors of naturalistic expressions, further analyses are needed to show that they each had distinct meaning in how participants labeled each of the 1,500 expressions. How many kinds of emotion were represented along separate dimensions within the semantic space of emotion recognition from facial-bodily expressions? Within our framework, every expression corresponds to a point along some combination of dimensions. Each additional category beyond the first could require a separate dimension, for a maximum of 27, because of the forced-choice nature of the task. Or, as an alternative, the categories could reduce to a smaller number, for example, a low-dimensional space of emotion defined by valence and arousal (Russell, 2003).
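The ceiling of 27 follows from the forced-choice format: the proportions of raters choosing each category sum to 1 for every expression, so the last category's proportion is fully determined by the others. A small sketch with hypothetical vote counts over four categories illustrates the constraint; the helper names are invented for illustration.

```python
def proportions(counts):
    """Convert vote counts to the share of raters choosing each category."""
    total = sum(counts)
    return [count / total for count in counts]


def max_dimensions(n_categories):
    """Upper bound on distinct dimensions in forced-choice proportions."""
    return n_categories - 1


# Hypothetical vote counts over four categories for three expressions.
votes = [[12, 6, 2, 0], [5, 5, 5, 5], [1, 0, 3, 16]]

# The last proportion is recoverable from the others, so it adds no new dimension.
implied_gaps = []
for row in votes:
    p = proportions(row)
    implied_last = 1.0 - sum(p[:-1])
    implied_gaps.append(abs(implied_last - p[-1]))

print(max(implied_gaps), max_dimensions(28))
```

With 28 categories the bound is 27, which is the maximum referred to in the text.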

Addressing these competing possibilities requires moving beyond traditional methods such as factor analysis or principal components analysis, which are limited in important ways. Most critically, the derivation of the factors or principal components is based on correlations or covariance between different judgments, not on the reliability of reports of individual items (Cowen et al., 2019). Thus, these methods cannot identify when an individual category, like fear, is reliably distinguished from every other rated category (see Supplemental Video 1 of the online supplemental materials for illustration). To determine how many emotion categories are reliably distinguished in the recognition of facial-bodily expression, we used the recently validated multidimensional reliability assessment method called SH-CCA (Cowen & Keltner, 2017). In SH-CCA, the averages obtained from half of the ratings for each expression are compared using CCA with the averages obtained from the other half of the ratings. This yields an estimate of the number of dimensions of reliable variance (or kinds of emotion that emerge) in participants’ judgments of the 1,500 naturalistic expressions (see online supplemental materials and Figure S4 for details of the method and validation in 1,936 simulated studies). Using SH-CCA, we found that 27 statistically significant semantic dimensions of emotion recognition (p ≤ .013, r ≥ .019, cross-validated; see Supplemental Methods section of the online supplemental materials for details), the maximum number for 28-way forced-choice ratings, were required to explain the reliability of participants’ recognition of emotion categories conveyed by the 1,500 expressions (see Figure S5 of the online supplemental materials). This suggests that, in keeping with our first hypothesis, the expressions cannot be projected onto fewer semantic dimensions without losing information about the emotional states people reliably recognized. Participants recognized 28 distinct states in the 1,500 facial-bodily expressions.
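The split-half logic described above can be illustrated with a minimal sketch on simulated data. This is not the authors' implementation: the function names, the simulated ratings, and the sample sizes are assumptions made for illustration, and the cross-validation and significance testing used in the published method are omitted. The sketch only shows that averaging two independent halves of forced-choice ratings and applying CCA yields at most one fewer canonical dimension than the number of categories.

```python
import numpy as np

def column_space_basis(A, tol=1e-10):
    """Orthonormal basis for the column space of A after mean-centering."""
    A = A - A.mean(axis=0)
    U, s, _ = np.linalg.svd(A, full_matrices=False)
    # Drop directions with negligible variance (forced-choice proportions
    # sum to 1, so one direction is always redundant).
    return U[:, s > tol * s.max()]

def canonical_correlations(X, Y):
    """In-sample canonical correlations between two sets of variables."""
    Ux, Uy = column_space_basis(X), column_space_basis(Y)
    # The singular values of Ux.T @ Uy are the cosines of the principal
    # angles between the two column spaces, i.e. the canonical correlations.
    return np.clip(np.linalg.svd(Ux.T @ Uy, compute_uv=False), 0.0, 1.0)

rng = np.random.default_rng(0)
n_expressions, n_categories, raters_per_half = 300, 5, 20  # assumed sizes

# Hypothetical "true" category probabilities for each simulated expression.
true_probs = rng.dirichlet(np.full(n_categories, 0.3), size=n_expressions)

# Two independent halves of forced-choice ratings, averaged per expression.
half_a = np.array([rng.multinomial(raters_per_half, p) for p in true_probs]) / raters_per_half
half_b = np.array([rng.multinomial(raters_per_half, p) for p in true_probs]) / raters_per_half

corrs = canonical_correlations(half_a, half_b)
print(len(corrs), np.round(corrs, 2))
```

With five simulated categories, the function returns four canonical correlations, mirroring the maximum of 27 dimensions for 28-way forced-choice ratings. In-sample canonical correlations are biased upward, which is one reason a cross-validated test is needed before counting a dimension as reliable.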

Varieties of Emotion Recognized in Free-Response Description of Expressions

Forced-choice judgment formats may artificially inflate the specificity and reliability of emotion recognition (DiGirolamo & Russell, 2017; Russell, 1994). Given this concern, we assessed how many dimensions of emotion recognition were also reliably identified when participants used their own free-response terms to label the meaning of each expression (see Figure 1). In other words, how many of the dimensions of expression captured by the categorical judgments (e.g., fear, awe) were also reliably described using distinct free-response terms (e.g., shock, wonder)?

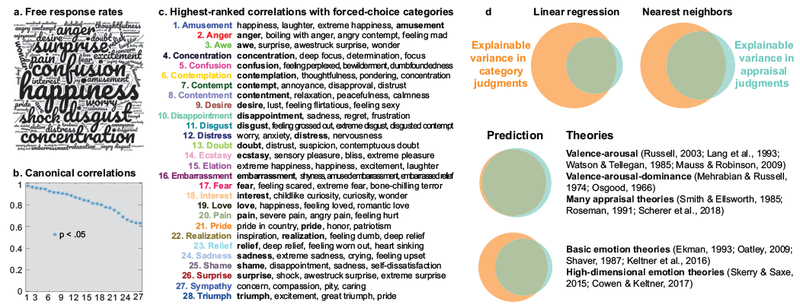

Figure 1.

Comparing categorical to free-response and appraisal judgments: All 28 categories are reliable, distinct, and accurate descriptors of perceived emotion and cannot be reduced to a small set of domain-general appraisals. (a) Relative usage rates of free-response terms. Font size is proportional to the number of times each term was used. Participants employed a rich array of terms. (b) Canonical correlations between categories and free response. All 27 dimensions of variance of the categorical judgments were recognized via free response (p < .05), indicating that the 28 categories are reliable, semantically distinct descriptors of emotional expression. (c) Free-response terms correlated with each forced-choice category. Correlations (r) were assessed between category judgments and free-response terms, across images. The free-response terms most highly correlated with each category consistently include the categories themselves and synonyms. (d) Proportion of variance explained in 28 categories by 13 affective scales and vice versa using linear and nonlinear regression. Affective scale judgments do not fully explain category judgments (39.9% and 56.1% of variance explained, ordinary least squares [OLS] linear regression and k-nearest neighbors [kNN] regression, respectively), whereas category judgments do largely explain affective scales (91.4% and 87.1% of variance, OLS and kNN, respectively). Categories offer a richer conceptualization of emotion recognition. The smaller Venn diagrams illustrate results predicted by different theories. Our findings are consistent with theories that expressions convey emotion categories or are high dimensional and cannot be captured by 13 scales (Skerry & Saxe, 2015). See the online article for the color version of this figure.

We answered this question by applying CCA between the category and free-response judgments of the 1,500 expressions. Here, CCA extracted linear combinations among the category judgments of 28 emotions that correlated maximally with linear combinations among the free-response terms. This analysis revealed 27 significant linearly independent patterns of shared variance in emotion recognition (p ≤ .0008, r ≥ .049, cross-validated; see the online supplemental materials for details), the maximum attainable number (Figure 1b). Furthermore, forced-choice judgments of each of the 28 categories were closely correlated with identical or near-identical free-response terms (Figure 1c), indicating that they accurately capture the meaning attributed to expressions (ruling out methodological artifacts such as process of elimination; see DiGirolamo & Russell, 2017). Together, these results confirm that all 28 categories are reliable, semantically distinct, and accurate descriptors for the emotions perceived in naturalistic expressions.

How Do People Recognize Emotion in Expressive Behavior: Categories or Domain-General Appraisals?

In comparable studies of emotional experience and vocal expression recognition (Cowen et al., 2018, 2019; Cowen & Keltner, 2017), with respect to how people conceptualize emotion, we found that emotion categories largely explained, but could not be explained by, domain-general appraisals (valence, arousal, dominance, safety, etc.). These results informed our second hypothesis, that emotion categories capture a richer conceptualization of emotion than domain-general appraisals. To test this hypothesis, we ascertained whether judgments of each expression along the 13 appraisal scales would better explain its categorical judgments, or vice versa. Do judgments of the degree to which a sneer expresses dominance, unfairness, and so on better predict whether it will be labeled as “contempt,” or vice versa? Using regression methods (Figure 1d), we found that the appraisal judgments explained 56.1% of the explainable variance in the categorical judgments, whereas the categorical judgments explained 91.4% of the explainable variance in the appraisal judgments (see the online supplemental materials for details). That the categorical judgments explained the appraisal judgments substantially better than the reverse (using linear [91.4% vs. 39.9%] and nonlinear models [87.1% vs. 56.1%]; both ps < 10⁻⁶, bootstrap test) suggests that categories have more value than appraisal features in explicating emotion recognition of facial-bodily expression. Emotion recognition from expression cannot be accounted for by 13 domain-general appraisals.
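The asymmetry in explained variance can be sketched with a small simulation. The example below is only illustrative and makes several assumptions: the data are synthetic, the names (`ols_r2`, `categories`, `appraisals`) are hypothetical, only OLS is shown (the kNN models are omitted), and the normalization by explainable variance described in the supplemental materials is not reproduced. It shows why a high-dimensional set of category judgments can predict a low-dimensional set of appraisals well while the reverse prediction is poor.

```python
import numpy as np

def ols_r2(X_train, Y_train, X_test, Y_test):
    """Held-out R^2 of an OLS fit with intercept, pooled over output columns."""
    A_train = np.column_stack([np.ones(len(X_train)), X_train])
    A_test = np.column_stack([np.ones(len(X_test)), X_test])
    # lstsq returns the minimum-norm solution, so collinear predictors
    # (proportions that sum to 1 plus an intercept) are handled.
    coef, *_ = np.linalg.lstsq(A_train, Y_train, rcond=None)
    residual = Y_test - A_test @ coef
    total = Y_test - Y_test.mean(axis=0)
    return 1.0 - (residual ** 2).sum() / (total ** 2).sum()

rng = np.random.default_rng(1)
n, n_categories, n_appraisals = 600, 6, 2  # assumed sizes

# Simulated category proportions; appraisals are a noisy linear readout of them.
categories = rng.dirichlet(np.full(n_categories, 0.3), size=n)
weights = rng.normal(size=(n_categories, n_appraisals))
appraisals = categories @ weights + 0.05 * rng.normal(size=(n, n_appraisals))

train, test = slice(0, 400), slice(400, None)
r2_appraisals_from_categories = ols_r2(
    categories[train], appraisals[train], categories[test], appraisals[test])
r2_categories_from_appraisals = ols_r2(
    appraisals[train], categories[train], appraisals[test], categories[test])

print(round(r2_appraisals_from_categories, 3), round(r2_categories_from_appraisals, 3))
```

In this simulation the appraisals are constructed as a function of the categories, so the direction of the asymmetry is built in. The point is only to show how the two-way comparison is computed, not to reproduce the reported percentages.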

The Nature of Emotion Categories: Boundaries Between and Variations Within Categories

Our third hypothesis regarded the distribution of emotion recognition. In prior studies, it was found that categories of emotional experience and vocal expression were not discrete but joined by smooth gradients of meaning. We thus predicted that the same would be true of emotion categories perceived in facial-bodily expressions. Our results were in keeping with this prediction. Namely, if we assign each expression to its modal judgment category, yielding a discrete taxonomy, these modal categories explain 78.9% of the variance in the 13 appraisal judgments, substantially less than the 91.4% of variance explained using the full proportions of category judgments (p < 10⁻⁶, bootstrap test). Confusion over the emotion category conveyed by each expression, far from being noise, instead carries information regarding how it is perceived.
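The comparison between a discrete taxonomy and the full judgment proportions can be sketched as follows. This is a minimal illustration on simulated data, assuming appraisals that vary linearly with the category proportions. The helper `r2` and all variable names are hypothetical, the fit is in-sample for brevity, and the bootstrap test is omitted.

```python
import numpy as np

def r2(X, Y):
    """In-sample R^2 of an OLS fit with intercept, pooled over output columns."""
    A = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(A, Y, rcond=None)
    residual = Y - A @ coef
    total = Y - Y.mean(axis=0)
    return 1.0 - (residual ** 2).sum() / (total ** 2).sum()

rng = np.random.default_rng(2)
n, k = 500, 4  # assumed numbers of expressions and categories

# Proportion of raters choosing each category for each simulated expression.
proportions = rng.dirichlet(np.full(k, 0.8), size=n)
# Simulated appraisal ratings that change smoothly with the proportions.
appraisal = proportions @ rng.normal(size=(k, 3)) + 0.05 * rng.normal(size=(n, 3))

# Discrete taxonomy: keep only the most frequently chosen (modal) category.
modal_one_hot = np.eye(k)[proportions.argmax(axis=1)]

r2_modal = r2(modal_one_hot, appraisal)
r2_full = r2(proportions, appraisal)
print(round(r2_modal, 3), round(r2_full, 3))
```

Because the one-hot coding discards how strongly an expression leans toward neighboring categories, it explains less appraisal variance than the full proportions whenever meaning varies within a modal category.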

To explore how the emotions recognized in facial-bodily expression were distributed, we used a visualization technique called t-distributed stochastic neighbor embedding (t-SNE; Maaten & Hinton, 2008), which projects data into a two-dimensional space that preserves the local distances between data points without imposing assumptions of discreteness (e.g., clustering) or linearity (e.g., factor analysis). The results are shown in Figure 2 and online at https://s3-us-west-1.amazonaws.com/face28/map.html . The map reveals within-category variations in the relative location of each expression, in keeping with the notion that within an emotion category there is heterogeneity of expressive behavior (Ekman, 1992, 1993; Keltner, 1995). For example, some expressions frequently labeled “surprise” were placed closer to “fear” and others closer to “awe.”

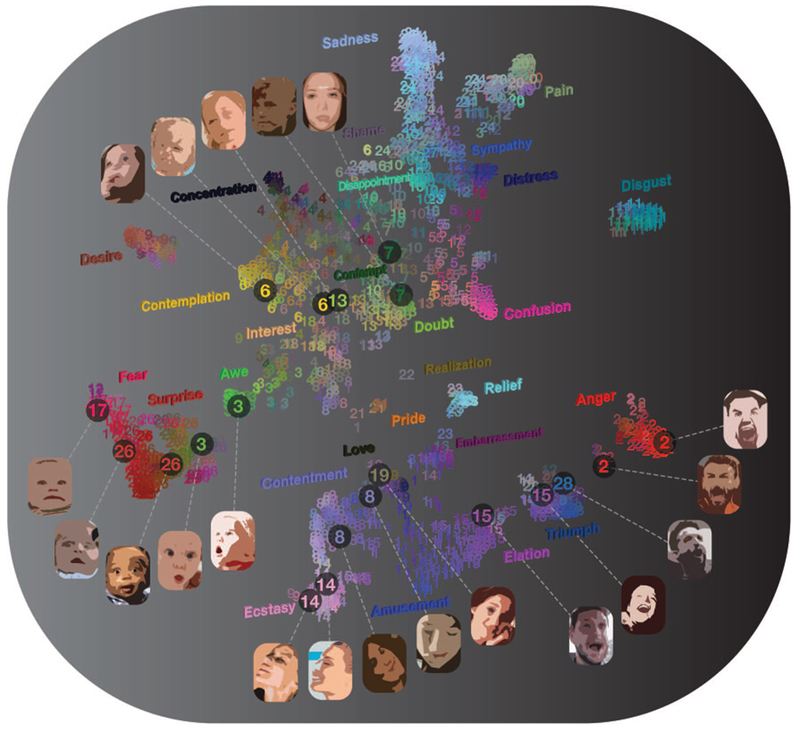

Figure 2.

Mapping of expression judgment distributions with t-distributed stochastic neighbor embedding (t-SNE). t-SNE projects the data into a two-dimensional space that approximates the local distances between expressions. Each number corresponds to a single naturalistic expression and reflects the most frequent category judgment. The t-SNE map reveals smooth structure in the recognition of emotion, with variations in the proximity of individual expressions within each category to other categories. These variations are exemplified by the example expressions shown. Face images have been rendered unidentifiable here via artistic transformation. The expressions and their assigned judgments can be fully explored in the interactive online version of this figure: https://s3-us-west-1.amazonaws.com/face28/map.html. See the online article for the color version of this figure.

To quantify the gradients revealed by t-SNE, we tested statistically for potential nondiscrete structure in the categorical judgments. We investigated whether expressions rated with two emotion categories (e.g., awe and fear) scored intermediate ratings between the categories on our 13 appraisal scales. If categories were perceived as discrete, then the meaning attributed to each expression would (a) shift abruptly across category boundaries, and (b) have higher standard deviation across raters at the midpoint between categories, as expressions would be perceived as belonging to either one category or the other. Instead, we found that patterns in the 13 appraisal scales were significantly correlated with patterns in the categorical judgments within (not just across) modal categories, and location near the midpoint along gradients between categories was typically uncorrelated with standard deviation in the appraisal features (mean correlation r = .00039; see the online supplemental materials for details).² That the appraisal scales vary smoothly both within and across modal category boundaries confirms that the categories occupy continuous gradients.

The Role of Context: Does the Recognition of 28 Emotion Categories Depend on Contextual Cues?

In natural settings, facial-bodily expressions are framed by contextual cues: clothing, physical settings, activities individuals may be engaged in, and so forth. In the interest of ecological validity, many of the expressions presented here included such cues. To ascertain whether these contextual cues were necessary to distinguish certain categories of emotion from expression, we eliminated them from each image. To do so, we employed facial landmark detection and photographic interpolation techniques to crop out or erase visual details besides those of the face and body. We eliminated scenery (e.g., sporting fields, protest crowds), objects (e.g., headphones), clothing, jewelry, and hair when its position indicated movement.³ We were able to remove contextual cues from all but 44 images in which contextual cues overlapped with the face (we allowed hand and body gestures to be fully or partially erased from many of the photographs; see Figure 3a for examples). We then collected 18 forced-choice categorical judgments of each of these context-free expressions from a new set of participants (N = 302; 151 female, Mage = 37.6 years).

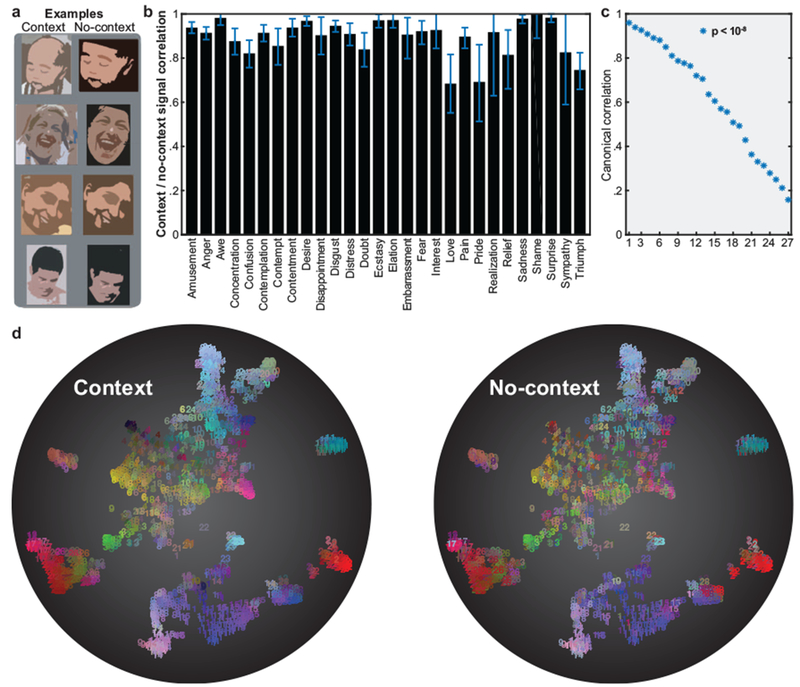

Figure 3.

The recognition of 28 distinct categories of emotion from naturalistic expression does not require contextual cues. (a) Examples of images before and after the removal of contextual cues. (b) Correlations between judgments of 1,456 expressions in their natural context and in isolation, adjusted for explainable variance. (c) Canonical correlations between judgments of expressions in context and in isolation. All 27 dimensions of recognized emotion were preserved after the removal of contextual cues (p < .01; leave-one-rater-out cross-validation; see the online supplemental materials for details). (d) Categorical judgments of the original and isolated images. Here, colors are interpolated to precisely capture the proportion of times each category was chosen for each expression. The resulting map reveals that the smooth gradients among distinct categories of emotion are preserved after the removal of contextual cues. Face images have been rendered unidentifiable here via artistic transformation. The isolated expressions and their assigned judgments can be fully explored within another online interactive map: https://s3-us-west-1.amazonaws.com/face28/nocontext.html. See the online article for the color version of this figure.

A comparison of judgments of the 1,456 context-free expressions with the corresponding judgments of the original images revealed that context played, at best, a minor role in the recognition of the 28 categories of emotion. As shown in Figure 3b, judgments of the 28 emotion categories of the context-free expressions were generally highly correlated with judgments of the same categories in the original images (r > .68 for all 28 categories, and r > .9 for 18 of the categories). Furthermore, applying CCA between judgments of the original and context-free expressions again revealed 27 significant dimensions (p ≤ .033, r ≥ .030, cross-validated; see the online supplemental materials for details), the maximum number (Figure 3c). And finally, projecting the judgments of the context-free expressions onto our chromatic map revealed that they are qualitatively very similar to those of the original images (Figure 3d).

These results indicate that 28 categories of emotion can be distinguished from facial-bodily posture absent contextual cues. Although a few expressions are more context-dependent (especially triumph, love, and pride; see Figure 3d), the vast majority are perceived similarly when isolated from their immediate context. Isolated facial and bodily expressions are capable of reliably conveying 28 distinct categories of emotion absent contextual cues.

Ecological Validity of Expressions of 28 Emotions

The focus of past studies on posed expressions raises important questions about how often the 1,500 expressions we have studied occur in daily life (Matsumoto & Hwang, 2017; Russell, 1994). To examine the ecological validity of the 1,500 expressions presented here, we asked an independent group of participants to rate each expression in terms of authenticity, how often they have seen the same expression in daily life, how often they have produced the expression, and whether it has reflected their inner feelings (for a similar approach, see Rozin & Cohen, 2003). Overall, expressions were reported as authentic 60% of the time, as having been seen in daily life 92% of the time, as having been produced in daily life 83% of the time, and as having been produced in reflection of raters’ inner feelings 70% of the time. Figure 4a presents these results broken down by emotion category. Expressions labeled with each category were reported to have been witnessed, on average, by 82% (“desire”) to 97% (“sympathy”) of participants at some point in their lives, confirming that many of the expressions in each emotion category were ecologically valid.

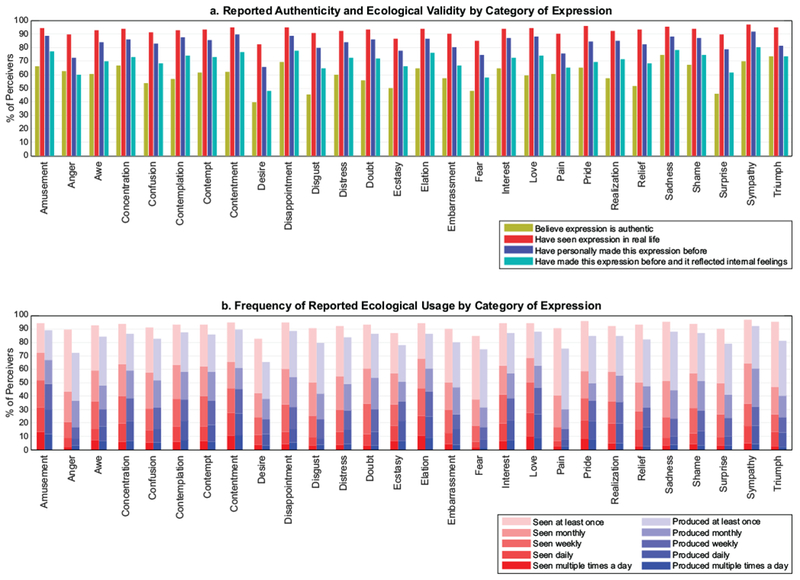

Figure 4.

Expressions of each of the 28 emotion categories are widely reported to occur in real life. (a) Percentage of perceivers who rated expressions from each category of emotion as authentic, as having been seen in real life, as having been produced by the perceiver in the past, and as having been produced by the perceiver in reflection of internal feelings. Percentages are weighted averages for each category; that is, an expression that was rated “amusement” by 50% of raters in the categorical judgment task would count twice as much toward the averages shown here as an expression rated “amusement” by 25% of raters. Expressions in every category were rated as authentic and as having been perceived and produced in real life by a substantial percentage of raters. (b) How often people reported having perceived and produced expressions from each category. Expressions from all of the emotion categories were rated as having been perceived and produced somewhat regularly (e.g., daily, weekly or monthly) by a substantial proportion of raters. See the online article for the color version of this figure.
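The weighting described in panel (a) can be worked through with two hypothetical expressions. The numbers below are invented for illustration and are not taken from the study. One expression was labeled “amusement” by 50% of raters and the other by 25%, so the first counts twice as much toward the category average.

```python
import numpy as np

# Share of raters who chose "amusement" for each of two hypothetical expressions.
amusement_share = np.array([0.50, 0.25])
# Hypothetical share of raters who judged each expression to be authentic.
authentic_share = np.array([0.80, 0.20])

# Weighted category average: (0.50 * 0.80 + 0.25 * 0.20) / (0.50 + 0.25)
weighted = np.average(authentic_share, weights=amusement_share)
# Unweighted mean, shown for comparison.
unweighted = authentic_share.mean()
print(weighted, unweighted)
```

Here the weighted average is approximately 0.60, compared with an unweighted mean of 0.50, because the expression more consistently labeled “amusement” contributes more to the estimate for that category.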

To verify that all 28 categories of emotion could be distinguished in ecologically valid expressions, we applied CCA between judgments of the original and context-free expressions, this time restricting the analysis to 841 expressions that were rated as authentic and having been encountered in real life by at least two thirds of raters. This analysis still revealed 27 significant dimensions (p < .01; leave-one-rater-out cross-validation; see online supplemental materials for details), the maximum number. Ecologically valid expressions convey 28 distinct emotion categories.

A further question is how frequently these expressions occur in daily life. People’s self-reports suggest that a wide range of expressions occur regularly. Figure 4b shows the frequency of reported occurrence of expressions in each category, in terms of reported perception and production of each expression. Fifty-seven percent of the expressions were reported as having been perceived in real life on at least a monthly basis, 34% on at least a weekly basis, and 16% on at least a daily basis. The percentage of raters reporting at least weekly perception of individual expressions from each emotion category varied from 17% (expressions of “pain”) to 52% (expressions of “amusement”). Weekly rates of expression production varied from 15% (“pain”) to 49% (“amusement”). Presumably, expressions within an emotion category can vary considerably in how often they occur—for instance, expressions of extreme pain are likely rarer than expressions of slight pain. Nevertheless, the present findings suggest that at least 28 categories of emotion are regularly perceived and experienced by a considerable portion of the population.

Inspection of Figure 4b also reveals striking findings about the profile of the expressions that people observe and produce in their daily lives (Rozin & Cohen, 2003). The most commonly studied emotions—“anger,” “disgust,” “fear,” “sadness,” and “surprise”—were reported to occur less often than expressions of understudied emotions such as “awe,” “contempt,” “interest,” “love,” and “sympathy” in terms of daily, weekly, and monthly perception and production. These findings reinforce the need to look beyond the six traditionally studied emotions.

Discussion

Evidence concerning the recognition of emotion from facial-bodily expression has been seminal to emotion science and the center of a long history of debate about the process and taxonomy of emotion recognition. To date, this research has largely been limited by its focus on prototypical expressions of a small number of emotions; by conceptual approaches that conflate the dimensionality, conceptualization, and distribution of emotion; and by limitations in statistical methods, which have not adequately explored the number of reliably recognized dimensions of emotion, modeled whether emotion recognition is driven by emotion categories or dimensions of appraisal, or mapped the distribution of states along these dimensions.

Guided by a new conceptual approach to studying emotion-related responses (Cowen et al., 2018, 2019; Cowen & Keltner, 2017, 2018), we examined the dimensionality, conceptualization, and distribution of emotion recognition from facial-bodily expression, testing claims found in basic emotion theory and constructivist approaches. Toward these ends, we gathered appraisal, categorical, free-response, and ecological validity judgments of over 1,500 naturalistic facial-bodily expressions of 28 emotion categories, many of which have only recently been studied.

In keeping with our first hypothesis, large-scale statistical inference yielded evidence for the recognition of 28 distinct emotions from the face and body (Figure 2, and Figures S2–S4 of the online supplemental materials). These results were observed both in forced-choice and free-response formats (DiGirolamo & Russell, 2017; Russell, 1994). We document the most extensive, systematic empirical evidence to date for the distinctness of facial-bodily expressions of positive states such as amusement, awe, contentment, desire, love, ecstasy, elation, and triumph, and negative states such as anger, contempt, disappointment, distress, doubt, pain, sadness, and sympathy. These results overlap with recent production studies showing that over 20 emotion categories are expressed similarly across many cultures (Cordaro et al., 2018) and suggest that the range of emotions signaled in expressive behavior is much broader than the six states that have received most of the scientific focus.

It is worth reiterating that the expressions studied here are likely not exhaustive of human expression—rather, our findings place a lower bound on the complexity of emotion conveyed by facial-bodily signals. The present study was equipped to discover how facial-bodily expressions distinguish up to 28 categories of emotion of wide-ranging theoretical interest, informed by past studies. We were surprised to find that perceivers could reliably distinguish facial-bodily expressions of every one of these 28 emotion categories (see Cowen et al., 2018, and Cowen & Keltner, 2017, for studies in which perceivers could only distinguish a subset of categories tested). These results should motivate further studies to examine an even broader array of expressions. It is also worth noting that, as with any dimensional analysis, the semantic space we derive is likely to be one of many possible schemas that effectively capture the same dimensions. For example, an alternative way to capture “awe,” “fear,” and “surprise” expressions might be with categories that represent blends of these and other emotions, such as “horror,” “astonishment,” and “shock.”

Our second hypothesis held that the recognition of emotion categories would not be reducible to domain-general scales of affect and appraisal, including valence and arousal, as is often assumed. In keeping with this hypothesis, we found that 13 domain-general appraisals captured 56.1% of the variance in categorical judgments, whereas emotion categories explained 91.4% of the variance in these appraisals (Figure 1d). This pattern of results poses problems for the perspective that the recognition of emotion categories is constructed out of a small set of general appraisals including valence and arousal (Barrett, 2017; Russell, 2003, 2009). Instead, our results, now replicated in several modalities, support an alternative theoretical possibility: that facets of emotion—experience, facial/bodily display, and, perhaps, peripheral physiology—are more directly represented by nuanced emotion categories, and that domain-general appraisals capture a subspace of—and thus may potentially be inferred from—this categorical semantic space.

With respect to the distribution of emotion, our third hypothesis held that the distribution of emotion categories would not be discrete but instead linked by smooth dimensional gradients that cross boundaries between emotion categories. This contrasts with a central assumption of discrete emotion theory, that at the boundaries of emotion categories—for example, awe and interest—there are sharp boundaries defined by step-like shifts in the meaning ascribed to an emotion-related experience or expression (Ekman & Cordaro, 2011). Our results pose problems for this assumption. As shown in Figure 2, smooth gradients of meaning link emotion categories. In addition, we were able to demonstrate, using the appraisal scales in conjunction with the category judgments, that confusion over the category of each expression is meaningful (Figures 1 and 3, and Figures S5–S7 of the online supplemental materials). In particular, the position of each expression along a continuous gradient between categories defines the precise emotional meaning conveyed to the observer. This latter finding suggests that emotion categories do not truly “carve nature at its joints” (Barrett, 2006).

A further pattern of results addressed the extent to which the recognition of emotion is dependent upon context. Most naturalistic expressions of emotion occur in social contexts with myriad features—signs of the setting, people present, evidence of action. Such factors can, in some cases, powerfully shape the meaning ascribed to an expressive behavior. For example, perceptions of disgust depend on whether an individual is engaged in action related to disgust (e.g., holding a soiled article of clothing) or another emotion (e.g., appearing poised to punch with a clenched fist; Aviezer et al., 2008). However, our results suggest that such findings concerning the contextual shaping of the recognition of emotion from facial-bodily expression may prove to be the exception rather than the rule. Namely, across 1,500 naturalistic expressions of 28 emotion categories, many sampled from actual in vivo contexts, all 28 categories could reliably be recognized from facial-bodily expression alone, and their perception was typically little swayed by nuanced contextual features such as clothing, signs of the physical and social setting, or cues of the actions in which people were engaged (see Figure 3).

Our final pattern of findings addressed the ecological validity of our results. People report having perceived and produced most expressions of each of the 28 distinct emotion categories in daily life (see Figure 4). Judging by people’s self-report, none of the 28 categories of emotional expression we uncovered are particularly rare occurrences; on any given day, a considerable proportion of the population will witness similar expressions of each emotion category.

Taken together, the present results yield a nuanced, complex representation of the semantic space of facial-bodily expression. In different judgment paradigms, observers can discern 28 categories of emotion from facial-bodily behavior. Categories capture the recognition of emotion more so than appraisals of valence and arousal. However, in contrast to discrete emotion theories, expressions are distributed along smooth gradients between many categories.

To capture the emotions conveyed by facial-bodily expression, we focused here on recognition by U.S. English speakers, leaving open whether people in other cultures attribute the same emotions to these expressive signals. On this point, recent studies have suggested that people in at least nine other cultures similarly label prototypical expressions of many of the emotions we uncovered (e.g., Cordaro et al., in press). However, such studies have not yet incorporated broad enough arrays of expressions to understand what is universal about the conceptualization, dimensionality, and distribution of emotion recognition. Moving in this direction, a recent study of emotion recognition from speech prosody found that at least 12 dimensions of recognition are preserved across the United States and India, and that cultural similarities are driven by the recognition of categories such as “awe” rather than appraisals such as valence and arousal (Cowen et al., 2019). Similar methods can be applied to examine universals in the recognition of facial-bodily expression, guided by the semantic space we derive here.

Another important limitation of our findings, specifically those regarding the dimensionality of emotion, is that they rely on linear methods. We find that expressions convey at least 27 linearly separable dimensions of emotion (Figures 1b, 2, and 3). Given that the vast majority of psychology studies employ only linear models, such findings are critical. A linear model of emotion must utilize variables that linearly capture the variance in emotion-related phenomena, and Figure 1d indicates that emotion categories linearly capture nearly all of the explainable variance in appraisal judgments (valence, arousal, and so on). Using nonlinear predictive models, we have also ruled out hypotheses that valence, arousal, dominance and 10 other domain-general appraisals can account for the recognition of emotional categories. These findings rule out most low-dimensional theories of emotional expressions (Lang, Greenwald, Bradley, & Hamm, 1993; Mauss & Robinson, 2009; Russell, 2003, 2009; Watson & Tellegen, 1985). Nevertheless, it is possible that emotion recognition can be represented nonlinearly using other undiscovered dimensions, possibly ones that are not directly represented in language. Testing for such nonlinear dimensions is a difficult problem that will require methods such as training a deep autoencoder (a type of artificial neural network) on a sufficiently rich data set.

By applying high-dimensional statistical modeling approaches to large-scale data, we have uncovered an initial approximation of the semantic space of emotion conveyed by facial-bodily expression. It is worth noting that 17 of the emotions we find to be distinguished by facial-bodily expression were previously found to be distinguished by both vocal expression (Cowen et al., 2018, 2019) and experiences evoked by video (Cowen & Keltner, 2017), including amusement, anger, anxiety/distress, awe, confusion, contentment/calmness, desire, disgust, elation/joy, embarrassment/awkwardness, fear, interest, love/adoration, pain, relief, sadness, and surprise. Taken together, these findings illuminate a rich multicomponential space of emotion-related responses.

Facial-bodily signals have received far more scientific focus than other components of expression, perhaps because of their potency: They can imbue almost any communicative or goal-driven action with expressive weight, can be observed passively in most experimental contexts, and are routine occurrences in daily life. More focused studies of particular facial-bodily expressions have revealed their powerful influence over critical aspects of our social lives, including the formation of romantic partnerships (Gonzaga et al., 2001), the forgiveness of transgressions (Feinberg et al., 2012), the imitation of role models (Martens & Tracy, 2013), the resolution of negotiations (Reed et al., 2014), and even the allocation of investments (ten Brinke & Adams, 2015). Such findings can be refined and profoundly expanded upon by applying measurements and models guided by a broader semantic space of expression. In many ways, the understanding of how emotion-related responses are arranged within a high-dimensional space has the promise of revamping the study of emotion.

Biographies

Alan S. Cowen

Dacher Keltner

Footnotes

¹ For a range of queries and potential associations with categories and appraisal features, see Table S1 of the online supplemental materials. Note that queries were used in combination, i.e., not every image is associated with a single query.

² The smooth transition in patterns in the appraisal ratings across category boundaries for select category pairs is shown in Figure S6 of the online supplemental materials, and Figure S7 documents all smoothly related category pairs. In Figure S8, the smooth meaning of categorical gradients is illustrated more qualitatively using an overall CCA analysis between the categorical and appraisal judgments.

³ Note that compared with the elaborate contextual cues examined in studies of the maximum degree to which context can influence emotion recognition (e.g., Aviezer et al., 2008), cues in the present stimuli were relatively subtle and more naturalistic.

References

- Aviezer H, Hassin RR, Ryan J, Grady C, Susskind J, Anderson A, … Bentin S. (2008). Angry, disgusted, or afraid? Studies on the malleability of emotion perception. Psychological Science, 19, 724–732. 10.1111/j.1467-9280.2008.02148.x [DOI] [PubMed] [Google Scholar]

- Barrett LF (2006). Are emotions natural kinds? Perspectives on Psychological Science, 1, 28–58. 10.1111/j.1745-6916.2006.00003.x [DOI] [PubMed] [Google Scholar]

- Barrett LF (2017). Categories and their role in the science of emotion. Psychological Inquiry, 28, 20–26. 10.1080/1047840X.2017.1261581 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Benitez-Quiroz CF, Wilbur RB, & Martinez AM (2016). The not face: A grammaticalization of facial expressions of emotion. Cognition, 150, 77–84. 10.1016/j.cognition.2016.02.004 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Campos B, Shiota MN, Keltner D, Gonzaga GC, & Goetz JL (2013). What is shared, what is different? Core relational themes and expressive displays of eight positive emotions. Cognition and Emotion, 27, 37–52. 10.1080/02699931.2012.683852 [DOI] [PubMed] [Google Scholar]

- Coppin G, & Sander D (2016). Theoretical approaches to emotion and its measurement In Meiselman HL (Ed.), Emotion measurement (pp. 3–30). New York, NY:Woodhead Publishing; 10.1016/B978-0-08-100508-8.00001-1 [DOI] [Google Scholar]

- Cordaro DT, Brackett M, Glass L, & Anderson CL (2016). Contentment: Perceived completeness across cultures and traditions. Review of General Psychology, 20, 221–235. 10.1037/gpr0000082

- Cordaro DT, Sun R, Kamble S, Hodder N, Monroy M, Cowen AS, … Keltner D (in press). Beyond the “Basic 6”: The recognition of 18 facial-bodily expressions across nine cultures. Emotion.

- Cordaro DT, Sun R, Keltner D, Kamble S, Huddar N, & McNeil G (2018). Universals and cultural variations in 22 emotional expressions across five cultures. Emotion, 18, 75–93. 10.1037/emo0000302

- Cowen AS, Elfenbein HA, Laukka P, & Keltner D (2018). Mapping 24 emotions conveyed by brief human vocalization. American Psychologist. Advance online publication. 10.1037/amp0000399

- Cowen AS, & Keltner D (2017). Self-report captures 27 distinct categories of emotion bridged by continuous gradients. Proceedings of the National Academy of Sciences of the United States of America, 114, E7900–E7909. 10.1073/pnas.1702247114

- Cowen AS, & Keltner D (2018). Clarifying the conceptualization, dimensionality, and structure of emotion: Response to Barrett and colleagues. Trends in Cognitive Sciences, 22, 274–276. 10.1016/j.tics.2018.02.003

- Cowen AS, Laukka P, Elfenbein HA, Liu R, & Keltner D (2019). The primacy of categories in the recognition of 12 emotions in speech prosody across two cultures. Nature Human Behaviour, 3, 369–382. 10.1038/s41562-019-0533-6

- Diamond LM (2003). What does sexual orientation orient? A biobehavioral model distinguishing romantic love and sexual desire. Psychological Review, 110, 173–192. 10.1037/0033-295X.110.1.173

- DiGirolamo MA, & Russell JA (2017). The emotion seen in a face can be a methodological artifact: The process of elimination hypothesis. Emotion, 17, 538–546. 10.1037/emo0000247

- Ekman P (1992). An argument for basic emotions. Cognition and Emotion, 6, 169–200. 10.1080/02699939208411068

- Ekman P (1993). Facial expression and emotion. American Psychologist, 48, 384–392. 10.1037/0003-066x.48.4.384

- Ekman P, & Cordaro D (2011). What is meant by calling emotions basic. Emotion Review, 3, 364–370. 10.1177/1754073911410740

- Ekman P, Sorenson ER, & Friesen WV (1969). Pan-cultural elements in facial displays of emotion. Science, 164, 86–88. 10.1126/science.164.3875.86

- Elfenbein HA, & Ambady N (2002). On the universality and cultural specificity of emotion recognition: A meta-analysis. Psychological Bulletin, 128, 203–235. 10.1037/0033-2909.128.2.203

- Elfenbein HA, Beaupré M, Lévesque M, & Hess U (2007). Toward a dialect theory: Cultural differences in the expression and recognition of posed facial expressions. Emotion, 7, 131–146. 10.1037/1528-3542.7.1.131

- Feinberg M, Willer R, & Keltner D (2012). Flustered and faithful: Embarrassment as a signal of prosociality. Journal of Personality and Social Psychology, 102, 81–97. 10.1037/a0025403

- Frijda NH, Kuipers P, & ter Schure E (1989). Relations among emotion, appraisal, and emotional action readiness. Journal of Personality and Social Psychology, 57, 212–228. 10.1037/0022-3514.57.2.212

- Godinho RM, Spikins P, & O’Higgins P (2018). Supraorbital morphology and social dynamics in human evolution. Nature Ecology & Evolution, 2, 956–961. 10.1038/s41559-018-0528-0

- Goetz JL, Keltner D, & Simon-Thomas E (2010). Compassion: An evolutionary analysis and empirical review. Psychological Bulletin, 136, 351–374. 10.1037/a0018807

- Gonzaga GC, Keltner D, Londahl EA, & Smith MD (2001). Love and the commitment problem in romantic relations and friendship. Journal of Personality and Social Psychology, 81, 247–262. 10.1037//0022-3514.81.2.247

- Gonzaga GC, Turner RA, Keltner D, Campos B, & Altemus M (2006). Romantic love and sexual desire in close relationships. Emotion, 6, 163–179. 10.1037/1528-3542.6.2.163

- Hertenstein MJ, & Campos JJ (2004). The retention effects of an adult’s emotional displays on infant behavior. Child Development, 75, 595–613. 10.1111/j.1467-8624.2004.00695.x

- Jack RE, Sun W, Delis I, Garrod OGB, & Schyns PG (2016). Four not six: Revealing culturally common facial expressions of emotion. Journal of Experimental Psychology: General, 145, 708–730. 10.1037/xge0000162

- Jessen S, & Grossmann T (2014). Unconscious discrimination of social cues from eye whites in infants. Proceedings of the National Academy of Sciences of the United States of America, 111, 16208–16213. 10.1073/pnas.1411333111

- Kazemi V, & Sullivan J (2014). One millisecond face alignment with an ensemble of regression trees. 2014 IEEE Computer Society Conference on CVPR, Columbus, OH. 10.1109/CVPR.2014.241

- Keltner D (1995). Signs of appeasement: Evidence for the distinct displays of embarrassment, amusement, and shame. Journal of Personality and Social Psychology, 68, 441–454. 10.1037/0022-3514.68.3.441

- Keltner D, Sauter DA, Tracy J, & Cowen AS (2019). Emotional expression: Advances in basic emotion theory. Journal of Nonverbal Behavior. Advance online publication. 10.1007/s10919-019-00293-3

- Keltner D, Tracy J, Sauter DA, & Cowen AS (2019). What basic emotion theory really says for the twenty-first century study of emotion. Journal of Nonverbal Behavior. Advance online publication. 10.1007/s10919-019-00298-y

- Lang PJ, Greenwald MK, Bradley MM, & Hamm AO (1993). Looking at pictures: Affective, facial, visceral, and behavioral reactions. Psychophysiology, 30, 261–273. 10.1111/j.1469-8986.1993.tb03352.x

- Lench HC, Flores SA, & Bench SW (2011). Discrete emotions predict changes in cognition, judgment, experience, behavior, and physiology: A meta-analysis of experimental emotion elicitations. Psychological Bulletin, 137, 834–855. Retrieved from http://web.ebscohost.com/ehost/delivery?sid=a420c7ef-fb52-4e8b-8d4c-5648266d91

- Maaten LVD, & Hinton G (2008). Visualizing data using t-SNE. Journal of Machine Learning Research, 9, 2579–2605.

- MacCallum RC, Widaman KF, Zhang S, & Hong S (1999). Sample size in factor analysis. Psychological Methods, 4, 84–99.

- Martens JP, & Tracy JL (2013). The emotional origins of a social learning bias: Does the pride expression cue copying? Social Psychological and Personality Science, 4, 492–499. 10.1177/1948550612457958

- Matsumoto D, & Ekman P (2004). The relationship among expressions, labels, and descriptions of contempt. Journal of Personality and Social Psychology, 87, 529–540. 10.1037/0022-3514.87.4.529

- Matsumoto D, & Hwang HC (2017). Methodological issues regarding cross-cultural studies of judgments of facial expressions. Emotion Review, 9, 375–382. 10.1177/1754073916679008

- Mauss IB, & Robinson MD (2009). Measures of emotion: A review. Cognition & Emotion, 23, 209–237. 10.1080/02699930802204677

- Nummenmaa L, & Saarimäki H (2019). Emotions as discrete patterns of systemic activity. Neuroscience Letters, 693, 3–8. 10.1016/j.neulet.2017.07.012

- Osgood CE (1966). Dimensionality of the semantic space for communication via facial expressions. Scandinavian Journal of Psychology, 7, 1–30. 10.1111/j.1467-9450.1966.tb01334.x

- Peltola MJ, Hietanen JK, Forssman L, & Leppänen JM (2013). The emergence and stability of the attentional bias to fearful faces in infancy. Infancy, 18, 905–926. 10.1111/infa.12013

- Perkins AM, Inchley-Mort SL, Pickering AD, Corr PJ, & Burgess AP (2012). A facial expression for anxiety. Journal of Personality and Social Psychology, 102, 910–924. 10.1037/a0026825

- Prkachin KM (1992). The consistency of facial expressions of pain: A comparison across modalities. Pain, 51, 297–306. 10.1016/0304-3959(92)90213-U

- Reed LI, DeScioli P, & Pinker SA (2014). The commitment function of angry facial expressions. Psychological Science, 25, 1511–1517. 10.1177/0956797614531027

- Reeve J (1993). The face of interest. Motivation and Emotion, 17, 353–375. 10.1007/BF00992325

- Roseman IJ (1991). Appraisal determinants of discrete emotions. Cognition and Emotion, 5, 161–200. 10.1080/02699939108411034

- Rozin P, & Cohen AB (2003). High frequency of facial expressions corresponding to confusion, concentration, and worry in an analysis of naturally occurring facial expressions of Americans. Emotion, 3, 68–75. 10.1037/1528-3542.3.1.68

- Russell JA (1994). Is there universal recognition of emotion from facial expression? A review of the cross-cultural studies. Psychological Bulletin, 115, 102–141. 10.1037/0033-2909.115.1.102

- Russell JA (2003). Core affect and the psychological construction of emotion. Psychological Review, 110, 145–172. 10.1037/0033-295X.110.1.145

- Russell JA (2009). Emotion, core affect, and psychological construction. Cognition and Emotion, 23, 1259–1283. 10.1080/02699930902809375

- Scarantino A (2017). How to do things with emotional expressions: The theory of affective pragmatics. Psychological Inquiry, 28, 165–185. 10.1080/1047840X.2017.1328951

- Scherer KR (2009). The dynamic architecture of emotion: Evidence for the component process model. Cognition and Emotion, 23, 1307–1351. 10.1080/02699930902928969

- Schirmer A, & Adolphs R (2017). Emotion perception from face, voice, and touch: Comparisons and convergence. Trends in Cognitive Sciences, 21, 216–228. 10.1016/j.tics.2017.01.001

- Schmidt KL, & Cohn JF (2001). Human facial expressions as adaptations: Evolutionary questions in facial expression research. American Journal of Physical Anthropology, 116(Suppl. 33), 3–24. 10.1002/ajpa.20001

- Shiota MN, Campos B, Oveis C, Hertenstein MJ, Simon-Thomas E, & Keltner D (2017). Beyond happiness: Building a science of discrete positive emotions. American Psychologist, 72, 617–643. 10.1037/a0040456

- Shuman V, Clark-Polner E, Meuleman B, Sander D, & Scherer KR (2017). Emotion perception from a componential perspective. Cognition and Emotion, 31, 47–56. 10.1080/02699931.2015.1075964

- Skerry AE, & Saxe R (2015). Neural representations of emotion are organized around abstract event features. Current Biology, 25, 1945–1954. 10.1016/j.cub.2015.06.009

- Smith CA, & Ellsworth PC (1985). Patterns of cognitive appraisal in emotion. Journal of Personality and Social Psychology, 48, 813–838. 10.1037/0022-3514.48.4.813

- Smith CA, & Lazarus RS (1990). Emotion and adaptation. In Pervin LA (Ed.), Handbook of personality: Theory and research (pp. 609–637). New York, NY: Guilford Press.

- Smith ER, & Mackie DM (2008). Intergroup emotions. In Lewis M, Haviland-Jones JM, & Barrett LF (Eds.), Handbook of emotions (pp. 428–439). New York, NY: Guilford Press.

- Sorce JF, Emde RN, Campos JJ, & Klinnert MD (1985). Maternal emotional signaling: Its effect on the visual cliff behavior of 1-year-olds. Developmental Psychology, 21, 195–200. 10.1037/0012-1649.21.1.195

- ten Brinke L, & Adams GS (2015). When emotion displays during public apologies mitigate damage to organizational performance. Organizational Behavior and Human Decision Processes, 130, 1–12. 10.1016/j.obhdp.2015.05.003

- Tomkins SS (1984). Affect theory. In Scherer KR & Ekman P (Eds.), Approaches to emotion (pp. 163–195). New York, NY: Psychology Press.

- Tracy JL, & Matsumoto D (2008). The spontaneous expression of pride and shame: Evidence for biologically innate nonverbal displays. Proceedings of the National Academy of Sciences of the United States of America, 105, 11655–11660. 10.1073/pnas.0802686105

- Tracy JL, & Robins RW (2004). Show your pride: Evidence for a discrete emotion expression. Psychological Science, 15, 194–197. 10.1111/j.0956-7976.2004.01503008.x

- Walle EA, Reschke PJ, Camras LA, & Campos JJ (2017). Infant differential behavioral responding to discrete emotions. Emotion, 17, 1078–1091. 10.1037/emo0000307

- Watson D, & Tellegen A (1985). Toward a consensual structure of mood. Psychological Bulletin, 98, 219–235. 10.1037/0033-2909.98.2.219
