Abstract

Supplemental Digital Content is available in the text.

Keywords: cardiology, critical care, echocardiography, education, ventricular function

The use of echocardiography in the ICU is a rapidly developing field and, in expert hands, has proven utility (1–3). Its utility in the hands of doctors with limited training is unclear, with the available data coming from small studies focusing on qualitative assessment (4–10).

The College of Intensive Care Medicine (CICM) in Australia and New Zealand has mandated a minimum focused cardiac ultrasound (FCU) component to its curriculum (11) and the minimum training requirements are based on the International expert statement on training standards for FCU (12, 13). It is unknown whether trainees can reliably perform FCU after this training. Furthermore, only qualitative assessments are expected in this minimum standard, even though it is known that certain quantitative measurements are invaluable in the assessment of the critically ill patient (1, 14, 15). These are outlined in guidelines on the use of echocardiography as a monitor (1) and include left ventricular outflow tract velocity time integral and diameter (LVOT VTI and LVOTd), left ventricular internal diameter in diastole (LVIDD), inferior vena cava diameter (IVCD) in inspiration and expiration, tricuspid regurgitation maximum velocity (TRVmax), and tricuspid annular plane systolic excursion (TAPSE) (1, 14, 15).

We developed a 12-month CICM accredited FCU program and delivered it to Intensive Care Registrars working at Epworth Richmond ICU.

The aim of this study was to determine the reliability of transthoracic echocardiography (TTE) assessment performed by Intensive Care Registrars after completing this training program.

MATERIALS AND METHODS

Trial Design

This study was a single-center, prospective reliability study using paired trainee- and expert-performed TTE scans in an Australian private, not-for-profit academic hospital. The study ICU is a 26-bed, CICM-accredited training unit in Melbourne, Australia, admitting over 2,400 patients annually.

Ethical approval was granted by Epworth HealthCare’s human research ethics committee (reference number EH2016-133). Written consent was obtained from all participants.

The year-long teaching program included 38 hours of scheduled education time and a requirement for a logbook of 30 scans. The scheduled teaching included didactic lectures, supervised hands-on practice with a cardiac simulator (Vimedix; CAE Healthcare, Sarasota, FL), live models, patients, and regular assessments. Trainees also had access to learning material via an online platform.

We recommended that trainees spend 20 minutes per week reading the online materials and perform one logbook TTE each week. All 30 logbook scans were directly supervised or reviewed soon after being performed; we estimated that this took 30 minutes per scan. Most trainees required 9–12 months to complete the 30 supervised scans required for the logbook.

The teaching program meets the requirements of both CICM and the Australasian Society for Ultrasound in Medicine for its certificate in clinician performed ultrasound: Rapid Cardiac Echocardiography. Details of these are available online (16, 17), and the syllabus and multiple-choice question assessments for our teaching program are submitted as Appendix 1 (Supplemental Digital Content 1, http://links.lww.com/CCM/F36), Supplement 1 (Supplemental Digital Content 2, http://links.lww.com/CCM/F37), Supplement 2 (Supplemental Digital Content 3, http://links.lww.com/CCM/F38), Supplement 3 (Supplemental Digital Content 4, http://links.lww.com/CCM/F39), and Supplement 4 (Supplemental Digital Content 5, http://links.lww.com/CCM/F40).

Many of the lectures are available in video form as Appendix 2 (Supplemental Digital Content 6, http://links.lww.com/CCM/F41). Our training echo report proforma is included as Appendix 3 (Supplemental Digital Content 7, http://links.lww.com/CCM/F42).

Inclusion and Exclusion Criteria for Trainee and Patient Participants

Trainees were eligible for inclusion after completing the CICM accredited echocardiography program described above. Trainees were excluded if they had prior significant experience in echocardiography as defined by completion of a CICM accredited course or more than five supervised scans.

Patient inclusion criteria specified adult patients in ICU or coronary care unit (CCU) not likely to be discharged within the next 2 hours. Exclusion criteria were atrial fibrillation, subcostal or intercostal drains, pneumothorax, or deemed inappropriate by the treating intensivist.

Data Collection

Data were prospectively collected comparing trainee echocardiograms with independent, blinded expert echocardiograms. Data were included only for study scans (acquired after the training program was completed) and hence do not include the baseline 30 scans performed as part of training. An expert was defined as an ICU consultant with a Diploma of Diagnostic Ultrasound qualification or a research sonographer with at least 5 years of clinical experience. Scans were performed sequentially, with the trainee scan immediately following the expert scan.

All study scans were completed independently and trainees received no assistance with any aspect of performing echocardiography including machine operation, image acquisition, and image interpretation.

Images and cineloops were acquired using a Philips CX50 with a S5-1 phased array probe (Philips, Andover, MA) and saved in Digital Imaging and Communications in Medicine format using unique study codes for operator and patient.

Allocation Concealment and Blinding

Patients were screened, enrolled, and allocated to trainees by the experts. A convenience sample of patients was taken, and trainee allocation was determined by availability. Trainees had no role in patient selection or allocation.

Trainees were blinded to their allocated patient’s diagnosis as well as the expert’s scan. Blinding was achieved by the expert immediately exporting and deleting their study from the echo machine prior to the trainee commencing the study. Trainees received no feedback during the data collection phase.

STATISTICAL METHODS

Sample Size Calculation

A sample size calculation based upon sample size for planning (18) was performed for the primary outcome measure, a binary measure of normal or abnormal left ventricular (LV) function. Agreement between two binary measures is generally measured by Cohen’s kappa (19, 20), a chance-corrected measure of agreement. Conventionally, a kappa of 0.81 or above is regarded as excellent agreement, 0.61 to 0.80 as substantial, 0.41 to 0.60 as moderate, 0.21 to 0.40 as fair, and 0 to 0.20 as slight (21). Balancing practicality and conservatism, expected kappa values of 0.60, 0.70, and 0.80 with a one-sided half-width or precision of 0.29 (i.e., lower 95% confidence limits of 0.31, 0.41, and 0.51, respectively) would require at least 37, 35, or 31 observations per registrar or expert, respectively. Therefore, at least 37 ratings by each registrar and expert were required. Calculations were performed using kappaSize:CIbinary package within R 3.4.3 (R Foundation for Statistical Computing, Vienna, Austria), assuming a 25% prevalence of abnormal LV function.
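To make the chance correction concrete, the following is a minimal Python sketch of Cohen's kappa for two raters, together with the agreement bands quoted above. It is illustrative only and is not the software used in the study; the function names and the example ratings are hypothetical.

```python
from collections import Counter


def cohens_kappa(ratings_a, ratings_b):
    """Cohen's kappa: agreement between two raters, corrected for chance."""
    if len(ratings_a) != len(ratings_b) or len(ratings_a) == 0:
        raise ValueError("need two non-empty rating lists of equal length")
    n = len(ratings_a)
    # Observed agreement: proportion of items given the same label by both raters.
    p_observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Chance agreement: product of the raters' marginal proportions, summed over labels.
    count_a, count_b = Counter(ratings_a), Counter(ratings_b)
    p_chance = sum(count_a[label] * count_b[label] for label in count_a) / n**2
    if p_chance == 1:
        raise ValueError("kappa is undefined when both raters use a single label")
    return (p_observed - p_chance) / (1 - p_chance)


def agreement_label(kappa):
    """Map kappa to the conventional bands quoted in the text (21)."""
    if kappa > 0.80:
        return "excellent"
    if kappa > 0.60:
        return "substantial"
    if kappa > 0.40:
        return "moderate"
    if kappa > 0.20:
        return "fair"
    if kappa >= 0:
        return "slight"
    return "below chance"  # label chosen for this sketch, not taken from the text


# Hypothetical ratings for 40 scans (not study data): 10 abnormal on expert scan.
expert = ["abnormal"] * 10 + ["normal"] * 30
trainee = ["abnormal"] * 8 + ["normal"] * 2 + ["normal"] * 29 + ["abnormal"] * 1

kappa = cohens_kappa(expert, trainee)
print(f"kappa = {kappa:.2f} ({agreement_label(kappa)})")
```

In this invented example the raters agree on 37 of 40 scans (92.5%), yet kappa is lower because much of that agreement would be expected by chance when most scans are normal.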

Statistical Analyses

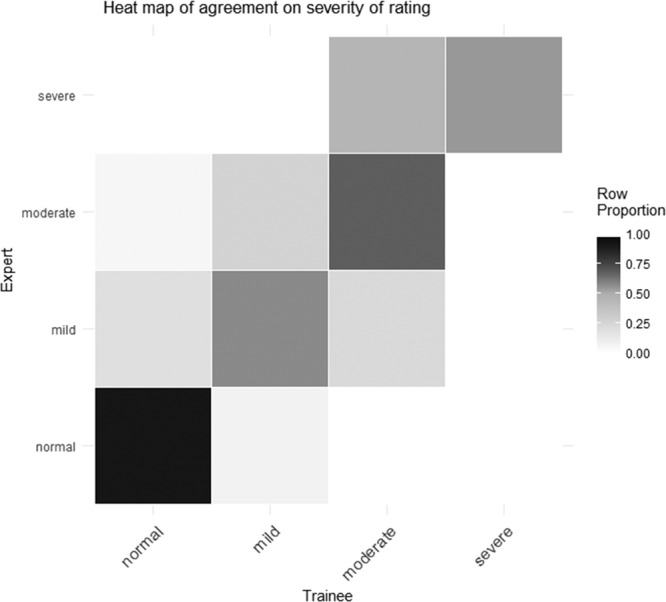

Sensitivity and specificity (20) were calculated for binary data, employing the expert as the reference or gold standard (22). LV function was also rated on four ordered categories (normal, or mild, moderate, or severe impairment); for these ratings, weighted kappa (22, 23) with quadratic weights (20) was employed. Finally, Lin’s concordance correlation coefficient (CCC) (24, 25), recently applied to ICU data by Labbé et al (26), was used to assess agreement on continuous data such as LVOT VTI. The CCC is bounded by –1 and +1 but, unlike the Pearson product-moment correlation, only reaches 1 when the two sets of scores are identical. A CCC of greater than 0.8 is regarded as representing good reliability (20). A heat map (27) comprising a rectangular matrix, with each cell or “tile” shaded to represent the percentage of trainee and expert ratings appearing in each cell, was generated using R 3.4.3 (R Foundation for Statistical Computing). Unless otherwise specified, all statistical analyses were performed using Stata 15 (StataCorp, College Station, TX). CIs for quadratic weighted kappas were calculated using the bootstrap method (28, 29) with 10,000 replications.
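The difference between Lin's CCC and the Pearson correlation can be illustrated with a short Python sketch. It uses the standard definition, CCC = 2·s_xy / (s_x² + s_y² + (mean_x − mean_y)²), with variances and covariance divided by n. The function names and the measurements are hypothetical, and this is not the Stata routine used for the analysis.

```python
from math import sqrt
from statistics import fmean


def _moments(x, y):
    """Means, variances, and covariance of paired data (divisor n)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two paired samples with at least two values")
    n = len(x)
    mean_x, mean_y = fmean(x), fmean(y)
    var_x = sum((a - mean_x) ** 2 for a in x) / n
    var_y = sum((b - mean_y) ** 2 for b in y) / n
    cov_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / n
    return mean_x, mean_y, var_x, var_y, cov_xy


def lins_ccc(x, y):
    """Lin's concordance correlation coefficient for paired measurements."""
    mean_x, mean_y, var_x, var_y, cov_xy = _moments(x, y)
    denominator = var_x + var_y + (mean_x - mean_y) ** 2
    if denominator == 0:
        raise ValueError("CCC is undefined for two identical constant series")
    return 2 * cov_xy / denominator


def pearson_r(x, y):
    """Pearson product-moment correlation, for comparison."""
    _, _, var_x, var_y, cov_xy = _moments(x, y)
    if var_x == 0 or var_y == 0:
        raise ValueError("Pearson r is undefined when a series is constant")
    return cov_xy / sqrt(var_x * var_y)


# Hypothetical LVOT VTI values in cm (not study data). The second rater
# reads every value 5 cm higher, a purely systematic offset.
expert_vti = [15.0, 18.0, 20.0, 22.0, 25.0]
trainee_vti = [value + 5.0 for value in expert_vti]

print(f"Pearson r = {pearson_r(expert_vti, trainee_vti):.2f}")
print(f"Lin's CCC = {lins_ccc(expert_vti, trainee_vti):.2f}")
```

With a constant offset the Pearson correlation remains 1, whereas the CCC is penalized by the squared difference in means, which is why the CCC is the more appropriate measure of agreement rather than association.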

Outcomes

Reliability of diagnostic accuracy was assessed on nine measurements. The primary outcome was agreement on LV function, assessed as a binary coded variable (normal vs mild/moderate/severe combined).
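As an illustration of the binary coding, the sketch below collapses four-level LV function grades into normal versus abnormal and then computes sensitivity and specificity with the expert as the reference. It is a minimal Python example under stated assumptions: the function names and the ten paired grades are hypothetical and are not study data.

```python
def to_binary(grade):
    """Collapse a four-level LV grade into the binary primary outcome."""
    return "normal" if grade == "normal" else "abnormal"


def sensitivity_specificity(reference, test, positive="abnormal"):
    """Sensitivity and specificity of `test` against `reference` labels."""
    tp = fn = tn = fp = 0
    for ref, obs in zip(reference, test):
        if ref == positive:
            if obs == positive:
                tp += 1  # abnormal on both scans
            else:
                fn += 1  # abnormality missed by the test rater
        else:
            if obs == positive:
                fp += 1  # normal scan called abnormal
            else:
                tn += 1  # normal on both scans
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("reference must contain both positive and negative cases")
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical paired grades for ten scans (not study data).
expert_grades = ["normal"] * 6 + ["mild", "moderate", "severe", "severe"]
trainee_grades = ["normal"] * 5 + ["mild"] + ["mild", "normal", "severe", "moderate"]

expert_binary = [to_binary(g) for g in expert_grades]
trainee_binary = [to_binary(g) for g in trainee_grades]

sens, spec = sensitivity_specificity(expert_binary, trainee_binary)
print(f"sensitivity = {sens:.2f}, specificity = {spec:.2f}")
```

Note that a disagreement between two abnormal grades (for example, severe versus moderate) does not count as an error under the binary coding; such disagreements are captured only by the categorical analysis.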

Secondary outcomes included agreement between expert and trainee on the following measures which were selected on the basis of their inclusion in relevant guidelines (1):

LV function assessed using a quadratic weighted kappa on the four categories of normal, or mild, moderate, or severe impairment; presence of pericardial effusion greater than 5 mm in diastole; right ventricular (RV):LV size ratio greater than 1 in the apical four-chamber view; LVIDD; LVOTd; LVOT VTI; TRVmax; TAPSE; IVCD in inspiration and expiration; trainee scan duration; and number of loops saved by the trainee.
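For the four-category LV function outcome, quadratic weighting penalizes a disagreement by the squared distance between the two grades, so a normal versus severe disagreement counts nine times as much as a disagreement between adjacent grades. The following Python sketch shows one common formulation, kappa_w = 1 − Σ w·O / Σ w·E with w = (i − j)² / (k − 1)². The function name and the example grades are hypothetical, and this is not the Stata implementation used in the study.

```python
def quadratic_weighted_kappa(ratings_a, ratings_b, categories):
    """Weighted kappa with quadratic disagreement weights for ordered categories."""
    if len(ratings_a) != len(ratings_b) or len(ratings_a) == 0:
        raise ValueError("need two non-empty rating lists of equal length")
    index = {category: i for i, category in enumerate(categories)}
    k, n = len(categories), len(ratings_a)

    # Cross-tabulate the paired ratings: rows are rater A, columns are rater B.
    observed = [[0] * k for _ in range(k)]
    for a, b in zip(ratings_a, ratings_b):
        observed[index[a]][index[b]] += 1
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(observed[i][j] for i in range(k)) for j in range(k)]

    weighted_observed = weighted_expected = 0.0
    for i in range(k):
        for j in range(k):
            weight = (i - j) ** 2 / (k - 1) ** 2  # 0 on the diagonal, 1 at the extremes
            weighted_observed += weight * observed[i][j]
            # Expected count under independence of the two raters.
            weighted_expected += weight * row_totals[i] * col_totals[j] / n
    if weighted_expected == 0:
        raise ValueError("weighted kappa is undefined when only one category is used")
    return 1 - weighted_observed / weighted_expected


GRADES = ["normal", "mild", "moderate", "severe"]  # ordered from best to worst

# Hypothetical paired grades for six scans (not study data).
expert = ["normal", "normal", "mild", "moderate", "severe", "severe"]
trainee = ["normal", "mild", "mild", "moderate", "moderate", "severe"]

print(f"quadratic weighted kappa = {quadratic_weighted_kappa(expert, trainee, GRADES):.2f}")
```

Both disagreements in this invented example are between adjacent grades, so the weighted kappa remains high even though one third of the scans were graded differently.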

RESULTS

Seven of the nine trainees in our ICU were eligible to participate in the research and all eligible trainees consented to participation. Data were collected over a period of 5 months and 270 paired echocardiograms were performed.

Table 1 outlines the characteristics of patients in our study. There was a mix of ICU and CCU patients with a large proportion of obese patients (body mass index > 30) and some ventilated patients.

TABLE 1.

Patient Characteristics of the Study

Patients were not screened for image quality prior to enrollment, and trainees could elect to omit a measurement if they could not obtain an adequate image. All enrolled patients were included in the analysis. The percentage of measurements obtained by trainees was LV function 100%, RV:LV size ratio 85%, presence of pericardial effusion 98%, LVIDD 83%, LVOTd 83%, LVOT VTI 85%, IVCD 47%, TAPSE 65%, and TRVmax 45%.

Full results for all trainees are provided in the supplementary tables. Supplementary Table 1 (Supplemental Digital Content 8, http://links.lww.com/CCM/F43) outlines the agreement (kappa) for all categorical results as well as sensitivity and specificity for binary data. Supplementary Table 2 (Supplemental Digital Content 9, http://links.lww.com/CCM/F44) details agreement for continuous variables, and Supplementary Table 3 (Supplemental Digital Content 10, http://links.lww.com/CCM/F45) details agreement on abnormal values of continuous variables.

LV Size and Function

Overall, the proportion of studies with abnormal LV function on expert scan was 25% (68/269). LV function as a binary analysis showed substantial agreement (kappa, 0.77; 95% CI, 0.65–0.89), with good specificity (97.0%; 95% CI, 93.9–98.8%) and a sensitivity of 81.3% (95% CI, 63.6–92.8%). Analysis of LV function by category demonstrated that, overall, trainees showed excellent agreement with experts (kappa, 0.86; 95% CI, 0.79–0.90) (2). Of note, trainee 6 had only 7% of patients (3/40) with abnormal LV function, and a single error may have distorted the kappa value, as kappa is adversely affected by very low or very high prevalences (20).

Figure 1 shows a heat map for agreement between expert and trainee by category of LV function. A high level of agreement (darker shading) is demonstrated across all categories.

Figure 1.

Heat map for agreement of left ventricular function. Expert finding (y-axis) plotted against trainee finding (x-axis). The row proportion scale (right of image) defines the finding overlap (trainee and expert) for the expert row by gray scale density. Agreement is defined as a matching category, which ascends from bottom left to top right.
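The row proportions that drive the shading of such a heat map can be computed from the paired ratings as sketched below. This minimal Python example covers only the tabulation, not the plotting; the function name and the ten paired grades are hypothetical and are not study data.

```python
def row_proportions(expert, trainee, categories):
    """Cross-tabulate paired ratings and normalize each expert row to sum to 1."""
    index = {category: i for i, category in enumerate(categories)}
    k = len(categories)
    counts = [[0] * k for _ in range(k)]
    for expert_grade, trainee_grade in zip(expert, trainee):
        counts[index[expert_grade]][index[trainee_grade]] += 1
    table = []
    for row in counts:
        total = sum(row)
        # A category never assigned by the expert gives an all-zero row.
        table.append([count / total if total else 0.0 for count in row])
    return table


GRADES = ["normal", "mild", "moderate", "severe"]

# Hypothetical paired grades for ten scans (not study data).
expert_grades = ["normal"] * 6 + ["mild", "moderate", "severe", "severe"]
trainee_grades = ["normal"] * 5 + ["mild"] + ["mild", "normal", "severe", "moderate"]

for grade, row in zip(GRADES, row_proportions(expert_grades, trainee_grades, GRADES)):
    print(f"expert {grade:>8}: " + "  ".join(f"{p:.2f}" for p in row))
```

Each printed row corresponds to one expert category, and the diagonal entries are the proportions of that category on which the trainee agreed. Because rows are normalized separately, a rare category can appear dark on the basis of very few scans.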

LV size was assessed with LVIDD and, as a group, trainees showed excellent agreement with experts, with a CCC of 0.82 (95% CI, 0.78–0.86). Results for each individual are shown in Supplementary Table 2 (Supplemental Digital Content 9, http://links.lww.com/CCM/F44).

RV Size and Function

Assessment of RV:LV size ratio showed substantial agreement (kappa, 0.76; 95% CI, 0.59–0.93), and this measure showed good sensitivity and specificity. Trainee 7 did not encounter any abnormal scans for this measure, which precluded individual analysis. RV systolic function was assessed with TAPSE, which for the group showed a CCC of 0.71 (95% CI, 0.64–0.78) and a sensitivity of 92.2% (95% CI, 86.1–96.2%) for detecting abnormal values.

Presence of Pericardial Effusion

In our study, the overall prevalence of pericardial effusion was low at 6.3% (17/261), and for two trainees there were no studies with a pericardial effusion, which prevented analysis of agreement. Overall, the agreement for this variable was low (kappa, 0.32; 95% CI, 0.09–0.56). For trainees who did perform studies with a pericardial effusion present, the specificity of this finding was high.

Volume State and Hemodynamic Monitoring

Supplementary Table 2 (Supplemental Digital Content 9, http://links.lww.com/CCM/F44) provides agreement between trainees and experts for continuous variables via analysis with Lin’s concordance correlation coefficient. LVOT VTI, LVIDD, and TAPSE were the measures that showed the best concordance. Supplementary Table 3 (Supplemental Digital Content 10, http://links.lww.com/CCM/F45) shows agreement between trainee and expert on continuous variables categorized as normal or abnormal. Trainee assessment of LVOT VTI, TAPSE, TRVmax, and LVIDD showed good sensitivity for detecting abnormal values, although in some cases without good concordance throughout the range of measurements. Agreement on IVCD was low, with a CCC for the group of 0.56 (95% CI, 0.45–0.68).

DISCUSSION

This is the first study to describe the accuracy of Australian trainees after the recently mandated critical care ultrasound requirement from CICM. Overall, our data demonstrate that after the described period of training, trainees can produce echocardiographic results that show substantial agreement with the findings of experts for some but not all measures. To our knowledge, this was also the first study to examine the reliability of novice echocardiographers performing a set of quantitative measurements in comparison to experts.

Trainees showed excellent agreement with experts when assessing LV function. For this study, the visual estimation method (VEM) was chosen because of its utility in the ICU setting (30). It is known that experienced assessors can use VEM to assess LV function accurately (31). Our study is consistent with other research in this field, which has demonstrated success with teaching VEM using a 30-scan training period (32), and supports the use and teaching of this measure in ICU. Trainee assessment of RV:LV size ratio as a marker of RV dysfunction showed substantial agreement. This is consistent with other research in this field (4, 10).

However, many of the other measures showed either relatively low concordance or substantial variability among trainees. Concordance for the detection of pericardial effusion was low. The prevalence of pericardial effusion in our study was low, which may preclude use of the kappa statistic, as it is known to be unstable when employed with low prevalence (20). Regardless, this still raises important questions in relation to the minimum training required to accurately detect this finding on echocardiography and how to define competency when the prevalence in training scans is also likely to be low.

Surprisingly, given its popularity for assessing volume status, the agreement on assessment of IVCD was also low. The reliability of IVCD has been questioned by other studies (33–36), and recent literature has described the pitfalls of using this technique in isolation (37).

More promising was the ability of trainees to measure LVOT VTI. This measure showed an overall CCC of 0.79 (95% CI, 0.74–0.84) between trainee and expert. Our findings are consistent with results from another validation study of a teaching intervention to assess LVOT VTI (31), which highlights this measure as a teachable and useful measure in ICU. Measurement of LVOT VTI can be used to assess cardiac output and fluid responsiveness, hence providing more information than measurement of IVCD alone (14). However, significant individual trainee variability again raises questions as to both minimum training requirement and assessment of competence. Similar variability was seen in the assessment of LVIDD, TRVmax, and TAPSE. TAPSE and TRVmax can be used to quantify RV systolic function and estimate pulmonary artery systolic pressure, respectively. Although TAPSE measures only longitudinal function, it has shown good correlation with techniques estimating overall RV systolic function (38).

Also notable was the ability of trainees to detect abnormal values for the quantitative measures of LVOT VTI, LVIDD, TRVmax, and TAPSE. The high sensitivity raises the possibility that trainee echocardiography could be used as a screening test for abnormalities in these parameters.

The main strengths of this study are the independent blinded nature of the trainee assessments and the power to assess the accuracy of individual trainees. This precluded the possibility that results were driven by high performing individuals and enabled detailed examination of individual reliability on each measure.

There are also several limitations to our study. Our department has a structured teaching program that exceeds the minimum CICM syllabus including modules on quantitative assessments. This, as well as our exclusion criteria, may limit the generalizability of our findings. The number of scans performed for study purposes also exceeded the number performed during baseline training thus providing the opportunity for additional skill acquisition. No additional feedback was provided during this period; however, a Hawthorne effect may have resulted in improved performance compared to a typical training environment.

The significant heterogeneity in the skill level of trainees raises some important questions for ongoing echo training in ICU. Specifically, what elements of echo training can be reliably taught to large cohorts across multiple sites, what are the minimum training requirements and how can individual competence be assessed?

CONCLUSIONS

ICU trainees demonstrated very high overall agreement with experts on the assessment of LV function, and agreement on other measures varying from poor to substantial. Identifying reliable echo skill acquisition is the first step in defining a critical care FCU curriculum as it provides us with the knowledge of the key measurements that can be accurately reproduced by trainees after a defined period of training. Further studies are required to determine the minimum training requirements for ICU echo training and a suitable method to assess for individual competence.

ACKNOWLEDGMENTS

We thank the ICU research sonography staff Karen Scholz and Katrina Timmins.

Footnotes

This work was performed at Epworth Richmond, 89 Bridge Rd, Richmond, 3121 Victoria, Australia.

Supplemental digital content is available for this article. Direct URL citations appear in the printed text and are provided in the HTML and PDF versions of this article on the journal’s website (http://journals.lww.com/ccmjournal).

Supported, in part, by a grant from the Epworth Research Institute, which was used to employ sonographers for the research. No additional funding was required to run the teaching program, which was delivered using existing nonclinical time from the consultant group.

Drs. Brooks’s and Barrett’s institutions received funding from Epworth Research Institute. Drs. Brooks and Kelly received support for article research from Epworth Research Institute. The remaining authors have disclosed that they do not have any potential conflicts of interest.

Trial registration: NCT02961439—https://clinicaltrials.gov.

REFERENCES

1. Porter TR, Shillcutt SK, Adams MS, et al. Guidelines for the use of echocardiography as a monitor for therapeutic intervention in adults: A report from the American Society of Echocardiography. J Am Soc Echocardiogr 2015; 28:40–56

2. Spencer KT, Kimura BJ, Korcarz CE, et al. Focused cardiac ultrasound: Recommendations from the American Society of Echocardiography. J Am Soc Echocardiogr 2013; 26:567–581

3. Wharton G, Steeds R, Allen J, et al. A minimum dataset for a standard adult transthoracic echocardiogram: A guideline protocol from the British Society of Echocardiography. Echo Res Pract 2015; 2:G9–G24

4. See KC, Ong V, Ng J, et al. Basic critical care echocardiography by pulmonary fellows: Learning trajectory and prognostic impact using a minimally resourced training model*. Crit Care Med 2014; 42:2169–2177

5. Beraud AS, Rizk NW, Pearl RG, et al. Focused transthoracic echocardiography during critical care medicine training: Curriculum implementation and evaluation of proficiency*. Crit Care Med 2013; 41:e179–e181

6. Vignon P, Dugard A, Abraham J, et al. Focused training for goal-oriented hand-held echocardiography performed by noncardiologist residents in the intensive care unit. Intensive Care Med 2007; 33:1795–1799

7. Price S, Via G, Sloth E, et al.; World Interactive Network Focused On Critical UltraSound ECHO-ICU Group: Echocardiography practice, training and accreditation in the intensive care: Document for the World Interactive Network Focused on Critical Ultrasound (WINFOCUS). Cardiovasc Ultrasound 2008; 6:49

8. Breitkreutz R, Uddin S, Steiger H, et al. Training and accreditation in the intensive care: Document for the world interactive network focused on critical ultrasound. Focused echocardiography entry level: New concept of a 1-day training course. Minerva Anestesiol 2009; 75:285–292

9. Melamed R, Sprenkle MD, Ulstad VK, et al. Assessment of left ventricular function by intensivists using hand-held echocardiography. Chest 2009; 135:1416–1420

10. Vignon P, Mücke F, Bellec F, et al. Basic critical care echocardiography: Validation of a curriculum dedicated to noncardiologist residents. Crit Care Med 2011; 39:636–642

11. College of Intensive Care Medicine of Australia and New Zealand: Focused cardiac ultrasound in intensive care. 2018. Available at: https://cicm.org.au/Trainees/Training-Courses/Focused-Cardiac-Ultrasound. Accessed February 3, 2019.

12. Cholley BP, Mayo PH, Poelaert J, et al. International expert statement on training standards for critical care ultrasonography. Intensive Care Med 2011; 37:1077–1083

13. Maclean AS. International recommendations on competency in critical care ultrasound: Pertinence to Australia and New Zealand. Crit Care Resusc 2011; 13:56–58

14. Cavallaro F, Sandroni C, Marano C, et al. Diagnostic accuracy of passive leg raising for prediction of fluid responsiveness in adults: Systematic review and meta-analysis of clinical studies. Intensive Care Med 2010; 36:1475–1483

15. Feissel M, Michard F, Faller JP, et al. The respiratory variation in inferior vena cava diameter as a guide to fluid therapy. Intensive Care Med 2004; 30:1834–1837

16. College of Intensive Care Medicine of Australia and New Zealand: Focused cardiac ultrasound in intensive care FAQ. 2018. Available at: https://www.cicm.org.au/Trainees/Training-Courses/Focused-Cardiac-Ultrasound#FAQ. Accessed February 3, 2019.

17. Australasian Society for Ultrasound in Medicine: Rapid cardiac echocardiography syllabus. 2018. Available at: https://www.asum.com.au/files/public/Education/CCPU/Syllabi/CCPU-Rapid-Cardiac-Echocardiography-Syllabus.pdf. Accessed February 1, 2019.

18. Cumming G. Understanding the New Statistics: Effect Sizes, Confidence Intervals, and Meta-Analysis. New York, NY, Routledge, 2012

19. Cohen J. A coefficient of agreement for nominal scales. Educ Psychol Meas 1960; 20:37–46

20. Streiner DL, Norman GR, Cairney J. Health Measurement Scales: A Practical Guide to Their Development and Use. Fifth Edition. Oxford, United Kingdom, Oxford University Press, 2015

21. Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics 1977; 33:159–174

22. Johnson BK, Tierney DM, Rosborough TK, et al. Internal medicine point-of-care ultrasound assessment of left ventricular function correlates with formal echocardiography. J Clin Ultrasound 2016; 44:92–99

23. Cohen J. Weighted kappa: Nominal scale agreement with provision for scaled disagreement or partial credit. Psychol Bull 1968; 70:213–220

24. Lin LI. A concordance correlation coefficient to evaluate reproducibility. Biometrics 1989; 45:255–268

25. Lin LI. A note on the concordance correlation coefficient. Biometrics 2000; 56:324–325

26. Labbé V, Ederhy S, Pasquet B, et al. Can we improve transthoracic echocardiography training in non-cardiologist residents? Experience of two training programs in the intensive care unit. Ann Intensive Care 2016; 6:44

27. Wilkinson L, Friendly M. The history of the cluster heat map. Am Stat 2009; 63:179–184

28. Efron B, Tibshirani R. An Introduction to the Bootstrap. Boca Raton, FL, Chapman & Hall, 1993

29. Lee J, Fung KP. Confidence interval of the kappa coefficient by bootstrap resampling. Psychiatry Res 1993; 49:97–98

30. Bergenzaun L, Gudmundsson P, Öhlin H, et al. Assessing left ventricular systolic function in shock: Evaluation of echocardiographic parameters in intensive care. Crit Care 2011; 15:R200

31. Shahgaldi K, Gudmundsson P, Manouras A, et al. Visually estimated ejection fraction by two dimensional and triplane echocardiography is closely correlated with quantitative ejection fraction by real-time three-dimensional echocardiography. Cardiovasc Ultrasound 2009; 7:41

32. Unlüer EE, Karagöz A, Akoğlu H, et al. Visual estimation of bedside echocardiographic ejection fraction by emergency physicians. West J Emerg Med 2014; 15:221–226

33. Hutchings S, Bisset L, Cantillon L, et al. Nurse-delivered focused echocardiography to determine intravascular volume status in a deployed maritime critical care unit. J R Nav Med Serv 2015; 101:124–128

34. Fields JM, Lee PA, Jenq KY, et al. The interrater reliability of inferior vena cava ultrasound by bedside clinician sonographers in emergency department patients. Acad Emerg Med 2011; 18:98–101

35. Bowra J, Uwagboe V, Goudie A, et al. Interrater agreement between expert and novice in measuring inferior vena cava diameter and collapsibility index. Emerg Med Australas 2015; 27:295–299

36. Akkaya A, Yesilaras M, Aksay E, et al. The interrater reliability of ultrasound imaging of the inferior vena cava performed by emergency residents. Am J Emerg Med 2013; 31:1509–1511

37. Orde S, Slama M, Hilton A, et al. Pearls and pitfalls in comprehensive critical care echocardiography. Crit Care 2017; 21:279

38. Rudski LG, Lai WW, Afilalo J, et al. Guidelines for the echocardiographic assessment of the right heart in adults: A report from the American Society of Echocardiography endorsed by the European Association of Echocardiography, a registered branch of the European Society of Cardiology, and the Canadian Society of Echocardiography. J Am Soc Echocardiogr 2010; 23:685–713; quiz 786–788