Abstract

Purpose of review:

Advances in display technology and computing have led to new devices capable of overlaying digital information onto the physical world or incorporating aspects of the physical world into virtual scenes. These combinations of digital and physical environments are referred to as extended realities. Extended reality (XR) devices offer many advantages for medical applications including realistic 3D visualization and touch-free interfaces that can be used in sterile environments. This review introduces extended reality and describes how it can be applied to medical practice.

Recent findings:

The 3D displays of extended reality devices are valuable in situations where spatial information such as patient anatomy and medical instrument position is important. Applications that take advantage of these 3D capabilities include teaching and pre-operative planning. The utility of extended reality during interventional procedures has been demonstrated through 3D visualizations of patient anatomy, scar visualization, and real-time catheter tracking with touch-free software control.

Summary:

Extended reality devices have been applied to education, pre-procedural planning, and cardiac interventions. These devices excel in settings where traditional devices are difficult to use, such as in the cardiac catheterization lab. New applications of extended reality in cardiology will continue to emerge as the technology improves.

Introduction

The rise of computing has transformed nearly every field, and medical practice is no exception. Despite the massive influence of computing on medicine, desktop computers and mobile devices are cumbersome or impossible to use in many aspects of clinical practice. Desktop and mobile devices rely on 2-dimensional (2D) screens to display graphics, text, and interface controls that users interact with using a keyboard and mouse or touch screen. These devices are difficult to use in sterile environments such as operating rooms, and trying to comprehend 3-dimensional (3D) information such as medical instrument locations or patient anatomy on a 2D display can be challenging.

Newly available devices are radically changing the human-computer interaction paradigm with 3D displays and new ways for users to interact with devices. These include virtual reality (VR) displays that can completely immerse users in 3D worlds. Augmented reality (AR) displays can project 3D objects into the user’s physical environment while still permitting full visibility of the user’s surroundings. 3D AR devices can create shared experiences, so that multiple users can view objects in the same location in their physical space. These devices also enable new user interactions including spatially tracked 3D controllers, voice inputs, gaze tracking, and hand gesture controls.

It’s intriguing to imagine what medicine will look like once these devices are fully deployed. Parents of congenital heart disease patients could put on AR goggles to view a 3D hologram of their child’s anatomy in the center of the room with their physician [1]. Using the spatial sharing capabilities of these devices, the doctor could also wear goggles so that the parents and doctor could view and point to the same images and discuss the treatment options. In the treatment planning phase, teams of clinicians could use 3D displays to view patient-specific anatomy obtained from CT to determine the optimal therapeutic intervention [2]. During procedures, interventionalists could view patient anatomy and real-time catheter positions in 3D while still having full visibility of the patient and operating room [3]. The interventionalists could remain sterile and have full control of this software using voice control, eye gaze, and hand gestures. While performing procedures, doctors and staff could see regions of bright colors near the fluoroscopy machine when it is in use, indicating areas of high radiation exposure [4]. This visible feedback could help ensure that safety equipment is properly positioned and unnecessary radiation exposure is avoided. After the procedures, medical students could review immersive 3D videos of these procedures as though they were present in the operating room.

Researchers are already iterating toward this possible future and making exciting progress. In this review, we will describe the technologies that enable these new human-computer interactions, and we will review research that is already demonstrating how these technologies can be applied to medical practice.

Human-Computer Interface Evolution

On December 9, 1968 at the Fall Joint Computer Conference, Douglas Engelbart presented a computer system his team of engineers and programmers had built to around 1,000 colleagues [5]. The features that Engelbart demonstrated for the first time included the computer mouse, windowed applications, hyperlinked media, file sharing, teleconferencing, and real-time collaborative editing. The system that Engelbart demonstrated would become the dominant paradigm of human-computer interaction in the decades that followed, and the computer has transformed nearly every industry in that time.

The 50 years since Engelbart’s demonstration have seen continuous exponential growth in computing power with a simultaneous decrease in cost, a phenomenon known as Moore’s Law [6]. While the raw power of computing devices has shown sustained exponential growth, innovation of human-computer interfaces has been much slower. Modern desktop operating systems (Windows 10, macOS, and Linux) still largely resemble the paradigm Engelbart demonstrated in 1968. In recent years, however, the rate of innovation in human-computer interaction has accelerated. Introduced in 2007, the iPhone was one of the first mainstream multi-touch devices. While interaction with touch-based devices is fluid and intuitive, this interface is in many ways an incremental advancement over the traditional keyboard and mouse paradigm. There are many scenarios in clinical practice, particularly during interventions, in which desktop and mobile computers are cumbersome or impossible to use.

Newly available devices allow computers to be used in completely different ways. Users can view immersive virtual worlds or view 3D digital information overlaid onto the physical world. These 3D views can give clinicians a better sense of patient anatomy and can help track surgical tooling and register images. 3D devices also have novel user inputs such as motion sensing controllers, voice input, visual gaze tracking, and hand gesture input. These inputs enable interactions that are not possible with a mouse and keyboard or touchscreen. Importantly, many of these human-computer interactions do not require any physical contact with a computer, which addresses a critical pain point during clinical interventions and procedures.

Many Realities

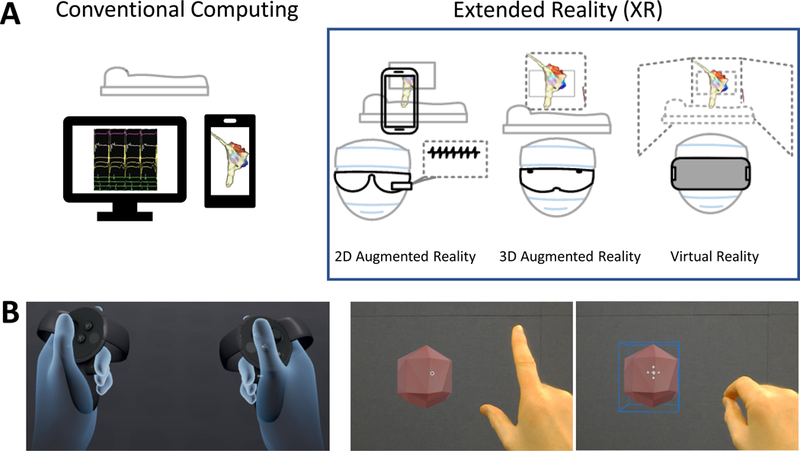

Recent years have seen computing devices emerge that can immerse users in a digital reality or overlay digital information onto physical reality [Fig 1A]. There are many terms used to describe and classify these devices, some with overlapping definitions or ambiguous interpretations. Rather than being overly focused on the taxonomy of these devices, we will define the most common terms and then use general terminology.

Figure 1.

Extended reality devices and interfaces. A. Conventional computing (left) displays images on a 2D screen with a clear separation between physical and digital realities. User interaction with conventional computing devices generally requires keyboard and mouse or touch screen. Extended reality devices (right) mix digital and physical realities. 2D augmented reality (AR) devices may be head-mounted or displayed on a phone or tablet screen by using a camera to display physical reality. 3D AR devices incorporate spatial mapping to display 3D objects in the user’s physical space. Virtual reality (VR) devices occlude the user’s vision but may incorporate views of the user’s surroundings. B. Extended reality interfaces. VR controllers (left) use optical tracking and button proximity sensors to display the user’s hand position and posture accurately in the VR headset. Gaze and gesture interfaces (right) enable users to select 3D objects by targeting them with a visual cursor (the white ring) and performing a pinch gesture to interact with the object.

Virtual Reality

The term virtual reality (VR) is likely a familiar one. VR refers to devices that occlude the user’s view of the physical world, so that the user only sees digitally rendered images. VR devices can mimic 3D stereoscopic vision by presenting separate images to each eye. In addition to stereoscopic 3D, more advanced devices perform real-time tracking of the VR headset so that as the user moves his or her head around in the physical world, the movement is matched in the digital world. Headset tracking and rendering framerate are very important for the comfort of VR users. Poor tracking, low framerates, or rendering lag can create discrepancies between what users see visually and what their vestibular system experiences. This mismatch can result in discomfort and nausea. Commercial examples of VR devices include the Oculus Rift, the HTC Vive, and a variety of devices that support the Windows Mixed Reality platform. VR devices are well suited for medical imaging, education, and pre-operative planning because of their excellent 3D visualization. Because VR devices completely cover users’ eyes, their clinical adoption will be limited to situations where clinicians do not require a direct view of the patient or their surroundings.
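The idea of presenting a separate image to each eye can be illustrated with a minimal Python sketch. It only computes where the two virtual cameras would sit relative to a tracked head position; the function name `eye_positions`, the coordinate convention, and the default interpupillary distance of 0.063 m are illustrative assumptions and are not taken from any particular headset or SDK.

```python
import math

def eye_positions(head_position, right_vector, ipd_m=0.063):
    """Return (left_eye, right_eye) camera positions for stereo rendering.

    head_position: (x, y, z) of the tracked headset, in meters.
    right_vector:  direction pointing to the wearer's right (any length).
    ipd_m:         interpupillary distance in meters (assumed default).
    """
    # Normalize the right vector so the offset is exactly ipd_m / 2.
    norm = math.sqrt(sum(c * c for c in right_vector))
    unit_right = tuple(c / norm for c in right_vector)
    half = ipd_m / 2.0
    # Each eye is shifted half the interpupillary distance from the head center.
    left = tuple(h - half * r for h, r in zip(head_position, unit_right))
    right = tuple(h + half * r for h, r in zip(head_position, unit_right))
    return left, right

# Hypothetical usage: a standing user whose right side points along +x.
left_eye, right_eye = eye_positions((0.0, 1.7, 0.0), (1.0, 0.0, 0.0))
```

Rendering the scene once from each returned position yields the two slightly different images that produce the stereoscopic effect. Recomputing the positions every frame from the tracked head pose is what keeps the virtual view matched to head movement.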

Augmented Reality

Another term that is rapidly growing in importance is augmented reality (AR). AR is a view of the physical world that is augmented by digital information. This view can be direct (such as a head-mounted display) or indirect (displayed on a phone or tablet screen). The most widely available form of AR is on mobile devices through Apple’s ARKit or Google’s ARCore frameworks. These applications typically use the device camera to display the physical world and process features such as walls, text, or faces that can be augmented in real-time with digital information. Popular examples of AR apps include measurement apps, real-time text translation, and the Pokémon Go game.

Google Glass was the first well-known direct AR device. Google Glass displays 2D information on a lens of glasses that the user wears, but the platform has not gained much traction. More recent technical advances have greatly expanded the capabilities of direct AR devices. Microsoft’s HoloLens and the Magic Leap One have integrated IR cameras that can track the user’s environment and project 3D objects into the user’s environment in real-time. Head-mounted AR devices may be particularly well suited for use during medical interventions because they can provide physicians with valuable information without blocking their view of the patient and operating room.

Extended Reality

The term “mixed reality” (MR) was created to describe a spectrum of VR and AR devices that blend the physical world with the digital world [7]. The term MR is sometimes used differently, and may include or exclude certain VR or AR applications depending on the exact definition. For this reason, the term “extended reality” (XR) has recently gained favor as an umbrella term that encompasses all of AR, VR, and MR. In this review, we use the term VR to refer to devices that completely occlude the user’s view of the physical world, AR to refer to devices that allow the user to view the physical and digital worlds, and XR as a general term. Details of various XR device types are presented in Table 1.

Table 1.

Extended reality device types, details, and clinical applications.

| Extended Reality Classification | Hardware Examples | User Interface | Technical Strengths | Technical Limitations | Clinical Applications |
|---|---|---|---|---|---|
| Virtual Reality | • Oculus Rift • HTC Vive | • Handheld motion-tracked controllers | • Superior 3D graphics performance and highest resolution | • User has no direct view of physical environment • Requires controller inputs | • The Stanford Virtual Heart • The Body VR • MindMaze |
| 2D Augmented Reality (Indirect) | • iPhone • iPad • Android Devices | • Touchscreen | • Widely available, inexpensive | • Phone or tablet must be held or mounted • Requires touch input | • Echocardiographic probe orientation (Kiss, 2015) |
| 2D Augmented Reality (Direct) | • Google Glass | • Side-mounted touchpad • Voice | • Lightweight head mounted display | • 2D display • UI does not interact with physical environment | • First-In-Man use in Interventional Cath (Opolski, 2016) |
| 3D Augmented Reality | • Microsoft HoloLens • Magic Leap • RealView Holoscope | • Voice • Gaze • Gestures | • Touch-free input • 3D display • Full visibility of surroundings | • Narrow field of view for 3D graphics | • HoloAnatomy • EchoPixel • RealView • Intraprocedural scar visualization (Jang, 2018) • Enhanced Electrophysiology Visualization and Interaction System (Silva, 2017) |

Human-Computer Interaction in Extended Reality

One of the more interesting aspects of extended reality in the context of medical practice is that these devices necessitate new paradigms for human-computer interaction. Traditional interaction requires physical contact with a keyboard and mouse or touchscreen to interact with a computer. These devices also use 2D metaphors of buttons, text, images, and other user interface elements to convey program state to the user. Immersive devices such as VR headsets are not easily compatible with a keyboard or mouse because the user’s view is completely occluded. Additionally, the metaphor of a pointer controlled by a mouse or trackpad in 2D is not intuitively applicable to 3D XR devices. The primary user inputs for XR devices are described below.

Voice

Voice interfaces are now ubiquitous thanks to mobile devices and standalone smart speakers. Apple’s Siri, Amazon’s Alexa, Google’s Assistant, and Microsoft’s Cortana are all voice-driven software interfaces that are continuously gaining new capabilities. These interfaces are most commonly used for productivity tasks, smart home interfaces, or music controls. Voice interfaces play an important role in XR interfaces where text entry is more cumbersome and users don’t have a 2D cursor to move around for selection actions. The hands-free nature of voice interfaces may be particularly useful in clinical interventional settings where physicians are directly manipulating tools with their hands. There are still hurdles to overcome when implementing voice interfaces, such as ambient noise and speed of speech.

Extended Reality Controllers

Many XR devices enable user control with handheld controllers. These controllers have capabilities beyond button press inputs. As an example, the Oculus Touch Controllers used by the Oculus Rift VR system are designed to provide the user with “hand presence” in the VR experience. The controller locations are tracked in the same coordinate system as the headset, which allows the users to see the location of their hands in the virtual world. In addition to buttons and 2-axis thumb sticks, the controllers also have proximity sensing, which allows the users to see their hands opening, closing, and changing postures based on how the controllers are held. An example of VR controllers with virtual hand presence is shown in Figure 1B (left). Although physical controllers provide a tactile method of interaction with 3D digital data, they are another device competing for an operator’s physical workspace, which can be limited in a sterile field.

Gesture and Gaze

While voice-driven interfaces were accelerated largely by the rise of mobile computing, other human-computer interactions have been developed specifically for XR devices. These interfaces include gaze and gesture controls. XR users can look at 3D objects to perform gaze inputs. In the simplest implementations, the user has a visual cursor in the middle of the device’s visual field. More advanced gaze input can be achieved using eye-tracking technology. Gestures are another form of input available to users. The Microsoft HoloLens enables users to perform a “click” gesture by tapping their index finger and thumb together in range of the device’s cameras. Importantly, voice, gaze, and gesture inputs can all be performed without requiring the user to physically touch any hardware. This makes devices like the HoloLens and Magic Leap One intriguing tools for interventional work. An example of gesture and gaze interaction is shown in Figure 1B (right). These two modalities of interaction provide a sterile alternative to controllers, but gesture inputs limit operators’ ability to control other tools with their hands. These inputs also require a steady gaze for accuracy when performing “click” gestures.
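The simplest form of gaze-and-gesture selection described above can be sketched in Python as a ray cast from the center of the user’s view, followed by a check for a “click” gesture. This is a minimal illustration under stated assumptions and not the implementation used by the HoloLens or any other device: selectable objects are approximated as spheres, and the names `Sphere`, `gaze_hit_distance`, `select_target`, and `pinch_detected` are hypothetical.

```python
import math
from dataclasses import dataclass

@dataclass
class Sphere:
    """A selectable object approximated by a bounding sphere (assumption)."""
    name: str
    center: tuple
    radius: float

def gaze_hit_distance(origin, direction, sphere):
    """Distance along the gaze ray to the sphere surface, or None on a miss."""
    # Normalize the gaze direction.
    norm = math.sqrt(sum(c * c for c in direction))
    d = tuple(c / norm for c in direction)
    # Vector from the ray origin (the head) to the sphere center.
    oc = tuple(c - o for c, o in zip(sphere.center, origin))
    # Length of the projection of that vector onto the gaze ray.
    t_closest = sum(a * b for a, b in zip(oc, d))
    if t_closest < 0:
        return None  # sphere center is behind the user
    # Squared distance from the sphere center to the ray.
    dist_sq = sum(a * a for a in oc) - t_closest ** 2
    if dist_sq > sphere.radius ** 2:
        return None  # the ray passes outside the sphere
    return t_closest - math.sqrt(sphere.radius ** 2 - dist_sq)

def select_target(origin, direction, targets, pinch_detected):
    """Name of the nearest gazed-at target if a pinch occurred, else None."""
    if not pinch_detected:
        return None
    hits = []
    for sphere in targets:
        t = gaze_hit_distance(origin, direction, sphere)
        if t is not None:
            hits.append((t, sphere.name))
    return min(hits)[1] if hits else None

# Hypothetical scene: two holograms floating 2 m in front of the user.
targets = [
    Sphere("left atrium model", (0.0, 0.0, 2.0), 0.5),
    Sphere("catheter tip", (1.0, 0.0, 2.0), 0.1),
]
selected = select_target((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), targets, True)
```

Because the selection depends on where the ray points at the instant of the pinch, small head movements can shift the ray off a small target, which is one way to see why a steady gaze matters for accuracy.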

Cardiovascular Applications of Extended Reality

The simplest medical applications of XR take advantage of the ability of XR displays to provide 3D visualizations of anatomy. These applications are typically aimed at education or pre-operative planning. More advanced applications are aimed at bringing XR into interventional procedures. Some of these applications make use of real-time tracking to give physicians a greater level of spatial understanding and control than conventional computing can achieve. Examples of these applications are described below.

Education

The motivation behind applying XR to education is that students or patients can gain a better understanding of anatomy through 3D XR views than through traditional materials. Examples of educational XR applications include the Case Western Reserve University HoloAnatomy application [9] and the Stanford Virtual Heart Project [1]. The HoloAnatomy application for the Microsoft HoloLens allows users to interactively explore human anatomy using the device’s holographic display. The Stanford Virtual Heart Project was created to help families better understand their child’s cardiac anatomy. The project later expanded to include Stanford medical students to aid visualization of normal and abnormal anatomy. Students are using the Stanford Virtual Heart to learn about congenital heart defects and visualize surgical procedures. The students have reported that VR is a much more engaging way to learn about anatomy than textbooks, videos, models, and cadavers. It is not yet clear how much benefit is gained by incorporating XR into education, though there is some evidence that students perform better on material that incorporates 3D technologies [10].

Diagnosis and Pre-Procedural Planning

Diagnosis and pre-procedural planning are another category of XR applications. Similar to educational applications, the idea behind these approaches is that clinicians can gain a better appreciation for patient anatomy using 3D displays. The EchoPixel system demonstrated the utility of this approach. The system uses 3D displays that function like 3D televisions as opposed to head-mounted XR displays. An initial cardiology study used the EchoPixel system to visualize arteries in patients with pulmonary atresia [11]. Cardiac radiologists interpreted CT angiography images using traditional tomographic readouts and 3D displays in different sessions separated by 4 weeks. The study found that radiologists who used the 3D display had interpretation times of 13 min compared to 22 min for those who used traditional tomographic reading. Both groups had similar accuracy in their interpretations compared to catheter angiography.

Intraprocedural Applications

The simplest intraprocedural XR applications are similar to educational and pre-procedural applications in that their primary function is to display anatomy for the physicians during procedures. While VR displays are a good choice for educational or pre-procedural applications, 3D AR displays are generally better suited for use during procedures. The AR displays have the advantage of not obscuring the physician’s vision during procedures. A recent example of this approach is a study that created a 3D visualization of myocardial scar imaged by late gadolinium enhancement [12]. Physicians viewed the 3D scar visualization with the Microsoft HoloLens during an animal ablation procedure. Operators and mapping specialists who used the visualization remarked on its usefulness during the intervention. Similar work has been performed using the RealView Holographic Display system, which projects holographic images without the need for any headset [2]. Researchers used this system to display 3D rotational angiography and live 3D transesophageal echocardiography during catheter procedures.

While true 3D visualization is a valuable aspect of clinical XR, real-time visual feedback about the physical world improves the utility of XR applications. An example of an indirect 2D AR application used ultrasound probe tracking to help novice sonographers understand the relationship between probe orientation and the cardiac imaging plane. By tracking anatomical landmarks and the position and orientation of an ultrasound probe, researchers were able to provide users with a tablet-based visualization of the probe’s imaging plane in a heart model [13]. The system was beneficial for teaching purposes and as a tool to help inexperienced ultrasound users. Direct head-mounted 2D AR has also been applied clinically. Cardiologists used CT angiography displayed on the Google Glass to assist in restoring blood flow to a patient’s right coronary artery [14]. Physicians used the display to visualize the distal coronary vessel and verify the direction of the guide wire relative to the blockage.

Another potential use of XR visualization is to reduce intraoperative radiation exposure to patients and clinicians during X-ray guided procedures. One proposed method for decreasing X-ray exposure is to use XR to visualize scattered radiation. Previous studies demonstrated that considerable radiation exposure and risk underestimation results from lack of awareness and poor knowledge of radiation behavior [15]. A proposed solution to this problem is to use AR to visualize areas of high radiation intensity to help optimize the use of protective measures to avoid overexposure [4]. Researchers demonstrated that handheld 2D AR screens can be used to display information about radiation intensity by incorporating the user’s position and the position and orientation of the X-ray device. This approach could show users regions of high X-ray intensity and alert users when sources are actively emitting X-rays. The 2D displays in the study could be replaced by head-mounted AR displays so that operating room personnel can see this information without holding any devices.

Our prototype HoloLens system for electrophysiology procedures demonstrates the utility of 3D visualization and a hands-free user interface during interventions [3]. The system visualizes patient-specific 3D cardiac anatomy, real-time catheter locations, and electroanatomic maps. Importantly, the system enables direct control of the software using a sterile voice, gaze, and gesture interface. The system also utilizes the sharing ability of the HoloLens to enable multiple users to view and interact with a single shared holographic model. This functionality can allow additional operators to assist a clinician with software control as necessary and return control to the physician when desired. We demonstrated the ability of this system to display historical cases for review as well as a live case observed in real-time from a control room. Other studies have demonstrated the feasibility of displaying pre-operative imaging, real-time 2D trans-esophageal ultrasound, and magnetic tracking of surgical tools in VR [16]. Incorporating all of these inputs into a single 3D visualization could be valuable for applications such as mitral valve implantation and atrial septal defect repair.

Challenges, Complementary Approaches, and Future Directions of Extended Reality in Cardiology

Display Technology

While XR offers new possibilities for clinical cardiology, there are still technical hurdles. Some VR users report side effects such as nausea or headaches. One study of the effectiveness of VR as a tool for teaching anatomy found that VR users were more likely to exhibit adverse side effects such as headaches, dizziness, or blurred vision [17]. Improvements to hardware framerate and headset tracking have helped decrease these symptoms in many users, but further improvements are likely necessary to improve user comfort.

A current limitation in 3D AR displays such as the HoloLens and Magic Leap One is the field of view. The field of view on the HoloLens is 34 degrees, compared to the normal human field of view of 150–170 degrees. The result of this small field of view is that virtual objects can only be seen in a relatively small volume directly in front of the user. Objects can be partially clipped or completely invisible when outside of the device’s field of view, despite remaining in the user’s natural field of view, which can be jarring. Newer devices are demonstrating that a field of view greater than 100 degrees is possible by reducing angular resolution, but it will likely take some time before mainstream devices deliver the same performance [18].
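To give a rough sense of what these angles mean in practice, the following Python sketch converts a field of view into the width of the region in which virtual objects can appear at a given viewing distance, using the standard relation width = 2 · d · tan(FOV / 2). The function name `visible_width` and the 1 m viewing distance are illustrative assumptions, and the sketch treats the quoted angle as the full angle along the axis of interest, which the figures above do not specify.

```python
import math

def visible_width(fov_degrees, distance_m):
    """Width (in meters) spanned by a field of view at a given distance."""
    half_angle = math.radians(fov_degrees) / 2.0
    return 2.0 * distance_m * math.tan(half_angle)

# Compare a 34-degree display with a 100-degree display at 1 m (assumed distance).
for fov in (34, 100):
    print(f"{fov} degrees at 1 m: {visible_width(fov, 1.0):.2f} m")
# prints:
# 34 degrees at 1 m: 0.61 m
# 100 degrees at 1 m: 2.38 m
```

Under these assumptions, a hologram wider than about 0.6 m would be clipped when viewed from 1 m away on a 34-degree display, which is consistent with the clipping behavior described above.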

Physical Assessment

Additional limitations of XR relate to its interaction with the physical world. While XR has considerable utility as a pre-operative planning tool, other approaches can offer benefits in some situations. As an example, determining the appropriate valve size for a transcatheter valve replacement is an important pre-procedural task. XR devices can provide highly detailed anatomy and measurements, but to test the physical interface between the valve and the anatomy would require complex physical modeling. This is an area that may be well served by 3D printing [19]. 3D printing enables the creation of anatomically accurate physical models, and the physical properties can be controlled to some extent by the materials used to create the models.

Sensors and Mechanical Feedback

Medical procedures rely on a multitude of sensors to track and display patient vitals. XR devices offer incredible flexibility for displaying sensor data. Sensor information is often displayed on large screens in the operating room, but XR devices have the capability of displaying this information in full 3D or on 2D “virtual windows.” This provides users with greater flexibility for moving, resizing, and hiding this information as necessary to help manage visual and physical clutter in the operating room. Integrating sensor information into XR devices requires network-enabled sensors. Network connectivity adds complexity to sensors, but network-connected sensors are increasingly common, as evidenced by the rise of the internet of things (IoT).

A difficulty of incorporating XR into more medical procedures is the lack of mechanical feedback. XR visualization is a natural fit for robotic surgeries, but the available control paradigms do not map well to these procedures. While motion controllers offer precise tracking and visual feedback, they cannot provide mechanical resistance and could fall out of sync with any controlled tools that experience mechanical resistance to motion. Forces experienced in laparoscopic or catheter-based procedures are often due to contact with surroundings rather than the tip, and can make mechanical feedback difficult to interpret [20]. A useful feedback mechanism requires careful design of both force sensing and application of the force to the user. Prior work with the da Vinci surgical robotic system found that pneumatic balloon tactile feedback was an effective solution [21]. One area where XR could improve on direct manipulation of surgical tools is in procedures with forces that are too small to perceive without artificial feedback. Previous work demonstrated that small forces can be amplified to improve the user’s perception of the forces [22].

Conclusions

Recent advances have led to a new class of devices such as 3D VR and AR displays. These devices offer new advantages to cardiology, where spatial reasoning is very important. Additionally, many of these devices use new input paradigms that can give interventionalists control of software interfaces in sterile environments where computer interaction was previously not possible. The most readily available benefits of XR are in the form of visualizations of 3D anatomy and real-time display of anatomy and tooling. There are currently no published prospective clinical trials using AR or XR in human subjects. However, given the rapid development in the field, we expect to see human data in the near future. The XR hardware landscape is changing rapidly. Future hardware advances should improve visual realism and user comfort. Incorporation of haptic feedback into XR systems may be an important breakthrough for interventional procedures. Readers interested in more information about this field, particularly XR display hardware considerations, should consult our previous review [23]. The book Mixed and Augmented Reality in Medicine [24] will be of interest to readers looking for an in-depth resource about XR in medicine.

References

- 1. The Stanford Virtual Heart – Revolutionizing education on congenital heart defects [Internet]. Available from: https://www.stanfordchildrens.org/en/innovation/virtual-reality/stanford-virtual-heart.

- 2. Bruckheimer E, Rotschild C, Dagan T, Amir G, Kaufman A, Gelman S, et al. Computer-generated real-time digital holography: first time use in clinical medical imaging. Eur Heart J Cardiovasc Imaging 2016;17:845–9.

- * 3. Silva JN, Southworth MK, Dalal A, Van Hare GF. Improving Visualization and Interaction During Transcatheter Ablation Using an Augmented Reality System: First-in-Human Experience (abstr). Circulation 2017;136. Our prototype electrophysiology mapping software developed for the Microsoft HoloLens demonstrates the power of XR by visualizing patient-specific 3D anatomy and real-time catheter locations with a completely sterile user interface.

- 4. Loy Rodas N, Barrera F, Padoy N. See It With Your Own Eyes: Markerless Mobile Augmented Reality for Radiation Awareness in the Hybrid Room. IEEE Trans Biomed Eng 2017;64:429–40.

- 5. Doug’s Great Demo: 1968 [Internet]. Available from: http://www.dougengelbart.org/content/view/209/448/

- 6. Over 50 Years of Moore’s Law [Internet]. Available from: https://www.intel.com/content/www/us/en/silicon-innovations/moores-law-technology.html.

- 7. Milgram P, Kishino F. A taxonomy of mixed reality visual displays. IEICE Trans Information Systems 1994;E77-D(12):1321–9.

- 8. What is mixed reality? [Internet]. Available from: https://docs.microsoft.com/en-us/windows/mixed-reality/mixed-reality.

- 9. HoloAnatomy app wins top honors [Internet]. Available from: http://engineering.case.edu/HoloAnatomy-honors.

- 10. Peterson DC, Mlynarczyk GSA. Analysis of traditional versus three-dimensional augmented curriculum on anatomical learning outcome measures. Anat Sci Educ 2016;9:529–36.

- 11. Chan F, Aguirre S, Bauser H. Head tracked stereoscopic pre-surgical evaluation of major aortopulmonary collateral arteries in the newborns. Paper presented at: Radiological Society of North America 2013 Scientific Assembly and Annual Meeting; Chicago, IL; 2013.

- 12. Jang J, Tschabrunn CM, Barkagan M, Anter E. Three-dimensional holographic visualization of high-resolution myocardial scar on HoloLens. PLoS ONE 2018;13:e0205188.

- 13. Kiss G, Palmer CL, Torp H. Patient adapted augmented reality system for real-time echocardiographic applications. In: Linte CA, Yaniv Z, Fallavollita P, editors. Augmented environments for computer-assisted interventions. Cham: Springer International Publishing; 2015. pp. 145–54.

- 14. Opolski MP, Debski A, Borucki BA, Szpak M, Staruch AD, Kepka C, et al. First-in-Man Computed Tomography-Guided Percutaneous Revascularization of Coronary Chronic Total Occlusion Using a Wearable Computer: Proof of Concept. Can J Cardiol 2016;32:11–3.

- 15. Katz A, Shtub A, Roguin A. Minimizing ionizing radiation exposure in invasive cardiology safety training for medical doctors. Journal of Nuclear Engineering and Radiation Science 2017;3.

- 16. Linte CA, Moore J, Wedlake C, Bainbridge D, Guiraudon GM, Jones DL, et al. Inside the beating heart: an in vivo feasibility study on fusing pre- and intra-operative imaging for minimally invasive therapy. Int J Comput Assist Radiol Surg 2009;4:113–23.

- 17. Moro C, Štromberga Z, Raikos A, Stirling A. The effectiveness of virtual and augmented reality in health sciences and medical anatomy. Anat Sci Educ 2017;10:549–59.

- 18. Statt N. Realmax’s prototype AR goggles fix one of the HoloLens’ biggest issues. Available from: https://www.theverge.com/2018/1/9/16872110/realmax-ar-goggles-microsoft-hololens-prototype-field-of-view-ces-2018.

- 19. Vukicevic M, Mosadegh B, Min JK, Little SH. Cardiac 3D Printing and its Future Directions. JACC: Cardiovascular Imaging 2017;10:171–84.

- 20. van der Meijden OAJ, Schijven MP. The value of haptic feedback in conventional and robot-assisted minimal invasive surgery and virtual reality training: a current review. Surgical endoscopy 2009;23:1180–90.

- 21. King C-H, Culjat MO, Franco ML, Lewis CE, Dutson EP, Grundfest WS, et al. Tactile Feedback Induces Reduced Grasping Force in Robot-Assisted Surgery. IEEE transactions on haptics 2009;2:103–10.

- 22. Stetten G, Wu B, Klatzky R, Galeotti J, Siegel M, Lee R, et al. Hand-held force magnifier for surgical instruments. 2011;90–100.

- * 23. Silva JNA, Southworth M, Raptis C, Silva J. Emerging Applications of Virtual Reality in Cardiovascular Medicine. JACC Basic Transl Sci 2018;3:420–30. Our previous review of this subject includes additional information about extended reality display hardware.

- * 24. Peters TM, Linte CA, Yaniv Z, Williams J. Mixed and Augmented Reality in Medicine. CRC Press; 2018. Readers interested in a thorough treatment of XR in medical practice should consider this book.