Abstract

Background:

Clinicians are key drivers for improving health care quality and safety. However, some may lack experience in quality improvement and patient safety (QI/PS) methodologies, including root cause analysis (RCA).

Objective:

The Department of Veterans Affairs (VA) sought to develop a simulation approach to teach clinicians from the VA’s Chief Resident in Quality and Safety program about RCA. We report the use of experiential learning to teach RCA, and clinicians’ preparedness to conduct and teach RCA post-training. We provide curriculum details and materials to be adapted for widespread use.

Methods:

The course was designed to meet the learning objectives through simulation. We developed course materials, including presentations, a role-playing case, and an elaborate RCA case. Learning objectives included (1) basic structure of RCA, (2) process flow diagramming, (3) collecting information for RCA, (4) cause and effect diagramming, and (5) identifying actions and outcomes. We administered a voluntary, web-based survey in November 2016 to participants (N = 114) post-training to assess their competency with RCA.

Results:

Of the 114 participants invited, 93 completed the survey, yielding an 82% response rate. Nearly all respondents (99%, N = 92) reported feeling at least moderately prepared to conduct and teach RCA post-training. Most respondents (77%, N = 72) reported feeling very or extremely prepared to conduct and teach RCA.

Conclusions:

Experiential learning involving simulations may be effective to improve clinicians’ competency in QI/PS practices, including RCA. Further research is warranted to understand how the training affects clinicians’ capacity to participate in real RCA teams post-training, as well as applicability to other disciplines and interdisciplinary teams.

Keywords: root cause analysis, medical education, quality improvement, patient safety, curriculum, simulation

Introduction

The Institute of Medicine maintains that patient safety, and unintentional patient harm in particular, is an urgent public health issue.1 Patient harm is often a consequence of medical errors, which can involve system-based mistakes, including missed or delayed diagnoses, miscommunication between care teams, and misadministration of medication.2-5 The resulting impact on the patient ranges from no harm to death.2-5 While current initiatives and collaborative practices strive to make patient care safer, adverse events still occur. In the United States, medical errors have been estimated to be the third leading cause of death, accounting for more than 250 000 deaths each year.6 This further emphasizes the need to improve patient safety. Common efforts include standardizing care processes and recognizing and improving specific aspects of care.2-5 Moreover, hospitals dedicated to patient safety may use a more foundational approach, such as establishing and promoting a patient safety culture.7,8 This may result in system-wide changes that improve a broader range of care aspects. One framework for this approach is that of the high-reliability organization (HRO), which is common in industries such as commercial aviation.7,8

High-reliability organizations adhere to 5 core principles: (1) sensitivity to operations and how they affect the organization; (2) reluctance to accept simple explanations, striving instead to identify the root source of problems; (3) preoccupation with failure, including anticipating and correcting potential problems before they occur; (4) deference to individuals with expertise in the particular task at hand, regardless of hierarchy; and (5) commitment to resilience and adaptability when developing new solutions to unexpected problems.7,8 As a result, major harm events in HROs are rare.7,8 When applied to the health care system, a culture of safety means identifying systematic causes contributing to patient harm, encouraging error reporting and removing individual blame, and promoting multi-level collaboration to develop solutions that may prevent similar errors from happening again.7,8 There are existing tools intended to support this process, such as root cause analysis (RCA).9,10 Clinicians in particular are key drivers for improving health care, but some may lack experience or knowledge in the use of such systems-based practices or quality improvement and patient safety (QI/PS) methodologies, including how to conduct an RCA.11-13

Although RCA has been promoted as both tangible evidence of high reliability and an important tool for improving patient safety, literature suggests that execution of RCA within the health care system is often suboptimal.13,14 One leading cause of this is a lack of knowledge and skill of personnel carrying out the RCA.15,16 There is substantial literature outlining the ideal RCA process; however, little information is available regarding a formal curriculum for RCA in the published literature.15,16 Kung et al17 developed and implemented a case-based teaching method involving a 1-hour presentation on RCA for radiology residents. In addition, Lambton and Mahlmeister18 paired a simulation activity with RCA training for nursing students. While these 2 studies put forward the concept of a simulation-based method to teach RCA, both describe a more superficial overview of the RCA process rather than a structured curriculum.

This work is unique in that we report on our development and execution of an interactive, simulation-based method to teach RCA in a short session. The purpose of this report is to share the curriculum and results of using RCA simulations for training, and clinicians’ preparedness to conduct and teach RCA after the training. Furthermore, this study provides educational materials and details on the curriculum to teach RCA using a low-technology simulation method.

Methods

This project was reviewed and approved by the Research and Development Committee of the White River Junction VA Medical Center. Participants were US-based clinicians from various medical specialties in the Department of Veterans Affairs (VA) Chief Resident in Quality and Safety (CRQS) training program. The CRQS program was developed for residents to gain experience teaching QI/PS concepts and leading related projects.19,20

Conceptual model

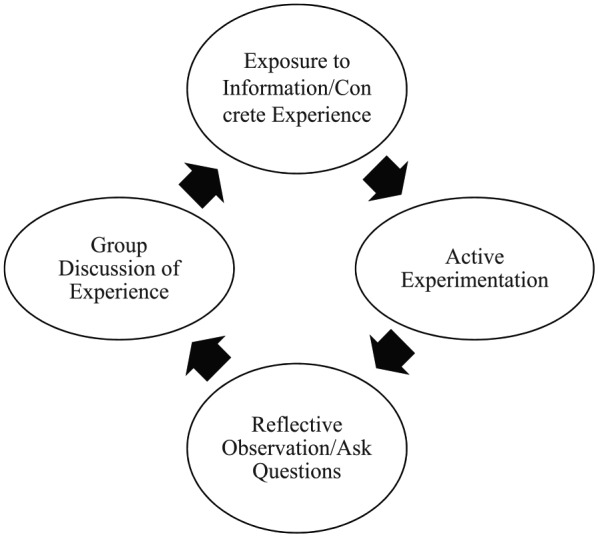

The overall course design was informed by adult learning theory (Figure 1). This conceptual model is based on the work of Kolb et al, which suggests that learning is best accomplished through concrete experience, followed by reflection and discussion. Participants are exposed to new information or have a concrete experience, actively practice what was learned with example cases, reflect on the experience and ask questions, and internalize or learn the abstract concepts through group discussion.21,22

Figure 1.

Conceptual model of optimal learning through simulation, adapted from Kolb et al.21,22

Learning objectives

The course learning objectives were developed through consensus discussion among patient safety experts. These objectives were based on the course’s goal to develop clinicians’ ability to actively engage in patient safety activities such as RCA. The learning objectives included (1) the basic structure of an RCA (eg, main components and how it is done), (2) how to construct initial understanding and final event flow diagrams, (3) how to collect information for an RCA (eg, interviews), (4) how to do cause and effect diagramming to obtain actionable items (eg, fishbone diagram), and (5) how to develop actions and outcomes. We developed course materials, including presentations, a role-playing case, and an elaborate RCA case. The presented information is summarized in Table 1, and case summaries are given in Table 2. The full version of these materials is available online and may be modified to meet user needs.

Table 1.

RCA presentation content and take-home message for each learning objective.23

| Learning objective | PowerPoint content | Take-home message |
|---|---|---|
| 1. Basic structure of an RCA | Introduction to RCA. Purpose of an RCA: In complex systems such as health care, reporting medical errors and evaluating their causes is necessary for improving safety. RCA is a structured and standardized method for investigating the causes of medical errors by focusing on the systematic and organizational factors that led to the adverse event. RCAs are useful for determining what happened, why it happened, and what can be done to prevent it from happening again. When to conduct an RCA: RCAs should be done following a known adverse event, a close call or near miss, or an event that resulted in an unexpected negative outcome. The Joint Commission sentinel event policy also requires RCAs following sentinel events such as wrong-site surgery. In the VA, RCAs are completed locally by hospital staff and are sent to the NCPS and cataloged. Following an adverse event, the patient safety manager at each VA facility determines whether to commission an RCA team by using a matrix that incorporates the severity, frequency, and vulnerability to recurrence. The patient safety manager is trained in RCA and patient safety protocols and procedures, and facilitates teams to conduct the RCA. When NOT to conduct an RCA: RCAs should not be done when the event is the result of an illegal act of someone in the hospital (eg, angel of death or taking medications on purpose), when the event resulted from an intentionally unsafe act (eg, wheelchair races), when illegal drug or alcohol use is involved (eg, nursing assistant came to work intoxicated), or when patient abuse is suspected. Legal aspects of RCA: Information from RCA cannot be obtained from lawsuits, and RCA data should be de-identified during the process. In the VHA, the information cannot be discussed under 5705 protections. Furthermore, RCA cannot be used for personnel action. Staff cannot be punished, fired, or reprimanded based on what is learned in RCA. | • The goal is to explore and identify root causes and systems issues rather than blame individuals. |
| 2. How to do process flow diagramming | Process flow diagramming. Purpose of a process flow diagram: A process flow diagram may be used to gain an initial understanding of what is known and what is not known about the event, and to develop a plan to further investigate the event. Process flow diagram rules: Process flow diagramming is based on the sequence of events, and should describe the steps and timeline of the adverse event. It should include actions leading up to, during, and after the case. | • Think about what actually happened and use the questions that arise to inform the interviews. The goal is to determine the “holes in the story” and to end up with a final understanding of the flow diagram. This goal makes it different from a flow diagram used for quality improvement purposes, which is a sequencing of events. • It might be easy for 1 person to lead this process, but it is essential that all members participate to get a factual and holistic understanding of what happened. |
| 3. RCA data collection: interviews | Interviews. Once an initial understanding process flow diagram is created, missing elements of the event and additional questions may be clarified through staff interviews. The interview should begin with informing the subject of the RCA process and its protections. Interviewers should be clear about what they hope to learn from the interview and remain sensitive to the subject’s feelings. | • Not everything documented in the medical record actually happened, and not everything that happened is documented in the medical record. Although complete and accurate documentation is the ideal, participants need to seek the truth of what actually happened. • To truly understand what went wrong, you have to go to the place of the incident and examine it. • People may be emotional during interviews, and it is the interviewer’s job to compassionately respond to these emotions while doing their best to obtain information. The interviewee needs to be assured that no repercussions will result from the interview, or they may not openly share about the case. |
| 4. How to do cause and effect diagramming | Cause and effect diagramming. The goal of a cause and effect diagram is to help determine root causes and contributing factors of the event. The cause and effect diagram should follow the 5 rules of causation: (1) clearly show the cause and effect relationship (eg, residents are scheduled 80 hours per week; as a result, fatigued residents are more likely to misread instructions, leading to error), (2) use specific and accurate descriptors for what occurred rather than negative and vague words (eg, the pump manual had 8-point font and no illustrations; as a result, nursing staff rarely used it, increasing the likelihood of incorrect pump programming), (3) identify the preceding cause(s), not the human error (eg, due to the lack of automated software to check high risk medication dosage limits, there was increased likelihood of insulin overdose), (4) identify the preceding cause(s) of procedure violations (eg, noise and confusion in the preparation area, coupled with production pressures, increased the likelihood of missing steps in the CT scan protocol), and (5) failure to act is only causal when there is a preexisting duty to act (eg, the absence of an assignment for designated nurses to check for STAT orders every 30 minutes increased the likelihood that STAT orders would be missed or delayed). Develop a fishbone or cause and effect diagram by identifying the problem statement and the factors (eg, people, process/methods, equipment, materials, measurements, environment) that contributed to the problem. | • Identified root causes are more akin to hypotheses about causes. When actions are implemented and evaluated, they provide information on whether the root causes were on target. • Ask “why” multiple times because what appears to be the root cause may not always be the root cause. It may be a surface-level factor, which requires further investigation to arrive at the system-level issue. |
| 5. Identifying actions and outcomes | Actions and outcomes. When all necessary information is gathered and diagrams are finalized, potential actions and outcomes may be identified. Actions should be driven by root causes and may follow a hierarchy of actions by Bagian et al. This involves aiming for strong actions whenever possible. Stronger actions include architectural/physical plant changes, usability testing, or engineering control (forcing functions). Although these actions may be costly, they involve changes to the physical environment that remain constant over time and may be ideal to support lasting improvements. Intermediate actions may help streamline workflow and include checklists/cognitive aids, software enhancements/modifications, or implementing tools for enhanced communication and documentation. Finally, weaker actions involve double checks, warnings and labels, or training. Such actions may prove effective when coupled with stronger actions; however, they are considered weaker because they require reminders or re-training of staff. Outcomes should be driven by the event and potential solution (actions). | • Unless actions are implemented and outcomes are evaluated, similar adverse events may occur. |

Abbreviations: CT, computed tomography; NCPS, National Center for Patient Safety; RCA, root cause analysis; VA, Department of Veterans Affairs; VHA, Veterans Health Administration.
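The hierarchy of actions summarized under learning objective 5 can be sketched as a small lookup, which may be handy when a learner group wants to rank its proposed actions. This is an illustrative sketch only: the names `Strength`, `ACTION_STRENGTH`, and `strongest_first` are hypothetical and not part of the course materials, and the action labels are paraphrased from Table 1.

```python
from enum import IntEnum


class Strength(IntEnum):
    """Action strength tiers as described in Table 1 (higher is stronger)."""
    WEAKER = 1
    INTERMEDIATE = 2
    STRONGER = 3


# Hypothetical lookup; the labels paraphrase the examples listed in Table 1.
ACTION_STRENGTH = {
    "architectural/physical plant change": Strength.STRONGER,
    "usability testing": Strength.STRONGER,
    "engineering control (forcing function)": Strength.STRONGER,
    "checklist/cognitive aid": Strength.INTERMEDIATE,
    "software enhancement/modification": Strength.INTERMEDIATE,
    "enhanced communication and documentation tool": Strength.INTERMEDIATE,
    "double check": Strength.WEAKER,
    "warning or label": Strength.WEAKER,
    "training": Strength.WEAKER,
}


def strongest_first(actions):
    """Order proposed actions from strongest to weakest tier."""
    return sorted(actions, key=lambda a: ACTION_STRENGTH[a], reverse=True)


proposed = ["training", "checklist/cognitive aid", "usability testing"]
ranked = strongest_first(proposed)
print(ranked)
```

Ranking does not replace judgment: as Table 1 notes, weaker actions may still be effective when coupled with stronger ones.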

Table 2.

Case summaries.

| Case | Summary |
|---|---|
| Sticky eyeball case | The case of the sticky eyeball takes place in a crowded, busy ER at a teaching hospital. A 3-year-old child has fallen and struck his right supra-orbital ridge on the corner of a coffee table; the injury requires sutures. The mother requests liquid topical adhesive to prevent scar formation. The overwhelmed ER attending instructs his resident, who has 2 previous supervised experiences using the product, to perform the procedure, and asks an ER nurse to provide the resident with the necessary materials. The procedure is performed in a cramped room that was previously a janitor’s closet. The child begins moving and crying during the procedure, and the resident drips glue into the child’s eye. The ER attending wants to know how this could have happened. |
| Mr Smith fictitious RCA case | Mr Smith is a young veteran of the wars in Iraq and Afghanistan. He presented to the outpatient clinic for pre-surgical labs in anticipation of his scheduled (right) ACL repair the following morning. Mr Smith was admitted overnight primarily due to transportation difficulty. The ACL repair was uneventful, and Mr Smith appeared stable following surgery. During post-surgical rounds, he mentioned that physical therapy aggravated an old high school football injury in his left knee. Dr Martin saw Mr Smith again on Friday afternoon and then handed off care to Dr Miller for the weekend. On Saturday, Mr Smith was discharged home and received transportation from his cousin. One week later, Mr Smith’s mother called the outpatient clinic to cancel his follow-up appointment. The clerk rescheduled for the same time slot the following week. Two days later, Mr Smith passed away at the university hospital in his hometown. Although his family declined an autopsy, he was found to have sepsis, which likely was the cause of death. |

Abbreviations: ACL, anterior cruciate ligament; ER, emergency room; RCA, root cause analysis.

Course structure: 3 parts

The course is taught in a workshop format. It involves brief lectures, followed by exercises and discussions facilitated by faculty. Most exercises are done in small groups of 3 to 5 learners.

Part 1 (approximately 45 minutes)

The course begins with a presentation (10 minutes) introducing RCA concepts and how to construct a process flow diagram. Please see Supplemental Appendix A for the full presentation. Participants read and act out the role-play case (5 minutes; Supplemental Appendix B). They practice constructing an initial understanding process flow diagram with the given information, and are encouraged to reflect on the process (eg, what was the purpose, what was easy and/or hard) and ask questions of the course instructors (20 minutes). This exercise is followed by group discussion of RCA structure and process flow diagramming, and participants are instructed to list the questions about the case that they still need answered to complete the final process diagram (10 minutes).

Part 2 (approximately 45 minutes)

Part 2 of the course is parallel to Part 1. Several additional components of RCA are described that largely focus on the investigative aspects of RCA, including how to conduct interviews and perform site visits. Cause and effect diagrams as well as construction of actions and outcomes are also described. Participants use their unanswered questions from Part 1 to structure their interviews, and practice interviewing simulated providers. They use the information obtained from the interviews to complete their cause and effect diagram, and identify actions and outcomes (35 minutes). They are encouraged to reflect on the process (eg, did they have enough information to determine causality, did they go backward enough in the causal cascade, what was easy and/or hard about the process), as well as ask questions of the course instructors. This exercise is followed by group discussion (10 minutes). If pressed for time, faculty could proceed directly to the more elaborate RCA case to practice skills instead of conducting mock interviews on the role-play case. Please see Supplemental Appendix C for a sample agenda.

Part 3 (approximately 2 hours)

After the initial exposure and experience, participants practice again with a more complex RCA case. Participants apply the learned skills from Parts 1 and 2 to entirely new information, and they gain experience conducting an RCA from start to finish. They are first given a simulated medical record (Supplemental Appendix D) that includes an adverse event, and learners construct an initial flow diagram of the event (Supplemental Appendix E). Please see Supplemental Appendix F for the RCA medical unit diagram. Based on this initial understanding of the case, learner groups develop a list of people from the case that they would want to interview and draft a list of questions for each interview. The instructors coach the learner RCA teams on how to conduct interviews during RCA. Participants then conduct interviews with faculty who play the roles of the various staff involved in the case. The role-play simulation is directed by an interview guide for each simulated role (Supplemental Appendix G). These guides inform the individuals simulating the roles about what information they know, and how they should behave during the interview. After completing the interviews, each group completes a final understanding process map (Supplemental Appendix H), constructs a cause and effect diagram (Supplemental Appendix I), and identifies actions and outcomes. This section of the course lasts for approximately 1 hour 45 minutes.

Debriefing (15 minutes)

The course ends with a 15-minute debriefing exercise. Learners have an opportunity to discuss any lingering unclear points. The focus of the debriefing is a discussion of planned involvement with future RCAs at their institution.
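For faculty adapting the agenda, the timed activities described above can be tallied with a short script. This is a planning sketch only: the `schedule` structure and activity labels are our shorthand, the minutes are the ones stated in the text, and breaks or transitions (which the text does not itemize) are not included.

```python
# Minutes per activity, taken from the course structure described in the text.
schedule = {
    "Part 1": [
        ("presentation", 10),
        ("role-play case", 5),
        ("initial flow diagram practice", 20),
        ("group discussion", 10),
    ],
    "Part 2": [
        ("interviews, cause and effect diagram, actions and outcomes", 35),
        ("group discussion", 10),
    ],
    "Part 3": [("complex RCA case", 105)],  # approximately 1 hour 45 minutes
    "Debriefing": [("debriefing", 15)],
}

# Sum the minutes within each part, then across the whole course.
part_totals = {
    part: sum(minutes for _, minutes in activities)
    for part, activities in schedule.items()
}
total_minutes = sum(part_totals.values())

for part, minutes in part_totals.items():
    print(f"{part}: {minutes} min")
print(f"Total timed activities: {total_minutes} min")
```

Editing the minutes in `schedule` gives a quick check of whether a shortened or extended session still fits the time available.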

Course evaluation

External evaluators administered a voluntary, non-incentivized, web-based survey to participants following RCA training in November 2016 (N = 114) using Qualtrics (Provo, UT, USA). The goal of this survey was to evaluate participants’ preparedness to conduct RCA and teach the key principles of RCA. Questions used a Likert-type scale (1 = not at all, 5 = extremely), and we calculated response frequencies as basic descriptive statistics.

Results

Participants

Of the 114 participants invited, 93 completed the survey, yielding an 82% response rate.

Preparedness to conduct and teach RCA

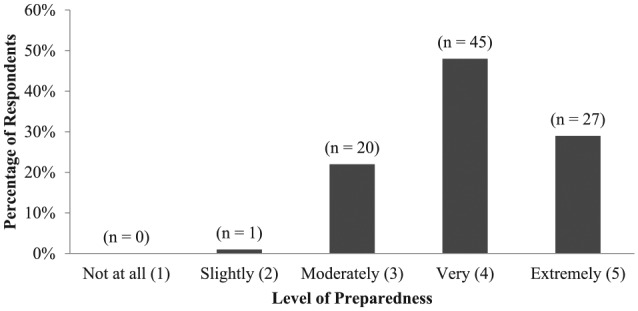

Nearly all respondents (99%, N = 92) reported feeling at least moderately prepared to conduct and teach RCA following training (Figure 2). The majority of respondents (77%, N = 72) reported feeling very or extremely prepared to conduct and teach RCA.

Figure 2.

Reported preparedness to conduct and teach root cause analysis among 93 alumni of the Chief Resident in Quality and Safety program. Chief Resident in Quality and Safety alumni are US-based clinicians from various medical specialties. The data presented are Likert-type scale (1 = not at all, 5 = extremely) and were collected through a voluntary, non-incentivized, web-based survey administered in November 2016.
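The reported percentages can be reproduced from the counts given in the text. The sketch below is a simple arithmetic check, not the authors' analysis code; the helper `percent` and the variable names are hypothetical, and only the counts stated in this report (114, 93, 92, 72) are used.

```python
def percent(count: int, total: int) -> int:
    """Whole-number percentage of count out of total, rounding half up."""
    return (count * 200 + total) // (2 * total)


invited = 114              # participants invited to the survey
respondents = 93           # completed surveys
at_least_moderately = 92   # at least moderately prepared
very_or_extremely = 72     # very or extremely prepared

response_rate = percent(respondents, invited)
pct_moderate_plus = percent(at_least_moderately, respondents)
pct_very_plus = percent(very_or_extremely, respondents)

print(f"Response rate: {response_rate}%")
print(f"At least moderately prepared: {pct_moderate_plus}%")
print(f"Very or extremely prepared: {pct_very_plus}%")
```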

Participant feedback

We report a few anecdotes from participants but did not receive enough open-ended responses to conduct any analyses.

“The content was very useful and practical, and designed to engage learners at all levels.”

“I enjoyed the interactive nature of the class, there was never an opportunity to check out. I felt each session was very hands-on, which helped me to remember major concepts and implement the concepts we were being taught. I also thought that the manner in which the information was presented gave me ideas for ways I could teach the residents at my program.”

“The hands-on, practical approach to the session in which we utilized real-world examples was useful and effective.”

“Some of the material was a little basic, and we could have benefited from more in-depth knowledge and discussion of the topic.”

Discussion

To achieve the goal of safer health care aligned with high-reliability principles, health care workers must gain an understanding of event investigation tools such as RCA. It is highly desirable to have personnel specifically trained to participate in RCA teams, and we provide an easy-to-implement, low-technology, simulation-based RCA curriculum to aid in this process. We tested our curriculum on clinicians of the national CRQS program and evaluated their perceived sense of competency with RCA following training. Overall, physician attendees reported feeling prepared to conduct and teach RCA after the training they received. The novelty of this work is that it provides the materials to simulate an RCA in a relatively brief time period (4-5 hours). Studies have demonstrated that to maximize learning, participants need to experience, reflect, and engage in active experimentation to make abstract concepts meaningful.12,21,24-27 Participants appeared to enjoy this hands-on, interactive, and practical aspect of the course. However, we suggest modifying the difficulty of the course content depending on participants’ experience with the topics to ensure they remain engaged. At the start of the course, we suggest asking participants whether they have participated in an RCA team or whether they have been asked to teach about RCA.

We acknowledge several limitations to this study. Our evaluation strategy was limited; ideally, we would evaluate participants’ performance on a real RCA team following training to obtain a better performance assessment. We are unable to draw any definitive conclusions about knowledge gained because we did not have an RCA testing scenario, and we did not assess baseline knowledge before participants took the course. Furthermore, clinicians’ experience with QI/PS, including RCA, may have differed prior to course involvement. This study may also be subject to sampling bias, because our participants were residents who took 1 year off to participate in the CRQS program and were eager to learn QI/PS methodology and tools. Therefore, it is unclear how this method would work for mid-career providers or medical students, for whom QI/PS is not typically a core aspect of training.

Experiential learning is not a new teaching modality, and this advanced training is commonly used for various educational purposes, including graduate medical education, clerkships, and residency.12,19,24,25,27-31 However, we believe that applying these methods, including the use of simulation, to teach content specifically related to quality and patient safety is novel. Students and clinicians master what they learn in the classroom by practicing with patient actors and real-life cases. Furthermore, this method is not specific to clinicians and may be applied to any discipline or interdisciplinary team. Watts et al26 report that experiential learning worked well when used for industrial engineers and related industries. Increasing emphasis has been placed on educating clinicians regarding ways to deliver safer, higher quality care, and some graduate medical programs are incorporating QI/PS into their curriculum.12,24,25,29,31

The Accreditation Council for Graduate Medical Education (ACGME) developed the Clinical Learning Environment Review (CLER) program to provide guidance for an optimal clinical learning environment.11,32 The goal of CLER is to promote experiential learning and enhance clinicians’ competency in QI/PS practices.11,32 The Clinical Learning Environment Review focuses on the idea that QI/PS work should be similar to clinical care training, where the expectation is that clinicians should be directly involved in the work to gain proficiency with such skills.11,32 As health care systems move toward meeting the goals of CLER, they should try to provide both basic and advanced education on tools for QI/PS, including RCA.11,32,33 We provide an example of how this may be achieved. In addition, Boussat et al33 developed a simple model for standardized RCA reporting that presents causes and corrective actions, and may be used in time-constrained circumstances. Overall, clinicians reported enjoying the course, and our hope is that it will enhance their capacity to meaningfully participate in RCA teams by the end of the training.

Conclusions

Clinicians are leaders in patient safety and integral to the success of health care improvement initiatives. However, to meaningfully participate in such practices, they need to be trained in QI/PS methodologies and tools, including RCA. We report success with RCA simulations and experiential learning to improve their competency in QI/PS practices. Further research is needed to understand how the training affects clinicians’ capacity to participate in actual RCA teams following training, as well as applicability to other disciplines and interdisciplinary teams.

Supplemental Material

The following supplemental files accompany Teaching Root Cause Analysis Using Simulation: Curriculum and Outcomes by Maya Aboumrad, Julia Neily and Bradley V Watts in Journal of Medical Education and Curricular Development:

- AppendixA_RCA_Powerpoint_xyz27040aa30c22c

- AppendixB_DocUDrama_Sticky_Eyeball_xyz27040c674734e

- AppendixC_RCA_Session_SampleAgenda_xyz27040c148b9c5

- AppendixD_RCA_Case_Medical_Chart_xyz27040b5af615f

- Appendix_E_Initial_Flow_Diagram_xyz27040ca1139cf

- AppendixF_RCA_Medical_Unit_Diagram__xyz270402ac53212

- AppendixG_RCA_Simulated_Interview_Key_xyz27040d8527b6c

- AppendixH_Final_Flow_Diagram_xyz27040b1edcd2d

- AppendixI_Cause_and_Effect_Diagram_Answer_Key_xyz270409a808698

Footnotes

Funding: The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This material is the result of work supported with resources and the use of facilities at the Department of Veterans Affairs National Center for Patient Safety at the Veterans Affairs Medical Centers, White River Junction, Vermont. The views expressed in this article do not necessarily represent the views of the Department of Veterans Affairs or of the US Government. This work was supported by the Department of Veterans Affairs, and as a government product, we do not hold the copyright.

Declaration of Conflicting Interests: The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Author Contributions: Each author was involved in the conceptual design or acquisition of data, data analysis or presentation of results and drafting, revision, or final review of the manuscript.

Presentation/Publication: This paper has not been previously presented or published in any format.

ORCID iD: Maya Aboumrad  https://orcid.org/0000-0001-6140-4250

Supplemental Material: Supplemental material for this article is available online.

References

- 1. Institute of Medicine Committee on Quality of Health Care in A. In: Kohn LT, Corrigan JM, Donaldson MS, eds. To Err Is Human: Building a Safer Health System. Washington, DC: National Academies Press; 2000;17-158.

- 2. Aboumrad M, Fuld A, Soncrant C, Neily J, Paull D, Watts BV. Root cause analysis of oncology adverse events in the Veterans Health Administration. J Oncol Pract. 2018;14:e579-e590.

- 3. Aboumrad M, Shiner B, Riblet N, Mills PD, Watts BV. Factors contributing to cancer-related suicide: a study of root-cause analysis reports. Psycho-Oncology. 2018;27:2237-2244.

- 4. Neily J, Mills PD, Paull DE, et al. Sharing lessons learned to prevent incorrect surgery. Am Surg. 2012;78:1276-1280.

- 5. Weingart SN, Price J, Duncombe D, et al. Patient-reported safety and quality of care in outpatient oncology. Jt Comm J Qual Patient Saf. 2007;33:83-94.

- 6. Makary MA, Daniel M. Medical error—the third leading cause of death in the US. BMJ. 2016;353:i2139.

- 7. Aboumatar HJ, Weaver SJ, Rees D, Rosen MA, Sawyer MD, Pronovost PJ. Towards high-reliability organising in healthcare: a strategy for building organisational capacity. BMJ Qual Saf. 2017;26:663-670.

- 8. Sutcliffe KM, Paine L, Pronovost PJ. Re-examining high reliability: actively organising for safety. BMJ Qual Saf. 2017;26:248-251.

- 9. Bagian JP, Gosbee J, Lee CZ, Williams L, McKnight SD, Mannos DM. The Veterans Affairs root cause analysis system in action. Jt Comm J Qual Improv. 2002;28:531-545.

- 10. Bagian JP, Lee C, Gosbee J, et al. Developing and deploying a patient safety program in a large health care delivery system: you can’t fix what you don’t know about. Jt Comm J Qual Improv. 2001;27:522-532.

- 11. Weiss KB, Bagian JP, Wagner R, Nasca TJ. Introducing the CLER pathways to excellence: a new way of viewing clinical learning environments. J Grad Med Educ. 2014;6:608-609.

- 12. Hall Barber K, Schultz K, Scott A, Pollock E, Kotecha J, Martin D. Teaching quality improvement in graduate medical education: an experiential and team-based approach to the acquisition of quality improvement competencies. Acad Med. 2015;90:1363-1367.

- 13. Wu AW, Lipshutz AK, Pronovost PJ. Effectiveness and efficiency of root cause analysis in medicine. JAMA. 2008;299:685-687.

- 14. Latino RJ. How is the effectiveness of root cause analysis measured in healthcare? J Healthc Risk Manag. 2015;35:21-30.

- 15. Charles R, Hood B, Derosier JM, et al. How to perform a root cause analysis for workup and future prevention of medical errors: a review. Patient Saf Surg. 2016;10:20.

- 16. Rooney JJ, Heuvel LNV. Root cause analysis for beginners. Qual Prog. 2004;37:45-56.

- 17. Kung JW, Brook OR, Eisenberg RL, Slanetz PJ. How-I-do-it: teaching root cause analysis. Acad Radiol. 2016;23:881-884.

- 18. Lambton J, Mahlmeister L. Conducting root cause analysis with nursing students: best practice in nursing education. J Nurs Educ. 2010;49:444-448.

- 19. Watts BV, McKinney K, Williams LC, Cully JA, Gilman SC, Brannen JL. Current training in quality and safety: the current landscape in the Department of Veterans Affairs. Am J Med Qual. 2016;31:382.

- 20. Watts BV, Paull DE, Williams LC, Neily J, Hemphill RR, Brannen JL. Department of Veterans Affairs Chief Resident in Quality and Patient Safety Program: a model to spread change. Am J Med Qual. 2016;31:598-600.

- 21. Kolb AY, Kolb DA. Learning styles and learning spaces: enhancing experiential learning in higher education. Acad Manag Learn Educ. 2005;4:193-212.

- 22. Stice JE. Using Kolb’s learning cycle to improve student learning. Eng Educ. 1987;77:291-296.

- 23. Department of Veterans Affairs National Center for Patient Safety. VHA National Patient Safety Improvement Handbook. Washington, DC: Veterans Health Administration; 2011.

- 24. Ogrinc G, Cohen ES, van Aalst R, et al. Clinical and educational outcomes of an integrated inpatient quality improvement curriculum for internal medicine residents. J Grad Med Educ. 2016;8:563-568.

- 25. Ogrinc G, Nierenberg DW, Batalden PB. Building experiential learning about quality improvement into a medical school curriculum: the Dartmouth experience. Health Aff (Millwood). 2011;30:716-722.

- 26. Watts BV, Shiner B, Cully JA, Gilman SC, Benneyan JC, Eisenhauer W. Health systems engineering fellowship: curriculum and program development. Am J Med Qual. 2015;30:161-166.

- 27. Yardley S, Teunissen PW, Dornan T. Experiential learning: transforming theory into practice. Med Teach. 2012;34:161-164.

- 28. Karasick AS, Nash DB. Training in quality and safety: the current landscape. Am J Med Qual. 2015;30:526-538.

- 29. Splaine ME, Aron DC, Dittus RS, et al. A curriculum for training quality scholars to improve the health and health care of veterans and the community at large. Qual Manag Health Care. 2002;10:10-18.

- 30. Splaine ME, Ogrinc G, Gilman SC, et al. The Department of Veterans Affairs National Quality Scholars Fellowship Program: experience from 10 years of training quality scholars. Acad Med. 2009;84:1741-1748.

- 31. Tartaglia KM, Walker C. Effectiveness of a quality improvement curriculum for medical students. Med Educ. 2015;20:27133.

- 32. Weiss KB, Bagian JP. Challenges and opportunities in the six focus areas: CLER National Report of Findings 2016. J Grad Med Educ. 2016;8:25-34.

- 33. Boussat B, Bougerol T, Detante O, Seigneurin A, Francois P. Experience Feedback Committee: a management tool to improve patient safety in mental health. Ann Gen Psychiatry. 2015;14:23.
