Abstract

Converging evidence now supports the idea that auditory cortex is an important step in the emergence of auditory percepts. Recent studies have extended the list of complex, nonlinear sound features coded by cortical neurons. Moreover, we are beginning to uncover general properties of cortical representations, such as invariance and discreteness, which reflect the structure of auditory perception. Complexity, however, does not emerge only through the nonlinear shaping of auditory information into perceptual building blocks. Behavioral context and task-related information also strongly influence the cortical encoding of sounds via ascending neuromodulation and descending top-down frontal control. These effects appear to be mediated through local inhibitory networks. Thus, auditory cortex can be seen as a hub linking structured sensory representations with behavioral variables.

Introduction

Auditory perception is an essential, complex process through which humans and other animals use pressure waves to interpret and interact with their environment. The cochlea first decomposes pressure waves into their frequency spectrum and transduces these signals into neural impulses [1]. This frequency-based (tonotopic) organization is preserved throughout the auditory system. At the level of perception, however, audition is not designed merely for precise frequency encoding [2]; rather, it organizes complex acoustic motifs into distinct auditory objects that reflect our experience of the acoustic environment. For example, a listener may struggle to identify the absolute pitch of a tone, yet most people accustomed to Western music will readily recognize whether it is played on a piano [3]. How does the auditory system turn the initial spectral decomposition of sounds into the timbre of instruments, the words of a phrase, or the siren of a police car? In vision science, Gestalt theories [4,5] argue that perception is based on a skeleton of complex representations that do not correspond to a ‘one-to-one copy’ of the input signals but rather to archetypal building blocks, innate or experience-dependent, that are used to construct perception [2,6]. This idea likely extends to audition [7–9]. A major challenge for understanding auditory perception is to experimentally isolate, and mechanistically explain, the elements of such complex auditory representations. Moreover, these sensory percepts are bound to depend on the current internal state and near-term goals of the subject as it explores or interacts with the environment. Increasing evidence now shows that, in auditory cortex, an elaborate vocabulary of auditory representations is accompanied by information about behavioral context. The purpose of this review is to highlight recent discoveries about these two levels of complexity in auditory cortex and to discuss their functional roles and potential interactions.

Structure of auditory cortex representations and their link to perception

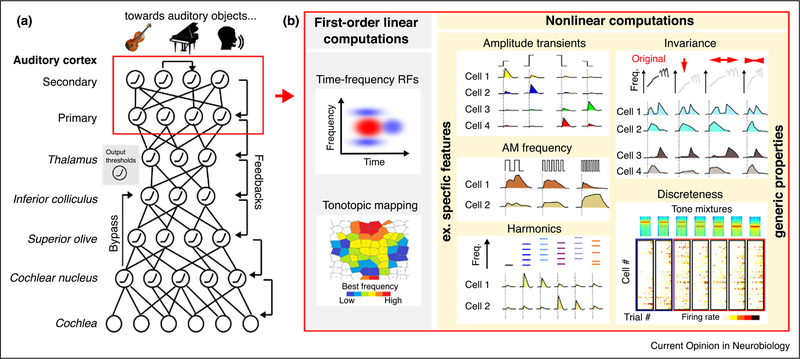

Whether auditory cortex represents simple acoustic features or building blocks for ‘auditory objects’ is a longstanding question [7,10–13]. The existence of frequency-tuned neurons and their tonotopic mapping in primary auditory subfields [14,15] suggests a degree of similarity to cochlear representations. Yet the similarity may end there. The precision of the tonotopic map weakens in secondary areas [15]. Moreover, frequency tuning is, in general, broader in auditory cortex than in subcortical areas [16,17], and response time constants appear to be longer [7], so that fine temporal details present in the input are lost or converted into rate codes [18•]. This suggests that cortex integrates over a wider range of information than subcortical structures. Most importantly, multiple studies have shown that the selectivity of auditory cortex neurons extends well beyond pure-tone frequency coding [13,19–22]. Recently, two-photon calcium imaging and electrophysiology in rodents have demonstrated that loosely mapped ensembles of cortical neurons in primary and secondary auditory cortex code for the direction of frequency variations [23], interaural differences [24•], as well as the frequency [25], amplitude and direction [26••] of intensity variations (Figure 1). These temporal features are crucial for recognizing particular classes of sounds. For example, recognition of musical instruments depends strongly on the steepness of the rise and decay of tone intensity [3], a feature that is coded in primary auditory cortex, even in mice [26••]. Frequency modulations are important components of vocalizations in most species [27–29]. For highly vocal animals, as in human language, the structure of frequency harmonics provides important cues [12]. Interestingly, a recent study showed that the core auditory cortex of primates contains cells that specifically detect patterns of frequency harmonics [30••] (Figure 1).

Figure 1.

Structuring of auditory information via nonlinear computations in the auditory cortex. (a) Throughout the auditory system, raw cochlear inputs are structured into biologically relevant percepts (auditory objects). This transformation requires a complex ensemble of nonlinear computations, which we schematize here as a multilayer network linking simple nonlinearities (e.g. spike threshold) with an elaborate connection graph that includes feedforward, feedback and lateral connections. Interestingly, appropriately trained multilayer networks (deep learning) have recently been shown to boost performance on artificial perceptual tasks such as speech recognition. (b) Beyond simple computations such as spatially organized preferences for particular frequency ranges (left), the auditory cortex performs a number of nonlinear computations. These lead to the emergence of neurons sensitive to specific features, as sketched in the middle column, including sound onsets and offsets of particular amplitude [26••], amplitude modulation (AM) frequencies [25], or even harmonicity (at least in primates) [30••]. In addition, generic response properties, such as invariance to modifications of basic acoustic parameters (top right) [31] and discrete, coordinated switches in population responses (bottom right; data from [33]), indicate that these nonlinear computations endow cortical representations with some of the properties expected of auditory object representations.

The presence of complex features in auditory cortex, however, does not prove that it codes for auditory objects. To address this issue, two major and somewhat orthogonal properties of object-like representations must be observed: invariance and discreteness. Invariance refers to the stability of representations with respect to small changes in acoustic parameters. Discreteness refers to the categorical and rapid switching of representations between objects as separate entities. Two recent studies in rats have shown that auditory cortex neurons respond to vocalizations or water sounds with a certain degree of robustness against various acoustic modifications [31,32]. Interestingly, invariance for vocalizations was tested in both primary and non-primary auditory cortex and was found to be more pronounced in non-primary areas [31] (Figure 1), suggesting that invariance progressively emerges along the cortical hierarchy, in parallel with the weakening of the tonotopic map [15]. As for discreteness, a two-photon calcium imaging study has shown that local ensembles of neurons in mouse auditory cortex respond in a step-wise manner to gradual changes in sound mixtures [33] (Figure 1). These object-like cortical representations predicted how mice categorized diverse sounds during a behavioral task [33]. Thus, many of the ingredients necessary to build object-like or categorical representations are present in auditory cortex, and there is evidence that cortical representations are close to perceptual space.
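
Both criteria can be turned into simple population measures. The Python sketch below is one possible operationalization, offered under stated assumptions rather than as the analysis used in [31–33]: population responses are assumed to be available as vectors (one value per neuron), `invariance_index` and `max_step_fraction` are hypothetical helper names, and the data are synthetic. Invariance is scored as how much more a modified sound's pattern correlates with its own original than with a different sound; discreteness is scored as how much of the total change along a morph continuum happens in a single step.

```python
import numpy as np


def pattern_correlation(x, y):
    """Pearson correlation between two population response vectors."""
    return float(np.corrcoef(x, y)[0, 1])


def invariance_index(responses):
    """Mean within-sound minus mean between-sound pattern correlation (hypothetical metric).

    responses maps a sound name to an array of shape (n_variants, n_neurons):
    row 0 is the unmodified sound, the remaining rows are acoustically
    modified versions of it (an assumed data layout).
    """
    within, between = [], []
    for name, variants in responses.items():
        reference = variants[0]
        for other_name, other_variants in responses.items():
            for variant in other_variants[1:]:
                r = pattern_correlation(reference, variant)
                # Same sound: tests robustness; different sound: baseline.
                (within if other_name == name else between).append(r)
    return float(np.mean(within) - np.mean(between))


def max_step_fraction(similarity_to_a):
    """Largest single step along a morph continuum, as a fraction of the total change.

    For a monotonic continuum this equals 1.0 for an abrupt all-or-none
    switch and 1 / (n_steps - 1) for a perfectly linear transition.
    """
    s = np.asarray(similarity_to_a, dtype=float)
    total = abs(s[-1] - s[0])
    return float(np.max(np.abs(np.diff(s))) / total)


# Synthetic toy data: two random "population patterns" plus noisy variants.
rng = np.random.default_rng(0)
bases = {"sound_a": rng.normal(size=100), "sound_b": rng.normal(size=100)}


def simulate(noise_sd, n_variants=5):
    """Stack each base pattern with n_variants noisy copies of it."""
    return {
        name: np.stack([base] + [base + rng.normal(scale=noise_sd, size=base.size)
                                 for _ in range(n_variants)])
        for name, base in bases.items()
    }


robust = invariance_index(simulate(noise_sd=0.1))   # close to 1
fragile = invariance_index(simulate(noise_sd=3.0))  # much lower
gradual = max_step_fraction(np.linspace(1.0, 0.0, 11))  # approximately 0.1
abrupt = max_step_fraction([1.0] * 5 + [0.0] * 6)       # 1.0
```

With this layout, an invariant code scores close to 1 and a fragile one scores lower, while a step-wise switch yields a step fraction near 1 and a gradual morph yields roughly 1/(n_steps - 1).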

Are object-like representations hard-wired into the cortex, or are they experience-dependent, dynamically updated according to the ecological needs of a particular animal? In rodents, for example, ultrasonic pup vocalizations elicit robust responses even in low-frequency areas [34], suggesting the possibility of an object-like representation. Future studies will need to address this along the two axes described above, invariance and discreteness, and determine whether these representations are innate or experience-dependent. Moreover, a comparative approach across the mammalian phylogenetic tree might yield further insights into the types of objects encoded by the auditory cortex, including speech in humans.

Computations and circuits underlying auditory cortex representations

Computational models of sound encoding must account for the richness, diversity and complexity of auditory cortex representations. One influential model, the linear spectro-temporal receptive field (STRF) [35,36], attempts to explain the activity of auditory cortex neurons as a weighted sum of the sound's spectral content over multiple preceding time steps (i.e. each neuron's computation is approximated by a linear filter). Combined with a simple threshold nonlinearity, this model can capture many frequency selectivity characteristics, including selectivity to the direction of frequency variations [37], and can even be used, with significant success, to reconstruct the spectrograms of played sounds from cortical activity [38]. But while STRFs successfully account for the structure of the raw input space, they struggle to capture many of the transformations of acoustic inputs that are required to structure auditory information into percepts. In general, STRFs predict only a fraction of cortical neurons' responses [39,40]. Their linearity assumption prevents them from capturing selectivity to amplitude modulations [26••,41], as well as the invariance [31,32] and discreteness [33] of cortical representations. A generic solution to these limitations might be to consider that the auditory system implements several layers of linear (STRF-like) computations interleaved with nonlinearities (Figure 1) [26••,42], as in artificial deep networks [43]. The primate visual system shows functional analogies with deep networks trained to recognize visual objects [44], and ongoing work is beginning to address this question in the auditory system [45]. Thus, deep networks might represent new tools, complementary to biologically constrained modeling [46], to identify the actual features encoded by auditory cortex neurons, particularly where linear filter methods and their weakly nonlinear extensions [42,47,48] fail.
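
A minimal NumPy sketch of the STRF-plus-threshold (linear-nonlinear, LN) model described above follows. It is an illustration under assumptions, not the estimation procedure of [35,36]: the three-channel STRF is hand-built and hypothetical rather than fitted to recorded responses, and the nonlinearity is a plain rectifier with an arbitrary bias. It shows how a single linear filter followed by a threshold can already produce selectivity to the direction of a frequency sweep.

```python
import numpy as np


def ln_strf_response(spectrogram, strf, bias=0.0):
    """Linear-nonlinear (LN) model: STRF filtering followed by rectification.

    spectrogram: array (n_freq, n_time) of sound energy per frequency channel.
    strf: array (n_freq, n_lags); strf[f, k] weights channel f at k time
          steps in the past (k = 0 is the current step).
    bias: offset added before the threshold nonlinearity.
    Returns the predicted firing rate, an array of length n_time.
    """
    n_time = spectrogram.shape[1]
    n_lags = strf.shape[1]
    drive = np.full(n_time, bias, dtype=float)
    for t in range(n_time):
        for lag in range(min(n_lags, t + 1)):
            # Linear stage: weighted sum over frequencies at time t - lag.
            drive[t] += strf[:, lag] @ spectrogram[:, t - lag]
    # Static nonlinearity: half-wave rectification (a simple spike threshold).
    return np.maximum(drive, 0.0)


# Hypothetical STRF tuned to upward sweeps: low channel 2 steps ago,
# middle channel 1 step ago, high channel now.
strf = np.zeros((3, 3))
strf[0, 2] = strf[1, 1] = strf[2, 0] = 1.0

up = np.eye(3, 4)     # channel f active at time f: upward sweep
down = np.flipud(up)  # channel 2 - f active at time f: downward sweep

rate_up = ln_strf_response(up, strf, bias=-1.5)      # [0, 0, 1.5, 0]
rate_down = ln_strf_response(down, strf, bias=-1.5)  # all zeros
```

Because the linear stage simply sums weighted inputs, a single LN stage of this kind offers no obvious route to the invariance or step-wise switching discussed above; stacking several such stages, as in the multilayer schemes mentioned in the text, is one way to add the missing nonlinear structure.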

A major goal will be to link the emerging computational models, whether identified by deep networks or by other approaches, to the underlying neural implementation and circuit mechanisms. A promising avenue is to combine observation of auditory cortical representations with optogenetic manipulation of local and long-range circuit elements. Recent work demonstrates that interneurons influence the representation of frequency in auditory cortex [49,50]. Such studies are still in their infancy, and the tools for more precise control of neural circuitry are only now emerging. Going forward, robust circuit dissection will require more complex sensory stimuli, cell-type specific genetic strategies [51], patterned and activity-dependent optogenetics [52–54], and large-scale network modeling. This largely remains to be done, not only for interneuronal circuits but also for other important circuit elements such as connections between cortical layers and interareal feedback projections.

Modulations by behavior and context

Another current limitation of the essentially ‘sensory-centric’ framework of existing encoding models is that it does not account for the context in which auditory perception occurs. Context, however, is an essential parameter of auditory cortex computations. In the 1950s, David Hubel and colleagues demonstrated that the auditory cortex responds to non-sensory features when they found putative excitatory neurons that fired only when a cat would ‘pay attention’ [55]. They remarked, however, that ‘attention is an elusive variable that no one has yet been able to quantify’ [55]. This simple statement continues to resonate nearly sixty years later. Yet, beginning with pioneering studies in the ferret [56], several experiments have chipped away at this notion and identified several emerging cortical computations related to behavioral context.

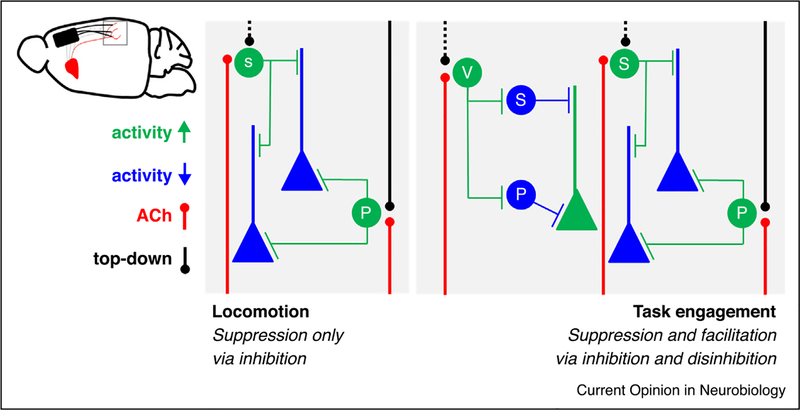

First, during locomotion, a context in which sounds have no apparent valence, multiple circuits work in concert to robustly suppress auditory cortical output [57•,58,59•]. Top-down projections from the motor cortex feed back into the auditory cortex, activate local inhibitory circuits, and suppress excitatory output [57•,58]. In parallel, ascending cholinergic projections from the basal forebrain innervate multiple types of inhibitory neurons to suppress excitatory output [60]. These signals likely supplement a reduction of the feedforward thalamic drive that is also induced by locomotion [60]. This shows that even a simple neural computation, such as blanket suppression when auditory information is less important to the animal, can be accomplished by the coordinated actions of distributed brain regions projecting to the auditory cortex.

Second, in contrast to these suppressive effects, cognitively demanding tasks appear to both suppress and facilitate auditory cortical responses [61••,62,63•,64]. Anticipatory top-down inputs from the prefrontal cortex prepare the auditory cortex to receive incoming sensory information according to behavioral conditions [63•]. Moreover, rats given the opportunity to voluntarily initiate a trial exhibit similar anticipatory patterns of activity in the auditory cortex [62], and a reduction of baseline activity upon trial initiation is also seen in gerbils [65]. These signals likely augment ascending cholinergic neuromodulation, which alters the output of the auditory cortex during engagement in a cognitively demanding task [61••]. Interestingly, cholinergic modulation simultaneously suppresses some excitatory neurons (via direct inhibition through parvalbumin-positive (PV+) and somatostatin-positive (SST+) interneurons) and facilitates others (via disinhibition through VIP+ interneurons) (Figure 2). Thus, inhibitory networks in auditory cortex not only enable stimulus-related sensory computations [50] but also mediate the complex action of multiple long-range modulatory inputs [61••].
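
The logic of simultaneous suppression and facilitation by a single modulatory input can be illustrated with a deliberately minimal rate model. Everything in it is an assumption for illustration: the motif (acetylcholine (ACh) driving PV+ and VIP+ cells, VIP+ cells inhibiting SST+ cells, two pyramidal populations with different dominant sources of inhibition), the weights, the threshold-linear transfer function and the absence of recurrence. `toy_circuit` is a hypothetical name, and the sketch does not reproduce the co-activation of all three interneuron classes sketched in Figure 2; it only shows how direct inhibition and disinhibition can push two excitatory populations in opposite directions.

```python
def relu(x):
    """Threshold-linear transfer function for firing rates."""
    return max(x, 0.0)


def toy_circuit(ach, sound_drive=1.0):
    """Rates of a hypothetical feedforward interneuron motif.

    ach: strength of cholinergic drive (0 = none).
    All weights below are invented for illustration; there is no recurrence.
    """
    pv = relu(0.2 + 0.8 * ach)   # ACh drives PV+ cells directly
    vip = relu(0.1 + 1.0 * ach)  # ACh drives VIP+ cells directly
    sst = relu(0.6 - 0.8 * vip)  # VIP+ cells inhibit SST+ cells
    # Pyramidal population A is inhibited mostly by PV+ cells.
    pyr_a = relu(sound_drive - 1.0 * pv - 0.2 * sst)
    # Pyramidal population B is inhibited mostly by SST+ cells.
    pyr_b = relu(sound_drive - 0.1 * pv - 1.0 * sst)
    return {"pv": pv, "vip": vip, "sst": sst, "pyr_a": pyr_a, "pyr_b": pyr_b}


passive = toy_circuit(ach=0.0)  # pyr_a ~0.70, pyr_b ~0.46
engaged = toy_circuit(ach=1.0)  # pyr_a 0.0 (suppressed), pyr_b ~0.9 (facilitated)
```

Raising the cholinergic drive from 0 to 1 silences population A, whose PV-dominated inhibition grows, while population B roughly doubles its rate because VIP+ cells remove its SST-dominated inhibition. Recurrent dynamics and measured connectivity would be needed before drawing any quantitative conclusion.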

Figure 2.

Two computations that govern behavioral context in auditory cortex. During locomotion (left), evidence suggests that top-down inputs from the motor cortex (black solid line) drive the activity of PV+ interneurons, which suppress the activity of pyramidal neurons. These same inputs may also activate somatostatin (SST+) neurons (black dashed line, hypothetical). At the same time, ascending cholinergic neuromodulation (red lines) acts on the same cell types, but potentially on non-overlapping neurons. During task engagement (right), evidence suggests that all three major interneuron subtypes are co-activated by ascending cholinergic neuromodulation (red lines). Top-down inputs from the frontal cortex are known to modulate PV+ interneurons (black solid line) and may also act on other interneuron subtypes (black dashed line, hypothetical).

These data suggest that not all contexts are created equal; rather, the auditory cortex integrates the specific features of the surrounding context. These effects may be distinct from, and complementary to, long-term neural plasticity, including changes in receptive fields and tonotopic maps, which has been observed in associative and perceptual learning [66–69]. Why and how does the auditory cortex treat locomotion differently from cognitively demanding tasks? The difference between these two contexts likely reflects the importance of experience-dependent changes in circuitry. One possibility is that locomotion-related suppression reflects hard-wired feedforward, top-down, and ascending projections onto inhibitory neurons that directly suppress excitatory output. Since sounds hold little to no value in this context, suppression dominates as the critical computation in auditory cortex. Other contexts, in contrast, may require the processing of behaviorally relevant sounds. In such tasks, the facilitation of a subnetwork of excitatory neurons may be crucial for faithful execution of the task.

Broad suppression and selective facilitation may therefore be a prudent coding scheme in these cases, minimizing metabolically expensive spikes [70] while maximizing information transfer. Population analysis further suggests that choice-related information is transiently present in the auditory cortex, such that rate increases may be predictive of behavioral choice [64]. Moreover, these effects extend to more ethological contexts such as motherhood. Oxytocin appears to act on the auditory cortex through disinhibitory mechanisms, increasing its sensitivity to pup ultrasonic distress calls [71,72], likely via multiple interneuron subtypes [73]. Taken together, these findings show that the auditory cortex exhibits remarkable context-dependent responses, enabling simple computations such as blanket suppression during locomotion and more complex computations such as bidirectional modulation during cognitively demanding tasks. In both cases, however, neurons in the auditory cortex must negotiate a barrage of inputs, including feedforward sensory drive, ascending neuromodulation and descending frontal control. These signals likely converge in the auditory cortex to prime downstream circuits for action.

Conclusions/perspectives

There is now broad evidence that auditory cortex combines high-level representations of sounds with different types of behavioral modulation. Future work will need to systematically investigate the related algorithms, computations and neural circuit implementations. First, at the algorithmic level, some questions remain unexplored. For example, pioneering work has shown that sound-guided navigation leads to plasticity of sound amplitude representations [74]. However, it remains unclear which operations allow the brain to guide navigation with sounds, and whether this involves the processing of contextual inputs by auditory cortex. Second, at the computational level, what principles allow auditory objects to gain behavioral relevance? One possibility is that hard-wired computations in auditory cortex foster the detection of behaviorally relevant stimuli. These could concern conspecific communication (e.g. mother-pup interactions in rodents, vocal interactions in primates) and/or vocalization-specific features (e.g. frequency modulations, harmonics), which could thereby be boosted relative to other sensory features. Similarly, sounds that gain behavioral relevance through experience may be detected by similar but learned computations that operate on top of the blanket suppression induced by a non-specific active state (i.e. locomotion), enhancing them as particular auditory objects. Third, at the circuit level, what local and long-range connections enable the interplay between sensory and behavioral complexity? Addressing these questions will require probing the activity of precisely identified functional cell types or neural ensembles during parametrically controlled behavioral contexts, using more complex auditory objects and representations.
Emerging technologies including large-scale optical and electrophysiological recordings, cell-type specific targeting, and longitudinal observation of the same neurons promise to aid in this endeavor. Thus, exciting times lie ahead, in which we may soon get a handle on the sensory and behavioral complexity of audition.

Acknowledgements

B.B. acknowledges support from the Agence Nationale de la Recherche (ANR ‘SENSEMAKER’), the Marie Curie FP7 Program (CIG 334581), the Human Brain Project (SP3, WP5.2), the Fyssen Foundation, the DIM ‘Region Ile de France’, the International Human Frontier Science Program Organization (CDA-0064-2015), the Fondation pour l’Audition (Laboratory grant) and Paris-Saclay University (Lidex NeuroSaclay, IRS iCode and IRS Brainscopes). We thank Y. Frégnac for comments on the manuscript and Srdjan Ostojic for hosting K.V.K. at the École Normale Supérieure as a visitor, funded through an ANR TERC grant to S.O. and the NIDCD (DC05014).

Footnotes

Conflict of interest statement

Nothing declared.

References and recommended reading

Papers of particular interest, published within the period of review, have been highlighted as:

• of special interest

•• of outstanding interest

- 1. Hudspeth AJ: Integrating the active process of hair cells with cochlear function. Nat Rev Neurosci 2014, 15:600–614.

- 2. Frégnac Y, Bathellier B: Cortical correlates of low-level perception: from neural circuits to percepts. Neuron 2015, 88:110–126.

- 3. Cutting JE, Rosner BS: Categories and boundaries in speech and music. Percept Psychophys 1974, 16:564–570.

- 4. Wagemans J, Elder JH, Kubovy M, Palmer SE, Peterson MA, Singh M, von der Heydt R: A century of Gestalt psychology in visual perception: I. Perceptual grouping and figure-ground organization. Psychol Bull 2012, 138:1172–1217.

- 5. Wagemans J, Feldman J, Gepshtein S, Kimchi R, Pomerantz JR, van der Helm PA, van Leeuwen C: A century of Gestalt psychology in visual perception: II. Conceptual and theoretical foundations. Psychol Bull 2012, 138:1218–1252.

- 6. Gregory RL: Knowledge in perception and illusion. Philos Trans R Soc Lond B Biol Sci 1997, 352:1121–1127.

- 7. Nelken I, Fishbach A, Las L, Ulanovsky N, Farkas D: Primary auditory cortex of cats: feature detection or something else? Biol Cybern 2003, 89:397–406.

- 8. Petkov CI, O’Connor KN, Sutter ML: Illusory sound perception in macaque monkeys. J Neurosci 2003, 23:9155–9161.

- 9. Bregman AS: Auditory Scene Analysis: The Perceptual Organization of Sound. Cambridge, MA: MIT Press; 1990.

- 10. Mizrahi A, Shalev A, Nelken I: Single neuron and population coding of natural sounds in auditory cortex. Curr Opin Neurobiol 2014, 24:103–110.

- 11. Nelken I, Bizley J, Shamma SA, Wang X: Auditory cortical processing in real-world listening: the auditory system going real. J Neurosci 2014, 34:15135–15138.

- 12. Wang X: The harmonic organization of auditory cortex. Front Syst Neurosci 2013, 7:114.

- 13. Chechik G, Nelken I: Auditory abstraction from spectrotemporal features to coding auditory entities. Proc Natl Acad Sci U S A 2012, 109:18968–18973.

- 14. Kanold PO, Nelken I, Polley DB: Local versus global scales of organization in auditory cortex. Trends Neurosci 2014, 37:502–510.

- 15. Issa JB, Haeffele BD, Agarwal A, Bergles DE, Young ED, Yue DT: Multiscale optical Ca2+ imaging of tonal organization in mouse auditory cortex. Neuron 2014, 83:944–959.

- 16. Barnstedt O, Keating P, Weissenberger Y, King AJ, Dahmen JC: Functional microarchitecture of the mouse dorsal inferior colliculus revealed through in vivo two-photon calcium imaging. J Neurosci 2015, 35:10927–10939.

- 17. Rauschecker JP: Auditory and visual cortex of primates: a comparison of two sensory systems. Eur J Neurosci 2015, 41:579–585.

- 18. • Guo W, Hight AE, Chen JX, Klapoetke NC, Hancock KE, Shinn-Cunningham BG, Boyden ES, Lee DJ, Polley DB: Hearing the light: neural and perceptual encoding of optogenetic stimulation in the central auditory pathway. Sci Rep 2015, 5:10319. Using temporally patterned subcortical optogenetic stimulation, this paper shows that much subcortical temporal information is converted into firing rates in cortex.

- 19. Nelken I: Processing of complex stimuli and natural scenes in the auditory cortex. Curr Opin Neurobiol 2004, 14:474–480.

- 20. Nelken I, Rotman Y, Bar Yosef O: Responses of auditory-cortex neurons to structural features of natural sounds. Nature 1999, 397:154–157.

- 21. deCharms RC, Blake DT, Merzenich MM: Optimizing sound features for cortical neurons. Science 1998, 280:1439–1443.

- 22. Chambers AR, Hancock KE, Sen K, Polley DB: Online stimulus optimization rapidly reveals multidimensional selectivity in auditory cortical neurons. J Neurosci 2014, 34:8963–8975.

- 23. Issa JB, Haeffele BD, Young ED, Yue DT: Multiscale mapping of frequency sweep rate in mouse auditory cortex. Hear Res 2017, 344:207–222.

- 24. • Panniello M, King AJ, Dahmen JC, Walker KMM: Local and global spatial organization of interaural level difference and frequency preferences in auditory cortex. Cereb Cortex 2018, 28:350–369. The first two-photon calcium imaging study of interaural level difference coding in mouse auditory cortex, showing a lack of spatial organization.

- 25. Gao X, Wehr M: A coding transformation for temporally structured sounds within auditory cortical neurons. Neuron 2015, 86:292–303.

- 26. •• Deneux T, Kempf A, Daret A, Ponsot E, Bathellier B: Temporal asymmetries in auditory coding and perception reflect multilayered nonlinearities. Nat Commun 2016, 7:12682. This paper shows that sound amplitude modulations are coded by distinct cortical neurons selective for their magnitude, speed and direction.

- 27. Shepard KN, Lin FG, Zhao CL, Chong KK, Liu RC: Behavioral relevance helps untangle natural vocal categories in a specific subset of core auditory cortical pyramidal neurons. J Neurosci 2015, 35:2636–2645.

- 28. Perks KE, Gentner TQ: Subthreshold membrane responses underlying sparse spiking to natural vocal signals in auditory cortex. Eur J Neurosci 2015, 41:725–733.

- 29. Miller CT, Freiwald WA, Leopold DA, Mitchell JF, Silva AC, Wang X: Marmosets: a neuroscientific model of human social behavior. Neuron 2016, 90:219–233.

- 30. •• Feng L, Wang X: Harmonic template neurons in primate auditory cortex underlying complex sound processing. Proc Natl Acad Sci U S A 2017, 114:E840–E848. This paper demonstrates the existence of neurons specifically sensitive to harmonic relationships in primate auditory cortex.

- 31.Carruthers IM, Laplagne DA, Jaegle A, Briguglio JJ, Mwilambwe-Tshilobo L, Natan RG, Geffen MN: Emergence of invariant representation of vocalizations in the auditory cortex. J Neurophysiol 2015, 114:2726–2740. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Blackwell JM, Taillefumier TO, Natan RG, Carruthers IM, Magnasco MO, Geffen MN: Stable encoding of sounds over a broad range of statistical parameters in the auditory cortex. Eur J Neurosci 2016, 43:751–764. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Bathellier B, Ushakova L, Rumpel S: Discrete neocortical dynamics predict behavioral categorization of sounds. Neuron 2012, 76:435–449. [DOI] [PubMed] [Google Scholar]

- 34.Shepard KN, Chong KK, Liu RC: Contrast enhancement without transient map expansion for species-specific vocalizations in core auditory cortex during learning. eNeuro 2016:3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Aertsen AM, Johannesma PI: The spectro-temporal receptive field. A functional characteristic of auditory neurons. Biol Cybern 1981, 42:133–143. [DOI] [PubMed] [Google Scholar]

- 36.Depireux DA, Simon JZ, Klein DJ, Shamma SA: Spectro-temporal response field characterization with dynamic ripples in ferret primary auditory cortex. J Neurophysiol 2001, 85:1220–1234. [DOI] [PubMed] [Google Scholar]

- 37.Patil K, Pressnitzer D, Shamma S, Elhilali M: Music in our ears: the biological bases of musical timbre perception. PLoS Comput Biol 2012, 8:e1002759. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Pasley BN, David SV, Mesgarani N, Flinker A, Shamma SA, Crone NE, Knight RT, Chang EF: Reconstructing speech from human auditory cortex. PLoS Biol 2012, 10:e1001251. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Machens CK, Wehr MS, Zador AM: Linearity of cortical receptive fields measured with natural sounds. J Neurosci 2004, 24:1089–1100. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Christianson GB, Sahani M, Linden JF: The consequences of response nonlinearities for interpretation of spectrotemporal receptive fields. J Neurosci 2008, 28:446–455. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.David SV, Mesgarani N, Fritz JB, Shamma SA: Rapid synaptic depression explains nonlinear modulation of spectro-temporal tuning in primary auditory cortex by natural stimuli. J Neurosci 2009, 29:3374–3386. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.McFarland JM, Cui Y, Butts DA: Inferring nonlinear neuronal computation based on physiologically plausible inputs. PLoS Comput Biol 2013, 9:e1003143. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.LeCun Y, Bengio Y, Hinton G: Deep learning. Nature 2015, 521:436–444. [DOI] [PubMed] [Google Scholar]

- 44.Cadieu CF, Hong H, Yamins DL, Pinto N, Ardila D, Solomon EA, Majaj NJ, DiCarlo JJ: Deep neural networks rival the representation of primate IT cortex for core visual object recognition. PLoS Comput Biol 2014, 10:e1003963. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Mlynarski W, McDermott JH: Learning midlevel auditory codes from natural sound statistics. Neural Comput 2017:1–39. [DOI] [PubMed] [Google Scholar]

- 46.Ahmad N, Higgins I, Walker KM, Stringer SM: Harmonic training and the formation of pitch representation in a neural network model of the auditory brain. Front Comput Neurosci 2016, 10:24. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Atencio CA, Sharpee TO, Schreiner CE: Cooperative nonlinearities in auditory cortical neurons. Neuron 2008, 58:956–966. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Schinkel-Bielefeld N, David SV, Shamma SA, Butts DA: Inferring the role of inhibition in auditory processing of complex natural stimuli. J Neurophysiol 2012, 107:3296–3307. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Kato HK, Asinof SK, Isaacson JS: Network-level control of frequency tuning in auditory cortex. Neuron 2017, 95:412–423 e414. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Aizenberg M, Mwilambwe-Tshilobo L, Briguglio JJ, Natan RG, Geffen MN: Bidirectional regulation of innate and learned behaviors that rely on frequency discrimination by cortical inhibitory neurons. PLoS Biol 2015, 13:e1002308. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Guo W, Clause AR, Barth-Maron A, Polley DB: A corticothalamic circuit for dynamic switching between feature detection and discrimination. Neuron 2017, 95:180–194 e185. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52. Zhu P, Fajardo O, Shum J, Zhang Scharer YP, Friedrich RW: High-resolution optical control of spatiotemporal neuronal activity patterns in zebrafish using a digital micromirror device. Nat Protoc 2012, 7:1410–1425.

- 53. Szabo V, Ventalon C, De Sars V, Bradley J, Emiliani V: Spatially selective holographic photoactivation and functional fluorescence imaging in freely behaving mice with a fiberscope. Neuron 2014, 84:1157–1169.

- 54. Packer AM, Russell LE, Dalgleish HW, Hausser M: Simultaneous all-optical manipulation and recording of neural circuit activity with cellular resolution in vivo. Nat Methods 2015, 12:140–146.

- 55. Hubel DH, Henson CO, Rupert A, Galambos R: Attention units in the auditory cortex. Science 1959, 129:1279–1280.

- 56. Fritz J, Shamma S, Elhilali M, Klein D: Rapid task-related plasticity of spectrotemporal receptive fields in primary auditory cortex. Nat Neurosci 2003, 6:1216–1223.

- 57. • Schneider DM, Nelson A, Mooney R: A synaptic and circuit basis for corollary discharge in the auditory cortex. Nature 2014, 513:189–194. This study shows how auditory cortex is suppressed by movement via top-down feedback from M2 acting through local PV+ interneurons.

- 58. Zhou M, Liang F, Xiong XR, Li L, Li H, Xiao Z, Tao HW, Zhang LI: Scaling down of balanced excitation and inhibition by active behavioral states in auditory cortex. Nat Neurosci 2014, 17:841–850.

- 59. • Williamson RS, Hancock KE, Shinn-Cunningham BG, Polley DB: Locomotion and task demands differentially modulate thalamic audiovisual processing during active search. Curr Biol 2015, 25:1885–1891. This study provides evidence that feedforward drive from the auditory thalamus is suppressed during locomotion.

- 60. Nelson A, Mooney R: The basal forebrain and motor cortex provide convergent yet distinct movement-related inputs to the auditory cortex. Neuron 2016, 90:635–648.

- 61. • Kuchibhotla KV, Gill JV, Lindsay GW, Papadoyannis ES, Field RE, Sten TA, Miller KD, Froemke RC: Parallel processing by cortical inhibition enables context-dependent behavior. Nat Neurosci 2017, 20:62–71. This study describes how auditory cortex is bidirectionally modulated by a cognitively demanding task via ascending cholinergic neuromodulation that co-activates multiple interneuron types in parallel.

- 62. Carcea I, Insanally MN, Froemke RC: Dynamics of auditory cortical activity during behavioural engagement and auditory perception. Nat Commun 2017, 8:14412.

- 63. • Rodgers CC, DeWeese MR: Neural correlates of task switching in prefrontal cortex and primary auditory cortex in a novel stimulus selection task for rodents. Neuron 2014, 82:1157–1170. This study provides evidence that top-down control from the medial prefrontal cortex in rats may modulate the pre-stimulus activity of auditory cortical neurons.

- 64. Runyan CA, Piasini E, Panzeri S, Harvey CD: Distinct timescales of population coding across cortex. Nature 2017, 548:92–96.

- 65. Buran BN, von Trapp G, Sanes DH: Behaviorally gated reduction of spontaneous discharge can improve detection thresholds in auditory cortex. J Neurosci 2014, 34:4076–4081.

- 66. Bieszczad KM, Weinberger NM: Remodeling the cortex in memory: increased use of a learning strategy increases the representational area of relevant acoustic cues. Neurobiol Learn Mem 2010, 94:127–144.

- 67. Diamond DM, Weinberger NM: Physiological plasticity of single neurons in auditory cortex of the cat during acquisition of the pupillary conditioned response: II. Secondary field (AII). Behav Neurosci 1984, 98:189–210.

- 68. Reed A, Riley J, Carraway R, Carrasco A, Perez C, Jakkamsetti V, Kilgard MP: Cortical map plasticity improves learning but is not necessary for improved performance. Neuron 2011, 70:121–131.

- 69. Caras ML, Sanes DH: Top-down modulation of sensory cortex gates perceptual learning. Proc Natl Acad Sci U S A 2017, 114:9972–9977.

- 70. Deneve S, Machens CK: Efficient codes and balanced networks. Nat Neurosci 2016, 19:375–382.

- 71. Marlin BJ, Mitre M, D’Amour JA, Chao MV, Froemke RC: Oxytocin enables maternal behaviour by balancing cortical inhibition. Nature 2015, 520:499–504.

- 72. Mitre M, Marlin BJ, Schiavo JK, Morina E, Norden SE, Hackett TA, Aoki CJ, Chao MV, Froemke RC: A distributed network for social cognition enriched for oxytocin receptors. J Neurosci 2016, 36:2517–2535.

- 73. Cohen L, Mizrahi A: Plasticity during motherhood: changes in excitatory and inhibitory layer 2/3 neurons in auditory cortex. J Neurosci 2015, 35:1806–1815.

- 74. Polley DB, Heiser MA, Blake DT, Schreiner CE, Merzenich MM: Associative learning shapes the neural code for stimulus magnitude in primary auditory cortex. Proc Natl Acad Sci U S A 2004, 101:16351–16356.