Supplemental Digital Content is available in the text.

Purpose

To investigate the effect of a change in the United States Medical Licensing Examination Step 1 timing on Step 2 Clinical Knowledge (CK) scores, the effect of lag time on Step 2 CK performance, and the relationship of incoming Medical College Admission Test (MCAT) score to Step 2 CK performance pre and post change.

Method

Four schools that moved Step 1 after core clerkships between academic years 2008–2009 and 2017–2018 were analyzed. Standard t tests were used to examine the change in Step 2 CK scores pre and post change. Tests of differences in proportions were used to evaluate whether Step 2 CK failure rates differed between curricular change groups. Linear regressions were used to examine the relationships between Step 2 CK performance, lag time and incoming MCAT score, and curricular change group.

Results

Step 2 CK performance did not change significantly (P = .20). Failure rates remained highly consistent (pre change: 1.83%; post change: 1.79%). The regression indicated that lag time had a significant effect on Step 2 CK performance, with scores declining with increasing lag time. A separate regression showed a small but significant interaction effect between incoming MCAT score and curricular change group on Step 2 CK scores. Students with lower incoming MCAT scores tended to perform better on Step 2 CK when Step 1 was after clerkships.

Conclusions

Moving Step 1 after core clerkships appears to have had no significant impact on Step 2 CK scores or failure rates, supporting the argument that such a change is noninferior to the traditional model. Students with lower MCAT scores benefit most from the change.

As medical schools undertake curricular reforms that deviate from the traditional 2 + 2 (2 years of basic science and 2 years of clinical) model, the optimal timing for the United States Medical Licensing Examination (USMLE) Step 1 has been called into question.1 Traditionally, learners have taken Step 1 after completing their basic science curricula. This placement has helped ensure that learners are competent in foundational knowledge relevant to the practice of medicine before entering clinical clerkships. With curricular changes that emphasize both earlier clinical exposure and integrating basic, clinical, and health systems science across the undergraduate medical education continuum, the traditional timing may no longer be optimal for all schools.

Background

Daniel et al1 articulate reasons to consider altering the timing of Step 1 to after the core clerkships. These reasons include (1) promoting longer-term retention and understanding of basic science concepts by encouraging learning that is integrated with clinical care, (2) using a major national assessment as a motivator to drive foundational science learning later in the curriculum, and (3) promoting the review of basic science concepts after learners have developed a rich cadre of illness scripts from exposure to patient care. Additional reasons to consider altering the timing of Step 1 were articulated by Jurich et al.2 They emphasized a need for more flexible timing of Step 1 to allow for the implementation of innovative curricular reforms addressing the Triple Aim of “improving the experience of care, improving the health of populations, and reducing per capita costs of health care.”3 The authors demonstrated that altering the timing of Step 1 to after core clerkships was at least “noninferior” to the traditional model.2 Indeed, the psychometric analysis revealed that the change yielded small increases in Step 1 scores (2.78 scaled score points) and a significant reduction in failure rates (2.87% pre change to 0.39% post change, P < .001) for the analyzed set of schools in the study.

Altering the timing of Step 1 is typically undertaken in the context of other curricular reforms, which often include shortening the basic science curricula, earlier clinical immersion, and more integrated instruction of the basic and clinical sciences. Changing the timing of Step 1 in the absence of other reforms is not recommended because while there are potential benefits, there are also challenges that must be anticipated. Pock et al4 outlined 6 such challenges associated with moving Step 1 after the core clerkships: possible lack of readiness for the clinical phase, concerns that lower-performing students will not be identified and supported early, risk of lower performance on National Board of Medical Examiners (NBME) clinical subject exams, potential need for an extended Step 1 study period, increased student anxiety around residency choice, and reduced time to take and pass the USMLE Step 2 Clinical Knowledge (CK). The authors then go on to describe strategies to overcome each of these challenges.

Shifting Step 1 to after core clerkships may impact the timing of Step 2 CK. Learners typically take a period of 6 to 8 weeks to study and sit for Step 1 after the clerkships. Since most learners want to receive their score on Step 1 before they sit for Step 2 CK, moving the timing of Step 1 may delay scheduling of Step 2 CK. Since prior research has suggested that student performance on Step 2 CK declines as the time between finishing the core clerkships and sitting for Step 2 CK (termed “lag time”) increases,5 we were concerned that the change in Step 1 timing might negatively affect Step 2 CK scores. Thus, the primary purpose of this study was to determine the impact of a change in Step 1 timing to after core clerkships on Step 2 CK scores. We also aimed to determine how the lag time influenced Step 2 CK performance both before and after this curricular change. Finally, we investigated the predictive relationship of incoming Medical College Admission Test (MCAT) score on Step 2 CK performance before and after the change.

Method

Sample

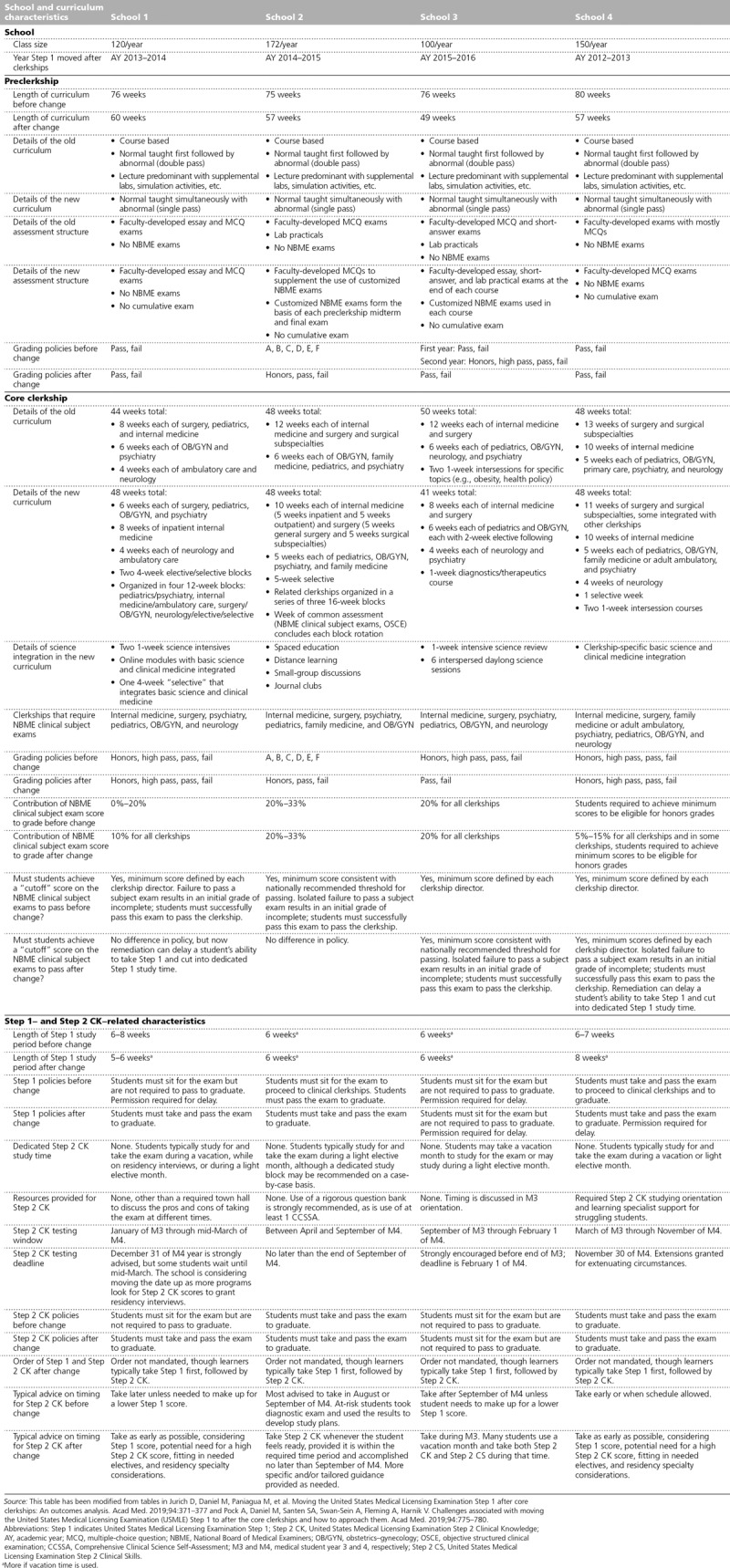

This study included students who completed the USMLE Step 1 and Step 2 CK exams at 4 Liaison Committee on Medical Education (LCME)–accredited schools between academic years 2008–2009 and 2017–2018. Each of these schools transitioned its curricula between these years to have Step 1 placed after the core clinical clerkships. Data 3 years before and 3 years after the change were examined for each school to allow for pre–post comparisons. Appendix 1 contains detailed information on the 4 schools’ curricular and assessment characteristics before and after implementation of the curricular change.

Within this time frame, 3,206 students at the 4 schools had Step 2 CK scores after we removed all 121 MD/PhD students and 20 oral and maxillofacial surgery students, who followed a different curriculum. We then excluded 3 students who transferred schools between their Step 1 and Step 2 CK exams, 1 student who took Step 2 CK before Step 1, and 3 students who waited over 4 years after taking Step 1 to take Step 2 CK. The final sample included 3,199 examinees. All scores used in the analyses reflected an examinee’s first attempt on the respective examination. Using these criteria, 1,637 examinees first attempted Step 2 CK before their school’s curricular change, and another 1,562 examinees first attempted Step 2 CK after the change. The 2 groups were comparable with regard to age, gender, ethnicity, and incoming MCAT score (see Supplemental Digital Appendix 1 at http://links.lww.com/ACADMED/A725).

Analyses

Following the methodology described in Jurich et al,2 several steps were taken to control for potential confounding factors in Step 2 CK performance. We accounted for rising national averages of Step 2 CK scores over the study years by computing the deviation between examinee scores and the average national score of all first-time examinees from LCME-accredited schools for the corresponding academic year, excluding the study schools from the national average. The deviation scores and other data were then aggregated into cohorts by year relative to implementation of the curricular change to create comparable groups for analysis despite the schools implementing the change during different academic years.
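The deviation-score and cohort-alignment steps described above can be sketched in a few lines of Python. This is an illustration only: the analyses in this study were run in R, and the function names, the use of a single integer per academic year, and every score and national mean below are hypothetical values chosen to show the arithmetic.

```python
from collections import defaultdict
from statistics import mean


def deviation_scores(examinees, national_mean_by_year):
    """Return (relative_year, deviation) pairs.

    examinees: iterable of (academic_year, change_year, score) tuples, where
    change_year is the first academic year under the new curriculum at the
    examinee's school (an assumed encoding, not taken from the study).
    """
    pairs = []
    for academic_year, change_year, score in examinees:
        # Deviation from the national first-taker mean for the same year.
        deviation = score - national_mean_by_year[academic_year]
        # Negative values are pre change, zero and above are post change.
        relative_year = academic_year - change_year
        pairs.append((relative_year, deviation))
    return pairs


def cohort_means(pairs):
    """Average the deviations within each year relative to the change."""
    by_cohort = defaultdict(list)
    for relative_year, deviation in pairs:
        by_cohort[relative_year].append(deviation)
    return {year: mean(values) for year, values in sorted(by_cohort.items())}


# Hypothetical national means and examinee records (not study data).
national = {2012: 237.0, 2013: 238.0, 2014: 240.0}
examinees = [
    (2012, 2013, 240.0),  # school A, one year before its change
    (2013, 2013, 243.0),  # school A, first year after its change
    (2013, 2014, 241.0),  # school B, one year before its change
    (2014, 2014, 244.0),  # school B, first year after its change
]
result = cohort_means(deviation_scores(examinees, national))
```

Aligning on the year relative to the change, rather than on the calendar year, is what lets schools that changed in different years contribute to the same pre and post cohorts.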

The primary analyses used standard t test techniques to examine the change in Step 2 CK deviation scores pre and post change. Although Step 1 may be seen as a useful covariate for this analysis, the curricular change altered both the circumstances of and examinee performance on Step 1.2 Thus, Step 1 scores and the curricular change (the independent variable) are strongly related, and the use of Step 1 scores as a covariate for Step 2 CK deviation scores may mask the true relationship between the change and Step 2 CK performance (the dependent variable).6 We also conducted tests of differences in proportions to evaluate whether Step 2 CK failure rates differed between curricular change groups.
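For readers who want to see the shape of the pre–post comparison, the following Python sketch computes a two-sample t statistic on deviation scores. It assumes a pooled-variance (Student) test, which the text does not specify beyond "standard t test," and it approximates the P value and 95% CI with the normal distribution, which is reasonable only for large groups such as those in this study. The function names and the toy data are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, mean, variance


def pooled_t(pre, post):
    """Return (difference, standard error, t) for mean(post) - mean(pre)."""
    n1, n2 = len(pre), len(post)
    # Pooled variance: an assumption, since the test variant is not stated.
    pooled_var = ((n1 - 1) * variance(pre) + (n2 - 1) * variance(post)) / (n1 + n2 - 2)
    se = sqrt(pooled_var * (1 / n1 + 1 / n2))
    diff = mean(post) - mean(pre)
    return diff, se, diff / se


def approx_two_sided_p(t):
    """Normal approximation to the two-sided P value (large samples only)."""
    return 2 * (1 - NormalDist().cdf(abs(t)))


def approx_ci95(diff, se):
    """Normal-approximation 95% CI for the difference in means."""
    z = NormalDist().inv_cdf(0.975)
    return diff - z * se, diff + z * se


# Toy deviation scores (not study data).
pre = [1.0, 2.0, 3.0]
post = [2.0, 3.0, 4.0]
diff, se, t = pooled_t(pre, post)
low, high = approx_ci95(diff, se)
```

A CI for the postchange minus prechange difference that spans zero corresponds to a nonsignificant test, which is the pattern reported in the Results.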

Linear regressions were then conducted to determine how the curricular change interacted with other variables to influence Step 2 CK performance. First, we examined how the number of days students waited to take Step 2 CK after finishing their core clinical clerkships (i.e., lag time) affected Step 2 CK performance and whether this relationship was impacted by the curricular change. This was accomplished via a multiple regression predicting Step 2 CK deviation scores from curricular change group (pre or post) and delay after clerkship (in days) and the interaction between these 2 variables. Next, a similar regression was run examining the interaction effect between incoming MCAT scores and curricular change group on Step 2 CK deviation scores to evaluate whether the curricular change affected low and high MCAT performers differently.
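Both regressions share one structure: an outcome predicted from a binary group indicator, a continuous covariate (lag time or MCAT score), and their product. The Python sketch below fits that model by ordinary least squares using only the standard library. It is a hedged illustration rather than the study's R code; the function names, the 0/1 coding of the curricular change group, and the noise-free synthetic data with arbitrary coefficients are all assumptions.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def fit_interaction(group, covariate, y):
    """OLS for y = b0 + b1*group + b2*covariate + b3*group*covariate."""
    rows = [[1.0, float(g), float(x), float(g) * float(x)]
            for g, x in zip(group, covariate)]
    k = 4
    # Normal equations: (X'X) b = X'y.
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    return solve(xtx, xty)


# Synthetic, noise-free data with arbitrary coefficients (not study estimates).
group = [0, 0, 0, 0, 1, 1, 1, 1]          # 0 = pre change, 1 = post change
lag = [50.0, 100.0, 200.0, 400.0] * 2     # days after the final core clerkship
true_coef = [5.0, 1.0, -0.01, -0.005]
y = [true_coef[0] + true_coef[1] * g + true_coef[2] * x + true_coef[3] * g * x
     for g, x in zip(group, lag)]
coef = fit_interaction(group, lag, y)
```

Under this coding, `coef[2]` is the slope for the prechange group and `coef[2] + coef[3]` is the slope for the postchange group, so the interaction coefficient is the difference between the two slopes.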

All data analyses were conducted using R: A Language and Environment for Statistical Computing, version 3.5.2 (The R Foundation, Vienna, Austria).

Results

No change in Step 2 CK scores or failure rates

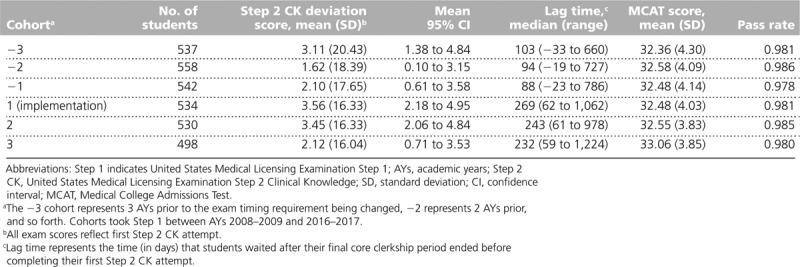

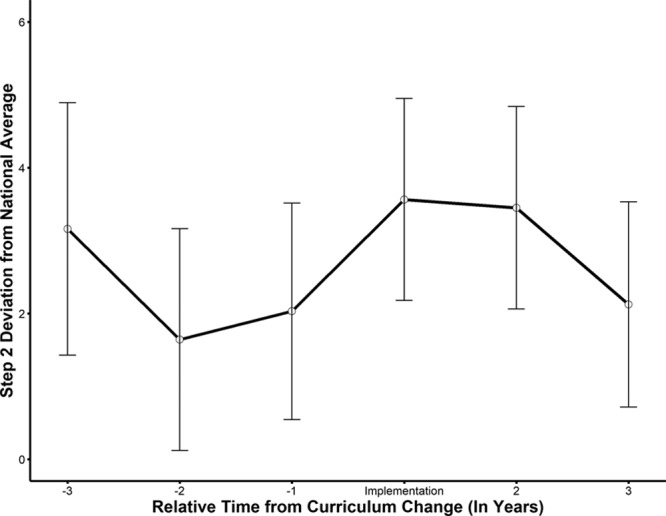

Table 1 presents descriptive statistics of variables included in the analysis for each cohort relative to time from implementation of the curricular change. The deviation scores show that Step 2 CK performance in these schools remained rather consistent relative to the national average pre and post change, with a t test for difference in means showing no significant change in scores post change (P = .20; 95% confidence interval [CI] of postchange minus prechange means: −0.42 to 2.02). Therefore, for the 4 schools, moving Step 1 to after the clerkships did not significantly affect Step 2 CK scores despite the postchange group waiting about 2.5 times longer to sit for Step 2 CK after completing their final core clerkship (see Table 1). Figure 1 visualizes these data by presenting the Step 2 CK deviation score by each cohort, along with their 95% CIs. Examining the figure, it is clear that the 95% CIs overlap considerably across the cohorts.

Table 1.

Descriptive Statistics by Cohort Relative to the Time From Implementation of a Curricular Change to Administer Step 1 After Core Clerkships From 4 Liaison Committee on Medical Education–Accredited Schools, AYs 2008–2009 to 2017–2018

Figure 1.

Average United States Medical Licensing Examination (USMLE) Step 2 Clinical Knowledge deviation (from national average) scores and 95% confidence intervals across 4 Liaison Committee on Medical Education–accredited schools, relative to time from implementation of a curricular change to administer the USMLE Step 1 after core clerkships, academic years 2008–2009 to 2017–2018.

Failure rates pre and post change also remained highly consistent. The aggregated failure rates before the curricular change were 1.83% compared with 1.79% after the change (Fisher’s exact test P > .99).
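The failure-rate comparison can be reproduced approximately with a two-sided Fisher's exact test. The Python sketch below is illustrative only. The failure counts are not reported in the text; they are back-calculated here from the reported percentages and group sizes (30 of 1,637 is 1.83%, and 28 of 1,562 is 1.79%) and should be read as an assumption. The function name is hypothetical.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of every table with the same
    margins that is no more likely than the observed table.
    """
    row1, row2, col1 = a + b, c + d, a + c
    denom = comb(row1 + row2, col1)

    def prob(x):
        return comb(row1, x) * comb(row2, col1 - x) / denom

    lowest, highest = max(0, col1 - row2), min(row1, col1)
    p_obs = prob(a)
    # Small relative tolerance guards against floating-point ties.
    total = sum(p for p in (prob(x) for x in range(lowest, highest + 1))
                if p <= p_obs * (1 + 1e-9))
    return min(1.0, total)


# Back-calculated counts (assumption): rows are pre and post change,
# columns are fail and pass.
p_value = fisher_exact_two_sided(30, 1637 - 30, 28, 1562 - 28)
```

With these reconstructed counts the observed table is the most probable one given the margins, so the two-sided P value is close to 1, in line with the reported P > .99.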

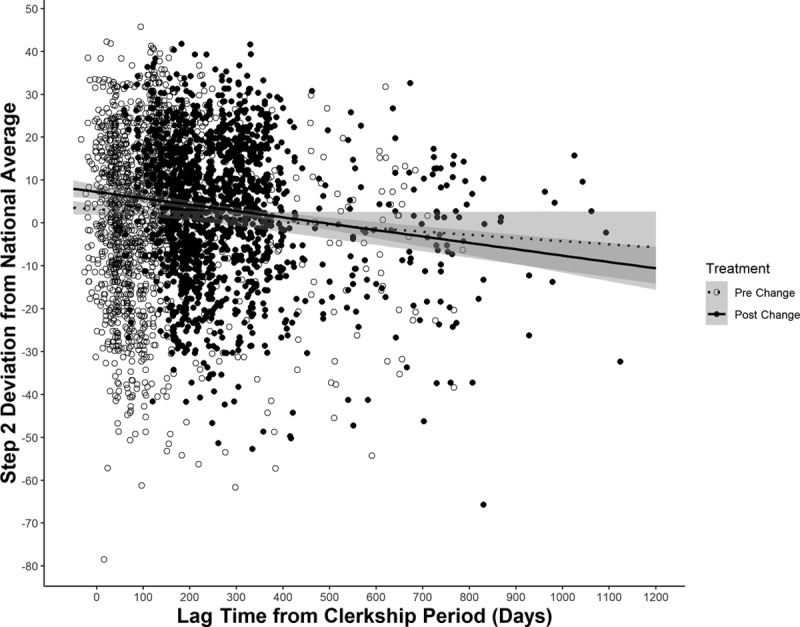

Effect of lag time on Step 2 CK scores

Figure 2 visualizes the regression of lag time after completing the final core clerkship, curricular change group, and their interaction on Step 2 CK deviation scores. The regression indicated that there was no significant interaction effect between lag time and curricular change group (b = −0.01, t = −1.58, P = .11, 95% CI: −0.02 to 0.00). This relationship was similar for the majority of lag times, only differing for the shortest lag times. Lag time had a significant main effect on Step 2 CK performance (b = −0.014, t = −4.44, P < .001, 95% CI: −0.02 to −0.01). In general, students performed worse on Step 2 CK the longer they delayed taking the exam after the clerkship period regardless of curricular change group, though the degradation was slightly less for the prechange group.

Figure 2.

Regression lines depicting the relationship between United States Medical Licensing Examination (USMLE) Step 2 Clinical Knowledge (CK) deviation (from national average) scores and lag time (the time students waited after the final core clerkship period ended before completing their first USMLE Step 2 CK attempt) measured in days for each curricular change (i.e., moving USMLE Step 1 to after the core clerkships) group. The shaded area represents the conditional standard error of the regression line at the corresponding lag time. Data come from 4 Liaison Committee on Medical Education–accredited schools, academic years 2008–2009 to 2017–2018.

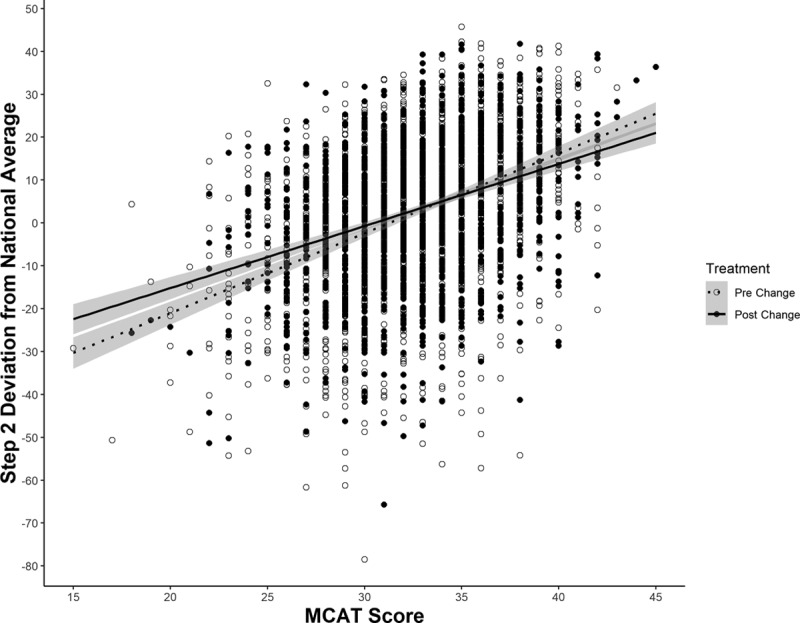

Effect of curricular change on low and high MCAT performers’ Step 2 CK scores

The regression investigating the interaction between incoming MCAT score and curricular change group, displayed in Figure 3, yielded a small but statistically significant interaction effect (b = −0.42, t = −2.86, P = .005, 95% CI: −0.70 to −0.13). The figure shows that students with lower incoming MCAT scores tended to perform better on Step 2 CK post change, whereas those with higher MCAT scores performed better pre change. However, these results only appear significantly different at the lower end of MCAT performance for this sample. Across more typical MCAT scores, the pre- and postchange regression lines overlap considerably. (Note that the significant interaction effect precludes the use of MCAT scores as a covariate in the pre–post deviation score comparison analysis as this violates a core assumption of analysis of covariance methods.)

Figure 3.

Regression lines depicting the relationship between United States Medical Licensing Examination (USMLE) Step 2 Clinical Knowledge deviation (from national average) scores and incoming Medical College Admission Test (MCAT) scores for each curricular change (i.e., moving USMLE Step 1 to after the core clerkships) group. The shaded area represents the conditional standard error of the regression line at the corresponding MCAT score. Data come from 4 Liaison Committee on Medical Education–accredited schools, academic years 2008–2009 to 2017–2018.

Discussion

The findings of this study provide evidence of “noninferiority” of a significant curricular change: Moving Step 1 after core clerkships appears to have had no significant impact on Step 2 CK scores or failure rates. These results may at first seem surprising, given that the median lag time to sit for Step 2 CK after completing the core clerkships was nearly 2.5 times longer for students in the post–curricular change group.5,7 We hypothesize that studying for Step 1 in closer proximity to Step 2 CK had some influence on testing performance, which likely mitigated the effect of the longer lag times.

Both pre and post curricular change, there was a degradation in Step 2 CK scores with increasing lag time from completion of the core clerkships. This makes sense when one considers that clinical and nonclinical electives after clerkships are often specialty-focused, lack the breadth of the core clerkships, and typically do not use NBME-style exams for assessment.8 Students also likely have knowledge decay as time elapses. Performance may further be influenced by competing priorities and motivations. Learners who take Step 2 CK before September when the Electronic Residency Application Service opens may be aiming for a higher 3-digit score to support their application, particularly if they are trying to offset a lower Step 1 score. Those who take the exam after September have to balance studying for Step 2 CK with preparing for and going on residency interviews. The predicted degradation in scores is small (~4 points from 100 to 600 days, Figure 2) for the prechange group, supporting earlier findings by Pohl et al,5 while the degradation is slightly more (~8 points from 100 to 600 days, Figure 2) for the postchange group.

In terms of advising students, these findings have several implications. In traditional curricula where learners complete Step 1 before the core clerkships and Step 2 CK after, learners who are most concerned about earning a higher 3-digit score may wish to take Step 2 CK in close proximity to completion of the core clerkships. However, learners at these institutions have less time to complete career exploratory electives, subinternships, and away rotations, so this can affect the feasibility of scheduling Step 2 CK quickly. Since the score degradations are relatively small, learners can weigh the pros and cons of delaying the exam. At institutions where Step 1 has been moved after core clerkships, learners more focused on their score may want to take Step 1 and Step 2 CK close together. Because of a shortened preclinical phase, learners at these institutions have more curricular time to complete electives after taking both licensure exams, so they may want to try to avoid the slightly larger score degradations seen among those in the postchange group who waited longer to take Step 2 CK. The increased time in the postclerkship phase at these institutions also allows for more flexible scheduling of Step 2 Clinical Skills (CS), allowing more students to complete this exam before residency applications.

Taking Step 1, Step 2 CK, and Step 2 CS shortly after completing the core clerkships and relatively early in relation to residency applications has the added advantage of ensuring that Step 2 CK and CS results are available to program directors as they are making interview selections. An increasing number of residency programs are considering Step 2 CK and CS results when offering interviews, and many require that these results be submitted before creating their final rank lists.9,10 If this trend continues, Step 2 CK and CS results may take on even greater importance. As Step 2 CK is an important predictor of performance in clinical practice,11,12 increasing emphasis on Step 2 CK scores for both residency selection and to help drive learning may be desirable. Further, including Step 2 CS results on residency applications may help ensure that competencies measured in a simulated practice setting are considered in the selection process.

A learner’s prior performance on other assessments (e.g., the MCAT exam and medical school knowledge assessments) and NBME exams (e.g., clinical subject exams, the Comprehensive Basic Science Self-Assessment) can help guide more tailored advice on exam timing. For example, a higher-performing student could be advised to take Step 2 CK without waiting for their Step 1 scores. A lower-performing student may want to wait 1 to 2 months to ensure that they get their Step 1 score back before taking Step 2 CK. Other factors such as fatigue, burnout, well-being, confidence, elective and away-elective schedules, and test anxiety may also be factored into specific advice. Neither the USMLE program nor the 4 schools in this study mandate that Step 1 be taken before Step 2 CK. Thus, if these 4 schools’ policies are representative of other medical schools (see Appendix 1), learners could conceivably take Step 2 CK first. There are limited data for students choosing to take Step 2 CK before Step 1 and their performance on these exams13; therefore, we are unable to make recommendations related to this.

Our regression analysis shows that students with lower incoming MCAT scores performed better on Step 2 CK after the curricular change. An unpublished analysis (D. Jurich, unpublished data, August 2018) found a similar trend for Step 1 (see Supplemental Digital Appendix 2 at http://links.lww.com/ACADMED/A725). This is important for several reasons. The schools included in this study and the prior study by Jurich et al2 tended to have students with higher incoming MCAT scores and better Step 1 and Step 2 CK performance than other schools, making it difficult to determine how a change in Step 1 timing could affect schools whose students have lower averages. This finding suggests that lower-performing learners may actually benefit the most from moving Step 1 to after the core clerkships. The schools in this study all implemented more integrated curricula in addition to moving Step 1. As schools that have students with lower MCAT scores at matriculation move Step 1 to after core clerkships, it will be helpful to examine the effect of the move on their Step 1 and Step 2 CK scores.

Future studies should ideally explore in depth the characteristics and motivations of students who take Step 2 CK early versus those who take it late with regard to both performance metrics and other factors, such as career selection. This will help further tailor advising. Some of the students who delayed 300 to 600 days may have taken a year off, either to pursue an advanced degree or for personal reasons. The duration of the Step 2 CK study period may also have been different for those who took the exam quickly versus those who delayed. Taking the exam immediately after both clerkships and Step 1 may result in less time needed to prepare, resulting in less “time out” of the core curricula to study. Understanding the specialty choices and Step 2 CK score goals of learners who take the exam early versus late could also be informative. Future studies should also provide rigorous psychometric analyses of the effects of changes to Step 1 timing on other outcomes, such as NBME clinical subject exam performance. Data from the University of Michigan show that mean NBME clinical subject exam scores decrease slightly (0.53–2.29 points, P < .05) when Step 1 is moved to after the core clerkships, with the greatest declines in the first few clerkships and in clerkships with the greatest breadth (e.g., internal and family medicine).14 The schools in this study all anecdotally report similar findings.4 Understanding the effects of a change in Step 1 timing on NBME clinical subject exam performance and how to best respond to them is critical.

When Step 1 is placed after the core clerkships, the NBME clinical subject exams take the place of Step 1 as the first major national standardized assessments that students must pass unless other NBME exams, such as the Comprehensive Basic Science Examination, are used by the schools. We believe this may have the following effects:

- Schools may observe a slight increase in the number of students receiving an initial grade of incomplete by assessment (i.e., fail) on clerkships. These students may be required to retake the NBME clinical subject exam, which can delay them in taking Step 1 and Step 2 CK. Stronger test takers are thus more likely to take Step 2 CK early, potentially contributing to the higher overall scores seen in the early test-taking group in this study.

- Taking and passing 6 to 7 national standardized NBME assessments before taking Step 1 may contribute to the lower observed Step 1 failure rates among the schools in this study, as observed by Jurich et al.2 Since nearly all learners pre and post change took Step 1 before taking Step 2 CK, they had experience taking a major national standardized assessment, which may explain why failure rates on Step 2 CK were not impacted.

The student perspective on the change in Step 1 timing is another important area for future research. Our students are generally appreciative of the positive impact the change has on Step 1 scores and failure rates. They also anecdotally state that their learning during the Step 1 study period is enhanced and that they appreciate reviewing basic science concepts after gaining clinical experience. The potential overall impact on student well-being, however, is unknown. Taking Step 1, Step 2 CK, and Step 2 CS in close proximity may increase stress. Lower NBME clinical subject exam performance may also adversely impact certain learners.

This study has some important limitations. Although we attempted to identify idiosyncratic situations, there may be examinees included in the analysis who did not follow the standard curriculum. However, the large sample size limits their potential impact on the results.

Other curricular and assessment changes occurring concurrently with the change in Step 1 timing are likely to have impacted scores as well. While different types of instruction have variably been shown to affect USMLE scores and competencies,15–17 test-enhanced learning has been shown repeatedly to improve performance.8,18 The study schools all transitioned from largely lecture-based curricula to ones that incorporate more active learning strategies. They altered their instruction and assessment practices to emphasize horizontal and vertical integration of the basic and clinical sciences (see Appendix 1), a practice aimed at enhancing learning and retention.19 Furthermore, 2 of the 4 schools added customized NBME exams to foster test-enhanced learning. These curricular and assessment changes may have positively impacted the lower-performing learners the most, as evidenced by the gains seen in the matriculants with lower incoming MCAT scores. This bodes well for institutions aiming to admit students based on a more holistic view of accomplishments. Until additional schools with a wider range of incoming MCAT scores and USMLE outcomes alter the timing of Step 1, it will remain difficult to generalize our results to other schools. We anticipate that in the next 2 to 3 years, more schools will change the timing of Step 1 and we will be able to analyze data from these institutions.

Conclusions

In summary, we found that when Step 1 was moved after the core clerkships, Step 2 CK scores and failure rates remained relatively consistent, adding to the growing body of evidence that such a change is at least noninferior to the status quo. Students with lower incoming MCAT scores appear to benefit the most, making us hopeful that as other schools with metrics that differ from those of the schools in this study implement similar reforms, we will see similar, if not better, results.

Acknowledgments:

The authors wish to thank Colleen Ward for her instrumental contributions throughout the planning and development of this manuscript.

Supplementary Material

Appendix 1. Curricular and Assessment Characteristics of 4 Liaison Committee on Medical Education–Accredited Schools Included in a Study of the Effects of Moving Step 1 After Core Clerkships on Step 2 CK Scores, AYs 2008–2009 to 2017–2018

Footnotes

Supplemental digital content for this article is available at http://links.lww.com/ACADMED/A725.

Funding/Support: The University of Michigan School of Medicine, Vanderbilt School of Medicine, and New York University School of Medicine have Accelerating Change in Medical Education grants from the American Medical Association. Virginia Commonwealth University School of Medicine receives funding from the American Medical Association for S.A. Santen’s consultation on the Accelerating Change in Medical Education grant.

Other disclosures: D. Jurich, M. Paniagua, and M.A. Barone work for the National Board of Medical Examiners, the organization that administers the United States Medical Licensing Examinations examined in this study.

Ethical approval: This study was reviewed and determined to be exempt by the American Institutes for Research (project number EX00398; June 27, 2016).

Disclaimer: The views expressed are those of the authors and do not reflect the official policy or position of their universities, the National Board of Medical Examiners, the Department of Defense, the United States Air Force, or the United States Government.

References

- 1. Daniel M, Fleming A, Grochowski CO, et al. Why not wait? Eight institutions share their experiences moving United States Medical Licensing Examination Step 1 after core clinical clerkships. Acad Med. 2017;92:1515–1524.

- 2. Jurich D, Daniel M, Paniagua M, et al. Moving the United States Medical Licensing Examination Step 1 after core clerkships: An outcomes analysis. Acad Med. 2019;94:371–377.

- 3. Berwick DM, Nolan TW, Whittington J. The Triple Aim: Care, health, and cost. Health Aff (Millwood). 2008;27:759–769.

- 4. Pock A, Daniel M, Santen SA, Swan-Sein A, Fleming A, Harnik V. Challenges associated with moving the United States Medical Licensing Examination (USMLE) Step 1 to after the core clerkships and how to approach them. Acad Med. 2019;94:775–780.

- 5. Pohl CA, Robeson MR, Veloski J. USMLE Step 2 performance and test administration date in the fourth year of medical school. Acad Med. 2004;79(10 suppl):S49–S51.

- 6. Miller GA, Chapman JP. Misunderstanding analysis of covariance. J Abnorm Psychol. 2001;110:40–48.

- 7. Dyrbye LN, Thomas MR, Natt N, Rohren CH. Prolonged delays for research training in medical school are associated with poorer subsequent clinical knowledge. J Gen Intern Med. 2007;22:1101–1106.

- 8. Green ML, Moeller JJ, Spak JM. Test-enhanced learning in health professions education: A systematic review: BEME guide no. 48. Med Teach. 2018;40:337–350.

- 9. National Residency Matching Program. Results of the 2012 NRMP Program Director Survey. http://www.nrmp.org/wp-content/uploads/2013/08/programresultsbyspecialty2012.pdf. Published August 2012. Accessed July 2, 2019.

- 10. National Residency Matching Program. Results of the 2018 NRMP Program Director Survey. https://www.nrmp.org/wp-content/uploads/2018/07/NRMP-2018-Program-Director-Survey-for-WWW.pdf. Published June 2018. Accessed July 2, 2019.

- 11. Norcini JJ, Boulet JR, Opalek A, Dauphinee WD. The relationship between licensing examination performance and the outcomes of care by international medical school graduates. Acad Med. 2014;89:1157–1162.

- 12. Cuddy MM, Young A, Gelman A, et al. Exploring the relationships between USMLE performance and disciplinary action in practice: A validity study of score inferences from a licensure examination. Acad Med. 2017;92:1780–1785.

- 13. United States Medical Licensing Examination. 2018 bulletin of information. https://www.usmle.org/pdfs/bulletin/2018bulletin.pdf. Accessed July 2, 2019.

- 14. Holman E, Bridge P, Daniel M, et al. The impact of moving USMLE Step 1 on NBME Clinical Subject Exam performance. Poster presented at: 2018 Central Group on Educational Affairs Regional Spring Meeting; April 2018; Rochester, MN.

- 15. Hoffman K, Hosokawa M, Blake R Jr, Headrick L, Johnson G. Problem-based learning outcomes: Ten years of experience at the University of Missouri-Columbia School of Medicine. Acad Med. 2006;81:617–625.

- 16. Norman GR, Schmidt HG. Effectiveness of problem-based learning curricula: Theory, practice and paper darts. Med Educ. 2000;34:721–728.

- 17. Koh GC, Khoo HE, Wong ML, Koh D. The effects of problem-based learning during medical school on physician competency: A systematic review. CMAJ. 2008;178:34–41.

- 18. Larsen DP, Butler AC, Roediger HL 3rd. Test-enhanced learning in medical education. Med Educ. 2008;42:959–966.

- 19. Brauer DG, Ferguson KJ. The integrated curriculum in medical education: AMEE guide no. 96. Med Teach. 2015;37:312–322.