Abstract

Using sequence alignment to compare more than 12,000 pairs of progress notes, we find that progress notes were, on average, 74.5% redundant with the prior progress note written for the same patient.

Adoption of electronic health records (EHRs) has transformed ophthalmology documentation. Whereas hand-written paper charts encouraged brevity, documentation in EHRs may be composed with content-importing technologies such as templates and copy-paste,1 resulting in longer electronic notes than their paper counterparts2. Further, electronic notes can look remarkably similar, and may contain outdated or erroneous information that contributes to medical errors.3,4 One step toward weighing the risks and benefits of content-importing technologies is understanding similarity among notes. While a few studies find rates of redundancy around 30% in primary care notes,5,6 to our knowledge, no study has rigorously quantified similarity between subsequent outpatient ophthalmology notes.

This study was conducted at Casey Eye Institute, the ophthalmology department of Oregon Health & Science University (OHSU), a large academic medical center in Portland, Oregon, and was approved by OHSU’s Institutional Review Board, which granted a waiver of informed consent for analysis of EHR data. We first performed a large-scale analysis of note redundancy using natural language processing. We included all 48 faculty providers (42 ophthalmologists, 6 optometrists) who saw patients between January 1, 2017 and December 31, 2018, and collected progress notes for follow-up visits where the primary visit diagnosis (parent ICD-10 diagnostic code) was one of the provider’s three most common based on billing data. We paired each note with the progress note for the next office visit by the same patient to the same provider for the same diagnosis. We repeated this process to generate note pairs for all 12,355 patients who attended ≥2 follow-up appointments with a study provider during the study period. For comparison, we collected 10,000 random pairs of notes, ensuring each note represented a different patient.
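The pairing step described above can be illustrated with a minimal Python sketch. The `Note` record, its field names, and the function `pair_serial_notes` are hypothetical and are not taken from the study code. The sketch also assumes that every consecutive pair within a patient, provider, and diagnosis group is kept; the study may have retained only one pair per patient.

```python
from collections import defaultdict
from datetime import date
from typing import NamedTuple


class Note(NamedTuple):
    """Hypothetical progress-note record; field names are illustrative."""
    patient_id: str
    provider_id: str
    diagnosis: str      # parent ICD-10 code of the primary visit diagnosis
    visit_date: date
    text: str


def pair_serial_notes(notes):
    """Pair each note with the next note for the same patient, provider, and diagnosis."""
    groups = defaultdict(list)
    for note in notes:
        groups[(note.patient_id, note.provider_id, note.diagnosis)].append(note)

    pairs = []
    for group in groups.values():
        group.sort(key=lambda n: n.visit_date)   # chronological order within a group
        pairs.extend(zip(group, group[1:]))      # (earlier note, next note)
    return pairs
```

Grouping on all three keys before sorting by date keeps a visit for an unrelated diagnosis, or to a different provider, from being treated as the "next" visit.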

We used sequence alignment to assess note redundancy, employing the modified Levenshtein edit-distance algorithm5 to generate a “master note” containing an aligned sequence of words from either note. Redundancy was defined as the percent of words from the second note that aligned with words from the first note. Redundancy calculations were performed in Python (version 3.7, Python Software Foundation, https://www.python.org/).
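As a rough illustration of this redundancy definition, the sketch below aligns two notes word by word and reports the percent of words in the second note that align with the first. It is not the modified Levenshtein algorithm cited above: it substitutes a plain longest-common-subsequence alignment (an edit-distance alignment that allows only insertions and deletions), and the whitespace tokenizer and function names are assumptions made for the example.

```python
def tokenize(text):
    """Split a note into lowercase words (a simplifying assumption)."""
    return text.lower().split()


def aligned_word_count(first, second):
    """Length of the longest common subsequence of two word lists."""
    prev = [0] * (len(second) + 1)
    for w1 in first:
        curr = [0]
        for j, w2 in enumerate(second, start=1):
            if w1 == w2:
                curr.append(prev[j - 1] + 1)               # words align
            else:
                curr.append(max(prev[j], curr[j - 1]))     # skip a word in one note
        prev = curr
    return prev[-1]


def redundancy(first_note, second_note):
    """Percent of words in the second note that align with words in the first."""
    first, second = tokenize(first_note), tokenize(second_note)
    if not second:
        return 0.0
    return 100.0 * aligned_word_count(first, second) / len(second)


if __name__ == "__main__":
    earlier = "iop stable continue latanoprost both eyes"
    later = "iop elevated continue latanoprost both eyes return 3 months"
    print(f"{redundancy(earlier, later):.1f}% redundant")
```

Because the denominator is the length of the second note, the measure is asymmetric: it describes how much of the later note was already present in the earlier one, not the reverse.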

Next, to analyze redundancy by SOAP section, we manually reviewed 120 pairs of encounter reports which, at our institution, are automatically generated to include the progress note, exam findings, and any procedure notes for a visit. We analyzed encounter reports because many providers leave objective findings out of their progress notes. We selected serial encounter reports for 15 patients for each of eight attending ophthalmology providers (2 each from cornea, neuro-ophthalmology, retina, and comprehensive subspecialties), randomly selecting the first report from a pool of follow-up office visits where the primary visit diagnosis was one of the provider’s three most common (November 1, 2016 to October 31, 2017). One author (AEH) manually coded the first note of each encounter report pair into SOAP and Other sections, and a second author (BH) independently coded 4 representative reports to assess inter-observer agreement. We used document comparison software (Workshare Compare; Workshare, San Francisco, CA) to highlight new text in the second report of each pair and counted new and total words per section. We used a Kruskal-Wallis test with Bonferroni correction to assess overall difference in redundancy between note sections and multiple post hoc Dunn’s tests to identify specific statistically different sections. All calculations for this manual review were performed in spreadsheet software (Excel 2016; Microsoft, Redmond, WA) with XLSTAT add-in software (Addinsoft, New York, NY).
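For readers unfamiliar with the Kruskal-Wallis test, the following Python sketch computes its H statistic from per-section values such as the percentage of new words. It is an illustration only: the study ran this analysis in XLSTAT, the function names are hypothetical, and the sketch stops at the tie-corrected H statistic without computing a p-value or the post hoc Dunn comparisons.

```python
from collections import Counter


def average_ranks(values):
    """Rank values from 1..N, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1                                   # extend the run of tied values
        mean_rank = (i + j) / 2 + 1                  # mean of ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis_h(groups):
    """Tie-corrected Kruskal-Wallis H statistic for a list of samples."""
    pooled = [v for g in groups for v in g]
    n = len(pooled)
    ranks = average_ranks(pooled)

    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12.0 / (n * (n + 1)) * total - 3 * (n + 1)

    tie_sizes = Counter(pooled).values()
    correction = 1 - sum(t ** 3 - t for t in tie_sizes) / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all values are identical; H is undefined")
    return h / correction
```

Under the null hypothesis, H approximately follows a chi-square distribution with (number of groups − 1) degrees of freedom, so a p-value can be obtained from any chi-square survival function.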

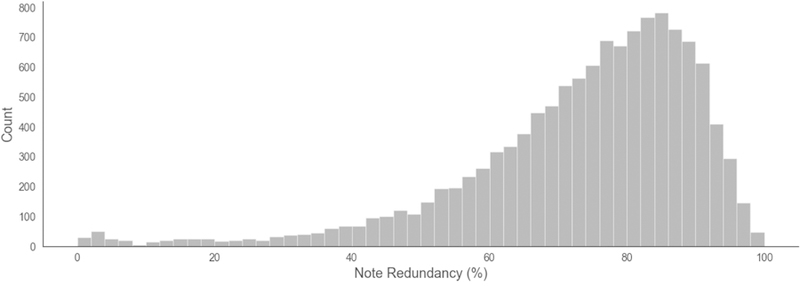

For the large-scale analysis with 12,355 progress note pairs, the second note was on average 74.5% redundant with the first (95% confidence interval [CI], 74.2–74.8%) (Figure 1). That is, 74.5% of note text was found verbatim in the progress note for the patient’s previous office visit. The 24,710 progress notes in our sample averaged 610±563 words in length. By comparison, across the 10,000 randomly paired progress notes, the second note was on average 19.3% redundant with the first (95% CI, 19.1–19.5%).
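A mean with a 95% confidence interval of this kind can be computed from per-pair redundancy values as in the sketch below. How the study derived its intervals is not stated here, so the normal approximation, the function name `mean_with_ci`, and the sample values are all assumptions made for illustration. The code needs Python 3.8 or later for `statistics.NormalDist`.

```python
import math
import statistics


def mean_with_ci(values, confidence=0.95):
    """Return (mean, lower, upper) using a normal-approximation interval."""
    n = len(values)
    mean = statistics.fmean(values)
    sem = statistics.stdev(values) / math.sqrt(n)            # standard error of the mean
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)
    return mean, mean - z * sem, mean + z * sem


if __name__ == "__main__":
    # Hypothetical per-pair redundancy percentages, not study data.
    sample = [70.0, 80.0, 75.0, 65.0, 85.0]
    mean, low, high = mean_with_ci(sample)
    print(f"{mean:.1f}% (95% CI, {low:.1f}-{high:.1f}%)")
```

With more than 12,000 pairs the normal approximation is reasonable; for a sample as small as the five values in the usage example, a t-based interval would be wider.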

Figure 1. Distribution of ophthalmology progress note redundancy based on automated text analysis between 12,355 pairs of serial notes by the same provider seeing the same patient.

On average, 74.5% of text in the second note was redundant with the first note. Forty-eight providers were included in this analysis.

The 120 manually reviewed encounter reports were, on average, 75.4% redundant (95% CI, 73.5–77.3%) and 1129±288 words long. Inter-observer agreement for word count by SOAP section was excellent (Spearman’s r=0.983, p<0.0001). The Plan section had the highest proportion of new words (44.6%; 95% CI, 39.7–49.6%). All other sections ranged from 22.7–27.4% new text (Table available at www.aaojournal.org). The percentage of new words in the Plan section was significantly higher than in each of the other four sections (p<0.001, post hoc Dunn’s test). There were no statistically significant differences among the other sections.

To our knowledge, this is the first study quantifying redundancy of ophthalmology notes. We find the majority of text in both progress notes and encounter reports does not change between serial visits. Moreover, we find the Plan section changed the most between serial visits, with nearly half of Plan text being new, possibly reflecting a greater propensity for providers to rethink and modify their plan each visit. Together, these findings identify a high level of redundancy in EHR documentation for ophthalmology (~75%), especially when compared to the 19.3% redundancy in randomly paired progress notes. While some of this redundancy may reflect helpful repeated structure (e.g., section headers) or stable objective findings, other redundant text may be included for non-clinical purposes (e.g., billing attestations) or be irrelevant to the visit (e.g., some medication lists). Most encounter reports (88/120) even had duplicate exam text within the same report (Table available at www.aaojournal.org). The American Academy of Ophthalmology’s guideline that “the final patient note must be edited carefully to ensure accuracy and relevance to the current visit” after use of content-importing begins to address this issue.7 However, more may be done prospectively to ensure documentation aids such as templates support concise and accurate documentation.

Further study is needed to rigorously quantify the impact of redundancy on provider efficiency and patient safety. Moreover, the amount and type of redundant text that is helpful or harmful depends on specialty and end use, which additional, more-targeted documentation review might clarify. Still, this high level of redundancy may reflect documentation designed as an aid for billing, medicolegal defense, or the consolidation of information across a fragmented record, rather than concise communication of clinical reasoning.

Acknowledgments

Financial Support: Supported by grants R00LM12238 and P30EY10572 from the National Institutes of Health (Bethesda, MD), and by unrestricted departmental funding from Research to Prevent Blindness (New York, NY). The funding organizations had no role in the design or conduct of this research. The authors have no commercial, proprietary, or financial interest in any of the products or companies described in this article. MFC is an unpaid member of the Scientific Advisory Board for Clarity Medical Systems (Pleasanton, CA), a Consultant for Novartis (Basel, Switzerland), and an initial member of Inteleretina, LLC (Honolulu, HI). AEH is a consultant for Advanced Clinical (Deerfield, IL).

REFERENCES

- 1. Weis JM, Levy PC. Copy, Paste, and Cloned Notes in Electronic Health Records. Chest 2014;145(3):632–638. doi: 10.1378/chest.13-0886.

- 2. Sanders DS, Lattin DJ, Read-Brown S, Tu DC, Wilson DJ, Hwang TS, Morrison JC, Yackel TR, Chiang MF. Electronic health record systems in ophthalmology: impact on clinical documentation. Ophthalmology 2013;120(9):1745–1755. doi: 10.1016/j.ophtha.2013.02.017.

- 3. Tsou A, Lehmann C, Michel J, Solomon R, Possanza L, Gandhi T. Safe Practices for Copy and Paste in the EHR: Systematic Review, Recommendations, and Novel Model for Health IT Collaboration. Applied Clinical Informatics 2017;26(01):12–34. doi: 10.4338/ACI-2016-09-R-0150.

- 4. Singh H, Giardina TD, Meyer AND, Forjuoh SN, Reis MD, Thomas EJ. Types and Origins of Diagnostic Errors in Primary Care Settings. JAMA Internal Medicine 2013;173(6):418. doi: 10.1001/jamainternmed.2013.2777.

- 5. Wrenn JO, Stein DM, Bakken S, Stetson PD. Quantifying clinical narrative redundancy in an electronic health record. J Am Med Inform Assoc 2010;17(1):49–53. doi: 10.1197/jamia.M3390.

- 6. Cohen R, Elhadad M, Elhadad N. Redundancy in electronic health record corpora: analysis, impact on text mining performance and mitigation strategies. BMC Bioinformatics 2013;14(1):10. doi: 10.1186/1471-2105-14-10.

- 7. Silverstone DE, Lim MC, American Academy of Ophthalmology Medical Information Technology Committee. Ensuring information integrity in the electronic health record: the crisis and the challenge. Ophthalmology 2014;121(2):435–437. doi: 10.1016/j.ophtha.2013.08.005.
