Abstract

Endoscopic examination is an important means of detecting gastric polyps. In this paper, we develop a panoramic imaging method for gastroscopy that displays the inner surface of the stomach intuitively and comprehensively. In addition, the proposed automatic detection solution helps doctors locate polyps and reduces missed diagnoses. The main contributions of this paper are as follows. First, we develop a gastroscopic panorama reconstruction method that requires no additional hardware and properly handles texture dislocation and illumination imbalance. Second, we train an end-to-end multiobject detection network for gastroscopic panoramas based on a deep learning framework. Compared with traditional solutions, the automatic polyp detection system can locate all polyps on the inner wall of the stomach in real time and assist doctors in finding lesions. Third, the system was evaluated at the Affiliated Hospital of Zhejiang University. The results show that the average error of the panorama is less than 2 mm, the accuracy of polyp detection is 95%, and the recall rate is 99%. In addition, the research roadmap of this paper can guide endoscopy-assisted detection in other human soft cavities.

1. Introduction

Gastroscopy plays a major clinical role in the diagnosis of gastric diseases, and gastroscopic intervention is the most routine solution for detecting and diagnosing gastric polyps [1]. However, conventional endoscopic diagnosis of polyps is prone to misdiagnosis for the following reasons. First, the stomach is a soft cavity that deforms easily. Second, the gastric inner wall has many folds, so gastric polyps are not easy to see; during the examination, doctors need to move the endoscope lens back and forth to find them. Third, doctors control the endoscope from outside the body, and because of the narrow entrance and limited field of view, it is difficult to manipulate the lens flexibly enough to observe the gastric inner wall in detail and comprehensively [2]. Last but not least, follow-up examination of polyps often relies on ink injected during the previous procedure (see Figure 1). The ink injection area may fall off over time or be dissolved by the gastric mucosa, so previously located polyps can be missed [3]. For these reasons, it is important to improve the way polyp lesions are detected and identified during gastroscopic examination in order to reduce the rate of misdiagnosis.

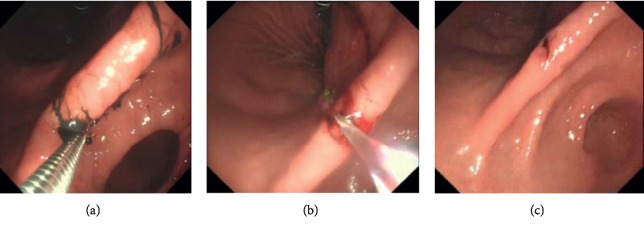

Figure 1.

Polyp detection in the traditional procedure. Lesions are marked by ink injection, but the ink may fade before the second examination. Reflective areas are also visible in the captured images.

Many studies have used modern technology to help gastroenterologists detect gastric polyps and reduce the rate of misdiagnosis [4]. For instance, some researchers combine computer vision with conventional endoscopic diagnosis to detect gastric polyps. A typical example is [5], which estimates the confidence distribution of polyps based on the polar matrix and the covariance matrix; the covariance matrix is used to prejudge possible lesions in endoscopic images to assist diagnosis. However, the experimental results show that the detection accuracy depends on the range of viewing angles as the camera moves, which limits its application. Gao et al. [6] designed a noninvasive biopsy marking system for gastroscopic examination, in which a static three-dimensional model of the gastric inner wall is constructed from CT. The model assists intraoperative navigation and biopsy relocation, but its detection and navigation accuracy are limited because a static preoperative model cannot accurately account for the flexible deformation of the stomach. To address this, some researchers developed real-time three-dimensional reconstruction methods for soft cavities based on the RGB images of the endoscope, which overcome the inability of a preoperative model to dynamically calibrate the deformation of soft organs [7]. However, because texture features are difficult to extract from endoscopic images, building stable real-time models remains an open problem. Besides three-dimensional models for the auxiliary examination of lesions, direct diagnosis in two-dimensional images is another important research direction.
For instance, Vemuri [8] proposed a real-time method for pathological localization that models stomach peristalsis as a regional affine transformation. However, the regional affine hypothesis generally does not hold in soft cavity organs. Furthermore, taking advantage of its noninvasive property, probe-based confocal laser endomicroscopy (pCLE) has been developed to help detect and locate polyps quickly in real time. However, this technology requires extra hardware and does not allow lesions to be revisited and reviewed [9]. Another research direction uses panorama technology to unfold the inner wall of soft organs. With the unfolded panorama, doctors can diagnose the inner wall of the target organ comprehensively and quickly, without repeatedly re-examining it and changing the viewing angle. This avoids misdiagnosis caused by occlusion and other issues and improves the diagnostic effect. However, panorama technology places high demands on image stitching and fusion: shadows, blur, or even dislocation may appear between stitched images, and regional distortion in the unfolded panorama can also affect the diagnosis [10, 11].

Based on the above studies, this paper proposes an automatic diagnosis system for gastric polyps based on a panorama image of the gastric inner wall. Specifically, the main contributions of this paper are as follows. First, we build a panorama model of the gastric inner wall. Unlike previous work, we do not rely on hardware to estimate the camera position, which makes the system easier to use in practice. Furthermore, we optimize the method by means of optical consistency to resolve registration errors, blur, and other problems caused by image stitching. Second, we develop an end-to-end panoramic multitarget detection network for the gastroscopic procedure. Unlike conventional deep learning detection frameworks, it takes the panorama image as network input, supports multitarget detection of polyps, and avoids stitching distortion that might mislead the doctors' diagnosis. Third, we conducted clinical trials of the entire system at the Affiliated Hospital of Zhejiang University. The experimental results show that the model error of our system is less than 2 mm and the recall of polyp detection is close to 100%. The system can assist doctors in diagnosis, help reduce the rate of misdiagnosis, improve diagnostic efficiency, and relieve the pressure of storing data on the server. Finally, the research method of this paper can, in principle, guide auxiliary diagnosis for other human soft organs. A promising CNN-based polyp detection method was also proposed in [12]. Compared with [12], the main distinction of our method is that our CNN framework takes the constructed gastric panorama as input. Moreover, our framework performs multitarget detection, so it can detect all of a patient's polyps from a single image.

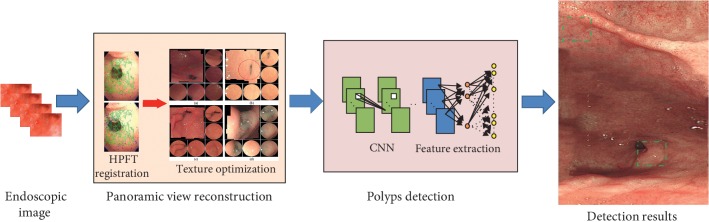

The algorithm flow chart is shown in Figure 2. The original image sequence from the endoscope is the input of the algorithm. After image registration and optimized texture fusion based on optical consistency, we obtain a comprehensive view of the gastric inner wall. The proposed deep learning framework is then applied to the generated panorama to detect gastric polyps automatically.

Figure 2.

The pipeline of our method. Original endoscopic images are used to generate the panorama, and polyps are then detected with our deep learning framework.

Compared with conventional computer vision problems, reconstructing a panorama of the gastric inner wall in a human soft cavity poses many challenges. First, features are hard to extract and match: endoscopes usually use fisheye lenses, so the captured images are severely distorted (see Figure 3). Moreover, the inner walls of soft cavities such as the stomach are almost entirely covered with mucosa, so the captured RGB images tend to contain reflective spots (see Figure 1). These problems degrade the stability of feature descriptors. Second, panorama stitching produces shadows and dislocation at the seams, so texture fusion is necessary. Third, conventional panorama techniques generally project and unfold the texture onto a cube or sphere model [13]. However, a soft cavity such as the stomach differs greatly from a standard cube or sphere, and reprojecting it directly causes severe distortion. Therefore, a new texture projection model is needed.

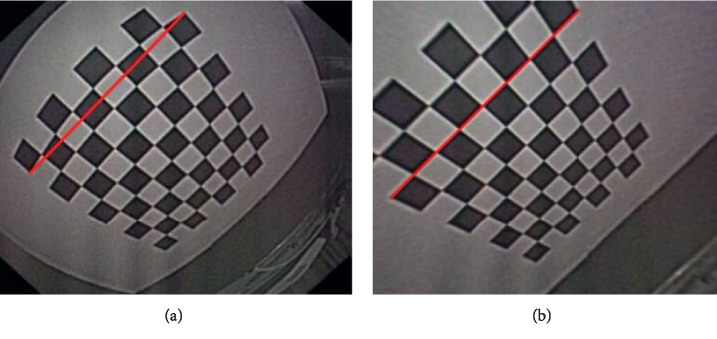

Figure 3.

(a) Original image captured by the endoscope; the chessboard is badly distorted (the red line serves as a reference). (b) Calibrated result.
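The paper does not say which distortion model was used for the calibration in Figure 3. As an assumption, the sketch below uses the common two-coefficient polynomial radial model, x_d = x_u (1 + k1 r² + k2 r⁴) on normalized image coordinates, and inverts it by fixed-point iteration. The coefficients k1 and k2 are hypothetical values; in practice they would come from chessboard calibration, and strongly distorted fisheye lenses often need a dedicated fisheye model instead.

```python
def distort(xu, yu, k1, k2):
    """Apply the radial model x_d = x_u * (1 + k1*r^2 + k2*r^4) to a normalized point."""
    r2 = xu * xu + yu * yu
    factor = 1.0 + k1 * r2 + k2 * r2 * r2
    return xu * factor, yu * factor


def undistort(xd, yd, k1, k2, iterations=50):
    """Invert the radial model by fixed-point iteration: x_u = x_d / factor(x_u)."""
    xu, yu = xd, yd  # initial guess: the distorted point itself
    for _ in range(iterations):
        r2 = xu * xu + yu * yu
        factor = 1.0 + k1 * r2 + k2 * r2 * r2
        xu, yu = xd / factor, yd / factor
    return xu, yu


if __name__ == "__main__":
    k1, k2 = -0.25, 0.05  # hypothetical barrel-distortion coefficients
    xd, yd = distort(0.4, -0.3, k1, k2)
    print(undistort(xd, yd, k1, k2))  # approximately (0.4, -0.3)
```

For mild distortion the iteration contracts quickly; for very large k1 or points far from the image center it may converge slowly or not at all, which is one reason calibration toolkits offer separate fisheye models.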

2. Panoramic View Construction

2.1. Image Registration

In previous work, researchers used an electromagnetic tracking device to estimate the real-time position of the endoscope in the stomach in order to solve the matching problem [7]. However, this approach cannot be applied to traditional endoscopic examination without hardware modifications. In this paper, to handle the texture characteristics of soft organs, we adopt the Homographic Patch Feature Transform (HPFT), which is based on the homography hypothesis [14]. Published results show that, compared with other patch feature transforms in traditional computer vision, HPFT is more effective for soft organs such as the stomach, whose main motion is regional peristalsis.

We first give an overview of HPFT. HPFT detects initial feature points with a conventional patch feature transform such as SIFT and then checks whether the local image blocks around matched points satisfy the homographic relation of computer vision:

$$\rho\, m' = H\, m \tag{1}$$

In Eq. (1), m′ and m are a pair of corresponding local feature points (in homogeneous coordinates) to be verified, H is the 3 × 3 homography matrix, and ρ is a scale factor. Based on this relation, HPFT divides the neighborhood of each initially matched feature point into image sub-blocks and verifies the similarity of corresponding sub-blocks using KL similarity. Sub-blocks that do not satisfy the homographic hypothesis are iteratively subdivided from their centers until every resulting sub-block satisfies it.
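As a minimal illustration of Eq. (1), the sketch below maps a point m through a 3 × 3 homography H, divides out the scale ρ (the third homogeneous coordinate), and tests whether the matched pairs of one sub-block agree with H within a pixel tolerance. The function names and the tolerance are hypothetical, and the KL similarity check and recursive subdivision of HPFT are not reproduced here.

```python
def apply_homography(H, m):
    """Return m' such that rho * (m', 1) = H (m, 1), i.e. Eq. (1) with rho divided out."""
    x, y = m
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    rho = H[2][0] * x + H[2][1] * y + H[2][2]  # the scale factor of Eq. (1)
    return u / rho, v / rho


def satisfies_homography(H, pairs, tol=1.5):
    """True if every pair (m, m') has transfer error ||H m - m'|| below tol pixels."""
    for m, m_prime in pairs:
        px, py = apply_homography(H, m)
        err = ((px - m_prime[0]) ** 2 + (py - m_prime[1]) ** 2) ** 0.5
        if err >= tol:
            return False  # this sub-block would be subdivided further
    return True


# Example: a pure translation by (5, -3) expressed as a homography.
H = [[1.0, 0.0, 5.0], [0.0, 1.0, -3.0], [0.0, 0.0, 1.0]]
print(satisfies_homography(H, [((10, 10), (15, 7)), ((0, 0), (5, -3))]))
```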

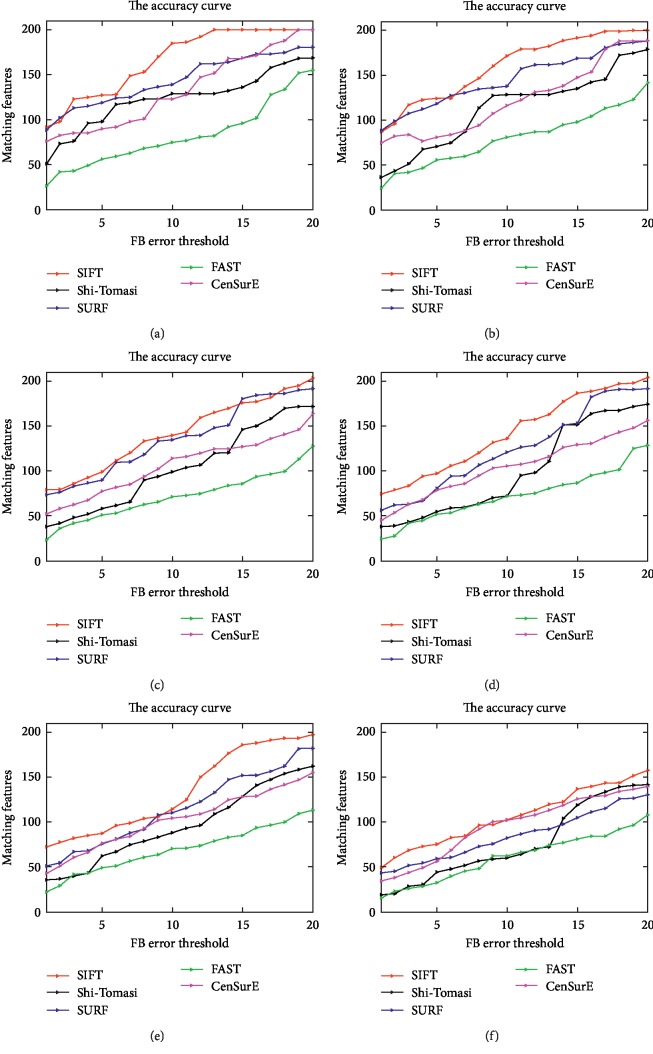

Our experiments show that HPFT yields better-distributed matched feature points than conventional patch feature transforms such as SIFT and FAST (see Figure 4).

Figure 4.

Registration results of the five tested methods. In (a) to (f), the registration methods were applied to original gastroscopic images and to images with added Gaussian noise (noise scale from 0.01 to 0.5). For each method, 200 features were initially detected, so the ideal number of matched features is also 200. In each panel, the colored curves show the number of detected features whose FB error is smaller than the corresponding FB error threshold (unit: pixels). The figure is reproduced from [15, 16]. (a) Original image. (b) Gaussian scale = 0.01. (c) Gaussian scale = 0.05. (d) Gaussian scale = 0.1. (e) Gaussian scale = 0.2. (f) Gaussian scale = 0.5. (g) Legend of the curves.
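Assuming FB refers to the forward-backward error (the distance between a feature's original position and the position it returns to after being matched into the other image and back again), each curve in Figure 4 can be computed as below: for every threshold, count the features whose FB error is smaller. The coordinates are made-up inputs, not data from the paper.

```python
import math


def fb_errors(original, back_projected):
    """Euclidean distance between each feature and its forward-then-backward match."""
    return [math.dist(p, q) for p, q in zip(original, back_projected)]


def count_below(errors, thresholds):
    """For each threshold, the number of features whose FB error is strictly smaller."""
    return [sum(1 for e in errors if e < t) for t in thresholds]


errors = fb_errors([(0, 0), (10, 10), (5, 5)], [(0, 1), (10, 13), (5, 5)])
print(count_below(errors, [0.5, 2, 4]))
```

With 200 initial features, a method whose curve reaches 200 at a small threshold matches nearly every feature consistently in both directions.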

Another problem in image registration is excluding mismatched feature point pairs. Conventional methods filter the matching results with epipolar constraints and similar techniques. However, the accuracy of epipolar constraints depends heavily on the camera viewpoint, so the filtering results are unsatisfactory. In this paper, we treat the entire process, from the gastroscope entering the stomach to leaving it, as a closed chain of image registrations and use closed-chain optimization to filter out mismatched point pairs.
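The paper does not give the exact form of the closed-chain optimization. One plausible ingredient, sketched below purely as an assumption, is a cycle-consistency test: because the image sequence starts and ends at the same place, a point carried through every pairwise transform around the loop should come back close to where it started. Transforms are 3 × 3 homogeneous matrices; the helper names and the drift threshold are hypothetical.

```python
import math


def translation(tx, ty):
    """3x3 homogeneous matrix for a pure translation (used in the example)."""
    return [[1.0, 0.0, tx], [0.0, 1.0, ty], [0.0, 0.0, 1.0]]


def transform(T, p):
    """Map point p = (x, y) through the 3x3 homogeneous transform T."""
    x, y = p
    u = T[0][0] * x + T[0][1] * y + T[0][2]
    v = T[1][0] * x + T[1][1] * y + T[1][2]
    w = T[2][0] * x + T[2][1] * y + T[2][2]
    return u / w, v / w


def loop_drift(chain, p):
    """Carry p through every pairwise transform of a closed chain
    (image 0 -> 1 -> ... -> n-1 -> 0) and return how far it lands from p."""
    q = p
    for T in chain:
        q = transform(T, q)
    return math.dist(p, q)


def chain_is_consistent(chain, sample_points, max_drift=2.0):
    """Hypothetical test: accept the loop if no sample point drifts
    more than max_drift pixels after one trip around the chain."""
    return all(loop_drift(chain, p) <= max_drift for p in sample_points)


good = [translation(5, 0), translation(3, 0), translation(-8, 0)]
bad = [translation(5, 0), translation(3, 0), translation(-4, 0)]
print(chain_is_consistent(good, [(1.0, 2.0)]), chain_is_consistent(bad, [(1.0, 2.0)]))
```

A failing loop only shows that some link is wrong; locating and removing the responsible matches would need a further step, such as re-checking the transfer error of each link.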

2.2. Optical Consistency Texture Fusion

After image registration, the main problem in building the panorama is how to handle the seams at the borders between stitched image blocks when the registration result is used directly. Seam artifacts have two main causes. First, the registration result obtained by HPFT and closed-chain optimization inevitably still contains mismatched point pairs, which introduce errors into the computed transformation matrices between images and ultimately appear at the seams. Second, the gastric inner wall is covered with mucosa, so images captured from different viewpoints show different degrees of glare or even direct reflection. Even when two images show the same physiological location, their pixel values may differ considerably.

Based on these observations, we develop a texture fusion method based on optical consistency. It directly takes the minimization of pixel differences across stitching seams as the optimization objective, which yields a smooth panorama of the gastric inner wall. The method is described below.

Let the sequence of images to be stitched be $\{I_1, I_2, I_3, \dots\}$. The stitching relationship between images $I_{i-1}$ and $I_i$ obtained by image registration is represented by the transformation $T_{i-1,i}$:

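$$\begin{pmatrix} x' \\ y' \\ 1 \end{pmatrix} = T_{i-1,i} \begin{pmatrix} x \\ y \\ 1 \end{pmatrix} \tag{2}$$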

That is, an arbitrary point (x, y) in image $I_{i-1}$ is mapped to (x′, y′) by $T_{i-1,i}$. After stitching, the pixel difference at the seam is

$$D_{i-1,i}(x, y) = I_{i-1}(x, y) - I_i(x', y') \tag{3}$$

These seam differences can be organized into the following objective function:

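$$E(c, T) = \sum_{i} \sum_{(x, y)} \omega \left( c(x, y) - I_i\big(T_i(x, y)\big) \right)^2 + \beta \sum_{i} T_i^{\top} T_i \tag{4}$$

where $c(x, y)$ is the fused pixel value at position $(x, y)$ and $T_i(x, y)$ is the position of $(x, y)$ in image $I_i$, obtained by chaining the transforms of Eq. (2).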

Eq. (4) is a function of the pixel value c and the transformation T. For simplicity, we take c as the average gray value of the corresponding pixels in the images. ω is the weight of the pixel differences, and $\beta T_i^{\top} T_i$ is a regularization term that prevents overfitting.
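To make the seam term concrete, the sketch below evaluates the squared seam differences of Eq. (3) for two grayscale images under an integer translation (t1, t2) only, summed over the overlap, with a uniform weight ω and a β(t1² + t2²) penalty in the spirit of the regularizer. Restricting T to integer translations is a simplifying assumption; the paper's transformation also includes rotation.

```python
def seam_cost(prev, curr, t1, t2, omega=1.0, beta=0.0):
    """omega * sum over the overlap of (prev(x, y) - curr(x + t1, y + t2))^2
    + beta * (t1^2 + t2^2).

    prev and curr are 2D lists indexed [y][x]; (x, y) in prev maps to
    (x + t1, y + t2) in curr, an integer-translation special case of Eq. (2).
    """
    height, width = len(curr), len(curr[0])
    total = 0.0
    for y, row in enumerate(prev):
        for x, value in enumerate(row):
            xp, yp = x + t1, y + t2
            if 0 <= xp < width and 0 <= yp < height:  # only overlapping pixels count
                total += (value - curr[yp][xp]) ** 2
    return omega * total + beta * (t1 * t1 + t2 * t2)


prev = [[1, 2, 3], [4, 5, 6]]
curr = [[2, 3, 9], [5, 6, 9]]  # prev shifted one pixel to the left
print(seam_cost(prev, curr, -1, 0), seam_cost(prev, curr, 0, 0))
```

Because the sum is not normalized, a transform that shrinks the overlap also shrinks the cost; a practical version would divide by the number of overlapping pixels or require a minimum overlap.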

We optimize Eq. (4) iteratively. First, with c held fixed, the transformation of each image is parameterized as a four-degree-of-freedom vector $T_k = (r_1, r_2, t_1, t_2)$, where $r_1$ and $r_2$ encode rotation and $t_1$ and $t_2$ encode translation. Under this parameterization, Eq. (4) is treated as a least-squares problem in T and solved with the KL or Gauss-Newton method. Then, with T fixed, minimizing Eq. (4) over c reduces to averaging the pixel values that are mapped to each panorama coordinate.
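The c-step has a simple closed form. Assuming the data term is $\omega\,\big(c(x,y) - I_i(T_i(x,y))\big)^2$ summed over the set $V(x,y)$ of images that cover position $(x, y)$, and noting that the regularizer does not depend on c, setting the derivative to zero gives the mean of the mapped pixel values, which is the averaging step described above:

$$
\frac{\partial E}{\partial c(x,y)} = 2\omega \sum_{i \in V(x,y)} \Big( c(x,y) - I_i\big(T_i(x,y)\big) \Big) = 0
\;\Longrightarrow\;
c(x,y) = \frac{1}{|V(x,y)|} \sum_{i \in V(x,y)} I_i\big(T_i(x,y)\big)
$$

If the weight varied per image, the same step would give a weighted mean instead.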

For the texture projection model, we adopt the double cube projection model to project and unfold the texture [17, 18]. Compared with conventional cube or sphere projection, the double cube model better handles the distortion introduced when the model is unfolded.

3. Automatic Detection for Polyps

After building the panorama of the gastric inner wall, we develop an automatic polyp detection technique to assist doctors in diagnosis. In computer vision, this type of problem is called object detection. Mainstream object detectors use deep learning frameworks to regress the target regions labeled in images, for example Faster RCNN [19] and SSD [20]. For panoramic images, two problems must be solved: first, how to collect a large amount of manually labeled panorama data; second, existing deep learning detectors mostly take natural images as input, and to our knowledge no published solution targets panoramic images.

3.1. Network Model

First, we need a network model for detection in gastroscopic panorama images. In the deep learning literature, the classic approach to object detection first proposes candidate bounding boxes in the image and then, as in image classification, predicts a category confidence for each box. Finally, a series of post-processing steps refines the results. The representative methods of this approach are RCNN and Faster RCNN [19], which are the most widely used in object detection. However, their cascaded frameworks are overly complex, and they converge and run slowly. In this paper, we adopt the SSD [20] framework for object detection in panorama images. Unlike RCNN, Faster RCNN, and related methods, SSD performs bounding box detection, category prediction, and bounding box refinement in parallel convolutional layers, which makes it faster and more accurate in localization [21].
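For reference, SSD [20] attaches default boxes to several feature maps. In the original SSD paper, the scale of the k-th of m feature maps is s_k = s_min + (s_max − s_min)(k − 1)/(m − 1) with s_min = 0.2 and s_max = 0.9, and a box with aspect ratio a has width s_k√a and height s_k/√a, relative to the input size. The sketch below computes these sizes; since the paper does not report its own settings, the values are the SSD defaults, not the authors' configuration, and SSD's extra aspect-1 box with scale √(s_k s_{k+1}) is omitted.

```python
import math


def default_box_scales(m, s_min=0.2, s_max=0.9):
    """Scale s_k for feature maps k = 1..m (m >= 2), linearly spaced as in SSD."""
    return [s_min + (s_max - s_min) * (k - 1) / (m - 1) for k in range(1, m + 1)]


def default_box_sizes(scale, aspect_ratios=(1.0, 2.0, 0.5, 3.0, 1.0 / 3.0)):
    """(width, height) of default boxes, relative to the input size, for one scale."""
    return [(scale * math.sqrt(a), scale / math.sqrt(a)) for a in aspect_ratios]


for k, s in enumerate(default_box_scales(6), start=1):
    print(k, [(round(w, 3), round(h, 3)) for w, h in default_box_sizes(s)])
```

Since polyps occupy a small fraction of the panorama, lowering s_min is one way to add smaller default boxes.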

Because polyps are relatively small in the panorama image, most of the bounding boxes predicted by SSD are negative samples. Compared with prediction on the original images, this large number of negative samples unbalances the training set and makes training hard to converge. To address this, we propose an optimized SSD called selective SSD. In selective SSD, the negative samples detected in each iteration are sorted according to the original SSD loss function, and only the negatives with high confidence are used for training, while the low-confidence ones are filtered out. This alleviates the convergence problem and improves accuracy to a certain degree (see Table 1).
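The selection rule can be sketched as follows, assuming each default box carries a positive/negative label and a predicted polyp confidence. All positives are kept, plus only the most confident (hardest) negatives, capped at neg_ratio per positive. The ratio is a hypothetical parameter: the original SSD uses at most 3:1 negatives to positives for hard negative mining, and the paper does not state the value it used.

```python
def select_training_boxes(labels, polyp_conf, neg_ratio=3):
    """Indices of boxes that enter the loss: every positive box plus the
    highest-confidence negatives, at most neg_ratio per positive."""
    positives = [i for i, is_pos in enumerate(labels) if is_pos]
    negatives = [i for i, is_pos in enumerate(labels) if not is_pos]
    # Most confident false alarms first; low-confidence negatives are dropped.
    negatives.sort(key=lambda i: polyp_conf[i], reverse=True)
    # Keep a few negatives even in images without any positive box.
    keep = min(len(negatives), neg_ratio * max(len(positives), 1))
    return sorted(positives + negatives[:keep])


labels = [True, False, False, False, False, False]
conf = [0.9, 0.1, 0.8, 0.3, 0.7, 0.05]
print(select_training_boxes(labels, conf, neg_ratio=2))
```

With two classes (polyp and background), the background confidence loss of a negative box, −log(1 − p_polyp), increases with p_polyp, so ranking by that loss or by polyp confidence gives the same order.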

Table 1.

Comparison of deep learning frameworks for polyp detection.

| Method | Accuracy | Recall |
|---|---|---|
| Selective SSD | 95% | 100% |
| Original SSD | 84% | 75% |
| Faster RCNN | 81% | 84% |
| RCNN | 79% | 71% |

3.2. Sample Collection

Besides the network model, obtaining training data in panorama form is also key to polyp detection. Using the gastroscopic examination system of Zhejiang University Affiliated Hospital, we recruited clinical experts to mark the polyps in the original gastric images. We then used the proposed panorama technique to construct panorama images, on which clinical experts marked the ground truth. A multiple-check scheme is adopted: only polyps identified by two or more experts are counted as true positive samples. In addition to the experts' annotations, we use data augmentation to obtain more training samples: each original panorama is locally smoothed with Gaussian functions at different scales.
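A minimal sketch of this augmentation, assuming a separable Gaussian blur on a grayscale image stored as a 2D list, with edge replication at the borders. The σ values are hypothetical, since the paper does not list the scales used.

```python
import math


def gaussian_kernel(sigma):
    """Normalized 1D Gaussian kernel with radius ceil(3 * sigma)."""
    radius = max(1, math.ceil(3 * sigma))
    weights = [math.exp(-(k * k) / (2 * sigma * sigma)) for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_rows(image, kernel):
    """Convolve every row with the kernel, replicating edge pixels."""
    radius = len(kernel) // 2
    out = []
    for row in image:
        width = len(row)
        out.append([
            sum(kernel[k + radius] * row[min(max(x + k, 0), width - 1)]
                for k in range(-radius, radius + 1))
            for x in range(width)
        ])
    return out


def transpose(image):
    return [list(col) for col in zip(*image)]


def gaussian_blur(image, sigma):
    """Separable blur: filter rows, then columns (via transpose)."""
    kernel = gaussian_kernel(sigma)
    return transpose(blur_rows(transpose(blur_rows(image, kernel)), kernel))


def augment(image, sigmas=(0.5, 1.0, 2.0)):
    """One smoothed copy of the panorama per (hypothetical) scale sigma."""
    return [gaussian_blur(image, s) for s in sigmas]
```

Blurring does not move the polyps, so each augmented copy can reuse the expert bounding boxes of the original panorama.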

4. Experimental Evaluation and Results

To verify the accuracy of the proposed panorama-based polyp detection method, we embedded the system into a gastroscopy system in the gastroenterology department of Zhejiang University Affiliated Hospital. The endoscope is a GIF-QX-420 from Olympus (Japan), which captures images at 30 frames per second with a resolution of 560 × 480. A total of 43 volunteers were recruited, all with a history of moderate or severe gastrointestinal disease. Patients provided written informed consent, and the collected clinical data can be used for evaluation and follow-up visits. No adverse events occurred during the experiment. Volunteer information is given in Table 2.

Table 2.

Volunteer information.

| Characteristic | Value |
|---|---|
| Average age (range) | 54 (40–64) |
| Male | 32 (75%) |
| Smoker | 27 (65%) |
| Alcohol use | 39 (90%) |
| Intestinal metaplasia (mild/moderate/severe) | 0/12/31 |

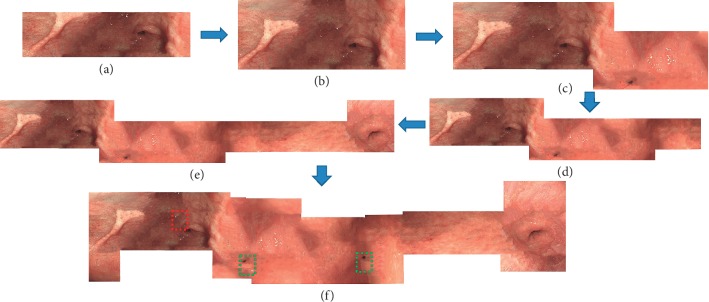

Gastroenterologists can construct the panorama image without any extra operations. During the examination, the system extracts HPFT feature descriptors in real time from the texture of the gastric inner wall captured by the doctor and estimates the real-time position of the camera. The panorama is gradually constructed and unfolded while the polyps in it are detected in real time, as shown in Figure 5.

Figure 5.

The experimental result.

4.1. Panorama Assessment

We first evaluate the quality of the panorama. Compared with methods that construct a gastroscopic panorama with an electromagnetic tracking device [18], our method is simpler to apply. We use the texture metric error proposed in [22] to assess the accuracy of the panorama; the results are shown in Table 3. Overall, the average error of our results is 0.33, lower than that of the published method [18].

Table 3.

Quantitative evaluation of panorama results (43 volunteers) using the texture metric error (lower is better). We compare the error of Liu's method [18] with that of our method. The overall texture error of our method is 0.33, much lower than that of [18]. Our method is also better on the angularis, antrum, and stomach body evaluated separately.

| Method (texture metric error) | Angularis | Antrum | Stomach body | Overall |
|---|---|---|---|---|
| Liu's method [18] | 0.53 | 0.49 | 0.44 | 0.49 |
| Our method | 0.38 | 0.35 | 0.27 | 0.33 |

4.2. Polyp Detection Evaluation

To evaluate the accuracy of polyp detection, we compare the regions marked by clinicians with the results of automatic detection (see Table 4). A detection is counted as a positive sample if its intersection over union (IOU) with the clinician-marked region exceeds 0.5, where IOU is the area of the intersection of the two regions divided by the area of their union.
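A minimal sketch of the IOU test, assuming axis-aligned boxes given as (x_min, y_min, x_max, y_max); the box format and function names are assumptions, since the paper does not specify them.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))  # overlap width
    iy = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))  # overlap height
    inter = ix * iy
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_positive(detected, marked, threshold=0.5):
    """Detection counts as positive when its IOU with the clinician's box exceeds 0.5."""
    return iou(detected, marked) > threshold


print(iou((0, 0, 2, 2), (1, 1, 3, 3)))  # overlap 1, union 7
```

Two equal boxes offset by half their width and height reach only 1/7 ≈ 0.14, so the 0.5 threshold requires substantial overlap.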

Table 4.

Polyp detection compared with clinical diagnosis. We evaluate the recall and accuracy at different anatomical locations.

| Location | Clinical diagnosis | Our method | Recall | IOU ≤0.5 | IOU >0.5 | Accuracy |
|---|---|---|---|---|---|---|
| Angularis | 58 | 58 | 100% | 2 | 56 | 96.5% |
| Antrum | 71 | 71 | 100% | 4 | 67 | 94.4% |
| Stomach body | 40 | 40 | 100% | 1 | 39 | 97.5% |
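The accuracy column can be reproduced from the counts in Table 4 by taking accuracy as matched detections over all detections, where the matched counts are 56, 67, and 39 (the counts that reproduce the reported accuracies), and recall as detections over clinical diagnoses. Pooling the three locations gives 162/169 ≈ 95.9%, close to the 95% quoted in the text. The table appears to truncate rather than round (56/58 = 96.55%).

```python
# (location, clinical marks, detections, detections with IOU > 0.5), from Table 4
rows = [
    ("Angularis", 58, 58, 56),
    ("Antrum", 71, 71, 67),
    ("Stomach body", 40, 40, 39),
]

for name, clinical, detected, matched in rows:
    recall = detected / clinical
    accuracy = matched / detected
    print(f"{name}: recall {recall:.1%}, accuracy {accuracy:.1%}")

total_matched = sum(r[3] for r in rows)
total_detected = sum(r[2] for r in rows)
print(f"Pooled accuracy: {total_matched}/{total_detected} = {total_matched / total_detected:.1%}")
```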

Overall, with a recall close to 100%, the detection accuracy is 95%, which meets the requirements of clinical auxiliary diagnosis.

Because we developed the selective SSD framework specifically for panoramic images, we also compared its performance with that of other object detection methods.

Table 1 shows that selective SSD outperforms the other published methods, and its clear improvement over the original SSD (accuracy 95% vs. 84%, recall 100% vs. 75%) indicates that our method is effective.

5. Discussion and Conclusion

Gastroscopy is one of the most routine procedures for diagnosing gastric diseases, and improving diagnostic efficiency while reducing the risk of misdiagnosis is clinically important. Compared with published work, we are the first to put forward an end-to-end, full-view automatic detection technique. Specifically, we developed a method that helps doctors see the whole inner surface of the stomach through a panorama obtained without extra devices. Then, on the basis of the panorama image, we proposed a deep learning method for auxiliary polyp diagnosis that can improve the efficiency of doctors' diagnosis. In principle, the method can also serve as a reference for endoscopic diagnosis in other human soft organs.

Acknowledgments

The project was supported by the Medical Scientific Research Foundation of Zhejiang Province, China (2018PY022).

Data Availability

The data used to support the findings of this study are available from the corresponding author upon request.

Conflicts of Interest

The authors declare that there are no conflicts of interest regarding the publication of this paper.

References

1. Van Der Post R. S., van Dieren J., Grelack A., et al. Outcomes of screening gastroscopy in first-degree relatives of patients fulfilling hereditary diffuse gastric cancer criteria. Gastrointestinal Endoscopy. 2018;87(2):397–404. doi: 10.1016/j.gie.2017.04.016.

2. Wickremeratne T., Turner S., O’Beirne J. Systematic review with meta-analysis: ultra-thin gastroscopy compared to conventional gastroscopy for the diagnosis of oesophageal varices in people with cirrhosis. Alimentary Pharmacology & Therapeutics. 2019;49(12):1464–1473. doi: 10.1111/apt.15282.

3. Denzer U. W., Rösch T., Hoytat B., et al. Magnetically guided capsule versus conventional gastroscopy for upper abdominal complaints. Journal of Clinical Gastroenterology. 2015;49(2):101–107. doi: 10.1097/mcg.0000000000000110.

4. Lovat L. B., Johnson K., Mackenzie G. D., et al. Elastic scattering spectroscopy accurately detects high grade dysplasia and cancer in Barrett’s oesophagus. Gut. 2006;55(8):1078–1083. doi: 10.1136/gut.2005.081497.

5. Leonard S., Sinha A., Reiter A., et al. Evaluation and stability analysis of video-based navigation system for functional endoscopic sinus surgery on in vivo clinical data. IEEE Transactions on Medical Imaging. 2018;37(10):2185–2195. doi: 10.1109/tmi.2018.2833868.

6. Gao M., Chen Y., Liu Q., Huang C., Li Z. U., Zhang D. H. Three-dimensional path planning and guidance of leg vascular based on improved ant colony algorithm in augmented reality. Journal of Medical Systems. 2015;39(11):133. doi: 10.1007/s10916-015-0315-2.

7. Wang B., Liu J., Zong Y., Duan H. Dynamic 3D reconstruction of gastric internal surface under gastroscopy. Journal of Medical Imaging and Health Informatics. 2014;4(5):797–802. doi: 10.1166/jmihi.2014.1323.

8. Vemuri A. S. Survey of computer vision and machine learning in gastrointestinal endoscopy. 2019. https://arxiv.org/abs/1904.13307.

9. Ye M., Johns E., Walter B., Meining A., Yang G.-Z. Robust image descriptors for real-time inter-examination retargeting in gastrointestinal endoscopy. 2016. https://arxiv.org/abs/1605.05757.

10. Horakerappa M., Ali K., Gouri M. A study on histopathological spectrum of upper gastrointestinal tract endoscopic biopsies. International Journal of Medical Research & Health Sciences. 2013;2:418–424.

11. Zeng J. L., Cheng Y. H., Wu T. Y., Liu D.-G., Cheng C.-H. MicroEYE: a wireless multiple-lenses panoramic endoscopic system. Proceedings of the International Conference on Advanced Engineering Theory & Applications; December 2017; Ho Chi Minh City, Vietnam.

12. Zhang X., Chen F., Yu T., et al. Real-time gastric polyp detection using convolutional neural networks. PLoS One. 2019;14(3):e0214133. doi: 10.1371/journal.pone.0214133.

13. Güneri E. A., Olgun Y. Endoscope-assisted cochlear implantation. Clinical & Experimental Otorhinolaryngology. 2018;11(2):89–95. doi: 10.21053/ceo.2017.00927.

14. Cohen A. R. Endoscope-assisted microsurgical subtemporal keyhole approach to the posterolateral suprasellar region and basal cisterns. World Neurosurgery. 2017;103. doi: 10.1016/j.wneu.2017.02.054.

15. Hu W., Zhang X., Wang B., et al. Homographic patch feature transform: a robustness registration for gastroscopic surgery. PLoS One. 2016;11(4):e0153202. doi: 10.1371/journal.pone.0153202.

16. Wang B., Hu W., Liu J., Si J., Duan H. Gastroscopic image graph: application to noninvasive multitarget tracking under gastroscopy. Computational and Mathematical Methods in Medicine. 2014;2014:974038. doi: 10.1155/2014/974038.

17. Ma J., Zhang P., Zhang Y., et al. Effect of dezocine combined with propofol on painless gastroscopy in patients with suspect gastric carcinoma. Journal of Cancer Research & Therapeutics. 2016;12:C271. doi: 10.4103/0973-1482.200755.

18. Liu J., Wang B., Hu W., et al. Global and local panoramic views for gastroscopy: an assisted method of gastroscopic lesion surveillance. IEEE Transactions on Biomedical Engineering. 2015;62(9):1. doi: 10.1109/tbme.2015.2424438.

19. Ren S., He K., Girshick R., Sun J. Faster R-CNN: towards real-time object detection with region proposal networks. Proceedings of the International Conference on Neural Information Processing Systems; November 2015; Istanbul, Turkey.

20. Liu W., Anguelov D., Erhan D., et al. SSD: single shot multibox detector. Proceedings of the European Conference on Computer Vision; October 2016; Amsterdam, The Netherlands. Springer; pp. 21–37.

21. Chen L. C., Papandreou G., Kokkinos I., et al. DeepLab: semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2017;40(4):834–848. doi: 10.1109/TPAMI.2017.2699184.

22. Cheung G., Yang L., Tan Z., et al. A content-aware metric for stitched panoramic image quality assessment. Proceedings of the 2017 IEEE International Conference on Computer Vision Workshop (ICCVW); October 2017; Venice, Italy. IEEE.
