Summary

Driving perception by direct activation of neural ensembles in cortex is a necessary step for achieving a causal understanding of the neural code for auditory perception and developing central sensory rehabilitation methods. Here, using optogenetic manipulations during an auditory discrimination task in mice, we show that auditory cortex can be short-circuited by coarser pathways for simple sound identification. Yet when the sensory decision becomes more complex, involving temporal integration of information, auditory cortex activity is required for sound discrimination and targeted activation of specific cortical ensembles changes perceptual decisions, as predicted by our readout of the cortical code. Hence, auditory cortex representations contribute to sound discriminations by refining decisions from parallel routes.

Highlights

- Auditory cortex is dispensable for discrimination of dissimilar pure tones in mice
- Auditory cortex is involved in a sound discrimination requiring temporal integration
- Focal cortical activations bias choices in cortex-dependent discriminations
- Discrimination is faster for pure tones than for optogenetic cortical activations

In this study, Ceballo et al. show that targeted activation of specific neural ensembles in auditory cortex changes perceptual decisions in a difficult auditory discrimination task, although auditory cortex is not involved in easier decisions.

Introduction

The role of primary sensory cortical areas in perceptual decisions is complex. Primary sensory areas are often viewed as necessary links between peripheral sensory information and decision centers, but multiple observations challenge this simplified model. For example, human subjects with primary visual cortex lesions display residual visual abilities, a phenomenon termed “blindsight” (Sanders et al., 1974, Schmid et al., 2010). In animals, classical associative conditioning (Lashley, 1950, LeDoux et al., 1984) or some operant behaviors (Hong et al., 2018) based on sensory stimuli can be performed in the absence of primary sensory cortex. However, several studies also report sensory-based behaviors that are abolished or severely impaired by primary sensory cortex silencing (Letzkus et al., 2011, O’Connor et al., 2010, Poort et al., 2015, Sachidhanandam et al., 2013). In addition, cortical stimulation experiments show that primary cortex for all sensory modalities can perturb perceptual decisions or initiate sensory-driven behaviors (Choi et al., 2011, Houweling and Brecht, 2008, Huber et al., 2008, Musall et al., 2014, O’Connor et al., 2013, Peng et al., 2015, Salzman et al., 1990, Yang et al., 2008, Znamenskiy and Zador, 2013), suggesting a role in perception. This apparent contradiction is particularly evident in hearing, for which involvement of auditory cortex (AC) is controversial even for discrimination of two distinct sounds. Indeed, lesions or reversible silencing of auditory cortex lead to deficits or have little effect, depending on task conditions, silencing methods, and animal models (Diamond and Neff, 1957, Gimenez et al., 2015, Harrington et al., 2001, Jaramillo and Zador, 2011, Kuchibhotla et al., 2017, Ohl et al., 1999, Pai et al., 2011, Rybalko et al., 2006, Talwar et al., 2001). Thus, auditory cortex does not seem to be always necessary for sound discrimination.

Moreover, the requirement for auditory cortex in particular task settings is open to two alternative mechanistic interpretations. One alternative is that the AC requirement reflects a permissive role for the task (Otchy et al., 2015), for example, by providing some global gating signals to other areas, which are not informative about the decision to be taken but without which the behavioral decision process is impaired. The second alternative is that AC actually provides, for each stimulus, distinct pieces of information that contribute to driving the discriminative choices. Optogenetic manipulations of AC activity can modulate auditory discrimination performance (Aizenberg et al., 2015), which could be explained by either a permissive or a driving role of AC in the task. Targeted manipulation of AC outputs in the striatum can bias sound frequency discrimination toward the sound frequency corresponding to the preferred frequency of the manipulated neurons (Znamenskiy and Zador, 2013). This indicates that AC output can be sufficient to drive discrimination, but as the effect of complete AC inactivation was not tested in this task, it remains possible that this manipulation does not reflect the natural drive occurring in the unperturbed behavior. In support of this, targeted manipulation of generic AC neurons failed to drive consistent biases in this task (Znamenskiy and Zador, 2013). Thus, necessity and sufficiency of precise auditory cortex activity patterns in a sound discrimination behavior remain to be established.

Here, we combine optogenetic silencing and patterned activation techniques in head-fixed mice to show that AC is not required in a simple frequency discrimination task but is necessary for a difficult discrimination involving sounds with frequency overlap. We also show, based on a discrimination of distinct optogenetically driven AC activity patterns, that specific AC information is sufficient for driving a discrimination, and decisions in this case also take longer than choices made in the simple task. Last, we show that focal stimulation of AC is able to modify the animal’s choice in the difficult task, but not in the simple one. We also show that the AC region most sensitive to focal stimulation encodes auditory features used by the mouse to discriminate the two trained sounds. These results indicate that auditory cortex provides necessary and sufficient information to drive decisions in difficult sound discriminations, although other pathways bypass it in simple discriminations.

Results

Requirement of Auditory Cortex Depends on Sound Discrimination Complexity

Lesion studies in gerbils, rats, cats, and monkeys (Diamond and Neff, 1957, Harrington et al., 2001, Ohl et al., 1999, Rybalko et al., 2006) suggest that the involvement of auditory cortex in sound discrimination could be related to the sound features that have to be discriminated and thus potentially to the difficulty of the discrimination. To test this idea, we trained different groups of mice to perform a head-fixed Go/NoGo task: one group was trained to discriminate pure frequency features (a 4-kHz against a 16-kHz pure tone; PTvsPT task), and a second group had to integrate frequency variations over time in order to discriminate a linearly rising, frequency-modulated sound (4- to 12-kHz) against a pure tone (4-kHz; FMvsPT task; Figure 1A). Although the PTvsPT task was rapidly learned, the FMvsPT task was more challenging, as it required much longer training (82 ± 12 trials, n = 34 mice for the PTvsPT against 416 ± 54 trials, n = 28 mice, for FMvsPT task to reach 80% performance; Wilcoxon rank-sum test; p = 2.5 × 10⁻⁸; Figures 1B and 1C). We thus wondered whether this difference in task difficulty could relate to differential involvement of AC. To address this question, we decided to silence AC by activating parvalbumin-positive interneurons (PV) expressing channelrhodopsin2 (hChR2-tdTomato). Electrophysiological calibration in awake, passive mice (Figures 2A and S1) showed that this manipulation strongly disrupted cortical activity (e.g., Figure 2B), decreasing population firing rates to target sounds by ∼70%, with about 50% of putative PV-negative neurons displaying a complete suppression of their response (Figures 2C and S1). To evaluate the effect of PV activation on task performance, we trained a linear support vector machine (SVM) classifier to discriminate the two target sounds based on the population activity of putative PV-negative neurons (n = 125 neurons; recorded in 14 separate populations across 4 mice). 
We then measured the performance of the classifier with AC responses to the same sounds during PV activation. For the pure tones, the SVM performance dropped from almost perfect classification to less than 70%, and for the pure tone versus FM sound, SVM performance dropped to chance levels (Figure 2D), suggesting that the optogenetic manipulation perturbs sound encoding, although, given the small size of our neuronal sample, we cannot verify to what extent the information remains at a broader scale.

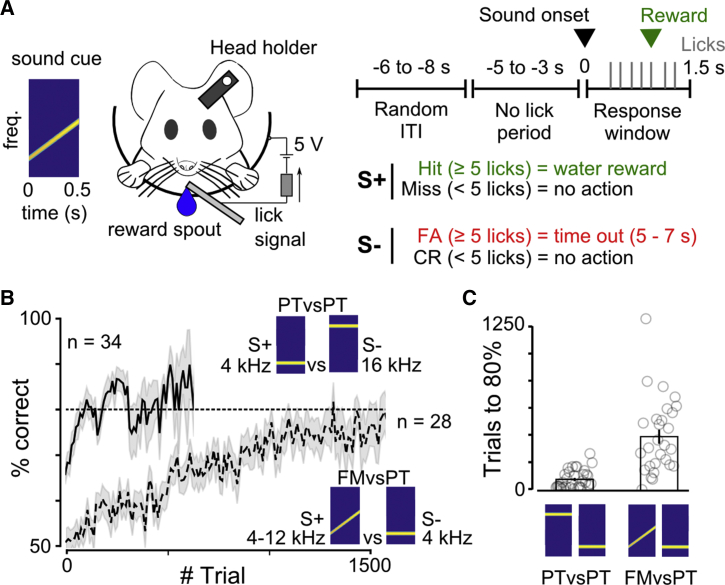

Figure 1.

Discriminating a Frequency-Modulated Sound from a Pure Tone Is More Difficult Than Discriminating Two Distant Pure Tones

(A) Sketch of the head-fixed Go/NoGo discrimination task.

(B) Learning curves for all mice performing each task.

(C) Number of trials needed to reach 80% discrimination performance for PTvsPT and FMvsPT discrimination task (PTvsPT, n = 34 mice; FMvsPT, n = 28 mice; Wilcoxon rank-sum test; p < 0.001). Open circles correspond to single mice. Bar plots show the mean and SEM.

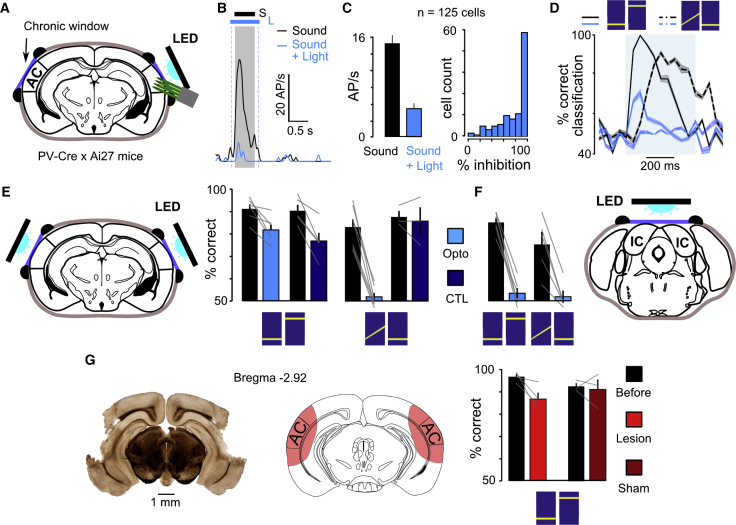

Figure 2.

Cortical Requirement for Sound Discrimination Depends on Discrimination Complexity

(A) Sketch of the optogenetic inactivation strategy through a cranial window covering the primary and secondary auditory cortex. For calibration, silicon probes were inserted beneath the window.

(B) Sound response post-stimulus time histogram (PSTH) of a sample PV-negative cell with and without light-driven PV neuron activation.

(C) Mean population response to sounds with and without optogenetic activation of parvalbumin neurons (15 ± 1.4 Hz versus 4.7 ± 0.9 Hz; n = 125 single units; p = 6.2 × 10⁻¹⁸; Wilcoxon rank-sum test) and distribution of sound response reduction during light-ON trials for putative PV-negative neurons.

(D) S+ versus S− discrimination performance for linear SVM classifiers trained on a sample of normal population responses (5 repetitions; one classifier for each 50-ms time bin) and tested on either normal population responses or on population responses recorded during optogenetic inactivation (5 independent repetitions on the same 50-ms time bin; n = 125 single units from 4 mice).

(E) Discrimination performance without (black) and with (blue) optogenetic inactivation of AC during PTvsPT (left; 91% ± 2.4% versus 82% ± 2%; n = 6 mice; p = 0.025; Wilcoxon rank-sum test) and FMvsPT (right; 83% ± 1.7% versus 52% ± 3.5%; n = 7 mice; p = 0.002; Wilcoxon rank-sum test) tasks in mice expressing ChR2 in PV interneurons. The same measurements are shown for control mice without ChR2 expression (dark blue).

(F) Discrimination performance without (black) and with (blue) optogenetic inactivation of inferior colliculus during PTvsPT (left; 85% ± 2.3% versus 54% ± 1.9%; n = 6 mice; p = 0.004; Wilcoxon rank-sum test) and FMvsPT (right; 75% ± 2.7% versus 52% ± 5.6%; n = 5 mice; p = 0.016; Wilcoxon rank-sum test) tasks.

(G) Left: example histological section showing the extent of bilateral AC lesions. Right: mean discrimination performance during PTvsPT before and after AC lesion (97% ± 1.1% versus 87% ± 2.9%; n = 4 mice; p = 0.043; Wilcoxon rank-sum test) or sham surgery (93% ± 1.4% versus 91% ± 4.5%; n = 4 mice; p = 0.8; Wilcoxon rank-sum test) is shown. Bar plots show the mean and SEM throughout the figure.

When bilaterally applying this PV activation protocol through cranial windows in behaving mice, we indeed observed a drop of performance down to chance level (50%) in the FMvsPT task, independent of whether the FM sweep was the rewarded or non-rewarded stimulus (Figure S2), while mice lacking ChR2 expression performed normally (Figure 2E). However, the same manipulation during the PTvsPT task yielded less than a 10% performance drop (Figure 2E), which is significantly lower than the ∼30% performance drop to chance level observed for the FMvsPT task (Wilcoxon rank-sum test; p = 0.002; n = 6 and 7 mice). This mild impact was not due to the wide difference in frequency in our PTvsPT task, as mice were not more impaired by optogenetic AC silencing when discriminating 4-kHz versus 8-kHz or 4-kHz versus 6-kHz (Figure S2), but we cannot exclude AC involvement for even finer discriminations. Because the same inactivation strategy applied to the inferior colliculus, an earlier stage of the auditory system, fully abolished PTvsPT performance (Figure 2F), we suspected that the continued performance of the PTvsPT task after AC perturbation was not due to incompleteness of the silencing (Figures 2C and S1) but rather to the absence of AC involvement. We thus performed bilateral lesions that covered the entire AC and observed only a ∼10% drop in behavioral performance, even for a smaller frequency difference, on the day following lesion (Figures 2G and S2). In summary, AC is dispensable for a simple pure tone discrimination, but it is necessary for the more difficult FMvsPT task.

Information from Auditory Cortex Can Drive Slow Discriminative Choices

Necessity alone does not prove that AC activity patterns causally drive decisions in the FMvsPT task (Hong et al., 2018, Otchy et al., 2015). Seeking to establish a proof of sufficiency for AC activity in this task, we first tested whether two distinct activity patterns unilaterally delivered to AC can provide enough information to drive a discrimination task, as so far, cortical stimulation studies have only demonstrated detection of a single cortical stimulation (Houweling and Brecht, 2008, Huber et al., 2008, Musall et al., 2014, O’Connor et al., 2013, Peng et al., 2015, Salzman et al., 1990, Znamenskiy and Zador, 2013) or discrimination of two stimulations in different cortical areas or hemispheres (Choi et al., 2011, Manzur et al., 2013, Yang et al., 2008). To this end, we used a micro-mirror device (Dhawale et al., 2010, Zhu et al., 2012) to apply two-dimensional light patterns onto the AC surface through a cranial window in Emx1-Cre × Ai27 mice expressing ChR2 in pyramidal neurons (Figure 3A).

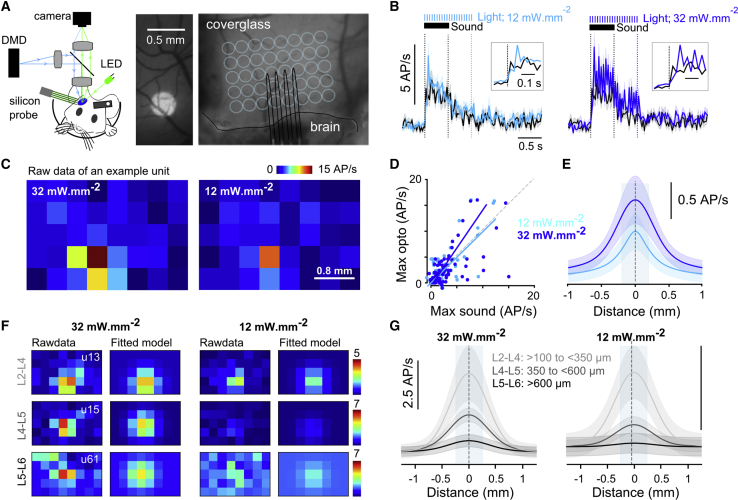

Figure 3.

2D Light Pattern Delivery Triggers Focal Activity in Auditory Cortex

(A) Left: photo-stimulation setup and optogenetic calibration protocol. Center: example image of an ∼400-μm disk projected onto the cranial window is shown. Right: image of the AC cranial window with a sketch of the silicon probe insertion site and grid locations where light was projected is shown (light blue).

(B) Time course of mean firing rate (50-ms time bin) in response to a single pure tone of 500 ms at 70-dB SPL (black line) or in response to 12-mW.mm⁻² (left) or 32-mW.mm⁻² (right) focal optogenetic stimulations (light blue line; disk diameter 400 μm) for n = 84 single units (shadings represent SEM). Insets: magnification at stimulus onset is shown.

(C) 2D maps of an example single unit for two light intensities, representing the mean firing rate over 10 repetitions during photo-stimulation of the different locations on the grid.

(D) Best photo-stimulation response plotted against best sound response measured as the largest trial-averaged response over a 500-ms window starting at stimulus onset. For 12 mW.mm⁻², the slope of the regression line was 1.01 and the correlation coefficient 0.74; p = 2.4 × 10⁻¹⁶; n = 84 single units recorded between 300 and 900 μm in depth.

(E) Mean of Gaussian models that were fitted to the 2D lateral distribution profile of photo-stimulation responses (shadings represent SEM). Half-width diameter: 560 ± 147 μm at 12 mW.mm⁻² and 800 ± 235 μm at 32 mW.mm⁻² (n = 84 single units).

(F) 2D maps as in (C), together with the fitted 2D Gaussian model for three single units recorded at three different depths (L2–L4: >100 μm to <350 μm; L4 to L5: >350 μm to <600 μm; L5 to L6: >600 μm).

(G) Mean 1D profile of the fitted Gaussian models as in (E) but for each estimated depth range defined in (F) and for each light intensity. Number of cells: 32 mW.mm⁻², L2–L4: n = 31, L4 to L5: n = 66, and L5 to L6: n = 47; 12 mW.mm⁻², L2–L4: n = 29, L4 to L5: n = 62, and L5 to L6: n = 34.

We chose to trigger the two discriminated activity patterns with light disks of 0.4 mm diameter at two distinct positions. To make sure that optogenetic patterns were qualitatively similar to sound-evoked activity, we calibrated them in awake mice by recording isolated single units with multi-electrode silicon probes while targeting the light disks to many locations within the cranial window (Figures 3A and 3B). In this procedure, we observed that recorded single units tended to respond only to a subset of the tested locations (e.g., Figure 3C). Pooling together the activity of all recorded neurons, we observed that optogenetically and sound-triggered responses had similar latencies and time courses above a 50-ms timescale (Figure 3B; note that optogenetic responses followed the 20-Hz modulation of the light stimulus below the 50-ms timescale). Moreover, response amplitudes were similar, in particular for the lowest light intensity tested (12 mW.mm⁻²; Figure 3B), as reflected by a high correlation of response amplitudes to the preferred sound and the optogenetic pattern (Figure 3D). Last, the spatial extent of optogenetic responses (600–800 μm diameter, depending on light intensity; Figure 3E) matched the extent of pure tone responses in primary auditory cortex (e.g., Figure S3) and changed little with depth (Figures 3F and 3G), although evoked population firing rates were clearly reduced in the deepest layers. Together, these measurements indicated that the chosen cortical stimulation patterns yielded response characteristics within the range of natural sound responses.

Using the same behavioral protocol as for the sound discrimination tasks, we trained mice to discriminate between unilateral cortical stimulations (32 mW.mm⁻²) in low- versus high-frequency tonotopic areas of the primary auditory field of AC (Figures 4A–4C). The locations of these areas with respect to blood vessel patterns were identified using intrinsic imaging (Figures 4C and S3) as previously established (Bathellier et al., 2012, Deneux et al., 2016, Kalatsky et al., 2005, Nelken et al., 2004). Blood vessels were then used as landmarks to robustly target the same regions across days. We observed that mice could learn to discriminate these artificial AC patterns within hundreds of trials (Figures 4D and S3), showing that AC activity can drive a discrimination task.

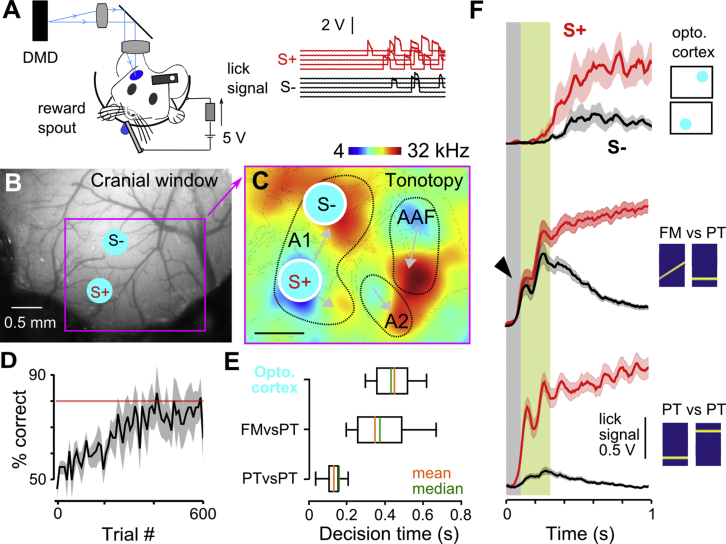

Figure 4.

Mice Can Discriminate Two Distinct Artificial Activity Patterns in Auditory Cortex

(A) Sketch of the Go/NoGo discrimination task for optogenetic stimuli with sample trials showing licking signals for each stimulus.

(B) Sample cranial window superimposed with the location of two optogenetic stimuli in AC.

(C) Localization of the two optogenetic stimuli in the AC tonotopic map obtained from intrinsic imaging; same mouse as in (B).

(D) Population learning curve for discrimination of two optogenetic stimulations (340 ± 105 trials to reach 80% correct performance; n = 5 mice).

(E) Mean decision times for the three different discrimination tasks (optogenetic, 424 ± 58 ms, n = 5 mice; FMvsPT, 366 ± 25 ms, n = 29 mice; PTvsPT, 153 ± 22 ms, n = 30 mice). PTvsPT was significantly different from the two other groups (p = 7.3 × 10⁻⁹ for FMvsPT and p = 1.1 × 10⁻³ for optogenetic; Wilcoxon rank-sum test), which were not different from each other (p = 0.2; Wilcoxon rank-sum test).

(F) Mean licking traces for S+ and S− stimuli during optogenetic (top), FMvsPT (middle), and PTvsPT (bottom) discrimination. Arrowhead, unspecific initial licks; gray shading, typical early response delay; green shading, typical late discrimination delay.

Strikingly, however, discrimination of cortical patterns was executed about four times slower than the cortex-independent discrimination of real pure tones (∼400 ms versus ∼100 ms; Figures 4E, 4F, and S4), even for small tone frequency differences (Figure S4), as measured by determining the first time point after stimulus onset at which licking behavior significantly differed for S+ and S− stimuli (Figure S4). In addition, ∼400-ms reaction times were observed for the detection of optogenetic activation in AC, independent of pattern size and intensity (Figure S4), showing that the long decision latencies for AC-driven discrimination are not due to insufficient drive from the artificial cortical patterns but possibly to a rate-limiting process in the pathway downstream of AC that triggers the animal’s decisions. Alternatively, abnormal aspects of the optogenetic stimulation, such as weaker activation in deeper layers, may explain the long latencies. Interestingly, in the cortex-dependent auditory task (FMvsPT), discrimination times were also ∼400 ms (Figures 4E and 4F), but, unlike with direct cortical stimulation, discrimination was preceded by an early non-discriminative response whose onset timing was similar to the discriminative lick response in the PTvsPT task (Figure 4F, arrowhead), which is likely a response to the 4-kHz frequency range, present at the onset of both S+ and S− stimuli.
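
The decision-time measure used here (the first time point after stimulus onset at which licking differs significantly between S+ and S− trials) can be illustrated with a minimal Python sketch. The statistical test is not specified in this section, so the per-bin permutation test, the function names, the 50-ms bin width, the significance threshold, and the toy lick data below are all assumptions made for illustration, not the procedure actually used in the study.

```python
import random

def permutation_p(a, b, n_perm=2000, rng=None):
    """Two-sided permutation p-value for a difference in means (assumed test)."""
    rng = rng or random.Random(0)
    observed = abs(sum(a) / len(a) - sum(b) / len(b))
    pooled = list(a) + list(b)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        pa, pb = pooled[:len(a)], pooled[len(a):]
        if abs(sum(pa) / len(pa) - sum(pb) / len(pb)) >= observed:
            hits += 1
    # Add-one correction keeps the estimate strictly positive.
    return (hits + 1) / (n_perm + 1)

def decision_time(s_plus, s_minus, bin_ms, alpha=0.01):
    """Onset (ms) of the first time bin where S+ and S- licking differ.

    s_plus, s_minus: lists of trials, each a list of lick counts per time bin.
    Returns None if no bin reaches significance. No correction for testing
    several bins is applied in this simplified sketch.
    """
    rng = random.Random(0)
    for b in range(len(s_plus[0])):
        licks_plus = [trial[b] for trial in s_plus]
        licks_minus = [trial[b] for trial in s_minus]
        if permutation_p(licks_plus, licks_minus, rng=rng) < alpha:
            return b * bin_ms
    return None

# Toy data (hypothetical): 20 trials per stimulus, ten 50-ms bins.
# Both stimuli evoke an early, non-discriminative lick in the second bin;
# only S+ trials show sustained licking from the fifth bin onward.
s_plus = [[0, 1, 0, 0, 1, 1, 1, 1, 1, 1] for _ in range(20)]
s_minus = [[0, 1, 0, 0, 0, 0, 0, 0, 0, 0] for _ in range(20)]
print(decision_time(s_plus, s_minus, bin_ms=50))  # 200
```

In this toy example, the early lick shared by both stimuli does not count as a decision, because it does not separate S+ from S− trials; the measured decision time is set by the later, discriminative licking.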

These observations suggest that sound discrimination activates two pathways: (1) an AC-independent pathway producing an early response that can distinguish very distinct sounds but fails to discriminate more complex differences developing over time and (2) an AC-dependent, slower pathway that can provide specific information to improve the responses initiated by the AC-independent pathway, in case these fail to discriminate the proposed sounds, in particular when critical information arrives only after an ambiguous sound onset. In fact, AC is not required for discrimination of an FM sweep that starts at a frequency very distinct from the discriminated pure tone (Figure S2), and in this case, non-discriminative licking at sound onset is weak, allowing short latency discrimination (Figure S4). This suggests that what makes the discrimination difficult in our FMvsPT task is the necessity to ignore the non-informative sound onsets to make the correct decision.

Focal Auditory Cortex Stimulations Bias Decisions in the Complex Discrimination

If indeed auditory cortex provides specific information to drive the correct decision exclusively in the difficult task, then specific activation of neural ensembles in AC should influence behavioral decisions in the FMvsPT task, but not in the PTvsPT task. To test this hypothesis, we trained two groups of Emx1-Cre × Ai27 mice to perform these tasks. For both groups, the non-rewarded stimulus (S−) was the 4-kHz pure tone. The rewarded stimulus was the 16-kHz pure tone for one group and the 4- to 12-kHz FM sweep for the other group. After task acquisition, the right AC of each mouse was functionally mapped using intrinsic imaging to determine its tonotopic organization (Figure 5A). Then, a grid of optogenetic stimulus locations (400-μm disks) was aligned to this map. Each location was stimulated during behavioral performance as occasional non-rewarded catch trials (10% occurrence) together with the non-rewarded 4-kHz pure tone (Figure 5B). The purpose of this design was for the 4-kHz tone to drive the rapid non-specific response and to test whether additional specific cortical drive mimics, at least partially, the perception of the rewarded stimulus instead of the presented S−, thereby changing the late discriminative response. To mimic sound-induced activation as closely as possible with our method, we used the lower of our two calibrated light intensities (12 mW.mm⁻²; Figures 3C and 3D). Unlike in previous studies (O’Connor et al., 2013, Znamenskiy and Zador, 2013), these catch trials were not rewarded, so as to measure the brain’s spontaneous interpretation of the optogenetic perturbations and to rule out reward-associated responses potentially generated by fast learning processes. Each of the 17 locations in the grid was tested across five catch trials, and the average lick count elicited was compared to the mean expected lick count distribution in five regular S− trials to assess statistical significance (e.g., Figure 5C; p < 0.05). 
We accounted for multiple testing across the 17 locations using the Benjamini-Hochberg correction. This conservative analysis showed that specific locations (e.g., Figure 5D) significantly increased lick counts above random fluctuations and brought the behavioral response close to the normal rewarded stimulus (S+) response for the FMvsPT task (Figures 5E and S5). Moreover, the fraction of locations perturbing behavior was significantly above the false-positive rate across all animals tested (Figure 5F). This was not the case for the easier PTvsPT task (Figure 5F), in which no stimulus location could raise the lick count close to S+ level (Figures 5E and S5). Together, this experiment showed that targeted, physiologically realistic AC stimulation is sufficient to change the decisions of mice in the difficult auditory task, but not in the simple task, corroborating the idea that AC is not involved in the simple task but contributes decisive information in the difficult one. This idea was also reinforced by the observation that locations significantly perturbing behavior clustered around a specific area of AC when mapped across all animals in the FMvsPT task, but not in the PTvsPT task (Figures 5G and 5H). This area spanned the mid-frequency range of the primary auditory field (A1) and to a lesser extent the central region of AC (Figure 5H) as defined from intrinsic imaging maps (Figures 5A and 6A).
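
The logic of this catch-trial analysis (comparing the mean lick count at each stimulation site with the distribution of mean lick counts over the same number of regular S− trials, then correcting across sites with the Benjamini-Hochberg procedure) can be sketched in Python as follows. This is a minimal illustration under stated assumptions rather than the analysis code of the study: the function names, the random resampling used to build the S− null distribution, and the toy lick counts are hypothetical; only the comparison against S− trials and the Benjamini-Hochberg step-up rule come from the text.

```python
import random

def empirical_p_value(catch_mean, s_minus_licks, k, n_draws=10000, seed=0):
    """Fraction of random k-trial S- averages at least as large as catch_mean."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_draws):
        sample = rng.sample(s_minus_licks, k)  # k distinct S- trials
        if sum(sample) / k >= catch_mean:
            hits += 1
    # Add-one correction so the estimate is never exactly zero.
    return (hits + 1) / (n_draws + 1)

def benjamini_hochberg(p_values, alpha=0.05):
    """Return one boolean per test: True where the null is rejected."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Step-up rule: find the largest rank r with p_(r) <= alpha * r / m.
    cutoff = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= alpha * rank / m:
            cutoff = rank
    rejected = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= cutoff:
            rejected[idx] = True
    return rejected

# Toy example (hypothetical numbers): lick counts in regular S- trials and
# mean catch-trial lick counts for three stimulation sites.
s_minus_licks = [0, 1, 0, 2, 1, 0, 1, 0, 0, 1]
site_means = [5.0, 0.3, 4.0]
p_values = [empirical_p_value(m, s_minus_licks, k=3) for m in site_means]
print(benjamini_hochberg(p_values))  # [True, False, True]
```

The number of trials averaged per site is left as the parameter `k`, so the same sketch applies whatever trial count is used for the comparison.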

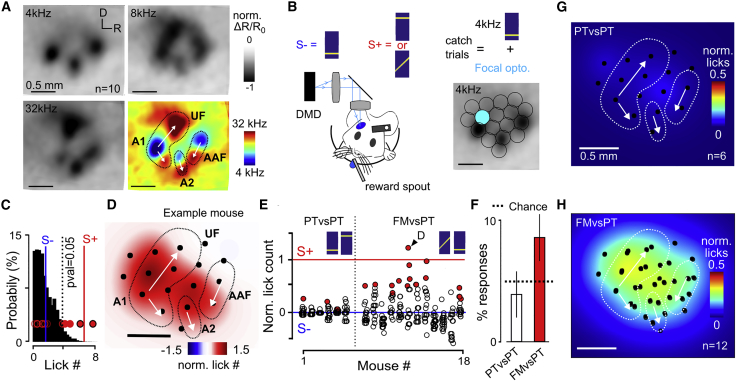

Figure 5.

Focal Optogenetic AC Activation Biases Decisions Only in the FMvsPT Task

(A) Intrinsic imaging response maps for three pure tones aligned and averaged across 10 mice. Bottom right: map of tonotopic gradients obtained by subtracting the 4- and 32-kHz response maps is shown. The approximate contours and direction of the main tonotopic gradients (primary auditory field A1, anterior auditory field AAF, and secondary auditory field A2) are superimposed.

(B) Schematic of the focal AC perturbation experiment: in occasional non-rewarded trials, the S− sound (4-kHz) was played synchronously with a local optogenetic stimulation in one out of 17 predefined locations aligned to the tonotopic map.

(C) Probability distribution of S− lick counts averaged over three random trials in one sample mouse. The superimposed red circles represent the mean lick counts over three trials for each optogenetic stimulus location. Open circles, non-significant responses; filled circles, lick count is larger than 95% of S− lick counts; filled circles with black contours, significant locations after Benjamini-Hochberg correction for multiple testing (p < 0.05).

(D) Map of normalized lick counts for optogenetic perturbations obtained by summing the estimated 2D neuronal population response profile for one light disk, multiplied by observed lick count and positioned at all optogenetic locations with a significant response.

(E) Mean lick counts for all optogenetic stimulus locations and all mice involved in the PTvsPT or FMvsPT tasks. Red filled circles correspond to significant locations (p < 0.05; corrected for multiple testing).

(F) Percentage of significant locations found in the PTvsPT and FMvsPT tasks across all mice. Data are represented as mean ± SEM. Dashed line, expected false discovery rate.

(G) Maps of normalized lick counts for significant optogenetic locations averaged across all mice performing the PTvsPT task. Black circles, centers of optogenetic stimuli.

(H) Same as (G) but for the FMvsPT task.

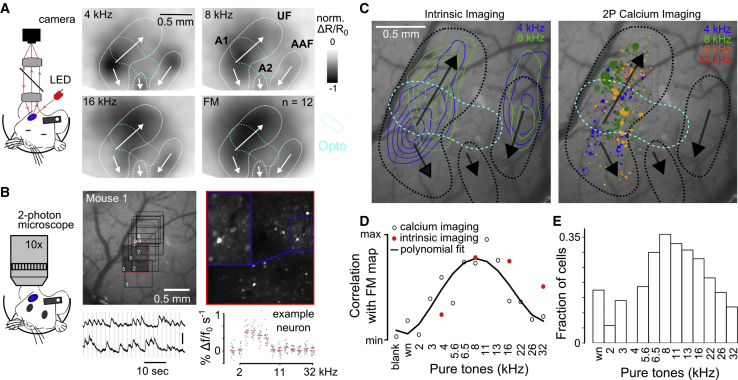

Figure 6.

AC Neurons Coding for Intermediate Frequencies Distinguish Sounds of the Difficult Task

(A) Sketch of intrinsic imaging setup and averaged re-aligned intrinsic imaging maps sampled over 12 mice for 4-, 8-, and 16-kHz pure tones and FM sweeps. White lines and arrows indicate the main tonotopic fields, as in Figure 5A.

(B) Sketch of 2-photon setup and picture of the AC surface with the localization of calcium imaging fields of view (FoVs) for a sample mouse. A magnification of FoV 5 is shown on the right. Raw calcium traces (scale bar: 50% Δf/f0) and a pure tone tuning curve (70 dB SPL) for a sample neuron are shown on the bottom. The calcium imaging setup is sketched on the left.

(C) Magnification of cranial window shown in (B) with the contours of intrinsic imaging responses to 4- and 8-kHz pure tones (left) and the locations of all significantly responding neurons, which do not respond to both 4-kHz and 4- to 12-kHz (discriminative neurons; see STAR Methods) recorded with 2-photon calcium imaging (non-discriminative neurons removed). Color code corresponds to their preferred frequency over the same sounds used during intrinsic imaging experiments. Circle diameters are proportional to the mean deconvolved calcium response for the best frequency sound over 20 repetitions (right). The dashed line indicates the position of the main tonotopic fields.

(D) Red dots: correlation of the pooled intrinsic response maps of the FM sweeps with pooled maps of four different pure tones (n = 12 mice for the aligned maps). Black dots: correlation between the population vector responses of the FM sweeps and of various pure tones is shown (time bin 0 to 1 s after sound onset; from 5,157 significantly responding neurons over 30 sessions in 5 mice). Intrinsic: max = 1; min 0.75. Calcium imaging: max = 0.25; min = 0.

(E) Fraction of neurons that respond significantly to the FM sweep (n = 520 neurons; Wilcoxon rank-sum test; α = 0.01) from the pool of significantly responding neurons (discriminative neurons; n = 4,692 neurons).

Tonotopic Location of Effective Focal Stimulations Matches Frequency Cues Used for Discrimination

The central, non-tonotopic region of auditory cortex, potentially part of secondary auditory areas (A2), was recently suggested to contain neurons specific for vocalizations and FM sweeps (Honma et al., 2013, Issa et al., 2014, Issa et al., 2017). This is in line with its contribution to the decisions in the FMvsPT task. We thus wondered whether the mid-frequency area of A1 is also involved in coding the 4- to 12-kHz FM sweep. First, we observed that strong intrinsic imaging responses to the FM sweep occur in A1, particularly in the mid-frequency area (Figure 6A). To better quantify the information contained in this area, we performed two-photon calcium imaging in layer 2/3 of AC in awake, passively listening mice who had been injected with pAAV1.Syn.GCaMP6s virus. All imaged fields were aligned to intrinsic imaging maps using blood vessel patterns (Figure 6B), allowing a comparison of micro- and mesoscale maps. Color coding cell locations with respect to their best frequency, we qualitatively observed a good match between tonotopic maps derived from the intrinsic and calcium imaging (Figures 6C and S6), even if a certain level of disorganization could be seen in the high-resolution map as reported previously (Bandyopadhyay et al., 2010, Rothschild et al., 2010). For example, the mid-frequency area identified with intrinsic imaging mostly contained cells with best frequencies at 8- or 16-kHz when imaged at high resolution (e.g., Figures 6C and S6). Based on these data, we calculated the similarity (correlation coefficient) between population responses to FM sweeps and to different pure tones and found, both at coarse and high spatial resolution, that the response to the FM sweeps is most similar to the responses to pure tones around 8-kHz (Figure 6D). We reasoned that neurons coding for this frequency range in A1 could be particularly important for discriminating the FM sweep from the 4-kHz tone. 
To quantify this idea with our two-photon calcium imaging measurements, we calculated the fraction of neurons responding to different pure tones within the population that responds to the FM sweep, but not to the 4-kHz pure tone (discriminative FM sweep neurons). This showed that neurons that discriminatively respond to the FM sweep indeed have a preference for frequencies around 8-kHz (Figure 6E), a result in line with the localization of the most disruptive optogenetic perturbations in the mid-frequency region of A1, at least if AC representations are similar in passive and active mice.
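
The similarity analysis described above (the correlation coefficient between the population response to the FM sweep and the population responses to different pure tones) can be illustrated with a short Python sketch. The function names and the six-neuron response vectors are hypothetical toy values chosen for illustration; they are not data from the study, and the real analysis pooled thousands of neurons.

```python
from math import sqrt

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length vectors."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / sqrt(vx * vy)

def most_similar_tone(fm_response, tone_responses):
    """tone_responses maps tone frequency (kHz) to a population vector.

    Returns the frequency whose population vector correlates best with the
    FM sweep response, together with all correlation scores.
    """
    scores = {f: pearson(fm_response, r) for f, r in tone_responses.items()}
    best = max(scores, key=scores.get)
    return best, scores

# Toy population vectors (hypothetical): mean response of six neurons.
fm_sweep = [0.1, 0.9, 0.8, 0.2, 0.0, 0.1]
tones = {
    4: [0.9, 0.2, 0.1, 0.0, 0.0, 0.1],
    8: [0.1, 0.8, 0.9, 0.1, 0.0, 0.0],
    16: [0.0, 0.1, 0.2, 0.9, 0.8, 0.1],
}
best, scores = most_similar_tone(fm_sweep, tones)
print(best)  # 8
```

In this toy case the neurons driven by the sweep are largely the ones driven by the 8-kHz tone, so that tone yields the highest correlation, which is the pattern reported in Figure 6D.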

To evaluate whether context-dependent changes (Francis et al., 2018, Kuchibhotla et al., 2017) could impact our conclusions, we also performed calcium imaging in mice engaged in the FMvsPT task. We found, as previously reported, positive and negative modulations (Kuchibhotla et al., 2017) for a fraction of neural responses during engagement, which averaged to a net decrease of population responsiveness (Figure S7). However, AC population activity classifiers trained in the passive state maintained good performance in discriminating the sounds when tested in the active state (Figure S7), confirming the overall similarity of active and passive sound representations in AC.

Thus, together, our optogenetic and imaging experiments suggest that AC contributes to the discrimination of the 4- to 12-kHz sweep versus 4-kHz pure tone by engaging spatially segregated neurons that preferentially code for intermediate frequencies around 8-kHz. A prediction of this model is that mice engaged in the FMvsPT discrimination should strongly associate the 4- to 12-kHz sweep with pure tones in the 8-kHz frequency range. To test this prediction, we presented pure tones in occasional non-rewarded catch trials during the task and measured the licking responses of the mouse (Figure 7A). We observed that mice responded to 5.6-kHz and 8-kHz non-rewarded pure tones with a number of licks similar to the lick count observed after the rewarded 4- to 12-kHz sweep (Figure 7B), revealing the expected association of these frequencies with the FM sound. We thus wondered whether this categorization behavior crucially depends on neurons sensitive to frequencies in the 8-kHz region. We trained a linear classifier (SVM) to discriminate the 4- to 12-kHz sweep and the 4-kHz pure tone based on the responses of neurons imaged in passive mice and significantly activated by only one of the two sounds (discriminative neurons). When testing this classifier with the single-trial population responses elicited by pure tones, we could qualitatively reproduce the categorization curves observed during behavior (Figure 7B). This indicates a match between the structure of the coding space in AC and of the perceptual space as probed in the FMvsPT behavioral task. However, when the classifier was trained without the neurons that significantly respond to the 8-kHz pure tone, then no match was observed between behavioral and cortical categorization curves, although the remaining neurons still discriminate the FM sweep from the 4-kHz pure tone (Figure 7B). 
This result corroborates the idea that activation of neurons responding in the 8-kHz frequency range critically contributes to the identification of the FM sweep against the 4-kHz tone, in line with the observation that focal optogenetic stimulation in the region most sensitive to the mid-frequency ranges promotes licking responses associated with the FM sweep (Figure 5H).

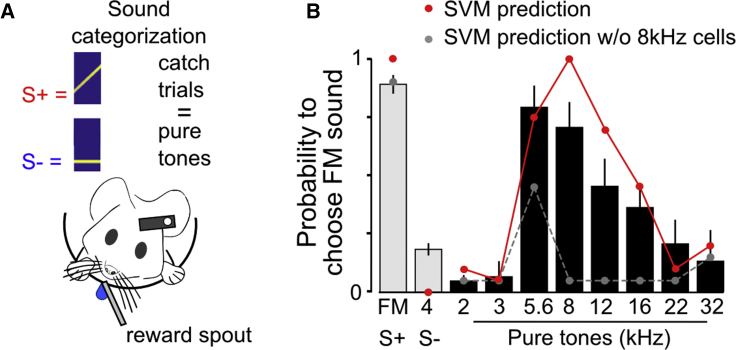

Figure 7.

Mice Use Intermediate Frequencies as a Cue to Discriminate between FM Sweeps and Low-Frequency Pure Tones

(A) Sketch of the auditory categorization assay.

(B) Mean response probabilities in response to unrewarded pure tones occasionally replacing trained stimuli in the FMvsPT discrimination task (mean ± SEM; n = 6 mice). Circles in red give the probability that an SVM classifier, trained to discriminate between FM sweeps and 4-kHz pure tones, classifies different pure tones as an FM sweep. Population vectors used to train the classifier correspond to the mean deconvolved calcium responses of 4,692 significantly responding and discriminative neurons in a (220 ms; 448 ms) time bin with 20 repetitions for each sound. Circles in gray correspond to the same analysis but after exclusion of neurons that respond significantly to an 8-kHz pure tone.

Discussion

Together, our results demonstrate that, although auditory cortex is dispensable for a simple pure tone discrimination task, specific activity patterns in AC provide necessary and sufficient information for discrimination of a more complex sound pair (FMvsPT task). Here, the more complex task involves two sounds that overlap in frequency at their onset but then diverge due to upward frequency modulation for one of them. The two sounds are thus theoretically distinguishable thanks to both spectral (mid- and high frequencies of the FM sound) and temporal (continuous frequency variations) cues. It is striking that, despite the large spectral differences between the two sounds of the FMvsPT task, this discrimination was much harder for mice to learn than the pure tone discrimination task. The challenge likely comes from the frequency overlap at the beginning of the two sounds, as suggested by the lack of AC involvement when the FM sweep starts from a frequency very different from the pure tone (Figures S2 and S4). This spectral overlap implies that both sounds activate a large group of identical neurons throughout the auditory pathway, as we observe in the auditory cortex (the FM sweep also activates the 4-kHz region; Figures 6A and S5). Activation of the set of neurons coding for the overlapping frequency is a strong and early predictor of rewards in S+ trials. Therefore, a simple reinforcement learning rule might associate activity of these neurons to the licking response, which makes it difficult for the animal to refrain from licking in S− trials, in which the same neurons are active. The challenge for the brain might be to use information from the subsequent, non-overlapping parts of the sound representations to counteract such generalization. Analysis of the licking profiles in the FMvsPT task indicates that mice do not fully resolve this problem. Indeed, their initial response both to S− and S+ is to lick shortly after sound onset. 
It is only after this initial impulse that modulation of licking leads to the discriminative response (Figure 4F). The role of auditory cortex might be to solve this initial confound, although alternative pathways are able to learn the correct associations when discriminated stimuli are encoded with little overlap, as expected for the distant pure tones of the PTvsPT task. Corroborating this explanation, when the direction of the FM sweep is reversed (12- to 4-kHz), thereby removing the initial frequency overlap, discrimination with the 4-kHz pure tone is much less sensitive to AC lesions (Figure S2), and discrimination time is similar to the PTvsPT task (Figure S4). Interestingly, a similar phenomenon is observed in a Pavlovian discriminative fear conditioning protocol, in which conditioning to a specific direction of frequency ramps cannot be achieved without AC, although AC is dispensable for fear conditioning to a specific pure tone (Dalmay et al., 2019 [in this issue of Neuron]). Thus, the requirement of auditory cortical areas for distinguishing temporal variations in frequency-overlapping stimuli might be a generic principle independent of the sound association protocol used to uncover it (Diamond and Neff, 1957, Harrington et al., 2001, Ohl et al., 1999), with possible hemispheric specializations, as pointed out by a previous study (Wetzel et al., 2008).

Our study does not identify the alternative pathways that provide auditory information to motor centers in the absence of AC in the simple PTvsPT task. Connectivity studies suggest multiple connections between the auditory system and motor-related centers (Pai et al., 2011). Primary auditory thalamus is known to project to striatum (LeDoux et al., 1991), a brain region necessary for appetitive sound discrimination tasks (Guo et al., 2018). Thalamo-striatal projections could possibly implement the main sensory-motor association in the easy task (Gimenez et al., 2015, Guo et al., 2017). Sensory-motor associations are also possible at the mid-brain level, where inferior colliculus contacts superior colliculus, which in turn projects to several motor centers (Stein and Stanford, 2008). The strong impact of optogenetic silencing of the inferior colliculus on the PTvsPT discrimination suggests that the sensory-motor association does not occur at brainstem level before information reaches colliculus (Figure 2A), although further investigation would be necessary to verify that our colliculus-silencing experiment does not uncover a permissive effect of colliculus in the task (Otchy et al., 2015). Likewise, thalamic silencing was shown to strongly impact frequency discrimination (Gimenez et al., 2015), suggesting that the sensory-motor association in our PTvsPT task does not occur before auditory information reaches thalamus. Finally, another possibility is that decisions in the PTvsPT task are driven by alternative cortical routes excluding auditory cortex but receiving auditory information from primary or secondary thalamus. Such direct pathways, for example, to more associative areas, have been proposed to support blindsight abilities in patients lacking primary visual cortex (Cowey, 2010). 
In line with this possibility, recent results indicate that fear conditioning to simple sounds does not require auditory cortex but requires the neighboring temporal association area (Dalmay et al., 2019). Also, recordings in prefrontal cortex during an auditory discrimination task show the presence of fast responses to sounds (Fritz et al., 2010), compatible with a direct pathway. Similarly, in our task, bypass pathways to temporal or prefrontal associative areas could eventually be sufficient to make simple auditory decisions, even in absence of auditory cortex.

Independent of the identity of the alternative pathway involved in the PTvsPT, our results suggest that it provides a faster route to generate motor responses than the route that involves auditory cortex. This idea derives from the long discrimination times (∼400 ms) observed when mice discriminate two optogenetically driven cortical activity patterns as compared to the short discrimination times (∼150 ms) observed for the PTvsPT task (Figures 4E and 4F). This long discrimination time is unlikely to result from difficulties in differentiating the two optogenetic stimuli, as detection of one stimulus required also ∼400 ms independent of stimulus strength and size (Figure S4), consistent with previous measurements in another optogenetic stimulus detection task (Huber et al., 2008). Some electrical microstimulation experiments report shorter reaction times in behavior (Houweling and Brecht, 2008). This could be due to the weaker drive elicited by optogenetics in deeper layers (Figures 3F and 3G). Alternatively, microstimulation can directly activate principal neurons in subcortical areas by antidromic propagation, which does not occur in optogenetics due to specificity of expression and less efficient activation of antidromic spikes (Tye et al., 2011). Antidromic activations could recruit pathways faster than those activated by cortex alone. Calibration of our optogenetic stimuli also showed that the two chosen stimulus locations can be efficiently discriminated based on triggered cortical activity within 50 to 100 ms (Figure S4). However, although population firing rate and spatial extent parameters of optogenetic stimuli were similar to sound responses in AC (Figure 3), we cannot fully rule out that some non-controlled parameters of the artificial stimulations lead to extended reaction times. It is also possible that absence of subcortical drive in the optogenetic task leads to threshold effects that slow down the behavioral response. 
Yet, in support of a slow sensory cortical pathway, discrimination times observed in cortex-dependent sensory discrimination tasks in head-fixed mice using visual or tactile cues are typically above 300–400 ms (O’Connor et al., 2010, Poort et al., 2015, Sachidhanandam et al., 2013). In the cortex-dependent auditory task presented in our study (FMvsPT), discrimination times are also long (∼350 ms; Figures 4E and 4F). We measured that AC activity starts to be discriminative for the FM sweep and 4-kHz pure tone only about 100–150 ms after sound onset although two pure tones are discriminated by AC activity already 50 ms after onset (Figure 2D). This leaves a supplementary delay between information arrival and decision of at least 100 ms in the cortex-dependent FMvsPT task as compared to the cortex-independent PTvsPT task. This delay could be due to complex multisynaptic pathways downstream to AC (e.g., including associative cortical areas) or to downstream temporal integration processes. Timing differences could also play a role in the absence of AC engagement in simple tasks. Indeed, although AC rapidly receives auditory information (Figure 2D), its slow impact on behavior could allow alternative pathways that provide faster responses to short-circuit information coming from AC, which would be ignored by decision areas. It is also possible that alternative pathways inhibit AC output when they provide a solution to the task.

This might explain the absence of strong perturbations of the easy PTvsPT task when we applied non-rewarded focal stimulations of AC, calibrated to typical sound response levels, despite specific targeting to the tonotopic fields relevant for the discrimination. We do not exclude that rewarding licking responses to the occasional focal stimulations could rapidly lead, after some learning, to a reinforced participation of stimulated cortical areas in behavioral decisions.

In the case of the harder FMvsPT task, focal stimulations had a significant effect on behavior, despite the absence of rewards in our protocol. This indicates that, despite the coarseness of the stimulation approach that globally targets heterogeneous cell ensembles enriched with particular spectral information due to tonotopic organization (Figures 6 and S6), some optogenetically driven ensembles activate large enough parts of the stimulus representations to change behavioral decisions. Thus, our results demonstrate that manipulation of auditory cortex representations impacts perception, at least if the behavioral task for perceptual readout is appropriately chosen. This opens interesting possibilities for central auditory rehabilitation techniques. In the FMvsPT task, we took advantage of the fact that spectral cues can be used to distinguish the two auditory stimuli, such that neural ensembles relevant for discrimination in AC tend to be clustered in space and can be activated with broad light patterns, while minimizing activation of cell types carrying confounding information. However, spatial organization is not absolutely strict in mouse auditory cortex (Bandyopadhyay et al., 2010, Rothschild et al., 2010), especially for more complex features, including temporal modulations (Deneux et al., 2016, Kuchibhotla and Bathellier, 2018). Thus, fine-scale stimulation methods (Packer et al., 2015) implemented at a sufficiently large scale would be necessary to precisely interfere at AC level with the discrimination of sounds that only differ based on complex, non-spectral features and thereby eventually construct more precise artificial auditory perceptions.

The fact that the AC pathway is dispensable for simple discriminations suggests that auditory judgments, and potentially perception, result from the interplay between a coarse description of sensory inputs and a time-integrated, more elaborate description that involves auditory cortex. As we showed by attempting to drive auditory judgments directly at the cortical level (Figure 5), the coexistence of these pathways is a critical issue for manipulating auditory perception. It might thus be advantageous to combine stimulations of cortical and subcortical (Guo et al., 2015) levels in order to improve the quality of artificially generated perception in the central auditory system.

STAR★Methods

Key Resources Table

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |
|---|---|---|
| Antibodies | | |
| Anti-NeuN Alexa Fluor 488 conjugated | Merck | MAB377X // RRID:AB_2149209 |
| Bacterial and Virus Strains | | |
| pAAV1.Syn.GCaMP6s.WPRE.SV40 | Vector Core – University of Pennsylvania | Cat#: AV-1-PV2824 // RRID:Addgene_100843 |
| Experimental Models: Organisms/Strains | | |
| PV-Cre Mouse B6;129P2-Pvalbtm1(cre)Arbr/J | The Jackson Laboratory | JAX: 008069 // RRID:IMSR_JAX:008069 |
| Ai 27 Mouse: B6.Cg-Gt(ROSA)26Sortm27.1(CAG-COP4∗H134R/tdTomato)Hze/J | The Jackson Laboratory | JAX: 012567 // RRID:IMSR_JAX:012567 |
| Emx1-Cre Mouse B6.129S2-Emx1tm1(cre)Krj/J | The Jackson Laboratory | JAX: 005628 // RRID:IMSR_JAX:005628 |
| Software and Algorithms | | |
| AutoCell | Roland et al., 2017 | https://github.com/thomasdeneux/autocell |
| Klusta spike sorting | CortexLab (UCL) | https://github.com/kwikteam/klusta |

Lead Contact and Materials Availability

Further information and requests for resources and reagents should be directed to and will be fulfilled by the Lead Contact, Brice Bathellier (brice.bathellier@cnrs.fr). This study did not generate new unique reagents.

Experimental Model and Subject Details

We used the following mouse lines: PV-Cre (Jax #008069) x Ai27 (flex-CAG-hChR2-tdTomato; Jax #012567) for optogenetic inactivation, Emx1-IRES-Cre (Jax #005628) x Ai27 (Jax #012567) for optogenetic activation, and C57Bl6J for lesion experiments. In all experiments, young adult females and males between 8 and 16 weeks old were used. Animals were housed 1–4 per cage, in a normal light/dark cycle (12 h/12 h). All procedures were in accordance with protocols approved by the French Ethical Committee (authorization 00275.01).

Method Details

Behavior

Behavioral experiments were monitored and controlled using homemade software (Elphy, G. Sadoc, UNIC, France) coupled to a National Instruments card (PCIe-6351). Sounds were amplified (SA1 Stereo power amp, Tucker-Davis Technologies) and delivered through high frequency loudspeakers (MF1-S, Tucker-Davis Technologies) in a pseudo-random sequence. Water delivery (5–6 μl) was controlled with a solenoid valve (LVM10R1-6B-1-Q, SMC). A voltage of 5 V was applied through an electric circuit joining the lick tube and aluminum foil on which the mouse was sitting, so that lick events could be monitored by measuring voltage through a series resistor in this circuit (Figure 2F). Before starting the training procedure, mice were water restricted for two consecutive days. The first day of training consisted of habituation to head fixation and to reliably receiving water by licking the lick port without any sound. After this period, S+ training was conducted for 2 or 3 days, during which S+ trials were presented with 80%–90% probability, while the remaining trials were blank trials (no stimulus). A trial consisted of a random inter-trial interval (ITI) between 6 and 8 s to avoid prediction of stimulus appearance, a random ‘no lick’ period between 3 and 5 s and a fixed response window of 1.5 s. Licking during the response window on an S+ trial above lick threshold (3–5 consecutive licks) was scored as a ‘hit’ and triggered immediate water delivery. Absence of licking was scored as a ‘miss’ and the next trial immediately followed. Each behavioral session contained ∼150 rewarded trials allowing mice to obtain their daily water supply of ∼800 μl. At the beginning of each session, ∼20 trials with ‘free rewards’ were given independent of licking to motivate the mice. When animals reached more than 80% ‘hits’ for the S+ stimulus, the second sound (S-) was introduced, and the lick count threshold was set to 5 licks (in a few PTvsPT experiments, only 3 licks). 
During presentation of the S- sound, licking below threshold was considered a ‘correct rejection’ (CR) and the next trial immediately followed. Licking above threshold on S- trials was considered a ‘false alarm’ (FA): no water reward was given, and the animal was punished with a random time-out period between 5 and 7 s. Each session then contained 300 trials with 50% probability for each trial type. Sounds of 0.2 s at 60 dB SPL were used for the PTvsPT tasks and sounds of 0.5 s at 70 dB SPL for the FMvsPT tasks. During the entire behavioral training period, food was available ad libitum and animal weight was monitored daily. Water restriction (0.8 mL/day) was interleaved with a 12 h ad libitum supply overnight every Friday. Mice performed behavior five days per week (Monday to Friday). With this schedule, mice initially showed a weight drop < 20% and progressively regained their initial weight over about 30 days.
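The scoring rules described above can be summarized in a short sketch. This is an illustration only and not the Elphy implementation: the function names `score_trial` and `tally_outcomes` are hypothetical, and we assume that a lick count equal to the threshold counts as a response and that any sub-threshold response on an S+ trial is scored as a miss.

```python
from collections import Counter


def score_trial(stimulus, lick_count, lick_threshold=5):
    """Classify one trial as 'hit', 'miss', 'FA' or 'CR'.

    Assumption: reaching the lick threshold counts as a response.
    """
    licked = lick_count >= lick_threshold
    if stimulus == "S+":
        return "hit" if licked else "miss"
    if stimulus == "S-":
        return "FA" if licked else "CR"
    raise ValueError("stimulus must be 'S+' or 'S-'")


def tally_outcomes(trials, lick_threshold=5):
    """Count outcomes over a session given (stimulus, lick_count) pairs."""
    return Counter(score_trial(s, n, lick_threshold) for s, n in trials)


if __name__ == "__main__":
    session = [("S+", 6), ("S-", 0), ("S-", 7), ("S+", 1)]
    print(tally_outcomes(session))
```

In the protocol, a hit triggers water delivery and a false alarm triggers the 5 to 7 s time-out; these consequences are not modeled here.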

Behavior analysis

Learning curves were obtained by calculating the fraction of correct responses over blocks of 10 trials. Discrimination performance over one session was calculated as (hits + correct rejections)/total trials. Discrimination time was calculated on collections of 10-trial blocks in which the discrimination performance was greater than or equal to 80%, containing a total of at least 100 trials. The licking signal for each stimulus was binned in 10 ms bins, and for every time bin, we used the non-parametric Wilcoxon rank sum test to obtain the p value for the null hypothesis that the licking signal was coming from the same distribution for S+ and S-. The discrimination time was determined as the first time bin in which the p value passed a significance threshold of 0.01 after Benjamini-Hochberg correction for multiple testing over all time bins.
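As an illustration of the discrimination-time procedure, the following sketch applies a per-bin rank sum test followed by Benjamini-Hochberg correction. It is not the original analysis code. The function names are hypothetical, the rank sum p value uses the tie-corrected normal approximation (an assumption; an exact test may have been used), and the returned time is the start of the first significant bin.

```python
import math


def ranksum_p(x, y):
    """Two-sided Wilcoxon rank sum p value (normal approximation, tie-corrected)."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    rank_sum_x, tie_term, i = 0.0, 0.0, 0
    while i < n:
        j = i
        while j < n and pooled[j][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + 1 + j) / 2.0  # mean of 1-based ranks i+1 .. j
        t = j - i
        tie_term += t ** 3 - t
        rank_sum_x += avg_rank * sum(1 for k in range(i, j) if pooled[k][1] == 0)
        i = j
    mean = n1 * (n + 1) / 2.0
    var = n1 * n2 / 12.0 * ((n + 1) - tie_term / (n * (n - 1)))
    if var <= 1e-12:  # all values identical: no evidence of a difference
        return 1.0
    z = (rank_sum_x - mean) / math.sqrt(var)
    return math.erfc(abs(z) / math.sqrt(2.0))


def benjamini_hochberg(pvals, alpha=0.01):
    """Return booleans marking the tests rejected at false discovery rate alpha."""
    m = len(pvals)
    order = sorted(range(m), key=lambda k: pvals[k])
    cutoff = 0
    for rank, k in enumerate(order, start=1):
        if pvals[k] <= alpha * rank / m:
            cutoff = rank
    rejected = [False] * m
    for k in order[:cutoff]:
        rejected[k] = True
    return rejected


def discrimination_time(licks_plus, licks_minus, bin_ms=10, alpha=0.01):
    """licks_*: list of trials, each a list of binned lick signal values.

    Returns the start time (ms) of the first significant bin, or None.
    """
    n_bins = len(licks_plus[0])
    pvals = [
        ranksum_p([t[b] for t in licks_plus], [t[b] for t in licks_minus])
        for b in range(n_bins)
    ]
    for b, significant in enumerate(benjamini_hochberg(pvals, alpha)):
        if significant:
            return b * bin_ms
    return None
```

With real data, `licks_plus` and `licks_minus` would hold the binned licking signals of the S+ and S- trials selected from blocks with at least 80% performance.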

Cranial window implantation

To allow chronic unilateral access to AC (for 2-photon calcium imaging or electrophysiology), a cranial window was incorporated into the skull and a metal post for head fixation was implanted on the side contralateral to the craniotomy. Surgery was performed in 4- to 6-week-old mice placed on a thermal blanket under anesthesia using a mix of ketamine (Ketasol) and medetomidine (Domitor) (antagonized with atipamezole - Antisedan, Orion pharma – at the end of the surgery). The eyes were covered using Ocry gel (TVM Lab) and Xylocaine 20 mg/ml (Aspen Pharma) was injected locally at the site where the incision was made. The right masseter was partially removed and a large craniotomy (∼5 mm diameter) was performed above the auditory cortex (AC) using bone sutures of the skull as a landmark. For 2P calcium imaging, we did 3 to 5 injections (∼300 μm separation) of 200 nl (35 nl/min) using pulled glass pipettes of rAAV1.syn.GCaMP6s.WPRE virus (10¹³ virus particles per ml) diluted 10 times (Vector Core, Philadelphia, PA, USA). After this, the craniotomy was immediately sealed with a 5 mm circular coverslip using cyanolite glue and dental cement (Ortho-Jet, Lang). For AC inactivation experiments with optogenetics, the same procedure was repeated on both brain hemispheres in a single surgery. For inferior colliculus inactivation experiments, the 5 mm cranial window was placed on the midline such that it covered the dorsal part of both hemispheres of the inferior colliculus. In all cases, mice were subsequently housed for at least one week without any manipulation.

Optogenetics inactivation of AC during behavior

For optogenetic inactivation experiments, mice were first trained to respond to the S+ stimulus alone (see Behavior). Then, when the S- stimulus was introduced for discrimination training, a pair of blue LEDs (1.1 W, PowerStar OSLON Square 1+, ILS, wavelength 455 nm) was placed 2 cm above their head, facing each other at 1 cm distance, and flashed in 1 out of 5 trials to habituate the animal to light flashes (which initially inhibited licking). When discrimination performance was above 80% and similar both with and without light flashes, one to three test sessions were performed in which the LEDs were either placed on the bilateral AC cranial windows, or on the inferior colliculus window (1 LED only). Stimulus presentation was pseudo-randomized over blocks of 100 trials and light flashes appeared in 1 out of 5 trials for each stimulus with the same reward or punishment conditions as regular trials. Blue light delivery followed a square wave (20 Hz) time course, started 100 ms before sound onset and lasted 700 ms (sound duration was no longer than 500 ms). Light intensity at the brain surface was measured to be 36 mW/mm². Discrimination performance was computed as the number of hits and correct rejections over the total number of trials, separating trials in which the LED was turned ON or OFF.
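The light timing and the LED ON/OFF performance split can be illustrated with a minimal sketch. The function names are hypothetical, and the 50% duty cycle of the 20 Hz square wave is an assumption, as the duty cycle is not specified above.

```python
def led_is_on(t_ms, lead_ms=100.0, duration_ms=700.0, freq_hz=20.0, duty=0.5):
    """Return True if the LED is lit at time t_ms (0 = sound onset).

    The train starts lead_ms before sound onset and lasts duration_ms.
    Assumption: square wave with a 50% duty cycle.
    """
    elapsed = t_ms + lead_ms
    if elapsed < 0 or elapsed >= duration_ms:
        return False
    period_ms = 1000.0 / freq_hz
    return (elapsed % period_ms) < duty * period_ms


def performance_by_led(trials):
    """trials: iterable of (led_on, correct) booleans.

    Returns the fraction of correct trials (hits + correct rejections)
    separately for LED ON (key True) and LED OFF (key False) trials.
    """
    counts = {True: [0, 0], False: [0, 0]}
    for led_on, correct in trials:
        counts[bool(led_on)][0] += int(bool(correct))
        counts[bool(led_on)][1] += 1
    return {k: (c / n if n else None) for k, (c, n) in counts.items()}
```

Under these assumptions, the 700 ms train contains 14 light pulses of 25 ms each, the first starting 100 ms before sound onset.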

Patterned optogenetics during behavior

For patterned optogenetic activation in the mouse AC, we used a LED-based video projector (DLP LightCrafter, Texas Instruments) tuned at 460 nm. To project a two-dimensional image onto the auditory cortex surface (Figures 2 and 4), the image of the micromirror chip was collimated through a 150 mm cylindrical lens (Thorlabs, diameter: 2 inches) and focused through a 50 mm objective (NIKKOR, Nikon). Light collected by the objective passes through a dichroic beam splitter (long pass, > 640 nm, FF640-FDi01, Semrock) and is collected by a CCD camera (GC651MP, Smartek Vision) equipped with a 50 mm objective (Fujinon, HF50HA-1B, Fujifilm). For discrimination of artificial AC patterns, two disks of 400 μm were placed at two different locations in AC. The first disk was defined as the S+ stimulus and systematically located at the center of the low frequency domain in A1. The second disk, defined as the S- stimulus, was placed at the center of the high frequency domain in the UF obtained with intrinsic imaging (Figure S4, see Intrinsic imaging and alignment of tonotopic maps across animals below). Alignment of optogenetic stimulus locations across days was done using blood vessel patterns at the surface of the brain with a custom-made GUI in MATLAB. In short, a reference blood vessel image was taken at the beginning of the experiment. In subsequent days, a new blood vessel image was taken and aligned to the reference image by optimizing the image cross-correlation to obtain the appropriate rotation and translation matrix. Behavioral training with optogenetic stimuli was done with the same protocol as for sounds.

For focal AC activation during sound discriminations, a grid of 15 to 17 locations was constructed using disks of ∼400 μm distributed in 5 rows. The grid was aligned to auditory cortex for each animal using the three regions of maximal responses in the intrinsic imaging response map to a 4 kHz pure tone (see Intrinsic imaging and alignment of tonotopic maps across animals) as a reference for horizontal position and orientation. Each grid was constructed to maximize the coverage of the different frequency domains and tonotopic subfields seen in intrinsic imaging (see Figure 4). To probe responses to optogenetic perturbation during discrimination behavior, we performed test sessions of 300 pseudo-randomized trials in which 45 to 54 trials were non-rewarded catch trials with stimulation of a grid location together with the S- sound. Sound (4 kHz, 500 ms at 70 dB SPL) and light (12 mW/mm², 1 s duration, square wave intensity profile at 20 Hz) started at the same time. Responses to focal optogenetic perturbations were computed over 3 catch trial repetitions. The significance of these responses was assessed by computing the distribution of lick count responses over 10,000 random triplets of responses to S- alone. The p value of the response to a particular optogenetic stimulation location was taken as the percentile of this distribution corresponding to the trial-averaged lick count for the optogenetic stimulation. Based on the p values computed for all grid locations, we performed a Benjamini-Hochberg correction for multiple testing to identify the locations with a significant response at a false positive rate of 0.05. For display, lick count responses (L) to optogenetic perturbations were normalized for each mouse as (L − s−)/(s+ − s−), where s+ and s− are the mean lick counts observed for S+ and S- stimuli. 
Maps of response to optogenetic perturbation were aligned across different mice by matching the grid locations placed on the 4 kHz landmarks, calculating the best rotation and translation matrix as for alignment of the intrinsic imaging maps (see Intrinsic imaging and alignment of tonotopic maps across animals). To account for the actual spread of the optogenetic stimulus in the AC network, the response at each location was represented by a two-dimensional spatial profile identical to the estimated profile shown in Figure 2A for 12 mW/mm² focal stimuli. Profiles of all significant locations were summed to construct the maps (Figure 4F).
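The triplet-based significance test and the normalization described above can be sketched as follows. This is a hedged illustration, not the original analysis code: the function names are hypothetical, triplets are assumed to be drawn without replacement, and the p value is assumed to be one-sided (fraction of null triplets with a mean lick count at least as large as the observed one). The subsequent Benjamini-Hochberg correction across grid locations is omitted.

```python
import random


def triplet_null_distribution(s_minus_licks, n_draws=10000, seed=0):
    """Mean lick counts of random triplets of S- alone trials.

    Assumption: each triplet is sampled without replacement.
    """
    rng = random.Random(seed)
    return [sum(rng.sample(s_minus_licks, 3)) / 3.0 for _ in range(n_draws)]


def catch_trial_p_value(catch_licks, null_means):
    """One-sided p value: share of null triplet means >= the observed mean."""
    observed = sum(catch_licks) / len(catch_licks)
    return sum(1 for m in null_means if m >= observed) / len(null_means)


def normalized_response(lick_count, s_plus_mean, s_minus_mean):
    """Normalize as (L - s-)/(s+ - s-): 0 matches S-, 1 matches S+."""
    return (lick_count - s_minus_mean) / (s_plus_mean - s_minus_mean)


if __name__ == "__main__":
    s_minus = [0, 1, 0, 2, 1, 0, 1, 0, 0, 1]  # made-up lick counts
    null = triplet_null_distribution(s_minus)
    print(catch_trial_p_value([6, 7, 5], null))
```

The lick counts in the usage example are invented for illustration and do not come from the experiments.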

In vivo electrophysiology

Recordings for calibration of optogenetics were done in mice that had been implanted with a cranial window above AC for at least 2 or 3 weeks. On the day of the recording, the mouse was briefly anesthetized (∼30 min, ∼1% isoflurane delivered with SomnoSuite, Kent Scientific) to remove some of the cement seal, and a piece of the coverslip of the cranial window was cut using a diamond drill bit. The dura was resected ventral to AC as previously delimited using intrinsic imaging (see methods below). The area was covered with Kwik-Cast™ silicone (World Precision Instruments) and the animal was placed in its home cage to recover from anesthesia for at least 1 hour before head-fixation in the recording setup (same as used for behavior). 14 recordings in 4 mice (84 single units) were performed using four-shank Buzsaki32 silicon probes (Neuronexus). For estimation of optogenetic responses at different depths, 3 recordings in 3 mice (144 single units) were performed using single-shank silicon probes with 64 contacts across 1.4 mm (A1x64-Poly2-6mm-23s-160, Neuronexus). Before each recording, the tips of the probe were covered with DiI (Sigma). The silicone was gently removed and the area cleaned using warm Ringer’s buffer. The probe was inserted at a ∼30° angle with respect to brain surface with a micromanipulator (MP-225, Sutter Instrument) at 1–2 μm per second, with pauses of 1–2 minutes every 50 μm. Each recording session, including probe insertion and removal, lasted no more than 3 hours. After the experiment, the animal was deeply anesthetized (isoflurane) and euthanized by cervical dislocation. Brains were fixed overnight in 4% paraformaldehyde (PFA) in 0.1 M phosphate buffer (PB). Coronal brain slices of 80 μm were prepared and imaged (Nikon eclipse 90i, Intensilight, Nikon) to identify the electrode track tagged with DiI and determine recording depth. 
For calibration of optogenetic inactivation experiments, sounds played during the recordings included a blank, 4, 4.5, 4.7, 5, 6, 8, 16 kHz pure tones of 200 ms duration at 60 dB SPL, a 4 kHz 500 ms pure tone at 70 dB SPL, a 4 to 12 kHz 500 ms frequency modulated sound at 70 dB SPL and white noise ramps of 1000 ms from 60 to 85 dB SPL and 85 to 60 dB SPL. The same blue directional LED (24° light cone) as used for inactivation during behavior was placed above the cranial window, but at a slightly greater distance (about 0.75 cm) from the window to leave space for the electrode. Light was delivered for 700 ms, starting 100 ms before sound onset. Silicon probe voltage traces were recorded at 20 kHz and stored using an RHD2000 USB interface board (Intan Technologies). Raw voltage traces were filtered using a Butterworth high-pass filter with a 100 Hz cutoff (Python). Electro-magnetic artifacts from the LED driving current were removed by subtracting a template calculated across all LED ON trials. For calibration of focal optogenetic activations, we used a grid of 8x5 contiguous 400 μm circles. Each location was played randomly and repeated 10 times. Also, a 4 kHz pure tone of 500 ms at 70 dB SPL, a 4-12 kHz FM sound of 500 ms at 70 dB SPL and white noise ramps of 1000 ms from 60 to 85 dB SPL and 85 to 60 dB SPL were played for later comparison with optogenetic responses.

Analysis of electrophysiology

Spikes were detected and sorted using the KlustaKwik spike sorting algorithm (Harris et al., 2000) (Klusta, https://github.com/kwikteam/klusta) with a strong and weak threshold of 6 and 3, respectively. Each shank was sorted separately, and putative single units were visualized and sorted manually using KlustaViewa. Data analysis was done using custom Python scripts. Spikes were binned in 25 ms bins. Firing rates during stimulation periods were calculated by averaging across trials. For AC inactivation, significant responses to at least one sound were identified using the Kruskal-Wallis H-test for independent samples and the Benjamini-Hochberg procedure was applied to correct for multiple testing across units (p < 0.05). Percentage of inhibition was calculated as follows: 100 – (L ∗ 100/S), where S corresponds to the best sound response and L to the response to the same sound during optogenetic activation of PV interneurons. To estimate the robustness of sound representations in our recordings, we trained a linear Support Vector Machine classifier to discriminate two sounds based on single unit responses binned in 50 ms bins. Only units that were inhibited by light (putative PV-negative neurons) were used to train the classifier. To evaluate classification performance without optogenetics, the classifier was trained over 5 trials for each pair of sounds and tested with 5 other trials. To test the effect of light on sound responses, the classifier was trained with 10 unperturbed sound delivery trials and tested with 10 trials combining sound and light delivery. For focal optogenetic activations, we calculated for each single unit the trial-averaged firing rate change with respect to baseline over the 1 s optogenetic stimulation for all locations, yielding two-dimensional spatial response maps. 
Using the centers of each location, a 2D map was created for each unit and fitted with a two-dimensional Gaussian model that is composed of an offset term (constant over space) and a Gaussian spatial modulation term. Significance of the modulation was assessed using a bootstrap analysis (significance threshold, 0.05). Cells that did not show significant spatial modulation were described by the offset term only.
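As an illustration of two computations described above, the following sketch implements the percentage of inhibition, 100 – (L × 100 / S), and the standard Benjamini-Hochberg step-up procedure. Function names and example values are hypothetical; this is not the analysis code of the study.

```python
import numpy as np

def percent_inhibition(sound_rate: float, light_rate: float) -> float:
    """100 - (L * 100 / S), with S the best sound response and L the
    response to the same sound during light delivery."""
    return 100.0 - (light_rate * 100.0 / sound_rate)

def benjamini_hochberg(p_values, alpha: float = 0.05) -> np.ndarray:
    """Boolean mask of tests declared significant at false discovery rate alpha."""
    p = np.asarray(p_values, dtype=float)
    m = p.size
    order = np.argsort(p)                          # ranks p-values, smallest first
    thresholds = alpha * np.arange(1, m + 1) / m   # alpha * rank / m
    passed = p[order] <= thresholds
    reject = np.zeros(m, dtype=bool)
    if passed.any():
        k = np.nonzero(passed)[0].max()            # largest rank meeting its threshold
        reject[order[:k + 1]] = True               # reject all hypotheses up to that rank
    return reject

# Hypothetical example: one p-value per unit.
unit_p_values = [0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205]
significant = benjamini_hochberg(unit_p_values, alpha=0.05)
inhibition = percent_inhibition(sound_rate=20.0, light_rate=5.0)
```

In this example only the two smallest p-values survive the correction, and a unit whose best sound response drops from 20 to 5 spikes/s under light is inhibited by 75%.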

Auditory cortex lesions and immunohistochemistry

After learning a PTvsPT discrimination task, mice were anesthetized (∼1.5% isoflurane delivered with SomnoSuite, Kent Scientific) and placed on a thermal blanket. Craniotomies were performed as described above and focal thermo-coagulation lesions were made bilaterally. The area was then covered using Kwik-Cast silicone (World Precision Instruments) and closed with dental cement (Ortho-Jet, Lang). After a period of recovery on a heating pad with accessible food pellets, mice were returned to their home cage and a nonsteroidal anti-inflammatory agent (Metacam®, Boehringer Ingelheim) was injected intramuscularly. To test whether mice could still perform the discrimination task, they were placed on the behavioral setup the day after surgery. If a mouse presented signs of pain, liquid meloxicam was given in drinking water or via subcutaneous injection. Discrimination was tested in a normal session and performance was calculated as previously described. After the experiment, mice were transcardially perfused with saline followed by 4% paraformaldehyde (PFA) in 0.1 M phosphate buffer (PB), and brains were then post-fixed overnight at 4°C. After washing with phosphate-buffered saline (PBS), brains were cut into 80 μm coronal sections and immunohistochemical reactions were performed on free-floating sections as follows. Sections were blocked for 2 h using PBS + 10% goat serum and 1% Triton X-100 at room temperature. After washing in PBS (3 × 10 min), the sections were incubated for 2 h at room temperature with a 1:100 dilution of mouse anti-NeuN conjugated with Alexa Fluor® 488 (MAB377X, Merck). Sections were washed and mounted for imaging using a Nikon Eclipse 90i microscope (Intensilight, Nikon).

Intrinsic imaging and alignment of tonotopic maps across animals

Intrinsic imaging was performed to localize AC in mice under light isoflurane anesthesia (∼1% delivered with SomnoSuite, Kent Scientific) on a thermal blanket. Images were acquired using a 50 mm objective (1.2 NA, NIKKOR, Nikon) with a CCD camera (GC651MP, Smartek Vision) equipped with a 50 mm objective (Fujinon, HF50HA-1B, Fujifilm) through a cranial window implanted 1-2 weeks before the experiment (4-pixel binning, field of view of 3.7 × 2.8 mm or 164 × 124 binned pixels, 5.58-μm pixel size, 20 fps). Signals were obtained under 780 nm LED illumination (M780D2, Thorlabs). Images of the vasculature over the same field of view were taken under 480 nm LED illumination (NSPG310B, Conrad). Two-second sequences of short pure tones at 80 dB SPL were repeated every 30 s with a maximum of 10 trials per sound. Acquisition was triggered and synchronized using a custom-made GUI in MATLAB. For each sound, we computed baseline and response images, 3 s before and 3 s after sound onset, respectively. The change in light reflectance ΔR/R0 was calculated over repetitions for each sound (4, 8, 16, 32 kHz pure tones and white noise). Response images were smoothed by applying a 2D Gaussian filter (σ = 3 pixels). The different subdomains of AC corresponding to the tonotopic areas appeared as regions with reduced light reflectance. To align intrinsic imaging responses from different animals, the 4 kHz response was used as a functional landmark. The spatial locations of maximal amplitude responses in the 4 kHz response map for A1, A2, and AAF (three points) were extracted for each mouse, and a Euclidean transformation matrix was calculated by minimizing the sum of squared deviations (equivalently, the RMSD) of the distances between the three landmarks across mice. This procedure yielded a matrix of rotation and translation for each mouse that was applied to compute intrinsic imaging responses averaged across a population of mice.
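The landmark-based alignment can be sketched as a least-squares rigid fit. The example below uses the SVD-based Kabsch solution, which is one standard way to minimize the sum of squared deviations under rotation and translation; the text does not state which solver was used, so this choice is an assumption. The function name `rigid_fit` and the landmark coordinates are hypothetical.

```python
import numpy as np

def rigid_fit(src: np.ndarray, dst: np.ndarray):
    """Rotation R and translation t minimizing sum ||R @ src_i + t - dst_i||^2.

    src, dst: arrays of shape (n_points, 2), e.g. A1, A2 and AAF landmarks.
    Uses the Kabsch (SVD) solution; reflections are excluded.
    """
    src_c = src.mean(axis=0)
    dst_c = dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)            # 2 x 2 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, d]) @ U.T             # proper rotation (det = +1)
    t = dst_c - R @ src_c
    return R, t

# Hypothetical landmark positions (mm) in a reference mouse: A1, A2, AAF.
reference = np.array([[1.0, 1.0], [1.2, 2.0], [2.1, 1.1]])

# A second mouse whose map is rotated by 12 degrees and shifted.
theta = np.deg2rad(12.0)
rot = np.array([[np.cos(theta), -np.sin(theta)],
                [np.sin(theta),  np.cos(theta)]])
mouse = reference @ rot.T + np.array([0.3, -0.2])

R, t = rigid_fit(mouse, reference)
aligned = mouse @ R.T + t                          # landmarks mapped onto the reference
```

The same `R` and `t` can then be applied to the pixel coordinates of each response map before averaging across mice.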

Two-photon calcium imaging

Two-photon imaging during behavior was performed using a two-photon microscope equipped with an 8 kHz resonant scanner (Femtonics, Budapest, Hungary) coupled to a pulsed Ti:Sapphire laser system (MaiTai DS, Spectra Physics, Santa Clara, CA). The laser was tuned to 900 nm during recordings and light was collected through a 20x (XLUMPLFLN-W) or 10x (XLPLN10XSVMP) Olympus objective. Images were acquired at 31.5 Hz. For each behavioral trial, imaging duration was 7 s with a pause of 5 s between trials. Sound delivery was randomized during the trial to prevent mice from starting to lick at the onset of the sound (45 dB SPL) emitted by the microscope scanners at the beginning of each trial. All sounds were delivered at 192 kHz with a National Instruments card (NI-PCI-6221) driven by homemade software (Elphy, G. Sadoc, UNIC, France), through an amplifier (SA1 Stereo power amp, Tucker-Davis Technologies) and high frequency loudspeakers (SA1 and MF1-S, Tucker-Davis Technologies, Alachua, FL). In the active context, mice performed the discrimination task in a regular session of ∼250 trials, which was immediately followed by a passive context session of ∼150 trials (lick tube withdrawn). Data analysis was performed using MATLAB and Python scripts. Motion artifacts were first corrected frame by frame, using a rigid body registration algorithm. Regions of interest (ROIs) corresponding to the neurons were selected using Autocell, a semi-automated hierarchical clustering algorithm based on pixel covariance over time (Roland et al., 2017). Neuropil contamination was subtracted (Kerlin et al., 2010) by applying the following equation: Fcorrected(t) = Fmeasured(t) – 0.7 × Fneuropil(t), where Fneuropil(t) is estimated from the immediate surroundings of each ROI (Gaussian smoothing kernel, σ = 170 μm, excluding the ROIs; Deneux et al., 2016).
Then the change in fluorescence (ΔF/F0) was calculated as (Fcorrected(t) – F0) / F0, where F0 is estimated as the 3rd percentile of the low-pass filtered fluorescence over ∼40 s time windows. To estimate the time course of the firing rate, the calcium signal was temporally deconvolved using the following formula: r(t) = f’(t) + f(t) / τ, in which f’ is the first time derivative of f and τ is the decay constant, set to 2 s for GCaMP6s. In total, 7605 neurons were recorded across 11 active and 11 passive sessions in 3 mice. We kept for analysis only the 1008 neurons responding significantly to the S+ or S- stimuli with respect to baseline activity (Wilcoxon rank-sum test, p = 0.01 and Bonferroni correction for multiple testing). The deconvolved signals were smoothed with a Gaussian kernel (σ = 33 ms). To estimate the discriminability of two sounds based on cortical population responses, linear Support Vector Machine classifiers were trained independently on each time point to discriminate population activity vectors obtained from half of the presentations of each sound and context (training set), and were tested on activity vectors obtained from the remaining presentations of the same sounds and context or from all presentations of sounds in the non-trained context (test sets). To estimate behavioral categorization of sounds based on population activity in AC, we trained linear SVM classifiers to discriminate AC responses to single presentations of the trained target sounds, and tested the classifiers with AC responses to single presentations of the non-trained sounds. Classification results were averaged across presentations to generate the categorization probability.
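The fluorescence processing steps above (neuropil subtraction, ΔF/F0, and linear deconvolution) can be sketched as follows. The derivative f’ is approximated here by finite differences (`numpy.gradient`), which is an assumption, as the text does not specify the numerical scheme. Function names and synthetic values are hypothetical, and the F0 estimation over sliding ∼40 s windows is not reproduced.

```python
import numpy as np

def neuropil_correct(f_measured, f_neuropil, factor: float = 0.7):
    """Fcorrected(t) = Fmeasured(t) - 0.7 * Fneuropil(t)."""
    return f_measured - factor * f_neuropil

def delta_f_over_f(f_corrected, f0):
    """(Fcorrected(t) - F0) / F0 for a given baseline F0."""
    return (f_corrected - f0) / f0

def deconvolve(f, dt: float, tau: float = 2.0):
    """r(t) = f'(t) + f(t) / tau, with f' estimated by finite differences."""
    return np.gradient(f, dt) + f / tau

# Synthetic demonstration: one calcium transient decaying with tau = 2 s.
frame_rate = 31.5                  # Hz, as in the recordings
dt = 1.0 / frame_rate
tau = 2.0                          # decay constant used for GCaMP6s
n_frames = 220                     # about 7 s of imaging
onset = 31                         # hypothetical event about 1 s into the trial

f = np.zeros(n_frames)
f[onset:] = np.exp(-(np.arange(n_frames - onset) * dt) / tau)

rate = deconvolve(f, dt, tau)      # large at the event, close to 0 during the decay

# Single-value check of the correction and normalization formulas.
dff_example = delta_f_over_f(neuropil_correct(10.0, 2.0), f0=4.3)
```

Because an exponential decay satisfies f’ = –f / τ, the deconvolved trace is close to zero during the decay and peaks at the onset of the transient, which is why r(t) can serve as a proxy for the time course of firing.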

Quantification and Statistical Analysis

All quantification and statistical analyses were performed with custom MATLAB or Python scripts. Statistical assessment was based on non-parametric tests reported in figure legends together with the mean and SEM values of the measurements, the number of samples used for the test, and the nature of the sample (number of neurons, recording sessions, or mice). A custom bootstrap test (see STAR Methods) was used to identify optogenetic stimulation locations producing a behavioral effect. Unless otherwise mentioned, false positive rates below 0.05 were considered significant and the Benjamini-Hochberg correction for multiple testing was applied for repeated measurements in the experiments. In all analyses, all subjects that underwent a particular protocol in the study were included. For small groups, a minimum of 4 samples in each compared group was used to allow significance detection by standard non-parametric tests (e.g., Wilcoxon rank-sum test).

Data and Code Availability

All data and analysis code are available from the corresponding author upon reasonable request.

Acknowledgments

We thank A. Chédotal, D. DiGregorio, Y. Frégnac, J. Letzkus, and E. Harrell for comments on the manuscript; G. Hucher for histology; and the GENIE Project, Janelia Farm Research Campus, Howard Hughes Medical Institute, for GCAMP6s constructs. This work was supported by the Agence Nationale pour la Recherche (ANR “SENSEMAKER”), the Fyssen Foundation, the Human Brain Project (WP 3.5.2), the DIM “Region Ile de France,” the Marie Curie Program (CIG 334581), the International Human Frontier Science Program Organization (CDA-0064-2015), the Fondation pour l’Audition (Laboratory grant), the European Research Council (ERC CoG DEEPEN), the DIM Cerveau et Pensée, and Ecole des Neurosciences de Paris Ile-de-France (ENP) (support to S.C.).

Author Contributions

B.B., Z.P., and S.C. designed the study. B.B., A.D., and S.C. performed behavioral experiments. B.B. and J.B. performed inferior colliculus surgeries. S.C. and Z.P. performed cortical activation experiments. S.C. and B.B. analyzed the data and wrote the manuscript with comments from all authors.

Declaration of Interests

The authors declare no competing interests.

Published: November 11, 2019

Footnotes

Supplemental Information can be found online at https://doi.org/10.1016/j.neuron.2019.09.043.

References

- Aizenberg M., Mwilambwe-Tshilobo L., Briguglio J.J., Natan R.G., Geffen M.N. Bidirectional regulation of innate and learned behaviors that rely on frequency discrimination by cortical inhibitory neurons. PLoS Biol. 2015;13:e1002308. doi: 10.1371/journal.pbio.1002308.

- Bandyopadhyay S., Shamma S.A., Kanold P.O. Dichotomy of functional organization in the mouse auditory cortex. Nat. Neurosci. 2010;13:361–368. doi: 10.1038/nn.2490.

- Bathellier B., Ushakova L., Rumpel S. Discrete neocortical dynamics predict behavioral categorization of sounds. Neuron. 2012;76:435–449. doi: 10.1016/j.neuron.2012.07.008.

- Choi G.B., Stettler D.D., Kallman B.R., Bhaskar S.T., Fleischmann A., Axel R. Driving opposing behaviors with ensembles of piriform neurons. Cell. 2011;146:1004–1015. doi: 10.1016/j.cell.2011.07.041.

- Cowey A. Visual system: how does blindsight arise? Curr. Biol. 2010;20:R702–R704. doi: 10.1016/j.cub.2010.07.014.

- Dalmay T., Abs E., Poorthuis R.B., Onasch S., Signoret J., Navarro Y.L., Tovote P., Gjorgjieva J., Letzkus J.J. A critical role for neocortical processing of threat memory. Neuron. 2019;104 doi: 10.1016/j.neuron.2019.09.025. Published online November 11, 2019.

- Deneux T., Kempf A., Daret A., Ponsot E., Bathellier B. Temporal asymmetries in auditory coding and perception reflect multi-layered nonlinearities. Nat. Commun. 2016;7:12682. doi: 10.1038/ncomms12682.

- Dhawale A.K., Hagiwara A., Bhalla U.S., Murthy V.N., Albeanu D.F. Non-redundant odor coding by sister mitral cells revealed by light addressable glomeruli in the mouse. Nat. Neurosci. 2010;13:1404–1412. doi: 10.1038/nn.2673.

- Diamond I.T., Neff W.D. Ablation of temporal cortex and discrimination of auditory patterns. J. Neurophysiol. 1957;20:300–315. doi: 10.1152/jn.1957.20.3.300.

- Francis N.A., Winkowski D.E., Sheikhattar A., Armengol K., Babadi B., Kanold P.O. Small networks encode decision-making in primary auditory cortex. Neuron. 2018;97:885–897.e6. doi: 10.1016/j.neuron.2018.01.019.

- Fritz J.B., David S.V., Radtke-Schuller S., Yin P., Shamma S.A. Adaptive, behaviorally gated, persistent encoding of task-relevant auditory information in ferret frontal cortex. Nat. Neurosci. 2010;13:1011–1019. doi: 10.1038/nn.2598.

- Gimenez T.L., Lorenc M., Jaramillo S. Adaptive categorization of sound frequency does not require the auditory cortex in rats. J. Neurophysiol. 2015;114:1137–1145. doi: 10.1152/jn.00124.2015.

- Guo W., Hight A.E., Chen J.X., Klapoetke N.C., Hancock K.E., Shinn-Cunningham B.G., Boyden E.S., Lee D.J., Polley D.B. Hearing the light: neural and perceptual encoding of optogenetic stimulation in the central auditory pathway. Sci. Rep. 2015;5:10319. doi: 10.1038/srep10319.