Abstract

Identifying anomalies in data is central to the advancement of science, national security, and finance. However, privacy concerns restrict our ability to analyze data. Can we lift these restrictions and accurately identify anomalies without hurting the privacy of those who contribute their data? We address this question for the most practically relevant case, where a record is considered anomalous relative to other records.

We make four contributions. First, we introduce the notion of sensitive privacy, which conceptualizes what it means to privately identify anomalies. Sensitive privacy generalizes the important concept of differential privacy and is amenable to analysis. Importantly, sensitive privacy admits algorithmic constructions that provide strong and practically meaningful privacy and utility guarantees. Second, we show that differential privacy is inherently incapable of accurately and privately identifying anomalies; in this sense, our generalization is necessary. Third, we provide a general compiler that takes as input a differentially private mechanism (which has bad utility for anomaly identification) and transforms it into a sensitively private one. This compiler, which is mostly of theoretical importance, is shown to output a mechanism whose utility greatly improves over the utility of the input mechanism. As our fourth contribution we propose mechanisms for a popular definition of anomaly ((β, r)-anomaly) that (i) are guaranteed to be sensitively private, (ii) come with provable utility guarantees, and (iii) are empirically shown to have an overwhelmingly accurate performance over a range of datasets and evaluation criteria.

Keywords: privacy, anomaly identification, differential privacy, outlier detection

1. INTRODUCTION

At the forefront of today’s research in medicine and natural sciences is the use of data analytics to discover complex patterns from vast amounts of data [11, 23, 39]. While this approach is incredibly useful, it raises serious privacy-related ethical and legal concerns [5, 7, 20, 21] because inferences can be drawn from the analysis of the person’s data to the person’s identity, causing a privacy breach [19, 24, 26, 27, 37]. In this work, we focus specifically on the problem of identifying anomalous records, which has fundamental applications in many domains and is also crucial for scientific advancements [1, 3, 30, 40, 42]. For example, to treat cancer, we must tell if a tumor is malignant; to stop bank fraud, we must flag the suspicious transactions; and to counter terrorism, we must identify the individuals exhibiting extreme behavior. Note that in such settings, it is imperative to accurately identify the anomalies, e.g., it is critical to identify the fraudulent transactions. However, in all these situations, it is still essential to protect the privacy of the normal (i.e., non-anomalous) records [7, 21] (e.g., customers with a legitimate transaction or patients with a benign tumor) while not sacrificing accuracy (e.g., labeling a malignant tumor as benign).

We solve the problem of accurate, private, and algorithmic anomaly identification (i.e., labeling a record as anomalous or normal by an algorithm) with an emphasis on reducing the false negative rate, i.e., the rate at which an anomaly is labeled as normal. The current methods for protecting privacy work well for doing statistics and other aggregate tasks [17, 18], but they are inherently unable to identify anomalous records accurately. Furthermore, the modern methods of anomaly identification label a record as anomalous (or normal) based on its degree of dissimilarity from the other existing records [1, 3, 8, 35]. Consequently, the labeling of a record as anomalous is specific to a dataset, and knowing that a record is anomalous can leak a significant amount of information about the other records. This type of privacy leakage is the core obstacle that any privacy-preserving anomaly identification method must overcome. This work is the first to develop methods (in a general setting where anomalies are data-dependent) to accurately identify if a record is anomalous while simultaneously guaranteeing privacy by making it statistically impossible to infer if a non-anomalous record was included in the dataset.

We formalize a notion of privacy appropriate for anomaly detection and identification and develop general constructions to achieve this. Note that we assume a trusted curator, who performs the anomaly identification. If the data is distributed and the trusted curator is not available, one can employ secure multiparty computation to simulate the trusted curator [9], where now the same methodology as in the previous setting can be used.

Although the privacy definitions and constructions we develop are not tied to any specific anomaly definition, we instantiate them for a specific kind of anomaly: (β, r)-anomaly [35], which is a widely prevalent model for characterizing anomalies and generalizes many other definitions of anomalies [3, 22, 34, 35]. These technical instantiations naturally extend to the other well-known variants of this formalization [1]. Under this anomaly definition, a record (which lives in a metric space) is considered anomalous if there are at most β records similar to it, i.e., within distance r. The parameters β and r are given by domain experts [35] or found through exploratory analysis by possibly using differentially private methods [17, 18] (since these parameters can be obtained by minimizing an aggregate statistic, e.g., risk or average error) to protect privacy in this process.

1.1. Why do we need a new privacy notion?

We consider the trusted curator setting for privacy. The trusted curator has access to the database and answers the anomaly identification queries using a mechanism. The privacy of an individual is protected if the output of an anomaly identification mechanism is unaffected by the presence or the absence of the individual’s record in the database (which is the input to the mechanism). This is the notion of privacy (i.e., protection) of a record that we consider here; it protects the individual against any risk incurred due to the presence of their information and was first formalized in the seminal work on differential privacy [15, 17] (where privacy is quantified by a parameter ε > 0: the smaller the ε, the higher the privacy). It can informally be stated as follows: a randomized mechanism that takes a database as input is ε-differentially private if for any two input databases differing by one record, the probabilities (corresponding to the two databases) of occurrence of any event are within a multiplicative factor e^ε (i.e., are almost the same in all cases). Unfortunately, simply employing differential privacy does not address the need for both privacy and practically meaningful accuracy guarantees in our case. For example, providing privacy equally to everyone severely degrades accuracy in identifying anomalies. For a database, the addition of a record in a region which is sparse in terms of data points creates an anomaly. Conversely, the removal of an anomalous record typically removes the anomaly altogether. Therefore, the accuracy achievable for anomaly identification via differential privacy is limited as explained below.

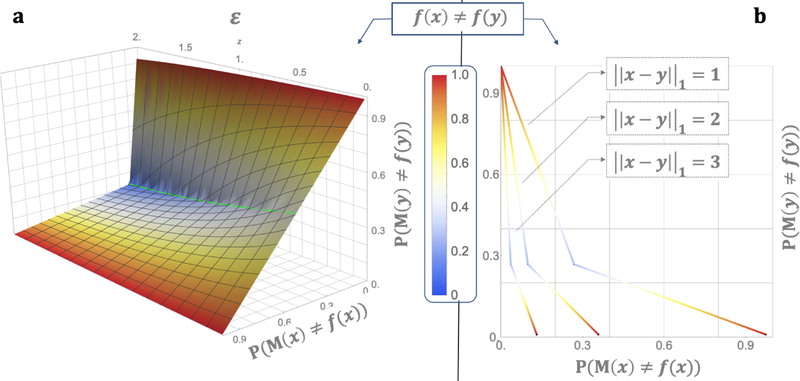

Differential privacy for binary functions f, such as anomaly identification, comes with inherent limitations that can be explained through the graph of Figure 1a. Fix any mechanism M that is supposed to compute f, with the property that this mechanism is differentially private. The mere fact that f is binary and M is differentially private has the following effect. For any two databases x and y that differ in one record, say that f(x) = 0 and f(y) = 1. Now, a simple calculation shows that the differential privacy constraints create a tradeoff: whenever M makes a small error in computing f(x), it is forced to err a lot when computing f on its “neighbor” y, and vice versa. Moreover, the higher the privacy requirements are (i.e., the smaller ε is), the stricter this tradeoff is, as depicted in Figure 1a. Formally, we state this fact as follows.

Figure 1:

(a) x and y differ by one record; the “ε axis” is for the privacy parameter, and the “P(M(x) ≠ f(x)) axis” is for the minimum error over all ε-DP mechanisms M on x for a given error on y on the “P(M(y) ≠ f(y)) axis”. The graph depicts the tradeoff between the errors committed on x and y. (b) This plot is for ε = 1 and is otherwise the same, but for different x’s and y’s.

Claim 1. Fix ε > 0, a binary function f over databases, and an ε-differentially private mechanism M with binary output arbitrarily. For every x and y, if f(x) ≠ f(y) and ‖x − y‖1 = 1, then P(M(x) ≠ f(x)) ≥ 1/(1 + e^ε) or P(M(y) ≠ f(y)) ≥ 1/(1 + e^ε).
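The tradeoff behind Claim 1 can be checked numerically. The following is a minimal sketch rather than the authors' code; it assumes a binary-output mechanism and neighbors x and y with f(x) = 0 and f(y) = 1, and the helper names `dp_error_floor` and `min_error_on_y` are hypothetical. Writing a for the error on x and b for the error on y, the two ε-DP constraints give b ≥ 1 − e^ε · a and b ≥ e^(−ε) · (1 − a).

```python
import math


def dp_error_floor(eps: float) -> float:
    """Bound from Claim 1: the error on x or on y is at least 1 / (1 + e^eps)."""
    return 1.0 / (1.0 + math.exp(eps))


def min_error_on_y(err_x: float, eps: float) -> float:
    """Smallest error on y that eps-DP permits, given error err_x on neighbor x.

    Assumes f(x) = 0 and f(y) = 1 with a binary-output mechanism, so that
    err_x = P(M(x) = 1) and the error on y is P(M(y) = 0).
    """
    # P(M(y) = 1) <= e^eps * P(M(x) = 1)  =>  err_y >= 1 - e^eps * err_x
    bound_from_output_1 = 1.0 - math.exp(eps) * err_x
    # P(M(x) = 0) <= e^eps * P(M(y) = 0)  =>  err_y >= e^-eps * (1 - err_x)
    bound_from_output_0 = math.exp(-eps) * (1.0 - err_x)
    return max(bound_from_output_1, bound_from_output_0, 0.0)


if __name__ == "__main__":
    eps = 1.0
    for err_x in (0.01, 0.1, dp_error_floor(eps), 0.5):
        print(f"err_x={err_x:.3f}  min err_y={min_error_on_y(err_x, eps):.3f}")
```

Under these assumptions, the two bounds coincide exactly when the error on x equals 1/(1 + e^ε), which is the balanced point of the tradeoff; pushing the error on x below that value forces the error on y above it.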

What happens to this inherent tradeoff when x and y differ in more than one record? As shown in Figure 1b, this tradeoff is relaxed. We note that in deriving the tradeoff, there was nothing specific to the ℓ1 metric (used for differential privacy); we could have used any metric over the space of databases. Other works that considered general metrics include [25, 33]. Our work proposes a distance metric that is appropriate for anomaly identification, in conjunction with an appropriate relaxation of differential privacy. This way we lay out a practically meaningful (but also amenable to analysis) privacy setting.

1.2. What do we want from the new notion?

We want to relax differential privacy since affording protection for everyone severely degrades the accuracy of anomaly identification. One possible relaxation, suitable for the problem at hand, is providing protection only for a subset of the records. We note that such a relaxation is backed by privacy legislation, e.g., GDPR allows for giving up privacy for an illegal activity [21]. Protecting a predetermined set of records, which is decided independently of the database, works when anomalies are defined independently of the other records. However, for a data-dependent anomaly definition, such a notion of privacy fails to protect the normal records. Here the problem arises due to the fixed nature of the set that is database-specific. In the case of a data-dependent definition of anomaly, if we wish to provide a privacy guarantee to the normal records that are present in the database (call them sensitive records), then specifying the set of sensitive records itself leaks information and can lead to a privacy breach. Thus, sensitive records must be defined based on a more fundamental premise to reduce such dependencies. This notion of sensitive record plays a pivotal role in defining a notion of privacy, named sensitive privacy, which is appropriate for the problem of identifying anomalies.

We remark that although anomaly identification methods provide binary labeling, they assign scores to represent how outlying a record is [1, 3]; thus these models (implicitly or explicitly) assign a record a degree of outlyingness with respect to the other records, which the following discussion takes into account.

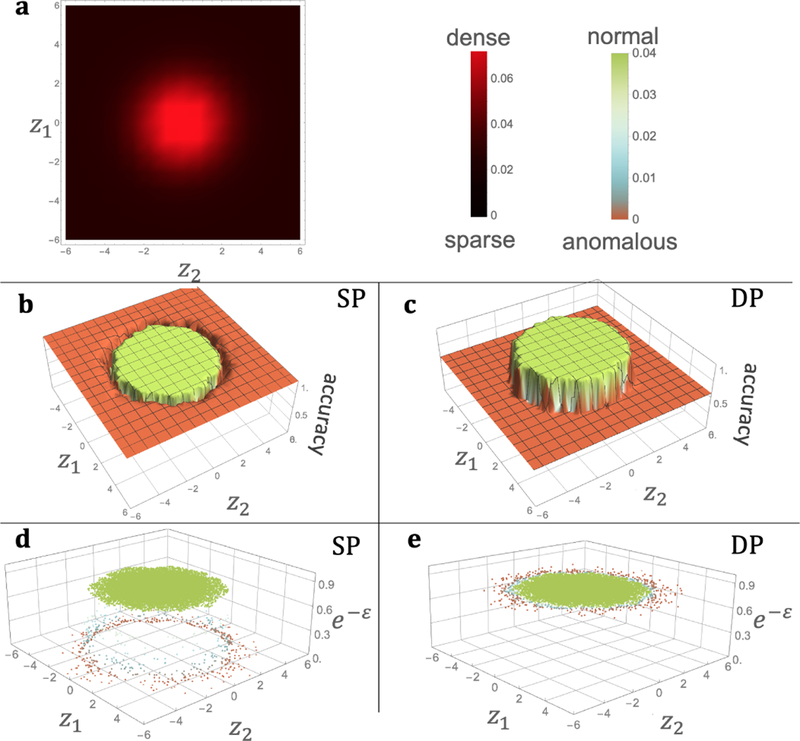

An appropriate notion of privacy in our setting must allow a privacy mechanism to have the following two important properties. First, the more outlying (or non-outlying) a record is, the higher the accuracy the privacy mechanism can achieve for anomaly identification, which is in contrast to DP (Figure 2c). Second, all the sensitive records should have DP like privacy guarantee for the same value of privacy parameter.

Figure 2:

(b) and (c) are for the same data, and (d) and (e) are for the same data. (a) gives the density plot of the distribution of the example data. The z1 and z2 axes give the coordinates of a point (record). (b) and (c) respectively show the accuracy (on the vertical axis) for anomaly identification (AId) via sensitively private (SP) and DP mechanisms for the data. The plots give the interpolated results to clarify the relationship between outlyingness and accuracy. (d) and (e) give the privacy (on the vertical axis) for each record in the data for private AId. All the green (normal) points in (d) are at the same level as all the points in (e).

The mechanisms that are private under sensitive privacy achieve both properties (see Figure 2, which gives indicative experimental results on the example data; see Section A.1 for the details of the experiment and the values of the parameters). Furthermore, they have an additional property: in a typical setting, the anomalies do not lose privacy altogether; instead, the more outlying a record is, the less privacy it has (Figure 2d).

1.3. How do we define the new privacy notion?

To define privacy, we need a metric space over the databases since a private mechanism needs to statistically blur the distinction between databases that are close in the metric space. While differential privacy uses the ℓ1-metric (‖·‖1), we utilize a different metric over databases, which is defined using the notion of sensitive record. Informally, we say a record is sensitive with respect to a database if it is normal or becomes normal under a small change; we formalize this in Section 3. We argue that this notion of sensitive record is quite natural, and it is inspired by the existing anomaly detection literature [1, 3]. Since, by definition, an anomalous record significantly diverges from other records in the database [1, 3], a small change in the database should not affect the label of an anomalous record. Given the definition of sensitive record, a graph over the databases is defined by adding an edge between two databases if and only if they differ in a sensitive record. The metric over the databases is now given by the shortest path length between the databases in this graph. This metric space has the property that databases differing by a sensitive record are closer than databases differing in a non-sensitive record. We use the proposed metric space to define sensitive privacy, which enables us to fine-tune the tradeoff between accuracy and privacy.

2. PRELIMINARIES AND NOTATION

Database:

We consider a database as a multiset of elements from a set 𝒳, which is the set of possible values of records. In a database, we assume each record is associated with a distinct individual. We represent a database x as a histogram in 𝒟 = {y ∈ ℕ^𝒳 : ‖y‖1 < ∞}, where 𝒟 is the set of all possible databases, ℕ = {0, 1, 2, …}, and xi is the number of records in x that are identical to i.

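Definition 2.1 (differential privacy [15, 17]). For ε > 0, a mechanism M whose domain is the set of all databases is ε-differentially private if for every two databases x and y such that ‖x − y‖1 ≤ 1, and every R ⊆ Range(M),

$$P(M(x) \in R) \le e^{\varepsilon}\, P(M(y) \in R).$$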

We implicitly assume that the R’s are chosen such that the events “M (x) ∈ R” are measurable.

Anomalies:

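For any database x, record i, radius r ≥ 0, and a distance function d over the records, let Bx(i, r) denote the number of records in x (counted with multiplicity) that are within distance r from i, i.e., the sum of xj over all records j with d(i, j) ≤ r. We define (β, r)-anomaly as follows.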

Definition 2.2 ((β, r)-anomaly [35]). For a given database x and record i, we say i is a (β, r)-anomaly in the database x if i is present in x, i.e. xi > 0, and there are at most β records in x that are within distance r from i, i.e. Bx (i, r) ≤ β.
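Definition 2.2 translates directly into code. The sketch below is illustrative only: it assumes a database is stored as a `collections.Counter` histogram (record value to count), that Bx(i, r) counts records with multiplicity and includes the copies of i itself, and that the function names `ball_count` and `is_beta_r_anomaly` are hypothetical.

```python
from collections import Counter
from typing import Callable, Hashable


def ball_count(x: Counter, i: Hashable, r: float,
               d: Callable[[Hashable, Hashable], float]) -> int:
    """B_x(i, r): number of records in x within distance r of i (with multiplicity)."""
    return sum(count for j, count in x.items() if d(i, j) <= r)


def is_beta_r_anomaly(x: Counter, i: Hashable, beta: int, r: float,
                      d: Callable[[Hashable, Hashable], float]) -> bool:
    """True iff i is present in x and at most beta records lie within distance r of i."""
    return x[i] > 0 and ball_count(x, i, r, d) <= beta


if __name__ == "__main__":
    # Toy database over integer-valued records with the absolute-difference metric.
    x = Counter({1: 1, 5: 3, 6: 2})
    dist = lambda a, b: abs(a - b)
    for record in (1, 5, 3):
        print(record, is_beta_r_anomaly(x, record, beta=3, r=1, d=dist))
```

In this toy database with β = 3 and r = 1, record 1 is isolated and therefore anomalous, record 5 has five records within distance 1 and is normal, and record 3 is absent, so it is not an anomaly by definition.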

Whenever we refer to a (β, r)-anomaly, we assume there is an arbitrary distance function d over the set of possible records.

Anomaly identification:

Let us now introduce the important and related notion of an anomaly identification function g, which maps a record and a database to {0, 1} such that, for a given anomaly definition, every record i, and every database x, g(i, x) = 1 if and only if i is present in x as an anomalous record (note that no change is made to x). This formulation is extensible to the case where the database over which anomaly identification is performed is considered to include the record for which anomaly identification is desired. Here, the anomaly identification for a record i over a database x can be computed over the database that consists of all the records in x as well as the record i.¹

Private anomaly identification query (AIQ):

Here, all the private mechanisms we consider take a database as input. Thus, we will consider the anomaly identification query to be for a fixed record. We will specify this by the pair (i, g), where i is a record and g is an anomaly identification function. Now a private anomaly identification mechanism M, with binary output, for a fixed AIQ, (i, g), can be represented by its distribution, where for every x, P(M(x) = g(i, x)) is the probability that M outputs correctly, and P(M(x) ≠ g(i, x)) is the probability that M errs on x.

3. SENSITIVE PRIVACY

Our notion of sensitive privacy requires privacy protection of every record that may be normal under a small change in the database. We use the notion of a normality property p to identify the normal records that exist in the database. Formally, for a given definition of anomaly, a normality property p maps a record and a database to {0, 1} such that for every record i and database x, p(i, x) = 1 if and only if i is present in x as a normal record. Note that the normality property is not the negation of the anomaly identification function because p = 0 for absent records (as for those which do not satisfy the property). We formalize the notion of a small change in the database as the addition or removal of at most k records. We consider this change to be typical and want to protect the privacy of every record that may become normal under this small change in the database.

We now formalize the key notion of sensitive record. For a fixed normality property, all the records whose privacy must be protected are termed as sensitive records.

Definition 3.1 (sensitive record). For k ≥ 1 and a normality property p, we say a record i is k-sensitive with respect to a database x if there exists a database y such that ‖x − y‖1 ≤ k and p(i, y) = 1.
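For (β, r)-anomaly, k-sensitivity can be checked in closed form under one reading of the definitions. The sketch below assumes that the normality property is p(i, y) = 1 exactly when i is present in y and more than β records of y lie within distance r of i, and that a database is a `collections.Counter` histogram; the names `min_changes_to_normal` and `is_k_sensitive` are hypothetical. Under these assumptions, removing records never helps, and adding copies of i is the cheapest way to make i both present and normal.

```python
from collections import Counter


def ball_count(x, i, r, d):
    """Number of records in x within distance r of i (with multiplicity)."""
    return sum(count for j, count in x.items() if d(i, j) <= r)


def min_changes_to_normal(x, i, beta, r, d):
    """Fewest record additions that make i present and normal (assumed property)."""
    # Records still needed so that more than beta records lie within distance r of i.
    missing_neighbors = beta + 1 - ball_count(x, i, r, d)
    # If i is absent, at least one copy of i must be added.
    must_add_i = 1 if x[i] == 0 else 0
    return max(missing_neighbors, must_add_i, 0)


def is_k_sensitive(x, i, k, beta, r, d):
    """True iff some database within l1-distance k of x has i as a normal record."""
    return min_changes_to_normal(x, i, beta, r, d) <= k


if __name__ == "__main__":
    x = Counter({1: 1, 5: 3, 6: 2})
    dist = lambda a, b: abs(a - b)
    for record in (1, 4, 5, 10):
        print(record, min_changes_to_normal(x, record, beta=3, r=1, d=dist))
```

The monotonicity noted in the text is visible here: a record that is k-sensitive is also (k + 1)-sensitive, because the check only compares a fixed change count against k.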

Next, we define the graphs we consider here. A neighborhood graph, G = (𝒟, E), is a simple graph over the set 𝒟 of all databases such that for every x and y in 𝒟, (x, y) ∈ E ⇔ ‖x − y‖1 = 1. One of the important notions in this work is the k-sensitive neighborhood graph, GS = (𝒟, E′), for k ≥ 1 and a normality property, which is a subgraph of the neighborhood graph G such that for every (x, y) ∈ E, (x, y) ∈ E′ ⇔ for some record i, |xi − yi| = 1 and i is k-sensitive with respect to x or y. Further, two databases connected by an edge in a (sensitive) neighborhood graph are called neighbors. Note that the k-sensitive neighborhood graph is tied to the normality property and, hence, to the anomaly definition. With this, we can state the notion of sensitive privacy.

Definition 3.2 (sensitive privacy). For ε > 0, k ≥ 1, and a normality property, a mechanism M whose domain is the set of all databases is (ε, k)-sensitively private if for every two neighboring databases x and y in the k-sensitive neighborhood graph, and every R ⊆ Range(M),

$$P(M(x) \in R) \le e^{\varepsilon}\, P(M(y) \in R).$$

We omit k when it is clear from the context. The above condition necessitates that for every two neighbors, any test (i.e., event) one may be concerned about should occur with “almost the same probability”; that is, the presence or the absence of a sensitive record should not affect the likelihood of occurrence of any event. Here, “almost the same probability” means that the above probabilities are within a multiplicative factor e^ε. The guarantees provided by sensitive privacy are similar to those of differential privacy. Sensitive privacy guarantees that, given the output of the private mechanism, an adversary cannot infer the presence or the absence of a sensitive record. Thus, for neighboring databases in a sensitive neighborhood graph (GS), the guarantee is exactly the same as in differential privacy. If x and y differ by one record that is not sensitive, then they are not neighbors in GS, and the guarantee provided by sensitive privacy is weaker² than that of differential privacy but has the same form. So, intuitively, if we only consider the databases where all the records are sensitive, then differential privacy and sensitive privacy provide exactly the same guarantee. In general, every (ε, k)-SP mechanism M for GS satisfies P(M(x) ∈ R) ≤ e^(ε · d_GS(x, y)) · P(M(y) ∈ R) for every x, y, and R, where d_GS is the shortest path length metric over GS.

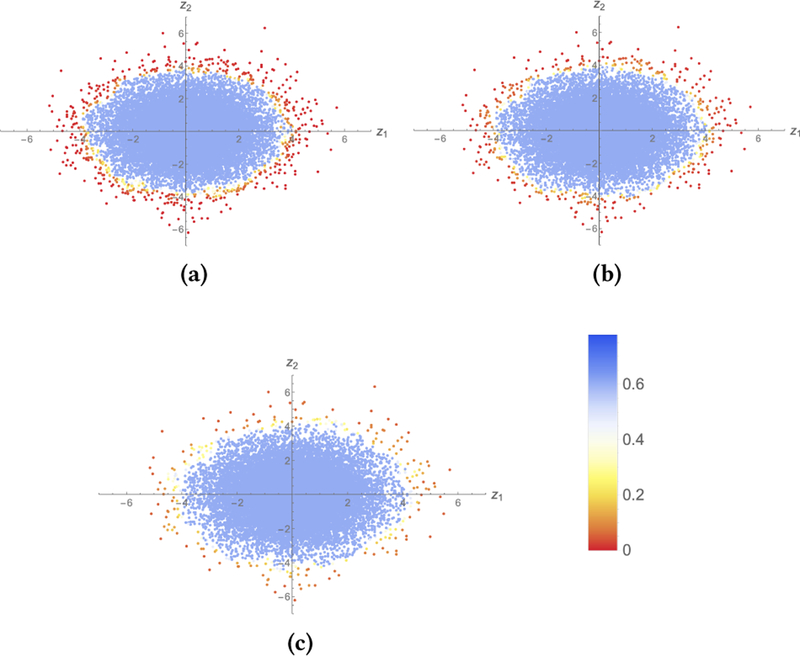

Similar to differential privacy, ε is the privacy parameter: the lower its value, the higher the privacy guarantee. The parameter k, which is associated with the sensitive neighborhood graph, provides a way to quantify what is deemed a small change in the database, which varies from field to field but in many common cases can be quantified over an appropriate metric space.³ When we increase the value of k, we move the boundary between what is considered sensitive and what is non-sensitive (Figure 3, where the plots are similar to the one given in Figure 2d for the same parameter values but for varying k): the higher the value of k, the more records are considered sensitive and, therefore, must be protected. This is due to the fact that, for any k ≥ 1, if a record is k-sensitive with respect to a database x, it is also (k + 1)-sensitive with respect to x. For example, with respect to a database x, a 2-sensitive record may not be 1-sensitive, but a 1-sensitive record will also be 2-sensitive.

Figure 3:

(a)-(c): the plots are for the same data. The two axes give the coordinates of a point (record). The color gives the level of privacy, i.e., the value e^(−ε), for 0.25-SP AIQ for every record (the data was generated using the distribution given in Figure 2). (a) k = 1. (b) k = 7. (c) k = 14.

3.1. Composition

Our formalization of sensitive privacy enjoys the important properties of composition and post-processing [18], which a good privacy definition should have [32]. Hence, we can quantify how much privacy may be lost (in terms of the value of ε) if one asks multiple queries or post-processes the result of a private mechanism. Here, we recall that sensitive privacy is defined with respect to the k-sensitive neighborhood graph for the privacy parameter ε. Thus, the privacy composes with respect to both, the privacy parameter (i.e. ε) and the sensitive neighborhood graph.

Sequential composition provides the privacy guarantee over multiple queries over the same database, where the same record(s) in the database may be used to answer more than one query. Consider two mechanisms with independent sources of randomness: M1, which is ε1-sensitively private for the k1-sensitive neighborhood graph GS1, and M2, which is ε2-sensitively private for the k2-sensitive neighborhood graph GS2. Recall that for a private mechanism, the AIQ, (i, g), is fixed; thus M1 and M2 may correspond to different records and anomaly identification functions. Now, M2(x, M1(x)) (for every database x) is (ε1 + ε2)-sensitively private for the sensitive neighborhood graph consisting of the edges common to GS1 and GS2 (Claim 3). One application of this is that for a fixed GS, even performing multiple queries interactively will lead to at most a linear loss in privacy (in terms of ε) in the number of queries. In an interactive query over a database x, one first gets the answer of M1, i.e., M1(x), and based on the answer, one selects M2 and gets its answer. Furthermore, for a fixed normality property, if k1 ≤ k2, then GS1 is a subgraph of GS2, and hence the composed mechanism is (ε1 + ε2)-sensitively private for GS1.

Parallel composition deals with multiple queries, each of which uses only a non-overlapping partition of the database. Let Y1 and Y2 be sets of records such that Y1 ∩ Y2 = ∅. Now, consider M1 and M2, each taking a database as input, that are respectively ε1-sensitively private for a sensitive neighborhood graph GS1 and ε2-sensitively private for a sensitive neighborhood graph GS2, where GS1 is a subgraph of GS2. Further, M1 and M2 depend only on their randomness (each with its independent source) and on the records in Y1 and Y2 respectively. In this setting, the mechanism M(x) = (M1(x), M2(x)) is max(ε1, ε2)-sensitively private for GS1, or in the general case for the sensitive neighborhood graph with edge set E1 ∩ E2 (Claim 4), where E1 and E2 are the sets of edges of GS1 and GS2 respectively.

We also remark that privacy is maintained under post-processing.

Example:

Consider composition for sensitive privacy for the case of multiple (β, r)-AIQs. Let us say we answer anomaly identification queries for records i1, i2, … , in respectively for (β1, r1), (β2, r2), … ,(βn, rn) anomalies over the database x, while providing sensitive privacy. Let the mechanism for answering (βt, rt)-AIQ for it be εt-SP for kt-sensitive neighborhood graph corresponding to (βt, rt)-anomaly, and assume it depends on the partition of the database that contains the records within distance rt of it (because it suffices to compute (βt, rt)-AIQ) and its independent source of randomness. Let k = min(k1, … , kn), β = max(β1, … , βn), and r = min(r1, … , rn). In this case, the sensitive privacy guarantee for answering all of the queries is m ε for k-sensitive neighborhood graph corresponding to (β, r)-anomaly, where m is the maximum number of it’s that are within any ball of radius max(r1, … , rn) (Claim 5).

Thus, from the above, it follows that if we fix β, r, and k and allow a querier to ask m many (β′, r)-AIQs (each may have a different value for β′) such that β′ ≤ β, then we can answer all of the queries with sensitive privacy mε in the worst case for the k-sensitive neighborhood graph corresponding to (β, r)-anomaly. The same is true if the queries are for (β, r′) with r′ ≥ r. Furthermore, for fixed β, r, and k, answering (β, r)-AIQ for i and i′ such that d(i, i′) > 2r still maintains (ε, k)-SP. One may employ this to query adaptively to carry out the analysis while providing sensitive privacy guarantees over the analysis as a whole.

4. PRIVACY MECHANISM CONSTRUCTIONS

In this section, we show how to construct private mechanisms for (β, r)-anomaly identification. Specifically, (i) we give an SP mechanism that errs with exponentially small probability on most of the typical inputs (Theorem 4.6), (ii) we provide a DP mechanism construction for (β, r)-AIQ, which we prove is optimal (Theorem 4.4), and (iii) we present a compiler construction that can compile a “bad” DP mechanism for AIQ into a “good” SP mechanism (Theorem 4.7), where good and bad are indicative of utility. We will use these mechanisms to evaluate the performance of our method over real-world and synthetic datasets.

Recall that a privacy mechanism M for a fixed AIQ, (i, g), outputs a label of i for the given database, where g is an anomaly identification function and i is a record. Sensitive privacy requires that the shorter the distance between any two databases x and y in the sensitive neighborhood graph (GS), the closer the probabilities of any output (R) of the mechanism M on the two databases should be, that is, P(M(x) ∈ R) ≤ e^(ε · d_GS(x, y)) · P(M(y) ∈ R), where d_GS is the shortest path length metric over GS. Thus, for an x, the greater the distance to the closest y such that g(i, x) ≠ g(i, y), the higher the accuracy a private mechanism can achieve on the input x for answering g(i, x). We capture this metric-based property by the minimum discrepant distance (mdd) function. Fix an anomaly identification function g. For a given sensitive neighborhood graph GS, Δ_GS is the mdd-function if for every i and x,

$$\Delta_{G_S}(i, x) = \min_{y \,:\, g(i, y) \neq g(i, x)} d_{G_S}(x, y). \tag{1}$$

A simple and efficient mechanism for anomaly identification that is both accurate and sensitively private can be given if g and Δ_GS (the corresponding mdd-function) can be computed efficiently. However, computing the mdd-function efficiently for an arbitrary anomaly definition is a non-trivial task. This is because the metric d_GS (the shortest path length over GS), which gives rise to the metric-based property captured by the mdd-function, is induced by (a) the definition of anomaly (e.g., specific values of β and r) and (b) the metric over the records. This makes it exceedingly difficult to analyze in general.

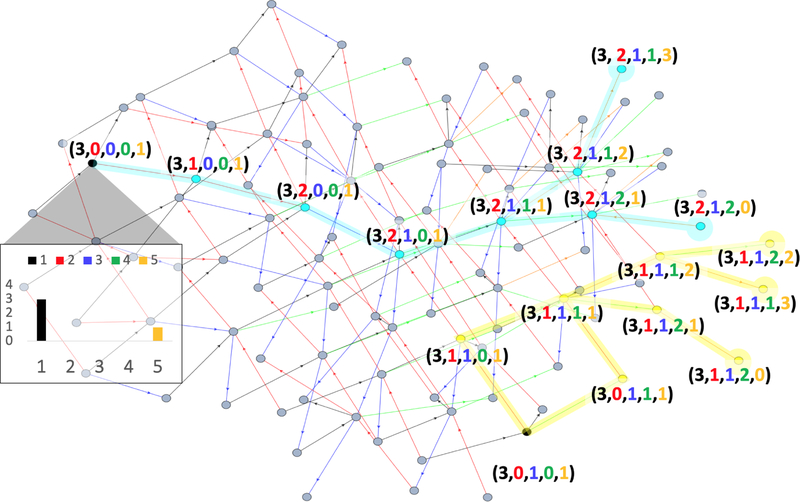

We use the example given in Figure 4 to explain the above mentioned relationships of mdd-function. This figure depicts a subgraph of 1-sensitive neighborhood graph for (β = 3, r = 1)-anomaly. One can appreciate the conceptual difficulty in calculating mdd-function, (for this setting) by for example thinking the value of (and recall that this is just a 1-sensitive neighborhood graph). Next, note that for a given database x and a record i, the shorter is the distance of the closest sensitive record from i, the smaller the value of , e.g. . Furthermore, the presence of non-sensitive records can also influence the value of the mdd-function, e.g. although the closest sensitive record to 5 is the same in both the databases. In addition, the values of β and r also affect the value of mdd-function, and in most realistic settings, the size of is large, and the sensitive neighborhood graph is quite complex.

Figure 4:

Sensitive neighborhood graph. A simple example of a 1-sensitive neighborhood graph, GS, with the set of possible records {1, 2, 3, 4, 5}, the ℓ1-metric over the records, and (β = 3, r = 1)-anomaly. Note that GS is an undirected graph; an arrowhead indicates that the record is added at the end node, and the color of the edge corresponds (as per the given color code) to the value of the record added. Further, each database x is represented as a 5-tuple, with xi representing the number of records in x that have value i.

Below, we provide a construction that uses a lower bound on the mdd-function to give a sensitively private mechanism. The construction does not depend upon any particular definition of anomaly; thus, it can be used to give private mechanisms for AIQs as long as one is able to compute the lower bound.

4.1. Construction: SP-mechanism for AIQ by lower bounding mdd-function

Here, we show how to construct an SP mechanism for identifying anomalies by using a lower bound, λ, for the mdd-function. Our construction (Construction 1) will be parameterized by λ, which is associated with a sensitive neighborhood graph. Since the sensitive neighborhood graph is tied to an anomaly definition, it will become concrete once we give the definition of anomaly (e.g., see Section 4.1.1 and Section 4.1.2).

For any fixed AIQ, (i, g), and given λ, Construction 1 provably gives an SP mechanism as long as λ fulfills the following two properties: (1) for every i and x, λ(i, x) ≥ 1, and (2) λ is a 1-Lipschitz continuous lower bound on the mdd-function (Theorem 4.1).

For a sensitive neighborhood graph, GS, we say a real-valued function f of a record and a database is α-Lipschitz continuous if for every record i and neighboring databases x and y in GS, |f(i, x) − f(i, y)| ≤ α.

We remark that although at first the Lipschitz continuity condition appears to be a side technicality, bounding its value in fact constitutes the main part of our argument for the privacy of our mechanisms. Thus, giving an SP mechanism for (i, g) via Construction 1 reduces to giving a Lipschitz continuous lower bound for the mdd-function corresponding to g.

Construction 1. Uλ

Input: a database x.

Set t = e^(−ε(λ(i, x) − 1)) / (1 + e^ε).

Sample b from {0, 1} such that P (b ≠ g(i, x)) = t.

Return b.
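The steps of Construction 1 can be sketched in a few lines. This is an illustrative sketch, not the authors' implementation: it assumes g and λ are supplied as Python callables taking (i, x), that g returns 0 or 1, and the names `error_probability` and `u_lambda` as well as the optional `rng` argument are hypothetical.

```python
import math
import random


def error_probability(lam_value: float, eps: float) -> float:
    """t = e^(-eps * (lambda(i, x) - 1)) / (1 + e^eps), as in Construction 1."""
    return math.exp(-eps * (lam_value - 1.0)) / (1.0 + math.exp(eps))


def u_lambda(x, i, g, lam, eps, rng=random):
    """Sample b in {0, 1} with P(b != g(i, x)) = t and return it.

    g(i, x) must return 0 or 1; lam(i, x) must return a value >= 1.
    rng is any object with a random() method returning a float in [0, 1).
    """
    t = error_probability(lam(i, x), eps)
    label = g(i, x)
    # Flip the true label with probability t; otherwise report it unchanged.
    return 1 - label if rng.random() < t else label


if __name__ == "__main__":
    # Error probability decays exponentially as the lower bound lambda grows.
    for lam_value in (1, 2, 5, 10):
        print(lam_value, error_probability(lam_value, eps=0.5))
```

Passing the random source explicitly is only a convenience for reproducible experiments; privacy in a deployment would depend on the quality of the randomness, which this sketch does not address.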

Note that the above is a family of constructions parameterized by λ (as mentioned above), i.e., one construction, Uλ, for each λ. This construction is very efficiently realizable as long as we can efficiently compute g and λ. Furthermore, the error of the mechanism, yielded by the construction, for any input is exponentially small in λ (Claim 2, which immediately follows from the construction).

Claim 2. For given ε, (i, g), and λ, Uλ (Construction 1) is such that P(Uλ(x) ≠ g(i, x)) = e^(−ε(λ(i, x) − 1)) / (1 + e^ε) for every x.

Theorem 4.1 (Uλ is SP). For any given ε, AIQ, and a 1-Lipschitz continuous lower bound λ on the corresponding mdd-function for k-sensitive neighborhood graph, GS, such that λ ≥ 1, Construction 1 yields an (ε, k)-sensitively private mechanism.

In order to show that the theorem holds, it suffices to verify that for every i and every two neighboring x and y in GS, the privacy constraints hold. For any AIQ, (i, g), this is immediate when g(i, x) = g(i, y) because λ is 1-Lipschitz continuous. When g(i, x) ≠ g(i, y), we have λ(i, x) = λ(i, y) = 1: the mdd-function equals 1 at both x and y (each has a neighbor with a different label), λ is a lower bound on it, and λ ≥ 1. Thus, the constraints are satisfied in this case as well. The complete proof of Theorem 4.1 is given in Appendix A.4. Additionally, a simple observation on the proof of Theorem 4.1 shows that if the given λ is α-Lipschitz continuous with α ≥ 1, then Construction 1 yields an (ε · α)-sensitively private mechanism.
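The two cases of this argument can be sanity-checked numerically. The sketch below is a spot check under stated assumptions, not a proof: it builds the output distribution that Construction 1 induces for a given label and λ value, and compares two neighboring databases; the names `output_distribution` and `max_ratio` are hypothetical.

```python
import math


def output_distribution(label: int, lam_value: float, eps: float) -> dict:
    """Distribution of the output of Construction 1 for a given g(i, x) and lambda(i, x)."""
    t = math.exp(-eps * (lam_value - 1.0)) / (1.0 + math.exp(eps))
    return {label: 1.0 - t, 1 - label: t}


def max_ratio(dist_x: dict, dist_y: dict) -> float:
    """Largest ratio between the probabilities of any single output, in either direction."""
    return max(max(dist_x[b] / dist_y[b], dist_y[b] / dist_x[b]) for b in (0, 1))


if __name__ == "__main__":
    eps = 0.5
    # Case 1: same label on neighbors, lambda differs by at most 1.
    same = max_ratio(output_distribution(1, 3, eps), output_distribution(1, 4, eps))
    # Case 2: different labels on neighbors, which forces lambda = 1 on both.
    diff = max_ratio(output_distribution(1, 1, eps), output_distribution(0, 1, eps))
    print(same, diff, math.exp(eps))
```

Because the output is binary, bounding the ratio for each single output is enough to bound it for every event R.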

In the following two sections, we instantiate Construction 1 to give differentially private and sensitively private mechanisms for performing (β, r)-anomaly identification query. We will use these mechanisms in our empirical evaluation over real world datasets.

4.1.1. Optimal DP-mechanism for (β, r)-AIQ.

Here, we show how to use Construction 1 to give an optimal differentially private mechanism for (β, r)-AIQ. Note that we will use this mechanism in the experimental evaluation (Section 5) and compare its performance with our SP mechanism (which we will present shortly). We begin by restating the definition of DP in terms of the neighborhood graph. This restatement will immediately establish that SP generalizes DP, a fact we will use to build a DP mechanism.

Definition 4.2 (DP restated with neighborhood graph). For ε > 0, a mechanism, M, whose domain is the set of databases, is ε-differentially private if for every two neighboring databases, x and y, in the neighborhood graph, and every R ⊆ Range (M), $P(M(x) \in R) \le e^{\varepsilon}\, P(M(y) \in R)$.

From Definition 3.2 (of sensitive privacy) and Definition 4.2, it is clear that differential privacy is the special case of sensitive privacy in which the k-sensitive neighborhood graph, GS, is the same as the neighborhood graph. Thus, in this case, a mechanism is ε-differentially private if and only if it is ε-sensitively private. This observation is sufficient to give a differentially private mechanism for AIQ by using Construction 1.

We use the mdd-function for the neighborhood graph as λ in Construction 1 to give the DP mechanism for (β, r)-AIQ; this mdd-function, for arbitrary β, r, i, and x, is given by (2) below. This will yield a DP mechanism as long as the mdd-function for (β, r)-AIQ is 1-Lipschitz continuous, a fact that immediately follows from the above observation and Theorem 4.1. We claim that for any given β and r, the function given by (2) is the mdd-function for the (β, r)-AIQ and is 1-Lipschitz continuous (Lemma 4.3).

| (2) |

Lemma 4.3. For any fixed (β, r)-AIQ, (i, g), the function given by (2) is the mdd-function for g and is 1-Lipschitz continuous.

The proof of Lemma 4.3 can be found in Appendix A.5.

We claim that for any fixed (β, r)-AIQ, (i, g), the mechanism given by Construction 1 with λ set to the mdd-function in (2) is differentially private and has the minimum error on every input (Theorem 4.4); namely, it is Pareto optimal. We say a mechanism is a Pareto optimal ε-DP mechanism if (a) it is ε-DP and (b) for every ε-DP mechanism and every database, its probability of error is no larger than that of the other mechanism. In particular, this implies that of all the DP mechanisms yielded by Construction 1, each corresponding to a different λ, the “best” mechanism is the one for which λ is the mdd-function given by (2).

Theorem 4.4 (the mechanism is optimal and DP). For any fixed (β, r)-AIQ, the mechanism yielded by Construction 1 is a Pareto optimal ε-differentially private mechanism when λ is the mdd-function given by (2).

4.1.2. SP-mechanism for (β, r)-AIQ.

We employ Construction 1 to give an (ε, k)-sensitively private mechanism for (β, r)-AIQ. We provide λk below, which is a 1-Lipschitz continuous lower bound on the mdd-function for the k-sensitive neighborhood graph for (β, r)-anomaly (Lemma 4.5). For this λk, Construction 1 yields an (ε, k)-SP mechanism that, for non-sensitive records, can have error exponentially small in β (Theorem 4.6).

| (3) |

Lemma 4.5. Arbitrarily fix k, β ≥ 1 and r ≥ 0. Let g be the (β, r)-anomaly identification function, and consider the mdd-function for g with respect to GS, where GS is the k-sensitive neighborhood graph for (β, r)-anomaly. The λk given by (3) is a 1-Lipschitz continuous lower bound on this mdd-function.

The proof of Lemma 4.5 is given in Appendix A.7.

It is clear from the definition of λk (given by (3)) that when a record, i, is k-sensitive with respect to x, there is no gain in utility (i.e., accuracy) compared to the optimal DP mechanism (in Section 4.1.1). However, when a record is not sensitive, our SP mechanism achieves much higher utility than the optimal DP mechanism, which is especially true for strong (β, r)-anomalies (i.e., the records that lie in a very sparse region).

Theorem 4.6 (accuracy and privacy of the SP mechanism). Fix any (β, r)-AIQ, (i, g). The mechanism yielded by Construction 1 for the λk above is (ε, k)-SP and such that for every i and x, if i is not sensitive for x, then

The privacy claim follows from Lemma 4.5 and Theorem 4.1, while the accuracy claim is an immediate implication from Construction 1 based on the definitions of and λk – note that Bx (i, r) < β + 1 − k implies i is not sensitive for x (Lemma A.2).

We give an example to show that our SP mechanism achieves high accuracy in typical settings. Fix k ≤ β/10. Now any record i in a database x satisfying Bx (i, r) ≤ β/2 is an outlier for which the mechanism will err with probability less than $e^{-2\varepsilon\beta/5}$.
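To get a feel for the size of this bound, the short sketch below evaluates $e^{-2\varepsilon\beta/5}$ for the values of β listed in Table 1 at ε = 0.1. It is only an illustration: the bound applies to records with Bx (i, r) ≤ β/2 when k ≤ β/10, and the function name `example_error_bound` is hypothetical.

```python
import math


def example_error_bound(eps: float, beta: int) -> float:
    """Upper bound e^{-2 * eps * beta / 5} from the example (k <= beta/10, B_x(i, r) <= beta/2)."""
    return math.exp(-2.0 * eps * beta / 5.0)


# Values of beta taken from Table 1.
TABLE1_BETA = {
    "Credit Fraud": 1022,
    "APS Trucks": 282,
    "Synthetic": 97,
    "Mammography": 55,
    "Thyroid": 18,
}

for name, beta in TABLE1_BETA.items():
    print(f"{name:>12}: beta = {beta:>4}, bound = {example_error_bound(0.1, beta):.3e}")
```

The bound decays exponentially in β, so it is far smaller for the larger datasets than for the smaller ones.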

4.2. Compiler for SP-mechanism for AIQ

In this section, we present a construction compiler, which compiles a differentially private mechanism for an anomaly identification query into a sensitively private one. This SP mechanism can outperform the differentially private mechanism. Furthermore, our compiler is not specific to any particular definition of anomaly or any specific DP mechanism. The differentially private mechanism, which the compiler takes, is given in terms of its distribution over the outputs for every input. The compiled SP mechanism comparatively has much better accuracy for the non-sensitive records; however, for the sensitive records, the SP and the input DP mechanism err by the same amount.

It is noteworthy that for many problems, we already know the distributions given by differentially private mechanisms [15, 17, 18]. Thus, our construction can be employed using these mechanisms as long as the distributions given by the differentially private mechanism are not too “wild”; for example, the probability of the wrong answer for any input is not too high (we formalize this below), which is typically true.

The compiler construction is parameterized by δ. This δ must be a non-negative lower bound on the difference between the mdd-function for GS and the mdd-function for the neighborhood graph, and it must also be 2-Lipschitz continuous (both mdd-functions are for an arbitrarily fixed g, and GS is the k-sensitive neighborhood graph for the anomaly definition for g). The non-negativity constraint is a side technicality; however, bounded divergence (i.e., the Lipschitz continuity constraint) and the lower bound constraint play a pivotal role in arguing about the privacy of the compiled mechanism. Given below is our construction; it will be useful when obtaining δ is easier than obtaining λ and we already know the distributions of a DP mechanism for the problem.

Construction 2. Uδ

Input: database x.

Set .

Sample b from {0, 1} such that P (b ≠ g(i, x)) = t.

Return b.

A differentially private mechanism (given in terms of its distributions) that can be transformed, with provable guarantees, through our compiler is termed a valid mechanism. For ε > 0 and any fixed AIQ, (i, g), we say an ε-DP mechanism, M, is valid if for every two neighbors x and y in the neighborhood graph with g(i, x) = g(i, y) = b for some b ∈ {0, 1}, the following holds

Note that any ε-differentially private mechanism, M, for a fixed AIQ, (i, g), that satisfies $P(M(x) \neq g(i, x)) \le e^{2\varepsilon}/(1 + e^{2\varepsilon})$ for every x is valid. This is shown below for ε > 0 and two arbitrary neighbors x and y such that b = g(i, x) = g(i, y); hence the notion of a valid differentially private mechanism is well defined.

since M is ε-DP, it follows from the above

We claim that for a given valid differentially private mechanism, M, for a fixed AIQ, (i, g), and a non-negative 2-Lipschitz continuous lower bound δ as specified above, Construction 2 compiles M into a sensitively private mechanism, Uδ (Theorem 4.7). We stress that for the compiled SP mechanism, the probability of error can be exponentially smaller than that of the input DP mechanism, which is especially true for the non-sensitive records. This leads to an improvement in accuracy. Clearly, as the input mechanism, M, to the compiler becomes better (i.e., has lower error), so does the compiled sensitively private mechanism, Uδ, since the error of Uδ is never more than that of M.

Theorem 4.7. For k ≥ 1 and a given valid ε/2-DP mechanism, M, for any AIQ, (i, g), and non-negative 2-Lipschitz continuous lower bound, δ, on , Construction 2 yields an ε-SP mechanism, Uδ, for k-sensitive neighborhood graph corresponding to the anomaly definition for g such that

To confirm the above claim, we show that the mechanism, Uδ, given by the construction above indeed satisfies the privacy constraints imposed by the sensitive privacy definition for every two neighboring databases in the k-sensitive neighborhood graph. We can accomplish this by showing that the privacy constraints are satisfied by any two arbitrarily picked neighbors, x and y, for an arbitrarily picked valid ε/2-differentially private mechanism, M, for an anomaly identification query, (i, g), and a δ as specified above. We divide the argument into two cases and confirm in each case that the privacy constraints are satisfied. Case 1: δ (i, x) = δ (i, y) = 0. Here the constraints follow from M being differentially private, because if M is ε/2-differentially private then it is also ε-differentially private. Case 2: δ (i, x) > δ (i, y) ≥ 0; this is without loss of generality since x and y are picked arbitrarily. This case holds because M is valid and ε/2-differentially private, δ is non-negative and 2-Lipschitz continuous, and g(i, x) = g(i, y) for such neighboring x and y. We give the complete proof of Theorem 4.7 in Appendix A.8.

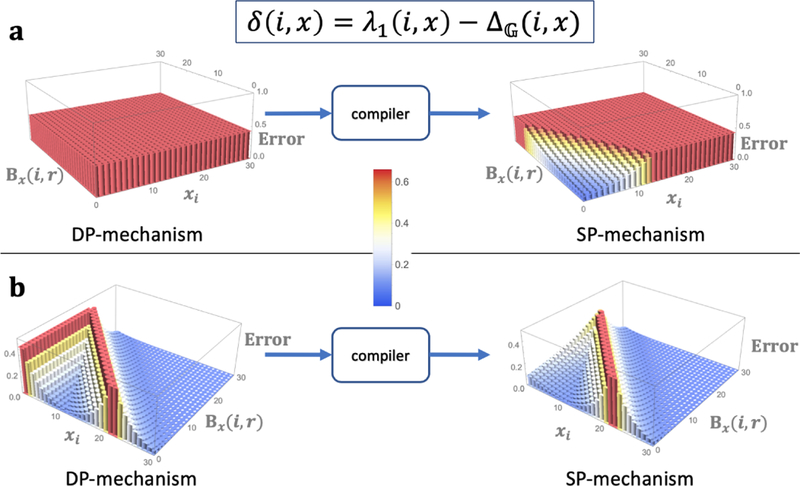

We highlight the effectiveness of the compiler by instantiating it for (β, r)-anomaly with the δ given in Figure 5. Figure 5 shows the compilation of two DP mechanisms for (β, r)-AIQ, which differ widely in their performance. As expected, the compiled SP-mechanism outperforms the input DP-mechanism.

Figure 5: compilation of DP-mechanism for (β, r)-AIQ into SP-mechanism. In both (a) and (b), the input mechanism is 0.25-DP for a fixed record i and δ (given in the figure). Each database x is given by (xi, Bx (i, r)), since the (β, r)-anomaly identification function depends only upon xi and Bx (i, r). Each mechanism is depicted by its error over databases, i.e., P (M (x) ≠ g(i, x)). (a) The DP-mechanism has constant error ≈ 0.44. (b) The input is the DP-mechanism of Section 4.1.1.

In Figure 5a, the input DP mechanism, M, has a constant error for every input database, that is, $1/(1 + e^{\varepsilon})$ for fixed ε = 0.25. Clearly, this mechanism has extremely bad accuracy. This is a difficult case even for the compiled mechanism, which nevertheless attains an exponential gain in accuracy for non-sensitive records. However, when we input the DP-mechanism given in Section 4.1.1, which is much better than the one in Figure 5a, the compiled mechanism is clearly superior to the one in Figure 5a (Figure 5b).

Note that the δ in Figure 5 is a non-negative 2-Lipschitz continuous lower bound on (as required by Theorem 4.7), where λ1 is given by (3) for k = 1 and is given by (2). follows because . The first inequality follows from Lemma 4.5. The second one trivially holds true for all the cases except for xi ≥ 1 and Bx (i, r) < β, where λ1(i, x) = β + 1 − Bx (i, r) and ; thus, even in this case, we get δ (i, x) = max(β + 1 − Bx (i, r) − xi, 0) ≥ 0. The 2-Lipschitz continuity of δ follows from the λ1 and being 1-Lipschitz continuous (Lemma 4.5 and Lemma 4.3). Thus, for any i and two neighbors x and y in GS (1-sensitive neighborhood graph),

Remark:

We emphasize that neither of our constructions is tied to any specific definition of anomaly, and even the requirement of Lipschitz continuity is due to privacy constraints.

5. EMPIRICAL EVALUATION

To evaluate the performance of the SP-mechanism for (β, r)-anomaly identification, we carry out several experiments on a synthetic dataset and real-world datasets from diverse domains: Credit Fraud [10] (available at Kaggle [23]), Mammography and Thyroid (available at Outlier Detection DataSets Library [41]), and APS Trucks (APS Failure at Scania Trucks, available at UCI machine learning repository [14]). Table 1 provides the dataset specifications.

Table 1: dataset specifications and parameter values.

| Dataset | size | dim | (β, r) | true (β, r)-anomalies |
|---|---|---|---|---|
| Credit Fraud | 284,807 | 28 | (1022, 6.7) | 103 |
| APS Trucks | 60,000 | 170 | (282, 16.2) | 677 |
| Synthetic | 20,000 | 200 | (97, 3.8) | 201 |
| Mammography | 11,183 | 6 | (55, 1.7) | 75 |
| Thyroid | 3,772 | 6 | (18, 0.1) | 61 |

To generate the synthetic data, we followed the strategy of Dong et al. [12], which is standard in the literature. The synthetic data was generated from a mixed Gaussian distribution, given below, where I is the identity matrix of dimension d × d, σ ≪ 1, and $e_{i_t}$ is a standard basis vector. In our experiments, we used ρ = .01 and a = 5, and chose the standard basis vectors uniformly at random.

The aim of this work is to study the effect of privacy in identifying anomalies. So we keep the focus on evaluating the proposed approach for achieving privacy for this problem, and on how it compares to differential privacy in real-world settings. Our experiments make use of the popular (β, r) notion of anomaly.

Following the standard practice for identifying outliers in higher-dimensional data [1, 28], we carried out principal component analysis (PCA) to reduce the dimension of the three datasets with higher dimension. We chose the top 6, 9, and 12 features for the Credit Fraud, Synthetic, and APS Trucks datasets, respectively. Next, we obtain the values of β and r, which typically are provided by domain experts [35]. Here, we employed the protocol outlined in Appendix A.2 to find β and r; this protocol follows the basic idea of parameter selection presented in the work [35] that proposed the notion of (β, r)-anomaly. Table 1 gives the values of β and r, which we found through the protocol, along with the number of true (β, r)-anomalies (true anomalies identifiable by the (β, r)-anomaly method for the given parameter values).

Error:

We measure the error of a private mechanism (which is a randomized algorithm) as its probability of outputting the wrong answer; recall that in the case of AIQ, there are only two possible answers, i.e., 0 or 1. For each AIQ for a fixed record, we estimate the error by the fraction of mistakes over m trials. In our experiments, we set m = 10,000.
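The estimation procedure described above amounts to a simple Monte Carlo loop. The following is a minimal sketch under assumptions, not the evaluation code used for the experiments: the mechanism is modeled as a zero-argument callable returning 0 or 1, and `estimate_error` and `make_toy_mechanism` are hypothetical names.

```python
import random


def estimate_error(mechanism, true_label: int, m: int = 10_000) -> float:
    """Estimate P(mechanism() != true_label) as the fraction of mistakes over m trials."""
    mistakes = sum(1 for _ in range(m) if mechanism() != true_label)
    return mistakes / m


def make_toy_mechanism(true_label: int, error_prob: float, rng: random.Random):
    """Hypothetical stand-in for a private mechanism with a known error probability."""
    def mechanism() -> int:
        return 1 - true_label if rng.random() < error_prob else true_label
    return mechanism


rng = random.Random(42)
toy = make_toy_mechanism(true_label=1, error_prob=0.25, rng=rng)
print(estimate_error(toy, true_label=1))
```

With m = 10,000 trials, such an estimate cannot resolve error probabilities much smaller than 1/m, which is why very small errors are better reported from the mechanism's exact error probability.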

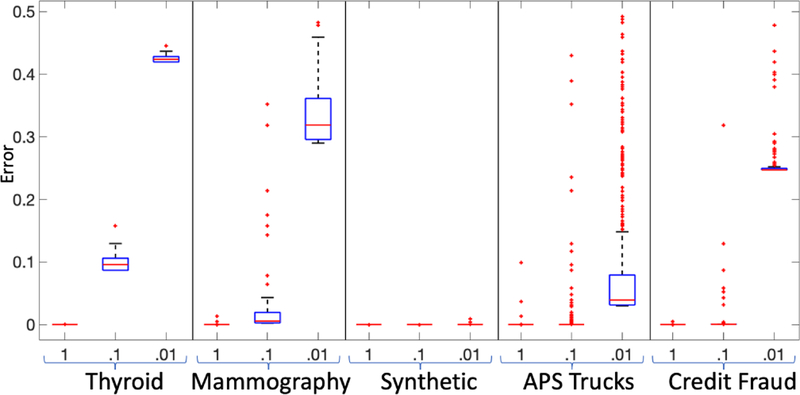

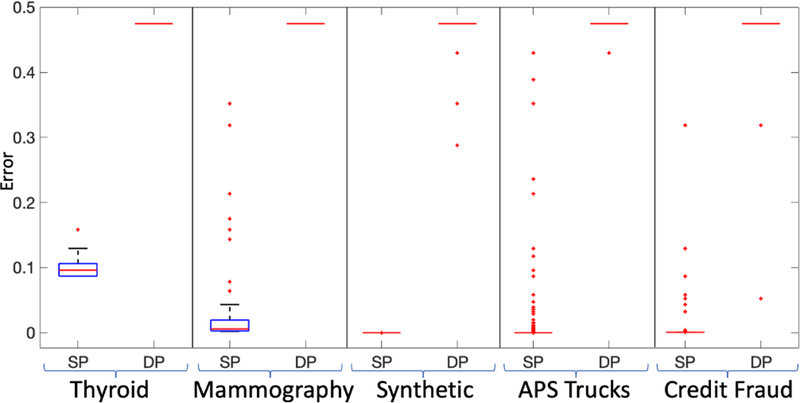

For each dataset, we find all the true (β, r)-anomalies and, for each of them, perform a private anomaly identification query using the SP-mechanism (given in Section 4.1.2) and the DP-mechanism (given in Section 4.1.1) for ε = 0.01, 0.1, and 1, and compute the error, which we report as box plots in Figure 6. The reason we only considered our DP mechanism for this part is that it is the best among the baselines (see Table 3) and it also has strong accuracy guarantees (Theorem 4.4). The error of the SP-mechanism is, in many cases, so small (e.g., of the order $10^{-15}$ or even smaller for larger values of ε) that it can be considered zero for all practical purposes. Furthermore, as the data size increases (and correspondingly the value of β), the error of the SP-mechanism decreases. However, in the case of anomalies, the error of the DP-mechanism is consistently close to that of a random coin flip (i.e., selecting 0 or 1 with probability 1/2) except for a few anomalous records in some cases; we will shortly explain the reason for this. The error of the SP-mechanism was overwhelmingly concentrated about zero (Figure 6), which is also true for the smaller values of ε. Thus, we can have a higher privacy guarantee for sensitive records while still being able to accurately identify anomalies. Also, note that as the size of the dataset increases, not only does the error of the SP-mechanism decrease (for anomalies), but so does its divergence. Thus, our methodology is even more appropriate for big data settings. On the other hand, for anomalies, the errors of the DP-mechanism are concentrated about $1/(1 + e^{\varepsilon})$ (Figure 7). This is in accordance with our theoretical results and the assumption that the databases are typically sparse.
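For reference, the value $1/(1 + e^{\varepsilon})$ about which the DP errors concentrate is easy to tabulate. The sketch below evaluates it for the values of ε used in this section and in Figure 5; the function name `dp_concentration_error` is hypothetical.

```python
import math


def dp_concentration_error(eps: float) -> float:
    """The value 1 / (1 + e^eps), which approaches 1/2 as eps approaches 0."""
    return 1.0 / (1.0 + math.exp(eps))


for eps in (0.01, 0.1, 0.25, 1.0):
    print(f"eps = {eps:<5} error = {dp_concentration_error(eps):.4f}")
```

At ε = 0.1 this is about 0.4750, and at ε = 0.25 it is about 0.44, so for small ε the DP mechanism is indeed close to a coin flip on such records.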

Figure 6: box plots of the errors of the SP mechanism for (β, r)-AIQ over the true (β, r)-anomalies for ε = {.01, .1, 1}.

Table 3: B1 and B2 are the best mechanisms from two families of mechanisms. DP and SP are the mechanisms from Section 4.1.1 and Section 4.1.2, respectively. Going from red to blue the value decreases. ε = 0.1.

| Dataset | Precision (B1) | Precision (B2) | Precision (DP) | Precision (SP) | Recall (B1) | Recall (B2) | Recall (DP) | Recall (SP) | F1-score (B1) | F1-score (B2) | F1-score (DP) | F1-score (SP) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Credit Fraud | 0.0101 | 0.0230 | 0.9930 | 0.9963 | 1.0000 | 0.0498 | 0.5250 | 0.9968 | 0.0199 | 0.0315 | 0.6868 | 0.9966 |
| APS Trucks | 0.0115 | 0.0165 | 0.9870 | 0.9931 | 1.0000 | 0.0753 | 0.5263 | 0.9954 | 0.0227 | 0.0271 | 0.6865 | 0.9943 |
| Synthetic | 0.0101 | 0.0114 | 0.9930 | 0.9963 | 1.0000 | 0.1189 | 0.5250 | 0.9968 | 0.0199 | 0.0208 | 0.6868 | 0.9966 |
| Mammography | 0.0070 | 0.0081 | 0.0211 | 0.2004 | 0.8244 | 0.1000 | 0.5250 | 0.9977 | 0.0138 | 0.0149 | 0.0435 | 0.3337 |
| Thyroid | 0.0174 | 0.0191 | 0.1427 | 0.3100 | 0.6656 | 0.2918 | 0.5250 | 0.8993 | 0.0339 | 0.0358 | 0.2244 | 0.4610 |

Figure 7: box plots of the error of the SP and the DP mechanisms for (β, r)-AIQ over the true (β, r)-anomalies for ε = 0.1.

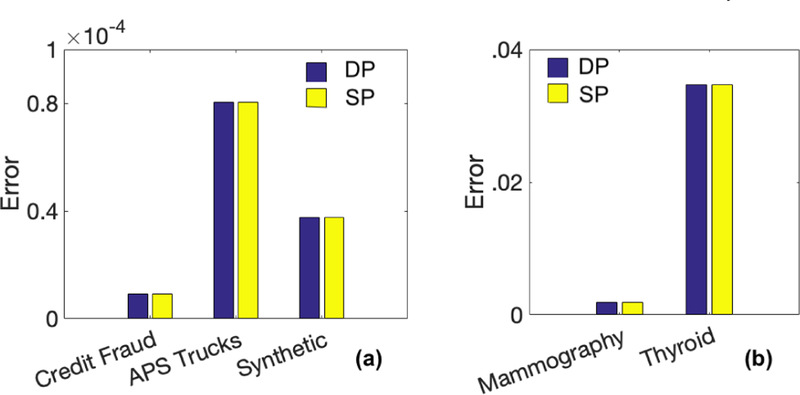

Next, we evaluated the performance over the normal records. Here, both the SP and the DP mechanisms performed equally (Figure 8). For the same value of ε, every sensitive record in the database has the same level of privacy under sensitive privacy as all the records under differential privacy; thus the same level of accuracy should be achievable under both the privacy notions. Here we see again that datasets with larger sizes exhibit very small error.

Figure 8: evaluation over normal records.

(a),(b), give the average error of SP and DP mechanism for AIQ over all the normal records from each data set; ε = 0.1.

To evaluate the performance over future queries, we picked n records uniformly at random from the space of possible (values of) records for each dataset, where n was set to 20% of the size of the dataset. Here too, the SP-mechanism outperforms the DP-mechanism significantly (Table 2). This is because most of the randomly picked records are anomalous as per the (β, r)-anomaly, which is due to the sparsity of the databases. This fact becomes very clear when we compare the mean error over the random records to the mean error over the anomalous records among the randomly picked records (see the second and the last column of Table 2). Since the probability of observing a mistake is extremely small (e.g., 1 in $10^{10}$ trials), the mean in Table 2 is computed over the actual probability of error of the mechanism instead of the estimated error.

Table 2: effect of sparsity of databases. “mean error” is over the randomly picked n records from the possible values of the records for each dataset for SP and DP mechanisms for (β, r)-AIQ. “mean error (anomalies)” is only over the anomalous records in the n picked records. Here, n is 20% of the size of the dataset and ε = 0.1.

| Dataset | mean error (SP) | mean error (DP) | mean error (anomalies) (SP) |
|---|---|---|---|
| Credit Fraud | 1.1127E-21 | 0.4750 | 1.1127E-21 |
| APS Trucks | 2.9719E-13 | 0.4750 | 2.9719E-13 |
| Synthetic | 3.2173E-5 | 0.4750 | 3.2173E-5 |
| Mammography | 0.0022 | 0.4749 | 0.0021 |
| Thyroid | 0.0870 | 0.4750 | 0.0867 |

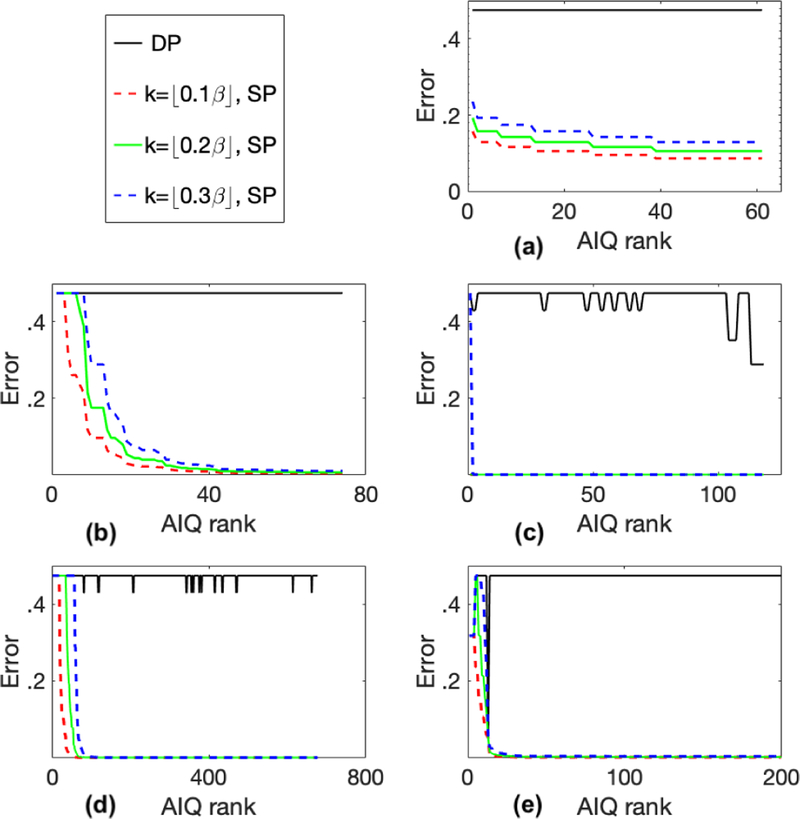

We already saw that by increasing k we move the boundary between sensitive and non-sensitive records (Figure 3). To observe the effect of varying k on real-world datasets, we carried out experiments on the datasets with k = ⌊0.1β⌋, ⌊0.2β⌋, and ⌊0.3β⌋; recall that a record is considered k-sensitive with respect to a database if the record is normal or becomes normal under the addition and/or deletion of at most k records from the database. Note that if k ≥ β + 1 then every record will be sensitive regardless of the database. The results are provided in Figure 9. Here we conclude that even for the higher values of k, the SP-mechanism performs reasonably well. Further, if the dataset is large enough, then the loss in accuracy for most of the records is negligible.
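For concreteness, the values of k used in this experiment can be derived from the values of β in Table 1. The sketch below does this with integer arithmetic, so that the floor is exact, and also encodes the remark that every record is sensitive once k ≥ β + 1; the function names are hypothetical.

```python
def k_values(beta: int, tenths=(1, 2, 3)):
    """Return floor(0.1 * beta), floor(0.2 * beta), floor(0.3 * beta) using exact integer arithmetic."""
    return [(beta * t) // 10 for t in tenths]


def every_record_sensitive(k: int, beta: int) -> bool:
    """True when k >= beta + 1, in which case every record is sensitive regardless of the database."""
    return k >= beta + 1


# Values of beta taken from Table 1.
for name, beta in [("Credit Fraud", 1022), ("APS Trucks", 282), ("Synthetic", 97),
                   ("Mammography", 55), ("Thyroid", 18)]:
    ks = k_values(beta)
    print(name, ks, [every_record_sensitive(k, beta) for k in ks])
```

All three choices of k stay well below β + 1, so non-sensitive records still exist in each setting.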

Figure 9: evaluation over true (β, r)-anomalies for varying k.

(a)-(e), give the errors of SP and DP mechanisms. AIQ rank is given by the error of SP-mechanism for each anomaly: the higher the rank, the lower the error. Mechanisms are as given in Section 4 and ε = 1. (a), Thyroid, (b), Mammography,(c), Credit Fraud, (d), APS Trucks, (e), Synthetic data.

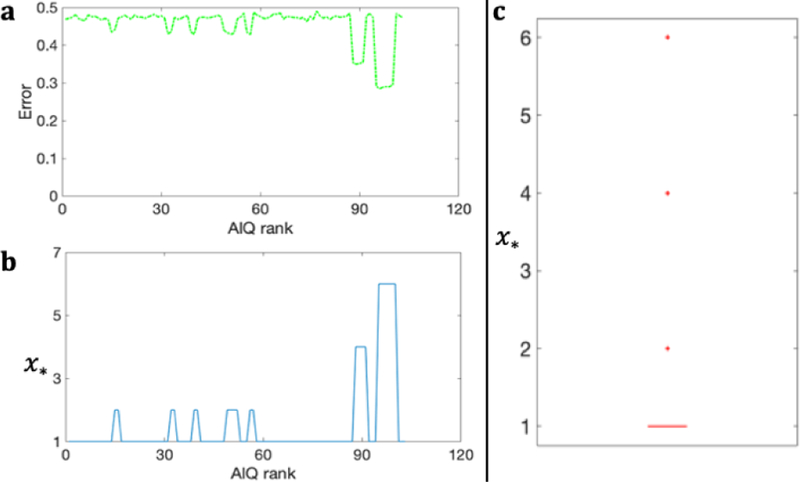

We see that for the Credit Fraud and APS Trucks datasets, the differentially private AIQ for some of the anomalous records gives a smaller error. We explain this deviation using the Credit Fraud dataset as an example. The deviation in the error occurs whenever the anomalous record is not unique (Figure 10a–b), which is typically rare (Figure 10c). The reason the DP-mechanism’s error remains constant in most cases is that the anomalies lie in a very sparse region of space and mostly do not have any duplicates (i.e., other records with the same value; xi ≈ 1).

Figure 10: deviation in the DP-mechanism error for the Credit Fraud dataset. In (a), the plot is the same as given in Figure 9c for the DP-mechanism. In (b) and (c), x* for each record is the number of records in the database x that have the same value. (c) shows the box plot for the data.

Finally, to evaluate the overall performance of our SP-mechanism, we computed precision, recall, and F1-score [1]. We also provide a comparison with two different baseline mechanisms, B1 and B2, in addition to the Pareto optimal DP mechanism (see Table 3).

B1 and B2 are the best performing mechanisms (i.e., with the highest F1-score) from two families of mechanisms. Each mechanism in each family is identified by a threshold t, where 0 ≤ t ≤ 1. Below, we describe the mechanisms from both families for a fixed ε, threshold t, record i, and database x. The mechanism in the first family is given as B1,t (x) = 1 if and only if ; here gives the number of anomalies in x and Lap(1/ε) is independent noise from the Laplace distribution with mean zero and scale 1/ε. The mechanism in the second family is given as B2,t (x) = 1 if and only if . Note that the mechanisms from the first family are ε1-DP, where ε1 ≥ βε. This is due to the fact that [15]. However, the mechanisms from the second family are ε2-DP, where ε2 ≥ ε.

Our mechanism outperforms all the baselines. Furthermore, DP-mechanism largely outperforms the rest of the baselines.
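The metrics reported in Table 3 follow the usual definitions, with the F1-score being the harmonic mean of precision and recall. The following minimal sketch spells them out; the function names and the count-based interface are illustrative assumptions rather than the evaluation code used here.

```python
def precision_recall(tp: int, fp: int, fn: int):
    """Precision = tp / (tp + fp); recall = tp / (tp + fn); 0.0 when a denominator is zero."""
    precision = tp / (tp + fp) if (tp + fp) > 0 else 0.0
    recall = tp / (tp + fn) if (tp + fn) > 0 else 0.0
    return precision, recall


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; defined as 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


# Example: the DP entries for Thyroid in Table 3 (precision 0.1427, recall 0.5250).
print(round(f1_score(0.1427, 0.5250), 4))
```

Because the harmonic mean is dominated by the smaller of the two quantities, a mechanism with perfect recall but very low precision, such as B1 on several datasets, still obtains a low F1-score.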

6. RELATED WORK

To our knowledge, there has been no work that formally explores the privacy-utility trade-off in privately identifying anomalies, where sensitive records (which include the normal records defined in a data-dependent fashion) are protected against inference attacks about their presence or absence in the database used.

Differential privacy [15, 17] has shaped the field of private data analysis. This notion aims to protect everyone, and in a sense, many of the DP mechanisms (e.g. Laplace mechanism) achieve privacy by protecting anomalies; and in doing so perturb the information regarding anomalies greatly. This adversely affects the accuracy of anomaly detection and identification. Furthermore, differential privacy is a special case of sensitive privacy (Section 4.1.1).

Variants of the notion of differential privacy address important practical challenges. In particular, personalized differential privacy [29], protected differential privacy [31], relaxed differential privacy [6], and one-sided differential privacy [13] have a reversed order of quantification compared to sensitive privacy. Sensitive privacy quantifies sensitive records and their privacy after quantifying the database, which is in contrast to the previous work. Thus, under sensitive privacy, it is possible for a record of some value to be sensitive in one database and not in another, while this cannot be the case in the above-mentioned definitions. On the other hand, by labeling records independently of the database (as in the previous work), one can solve a range of privacy problems such as counting queries and releasing histograms. Hence, this work solves the open problem (in [31]). Next, we present an individual comparison with each of the above-mentioned previous works, along with some other relevant ones from the literature.

Protected differential privacy [31] proposes an algorithm for social networks to search for anomalies that are fixed and are defined independently of the database. This is not extensible to the case where anomalies are defined relative to the other records [31]. Similarly, the proposed relaxed DP mechanism [6] is only applicable to anomalies defined in a data-independent manner.

One-sided differential privacy (OSDP) [13] is a general framework and is useful for applications where one can define the records to be protected independently of the database. Note that the notion of sensitive record in OSDP is different from the one considered here. Further, due to the asymmetric nature of its privacy constraints, OSDP fails to protect against inference about the presence or absence of a sensitive record (in general), which is not the case with sensitive privacy (see Appendix A.10.1).

Tailored differential privacy (TDP) [36] provides varying levels of privacy for a record, which is given by a function, α, of the record’s value and the database. However, the work is restricted to releasing histograms, where outliers are provided more privacy, whereas our focus is identifying anomalies, where anomalies may have lesser privacy. Further, the notion of anomaly used in the work [36] is the simple (β, 0)-anomaly. Extending it to the case of r > 0 is a non-trivial task since, here, changing a record in the database may affect the label (outlyingness) of another record with a different value. We also note that sensitive privacy is a specialized case of tailored differential privacy (see Appendix A.10.2).

Blowfish privacy (BP) [25] and Pufferfish privacy (PP) [33] are general frameworks, and provide no concrete methodology or direction to deal with anomaly detection or identification, where anomalies are defined in a data-dependent fashion. Sensitive privacy is a specialized class of definitions under these frameworks.

Thus, in terms of definition, our contribution in comparison with OSDP [13], TDP [36], BP [25], and PP [33] is defining the notion of sensitive record and the sensitive neighborhood graph that is appropriate and meaningful for anomalies (when defined relative to the other records), and giving constructions and mechanisms for identifying anomalies.

Finally, [4] proposed a method for searching outliers, which can depend on data, but this is done in a rather restricted setting, which has theoretical value (in [4] the input databases are guaranteed to have only one outlier, a structure not present in the typical available datasets; this is in addition to other input database restrictions required by [4]).

Other relaxations of differential privacy are not applicable to the problem we consider here: [2] is specific to location privacy, and [16] aims to achieve fairness in classification by preventing discrimination against individuals based on their membership in some group.

7. KEY TAKEAWAYS AND CONCLUSION

This work is the first to lay out the foundations of the privacy-preserving study of data dependent anomalies and develop general constructions to achieve this. It is important to reiterate that the formalization and conceptual development is independent of any particular definition of anomaly. Indeed, the definition of sensitive privacy (Definitions 3.1 and 3.2), and the constructions to achieve it (Construction 1 and Construction 2) are general and work for an arbitrary definition of anomaly (Theorem 4.1 and Theorem 4.7).

We noted earlier that sensitive privacy generalizes differential privacy. Thus, the guarantees provided by sensitive privacy are similar to that of differential privacy, and in fact, Construction 1 can be employed to give differentially private mechanisms for computing anomaly identification query or any binary function. However, in general, the guarantee provided by sensitive privacy to any two databases differing by one record could be correspondingly weaker than that offered by differential privacy depending on the distance between the databases in the sensitive neighborhood graph. There is also a divergence in guarantees in terms of composition. In differential privacy, composition is only in terms of the privacy parameter, ε. However, for sensitive privacy, composition needs to take into account not only the privacy parameter ε, but also the sensitive neighborhood graphs corresponding to the queries being composed. Nevertheless, the composition and post-processing properties (Section 3.1) hold regardless of the notion of the anomaly.

An extensive empirical study carried out over data from diverse domains overwhelmingly supports the usefulness of our method. The sensitively private mechanism consistently outperforms the differentially private mechanism, with an exponential gain in accuracy in almost all cases. Although it is easy to come up with example datasets where a differentially private mechanism also performs well (e.g., (β, r)-AIQ for i and x when xi = Bx (i, r) = β/2), the experiments with real data show that such cases are unlikely to occur in practice. Indeed, the experiments show that most of the anomalies occur in the setting where an ε-DP mechanism performs the worst, that is, where its error is close to $1/(1 + e^{\varepsilon})$ (a lower bound on the error of any ε-DP mechanism, which follows from Claim 1).

To conclude, in this paper, we develop methods for anomaly identification that provide a provable privacy guarantee to all records, which is calibrated to their degree of being anomalous (in a data-dependent sense), while enabling the accurate identification of anomalies. We stress that the currently available methodologies for protecting privacy in data analysis are fundamentally unsuitable for the task at hand: they either fail to stop identity inference from the data, or lack the ability to deal with the data-dependent definition of anomaly. Note that anomaly identification is only the first step to tackling the problem of anomaly detection (finding all the anomalous records in a dataset). In the future, we plan to tackle this and instantiate our framework for other anomaly detection models.

CCS CONCEPTS.

• Security and privacy → Privacy-preserving protocols; • Computing methodologies → Anomaly detection.

ACKNOWLEDGMENTS

Research reported in this publication was supported by the National Science Foundation under awards CNS-1422501, CNS-1564034, CNS-1624503, CNS-1747728 and the National Institutes of Health under award R01GM118574. The content is solely the responsibility of the authors and does not necessarily represent the official views of the agencies funding the research.

A. APPENDIX

A.1. Empirical evaluation protocols

Evaluation over normally distributed data: If the data is from a one-dimensional normal distribution with mean μ and standard deviation σ, then a record i is anomalous (or, equivalently, an outlier) if |i − μ| ≥ 3σ; this notion is statistically equivalent to the (β = $1.2 \times 10^{-3}\,n$, r = 0.13σ)-anomaly [35], where n is the size of the database.

To adapt this result for 2D normal distribution in Figure 2, set and compute β in a similar fashion as above. Next, take 30 samples of size 20K, i.e., n = 20,000, from the 2D normal distribution, N (μ, Σ), where μ = (0, 0) and , and run the SP-mechanism (given in Section 4.1.2) and the DP-mechanism (given in Section 4.1.1) for the (β, r)-anomaly identification query to compute accuracy, which is measured by the probability that the private mechanism outputs the correct answer, and average the results over the samples for each query. We then plot the average accuracy and interpolate the results using a degree-one polynomial in the two coordinates (Figure 2b–c). We used the “ListPlot3D” function of Mathematica with the argument “InterpolationOrder” set to 1.

In Figure 2d–e and Figure 3, we plot the level of privacy (in terms of ε) that each record (point) has under the private anomaly identification query. Here, the level of privacy for a record in a given database is measured by the maximum divergence in the probability of outputting a label when we add or remove the record from the database. For an ε-SP-mechanism, U, to compute the value of the privacy parameter, ε, for a record i in a given database x, consider databases y and z. Both y and z are the same as x except that y has one more record of value i and z has one less record of value i; if there is no record of value i in x, then z will be the same as x. Now we can calculate $e^{\varepsilon}$ for record i by (4).

| (4) |
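Equation (4) is not legible in this copy, so the following sketch implements only one reading of the prose description: the per-record privacy level is taken to be the largest absolute log-ratio of output probabilities between x and each of y and z, over both labels. The function name and its signature are hypothetical, and all probabilities are assumed to lie strictly between 0 and 1.

```python
import math


def record_privacy_level(p_x, p_y, p_z):
    """Each argument is P(U(.) = 1) on databases x, y (one more record of
    value i) and z (one fewer record of value i). Returns an epsilon."""
    worst = 0.0
    for p_w in (p_y, p_z):
        # Compare the probabilities of label 1 and of label 0.
        for a, b in ((p_x, p_w), (1.0 - p_x, 1.0 - p_w)):
            worst = max(worst, abs(math.log(a / b)))
    return worst
```

Under this reading, e^ε is simply `math.exp(record_privacy_level(p_x, p_y, p_z))`.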

A.2. Protocol for (β, r) selection

The main idea is to fix a value of β, for a dataset of size n, as (1 − p) × n, where p is close to 1, and then search for an appropriate value of r. It is recommended [35] that for datasets of sizes 10^3 and 10^6, β be (1 − 0.995) × 10^3 and (1 − 0.99995) × 10^6, respectively. By assuming that p is linearly related to n, one can use the provided values to find the value of β for any given dataset. For a fixed value of β, a search is performed to find the r that maximizes the F1-score (also known as the balanced F-measure), which is the harmonic mean of precision and recall and a popular performance metric for imbalanced datasets [38]. We used the following protocol to select the value of r. Initialize rmin = 0.001, rmax = 40 (or a value that is not smaller than the maximum distance between any two points in the given dataset), r = 0, and S = 0. Next, set r1 = rmin + (rmax − rmin)/4, r2 = rmin + 3(rmax − rmin)/4, pick α from [0, 1] uniformly at random and set r3 = α r1 + (1 − α) r2. Compute F1-score for each of the r′s, i.e. , , and . Let be the maximum of the computed scores. If is greater than S then set and r = rt; further, if and r2 > r then set rmax = r2 but if it is not the case and and r1 < r then set rmin = r1, otherwise do nothing. Repeat this process, except for the initialization step, until the improvement in S becomes insignificant. In our experiments, repeating the process for ten iterations generally sufficed.
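The β-selection step and the F1-score can be sketched as follows. This is an illustrative sketch only: the function names are hypothetical, and the linear interpolation of p between the two recommended anchor sizes is our reading of "p is linearly related to n". The search over r is not reproduced because parts of its update rule are not legible in this copy.

```python
def beta_for_size(n, anchors=((1e3, 0.995), (1e6, 0.99995))):
    """Interpolate p linearly in n between the two anchor points,
    then return beta = (1 - p) * n."""
    (n1, p1), (n2, p2) = anchors
    p = p1 + (n - n1) * (p2 - p1) / (n2 - n1)
    return (1.0 - p) * n


def f1_score(tp, fp, fn):
    """Harmonic mean of precision and recall from confusion counts."""
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2.0 * precision * recall / (precision + recall)
```

The interpolation is intended for sizes between the two anchors; for n far above 10^6 the interpolated p would exceed 1, so a different rule would be needed there.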

A.3. Proof of Claim 1

Proof. Arbitrarily fix ε > 0, a binary function f, an ε-differentially private mechanism M, and databases x and y such that f (x) ≠ f (y) and ‖x − y‖1 = 1; and let b = f (x).

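If P (M (y) = b) ≤ 1/(1 + e^ε) then, by differential privacy constraints, we get that P (M (x) = b) ≤ e^ε/(1 + e^ε); thus P (M (x) = 1 − b) ≥ 1/(1 + e^ε). Similarly, P (M (x) = 1 − b) ≤ 1/(1 + e^ε) implies P (M (y) = b) ≥ 1/(1 + e^ε). Hence, from the above, it follows that at least one of the two error probabilities, P (M (x) ≠ f (x)) = P (M (x) = 1 − b) and P (M (y) ≠ f (y)) = P (M (y) = b), is at least 1/(1 + e^ε).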

Since M, x and y were fixed arbitrarily, the claim follows, and this completes the proof. □
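The bound in this proof can be checked numerically. The sketch below is a sanity check under our own setup, not part of the original argument: it enumerates a grid of output distributions (P(M(x) = b), P(M(y) = b)) that satisfy the ε-differential privacy constraints and reports the smallest achievable worst-case error, which should not fall below 1/(1 + e^ε). The function names and the grid resolution are hypothetical choices.

```python
import math


def claim1_bound(eps):
    """Lower bound on the larger of the two error probabilities."""
    return 1.0 / (1.0 + math.exp(eps))


def best_worst_case_error(eps, grid=200):
    """Minimum over a grid of eps-DP pairs of max(error on x, error on y)."""
    e = math.exp(eps)
    tol = 1e-12  # slack for floating-point comparisons
    best = 1.0
    for a in range(grid + 1):
        p_x = a / grid  # P(M(x) = b), where b = f(x)
        for c in range(grid + 1):
            p_y = c / grid  # P(M(y) = b), where f(y) = 1 - b
            feasible = (
                p_x <= e * p_y + tol
                and p_y <= e * p_x + tol
                and 1.0 - p_x <= e * (1.0 - p_y) + tol
                and 1.0 - p_y <= e * (1.0 - p_x) + tol
            )
            if feasible:
                # Error on x is 1 - p_x; error on y is p_y.
                best = min(best, max(1.0 - p_x, p_y))
    return best
```

The minimum is approached by the pair (e^ε/(1 + e^ε), 1/(1 + e^ε)), which corresponds to randomized response on the true label.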

A.4. Proof of Theorem 4.1

Proof. Fix arbitrary ε > 0 and a definition of anomaly. Let g be the anomaly identification function and GS be the k-sensitive neighborhood graph corresponding to it for an arbitrary value of k ≥ 1. Fix λ to be a 1-Lipschitz continuous lower bound on the mdd-function, , for g such that λ ≥ 1. Let Uλ be as given by Construction 1. Next, fix an anomaly identification query, (i, g), and databases x and y that are neighbors (i.e., connected by a direct edge) in GS.

If g(i, x) = g(i, y) = b for some b ∈ {0, 1} then

and

since λ is 1-Lipschitz continuous, it follows that

The first inequality holds because λ is 1-Lipschitz continuous, and the second one holds since λ ≥ 1.

On the other hand, if g(i, x) ≠ g(i, y), then λ(i, x) = λ(i, y) = 1. This holds because x and y are neighbors, i.e. , and hence, and λ is such that for every and . Thus, in this case, the privacy constraints trivially hold. This concludes the formal argument. □
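The two cases of this argument can be illustrated numerically. Construction 1 is not reproduced in this appendix, so the sketch below assumes a mechanism that outputs the wrong label with probability 1/(1 + e^(ελ)); this form is an assumption made for illustration, chosen because it is consistent with the error 1/(1 + e^ε) that appears at λ = 1 in the proofs. All function names are hypothetical.

```python
import math


def error_probability(eps, lam):
    """Assumed form: probability of outputting the wrong label."""
    return 1.0 / (1.0 + math.exp(eps * lam))


def max_log_ratio_same_label(eps, lam_x, lam_y):
    """Largest |log-ratio| of output probabilities when g(i, x) = g(i, y)."""
    ex = error_probability(eps, lam_x)
    ey = error_probability(eps, lam_y)
    return max(abs(math.log(ex / ey)),
               abs(math.log((1.0 - ex) / (1.0 - ey))))


def max_log_ratio_different_label(eps):
    """When g(i, x) != g(i, y), lambda is 1 on both databases, so the
    correct label on x is the wrong label on y."""
    e = error_probability(eps, 1)
    return abs(math.log((1.0 - e) / e))
```

Under this assumed form, neighbors whose λ values differ by at most 1 give a log-ratio of at most ε, and the differing-label case meets ε exactly.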

A.5. Proof of Lemma 4.3

Proof. Let be the neighborhood graph over , d be the distance metric over , be the shortest path length metric over , and g be the anomaly identification function for (β, r)-anomaly for arbitrarily fixed values of β ≥ 1 and r ≥ 0.

Firstly, we prove the correctness of the mdd-function given by (2) for (β, r)-AIQ. Arbitrarily fix a record i and any database x. We know that the value of g(i, x) depends only upon xi and Bx (i, r); recall that g(i, x) = 1 ⇔ xi ≥ 1 and Bx (i, r) ≤ β. Further, the shortest path length between two databases equals their ℓ1 distance, since every two databases that differ by exactly one record are directly connected by an edge. Hence, it follows that for ,

| (5) |

We will consider four cases based on the condition (given in the mdd-function) that x satisfies. From (5), we know that the value of the mdd-function is the same as the minimum number of records by which a database y differs from x such that g(i, x) ≠ g(i, y). Thus, in the proof we will modify the database x by adding and/or removing records, and show that the minimum number of changes required in x to change the output of g is given by the mdd-function.

Case 1: When x satisfies the first condition, g(i, x) = 0. For any database y such that g(i, y) = 1, it must hold that yi ≥ 1 and By (i, r) ≤ β. So we obtain a y by adding one record of value i to x. Thus, the minimum number of changes is 1.

Case 2: When x satisfies the second condition, here again, similar to the case above, g(i, x) = 0, and for any database y such that g(i, y) = 1, it must hold that yi ≥ 1 and By (i, r) ≤ β. So we will have to add one record of value i to x to obtain a database y′, but now By′ (i, r) ≥ β + 1. Thus, to obtain a y, we will have to remove By′ (i, r) − β = Bx (i, r) + 1 − β records of values Ci\ {i} from y′ (or x). Thus, the minimum number of changes is Bx (i, r) + 2 − β.

Case 3: Here we assume that x satisfies the third condition; hence g(i, x) = 1. For a y such that g(i, y) = 0, either yi = 0 or By (i, r) ≥ β + 1. Thus the value of the mdd-function will be the minimum of xi (which corresponds to the number of records of value i present in x that we will have to remove) and β + 1 − Bx (i, r) (which corresponds to the number of records of values in Ci that we will have to add to x).

Case 4: In this case, g(i, x) = 0 because Bx (i, r) > β. Thus, we will have to remove Bx (i, r) − β records of values in Ci from x such that there is at least one record of value i in the modified x. Hence, the minimum number of changes is Bx (i, r) − β.

Further, in all the cases, . Therefore, we conclude that the mdd-function is correct.

Next, we prove that the mdd-function is 1-Lipschitz continuous. Arbitrarily fix i and any two neighboring databases, x and y. Let (k, l) denote that x and y respectively satisfy the kth and lth conditions in the mdd-function, where k, l ∈ [4] such that k ≤ l. We will prove that for each (k, l), the mdd-function satisfies the 1-Lipschitz continuity condition. Here, note that if the mdd-function satisfies the 1-Lipschitz continuity condition under (k, l) then it also satisfies the condition under (l, k), because the condition is symmetric in x and y.

For (1, 1), , and for (2, 2) and (4, 4), since . Below, we consider the rest of the cases.

(3, 3): The case, when Bx (i, r) = By (i, r), is trivial. So, let Bx (i, r) = 1 + By (i, r)—this is without loss of generality since ‖x − y‖1 = 1 and . Thus, and . All the subcases, except for the following, trivially follow from ‖x − y‖1 = 1.

and

and

(a) is not possible as it requires xi < yi; this cannot happen because ‖x − y‖1 = 1 and Bx (i, r) = 1 + By (i, r). As for (b), the following holds for :

Thus, it follows that 1-Lipschitz continuity condition is satisfied in this case.

(1, 2): This happens when Bx (i, r) = β − 1 and By (i, r) = β, which is sufficient for the condition to be satisfied.

(1, 3): It is possible when yi = 1 and 1 ≤ By (i, r) ≤ β; hence and the case holds.

(1, 4): This case is not possible since ‖x − y‖1 = 1, and the case requires Bx (i, r) < β and By (i, r) > β.

(2, 3): This case too is not possible since it requires Bx (i, r) ≥ By (i, r) and xi < yi, when ‖x − y‖1 = 1.

(2, 4): Here, Bx (i, r) − By (i, r) = −1 (since xi = 0 and yi ≥ 1); hence the case follows.

(3, 4): Here, it must hold that Bx (i, r) = β and By (i, r) = β + 1. Hence, (since xi ≥ 1) and , and the case follows.

Since the mdd-function satisfies the 1-Lipschitz continuity condition under all the cases for arbitrary i and arbitrary neighbors, x and y, in the neighborhood graph, it holds for every i and every two neighbors. Thus the claim follows. This completes the proof. □
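Both parts of this proof can be checked by brute force on a small example. The closed form below is reconstructed from the four cases of the proof rather than copied from Equation (2), and the domain, radius, threshold, and function names are hypothetical choices made for illustration. Databases are represented as tuples of counts over the domain, and the ball is assumed to be closed.

```python
from itertools import product

DOMAIN = (0, 1, 5)     # hypothetical record values on a line
R, BETA, I = 1, 3, 0   # radius r, threshold beta, queried record value i


def ball_count(db, i=I, r=R):
    """Number of records within distance r of i (closed ball)."""
    return sum(c for v, c in zip(DOMAIN, db) if abs(v - i) <= r)


def g(db, i=I):
    """(beta, r)-anomaly identification function."""
    return int(db[DOMAIN.index(i)] >= 1 and ball_count(db, i) <= BETA)


def mdd_formula(db, i=I):
    """Closed form read off the four cases of the proof."""
    xi, b = db[DOMAIN.index(i)], ball_count(db, i)
    if xi == 0:
        return 1 if b < BETA else b + 2 - BETA
    return min(xi, BETA + 1 - b) if b <= BETA else b - BETA


def mdd_brute_force(db, max_count=7):
    """Smallest l1 distance to a database on which g gives the other label."""
    label = g(db)
    return min(
        sum(abs(a - c) for a, c in zip(db, y))
        for y in product(range(max_count + 1), repeat=len(DOMAIN))
        if g(y) != label
    )
```

Comparing `mdd_formula` with `mdd_brute_force` over all databases with small counts, and comparing `mdd_formula` on databases that differ by one record, exercises the correctness claim and the 1-Lipschitz claim, respectively.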

A.6. Proof of Theorem 4.4

Proof. Arbitrarily fix ε and a (β, r)-AIQ, (i, g). Let be as given by (2) and be as given by Construction 1.

Firstly, note that is ε-DP. It follows from the facts that , is 1-Lipschitz continuous (Lemma 4.3), and SP generalizes DP.

Next, we prove the optimality claim. We prove the claim using its contrapositive, that is, if there is a mechanism that is “better” than , then it must not be ε-DP.

Assume there exists a DP mechanism M such that for every x, and for a database y, (i.e. is not Pareto optimal); fix this y. Note that gi (·) = g(i, ·). We will prove that M cannot be ε-DP.

Let z be such that and gi (z) ≠ gi (y). Let w be a neighbor of z such that and b = gi (w) = gi (y). Now, assume that M is ε-DP. It follows that

| (6) |

The first inequality is due to the DP constraints on M. The second inequality is due to the fact that M is strictly better than on y and the fact that . Now if M is ε-DP, then P (M (z) ≠ 1 − b) ≥ e^(−ε) P (M (w) ≠ 1 − b), which together with (6) gives us P (M (z) ≠ gi (z)) > 1/(1 + e^ε); alternatively, P (M (z) = gi (z)) < e^ε/(1 + e^ε). Since we know that M is “better” than , and in particular, , the above implies that M is not ε-DP. Thus, we conclude the is Pareto optimal. □

A.7. Proof of Lemma 4.5

Lemma A.1. Arbitrarily fix a graph, G, that contains all the nodes and a subset of edges of the neighborhood graph, , and an . If dG is the shortest path length metric over G, then for every x, ,

Proof. Let be the neighborhood graph over . Arbitrarily fix G, dG, and X as specified above (in the lemma). Since G contains all the nodes and a subset of edges of , , where is the shortest path length metric over and it is the same as the ℓ1-metric over the databases. Hence, it follows that dG (x, y) ≥ ‖x − y‖1. The second inequality holds since and . The third inequality follows from the reverse triangle inequality. This completes the proof. □

Proof of Lemma 4.5. Arbitrarily fix β, k ≥ 1, r ≥ 0. Let λk be as given by (3), the -function be corresponding to the neighborhood graph, , the -functions be corresponding to the k-sensitive neighborhood graph, GS, for (β, r)-anomaly, and g be the (β, r)-anomaly identification function. Next, arbitrarily fix a record i and a node (database) x in GS.

We first show that . Below, we show that .

The first inequality follows from the fact that GS contains all the nodes and a subset of edges of . Hence, from Lemma 4.3, we conclude that if Bx (i, r) ≥ β + 1 − k then .

We now let Bx (i, r) < β + 1 − k and b = g(i, x). Here, it is clear that λk (i, x) ≥ 1. Fix any y in GS such that g(i, y) ≠ b and .

Consider the case of xi = 0. Here, it must hold that yi ≥ 1 and By (i, r) ≤ β. Now, on any shortest path from x to y, we will first reach a database z, where i is k-sensitive, and hence, Bz (i, r) ≥ β + 1 − k (from Lemma A.2). Thus, for this z, we get

The second inequality follows from Lemma A.1, and the third one follows because Bz (i, r) ≥ β + 1 − k.

In the case when xi ≥ 1, it must hold that either yi = 0 or By (i, r) ≥ β + 1. If yi = 0, then on any shortest path from x to y, we will first reach a database z, where i becomes k-sensitive, i.e., Bz (i, r) ≥ β + 1 − k (from Lemma A.2). If z is the first such database, then zi ≥ xi. Thus, we get the following.

| (7) |

The first inequality follows from Lemma A.1, and the second one follows from the fact that Bz (i, r) ≥ β + 1 − k and xi ≤ zi. But if By (i, r) ≥ β + 1, then

| (8) |

From (7) and (8), we get the following, which is sufficient to establish that λk is a lower bound on the mdd-function.

Next, we show that λk is 1-Lipschitz continuous. Fix an arbitrary neighbor, y, of x such that λk (i, x) ≠ λk (i, y); otherwise, the continuity condition is trivially satisfied. If both x and y satisfy the first condition of λk, then the continuity condition is satisfied by Lemma 4.3. So assume that both x and y satisfy the second condition of λk. Here, all the cases, except for the following, trivially follow from the fact that ‖x − y‖1 = 1.

(a) λk (i, x) = β + 1 − Bx (i, r) and λk (i, y) = β + 1 − By (i, r) + yi − k

(b) λk (i, x) = β + 1 − Bx (i, r) + xi − k and λk (i, y) = β + 1 − By (i, r)

If the continuity condition holds under (a), then it also holds under (b) by symmetry (i.e., |λk (i, x) − λk (i, y)| = |λk (i, y) − λk (i, x)|), as x and y are picked arbitrarily. Subcase (a) arises if xi − k ≥ 0 and yi − k ≤ 0; further, ‖x − y‖1 = 1 implies that xi − k = 0 and −1 ≤ yi − k ≤ 0. When yi − k = 0, the continuity condition is satisfied as |Bx (i, r) − By (i, r)| ≤ 1. However, yi − k = −1 is not possible since ‖x − y‖1 = xi − yi = 1 implies that i is k-sensitive with respect to x or y, which implies that either Bx (i, r) or By (i, r) is at least β + 1 − k (from Lemma A.2); this contradicts the assumption for this case. Hence, it follows that here the continuity condition is satisfied as well.

Lastly, consider the case, where y and x respectively satisfy the first and the second condition of λk—this is without loss of generality due to symmetry. This will be possible if Bx (i, r) = β − k and By (i, r) = β − k + 1. Thus, in all the subcases below, xi ≤ yi ≤ xi + 1.

Consider the subcase of xi = 0. Here, λk (i, x) = 1 and yi is either 0 or 1. If yi = 0, then we have:

But if yi = 1, as k ≥ 1. Hence, the continuity condition is satisfied for this subcase, when xi = 0.

Next, let xi ≥ 1; thus under this subcase it follows that

Clearly, if xi < k, then λk (i, x) = 1 + xi and xi ≤ λk (i, y) ≤ xi + 1; but if xi ≥ k, then λk (i, x) = 1 + k and λk (i, y) = k since xi ≤ yi ≤ xi + 1; hence the continuity condition is fulfilled in this subcase as well.

In all of the above cases, |λk (i, x) − λk (i, y)| ≤ 1. Since β, k, r, i, x, and y (a neighbor of x) were picked arbitrarily, we conclude that λk is a 1-Lipschitz continuous lower bound on the mdd-function. This completes the proof. □

A.8. Proof of Theorem 4.7

Proof. Fix any k ≥ 1, ε > 0, a valid ε/2-differentially private mechanism, M, an anomaly identification query, (i, g), and a non-negative 2-Lipschitz continuous lower bound, δ, on , where and respectively correspond to the k-sensitive neighborhood graph for the anomaly definition corresponding to g, and the neighborhood graph. Let Uδ be the mechanism that Construction 2 yields. Next, fix arbitrary databases x and y that are neighbors in GS.

When δ (i, x) = δ (i, y) = 0, P (Uδ (z) = b) = P (M (z) = b) for every database z and b in {0, 1}. The privacy constraints in this case are trivially satisfied.

Next, consider the case where δ (i, x) > δ (i, y) ≥ 0; this is without loss of generality as x and y are picked arbitrarily. Since M is a valid ε/2-differentially private mechanism, we get the following for g(i, x) = b for some b ∈ {0, 1},

| (9) |

Recall that GS is a subgraph of and contains a subset of edges of , and for every database z. Hence, it follows that , and implies . Thus, from the above it follows that when , it must hold that . Since and , we have g(i, x) = g(i, y). So, let b = g(i, x). From (9), we get the following.