Abstract

Introduction

Historically, performance within the Prehospital Emergency Care (PEC) setting has been assessed primarily on the basis of response times. While easy to measure and valued by the public, response time targets are, overall, a poor predictor of quality of care and clinical outcomes. Over the last two decades, however, significant progress has been made towards improving the assessment of PEC performance, largely through the development of PEC-specific quality indicators (QIs). Despite this progress, there has been little to no development of similar systems within the low- to middle-income country (LMIC) setting. The aim of this study was therefore to identify a set of QIs appropriate for use in the South African PEC setting.

Methods

A three-round modified online Delphi study was conducted to identify, refine and review a list of QIs for potential use in the South African PEC setting. Operational definitions, data components and criteria for use were developed for 210 QIs for inclusion in the study.

Results

In total, 104 QIs reached consensus agreement, including 90 clinical QIs across 15 subcategories and 14 non-clinical QIs across two subcategories. Within the clinical category, airway management (n = 13 QIs; 14%), out-of-hospital cardiac arrest (n = 13 QIs; 14%) and acute coronary syndromes (n = 10 QIs; 11%) together accounted for over a third of QIs. Within the non-clinical category, adverse events made up the large majority, with nine QIs (64%).

Conclusion

Within the South African setting, a multitude of QIs are relevant and appropriate for use in PEC. This was evident in the number, variety and type of QIs reaching consensus agreement in our study. Furthermore, both the methodology employed and the findings of this study may be used to inform the development of PEC-specific QIs within other LMIC settings.

Keywords: Emergency medical service, Quality indicators, Patient safety, South Africa

African relevance

- Development of prehospital emergency care quality systems in Africa has been poor.
- Measuring quality of care is highly contextual.
- There are a multitude of quality indicators that are potentially appropriate for use in Africa.

Introduction

Historically, performance within the Prehospital Emergency Care (PEC) setting has been assessed primarily on the basis of response times. While easy to measure and valued by the public, response time targets are, overall, a poor predictor of quality of care and clinical outcomes outside of a small subset of patients [1,2,3]. Over the last two decades, however, significant progress has been made towards improving the assessment of PEC performance, largely through the development of PEC-specific quality indicators (QIs) [4,5,6]. QIs are designed to measure “the degree to which health services for individuals and populations increase the likelihood of desired health outcomes and are consistent with current professional knowledge” [7]. Despite this progress, the development of these systems has largely been confined to services within North America and Europe, with little to no development of similar systems evident within the low- to middle-income country (LMIC) setting [6].

Compared to a high-income country setting, the development of quality systems within LMICs is arguably of greater importance, as insufficient quality of care is now perceived to be a bigger barrier to reducing mortality than insufficient access: an estimated 60% of deaths in LMICs from conditions amenable to healthcare are due to poor-quality care [8,9,10,11]. Emergency care has an important role to play in this context, as it has been estimated that up to 45% of deaths and 36% of all disability-adjusted life years in LMICs are potentially amenable to secondary prevention via prehospital and in-hospital emergency care [12,13]. It therefore stands to reason that the development of quality systems and indicators aimed at improving and optimising care in this setting could have a significant impact on this burden.

Healthcare in South Africa (SA) shares several attributes common to health systems across LMICs [14]. Recent Department of Health policy reviews have similarly highlighted the importance of systems for developing, implementing and monitoring the quality of healthcare in SA [15]. Within the PEC setting, significant advances have been made in the scope of practice and in the training and education of PEC clinicians. However, little is known about the quality of care and performance delivered by these services.

Several similarities in scope of practice exist between South African PEC services and those in high-income country settings [16,17,18,19]. Despite this, measures of quality and performance may not be equally appropriate across settings, given differences in service use, structure, resource availability and deployment, and the education and training of clinicians. As a result, the aim of this study was to identify a set of clinical quality indicators appropriate for use in the South African PEC setting, with implications for extrapolation to other LMICs.

Methods

A three-round modified online Delphi study design was used to identify, refine and review a list of QIs for potential use in the South African PEC setting. This included both consensus rating of the appropriateness of QIs identified in the literature and the development of additional QIs by an expert panel.

Literature review and quality indicator advisory group

A previous review of the literature, a common starting point for the consensus rating of healthcare QIs [20], identified several potential QIs for use in this study [6]. The review mapped the extent, range and nature of the scientific literature regarding prehospital QIs, with a focus on methodological development and the QI components necessary for implementation. The majority of the QIs identified lacked sufficient definitions, data components and/or criteria for use. Therefore, to operationalise the indicators, a QI Advisory Group of five experts in prehospital quality assessment was assembled to further refine the identified QIs for inclusion in this Delphi study. The group comprised SA and international emergency care practitioners with specific training in prehospital quality assessment and quality improvement. Guidelines outlined by Rubin et al., McGlynn et al. and Mainz were used to develop relevant definitions and criteria for each QI [7,21,22]. Table 1 outlines the template developed for use by the group (the Supplementary data include a full description and data dictionary of the final indicator set).

Table 1.

Quality indicator (QI) development template.

| Template field | Description |
|---|---|
| Definition | Basic description/purpose of the QI |
| Category | Primary area of focus of the QI |
| Subcategory | Secondary area of focus of the QI, within the Category |
| Measure Type | Structure, process or outcome |
| Target Population | Population on whom the quality indicator is measured/applied |
| Unit of Analysis | Service component under study/assessment for quality and performance |
| Numerator Statement | Description of the subset of the subcategory population on whom the quality indicator is measured/applied |
| Denominator Statement | Description of the subcategory level of population on whom the quality indicator is measured/applied |
| Case Mix/Risk Adjustment | Suggested differentiation amongst the denominator population for greater accuracy (i.e., stratification) |
| Exclusion Criteria | Denominator cases to be excluded when applying the QI |
| Measure Calculation | The equation for calculating the QI |
| Numerical Reporting Format | Suggested format in which the numerical results should be reported |
| Graphical Reporting Format | Suggested format in which the results should be displayed/visualised |
| Reported Indicator | Suggested output in which results should be described |
| Data Source | Suggested data source to obtain the data required for calculating the QI |
| Suggested Reporting Period | Time frame, number of successive cases or other grouping strategy by which cases should be aggregated for reporting purposes |
| Recommended Review Period | Suggested time period at which the QI should be reviewed for validity and feasibility |
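As an illustration of how the Numerator Statement, Denominator Statement, Exclusion Criteria and Measure Calculation fields fit together, the Python sketch below computes a proportion-type QI for aspirin administration in ACS/STEMI (an indicator listed in Table 3). It is a minimal sketch, not the study's implementation: the record fields, the `QualityIndicator` class and the aspirin-allergy exclusion are hypothetical, and the records are invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional


@dataclass
class PatientRecord:
    # Hypothetical fields for illustration; not taken from the study's data dictionary.
    provisional_diagnosis: str
    aspirin_given: bool
    aspirin_allergy: bool = False


@dataclass
class QualityIndicator:
    name: str
    in_denominator: Callable[[PatientRecord], bool]  # Denominator Statement
    in_numerator: Callable[[PatientRecord], bool]    # Numerator Statement
    is_excluded: Callable[[PatientRecord], bool]     # Exclusion Criteria

    def calculate(self, records: Iterable[PatientRecord]) -> Optional[float]:
        """Measure Calculation: numerator / (denominator minus exclusions) x 100."""
        eligible = [r for r in records
                    if self.in_denominator(r) and not self.is_excluded(r)]
        if not eligible:
            return None  # undefined when the reporting period has no eligible cases
        numerator = sum(1 for r in eligible if self.in_numerator(r))
        return 100 * numerator / len(eligible)


aspirin_in_acs = QualityIndicator(
    name="ACS/STEMI patients administered aspirin",
    in_denominator=lambda r: r.provisional_diagnosis == "ACS/STEMI",
    in_numerator=lambda r: r.aspirin_given,
    is_excluded=lambda r: r.aspirin_allergy,  # hypothetical exclusion criterion
)

records = [
    PatientRecord("ACS/STEMI", aspirin_given=True),
    PatientRecord("ACS/STEMI", aspirin_given=False),
    PatientRecord("ACS/STEMI", aspirin_given=True),
    PatientRecord("ACS/STEMI", aspirin_given=False, aspirin_allergy=True),  # excluded
    PatientRecord("Stroke/CVA/TIA", aspirin_given=False),  # outside the denominator
]
result = aspirin_in_acs.calculate(records)
print(f"{aspirin_in_acs.name}: {result:.1f}%")  # 2 of 3 eligible cases -> 66.7%
```

Keeping each statement as a separate predicate mirrors the template's separation of fields, so stratification (Case Mix/Risk Adjustment) could be added by filtering records before calling `calculate`.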

Operational definitions, data components and criteria for use were developed for 210 QIs by the QI Advisory Group. These were assigned to one of two categories: Clinical, comprising QIs that assessed a specific intervention or were dependent on the presence/absence of a disease or injury characteristic (e.g., vital signs, symptoms or treatment administered); and Non-clinical, comprising QIs that primarily focused on an aspect of service delivery (e.g., resource utilisation or documentation). Within each category, the QIs were further divided into subcategories: by clinical pathway for Clinical QIs (n = 19 subcategories, 134 QIs), or by area of service for Non-clinical QIs (n = 8 subcategories, 76 QIs). This categorisation was included to align with clinical practice and ease implementation of the QIs, as the PEC-focused Clinical Practice Guidelines in SA are similarly organised around broad diagnoses and/or symptom presentations [16]. Lastly, each QI was classified according to Donabedian's classification of healthcare information and data, to further aid in identifying its role and purpose [23]:

- Structure measures denote the attributes of the setting in which health care occurs, and primarily include material resources (e.g., facilities, equipment and financing), human resources and organisational structure;
- Process measures denote the steps in the actual delivery of health care (i.e., what the health care provider does to maintain or improve health; e.g., making a diagnosis or recommending/implementing treatment);
- Outcome measures denote the effects or impact of care on the health status of patients and/or populations (i.e., changes in a patient's health status that could be attributed to antecedent care).

Modified/Online Delphi

Purposeful sampling was used to ensure that appropriate experts were invited to participate, given the study's focus on both SA PEC and LMICs [20,24,25]. Because emergency care-focused quality assessment is new to SA, the pool of experts with sufficient knowledge and experience was limited. As a result, the range of potential participants invited was expanded to include emergency medicine physicians, emergency care nurses and prehospital emergency care practitioners with a wide variety of primary occupations, including operations and clinical care, education and training, management, and quality assurance. In addition, given the focus on LMICs, international experts with prior experience in LMICs and knowledge of emergency care-focused quality assessment were also considered. Inclusion in the expert panel required a background in the above-mentioned fields, with preference given to potential participants who had one or more of the following: a post-graduate qualification in prehospital or emergency care; previous experience in quality assessment and/or quality improvement; part-time or full-time employment in quality assessment or quality improvement at the time of the study; or previous experience working in emergency care in SA and/or an LMIC. In total, 45 potential participants were contacted, of whom 35 agreed to participate prior to the start of Round 1 (Table 2).

Table 2.

Expert panel demographics (N = 35).

| Demographics | n | (%) |
|---|---|---|
| Healthcare background | | |
| Paramedic | 26 | (74) |
| Nurse | 2 | (6) |
| Physician | 7 | (20) |
| Gender | | |
| Male | 23 | (66) |
| Female | 12 | (34) |
| Location | | |
| South Africa | 28 | (80) |
| International | 7 | (20) |

The Delphi process was modified in this study in that each round was conducted online, and all correspondence was conducted electronically. No face-to-face consensus meetings were held [20,24,25]. The foci for each of the Delphi rounds were as follows:

- Round 1: Agreement on QI subcategories. Consensus rating on the subcategories was sought first to provide focus for the specific QIs to be presented in Round 2, rather than presenting all candidate QIs for rating in the first round [26].
- Round 2: Presentation of QIs from participant-selected subcategories. The individual QIs from the QI subcategories identified in Round 1 were presented for rating in Round 2.
- Round 3: Re-presentation of QI subcategories and individual QIs without consensus, and agreement on participant-proposed QIs. QIs that did not reach consensus approval in Rounds 1 and 2 were re-presented for rating in Round 3. Participant-proposed QIs from Round 2 were also presented for rating.

Prior to the start of the Delphi, participants were sent information about the study and access to Round 1. For each subsequent round, participants were sent a group summary of the previous round's output, together with the requirements for the next round. In each round, participants were given the opportunity to propose additional QI categories and/or QIs for subsequent rounds.

In each round, participants rated their level of agreement with the respective QI subcategories and QIs on a 5-point Likert scale, ranging from strongly disagree (1) to strongly agree (5). To achieve consensus agreement, at least 70% of participants had to rate a QI subcategory or individual QI in the “agreement” range of scores (4 or 5). QI subcategories and individual QIs that achieved consensus agreement were not re-presented in subsequent rounds. QI subcategories and individual QIs that did not reach consensus agreement, together with participant-proposed QIs, were refined based on feedback and suggestions and included in subsequent rounds for consensus rating. Data collection was considered complete once every QI subcategory and individual QI had been rated by the expert panel, and every item without consensus had been re-rated in a further round, giving participants the opportunity to amend their previous rating.
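The consensus rule above can be expressed compactly. The Python sketch below summarises one item's Likert ratings and splits a round's items into those accepted and those carried forward. It assumes that the 70% threshold is applied to the respondents in each round and that the reported SD is the sample standard deviation; neither detail is stated explicitly. The function names and example ratings are hypothetical, and the refinement of carried-forward items and the addition of participant-proposed QIs are not modelled.

```python
from statistics import mean, stdev

AGREE_SCORES = {4, 5}   # "agreement" range on the 1-5 Likert scale
CONSENSUS_PERCENT = 70  # at least 70% of ratings must fall in the agreement range


def summarise(ratings: list[int]) -> dict:
    """Descriptive statistics and consensus decision for one item in one round."""
    if not ratings or any(r not in range(1, 6) for r in ratings):
        raise ValueError("expected a non-empty list of Likert scores from 1 to 5")
    agree = sum(1 for r in ratings if r in AGREE_SCORES)
    return {
        "n": len(ratings),
        "mean": round(mean(ratings), 1),
        # Sample SD is an assumption; the source does not say which SD was reported.
        "sd": round(stdev(ratings), 1) if len(ratings) > 1 else 0.0,
        "agree_pct": round(100 * agree / len(ratings)),
        # Integer arithmetic avoids floating-point edge cases at exactly 70%.
        "consensus": agree * 100 >= CONSENSUS_PERCENT * len(ratings),
    }


def split_round(round_ratings: dict[str, list[int]]) -> tuple[list[str], list[str]]:
    """Partition a round's items into accepted and carried-forward lists."""
    accepted, carried_forward = [], []
    for item, ratings in round_ratings.items():
        if summarise(ratings)["consensus"]:
            accepted.append(item)         # not re-presented in later rounds
        else:
            carried_forward.append(item)  # refined and re-rated in a later round
    return accepted, carried_forward


# Hypothetical ratings from 30 respondents, for illustration only.
example = {
    "QI A": [5] * 12 + [4] * 9 + [3] * 5 + [2] * 4,   # 21 of 30 agree (70%)
    "QI B": [5] * 10 + [4] * 10 + [3] * 6 + [1] * 4,  # 20 of 30 agree (67%)
}
accepted, carried_forward = split_round(example)
print(accepted, carried_forward)  # ['QI A'] ['QI B']
```

Comparing integer counts rather than a floating-point proportion makes the boundary case (exactly 70% agreement) count as consensus without relying on rounding behaviour.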

Summaries of each round were distributed via email. Data for the consensus rating were collected using an online survey tool, Checkbox (Checkbox Survey Solutions, Massachusetts, USA, 2017), and collated and analysed using Microsoft Excel 2010 (Microsoft Corp, Redmond, WA). Univariate descriptive statistics were used to describe the Likert ratings of each Delphi round.

Ethical approval to conduct the study was granted by the University of Stellenbosch Health Research Ethics Committee (Ref no. S15/09/193).

Results

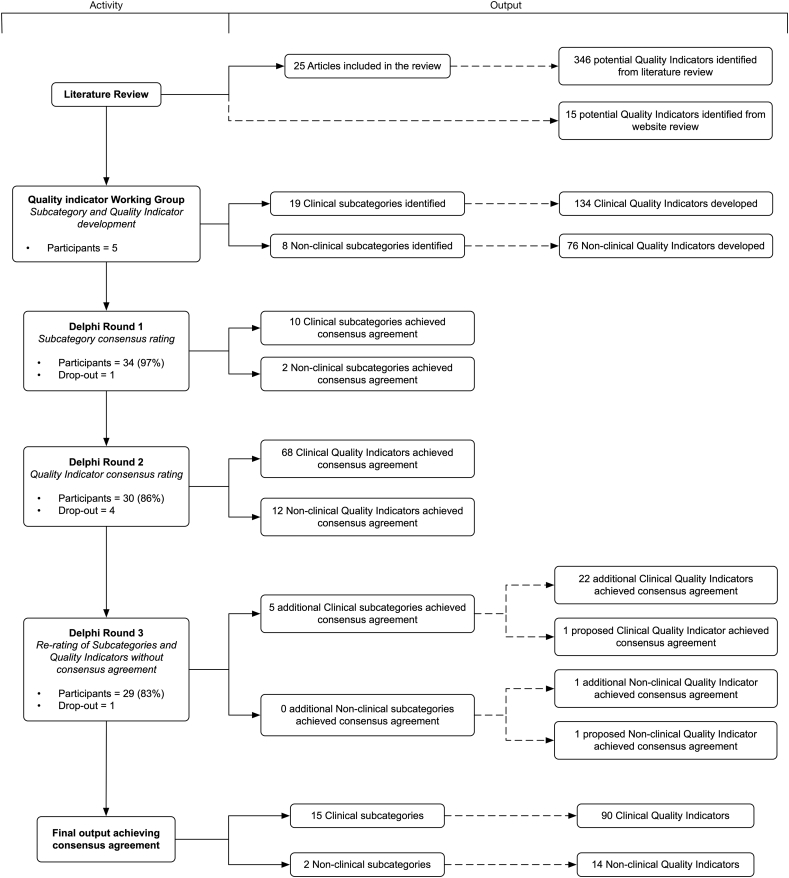

Round 1 achieved a 97% response rate (n = 34). Table 2 describes the expert panel demographics. Of the 28 subcategories proposed, ten Clinical and two Non-clinical subcategories reached consensus agreement amongst respondents. The proposed individual QIs from these subcategories were carried forward to Round 2 for consensus rating. The subcategories not achieving consensus, together with their respective individual QIs, were re-presented in Round 3 to allow respondents to amend their choice from Round 1 (Fig. 1).

Fig. 1.

Delphi rounds and output.

Round 2 achieved an 86% response rate (n = 30). Within the ten clinical subcategories that reached consensus agreement in Round 1, 94 individual clinical QIs were proposed in Round 2, of which 68 (72%) reached consensus agreement amongst respondents. For the two non-clinical subcategories that reached consensus agreement in Round 1, 19 individual non-clinical QIs were proposed, of which 12 (63%) reached consensus (80 QIs in total reached consensus in Round 2).

The response rate for Round 3 was 83% (n = 29). The QIs from the Round 1 subcategories that did not reach consensus agreement, the remaining individual QIs from Round 2 that did not reach consensus, and the newly proposed QIs arising from Round 2 were all presented in Round 3. Four new QIs were proposed (three clinical and one non-clinical), of which one clinical and one non-clinical QI reached consensus agreement. In total, five of the subcategories that had not reached consensus in Round 1 reached consensus agreement in Round 3, all within the clinical category. Overall, 22 clinical QIs and two non-clinical QIs were accepted by consensus in Round 3.
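The round-level figures reported above can be reproduced arithmetically. This Python sketch assumes that each response rate uses the 35-member panel in Table 2 as its denominator, which matches the reported percentages, and treats the 22 clinical and two non-clinical QIs as all Round 3 acceptances, consistent with the Round column of Tables 3 and 4.

```python
PANEL_SIZE = 35  # experts who agreed to participate before Round 1 (Table 2)

# Respondents per round, as reported in the text
respondents = {"Round 1": 34, "Round 2": 30, "Round 3": 29}


def percent(part: int, whole: int) -> int:
    """Percentage rounded to the nearest whole number."""
    return round(100 * part / whole)


response_rates = {rnd: percent(n, PANEL_SIZE) for rnd, n in respondents.items()}
print(response_rates)  # {'Round 1': 97, 'Round 2': 86, 'Round 3': 83}

# Round 2 consensus among QIs proposed within the subcategories accepted in Round 1
print(percent(68, 94), percent(12, 19))  # 72 63

# QIs accepted per round, by category, as reported in the text
accepted = {
    "clinical": {"Round 2": 68, "Round 3": 22},
    "non-clinical": {"Round 2": 12, "Round 3": 2},
}
totals = {category: sum(by_round.values()) for category, by_round in accepted.items()}
print(totals, sum(totals.values()))  # {'clinical': 90, 'non-clinical': 14} 104
```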

In total, 104 individual QIs reached consensus agreement by the end of the Delphi study: 90 clinical QIs across 15 subcategories and 14 non-clinical QIs across two subcategories. Within the clinical category, airway management (n = 13 QIs; 14%), out-of-hospital cardiac arrest (OHCA) (n = 13 QIs; 14%) and acute coronary syndromes (ACS) (n = 10 QIs; 11%) together made up over a third of this category (Table 3). Within the non-clinical category, adverse events made up the large majority, with nine individual QIs (64%) (Table 4). Most QIs not reaching consensus agreement were in the non-clinical category (n = 62 QIs), with time intervals (n = 15 QIs) and documentation (n = 13 QIs) the largest contributing subcategories. Among the clinical subcategories not reaching consensus, the management of tachyarrhythmias (n = 5 QIs) and of bradyarrhythmias (n = 4 QIs) contributed the most QIs.
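As a cross-check on these proportions, the following Python sketch tallies the QIs per subcategory from the rows of Tables 3 and 4 (counted by hand from the tables as presented here) and computes each subcategory's share of its category. The dictionaries are simply transcribed counts, not an export from the study's data.

```python
# QIs per subcategory, counted by hand from the rows of Tables 3 and 4
clinical_counts = {
    "ACS/STEMI": 10, "Acute Pulmonary Oedema": 3, "Airway Management": 13,
    "Anaphylaxis": 5, "Asthma/Bronchoconstriction": 7, "Burns": 2, "General": 9,
    "Hypoglycaemia": 2, "Neonate/Paediatric": 5, "Obstetrics": 4, "OHCA": 13,
    "Pain Management": 3, "Seizures": 2, "Stroke/TIA": 6, "Trauma": 6,
}
non_clinical_counts = {"Adverse Events": 9, "Communications/Dispatch": 5}

total = sum(clinical_counts.values())
print(len(clinical_counts), total)  # 15 90

# Largest three clinical subcategories (ties keep table order; sorted() is stable)
top_three = sorted(clinical_counts.items(), key=lambda kv: kv[1], reverse=True)[:3]
for name, n in top_three:
    print(f"{name}: {n} ({100 * n / total:.0f}%)")
combined = sum(n for _, n in top_three)
print(f"Combined: {combined} of {total} ({100 * combined / total:.0f}%)")

adverse = non_clinical_counts["Adverse Events"]
share = 100 * adverse / sum(non_clinical_counts.values())
print(f"Adverse Events: {adverse} ({share:.0f}%)")
```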

Table 3.

Clinical category – QIs reaching consensus agreement.

| Subcategory / QI | Scope: BLS | Scope: ILS | Scope: ALS | QI classification | Mean | SD | Round |
|---|---|---|---|---|---|---|---|
| ACS/STEMI subcategory | | | | | | | |
| Patients with a provisional diagnosis of ACS/STEMI who had an ALS practitioner in attendance | | | X | Process | 3.7 | 1.3 | 2 |
| Patients with a provisional diagnosis of ACS/STEMI who had a set of defined cardiac risk factors assessed and recorded | | X | X | Process | 3.8 | 1.1 | 3 |
| Patients with a provisional diagnosis of ACS/STEMI who had a 12-lead ECG obtained | | X | X | Process | 4.3 | 1.1 | 2 |
| Patients with a provisional diagnosis of ACS/STEMI who were administered Aspirin | X | X | X | Process | 4.7 | 0.6 | 2 |
| Patients with a provisional diagnosis of ACS/STEMI who were administered GTN | | X | X | Process | 3.9 | 1.1 | 2 |
| Patients with a provisional diagnosis of ACS/STEMI who were assessed for suitability for thrombolysis by defined checklist | | X | X | Process | 4.0 | 1.3 | 2 |
| Patients with a provisional diagnosis of ACS/STEMI who were administered prehospital thrombolysis | | | X | Process | 3.8 | 1.4 | 3 |
| Patients with a provisional diagnosis of ACS/STEMI who were transported directly to a facility with PCI capabilities | X | X | X | Process | 4.5 | 0.9 | 2 |
| Patients with a provisional diagnosis of ACS/STEMI who had EMS activation of the receiving Cath Lab | X | X | X | Process | 4.0 | 1.2 | 2 |
| Patients who received/met all components of a defined ACS/STEMI composite bundle score | | | X | Process | 4.2 | 1.1 | 2 |
| Acute Pulmonary Oedema subcategory | | | | | | | |
| Patients with a provisional diagnosis of APO who were administered GTN | | X | X | Process | 4.3 | 1.0 | 3 |
| Patients with a provisional diagnosis of APO who received CPAP | | | X | Process | 3.9 | 1.0 | 3 |
| Patients with a provisional diagnosis of APO who had a 12-lead ECG obtained | | X | X | Process | 4.3 | 1.0 | 3 |
| Airway Management subcategory | | | | | | | |
| Patients who received a pre-ETI paralytic, following which there was a decrease in SpO2 of >10% from baseline or to below 70% overall | | | X | Process | 3.9 | 1.2 | 2 |
| Patients successfully intubated by EMS personnel where EtCO2 monitoring was used post-ETI | | | X | Process | 4.8 | 0.5 | 2 |
| Patients successfully intubated via RSI by EMS personnel where a paralytic agent was administered post-ETI | | | X | Process | 4.1 | 1.1 | 2 |
| Patients successfully intubated by EMS personnel where a sedative agent was administered post-ETI | | | X | Process | 4.5 | 0.8 | 2 |
| Patients successfully intubated by EMS personnel where a mechanical ventilator was used post-ETI for ventilation | | | X | Process | 4.5 | 0.7 | 2 |
| Patients in whom ETI was attempted by EMS personnel who had an alternative airway inserted as a final airway | | | X | Process | 4.4 | 1.0 | 2 |
| Patients in whom ETI was attempted by EMS personnel who had a surgical airway inserted | | | X | Process | 4.3 | 1.2 | 2 |
| Patients successfully intubated by EMS personnel with an EtCO2 <30 mmHg or >50 mmHg post-ETI >10 min during EMS care | | | X | Process | 4.2 | 1.2 | 2 |
| Patients in whom RSI with ETI was unsuccessful when attempted by EMS personnel | | | X | Process | 4.4 | 1.1 | 2 |
| Patients in whom non-RSI ETI was unsuccessful when attempted by EMS personnel | | | X | Process | 4.3 | 1.2 | 2 |
| Patients in whom RSI with ETI was successful when attempted by EMS personnel | | | X | Process | 4.4 | 1.0 | 2 |
| Total number of patients successfully intubated via RSI by EMS personnel | | | X | Process | 4.3 | 1.1 | 2 |
| Patients who received/met all components of the defined Airway management composite bundle score | | | X | Process | 4.4 | 1.0 | 2 |
| Anaphylaxis subcategory | | | | | | | |
| Patients with a provisional diagnosis of Anaphylaxis and evidence of bronchoconstriction documented who were administered a β2 agonist | | X | X | Process | 4.0 | 1.0 | 3 |
| Patients with a provisional diagnosis of Anaphylaxis and evidence of bronchoconstriction documented who were administered an anticholinergic bronchodilator | | X | X | Process | 4.3 | 1.2 | 3 |
| Patients with a provisional diagnosis of Anaphylaxis who were administered an antihistamine | | | X | Process | 4.3 | 1.1 | 3 |
| Patients with a provisional diagnosis of Anaphylaxis who were administered a corticosteroid | | | X | Process | 4.6 | 1.1 | 3 |
| Patients with a provisional diagnosis of Anaphylaxis and signs of a severe systemic response recorded who were administered IM Adrenaline | | | X | Process | 3.8 | 0.7 | 3 |
| Asthma/Bronchoconstriction subcategory | | | | | | | |
| Patients with a provisional diagnosis of Asthma/Bronchoconstriction with lung sounds assessed and documented (pre and post treatment) | X | X | X | Process | 4.3 | 1.1 | 2 |
| Patients with a provisional diagnosis of Asthma/Bronchoconstriction with an SpO2 documented (pre and post treatment) | X | X | X | Process | 4.3 | 1.1 | 2 |
| Patients with a provisional diagnosis of Asthma/Bronchoconstriction who were administered a β2 agonist bronchodilator | | X | X | Process | 4.6 | 0.7 | 2 |
| Patients with a provisional diagnosis of Asthma/Bronchoconstriction who were administered an anticholinergic bronchodilator | | X | X | Process | 4.0 | 1.2 | 2 |
| Patients with a provisional diagnosis of Asthma/Bronchoconstriction who were administered a corticosteroid | | | X | Process | 4.0 | 1.3 | 2 |
| Patients with a provisional diagnosis of Asthma/Bronchoconstriction with documented severe wheezes/silent chest/systolic BP <90 mmHg who were administered IM Adrenaline | | | X | Process | 3.9 | 1.4 | 2 |
| Patients who received/met all components of the defined Asthma/Bronchoconstriction composite bundle score | | | X | Process | 4.3 | 1.1 | 2 |
| Burns subcategory | | | | | | | |
| Patients with a provisional diagnosis of Burns with burns dressings applied | X | X | X | Process | 4.4 | 1.3 | 3 |
| Patients with a provisional diagnosis of Burns with body surface area and burns type assessed and recorded | X | X | X | Process | 4.2 | 0.9 | 3 |
| General subcategory | | | | | | | |
| Serviceable suction unit devices available per defined area and/or time period | N/A | N/A | N/A | Structure | 4.1 | 1.3 | 2 |
| Serviceable 3-lead ECG monitoring devices available per defined area and/or time period | N/A | N/A | N/A | Structure | 4.2 | 1.1 | 2 |
| Serviceable 12-lead ECG monitoring devices available per defined area and/or time period | N/A | N/A | N/A | Structure | 4.3 | 1.1 | 2 |
| Serviceable portable oxygen cylinders available per defined area and/or time period | N/A | N/A | N/A | Structure | 4.2 | 1.1 | 2 |
| Serviceable Defibrillator/AED devices available per defined area and/or time period | N/A | N/A | N/A | Structure | 4.4 | 1.1 | 2 |
| Serviceable mechanical ventilators available per defined area and/or time period | N/A | N/A | N/A | Structure | 4.1 | 1.3 | 2 |
| Patients with reduced level of consciousness with a blood glucose measured | X | X | X | Process | 4.4 | 1.2 | 2 |
| Patients with a recorded SpO2 <95% who were administered supplemental Oxygen | X | X | X | Process | 3.9 | 1.4 | 2 |
| Patients with a provisional diagnosis recorded | X | X | X | Process | 3.9 | 1.4 | 2 |
| Hypoglycaemia subcategory | | | | | | | |
| Patients with a blood glucose level <5 mmol/L who were administered Glucose | X | X | X | Process | 4.5 | 1.1 | 3 |
| Patients with a blood glucose level measured and recorded following Glucose administration | X | X | X | Process | 4.0 | 0.7 | 3 |
| Neonate/Paediatric subcategory | | | | | | | |
| One-minute APGAR score assessed and recorded for newborn patients | X | X | X | Process | 4.5 | 1.1 | 2 |
| Five-minute APGAR score assessed and recorded for newborn patients | X | X | X | Process | 4.4 | 1.1 | 2 |
| Paediatric patients with a provisional diagnosis of Croup who were administered oral/inhaled steroids | | | X | Process | 3.9 | 1.1 | 3 |
| Paediatric patients with a provisional diagnosis of Croup who were administered nebulised Adrenaline | | | X | Process | 3.8 | 1.3 | 2 |
| Patient transportation to a facility with specialist Paediatric capabilities/resources | X | X | X | Process | 4.2 | 1.1 | 2 |
| Obstetrics subcategory | | | | | | | |
| Obstetric patients who deliver prior to EMS arrival | X | X | X | Process | 4.0 | 1.1 | 3 |
| Obstetric patients with postpartum haemorrhage who were administered TXA | | | X | Process | 4.5 | 1.2 | 3 |
| Obstetric patients with a provisional diagnosis of Eclampsia or Pre-eclampsia who were administered Magnesium sulphate | | | X | Process | 4.2 | 0.8 | 3 |
| Obstetric patients who deliver during EMS care | X | X | X | Outcome | 4.2 | 1.2 | 3 |
| OHCA subcategory | | | | | | | |
| Patients with a provisional diagnosis of OHCA with a witnessed collapse documented | X | X | X | Process | 4.4 | 1.1 | 2 |
| Patients with a provisional diagnosis of OHCA who received documented bystander CPR | N/A | N/A | N/A | Process | 4.5 | 0.9 | 2 |
| Patients with a provisional diagnosis of OHCA who received documented telephonic CPR advice | N/A | N/A | N/A | Process | 4.1 | 1.3 | 2 |
| Patients with a provisional diagnosis of OHCA with VF/VT as first presenting rhythm on arrival of EMS | X | X | X | Process | 4.5 | 1.0 | 2 |
| Patients with a provisional diagnosis of OHCA with Asystole/PEA as first presenting rhythm on arrival of EMS | X | X | X | Process | 4.2 | 1.2 | 2 |
| Patients with a provisional diagnosis of OHCA intubated with alternative airway device | | | X | Process | 4.1 | 1.0 | 3 |
| Patients with a provisional diagnosis of OHCA for whom resuscitation was cancelled prior to arrival at hospital | | | X | Process | 4.2 | 1.3 | 2 |
| Patients with a provisional diagnosis of OHCA who were transported to hospital (incl. ROSC and non-ROSC patients) | X | X | X | Process | 4.1 | 1.3 | 2 |
| Patients with a provisional diagnosis of OHCA with ROSC at hospital handover | X | X | X | Process | 4.4 | 1.1 | 2 |
| Patients with a provisional diagnosis of OHCA with VF/VT at hospital handover | X | X | X | Process | 4.1 | 1.3 | 2 |
| Patients with a provisional diagnosis of OHCA with Asystole/PEA at hospital handover | X | X | X | Process | 3.9 | 1.4 | 2 |
| Patients with a provisional diagnosis of OHCA with survival to Emergency Centre discharge | X | X | X | Process | 4.4 | 1.2 | 2 |
| Patients with a provisional diagnosis of OHCA with survival to hospital discharge | X | X | X | Outcome | 4.8 | 0.8 | 2 |
| Pain Management subcategory | | | | | | | |
| Patients with level of pain measured via defined pain score | X | X | X | Process | 4.4 | 0.8 | 2 |
| Patients with a defined pain score threshold who were administered analgesia | | X | X | Process | 4.5 | 0.7 | 2 |
| Patients with level of pain measured via defined pain score following analgesia administration | X | X | X | Process | 4.5 | 0.8 | 2 |
| Seizures subcategory | | | | | | | |
| Patients with a provisional diagnosis of Seizures with a blood glucose measured and recorded | X | X | X | Process | 4.6 | 0.6 | 2 |
| Patients with a provisional diagnosis of Seizures who were administered an antiepileptic for ongoing Seizures | | | X | Process | 4.4 | 0.9 | 2 |
| Stroke/TIA subcategory | | | | | | | |
| Patients with a provisional diagnosis of Stroke/CVA/TIA with a blood glucose measured and recorded | X | X | X | Process | 4.4 | 0.9 | 2 |
| Patients with a provisional diagnosis of Stroke/CVA/TIA with a Stroke screening assessment performed (e.g., FAST) | X | X | X | Process | 4.7 | 0.6 | 2 |
| Patients with a provisional diagnosis of Stroke/CVA/TIA with serial blood pressure measurements recorded (×3) | X | X | X | Process | 4.1 | 1.1 | 3 |
| Patients with a provisional diagnosis of Stroke/CVA/TIA delivered to a specialist Stroke Centre | X | X | X | Process | 4.2 | 1.3 | 2 |
| Patients with a provisional diagnosis of Stroke/CVA/TIA with direct delivery to CT scan | X | X | X | Process | 4.0 | 1.2 | 2 |
| Patients who received/met all components of the defined Stroke/CVA/TIA composite bundle score | X | X | X | Process | 4.4 | 1.2 | 2 |
| Trauma subcategory | | | | | | | |
| Patients designated as a trauma case with entrapment on scene documented | X | X | X | Process | 3.6 | 0.9 | 3 |
| Patients designated as a trauma case with a BP <90 mmHg | N/A | N/A | N/A | Process | 4.0 | 1.4 | 2 |
| Patients designated as a trauma case with partial/full amputation who had a tourniquet applied | X | X | X | Process | 4.0 | 1.4 | 2 |
| Patients designated as a trauma case with a femur fracture and traction splint use | X | X | X | Process | 3.7 | 1.3 | 2 |
| Patients designated as a trauma case with a BP <90 mmHg who were administered TXA | | | X | Process | 4.4 | 1.0 | 2 |
| Patients designated as a trauma case with direct transportation to a specialist Trauma Centre | X | X | X | Process | 4.1 | 1.3 | 2 |

Table 4.

Non-clinical category – QIs reaching consensus agreement.

| Subcategory / QI | Scope: BLS | Scope: ILS | Scope: ALS | QI classification | Mean | SD | Round |
|---|---|---|---|---|---|---|---|
| Adverse Events subcategory | | | | | | | |
| Number of patient deaths while in EMS care | X | X | X | Sentinel event | 4.6 | 0.8 | 2 |
| Number of defined Adverse Events reported during EMS care | X | X | X | Sentinel event | 4.6 | 1.0 | 2 |
| Number of defined equipment/technical failures reported during EMS care | N/A | N/A | N/A | Sentinel event | 4.6 | 0.9 | 2 |
| Number of accidental or unexpected extubations reported during EMS care | | | X | Sentinel event | 4.4 | 1.0 | 2 |
| Number of patients with a decrease in GCS of 3 or more points during EMS care | X | X | X | Sentinel event | 3.9 | 1.1 | 3 |
| Number of defined failed intubation attempts | X | X | X | Sentinel event | 4.3 | 1.1 | 2 |
| Total number of patient injury reports during EMS care | X | X | X | Sentinel event | 4.3 | 0.9 | 2 |
| Number of EMS staff on-duty injury reports | N/A | N/A | N/A | Sentinel event | 4.3 | 1.0 | 2 |
| Number of defined medication errors during EMS care | X | X | X | Sentinel event | 4.6 | 0.6 | 2 |
| Communications/Dispatch subcategory | | | | | | | |
| Number of cases compliant with defined ALS Dispatch criteria | N/A | N/A | N/A | Structure | 4.0 | 1.1 | 2 |
| Number of cases with call processing time within defined limits | N/A | N/A | N/A | Structure | 4.1 | 1.1 | 2 |
| Number of Service Call Centre calls received per 10,000 population | N/A | N/A | N/A | Structure | 4.2 | 1.0 | 2 |
| Number of unanswered/missed calls to the Service Call Centre | N/A | N/A | N/A | Structure | 4.4 | 1.0 | 2 |
| Number of cases with a delay in dispatch and/or response time waiting for a police/security escort | N/A | N/A | N/A | Process | 4.2 | 1.0 | 3 |

Applying Donabedian's classification of healthcare information and data to the final list of individual QIs, ten (10%) were structure-based, 83 (80%) process-based and two (2%) outcome-based, and a further nine (8%) were categorised as sentinel events, given their specific focus on patient safety.
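
The classification totals above can be cross-checked against the non-clinical table. The following is a minimal sketch, assuming the counts are transcribed correctly from the text and from Table 4: subtracting the non-clinical classifications from the overall tallies leaves the breakdown implied for the 90 clinical QIs, and confirms that all nine sentinel-event QIs fall in the non-clinical category.

```python
from collections import Counter

# Overall classification tallies for all QIs, as reported in the text.
OVERALL = Counter({"Structure": 10, "Process": 83, "Outcome": 2, "Sentinel Event": 9})

# Classification of each non-clinical QI, transcribed from Table 4 in row order.
NON_CLINICAL = ["Sentinel Event"] * 9 + ["Structure"] * 4 + ["Process"]


def clinical_breakdown(overall: Counter, non_clinical: list[str]) -> Counter:
    """Derive clinical-category tallies by subtracting Table 4 from the totals."""
    derived = overall.copy()
    derived.subtract(Counter(non_clinical))
    if any(n < 0 for n in derived.values()):
        raise ValueError("Table 4 tallies exceed the reported totals")
    return +derived  # unary plus drops classes with a zero count


clinical = clinical_breakdown(OVERALL, NON_CLINICAL)
print(sum(OVERALL.values()), len(NON_CLINICAL), sum(clinical.values()))  # 104 14 90
print(dict(clinical))  # {'Structure': 6, 'Process': 82, 'Outcome': 2}
```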

Limitations

Quality systems in the PEC setting are in their infancy in SA. As a result, the pool of experts available for participation was smaller than would be expected in a country with more formal and advanced quality systems. It is possible that a panel with greater exposure to, and experience of, formal quality systems would have reached consensus on a different set of indicators than that reported in this study. Despite this, the number and proportion of participants continuing through each round remained within the bounds considered acceptable for a Delphi study identifying QIs in healthcare [20]. Furthermore, such heterogeneity in the expert panel has previously been identified as an advantage in consensus decision making [27].

The study was conducted entirely online, with all correspondence handled electronically via email. Arguably, this approach may lower response rates and forgoes the benefits of face-to-face contact, such as the real-time exchange of information [28]. Conversely, however, it prevents any single panel member from dominating the consensus process, which is possible in a physical meeting of experts [28].

The focus of this study was on identifying QIs appropriate for the SA setting from those previously described in the literature. While participants were given the opportunity to propose new QIs specific to the SA setting, this was not the primary objective of the study and remains an area for future research and expansion.

Discussion

Our study demonstrated, through consensus, that there is a broad set of QIs relevant and appropriate for use in the PEC setting in SA. Because patients spend only a short time in the care of these services, outcomes are difficult to measure, which makes process-based QIs well suited to assessing quality and performance. This was reflected in the output of our study, where process-based measures of care made up the majority of QIs reaching consensus agreement.

Historically, non-clinical/service-based measures have been the predominant focus for measuring and assessing PEC quality [6]. In contrast, clinical QIs made up the overwhelming majority of those reaching consensus in this study. Furthermore, most of these focused on patient subsets for which PEC has been shown to have a positive impact, such as OHCA [29], ACS [30,31], airway management/breathing problems [32–34] and stroke [35].

This represents a significant shift away from time-based measures, which are often difficult to achieve in countries with geographically dispersed populations (e.g., a proportionally large rural population) or with under-resourced response capability, as seen not only in SA but across the broader LMIC setting. Similarly, most of the indicators reaching consensus could be readily implemented without complex data and information systems, such as electronic patient care records or computer-aided dispatch systems, unlike QIs previously described for more mature, “developed” PEC systems [36]. Furthermore, 58 (64%) of the clinical QIs and 12 (86%) of the non-clinical QIs are potentially applicable to non-ALS levels of care, and are therefore suitable for less mature systems or those with a narrower scope of practice than that seen in SA.

Quality assessment promotes accountability to all stakeholders, including both service users and service providers. QIs are a promising and important component of the assessment process, helping to identify and measure levels of service quality and performance. QIs cannot, in and of themselves, improve quality. They act as flags or alerts: they identify good practice, allow comparison within and between similar services, highlight opportunities for improvement, and indicate where a more detailed investigation of standards is warranted. As such, how QIs are implemented and how their output is acted on are as important as their development. Similarly, applying QIs in any healthcare field requires a reasonable standard of documentation quality. Maintaining that standard, through regular documentation quality audits and/or amendments to documentation to facilitate the use of QIs, is necessary for their success.
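
The dependence on documentation quality can be made concrete. A process QI is usually reported as a proportion, and records with missing fields cannot be placed in either the numerator or the denominator. The sketch below applies this to the trauma QI "Patients designated as a trauma case with a BP <90 mmHg who were administered TXA", assuming hypothetical record fields (`systolic_bp`, `txa_given`) and assuming that the BP threshold refers to systolic pressure; the study's own operational definitions are in its data dictionary.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TraumaRecord:
    # Hypothetical, simplified patient care record; None means "not documented".
    systolic_bp: Optional[int]  # assumption: the QI's "BP <90 mmHg" is systolic
    txa_given: Optional[bool]


def txa_qi(records: list[TraumaRecord]) -> tuple[int, int, int]:
    """Return (numerator, denominator, undocumented) for the TXA trauma QI.

    Denominator: cases with a documented BP below 90 mmHg and a documented TXA status.
    Numerator: denominator cases where TXA was documented as given.
    Undocumented: cases set aside because BP, or TXA status for an eligible
    case, was not recorded.
    """
    numerator = denominator = undocumented = 0
    for r in records:
        if r.systolic_bp is None:
            undocumented += 1  # eligibility cannot be judged
        elif r.systolic_bp >= 90:
            continue  # not eligible for this QI
        elif r.txa_given is None:
            undocumented += 1  # eligible, but compliance cannot be judged
        else:
            denominator += 1
            numerator += int(r.txa_given)
    return numerator, denominator, undocumented


records = [
    TraumaRecord(80, True),
    TraumaRecord(85, False),
    TraumaRecord(None, None),
    TraumaRecord(120, False),
    TraumaRecord(70, None),
]
print(txa_qi(records))  # (1, 2, 2)
```

Reporting the undocumented count alongside the proportion (here one of two assessable cases compliant, with two records unusable) makes documentation gaps visible rather than silently shrinking the denominator. Whether undocumented eligible cases should instead count as non-compliant is a definitional choice this sketch does not settle.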

PEC lends itself to assessment by QIs, as is evident in the number, variety and type of QIs reaching consensus agreement in our study. Measuring quality in any healthcare setting is, however, highly contextual. Nonetheless, within the South African setting there is a multitude of QIs that are relevant and appropriate for use in PEC. Furthermore, both the methodology employed and the findings of this study may be used to inform the development of PEC-specific QIs in other LMIC settings.

Dissemination of results

The results have not been formally shared.

Authors' contributions

Authors contributed as follows to the conception or design of the work; the acquisition, analysis, or interpretation of data for the work; and drafting the work or revising it critically for important intellectual content: IH contributed 50%, VL contributed 25%, PC contributed 15%, and LW and MC each contributed 5%. All authors approved the version to be published and agreed to be accountable for all aspects of the work.

Declaration of Competing Interest

Prof Lee Wallis is an editor of the African Journal of Emergency Medicine. Prof Wallis was not involved in the editorial workflow for this manuscript. The African Journal of Emergency Medicine applies a double-blind peer review process to all manuscripts. The authors declared no further conflict of interest.

Footnotes

Supplementary data to this article can be found online at https://doi.org/10.1016/j.afjem.2019.07.003.

Appendix A. Supplementary data

Data dictionary and minimum data standards.

References

1. Price L. Treating the clock and not the patient: ambulance response times and risk. Qual Saf Health Care. 2006;15(2):127–130. doi: 10.1136/qshc.2005.015651.

2. Pons P.T., Markovchick V.J. Eight minutes or less: does the ambulance response time guideline impact trauma patient outcome? J Emerg Med. 2002;23(1):43–48. doi: 10.1016/s0736-4679(02)00460-2.

3. Heath G., Radcliffe J. Performance measurement and the English ambulance service. Public Money Manag. 2007;27(3):223–228.

4. Maio R.F., Garrison H.G., Spaite D.W., Desmond J.S., Gregor M.A., Cayten C.G. Emergency Medical Services Outcomes Project I (EMSOP I): prioritizing conditions for outcomes research. Ann Emerg Med. 1999;33(4):423–432. doi: 10.1016/s0196-0644(99)70307-0.

5. Spaite D.W., Maio R.F., Garrison H.G., Desmond J.S., Gregor M.A., Stiell I.G. Emergency medical services outcomes project (EMSOP) II: developing the foundation and conceptual models for out-of-hospital outcomes. Ann Emerg Med. 2001;37(6):657–663. doi: 10.1067/mem.2001.115215.

6. Howard I., Cameron P., Wallis L., Castren M., Lindstrom V. Quality indicators for evaluating prehospital emergency care: a scoping review. Prehosp Disaster Med. 2017;33(1):43–52. doi: 10.1017/S1049023X17007014.

7. Mainz J. Defining and classifying clinical indicators for quality improvement. Int J Qual Health Care. 2003;15(6):523–530. doi: 10.1093/intqhc/mzg081.

8. Barber R.M., Fullman N., Sorensen R.J.D., Bollyky T., McKee M., Nolte E. Healthcare Access and Quality Index based on mortality from causes amenable to personal health care in 195 countries and territories, 1990–2015: a novel analysis from the Global Burden of Disease Study 2015. Lancet. 2017;390(10091):231–266. doi: 10.1016/S0140-6736(17)30818-8.

9. GBD 2016 Healthcare Access and Quality Collaborators. Measuring performance on the Healthcare Access and Quality Index for 195 countries and territories and selected subnational locations: a systematic analysis from the Global Burden of Disease Study 2016. Lancet. 2018;391:2236–2271. doi: 10.1016/S0140-6736(18)30994-2.

10. National Academies of Sciences, Engineering, and Medicine. Crossing the global quality chasm: improving health care worldwide. Washington, DC: The National Academies Press; 2018.

11. Kruk M.E., Gage A.D., Arsenault C., Jordan K., Leslie H.H., Roder-DeWan S. High-quality health systems in the Sustainable Development Goals era: time for a revolution. Lancet Glob Health. 2018;6(11):e1196–e1252. doi: 10.1016/S2214-109X(18)30386-3.

12. Kobusingye O., Hyder A., Bishai D. Emergency medical services. In: Disease control priorities in developing countries. 2nd ed. New York: Oxford University Press; 2006. pp. 1261–1280.

13. Kobusingye O.C., Hyder A.A., Bishai D., Hicks E.R., Mock C., Joshipura M. Emergency medical systems in low- and middle-income countries: recommendations for action. Bull World Health Organ. 2005;83(8):626–631.

14. Pillay-van Wyk V., Msemburi W., Laubscher R., Dorrington R.E., Groenewald P., Glass T. Mortality trends and differentials in South Africa from 1997 to 2012: second National Burden of Disease Study. Lancet Glob Health. 2016;4(9):e642–e653. doi: 10.1016/S2214-109X(16)30113-9.

15. Department of Health. A policy on quality in health care for South Africa. Department of Health, Republic of South Africa; 2007.

16. Professional Board for Emergency Care. South African emergency medical services clinical practice guidelines. Pretoria; 2018.

17. Ambulance Victoria. Clinical practice guidelines: ambulance and MICA paramedics. 2016.

18. National Association of State EMS Officials. National Model EMS Guidelines. 2017.

19. Pre-Hospital Emergency Care Council. Clinical Practice Guidelines. 2014.

20. Boulkedid R., Abdoul H., Loustau M., Sibony O., Alberti C. Using and reporting the Delphi method for selecting healthcare quality indicators: a systematic review. PLoS One. 2011;6(6):e20476. doi: 10.1371/journal.pone.0020476.

21. Rubin H.R., Pronovost P., Diette G.B. From a process of care to a measure: the development and testing of a quality indicator. Int J Qual Health Care. 2001;13(6):489–496. doi: 10.1093/intqhc/13.6.489.

22. McGlynn E.A., Asch S.M. Developing a clinical performance measure. Am J Prev Med. 1998;14(3 Suppl):14–21. doi: 10.1016/s0749-3797(97)00032-9.

23. Donabedian A. Evaluating the quality of medical care. Milbank Q. 1966;44(3 Suppl):166–206.

24. Williams P., Webb C. The Delphi technique: a methodological discussion. J Adv Nurs. 1994;19:180–186. doi: 10.1111/j.1365-2648.1994.tb01066.x.

25. Cole Z.D., Donohoe H.M., Stellefson M.L. Internet-based Delphi research: case based discussion. Environ Manag. 2013;51(3):511–523. doi: 10.1007/s00267-012-0005-5.

26. Powell C. The Delphi technique: myths and realities. J Adv Nurs. 2003;41(4):376–382. doi: 10.1046/j.1365-2648.2003.02537.x.

27. Bantel K. Comprehensiveness of strategic planning: the importance of heterogeneity of a top team. Psychol Rep. 1993;73(1):35–49.

28. Walker A., Selfe J. The Delphi method: a useful tool for the allied health researcher. Br J Ther Rehabil. 2014;3(12):677–681.

29. Link M.S., Donnino M.W., White R.D., Paxton J.H., Kudenchuk P.J., Halperin H.R. Part 7: adult advanced cardiovascular life support. Circulation. 2015;132(18 Suppl 2):S444–S464. doi: 10.1161/CIR.0000000000000261.

30. O'Gara P.T., Kushner F.G., Ascheim D.D., Casey D.E., et al. 2013 ACCF/AHA guideline for the management of ST-elevation myocardial infarction: a report of the American College of Cardiology Foundation/American Heart Association Task Force on Practice Guidelines. Circulation. 2013;127:e362–e425. doi: 10.1161/CIR.0b013e3182742cf6.

31. Ibanez B., James S., Agewall S., et al. 2017 ESC Guidelines for the management of acute myocardial infarction in patients presenting with ST-segment elevation: the Task Force for the management of acute myocardial infarction in patients presenting with ST-segment elevation of the European Society of Cardiology. Eur Heart J. 2017;39(2):119–177. doi: 10.1093/eurheartj/ehx393.

32. British Thoracic Society Standards of Care Committee. British guideline on the management of asthma: a national clinical guideline. Thorax. 2011;66(Suppl 2):i1–23.

33. Japanese Society of Anesthesiologists. JSA airway management guideline 2014: to improve the safety of induction of anesthesia. J Anesth. 2014;28(4):482–493. doi: 10.1007/s00540-014-1844-4.

34. Mebazaa A., Gheorghiade M., Piña I.L., Harjola V.-P., Hollenberg S.M., Follath F. Practical recommendations for prehospital and early in-hospital management of patients presenting with acute heart failure syndromes. Crit Care Med. 2009;36(1 Suppl):S129–S139. doi: 10.1097/01.CCM.0000296274.51933.4C.

35. Powers W.J., Rabinstein A.A., Ackerson T., Adeoye O.M., Bambakidis N.C., Becker K. 2018 Guidelines for the early management of patients with acute ischemic stroke: a guideline for healthcare professionals from the American Heart Association/American Stroke Association. Stroke. 2018;49(3):e46–e110.

36. Murphy A., Wakai A., Walsh C., Cummins F., O'Sullivan R. Development of key performance indicators for prehospital emergency care. Emerg Med J. 2016;33(4):286–292. doi: 10.1136/emermed-2015-204793.
