Abstract

Artificial intelligence (AI), a discipline encompassed by data science, has seen recent rapid growth in its application to healthcare and beyond, and is now an integral part of daily life. Uses of AI in gastroenterology include the automated detection of disease and differentiation of pathology subtypes and disease severity. While a majority of AI research in gastroenterology focuses on adult applications, there are a number of pediatric pathologies that could benefit from more research. As new and improved diagnostic tools become available and more information is retrieved from them, AI could provide physicians a method to distill enormous amounts of data into enhanced decision making and cost saving for children with digestive disorders. This review provides a broad overview of AI and examples of its possible applications in pediatric gastroenterology.

Introduction

Artificial intelligence (AI) has become an integral part of daily living, and many industries have adopted it to offer better products and services to customers (1). In healthcare, interest in improving patient safety by increasing diagnostic accuracy has driven research into how the computational power of AI can work within the complexities of clinical medicine (2, 3).

Pediatric gastroenterology is a specialty that combines highly technical procedural skills with diagnostic modalities from other disciplines such as pathology and radiology. Many of the diagnostic tools currently available are invasive, requiring sedation and monitoring in a hospital setting. Newer tools, such as wireless capsule endoscopy (WCE), have been developed to observe luminal pathology without the need for sedation (4). WCE has the potential for a wide range of clinical uses but requires physicians to process and analyze up to eight hours of variable imaging data (5). When traditional endoscopy is performed, endoscopic imaging and biopsy analysis are often combined with radiology and laboratory results to make an accurate diagnosis. Even with these tools, accuracy can be low for certain tasks, such as differentiating between ulcerative colitis (UC) and Crohn’s disease (CD) (6).

Research interest in the application of AI in gastroenterology has grown in the last decade, specifically to aid with disease characterization during procedures and ultimately to improve diagnostics (7). For example, adult studies have focused on improving polyp detection during colonoscopy (8, 9). As research on AI in gastroenterology advances, it will be important to see how these applications can best assist physicians and advance the areas within gastroenterology that most need this technology.

In this article, we describe the basics of AI and discuss recent advances in AI-driven gastroenterology diagnostics. Our focus will primarily be on developments made in endoscopy, video capsule endoscopy, manometry, and endomicroscopy within pediatrics. We will then introduce research in other areas of gastroenterology. To conclude, we will broadly discuss the implications of AI in pediatric gastroenterology and the changes we foresee for this field. Both adult and pediatric studies have been reviewed here, and we acknowledge that paradigms will initially be borrowed from adult gastroenterology given the limited number of pediatric-specific studies in the literature (Supplemental Table).

Artificial Intelligence: The Basics

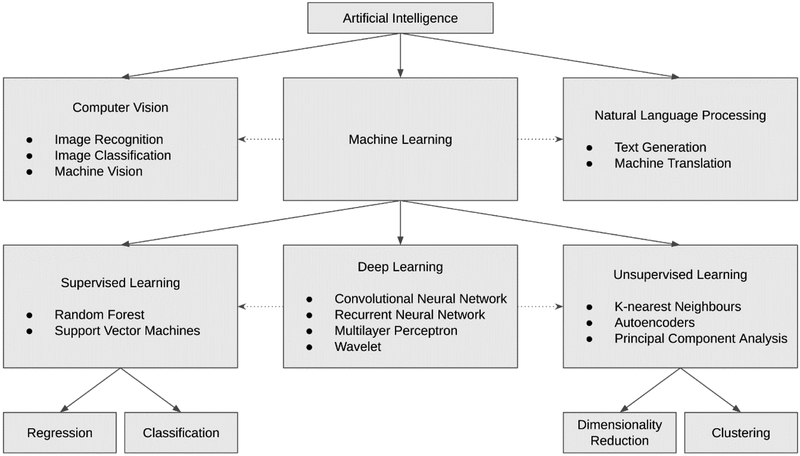

AI is the science of developing systems that can think and act like a rational human (10). The ultimate goal is to produce systems that are capable of performing tasks that normally require human effort (11). Machine learning is a type of AI (Figure 1) and deep learning, a sub-type of machine learning, incorporates specific approaches that use unique algorithms to make data processing and interpretation faster, easier, and more accurate (8).

Figure 1. AI and its sub-classifications.

Solid arrows represent subtypes of the preceding box (i.e. deep learning is a type of machine learning); dotted arrows represent interconnectedness in applications (i.e. machine learning is used in both natural language processing and computer vision which themselves are much broader fields)

Machine learning enables computers to learn from data to predict future outcomes (11) using algorithms developed to interpret and uncover patterns from large sets of data. Machine learning is further divided into unsupervised learning and supervised learning.

In unsupervised learning, the computer learns from large unlabeled datasets and identifies commonalities. A medical example of unsupervised learning is personalized medicine (12, 13): patient history, laboratory results, and imaging data can be analyzed by an algorithm to provide new insights for therapy. An example of this within gastroenterology and nutrition is the prospect of personalizing nutrition by predicting glycemic responses to foods (13). In supervised learning, pre-labeled data is used to train a computer algorithm that can learn and then accurately label new unseen data (11). These tasks focus on classifying new data based on information learned from previously seen data (11). Supervised learning has been used in healthcare to predict clinical patient outcomes (14).

Deep learning uses a different approach to data analysis. In deep learning, an algorithm is organized into layers, where input data is transformed into more interpretable information in each layer and these transformations are learned by exposure to more data. From each transformation, different patterns can be recognized and smaller details can be discerned to help better perform the task at hand. Features of earlier layers usually contain generic details, while later layers contain more specific classes of details (15). A common use for this form of AI is image classification. Deep learning, specifically artificial neural networks, has recently become an area of interest within AI as it has shown an ability to perform tasks at near-human level and has the potential to be used in formal reasoning (11). Accuracy, the fraction of predictions that a model classifies correctly, is a common metric for evaluating classification models and is reported by several of the studies discussed in this review (16). A number of AI techniques are discussed in our paper, and a brief outline of overarching principles and a glossary of terms can be found in Table 1.
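To make the accuracy metric concrete, the following is a minimal sketch in Python. The function name `accuracy` and the eight image labels are hypothetical and chosen only for illustration; they are not data from any study reviewed here.

```python
def accuracy(true_labels, predicted_labels):
    """Return the fraction of predictions that match the reference labels."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must have the same length")
    if not true_labels:
        raise ValueError("at least one prediction is required")
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return correct / len(true_labels)


# Hypothetical reference labels and model predictions for eight images.
truth = ["celiac", "normal", "celiac", "normal",
         "normal", "celiac", "normal", "celiac"]
predicted = ["celiac", "normal", "normal", "normal",
             "normal", "celiac", "celiac", "celiac"]

# Six of the eight predictions agree with the reference labels.
print(accuracy(truth, predicted))
```

Accuracy alone can be misleading when one class is much more common than the other, which is one reason many studies also report sensitivity and specificity.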

Table 1.

Major artificial intelligence (AI) principles and glossary of terms as used in studies and brief description.

| AI principle/term | Articles using these methods | Explanation |
|---|---|---|
| Convolutional Neural Networks (CNNs) (Szegedy et al. (28), Russell et al. (8)) | Wimmer et al. (12), Ozawa et al. (26), Zhou et al. (4), Biswas et al. (50), Liu et al. (79), Lamash et al. (78) | The basic framework consists of multiple layers that are responsible for feature extraction and mapping, data reduction, and a layer that classifies the input image to the output classes. There are 3 basic parts of a CNN. The convolution layer is responsible for extracting features from images and developing a map of all of the features using a series of values. Afterwards, a pooling layer reduces the dimensionality of the feature map, which allows the network to recognize variable input images of similar classes. Lastly, a fully connected layer is responsible for classifying the input image to a number of output variables. |
| AlexNet (Krizhevsky et al. (88)) | Wimmer et al. (12) | This is a form of CNN that contains 5 convolutional layers, multiple max-pooling layers, and two fully connected hidden layers followed by an output layer. |
| Visual Geometry Group (VGG) Net (Chatfield et al. (89)) | Wimmer et al. (12) | A form of CNN that contains 5 convolutional layers and 3 fully connected layers. There are three versions of these nets: the f architecture is the fastest, m is of medium speed, and s is the slowest. |
| Extreme learning machines (56) | Biswas et al. (50) | This is a fast learning algorithm that can be thousands of times faster than traditional network learning algorithms. It does this through a single hidden layer feedforward neural network that has been optimized to reach a very small training error while having good generalization performance. |
| K-nearest Neighbor (Russell et al. (8)) | Vècsei et al. (23) | Training data is used to create a statistical model to represent the classification of data points. New data is mapped to this model and is classified according to its closest training data point. |
| Principal Component Analysis (James et al. (90)) | Mossotto et al. (29) | Principal component analysis reduces complex, multivariable data to fewer dimensions in order to gain a better understanding of the data. The goal is to remove unimportant features from the data and to narrow in on important variables in order to save on computational power. |
| Support Vector Machines (91) | Mossotto et al. (29), Kumar et al. (42), Girgis et al. (41), Gatos et al. (52), Maeda et al. (70) | Support vector machines are models for classifying sets of data by creating a line or plane to separate data into distinct classes. This allows the machine to then classify new input data based on previously input data. |
| Hierarchical clustering (92) | Mossotto et al. (29) | This is a method of organizing objects or data into groups called clusters. The goal is to obtain a set of distinct clusters in which the objects within each cluster are similar to each other. |
| Wavelet (93) | Vècsei et al. (23) | These are mathematical functions that separate data into different groups and then study each group according to a scale. They are of particular use in data compression and image processing. |
| Multilayer Perceptron (Müller & Reinhardt (94)) | Mielens et al. (63) | This is a form of neural network that uses multiple layers to create a model. The perceptron can be considered a linear model that takes multiple input variables, analyzes their relationship, and provides an appropriate output classification. This is often considered a basic artificial neural network. |
| Random Forest (Breiman (95)) | Plevy et al. (76) | Random forest algorithms randomly select data from a set to create multiple decision trees. New input data is then run through the different decision trees, and the resulting classification is the aggregate (majority vote or average) of the individual trees' results. |
| Local Binary Patterns (Ojala et al. (25)) | Vècsei et al. (23) | This is a method of feature extraction used primarily for object detection. A binary pattern is created for each pixel in an image by comparing the pixel with its surrounding pixels. |
| Concatenation | Biswas et al. (50) | In programming, this means to join two strings together. |
| Automata-based polling | Ciaccio et al. (37) | Automata are functional nodes in a network, and these automata are then used for quantitative analysis of a particular input. Each automaton is defined by a predefined set of rules and equations. For polling, each automaton is polled to classify an input into a particular group. |
| K-fold cross validation (96) | Biswas et al. (50) | This is used in machine learning to estimate the skill of the model. The data is split into k groups of equal size. The model is run k times so that each group is treated as the validation set once while the other groups are used for training. The summary of the skill of the model is calculated at the end based on the results from each validation fold. |
| Learning vector quantization (97) | Mielens et al. (63) | This is an artificial neural network algorithm that is composed of a collection of codebook vectors. The codebook vector is a list that has the same input and output features as the training data. Prediction on new input data is made by looking through all the codebook vectors for the most similar instance and classifying it as such. |
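Two of the entries in Table 1, nearest-neighbor classification and k-fold cross validation, can be sketched together in a few lines of Python. This is only an illustration under stated assumptions: the function names, the two-dimensional feature vectors, and the labels are hypothetical, folds are formed by taking every k-th sample rather than by random shuffling, and none of the reviewed studies necessarily implemented these methods this way.

```python
import math


def nearest_neighbor_predict(train_points, train_labels, query):
    """Return the label of the training point closest to `query` (1-nearest neighbor)."""
    distances = [math.dist(point, query) for point in train_points]
    return train_labels[distances.index(min(distances))]


def k_fold_accuracy(points, labels, k):
    """Estimate accuracy by holding out each of k folds once for validation."""
    n = len(points)
    if k < 2 or k > n:
        raise ValueError("k must be between 2 and the number of samples")
    fold_scores = []
    for fold in range(k):
        # Every k-th sample, starting at index `fold`, forms the validation set.
        val_idx = set(range(fold, n, k))
        train_x = [points[i] for i in range(n) if i not in val_idx]
        train_y = [labels[i] for i in range(n) if i not in val_idx]
        correct = sum(
            nearest_neighbor_predict(train_x, train_y, points[i]) == labels[i]
            for i in val_idx
        )
        fold_scores.append(correct / len(val_idx))
    # The summary score is the mean of the per-fold accuracies.
    return sum(fold_scores) / k, fold_scores


# Hypothetical two-feature descriptors for eight image patches.
points = [(0.10, 0.20), (0.90, 0.80), (0.20, 0.10), (0.80, 0.90),
          (0.15, 0.25), (0.85, 0.75), (0.05, 0.10), (0.95, 0.90)]
labels = ["normal", "disease", "normal", "disease",
          "normal", "disease", "normal", "disease"]

mean_accuracy, per_fold = k_fold_accuracy(points, labels, k=4)
print(mean_accuracy, per_fold)
```

Because every sample is used for validation exactly once, the averaged score makes fuller use of a small dataset than a single train/test split, which is relevant to the limited pediatric datasets discussed in this review.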

Endoscopy

White light endoscopic evaluation of the gastrointestinal tract is the primary disease diagnostic and monitoring modality in gastroenterology. In addition to white light endoscopy, there are additional endoscopic modalities (Table 2) and techniques to improve visualization of mucosal structures and vessels, such as narrow band imaging (a type of electronic chromoendoscopy) (17, 18). Narrow band imaging (NBI) capitalizes on blue and green light wavelengths (415 and 540 nm) and allows visualization of hemoglobin (peak absorption 415 nm) and increased contrast of microvessels (19). Endocytoscopy and endomicroscopy are newer endoscopic techniques that provide an additional level of magnification to better visualize mucosal structures and allow histological-level visualization of intestinal pathology (20, 21). Higher magnification begets “bigger data,” which would be ideal input for AI to provide near-instant interpretation.

Table 2.

Key devices available to gastroenterologists for diagnostics and disease monitoring

| Device | Properties |
|---|---|
| White light endoscope | Standard definition endoscope; image resolutions of 100,000–400,000 pixels (17) |
| High resolution endoscope | High definition endoscope; image resolution up to 1 million pixels (translates to magnification of 30–35 fold) (17) |
| Magnifying endoscope | Provides 150 fold image magnification (17)(Jang, 2015) |
| Chromoendoscopy | Application of specific dyes to a surface of interest via endoscopy to improve visualization of microstructure and vascular patterns (17) |
| Narrow Band Imaging (NBI) | Blue light (415 nm) and green light (540 nm) are transmitted; this technology is integrated within the traditional white light endoscope with the ability to shift to NBI without losing visualization of the bowel. Magnification up to 80× can be achieved. NBI can help detect minor differences in the vascular patterns of the GI mucosa and the capillary bed, making it a very useful tool for screening of GI cancers. It can therefore enable diagnosis via visual assessment alone. (17, 98, 99) |
| Endocytoscopy | Contact light microscopy technology allows magnification of superficial mucosa up to 50 µm in depth; can be probe-based or endoscopy-integrated. (99) |
| Laser confocal endomicroscopy | Low power laser used for high magnification and high-resolution mucosal layer imaging. The depth of imaging ranges from 0–70 µm in probe-based devices and 0–250 µm in endoscope-based devices. Probe-based devices can generate image resolutions of 1–3.5 µm, and endoscope-based devices collect images at 0.7 µm lateral resolution and 7 µm axial resolution. (20) |

Polyps

In the adult population, research has focused on improving automated detection of polyps using AI (9, 22). The accurate detection of polyps is an area of interest because of the importance of early adenoma detection and the risk of carcinoma progression (23). Even with routine colonoscopy, a substantial number of polyps can be missed (24, 25), and adenoma detection rates vary widely between gastroenterologists (26). Accordingly, systems and technology to assist physician detection could improve diagnostics and thus patient outcomes. The majority of literature in artificial intelligence in gastroenterology involves improving automatic detection of polyps, especially in real time endoscopy (9, 22). Urban et al. developed a deep neural network to assist gastroenterologists in identifying and removing concerning polyps. The algorithm had a polyp detection accuracy of 96.4%, and the authors concluded that their trained model could identify and locate polyps in real time with high accuracy (22). This work highlighted the utilization of AI in automated lesion detection (27, 28).

Celiac disease

In the pediatric population, upper and lower endoscopies are more commonly and routinely used to diagnose and monitor enteropathies. For example, the gold standard for diagnosis of celiac disease is intestinal endoscopy; however, multiple biopsies are required because of the patchy nature of the disease (29). We hypothesize that, in the future, AI approaches may be developed that limit the risks associated with endoscopy by reducing or eliminating the need for biopsies. This may include use of AI combined with limited biopsies (or even serology without biopsies). Currently, researchers have looked to AI to enhance endoscopy techniques, reduce cost and procedure time, and improve the safety of the procedure (15, 30, 31).

Wimmer et al. hypothesized that AI approaches could be used to classify luminal endoscopic images of celiac disease (15). With a dataset of 1661 images, they developed a neural network that classified luminal endoscopic images from the duodenum gathered by white light and narrow band imaging endoscopy. The neural network achieved a 90.5% accuracy rate in identifying celiac disease from endoscopic images, and the authors concluded that their neural network performed with high accuracy in classifying celiac disease from endoscopic images alone. Overall, their work suggests that while the gold standard for celiac disease diagnosis remains unchanged, AI could prove useful in diagnostics in settings where obtaining biopsies is difficult.

Vècsei et al. developed a method for automating celiac severity classification on endoscopic images from pediatric patients (31). With a limited dataset of 612 endoscopic image patches, the authors compared a number of AI methodologies (31, 32). While their image classification technique was successful in differentiating celiac disease into two categories (disease versus no disease) with an 88% overall accuracy, it was less accurate at classifying the severity of celiac disease (63.7%). Their work highlighted that AI can differentiate between disease and no disease and that, while not yet clinically applicable, advances have been made in interpreting disease severity.

Inflammatory Bowel Disease

Computer-assisted support systems for assessing IBD can help physicians properly evaluate disease severity and improve IBD classification (33, 34). Ozawa et al. developed a computer-assisted support system to evaluate endoscopic disease severity for patients with ulcerative colitis. They developed a neural network that was trained on labeled colonoscopy images from over 800 patients with ulcerative colitis. The algorithm was then tested on its ability to identify normal mucosa and mucosal healing states in a test data set. The authors found that their model performed well at differentiating severe states of disease from remission, with an area under the receiver operating characteristic curve of 0.98 when identifying Mayo 0–1 versus 2–3 (35). This study showed that computer vision can differentiate between various ulcerative colitis severities, which could guide physicians in determining both severity-based treatments and follow-up endoscopy intervals for IBD.
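The area under the receiver operating characteristic curve (AUC) can be read as the probability that a randomly chosen positive case receives a higher model score than a randomly chosen negative case. The sketch below computes it directly from that definition. The function name `roc_auc` and the scores are hypothetical, and this is not the evaluation code used by Ozawa et al.

```python
def roc_auc(scores_positive, scores_negative):
    """AUC as the chance that a positive case outscores a negative one (ties count half)."""
    if not scores_positive or not scores_negative:
        raise ValueError("need at least one score in each group")
    wins = 0.0
    for pos in scores_positive:
        for neg in scores_negative:
            if pos > neg:
                wins += 1.0
            elif pos == neg:
                wins += 0.5
    return wins / (len(scores_positive) * len(scores_negative))


# Hypothetical model scores: higher means "more likely active disease".
active_disease = [0.9, 0.8, 0.7, 0.4]
remission = [0.1, 0.3, 0.5, 0.2]

# 15 of the 16 positive/negative pairs are ranked correctly.
print(roc_auc(active_disease, remission))
```

An AUC of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation, so a reported value of 0.98 indicates that the two groups were ranked almost perfectly by the model.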

To explore the potential utility of these computer-assisted support systems in the pediatric population, Mossotto et al. used machine learning to classify IBD activity using endoscopic and histologic imaging (36). This model was trained and then tested on 48 unseen patients, and the accuracy was found to be 83.3%. The model was trained to classify Crohn’s disease and ulcerative colitis at diagnosis and not to reclassify undifferentiated IBD patients. Despite this, for 17 of the 29 undifferentiated IBD patients, subtype classification could be estimated with a probability greater than 80%, which showed the potential use of such models for undifferentiated IBD. Overall, using both endoscopic and histological data resulted in the most accurate classification of IBD in the pediatric patients (36). This work highlighted the utility of AI in assisting physicians in accurately differentiating between IBD subtypes. In future studies, the incorporation of more data, including serological and inflammatory markers, could lead to improved diagnostic accuracy and allow for the option of targeted therapy.

Future Technology: Endomicroscopy

Other techniques have been developed in the field of gastroenterology that are poised to become integral support tools for the future clinician. One such technique is endomicroscopy, a tool that enables optical magnification of the intestinal tract to the cellular level or up to a depth of 250 microns (56). Histologic observation can be performed at the time of endoscopy without requiring an invasive biopsy and even without the need for sedation (57). However, evaluating endomicroscopic images requires expert interpretation for accurate disease diagnosis (58). Endomicroscopy has been shown to be comparable to histological biopsy interpretation and could therefore have major future clinical potential in changing our current clinical diagnostic paradigms (59). Little research has been performed on integrating AI with endomicroscopy, yet being able to analyze this microscopic data in real time could support gastroenterologists in making earlier diagnoses and tailoring treatments at the time of endoscopy.

Maeda et al. evaluated the use of AI to analyze endocytoscopy (EC) and narrow band imaging (NBI) images and identify histologic inflammatory activity associated with ulcerative colitis (60). A total of 22,835 EC/NBI images were collected from 187 patients, with biopsies in each patient serving as the ground truth of histologic inflammation. They extracted features from each image and measured the ability of AI to predict histologic inflammation from them (9) (60). Their results show a diagnostic accuracy of 91% (95% CI, 83%–95%). Overall, they demonstrated the use of AI systems to predict histologic inflammation in patients with ulcerative colitis (60).

A recently developed method by Tabatabaei et al. combines endomicroscopy (57, 61) with video capsule endoscopy to allow for non-sedated evaluation of the luminal gastrointestinal tract (62, 63). Most recently, they tested a tethered confocal microscope capsule for diagnosis of eosinophilic esophagitis in four healthy and twelve previously diagnosed subjects using endomicroscopy, which delivers more cellular information than a traditional endoscopic biopsy (62). To study the performance of the device, they performed imaging, calculated image lengths and areas in the esophagus, and subsequently biopsied areas of interest. The average length of imaging was 9.19 ± 2.25 cm and the average area covered was 13.52 ± 4.00 cm², equivalent to more than 7000 conventional microscopic high power fields. They found that endomicroscopy provided 56 times more tissue data for diagnosis of eosinophilic esophagitis than traditional endoscopy with biopsy. Because this method obtained images and cellular-level microscopic data of the entire organ, as opposed to the specific regions sampled via endoscopy, they concluded that it would eliminate sampling error. They also hypothesized that, given the clear advantages in both cost and convenience of this procedure, non-sedated endomicroscopy could become an effective tool for diagnosis and monitoring of esophageal diseases (62). Overall, their results suggest that AI could be used as a tool to interpret the high quality imaging and large volume of cellular-level data obtained from the esophagus.

Video Capsule Endoscopy

Advances in AI support systems in video capsule endoscopy (VCE) have also been of interest for a less invasive and cost effective way to classify luminal findings (37–42). While it is useful to visualize the entire small intestine without needing an invasive procedure, VCE imaging typically requires hours of human interpretation and analysis to process the large amount of video data (5).

Celiac Disease

Several studies have looked into combining computer assisted support systems with video capsule endoscopy as a modality for predicting celiac disease (5, 43, 44).

Ciaccio et al. hypothesized that an automated analysis of video capsule endoscopy images could accurately identify the distinctive small intestinal villous atrophy seen in celiac disease (44). They analyzed video capsule images from nine biopsy-confirmed celiac patients and seven healthy control patients. The overall accuracy, specificity, and sensitivity of the AI algorithm were 88.1%, 92.9%, and 83.9%, respectively. The study suggested that AI can be used to recognize celiac disease on VCE imaging. Zhou et al. developed a more advanced AI algorithm to help analyze video capsule endoscopy images for celiac disease (5). Their AI analyses demonstrated 100% specificity and sensitivity for distinguishing celiac disease from control in the testing set, and they concluded that their deep learning algorithm could recognize celiac disease on VCE and possibly predict its severity (5).
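Sensitivity and specificity separate the two kinds of error that a single accuracy figure combines. The following sketch derives all three from the four cells of a confusion matrix. The function name `diagnostic_metrics` and the counts are hypothetical and were chosen for easy arithmetic; they are not the counts behind the figures reported by Ciaccio et al. or Zhou et al.

```python
def diagnostic_metrics(tp, fp, tn, fn):
    """Compute sensitivity, specificity, and accuracy from confusion-matrix counts."""
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one diseased and one healthy case")
    return {
        # Proportion of diseased cases that the model flags as diseased.
        "sensitivity": tp / (tp + fn),
        # Proportion of healthy cases that the model labels as healthy.
        "specificity": tn / (tn + fp),
        # Proportion of all cases that are labeled correctly.
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
    }


# Hypothetical counts: 50 celiac images and 50 control images.
metrics = diagnostic_metrics(tp=40, fp=5, tn=45, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

In this made-up example the model misses one in five diseased images (sensitivity 0.80) while rarely mislabeling controls (specificity 0.90), a distinction that the overall accuracy of 0.85 would hide.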

Inflammatory Bowel Disease

In IBD monitoring and diagnosis, several studies have attempted to automate video capsule endoscopy analysis (45–49). Kumar et al. explored the assessment of discrete Crohn’s disease lesions and quantified lesion severity using a supervised learning method (48). They found that the classifier performed with 91% precision in detecting lesions; however, it performed with a lower precision (79%) when assessing lesion severity (48). They concluded that their classifier performed well at detecting the presence of a lesion but needed further development to assess severity (48). Their work showed that AI can be applied to video capsule endoscopy to assess Crohn’s disease.

Girgis et al. made improvements on assessing disease severity in wireless capsule endoscopy images (47). They developed an algorithm and classifier to identify regions that show inflammation (50, 51). Their classifier performed with a total accuracy of 87% on the test data in detecting inflammation (47). Their methodology showed AI has possible utility in helping physicians non-invasively evaluate disease activity in IBD.

Ultrasound and other Imaging

Beyond luminal pathology, researchers have explored AI and its applications in other pathologies of interest in pediatric gastroenterology, including liver disease and dysmotility. In liver pathology, computer aided support systems have been developed to classify liver disease using ultrasound shear wave elastography (52). Gatos et al. found that their most optimized AI classifier was 87.3% accurate in identifying chronic liver disease, and showed that AI has the potential to support physicians in the evaluation of liver disease.

AI has also been used to interpret high-resolution manometry (HRM) and thereby aid in the evaluation of motility disorders (53–56). Mielens et al. evaluated the use of AI in identifying disordered swallowing from pharyngeal HRM (56). They processed data from HRM studies using a previously defined method and used several AI classification algorithms (artificial neural networks, multilayer perceptron, learning vector quantization, and support vector machines) to automatically distinguish normal from abnormal swallowing (53, 57). They found that all the algorithms produced high average classification accuracies, with the multilayer perceptron performing the best at 96% classification accuracy, and concluded that AI classification models could be used to distinguish normal from abnormal swallowing based on HRM (56). Their study showed that AI could be used to process data-rich diagnostic tools and help physicians better understand complex motility disorders.

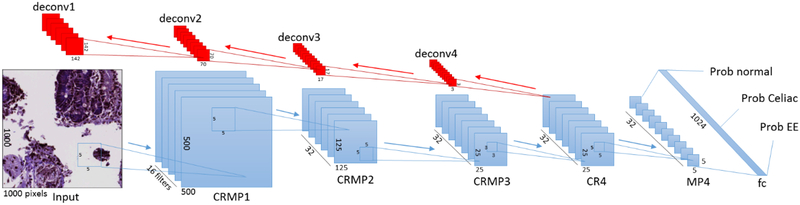

Our research group is currently developing and validating deep learning algorithms for the interpretation and diagnosis of common pediatric enteropathies using high resolution images of duodenal biopsies. Our work has specifically focused on understanding the mechanism of environmental enteropathy, a malabsorptive disorder most commonly occurring in low and middle income countries, which has a histological resemblance to celiac disease (58). A convolutional neural network for multi-class classification of duodenal biopsies was developed (Figure 2), which allowed identification of high importance microscopic features and for these to be traced back to the corresponding positions in the input image (59). A total of 105 biopsies were obtained from children with celiac disease (n = 34), environmental enteropathy (n = 29), and histologically normal controls (n = 42). These biopsies were converted into 2817 images that were used for training. Analysis showed a false negative rate of 2.4% (59). Future directions of the group’s work will include a larger dataset of images, differentiating celiac disease images based on severity, and including a biomarker correlation model that would allow future prediction of biopsy features on the basis of certain blood, urinary, or stool biomarker results. These preliminary data suggest that AI has utility in resource-limited environments to help physicians differentiate between disease pathologies.

Figure 2. Duodenal Biopsy Convolutional Neural Network framework.

The CNN comprises 4 convolution layers and 1 fully connected layer. Each convolution layer has 3 sub-layers (CRMP: convolution, ReLU activation, and max pooling layers). Deconvolution layers are shown in red; these increase the resolution and find the locations of “importance features” within the input image.
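To illustrate what one convolution, ReLU, and max pooling (CRMP) block does to an image, the following is a minimal pure-Python sketch. It is not the network shown in Figure 2: the function names, the 5×5 patch, and the hand-picked edge kernel are all hypothetical, and a trained CNN would learn its kernels from data rather than have them specified by hand.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image without padding and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map):
    """Replace negative responses with zero."""
    return [[max(0, value) for value in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Keep the largest value in each non-overlapping size-by-size window."""
    rows = len(feature_map) // size
    cols = len(feature_map[0]) // size
    return [
        [
            max(feature_map[r * size + i][c * size + j]
                for i in range(size) for j in range(size))
            for c in range(cols)
        ]
        for r in range(rows)
    ]


def crmp_block(image, kernel):
    """Apply convolution, then ReLU, then max pooling."""
    return max_pool(relu(convolve2d(image, kernel)))


# Hypothetical 5x5 grayscale patch with a dark-to-bright vertical edge.
patch = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]
# Hand-picked kernel that responds to dark-to-bright transitions.
edge_kernel = [[-1, 1], [-1, 1]]

features = crmp_block(patch, edge_kernel)
print(features)
```

The pooled output is smaller than the input but still marks where the edge lies, which is the kind of compact, location-aware feature that later layers build upon.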

Conclusion

In this review, we provide an overview of the current literature on AI and its current and potential applications to pediatric gastroenterology. We focused particularly on key diagnostic modalities and how AI can be deployed to support current imaging techniques. Additionally, we explore future directions that data science can take within pediatric gastroenterology by discussing studies that go beyond commonly used diagnostic studies.

The immense success of AI within ophthalmology (60), with the recent FDA approval of the first ever system for clinical use (61) in interpretation of diabetic retinopathy funduscopic images, has heralded an era where computers are a critical part of the diagnostic process. AI harnesses the pattern recognition ability of computers, and its role in the interpretation of gut-specific diagnostic imaging data is poised to grow exponentially given the advent of new, innovative high resolution imaging and GI functional assessment tools that are becoming available for clinical use (7, 62). This is where the science of AI can be implemented to support disease monitoring and detection and ultimately improve patient care. As described in the case examples above, advances have already been made in AI in almost every aspect of gastroenterology, ranging from endoscopic differentiation of IBD to new techniques such as endomicroscopy (33, 36, 63, 64).

The strengths of AI include the ability to process and analyze data at a rate beyond what humans can achieve. However, limited, poor-quality, or inadequately annotated data is often the limiting factor for research. In many of the articles discussed in this review, limited datasets often prevented researchers from optimally training systems, and this could be contributing to decreased classification accuracy. Future collaboration and organization among institutions will be critical in developing databases of expert-annotated raw data that can be used for future research endeavors. AI has already shown its potential in providing accurate guidance to physicians in decision making and in diagnosis. The next step will be to optimize these AI techniques and work towards demonstrating their clinical utility.

No review of AI applications in medicine would be complete without addressing the growing fear that computers will soon take over the role of a practicing physician (65). While it is true that computers have the potential to be far more accurate and precise with diagnostics and laboratory skills, this in no way replaces what human physicians provide. Doctors will always be needed to distill, synthesize, and process information and decide on an action plan. Most importantly, only an in-person physician will have the skills and ability to communicate with patients and their families in an understandable and empathetic manner. For this reason, AI has supported and will continue to support physicians in decision making, but it cannot replace the literal ‘human’ touch, the personal comfort we can provide our patients.

Furthermore, AI seemingly has applications in almost every procedure or imaging modality within gastroenterology. Physicians and researchers still need to determine where exactly AI could benefit clinicians and patients in a meaningful way. In adults, AI appears to have the most clinical utility in helping physicians identify and remove more adenomas during routine colonoscopies (9, 22, 23). For pediatrics, this is much less clear. For example, is it clinically relevant to correlate histologic and endoscopic celiac disease severity with outcomes (31)? Does AI-enabled colonic polyp resection lead to increased cancer detection (28)? These and many other questions remain unanswered.

As can be seen in these reviewed articles, researchers have applied AI to many different aspects of gastroenterology. The computational capacity to process data, recognize patterns, and accurately assess complex medical issues has helped researchers advance the field of data science in gastroenterology. The next step is truly harnessing the power of AI and using it as a support tool to help physicians make more informed clinical decisions.

Lastly, our review was limited to key data science topics that are of interest to data scientists, gastroenterologists, and pediatricians. Covering all of the literature on applications of AI in medicine would be outside the scope of this work. The primary focus of this work has been on imaging techniques, as the majority of diagnostics in pediatric gastroenterology involve imaging. Additionally, our work was necessarily limited by the scarcity of pediatric AI research; most of the available studies focused on AI for diagnostic imaging in adult gastroenterology. While there is a growing interest in data science and its utility in clinical medicine, far less research is being done in pediatrics, and even less in pediatric gastroenterology. For data science within pediatric gastroenterology to expand, collaboration between scientists, physicians, and academic institutions will be important.

Supplementary Material

What is Known:

AI is increasingly being applied to provide disease insights in clinical medicine and research.

What is New:

In the last 20 years, there has been growth in the body of research in AI and its use in gastroenterology, specifically with diagnostic procedures.

This review addresses research developments aimed at improving diagnostic evaluations in gastroenterology, focusing on pathologies that most affect children.

Sources of funding:

University of Virginia Translational Health Research Institute of Virginia (THRIV), Mentored Career Development Award (SS) and the University of Virginia Engineering in Medicine SEED Grant (SS & DEB). National Institute of Diabetes and Digestive and Kidney Diseases of the National Institutes of Health K23 Mentored Patient-Oriented Research Career Development Award [K23 DK117061-01A1] - Sana Syed.

Footnotes

Conflicts of Interest: None

References

1. Beam AL, Kohane IS. Big Data and Machine Learning in Health Care. JAMA 2018;319(13):1317–18.

2. Golden JA. Deep Learning Algorithms for Detection of Lymph Node Metastases From Breast Cancer: Helping Artificial Intelligence Be Seen. JAMA 2017;318(22):2184–86.

3. Neill DB. Using Artificial Intelligence to Improve Hospital Inpatient Care. IEEE Intelligent Systems 2013;28(2):92–95.

4. Iddan G, Meron G, Glukhovsky A, et al. Wireless capsule endoscopy. Nature 2000;405(6785):417.

5. Zhou T, Han G, Li BN, et al. Quantitative analysis of patients with celiac disease by video capsule endoscopy: A deep learning method. Comput Biol Med 2017;85:1–6.

6. Farmer M, Petras RE, Hunt LE, et al. The importance of diagnostic accuracy in colonic inflammatory bowel disease. Am J Gastroenterol 2000;95(11):3184–8.

7. Alagappan M, Brown JRG, Mori Y, et al. Artificial intelligence in gastrointestinal endoscopy: The future is almost here. World J Gastrointest Endosc 2018;10(10):239–49.

8. Fernandez-Esparrach G, Bernal J, Lopez-Ceron M, et al. Exploring the clinical potential of an automatic colonic polyp detection method based on the creation of energy maps. Endoscopy 2016;48(9):837–42.

9. Mori Y, Kudo S-e, Misawa M, et al. Real-time use of artificial intelligence in identification of diminutive polyps during colonoscopy: a prospective study. Annals of internal medicine 2018;169(6):357–66.

10. Russell SJ, Norvig P. Artificial intelligence: a modern approach. Malaysia; Pearson Education Limited; 2016.

11. Chollet F, Allaire JJ. Deep Learning with R. Manning Publications Company; 2018.

12. Deo RC. Machine Learning in Medicine. Circulation 2015;132(20):1920–30.

13. Zeevi D, Korem T, Zmora N, et al. Personalized Nutrition by Prediction of Glycemic Responses. Cell 2015;163(5):1079–94.

14. Asadi H, Dowling R, Yan B, et al. Machine learning for outcome prediction of acute ischemic stroke post intra-arterial therapy. PLoS One 2014;9(2):e88225.

15. Wimmer G, Vécsei A, Uhl A. CNN transfer learning for the automated diagnosis of celiac disease. Image Processing Theory Tools and Applications (IPTA), 2016 6th International Conference on. IEEE; 2016:1–6.

16. Classification: Accuracy. https://developers.google.com/machine-learning/crash-course/classification/accuracy.

17. Gono K, Obi T, Yamaguchi M, et al. Appearance of enhanced tissue features in narrow-band endoscopic imaging. J Biomed Opt 2004;9(3):568–77.

18. Jang JY. The Past, Present, and Future of Image-Enhanced Endoscopy. Clin Endosc 2015;48(6):466–75.

19. East JE, Vleugels JL, Roelandt P, et al. Advanced endoscopic imaging: European Society of Gastrointestinal Endoscopy (ESGE) Technology Review. Endoscopy 2016;48(11):1029–45.

20. Committee AT, Kwon RS, Wong Kee Song LM, et al. Endocytoscopy. Gastrointest Endosc 2009;70(4):610–3.

21. Chauhan SS, Dayyeh BKA, Bhat YM, et al. Confocal laser endomicroscopy. Gastrointestinal endoscopy 2014;80(6):928–38.

22. Urban G, Tripathi P, Alkayali T, et al. Deep Learning Localizes and Identifies Polyps in Real Time With 96% Accuracy in Screening Colonoscopy. Gastroenterology 2018;155(4):1069–78e8.

23. Kaminski MF, Regula J, Kraszewska E, et al. Quality indicators for colonoscopy and the risk of interval cancer. N Engl J Med 2010;362(19):1795–803.

24. Ahn SB, Han DS, Bae JH, et al. The Miss Rate for Colorectal Adenoma Determined by Quality-Adjusted, Back-to-Back Colonoscopies. Gut Liver 2012;6(1):64–70.

25. Leufkens AM, van Oijen MG, Vleggaar FP, et al. Factors influencing the miss rate of polyps in a back-to-back colonoscopy study. Endoscopy 2012;44(5):470–5.

26. Corley DA, Jensen CD, Marks AR, et al. Adenoma Detection Rate and Risk of Colorectal Cancer and Death. New England Journal of Medicine 2014;370(14):1298–306.

27. Wision AI publishes data from first-ever prospective, randomized controlled trial evaluating AI in advanced diagnostics. https://m.dotmed.com/news/story/46461.

28. Wang P, Berzin TM, Glissen Brown JR, et al. Real-time automatic detection system increases colonoscopic polyp and adenoma detection rates: a prospective randomised controlled study. Gut 2019:gutjnl-2018–317500.

29. Ediger TR, Hill ID. Celiac disease. Pediatr Rev 2014;35(10):409–15; quiz 16.

30. Gadermayr M, Uhl A, Vécsei A. Fully automated decision support systems for celiac disease diagnosis. IRBM 2016;37(1):31–39.

31. Vécsei A, Amann G, Hegenbart S, et al. Automated Marsh-like classification of celiac disease in children using local texture operators. Computers in Biology and Medicine 2011;41(6):313–25.

32. Ojala T, Pietikäinen M, Harwood D. A comparative study of texture measures with classification based on featured distributions. Pattern recognition 1996;29(1):51–59.

33. Ozawa T, Ishihara S, Fujishiro M, et al. Novel computer-assisted diagnosis system for endoscopic disease activity in patients with ulcerative colitis. Gastrointest Endosc 2019;89(2):416–21e1.

34. Sasaki Y, Hada R, Munakata A. Computer-aided grading system for endoscopic severity in patients with ulcerative colitis. Digestive Endoscopy 2003;15(3):206–09.

35. Szegedy C, Liu W, Jia Y, et al. Going deeper with convolutions. Proceedings of the IEEE conference on computer vision and pattern recognition 2015:1–9.

36. Mossotto E, Ashton J, Coelho T, et al. Classification of paediatric inflammatory bowel disease using machine learning. Scientific reports 2017;7(1):2427.

37. He J-Y, Wu X, Jiang Y-G, et al. Hookworm Detection in Wireless Capsule Endoscopy Images With Deep Learning. IEEE Transactions on Image Processing 2018;27(5):2379–92.

38. Iakovidis DK, Koulaouzidis A. Automatic lesion detection in capsule endoscopy based on color saliency: closer to an essential adjunct for reviewing software. Gastrointest Endosc 2014;80(5):877–83.

39. Karargyris A, Bourbakis N. Detection of small bowel polyps and ulcers in wireless capsule endoscopy videos. IEEE Transactions on biomedical engineering 2011;58(10):2777–86.

40. Segui S, Drozdzal M, Pascual G, et al. Generic feature learning for wireless capsule endoscopy analysis. Comput Biol Med 2016;79:163–72.

41. Yuan Y, Meng MQ. Deep learning for polyp recognition in wireless capsule endoscopy images. Med Phys 2017;44(4):1379–89.

42. Zou Y, Li L, Wang Y, et al. Classifying digestive organs in wireless capsule endoscopy images based on deep convolutional neural network. Digital Signal Processing (DSP), 2015 IEEE International Conference on. IEEE; 2015:1274–78.

43. Ciaccio EJ, Bhagat G, Lewis SK, et al. Use of shape-from-shading to characterize mucosal topography in celiac disease videocapsule images. World J Gastrointest Endosc 2017;9(7):310–18.

44. Ciaccio EJ, Tennyson CA, Bhagat G, et al. Implementation of a polling protocol for predicting celiac disease in videocapsule analysis. World J Gastrointest Endosc 2013;5(7):313–22.

45. Bejakovic S, Kumar R, Dassopoulos T, et al. Analysis of Crohn’s disease lesions in capsule endoscopy images. Robotics and Automation, 2009. ICRA’09. IEEE International Conference on. IEEE; 2009:2793–98.

46. Charisis VS, Hadjileontiadis LJ. Use of adaptive hybrid filtering process in Crohn’s disease lesion detection from real capsule endoscopy videos. Healthcare technology letters 2016;3(1):27.

47. Girgis HZ, Mitchell B, Dassopoulos T, et al. An intelligent system to detect Crohn’s disease inflammation in Wireless Capsule Endoscopy videos. Biomedical Imaging: From Nano to Macro, 2010 IEEE International Symposium on. IEEE; 2010:1373–76.

48. Kumar R, Zhao Q, Seshamani S, et al. Assessment of Crohn’s disease lesions in wireless capsule endoscopy images. IEEE Transactions on biomedical engineering 2012;59(2):355–62.

49. Seshamani S, Kumar R, Dassopoulos T, et al. Augmenting capsule endoscopy diagnosis: a similarity learning approach. Med Image Comput Comput Assist Interv 2010;13(Pt 2):454–62.

50. Cheng Y. Mean shift, mode seeking, and clustering. IEEE transactions on pattern analysis and machine intelligence 1995;17(8):790–99.

51. Comaniciu D, Meer P. Mean shift: A robust approach toward feature space analysis. IEEE Transactions on pattern analysis and machine intelligence 2002;24(5):603–19.

52. Gatos I, Tsantis S, Spiliopoulos S, et al. A Machine-Learning Algorithm Toward Color Analysis for Chronic Liver Disease Classification, Employing Ultrasound Shear Wave Elastography. Ultrasound Med Biol 2017;43(9):1797–810.

53. Hoffman MR, Mielens JD, Omari TI, et al. Artificial neural network classification of pharyngeal high-resolution manometry with impedance data. Laryngoscope 2013;123(3):713–20.

54. Jones C, Hoffman M, Lin L, et al. Identification of swallowing disorders in early and mid-stage Parkinson’s disease using pattern recognition of pharyngeal high-resolution manometry data. Neurogastroenterology & Motility 2018;30(4):e13236.

55. Jungheim M, Busche A, Miller S, et al. Calculation of upper esophageal sphincter restitution time from high resolution manometry data using machine learning. Physiology & behavior 2016;165:413–24.

56. Mielens JD, Hoffman MR, Ciucci MR, et al. Application of classification models to pharyngeal high-resolution manometry. J Speech Lang Hear Res 2012;55(3):892–902.

57. McCulloch TM, Hoffman MR, Ciucci MR. High-resolution manometry of pharyngeal swallow pressure events associated with head turn and chin tuck. Ann Otol Rhinol Laryngol 2010;119(6):369–76.

58. Syed S, Ali A, Duggan C. Environmental Enteric Dysfunction in Children. J Pediatr Gastroenterol Nutr 2016;63(1):6–14.

59. Syed S, Al-Boni M, Khan MN, et al. Assessment of Machine Learning Detection of Environmental Enteropathy and Celiac Disease in Children. JAMA Netw Open 2019;2(6):e195822.

60. Ting DSW, Cheung CY, Lim G, et al. Development and Validation of a Deep Learning System for Diabetic Retinopathy and Related Eye Diseases Using Retinal Images From Multiethnic Populations With Diabetes. JAMA 2017;318(22):2211–23.

61. US Food and Drug Administration. FDA permits marketing of artificial intelligence-based device to detect certain diabetes-related eye problems. https://www.fda.gov/newsevents/newsroom/pressannouncements/ucm604357.htm. Accessed 12/6/2018.

62. Topol EJ. High-performance medicine: the convergence of human and artificial intelligence.

63. Maeda Y, Kudo S-e, Mori Y, et al. Fully automated diagnostic system with artificial intelligence using endocytoscopy to identify the presence of histologic inflammation associated with ulcerative colitis (with video). Gastrointestinal endoscopy 2019;89(2):408–15.

64. Tabatabaei N, Kang D, Kim M, et al. Clinical Translation of Tethered Confocal Microscopy Capsule for Unsedated Diagnosis of Eosinophilic Esophagitis. Sci Rep 2018;8(1):2631.

65. Krittanawong C. The rise of artificial intelligence and the uncertain future for physicians. Eur J Intern Med 2018;48:e13–e14.
