Abstract

Background:

Public reporting is seen as a powerful quality improvement tool, but data to support its efficacy are limited. The Centers for Medicare & Medicaid Services’ Hospital Compare program initially reported process metrics only but started reporting mortality rates for acute myocardial infarction, heart failure, and pneumonia in 2008.

Objective:

To determine whether public reporting of mortality rates was associated with lower mortality rates for these conditions among Medicare beneficiaries.

Design:

For 2005 to 2007, process-only reporting was considered; for 2008 to 2012, process and mortality reporting was considered. Changes in mortality trends before and during reporting periods were estimated by using patient-level hierarchical modeling. Nonreported medical conditions were used as a secular control.

Setting:

U.S. acute care hospitals.

Participants:

20 707 266 fee-for-service Medicare beneficiaries hospitalized from January 2005 through November 2012.

Measurements:

30-day risk-adjusted mortality rates.

Results:

Mortality rates for the 3 publicly reported conditions were changing at an absolute rate of −0.23% per quarter during process-only reporting, but this change slowed to a rate of −0.09% per quarter during process and mortality reporting (change, 0.13% per quarter; 95% CI, 0.12% to 0.14%). Mortality for nonreported conditions was changing at −0.17% per quarter during process-only reporting and slowed slightly to −0.11% per quarter during process and mortality reporting (change, 0.06% per quarter; CI, 0.05% to 0.07%).

Limitation:

Administrative data may have limited ability to account for changes in patient complexity over time.

Conclusion:

Changes in mortality trends suggest that reporting in Hospital Compare was associated with a slowing, rather than an improvement, in the ongoing decline in mortality among Medicare patients.

Primary Funding Source:

National Heart, Lung, and Blood Institute.

There is broad consensus that public reporting of provider performance can be an important tool to drive improvements in patient care. In the United States, the Hospital Compare program, led by the Centers for Medicare & Medicaid Services (CMS) and others, reports hospitals’ performance on a set of quality metrics for acute myocardial infarction (AMI), congestive heart failure (CHF), and pneumonia on a publicly accessible Web site. Hospital Compare initially reported process-of-care metrics only, but in 2008 it expanded to include 30-day mortality rates for these 3 conditions (1). The goal of this program is to increase transparency for consumers and, in doing so, to encourage hospitals to improve their performance and, ultimately, achieve better clinical outcomes for their patients. Many other public reporting programs, modeled after Hospital Compare, report processes, outcomes, or both online before moving to a pay-for-performance phase. These programs include Dialysis Compare, Nursing Home Compare, and Physician Compare. New conditions are frequently added to these reporting programs.

Although the idea that public reporting could improve patient outcomes has strong face validity, there has been surprisingly little evidence that it has actually done so. Despite evidence that hospital performance on process metrics has improved substantially during the public reporting period, it is unclear whether patient outcomes have improved commensurately. A study by Ryan and colleagues found that, indeed, public reporting of processes of care on Hospital Compare was not associated with improved trends in mortality for the publicly reported conditions because most improvements for these conditions predated the initiation of reporting (2). Advocates of public reporting countered that Ryan and colleagues examined a time period when hospitals were solely focused on public reporting of process measures and that improvements in clinical outcomes would follow when hospitals began to report them. However, whether public reporting of mortality led to lower mortality rates is as yet unknown.

Given the tremendous resources spent on Hospital Compare and its central importance to ongoing health reform efforts, understanding whether it has led to lower mortality rates for reported conditions is critically important. Therefore, in this study, we sought to answer 3 questions: First, did trends in 30-day mortality for the 3 publicly reported conditions improve after hospitals started to report their 30-day mortality rates, and, given broader secular trends in hospital outcomes during the study period, did these trends in outcomes differ from those for nonreported conditions? Second, were improvements particularly evident for subsets of hospitals, such as large, teaching, or for-profit hospitals? Third, did hospitals that were identified as outliers in the first release of the online reports respond differently from others?

Methods

Data

We used Medicare inpatient files to examine all hospitalizations for Medicare fee-for-service enrollees between 2005 and 2012 who were hospitalized with any of the 15 most common nonsurgical discharge diagnoses as assessed by diagnosis-related group codes and confirmed by International Classification of Diseases, 9th Revision, codes. A full list of these codes appears in Appendix Table 1 (available at www.annals.org). Patients with missing data for age or sex were excluded (<2% of patients). Each diagnosis was considered independently, and patients could be included in the sample more than once during the 7-year period. Hospitalizations with primary discharge diagnoses of AMI, CHF, or pneumonia made up the publicly reported cohort. We defined the nonreported group using the remaining 12 conditions (stroke, sepsis, gastroenteritis and esophagitis, gastrointestinal bleeding, urinary tract infection, metabolic disorder, arrhythmia, renal failure, chronic obstructive pulmonary disease, respiratory infection, chest pain, and lower-extremity fracture). We then excluded patients with chronic obstructive pulmonary disease, respiratory infection, and chest pain because of their close clinical overlap with the reported conditions, and we also excluded patients with extremity fracture because most of these patients were cared for by surgical or trauma services. In a sensitivity analysis, based on a previous publication suggesting a significant diagnosis shift for pneumonia, we also examined mortality rates for patients with a primary diagnosis of respiratory failure or sepsis with a secondary diagnosis of pneumonia (3).

This study included only hospitals that were reporting data on processes of care for at least 1 condition to the Hospital Compare program as of the program’s initiation in 2005 and that were reported on in the 2008 mortality reports. Thus, hospitals could not join the sample after 2008; hospitals that closed after 2008 were included for the study years in which they contributed data. We used the American Hospital Association survey to obtain data on hospital characteristics, including size, ownership, teaching status, urban location, region, and the proportion of patients with Medicaid as the primary payer.

Statistical Analysis

Hospital and patient characteristics are reported as percentages and, for age, as means. Because of significant seasonal variations, aggregate quarterly mortality rates were plotted after removal of the seasonal component using linear regression.
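One way to picture the seasonal adjustment used for plotting is an ordinary least-squares fit with quarter-of-year indicators. The sketch below is only an illustration under assumptions: the text does not give the exact regression, so the linear trend term, the choice of indicator coding, and the function name `remove_seasonal_component` are all hypothetical.

```python
import numpy as np


def remove_seasonal_component(rates, first_quarter=1):
    """Subtract estimated quarter-of-year effects from a quarterly series.

    Assumed model (not taken from the study): rate = intercept + linear trend
    + indicators for three of the four calendar quarters.
    """
    rates = np.asarray(rates, dtype=float)
    n = rates.size
    trend = np.arange(n, dtype=float)
    quarter = (np.arange(n) + first_quarter - 1) % 4  # 0..3 within each year
    indicators = [(quarter == q).astype(float) for q in (1, 2, 3)]
    design = np.column_stack([np.ones(n), trend] + indicators)
    coef, *_ = np.linalg.lstsq(design, rates, rcond=None)
    seasonal = design[:, 2:] @ coef[2:]
    # Center the seasonal effects so the adjusted series keeps its overall mean.
    return rates - (seasonal - seasonal.mean())
```

Applied to a series that is exactly a straight line plus a repeating four-quarter pattern, the function returns the straight line.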

For our primary outcome, risk-adjusted 30-day mortality, we followed the CMS method used to calculate the mortality rates that are publicly reported on Hospital Compare (4–7). In accordance with CMS methods, we excluded patients discharged against medical advice and those enrolled in hospice services. We assigned medical comorbid conditions using the Hierarchical Condition Categories (HCCs) developed by CMS (8). We then created patient-level logistic regression models with hospital fixed effects to account for correlation over time and to allow time trends to be interpreted as within-hospital changes. The primary predictors in the model were linear time terms in the process-only reporting period (Q1 2005 through Q4 2007) and in the mortality-reporting period (Q1 2008 through Q4 2012). The models were adjusted for patient age, sex, and HCCs so that temporal changes in patient composition would not mask time trends. Marginal standardization based on the total population of patients was used to estimate the mortality rate at the start and end of each reporting period (9). The logistic regression model coefficients were used to calculate the predicted probability of death for each patient, with all covariates kept at their observed values except the time terms, which were set to the appropriate reporting quarter. These individual probabilities, when averaged together, represent a standardized mortality rate that can be compared across time.
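The marginal standardization step can be sketched as follows. This is a minimal illustration, not the study's SAS code: the function names, the synthetic patients, and every coefficient value are hypothetical. Only the coding of the two time terms (quarters numbered 1 to 32, with the second term starting at 1 in Q1 2008) follows the Appendix.

```python
import numpy as np

N_PROCESS_ONLY_QUARTERS = 12  # Q1 2005 through Q4 2007


def time_terms(quarter):
    """Map a study quarter (1 = Q1 2005, 32 = Q4 2012) to (time, time2)."""
    time2 = max(0, quarter - N_PROCESS_ONLY_QUARTERS)
    return quarter, time2


def standardized_rate(hospital_effects, covariates, beta_cov,
                      beta_time, beta_time2, quarter):
    """Average predicted death probability with every patient placed in `quarter`."""
    time, time2 = time_terms(quarter)
    log_odds = (hospital_effects + covariates @ beta_cov
                + beta_time * time + beta_time2 * time2)
    return float(np.mean(1.0 / (1.0 + np.exp(-log_odds))))


# Hypothetical inputs for illustration only; none of these values come from the study.
rng = np.random.default_rng(0)
covariates = rng.normal(size=(1000, 3))          # stand-ins for age, sex, HCC terms
hospital_effects = rng.normal(-1.8, 0.2, 1000)   # each patient's hospital fixed effect
beta_cov = np.array([0.4, -0.1, 0.6])
beta_time, beta_time2 = -0.02, 0.012

start = standardized_rate(hospital_effects, covariates, beta_cov,
                          beta_time, beta_time2, quarter=1)
end = standardized_rate(hospital_effects, covariates, beta_cov,
                        beta_time, beta_time2, quarter=12)
```

Because the same patients and covariates are used for both quarters, any difference between `start` and `end` reflects only the time terms, which is what makes the two rates comparable.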

We then calculated the average change in mortality over the time period by subtracting the estimated initial rate from the estimated final rate and dividing by the number of quarters in the time period. Approximate test-based CIs were based on an estimated SE for the difference in mortality rate change, calculated from the logistic regression test statistic for the change in the time trend. Please see the Appendix (available at www.annals.org) for a full explanation of model specifications.
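The two calculations in this paragraph can be sketched in a few lines. The interval function assumes the usual test-based construction, in which the SE is recovered as the estimate divided by its test statistic; the text does not spell out the formula, so treat that as an assumption. The function names and the example numbers are hypothetical.

```python
from statistics import NormalDist


def average_quarterly_change(initial_rate, final_rate, n_quarters):
    """Average per-quarter change: (final - initial) / number of quarters."""
    return (final_rate - initial_rate) / n_quarters


def ci_from_test_statistic(estimate, z_statistic, level=0.95):
    """Approximate test-based interval, assuming SE = |estimate / z|."""
    se = abs(estimate / z_statistic)
    z_crit = NormalDist().inv_cdf(0.5 + level / 2)
    return estimate - z_crit * se, estimate + z_crit * se


# Hypothetical values, not study results.
change = average_quarterly_change(initial_rate=15.0, final_rate=12.0, n_quarters=12)
lower, upper = ci_from_test_statistic(estimate=0.10, z_statistic=5.0)
```

A design note: tying the SE to the logistic regression test statistic keeps the interval on the absolute-rate scale consistent with the P value for the change in trend.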

These logistic regression models were initially constructed with both a change in intercept and a change in trend, but we found that the change in intercept was small (0.2%) and did not alter our trend estimates. Thus, we removed it from our models for ease of presentation. In sensitivity analyses, we used alternative cut points: 1 year earlier to address concerns that hospitals knew that mortality reporting was on the horizon and 1 quarter later to address concerns that hospitals would not react immediately to a policy change.

In our primary analysis, we built regression models to examine trends in mortality in the process-only reporting period and process and mortality reporting period for each of the publicly reported conditions. We then combined these conditions into a single model run across all hospitalizations for reported conditions, including an indicator for primary diagnosis, and we examined trends in the process-only reporting period and process and mortality reporting period using identical methods. We repeated each of these steps for the nonreported conditions, building patient-level regression models with hospital fixed effects to examine trends in mortality in both periods for each of the nonreported conditions individually, as well as for these conditions as a group. We then ran a model with all reported and nonreported conditions and included indicators for primary diagnoses as well as an indicator for whether the condition was reported or nonreported. This model also included an interaction term between the reporting indicator and the posttrend, which allowed us to test whether the change in trends differed between reported and nonreported conditions.

To determine whether the relationship between public reporting and outcomes varied across distinct groups of hospitals, we conducted a set of prespecified subgroup analyses based on hospital characteristics. We hypothesized, for instance, that larger or teaching hospitals, or hospitals in more competitive markets, might be more sensitive to negative reputational effects of having high mortality rates, or, conversely, small, rural hospitals or those in less competitive markets may respond differently because patients have fewer alternatives. Therefore, we chose, a priori, to examine groups on the basis of hospital size, teaching status, urban versus rural location, ownership, and market competition (using the Hirschman–Herfindahl index). Each model accounted for the other characteristics of interest. Finally, we identified hospitals noted as negative outliers in the first publicly released performance report for mortality for each condition. The Centers for Medicare & Medicaid Services considers hospitals to be “worse than national rate” if they have at least 25 beneficiaries in the measure period and if the entire 95% interval estimate for their performance is above the national observed rate for that measure; this designation is posted on Hospital Compare (10). We examined each of these “worse than” outlier groups independently, as well as all outlier hospitals from the first report in aggregate. For the purposes of comparison, we also estimated the group of hospitals that would have been “worse than” outliers on the first report if the other conditions had been reported (that is, those for which the entire 95% CI of their estimated mortality rate from the regression model was above the national average mortality rate).
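The "worse than national rate" rule described above reduces to two conditions, sketched here. The function and parameter names are hypothetical, and the example rates are made up for illustration.

```python
def is_worse_than_national(n_beneficiaries, interval_lower, national_rate,
                           min_beneficiaries=25):
    """Apply the two conditions described in the text.

    A hospital is flagged only if it has at least `min_beneficiaries` cases and
    the lower bound of its 95% interval estimate lies above the national rate,
    which means the entire interval is above that rate.
    """
    return n_beneficiaries >= min_beneficiaries and interval_lower > national_rate


# Hypothetical hospitals (rates in percent).
flagged = is_worse_than_national(120, interval_lower=17.1, national_rate=16.0)
too_small = is_worse_than_national(20, interval_lower=17.1, national_rate=16.0)
overlapping = is_worse_than_national(120, interval_lower=15.5, national_rate=16.0)
```

Only the first hospital is flagged; the second has too few beneficiaries and the third has an interval that overlaps the national rate.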

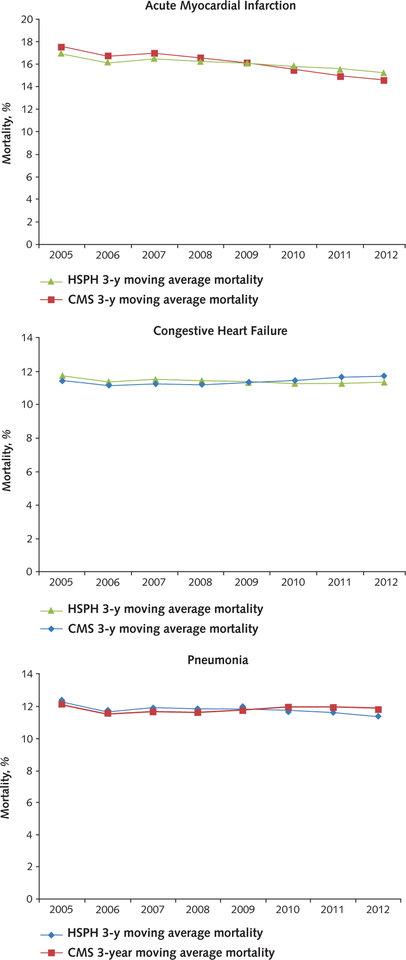

As a validation of our methods, we compared our calculated rates for the publicly reported conditions to those that were actually publicly reported by CMS on the Hospital Compare Web site. Because this Web site did not report mortality rates during the process reporting phase, we used the technical reports released by CMS in their measure construction to approximate rates in 2005 to 2007 (4–6). Because our data are calculated quarterly whereas CMS reports data on a 12-quarter rolling average, we compiled our quarterly data into rolling average rates to more closely match the CMS method for this comparison. These trends were similar and are shown in the Appendix Figure (available at www.annals.org).
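Compiling quarterly rates into 12-quarter rolling averages can be sketched as below. This assumes an unweighted mean of the quarterly rates; the text does not say whether quarters were weighted by patient volume, and the function name is hypothetical.

```python
import numpy as np


def rolling_average(quarterly_rates, window=12):
    """Unweighted rolling mean over `window` consecutive quarters.

    Returns one value per complete window, so 32 quarters yield 21 averages.
    """
    rates = np.asarray(quarterly_rates, dtype=float)
    kernel = np.ones(window) / window
    return np.convolve(rates, kernel, mode="valid")
```

For 32 quarters of data, the first value summarizes quarters 1 through 12 and the last summarizes quarters 21 through 32.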

A 2-sided P value less than 0.05 was considered to represent a statistically significant difference. All analyses were performed using SAS software, version 9.2 (SAS Institute Inc.). This study was approved by the Harvard School of Public Health Office of Human Research Administration; the requirement for informed consent was waived because of the observational nature of the study and its use of deidentified data.

Role of the Funding Source

Dr. Joynt was funded by grant 1K23HL109177-01 from the National Heart, Lung, and Blood Institute. The funding source had no role in this study’s design, conduct, or reporting or the decision to publish the results.

Results

Hospital and Patient Characteristics

Our sample included 3970 hospitals, representing roughly 85% of U.S. acute care hospitals; the hospitals not reporting were a combination of predominantly small, rural hospitals that are not required to do so because of small sample size and specialty hospitals to which the mortality metrics do not apply (Table 1). Nearly two thirds of participating hospitals were nonprofit, and 22% were public. Forty-three percent of hospitals in our sample were small, 46% were medium-sized, and 11% were large. Only 6.8% were major teaching hospitals, and nearly 80% were in an urban location. The hospitals were distributed across the United States, with 14% located in the Northeast, 30% in the Midwest, 39% in the South, and 17% in the West.

Table 1.

Hospital Characteristics, 2008*

| Variable | Participating Hospitals | Nonparticipating Hospitals |
|---|---|---|
| Hospitals with 2008 publicly reported mortality rates and American Hospital Association data, n | 3970 | 161 |
| Average annual Medicare volume, n | 2259.5 | 171.8 |
| Hospital size | | |
| Small | 1696 (42.7) | 131 (81.4) |
| Medium | 1837 (46.3) | 28 (17.4) |
| Large | 437 (11.0) | 2 (1.2) |
| Critical access hospitals | 889 (22.4) | 49 (30.4) |
| Ownership | | |
| For profit | 599 (15.1) | 60 (37.3) |
| Nonprofit | 2493 (62.8) | 59 (36.7) |
| Public | 878 (22.1) | 42 (26.1) |
| Teaching | | |
| Major | 270 (6.8) | 0 (0) |
| Minor | 727 (18.3) | 28 (17.4) |
| Nonteaching | 2973 (74.9) | 133 (82.6) |
| Urban location | 3090 (77.8) | 121 (75.2) |
| Region | | |
| Northeast | 556 (14.0) | 1 (0.6) |
| Midwest | 1190 (30.0) | 30 (18.6) |
| South | 1529 (38.5) | 62 (38.5) |
| West | 695 (17.5) | 68 (42.2) |
| Median proportion (IQR), % | | |
| Medicare | 47.3 (41.1–55.1) | 43.2 (27.4–59.0) |
| Medicaid | 16.1 (10.6–20.8) | 7.8 (2.3–16.9) |

IQR = interquartile range.

\* Values are numbers (percentages) unless otherwise indicated. Percentages may not sum to 100 because of rounding.

Across our selected conditions, these hospitals cared for 20 707 266 patients during our study period, ranging from 1 278 495 patients for renal failure to 2 892 085 for CHF (see Appendix Table 2, available at www.annals.org, for condition-specific n values). Mean age in the overall sample was 79 to 80 years, and roughly 41% of patients were male (Table 2). The 15 most common comorbidities, as assessed by the HCCs, are shown in Table 2; CHF, arrhythmia, renal failure, and chronic obstructive pulmonary disease were the most common. The prevalence of most comorbid conditions was higher during process and mortality reporting than during process-only reporting (see Appendix Table 3, available at www.annals.org, for year-by-year comorbidity prevalence).

Table 2.

Patient Demographic Characteristics and Comorbidities

| Variable | Process-Only Reporting Period | Process and Mortality Reporting Period |
|---|---|---|
| Demographic characteristics | | |
| Patients, n | 8 291 310 | 12 415 956 |
| Mean age, y | 79.2 | 79.8 |
| Male, % | 41.3 | 42.0 |
| Comorbidities, %* | | |
| CHF | 45.1 | 44.0 |
| Renal failure | 27.5 | 39.8 |
| Specified heart arrhythmias | 37.7 | 38.8 |
| Chronic obstructive pulmonary disease | 29.6 | 26.6 |
| Diabetes without complication | 17.3 | 22.3 |
| Cardiorespiratory failure and shock | 13.9 | 21.0 |
| Septicemia/shock | 14.4 | 19.2 |
| Vascular disease | 6.7 | 12.1 |
| Protein-calorie malnutrition | 6.8 | 12.3 |
| AMI | 11.8 | 12.0 |
| Angina pectoris/old myocardial infarction | 4.2 | 6.7 |
| Polyneuropathy | 2.0 | 6.0 |
| Ischemic or unspecified stroke | 10.0 | 9.2 |
| Aspiration and bacterial pneumonia | 6.7 | 8.0 |
| Decubitus ulcer of skin | 5.3 | 6.8 |

AMI = acute myocardial infarction; CHF = congestive heart failure.

\* The 15 most frequent Hierarchical Condition Categories present in the sample population, listed in descending order based on 2012 prevalence rates.

Trends in Risk-Adjusted 30-Day Mortality Rates for Reported Conditions

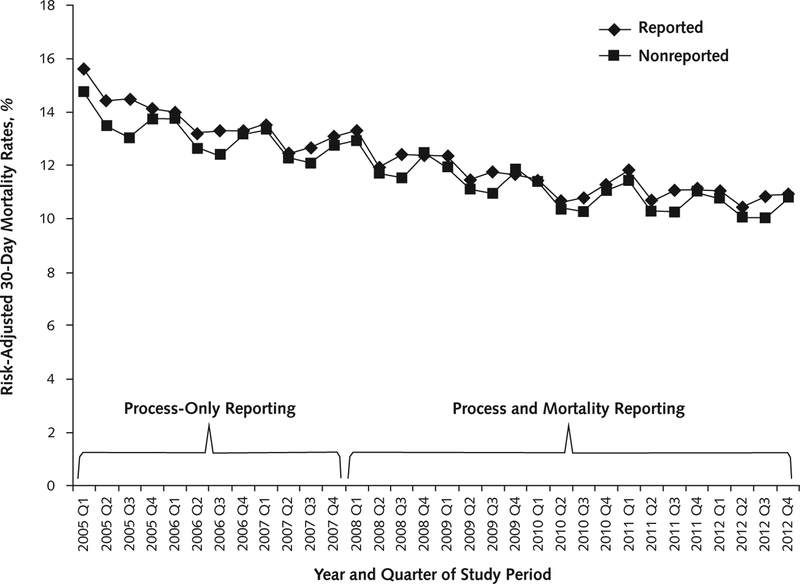

When we plotted 30-day mortality rates for reported and nonreported conditions over time, we saw a continuous decrease in mortality rates during the study period, with no obvious improvement at the point of onset of mortality reporting (Figure 1). When we formally examined trends in mortality for the 3 publicly reported conditions, we found that in aggregate, these conditions were improving at an absolute rate of −0.23% per quarter during the process-only reporting period. After the addition of public reporting of mortality rates in early 2008, this slowed to −0.09% per quarter (change, 0.13% per quarter; 95% CI, 0.12% to 0.14%) (Table 3).

Figure 1.

Risk-adjusted mortality rates for reported and nonreported conditions, 2005–2012.

Table 3.

Trends in Mortality Over Time for Reported and Nonreported Conditions*

| Variable | Baseline Mortality Rate | Quarterly Change in Mortality: Process-Only Reporting | Quarterly Change in Mortality: Process and Mortality Reporting | Difference in Trend, Process-Only Versus Process and Mortality Reporting (95% CI) | P Value for Difference |
|---|---|---|---|---|---|
| Publicly reported | | | | | |
| AMI | 19.6 | −0.28 | −0.13 | 0.15 (0.12 to 0.18) | <0.001 |
| CHF | 14.8 | −0.21 | −0.06 | 0.15 (0.13 to 0.16) | <0.001 |
| Pneumonia | 14.3 | −0.21 | −0.10 | 0.11 (0.09 to 0.13) | <0.001 |
| All | 15.6 | −0.23 | −0.09 | 0.13 (0.12 to 0.14) | <0.001 |
| Nonreported | | | | | |
| Stroke | 22.2 | −0.15 | −0.15 | 0 (0 to 0.04) | 0.43 |
| Esophageal/gastric | 5.4 | −0.04 | −0.06 | −0.03 (−0.04 to −0.02) | <0.001 |
| Gastrointestinal bleed | 8.7 | −0.11 | −0.06 | 0.04 (0.02 to 0.07) | 0.002 |
| Urinary infection | 9.4 | −0.12 | −0.07 | 0.05 (0.02 to 0.08) | <0.001 |
| Metabolic | 12.4 | −0.20 | −0.02 | 0.18 (0.16 to 0.20) | <0.001 |
| Arrhythmia | 5.1 | −0.05 | −0.03 | 0.02 (0 to 0.03) | 0.021 |
| Renal failure | 21.3 | −0.32 | −0.13 | 0.19 (0.16 to 0.23) | <0.001 |
| Sepsis | 36.8 | −0.46 | −0.29 | 0.18 (0.14 to 0.21) | <0.001 |
| All | 14.8 | −0.17 | −0.11 | 0.06 (0.05 to 0.07) | <0.001 |

AMI = acute myocardial infarction; CHF = congestive heart failure.

\* Values are percentages.

We found that none of the individual conditions were improving faster in the process and mortality reporting period compared with the process-only reporting period. For AMI, mortality changed at a rate of −0.28% per quarter during process-only reporting and slowed to a change of −0.13% per quarter during process and mortality reporting (net change, 0.15% per quarter; CI, 0.12% to 0.18%); similarly, for CHF, the mortality rate was changing at a rate of −0.21% per quarter during process-only reporting, a rate that slowed to −0.06% per quarter during process and mortality reporting (change, 0.15% per quarter; CI, 0.13% to 0.16%). For pneumonia, mortality was changing at a rate of −0.21% per quarter during process-only reporting, and this rate slowed to −0.10% per quarter during process and mortality reporting (change, 0.11% per quarter; CI, 0.09% to 0.13%) (Table 3).

When we examined the nonreported conditions, we found that changes in mortality were similar. Mortality was changing during process-only reporting at −0.17% per quarter, but the rate slowed during process and mortality reporting to −0.11% per quarter (change, 0.06% per quarter; CI, 0.05% to 0.07%). Among the individual conditions, we found that trends in mortality were unchanged or worse in the process and mortality reporting period than in the process-only reporting period for all the study conditions, with the exception of esophageal/gastric disease (Table 3). A formal test for differences in the change in trend between reported and nonreported conditions was statistically significant (difference in change in trend, 0.07% per quarter; CI, 0.068% to 0.072%).

Findings from our main analysis were similar when we included patients who had respiratory failure or sepsis with a secondary diagnosis of pneumonia in our cohort (Appendix Table 4, available at www.annals.org). Results were unchanged after adjustment for race and ethnicity and urban versus rural status (Appendix Table 5, available at www.annals.org). When we varied the date of initiation of reporting to 1 year earlier, the decrease in improvement was greater (0.19% per quarter; Appendix Table 6, available at www.annals.org), and when we varied it to 1 quarter later, the decrease in improvement was similar (0.12% per quarter; Appendix Table 7, available at www.annals.org).

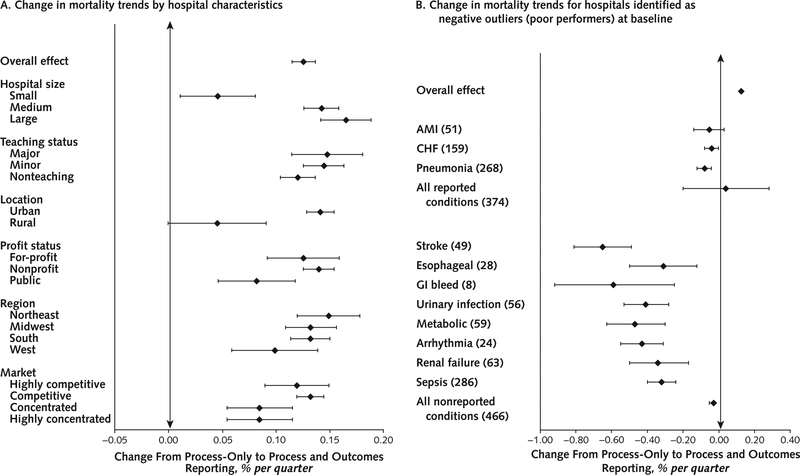

Hospital Subgroups and Changes in Mortality Rates

When we examined subsets of hospitals that we thought might respond differentially to public reporting, we found no groups of hospitals based on hospital characteristics in which overall mortality rates appeared to improve after the implementation of process and mortality reporting compared with process-only reporting (Figure 2). Improvements in mortality seemed to slow less for small and rural hospitals than for their larger or more urban counterparts.

Figure 2. Subgroup analysis of trends in overall mortality for reported conditions.

A. Hospital characteristics. Markers to the right of the vertical rule represent groups in which the rate of improvement in mortality slowed during outcomes reporting. Markers to the left represent groups in which the rate of improvement in mortality increased during outcomes reporting.

B. Outlier status. Each group comprises the hospitals that were the negative outliers (that is, the worst performers) during the baseline period. The numbers in parentheses indicate the number of hospitals identified as outliers in each group. For reported conditions, this was identified by hospitals that were negative outliers on Hospital Compare. For the nonreported conditions, we calculated “outlier status” using a similar method to identify the group of hospitals that would have been labeled as outliers if these conditions were being reported. Markers to the right of the vertical rule represent groups in which the rate of improvement in mortality slowed during outcomes reporting. Markers to the left represent groups in which the rate of improvement in mortality increased during outcomes reporting. AMI = acute myocardial infarction; CHF = congestive heart failure; GI = gastrointestinal.

We did find that hospitals identified as outliers for any of the 3 conditions in the first mortality report tended to improve their mortality rates for that specific condition during the outcomes reporting period compared with the process reporting period (change, −0.05% per quarter for AMI [CI, −0.14% to 0.03%], −0.04% per quarter for CHF [CI, −0.08% to 0.00%], and −0.08% per quarter for pneumonia [CI, −0.12% to −0.04%]) (Figure 2). However, these patterns were mirrored when we examined the group of hospitals that were poor performers at baseline on the nonreported conditions as well. The number of hospitals for which trends in mortality improved versus worsened is summarized in Appendix Table 8 (available at www.annals.org); overall, more hospitals improved than worsened, although the differences were small (51% versus 49% overall).

Discussion

Public reporting of hospital mortality rates on Hospital Compare for Medicare patients with AMI, CHF, and pneumonia was associated with less of a decline in 30-day mortality rates after implementation than that seen in Medicare patients with conditions not subject to reporting. However, absolute mortality rates continued to decrease throughout the study period for all conditions studied.

We are unsure why public reporting of mortality rates has not accelerated overall improvements in this outcome for reported conditions in U.S. hospitals. One would surmise that public reporting ought to work through a “peer-pressure” scenario, in which hospital leaders’ knowledge that their performance will be publicly viewable by their peer institutions would motivate them to improve outcomes. One possible explanation for a lack of effect of public reporting in the overall sample is that the manner in which CMS calculates and displays mortality results may dilute this peer-pressure effect. CMS displays performance ratings for hospitals in 3 major categories (worse than the national average, no different from the national average, and better than the national average), with only 2% to 3% of hospitals rated as worse than expected on any condition in any given year (10). Because most hospitals are thus labeled as average (or, very occasionally, above average), there may be little motivation for hospital leaders to invest the substantial resources and energy needed to improve patient outcomes.

We found that for institutions identified in public reports as poor performers for pneumonia, evidence suggested condition-specific improvement in mortality trends after the onset of reporting. However, these trends were mirrored for hospitals that were poor performers on the nonreported conditions; thus, it may be poor performance with regression to the mean, rather than the reporting itself, that was associated with a faster rate of improvement. It is possible that a reporting scheme that identified more hospitals as outliers, or one that provided a range of performance ratings (for example, much below average, below average, average, above average, and much above average), might have a larger effect overall.

Small and rural hospitals did seem to experience less of a slowing in improvement than their larger or more urban counterparts; because these were the hospital groups that were improving least rapidly in the prereporting period, this essentially led to an equalization in improvement rates with their larger and urban counterparts after the onset of reporting. It is possible that we saw a slowing of improvements in mortality rates overall because hospitals are reaching a lower limit of what is achievable; however, because some hospitals can still achieve mortality rates much below average, this seems less likely.

It is also possible that public reporting did not have a major effect on mortality rates overall because hospital leaders are not convinced that their peers or other important stakeholders will see their data or hold them accountable. Given prior data showing that consumers rarely use publicly reported quality and outcomes information (11, 12), health care leaders may have been unconcerned that poor performance would lead to a loss in market share.

Our study has limitations. We used administrative data, which may be limited in their ability to account for differences in severity of illness between hospitals and across time. It may be that the “sickness” profile of inpatients continues to rise in ways that we could not adequately take into account by using current risk-adjustment models. However, one would have to posit that hospitals were progressively less likely to code comorbid conditions in order for unmeasured severity over time to account for our inability to find a benefit of reporting; we did not see this pattern in our patient characteristics data, and a decrease in coding over time seems unlikely given intense financial pressures to code an increasing number of comorbid conditions. We did not have access to sociodemographic data, such as education, income, and housing, that might affect patient outcomes. Probably because the 3 publicly reported conditions have been the subject of attention for many years, we did not have a control group with a pretrend identical to that in our intervention group. We included only hospitals that were reporting as of the first year of mortality reporting in our sample in order to test the intervention in as clean a manner as possible; our findings may not apply to hospitals that have newly opened since 2009. Finally, improving a hard outcome, such as 30-day mortality, may take longer than the 5 years of outcomes reporting data we included in this study. Whether benefits accrue over a longer period is unclear and requires further evaluation.

Our study adds to a growing body of literature on public reporting, both at the condition level (that is, AMI, CHF, and pneumonia) and at the procedural level (predominantly coronary artery bypass grafting and percutaneous coronary intervention). Previous studies on Hospital Compare have been somewhat mixed; early studies of the process reporting program demonstrated an association between improvement in processes of care and improvement in mortality rates (13–15), although follow-up studies showed that mortality trends overall were unchanged after public reporting of processes alone (2). Therefore, the hypothesis that we did not find an effect because process reporting alone captured most of the benefit of public reporting is unlikely. Similarly, although early studies of coronary artery bypass grafting reporting demonstrated a reduction in mortality rates (16–18), more recent studies of reporting of coronary artery bypass grafting and percutaneous coronary intervention have failed to find a benefit of reporting (19–21) and have demonstrated potential adverse effects, such as reduced access to percutaneous coronary intervention in the setting of AMI (19, 22).

Hospital Compare’s switch from reporting only processes of care to also reporting 30-day mortality rates for common medical conditions was not associated with significant improvements in mortality rates for reported conditions in U.S. hospitals. Although CMS is increasingly moving toward pay-for-performance as a quality improvement strategy, public reporting remains a mainstay of its efforts as it moves into outcomes measurement across additional conditions in the hospital, as well as the postacute, provider, and practice settings, and typically predates pay-for-performance by 2 to 3 years. Our findings suggest that expectations for performance improvement from reporting alone should remain limited.

Grant Support:

Dr. Joynt was funded by grant 1K23HL109177-01 from the National Heart, Lung, and Blood Institute.

Appendix: Detailed Methods

Model Specifications

For our analyses, we used fixed-effects logistic regression models to look for trends over time after adjusting for patient age, sex, and HCC comorbid conditions. Logistic regression was used because our outcome was binary (dead/alive) and the binomial specification with the logit link ensures that the appropriate binomial likelihood is used, that predicted probabilities fall in the correct range of [0, 1], and that heterogeneity of variance is accounted for. Fixed effects for hospitals were included in the model to account for correlation within hospital over time. Inclusion of fixed effects also ensures that the time effects reflect purely within-hospital changes in mortality over time. Otherwise, changes in mortality could be artifacts of changes in the sample of hospitals available in any given month (that is, if poor-quality hospitals closed over time, then mortality rates would appear to decrease even if there were no real changes within hospitals). The model included patient characteristics to ensure that changes in patient severity over time did not mask true changes in the probability of death due to the quality of care provided by a hospital (that is, if patients became sicker over time, mortality rates would appear to increase over time, even if a hospital’s quality of care did not change).

Thus, our model specification was as follows:
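Written in LaTeX, a specification consistent with the term definitions in this appendix is sketched here. The subscripts (i for patient, h for hospital) are notation introduced for this sketch, and the assignment of β1 and β2 to age and sex is an assumption: the text names age and sex as adjustment covariates but does not define those 2 coefficients.

$$
\operatorname{logit}\bigl(\Pr(\text{death}_{ih} = 1)\bigr)
  = \alpha_{1}\,\text{hospital}_{h}
  + \beta_{1}\,\text{age}_{i}
  + \beta_{2}\,\text{sex}_{i}
  + \beta_{3}\,\text{HCC variables}_{i}
  + \beta_{4}\,\text{time in quarters}
  + \beta_{5}\,\text{time2 in quarters}
$$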

Wherein α1 hospital represents the set of indicator variables for the fixed effects of hospitals; β3 HCC variables represents all of the comorbidity terms in the HCC risk-adjustment model; β4 time in quarters represents the linear effect of time (on the log-odds of dying) in the process-only reporting period (the predictor “time in quarters” is measured in quarters from Q1 2005 [time in quarters = 1] to Q4 2012 [time in quarters = 32]); and β5 time2 in quarters represents the change in slope from the process-only reporting period to the mortality reporting period (the predictor “time2 in quarters” is equal to 0 during the process-only reporting period from Q1 2005 to Q4 2007 and then increases incrementally from Q1 2008 [time2 in quarters = 1] to Q4 2012 [time2 in quarters = 20]).
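The coding of the 2 time predictors can be illustrated with a short Python sketch. The function name `time_predictors` and its arguments are hypothetical and are not taken from the authors' statistical code; only the endpoint values (1 and 32 for time, 0 through Q4 2007 and then 1 to 20 for time2) come from the description above.

```python
def time_predictors(year: int, quarter: int) -> tuple[int, int]:
    """Return (time, time2) for a calendar quarter in the study window.

    time  counts quarters from Q1 2005 (= 1) to Q4 2012 (= 32).
    time2 is 0 through Q4 2007, then counts from Q1 2008 (= 1) to Q4 2012 (= 20).
    """
    if not (2005 <= year <= 2012 and 1 <= quarter <= 4):
        raise ValueError("quarter is outside the Q1 2005 to Q4 2012 window")
    time = (year - 2005) * 4 + quarter
    time2 = max(0, time - 12)  # 12 = number of process-only quarters (2005 to 2007)
    return time, time2


# The 4 reference quarters used later for marginal standardization.
for label, (year, quarter) in {
    "Q1 2005": (2005, 1),
    "Q4 2007": (2007, 4),
    "Q1 2008": (2008, 1),
    "Q4 2012": (2012, 4),
}.items():
    print(label, time_predictors(year, quarter))
```

Coding time2 as a count that starts at the reporting change lets β5 be read directly as the change in slope, which is the quantity tested in the difference-in-trend analysis.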

Estimating Mortality Rates and Quarterly Changes in Mortality

Marginal standardization based on the total population of patients in the study database was used to estimate the mortality rate at the start and end of each reporting period: Q1 2005, Q4 2007, Q1 2008, and Q4 2012. The logistic regression model coefficients were used to calculate the predicted probability of death for each individual patient, using all of that patient’s observed covariates except the time terms, which were set to the prechosen reporting quarter.

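For example, to estimate the mortality rate for a patient during Q1 2005, we set time in quarters to 1 and time2 in quarters to 0, kept all of the patient’s other covariates at their observed values, and converted the resulting predicted log-odds of death to a predicted probability.

These individual probabilities, when averaged together, represent a predicted mortality rate for Q1 2005, standardized to the study population: Pred Mort(Q1 2005).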
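In symbols, the Q1 2005 calculation can be sketched as follows. The hats denote fitted coefficients, i indexes patients, h indexes hospitals, and N is the number of patients in the study population; this notation is introduced here for illustration, and attaching β1 and β2 to age and sex is an assumption because the text does not define them.

$$
\hat{\eta}_{ih}(\text{Q1 2005})
  = \hat{\alpha}_{1}\,\text{hospital}_{h}
  + \hat{\beta}_{1}\,\text{age}_{i}
  + \hat{\beta}_{2}\,\text{sex}_{i}
  + \hat{\beta}_{3}\,\text{HCC variables}_{i}
  + \hat{\beta}_{4}\cdot 1
  + \hat{\beta}_{5}\cdot 0
$$

$$
\hat{p}_{ih}(\text{Q1 2005})
  = \frac{\exp\bigl(\hat{\eta}_{ih}(\text{Q1 2005})\bigr)}{1 + \exp\bigl(\hat{\eta}_{ih}(\text{Q1 2005})\bigr)},
\qquad
\text{Pred Mort}(\text{Q1 2005}) = \frac{1}{N}\sum_{i=1}^{N} \hat{p}_{ih}(\text{Q1 2005})
$$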

The analogous calculation is then carried out with “time” and “time2” taking on the appropriate values representing the other 3 quarters above. At the end, we will have 4 estimated mortality rates, representing identical patient populations, which can be compared fairly across time: Pred Mort(Q1 2005), Pred Mort(Q4 2007), Pred Mort(Q1 2008), and Pred Mort(Q4 2012).
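The standardization across the 4 reference quarters can be sketched in Python. Everything numeric below is hypothetical: the per-patient log-odds values and the 2 time coefficients are made up for illustration and are not estimates from the study. Each entry of `base_log_odds` stands for one patient's hospital effect plus covariate contribution, that is, everything in the linear predictor except the time terms.

```python
import math

# Time-term settings (time, time2) for the 4 reference quarters, per the text.
REFERENCE_QUARTERS = {
    "Q1 2005": (1, 0),
    "Q4 2007": (12, 0),
    "Q1 2008": (13, 1),
    "Q4 2012": (32, 20),
}


def inverse_logit(eta: float) -> float:
    """Convert a log-odds value to a probability."""
    return 1.0 / (1.0 + math.exp(-eta))


def standardized_mortality(base_log_odds, beta_time, beta_time2, time, time2):
    """Average predicted probability of death over all patients, with the
    time terms forced to (time, time2) and all other covariates as observed."""
    probs = [
        inverse_logit(base + beta_time * time + beta_time2 * time2)
        for base in base_log_odds
    ]
    return sum(probs) / len(probs)


# Hypothetical inputs (not study estimates).
base_log_odds = [-2.0, -1.5, -1.0, -2.5]
beta_time, beta_time2 = -0.02, 0.01

pred_mort = {
    label: standardized_mortality(base_log_odds, beta_time, beta_time2, t, t2)
    for label, (t, t2) in REFERENCE_QUARTERS.items()
}
for label, rate in pred_mort.items():
    print(f"{label}: {rate:.4f}")
```

Because the same patients are used for every quarter, differences among the 4 rates reflect only the time terms, which is what allows them to be compared fairly across time.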

We then calculated the average quarterly change in mortality over the process-only reporting period and over the process and mortality reporting period by subtracting the estimated initial rate from the estimated final rate and dividing by the number of quarters in the period.

Similar calculations were performed for the “difference in trend” between the 2 periods.
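These quantities can be written out as follows. The symbols n_pre and n_post are placeholders for the number of quarters used as the divisor in each period, because the exact counts are not stated in the text. Taking the difference in trend as the later-period change minus the earlier-period change is an assumption, chosen because it matches the sign of the reported differences (for example, a change from −0.23% to −0.09% per quarter reported as a positive difference).

$$
\Delta_{\text{process-only}}
  = \frac{\text{Pred Mort}(\text{Q4 2007}) - \text{Pred Mort}(\text{Q1 2005})}{n_{\text{pre}}},
\qquad
\Delta_{\text{process and mortality}}
  = \frac{\text{Pred Mort}(\text{Q4 2012}) - \text{Pred Mort}(\text{Q1 2008})}{n_{\text{post}}}
$$

$$
\text{Diff in trend} = \Delta_{\text{process and mortality}} - \Delta_{\text{process-only}}
$$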

Approximate test-based CIs were calculated by estimating the SE for the “difference in trend” from the logistic regression test statistic for the change in the time trend. Specifically, because β5 represents the change in the time trend, we took the test statistic for β5, Testβ5, and approximated se(Diff in trend) = (Diff in trend)/Testβ5, with the final CI given by [Diff in trend ± 1.96 se(Diff in trend)].
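The test-based interval can be sketched in a few lines of Python. The function name `trend_confidence_interval` is hypothetical, and the test statistic of 26.0 in the usage lines is a made-up value for illustration, not a value reported in the study. The use of `abs()` is a guard added in this sketch so that the standard error stays positive if the 2 inputs differ in sign.

```python
def trend_confidence_interval(diff_in_trend, test_statistic, z=1.96):
    """Approximate test-based CI for the difference in trend.

    se = (difference in trend) / (test statistic for beta5)
    CI = difference in trend +/- z * se
    """
    if test_statistic == 0:
        raise ValueError("test statistic must be nonzero")
    se = abs(diff_in_trend / test_statistic)  # abs() is a guard added in this sketch
    return diff_in_trend - z * se, diff_in_trend + z * se


# Illustration: a difference of 0.13 percentage points per quarter with a
# hypothetical test statistic of 26.0.
low, high = trend_confidence_interval(diff_in_trend=0.13, test_statistic=26.0)
print(f"{low:.3f} to {high:.3f}")
```

This approach reuses the significance test from the logistic model, which is on the log-odds scale, to attach an interval to a difference expressed on the probability scale, so the interval is approximate.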

Appendix Table 1.

ICD-9-CM Codes

| Condition | Code |

|---|---|

| AMI | 410.xx, excluding 410.x2 |

| CHF | 398.91, 404.x1, 404.x3, 428.0 to 428.9 |

| Pneumonia | 480 to 486 |

| Stroke | 430, 431, 4320, 4321, 4329, 43301, 43311, 43321, 43331, 43381, 43391, 43401, 43411, 43491 |

| Sepsis | 0031, 0362, 0363, 03689, 0369, 0380, 03810, 03811, 03819, 0382, 0383, 03840, 03841, 03842, 03843, 03844, 03849, 0388, 0389, 0545, 78552, 78559, 7907, 99590, 99591, 99592, 99593, 99594 |

| Esophagitis and gastroenteritis | 0030, 0040, 0041, 0042, 0043, 0048, 0049, 0050, 0053, 0054, 581, 589, 0059, 0060, 0061, 062, 0071, 0074, 0078, 00800, 00801, 00802, 00804, 00809, 0082, 0083, 00841, 00842, 00843, 00844, 00845, 00846, 00847, 00849, 0085, 00861, 00862, 00863, 00867, 00869, 0088, 0090, 0091, 0092, 0093, 11284, 11285, 1231, 1269, 1271, 1272, 1273, 1279, 129, 22804, 2712, 2713, 3064, 4474, 5300, 53010, 53011, 53012, 53019, 5303, 5304, 5305, 5306, 53081, 53083, 53084, 53089, 5309, 53500, 53510, 53520, 53530, 53540, 53550, 53560, 5360, 5361, 5362, 5363, 5368, 5369, 5371, 5372, 5374, 5375, 5376, 53781, 53782, 53789, 5379, 5523, 5533, 5583, 5589, 56200, 56201, 56210, 56211, 56400, 56401, 56402, 56409, 5641, 5642, 5643, 5644, 5645, 5646, 56481, 56489, 5649, 5790, 5791, 5792, 5793, 5794, 5798, 5799, 78701, 78702, 78703, 7871, 7872, 7873, 7874, 7876, 7877, 78791, 78799, 78900, 78901, 78902, 78903, 78904, 78905, 78906, 78907, 78909, 78930, 78931, 78932, 78933, 78934, 78935, 78936, 78937, 78939, 78960, 78961, 78962, 78964, 78966, 78967, 78969, 7899, 7921, 7934, 7936 |

| Gastrointestinal bleeding | 4560, 5307, 53082, 53100, 53101, 53120, 53121, 53140, 53141, 53160, 53161, 53200, 53201, 53220, 53221, 53240, 53241, 53260, 53261, 53300, 53301, 53320, 53340, 53341, 53360, 53400, 53401, 53420, 53440, 53441, 53460, 53501, 53511, 53521, 53531, 53541, 53551, 53561, 53783, 53784, 56202, 56203, 56212, 56213, 5693, 56985, 5780, 5781, 5789 |

| Urinary tract infection | 01600, 01634, 03284, 1200, 59000, 59010, 59011, 5902, 5903, 59080, 5909, 5933, 5950, 5951, 5952, 5953, 59581, 59589, 5959, 5970, 59780, 59781, 59789, 5990 |

| Nutritional or metabolic disorder | 2510, 2512, 2513, 260, 261, 262, 2630, 2631, 2638, 2639, 2651, 2661, 2662, 2669, 267, 2689, 2690, 2691, 2692, 2693, 2698, 2699, 2752, 27540, 27541, 27542, 27549, 2760, 2761, 2762, 2763, 2764, 2765, 27650, 27651, 27652, 2766, 2767, 2768, 2769, 27700, 27800, 27801, 27802, 2781, 2783, 2784, 2788, 7817, 7830, 7831, 78321, 78322, 7833, 78340, 78341, 7835, 7836, 7837, 7839, 79021, 79029 |

| Arrhythmia | 4260, 42610, 42611, 42612, 42613, 4262, 4263, 4264, 42650, 42651, 42652, 42653, 42654, 4266, 4267, 42681, 42682, 42689, 4269, 4270, 4271, 4272, 42731, 42732, 42741, 42742, 42760, 42761, 42769, 42781, 42789, 4279, 7850, 7851, 99601, 99604 |

| Renal failure | 40301, 40311, 40391, 40402, 40412, 40492, 5845, 5846, 5847, 5848, 5849, 585, 5851, 5852, 5853, 5854, 5855, 5856, 5859, 586, 7885, 9585 |

AMI = acute myocardial infarction; CHF = congestive heart failure; ICD-9-CM = International Classification of Diseases, Ninth Revision, Clinical Modification.

Appendix Figure. Comparison of calculated Medicare mortality rates with publicly reported rates from Hospital Compare.

CMS = Centers for Medicare & Medicaid Services (publicly reported measures); HSPH = Harvard School of Public Health (authors’ internal calculations).

Appendix Table 2.

Sample Sizes and Percent Contribution to Sample in Each Year*

| Condition | 2005 | 2006 | 2007 | 2008 | 2009 | 2010 | 2011 | 2012 | Total |

|---|---|---|---|---|---|---|---|---|---|

| AMI | 201 663 (7.0) | 183 331 (6.8) | 173 964 (6.5) | 161 424 (6.4) | 153 345 (6.1) | 149 564 (6.0) | 145 840 (5.9) | 143 292 (6.0) | 1 312 423 (6.3) |

| CHF | 432 945 (14.9) | 406 694 (15.0) | 376 873 (14.1) | 346 920 (13.7) | 352 995 (14.1) | 342 806 (13.7) | 326 415 (13.1) | 306 437 (12.9) | 2 892 085 (14.0) |

| Pneumonia | 479 375 (16.5) | 405 928 (15.0) | 374 415 (14.0) | 344 941 (13.6) | 322 314 (12.8) | 315 042 (12.6) | 319 904 (12.8) | 291 801 (12.3) | 2 853 720 (13.8) |

| Stroke | 221 579 (7.6) | 210 193 (7.8) | 200 637 (7.5) | 189 159 (7.4) | 187 265 (7.5) | 186 704 (7.5) | 184 528 (7.4) | 176 833 (7.4) | 1 556 898 (7.5) |

| Arrhythmia | 321 253 (11.1) | 316 177 (11.7) | 310 424 (11.6) | 306 073 (12.0) | 309 112 (12.3) | 300 684 (12.1) | 291 529 (11.7) | 276 744 (11.6) | 2 431 996 (11.7) |

| Esophageal/gastric disease | 272 535 (9.4) | 290 739 (10.7) | 268 220 (10.0) | 237 853 (9.4) | 241 692 (9.6) | 249 891 (10.0) | 243 926 (9.8) | 225 652 (9.5) | 2 030 508 (9.8) |

| Gastrointestinal bleeding | 215 258 (7.4) | 203 674 (7.5) | 192 911 (7.2) | 180 184 (7.1) | 174 755 (7.0) | 170 780 (6.8) | 170 874 (6.9) | 160 650 (6.8) | 1 469 086 (7.1) |

| Metabolic disease | 211 409 (7.3) | 186 322 (6.9) | 193 281 (7.2) | 175 662 (6.9) | 171 742 (6.8) | 158 760 (6.4) | 143 938 (5.8) | 127 483 (5.4) | 1 368 597 (6.6) |

| Sepsis | 206 395 (7.1) | 152 499 (5.6) | 231 036 (8.6) | 245 879 (9.7) | 249 418 (9.9) | 267 750 (10.7) | 288 828 (11.6) | 303 721 (12.8) | 1 945 526 (9.4) |

| Renal failure | 143 231 (4.9) | 158 994 (5.9) | 165 030 (6.2) | 160 346 (6.3) | 149 052 (5.9) | 150 012 (6.0) | 177 467 (7.1) | 174 363 (7.3) | 1 278 495 (6.2) |

| Urinary tract infection | 195 502 (6.7) | 194 972 (7.2) | 193 851 (7.2) | 191 601 (7.5) | 198 706 (7.9) | 202 965 (8.1) | 198 297 (8.0) | 192 038 (8.1) | 1 567 932 (7.6) |

| Total | 2 901 145 (100.0) | 2 709 523 (100.0) | 2 680 642 (100.0) | 2 540 042 (100.0) | 2 510 396 (100.0) | 2 494 958 (100.0) | 2 491 546 (100.0) | 2 379 014 (100.0) | 20 707 266 (100.0) |

AMI = acute myocardial infarction; CHF = congestive heart failure.

* Values are numbers (percentages).

Appendix Table 3.

Demographic Characteristics and Comorbidities, by Study Year

| Variable | 2005 | 2006 | 2007 | 2008 | 2009 | 2010 | 2011 | 2012 |

|---|---|---|---|---|---|---|---|---|

| Demographic characteristics | ||||||||

| Patients, n | 2 694 750 | 2 557 024 | 2 449 606 | 2 294 163 | 2 260 978 | 2 227 208 | 2 202 718 | 2 075 293 |

| Mean age, y | 79.1 | 79.2 | 79.3 | 79.4 | 80.0 | 80.0 | 79.9 | 80.0 |

| Male, % | 40.9 | 41.1 | 41.3 | 41.2 | 41.5 | 41.7 | 41.9 | 41.5 |

| Comorbidities, %* | ||||||||

| CHF | 47.0 | 46.0 | 44.8 | 40.4 | 46.0 | 43.8 | 47.6 | 47.2 |

| Arrhythmias | 38.7 | 39.1 | 38.8 | 33.1 | 41.1 | 35.3 | 44.9 | 45.5 |

| Renal failure | 21.7 | 28.3 | 30.2 | 30.5 | 37.7 | 36.7 | 42.3 | 43.4 |

| Chronic obstructive pulmonary disease | 30.7 | 30.0 | 28.8 | 20.8 | 27.4 | 22.7 | 31.4 | 30.8 |

| Diabetes without complication | 17.7 | 17.3 | 17.2 | 16.2 | 24.2 | 18.1 | 26.8 | 27.1 |

| Cardiorespiratory failure/shock | 10.9 | 13.1 | 15.2 | 14.6 | 18.5 | 18.8 | 22.4 | 22.7 |

| Vascular disease | 6.8 | 6.7 | 6.7 | 6.6 | 11.9 | 8.3 | 16.9 | 16.9 |

| Protein-calorie malnutrition | 6.1 | 6.1 | 6.8 | 8.5 | 11.2 | 9.9 | 13.1 | 12.8 |

| AMI | 12.5 | 12.2 | 12.2 | 12.4 | 12.3 | 12.3 | 12.2 | 12.4 |

| Angina/old myocardial infarction | 4.7 | 4.4 | 4.0 | 3.2 | 6.7 | 3.3 | 10.7 | 10.7 |

| Septicemia/shock | 6.9 | 7.1 | 7.7 | 8.3 | 9.4 | 9.8 | 9.9 | 10.0 |

| Ischemic or unspecified stroke | 10.5 | 10.3 | 10.2 | 9.5 | 9.7 | 10.7 | 9.6 | 9.6 |

| Polyneuropathy | 1.9 | 1.9 | 2.1 | 2.3 | 5.4 | 3.5 | 9.0 | 9.4 |

| Decubitus ulcer | 4.5 | 4.4 | 5.0 | 5.7 | 5.3 | 4.2 | 7.0 | 6.7 |

| Aspiration/bacterial pneumonias | 5.9 | 5.8 | 6.1 | 6.3 | 6.9 | 6.9 | 7.0 | 6.7 |

AMI = acute myocardial infarction; CHF = congestive heart failure.

* The 15 most frequent Hierarchical Condition Categories present in the sample population, listed in decreasing order based on 2012 prevalence rates.

Appendix Table 4.

Trends in Mortality Over Time, Including Respiratory Failure or Sepsis with a Secondary Diagnosis of Pneumonia in the Pneumonia Cohort*

| Variable | Quarterly Change in Mortality: Process-Only Reporting | Quarterly Change in Mortality: Process and Mortality Reporting | Difference in Trend | P Value for Difference |

|---|---|---|---|---|

| All reported conditions | −0.23 | −0.09 | 0.13 | <0.001 |

| All reported conditions, including respiratory failure or sepsis with a secondary diagnosis of pneumonia in the pneumonia cohort | −0.23 | −0.11 | 0.13 | <0.001 |

* Values are percentages.

Appendix Table 5.

Trends in Mortality Over Time, Adjusted for Race/Ethnicity and Urban Versus Rural Location*

| Variable | Quarterly Change in Mortality: Process-Only Reporting | Quarterly Change in Mortality: Process and Mortality Reporting | Difference in Trend | P Value for Difference |

|---|---|---|---|---|

| Publicly reported | ||||

| AMI | −0.28 | −0.13 | 0.15 | <0.001 |

| CHF | −0.21 | −0.06 | 0.15 | <0.001 |

| Pneumonia | −0.21 | −0.10 | 0.11 | <0.001 |

| All | −0.23 | −0.10 | 0.13 | <0.001 |

| Nonreported | ||||

| Stroke | −0.16 | −0.15 | 0.00 | 0.517 |

| Esophageal/gastric | −0.04 | −0.06 | −0.03 | <0.001 |

| Gastrointestinal bleed | −0.11 | −0.06 | 0.04 | 0.001 |

| Urinary infection | −0.12 | −0.08 | 0.05 | <0.001 |

| Metabolic | −0.20 | −0.02 | 0.18 | <0.001 |

| Arrhythmia | −0.05 | −0.04 | 0.02 | 0.020 |

| Renal failure | −0.33 | −0.13 | 0.20 | <0.001 |

| Sepsis | −0.47 | −0.29 | 0.18 | <0.001 |

| All | −0.17 | −0.11 | 0.06 | <0.001 |

AMI = acute myocardial infarction; CHF = congestive heart failure.

* Values are percentages.

Appendix Table 6.

Trends in Mortality Over Time: Start Date Q1 2007*

| Variable | Quarterly Change in Mortality: Process-Only Reporting | Quarterly Change in Mortality: Process and Mortality Reporting | Difference in Trend | P Value for Difference |

|---|---|---|---|---|

| Publicly reported | ||||

| AMI | −0.33 | −0.15 | 0.18 | <0.001 |

| CHF | −0.29 | −0.07 | 0.22 | <0.001 |

| Pneumonia | −0.27 | −0.10 | 0.17 | <0.001 |

| All | −0.29 | −0.10 | 0.19 | <0.001 |

| Nonreported | ||||

| Stroke | −0.15 | −0.15 | 0.00 | 0.925 |

| Esophageal/gastric | −0.03 | −0.06 | −0.02 | 0.002 |

| Gastrointestinal bleed | −0.14 | −0.08 | 0.06 | <0.001 |

| Urinary infection | −0.13 | −0.07 | 0.06 | <0.001 |

| Metabolic | −0.25 | −0.04 | 0.22 | <0.001 |

| Arrhythmia | −0.07 | −0.04 | 0.03 | <0.001 |

| Renal failure | −0.39 | −0.15 | 0.24 | <0.001 |

| Sepsis | −0.49 | −0.31 | 0.17 | <0.001 |

| All | −0.19 | −0.12 | 0.07 | <0.001 |

AMI = acute myocardial infarction; CHF = congestive heart failure.

* Values are percentages.

Appendix Table 7.

Trends in Mortality Over Time: Start Date Q2 2008*

| Variable | Quarterly Change in Mortality: Process-Only Reporting | Quarterly Change in Mortality: Process and Mortality Reporting | Difference in Trend | P Value for Difference |

|---|---|---|---|---|

| Publicly reported | ||||

| AMI | −0.27 | −0.13 | 0.15 | <0.001 |

| CHF | −0.19 | −0.06 | 0.13 | <0.001 |

| Pneumonia | −0.20 | −0.09 | 0.11 | <0.001 |

| All | −0.22 | −0.09 | 0.12 | <0.001 |

| Nonreported | ||||

| Stroke | −0.16 | −0.15 | 0.01 | 0.558 |

| Esophageal/gastric | −0.04 | −0.06 | −0.02 | <0.001 |

| Gastrointestinal bleed | −0.10 | −0.06 | 0.04 | 0.002 |

| Urinary infection | −0.12 | −0.07 | 0.05 | <0.001 |

| Metabolic | −0.19 | −0.01 | 0.18 | <0.001 |

| Arrhythmia | −0.05 | −0.03 | 0.02 | 0.031 |

| Renal failure | −0.31 | −0.12 | 0.19 | <0.001 |

| Sepsis | −0.46 | −0.28 | 0.18 | <0.001 |

| All | −0.17 | −0.11 | 0.06 | <0.001 |

AMI = acute myocardial infarction; CHF = congestive heart failure.

* Values are percentages.

Appendix Table 8.

Summary of Hospitals That Improved or Worsened in the Study Period for Each Condition*

| Conditions | Improve | Worsen |

|---|---|---|

| Publicly reported | ||

| AMI | 2189 (49.7) | 2216 (50.3) |

| CHF | 2304 (50.8) | 2235 (49.2) |

| Pneumonia | 2336 (51.2) | 2226 (48.8) |

| All | 2312 (50.5) | 2265 (49.5) |

| Nonreported | ||

| Stroke | 2312 (51.4) | 2187 (48.6) |

| Esophageal/gastric | 2321 (50.7) | 2256 (49.3) |

| Gastrointestinal bleed | 2307 (51.1) | 2208 (48.9) |

| Urinary infection | 2471 (54.1) | 2093 (45.9) |

| Metabolic | 2376 (52.0) | 2195 (48.0) |

| Arrhythmia | 2250 (49.6) | 2290 (50.4) |

| Renal failure | 2250 (50.0) | 2252 (50.0) |

| Sepsis | 2373 (52.6) | 2135 (47.4) |

| All | 2388 (51.9) | 2209 (48.1) |

| Total | 2356 (51.2) | 2245 (48.8) |

AMI = acute myocardial infarction; CHF = congestive heart failure.

* Values are numbers (percentages).

Footnotes

Disclosures: Authors have disclosed no conflicts of interest. Forms can be viewed at www.acponline.org/authors/icmje/ConflictOfInterestForms.do?msNum=M15-1462.

Reproducible Research Statement: Study protocol: Not available. Statistical code: Available from Dr. Joynt at kjoynt@hsph.harvard.edu or Dr. Zheng at jzheng@hsph.harvard.edu. Data set: Not available because of data use agreements with CMS.

Current author addresses and author contributions are available at www.annals.org.

References

- 1. Chan PS, Patel MR, Klein LW, Krone RJ, Dehmer GJ, Kennedy K, et al. Appropriateness of percutaneous coronary intervention. JAMA. 2011;306:53–61. doi: 10.1001/jama.2011.916

- 2. Ryan AM, Nallamothu BK, Dimick JB. Medicare’s public reporting initiative on hospital quality had modest or no impact on mortality from three key conditions. Health Aff (Millwood). 2012;31:585–92. doi: 10.1377/hlthaff.2011.0719

- 3. Lindenauer PK, Lagu T, Shieh MS, Pekow PS, Rothberg MB. Association of diagnostic coding with trends in hospitalizations and mortality of patients with pneumonia, 2003–2009. JAMA. 2012;307:1405–13. doi: 10.1001/jama.2012.384

- 4. Bratzler DW, Normand SL, Wang Y, O’Donnell WJ, Metersky M, Han LF, et al. An administrative claims model for profiling hospital 30-day mortality rates for pneumonia patients. PLoS One. 2011;6:e17401. doi: 10.1371/journal.pone.0017401

- 5. Krumholz HM, Wang Y, Mattera JA, Wang Y, Han LF, Ingber MJ, et al. An administrative claims model suitable for profiling hospital performance based on 30-day mortality rates among patients with an acute myocardial infarction. Circulation. 2006;113:1683–92.

- 6. Krumholz HM, Wang Y, Mattera JA, Wang Y, Han LF, Ingber MJ, et al. An administrative claims model suitable for profiling hospital performance based on 30-day mortality rates among patients with heart failure. Circulation. 2006;113:1693–701.

- 7. Centers for Medicare & Medicaid Services. 2015 Condition-Specific Measures Updates and Specifications Report: Hospital-Level 30-Day Risk-Standardized Mortality Measures: Acute Myocardial Infarction—Version 9.0, Heart Failure—Version 9.0, Pneumonia—Version 9.0, Chronic Obstructive Pulmonary Disease—Version 4.0, Stroke—Version 4.0. (Prepared by Yale New Haven Health Services Corporation/Center for Outcomes Research & Evaluation.) Baltimore: Centers for Medicare & Medicaid Services; 2015.

- 8. Li P, Kim MM, Doshi JA. Comparison of the performance of the CMS Hierarchical Condition Category (CMS-HCC) risk adjuster with the Charlson and Elixhauser comorbidity measures in predicting mortality. BMC Health Serv Res. 2010;10:245. doi: 10.1186/1472-6963-10-245

- 9. Muller CJ, MacLehose RF. Estimating predicted probabilities from logistic regression: different methods correspond to different target populations. Int J Epidemiol. 2014;43:962–70. doi: 10.1093/ije/dyu029

- 10. U.S. Department of Health and Human Services. Hospital Compare. 2016. Accessed at www.hospitalcompare.hhs.gov on 2 May 2016.

- 11. Schneider EC, Epstein AM. Use of public performance reports: a survey of patients undergoing cardiac surgery. JAMA. 1998;279:1638–42.

- 12. Ketelaar NA, Faber MJ, Flottorp S, Rygh LH, Deane KH, Eccles MP. Public release of performance data in changing the behaviour of healthcare consumers, professionals or organisations. Cochrane Database Syst Rev. 2011:CD004538. doi: 10.1002/14651858.CD004538.pub2

- 13. Werner RM, Bradlow ET. Public reporting on hospital process improvements is linked to better patient outcomes. Health Aff (Millwood). 2010;29:1319–24. doi: 10.1377/hlthaff.2008.0770

- 14. Jha AK, Orav EJ, Li Z, Epstein AM. The inverse relationship between mortality rates and performance in the Hospital Quality Alliance measures. Health Aff (Millwood). 2007;26:1104–10.

- 15. Werner RM, Bradlow ET. Relationship between Medicare’s hospital compare performance measures and mortality rates. JAMA. 2006;296:2694–702.

- 16. Hannan EL, Siu AL, Kumar D, Kilburn H Jr, Chassin MR. The decline in coronary artery bypass graft surgery mortality in New York State. The role of surgeon volume. JAMA. 1995;273:209–13.

- 17. Hannan EL, Kumar D, Racz M, Siu AL, Chassin MR. New York State’s Cardiac Surgery Reporting System: four years later. Ann Thorac Surg. 1994;58:1852–7.

- 18. Peterson ED, DeLong ER, Jollis JG, Muhlbaier LH, Mark DB. The effects of New York’s bypass surgery provider profiling on access to care and patient outcomes in the elderly. J Am Coll Cardiol. 1998;32:993–9.

- 19. Joynt KE, Blumenthal DM, Orav EJ, Resnic FS, Jha AK. Association of public reporting for percutaneous coronary intervention with utilization and outcomes among Medicare beneficiaries with acute myocardial infarction. JAMA. 2012;308:1460–8. doi: 10.1001/jama.2012.12922

- 20. Ghali WA, Ash AS, Hall RE, Moskowitz MA. Statewide quality improvement initiatives and mortality after cardiac surgery. JAMA. 1997;277:379–82.

- 21. O’Connor GT, Plume SK, Olmstead EM, Morton JR, Maloney CT, Nugent WC, et al. A regional intervention to improve the hospital mortality associated with coronary artery bypass graft surgery. The Northern New England Cardiovascular Disease Study Group. JAMA. 1996;275:841–6.

- 22. Werner RM, Asch DA, Polsky D. Racial profiling: the unintended consequences of coronary artery bypass graft report cards. Circulation. 2005;111:1257–63.