Abstract

Simple Summary

Animal monitoring normally requires procedures that are time-consuming and labour-intensive. The implementation of novel non-invasive technologies could be a good approach to monitor animal health and welfare. This study aimed to evaluate the use of images and computer-based methods to track specific features of the face and to assess temperature, respiration rate and heart rate in cattle. The measurements were compared with measures obtained with conventional methods during the same time period. The data were collected from ten dairy cows that were recorded during six handling procedures across two consecutive days. The results from this study show an accuracy of over 92% for the computer algorithm that was developed to track the areas selected on the videos collected. In addition, acceptable correlation was observed between the temperature calculated from thermal infrared images and temperature collected using intravaginal loggers. Moreover, there was acceptable correlation between the respiration rate calculated from infrared videos and from visual observation. Furthermore, a low to high relationship was found between the heart rate obtained from videos and from attached monitors. The study also showed that both the position of the cameras and the area analysed on the images are very important, as both had a large impact on the accuracy of the methods. The positive outcomes and the limitations observed in this study suggest the need for further research.

Abstract

Precision livestock farming has emerged with the aim of providing detailed information to detect and reduce problems related to animal management. This study aimed to develop and validate computer vision techniques to track required features of cattle face and to remotely assess eye temperature, ear-base temperature, respiration rate, and heart rate in cattle. Ten dairy cows were recorded during six handling procedures across two consecutive days using thermal infrared cameras and RGB (red, green, blue) video cameras. Simultaneously, core body temperature, respiration rate and heart rate were measured using more conventional ‘invasive’ methods to be compared with the data obtained with the proposed algorithms. The feature tracking algorithm, developed to improve image processing, showed an accuracy between 92% and 95% when tracking different areas of the face of cows. The results of this study also show correlation coefficients up to 0.99 between temperature measures obtained invasively and those obtained remotely, with the highest values achieved when the analysis was performed within individual cows. In the case of respiration rate, a positive correlation (r = 0.87) was found between visual observations and the analysis of non-radiometric infrared videos. Low to high correlation coefficients were found between the heart rates (0.09–0.99) obtained from attached monitors and from the proposed method. Furthermore, camera location and the area analysed appear to have a relevant impact on the performance of the proposed techniques. This study shows positive outcomes from the proposed computer vision techniques when measuring physiological parameters. Further research is needed to automate and improve these techniques to measure physiological changes in farm animals considering their individual characteristics.

Keywords: computer vision, physiological parameters, animal monitoring, imagery

1. Introduction

The livestock industry is continually seeking more sustainable systems, which implement novel technologies that contribute to better management strategies [1]. Precision livestock farming (PLF) has emerged as a response to the need for a regular monitoring system that provides detailed information, allowing quick and evidence-based decisions on the animal’s needs [2]. Sound sensors, cameras, and image analysis have been considered as part of PLF development and appear to be useful non-invasive technologies that allow the monitoring of animals without producing discomfort [3,4,5,6,7].

Some researchers are also developing and implementing new technologies in order to achieve automatic and less invasive techniques to monitor vital parameters such as heart rate (HR), respiration rate (RR) and core body temperature [8,9,10,11]. These parameters have been commonly measured through methods that require human–animal interaction, such as the use of a stethoscope for HR and RR measurement, and a thermometer for core body temperature measurement [12,13,14]. Although these and other contact techniques have been widely used for medical, routine and scientific monitoring of animals, most of them are labour-intensive and can cause discomfort to animals; consequently, they are not practical for continuous and large-scale animal monitoring [15,16]. Hence, computer vision (CV) techniques appear as promising methods to perform non-contact measuring of one or more physiological parameters, including temperature, HR, and RR in farm animals [17].

Thermal infrared (TIR) sensors have been used for decades in research aiming to assess skin temperature as an indicator of health and welfare issues in farm animals [11]. The implementation of these technologies is based on the fact that the alteration of blood flow underlying the skin generates changes in body surface temperature, which is detected as radiated energy (photons) by TIR cameras, and later observed as skin temperature when analysing the images [18]. This analysis allows the selection of one or more regions of interest (ROIs), which has led to the use of these technologies to detect signs of inflammation in certain areas of the body, or to assess the surface temperature of specific areas, as an index to body temperature in animals [19,20].

In terms of HR measurement, a variety of less invasive techniques, such as attached monitors, have been tested to assess HR in cattle and other farm animals. However, it has been found that this measurement can be affected by the equipment and the measurement technique [21]. For this reason, researchers are currently investigating computer-based techniques as a promising possibility for a contactless way of monitoring HR on farms [22]. This interest is also promoted by the results reported in humans, which have shown that changes in blood flow can be detected, allowing the measurement of HR in people by using images and computer algorithms that are based on skin colour changes or the subtle motion that occurs in the body due to cardiac movements [23,24,25,26,27]. Although these studies showed promising results, most of them agree that the main noise observed in the results is related to motion and light conditions [25]. Some researchers, such as Li et al. [28] and Wang et al. [29], have focused on improving these methods by including algorithms for tracking the ROI and pixels, and for illumination correction.

RR is another important measurement used in human and animal health, and the search for new and less labour-intensive methods of assessing it has grown in the last decades. Methods such as respiratory belt transducers, ECG (electrocardiogram) morphology, and photoplethysmography morphology have been implemented in several studies [30]. However, these methods require the attachment of sensors, which can produce discomfort and stress in the person or animal [30,31]. Hence, imagery and computer algorithms have increasingly been used in studies aiming to remotely assess the RR of people and animals [5,17,30,31]. Although these studies showed promising results when remotely measuring RR, the methods were only tested when the participants were motionless, or required the respiration movements to be counted manually.

This study aimed to develop and validate computer vision algorithms against more traditional measurements to measure (1) ‘eye’ and ‘ear-base’ temperature from TIR imagery, (2) RR from infrared videos, (3) HR from RGB videos, and (4) an algorithm for feature tracking in cattle. Additional aims were to identify the most suitable camera position and the most suitable ROI, both in terms of the validity as well as accuracy of the proposed algorithms, to facilitate possible implementation of these methods in an automated monitoring system on farm.

2. Materials and Methods

2.1. Study Site and Animals Description

This study was approved by The University of Melbourne’s Animal Ethics Committee (Ethics ID: 1714124.1). Data were collected during two consecutive days at the University of Melbourne’s robotic dairy farm (Dookie, VIC, Australia) in March 2017. Ten lactating cows (Holstein Friesian) varying in parity (2–5), average daily milk production (32.2–42.3 kg), and liveweight (601–783 kg), were randomly selected from the herd. These cows were placed in a holding yard the night before starting the procedures and kept in this yard overnight during the experiment period.

2.2. Data Acquisition and Computer Vision Analysis

2.2.1. Cameras Description

Devices equipped with thermal and RGB sensors were built to allow simultaneous recording of thermal and RGB images of the animals involved. The sensors incorporated were an infrared thermal camera (FLIR® AX8; FLIR Systems, Wilsonville, OR, USA) and an RGB video camera (Raspberry Pi Camera Module V2.1; Raspberry Pi Foundation, Cambridge, UK).

The FLIR AX8 Thermal Camera has a spectral range of 7.5–13 μm and an accuracy of ±2%. The emissivity used in these cameras is 0.985, which has been reported as the emissivity of the skin of humans and other mammals (0.98 ± 0.01; [6,32]). Thermal imaging cameras with similar characteristics have been used in several studies to assess skin temperature in humans and animals [6,33,34,35,36]. Furthermore, the RGB video camera used for this project was a Raspberry Pi Camera Module V2.1, which has an 8-megapixel sensor and records at a rate of 29 frames per second.

In addition to the built devices, two Hero 3+ cameras (GoPro, Silver Edition v03.02®, San Mateo, CA, USA) were attached to the milking robot to continuously record the side and front of the cows’ faces while they were being milked. These cameras record RGB videos at a rate of 29 frames per second, with a resolution of 1280 × 720 pixels. Moreover, a FLIR® ONE (FLIR Systems, Wilsonville, OR, USA) Thermal Imaging Case attached to an iPhone® 5 (Apple Inc., Cupertino, CA, USA) was used to record non-radiometric IR videos when animals were in the crush.

2.2.2. Feature Tracking Technique

This methodology was developed to avoid the misclassification of pixels that could later be considered for the prediction of physiological parameters. The feature tracking procedure aimed to trace characteristic points over an ROI through the sequential frames of a video, using computer vision algorithms. The complete feature tracking methodology was integrated in a graphical user interface built in Matlab® R2018b and is divided into two main steps: (i) identification of features over the ROI and (ii) reconstruction of a new ROI based on the points tracked over frames.

(i) For the feature identification step, the following pattern recognition techniques are automatically computed: the minimum eigenvalue [37], the speeded up robust features (SURF) [38], the binary robust invariant scalable keypoints (BRISK) [39], the features from accelerated segment test (FAST) algorithm [40], a combined corner and edge detection algorithm [41], and the histograms of oriented gradients algorithm [42]. As these techniques differ in performance and in the target for feature detection, they are automatically compared within the ROI of each specific video. After these techniques have been evaluated, the one that yields the most representative features in each case is selected. Some of the functions developed as part of the methodology were based on the Computer Vision Toolbox available in Matlab® R2018b.

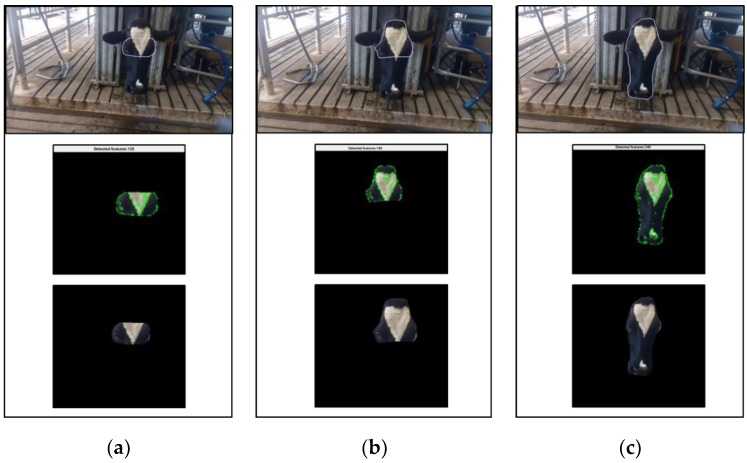

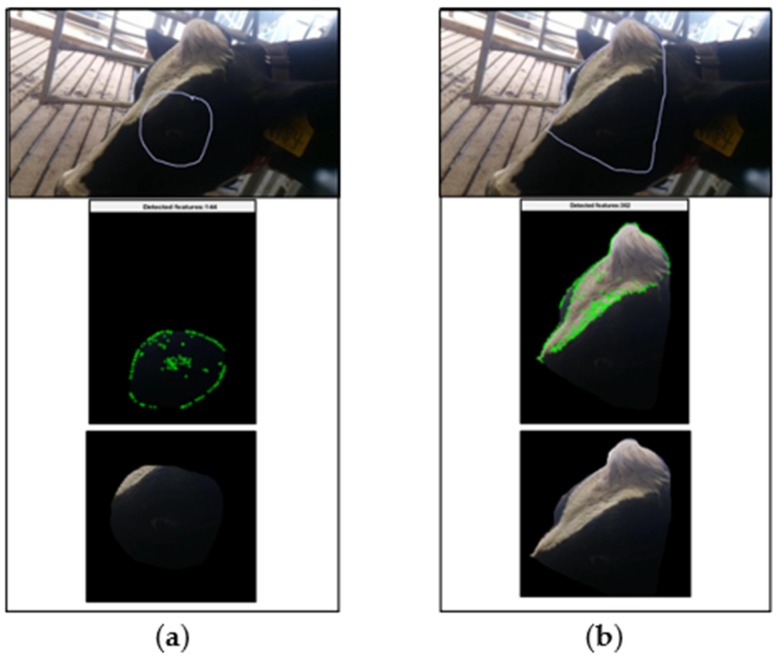

(ii) With the results from the previous step, the selected feature identification technique and the selected ROI are used for tracking the points over the sequential frames, using a modified KLT (Kanade–Lucas–Tomasi)-based algorithm [43]. This tracking procedure refreshes the selected ROI over all the consecutive frames and sets up a new ROI based on the area that best includes the newly identified points, by masking (using a binary image segmentation) all the pixels that are outside of that region (Figure 1 and Figure 2). This new ROI is only created if the ratio between the selected points and the newly identified points is higher than 70%. Finally, the prediction of physiological parameters was applied over the frames containing only the information of interest.

Figure 1.

Stages of the feature tracking method when cameras are located in front of the animal on (a) the eye area, (b) the forehead and (c) the face.

Figure 2.

Stages of the feature tracking method when cameras are located next to the animal on (a) the eye area and (b) the forehead.

This method decreased the misclassification of pixels, increasing accuracy when analyzing the desired area. Another advantage of this methodology is that, because it is automated (not supervised), it requires user input only for the initial selection of the ROI and for the selection of the feature identification technique that best represents the features of the ROI.
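The ROI refresh rule described in step (ii) can be illustrated with a minimal Python sketch. It is not the authors' Matlab implementation: the function names, the bounding-box form of the new ROI, and the reading of the 70% rule as "tracked points divided by initially selected points" are all assumptions made for illustration.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float]            # (x, y) pixel coordinates
Box = Tuple[int, int, int, int]        # (x_min, y_min, x_max, y_max), inclusive

MIN_POINT_RATIO = 0.70  # threshold quoted in the text; its exact definition is assumed


def refresh_roi(n_selected: int, tracked: List[Point]) -> Optional[Box]:
    """Return a bounding box around the tracked points, or None if too few survived."""
    if n_selected == 0 or len(tracked) / n_selected <= MIN_POINT_RATIO:
        return None  # keep the previous ROI (hypothetical fallback)
    xs = [p[0] for p in tracked]
    ys = [p[1] for p in tracked]
    return (math.floor(min(xs)), math.floor(min(ys)),
            math.ceil(max(xs)), math.ceil(max(ys)))


def mask_frame(frame: List[List[int]], box: Box) -> List[List[int]]:
    """Set every pixel outside the box to zero, leaving only the ROI."""
    x0, y0, x1, y1 = box
    return [
        [v if (x0 <= x <= x1 and y0 <= y <= y1) else 0 for x, v in enumerate(row)]
        for y, row in enumerate(frame)
    ]
```

With four selected points of which three are still tracked, the ratio is 0.75 and a new box is built; with only two tracked points the function returns `None` and the caller would keep the earlier ROI.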

2.2.3. Image Analysis

Image processing was performed separately for each physiological parameter. In the case of temperature, TIR images, obtained with FLIR AX8 cameras, were processed to extract temperature. To automate the processing of the thermal images, a script was developed in MATLAB® R2018b (Mathworks Inc., Natick, MA, USA), using a software development kit (SDK) named FLIR® Atlas SDK [44,45]. The script automatically extracted the radiometric information from the original data and saved it into ‘.tiff’ and ‘.dat’ files. In addition, an ROI was set on the images to extract the statistical parameters of the temperature in the specific area selected. In this study, ‘eye area’ and ‘ear base’ were used as ROIs to extract temperature. The selection of these two areas was based on previously reported studies, which analysed these areas when assessing the temperature of farm animals as a measurement of body temperature [46,47].

The non-radiometric infrared (IR) videos obtained with the FLIR ONE were processed through an algorithm that was developed in Matlab® for this study, to measure the RR in the participating cows. This algorithm calculates RR measures based on the changes in pixel intensity values detected within the ROI (nose area) due to respiratory airflow, which indicates the inhalations and exhalations performed by the animal during the time analysed.
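The idea of deriving RR from pixel-intensity changes in the nose ROI can be sketched in Python as follows. This is a simplified illustration under stated assumptions, not the algorithm developed for the study: the input is assumed to be one mean ROI intensity per frame, and the breath-counting rule (upward crossings of the signal mean after a short moving average) is a stand-in chosen for brevity. The names `moving_average` and `respiration_rate` are hypothetical.

```python
from typing import List


def moving_average(x: List[float], width: int) -> List[float]:
    """Centred moving average; the window shrinks at the two ends of the signal."""
    half = width // 2
    out = []
    for i in range(len(x)):
        window = x[max(0, i - half): i + half + 1]
        out.append(sum(window) / len(window))
    return out


def respiration_rate(roi_means: List[float], fps: float, smooth_s: float = 0.5) -> float:
    """Breaths per minute estimated from the mean ROI intensity of each frame."""
    smoothed = moving_average(roi_means, max(1, int(smooth_s * fps)))
    baseline = sum(smoothed) / len(smoothed)
    # Each upward crossing of the baseline is counted as one breathing cycle.
    cycles = sum(1 for a, b in zip(smoothed, smoothed[1:]) if a < baseline <= b)
    duration_min = len(roi_means) / fps / 60.0
    return cycles / duration_min
```

A one-minute recording, as used for the crush videos, would be passed as a list with `fps * 60` entries; the smoothing width is a tunable assumption and would need adjusting for noisy footage.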

For the HR analysis, RGB videos obtained with Raspberry Pi and GoPro cameras were first examined and classified per cow. Once the videos of each animal were identified, they were processed through a customized algorithm developed in Matlab® R2018b, which identifies the changes of luminosity in the green colour channel from the ROIs obtained, using the photoplethysmography principle and based on the peak analysis of the signal obtained from luminosity over time. A second-order Butterworth filter and a fast Fourier transform (FFT) are applied after this signal is obtained. This process provides HR values (BPM) every 0.5 s [26]. The ROI was tracked using the feature tracking algorithm described above (Section 2.2.2). The areas analysed as ROIs for HR assessment were the ‘eye area’, ‘forehead’ and ‘face’. This selection was based on the areas that some studies have used as ROIs when implementing computer algorithms to analyse HR in humans [17,25].
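The frequency-domain step of this kind of photoplethysmography analysis can be sketched in Python. The sketch below is an assumption-laden simplification: it takes one mean green-channel value per frame, removes the mean, and returns the dominant frequency within a search band using a plain discrete Fourier transform. The Butterworth filtering stage is not reproduced; restricting the search band plays a similar role here. The band limits (40 to 180 BPM) and the name `heart_rate_bpm` are illustrative choices, not values taken from the study.

```python
import cmath
import math
from typing import List


def heart_rate_bpm(green: List[float], fps: float,
                   lo_bpm: float = 40.0, hi_bpm: float = 180.0) -> float:
    """Dominant frequency of the green-channel signal, in beats per minute."""
    n = len(green)
    mean = sum(green) / n
    centred = [g - mean for g in green]  # remove the constant (DC) component
    best_bpm, best_power = 0.0, -1.0
    for k in range(1, n // 2 + 1):
        bpm = 60.0 * k * fps / n  # frequency of DFT bin k, converted to BPM
        if not lo_bpm <= bpm <= hi_bpm:
            continue  # skip bins outside the assumed cardiac band
        coeff = sum(c * cmath.exp(-2j * math.pi * k * i / n)
                    for i, c in enumerate(centred))
        power = abs(coeff) ** 2
        if power > best_power:
            best_bpm, best_power = bpm, power
    return best_bpm
```

To obtain a value every 0.5 s, as described above, the function could be applied to a sliding window that advances by `fps / 2` frames; the window length then sets the frequency resolution (a 10 s window at 29 frames per second resolves 6 BPM steps).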

2.2.4. Data Collected

The protocol of this study was designed to compare invasively and remotely obtained data, collected simultaneously from the same animals during six periods across two consecutive days. The periods recorded included two different conditions (restraint within the crush and during robotic milking).

Physiological parameters included in this study (body temperature, RR and HR) were assessed simultaneously by a gold-standard (labelled as ‘invasive’ for the purpose of this study) and a CV technique. The body temperature of these animals was assessed by the analysis of eye and ear-base temperature obtained from TIR images (frame rate: 1 per second), and by vaginal temperature obtained from temperature loggers (Thermochron®; Maxim Integrated Products, Sunnyvale, CA, USA) which were set to record every 60 s, accommodated in a blank controlled internal drug release (CIDR; Pfizer Animal Health, Sterling, CO, USA) device and placed into each participating cow [48]. RR was measured using the respective CV method and by visual observations from RGB video obtained with a Raspberry Pi Camera when cows were in the crush. These visual observations were always performed by the same observer, who counted breathing movements during one minute. Finally, HR of cows was measured using the proposed CV method as well as with a commercial heart rate monitor (Polar WearLink®; Polar Electro Oy, Kempele, Finland) as the ‘invasive’ technique, which has been validated and used in cattle in several studies [21,49,50].

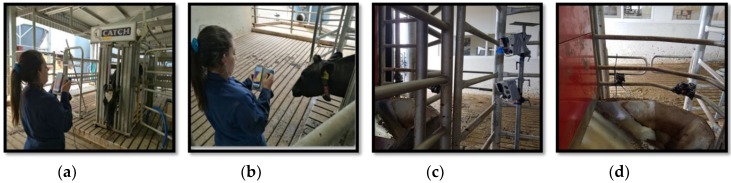

One of the built devices (including TIR and RGB cameras) was held outside the crush to record the face of cows from the front (within 1.5 m) and next to them (within 0.5 m). Another of these devices was attached next to the milking robot to record one side of the face of cows (within 0.5 m) during the milking process (due to the structure of the milking robot and the size of this device, it was not possible to place it in front of the face of cows). In addition, two GoPro cameras were attached to the milking robot to record videos from the front and side of the face of cows (within 0.5 m). Consequently, thermal images were obtained from the front of the animals within a distance of 1.5 m (in the crush), and next to the animals’ face within a distance of 0.5 m (in the crush and in the milking machine). RGB videos, in turn, were recorded from three different positions: in front of cows within a distance of 1.5 m, in front of cows within a distance of 0.5 m (in the milking machine), and alongside within a distance of 0.5 m (in the crush and in the milking machine). Non-radiometric IR videos were obtained in front of the animals from a distance of 1.5 m (in the crush).

2.2.5. Experiment Procedures

As the first procedure, the group of cows was drafted to a yard that was connected to the crush via a raceway, from where cows were individually moved into the crush. In this position, a patch on the left anterior thorax was shaved, which has been considered as the correct place to measure heart rate in bovines [51,52]. Then, the heart rate monitor was placed using an elastic band around the thorax of the cow, and lubricated with gel (Figure 3). When the HR monitor was attached, the head of the cow was restrained in the head bail for 4 min. The recording was performed in front of the cow’s face for the first 3 min, and by the side of the cow’s head during the last minute (Figure 4a,b). After this period, the cow was released from the crush, allowing her to visit the milking robot to be milked.

Figure 3.

Polar heart rate sensor and Polar watch fastened by elastic band.

Figure 4.

The various camera positions used; (a) the developed device is held in front of the animals, within a distance of 1.5 m, (b) the developed device is held next to the animals, within a distance of 0.5 m, (c) developed devices are located next to the milking robot, within a distance of 0.5 m and (d) GoPro cameras are located in the milking robot, within a distance of 0.5 m.

Cameras were also placed in the milking robot in order to record videos and IR images of the face of these cows when they were being milked (duration 6–11 min) (Figure 4c,d). When the cow finished the milking process, she was moved to the crush again in order to record images in the same way as before. Once this recording was finished, the HR monitor was removed and the cow was released on pasture with the rest of the herd during the afternoon. These procedures were performed during the morning (7:00–11:00) on two consecutive days. Non-radiometric IR videos of ten cows were recorded for one minute during the first crush procedure on the first day of the experiment (due to technical difficulties, it was not possible to record during the following procedures). Finally, the CIDR was removed at the end of the last day, at the time when the Polar HR monitor was removed.

2.3. Statistical Analysis

The accuracy of the feature tracking method was evaluated by measuring the number of frames in which this algorithm correctly tracked the desired area over the total frames processed.

Once the images were processed for the assessment of physiological parameters, statistical analyses were performed using Minitab® Statistical Software 18 (Minitab Pty Ltd., Sydney NSW, Australia). Firstly, normality tests were performed in order to check the distribution of the data obtained. After that, Pearson correlation was calculated to measure the strength of the linear association between each remotely measured parameter (surface temperature, RR and HR) with its respective parameter measured by an ‘invasive’ method (intravaginal temperature loggers, visual observations for RR assessment, and Polar HR monitors). In addition, a linear regression analysis was performed to determine whether remotely measured parameters could accurately predict invasively measured parameters. In terms of temperature and HR, correlations were firstly made with the set of data obtained from the whole group and considering the average of every minute recorded as well as considering the average obtained for each handling procedure (1st crush, milking and 2nd crush). Then, the correlation analyses were performed per cow. From these last correlations, the average and standard deviation (SD) of the correlation coefficients obtained from each setting (type of camera; camera position; camera distance; area of face analysed) were calculated and presented as ‘mean ± SD’.
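The per-cow analysis described above (Pearson correlation per animal, then mean ± SD of the coefficients, plus a linear fit with the remote measure as predictor) can be sketched in Python. The analyses in the study were run in Minitab; the function names and data layout below are hypothetical and serve only to illustrate the computation.

```python
import math
from statistics import mean, stdev
from typing import Dict, Sequence, Tuple


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length series."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def linear_fit(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Least-squares slope and intercept for y = slope * x + intercept."""
    mx, my = mean(x), mean(y)
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sxx
    return slope, my - slope * mx


def mean_sd_of_r(
    per_cow: Dict[str, Tuple[Sequence[float], Sequence[float]]]
) -> Tuple[float, float]:
    """Mean and SD of the per-cow coefficients; values are (remote, invasive) pairs."""
    rs = [pearson_r(remote, invasive) for remote, invasive in per_cow.values()]
    return mean(rs), stdev(rs)
```

Here `per_cow` would map each animal to its paired remote and invasive averages for one camera setting, for example the six handling-period averages, so that `mean_sd_of_r` yields the 'mean ± SD' summary for that setting.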

Furthermore, the differences between the parameters obtained from both methods were calculated.

3. Results

The data obtained from ‘invasive’ and remote methods were compared within the group and within individual animals.

Firstly, the accuracy of the feature tracking algorithm was calculated. Table 1 displays its level of accuracy when tracking different areas of a cow’s head. This method showed a high accuracy when tracking the three areas analysed (92%–95%). Moreover, it improved in accuracy as the size of the area tracked increased.

Table 1.

Analysis of the accuracy of the tracking method for each feature (eyes, forehead, face).

| Tracked Feature | Number of Frames Analysed | Number of Frames Correctly Tracked | Accuracy (%) |
|---|---|---|---|
| Eyes | 134,966 | 124,207 | 92.0 |
| Forehead | 134,966 | 124,526 | 92.3 |
| Face | 125,715 | 118,813 | 94.5 |
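
The accuracy column of Table 1 follows directly from the frame counts, as the short Python check below shows. The function name `tracking_accuracy` is illustrative; the counts are those reported in the table.

```python
def tracking_accuracy(frames_correct: int, frames_total: int) -> float:
    """Percentage of processed frames in which the ROI was tracked correctly."""
    if frames_total <= 0:
        raise ValueError("frames_total must be positive")
    return 100.0 * frames_correct / frames_total


# (frames correctly tracked, frames analysed) as reported in Table 1.
table_1 = {
    "Eyes": (124_207, 134_966),
    "Forehead": (124_526, 134_966),
    "Face": (118_813, 125_715),
}
accuracies = {
    name: round(tracking_accuracy(correct, total), 1)
    for name, (correct, total) in table_1.items()
}
```

Rounded to one decimal place, the three ratios reproduce the tabulated values of 92.0, 92.3 and 94.5.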

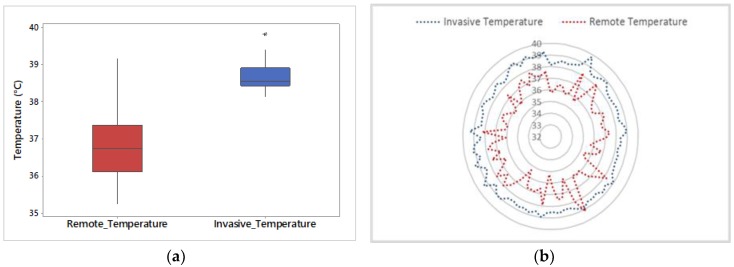

When comparing intravaginal temperatures with eye temperatures, eye temperature had a larger variability than the vaginal temperature (Figure 5a). Furthermore, Figure 5b shows the trend of intravaginal and eye temperature, in which eye temperature was consistently lower than intravaginal temperature.

Figure 5.

Comparison between intravaginal temperature (Invasive Temperature) and eye temperature obtained from TIR images (Remote Temperature) using (a) boxplots and (b) a trend graph. For the measurement of remote temperature, the thermal infrared camera (FLIR® AX8) was located beside the animals within a distance of 0.5 m. Data used was the average for each cow within a handling period (milking or crush). N = 60. On a boxplot (a), asterisks (*) denote outliers.

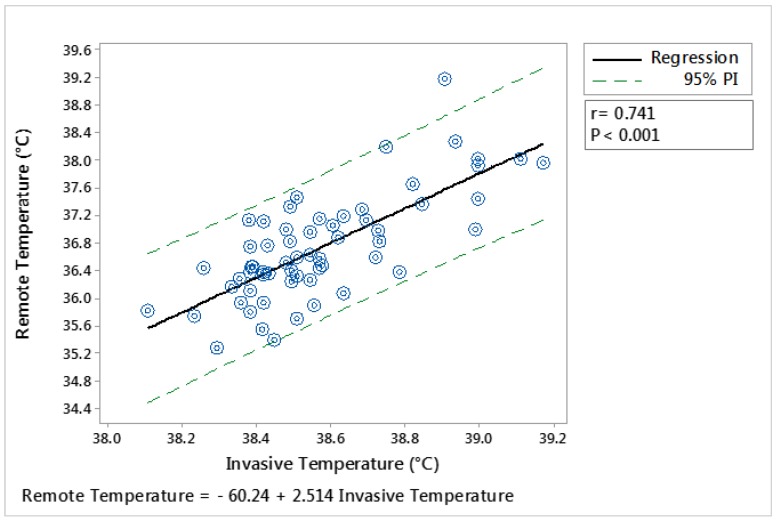

When the analysis of temperatures was performed including all temperature data obtained from the whole group, eye temperature extracted from images that were recorded from the side of cows within a distance of 0.5 m showed a high correlation with intravaginal temperature (r = 0.74; p < 0.001; Figure 6).

Figure 6.

Regression analysis of the relationship between (i) intravaginal temperature (Invasive Temperature) and (ii) eye temperature obtained from thermal infrared images (Remote Temperature). For the measurement of remote temperature, the thermal infrared camera (FLIR® AX8) was located beside the animals within a distance of 0.5 m. The solid line shows the line of best fit, the dotted lines show the 95% and the equation and associated r and p value are shown. Each point represents an average for the animal within a handling period (milking or crush). N = 60.

The temperature data were then analysed per cow, where correlations were higher for the analysis that used the average of temperature obtained during each handling period (Table 2, Table 3), compared to when the analysis was performed considering the average temperature obtained for each minute recorded. In addition, the highest correlations between invasively and remotely obtained temperatures were observed when ‘eye area’ was selected as the ROI during the processing of TIR images (r = 0.64–0.93), compared to the correlation obtained when ‘ear-base’ was used as ROI (r = 0.33–0.70).

Table 2.

Pearson correlation coefficients (r) between the core temperature of individual animals obtained from intravaginal loggers and temperature measured using thermal infrared images, analyzing different areas on the animal (eye area, ear base) and different camera positions relative to the animal (front, side, 1.5 m, 0.5 m). Data used is the average for the animal within a period of handling (six handling periods per animal).

| Invasive Method | Computer-Vision Camera | Camera Position | Camera Distance | Analysed Area | Mean Correlation Coefficient (r) * | Range (r) | p-Value ** |
|---|---|---|---|---|---|---|---|
| Thermochron | FLIR AX8 | Front | 1.5 m | Eye area | 0.77 ± 0.12 | 0.64–0.93 | <0.01 |
| Thermochron | FLIR AX8 | Side | 0.5 m | Eye area | 0.8 ± 0.06 | 0.74–0.89 | <0.01 |
| Thermochron | FLIR AX8 | Side | 0.5 m | Ear base | 0.54 ± 0.15 | 0.33–0.70 | <0.05 |

* Correlation coefficients are presented as mean ± SD; ** p-values are the highest observed in each category.

Table 3.

Pearson correlation coefficients (r) within individual animals, between the core temperature obtained from intravaginal loggers and temperature measured using thermal infrared images, analyzing different areas on the animal (eye area, ear base) and different camera positions relative to the animal (front, side, 1.5 m, 0.5 m). The data used were the average over one minute, for each animal, within all the handling periods (milking and crush).

| Invasive Method | Computer-Vision Camera | Camera Position | Camera Distance | Analysed Area | Mean Correlation Coefficient (r) * | Range (r) | p-Value ** |
|---|---|---|---|---|---|---|---|
| Thermochron | FLIR AX8 | Front | 1.5 m | Eye area | 0.64 ± 0.18 | 0.42–0.83 | <0.05 |
| Thermochron | FLIR AX8 | Side | 0.5 m | Eye area | 0.68 ± 0.17 | 0.47–0.86 | <0.05 |
| Thermochron | FLIR AX8 | Side | 0.5 m | Ear base | 0.43 ± 0.23 | 0.33–0.67 | <0.05 |

* Correlation coefficients are presented as mean ± SD; ** p-values are the highest observed in each category.

Individual correlations between eye temperature and intravaginal temperature were higher when images were recorded on the side of the animal within a distance of 0.5 m (r = 0.8 ± 0.06 (mean ± SD); p < 0.01), than when images were obtained from the front. On the other hand, remote temperatures obtained from the ear base showed a moderate correlation with intravaginal temperature (r = 0.54 ± 0.15 (mean ± SD); p > 0.05) when comparing the temperature averages obtained during each handling period.

The absolute differences between ‘invasive’ and remote temperatures were analysed per individual animal. Eye temperature from front images was identified to be on average 2.4 ± 0.8 °C (mean ± SD) lower than the intravaginal temperature, while eye temperature extracted from images recorded on the side of animals was on average 1.1 ± 0.8 °C (mean ± SD) lower than intravaginal temperature. In addition, ear-base temperature was on average 3.1 ± 0.7 °C (mean ± SD) lower than intravaginal temperature.

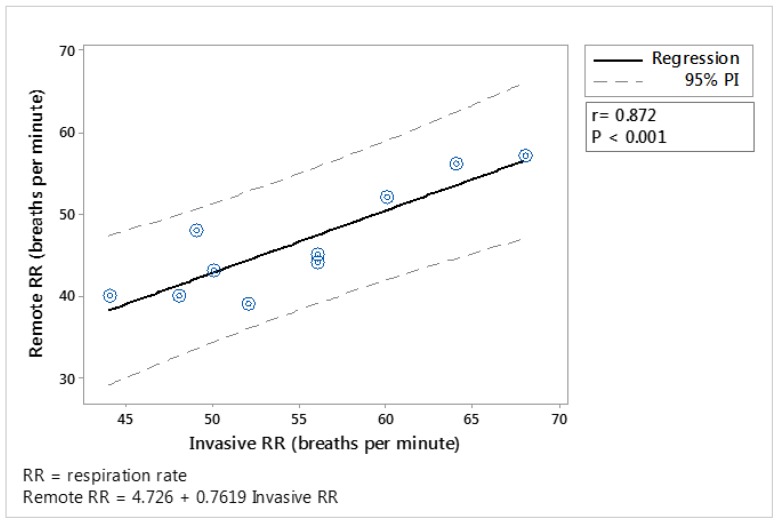

In the case of RR assessment, the ten observations obtained from each method (‘invasive’ and remote method) during the first time cows were restrained in the crush were compared through linear regression, and showed a high correlation (r = 0.87; p < 0.001; Figure 7). The RR parameters obtained from CV techniques were on average 8.4 ± 3.4 (mean ± SD) breaths per minute lower than the RR parameters obtained by visual observations.

Figure 7.

Regression analysis of the relationship between respiration rate (RR) obtained from visual observations (Invasive RR) and the respiration rate remotely obtained (remote RR). For the measurement of remote RR, the camera (FLIR® ONE) was located in front of the animal within a distance of 1.5 m, while the animal was in the first crush. The solid line shows the line of best fit, the dotted lines show the 95% and the equation and associated r and p-value are shown. Each point represents an average for the animal while in the crush. N = 10.

Following this analysis, the HR data were evaluated. Due to inconsistencies observed in some of the Polar monitors, the HR data obtained from two animals were not used in this study. The exclusion of these data was based on the large standard deviation (SD) observed. Hopster and Blokhuis [53] and Janzekovic et al. [50] reported SDs of 1.44–6.39 bpm and 6.27–13.92 bpm, respectively, for the HR of cows obtained from Polar® Sport Testers; therefore, it was decided that data would be excluded from the analysis when they displayed an SD over 20 bpm.
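The exclusion rule can be expressed as a small Python filter. This is a sketch under an assumption the text does not settle, namely that the SD is computed over each animal's full HR series; the function name `split_by_sd` and the data layout are hypothetical.

```python
from statistics import stdev
from typing import Dict, List, Tuple

MAX_SD_BPM = 20.0  # exclusion threshold stated in the text


def split_by_sd(
    hr_by_cow: Dict[str, List[float]]
) -> Tuple[Dict[str, List[float]], List[str]]:
    """Separate animals whose monitor data are kept from those excluded."""
    kept: Dict[str, List[float]] = {}
    excluded: List[str] = []
    for cow, series in hr_by_cow.items():
        # Sample SD over the animal's series (assumed granularity).
        if stdev(series) > MAX_SD_BPM:
            excluded.append(cow)
        else:
            kept[cow] = series
    return kept, excluded
```

Only the series in `kept` would then enter the correlation analyses; each series needs at least two readings for the sample SD to be defined.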

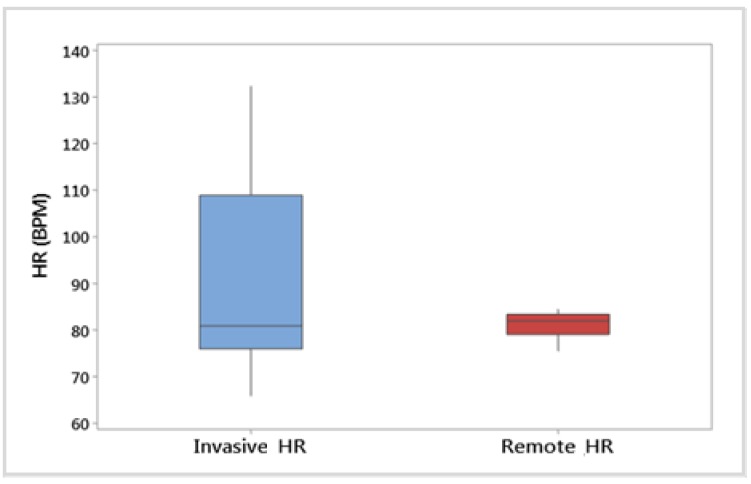

As Figure 8 shows, the HR obtained invasively had a higher variability than the remote measurements. However, similar medians were obtained from both methods (80.9 and 81.9, respectively).

Figure 8.

Data distribution for heart rate (HR, beats per minute, BPM) obtained from Polar HR monitors (Invasive HR) and from videos recorded with a Raspberry Pi camera, with the camera in the front within a distance of 1.5 m, while the animal was in the crush, and analysing the ‘eye area’ (Remote HR). Each data point was an average for the animal across all crush handling periods. N = 32.

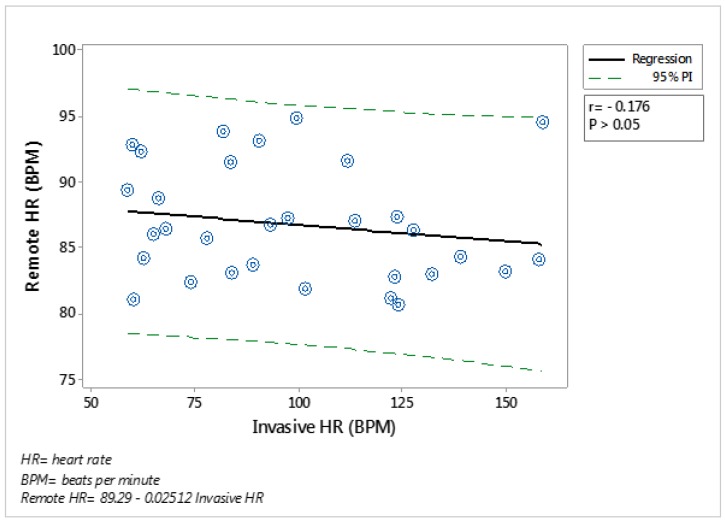

No correlations (p > 0.05) were found between heart rate recorded by the Polar HRM and analysed from the RGB cameras when the analysis was performed including all HR data obtained from the whole group (Figure 9).

Figure 9.

Regression analysis of the relationship between heart rate (HR) obtained from Polar HR monitors and from videos recorded with a Raspberry Pi camera located in front of the animal, within a distance of 1.5 m, while the animal was in the crush, and analysing the ‘eye area’. The solid line shows the line of best fit, the dotted lines show the 95% and the equation and associated r and p-value are shown. Each point represents an average for the group within each crush handling period. N = 32.

The correlation between methods was then compared within the animal, for the average for each handling period (Table 4), and within the animal, using the average heart rate for each minute across all handling periods (Table 5). The highest correlation was found when comparing the HR average obtained using both methods during each handling procedure (Table 4), in comparison to the analysis that compared the HR average obtained per minute recorded (Table 5). In addition, the highest positive correlations between HR obtained invasively and remotely were observed when ‘eye area’ was selected as the ROI during the processing of RGB videos obtained with Raspberry Pi and GoPro cameras (r = 0.75–0.89; Table 4, Table 5). The mean of the individual correlations was higher when RGB videos were recorded with Raspberry Pi Cameras located in front of the animal within a distance of 1.5 m (r = 0.89 ± 0.09 (mean ± SD); p < 0.05; Table 4). On the other hand, the lowest mean of individual correlations, which was not significant, was observed when the ‘face’ was selected as the ROI (r = 0.16 ± 0.2 (mean ± SD); p > 0.05; Table 4).

Table 4.

Pearson correlation coefficients (r) between heart rate measurements of individual animals obtained from Polar monitors and heart rate measured using computer vision techniques with different cameras (GoPro, Raspberry Pi), different areas on the animal (eye area, forehead, face) and cameras located at different distances from the animal (1.5 m, 0.5 m). Data are the average for the animal within each period of handling (six handling periods per animal).

| Invasive Method | Computer-Vision Camera | Camera Position | Camera Distance | Analysed Area | Mean Correlation Coefficient (r) * | Range (r) | p-Value ** |
|---|---|---|---|---|---|---|---|
| Polar monitor | RaspberryPi | Front | 1.5 m | Eye area | 0.89 ± 0.09 | 0.72–0.99 | <0.05 |
| Polar monitor | RaspberryPi | Front | 1.5 m | Forehead | 0.62 ± 0.23 | 0.32–0.90 | <0.05 |
| Polar monitor | RaspberryPi | Front | 1.5 m | Face | 0.16 ± 0.2 | −0.11–0.39 | >0.05 |
| Polar monitor | RaspberryPi | Side | 0.5 m | Eye area | 0.78 ± 0.04 | 0.74–0.84 | <0.01 |
| Polar monitor | RaspberryPi | Side | 0.5 m | Forehead | 0.71 ± 0.18 | 0.53–0.89 | <0.05 |
| Polar monitor | GoPro | Side | 0.5 m | Eye area | 0.75 ± 0.14 | 0.55–0.92 | <0.05 |
| Polar monitor | GoPro | Front | 0.5 m | Forehead | 0.65 ± 0.08 | 0.58–0.76 | <0.05 |

* Correlation coefficients are presented as mean ± SD; ** p-values are the highest observed in each category.
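The per-animal summary reported in this kind of table (mean ± SD and range of individual correlation coefficients) can be illustrated with a minimal sketch. The function names, the animal identifiers and the heart rate values below are hypothetical and are not taken from the study; p-values are left out because they would need an additional statistics library.

```python
import math
from statistics import mean, stdev


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    # Note: a constant sequence gives zero variance and r is undefined.
    return sxy / math.sqrt(sxx * syy)


def summarise_per_animal(invasive, remote):
    """Compute r within each animal, then summarise across animals.

    invasive, remote: dict mapping animal id -> list of period means (BPM).
    """
    rs = [pearson_r(invasive[a], remote[a]) for a in invasive]
    return {
        "mean_r": mean(rs),
        "sd_r": stdev(rs),
        "min_r": min(rs),
        "max_r": max(rs),
    }


# Hypothetical values for illustration only (six handling periods per animal).
invasive = {
    "cow_a": [70, 75, 80, 85, 90, 95],
    "cow_b": [66, 72, 70, 88, 84, 91],
}
remote = {
    "cow_a": [78, 80, 83, 85, 88, 90],
    "cow_b": [80, 82, 79, 90, 86, 92],
}
summary = summarise_per_animal(invasive, remote)
print(summary)
```

Computing the coefficient within each animal before averaging keeps between-animal offsets from masking the within-animal relationship, which is the distinction the text draws between the pooled and per-animal analyses.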

Table 5.

Pearson correlation coefficients (r) between heart rate measurements of individual animals obtained from Polar monitors and heart rate measured using computer vision techniques with different cameras (GoPro, Raspberry Pi), different areas on the animal (eye area, forehead, face) and cameras located at different distances from the animal (1.5 m, 0.5 m). The data used were the average over one minute, within all the handling periods (milking and crush).

| Invasive Method | Computer-Vision Camera | Camera Position | Camera Distance | Analysed Area | Mean Correlation Coefficient (r) * | Range (r) | p-Value ** |
|---|---|---|---|---|---|---|---|
| Polar monitor | RaspberryPi | Front | 1.5 m | Eye area | 0.83 ± 0.15 | 0.55–0.99 | <0.05 |
| Polar monitor | RaspberryPi | Front | 1.5 m | Forehead | 0.78 ± 0.19 | 0.41–0.99 | <0.05 |
| Polar monitor | RaspberryPi | Front | 1.5 m | Face | 0.2 ± 0.23 | −0.1–0.4 | >0.05 |
| Polar monitor | RaspberryPi | Side | 0.5 m | Eye area | 0.75 ± 0.19 | 0.46–0.96 | <0.01 |
| Polar monitor | RaspberryPi | Side | 0.5 m | Forehead | 0.77 ± 0.20 | 0.39–0.98 | <0.05 |
| Polar monitor | GoPro | Side | 0.5 m | Eye area | 0.77 ± 0.11 | 0.61–0.87 | <0.01 |
| Polar monitor | GoPro | Front | 0.5 m | Forehead | 0.79 ± 0.18 | 0.50–0.99 | <0.05 |

* Correlation coefficients are presented as mean ± SD; ** p-values are the highest observed in each category.

4. Discussion

Although changes in physiological parameters have been widely used to detect stress and illness in animals, their assessment still relies on some invasive methods that can elevate stress and pain, affecting both the results and, probably, animal wellbeing, as well as being time-consuming and labour-intensive. Computer vision techniques could assist animal monitoring and provide valuable information for animal wellbeing assessment. In this study, the computer algorithms developed were evaluated for tracking specific features of cows in RGB videos and for measuring surface temperature, RR and HR in cattle.

Animal recognition and tracking is an area of investigation that has become relevant due to its contribution to the development of automatic animal monitoring systems [54,55]. This study developed and implemented a feature tracking algorithm in order to improve image processing. This algorithm showed high accuracy when tracking three different areas of the face of the cow (92%–95%). Its accuracy was similar to that of the method that Taheri and Toygar [56] proposed to detect and classify animal faces from images (95.31%). However, their method was not applied to moving images. Furthermore, the developed algorithm showed a higher accuracy than that reported by Jaddoa et al. [57], who tracked the eyes of cattle from TIR images (68%–90% effectiveness), and was similar to the average accuracy shown by Magee [58], who classified cows from videos by the marks on their bodies (97% average accuracy).

The temperatures obtained invasively and remotely during this study were compared in order to evaluate the performance of thermal imagery to detect temperature changes in cattle. Following what was observed from the HR analysis of the current study and the observations reported by Hoffmann et al. [6], the comparison between methods was carried out with the data obtained from the whole group, as well as with each cow’s individual temperatures. The current study shows a better performance from the measurements of eye temperature than from ear-base temperature, which is supported by several studies that have used eye temperature of animals with positive outcomes [11,59,60]. The correlation coefficients obtained in this study when comparing vaginal and eye temperatures (r = 0.64–0.80) are higher than the correlation coefficients reported by George et al. [11] (r = 0.52) and Martello et al. [61] (r = 0.43) when eye temperature was compared with vaginal and rectal temperature of cattle, respectively. However, ear-base temperature may be a useful measure when the images are obtained from above and the eye area is not visible [62]. This has been observed in some studies, where ear-base temperature appeared to be a useful measure when assessing changes of temperature in pigs [19,47].

The correlation between ‘invasive’ and remote temperature measurement was higher when the analysis was run per individual. This can be related to individual effects, including the individual changes and the level of reactivity to stimuli of each animal, mentioned by Marchant-Forde et al. [63] and Hoffmann et al. [6]. In addition, it is hypothesized that the higher correlation observed when the analysis was performed with the mean temperature obtained within a handling period is related to a lower sensitivity to temperature changes of the vaginal loggers compared to the sensitivity of thermal imagery. Related to this, George et al. [11] argued that the relationship between temperatures obtained from vaginal loggers and TIR images could be affected by the sampling frequency and sensitivity of the techniques. In the case of the current study, the difference in sampling frequency between the temperature loggers (one recording every minute) and the thermal cameras (one image every second) could have had an impact on the correlations obtained. In addition, Stewart et al. [64] claimed that TIR imagery is very sensitive, detecting photons that are emitted in substantial amounts even when there are small changes of temperature in animals, which would explain why remotely obtained temperatures seemed to be more sensitive to changes than the temperatures obtained by intravaginal loggers. Furthermore, some researchers have observed that, in contrast to intravaginal or rectal temperature, eye temperature appears to decrease immediately after a stressful situation, which is followed later by an increase [59]. Although this decrease was not observed in this study, it could have occurred when animals were being moved to the holding yard just before the crush procedure. This initial decrease and other variabilities of surface temperature can also be due to the natural thermoregulatory mechanism of animals, which can modify the flow of blood through the skin as an adaptive response to changes in ambient temperature, illness and stress, among others [65,66].
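The sampling-frequency mismatch discussed above (one logger reading per minute against one thermal image per second) can be handled before correlation by averaging the faster series within each minute. The following is a minimal sketch of that alignment step; the function names and the example readings are hypothetical, and it is not a description of the processing used in this study.

```python
from collections import defaultdict
from statistics import mean


def minute_means(samples):
    """Average per-second readings within each minute.

    samples: iterable of (seconds_since_start, temperature) pairs.
    Returns a dict mapping minute index -> mean temperature in that minute.
    """
    buckets = defaultdict(list)
    for t, temp in samples:
        buckets[int(t // 60)].append(temp)
    return {m: mean(v) for m, v in sorted(buckets.items())}


def align(thermal_samples, logger_by_minute):
    """Pair per-minute thermal means with logger readings.

    Only minutes present in both series are kept, so gaps in either
    device do not produce mismatched pairs.
    Returns a list of (minute, logger_temp, thermal_mean) tuples.
    """
    thermal = minute_means(thermal_samples)
    shared = sorted(set(thermal) & set(logger_by_minute))
    return [(m, logger_by_minute[m], thermal[m]) for m in shared]


# Hypothetical readings for illustration only.
thermal = [(s, 37.0 + 0.01 * (s // 60)) for s in range(180)]  # 1 Hz, 3 min
logger = {0: 38.5, 1: 38.6, 3: 38.7}  # minute 2 missing, minute 3 unmatched
pairs = align(thermal, logger)
print(pairs)
```

Averaging within the minute reduces the faster series to the logger's resolution, at the cost of smoothing out short-lived surface temperature changes that the thermal camera may capture and the logger may not.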

Furthermore, the results from this study show that a shorter distance between the animal and the thermal cameras results in a more accurate assessment of eye temperature. Apart from the correlation between vaginal loggers and TIR imagery being higher when thermal cameras are located on the side of the animal within 0.5 m, the difference between these measurements is lower than when the images are recorded within 1.5 m (1.1 ± 0.8 and 2.4 ± 0.8, respectively). Differences in performance depending on the distance from the subject and on camera location have also been observed by Church et al. [60] and Jiao et al. [67], who pointed out the importance of a correct and constant camera location to obtain consistent and accurate measurements from TIR images. Similar to the current results, Church et al. [60] observed a better performance of TIR imagery when the eye-camera distance was up to 1 m.

Due to the effect that the external conditions and the physiological status of animals have on the temperatures obtained from TIR images, it is important to consider that variations can be observed in the relationship between core body temperature and surface temperature.

As RR is another relevant parameter for animal monitoring, research is being carried out in order to develop accurate and practical methods to assess it. This study showed positive results that could open the opportunity for further research and implementation of TIR imagery as a remote technique to assess RR of animals. Researchers such as Stewart et al. [31] have also investigated the potential of TIR imagery to measure RR in cattle. They used radiometric IR videos to observe the changes around cows’ nostrils and counted RR manually, whereas this study used non-radiometric IR videos and a purpose-built algorithm to count cows’ RR semi-automatically; nevertheless, both studies observed good agreement between the proposed methods and visual observation. In addition, both studies showed similar differences between the RR means obtained from the compared methods (2.4–8.4 and 8.4 ± 3.4 breaths per minute, respectively). However, Stewart et al. [31] reported that respiration rates from IR images were overestimated when compared to the RR obtained from visual observations, while the present study showed that the respiration rates from IR images were underestimated. These results suggest a promising opportunity to continue the research focused on the improvement of CV techniques based on TIR to automatically assess RR in animals.
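To make the idea of counting breaths from an IR video more concrete, the sketch below estimates RR from a one-dimensional signal, assumed here to be the mean pixel intensity of a nostril region in each frame. This is a generic smoothing and level-crossing approach chosen for illustration; it is not the algorithm developed in this study, and the function names, the smoothing window and the synthetic signal are assumptions.

```python
import math


def moving_average(signal, window):
    """Centered moving average; the window shrinks at the edges."""
    half = window // 2
    out = []
    for i in range(len(signal)):
        lo, hi = max(0, i - half), min(len(signal), i + half + 1)
        out.append(sum(signal[lo:hi]) / (hi - lo))
    return out


def breaths_per_minute(signal, fps, smooth_seconds=0.5):
    """Estimate respiration rate from a per-frame intensity signal.

    Each upward crossing of the signal's mean level is counted as one
    breath cycle. smooth_seconds is an assumed smoothing span that
    suppresses frame-to-frame noise before counting.
    """
    if not signal:
        raise ValueError("signal is empty")
    window = max(1, int(smooth_seconds * fps))
    s = moving_average(signal, window)
    level = sum(s) / len(s)
    crossings = sum(1 for a, b in zip(s, s[1:]) if a < level <= b)
    duration_min = len(signal) / fps / 60.0
    return crossings / duration_min


# Synthetic 60 s signal at 10 frames per second with a 0.5 Hz oscillation
# (30 cycles per minute), for illustration only.
fps = 10
signal = [-math.cos(2 * math.pi * 0.5 * (i / fps)) for i in range(60 * fps)]
rr = breaths_per_minute(signal, fps)
print(round(rr, 1))
```

A level-crossing count is sensitive to animal motion and to drift in the region intensity, so a practical version would need the region to be tracked across frames and the signal to be detrended first.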

The evaluation of HR parameters showed a larger variability in the measurements obtained from the Polar monitors (range: 66–132 bpm) than in the HR obtained remotely (range: 75–102 bpm). These variabilities were similar to what has been observed in other studies. For instance, Janzekovic et al. [50] observed a range of 60–115 bpm from Polar monitors that measured HR of cows during several milking periods. In addition, Wenzel et al. [49] observed a range of 70–103 bpm when measuring HR in dairy cows before and during milking. However, this difference in variability between remotely and invasively obtained HR differs from the results obtained by Balakrishnan et al. [68], who observed a similar distribution of the HR of humans when comparing the measurements obtained from ECG and RGB videos. The difference in variability between methods and the narrow range of HR obtained from the proposed CV technique could indicate that further research is needed to adjust this method to the specific characteristics of cattle.

As Janzekovic et al. [50] suggested, the changes in HR parameters observed in this study appeared to be greatly influenced by the individual response of the animal. This could indicate that measuring HR changes of individuals could be a more precise assessment than only measuring HR per se. Furthermore, as Hopster and Blokhuis [53] identified, the performance of Polar monitors appeared to differ. Taking this into consideration, the comparison between methods was carried out per animal. In this analysis, the highest mean correlation coefficient between the ‘invasive’ and remote HR measurements (r = 0.89 ± 0.09; mean ± SD) was slightly lower than the correlation observed by Takano and Ohta [9], who reported a correlation coefficient of 0.90 when comparing the human HR provided by pulse oximeters and the HR extracted by CV techniques that identified the change of brightness within the ROI (cheek). However, it was higher than the correlation reported by Cheng et al. [69] when evaluating computer algorithms to assess human HR from RGB videos (r = 0.53).

Some limitations were encountered during the evaluation of HR, which lead to recommendations for improvements in further research. Firstly, similar to what some authors have reported [25,69], the light conditions and excessive animal motion could have had an impact on the current results. Moreover, as the proposed method involves the detection of brightness changes within the pixels of the ROI, it is suspected that the variation in colour in the faces of cows could lead to artefacts in the HR assessment. Considering this, further research is suggested to adjust this method to the different colours and combinations of colours present in cows. Secondly, Polar monitors have shown some inconsistency in their performance when used on cattle, which led to some noise in their HR measurements. Similar issues were reported by other researchers, who mentioned that monitor performance was affected by electrode position and animal movement [53,63]. Marchant-Forde et al. [63] also found a delay in the HR changes observed in the monitor data, compared to the HR changes shown by the ECG, which had a slight effect on the correlation between methods. They also observed that the correlation between methods was greatly dependent on individual animal effects. All these factors could explain why the present study showed better correlations between Polar monitors and computer vision heart rates when the analysis was performed by handling period instead of by minute, and per individual animal instead of the whole group of animals.
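As an illustration of how a heart rate can be read from brightness changes within an ROI, the sketch below takes a series of mean ROI brightness values (one per frame), removes the mean, and searches a band of candidate rates for the one with the strongest periodic component. It is a generic periodogram approach, not the method implemented in this study; the function name, the 40–180 BPM search band and the synthetic signal are assumptions made for the example.

```python
import math


def dominant_bpm(brightness, fps, lo_bpm=40.0, hi_bpm=180.0, step=0.5):
    """Return the candidate rate (BPM) with the largest spectral power.

    brightness: mean ROI brightness per frame.
    fps: video frame rate in frames per second.
    lo_bpm, hi_bpm, step: assumed search band and resolution.
    """
    n = len(brightness)
    if n == 0:
        raise ValueError("brightness is empty")
    avg = sum(brightness) / n
    x = [b - avg for b in brightness]  # remove the constant level

    best_bpm, best_power = lo_bpm, -1.0
    steps = int(round((hi_bpm - lo_bpm) / step))
    for k in range(steps + 1):
        bpm = lo_bpm + k * step
        w = 2.0 * math.pi * (bpm / 60.0) / fps  # radians per frame
        # Project the signal onto a cosine and a sine at this frequency.
        c = sum(v * math.cos(w * i) for i, v in enumerate(x))
        s = sum(v * math.sin(w * i) for i, v in enumerate(x))
        power = c * c + s * s
        if power > best_power:
            best_bpm, best_power = bpm, power
    return best_bpm


# Synthetic 20 s brightness trace at 30 frames per second with a small
# oscillation at 84 cycles per minute, for illustration only.
fps = 30
trace = [120.0 + 0.5 * math.sin(2 * math.pi * (84 / 60.0) * (i / fps))
         for i in range(20 * fps)]
estimate = dominant_bpm(trace, fps)
print(estimate)
```

Because the estimate depends on very small brightness oscillations, changes in lighting, animal motion and coat colour within the ROI can all introduce competing components, which is consistent with the limitations noted above.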

A large number of studies in humans and some studies in animals have suggested the potential of computer vision techniques to perform several tasks that can contribute to human and animal monitoring. This study shows promising potential for computer vision techniques to monitor changes in physiological parameters of cattle and other animals, and encourages further research focused on improving these techniques to generate useful tools for the assessment of animal health and welfare.

5. Conclusions

This study involved the development and validation of computer vision techniques in order to evaluate the potential of remotely sensed data (TIR and RGB imagery) for assessing heart rate, eye and ear-base temperature, and respiration rate in cattle. Although some limitations were identified during the comparison of ‘invasive’ and remote methods, the methods evaluated showed the ability to detect changes in physiological parameters in individual animals. These techniques could lead to the development of useful methods to continuously monitor farm animals and flag any relevant physiological changes in them. Nevertheless, more research is needed to investigate the feasibility of implementing these methods on a larger scale and to decrease the impact that environmental and animal factors have on these measurements.

Acknowledgments

This research was supported by the Australian Government through the Australian Research Council (Grant number IH120100053). Maria Jorquera-Chavez acknowledges the support from the scholarship funded by CONICYT PFCHA/Beca de Doctorado en el Extranjero Becas Chile/2016 – 72170291.

Author Contributions

Conceptualization, F.R.D., E.C.J. and M.J.-C.; methodology, M.J.-C., E.C.J., S.F., F.R.D., R.D.W. and T.P.; software, S.F. and T.P.; formal analysis, M.J.-C.; investigation, M.J.-C.; resources, F.R.D.; data curation, M.J.-C.; writing – original draft preparation, M.J.-C.; writing – review and editing, M.J.-C., E.C.J., S.F., F.R.D. and R.D.W.; supervision, S.F., E.C.J., F.R.D. and R.D.W.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1. Tullo E., Finzi A., Guarino M. Environmental impact of livestock farming and precision livestock farming as a mitigation strategy. Sci. Total Environ. 2019;650:2751–2760. doi: 10.1016/j.scitotenv.2018.10.018.

- 2. Norton T., Berckmans D. Engineering advances in precision livestock farming. Biosyst. Eng. 2018;173:1–3. doi: 10.1016/j.biosystemseng.2018.09.008.

- 3. Matthews S.G., Miller A.L., Plötz T., Kyriazakis I. Automated tracking to measure behavioural changes in pigs for health and welfare monitoring. Sci. Rep. 2017;7:17582. doi: 10.1038/s41598-017-17451-6.

- 4. Tscharke M., Banhazi T.M. A brief review of the application of machine vision in livestock behaviour analysis. Agrárinformatika/J. Agric. Informa. 2016;7:23–42.

- 5. Barbosa Pereira C., Kunczik J., Zieglowski L., Tolba R., Abdelrahman A., Zechner D., Vollmar B., Janssen H., Thum T., Czaplik M. Remote welfare monitoring of rodents using thermal imaging. Sensors. 2018;18:3653. doi: 10.3390/s18113653.

- 6. Hoffmann G., Schmidt M., Ammon C., Rose-Meierhöfer S., Burfeind O., Heuwieser W., Berg W. Monitoring the body temperature of cows and calves using video recordings from an infrared thermography camera. Vet. Res. Commun. 2013;37:91–99. doi: 10.1007/s11259-012-9549-3.

- 7. Van Hertem T., Lague S., Rooijakkers L., Vranken E. Towards a sustainable meat production with precision livestock farming; Proceedings of the Food System Dynamics; Innsbruck-Igls, Austria. 15–19 February 2016; pp. 357–362.

- 8. Vainer B.G. A novel high-resolution method for the respiration rate and breathing waveforms remote monitoring. Ann. Biomed. Eng. 2018;46:960–971. doi: 10.1007/s10439-018-2018-6.

- 9. Takano C., Ohta Y. Heart rate measurement based on a time-lapse image. Med. Eng. Phys. 2007;29:853–857. doi: 10.1016/j.medengphy.2006.09.006.

- 10. Strutzke S., Fiske D., Hoffmann G., Ammon C., Heuwieser W., Amon T. Development of a noninvasive respiration rate sensor for cattle. J. Dairy Sci. 2019;102:690–695. doi: 10.3168/jds.2018-14999.

- 11. George W., Godfrey R., Ketring R., Vinson M., Willard S. Relationship among eye and muzzle temperatures measured using digital infrared thermal imaging and vaginal and rectal temperatures in hair sheep and cattle. J. Anim. Sci. 2014;92:4949–4955. doi: 10.2527/jas.2014-8087.

- 12. Andrade O., Orihuela A., Solano J., Galina C. Some effects of repeated handling and the use of a mask on stress responses in zebu cattle during restraint. Appl. Anim. Behav. Sci. 2001;71:175–181. doi: 10.1016/S0168-1591(00)00177-5.

- 13. Selevan J. Method and Apparatus for Determining Heart Rate. Application No. 10/859,789. U.S. Patent. 2004 Jun 3.

- 14. Vermeulen L., Van de Perre V., Permentier L., De Bie S., Geers R. Pre-slaughter rectal temperature as an indicator of pork meat quality. Meat Sci. 2015;105:53–56. doi: 10.1016/j.meatsci.2015.03.007.

- 15. Neethirajan S. Recent advances in wearable sensors for animal health management. Sens. Bio-Sens. Res. 2017;12:15–29. doi: 10.1016/j.sbsr.2016.11.004.

- 16. Pastell M., Kaihilahti J., Aisla A.-M., Hautala M., Poikalainen V., Ahokas J. A system for contact-free measurement of respiration rate of dairy cows. Precis. Livest. Farm. 2007;7:105–109.

- 17. Zhao F., Li M., Qian Y., Tsien J.Z. Remote measurements of heart and respiration rates for telemedicine. PLoS ONE. 2013;8:e71384. doi: 10.1371/journal.pone.0071384.

- 18. Rocha L.M. Ph.D. Thesis. Université Laval; Québec, QC, Canada: 2016. Validation of Stress Indicators for the Assessment of Animal Welfare and Prediction of Pork Meat Quality Variation at Commercial level.

- 19. Soerensen D.D., Pedersen L.J. Infrared skin temperature measurements for monitoring health in pigs: A review. Acta Vet. Scand. 2015;57:5. doi: 10.1186/s13028-015-0094-2.

- 20. Alsaaod M., Schaefer A., Büscher W., Steiner A. The role of infrared thermography as a non-invasive tool for the detection of lameness in cattle. Sensors. 2015;15:14513–14525. doi: 10.3390/s150614513.

- 21. Janžekovič M., Muršec B., Janžekovič I. Techniques of measuring heart rate in cattle. Tehnički Vjesnik. 2006;13:31–37.

- 22. Jukan A., Masip-Bruin X., Amla N. Smart computing and sensing technologies for animal welfare: A systematic review. ACM Comput. Surv. (CSUR) 2017;50:10. doi: 10.1145/3041960.

- 23. Irani R., Nasrollahi K., Moeslund T.B. Improved pulse detection from head motions using dct; Proceedings of the 2014 International Conference on Computer Vision Theory and Applications (VISAPP); Lisbon, Portugal. 5–8 January 2014; Piscataway, NJ, USA: IEEE; 2014. pp. 118–124.

- 24. Wei L., Tian Y., Wang Y., Ebrahimi T., Huang T. Automatic webcam-based human heart rate measurements using laplacian eigenmap; Proceedings of the Asian Conference on Computer Vision; Daejeon, Korea. 5–9 November 2012; Berlin, Germany: Springer; 2012. pp. 281–292.

- 25. Sikdar A., Behera S.K., Dogra D.P. Computer-vision-guided human pulse rate estimation: A review. IEEE Rev. Biomed. Eng. 2016;9:91–105. doi: 10.1109/RBME.2016.2551778.

- 26. Viejo C.G., Fuentes S., Torrico D.D., Dunshea F.R. Non-contact heart rate and blood pressure estimations from video analysis and machine learning modelling applied to food sensory responses: A case study for chocolate. Sensors. 2018;18:1802. doi: 10.3390/s18061802.

- 27. Poh M.-Z., McDuff D.J., Picard R.W. Non-contact, automated cardiac pulse measurements using video imaging and blind source separation. Opt. Express. 2010;18:10762–10774. doi: 10.1364/OE.18.010762.

- 28. Li X., Chen J., Zhao G., Pietikainen M. Remote heart rate measurement from face videos under realistic situations; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Columbus, OH, USA. 23–28 June 2014; Piscataway, NJ, USA: IEEE; 2014. pp. 4264–4271.

- 29. Wang W., Stuijk S., De Haan G. Exploiting spatial redundancy of image sensor for motion robust rppg. IEEE Trans. Biomed. Eng. 2015;62:415–425. doi: 10.1109/TBME.2014.2356291.

- 30. Barbosa Pereira C., Czaplik M., Blazek V., Leonhardt S., Teichmann D. Monitoring of cardiorespiratory signals using thermal imaging: A pilot study on healthy human subjects. Sensors. 2018;18:1541. doi: 10.3390/s18051541.

- 31. Stewart M., Wilson M., Schaefer A., Huddart F., Sutherland M. The use of infrared thermography and accelerometers for remote monitoring of dairy cow health and welfare. J. Dairy Sci. 2017;100:3893–3901. doi: 10.3168/jds.2016-12055.

- 32. Bernard V., Staffa E., Mornstein V., Bourek A. Infrared camera assessment of skin surface temperature–effect of emissivity. Phys. Med. 2013;29:583–591. doi: 10.1016/j.ejmp.2012.09.003.

- 33. Hadžić V., Širok B., Malneršič A., Čoh M. Can infrared thermography be used to monitor fatigue during exercise? A case study. J. Sport Health Sci. 2019;8:89–92. doi: 10.1016/j.jshs.2015.08.002.

- 34. Litscher G., Ofner M., Litscher D. Manual khalifa therapy in patients with completely ruptured anterior cruciate ligament in the knee: First preliminary results from thermal imaging. N. Am. J. Med. Sci. 2013;5:473–479. doi: 10.4103/1947-2714.117307.

- 35. Frize M., Adéa C., Payeur P., Gina Di Primio M., Karsh J., Ogungbemile A. Detection of Rheumatoid Arthritis Using Infrared Imaging. In: Dawant B.M., Haynor D.R., editors. Medical Imaging 2011: Image Processing. International Society for Optics and Photonics; Bellingham, WA, USA: 2011. p. 79620M.

- 36. Metzner M., Sauter-Louis C., Seemueller A., Petzl W., Klee W. Infrared thermography of the udder surface of dairy cattle: Characteristics, methods, and correlation with rectal temperature. Vet. J. 2014;199:57–62. doi: 10.1016/j.tvjl.2013.10.030.

- 37. Shi J., Tomasi C. Good features to track; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Seattle, WA, USA. 21–23 June 1994; Piscataway, NJ, USA: IEEE; 1994. pp. 593–600.

- 38. Bay H., Tuytelaars T., Van Gool L. Surf: Speeded up robust features; Proceedings of the European Conference on Computer Vision; Graz, Austria. 7–13 May 2006; Berlin, Germany: Springer; 2006. pp. 404–417.

- 39. Leutenegger S., Chli M., Siegwart R. Brisk: Binary robust invariant scalable keypoints; Proceedings of the 2011 IEEE International Conference on Computer Vision (ICCV); Barcelona, Spain. 6–13 November 2011; Piscataway, NJ, USA: IEEE; 2011. pp. 2548–2555.

- 40. Rosten E., Drummond T. Fusing points and lines for high performance tracking; Proceedings of the Tenth IEEE International Conference on Computer Vision (ICCV); Beijing, China. 17–21 October 2005; Piscataway, NJ, USA: IEEE; 2005. pp. 1508–1515.

- 41. Harris C.G., Stephens M. A combined corner and edge detector; Proceedings of the Alvey Vision Conference; Manchester, UK. 1 August–2 September 1988.

- 42. Dalal N., Triggs B. Histograms of oriented gradients for human detection; Proceedings of the International Conference on Computer Vision & Pattern Recognition (CVPR’05); San Diego, CA, USA. 20–25 June 2005; pp. 886–893.

- 43. Viola P., Jones M. Rapid object detection using a boosted cascade of simple features; Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’01); Kauai, HI, USA. 8–14 December 2001.

- 44. Kim T., Kim S. Pedestrian detection at night time in fir domain: Comprehensive study about temperature and brightness and new benchmark. Pattern Recognit. 2018;79:44–54. doi: 10.1016/j.patcog.2018.01.029.

- 45. Rashid T., Khawaja H.A., Edvardsen K. Measuring thickness of marine ice using ir thermography. Cold Reg. Sci. Technol. 2019;158:221–229. doi: 10.1016/j.coldregions.2018.08.025.

- 46. Schaefer A.L., Cook N., Tessaro S.V., Deregt D., Desroches G., Dubeski P.L., Tong A.K.W., Godson D.L. Early detection and prediction of infection using infrared thermography. Can. J. Anim. Sci. 2004;84:73–80. doi: 10.4141/A02-104.

- 47. Schmidt M., Lahrmann K.-H., Ammon C., Berg W., Schön P., Hoffmann G. Assessment of body temperature in sows by two infrared thermography methods at various body surface locations. J. Swine Health Prod. 2013;21:203–209.

- 48. Burdick N., Carroll J., Dailey J., Randel R., Falkenberg S., Schmidt T. Development of a self-contained, indwelling vaginal temperature probe for use in cattle research. J. Therm. Biol. 2012;37:339–343. doi: 10.1016/j.jtherbio.2011.10.007.

- 49. Wenzel C., Schönreiter-Fischer S., Unshelm J. Studies on step–kick behavior and stress of cows during milking in an automatic milking system. Livest. Prod. Sci. 2003;83:237–246. doi: 10.1016/S0301-6226(03)00109-X.

- 50. Janzekovic M., Stajnko D., Brus M., Vindis P. In: Advanced Knowledge Application in Practice. Fuerstner I., editor. Sciyo; Rijeka, Croatia: 2010. pp. 157–172. Chapter 9.

- 51. Miwa M., Oishi K., Nakagawa Y., Maeno H., Anzai H., Kumagai H., Okano K., Tobioka H., Hirooka H. Application of overall dynamic body acceleration as a proxy for estimating the energy expenditure of grazing farm animals: Relationship with heart rate. PLoS ONE. 2015;10:e0128042. doi: 10.1371/journal.pone.0128042.

- 52. Bourguet C., Deiss V., Gobert M., Durand D., Boissy A., Terlouw E.C. Characterising the emotional reactivity of cows to understand and predict their stress reactions to the slaughter procedure. Appl. Anim. Behav. Sci. 2010;125:9–21. doi: 10.1016/j.applanim.2010.03.008.

- 53. Hopster H., Blokhuis H.J. Validation of a heart-rate monitor for measuring a stress response in dairy cows. Can. J. Anim. Sci. 1994;74:465–474. doi: 10.4141/cjas94-066.

- 54. Guo Y.-Z., Zhu W.-X., Jiao P.-P., Ma C.-H., Yang J.-J. Multi-object extraction from topview group-housed pig images based on adaptive partitioning and multilevel thresholding segmentation. Biosyst. Eng. 2015;135:54–60. doi: 10.1016/j.biosystemseng.2015.05.001.

- 55. Burghardt T., Calic J. Real-time face detection and tracking of animals; Proceedings of the 2006 8th Seminar on Neural Network Applications in Electrical Engineering; Belgrade/Montenegro, Serbia. 25–27 September 2006; Piscataway, NJ, USA: IEEE; 2006. pp. 27–32.

- 56. Taheri S., Toygar Ö. Animal classification using facial images with score-level fusion. IET Comput. Vis. 2018;12:679–685. doi: 10.1049/iet-cvi.2017.0079.

- 57. Jaddoa M.A., Al-Jumaily A., Gonzalez L., Cuthbertson H. Automatic eyes localization in thermal images for temperature measurement in cattle; Proceedings of the 2017 12th International Conference on Intelligent Systems and Knowledge Engineering (ISKE); Nanjing, China. 24–26 November 2017; Piscataway, NJ, USA: IEEE; 2017. pp. 1–6.

- 58. Magee D.R. Ph.D. Thesis. The University of Leeds; Leeds, UK: 2001. Machine Vision Techniques for the Evaluation of Animal Behaviour.

- 59. Stewart M., Stafford K., Dowling S., Schaefer A., Webster J. Eye temperature and heart rate variability of calves disbudded with or without local anaesthetic. Physiol. Behav. 2008;93:789–797. doi: 10.1016/j.physbeh.2007.11.044.

- 60. Church J.S., Hegadoren P., Paetkau M., Miller C., Regev-Shoshani G., Schaefer A., Schwartzkopf-Genswein K. Influence of environmental factors on infrared eye temperature measurements in cattle. Res. Vet. Sci. 2014;96:220–226. doi: 10.1016/j.rvsc.2013.11.006.

- 61. Martello L.S., da Luz E Silva S., da Costa Gomes R., da Silva Corte R.R., Leme P.R. Infrared thermography as a tool to evaluate body surface temperature and its relationship with feed efficiency in bos indicus cattle in tropical conditions. Int. J. Biometeorol. 2016;60:173–181. doi: 10.1007/s00484-015-1015-9.

- 62. Tabuaciri P., Bunter K.L., Graser H.-U. Proceedings of the AGBU Pig Genetics Workshop, 24 October 2012. Animal Genetics and Breeding Unit, University of New England; Armidale, Australia: 2012. Thermal imaging as a potential tool for identifying piglets at risk; pp. 23–30.

- 63. Marchant-Forde R., Marlin D., Marchant-Forde J. Validation of a cardiac monitor for measuring heart rate variability in adult female pigs: Accuracy, artefacts and editing. Physiol. Behav. 2004;80:449–458. doi: 10.1016/j.physbeh.2003.09.007.

- 64. Stewart M., Webster J., Schaefer A., Cook N., Scott S. Infrared thermography as a non-invasive tool to study animal welfare. Anim. Welf. 2005;14:319–325.

- 65. Taylor N.A., Tipton M.J., Kenny G.P. Considerations for the measurement of core, skin and mean body temperatures. J. Therm. Biol. 2014;46:72–101. doi: 10.1016/j.jtherbio.2014.10.006.

- 66. Godyń D., Herbut P., Angrecka S. Measurements of peripheral and deep body temperature in cattle – A review. J. Therm. Biol. 2019;79:42–49. doi: 10.1016/j.jtherbio.2018.11.011.

- 67. Jiao L., Dong D., Zhao X., Han P. Compensation method for the influence of angle of view on animal temperature measurement using thermal imaging camera combined with depth image. J. Therm. Biol. 2016;62:15–19. doi: 10.1016/j.jtherbio.2016.07.021.

- 68. Balakrishnan G., Durand F., Guttag J. Detecting pulse from head motions in video; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Washington, DC, USA. 23–28 June 2013; pp. 3430–3437.

- 69. Cheng J., Chen X., Xu L., Wang Z.J. Illumination variation-resistant video-based heart rate measurement using joint blind source separation and ensemble empirical mode decomposition. IEEE J. Biomed. Health. 2017;21:1422–1433. doi: 10.1109/JBHI.2016.2615472.