Abstract

Next-generation sequencing (NGS) methods for cancer testing have been rapidly adopted by clinical laboratories. To establish analytical validation best practice guidelines for NGS gene panel testing of somatic variants, a working group was convened by the Association for Molecular Pathology with liaison representation from the College of American Pathologists. These joint consensus recommendations address NGS test development, optimization, and validation. They include recommendations on panel content selection and the rationale for an optimization and familiarization phase conducted before test validation; utilization of reference cell lines and reference materials for evaluation of assay performance; determination of positive percentage agreement and positive predictive value for each variant type; and requirements for minimum depth of coverage and minimum number of samples that should be used to establish test performance characteristics. The recommendations emphasize the role of the laboratory director in using an error-based approach that identifies potential sources of errors that may occur throughout the analytical process and addresses these potential errors through test design, method validation, or quality controls so that no harm comes to the patient. The recommendations contained herein are intended to assist clinical laboratories with the validation and ongoing monitoring of NGS testing for detection of somatic variants and to ensure high quality of sequencing results.

Next-generation sequencing (NGS) for the detection of somatic variants is being used in a variety of molecular oncology applications. These range from sequencing entire tumor genomes and transcriptomes for clinical research to targeted clinical diagnostic gene panels. This guideline focuses on targeted gene panels and their diagnostic use in solid tumors and hematological malignancies. The knowledge base of molecular alterations that initiate and drive tumor growth and metastasis continues to expand, and this has led to the development and clinical laboratory implementation of a wide variety of targeted gene panels. Individual gene panels may focus on solid tumors or on hematological malignancies. Alternatively, they may be technically designed to interrogate both, with interpretation focused on the tumor phenotype. The information generated by targeted gene panels can inform diagnostic classification, guide therapeutic decisions, and/or provide prognostic insights for a particular tumor. The number of genes included in panels can differ substantially between laboratories. Some laboratories include only core genes for which a substantial literature exists regarding their diagnostic, therapeutic, or prognostic relevance. Other panels comprise the core genes plus additional genes that are being investigated in clinical trials and/or for which evidence is still accruing. The analysis of genes in a panel may be restricted to mutational hotspots relevant to a therapeutic agent. Alternatively, it may be broader and include flanking regions or the entire gene sequence. When planning the development of a targeted gene panel, the laboratory needs to define its intended use. This includes the types of samples to be tested (eg, only primary tumor samples, or also post-therapy samples for monitoring residual disease) and the types of diagnostic information to be evaluated and reported.
These considerations, among others, will influence the design, validation, and quality control of the test. The Association for Molecular Pathology (AMP) convened a working group of subject matter experts, with liaison representation from the College of American Pathologists. The working group addressed the many issues pertaining to the analytical validation and ongoing quality monitoring of NGS testing for detection of somatic variants and to ensuring high quality of sequencing results. The resulting professional recommendations are described in detail in the following sections.

Overview of Targeted NGS for Oncology Specimens

General Considerations

NGS offers multiple approaches for investigation of the human genome, including sequencing of the whole genome, exome, and transcriptome. However, targeted panels are often more practical in the clinical setting for detection of clinically informative genetic alterations. They are currently the most frequently used type of NGS analysis for diagnostic somatic testing of solid tumors and hematological malignancies. Several issues need to be considered before introducing clinical NGS testing. Whether to choose a commercially available targeted NGS panel or to design one’s own depends on the clinical indication of the test and the genes to be tested. Germline (hereditary cancer) applications may necessitate different genes/panels than sporadic cancer applications. Likewise, solid tumor applications may necessitate different choices than hematological malignancies. Available pan-cancer panels are attractive because they permit batching of samples across multiple indications, with resultant savings in cost, labor, and turnaround time.

Targeted NGS panels can be designed to detect single-nucleotide variants (SNVs; also called point mutations), small insertions and deletions (indels), copy number alterations (CNAs), and structural variants (SVs), including gene fusions. Within a single panel, target sequences can be designed in one of two ways. They can cover hotspot regions of a single gene (eg, exons 9 and 20 of PIK3CA, exon 15 of BRAF, exons 18 to 21 of EGFR, or exons 12 and 14 of JAK2). Alternatively, they can cover the entire coding sequence and relevant noncoding sequence of a given gene (eg, KRAS, NRAS, or TP53) or SV. This design choice is important because it ultimately affects whether the panel can be used to detect CNAs as well as SNVs and small indels. SNVs are the most common mutation type in solid tumors and hematological malignancies [eg, KRAS p.Gly12 variants (eg, p.Gly12Asp), PIK3CA p.His1047Arg, EGFR p.Leu858Arg, and JAK2 p.Val617Phe]. Indels include nucleotide insertions, deletions, or both insertion and deletion events within close proximity. This can include the loss of one wild-type allele accompanied by duplication of a mutation-bearing allele, resulting in maintenance of overall copy number. Indels range in size from a single base pair (bp) to <1 kb, although most are only several bp to several dozen bp in length. These changes may be in-frame, resulting in the loss and/or gain of amino acids from the protein sequence (eg, EGFR exon 19 deletions). They may also be frameshift, altering the protein’s amino acid sequence downstream of the indel (eg, NPM1 p.Trp288 frameshift variants).
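The in-frame versus frameshift distinction above follows from codon arithmetic. An indel preserves the downstream reading frame only when its net length change is a multiple of 3. The Python sketch below makes the following assumptions:

- REF/ALT alleles are written VCF-style.
- The variant lies entirely within coding sequence.
- Splice-site effects and indels that span exon boundaries are out of scope.

The function name is hypothetical, and the alleles are toy sequences.

```python
def classify_coding_indel(ref: str, alt: str) -> str:
    """Classify a coding-sequence variant by its effect on the reading frame.

    Assumes REF/ALT alleles as written in a VCF record (simple insertions and
    deletions share an anchor base) and that the variant lies wholly within
    coding sequence. Complex insertion-deletion events are classified by
    their net length change.
    """
    net_change = len(alt) - len(ref)
    if net_change == 0:
        # Same length: SNV or multinucleotide substitution, frame unchanged.
        return "no net length change"
    # Python's % returns a non-negative result for negative operands,
    # so deletions (negative net_change) are handled correctly.
    return "in-frame" if net_change % 3 == 0 else "frameshift"


# A 15-bp deletion (the size of the common EGFR exon 19 deletion) keeps the
# frame; a 4-bp insertion (the size of common NPM1 insertions) shifts it.
print(classify_coding_indel("G" + "A" * 15, "G"))  # in-frame
print(classify_coding_indel("C", "CTCTG"))         # frameshift
```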

Another consideration in choosing or designing a gene panel is whether gene copy number will be assessed as part of the analysis. CNAs are structural changes resulting in gain or loss of genomic DNA in a chromosomal region. They are common in solid tumors and affect both tumor suppressor genes and oncogenes. One example is TP53, one of the most frequently mutated genes in cancer, in which mutations are often accompanied by loss of the remaining wild-type allele. Other examples include PTEN, CDKN2A, and RB1, losses of which may have clinical implications. Increased copy number can also be important, as in the case of ERBB2 (HER2) in breast and gastric cancers. Similarly, copy number gains in MET, RICTOR, MDM2, and other genes are of clinical interest. Algorithms for assessing copy number have been established for sequencing data derived from both hybridization capture–based and amplicon-based libraries. Regardless of the method, CNA assessment is influenced by the number of probes or amplicons covering the gene of interest. Copy number estimates from a single hotspot region in a gene are not as accurate as measurements averaged across probes or amplicons covering all exonic regions. The limit of detection (particularly for gene losses) is heavily dependent on the fraction of tumor cells present in the tested sample.

SVs include translocations and other chromosomal rearrangements. SVs have been identified in many types of human malignancies. They serve as important markers for cancer diagnosis, patient prognostication, and selection of targeted therapies (eg, RET/PTC fusions for diagnosis of papillary thyroid carcinoma, TMPRSS2/ERG to predict favorable outcome in prostate cancer, and EML4/ALK fusions for selection of targeted therapies in lung adenocarcinoma).
Two major approaches are used for detection of gene fusions in targeted oncology NGS panels. The first is sequencing of DNA using hybridization capture methods. The second is sequencing of RNA (as cDNA) using amplification-based methods.1,2 Most breakpoints occur in introns. Therefore, if DNA is used as the starting material, hybrid capture probes have to be designed either to span the whole gene, including intronic regions, or to capture those exons/introns that are most frequently involved in the fusion of interest. Another practical approach is to reverse transcribe RNA to cDNA and then amplify it with fusion-specific primers or enrich it by other approaches (eg, hybrid capture). Each of these methods is currently used in the clinical setting. Whichever method is chosen, the bioinformatics pipeline for fusion detection must be selected and validated appropriately.

Targeted NGS Method Overview

Overall, targeted NGS methods include four major components: sample preparation, library preparation, sequencing, and data analysis.3

Sample Preparation

The first step in clinical NGS analysis of a tumor is to assess the submitted sample. In the case of hematological specimens, tumor cell content may be inferred from separate analyses. For example, a white blood cell differential count from a peripheral blood sample, or flow cytometric data from a bone marrow aspirate, may establish the approximate fraction of tumor cells in the material used for nucleic acid extraction. Solid tumor samples, however, require microscopic review by an appropriately trained and certified pathologist before being accepted for NGS testing. This review ensures that the expected tumor type has been received and that there is sufficient nonnecrotic tumor for NGS analysis. Microscopic review can also be used to mark areas for macrodissection or microdissection (eg, using a dissecting microscope), thereby enriching the tumor fraction and increasing sensitivity for gene alterations. The tumor cell fraction should be estimated, because it is critical information when interpreting mutant allele frequencies and CNAs. However, estimation of tumor percentage based purely on review of hematoxylin and eosin–stained slides can be affected by many factors and is subject to significant interobserver variability.4 Non-neoplastic cells, such as inflammatory infiltrates and endothelial cells, are often smaller than the neoplastic cells and intimately associated with the tumor. They may therefore remain inconspicuous and lead to gross overestimation of tumor proportion. In cases with more abundant inflammation and necrosis, it is important to remain conservative in the estimates and to correlate them further with the sequencing results. In these cases, review of mutant allele fractions (including those of silent mutations) allows more precise estimates of tumor purity. It also allows more accurate results and more confident recommendations for further testing, if needed.
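Correlating mutant allele fractions with tumor purity, as described above, rests on a simple relationship. For a clonal, heterozygous somatic variant in a copy-neutral diploid region, the expected variant allele fraction is half the tumor cell fraction. The minimal sketch below applies that relationship under exactly those assumptions. Variants affected by CNAs or loss of heterozygosity, subclonal variants, and germline variants would violate it. Taking the median across variants is a hypothetical design choice, not a prescribed method.

```python
from statistics import median


def variant_allele_fraction(alt_reads: int, total_reads: int) -> float:
    """Fraction of reads at a position that support the variant allele."""
    if total_reads <= 0:
        raise ValueError("total_reads must be positive")
    return alt_reads / total_reads


def estimate_tumor_fraction(vafs: list[float]) -> float:
    """Estimate the tumor cell fraction from somatic variant allele fractions.

    Assumes each variant is clonal, heterozygous, and located in a copy-neutral
    diploid region, so that expected VAF = tumor_fraction / 2. Each variant
    therefore implies tumor_fraction = 2 * VAF (capped at 1.0). The median of
    these per-variant estimates dampens the effect of outliers.
    """
    if not vafs:
        raise ValueError("at least one VAF is required")
    return median([min(1.0, 2 * vaf) for vaf in vafs])
```

For example, suppose three somatic variants are supported by 40, 44, and 36 of 200 reads. The implied tumor fraction is about 40%, which can be compared with the pathologist’s morphologic estimate. A marked discrepancy would prompt review of the slide or the variant calls.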

Library Preparation

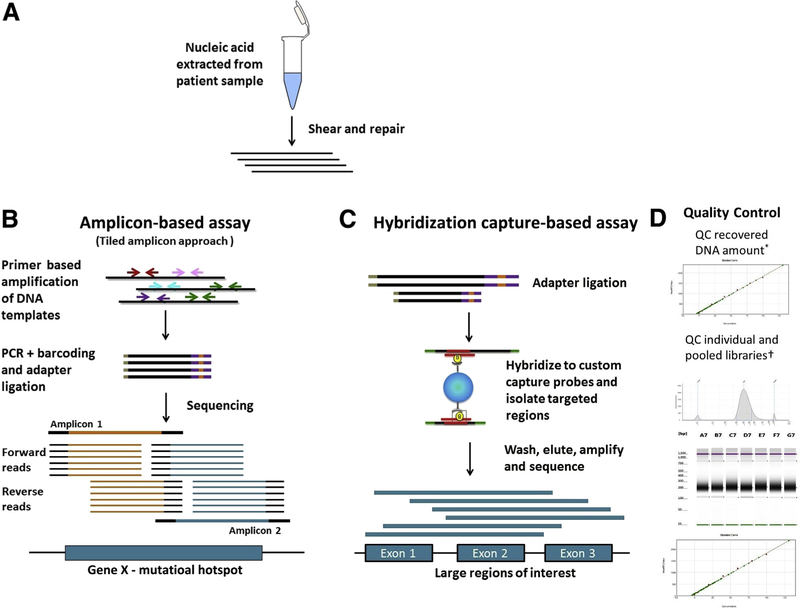

Library preparation is the process of generating DNA or cDNA fragments of a specific size range. Two major approaches are used for targeted NGS analysis of oncology specimens: hybrid capture–based and amplification-based approaches (Figure 1).

Figure 1.

High-level comparison of target enrichment workflows for amplicon and hybridization capture NGS assays. A: Nucleic acid is extracted and quantified. The DNA is sheared and repaired to generate fragments with a uniform size distribution, and the fragment size can be monitored by gel electrophoresis or an Agilent Bioanalyzer. B: Amplification-based assays: Target enrichment in amplification-based assays consists of PCR amplification of the desired region using primers. A tiled amplicon approach is depicted, in which primers are designed to generate multiple overlapping amplicons of the same region to avoid allele dropout. The sequencing reads generated will have the same start and stop coordinates, dictated by the primer design. C: Hybridization capture–based assays: Target enrichment in hybridization capture–based assays uses long biotinylated oligonucleotide probes complementary to a region of interest. Probes hybridize to target regions contained within larger fragments of DNA. As a result, regions flanking the target will also be isolated and sequenced. Targeted fragments are isolated using streptavidin magnetic beads, followed by washing, elution, amplification, and sequencing. The sequencing reads from these molecules will have unique start and stop coordinates when aligned to a reference, allowing identification and removal of PCR duplicates. D: Quality control: The size distribution of the individual and pooled libraries is assessed using an Agilent TapeStation, and the libraries are quantified using a Spectramax microplate reader. Example images visualized using an Agilent Bioanalyzer and a Spectramax microplate reader are shown. QC, quality control.

Hybrid capture NGS

Hybrid capture–based enrichment methods use sequence-specific capture probes that are complementary to specific regions of interest in the genome. The probes are solution-based, biotinylated oligonucleotides designed to hybridize to and capture the regions intended in the design. Capture probes are significantly longer than PCR primers. They can therefore tolerate several mismatches in the probe binding site without interfering with hybridization to the target region. This circumvents the allele dropout that can be observed in amplification-based assays. Because probes generally hybridize to target regions contained within much larger fragments of DNA, the regions flanking the target are also isolated and sequenced. Compared with amplicon-based assays, hybrid capture–based assays therefore enable interrogation of neighboring regions that may not be easily captured with specific probes. However, hybrid capture–based assays can also isolate neighboring regions that are not of interest. If this off-target sequencing is not appropriately balanced, it reduces overall coverage of the regions of interest. Also, in cases with rearrangements, the isolated neighboring regions may come from genomic areas far from the intended or predicted targets. Fragment sizes obtained by shearing and other fragmentation approaches strongly influence the outcome of the assay. Shorter fragments will be captured with higher specificity than longer fragments because they contain a lower proportion of off-target sequence. On the other hand, longer reads would be expected to map to the reference sequence with less ambiguity than shorter reads.

Examples of commercially available hybridization capture technologies include Agilent SureSelect (Agilent Technologies, Santa Clara, CA), NimbleGen (F. Hoffmann-La Roche Ltd, Basel, Switzerland), and Illumina TruSeq (Illumina, San Diego, CA). Custom panels can be developed to interrogate large regions of the genome (typically 50 to several thousand genes). Once the regions of interest are determined, the size of the target regions determines the number of probes required to capture each specific region. Probe density can be increased for regions that prove difficult to enrich. General sample preparation for hybridization capture enrichment begins with DNA shearing. Shearing is followed by several enzymatic steps (end repair, A-base addition, and ligation of sequencing adaptors), PCR amplification, and clean-up. Next, the NGS library is hybridized to the custom biotinylated oligonucleotide capture probes. Because of the random nature of shearing, the size and nucleotide content of the individual captured fragments will differ. As a result, the sequencing reads from the captured fragments will have unique start and stop coordinates once they are aligned to the reference sequence. This enables identification and removal of PCR duplicates from the data set, allowing a more accurate determination of depth of coverage and variant frequencies.
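Duplicate removal, as described above, relies on the fact that independent sheared fragments rarely share both alignment coordinates. The sketch below collapses aligned fragments that share chromosome, start, end, and strand. It keeps the copy with the highest mean base quality.

This is a simplified illustration with hypothetical record fields. Production tools such as Picard MarkDuplicates or samtools markdup use additional information, for example mate positions, orientation, soft-clipping, and optional molecular barcodes. Amplicon reads share primer-defined coordinates, so this logic does not apply to amplification-based libraries.

```python
from typing import NamedTuple


class AlignedFragment(NamedTuple):
    """Hypothetical minimal record for an aligned read pair (one fragment)."""
    name: str
    chrom: str
    start: int               # leftmost aligned reference position of the fragment
    end: int                 # rightmost aligned reference position of the fragment
    strand: str              # "+" or "-"
    mean_base_quality: float


def remove_pcr_duplicates(fragments: list[AlignedFragment]) -> list[AlignedFragment]:
    """Keep one fragment per (chrom, start, end, strand) coordinate set.

    Fragments with identical coordinates are assumed to be PCR copies of one
    original molecule; the copy with the highest mean base quality is kept.
    """
    best: dict[tuple, AlignedFragment] = {}
    for frag in fragments:
        key = (frag.chrom, frag.start, frag.end, frag.strand)
        kept = best.get(key)
        if kept is None or frag.mean_base_quality > kept.mean_base_quality:
            best[key] = frag
    return list(best.values())


frags = [
    AlignedFragment("r1", "chr7", 1000, 1250, "+", 31.0),
    AlignedFragment("r2", "chr7", 1000, 1250, "+", 35.5),  # same coordinates as r1
    AlignedFragment("r3", "chr7", 1004, 1260, "+", 30.0),  # independent fragment
]
print([f.name for f in remove_pcr_duplicates(frags)])  # ['r2', 'r3']
```

Depth of coverage and variant allele fractions would then be computed from the deduplicated fragments only.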

A modified approach to the hybridization capture options described above is used by the HaloPlex target enrichment system (Agilent Technologies). The HaloPlex technique is based on restriction enzyme digestion of genomic DNA, followed by hybridization of biotinylated DNA probes designed with homology only to the 5′ and 3′ ends of the regions of interest. This promotes circularization of the regions of interest and increases capture specificity. The circular probe/fragment hybrids are captured using streptavidin-coated beads. The captured circles are then ligated, purified, and amplified.5,6

Hybridization capture is sensitive to sample base composition. Sequences that are adenine-thymine rich can be lost through poor annealing, whereas regions with high guanine-cytosine content can be lost through formation of secondary structures.7

Amplification-based NGS

Amplification-based library preparation methods rely on a multiplex PCR amplification step to enrich for target sequences. Target sequences are tagged with sample-specific indexes and with sequencing adaptors. The adaptors anchor the amplicons to complementary oligonucleotides embedded in the platform’s sequencing substrate before sequencing begins. Depending on the target sequence primer and kit design, the amplification step in library preparation can be either a one-stage or a two-stage PCR approach. Amplification-based library preparation methods are versatile and scalable, and can be used to construct libraries of a range of sizes (eg, Illumina’s 26-gene TruSight Tumor panel and ThermoFisher’s 409-gene AmpliSeq Comprehensive Cancer Panel; ThermoFisher Scientific, Waltham, MA). The hands-on laboratory time for amplification-based library preparation is typically shorter than for hybridization capture methods.

Amplification-based library preparation methods are vulnerable to problems associated with PCR primer design. For example, allele dropout may occur if there is a single-nucleotide polymorphism or short indel in the primer region of the sequence, because the mismatched primer will not bind. This results in lower-than-expected coverage for the amplicon and potentially incorrect assessment of variant allele frequencies for any variants in that amplicon. In addition, amplification-based library preparation is less likely to work effectively for genes with high guanine-cytosine content (eg, CEBPA)8 or regions with highly repetitive sequences. Furthermore, amplification-based library preparation may not enable detection of an indel that removes the primer region of the sequence or that sufficiently alters the size of the amplicon. Finally, sequencing quality diminishes at the ends of amplicons, leading to potential miscalling of variants in these poor-quality regions. This last issue can be ameliorated by tiling overlapping amplicons when a critical hotspot region lies at the end of an amplicon.
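Table 1 lists bioinformatic flagging of apparently homozygous rare variants as one way to catch allele dropout. A primer-site polymorphism that suppresses amplification of one allele can make a heterozygous variant on the other allele appear homozygous. The sketch below shows such a flag. The record fields and the thresholds (VAF ≥0.9, population allele frequency <1%) are hypothetical placeholders that a laboratory would need to set during validation. The variant values in the example are illustrative only.

```python
from typing import NamedTuple


class VariantCall(NamedTuple):
    """Hypothetical minimal variant record."""
    gene: str
    protein_change: str
    vaf: float            # variant allele fraction observed in the sample
    population_af: float  # allele frequency in a population database


def flag_possible_allele_dropout(
    calls: list[VariantCall],
    homozygous_vaf: float = 0.9,
    rare_population_af: float = 0.01,
) -> list[VariantCall]:
    """Return calls that look homozygous yet are rare in the population.

    Such calls are candidates for allele dropout (or for true loss of the
    wild-type allele) and warrant review or orthogonal confirmation.
    """
    return [
        call for call in calls
        if call.vaf >= homozygous_vaf and call.population_af < rare_population_af
    ]


calls = [
    VariantCall("TP53", "p.Arg273His", vaf=0.95, population_af=0.0),  # flagged
    VariantCall("KRAS", "p.Gly12Asp", vaf=0.35, population_af=0.0),   # heterozygous-range VAF
    VariantCall("EGFR", "p.Gln787Gln", vaf=0.99, population_af=0.5),  # common polymorphism
]
print([c.protein_change for c in flag_possible_allele_dropout(calls)])
```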

Sequencing

Currently available sequencing platforms use different sequencing chemistries and different detection methods. These chemistries include sequencing by synthesis (Illumina NGS platforms) and ion semiconductor–based sequencing (ThermoFisher’s Ion systems). Given the market share and popularity of both platforms and the engineering differences between them, head-to-head comparisons of the two technologies across multiple applications have frequently been undertaken. A number of studies have examined the performance of both platforms across a diverse set of applications and found that Illumina and Ion sequencers produce comparable results.9–12 Illumina and Ion sequencers have been evaluated for potential uses in clinical microbiology,9 germline variant detection,11 and prenatal testing.12,13 Recently, an evaluation compared the Illumina MiSeq and ThermoFisher Ion Proton systems for detection of somatic variants in oncology. Both platforms performed equally well in DNA derived from formalin-fixed, paraffin-embedded (FFPE) tumor samples using amplicon-based commercial panels.14 These comparisons are routinely reported with a caveat regarding the Ion sequencer’s ability to accurately sequence homopolymer tracts. The difficulty arises from limitations in the linear range of detection of the voltage changes associated with incorporation of multiple identical nucleotides. Despite the similarities in technical performance, differences between the platforms exist, notably in DNA input requirements, reagent cost, run time, read length, and cost per sample. These differences have implications for the instruments’ capacity to handle low-quality samples, their ability to detect insertions/deletions, and sample throughput.

It is recognized by the Working Group that technological improvements in NGS will outpace published method and/or platform-specific clinical practice recommendations for the foreseeable future. The authors anticipate that detailed discussions of newer methods will be incorporated into a revised and updated version of this article in the near future.

Data Analysis

The data analysis pipeline (also called the bioinformatics pipeline) of NGS can be divided into four primary operations: base calling, read alignment, variant identification, and variant annotation.15,16 A wide range of commercial, open-source, and laboratory-developed resources is available for each of these steps. Although detailed information on the requirements of the data analysis pipeline is available, two general points need emphasis. First, it is well established that the four main classes of sequence variants (SNVs, indels, CNAs, and SVs) each require a different computational approach for sensitive and specific identification. Second, the choice of software tools, and the type of validation required, depend on assay design. For example, many popular SNV detection programs are designed for constitutional genome analysis. Their algorithms may ignore SNVs whose variant allele frequencies (VAFs) fall outside the range expected for homozygous and heterozygous variants, as low-level somatic variants often do. Published comparisons of various bioinformatics tools for SNV detection may be helpful.17,18

Alignment of indel-containing sequence reads is technically challenging and requires algorithms specifically designed for the task. One such specialized approach, local realignment, adjusts the local alignment of bases within each mapped read so as to minimize the number of base mismatches.19 Probabilistic modeling based on mapped sequence reads can be used to identify indels up to 20 bp. However, these methods do not provide acceptable sensitivity for detection of larger indels, such as FLT3 internal tandem duplications, which may exceed 300 bp in length.20 Split-read approaches to indel detection use algorithms that can appropriately map the two ends of a read that is interrupted (or split) by an insertion or deletion. These algorithms can also handle reads that have been trimmed (soft-clipped) because of misalignments caused by indels.20,21

Although less common than SNVs, CNAs account for the majority of nucleotide differences between any two genomes because of the large size of individual CNAs.22 Detection of CNAs is conceptually different from identification of SNVs or indels because the individual sequence reads arising from CNAs often do not have sequence changes at the bp level but instead are simply underrepresented or overrepresented. Assuming deep enough sequencing coverage, the relative change in DNA content will be reflected in the number of reads mapping within the region of the CNA after normalization to the average read depth across the same sample.23–25 Analysis of allele frequency at commonly occurring SNVs can be a useful indicator of CNAs or loss of heterozygosity in NGS data.26
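The read-depth approach described above can be sketched as follows. The sketch makes several simplifying assumptions:

- A reference profile is built from normal samples processed with the same assay.
- Depths are median-normalized within each sample.
- The baseline is diploid.

The tumor-to-reference depth ratio for a gene is averaged over all of its targets rather than a single hotspot. That ratio is then converted to an absolute copy number using the tumor cell fraction, because admixed normal cells dilute the observed change. Function and parameter names are hypothetical, and real CNA callers additionally correct for GC content and other biases.

```python
import math
from statistics import mean, median


def normalize_depths(depths: dict[str, float]) -> dict[str, float]:
    """Divide each target's read depth by the sample's median target depth."""
    center = median(depths.values())
    return {target: depth / center for target, depth in depths.items()}


def estimate_gene_copy_number(
    tumor_depths: dict[str, float],
    reference_depths: dict[str, float],
    gene_targets: list[str],
    tumor_fraction: float,
    baseline_copies: int = 2,
) -> tuple[float, float]:
    """Return (log2 ratio, purity-corrected copy number) for one gene.

    Assumes the reference profile is diploid at every target and that the
    tumor sample is a mixture of tumor cells (fraction tumor_fraction) and
    diploid normal cells, so the observed ratio R satisfies
        R = tumor_fraction * CN / baseline_copies + (1 - tumor_fraction).
    """
    if not 0 < tumor_fraction <= 1:
        raise ValueError("tumor_fraction must be in (0, 1]")
    tumor_norm = normalize_depths(tumor_depths)
    ref_norm = normalize_depths(reference_depths)
    # Average the ratio over every target in the gene, not a single hotspot.
    ratio = mean(tumor_norm[t] / ref_norm[t] for t in gene_targets)
    log2_ratio = math.log2(ratio)
    copy_number = baseline_copies * (ratio - (1 - tumor_fraction)) / tumor_fraction
    return log2_ratio, copy_number
```

With 50% tumor cells, a doubling of relative depth across a gene’s targets corresponds to an estimated 6 copies rather than 4. This illustrates why the limit of detection for CNAs depends on tumor fraction.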

Finally, detection of SVs also presents challenges. The breakpoints of interchromosomal and intrachromosomal rearrangements are usually located in noncoding DNA, such as introns, and often in highly repetitive regions. They are therefore difficult both to capture and to map to the reference genome. In addition, SV breakpoints often contain superimposed sequence variation ranging from small indels to fragments from several chromosomes.27,28 Discordant mate-pair methods (with analysis of associated soft-clipped reads) and split-read methods can be used to identify SVs.29–32 These methods often localize the breakpoint with single-base accuracy, a significant advantage because such precise localization facilitates orthogonal validation by PCR. Different SV detection tools can differ greatly in sensitivity and specificity, depending on the design of the capture probes and the specific sequence of the target regions. Multiple tools should therefore be evaluated to determine which has optimal performance characteristics for the particular assay under consideration.
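The discordant mate-pair idea above can be illustrated with a minimal sketch. A pair is called discordant when its mates map to different chromosomes, or farther apart than the library’s expected insert size. Discordant pairs whose ends fall in the same genomic bins are then counted as support for a candidate breakpoint.

The record type, the 1,000-bp insert-size cutoff, and the 500-bp bin width are hypothetical. The sketch omits orientation checks, soft-clipped reads, and the split-read analysis needed for base-level breakpoint resolution.

```python
from collections import Counter
from typing import NamedTuple


class ReadPair(NamedTuple):
    """Hypothetical minimal record of where the two mates of a pair aligned."""
    chrom1: str
    pos1: int
    chrom2: str
    pos2: int


def is_discordant(pair: ReadPair, max_insert: int = 1000) -> bool:
    """Mates on different chromosomes, or too far apart for the library."""
    if pair.chrom1 != pair.chrom2:
        return True
    return abs(pair.pos2 - pair.pos1) > max_insert


def count_breakpoint_support(pairs: list[ReadPair], bin_size: int = 500) -> Counter:
    """Count discordant pairs per unordered pair of genomic bins."""
    support: Counter = Counter()
    for pair in pairs:
        if not is_discordant(pair):
            continue
        end_a = (pair.chrom1, pair.pos1 // bin_size)
        end_b = (pair.chrom2, pair.pos2 // bin_size)
        # Sort so that evidence seen as A->B or B->A falls into one cluster.
        support[tuple(sorted((end_a, end_b)))] += 1
    return support
```

In practice, a candidate supported by several independent pairs would then be examined with split reads to pinpoint the exact breakpoint.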

Detection of SVs using RNA (cDNA) as starting material uses different bioinformatics approaches, especially when it is performed using amplification-based sequencing. In this case, fused transcripts are aligned to a gene reference of targeted chimeric fusion transcripts.
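The paragraph above describes aligning reads from amplification-based RNA assays to a reference of targeted chimeric transcripts. In its simplest form, this can be approximated by searching reads for the sequence spanning each expected fusion junction. The sketch below uses exact matching and requires a hypothetical anchor length of bases on each side of the junction. The junction sequences in the test are synthetic, and real pipelines use aligners that tolerate sequencing errors.

```python
def count_junction_reads(
    reads: list[str],
    junctions: dict[str, tuple[str, str]],
    anchor: int = 12,
) -> dict[str, int]:
    """Count reads that span each targeted fusion junction.

    `junctions` maps a fusion name to a pair of sequences: the 5' partner
    sequence ending at the junction, and the 3' partner sequence starting at
    it. A read supports the fusion if it contains `anchor` bases from each
    side, joined exactly.
    """
    probes = {
        name: five_prime[-anchor:] + three_prime[:anchor]
        for name, (five_prime, three_prime) in junctions.items()
    }
    return {
        name: sum(1 for read in reads if probe in read)
        for name, probe in probes.items()
    }
```

Requiring bases on both sides of the junction keeps reads that derive entirely from one partner gene from being counted as fusion evidence.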

Considerations for Test Development, Optimization, and Familiarization

Designing Panel Content

Targeted NGS panels can range from hotspot panels focused on individual codons to more comprehensive panels that include the coding regions of hundreds of genes. When designing NGS panel content, it is important to understand the panel’s intended use. Is it going to be used to search for therapeutic targets and to enroll patients in specific clinical trials? Such panels are usually designed as pan-cancer panels and contain a large number of genes, including many genes with scientific evidence of therapeutic response. Panels designed for diagnosis and patient prognostication are usually tumor specific and tend to be smaller. They include only those genes that are directly implicated in the oncobiology of the tumor. Overall, laboratories must thoroughly consider the selection of specific genes, and the number of genes in the NGS panel, during test development. The scientific evidence for including specific genes in a panel needs to be documented in the validation protocol. The size of the panel may affect sequencing reagent cost, depth of sequencing, laboratory productivity, and complexity of analytical and clinical interpretation. It is recommended to include only those genes that have sufficient scientific evidence for disease diagnosis, prognostication, or treatment [eg, professional practice guidelines, published scientific literature, test registries (eg, National Center for Biotechnology Information Genetic Testing Registry, http://www.ncbi.nlm.nih.gov/gtr and EuroGentest, http://www.eurogentest.org/index.php?id=160, both last accessed January 8, 2016)].33

We recommend that the laboratory determine gene content based on available scientific evidence and on the clinical validity and utility of the NGS assay. The scientific evidence used to support NGS panel design should be documented in the validation protocol.

Choosing Sequencing Platform and Sequencing Method

When deciding on a clinical sequencing platform and method, there are numerous considerations that must be taken into account. Important components in the decision-making process include required turnaround time, samples to be tested, required sensitivity, expected volume of testing, type and complexity of the genetic variants to be assessed, degree of bioinformatics support, infrastructure, and resources available in the laboratory (particularly computational resources), and expenses associated with the instrument and test validation. Other considerations include, but are not limited to, the ability to achieve a simple and reproducible workflow in the clinical laboratory, regulatory issues, and reimbursement. Choice of sequencing method will also depend highly on the number of genes required for the panel and the specific needs for the regions of interest.

At the time of this article’s preparation, the most commonly used NGS platforms in clinical laboratories are the Illumina series and ThermoFisher’s Ion Torrent series. Each platform has pros and cons; therefore, good knowledge of the advantages and limitations of each is important. Illumina platforms provide high versatility and scalability, supporting a wide spectrum of assays from small targeted panels to highly comprehensive ones. However, they require higher DNA and RNA input and have longer sequencing times. Illumina instruments also require more comprehensive bioinformatics support and have higher instrument costs. The Ion Torrent series, on the other hand, has much shorter sequencing times. It may be the platform of choice for many institutions running small gene panels (<50 genes) or testing samples with limited amounts of DNA or RNA (eg, biopsy specimens). In addition, this platform is less expensive and comes with sufficient built-in bioinformatics pipelines. However, Ion Torrent platforms have an increased error rate in homopolymer regions and limited scalability.

We recommend that laboratory directors consider the following during clinical NGS platform selection:

- size of the panel (number of genes and extent of gene coverage);
- expected testing volume;
- required test turnaround time;
- availability of bioinformatics support;
- the provider’s degree of technological innovation, platform flexibility, and scalability;
- laboratory resources and technical expertise, and the manufacturer’s level of technical support.

Assessing Potential Sources of Error during the NGS Assay Development Process

Careful evaluation of the intended use of an assay will determine the potential sources of error that must be addressed. This error-based approach is explained in the Clinical and Laboratory Standards Institute guidance document EP23, which states: “the laboratory should systematically identify the potential failure modes … and estimate the likelihood that harm would come to a patient.”34,pp16 That is, the likelihood, detectability, and severity of harm are determined at each step of the process. Each source of error can then be addressed at three levels: assay design, method validation, and/or quality control.
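One common way to operationalize the likelihood, detectability, and severity assessment described above is failure mode and effects analysis (FMEA). In FMEA, each factor is scored, and the product serves as a risk priority number for ranking failure modes. This is offered as an illustration only and is not presented as the scoring prescribed by EP23. The 1-to-5 scales and the example scores are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FailureMode:
    """One potential failure mode, scored on hypothetical 1-5 scales."""
    step: str
    likelihood: int     # 1 = rare ... 5 = frequent
    severity: int       # 1 = no patient impact ... 5 = serious patient harm
    detectability: int  # 1 = almost always caught ... 5 = unlikely to be caught

    @property
    def risk_priority(self) -> int:
        """FMEA-style risk priority number: likelihood x severity x detectability."""
        return self.likelihood * self.severity * self.detectability


def rank_failure_modes(modes: list[FailureMode]) -> list[FailureMode]:
    """Order failure modes from highest to lowest risk priority."""
    return sorted(modes, key=lambda mode: mode.risk_priority, reverse=True)


# Illustrative scores only; a laboratory would assign its own.
modes = [
    FailureMode("DNA yield", likelihood=4, severity=2, detectability=1),
    FailureMode("Cross-sample contamination", likelihood=2, severity=5, detectability=4),
    FailureMode("Deamination artifacts", likelihood=3, severity=4, detectability=3),
]
for mode in rank_failure_modes(modes):
    print(mode.step, mode.risk_priority)
```

High-ranking failure modes would then be mitigated through assay design, method validation, or quality controls, in the spirit of the three levels named above.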

With a complex process such as NGS, this error-based approach to design and optimization is exceedingly important. A thorough understanding of the probability of potential failure points helps determine what level of validation and quality control is needed for particular steps in the process. It will also assist in troubleshooting errors that may arise as well as validating modifications to parts of the test system.

Some potential errors in the detection of somatic variants in tumor tissue by NGS merit specific consideration. Table 1 summarizes a number of preanalytical and analytical factors that can negatively affect NGS assay performance. During nucleic acid extraction, it is critical to avoid cross-contamination between samples. Measures include changing scalpel blades between tissue dissections, wiping work surfaces frequently with bleach, and handling samples one at a time. Nucleic acid yield can be a problem when working with small samples, particularly FFPE samples. Optimization of the entire extraction procedure is therefore often necessary to minimize transfers and loss of material through multiple steps.35–37 DNA obtained from older FFPE blocks (eg, >3 years old) often shows evidence of deamination. Depending on the sequencing method used, this can significantly increase background noise in the final NGS reads.35 Treatment with uracil N-glycosylase can be helpful with such samples.37 However, it may require increasing the input DNA into the library step and should be validated thoroughly before being adopted routinely. Stochastic bias is also a concern when working with small samples, because the number of genome equivalents present in the sample may be insufficient to consistently detect variants with low allele burden. In addition, during library preparation, it is important to keep in mind the possible impact of amplification errors and content bias related to the library method used. Potential sources of error can be addressed through assay design, in addition to method validation and quality controls. They should therefore be considered early in the design phase of test development.
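The stochastic bias noted above can be made concrete with back-of-the-envelope arithmetic. Assuming roughly 3.3 pg of DNA per haploid human genome, 10 ng of input corresponds to about 3,000 haploid genome copies. The number of mutant copies actually sampled then follows a binomial distribution.

The sketch below estimates the probability that at least k mutant copies are present in the input. It assumes every copy is intact and amplifiable, which is often untrue for FFPE DNA, where the effective number can be much lower. It also ignores library conversion losses and sequencing error. The minimum number of supporting copies k is a hypothetical parameter.

```python
import math

PG_PER_HAPLOID_GENOME = 3.3  # approximate mass of one haploid human genome (pg)


def haploid_genome_copies(input_ng: float) -> int:
    """Approximate number of haploid genome copies in a DNA input."""
    return int(input_ng * 1000 / PG_PER_HAPLOID_GENOME)


def prob_at_least_k_mutant_copies(n_copies: int, vaf: float, k: int) -> float:
    """P(X >= k) for X ~ Binomial(n_copies, vaf).

    This is the chance that the input contains at least k mutant copies of a
    variant present at this allele fraction. Direct summation is used, which
    is adequate for the small k values considered here.
    """
    p_fewer = sum(
        math.comb(n_copies, i) * vaf**i * (1 - vaf) ** (n_copies - i)
        for i in range(k)
    )
    return max(0.0, 1.0 - p_fewer)


for input_ng in (1, 10):
    copies = haploid_genome_copies(input_ng)
    p = prob_at_least_k_mutant_copies(copies, vaf=0.01, k=5)
    print(f"{input_ng} ng ~ {copies} copies; P(>=5 mutant copies at 1% VAF) = {p:.3f}")
```

Under these assumptions, a 1% VAF variant is reliably represented in 10 ng of input but frequently undersampled in 1 ng. This is why increasing input appears among the design considerations for stochastic bias in Table 1.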

Table 1.

Potential Sources of Error Affecting Next-Generation Sequencing Assays Designed for Formalin-Fixed, Paraffin-Embedded Tissue

| Step | Assay design considerations | Quality assessment during validation |
|---|---|---|
| DNA yield | Optimize extraction | Measure yield |
| DNA purity and integrity | Optimize DNA library preparation | Monitor DNA library preparation |
| Deamination or depurination | UNG treatment, duplex reads | Confirm all positives with orthogonal method |
| Contamination | Change blades during tissue dissection | No template control |
| Stochastic bias | Increase input, multiple displacement amplification, single-molecule barcoding | Sensitivity control |
| Amplification errors | High-fidelity polymerase, duplex reads | Confirm all positives with orthogonal method |
| Capture bias | Optimize enrichment, long-range PCR | Define minimum coverage, back-fill with orthogonal method |
| Primer bias and allele dropout | Assess causes of false negatives, design overlapping regions | Bioinformatically flag homozygosity of rare variants |

This is not a comprehensive list.

We recommend that the likelihood, detectability, and severity of harm of potential errors should be determined at each step. Anticipated potential errors specific to the detection of somatic variants in tumor tissue by NGS should be addressed. Potential errors should be addressed through assay design, method validation, and/or quality controls.

Optimization and Familiarization Process

Before the formal process of assay validation can begin, a phase of assay development generally referred to as optimization and familiarization (O&F) is required. O&F is the process by which physical samples, supplemented by model data sets (eg, well-curated data sets available in the public domain as well as so-called in silico mutagenized data sets), are subjected to the NGS test to systematically evaluate whether the test meets design expectations. O&F invariably uncovers unanticipated assay design and bioinformatics problems. In addition, by providing laboratory technologists with the opportunity to become familiar with the testing procedures, O&F often uncovers logistical issues. The O&F phase should address library complexity, required depth of sequence, and preliminary performance specifications using well-characterized reference materials.

Preliminary Performance Specifications

The O&F process includes all aspects of the NGS test, from sample and library preparation to sequencing and variant calling. Because O&F is performed to identify unanticipated problems with an NGS test and to make necessary test changes, by definition O&F involves running samples before the formal assay validation process begins. Therefore, it is recommended that the O&F process involve well-characterized normal cell lines (eg, the HapMap cell line NA12878; Coriell Institute for Medical Research, Camden, NJ), tumor cell lines with well-characterized alterations, and patient specimens of different clinical specimen types (eg, fresh, FFPE tissue, cytology specimens as dictated by the assay’s intended use), with different technologists performing the testing on different days, and so on, as part of a systematic process to seek and correct unanticipated quality issues associated with the so-called wet bench portion of the test.

Likewise, the O&F phase should include a systematic evaluation of the bioinformatics component to ensure that the pipeline performs as expected based on the sequencing depth of coverage achieved in actual testing, variant class, and VAF. The O&F phase for the bioinformatics pipeline should be performed on sequence files from physical samples, as well as on model data sets designed to challenge particular aspects of the pipeline.
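One kind of model data set mentioned above is an in silico mutagenized data set. The sketch below illustrates the idea under simplifying assumptions: reads are hypothetical `(start, sequence)` tuples already placed on reference coordinates, only a single-nucleotide substitution is spiked in, and base qualities, indels, and mate pairs are ignored. A production tool would operate on BAM/FASTQ files. The function name `spike_in_snv` is illustrative only.

```python
import random


def spike_in_snv(reads, pos, alt, vaf, seed=0):
    """Return a copy of `reads` in which `alt` replaces the base at reference
    coordinate `pos` in round(vaf * depth) of the reads covering `pos`.

    Each read is a hypothetical (start, sequence) tuple on reference
    coordinates; indels, base qualities, and mates are not modeled.
    """
    covering = [i for i, (start, seq) in enumerate(reads)
                if start <= pos < start + len(seq)]
    n_mutant = round(vaf * len(covering))
    rng = random.Random(seed)  # fixed seed so the model data set is reproducible
    chosen = set(rng.sample(covering, n_mutant))
    spiked = []
    for i, (start, seq) in enumerate(reads):
        if i in chosen:
            offset = pos - start
            seq = seq[:offset] + alt + seq[offset + 1:]
        spiked.append((start, seq))
    return spiked


# 200 reads spanning position 100; spike in a C at a target VAF of 5%.
reads = [(90, "A" * 30) for _ in range(200)]
mutated = spike_in_snv(reads, pos=100, alt="C", vaf=0.05)
```

Running the pipeline on such files at several target VAFs and depths shows whether the caller recovers the spiked variant where the design says it should.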

Although some bioinformatics tools make it possible to detect VAFs of 1% (or even lower), validation of assays with such low levels of detection must take into account two confounding factors. First, the current intrinsic error rates of NGS library preparation approaches, sequencing chemistries, and platforms complicate reliable discovery of variants at low VAFs <2% without compromising specificity, although recent advances in NGS methods that employ unique molecular identifiers increase sequencing accuracy and permit reliable detection of low-frequency variants.38–40 Second, the presence of contaminants in clinical NGS data sets can interfere with reliable detection of low-frequency variants.41,42

In the setting of inherited disease testing, the minimum VAF (which is essentially the minimum allelic ratio for those diseases not characterized by CNAs) indicates the lowest level of mosaicism that can be detected. For testing of oncology specimens, because of the intrinsic genetic instability that is a feature of many tumor types, the minimum VAF for detection of a sequence variant is not highly correlated with the percentage tumor cellularity of the specimen or the percentage of tumor cells that harbor the sequence change.

In the setting of cancer, two different features of tumor samples affect the metrics of the limit of variant detection (namely, tissue heterogeneity in that no tumor specimen is composed of 100% neoplastic cells and tumor cell heterogeneity in that malignant neoplasms often contain multiple clones).43–45 Interpretation of test results when NGS is performed on actual tumor samples must take this heterogeneity into account because it affects the lower limit of minor variant allele detection (eg, an assay with a validated lower limit of detection of 10% VAF will fail to detect a heterozygous mutation present in 50% of tumor cells if the percentage tumor cellularity is <40%).
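The arithmetic behind the example above can be written out. Assuming equal copy number at the locus in tumor and normal cells (no CNA) and no variant allele in normal cells, the expected VAF is cellularity × fraction of tumor cells carrying the variant × fraction of alleles mutated in those cells (0.5 for a heterozygous variant). For 50% of tumor cells and a 10% LOD, this gives 0.10 / (0.5 × 0.5) = 40% cellularity. The function names are illustrative.

```python
def expected_vaf(cellularity, clone_fraction=1.0, alt_fraction_in_clone=0.5):
    """Expected VAF = tumor cellularity x fraction of tumor cells carrying the
    variant x fraction of alleles mutated in those cells (0.5 = heterozygous).
    Assumes equal copy number in tumor and normal cells at the locus."""
    return cellularity * clone_fraction * alt_fraction_in_clone


def min_cellularity_for_lod(lod_vaf, clone_fraction=1.0, alt_fraction_in_clone=0.5):
    """Lowest tumor cellularity at which the expected VAF still reaches the LOD."""
    return lod_vaf / (clone_fraction * alt_fraction_in_clone)


# Example from the text: heterozygous variant in 50% of tumor cells, LOD 10% VAF.
threshold = min_cellularity_for_lod(0.10, clone_fraction=0.5)  # 0.40
vaf_at_30pct = expected_vaf(0.30, clone_fraction=0.5)          # below the 10% LOD
```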

Whether the sample is fresh or fixed also affects the limit of detection. It is well established that formaldehyde reacts with DNA and proteins to form covalent crosslinks, engenders oxidation and deamination reactions, and leads to the formation of cyclic base derivatives.46–49 These chemical changes can lead to errors in low-coverage NGS data sets50,51 or in assays designed to detect variants at low VAFs.52

Although highly optimized bioinformatic tools can be used for extremely sensitive detection of specific classes of variants in NGS data, in practice the lower limit of detection is usually defined by the intended use. As examples, among laboratories that perform NGS of tumor samples to guide targeted therapy, the lower limit of VAF that has clear clinical utility is generally in the range of 5%, but in the setting of minimal residual disease testing, accurate detection of variants at frequencies substantially <1% may be required.53

Laboratories should use reference materials composed of well-characterized normal cell lines (eg, the HapMap cell line NA12878) and allogenic or isogenic cell line mixtures to estimate performance specifications, given defined quality metrics and thresholds, for each type of genetic alteration the assay is intended to detect. Initially, the laboratory needs to sequence a normal reference cell line (eg, HapMap cell line NA12878) and compare the sequencing results against the reference sequence provided in an external database (eg, The International Genome Sample Resource, http://www.1000genomes.org, last accessed June 30, 2016). For each reported variant class (ie, SNVs, indels, CNAs, SVs), performance in terms of positive percentage agreement (PPA) and positive predictive value (PPV) has to be established and documented (Table 2).54 The reference cell line may not allow documentation of multiple genomic alterations, because of the small size of the targeted regions of the NGS panel or the absence of a specific variant class. In that case, a well-characterized reference material can be used [eg, National Institute of Standards and Technology Reference Material 8398 (https://www.nist.gov/programs-projects/genome-bottle, last accessed January 5, 2017), other commercially available reference materials]. Next, a mixing experiment of reference cell lines (eg, HapMap cell lines NA12878 and NA12877) needs to be performed. If a mix of HapMap cell lines does not allow testing of all types of genetic alterations the panel is intended to detect, a mixture of tumor cell lines with well-characterized alterations can be used (eg, SW620 cell line; ATCC, Manassas, VA). These reference materials can be used to estimate the performance for different variant types and to evaluate the limits of detection (LODs).
The Working Group has developed a series of templates and resources to assist the laboratory in documenting both mixing studies and performance specifications (AMP Validation Resources, http://www.amp.org/committees/clinical_practice/ValidationResources.cfm, last accessed August 22, 2016).
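When planning a mixing study, the expected VAF at each dilution follows from the genotypes of the two cell lines. The sketch below assumes both lines are diploid at the locus and that the DNA mass fraction equals the fraction of genome equivalents. The genotypes used are a hypothetical variant (heterozygous in line A, absent in line B), not documented NA12878/NA12877 calls.

```python
def mixture_vaf(frac_a, alt_copies_a, alt_copies_b, ploidy=2):
    """Expected VAF when line A (mass fraction frac_a) is mixed with line B.
    alt_copies_* is the number of variant alleles (0..ploidy) in each line."""
    return (frac_a * alt_copies_a + (1 - frac_a) * alt_copies_b) / ploidy


def fraction_for_target_vaf(target_vaf, alt_copies_a, alt_copies_b, ploidy=2):
    """Mass fraction of line A needed to reach target_vaf (alt_copies_a != alt_copies_b)."""
    return (target_vaf * ploidy - alt_copies_b) / (alt_copies_a - alt_copies_b)


# Hypothetical variant: heterozygous in line A, homozygous reference in line B.
dilution_series = {f: mixture_vaf(f, 1, 0) for f in (0.5, 0.2, 0.1)}
frac_for_5pct = fraction_for_target_vaf(0.05, 1, 0)  # 10% line A gives ~5% VAF
```

Heterozygous sites in the minor line are diluted to half the mixing fraction, and homozygous sites to the full mixing fraction. A single mixture therefore provides variants at two expected VAFs.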

Table 2.

Grid to Assist Laboratories in Calculation of Positive Percentage Agreement and Positive Predictive Value

| Next-generation sequencing testing result | Orthogonal method positive | Orthogonal method negative | Total |
|---|---|---|---|
| Positive | A | B | A + B |
| Negative | C | D | C + D |
| Total | A + C | B + D | A + B + C + D |

Positive percentage agreement (PPA; PPA = [A/(A + C)]).

Positive predictive value (PPV; PPV = [A/(A + B)]).

Reproduced and modified from G. A. Barnard.54 Reproduced with the permission of the Council of the Royal Society from The Philosophical Transactions © Published by Oxford University Press.
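The two formulas in Table 2 can be computed directly from the four cells of the grid. This is a minimal sketch; the counts in the example are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AgreementGrid:
    a: int  # NGS positive, orthogonal method positive
    b: int  # NGS positive, orthogonal method negative
    c: int  # NGS negative, orthogonal method positive
    d: int  # NGS negative, orthogonal method negative

    @property
    def ppa(self) -> float:
        """Positive percentage agreement, A / (A + C)."""
        return self.a / (self.a + self.c)

    @property
    def ppv(self) -> float:
        """Positive predictive value, A / (A + B)."""
        return self.a / (self.a + self.b)


# Hypothetical SNV counts from a comparison with an orthogonal method.
snv_grid = AgreementGrid(a=95, b=2, c=5, d=898)
```

D does not enter either metric. This is one reason PPA and PPV are preferred over specificity for panels in which nearly every interrogated base is a true negative.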

We recommend the following: the O&F phase must be performed before NGS test validation; the wet bench protocol and the bioinformatics pipeline should be established for all clinically relevant variant types (eg, SNVs, indels, CNAs, SVs); reference cell lines or reference materials should be used for initial evaluation of panel performance; mixing studies should be performed to estimate assay performance for each variant type intended for clinical use; and, whenever possible, all specimen types and preparations intended for clinical use should be tested during the O&F phase to evaluate the sequencing process in an end-to-end manner.

Library Complexity

At a specific depth of sequence coverage, the number of independent DNA template molecules (sometimes referred to as genome equivalents) sequenced by the assay has an independent impact on variant detection. For example, the information content of 1000 sequence reads derived from only 10 genome equivalents of a heterogeneous tumor sample (via a higher number of amplification cycles) is less than the information content of 1000 sequence reads derived from 100 different genome equivalents (via a lower number of amplification cycles). The number of unique genome equivalents sequenced by the assay is often referred to as the library complexity. The library complexity affects objective measures of assay performance in ways that are analogous to the impact of depth of sequence coverage.
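A simple probability model shows why read count cannot compensate for low complexity. Assume each template molecule at the locus independently carries the variant with probability equal to the VAF. The chance that no mutant template is present at all is then (1 − VAF)^N for N templates, regardless of how many reads are generated from them. This ignores the ploidy and the amplification dynamics of a real library.

```python
def prob_no_mutant_template(template_molecules, vaf):
    """P(no sampled template carries the variant), treating each template as
    an independent draw with P(mutant) = vaf."""
    return (1 - vaf) ** template_molecules


# Same 5% VAF, 10 vs 100 template molecules (read depth is irrelevant here).
p_10 = prob_no_mutant_template(10, 0.05)    # about 0.60
p_100 = prob_no_mutant_template(100, 0.05)  # about 0.006
```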

It is well recognized that quantitation of input nucleic acid by simple measurement of the mass of DNA in the sample often provides an unreliable estimate of library complexity because the efficiency with which a given mass of DNA can be sequenced is variable. This is not surprising given the wide range of preanalytic variables that affect DNA quality (eg, the presence or absence of fixation; the type of fixative; the length of fixation). Measurement of library complexity is straightforward in a hybrid capture–based assay because the sequence reads have different 5′ and 3′ termini reflecting the population of DNA fragments captured during the hybridization step. However, measurement of library complexity in a DNA library produced by an amplification-based method is more difficult because all amplicons have identical 5′ and 3′ termini regardless of the size of the population of DNA fragments from which they originated. Dilution experiments using various amounts of DNA input during assay O&F phase can provide data on library complexity.
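For libraries in which duplicates can be recognized by shared fragment ends, one widely used way to extrapolate library size from the duplicate rate is the Lander-Waterman relation C/X = 1 − exp(−N/X). Here N is the number of fragments sequenced, C the number that are distinct, and X the number of unique molecules in the library. Picard's duplication metrics use this same relation. The bisection solver below is a sketch of that calculation, not Picard's code.

```python
import math


def estimate_library_size(total_fragments, unique_fragments):
    """Solve C/X = 1 - exp(-N/X) for X by bisection.

    f(x) = C/x - 1 + exp(-N/x) is positive below the root and negative above
    it, so the root is bracketed between C and a sufficiently large bound.
    """
    n, c = total_fragments, unique_fragments
    if not 0 < c < n:
        raise ValueError("need 0 < unique_fragments < total_fragments")

    def f(x):
        return c / x - 1 + math.exp(-n / x)

    lo, hi = float(c), 2.0 * c
    while f(hi) > 0:  # widen the upper bound until the sign changes
        hi *= 2
    for _ in range(200):
        mid = (lo + hi) / 2
        if f(mid) > 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


# 2000 fragments sequenced from a library of 1000 molecules would be expected
# to yield 1000 * (1 - e^-2) distinct fragments; the solver recovers ~1000.
est = estimate_library_size(2000, 864.6647167633873)
```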

Traditionally, methods for accurate measurement of amplifiable input nucleic acid involve a quantitative PCR–based approach to measure the cycle threshold of amplification of the test sample. The quantitative PCR approach is cumbersome in routine practice and provides only an indirect measure. Methods that involve unique molecular identifiers or single-molecule tags (eg, single-molecule molecular inversion probes38,40,55; HaloPlex target enrichment system5,56) make it possible to directly measure library complexity. The method selected for evaluation of library complexity should be at the discretion of the laboratory director.
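With unique molecular identifiers, library complexity can be counted directly as the number of distinct read families. The sketch assumes a hypothetical read representation (dictionaries with chromosome, start, strand, and UMI) and requires exact UMI matches. Real UMI tools also merge UMIs that differ by sequencing errors, so this simple count is an upper bound.

```python
from collections import Counter


def umi_complexity(reads):
    """Count distinct source molecules, keyed by (chrom, start, strand, umi).

    Returns (unique_molecules, duplicate_fraction, family_sizes).
    Assumes error-free UMIs; no UMI clustering is performed.
    """
    families = Counter((r["chrom"], r["start"], r["strand"], r["umi"]) for r in reads)
    unique_molecules = len(families)
    duplicate_fraction = 1 - unique_molecules / len(reads)
    return unique_molecules, duplicate_fraction, families


def read(umi):
    # Hypothetical coordinates; all reads share the same start and strand.
    return {"chrom": "chr12", "start": 1000, "strand": "+", "umi": umi}


reads = [read("ACGTTG")] * 3 + [read("TTAGCA")] * 2 + [read("GGCATA")]
unique_molecules, dup_fraction, family_sizes = umi_complexity(reads)
```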

We recommend that library complexity be evaluated by dilution experiments using various amounts of DNA input during the assay O&F phase or by other methods involving unique molecular identifiers, at the discretion of the laboratory director.

Establishing Criteria for Depth of Sequencing

Depth of sequencing, or depth of coverage, is defined as the number of aligned reads that contain a given nucleotide position, and bioinformatics tools are extremely dependent on adequate depth of coverage for sensitive and specific detection of sequence variants. The relationship between depth of coverage and the reproducibility of variant detection from a given sample is straightforward in that a higher number of high-quality sequence reads lends confidence to the base called at a particular location, whether the base call from the sequenced sample is the same as the reference base (no variant identified) or is a nonreference base (variant identified).17,57–59 However, many factors influence the required depth of coverage, including the sequencing platform,9 the sequence complexity of the target region (regions with homology to multiple regions of the genome, the presence of repetitive sequence elements or pseudogenes, and increased guanine-cytosine content).57,58 In addition, the library preparation used for target enrichment and the types of variant being evaluated are important considerations. Thus, the coverage model for every NGS test must be systematically evaluated during assay development and validation.

In general, a lower depth of coverage is acceptable for constitutional testing where germline alterations are more easily identified because they are in either a heterozygous or homozygous state. However, in the setting of constitutional testing, the presence of mosaicism may complicate the interpretation of the presence (or absence) of a variant, which is not a trivial issue because it is clear that a large number of diseases are characterized by mosaicism [eg, neurofibromatosis type 1 (NF1)60; McCune-Albright syndrome61; PIK3CA-related segmental overgrowth62]. A minimum of 30× coverage with balanced reads (forward and reverse reads equally represented) is usually sufficient for germline testing.63,64 In contrast, much higher read depths are necessary to confidently identify somatic variants in tumor specimens because of tissue heterogeneity (malignant cells, as well as supporting stromal cells, inflammatory cells, and uninvolved tissue), intratumoral heterogeneity (tumor subclones), and tumor viability. An average coverage of at least 1000× may be required to identify heterogeneous variants in tissue specimens of low tumor cellularity. For NGS of mitochondrial DNA, an average coverage of at least 5000× is required to reliably detect heteroplasmic variants.65,66

The required depth of coverage can be estimated based on the required lower limit of detection, the quality of the reads, and tolerance for false-positive or false-negative results. Base calls at a specified genomic coordinate are fundamentally different from many quantitative properties that involve measurement of continuous variables, such as serum sodium concentration. Instead, each base call in a DNA sequence is a so-called nominal property in that it is drawn from a limited set of discontinuous values. This has implications for the statistical calculation of assay metrics.19,67–70

These performance parameters can and should be estimated during the development phase to help define acceptance criteria for validation. For example, for a given proportion of mutant alleles, the probability of detecting a minimum number of alleles can be determined using the binomial distribution equation:

$$P(x) = \binom{n}{x}\, p^{x} (1 - p)^{n - x} \qquad (1)$$

where P(x) is the probability of x variant reads, x is the number of variant reads, n is the number of total reads, and p is the probability of detecting a variant allele (ie, the proportion of mutant alleles in the sample). Excel can calculate the binomial probability directly using the formula =BINOM.DIST(number_s, trials, probability_s, cumulative). Here number_s is the number of successes (x in the binomial equation), trials is the number of reads (n), probability_s is the probability of success (p), and cumulative specifies whether the result is the exact probability for a given x and n (FALSE) or the cumulative probability of x or fewer successes (TRUE).
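For laboratories that script these calculations rather than using a spreadsheet, a direct translation of Equation 1 and the BINOM.DIST arguments uses only the Python standard library. The function name mirrors the Excel arguments for readability and is not an existing Python API.

```python
from math import comb


def binom_dist(number_s, trials, probability_s, cumulative):
    """Equation 1: exact P(X = number_s) if cumulative is False,
    P(X <= number_s) if cumulative is True (same semantics as BINOM.DIST)."""
    def pmf(x):
        return comb(trials, x) * probability_s**x * (1 - probability_s) ** (trials - x)

    if cumulative:
        return sum(pmf(x) for x in range(number_s + 1))
    return pmf(number_s)


# P(4 or fewer variant reads | 250 reads, 5% mutant alleles)
p_four_or_fewer = binom_dist(4, 250, 0.05, True)  # about 0.00457
```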

By calculating the binomial probability for a given number of trials and probability of success, one can define the binomial distribution (Figure 2). For example, for a mutant allele frequency of 5% and 250 reads, the probability of obtaining four or fewer mutant reads is 0.457%. Therefore, the probability of obtaining five or more mutant reads is 1 minus 0.457% (or 99.543%). Thus, if the threshold for a variant call were set at five or more reads, the probability of a false negative would be <0.5%, provided that a minimum of 250 reads were obtained. For clinical NGS panels, a minimal depth of coverage of 250 reads per tested amplicon or target is strongly recommended.
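The same reasoning can be turned around to find the smallest depth that keeps the binomial false-negative probability under a chosen ceiling for a given VAF and calling threshold. The sketch below is a development-phase estimate only. It assumes independent reads and no systematic error, so it does not replace empirical validation.

```python
from math import comb


def prob_fewer_than(k, n, p):
    """P(X < k) for X ~ Binomial(n, p): the chance of missing a call threshold of k reads."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k))


def min_depth(vaf, min_alt_reads, max_false_negative):
    """Smallest depth n with P(fewer than min_alt_reads variant reads) <= ceiling.
    P(X < k) decreases as n grows, so a linear search terminates."""
    n = min_alt_reads
    while prob_fewer_than(min_alt_reads, n, vaf) > max_false_negative:
        n += 1
    return n


# 5% VAF, call threshold of 5 variant reads, false-negative ceiling of 0.5%.
depth = min_depth(0.05, 5, 0.005)
```

Consistent with the example above, the resulting depth is at most 250 reads.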

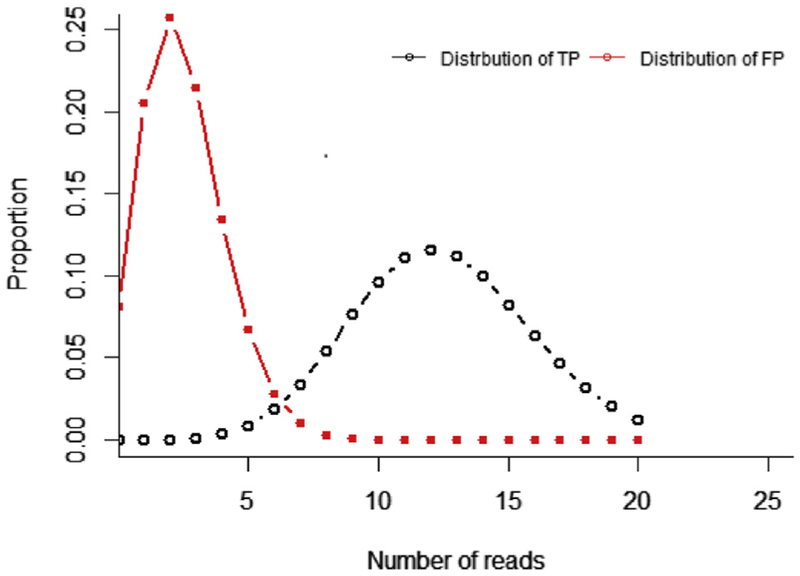

Figure 2.

Determining depth of sequence. Given an allele burden of 5% and 250 read depth, the binomial distribution of true positives (TPs) can be calculated. Also, given a sequence error rate of 1%, the binomial distribution of false-positive (FP) results can also be calculated and shown to overlap the true positive distribution. The overlap of true-positive and false-positive distributions should be considered when determining minimum depth of sequence needed to reliably detect a given allele burden.

The binomial probability distribution can also be used to calculate the probability of a false positive for a given error rate and threshold for variant calling (Figure 2). In this case, the probability of success is the error rate. For example, if the test system has an error rate of 1% (ie, a sequence quality equivalent to a Phred Q score of 20), the probability of getting five or more errors at a particular base in 250 reads would be 10.78%. However, if the threshold were set at five or more reads, that rate of false positives would not be realized, assuming that nucleotide errors are random, because the erroneous reads would also have to share the same nucleotide change. For example, the probability that five or more random errors would all have the same nucleotide change is 0.01%. Raising the threshold or reducing the error rate would reduce the probability of false positives still further.
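The 10.78% figure can be reproduced by treating each read as an independent trial whose success probability is the per-base error rate derived from the Phred score (error = 10^(−Q/10)). The sketch covers only the "five or more errors at a position" step. It does not model which nucleotide each error produces, and it does not model systematic errors.

```python
from math import comb


def phred_to_error(q):
    """Per-base error probability for Phred quality q."""
    return 10 ** (-q / 10)


def prob_at_least(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return 1 - sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k))


err_q20 = phred_to_error(20)                       # 0.01
p_fp_q20 = prob_at_least(5, 250, err_q20)          # about 0.108
p_fp_q30 = prob_at_least(5, 250, phred_to_error(30))
p_fp_q20_t10 = prob_at_least(10, 250, err_q20)     # higher threshold of 10 reads
```

Both levers named in the text, better base quality and a higher calling threshold, shrink the tail probability by orders of magnitude in this model.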

However, not all errors are random, and platform-specific systematic errors do occur. Therefore, the depth of coverage estimated using the binomial distribution is only an estimate, and the false-positive and false-negative rates for a given depth and threshold must be validated.

We recommend a minimal depth of coverage >250 reads per tested amplicon or target for somatic variant detection. In certain limited circumstances, minimal depth <250 reads may be acceptable but the appropriateness should be justified based on intended limit of detection, the quality of the reads, and tolerance for false-positive or false-negative results.

NGS Test Validation

After determining initial assay conditions and establishing bioinformatics pipeline configurations during the O&F phase, the NGS test needs to be validated. Regulatory requirements under the Clinical Laboratory Improvement Amendments call for all tests that are not approved or cleared by the Food and Drug Administration (ie, laboratory-developed procedures or tests) to address accuracy, precision (repeatability), reportable range, reference range (normal range), analytical sensitivity (limits of detection or quantification), analytical specificity (interfering substances), and any other parameter that may be relevant (eg, carryover). Various guidance documents provide definitions for these performance characteristics, but the definition of accuracy can be refined to better meet the needs of NGS somatic analysis.3,71,72 Therefore, it is recommended that accuracy be stated in terms of PPA and PPV. NGS panel validation should include the outlined validation protocol with defined types and numbers of samples; established PPA and PPV; reproducibility and repeatability of variant detection; reportable range; reference range; limits of detection; interfering substances; clinical sensitivity and specificity, if appropriate; validation of bioinformatics pipelines; and other parameters as described below.

Validation Protocol

The validation protocol should be completed before accumulating validation data. That is to say, data collected during development, optimization, and familiarization are not part of the validation. However, those data can be used to estimate test performance and thereby set performance criteria for acceptance as well as determine the number and types of samples as discussed below.

The validation protocol should start with an explicit statement of the intended use, which will determine the types of samples and the performance characteristics that need to be addressed. For example, a test that is intended to detect known hotspot mutations, including large insertions or deletions in formalin-fixed tissue, will need to include formalin-fixed samples with these types of mutations. The lower limit of detection that is clinically indicated should also be defined.

Careful design of the validation protocol is necessary to ensure that all relevant parameters are addressed as efficiently as possible. It may be helpful to include a validation matrix of the planned validation (Table 3). The validation protocol needs to be approved by the laboratory director before validation begins. Ideally, the standard operating procedures for generating sequence data and bioinformatics analysis, as well as the validation samples, should be given to technologists who were not involved in the development or optimization of the test, so that they can acquire the validation data in a blinded manner. However, it is recognized that not all laboratories have sufficient staffing to support this approach. The Working Group has developed a template to assist the laboratory in documenting and describing studies performed in the validation phase (AMP Validation Resources, http://www.amp.org/committees/clinical_practice/ValidationResources.cfm, last accessed August 22, 2016).

Table 3.

Sample Next-Generation Sequencing Validation Matrix

| Next-generation sequencing run | Technologist | Sample 1 | Sample 2 | Sample 3 | Sample 4 | Sample 5 | Sample 6 | Sample 7 | Sample 8 | Sample 9 | Sample 10 | Sample 11 | Sample 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | A | PS1 | LOD1 | PS2 | PS3 | PS4 | LOD1 | PS5 | PS6 | PS7 | PS8 | PS9 | PS10 |
| 2 | B | PS11 | PS12 | LOD2 | PS13 | PS14 | PS15 | LOD2 | PS16 | PS17 | PS18 | PS19 | PS20 |
| 3 | A | PS21 | PS22 | PS23 | LOD3 | PS24 | PS25 | PS26 | LOD3 | PS27 | PS28 | PS29 | PS30 |
| 4 | B | PS31 | PS32 | PS33 | PS34 | PS35 | PS36 | PS37 | PS38 | LOD1 | PS39 | LOD1 | PS40 |
| 5 | A | PS41 | PS42 | PS43 | PS44 | PS45 | PS46 | PS47 | PS48 | PS49 | LOD2 | PS50 | LOD2 |
| 6 | B | LOD3 | PS51 | PS52 | PS53 | LOD3 | PS54 | PS55 | PS56 | PS57 | PS58 | PS59 | PS60 |

Technologists A and B represent two individual technologists performing the validation testing. A validation matrix can help ensure that all performance parameters are addressed as efficiently as possible. Testing personnel should be blinded to the identity of samples and controls.

LOD, limit of detection sample; PS, previously tested patient sample.

Types and Number of Samples Required for Test Validation

Assay validation should be performed using samples of the type intended for the assay so that test performance is representative of the larger population. However, massively parallel sequencing of multiple genes cannot be validated as if it were a single-analyte test. There is far too much variation in the types of samples, types of variants, allele burden, and targeted exons or regions. Therefore, an error-based approach to validation must be used.

To use an error-based approach, the question that must be addressed is: to what extent can the performance of the test for a given sample type, variant type, genomic region, or allele burden be extrapolated to other sample types, variant types, genomic regions, and allele burdens? Performance is expected to vary considerably with sample type, variant type, and allele burden, so it is essential to establish performance characteristics for each of these factors. A range of well-characterized samples should be selected that maximizes the variation in these factors, as dictated by the stated intended use of the test. The number of each sample type tested during validation should be in proportion to the sample types anticipated in the clinical service. However, if a sample type is known to be problematic (eg, FFPE tissue), additional validation samples are recommended to determine the impact of sample quality or quantity on the test results, regardless of how many such samples are anticipated in typical patient testing.

It is recognized that for most panels it is not practical to obtain well-characterized samples representing all of the pathogenic variants that might be detected. However, laboratories should strive to include samples with hotspot mutations relevant to the test’s intended use (eg, mutations involving KRAS codons 12, 13, and 61 for a colon cancer panel). Although sourcing these samples is not trivial, it is critical that laboratories make a substantial effort to show that their assay can actually detect the common and clinically relevant mutations they claim it can detect. In silico data sets can augment, but not supplant, real samples; a combination of real and in silico samples is therefore appropriate.

Test performance is less likely to vary by genomic region, provided that quality metrics are met (eg, read quality, read length, strand bias, read depth). However, systematic errors do occur because of regional variation (eg, repetitive sequence, pseudogenes). Therefore, it is important to include at least two well-characterized samples with known sequence for all targeted regions. Some commercially available cell lines have been well characterized and can serve this purpose (eg, HapMap cell line NA12878). In some cases, such reference cell lines have been subjected to formalin fixation and paraffin embedding, resulting in lower-quality DNA that may more closely mimic the material intended for use with a new assay. The intent is to detect potential systematic errors, which are likely to be evident because of their recurrent nature. Such errors would be seen in many samples, including those for which the sequence was not known. The cell lines with known sequence for all regions can then be used to ascertain the cause of systematic errors in these regions.

A perennial question is how many samples need to be tested. Although performance is often stated in terms of CIs, the CI of the mean only gives an estimate of the population mean with a stated level of confidence. It does not define the distribution of the underlying population and does not give an indication of the performance of any given sample.

To estimate the distribution of the underlying population and the performance of individual samples, tolerance intervals should be used. For a normally distributed population, the lower tolerance limit can be determined as $\bar{x} - ks$, where $\bar{x}$ is the sample mean, s is the sample SD, and k is a correction factor for a two-sided tolerance interval that defines the number of sample SDs required to cover the desired proportion of the population. The two-sided k value for 95% confidence, 95% coverage, and n = 20 is 2.75. This is substantially higher than the 1.96 corresponding to the z-score of a normal population distribution, because the tolerance interval accounts for the sample size and for the error-prone estimates of the underlying population mean and population SD. As the number of samples increases, the k value approaches the z-score.
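Exact tolerance factors come from tables or from statistical software. Standard closed-form approximations are close enough to reproduce the value quoted above. The sketch uses Howe's approximation for the two-sided normal tolerance factor and the Wilson-Hilferty approximation for the chi-square quantile, so it needs only the Python standard library. Both are approximations, not exact table values.

```python
from math import sqrt
from statistics import NormalDist


def chi2_quantile_wh(prob, df):
    """Wilson-Hilferty approximation to the chi-square quantile."""
    z = NormalDist().inv_cdf(prob)
    return df * (1 - 2 / (9 * df) + z * sqrt(2 / (9 * df))) ** 3


def k_two_sided(n, coverage=0.95, confidence=0.95):
    """Howe's approximation to the two-sided normal tolerance factor k."""
    z = NormalDist().inv_cdf((1 + coverage) / 2)
    chi2 = chi2_quantile_wh(1 - confidence, n - 1)
    return sqrt((n - 1) * (1 + 1 / n) * z**2 / chi2)


k20 = k_two_sided(20)       # about 2.75
k100 = k_two_sided(100)
k10000 = k_two_sided(10000)  # approaches 1.96 as n grows
```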

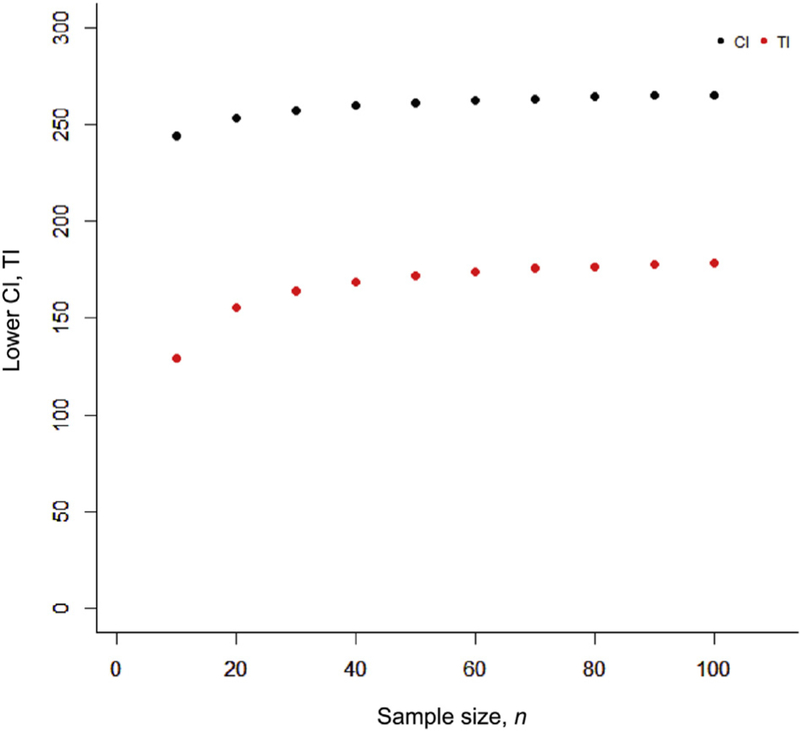

For example, suppose we want to determine the probability of getting a minimum of 250 reads for a given region. If a validation set of 100 samples shows a mean depth of coverage of 275 reads and an SD of 50 reads, the 95% lower confidence limit indicates that we can be confident that our average depth of coverage will be >266 (Figure 3). However, the 95% lower tolerance limit indicates that for any given sample we can only be confident of reliably obtaining a read depth of 179 or greater (Figure 3).

Figure 3.

Determining the minimum number of reads: CI versus tolerance interval (TI). Determination of the CI and tolerance interval for minimum read depth (average of 275 reads with an SD of 50). The lower CI determines with 95% confidence the lower level of the average across the population. As the sample size increases, this estimate improves. The tolerance interval determines with 95% confidence the minimum number of reads above which 95% of the population will fall. The CI can be used to predict the average performance of a population, and tolerance interval can be used to predict the performance of a given sample.
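The two numbers in this example can be reproduced as a sketch. The lower confidence limit of the mean is x̄ − t·s/√n. Here the Student t quantile is approximated by a first-order Cornish-Fisher correction of z. The lower tolerance limit is x̄ − k·s, using the Natrella approximation for the one-sided factor at 95% confidence and 95% coverage. For n = 100 that factor is about 1.93, which is what yields 179. Both quantiles are approximations chosen to keep the code dependency-free.

```python
from math import sqrt
from statistics import NormalDist


def lower_confidence_limit(mean, sd, n, confidence=0.95):
    """One-sided lower confidence limit of the mean."""
    z = NormalDist().inv_cdf(confidence)
    t = z + (z**3 + z) / (4 * (n - 1))  # approximate Student t quantile, df = n - 1
    return mean - t * sd / sqrt(n)


def lower_tolerance_limit(mean, sd, n, coverage=0.95, confidence=0.95):
    """One-sided lower tolerance limit mean - k*sd (Natrella approximation for k;
    intended for moderate n, where the factor a below is positive)."""
    zp = NormalDist().inv_cdf(coverage)
    zg = NormalDist().inv_cdf(confidence)
    a = 1 - zg**2 / (2 * (n - 1))
    b = zp**2 - zg**2 / n
    k = (zp + sqrt(zp**2 - a * b)) / a
    return mean - k * sd


lcl = lower_confidence_limit(275, 50, 100)  # about 266.7: average depth
ltl = lower_tolerance_limit(275, 50, 100)   # about 179: any single sample
```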

The above estimate of the tolerance interval would only be applicable to a population that is normally distributed. However, the distribution of the underlying population is often not normal [eg, when there is a natural boundary that the data cannot exceed (ie, 0% or 100%)]. Therefore, it is helpful to define the tolerance intervals using nonparametric methods to estimate the performance parameters regardless of the distribution of the underlying population. The one-sided nonparametric tolerance interval can be determined by finding the value for k that satisfies the cumulative binomial equation.73

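$$1 - CL = \sum_{i=0}^{k} \binom{n}{i} (1 - p)^{i}\, p^{\,n - i} \qquad (2)$$

where

$$\binom{n}{i} = \frac{n!}{i!\,(n - i)!} \qquad (3)$$

k is an integer between 0 and n (0 ≤ k ≤ n) giving the number of samples allowed to fail, n is the number of samples, p is the reliability (the proportion of the population expected to meet the performance criterion), and CL is the confidence level (eg, 0.95). By setting k = 0 (ie, 0 failures), the formula simplifies to:

$$p^{n} = 1 - CL \qquad (4)$$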

This equation is often used to determine the number of samples needed to verify a predetermined reliability and confidence level. For example, a one-sided tolerance interval with 95% confidence and 95% reliability can be determined from the performance on a set of 59 or more samples, regardless of whether the metric is parametric or nonparametric.73 This, of course, assumes that the performance of each sample is independent of the others and that the samples are representative of the population from which they are drawn. For example, a laboratory director may want to assess the maximum false-positive rate of his or her test (ie, the ratio of false-positive variants to the total number of variants per sample). If, after the test is performed on 59 representative samples, the highest observed false-positive rate is 1.9%, he or she can be 95% confident that 95% or more of his or her samples will have a false-positive rate ≤1.9%.
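Equations 2 to 4 can be solved numerically for the smallest n. With k = 0, solving Equation 4 gives n = ln(1 − CL)/ln p, which rounds up to 59 for 95%/95%. Allowing one failure (k = 1) raises the requirement to 93 samples. The sketch searches over n directly, so it handles any k.

```python
from math import comb


def min_samples(reliability=0.95, confidence=0.95, failures=0):
    """Smallest n such that observing at most `failures` failures among n
    samples demonstrates `reliability` with `confidence` (Equations 2-4).
    The cumulative binomial term decreases as n grows, so the search ends."""
    n = failures + 1
    while sum(comb(n, i) * (1 - reliability) ** i * reliability ** (n - i)
              for i in range(failures + 1)) > 1 - confidence:
        n += 1
    return n


n_zero_failures = min_samples()           # 59
n_one_failure = min_samples(failures=1)   # 93
```

In the nonparametric setting, the worst value observed across the 59 samples (for example, the highest false-positive rate) then serves as the 95%/95% one-sided tolerance limit.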

By testing a minimum of 59 samples during validation, conclusions can be drawn about the tolerance intervals of essentially any performance characteristic, whether it is parametric or nonparametric in nature. Laboratories are expected to be able to acquire quality metric data (eg, read depth, read length, bias, and quality scores) for 59 samples that contain SNVs. Ideally, these 59 samples would also contain other variants, such as indels. Samples containing indels are admittedly more challenging to obtain, and laboratories are encouraged to source as many samples with indels as possible to adequately determine assay performance. More complex variants may be difficult to source, so assay design approaches or quality controls may be needed to detect them confidently, as discussed below.

We recommend that the validation samples include previously characterized clinical samples of the specimen types intended for the assay (FFPE, blood, bone marrow); previously characterized clinical samples with each type of pathogenic alteration that the assay is intended to detect (eg, SNVs, indels, CNAs, SVs); samples with the most common mutations relevant to the intended clinical use of the panel; two or more samples for which a consensus sequence has been previously established for all regions covered by the panel (eg, National Institute of Standards and Technology reference material); and a minimum of 59 samples to assess quality metrics and performance characteristics.

PPA and PPV

PPA is the proportion of known variants that were detected by the test system. It requires that all true variants be known. The true presence of genetic variants can be determined using reference samples or reference methods (eg, Sanger sequencing). When using reference methods, it is possible to use a combined reference method (eg, Sanger sequencing coupled with targeted mutation analysis when the allele burden is expected to be low). However, the combined reference method must be defined before validation data are collected and cannot be used for discrepancy resolution, because this will bias the data.74 Because performance will likely vary by mutation type, the PPA should be determined for each type (eg, SNVs, small indels, larger indels, CNAs, SVs) (Table 2). Sourcing sufficient samples with known SNVs and perhaps small indels should not be a problem. These need not be pathogenic variants, because the goal is to demonstrate the analytical performance of the test. Nevertheless, common pathogenic variants should be included whenever possible. It may be difficult or impossible to find sufficient numbers of samples with larger indels or SVs. In such cases, the laboratory may choose to supplement the NGS test with another validated test (eg, FLT3-ITD fragment analysis, RT-PCR) until a sufficient number of cases is reached, include appropriate controls, or clearly state the test limitations within the report. When analyzing the validation data, the rate of detection of known positives for each sample should be determined and documented (ie, mean, SD, CIs, and tolerance intervals, or reliability). For example, a sample with 100,000 bp of known, targeted sequence may have 90 true SNVs, or a set of 59 samples may have 62 true known variants. Assuming coverage and quality indicators met quality thresholds, the PPA would be the proportion of the 90 or 62 variants, respectively, that were detected.
Discrepancy resolution should not be performed, because it will bias the data.74
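The per-sample and pooled PPA calculations described above can be sketched as follows. This is a minimal illustration: variants are represented as hypothetical string keys, the function names are illustrative, and the functions are meant to be called once per variant type. Discordant calls are counted as they are, with no discrepancy resolution.

```python
from statistics import mean, stdev


def ppa(known: set, detected: set) -> float:
    """Positive percentage agreement: share of known true variants that were detected.
    Extra (false-positive) detections do not affect PPA."""
    if not known:
        raise ValueError("PPA is undefined when no true variants are known")
    return len(known & detected) / len(known)


def ppa_summary(samples):
    """samples: list of (known, detected) variant sets, one pair per sample,
    for a single variant type. Calls are compared as-is against the predefined
    reference; discordant calls are not re-tested."""
    per_sample = [ppa(known, detected) for known, detected in samples]
    pooled = sum(len(k & d) for k, d in samples) / sum(len(k) for k, _ in samples)
    return {
        "per_sample": per_sample,
        "mean": mean(per_sample),
        "sd": stdev(per_sample) if len(per_sample) > 1 else 0.0,
        "pooled": pooled,
    }


# Hypothetical SNV keys for two validation samples.
sample_1 = (
    {"chr1:100A>G", "chr1:150C>T", "chr2:200C>T", "chr3:50G>A"},
    {"chr1:100A>G", "chr1:150C>T", "chr2:200C>T", "chr3:50G>A", "chr9:999A>C"},
)
sample_2 = ({"chr4:10A>T", "chr5:20G>C"}, {"chr4:10A>T"})
summary = ppa_summary([sample_1, sample_2])
print(round(summary["pooled"], 3))  # 0.833
```

Reporting both the per-sample mean and SD and the pooled proportion keeps samples with few known variants from being hidden inside the overall figure.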

PPV is the proportion of detected variants that are true positives. Again, it requires that all true variants be known, and the true presence of genetic variants can be determined using reference samples or reference methods (eg, Sanger sequencing) (Table 2).

As with PPA, the PPV should be determined for each mutation type (eg, SNVs, small indels, larger indels, CNAs, SVs). When analyzing the validation data, the proportion of detected variants that are true positives should be determined. For example, a sample with 100,000 bp of known, targeted sequence may have 90 true SNVs. Assuming coverage and quality indicators for these 100,000 bp met quality thresholds, if all 90 were detected along with 10 additional false-positive variants, the PPV would be 90/100, or 90%. Again, discrepancy resolution should not be performed because it will bias the data,74 and the overall performance for the validation set should also be determined.
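A PPV with a CI can be computed per variant type as sketched below. The guideline does not prescribe an interval method; the Wilson score interval is used here only as one common choice for a binomial proportion, and the per-type counts are hypothetical.

```python
import math
from statistics import NormalDist


def ppv_with_wilson_ci(true_pos: int, false_pos: int, confidence: float = 0.95):
    """Return (PPV, lower, upper), with bounds from the Wilson score interval."""
    n = true_pos + false_pos
    if n == 0:
        raise ValueError("PPV is undefined when no variants were detected")
    p_hat = true_pos / n
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    denom = 1 + z**2 / n
    center = (p_hat + z**2 / (2 * n)) / denom
    half_width = (z / denom) * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2))
    return p_hat, center - half_width, center + half_width


# Hypothetical (true positives, false positives) for each variant type.
counts = {"SNV": (90, 10), "small_indel": (18, 2)}
for vtype, (tp, fp) in counts.items():
    ppv, lo, hi = ppv_with_wilson_ci(tp, fp)
    print(f"{vtype}: PPV {ppv:.1%} (95% CI {lo:.1%}-{hi:.1%})")
```

Both types have a PPV of 90%, but the smaller indel set yields a much wider interval, which is one reason to document PPV separately for each variant type.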

We recommend that PPA and PPV be documented for each variant type (eg, SNVs, small indels, large indels, CNAs, SVs). For variant types for which 59 validation samples are not available, the laboratory should supplement the NGS test with another validated test until the required number of samples is reached, include appropriate controls, or clearly state the limitations in the report.

Repeatability/Reproducibility

Complex, multistep processes can introduce random error (or imprecision) at every step because of variation in instrumentation, reagents, and technique. To minimize variation, the instruments, reagents, and personnel must be qualified for the intended purpose. Nevertheless, variation can occur, and it should be quantified through the method validation. Given the number of possible sources of variation, it is not practical to assess each source independently. Rather, it is recommended to assess a minimum of three samples across all steps and over an extended period, to include all instruments, testing personnel, and multiple lots of reagents. Replicate (within-run) and repeat (between-run) testing should be performed. Acceptance criteria need to be set before validation data are acquired; for example, the SNV allele frequency or CNA must fall within a specified range of variation from run to run. If acceptance criteria are not met, additional precision studies may be required to assess sources of variation. Given the extensive quality controls and quality metrics included in most steps, sources of variation should be identifiable and quantifiable.
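A simplified summary of within-run and between-run variation for one variant in one sample might look like the following sketch. The acceptance criterion (maximum deviation from the overall mean) is a hypothetical example of a criterion fixed before testing, and the SD of run means is a simplification of a formal variance-components analysis (eg, nested ANOVA).

```python
import math
from statistics import mean, stdev


def precision_summary(runs: dict, tolerance: float) -> dict:
    """runs: run id -> replicate VAFs (%) of one variant in one sample.
    tolerance: maximum allowed deviation (VAF percentage points) of any
    measurement from the overall mean; a hypothetical criterion that must
    be fixed before the validation data are acquired."""
    within_sds = [stdev(reps) for reps in runs.values() if len(reps) > 1]
    run_means = [mean(reps) for reps in runs.values()]
    all_values = [v for reps in runs.values() for v in reps]
    overall = mean(all_values)
    return {
        # Root-mean-square of within-run SDs; assumes equal replicates per run.
        "within_run_sd": math.sqrt(mean(s**2 for s in within_sds)) if within_sds else 0.0,
        # SD of run means; a simplification of a formal variance-components analysis.
        "between_run_sd": stdev(run_means) if len(run_means) > 1 else 0.0,
        "passes": all(abs(v - overall) <= tolerance for v in all_values),
    }


# Hypothetical duplicate VAF measurements (%) from three runs.
runs = {"run1": [10.0, 12.0], "run2": [11.0, 11.0], "run3": [9.0, 13.0]}
print(precision_summary(runs, tolerance=2.0)["passes"])  # True
```

In this toy data set all variation is within-run (the run means are identical), which would point toward replicate-level rather than run-level sources of imprecision.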

We recommend that a minimum of three samples should be tested across all NGS testing steps to include all instruments, testing personnel, and multiple lots of reagents. Variance should be quantified at each NGS testing step for which data are available.

Reportable Range and Reference Range

The reportable range is the span of all test results that are considered valid. This should include the targeted regions that meet the minimum quality requirements, the variant types that have been validated, and the limits of detection for these. The reportable range should be included in the report, perhaps together with the methods and limitations so that it is clearly understood by the ordering provider what regions, variants, and allele burdens would not be detected.
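The three components of the reportable range listed above (targeted regions meeting quality requirements, validated variant types, and their limits of detection) can be expressed as a single check, sketched below. The `Call` fields, the interval representation, and the threshold values are all hypothetical placeholders; each laboratory derives its own from validation data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Call:
    chrom: str
    pos: int            # 1-based position
    variant_type: str   # eg "SNV", "small_indel"
    vaf: float          # variant allele fraction, 0-1
    depth: int          # read depth at the locus


# Illustrative placeholders only, not values from the guideline.
VALIDATED_LLOD = {"SNV": 0.05, "small_indel": 0.05}
MIN_DEPTH = 250


def in_reportable_range(call: Call, regions) -> bool:
    """regions: (chrom, start, end) targeted intervals, 1-based inclusive,
    that met minimum quality requirements during validation."""
    in_target = any(c == call.chrom and start <= call.pos <= end for c, start, end in regions)
    llod = VALIDATED_LLOD.get(call.variant_type)  # None for unvalidated types
    return in_target and llod is not None and call.depth >= MIN_DEPTH and call.vaf >= llod


regions = [("chr7", 100, 200)]
print(in_reportable_range(Call("chr7", 150, "SNV", 0.10, 500), regions))
```

A call that fails any of the three conditions falls outside the validated reportable range and corresponds to what the report should describe as not detectable.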

The reference range is the range of normal values. It is not simply the human reference genome, which is a compilation of multiple genomes from healthy individuals, but rather the set of variants that are considered benign or nonpathogenic. For genetic variants, this could be difficult to define and may vary by the intended use of the test. Because our understanding of genotype-phenotype correlations is far from complete, some laboratories may opt to report all detected variants, whereas others may opt to report only those that are considered clinically informative. Regardless, the reference range should be included in the report so that it is clearly understood by the ordering provider what types of variants would or would not be reported.

The appropriate reportable range and reference range depend on the intended use of the test. We recommend that both be determined as part of the validation process and included in the patient report.

Limits of Detection

It is recommended that the LOD for each type of genetic alteration be estimated during the O&F phase using cell line mixing experiments, as described above and shown in a series of templates (AMP Validation Resources, http://www.amp.org/committees/clinical_practice/ValidationResources.cfm, last accessed August 22, 2016).
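Planning a cell line mixing series comes down to simple arithmetic, sketched below. The sketch assumes that the wild-type background DNA carries no variant reads, that both DNA sources have the same copy number at the locus, and that DNA input is quantified accurately; the target VAFs and function names are hypothetical.

```python
def mixing_fraction(target_vaf: float, cell_line_vaf: float) -> float:
    """Fraction of cell-line DNA to mix into wild-type DNA so that the mixture
    has the target variant allele fraction (VAF). Assumes the wild-type DNA
    carries no variant reads and both DNAs have the same copy number at the locus."""
    if not 0 < target_vaf <= cell_line_vaf <= 1:
        raise ValueError("require 0 < target_vaf <= cell_line_vaf <= 1")
    return target_vaf / cell_line_vaf


def dilution_series(cell_line_vaf: float, target_vafs) -> dict:
    """Map each target VAF in a planned dilution series to its mixing fraction."""
    return {t: mixing_fraction(t, cell_line_vaf) for t in target_vafs}


# Assumption: a heterozygous variant in a near-diploid cell line has a VAF of about 0.5.
for vaf, frac in dilution_series(0.5, [0.20, 0.10, 0.05, 0.025]).items():
    print(f"target VAF {vaf:.1%}: {frac:.0%} cell-line DNA")
```

Because the cell line's own VAF enters directly, it is worth measuring it rather than assuming 0.5, particularly for aneuploid lines.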

The lower LOD (LLOD) could be defined as the minor allele fraction at which a variant is reliably detected in 95% of samples. Often, a laboratory director may choose to run 20 validation samples to demonstrate the LLOD, assuming that 19 or 20 correct results would indicate a reliability ≥95%. However, by testing just 20 samples, the director could not be confident that the test would reliably detect 95% or more of samples at that LLOD. If the true reliability were 90%, what would be the probability of getting 20 of 20 correct? This is simply $(0.90)^{20}$, or approximately 12%. In other words, such a result would not be particularly unlikely, and 20 samples therefore would not give confidence that the reliability is at least 95%. If 100 samples had been run and all were correct, the corresponding probability would be $(0.90)^{100}$, or approximately 0.003%, which would be very unlikely. We would therefore feel confident that our reliability must be >90%.
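The argument above can be turned into a check on dilution-series results, sketched below. The rule used here (walk down from the highest allele fraction and accept a level only if every sample was detected and that outcome would have had probability ≤ α had the true reliability been only 95%) is an illustrative assumption, as are the function names and the results table.

```python
def prob_all_correct(reliability: float, n: int) -> float:
    """Probability that all n independent samples are detected when each
    is detected with probability `reliability`."""
    return reliability ** n


def lowest_supported_level(results: dict, reliability: float = 0.95, alpha: float = 0.05):
    """results: allele fraction -> (detected, tested).
    Walk down from the highest allele fraction; a level is supported only if
    every sample was detected AND that outcome would have had probability
    <= alpha had the true reliability been only `reliability`.
    Returns the lowest supported level before the first failure, or None."""
    lowest = None
    for af in sorted(results, reverse=True):
        detected, tested = results[af]
        if detected < tested or prob_all_correct(reliability, tested) > alpha:
            break
        lowest = af
    return lowest


print(round(prob_all_correct(0.90, 20), 3))  # 0.122

# Hypothetical dilution-series results: allele fraction -> (detected, tested).
results = {0.10: (59, 59), 0.05: (59, 59), 0.025: (20, 20), 0.01: (59, 59)}
print(lowest_supported_level(results))  # 0.05
```

The 2.5% level is not accepted even though all 20 samples were detected, which mirrors the point that 20 of 20 correct does not demonstrate 95% reliability.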

The number of samples required can therefore be calculated by defining the reliability and confidence that we would like to demonstrate: $r^{n} = \alpha$, where r is the reliability, n is the number of samples, and α is the significance level (ie, 1 minus the confidence level, or the probability of a type I error). By solving for n, it can be shown:

$$n = \frac{\ln \alpha}{\ln r} \tag{5}$$

If we want to be 95% confident (α = 0.05) of at least 95% reliability (r = 0.95), the minimum number of samples can be calculated to be 59. Demonstrating higher reliability or confidence requires more samples, and that number can be calculated in the same way. This minimum assumes that all results are correct. If some results are incorrect, the reliability together with CIs could be calculated using one of several more complex statistical methods.73
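As a worked check of equation (5), with values rounded and n rounded up to the next integer, the 95%/95% case and two illustrative higher-demand settings (higher reliability, then higher confidence) give:

$$
\begin{aligned}
r = 0.95,\ \alpha = 0.05:&\quad n = \frac{\ln 0.05}{\ln 0.95} = \frac{-2.996}{-0.0513} \approx 58.4 \;\Rightarrow\; n = 59\\
r = 0.99,\ \alpha = 0.05:&\quad n = \frac{\ln 0.05}{\ln 0.99} = \frac{-2.996}{-0.01005} \approx 298.1 \;\Rightarrow\; n = 299\\
r = 0.95,\ \alpha = 0.01:&\quad n = \frac{\ln 0.01}{\ln 0.95} = \frac{-4.605}{-0.0513} \approx 89.8 \;\Rightarrow\; n = 90
\end{aligned}
$$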

Interestingly, the natural log of 0.05 is approximately −3.00 (−2.996). Because ln r ≈ −(1 − r) when r is close to 1, equation 5 at a 95% confidence level reduces to n ≈ 3/(1 − r), which is the rule of three.74,75 For example, for a fluorescence in situ hybridization (FISH) test, a director may choose to count 100 cells and, seeing no translocation, claim that <1% of cells have the translocation. However, to be 95% confident that <1% of cells have the translocation, 300 cells would have to be counted without a single positive. Likewise, to claim a reliability ≥95% with 95% confidence, he or she would need to test 3 × 20, or 60, samples. The rule of three is convenient and gives an accurate estimate of the upper bound of the binomial CI, when no failures are observed, for sample sizes of 30 or more.
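The rule of three can be compared with the exact zero-failure calculation in a few lines. This is an illustrative sketch with hypothetical function names; `exact_upper_bound` is the one-sided upper confidence bound on a proportion when zero positives are observed, obtained by solving equation (5) for the failure rate.

```python
import math


def exact_n(max_failure_rate: float, alpha: float = 0.05) -> int:
    """Zero-failure sample size from equation (5), with r = 1 - max_failure_rate."""
    return math.ceil(math.log(alpha) / math.log(1 - max_failure_rate))


def rule_of_three_n(max_failure_rate: float) -> int:
    """Rule-of-three approximation n ~ 3 / (1 - r); applies to 95% confidence only."""
    return math.ceil(3 / max_failure_rate)


def exact_upper_bound(n: int, alpha: float = 0.05) -> float:
    """One-sided upper confidence bound on a proportion after 0 positives in n trials."""
    return 1 - alpha ** (1 / n)


for rate in (0.05, 0.01):
    print(rate, exact_n(rate), rule_of_three_n(rate))

# 100 FISH cells with no translocation support an upper bound of only about 3%.
print(round(exact_upper_bound(100), 4))  # 0.0295
```

The rule of three slightly overestimates the required sample size (60 vs 59; 300 vs 299), so it errs on the conservative side.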

Different mutation types would likely have different lower limits of detection and therefore LLOD should be determined for each variant type. Of course, it may prove difficult to source 59 or more validation samples with the targeted mutations and VAF needed to validate the LLOD. Therefore, sensitivity controls would be needed to ensure detection of targeted mutations at the LLOD.

Plasmid controls and other artificial constructs may be used during validation and clinical testing to demonstrate accurate detection of certain variants. However, plasmids are far less complex than typical clinical samples, and it has been shown that they are more readily detected at a given allele burden.75 More recently, it has been shown that linearized plasmid controls performed with similar efficiency as formalin-fixed cell line genomic DNA and could be used to assess or monitor LLOD provided that the genomic DNA and plasmids were fragmented to comparable size.76 Therefore, plasmid controls and other artificial constructs potentially could be used for the validation of the LLOD and the monitoring of assay performance.77

We recommend that the LLOD for each variant type should be determined. A minimum of 59 samples should be used to establish the LLOD. If sufficient samples cannot be sourced, sensitivity controls should be used.

Interfering Substances and Carryover

Interfering substances that are known to affect molecular testing, particularly amplification-based methods (eg, heavy metal fixatives, melanin, hemoglobin), should be addressed during validation. Nucleic acid extraction and purification steps normally remove most potential contaminants. However, consideration must be given to the types of samples used in the validation to ensure that all intended sample types can be amplified and sequenced. For example, melanomas need to be included in the validation if the intended use is to detect BRAF mutations. In addition, consideration should be given to interference from repetitive sequences and pseudogenes. With highly fragmented DNA, short reads derived from pseudogenes can be misaligned and yield false-positive results. Highly repetitive sequences may also reduce on-target reads by depleting capture probes.

Carryover is a recognized problem with NGS tests that are designed to detect variants with low allele burden. Carryover should be addressed through test design and validation as well as through the inclusion of no template controls (NTCs). Bioinformatics approaches that detect human-to-human sample contamination have been described and should also be used to monitor carryover.42,78 During test design, procedures should be put in place to avoid carryover from one sample to another (eg, changing scalpel blades between samples). In addition, during validation and in every clinical run thereafter, an NTC should be included to verify that no carryover from neighboring wells has occurred during amplification steps. It is not necessary to take the NTC all of the way through sequencing, because a quality check on the amplified product may suffice.

We recommend that the possible sources of test interference should be specifically identified, with the impact of each systematically evaluated during validation. The risk of carryover should be evaluated at each step of the assay. A no template control should be included in every run but need not be evaluated all of the way through sequencing.

Clinical Validation and Clinical Utility