Abstract

Single-molecule research techniques such as patch-clamp electrophysiology deliver unique biological insight by capturing the movement of individual proteins in real time, unobscured by whole-cell ensemble averaging. The critical first step in analysis is event detection, so called “idealisation”, where noisy raw data are turned into discrete records of protein movement. To date there have been practical limitations in patch-clamp data idealisation; high quality idealisation is typically laborious and becomes infeasible and subjective with complex biological data containing many distinct native single-ion channel proteins gating simultaneously. Here, we show a deep learning model based on convolutional neural networks and long short-term memory architecture can automatically idealise complex single molecule activity more accurately and faster than traditional methods. There are no parameters to set; baseline, channel amplitude or numbers of channels for example. We believe this approach could revolutionise the unsupervised automatic detection of single-molecule transition events in the future.

Subject terms: Ion transport, Machine learning

Numan Celik et al. present a deep learning model that automatically detects single-molecule events against a noisy background in patch-clamp electrophysiological data, based on convolutional neural networks and long short-term memory architecture. This algorithm represents a step torward a fully automated electrophysiological platform.

Introduction

Ion channels produce functional data in the form of electrical currents, typically recorded with the Nobel Prize winning patch-clamp electrophysiological technique1,2. The role of ion channels in the generation of the nerve action potential was first described in detail in the Nobel Prize winning work of Hodgkin and Huxley3, but it is now known they sub-serve a wide range of processes via control of the membrane potential4. Loss or dysregulation of ion channels directly underlies many human and non-human animal diseases (so called channelopathies); including cardiovascular diseases such as LQT associated Sudden Death5. The first step in analysing ion channel or other single molecule data (which may, in fact, include several individual “single” proteins) is to idealise the noisy raw data. This is typically accomplished by human supervised threshold-crossing although other human supervised methods are available6,7. This produces time-series data with each time point binary classified as open or closed; with more complex data this is a categorical classification problem, with classifiers from zero to n channels open. Similar data are also acquired from other single molecule techniques such as lipid bilayer8 or single molecule FRET9–11. These data can then be used to re-construct the hidden Markov stochastic models underlying protein activity, using applications such as HJCFIT12, QuB (SUNY, Buffalo13) or SPARTAN11. The initial idealisation step, however, is well recognised by electrophysiologists as a time consuming and labour-intensive bottleneck. This was perhaps best summarised by Professors Sivilotti and Colquhoun FRS14 “[patch-clamp recording is] the oldest of the single molecule techniques, but it remains unsurpassed [in] the time resolution that can be achieved. It is the richness of information in these data that allows us to study the behaviour of ion channels at a level of detail that is unique among proteins. [BUT] This quality of information comes at a price […]. Kinetic analysis is slow and laborious, and its success cannot be guaranteed, even for channels with “good signals”. In the current report we show that the solution to these problems could be to apply the latest deep learning methodology to analyse single channel patch-clamp data. For straightforward research with manual patch-clamp equipment, and in patches with only one or two channels active at a time, it could be argued that the current methods are satisfactory, however, from our own experience, many patches have several channels gating simultaneously and need to be discarded, wasting experimenter time and quite possibly, increasing the numbers of animal donors required. Furthermore, several companies have now developed automated, massively parallel, patch-clamp machines15 that have the capacity to generate dozens or even hundreds of simultaneous recordings. Use of this technology for single channel recording is greatly compromised by limitations with current software. For example, in most currently available solutions the user has to set the number of channels in the patch, the baseline and the size of the channel manually. If there is baseline drift this would need to be corrected to achieve acceptable results. Our vision is that new deep learning methodology, could in the future make such analyses entirely plausible.

Deep learning16 is a machine-learning development that has been used to extract features and/or detect objects from different types of datasets for classification problems including base-calling in single-molecule analysis17,18. Convolutional neural network (CNN) layers are a powerful component of deep learning useful for learning patterns within complex data. Two-dimensional (2D) CNNs are most commonly applied to computer vision19–21, and we have previously used them for automatic diagnosis of retinal disease in images22. An adaptation of the 2D CNN is the one-dimensional (1D) CNN. These have been specifically developed to bring the power of the 2/3D CNN to frame-level classification of time series, and have previously been used in nanopore time-series single-molecule event classification23, but never previously patch clamp data. More commonly, the deep learning architecture known as recurrent neural networks (RNNs) have been applied to time series analyses24,25. General RNNs are a useful model for text/speech classification and object detection in time series data, however, the model begins degrading once output information depends on long time scales due to a vanishing gradient problem26. Long short-term memory (LSTM) networks, are a type of RNN that resolve this problem27–29. While 1D-CNN layers can effectively classify raw sequence data, in the current work we combine these with LSTM units to improve the detection of learn long-term temporal relationships in time series data30.

In theis current work, we introduce a hybrid recurrent CNN (RCNN) model to idealise ion channel records, with up to five ion channel events occurring simultaneously. To train and validate models, we developed an analogue synthetic ion channel record generator system and find that our Deep-Channel model, involving LSTM and CNN layers, rapidly and accurately idealises/detects experimentally observed single molecule events without need for human supervision. To our knowledge, this work is the first deep learning model designed for the idealisation of patch-clamp single molecule events.

Results

Benchmarking Deep-Channel event detection against human supervised analyses

Our data generation workflow is illustrated in Fig. 1a, c and our Deep-Channel architecture in Fig. 2. In training and model development we found that whilst LSTM models gave good performance, the combination with a time distributed CNN gave increased performance (Supplementary Table 1), a so called RCNN we call here Deep-Channel. After training and model development (see Methods) we used 17 newly generated datasets, previously unseen by Deep-Channel, and thus uninvolved with the training process. Training performance metrics are given in Supplementary Fig. 1. Authentic ion channel data (Fig. 1b) were generated as described in the methods from two kinetic schemes, the first; M1 (see Methods and Fig. 3a) with low channel open probability, and the second; M2 with a high open channel probability and thus an average of approximately three channels open at a time (Fig. 3b). Across the datasets we included data from both noisy, difficult to analyse signals and low noise (high signal to ratio samples) as would be the case in any patch-clamp project. Examples of these data, together with ground truth and Deep-Channel idealisation are shown in Fig. 4. Note that all the Deep-Channel results described in this paper were achieved with a single deep learning model script [capacity to detect a maximum of five channels] with no human intervention required beyond giving the script the correct filename/path. So, to clarify; there was no need to set baseline, channel amplitude or number of channels present, etc.

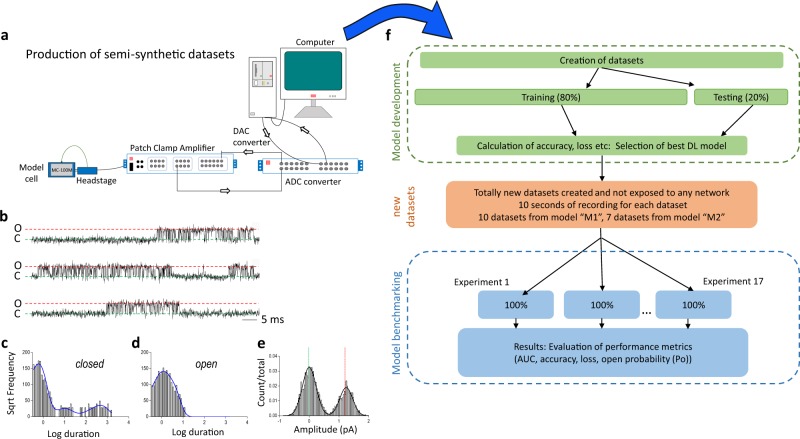

Fig. 1. Workflow diagram: generation of artificial analogue datasets.

a For training, validation and benchmarking, data were generated first as fiducial records with authentic kinetic models in MATLAB (Fig. 2); these data were then played out through a CED digital to analogue converter to a patch clamp amplifier that sent this signal to a model cell and recorded the signal back (simultaneously) to a hard disk with CED Signal software via a CED analogue to digital converter. The degree of noise could be altered simply by moving the patch-clamp headstage closer to or further from the PC. In some cases, drift was added as an additional challenge via a separate Matlab script. Raw single channel patch clamp data produced by these methods are visually indistinguishable from genuine patch clamp data. To illustrate this point, we show here a standard analysis work-up for one such experiment with b raw data, then it’s analyses with QuB: kinetic analyses of c channel open and d closed dwell times. Finally, we show (e) all points amplitude histogram. The difference between this and standard ion channel data is that here we have a perfect fiducial record with each experimental dataset, which is impossible to acquire without simulation. f Illustrates our over-all model design and testing workflow. The Supplementary Information includes training metrics from the initial validation and the main text here shows performance metrics acquired from 17 experiments with entirely new datasets. The training datasets typically contained millions of sample points and the 17 benchmarking experiments were sequences of 100,000 samples each.

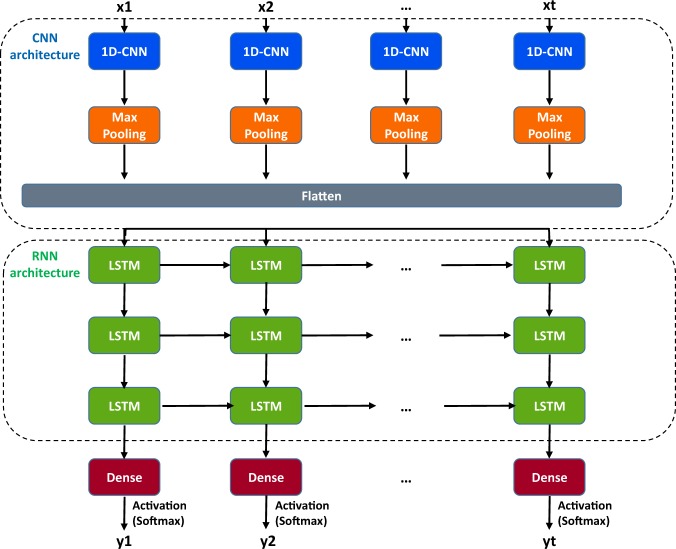

Fig. 2. Deep-Channel model architecture.

The input time series data were fed to the 1D Convolution layer (1D-CNN) which includes both 1D convolution layers and max pooling layers. After this, data was flattened to the shape of the next network layer, which is an LSTM. Three LSTM layers were stacked and each contains 256 LSTM units. Dropout layers were also appended to all LSTM layers with the value of 0.2 to reduce overfitting. This returned features from the stacked LSTM layers. The updated features are then forwarded to a regular dense layer with a SoftMax activation function giving an output representing the probability of each class (e.g., the probability × channels being open at each time step). In post-network processing, the most-likely number of channels open at a given time is calculated simply as the class with the highest probability at a given instant (Argmax).

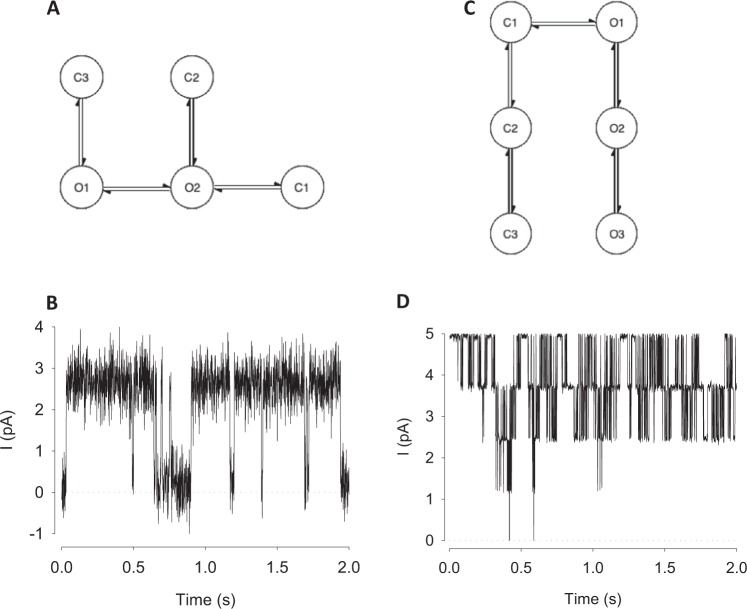

Fig. 3. “Patch clamp” data were produced from two different stochastic models.

a, c The Markovian models used for simulation of ion channel data. Ion channels typically move between Markovian states that are either closed (zero conductance) or open (unitary conductance, g). The current passing when the channel is closed is zero (aside from recording offsets and artefacts), whereas when open the current (i) passing is given by i = g × V, where V is the driving potential (equilibrium potential for the conducting ion minus the membrane potential). In most cases there are several open and closed states (“O1”–“O3”, or “C1”–“C3”, respectively). The central dogma of ion channel research is that the g will be the same for O1, O2, or O3. Although substates have been identified in some situations, these are beyond the scope of our current work. a Model M1; the stochastic model from Davies et al.41 and its output. b This model has a low open probability, and so the data is mostly a representation of zero or one channel open. c Model M2; the stochastic model from ref. 42 and its output data (d) since open probability is high the signal is largely composed of three or more channels simultaneously open.

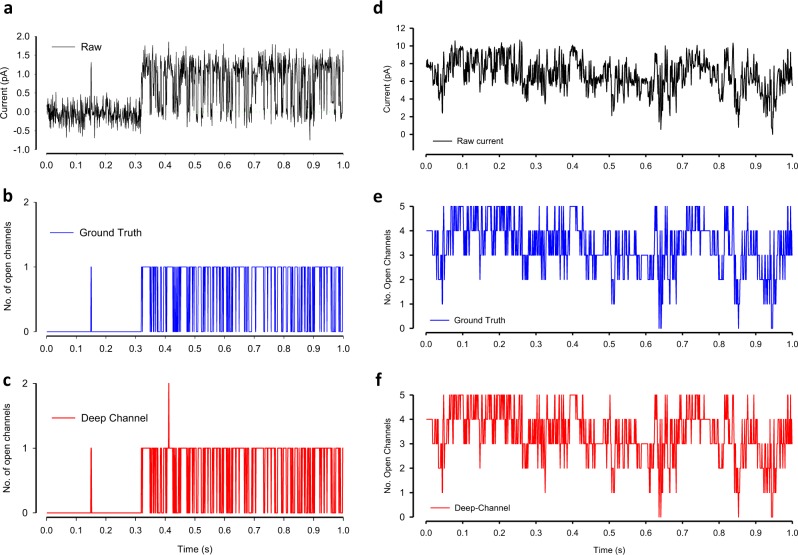

Fig. 4. Qualitative performance Deep-Channel with previously unseen data.

a–c Representative example of Deep-Channel classification performance with low activity ion channels (data from model M1, Fig. 3a, b): a The raw semi-simulated ion channel event data (black). b The ground truth idealisation/annotation labels (blue) from the raw data above in (a). c The Deep-Channel predictions (red) for the raw data above (a). d–f Representative example of Deep-Channel classification performance with 5 channels opening simultaneously (data from model M2, Fig. 3c, d). d The semi-simulated raw ion channel event data (black). e The ground truth idealisation/annotation labels (blue) from the raw data above in (d). f The Deep-Channel label predictions (red) for the raw data above (d).

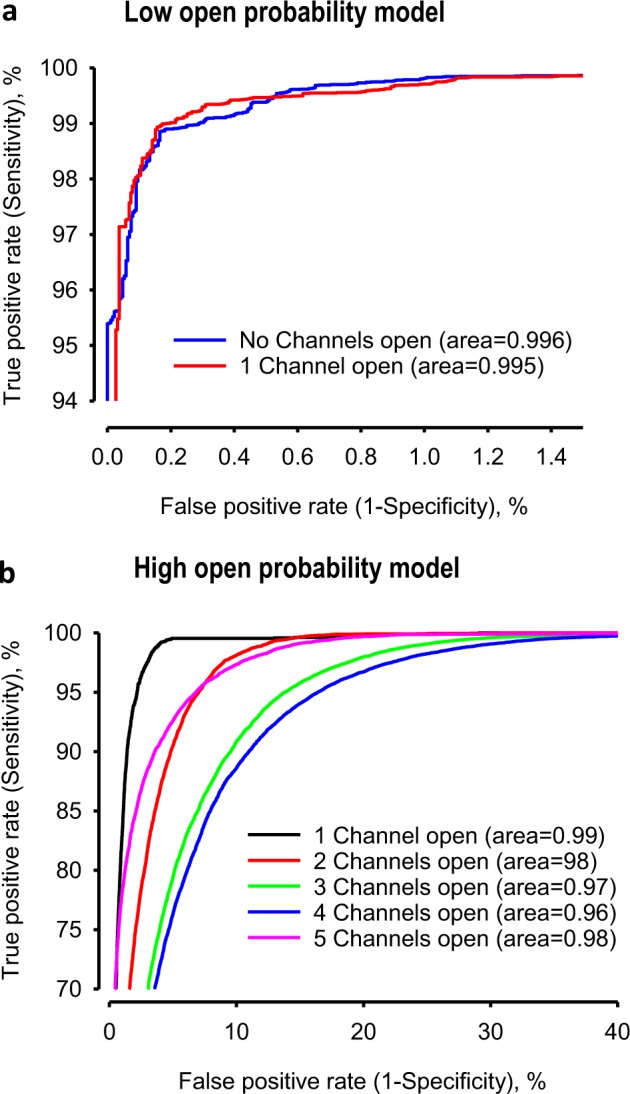

In datasets where channels had a low opening probability (i.e., from model M1), the data idealisation process becomes close to a binary detection problem (Fig. 4a), with ion channel events type closed or open (labels “0” and “1”, respectively). In this classification, the ROC area under the curve (AUC) for both open and closed event detection exceeds 96% (Fig. 5, Tables 1 and 2). Full data for a representative example experimental ROC is shown in Fig. 5a, with the associated confusion matrix shown in Table 2. Overall, in low open probability experiments, Deep-Channel returned a macro-F1 of 97.1 ± 0.02% (standard deviation), n = 10, whereas the SKM method in QuB resulted a macro-F1 of 95.5 ± 0.025%, and 50% threshold method in QuB gave a macro-F1 of 84.7 ± 0.05%, n = 10.

Fig. 5. Quantitative performance of Deep-Channel with previously unseen data.

a Representative receiver operating characteristic (ROC) curve for ion channel event classification using the M1 stochastic gating model (Fig. 3a) and with only one channel present. The associated confusion matrix is shown in Table 2. b Representative receiver operating characteristic (ROC) curve for ion channel event classification using the M2 stochastic gating model (Fig. 3) and with five channels present The associated confusion matrix is shown in Table 3. Mean AUC are given in Table 1. The mean ROC curve area under the curves (AUC) for all labels and all 17 experiments are given in Table 1.

Table 1.

Deep-Channel ROC area under the curve (AUC) values achieved during 17 separate experiments, representative examples shown in Fig. 5.

| Mean ROC AUC | ||

|---|---|---|

| Number of Channels open simultaneously | low Popen data model M1, n = 10 mean ± SD | High Popen data model M2, n = 7 mean ± SD |

| 0 | 0.99 ± 0.006 | 0.9997 ± 0.0002 |

| 1 | 0.96 ± 0.05 | 0.9973 ± 0.0022 |

| 2 | − | 0.9917 ± 0.0065 |

| 3 | − | 0.9824 ± 0.0139 |

| 4 | − | 0.9787 ± 0.0169 |

| 5 | − | 0.9937 ± 0.0050 |

Table 2.

The confusion matrix table for the example in Fig. 5a.

| Deep-Channel predicted labels | |||||||

|---|---|---|---|---|---|---|---|

| 0 | 1 | 2 | 3 | 4 | 5 | ||

| True labels | 0 | 96890 | 726 | 0 | 0 | 0 | 0 |

| 1 | 9 | 2371 | 0 | 0 | 0 | 0 | |

| 2 | 0 | 0 | 0 | 0 | 0 | 0 | |

| 3 | 0 | 0 | 0 | 0 | 0 | 0 | |

| 4 | 0 | 0 | 0 | 0 | 0 | 0 | |

| 5 | 0 | 0 | 0 | 0 | 0 | 0 | |

Label 0 = no channels open, label 1 = one channel open. Note that Deep-Channel is trained to recognise up to 5 channels opening at a time, however, with only one channel active at a time, the maximum ground truth class (True Label) is label 1 (one channel open). The emboldened numbers are the number of data points correctly predicted by Deep-Channel

In cases where datasets included highly active channels (i.e., from model M2, Figs. 3c, d, 4b) this becomes a multi-class comparison problem and here, Deep-Channel outperformed both 50% threshold-crossing and SKM methods in QuB considerably. The Deep-Channel macro-F1 for such events was 0.87 ± 0.07 (standard deviation), n = 7, however segmented-k means (SKM) macro-F1 in QuB, without manual baseline correction, dropped sharply to 0.57 ± 0.15, and 50% threshold-crossing macro-F1 fell to 0.47 ± 0.37 (Student’s paired t test between methods, p = 0.0052). An example ROC for high activity channel detection, and associated confusion matrix are shown in Fig. 5b and Table 3. Frequently, in drug receptor or toxicity studies, biologists look for changes in open probability and so we also compared the open probability from manual threshold crossing, SKM and Deep-Channel against ground truth (fiducial). In the presence of several simultaneously opening channels in some quite noisy datasets with baseline drift, careful manual 50% threshold crossing and SKM sometimes essentially fail entirely, but Deep-Channel continues to be successful. For example, in some experiments with very noisy data, threshold crossing open probability estimations were over 100% out and SKM detected only near half of the open events (~50% accuracy). Nevertheless, overall, there were highly significant correlations for both Deep-Channel vs. ground truth (0.9998, 95% confidence intervals: 0.9996–0.9999, n = 17) and threshold crossing vs ground truth (0.95, 95% confidence intervals: 0.87–0.98, n = 17). In terms of speed, Deep-Channel consistently outperforms threshold crossing. Deep-Channel analysed at the rate of approximately 10 s of data recording in under 4 s of computational time, whereas analysis time with threshold crossing in QuB was entirely dependent on the complexity of the signal.

Table 3.

The confusion matrix table for the example in Fig. 5b.

| Deep-Channel predicted labels | |||||||

|---|---|---|---|---|---|---|---|

| 0 | 1 | 2 | 3 | 4 | 5 | ||

| True labels | 0 | 129 | 15 | 0 | 0 | 0 | 0 |

| 1 | 130 | 1993 | 262 | 0 | 0 | 0 | |

| 2 | 0 | 932 | 9580 | 1621 | 0 | 0 | |

| 3 | 0 | 0 | 1884 | 25241 | 3054 | 0 | |

| 4 | 0 | 0 | 0 | 2782 | 31439 | 2579 | |

| 5 | 0 | 0 | 0 | 0 | 2050 | 16308 | |

Label 0 = no channels open, Label 1 = one channel open, label 2 = 2 channels open etc. The mean ROC curve area under the curves (AUC) for all labels and all 17 experiments are given in Table 1. The emboldened numbers are the number of data points correctly predicted by Deep-Channel

Deep-Channel also proved robust to different levels of signal-to-noise ratios (SNR). For example, F1 scores in low, medium and high SNR levels are: low (SNR = 5.35 ± 2.18, F1 = 0.91 ± 0.016), medium (SNR = 12.74 ± 3.65, F1 = 0.96 ± 0.011) and high (SNR = 60 ± 4.52, F1 = 0.98 ± 0.007).

Biological ion channel data testing metrics

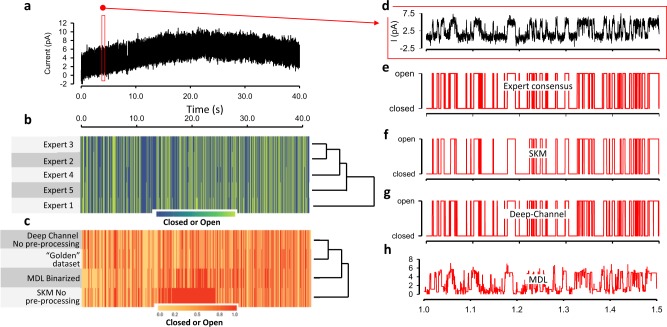

As stated earlier, a true Ground Truth is not possible with native ion channels signals recorded from biological membranes. However, with straightforward clear signals such as that shown in Fig. 6, experts can idealise these data with supervised methods. We therefore chose a stretch of real data from31 including moderate level of noise and drift (Fig. 6a). We then had five ion channel experts idealise these data. For each of the (approx.) 880,000 time-points we then took the mode of their binary idealisation value (0-closed or 1-open) to construct a “golden” dataset to use as an effective ground truth (Fig. 6d, e). The consensus idealisation included 3241 openings. To check for inter-user agreement (Fig. 6b) we calculated the over-all Fleiss Kappa32 implemented in R with the irr package, Fleiss Kappa was 0.953, with p ≤ 1e–6. We then idealised this raw data (blinded from the “golden” dataset) with Deep-Channel and a range of other alternatives (Fig. 6f, g, h). The two alternatives we benchmark here are SKM using QuB13 and Minimum description length (MDL, using MatLab)33. Note that with Deep-Channel, there are no parameters to set and no post-processing. With SKM one needs to identify closed and open state levels and number of channels present. In the case of MDL there is no pre-processing necessary and no parameters to set, but the output is non-binary. Therefore, we ran a 50% event threshold crossing method on this to output final open and closed calls. These idealisations were all then compared to the “golden” dataset with a Cohen’s kappa agreement script, Table 4. Also, we fitted these data with a clustering and heatmap model in R, this allows one to visually compare the agreement at each timepoint.

Fig. 6. Performance of Deep-Channel with biological ion channel data.

a Single channel data from ref. 31 with noise approximately 850,000 data points decimated by a factor of 50 for display only. b The modal idealisation by five ion channel experts (“golden” idealisation). c Agreement clustering and heatmap between “golden” dataset, MDL, Deep-Channel and SKM. d A zoomed view of 500 ms of raw biological data taken from (a). e The expert consensus (modal) idealisation. f The idealisation output by SKM (after setting channel closed and open levels and setting the number of channels to 1), g By Deep-Channel and h by MDL. Note that c, f, g and h were produced without baseline correction.

Table 4.

The Cohen’s kappa agreement scores for automatic analyses, including Deep-channel, SKM, and MDL with golden dataset which is built by five different human experts using their existing software tools.

| Cohen’s kappa score | 0.95% CI | |

|---|---|---|

| MDL | 0.766a | 0.7646–0.7674 |

| SKM | 0.6497b | 0.6481–0.6513 |

| Deep-channel | 0.9279 | 0.9288–0.9304 |

aMDL does not output an idealisation and so we binarized the MDL output using a 50% threshold crossing algorithm to allow comparison

bSKM implemented in QuB has no true automatic function, one need to set the starting baseline, channel amplitude and number of channels before starting. We did not perform additional baseline correction or input baseline nodes for this comparison

Discussion

Single molecule research, both FRET and patch-clamp electrophysiology provide high resolution data on the molecular state of proteins in real time, but their analyses are usually time consuming and require expert supervision. In this report, we demonstrate that a deep neural network, Deep-Channel, combining recurrent and convolutional layers can detect events in single channel patch-clamp data automatically. Deep-Channel is completely unsupervised and thus adds objectivity to single channel data analyses. With complex data, Deep-Channel also outperforms traditional manual threshold crossing both in terms of speed and accuracy. We find this method works with very high accuracy across a variety of input datasets.

The most established single molecule method to observe single-channel gating is patch-clamp recording2. Its development led to the award of the Nobel Prize to Sakmann and Neher in 199134 and the ability to observe single channels gate in real time validated the largely theoretical model of the action potential developed in the earlier Nobel Prize winning work of Hodgkin and Huxley3. Whilst the power and resolution of single-channel recording has never been questioned, it is well accepted to be a technically difficult technique to use practically since the data stream created requires laborious supervised analysis. In some cases, where several single channels gate simultaneously, it becomes impractical to analyse and data can be wasted. For practical purposes, drug screening etc., where subtle changes in channel activity could be crucial5, this means that the typical method is to measure bulk activity from a whole-cell simultaneously. Average current can be measured which is useful, but does not contain the detailed resolution that individual molecular recording has14. Furthermore, new technologies are emerging which can record ion channel data automatically15,35, but whilst whole-cell currents are large enough to be analysed automatically, there are currently no solutions to do the same with single-channel events. Currently, it could be observed that automated patch-clamp apparatus are not used a great deal for single-channel studies and so automated analysis software are of little value, however, we feel that the reverse is true; this equipment is rarely used for single channel recording because no fully automated analysis exists. In this report we show that the latest machine learning methods, that of deep learning, including recurrent and CNN layers could address these limitations.

The fundamental limitation of applying deep learning to classification of biological data of all kinds is the prerequisite for training data. Deep learning is a form of supervised learning where during the training phase, the network must be taught at every single instant what the ground truth state is (it looks open, but is it really open or closed?). We considered two possible approaches to deal with this conundrum: to collect data from easily analysable single molecule/patch clamp experiments and get a human expert to idealise this (classify or annotate it). This has two fundamental flaws that could be referred to as Catch-22. Firstly, if you train a network only to detect easy to analyse data, the output will be a network that can only detect easy-to-detect events. Secondly, even then, if the events had to be human detected in the first place, it would mean that the final (trained) network would learn the same events as the human taught it; and learn the same errors. Analyses of ambiguous events would not tend toward detection with perfect accuracy, but inherit human biased errors. We therefore developed an alternative approach. Single channels gate in a stochastic, Markovian manner and therefore an unlimited number of idealised records can be simulated. This approach has been successfully applied before with other analyses development studies12,36. The limitation is that there are inherent distortions and filtering that occur during collection of genuine data from a real analogue world. These can be imitated mathematically, but instead we used a previously unreported method of generating semi-synthetic training data; we played our idealised records out to a genuine patch-clamp amplifier using the dynamic-clamp approach37 and used an established analogue test cell (resistors and capacitors equivalent to a patch pipette and membrane). Our first data figure (Fig. 1b) shows the authenticity of this data and the approach. In summary, our methodology allows the creation of 100,000 training sets with noisy data in parallel to a ground truth idealisation. To conclude our work, we also compared deep channel performance against a simple “golden” idealisation by human supervised methods and two other existing methods. There are two obvious caveats with this approach, but we feel it is useful nonetheless. The first obvious caveat, is that in order for it to be possible to create the Golden datasets with human experts, it needed to be a very simple dataset with relatively few clear events. Secondly, it is not a ground truth. The small error between the Expert and Deep-Channel channels could be because the Experts were wrong rather than Deep-Channel. Bias, generally is discussed below, nevertheless the success of Deep-Channel in this experiment supports its potential for solving real ion channel idealisation problems.

Since our aim was to classify a time series, we developed a network with the combined power of both 1D-CNN layers and RNN (LSTM) units. Deep-Channel has a 1D-CNN at its core, but whilst ion channel activity is Markovian, the presence of both short and long duration underlying states means that it is important for a detection network to also be able to learn long-term dependencies across and so accuracy is improved with the LSTM (see Supplementary Information). Similar approaches combining RNNs and convolution layers have previously been applied to various analysis of biological gene sequences38 and cell detection in image classification39, but this is its first use for single-channel activity detection to our knowledge.

We used a number of metrics that are commonplace in machine learning and patch-clamp recording. Initially, to test the ability of Deep-Channel to detect events, we compared detected (predicted) idealised events against the fiducial idealised records. To compare against the human supervised methods, we analysed matching datasets with QuB and Deep-Channel and compared the summary parameter, open probability (Po) between them, together with F1 accuracies. Firstly, Deep-Channel was far quicker, to the degree that sometimes with complex multichannel data, manual analyses seems a stressful and near impossible task. With Deep-channel the complexity (within the datasets we used) made no difference to the speed or accuracy of performance. In terms of relative performance, note that whilst there was a strong correlation between open probabilities measured between Deep-Channel and threshold crossing, the F1-accuracy scores of the noisy data measured by threshold crossing fell of sharply. The significance of this is that whilst average open probability estimates from threshold crossing seem reasonable (some over estimates, some under-estimates cancelling out) the time-point by time-point accuracy essential for kinetic analyses is poor.

In the present work, we developed a method that works with channels of any size and kinetic distribution, but we did not include detection of multiple phenotypes or sub-conductances, etc. A perceived problem, specifically with a machine-learning model, is its generalisability. The concern is that the network would be good at detecting events in the exact dataset it was trained on, but fall short, when challenged with a quite different, but equally valid dataset; a problem known as over fitting. Furthermore, the heavy reliance on our model on training with synthetic data could lead to subtle and unexpected biases. In cell-attached mode, for example, the most feasible method for use in automated patch-clamp machines, there is often an asymmetry (relaxation) of larger events. This is shown to an extreme degree by Fenwick et al.40, but more subtle examples are often seen in native data. This could arrive from two situations, beyond the technical limitations of the headstage; for example, if the membrane potential changes during recording or if sufficient ion movement through the channel changes the ion driving force mid sojourn. Our training data did not see these types of anomalies. Furthermore, a subtle noise effect occurs with genuine ion channel events that experience patch clampers can see by eye; open channel noise as well as the well-known pink noise (1/f) of biological membranes. The noise level tends to increase during ion channel opening sojourns. To completely eliminate these or other biases long-term may be impossible, but potentially, mixing human annotated and synthetic data in an appropriate ratio may be one possible route. The goal would be to balance potential hidden biases from the synthetic data against the inevitable biases of human curation (humans will make human errors and be simply unable to label complex signals). Since perfect curation of simple datasets requires very simple datasets to annotate and is time consuming, potentially data augmentation could be used to build complex semi-synthetic datasets by building up layers of simpler data. Our tests against a Golden dataset produced by five ion channel experts found Deep-Channel to be remarkably good and it was a conservative test: the SKM method required us to define the initial closed and open state level, we did not do that in Deep-Channel. The SKM method required us to define that there would be one channel present, we did not do that with Deep-Channel. It recognised that there was only one channel present, simply from the waveform. Currently, Deep-Channel can recognise up to five channels opening simultaneously.

We have demonstrated here the effectiveness of Deep-Channel, an artificial deep neural network to detect events in single molecule datasets, especially, but not exclusively patch-clamp data, but the potential for deep learning convolution/LSTM networks to tackle other problems cannot be overestimated.

Methods

We develop a deep learning approach to automatically process large collections of single/multiple ion channel data series with detection of ion channel transition events, and re-construction of annotated idealised records. Datasets with pre-processing and analysis pipeline code will be made publicly available on GitHub (https://github.com/RichardBJ/Deep-Channel.git) including the model code to facilitate reproducibility. Figure 1 shows an overall workflow and experimental design; creation of the digitised synthetic analogue datasets for developing a deep learning model, together with steps for training and testing (validating).

Data description and dataset construction

Ion channel dwell-times were simulated using the method of Gillespie43 from published single channel models. Channels are assumed to follow a stochastic Markovian process and transition from one state to the next simulated by randomly sampling from a lifetime probability distribution calculated for each state. Authentic “electrophysiological” noise was added to these events by passing the signal through a patch-clamp amplifier and recording it back to file with CED’s Signal software via an Axon electronic “model cell”. In some datasets additional drift was applied to the final data with Matlab. Two different stochastic gating models, (termed M1 and M2) were used to generate semi-synthetic ion channel data. M1 is a low open probability model from ref. 41 (Fig. 3a, b), typically no more than one ion channel opens simultaneously. Model M2 is from refs. 42,44 and has a much higher open probability (Fig. 3c, d), consequently up to five channels opened simultaneously and there are few instances of zero channels open. The source code for generating a combination of different single/multiple ion channel recordings is also given along with the publicly available datasets. Using this system, we can generate any number of training datasets with different parameters such as number of channels in the patch, number of open/close states, sampling frequency and temporal duration, based on published stochastic models. Fiducial, ground truth annotations for these datasets were produced simultaneously using MATLAB. Recordings were sampled at 10 kHz and each record had 10 s duration. To validate the Deep-Channel model, six different validation datasets were used: three datasets for single; and three datasets for multi-channel recordings. Datasets for training typically contained 10,000 subsets of 10 s each. Each dataset includes raw current data and ground truth state labels from the stochastic model, which we refer to as the idealisation. Within these training datasets, the third column is the fiducial record/ground truth and includes the class labels; “0”–“5”. Each label indicates the instantaneous number(s) of open channels at a given time.

Model background

LSTM, is a type of RNN, the deep learning model architecture that is now widely adopted efficiently for time series forecasting with long-range dependencies. The major advantage of LSTM over RNN is its memory cell ct, which is computed by summing of the state information. This cell acts like a gate that activates or deactivates past information by several self-parametrised controlling gates including input, output and forget gates. As long as the input gate has a value of zero, then no information is allowed to access the cell. When a new input comes, its information is passed and summed to the cell if the input gate it is, in turn, activated. Ideally, the LSTM should learn to reset the memory cell information after it finishes processing a sequence and before starting a new sequence. This mechanism is dealt by forget gates ft and the past cell content history can be forgotten in this process and reset if the forget gate ft is activated. Whether a cell output ct will be passed to the final state ht is further allowed by the output gate ot. The main innovation of using gating control mechanisms in LSTMs is that it ameliorates the vanishing gradient problem. This limitation of the general RNN model27,45 is thus eliminated during forward and backward propagation periods. The key equations of an LSTM unit are shown in (1) below, where “∘” denotes the Hadamard product

| 1 |

The W*s are input weights, the R*s are recurrent weights, b*s are the biases, xt is denoted as the current input and is referred as the output from previous time step. The weighted inputs are accumulated and passed through tanh activation, resulting in zt. Multiple LSTMs can be stacked and temporarily combined with other types of deep learning architectures to form more complex structures. Such models have been applied to overcome previous sequence modelling problems46.

Model development—network architecture

Our Deep-Channel RCNN model was implemented in Keras with a Tensorflow backend47 using Python 3.6. Figure 2 shows a graphical representation of the model architecture consisting of an input layer and 1D-convolution layer, a ReLU layer, a max pooling layer, a fully connected layer, three-stacked LSTM with batch normalisation (BN) and drop out layers, and a final SoftMax48 output layer. For training; 3D data with ion channel current recordings (raw data), time steps (n = 1), and features (n = 1) served as input to the 1D convolution layer. This input layer feeds into a temporal convolution layer to investigate frame-level features. Afterwards, the output of the flattened convolutional layer is fed to the three-stacked LSTM layers. Finally, the network feeds into one dense neuron with a SoftMaxactivation function, outputting the probability of a given channel level. This combined 1D convolution LSTM (RCNN) model was then saved as a hierarchical data format file HDF5 to allow automatic detection in other datasets without need to retrain. HDF5 files, including trained tensor weighting, are also available via our GitHub site.

Class imbalance

Typical real-world ion channel time series data are usually inherently class imbalanced (see for example, the confusion matrices in Fig. 5); if only one channel is present, there could be very few openings or very few closures. If there is more than one channel in the patch, the number of channels open at any one instant will be distributed binomially. This increases the volume of data required to train the network. To address this, where we looked at highly active channels, our training process used an oversampling of the minority classes, rearranging datasets evenly using the synthetic minority oversampling technique49, implemented in the Python Imbalanced-Learn library50. SMOTE adds the over-samples to the end of the end of the data record, but for training purposes we shuffled those back into the body of the data.

1D-convolutional layer

The 1D Convolution layer (1D-CNN) step consists of 1D-CNN, rectified linear unit (ReLU) layer51 and max pooling layer. We used 64 filters and ReLU was applied as an activation function. After that, the max-pooling layer was added to each output to extract a representative value. Finally, data were flattened to allow input to the next network layer, an LSTM.

RNN-LSTM

Three LSTM layers were stacked and each contains 256 LSTM units with ReLU activation functions. In the next step, a BN52 was applied to standardise the inputs, meaning the mean will be close to 0, and the standard deviation close to 1, hence the training of the model is accelerated. Dropout layers were also appended to all RNN-LSTM layers with the value of 0.2 to reduce overfitting53. The returned features from the stacked RNN-LSTM layers were then fed into a flatten layer to have a suitable shape for the final layer. The updated features are then forwarded to a Dense output layer with a SoftMax activation function, in which output features one dense neuron PER potential channel level (zero to maximum channels). In our current model this maximum value is five channels. The output is the probability of each given class (e.g., the probability of x channels being open at each timepoint). In order to calculate the final classification, we take the class with maximum value probability at each instant.

Model training

In the model training stage, once the probability values are calculated, errors between the predicted values and true values were calculated with a sparse categorical cross entropy as a loss function. To optimise the loss value, stochastic gradient descent was applied as an optimiser with an initial learning rate of 0.001, momentum of 0.9, and the size of a mini-batch was set to between 256 and 2048 depending on the model. A learning rate decaying strategy was employed to the model to yield better performance. Based on this strategy, the learning rate (initially is 0.001) was decayed at each 10th epoch with decaying factor 0.01 of learning rate. The proposed Deep-Channel model was trained for 50 epochs. In the case of the training data an 80%-train and 20%-test split was performed.

Performance metrics

One of the clearest quality indicators of a classification deep learning method is the confusion matrix, sometimes called as contingency table54. This consists of four distinctive parameters, which are true positives (TP), false positives (FP), false negatives (FN) and true negatives (TN). If the model’s output accurately predicts the ion channel event, it is considered as TP. On the other hand, it is indicated as FP if the model incorrectly detected an ion channel event when there is no a channel opening. If the model output misses an ion channel event activity, then it is computed as FN. These metrics are used in calculation of evaluation metrics such as precision (positive predicted value), recall (sensitivity) and F-score as described below in (3)–(5), respectively

| 3 |

| 4 |

| 5 |

where P, R and F denote precision, recall and F-score, respectively. In addition, area under curve (AUC) and receiver operating characteristic (ROC) parameters are efficiently used to visualise the model performance in classification problems. The ROC shows the probability relations between true positive rate (sensitivity-recall), and false positive rate (1-specificity), while AUC represents a measure of the separability between classes.

As an additional metric, more familiar to electrophysiologists we also calculated the open probability (Po), and compared this metric between Deep-Channel, a traditional software package (QuB) and the ground truth. The equation for open probability is given in (Eq. 6) as follows:

| 6 |

where T denotes total time, N is defined as numbers of channels in the patch, and tj is referred to the time spent with j channels open55. Since true numbers of channels in a patch is always an unknown parameter this was estimated as the maximum number of simultaneous openings.

Computer hardware

In this work, we trained the Deep-Channel model on a workstation with an Nvidia Geforce GTX 1080Ti and 32GB RAM. The entire process of the proposed model including training, validating, evaluating, and visualising process was employed within Python Spyder 3.6. Speed estimations were made with a similar PC, but without a GPU.

Statistics

Statistical analyses were performed with R-studio, means are quoted with standard deviations, n is the number of experiments.

Reporting summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Supplementary information

Description of additional supplementary files

Acknowledgements

This work was funded by a BBSRC Transformative Resources Development Fund award.

Author contributions

R.B.J., Y.Z. and F.C. designed the study. R.B.J. and N.C. wrote the code, N.C. prepared all the datasets. R.B.J., F.O.B., C.D., S.B., N.C. and R.D.R. contributed to the experimental work and the analysis and interpretation. R.B.J., F.O.B. and N.C. prepared the figures. R.B.J., N.C., Y.Z., F.C. and F.O.B. drafted the initial paper. All authors critically evaluated, edited and approved the final paper.

Data availability

Datasets with pre-processing and analysis pipeline code are made publicly available on GitHub (https://github.com/RichardBJ/Deep-Channel.git). Source data underlying plots are in Supplementary Data 1 and all other data (if any) are available upon request.

Code availability

All code will be available on GitHub (https://github.com/RichardBJ/Deep-Channel.git)

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary information is available for this paper at 10.1038/s42003-019-0729-3.

References

- 1.Hamill OP, Marty A, Neher E, Sakmann B, Sigworth FJ. Improved patch-clamp techniques for high-resolution current recording from cells and cell-free membrane patches. Pflug. Arch. 1981;391:85–100. doi: 10.1007/BF00656997. [DOI] [PubMed] [Google Scholar]

- 2.Neher E, Sakmann B. Single-channel currents recorded from membrane of denervated frog muscle fibres. Nature. 1976;260:799–802. doi: 10.1038/260799a0. [DOI] [PubMed] [Google Scholar]

- 3.Hodgkin AL, Huxley AF. A quantitative description of membrane current and its application to conduction and excitation in nerve. J. Physiol. 1952;117:500–544. doi: 10.1113/jphysiol.1952.sp004764. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Abdul Kadir L, Stacey M, Barrett-Jolley R. Emerging roles of the membrane potential: action beyond the action potential. Front. Physiol. 2018;9:1661. doi: 10.3389/fphys.2018.01661. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Lehmann-Horn F, Jurkat-Rott K. Voltage-gated ion channels and hereditary disease. Physiol. Rev. 1999;79:1317–1372. doi: 10.1152/physrev.1999.79.4.1317. [DOI] [PubMed] [Google Scholar]

- 6.Colquhoun, D. & Sigworth, F. Single-Channel Recording. 483–587 (Springer, 1995).

- 7.Qin F, Auerbach A, Sachs F. A direct optimization approach to hidden Markov modeling for single channel kinetics. Biophys. J. 2000;79:1915–1927. doi: 10.1016/S0006-3495(00)76441-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.O'Brien F, et al. Enhanced activity of multiple TRIC-B channels: an endoplasmic reticulum/sarcoplasmic reticulum mechanism to boost counterion currents. J. Physiol. 2019;597:2691–2705. doi: 10.1113/JP277241. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Ha T. Single-molecule fluorescence resonance energy transfer. Methods. 2001;25:78–86. doi: 10.1006/meth.2001.1217. [DOI] [PubMed] [Google Scholar]

- 10.Blanco M, Walter NG. Analysis of complex single-molecule FRET time trajectories. Methods Enzymol. 2010;472:153–178. doi: 10.1016/S0076-6879(10)72011-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Juette MF, et al. Single-molecule imaging of non-equilibrium molecular ensembles on the millisecond timescale. Nat. Methods. 2016;13:341–344. doi: 10.1038/nmeth.3769. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Colquhoun D, Hatton CJ, Hawkes AG. The quality of maximum likelihood estimates of ion channel rate constants. J. Physiol. 2003;547:699–728. doi: 10.1113/jphysiol.2002.034165. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Nicolai C, Sachs F. Solving ion channel kinetics with the QuB software. Biophys. Rev. Lett. 2013;8:191–211. doi: 10.1142/S1793048013300053. [DOI] [Google Scholar]

- 14.Sivilotti L, Colquhoun D. In praise of single channel kinetics. J. Gen. Physiol. 2016;148:79–88. doi: 10.1085/jgp.201611649. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Dunlop J, Bowlby M, Peri R, Vasilyev D, Arias R. High-throughput electrophysiology: an emerging paradigm for ion-channel screening and physiology. Nat. Rev. Drug Discov. 2008;7:358–368. doi: 10.1038/nrd2552. [DOI] [PubMed] [Google Scholar]

- 16.LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015;521:436–444. doi: 10.1038/nature14539. [DOI] [PubMed] [Google Scholar]

- 17.Boza V, Brejova B, Vinar T. DeepNano: deep recurrent neural networks for base calling in MinION nanopore reads. PLoS One. 2017;12:e0178751. doi: 10.1371/journal.pone.0178751. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Albrecht T, Slabaugh G, Alonso E, Al-Arif S. Deep learning for single-molecule science. Nanotechnology. 2017;28:423001. doi: 10.1088/1361-6528/aa8334. [DOI] [PubMed] [Google Scholar]

- 19.Angermueller C, Parnamaa T, Parts L, Stegle O. Deep learning for computational biology. Mol. Syst. Biol. 2016;12:878. doi: 10.15252/msb.20156651. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Krizhevsky, A., Sutskever, I. & Hinton, G. E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 25 (Nips 2012) 1, 1097–1105 (2012).

- 21.Pratt Harry, Williams Bryan, Ku Jae, Vas Charles, McCann Emma, Al-Bander Baidaa, Zhao Yitian, Coenen Frans, Zheng Yalin. Automatic Detection and Distinction of Retinal Vessel Bifurcations and Crossings in Colour Fundus Photography. Journal of Imaging. 2017;4(1):4. doi: 10.3390/jimaging4010004. [DOI] [Google Scholar]

- 22.Al-Bander B, Al-Nuaimy W, Williams BM, Zheng YL. Multiscale sequential convolutional neural networks for simultaneous detection of fovea and optic disc. Biomed. Signal Process. Control. 2018;40:91–101. doi: 10.1016/j.bspc.2017.09.008. [DOI] [Google Scholar]

- 23.Misiunas K, Ermann N, Keyser UF. QuipuNet: convolutional neural network for single-molecule nanopore sensing. Nano Lett. 2018;18:4040–4045. doi: 10.1021/acs.nanolett.8b01709. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Azizi S, et al. Deep recurrent neural networks for prostate cancer detection: analysis of temporal enhanced ultrasound. IEEE Trans. Med. Imaging. 2018;37:2695–2703. doi: 10.1109/TMI.2018.2849959. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Tang, D., Qin, B. & Liu, T. In: Màrquez, L., Callison-Burch, C. & Su, J. (eds.) Proc. 2015 Conference on Empirical Methods in Natural Language Processing 1422–1432 (2015).

- 26.Hochreiter S. The vanishing gradient problem during learning recurrent neural nets and problem solutions. Int. J. Uncertain. Fuzz. Knowledge Based Syst. 1998;6:107–116. doi: 10.1142/S0218488598000094. [DOI] [Google Scholar]

- 27.Hochreiter S, Schmidhuber J. Long short-term memory. Neural Comput. 1997;9:1735–1780. doi: 10.1162/neco.1997.9.8.1735. [DOI] [PubMed] [Google Scholar]

- 28.Graves, A., Mohamed, A.-R. & Hinton, G. In 2013 IEEE International Conference on Acoustics, Speech and Signal Processing 6645–6649 (IEEE, 2013).

- 29.Sutskever, I., Vinyals, O. & Le, Q. V. Sequence to sequence learning with neural networks. Proc. Advances in Neural Information Processing Systems. 27, (2014).

- 30.Lipton, Z. C., Berkowitz, J. & Elkan, C. A Critical Review of Recurrent Neural Networks for Sequence Learning. Preprint at: http://arXiv:1506.00019 (2015).

- 31.Mobasheri A, et al. Characterization of a stretch-activated potassium channel in chondrocytes. J. Cell. Physiol. 2010;223:511–518. doi: 10.1002/jcp.22075. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Fleiss JL. Measuring nominal scale agreement among many raters. Psychol. Bull. 1971;76:378-&. doi: 10.1037/h0031619. [DOI] [Google Scholar]

- 33.Gnanasambandam R, et al. Unsupervised idealization of ion channel recordings by minimum description length: application to human PIEZO1-channels. Front. Neuroinform. 2017;11:31. doi: 10.3389/fninf.2017.00031. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Aldhous P. Nobel prize. Patch clamp brings honour. Nature. 1991;353:487. doi: 10.1038/353487a0. [DOI] [PubMed] [Google Scholar]

- 35.Yajuan X, Xin L, Zhiyuan L. A comparison of the performance and application differences between manual and automated patch-clamp techniques. Curr. Chem. Genomics. 2012;6:87–92. doi: 10.2174/1875397301206010087. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Mukhtasimova N, daCosta CJ, Sine SM. Improved resolution of single channel dwell times reveals mechanisms of binding, priming, and gating in muscle AChR. J. Gen. Physiol. 2016;148:43–63. doi: 10.1085/jgp.201611584. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Sharp AA, O'Neil MB, Abbott LF, Marder E. Dynamic clamp: computer-generated conductances in real neurons. J. Neurophysiol. 1993;69:992–995. doi: 10.1152/jn.1993.69.3.992. [DOI] [PubMed] [Google Scholar]

- 38.Lanchantin, J., Singh, R., Wang, B. L. & Qi, Y. J. Deep motif dashboard: visualizing and understanding genomic sequences using deep neural networks. Pac. Symp. Biocomput.22, 254–265 (2017). [DOI] [PMC free article] [PubMed]

- 39.Kitrungrotsakul, T. et al. In Proc. ICASSP 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). 1239–1243, https://ieeexplore.ieee.org/document/8682804 (IEEE, 2019).

- 40.Fenwick EM, Marty A, Neher E. A patch-clamp study of bovine chromaffin cells and of their sensitivity to acetylcholine. J. Physiol. 1982;331:577–597. doi: 10.1113/jphysiol.1982.sp014393. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Davies LM, Purves GI, Barrett-Jolley R, Dart C. Interaction with caveolin-1 modulates vascular ATP-sensitive potassium (K(ATP)) channel activity. J. Physiol. 2010;588:3254–3265. doi: 10.1113/jphysiol.2010.194779. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.O'Brien F, Barrett-Jolley R. CVS role of TRPV: from single channels to HRV assessment with Artificial Intelligence. FASEB J. 2018;32:732.736. [Google Scholar]

- 43.Gillespie DT. Exact stochastic simulation of coupled chemical-reactions. J. Phys. Chem. 1977;81:2340–2361. doi: 10.1021/j100540a008. [DOI] [Google Scholar]

- 44.Feetham CH, Nunn N, Lewis R, Dart C, Barrett-Jolley R. TRPV4 and KCa ion channels functionally couple as osmosensors in the paraventricular nucleus. Br. J. Pharm. 2015;172:1753–1768. doi: 10.1111/bph.13023. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Karpathy Andrej, Fei-Fei Li. Deep Visual-Semantic Alignments for Generating Image Descriptions. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2017;39(4):664–676. doi: 10.1109/TPAMI.2016.2598339. [DOI] [PubMed] [Google Scholar]

- 46.Shi, X. J. et al. Convolutional LSTM network: a machine learning approach for precipitation nowcasting. Adv. Neural Inf. Process. Syst. 28 (Nips 2015)28 (2015).

- 47.Chollet, F. Deep Learning with Python. (Manning Publications Co., 2017).

- 48.Goodfellow, I., Bengio, Y. & Courville, A. Deep learning. Adapt. Comput. Mach. Learn. 1–775 (2016).

- 49.Chawla NV, Bowyer KW, Hall LO, Kegelmeyer WP. SMOTE: synthetic minority over-sampling technique. J. Artif. Intell. Res. 2002;16:321–357. doi: 10.1613/jair.953. [DOI] [Google Scholar]

- 50.Lemaitre, G., Nogueira, F. & Aridas, C. K. Imbalanced-learn: a Python toolbox to tackle the curse of imbalanced datasets in machine learning. J. Mach. Learn. Res. 18, 1–5 (2017).

- 51.Nair, V. & Hinton, G. E. In: Fürnkranz, J. & Joachims, T. (eds) Proceedings of the 27th International Conference on Machine Learning (ICML-10) 807–814 (2010).

- 52.Ioffe, S. & Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. ICML’15 Proceedings of the 32nd International Conference on International Conference on Machine Learning 37, 448–456 (2015).

- 53.Srivastava N, Hinton G, Krizhevsky A, Sutskever I, Salakhutdinov R. Dropout: a simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014;15:1929–1958. [Google Scholar]

- 54.Story M, Congalton RG. Accuracy assessment: a user’s perspective. Photogrammetric Eng. Remote Sens. 1986;52:397–399. [Google Scholar]

- 55.Barrett-Jolley R, Comtois A, Davies NW, Stanfield PR, Standen NB. Effect of adenosine and intracellular GTP on K-ATP channels of mammalian skeletal muscle. J. Membr. Biol. 1996;152:111–116. doi: 10.1007/s002329900090. [DOI] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Description of additional supplementary files

Data Availability Statement

Datasets with pre-processing and analysis pipeline code are made publicly available on GitHub (https://github.com/RichardBJ/Deep-Channel.git). Source data underlying plots are in Supplementary Data 1 and all other data (if any) are available upon request.

All code will be available on GitHub (https://github.com/RichardBJ/Deep-Channel.git)