Abstract

Introduction

Despite the extraordinary amount of time physicians spend communicating with patients, dedicated education strategies on this topic are lacking. The objective of this study was to develop a multimodal curriculum including direct patient feedback and assess whether it improves communication skills as measured by the Communication Assessment Tool (CAT) in fourth-year medical students during an emergency medicine (EM) clerkship.

Methods

This was a prospective, randomized trial of fourth-year students in an EM clerkship at an academic medical center from 2016–2017. We developed a multimodal curriculum to teach communication skills consisting of 1) an asynchronous video on communication skills, and 2) direct patient feedback from the CAT, a 15-question tool with validity evidence in the emergency department setting. The intervention group received the curriculum at the clerkship midpoint. The control group received the curriculum at the clerkship’s end. We calculated proportions and odds ratios (OR) of students achieving maximum CAT score in the first and second half of the clerkship.

Results

A total of 64 students were enrolled: 37 in the control group and 27 in the intervention group. The proportion of questionnaires with the maximum CAT score was similar between groups during the first half of the clerkship (OR 0.70, p = 0.15). Following the intervention, students in the intervention group achieved a maximum score more often than the control group (OR 1.65, p = 0.008).

Conclusion

Students exposed to the curriculum early had higher patient ratings on communication compared to the control group. A multimodal curriculum involving direct patient feedback may be an effective means of teaching communication skills.

INTRODUCTION

The doctor-patient relationship is deemed one of the most important aspects of a medical encounter. Effective communication has clear benefits for both the patient and the provider. Patients who perceive their healthcare providers as strong communicators tend to have better expectations of their healthcare course, adhere to positive health behaviors, and report higher satisfaction.1–4 For physicians, effective communication correlates with more positive patient interactions, decreased risk of litigation, and decreased burnout.5,6 Effective communication can be particularly challenging in the emergency department (ED) given the chaotic environment, time and resource constraints, and lack of continuity of care. In a prospective observational study, only two-thirds of emergency physicians discussed the ED course and necessary follow-up with their patients, and patients frequently misunderstood information conveyed by their provider.7 An emphasis on fostering communication skills in the emergency medicine (EM) clerkship may improve this competency.

There is an increasing focus on interpersonal and communication skills in medical education.8 The Association of American Medical Colleges (AAMC) has revised its core competencies, and both the Accreditation Council for Graduate Medical Education (ACGME) competencies and the Entrustable Professional Activities for entering residency include interpersonal and communication skills.9–11 While many medical schools include specific courses on patient-centered communication during the preclinical years, dedicated teaching on this topic is often lacking during the clinical clerkships. Two studies have demonstrated a decline in medical students’ interpersonal skills and patient-centered attitudes from the first through the fourth year.12,13 A dedicated curriculum during the clinical years may help improve students’ communication skills and prevent this decline.

To address the need to improve how we teach effective communication to physicians-in-training, we developed and implemented a novel, multimodal curriculum incorporating direct patient feedback to teach and assess this competency in the EM clerkship. The objective of this study was to assess whether a multimodal curriculum including direct patient feedback improves medical student communication skills as measured by the Communication Assessment Tool (CAT).

METHODS

Study Design and Setting

This was a prospective, randomized pilot study. The institutional review board at our institution reviewed the study and determined it to be exempt. We included all students enrolled in the fourth-year EM clerkship from July 2016 to October 2017. The study institution is an urban, tertiary care, Level I trauma center with an annual ED census of 55,000 patients and is home to a three-year EM residency program.

Study Protocol

We developed a multimodal curriculum to teach communication skills consisting of two parts: 1) an asynchronous video on communication skills; and 2) delivery of direct patient feedback from the CAT questionnaire to the student. We designed this curriculum using the principles of curriculum development described by Kern.14 Through a needs assessment based on faculty evaluations, verbal nursing comments, and observation during simulation, we found that students’ communication skills were highly variable. Furthermore, medical students routinely do not receive direct patient feedback. Our goal was to develop a curriculum that would expose our targeted learner group, fourth-year medical students in EM, to this critical aspect of patient care and to determine its utility in teaching and assessing communication skills in this population. To provide a framework for the curriculum, we included a video module, based on prior work demonstrating the efficacy of asynchronous curricula compared to traditional synchronous didactics.15,16 We then implemented the curriculum and prospectively assessed it using patient ratings of communication skills.

Educational Research Capsule Summary.

What do we already know about this issue?

Effective communication is essential for the doctor-patient relationship, yet dedicated education and assessment strategies are lacking.

What was the research question?

Does a multimodal curriculum including direct patient feedback improve medical student communication in an emergency medicine clerkship?

What was the major finding of the study?

Students exposed to the curriculum showed improved patient ratings on communication abilities.

How does this improve population health?

Medical educators should consider a curriculum involving patient feedback as a means of teaching effective communication skills. This may in turn improve patient care.

The undergraduate medical education team designed the video, which was made available online for students. It is approximately 13 minutes long and includes evidence-based content on the importance of effective patient-doctor communication, barriers to it, and techniques for success. The video consists of narrated slides and structured interviews with EM academic faculty and the social work team. Faculty invited to participate were those who had consistently received the highest teaching scores from medical students and residents.

To assess medical student communication skills, we used the CAT along with free-response comments from patients (see Appendix 1). The CAT is a 15-item questionnaire that assesses communication skills from the patient’s perspective and has validity evidence to support its use. The questions use a 1–5 rating scale, with 1 being “poor” and 5 being “excellent,” and cover multiple domains related to communication and interpersonal skills.17 It has demonstrated utility in assessing communication skills in surgery and family medicine residents.18,19 The CAT has also been administered to ED patients to capture the patient’s perspective on the overall team’s communication skills.20 Its utility in assessing medical student communication skills has not yet been studied, nor have any other patient communication assessment tools been shown to have validity evidence in the medical student population. Because the last question of the CAT pertains to the communication skills of the entire ED team, we omitted this item and, following previous approaches, calculated student CAT scores out of 70 points from the remaining 14 questions.20
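The scoring rule is simple arithmetic: 14 retained items, each rated 1 to 5, give a maximum of 14 × 5 = 70. The minimal Python sketch below assumes responses arrive as a list of 15 integers in questionnaire order, with item 15 (the whole-team item) last. The function name `student_cat_score` is hypothetical, and rejecting incomplete questionnaires is our assumption, since the text does not say how missing items were handled.

```python
from typing import Sequence

N_ITEMS_TOTAL = 15    # full CAT questionnaire
N_ITEMS_SCORED = 14   # item 15 (whole ED team) is omitted for student scores
MAX_ITEM_SCORE = 5    # 1 = "poor", 5 = "excellent"
MAX_TOTAL = N_ITEMS_SCORED * MAX_ITEM_SCORE  # 14 x 5 = 70


def student_cat_score(responses: Sequence[int]) -> int:
    """Return the student CAT total (14-70) from one 15-item questionnaire.

    Hypothetical helper: assumes item 15 is the last element and that
    incomplete questionnaires are rejected rather than imputed.
    """
    if len(responses) != N_ITEMS_TOTAL:
        raise ValueError(f"expected {N_ITEMS_TOTAL} item responses, got {len(responses)}")
    if any(not 1 <= r <= MAX_ITEM_SCORE for r in responses):
        raise ValueError("each item must be rated on the 1-5 scale")
    # Drop the team-level item and sum the 14 student-level items.
    return sum(responses[:N_ITEMS_SCORED])


if __name__ == "__main__":
    print(student_cat_score([5] * 15))           # -> 70 (all "excellent")
    print(student_cat_score([5] * 13 + [4, 1]))  # -> 69 (item 14 rated 4; item 15 ignored)
```

Only a questionnaire with all 14 student-level items rated 5 reaches 70, which is the single pattern counted as maximal once scores are dichotomized.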

During the study period, trained research assistants (RAs) administered the CAT survey and free-response questions to ED patients cared for primarily by a fourth-year clerkship student. We implemented a system whereby a text page was sent to the RA team when a student signed up for a patient on our ED’s electronic tracking board. Pages were sent from 8 am to 11 pm Monday through Friday and on every odd weekend day, when RA staff were available. We included patients who could identify by photo the medical student who cared for them, did not require interpreter services, and were alert and oriented to person, place, and time at baseline. In accordance with our institution’s policy regarding patient surveys, only discharged patients were included.

The RA informed each patient that the purpose of the survey was to help the student improve his or her communication skills. Written consent was obtained from eligible patients for the use of their de-identified survey data for research purposes. We field-tested administration of the CAT questionnaire during the month before the study began, both to train the RAs and to ensure adequate selection of patients. Based on this field testing, we adjusted the timing of the pages sent to the RAs to maximize the number of patients screened before discharge.

To study the effect of our curriculum, we assigned students to an intervention group or a control group. Students were randomized by clerkship month so that all students rotating in the department received the educational experience. Group assignment alternated by month (ie, all students in July received the curriculum mid-month, while all students in August received the curriculum at the end of the clerkship). All students were notified at the beginning of the clerkship that we were instituting a new communication curriculum involving collection of patient feedback. Students in the intervention group were assigned to watch the video at the end of the second week of the clerkship, at which time they also received their CAT scores and free-response patient comments from the first two weeks of the clerkship.

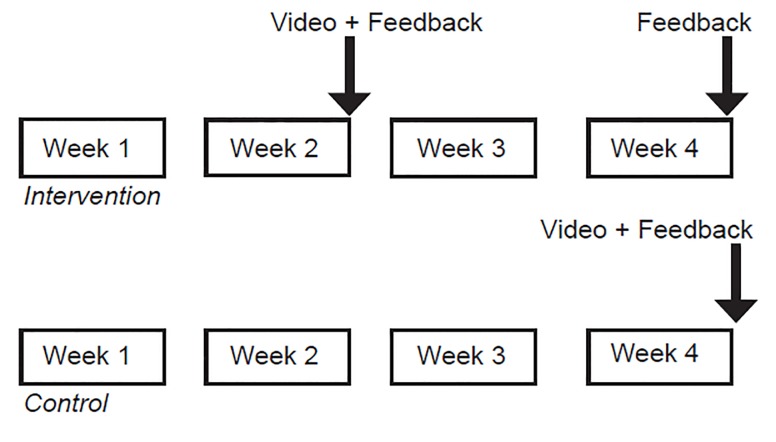

The clerkship directors delivered the patients’ feedback to each medical student in a face-to-face meeting and discussed ways to improve these skills. Students in the control group were assigned to watch the video at the end of the fourth week of the clerkship and received their CAT feedback and patient comments for the entire four-week clerkship at that time (Figure). Students in both groups were required to watch the video as part of the clerkship curriculum and were asked to confirm via an email survey that they had viewed it.

Figure.

Multimodal communication curriculum for emergency medicine clerkship students for intervention versus control groups.
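The alternating-month allocation and feedback timing described above can be expressed as a small lookup. This sketch assumes that clerkship blocks align with calendar months and that alternation ran without interruption from July 2016 (intervention) to the end of the study; the text confirms only the July/August example. `assign_group` and `feedback_week` are hypothetical helper names.

```python
from datetime import date

STUDY_START = date(2016, 7, 1)   # July 2016 block: intervention (per the text)
STUDY_END = date(2017, 10, 31)   # last enrolled block: October 2017


def assign_group(block_start: date) -> str:
    """Map a clerkship block's start date to its study arm.

    Assumes strict month-by-month alternation starting with intervention.
    """
    if not STUDY_START <= block_start <= STUDY_END:
        raise ValueError("block falls outside the study period")
    months_elapsed = (block_start.year - STUDY_START.year) * 12 + (
        block_start.month - STUDY_START.month
    )
    return "intervention" if months_elapsed % 2 == 0 else "control"


def feedback_week(group: str) -> int:
    """Week at whose end the student watches the video and receives CAT feedback."""
    return {"intervention": 2, "control": 4}[group]


if __name__ == "__main__":
    for start in (date(2016, 7, 1), date(2016, 8, 1)):
        group = assign_group(start)
        print(start.strftime("%B %Y"), group, "feedback after week", feedback_week(group))
```

Allocating by month means every student in a given block follows the same schedule; arm sizes then depend on monthly enrollment rather than being fixed in advance.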

Outcome Measures

We compared CAT ratings for students in the intervention vs control groups during the first and second halves of the clerkship. We also collected free-response comments from patients regarding their medical student’s communication skills. Additionally, we used our standard end-of-clerkship survey to assess whether students had ever received direct patient feedback previously in their medical school training. Student and patient participation in the study was voluntary. Students provided written consent for the use of their de-identified data for research purposes. By completing the survey, patients gave verbal consent for use of their de-identified data.

Data Analysis

CAT scores and free-response patient comments were de-identified and recorded in a REDCap database21 stored on a secure server. Prior studies using the CAT have found a dichotomized scoring system more useful than the mean score because of a ceiling effect (ie, mean scores are inherently skewed toward the upper end of the 5-point scale).17,20,22 We therefore dichotomized the total score into maximal (70 points) and sub-maximal (less than 70), as has been done previously. Categorical data were expressed as absolute numbers and percentages, and non-normally distributed variables as median and interquartile range (IQR).
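The dichotomization and descriptive summaries can be sketched as follows. The helpers are hypothetical and are not the authors’ SPSS procedure. Quartiles use Python’s `statistics.quantiles` with its default method, which may differ slightly from other software’s percentile definitions.

```python
from statistics import median, quantiles
from typing import Iterable, List, Tuple

MAX_TOTAL = 70  # maximal student CAT score (14 items x 5)


def is_maximal(total: int) -> bool:
    """Dichotomize: only a perfect 70 counts as maximal; anything below is sub-maximal."""
    return total == MAX_TOTAL


def percent_maximal(totals: Iterable[int]) -> float:
    """Percentage of questionnaires with the maximal score."""
    totals = list(totals)
    if not totals:
        raise ValueError("no questionnaires")
    return 100 * sum(is_maximal(t) for t in totals) / len(totals)


def median_iqr(values: List[float]) -> Tuple[float, Tuple[float, float]]:
    """Median and (Q1, Q3) for a skewed variable, eg, questionnaires per student."""
    q1, _, q3 = quantiles(values, n=4)  # requires at least two values
    return median(values), (q1, q3)


if __name__ == "__main__":
    # Counts from Table 2: 42 of 73 intervention questionnaires were maximal at baseline.
    # 65 is an arbitrary placeholder for a sub-maximal total.
    baseline_intervention = [MAX_TOTAL] * 42 + [65] * 31
    print(round(percent_maximal(baseline_intervention), 1))  # -> 57.5
```

Dichotomizing discards the distinction between, say, 69 and 50, but it sidesteps the ceiling effect that compresses mean scores near the top of the scale.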

We assessed differences between the intervention and control groups using the Mann-Whitney U test for non-normally distributed variables and the chi-square (χ2) test for categorical variables. We used a generalized estimating equation (GEE) logistic regression model with an unstructured working correlation matrix to compare proportions of maximal CAT scores (score = 70) between the intervention and control groups. This model accounts for clustering of questionnaires within the same medical student, both at baseline (weeks 1–2 of the clerkship) and after the intervention (weeks 3–4 of the clerkship). It also adjusts for the variability in the number of CAT questionnaires per student and for the correlation among questionnaires from the same student. This helps to achieve an unbiased estimate in situations such as the following hypothetical one: one or more students in the intervention group are extremely responsive to the training and also have more questionnaires than others. P-values < 0.05 were considered statistically significant. We also calculated the percentage of students who reported having received direct patient feedback previously in medical school. All statistical analyses were conducted using SPSS 25.0 (IBM Corp, Armonk, NY).
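To illustrate what the GEE model estimates, one plausible specification is sketched below. It is our assumption, not the authors’ stated model: we take a separate marginal logistic model for each period with a single group indicator. Let $Y_{ij} = 1$ if questionnaire $j$ of student $i$ scored 70, and $G_i = 1$ if student $i$ was in the intervention group.

$$
\operatorname{logit} \Pr(Y_{ij} = 1) = \beta_0 + \beta_1 G_i, \qquad \mathrm{OR} = e^{\beta_1}
$$

$$
\sum_{i=1}^{K} D_i^{\top} V_i^{-1} \bigl(Y_i - \mu_i(\beta)\bigr) = 0, \qquad V_i = A_i^{1/2} R_i(\alpha) A_i^{1/2}
$$

Here $K$ is the number of students, $Y_i$ and $\mu_i(\beta)$ are the vectors of observed outcomes and fitted probabilities for student $i$’s questionnaires, $D_i = \partial \mu_i / \partial \beta^{\top}$, $A_i = \operatorname{diag}\{\mu_{ij}(1 - \mu_{ij})\}$, and $R_i(\alpha)$ is the working correlation matrix (specified as unstructured in this study). The working correlation, together with the robust (sandwich) variance estimator that GEE software typically reports, keeps a student with many questionnaires from counting as that many independent observations. On this scale, the reported OR of 1.65 corresponds to $\beta_1 = \ln 1.65 \approx 0.50$.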

RESULTS

We enrolled 64 students during the study period: 37 in the control group and 27 in the intervention group. All students confirmed that they had watched the video. There were no major differences between groups in gender, home vs visiting status, or percentage of students applying to EM (Table 1). A total of 321 CAT questionnaires were administered, with a median of five questionnaires per student (IQR 3–7). In the first half of the clerkship, the percentage of questionnaires with the maximum CAT score was similar between the intervention and control groups: 57.5% and 59.7%, respectively. In the second half of the clerkship, students in the intervention group achieved a maximum score more often than the control group: 62.3% and 51.1%, respectively (Table 2).

Table 1.

Demographic characteristics of medical students in the emergency medicine clerkship multimodal communication curriculum.

| Characteristic | Intervention (n=27) | Control (n=37) |
|---|---|---|
| Male, n (%) | 16 (59) | 22 (59) |
| Home institution medical students, n (%) | 8 (30) | 12 (32) |
| Visiting medical students, n (%) | 19 (70) | 25 (68) |
| Number of medical schools represented | 16 | 22 |
| Students applying to EM, n (%) | 19 (70) | 26 (70) |

EM, emergency medicine.

In the GEE logistic regression model, there was no difference between groups before the intervention (weeks 1–2) (odds ratio [OR] 0.70; 95% confidence interval [CI], 0.44–1.13; p = 0.148). During the second half of the clerkship (weeks 3–4), students in the intervention group achieved a maximum score more often than those in the control group (OR 1.65; 95% CI, 1.14–2.41; p = 0.008) (Table 3). Representative patient feedback comments are displayed in Table 4. On the post-clerkship survey, 27% of students in our study reported having received patient feedback previously in medical school.

Table 3.

Odds ratio for maximal Communication Assessment Tool score (score = 70) for intervention versus control group questionnaires, at baseline (weeks 1–2) and after intervention (weeks 3–4).

| Period | OR | 95% CI | P value |
|---|---|---|---|
| Weeks 1–2 | 0.70 | 0.44–1.13 | 0.148 |
| Weeks 3–4 | 1.65 | 1.14–2.41 | 0.008 |

CI, confidence interval; OR, odds ratio.

Table 2.

Univariate analysis of intervention group vs control group.

| Variable | Total (n students=64, n questionnaires=321) | Intervention (n students=27, n questionnaires=150) | Control (n students=37, n questionnaires=171) | P value |
|---|---|---|---|---|
| Questionnaires per student, median (IQR) | 5 (3–7) | 5 (3–7) | 4 (3–6) | 0.202 |
| Questionnaires with maximal score at baseline (weeks 1–2), n (%) among total questionnaires (n) | 88 (58.7) (n=150) | 42 (57.5) (n=73) | 46 (59.7) (n=77) | 0.784 |
| Questionnaires with maximal score after intervention (weeks 3–4), n (%) among total questionnaires (n) | 96 (56.1) (n=171) | 48 (62.3) (n=77) | 48 (51.1) (n=94) | 0.139 |

n, number; IQR, interquartile range.
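The univariate proportions in Table 2 can be re-tested with a Pearson chi-square test on 2 × 2 counts, taking sub-maximal counts as total minus maximal (for example, 73 - 42 = 31). This sketch assumes no continuity correction and uses a hypothetical helper name. It treats every questionnaire as independent, which is exactly the assumption the GEE model relaxes, so it illustrates the univariate comparison rather than the primary analysis.

```python
import math
from typing import Tuple


def chi_square_2x2(a: int, b: int, c: int, d: int) -> Tuple[float, float]:
    """Pearson chi-square test without continuity correction for a 2x2 table.

    Rows: intervention, control. Columns: maximal, sub-maximal.
        [[a, b],
         [c, d]]
    Returns (statistic, p_value) with 1 degree of freedom.
    Treats every questionnaire as independent (no clustering by student).
    """
    n = a + b + c + d
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    if denom == 0:
        raise ValueError("a row or column total is zero")
    stat = n * (a * d - b * c) ** 2 / denom
    # With 1 df the statistic is a squared standard normal, so
    # P(X > stat) = erfc(sqrt(stat / 2)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value


if __name__ == "__main__":
    # Sub-maximal counts = total - maximal, from Table 2.
    print(chi_square_2x2(42, 73 - 42, 46, 77 - 46))  # weeks 1-2
    print(chi_square_2x2(48, 77 - 48, 48, 94 - 48))  # weeks 3-4
```

With these counts the sketch gives P values of about 0.784 and 0.139, in line with Table 2, which is consistent with (though does not confirm) an uncorrected chi-square test for those rows.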

DISCUSSION

We demonstrated successful deployment of a multimodal curriculum consisting of an asynchronous online video coupled with direct patient feedback to teach and assess student communication skills in an EM clerkship. To the best of our knowledge, this is the first dedicated curriculum that incorporates direct patient feedback in the clinical clerkship years.

It is interesting to note that while the proportion of questionnaires with a maximal CAT score increased in the intervention group from the first to the second half of the clerkship (57.5% to 62.3%), it decreased in the control group (59.7% to 51.1%). The reason for this decline in the control group is difficult to discern. One possibility is that it parallels the decline in interpersonal skills previously demonstrated over the course of medical school.12,13 It is possible that all students’ communication skills decline over a one-month clerkship and that our curriculum mitigated this decline in the intervention group. Alternatively, the decline may reflect sampling error given the relatively small study population.

Undergraduate medical education curricula for teaching communication skills typically rely on traditional modalities such as structured interview frameworks, simulation, and seminars. Systematic, standardized techniques such as the Calgary-Cambridge Observation Guide and the CLASS protocol have been used to frame the patient interview with a focus on optimizing communication.23–25 Simulation is widely employed as an educational modality to improve learners’ communication skills, and its feasibility has been demonstrated through learner self-assessment surveys.26,27 Rucker et al. developed a longitudinal communication curriculum for medical students consisting of seminars and videotaped interactions; after its initiation, students’ communication scores improved significantly on an objective structured clinical examination (OSCE).28 The findings of our study add to the existing literature by offering another potential educational modality for teaching communication skills in the clerkship years.

In terms of assessment, standardized patients and direct observation are commonly used to evaluate the communication skills of EM students,23 and substantial evidence demonstrates their feasibility.29,30 These modalities have limitations for day-to-day use, however. Standardized patients often require substantial scheduling effort, nonclinical workspace, and monetary cost. These modalities may also introduce observer bias, as the interaction is judged by someone other than the primary participants in the doctor-patient relationship. While the OSCE is an important means of evaluation, it still suffers from variability in rating scales.31 Using the patient as the assessor may reduce the resources and funding these more traditional modalities often require. It also allows for a greater number of evaluative encounters, which may in turn increase the feedback students receive. While our study used RAs, an ED attending, nurse, or technician could administer the CAT, as administering each survey took approximately five minutes. This strategy could avoid the extra cost of RAs and make the program more feasible to implement. The breakdown of time and monetary costs can be found in Appendix 2.

On a broader scale, there are limited data on the use of direct patient feedback to improve communication skills in EM. In a recent prospective, randomized pilot study of EM attending physicians, an intervention using monthly email feedback and face-to-face meetings on Press-Ganey scores did not improve provider patient-satisfaction scores compared to the control group.32 These findings contrast with our results. The reasons are unclear, but inherent differences in the content assessed by Press-Ganey and the CAT, as well as differences in the purposes for which these tools are used, may contribute. Further studies are needed to assess the effect of patient feedback on clinicians across all levels of training and practice. Notably, in our post-clerkship survey, the large majority of students (73%) had never received direct patient feedback in their medical school training up to that point, which underscores the novelty of our approach. This further highlights the potential role for this type of curriculum in undergraduate medical education.

Perhaps one of the more interesting aspects of our curriculum is its potential for incorporation into a 360-degree student evaluation. Prior studies have demonstrated successful implementation of multi-source, workplace-based assessment programs including patient feedback in various clinical settings.33,34 Data on whether these programs lead to improved performance are mixed, although physicians generally rate them positively.35 The ACGME has suggested the use of multi-source feedback and multiple evaluators for assessing trainees’ competencies across multiple domains.36 As healthcare continues to move toward a patient-centered model, incorporating the patient’s perspective into assessment is critical to the development of future physicians. In a prospective study of pediatric residents, faculty and nurses rated the trainees higher on professionalism and interpersonal skills than did patients and families.37 Further investigations are needed to determine how patient ratings compare to those of faculty and other healthcare providers. Including the patient’s view in student evaluations may add depth to feedback and provide a specific focus for improvement.

LIMITATIONS

First, this was a proof-of-concept, single-center study with a relatively small sample size, which may limit extrapolation to other institutions. We believe, however, that the fact that medical students in our study came from 31 different medical schools adds heterogeneity to our population and may enhance generalizability. Second, only patients discharged from the ED were included in our study, as we did not want to affect administration of the Hospital Consumer Assessment of Healthcare Providers and Systems (HCAHPS) survey to admitted patients.38 This skews our patient population toward lower acuity, and therefore we cannot draw conclusions about medical student communication with higher-acuity patients. Third, the inherent ceiling effect in CAT scores (the tendency of surveyed patients to give high scores) may further attenuate differences between groups.

Fourth, because the clerkship lasts one month, post-intervention measures were collected immediately after the curriculum was delivered to the intervention group. A future study in which post-intervention CAT scores are collected at a later time is needed to assess for a washout effect. Fifth, the Hawthorne effect may have influenced both student performance and patient responses. We attempted to minimize this effect on student performance by notifying all students at the beginning of the clerkship that we would be gathering patient feedback. Finally, because our curriculum is multimodal, we could not discern the extent to which the patient feedback, the video module, or the feedback discussion with the clerkship directors contributed to the observed outcome.

Table 4.

Representative patient free-response comments on emergency medicine clerkship students’ communication skills.

| Patient comment |
|---|
| “Appreciate how personable he was. He could elaborate more when he comes to the follow-up information.” |
| “He was very attentive and took time to explain things clearly.” |
| “He was good. But really the attending doctor gave me much more detailed information.” |
| “She has good communication skills, she is very friendly, and she has a general concern for helping patients.” |
| “She didn’t give me all of the information I wanted to know. She seemed very nervous and a bit uncomfortable.” |
| “She was excellent. At first I was unsure about a med student, but she actually spent a lot of time with me. She was very thorough and is an excellent physician.” |
| “I had felt very upset about my accident, and she made me feel much better. She legitimized my concerns and feelings 100%.” |
| “She listened attentively.” |
| “My suggestion would be to make sure that any information he has or knows is explained to me, the patient.” |

CONCLUSION

A multimodal curriculum incorporating asynchronous learning and direct patient feedback is a feasible modality for teaching and assessing medical student communication skills in a fourth-year EM clerkship. Students in the intervention group attained higher patient ratings on communication skills compared to the control group. Undergraduate medical educators should consider using this novel approach in teaching and assessing communication and interpersonal skills.

Supplementary Information

Footnotes

Section Editor: Andrew W. Phillips, MD, MEd

Full text available through open access at http://escholarship.org/uc/uciem_westjem

Conflicts of Interest: By the WestJEM article submission agreement, all authors are required to disclose all affiliations, funding sources and financial or management relationships that could be perceived as potential sources of bias. This study was funded by the Eleanor and Miles Shore Fellowship Program for Scholars in Medicine, Department of Emergency Medicine, Beth Israel Deaconess Medical Center. There are no conflicts of interest to declare.

REFERENCES

- 1. Anhang Price R, Elliott MN, Zaslavsky AM, et al. Examining the role of patient experience surveys in measuring health care quality. Med Care Res Rev. 2014;71(5):522–54. doi: 10.1177/1077558714541480.

- 2. Hauck FR, Zyzanski SJ, Alemagno SA, et al. Patient perceptions of humanism in physicians: effects on positive health behaviors. Fam Med. 1990;22(6):447–52.

- 3. Kim SS, Kaplowitz S, Johnston MV. The effects of physician empathy on patient satisfaction and compliance. Eval Health Prof. 2004;27(3):237–51. doi: 10.1177/0163278704267037.

- 4. Hojat M, Louis DZ, Markham FW, et al. Physicians’ empathy and clinical outcomes for diabetic patients. Acad Med. 2011;86(3):359–64. doi: 10.1097/ACM.0b013e3182086fe1.

- 5. Levinson W, Roter DL, Mullooly JP, et al. Physician-patient communication: the relationship with malpractice claims among primary care physicians and surgeons. JAMA. 1997;277(7):553–9. doi: 10.1001/jama.277.7.553.

- 6. Boissy A, Windover AK, Bokar D, et al. Communication skills training for physicians improves patient satisfaction. J Gen Intern Med. 2016;31(7):755–61. doi: 10.1007/s11606-016-3597-2.

- 7. Rhodes KV, Vieth T, He T, et al. Resuscitating the physician-patient relationship: emergency department communication in an academic medical center. Ann Emerg Med. 2004;44(3):262–67. doi: 10.1016/j.annemergmed.2004.02.035.

- 8. Schwartzstein RM. Getting the right medical students: nature versus nurture. N Engl J Med. 2015;372(17):1586–7. doi: 10.1056/NEJMp1501440.

- 9. Core Competencies for Entering Medical Students. Association of American Medical Colleges website. [Accessed July 3, 2017]. Available at: https://www.staging.aamc.org/initiatives/admissionsinitiative/competencies/

- 10. Ten Cate O, Scheele F. Competency-based postgraduate training: Can we bridge the gap between theory and clinical practice? Acad Med. 2007;82(6):542–7. doi: 10.1097/ACM.0b013e31805559c7.

- 11. Englander R, Cameron T, Ballard AJ, et al. Toward a common taxonomy of competency domains for the health professions and competencies for physicians. Acad Med. 2013;88(8):1088–94. doi: 10.1097/ACM.0b013e31829a3b2b.

- 12. Hook KM, Pfeiffer CA. Impact of a new curriculum on medical students’ interpersonal and interviewing skills. Med Educ. 2007;41(2):154–9. doi: 10.1111/j.1365-2929.2006.02680.x.

- 13. Hirsh D, Ogur B, Schwartzstein R. Can changes in the principal clinical year prevent the erosion of students’ patient-centered beliefs? Acad Med. 2009;84(5):582–6. doi: 10.1097/ACM.0b013e31819fa92d.

- 14. Thomas PA, Kern DE, Hughes MT, et al. Curriculum Development for Medical Education – A Six-Step Approach. Baltimore, MD: The Johns Hopkins University Press; 1998.

- 15. Burnette K, Ramundo M, Stevenson M, et al. Evaluation of a web-based asynchronous pediatric emergency medicine learning tool for residents and medical students. Acad Emerg Med. 2009;16(Suppl2):S46–50. doi: 10.1111/j.1553-2712.2009.00598.x.

- 16. Raupach T, Grefe C, Brown J, et al. Moving knowledge acquisition from the lecture hall to the student home: a prospective intervention study. J Med Internet Res. 2015;17(9):e223. doi: 10.2196/jmir.3814.

- 17. Makoul G, Krupat E, Chang CH. Measuring patient views of physician communication skills: development and testing of the communication assessment tool. Patient Educ Couns. 2007;67(3):333–42. doi: 10.1016/j.pec.2007.05.005.

- 18. Stausmire JM, Cashen CP, Myerholtz L, et al. Measuring general surgery residents’ communication skills from the patient’s perspective using the communication assessment tool (CAT). J Surg Educ. 2015;72(1):108–16. doi: 10.1016/j.jsurg.2014.06.021.

- 19. Myerholtz L, Simons L, Felix S, et al. Using the communication assessment tool in family medicine residency programs. Fam Med. 2010;42(8):567–73.

- 20. McCarthy DM, Ellison EP, Venkatesh AK, et al. Emergency department team communication with the patient: the patient’s perspective. J Emerg Med. 2013;45(2):262–70. doi: 10.1016/j.jemermed.2012.07.052.

- 21. Harris P, Taylor R, Thielke R, et al. Research electronic data capture (REDCap) – a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42(2):377–81. doi: 10.1016/j.jbi.2008.08.010.

- 22. Mercer LM, Tanabe P, Pang PS, et al. Patient perspectives on communication with the medical team: pilot study using the Communication Assessment Tool-Team (CAT-T). Patient Educ Couns. 2008;23(2):200–3. doi: 10.1016/j.pec.2008.07.003.

- 23. Hobgood CD, Riviello RJ, Jouriles N, et al. Assessment of communication and interpersonal skills competencies. Acad Emerg Med. 2002;9(11):1257–69. doi: 10.1111/j.1553-2712.2002.tb01586.x.

- 24. Kurtz SM, Silverman J, Draper J. The Calgary-Cambridge Observation Guides: an aid to defining the curriculum and organizing the teaching in communication training programs. Med Educ. 1996;30(2):83–9. doi: 10.1111/j.1365-2923.1996.tb00724.x.

- 25. Buckman R. How to Break Bad News: A Guide for Health Care Professionals. Baltimore, MD: Johns Hopkins University Press; 1992.

- 26. Nelson SW, Germann CA, MacVane CZ, et al. Intern as patient: a patient experience simulation to cultivate empathy in emergency medicine residents. West J Emerg Med. 2018;19(1):41–8. doi: 10.5811/westjem.2017.11.35198.

- 27. Williams-Reade J, Lobo E, Whittemore AA, et al. Enhancing residents’ compassionate communication to family members: A family systems breaking bad news simulation. Fam Syst Health. 2018;36(4):523–7. doi: 10.1037/fsh0000331.

- 28. Rucker L, Morrison E. A longitudinal communication skills initiative for an academic health system. Med Educ. 2001;35(11):1087–8.

- 29. Ditton-Phare P, Sandhu H, Kelly B, et al. Pilot evaluation of a communication skills training program for psychiatry residents using standardized patient assessment. Acad Psychiatry. 2016;40(5):769–75. doi: 10.1007/s40596-016-0560-9.

- 30. Trickey AW, Newcomb AB, Porrey M, et al. Assessment of surgery residents’ interpersonal communication skills: validation evidence for the communication assessment tool in simulation environment. J Surg Educ. 2016;73(6):e19–e27. doi: 10.1016/j.jsurg.2016.04.016.

- 31. Comert M, Zill JM, Christalle E, et al. Assessing communication skills of medical students in objective structured clinical examinations (OSCE)-a systematic review of rating scales. PLoS One. 2016;11(3):e0152717. doi: 10.1371/journal.pone.0152717.

- 32. Newgard CD, Fu R, Heilman J, et al. Using Press-Ganey provider feedback to improve patient satisfaction: a pilot randomized controlled trial. Acad Emerg Med. 2017;24(9):1051–9. doi: 10.1111/acem.13248.

- 33. Brinkman WB, Geraghty SR, Lanphear BP, et al. Effect of multi-source feedback on resident communication skills and professionalism: a randomized controlled trial. Arch Pediatr Adolesc Med. 2007;161(1):44–9. doi: 10.1001/archpedi.161.1.44.

- 34. Lockyer J, Violato C, Fidler H. Likelihood of change: a study assessing surgeon use of multisource feedback data. Teach Learn Med. 2003;15(3):168–74. doi: 10.1207/S15328015TLM1503_04.

- 35. Miller A, Archer J. Impact of workplace based assessment on doctors’ education and performance: a systematic review. BMJ. 2010;341:c5064. doi: 10.1136/bmj.c5064.

- 36. Accreditation Council for Graduate Medical Education. Advancing education in interpersonal and communication skills: an educational resource from the ACGME Outcome Project. [Accessed June 14, 2018]. Available at: http://www.acgme.org/outcome/assess/toolbox.asp.

- 37. Chandler N, Henderson G, Park B, et al. Use of a 360-degree evaluation in the outpatient setting: the usefulness of nurse, faculty, patient/family, and resident self-evaluation. J Grad Med Educ. 2010;2(3):430–4. doi: 10.4300/JGME-D-10-00013.1.

- 38. HCAHPS: Hospital Consumer Assessment of Healthcare Providers and Systems. 2018. [Accessed June 15, 2018]. Available at: http://www.hcahpsonline.org/en/quality-assurance.
