Cuttlefish use image disparity between the two eyes to extract depth information when hunting.

Abstract

The camera-type eyes of vertebrates and cephalopods exhibit remarkable convergence, but it is currently unknown whether the mechanisms for visual information processing in these brains, which exhibit wildly disparate architecture, are also shared. To investigate stereopsis in a cephalopod species, we affixed “anaglyph” glasses to cuttlefish and used a three-dimensional perception paradigm. We show that (i) cuttlefish have also evolved stereopsis (i.e., the ability to extract depth information from the disparity between left and right visual fields); (ii) when stereopsis information is intact, the time and distance covered before striking at a target are shorter; (iii) stereopsis in cuttlefish works differently to vertebrates, as cuttlefish can extract stereopsis cues from anticorrelated stimuli. These findings demonstrate that although there is convergent evolution in depth computation, cuttlefish stereopsis is likely afforded by a different algorithm than in humans, and not just a different implementation.

INTRODUCTION

Cephalopods are visually driven hunters, with renowned cognitive and camouflage abilities and a brain so dissimilar from ours that the underlying logic remains to be understood. For example, thus far, the cephalopod brain is believed to lack somatotopy (the ordered topographic projection of a sensory surface) (1), a key feature of neural organization in vertebrates and invertebrate brains. However, the camera-type eyes of cephalopods exhibit noteworthy convergent evolution to those of vertebrates, including a cornea, lens, iris, vitreous cavity, and retina (2). Thus, their control and the processing of visual information that they acquire provide an ideal opportunity for understanding whether this convergence also extends to computations and algorithms [e.g., (3)] and how this is differently implemented in the cephalopod brain. To investigate this, we used a three-dimensional (3D) perception paradigm and tested whether the European cuttlefish Sepia officinalis can extract depth information from the disparities between the left and right images (i.e., stereopsis). We chose to study stereoscopic vision because a large body of knowledge exists for vertebrate stereopsis, notably in primates (4, 5). The “anaglyph” glasses paradigm recently used by Nityananda et al. (6) demonstrating stereoscopic vision in the praying mantis inspired us to pursue a similar line of experiments in the cuttlefish. Sepia stand uniquely as an invertebrate group with camera-type eyes that could show convergence in this computation. Our hypothesis that cuttlefish use stereopsis stems from the substantial binocular overlap they produce through ocular vergence (7), whereas neither squid nor octopuses are thought to use binocular mechanisms to resolve depth (8, 9). Moreover, Sepia cuttlefish require a precise distance estimation strategy when they strike using two tentacles to apprehend prey by gripping them with suckers (7) before subduing them through the use of biting and injecting toxins (10). 
When hunting, eye vergence allows their binocular overlap to increase up to 75° (7). Thus, stereopsis may play a role during hunting in this animal: This is a goal-directed behavior where visual cues could be manipulated, interactions with other cues could be assessed, and any failures could be observed.

There is some evidence that cuttlefish perceive depth: They (i) are able to differentiate real objects from a photograph despite the strong similarities of pictorial cues (11), (ii) make use of shading and directional illumination cues (12), and (iii) use a saccadic movement strategy to estimate distance from translational optic flow (13). Thus, they share with humans the use of monocular cues (pictorial and motion parallax) to extrapolate 3D information from images (14). Although Messenger (7) reported that cuttlefish must determine the distance to their prey, he also found that monocular animals, made blind surgically, can approximate depth when hunting; thus, the role for binocular cues during the predatory hunt remained ambiguous. Here, to test our hypothesis that cuttlefish use stereopsis, we manipulated the perception of depth through stereopsis-only cues. To this end, we used a 3D cinema approach (6) by combining anaglyph (colored filter) glasses with a narrow-band light-emitting diode (LED) color monitor. The visual stimulus presented creates an illusory sense of depth to the animal, but only if it has the ability to use binocular disparity cues. Cuttlefish, like mantids, have been demonstrated to be color blind (15), having only one visual pigment expressed in their eyes. This makes using anaglyph glasses a powerful technique to investigate stereopsis vision. We found that cuttlefish use stereopsis while hunting as they altered their position in the tank relative to disparate cues and that intact binocular cues sped up prey capture. Thus, to understand how binocular signals are processed by the brain, we used moving shrimp patterns camouflaged in a background of dots. Cuttlefish correctly determine 3D location of shrimp camouflaged in correlated and anticorrelated backgrounds, but not uncorrelated. This prompted a final experiment where we tested whether binocular overlap was tightly regulated during hunting. 
To our surprise, we found that the eyes moved independently similar to chameleons and that eye vergence immediately before striking at prey differed by up to 10° from the midline.

RESULTS

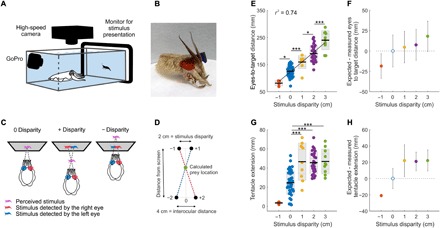

Cuttlefish use stereopsis when hunting prey

We presented cuttlefish with a movie, in which we introduced a shift between the left (red) and the right (blue) images of a walking shrimp (from here onward referred to as disparity). When this stimulus was detected through anaglyph glasses (Fig. 1, A to D, figs. S1 to S3, and movie S1), cuttlefish adjusted their position relative to the screen (Fig. 1E and movie S2). Although we presented positive and negative disparities in random order, the larger the disparity, the farther cuttlefish positioned themselves from the screen before striking at prey and vice versa (Fig. 1E). This suggests that cuttlefish use binocular cues to adjust their prey-striking position. When binocular cues are at play, the crossover point between the two images and the eyes should mark the expected location of the target in 3D (Fig. 1D). We found that for all tested disparities, animals placed themselves at a consistent distance from the illusory expected 3D location of the prey (Fig. 1F). Moreover, we found that the tips of the tentacles extended significantly farther for 2-cm disparity stimuli versus 0-cm disparity [45.59 ± 11.50 mm and 24.64 ± 12.47 mm (mean ± SD), respectively; P < 0.0001; Fig. 1G]. This is because the tank wall in front of the computer monitor in the 0-cm treatment stops the tentacles, while in the 2-cm treatment, the tentacles extend past the perceived object. Because cuttlefish routinely exert sufficient force to strike their tentacles past a selected prey (7), our data further suggest that those animals that experienced positive disparity stimuli expected to hit the prey approximately 20 mm shorter than the full tentacle extension (Fig. 1, G and H).

Fig. 1. Anaglyph glasses combined with 3D disparate stimuli demonstrate perception of stereopsis in cuttlefish.

(A) Experimental setup for tracking cuttlefish hunting behavior when presented with a prey stimulus. (B) Cuttlefish fitted with experimental anaglyph 3D colored glasses (see movies S1 and S2). Photo credit: Rachael Feord, University of Cambridge. (C) Stereoscopic stimulus geometry for the three disparity conditions. (D) Methodology used to calculate the illusory prey location using (i) the distance between the images at the screen (disparity), (ii) the interocular distance (measured for each animal), and (iii) the eyes to screen distance. (E) Distance from the animal’s eyes to the screen at the beginning of the ballistic part of the strike for a range of stimulus disparities. The stimulus image disparity range was −1 to +3 cm, with 4, 39, 9, 29, and 10 trials, respectively (n = 5). Significant differences are noted with star values, with the P values from left to right: *P = 0.0123, ***P = 0.0041, *P = 0.0161, and ***P < 0.0001 [one-way analysis of variance (ANOVA)]. Black line: mean; inner gray box: SEM; outer gray box: SD. (F) For each stimulus disparity and trial, the expected cuttlefish position when stereopsis is used [calculation method is shown in (D)] was subtracted from the measured position (P = 0.2490, 0.8897, 0.7498, and 0.4008; bootstrap test). (G) Length of tentacle extension for each stimulus disparity (P values from left to right: 0.0041, <0.0000, and <0.0000, one-way ANOVA). (H) For each stimulus disparity and trial, the difference between the measure and the expected length of tentacle extension was calculated (values are taken from 0 disparity; P < 0.0001, P = 0.2236, P = 0.0356, and P < 0.0001; bootstrap test). For (E) to (H), n = 2, 5, 2, 5, and 2 for −1-, 0-, 1-, 2-, and 3-cm disparities, respectively.

Cuttlefish responded to the 3D stimulus in the same manner when the image of the shrimp was walking or swimming and when we reversed the stimulus contrast (i.e., a dark shrimp against a light background or vice versa; fig. S4, A to D). Therefore, the data presented here (Fig. 1) combine trials from all these conditions. We also tested whether the animal’s head orientation relative to the target, at the start of the ballistic strike, could have affected the distance to target findings. There was no correlation between the head orientation and the distance from eyes to target. This was consistent across the range of disparities tested (fig. S5A). In addition, we found that the tentacles were consistently directed perpendicular to the line between the center of the eyes (r² = 0.908; fig. S5B).

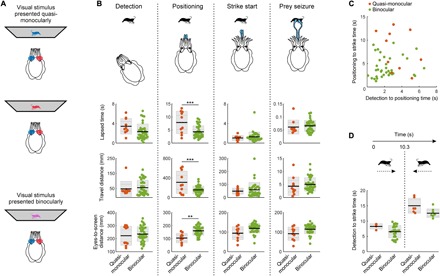

Intact binocular cues speed up prey capture

To investigate how binocular cues might improve predation by cuttlefish, we presented them with only one of the two colored images, thus creating a quasi-monocular stimulation [i.e., not fully monocular as there is photon leakage through the filters used for the anaglyph glasses; Fig. 2A]. We found that during the positioning phase, quasi-monocularly stimulated animals (via anaglyph goggles rather than monocular occlusions) took longer to strike, traveled farther, and struck at prey closer than animals stimulated binocularly [one-way analysis of variance (ANOVA); P < 0.0001, P < 0.0001, and P = 0.0018, respectively; Fig. 2B positioning]. For the other phases of attack (detection, striking, and seizure), behavioral responses to quasi-monocular stimuli were similar to binocular stimuli (Fig. 2B and movie S3). Thus, the reduced field of view in quasi-monocularly stimulated animals did not result in longer detection times. Furthermore, the duration of the detection-to-positioning phase and that of the positioning-to-strike phase were uncorrelated for both quasi-monocularly and binocularly stimulated animals (Fig. 2C). The initial distance from the target had no obvious impact on the time taken to strike by quasi-monocularly and binocularly stimulated cuttlefish (fig. S5C). In addition, although quasi-monocularly stimulated animals took longer to strike after the target had reversed in direction, these two datasets did not differ significantly (Fig. 2D).

Fig. 2. Binocular vision improves hunting behavior.

(A) Cuttlefish fitted with anaglyph 3D colored glasses enabled presentations of quasi-monocular and binocular visual stimuli (see movie S3). (B) Quantification of lapsed time, distance traveled, and distance from the animal’s eyes to the screen from quasi-monocularly (n = 3) and binocularly stimulated (n = 5) animals for four stages of the hunt: (i) detection = from stimulus appearance to first reaction, (ii) positioning = from first reaction to tentacles showing, (iii) strike start = from tentacles showing to beginning of ballistic strike, and (iv) prey seizure = from the ballistic start of the strike to animal contact. From one-way ANOVA, ***P < 0.0001 (top), ***P < 0.0001 (middle), and **P = 0.0018 (bottom). Black line: mean; inner gray box: SEM; outer gray box: SD. (C) Relationship between the time lapsed (i) until stimulus detection and (ii) between detection and positioning, combined quasi-monocular and binocular data: r² = 0.0114; quasi-monocular alone (n = 3): r² = 0.1331; binocular alone (n = 5): r² = 0.0162. (D) Total lapsed time from stimulus presentation until “strike start” for quasi-monocular and binocular experiments. To test for the effect of target reversal, trials were categorized into those where the animal struck at prey before (left) or after (right) the direction of travel of the stimulus reversed (at 10.3 s). Quasi-monocular and binocular groups did not differ significantly [P = 0.3518 (left group) and 0.1040 (right group), one-way ANOVA].

Cuttlefish also perceive 3D locations correctly when stimuli are anticorrelated between the two eyes, but not uncorrelated

To probe the binocular mechanism that cuttlefish use for their hunts, we made stimulus videos with a random-dot pattern for the background and shrimp silhouettes, following the method presented by Nityananda et al. (16). We tested three conditions—the white-black dotty patterns of the left and right images were either correlated, anticorrelated (to test the role of local luminance), or uncorrelated (to test whether interocular correspondence is at all required) (Fig. 3, A and B, and movie S4). In these stimuli, the shrimp was indistinguishable from the background in any one monocular frame, and the random-dot pattern that fills the shrimp is fixed as it moves. In the case of correlated stimuli, the dotty visual scene is identical as perceived by both eyes (Fig. 3A). For the anticorrelated stimuli, we reversed the luminance of the dots from one eye to the other (Fig. 3B). When the stimulus was uncorrelated, the pattern of dots differed between the left and right eyes. We randomized disparity presentations so that animals could not have simply learned 3D prey locations. Of the 11 cuttlefish that had been successfully trained to strike at the moving shrimp stimuli on a monitor, 5 animals did not pursue any of the dotty shrimps and were excluded from this analysis. The remaining six animals consistently struck at the correlated and anticorrelated stimuli among trials and across days. However, they never struck at uncorrelated stimuli, despite consistently engaging in the early stages of the hunt (movie S4). When responding to the dotty pattern stimuli, animals adjusted their position in the tank such that they struck at the expected 3D location of the shrimp in both the correlated (Fig. 3A and movie S4) and anticorrelated (Fig. 3B) conditions.
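The three interocular conditions described above can be illustrated with a minimal Python sketch. It is not the stimulus-generation code used in the study: the function names, the ±1 luminance coding, and the dot count are hypothetical choices made for illustration only.

```python
import random

def make_dot_patterns(n_dots, condition, seed=0):
    """Return (left, right) lists of dot luminances (+1 bright, -1 dark).

    Hypothetical helper: the +1/-1 coding and the seed are illustration choices.
    """
    rng = random.Random(seed)
    left = [rng.choice((-1, 1)) for _ in range(n_dots)]
    if condition == "correlated":
        right = list(left)                  # identical pattern in both eyes
    elif condition == "anticorrelated":
        right = [-v for v in left]          # luminance reversed between eyes
    elif condition == "uncorrelated":
        # independent pattern: no dot-by-dot correspondence between eyes
        right = [rng.choice((-1, 1)) for _ in range(n_dots)]
    else:
        raise ValueError(f"unknown condition: {condition}")
    return left, right

def interocular_match(left, right):
    """Mean product of the two patterns: +1 (same), -1 (reversed), near 0 (unrelated)."""
    return sum(l * r for l, r in zip(left, right)) / len(left)
```

In this toy coding, the correlated and anticorrelated pairs differ only in the sign of the match between eyes, whereas the uncorrelated pair carries no dot-by-dot correspondence at all.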

Fig. 3. Cuttlefish perceive 3D locations correctly when stimuli are anticorrelated between the two eyes, but not uncorrelated.

(A) Top: The stimulus contrast was correlated between the right and left eyes (e.g., at 0-cm disparity, left green + right blue = cyan stimulus). Using this principle, we generated stimuli where a shrimp silhouette was filled with a random pattern of dark and bright dots against a background of random dark and bright dots, i.e., the shrimp was indistinguishable from the background in any one monocular frame (shrimp outline was added here only for display purpose). Middle: Distance from the animal’s eyes to the screen at the beginning of the ballistic part of the strike for no disparity and for 2-cm disparity tests (middle: ***P < 0.0001, one-way ANOVA). Bottom: Distance of each group from the expected value (0 cm used as control; P = 0.730, bootstrap test; see movie S3). (B) Top: Test as in (A), but with the stimulus contrast anticorrelated between the left and right eyes. Middle: As in (A), ***P < 0.0001, one-way ANOVA. Bottom: P = 0.499, bootstrap test. Black line: mean; inner gray box: SEM; outer gray box: SD. n = 6 and 3 for 0- and 2-cm correlated disparities, and n = 5 and 4 for 0- and 2-cm anticorrelated disparities.

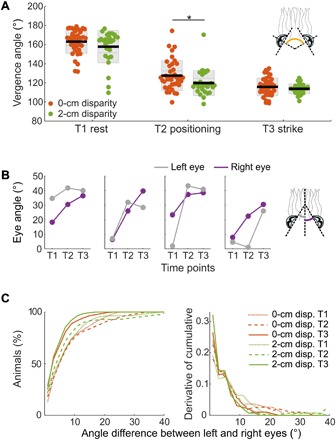

Cuttlefish have independent eye movements, and their eyes are not equally converged before strike

Stereopsis and eye movement control are tightly linked in mammals: If independent movements generate a large disparity between the two images, the correspondence problem becomes unsolvable and results in fusion failure (17). We chose to investigate eye movement control in cuttlefish as they also display conjugate eye movements (i.e., they preserve the angular relationship between eyes) (7, 18), yet we found that the angular movement of the eyes can be independent (unyoked) during different phases of the hunt (Fig. 4, A to C, and figs. S6 and S7). From detection to striking at the prey, eye vergence angle significantly increased (T1 to T2: P = 1.90 × 10⁻²⁸; T2 to T3: P = 1.45 × 10⁻⁵; T1 to T3: P = 2.80 × 10⁻⁴⁷; Fig. 4A). We found little difference in eye vergence between animals viewing 0- and 2-cm disparity stimuli at the start (T1: P = 0.15) and at the end (T3: P = 0.30) of the hunt, but eye vergence differed significantly during positioning (T2: P = 0.04; Fig. 4A). When we viewed the raw data, we found examples where eye angle did not consistently increase across the three time points as the hunt progressed (Fig. 4B and figs. S6 and S7). At striking, 5% of the animals still had a 10° or greater difference between the left and the right eye angular positions for both 0- and 2-cm disparity stimuli, as shown by the derivative of the cumulative distribution of angle difference between the left and right eyes (Fig. 4C).

Fig. 4. Cuttlefish have independent eye movements, and their eyes are not equally converged before the strike.

(A) Eye vergence angle at three time points during predatory behavior for 0- and 2-cm disparity stimuli where 0° is the eye looking laterally. T1: immediately before shrimp presentation; T2: after animal has rotated its body to view the screen and is moving forward; *P < 0.05; T3: during ballistic tentacle shoot; P = 0.15, 0.04, and 0.30, respectively, one-way ANOVA with time points. (B) Four randomly chosen examples of the eye angle of the two eyes at the three time points in the trial (see figs. S6 and S7 for data from all trials). (C) For each 0- and 2-cm disparity stimulus trial, the difference between the angular positions of the two eyes at the three time points was calculated, here shown as the cumulative percentage of animals (left) and its derivative (right). For (A) and (C), n = 5 and 5 for 0- and 2-cm disparities, respectively.

DISCUSSION

To ensure that cuttlefish hit their prey successfully, they must acquire information about its location before the strike. Here, we show that cuttlefish use the disparity between their left and right eyes to perceive depth (Fig. 1). Cuttlefish use this information to aid in prey capture, as animals with intact binocular vision take less time to strike at prey and do so from farther away (Fig. 2). In animals tested with quasi-monocular stimuli, the significant difference in latency, travel distance, and strike location during the positioning phase is consistent with Messenger’s study (7), as he found that attack success in unilaterally blinded animals decreased to 56% (versus 91% in binocular animals). Nonetheless, binocular cues cannot be the only depth perception mechanism used by the cuttlefish, as many quasi-monocularly and binocularly stimulated animals behaved equally well, both in Messenger’s study and ours. The absence of pictorial cues in our stimulus (the shrimp silhouette lacks any shadowing, shading, or occlusion) leads us to conclude that for monocular but not binocular depth perception, cuttlefish may rely on motion cues such as parallax (13) and/or motion in depth (19).

Before our investigation, cuttlefish were not known to use stereopsis (i.e., calculate depth from disparity between left and right eye views). They had been shown only to have a variable range of binocular overlap (7). Using anaglyph glasses and this 3D perception assay, we provide strong support that cuttlefish have and use stereopsis during the positioning to prey seizure phases of the hunt. However, as suggested by Messenger (7), other depth estimation strategies, such as oculomotor proprioceptive cues provided by the vergence of the two eyes (20, 21), could be at play. Accommodation cues, as used by chameleons to judge distance (22), could also provide an additional explanation as lens movements have been observed in cuttlefish (23). However, if cuttlefish were using proprioceptive or accommodation cues for depth estimation, it should not have failed, as it did, when the animals were presented with a completely uncorrelated stimulus, i.e., each eye should still fixate and converge on the moving target without requiring correspondence between the images (Fig. 3). We observed that cuttlefish consistently engaged and reached the positioning phase when presented with uncorrelated stimuli, but they quickly aborted and never advanced to the striking phase of the hunt (movie S4). Because they could not solve the uncorrelated stimuli test, we conclude that cuttlefish rely on interocular correspondence to integrate binocular cues and do not simply use binocular optomotor cues (vergence) or accommodation to estimate depth. This also indicates that cuttlefish stereopsis is different from praying mantis (also known as mantids) stereopsis, because mantids can resolve targets based on “kinetic disparity” (the difference in the location of a moving object between both eyes) (16). Mantids can do this in the absence of “static disparity” provided by the surrounding visual scene, something which humans are unable to do (16).

To see how binocular overlap may play a role in stereopsis, we investigated eye convergence. A disparity difference of up to 10° between the left and right eye angular positions at the moment when they strike may seem large (Fig. 4). However, cuttlefish have a relatively low-resolution retina, 2.5° to 0.57° per photoreceptor (24). Thus, cuttlefish image disparity relative to their eye resolution is comparable to the relative magnitudes observed for these measures in vertebrates. Cuttlefish’s lower spatial resolution makes it plausible that they may also have neurons that encode disparity across a larger array of visual angles, as known to be the case in mice (25). To coordinate the relative positions of the left and right receptive fields for object tracking, cuttlefish may have evolved similar circuits as those used by chameleons for synchronous and disconjugate saccades (26, 27) and by rats for a greater overhead binocular field (28).

The evidence presented here establishes that cuttlefish make use of stereopsis when hunting and that this improves hunting performance by reducing the distance traveled and the time taken to strike at prey and by allowing the animal to strike from farther away. Further investigation is required to uncover the neural mechanisms underlying the computation of stereopsis in these animals.

METHODS

Animals

We conducted experiments using adult cuttlefish, S. officinalis, aged 22 to 24 months that originated from wild-collected eggs retrieved in the southern region of England, United Kingdom. We housed, fed, and reared animals in accordance with the methods detailed by Panetta et al. (29). We collected data from 11 adults. All procedures carried out in this study complied with the Marine Biological Laboratory (MBL) institutional recommendations for cephalopods (where the live animal work was undertaken).

Experimental setup

We developed a bioassay to train cuttlefish to hunt the image of shrimp presented on a screen. The setup (Fig. 1A) consisted of a computer monitor (Dell Ultrasharp LED U2413 24″ Premier Color) positioned against the side of a plastic tank (IRIS USA File Box, model no. 586490, 10.75 inches deep × 13.88 inches wide × 18.25 inches long) used as the experimental arena. Two cameras monitored the behavior of the animal. A high-speed camera (Photron SA3 or Photron FASTCAM Mini WX100 with Canon EF 24 to 70 mm f/2.8L USM macro lens) positioned over the tank captured the entirety of the arena at 250 or 500 frames/s. In addition, an underwater camera (GoPro Hero5 or Hero7 with Super Suit) placed inside the tank on the side opposite to the monitor provided an additional vantage point (see movie S1). After establishing the camera location and lens focus each day, we took an image of a ruler at the bottom of the tank before the experiments started. This served as the scale bar that allowed us to measure distances in x-y dimensions in the tank.

Over the course of the day, we exchanged the behavior arena seawater several times with water sourced from the Marine Resources Center (MRC) recirculating seawater system. Temperature varied by ~5°C maximum. We did not measure ammonia and pH throughout. Water quality changes were likely to be slow, and we noticed no trend with increased time out of the recirculating water system (hunting improved over time as the animals acclimated to the tank). Animals were motivated with live shrimp rewards and ate up to 30 grass shrimp (1.5 to 3 cm in length) per day.

Behavioral training

We withheld food for 2 days before training began to motivate hunting and expedite learning. During the initial training stages, a live grass shrimp (Palaemonetes vulgaris) reward was delivered to the cuttlefish for each attempt by the animal to engage with the image of a moving shrimp presented on the screen (black shrimp on a white background). In the subsequent training stages, shrimp rewards were restricted to trials during which the cuttlefish responded to the on-screen target by extending its tentacles, i.e., it entered hunting mode and was preparing to strike at a target deemed suitable to capture. Once this behavior became consistent, we affixed a Velcro patch (approximately 1 cm²) to the dorsal surface of the animal’s head. We achieved this by netting the animals out of the tank, patting the skin dry with a paper towel three times, and applying a superglue-covered Velcro patch directly to the skin and holding it in place for 10 s. Immediately after returning the animals to the tank with care, we fed them with a large grass shrimp. Subsequently, we repeated training as detailed above, except that we only gave a shrimp reward when the animal struck out its tentacles attempting to catch the on-screen prey. Once this behavior was consistent, a custom-made pair of glasses (see below) was placed onto the animal, attached via the Velcro patch, and training was repeated. A few hours after being fitted with glasses, some animals would reliably hunt, while other more cautious animals or those initially not interested in viewing the screen took up to 2 days to reliably interact with shrimp video stimuli. We tested trained animals with positive and negative disparate stimuli as soon as five successful strikes of the black shrimp on white background video were completed. We repeated trials over the course of several days.

Visual stimuli

We constructed anaglyph glasses for the cuttlefish, adapted from those described by Nityananda et al. (6) for mantids (Fig. 1B, right eye: red = Lee 135 Deep Golden Amber; left eye: blue = Lee 797 Purple; see movies S1 and S2). Each filter allowed transmission of a subset of the spectrum of light while blocking or reducing other wavelengths. Note that we used a double layer of red and blue filters, instead of a single layer, to better separate the wavelengths reaching each eye. A Velcro patch on the underside of the glasses allowed easy attachment or removal of the glasses to the animals. This paradigm allowed us to selectively stimulate both the left and right eyes and create an offset between the right and left images to produce an illusory depth percept for animals that use stereopsis (Fig. 1C). However, we later found the amber filter to be at the far end of the cuttlefish opsin sensitivity; therefore, luminance was not matched between the two eyes. This, however, did not appear to affect the cuttlefish’s ability to detect a stereoscopic stimulus. This concurs with a previous report that the shape of the prey is more strongly discriminated by cuttlefish than the brightness of the prey (30). To remedy the intensity difference, in the second batch of animals/experiments, we used blue and green filters (right eye: green = Lee 736 Twickenham Green; left eye: blue = Lee 071 Tokyo Blue; as a double layer). We chose to use these blue and green filters as they had better intensity matches and more similar photon catches for cuttlefish, i.e., they lowered the chance that intensity differences between the two eyes would provide cues or create distraction artifacts. We repeated the behavioral tests with the blue/green filters. Five of the six animals that engaged with the random-dot stimuli experiments wore the blue and green filter combination. The behavioral results were the same between the animals wearing the two types of glasses, so we pooled the datasets together.

We define a positive disparity as a disparity between images that causes the illusory depth percept to appear in front of the screen, whereas a negative disparity creates a percept behind the screen. The value attributed to a disparity stimulus (1, 2, or 3 cm) indicates the offset between the images presented to each eye. For each animal, we tested a range of stimulus disparities and experimental protocols in a random order over the course of each experimental day.
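The relationship between on-screen disparity and the illusory target location (Fig. 1D) can be sketched with a standard similar-triangles construction. The Python below is a minimal illustration under that assumption and is not necessarily the exact calculation used in the study; the function name and the example interocular distance are hypothetical.

```python
def illusory_target_distance(disparity_cm, interocular_cm, eye_to_screen_cm):
    """Distance from the eyes to the point where the two lines of sight cross.

    Assumes a symmetric viewing geometry: the eyes are interocular_cm apart,
    the screen is eye_to_screen_cm away, and the two monocular images are
    offset horizontally by disparity_cm. Positive disparity is crossed
    (percept in front of the screen); negative is uncrossed (behind it).
    """
    denom = interocular_cm + disparity_cm
    if denom <= 0:
        # lines of sight are parallel or diverge: no crossover point exists
        raise ValueError("disparity must be greater than -interocular distance")
    return eye_to_screen_cm * interocular_cm / denom

# Hypothetical example values (not measurements from the study):
# eyes 2 cm apart, screen 12 cm away.
in_front = illusory_target_distance(2.0, 2.0, 12.0)    # crossed disparity
on_screen = illusory_target_distance(0.0, 2.0, 12.0)   # zero disparity
behind = illusory_target_distance(-1.0, 2.0, 12.0)     # uncrossed disparity
```

Under this geometry, a zero disparity places the target on the screen, a positive disparity brings it closer to the animal, and a negative disparity pushes it behind the screen.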

We created the shrimp stimulus presented to the cuttlefish via the screen from videos of grass shrimp recorded underwater using a GoPro camera. We then converted this video into gray scale. By duplicating some of the video frames but shifting the shrimp image location along the x axis, we created a clip of a shrimp traveling the full width of the screen. Then, we duplicated and flipped along the x axis all the frames of the video. When played consecutively, this made the shrimp “flip direction” and return walking toward the starting point (left side of the tank). We used this forward and return walk across the screen by the shrimp as the basis of most stimuli. To generate the silhouette of a black shrimp against a uniform white background, we converted the forward and return video into a binary format. The three LED channels of the screen (fig. S1B) were used individually or as a combination of two LEDs to create six possible colors of shrimp stimulus presented against a white or a black background (fig. S1C). Radiance spectra were measured using a National Institute of Standards and Technology-calibrated Avantes AvaSpec 2048 Single-Channel spectrometer coupled to an Avantes ultraviolet-visible 600-μm fiber (numerical aperture = 0.22, acceptance angle = 25.4°, and solid angle = 0.1521 sr) positioned at a distance of 125 mm from the tank wall and monitor. We collected spectra by averaging 100 repetitions of 50-ms light integration time and smoothed them using an eight-point moving average filter. We made the measurements in air, rather than in water, for equipment preservation purposes. The spectra of all stimuli were measured from a full screen of color matching RGB values for individual components of all stimuli videos (fig. S1C). Measurements were repeated with the addition of either blue or red filters by positioning the glasses in the light pathway from the screen to the fiber, a few millimeters from the fiber end (fig. S2, A and B). We also repeated measurements for the blue and green filters.
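The spectral averaging and smoothing steps described above can be sketched as follows. This is a minimal Python illustration, not the acquisition software used in the study; the function names are hypothetical, and the treatment of the spectrum edges (only full windows are kept) is an assumption because the text does not specify it.

```python
def average_scans(scans):
    """Average repeated spectrometer scans point by point.

    `scans` is a list of equal-length lists, one per repetition.
    """
    n = len(scans)
    return [sum(column) / n for column in zip(*scans)]

def moving_average(values, window=8):
    """Boxcar moving average that keeps only full windows.

    Assumption: edge samples without a complete window are dropped, so the
    output has len(values) - window + 1 points.
    """
    if window < 1 or window > len(values):
        raise ValueError("window must be between 1 and len(values)")
    return [
        sum(values[i:i + window]) / window
        for i in range(len(values) - window + 1)
    ]
```

With 100 repetitions, `average_scans` would receive 100 lists of radiance values sampled at the same wavelengths, and `moving_average` would then be applied to the averaged spectrum.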

The spectral sensitivity of the S. officinalis visual pigment was calculated using the equations formulated by Stavenga et al. (31) using a peak wavelength λmax = 492 nm for the α wave of the template and a peak wavelength λmax = 360 nm for the β wave (29). The relative photon catch for each color of shrimp stimulus, that is, the number of photons (N) absorbed by a cuttlefish photoreceptor for a given stimulus, was obtained using the following equation (32)

$$N = \int \left(1 - e^{-k S(\lambda) l}\right) R(\lambda)\, d\lambda$$

where k is the quantum efficiency of transduction = 0.0067/μm (33), S(λ) is the spectral sensitivity of the visual pigment, l is the length of the rhabdom = 400 μm (34), and R(λ) is the measured radiance spectrum of the stimulus on the screen. As our goal was to produce stimuli that would be detected by only the left or only the right eye of the cuttlefish when wearing the glasses, we assessed the cross-talk of these stimuli as the ratio of the quantum catch of the eye supposedly blind to the stimulus to the quantum catch of the eye intended for the stimulus (figs. S2, A and B, and S3, bottom row). Our calculations show cross-talk between stimuli to be between 1 and 24%. Note the considerably lower cross-talk for the green/blue glasses (fig. S3; see movie S4). Other species have perceived the 3D effect through anaglyph color glasses despite photon leak between the channels (i.e., cross-talk), which can be quite large in humans with color vision (6, 35). Thus, detection of light via the “blocked” channel did not preclude the stereopsis test from being valid.
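The photon catch and cross-talk calculation can be illustrated with a short sketch. It assumes the standard absorption form N = ∫(1 − e^(−kS(λ)l)) R(λ) dλ that matches the symbols defined above, uses trapezoidal integration (an assumption; the integration scheme is not stated), and takes the radiance already measured through each filter as input. All function names and the toy numbers are hypothetical.

```python
import math

K_PER_UM = 0.0067    # k, value taken from the text (per micrometre)
RHABDOM_UM = 400.0   # l, rhabdom length from the text (micrometres)


def photon_catch(wavelengths, sensitivity, radiance, k=K_PER_UM, length=RHABDOM_UM):
    """Integrate (1 - exp(-k * S * l)) * R over wavelength (trapezoid rule)."""
    absorbed = [
        (1.0 - math.exp(-k * s * length)) * r
        for s, r in zip(sensitivity, radiance)
    ]
    total = 0.0
    for i in range(1, len(wavelengths)):
        step = wavelengths[i] - wavelengths[i - 1]
        total += 0.5 * (absorbed[i] + absorbed[i - 1]) * step
    return total


def cross_talk(catch_blocked_eye, catch_intended_eye):
    """Ratio of the catch in the 'blind' eye to the catch in the intended eye."""
    return catch_blocked_eye / catch_intended_eye


if __name__ == "__main__":
    # Toy spectra on a coarse grid; real inputs would be the measured radiance
    # through each filter and the visual pigment template.
    wl = [400, 450, 500, 550, 600]
    s = [0.3, 0.7, 1.0, 0.5, 0.1]
    through_intended_filter = [0.0, 0.2, 1.0, 0.2, 0.0]
    through_blocking_filter = [0.0, 0.0, 0.1, 0.0, 0.0]
    n_intended = photon_catch(wl, s, through_intended_filter)
    n_blocked = photon_catch(wl, s, through_blocking_filter)
    print(f"cross-talk = {100 * cross_talk(n_blocked, n_intended):.1f}%")
```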

For experiments in which we presented a video of a shrimp against a uniform background, we tested a dark silhouette against a white background as well as a light silhouette against a dark background (figs. S2, A and B, and S3). The 4-cm-wide shrimp subtends 17.74° when viewed at 12.5 cm, corresponding to the average distance of the eyes to the screen at the start of the ballistic strike. In the case of a white background, the band of light from a green shrimp was not transmitted through either the blue or the red filter, so the shrimp appeared dark against a light background to both eyes. If the shrimp is presented as cyan, then the red filter will make the shrimp appear dark against a light background for the right eye, while the blue filter will transmit the light, thus removing the contrast between the shrimp object and background and rendering the shrimp indistinguishable for the left eye. The effect is reversed for the blue and red filters with a yellow shrimp. In the case of a dark background, a magenta shrimp will transmit light to both eyes, thus contrasting against the absence of light from the surrounding black. Blue and red lights, however, will only be transmitted through the blue and red filters, respectively. We also varied other parameters of the visual scene: The shrimp moving across the visual scene either walked or swam. We tested walking and swimming shrimp to demonstrate that the hunting behavior was not constrained to a particular stimulus video or movement (fig. S4A). We tested positive and negative contrasts to demonstrate that the motion detection system was not constrained to a particular contrast polarity (fig. S4B). We extracted other features of the behavior (fig. S5, A to C) to tease apart how the animal behaved and reacted to stimuli. To test for the mechanism underlying the stereo ability, we followed and adapted the methodology in Nityananda et al. (16). 
Briefly, we used the walking shrimp video but covered the shrimp silhouette with a random black-white dot pattern and “camouflaged” it against a background with the same type of pattern. The dot pattern shown to the two eyes was either correlated (same contrast polarity), anticorrelated (flipped contrast for one of the two eyes), or uncorrelated (different patterns for each eye). When viewed at 18 cm, the 1.4-mm dots subtend 0.44° and the 5-mm dots subtend 1.59°. Data from both dot sizes were combined in Fig. 3, as we found no significant difference between these datasets (correlated 0- and 2-cm disparity, P = 1.0 and 0.1074; anticorrelated 0- and 2-cm disparity, P = 0.9986 and 0.5313; see movie S4). For these dotty shrimp stimuli and backgrounds, one cuttlefish wore the red/blue glasses, while five animals wore the green/blue glasses described above. We did not find a significant difference between animal positioning locations for the 0- and 2-cm disparity conditions for the red/blue and green/blue glasses (fig. S8).
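As an illustration of the angular sizes and the three dot conditions, the sketch below computes the visual angle as atan(size/distance), which reproduces the quoted values to within about 0.01°, and builds per-eye dot polarities for each condition. The one-dimensional list of polarities, the function names, and the seeding are simplifying assumptions and do not reproduce the actual stimulus videos.

```python
import math
import random


def visual_angle_deg(size, distance):
    """Angle subtended by an object, computed as atan(size / distance)."""
    return math.degrees(math.atan(size / distance))


def dot_pattern_pair(n_dots, condition, seed=0):
    """Return (left, right) dot polarities: +1 for white, -1 for black."""
    rng = random.Random(seed)
    left = [rng.choice((-1, 1)) for _ in range(n_dots)]
    if condition == "correlated":
        right = list(left)                    # same contrast polarity
    elif condition == "anticorrelated":
        right = [-p for p in left]            # contrast flipped in one eye
    elif condition == "uncorrelated":
        right = [rng.choice((-1, 1)) for _ in range(n_dots)]  # independent draw
    else:
        raise ValueError(f"unknown condition: {condition}")
    return left, right


def interocular_correlation(left, right):
    """Mean product of polarities: +1 correlated, -1 anticorrelated."""
    return sum(a * b for a, b in zip(left, right)) / len(left)


if __name__ == "__main__":
    print(visual_angle_deg(4.0, 12.5))    # shrimp, sizes in cm
    print(visual_angle_deg(1.4, 180.0))   # small dots, sizes in mm
    print(visual_angle_deg(5.0, 180.0))   # large dots, sizes in mm
    for cond in ("correlated", "anticorrelated", "uncorrelated"):
        l, r = dot_pattern_pair(1000, cond)
        print(cond, interocular_correlation(l, r))
```

For the uncorrelated condition the printed correlation should be near zero but will vary with the seed.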

We found that 5 of 11 animals did not engage in hunting when presented with the dotty task, even when the stimulus was correlated and had zero disparity. We interpret this as indicating that the difficulty of the test, imposed by the similarity between the prey and background patterns, rendered those individuals unwilling to attempt it even if they had the ability to do so, as shown by the other six animals. The responses were animal specific and consistent across days, so we can exclude hunger state as the cause. Differences in individual character, known to exist in cephalopods, may instead drive the differences in motivation (36–38).

Data digitization

We extracted images representing five different time points from each stimulus presentation from high-speed videos using the Photron FASTCAM Viewer (PFV 4.01) software. These time points corresponded to the transitions between stages of the attack sequence detailed by Messenger (7): (T1) transition from a screen “flash” to the appearance of the prey on the screen that signaled the commencement of a trial (i.e., the middle frame of the transition was chosen); (T2) detection of the prey by the cuttlefish, as determined by the first eye, head, or tentacle movement before the eyes moved toward the screen; (T3) positioning behavior, as determined by the first appearance of the tentacles beyond the arms; (T4) initiation of the ballistic tentacle extension, taken as the second last image before the velocity-induced blur; and (T5) prey seizure or maximal tentacle extension, determined as the second nonblurry image. We also extracted an image of the calibration ruler for each day and used it to determine the pixel-to-millimeter conversion. We scaled images using Fiji (also known as ImageJ) (39). For each of the five time points described above, from the high-speed camera recordings of each cuttlefish hunt, we manually identified and digitized coordinates in Fiji corresponding to the following locations: (i) the left and right edges of the monitor, (ii) the center of the animal’s two eyes (central on the midline of the pupil skin flap), (iii) the anterior and posterior midline edges of the mantle, and (iv) the midpoint between the tips of the tentacles.
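The pixel-to-millimeter conversion from the calibration ruler can be sketched as below. This is only an illustration of the arithmetic; the function names, the choice of two ruler marks, and the example coordinates are hypothetical, and the actual scaling was done in Fiji.

```python
import math


def mm_per_pixel(ruler_start_px, ruler_end_px, ruler_length_mm):
    """Scale factor from two digitized ruler marks a known distance apart."""
    pixels = math.dist(ruler_start_px, ruler_end_px)
    if pixels == 0:
        raise ValueError("ruler marks must be distinct points")
    return ruler_length_mm / pixels


def to_mm(point_px, scale):
    """Convert a digitized (x, y) pixel coordinate to millimeters."""
    return tuple(coordinate * scale for coordinate in point_px)


def midpoint(a, b):
    """Midpoint of two points, e.g., the two eye centers or tentacle tips."""
    return tuple((p + q) / 2 for p, q in zip(a, b))


if __name__ == "__main__":
    # Hypothetical ruler marks 100 mm apart in the calibration image.
    scale = mm_per_pixel((120, 80), (420, 480), 100.0)
    left_eye = to_mm((510, 300), scale)
    right_eye = to_mm((530, 340), scale)
    print(scale, midpoint(left_eye, right_eye))
```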

Data analysis

For each animal and each treatment, we repeated the stimuli until we obtained three valid trials. Stimuli across the range of disparities were presented in a randomized order, and two stimuli of the same disparity were never presented sequentially, so that cuttlefish were unable to learn a disparity condition from a previous trial. A trial was valid only if it was completed (i.e., the animal struck). The only exception was the uncorrelated stimuli, where we saved video from no-strike trials to demonstrate the animals’ rejection of the stimuli. Thereafter, trials were only included in the dataset if all five stages outlined above could be identified. Furthermore, trials were only included if the animal was more than 125 mm from the screen upon stimulus detection (T2). This was done to ensure that the target was within the field of view of both eyes. Coordinates extracted from data digitization were used to calculate the distance from the midpoint of the eyes to the screen along the tentacle extension axis [eyes to screen distance in Figs. 1 (E to H) and 2B] and to full tentacle extension using MATLAB 2015b (The MathWorks Inc., Natick, MA). Digitization of data (i.e., coordinate measurements of eyes, tentacle tips, etc.) across time points of the hunt was performed without reference to the disparity condition of the stimulus. Once a stimulus disparity was introduced, however, the distance from predator to perceived prey location was determined using the value of the disparity, the interocular distance, and the distance from the eyes to the screen (Fig. 1, C and D). Using this methodology, we calculated the distance from eyes to the expected illusory prey location for all nonzero disparities at T4. We also calculated the angle of the eyes to the screen and the angle of the tentacles to the screen (fig. S5, B and C). For zero disparity and quasi-monocular stimuli trials, we tracked the animal’s position over the course of the hunt to measure the distance traveled (Fig. 2, B and C). For these analyses, high-speed videos were shortened by retaining 1 in every 10 frames, and any trial where the animal took longer than 10 s to detect the stimulus was discarded.
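The geometry relating disparity, interocular distance, and eye-to-screen distance to the perceived prey location can be sketched with similar triangles. This is an assumed formulation, not the authors' MATLAB code: the sign convention (positive disparity means crossed, placing the target in front of the screen), the assumption that the target lies on the midline between the eyes, and the function names are all hypothetical. The second function shows one simple way to obtain distance traveled from tracked positions.

```python
import math


def perceived_distance(eye_to_screen, interocular, disparity):
    """Distance from the eyes to the illusory target, by similar triangles.

    Assumed convention: disparity > 0 is crossed (target in front of the
    screen), disparity < 0 is uncrossed (target behind the screen), and
    disparity == 0 places the target on the screen. All lengths share units.
    """
    if interocular + disparity <= 0:
        raise ValueError("lines of sight do not converge in front of the animal")
    return eye_to_screen * interocular / (interocular + disparity)


def path_length(positions):
    """Total distance traveled along a sequence of tracked (x, y) positions."""
    return sum(math.dist(a, b) for a, b in zip(positions, positions[1:]))


if __name__ == "__main__":
    # Hypothetical values in millimeters, for illustration only.
    for disparity in (0.0, 10.0, 20.0):
        print(disparity, perceived_distance(125.0, 30.0, disparity))
    print(path_length([(0, 0), (3, 4), (3, 10)]))
```

Under this convention, a crossed disparity equal to the interocular distance halves the perceived distance relative to the screen.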

Note that the velocity of the walking or swimming shrimp as viewed from each eye during the positioning phase should be similar, as cuttlefish were found to strike relatively close to the target and perpendicular to the line drawn between the eye centers (r² = 0.908; figs. S4, A to D, and S5C). This would likely prevent the cuttlefish from using the method proposed by Harris et al. (40), in which depth can be computed without precise correspondence by using the disparity in velocity information between the left and right views.

Eye angle calculations

In addition to the time points above, we extracted two further frames from each trial video for eye angle measurements: (i) when the animal had rotated to view the screen and was moving forward (T2.5) and (ii) during the ballistic tentacle strike, just before the tentacles extended past where the prey was expected to be located (i.e., before the sucker-covered clubs left their straight trajectory because they did not hit a target) (T4.5). Note that we labeled T2.5 as T2 and T4.5 as T3 in Fig. 3 and figs. S6 and S7. In addition to the other digitized locations described above, we digitized the eye pupil location in the T1, T2.5, and T4.5 images. The most dorsal and widest position in the W pupil (i.e., the bottom two points of the W) was digitized. Angles were calculated after digitization in MATLAB 2018a.

Acknowledgments

We particularly thank R. Hanlon for the provision of cuttlefish and hospitality in using his laboratory space and equipment. We thank V. Nityananda greatly for rapid and detailed advice to set up the anaglyph glasses experiments. We also thank the staff at the Marine Biological Laboratory MRC for providing shrimp and assistance with facilities. We thank K. Nordström, R. Olberg, R. Hanlon, and M. Hale for providing feedback on the manuscript. Funding: This work was funded by an MBL Whitman fellowship to P.T.G.-B., the University of Minnesota College of Biological Sciences to P.T.G.-B. and T.J.W., and the Biotechnology and Biological Sciences Research Council Doctoral Training Program scholarship to R.C.F. Author contributions: T.J.W. and P.T.G.-B. conceived the study. T.J.W. and P.T.G.-B. designed the stimuli. T.J.W., P.T.G.-B., and R.C.F. undertook the behavioral experiments. M.E.S., S.P., and L.K. undertook digitization and eye movement analysis. R.C.F. undertook most of the analysis and figure generation and wrote the first draft. All authors contributed to the writing and approved the final version of the paper. Competing interests: The authors declare that they have no competing interests. Data and materials availability: All data needed to evaluate the conclusions in the paper are present in the paper and/or the Supplementary Materials. Additional data related to this paper may be requested from the authors.

SUPPLEMENTARY MATERIALS

Supplementary material for this article is available at http://advances.sciencemag.org/cgi/content/full/6/2/eaay6036/DC1

Fig. S1. Spectral content of screen and stimuli.

Fig. S2. Stimulus spectra, filter properties, and spectral content cross-talk measurements—red/blue glasses.

Fig. S3. Stimulus spectra, filter properties, and spectral content cross-talk measurements—blue/green glasses.

Fig. S4. Speed of stimuli and polarity or background contrast do not alter the perceived location of the 3D prey.

Fig. S5. Control analyses for different hunting behavior parameters.

Fig. S6. Cuttlefish eye angles vary greatly during the hunt.

Fig. S7. Diversity in cuttlefish eye vergence during the hunt.

Fig. S8. Positioning does not differ between stimuli and glasses types.

Movie S1. Method and animal behavior.

Movie S2. Example responses to control and disparate stimuli (relates to Fig. 1).

Movie S3. Example responses to quasi-monocular and binocular stimuli (relates to Fig. 2).

Movie S4. Example responses to correlated, anticorrelated, and uncorrelated random dot stimuli (relates to Fig. 3).

REFERENCES AND NOTES

1. Zullo L., Sumbre G., Agnisola C., Flash T., Hochner B., Nonsomatotopic organization of the higher motor centers in octopus. Curr. Biol. 19, 1632–1636 (2009).

2. Packard A., Cephalopods and fish: The limits of convergence. Biol. Rev. 47, 241–307 (1972).

3. Nityananda V., Read J. C. A., Stereopsis in animals: Evolution, function and mechanisms. J. Exp. Biol. 220, 2502–2512 (2017).

4. Cumming B. G., DeAngelis G. C., The physiology of stereopsis. Annu. Rev. Neurosci. 24, 203–238 (2001).

5. Welchman A. E., The human brain in depth: How we see in 3D. Annu. Rev. Vis. Sci. 2, 345–376 (2016).

6. Nityananda V., Tarawneh G., Rosner R., Nicolas J., Crichton S., Read J., Insect stereopsis demonstrated using a 3D insect cinema. Sci. Rep. 6, 18718 (2016).

7. Messenger J. B., The visual attack of the cuttlefish, Sepia officinalis. Anim. Behav. 16, 342–357 (1968).

8. Chung W.-S., Marshall J., Range-finding in squid using retinal deformation and image blur. Curr. Biol. 24, R64–R65 (2014).

9. Byrne R. A., Kuba M., Griebel U., Lateral asymmetry of eye use in Octopus vulgaris. Anim. Behav. 64, 461–468 (2002).

10. Ghiretti F., Cephalotoxin: Crab-paralysing agent of the posterior salivary glands of cephalopods. Nature 183, 1192–1193 (1959).

11. Kelman E. J., Osorio D., Baddeley R. J., A review of cuttlefish camouflage and object recognition and evidence for depth perception. J. Exp. Biol. 211, 1757–1763 (2008).

12. Zylinski S., Osorio D., Johnsen S., Cuttlefish see shape from shading, fine-tuning coloration in response to pictorial depth cues and directional illumination. Proc. Biol. Sci. 283, 20160062 (2016).

13. Helmer D., Geurten B. R. H., Dehnhardt G., Hanke F. D., Saccadic movement strategy in common cuttlefish (Sepia officinalis). Front. Physiol. 7, 660 (2017).

14. Bruce V., Green P. R., Georgeson M. A., Visual Perception: Physiology, Psychology, and Ecology (Psychology Press, 1996).

15. Marshall N. J., Messenger J. B., Colour-blind camouflage. Nature 382, 408–409 (1996).

16. Nityananda V., Tarawneh G., Henriksen S., Umeton D., Simmons A., Read J. C. A., A novel form of stereo vision in the praying mantis. Curr. Biol. 28, 588–593.e4 (2018).

17. Otero-Millan J., Macknik S. L., Martinez-Conde S., Fixational eye movements and binocular vision. Front. Integr. Neurosci. 8, 52 (2014).

18. Collewijn H., Oculomotor reactions in the cuttlefish, Sepia officinalis. J. Exp. Biol. 52, 369–384 (1970).

19. Thompson L., Ji M., Rokers B., Rosenberg A., Contributions of binocular and monocular cues to motion-in-depth perception. J. Vis. 19, 2 (2019).

20. Donaldson I. M. L., The functions of the proprioceptors of the eye muscles. Philos. Trans. R. Soc. Lond. B Biol. Sci. 355, 1685–1754 (2000).

21. Gonzalez F., Perez R., Neural mechanisms underlying stereoscopic vision. Prog. Neurobiol. 55, 191–224 (1998).

22. Harkness L., Chameleons use accommodation cues to judge distance. Nature 267, 346–349 (1977).

23. Schaeffel F., Murphy C. J., Howland H. C., Accommodation in the cuttlefish (Sepia officinalis). J. Exp. Biol. 202, 3127–3134 (1999).

24. Groeger G., Cotton P. A., Williamson R., Ontogenetic changes in the visual acuity of Sepia officinalis measured using the optomotor response. Can. J. Zool. 83, 274–279 (2005).

25. Scholl B., Burge J., Priebe N. J., Binocular integration and disparity selectivity in mouse primary visual cortex. J. Neurophysiol. 109, 3013–3024 (2013).

26. Ott M., Chameleons have independent eye movements but synchronise both eyes during saccadic prey tracking. Exp. Brain Res. 139, 173–179 (2001).

27. Katz H. K., Lustig A., Lev-Ari T., Nov Y., Rivlin E., Katzir G., Eye movements in chameleons are not truly independent – evidence from simultaneous monocular tracking of two targets. J. Exp. Biol. 218, 2097–2105 (2015).

28. Wallace D. J., Greenberg D. S., Sawinski J., Rulla S., Notaro G., Kerr J. N. D., Rats maintain an overhead binocular field at the expense of constant fusion. Nature 498, 65–69 (2013).

29. Panetta D., Solomon M., Buresch K., Hanlon R. T., Small-scale rearing of cuttlefish (Sepia officinalis) for research purposes. Mar. Freshw. Behav. Physiol. 50, 115–124 (2017).

30. Guibe M., Poirel N., Houde O., Dickel L., Food imprinting and visual generalization in embryos and newly hatched cuttlefish, Sepia officinalis. Anim. Behav. 84, 213–217 (2012).

31. Stavenga D. G., Smits R. P., Hoenders B. J., Simple exponential functions describing the absorbance bands of visual pigment spectra. Vision Res. 33, 1011–1017 (1993).

32. Warrant E., Vision in the dimmest habitats on earth. J. Comp. Physiol. A Neuroethol. Sens. Neural Behav. Physiol. 190, 765–789 (2004).

33. Warrant E. J., Nilsson D. E., Absorption of white light in photoreceptors. Vision Res. 38, 195–207 (1998).

34. Hanlon R. T., Messenger J. B., Cephalopod Behaviour (Cambridge Univ. Press, 1996), pp. 232.

35. Woods A. J., Harris C. R., Comparing levels of crosstalk with red/cyan, blue/yellow, and green/magenta anaglyph 3D glasses. Proc. SPIE 7524, 75240Q (2010).

36. Carere C., Grignani G., Bonanni R., Gala M. D., Carlini A., Angeletti D., Cimmaruta R., Nascetti G., Mather J. A., Consistent individual differences in the behavioural responsiveness of adult male cuttlefish (Sepia officinalis). Appl. Anim. Behav. Sci. 167, 89–95 (2015).

37. Sinn D. L., Gosling S. D., Moltschaniwskyj N. A., Development of shy/bold behaviour in squid: Context-specific phenotypes associated with developmental plasticity. Anim. Behav. 75, 433–442 (2008).

38. Zoratto F., Cordeschi G., Grignani G., Bonanni R., Alleva E., Nascetti G., Mather J. A., Carere C., Variability in the “stereotyped” prey capture sequence of male cuttlefish (Sepia officinalis) could relate to personality differences. Anim. Cogn. 21, 773–785 (2018).

39. Schindelin J., Arganda-Carreras I., Frise E., Kaynig V., Longair M., Pietzsch T., Preibisch S., Rueden C., Saalfeld S., Schmid B., Tinevez J.-Y., White D. J., Hartenstein V., Eliceiri K., Tomancak P., Cardona A., Fiji: An open-source platform for biological-image analysis. Nat. Methods 9, 676–682 (2012).

40. Harris J. M., Nefs H. T., Grafton C. E., Binocular vision and motion-in-depth. Spat. Vis. 21, 531–547 (2008).
