Significance

Sequential firing patterns of hippocampal place cells during spatial navigation are replayed in both forward and reverse directions during offline idling states, but the functional roles of these replays are not clearly understood. To address this question, we examined hippocampal replays in a spatial sequence memory task. We found that, for all possible trajectories heading toward a rewarding location, reward increases the fidelity of forward replays and the rate of reverse replays. We also found that forward replays reactivate place-cell sequences more faithfully for upcoming rewarding trajectories than for already rewarded trajectories. Our results suggest that hippocampal forward and reverse replays contribute, via distinct mechanisms, to constructing a map of potential navigation trajectories and their associated values (a “value map”).

Keywords: hippocampus, place cell, value, sequence memory task

Abstract

To better understand the functional roles of hippocampal forward and reverse replays, we trained rats in a spatial sequence memory task and examined how these replays are modulated by reward and navigation history. We found that reward enhances both forward and reverse replays during the awake state, but in different ways. Reward enhances the rate of reverse replays, but it increases the fidelity of forward replays for recently traveled as well as other alternative trajectories heading toward a rewarding location. This suggests roles for forward and reverse replays in reinforcing representations for all potential rewarding trajectories. We also found more faithful reactivation of upcoming than already rewarded trajectories in forward replays. This suggests a role for forward replays in preferentially reinforcing representations for high-value trajectories. We propose that hippocampal forward and reverse replays might contribute to constructing a map of potential navigation trajectories and their associated values (a “value map”) via distinct mechanisms.

Place cells of the hippocampus fire selectively at specific locations in a given environment (1, 2), leading them to fire sequentially according to an animal’s navigation trajectory. The sequential firing patterns that hippocampal place cells exhibit during spatial navigation are replayed in a temporally condensed manner during non-REM sleep (3, 4) and during awake immobility (5–8). These temporally condensed place-cell replays are observed mostly in association with sharp-wave ripple events (SWRs), which are large-amplitude negative potentials (sharp waves) associated with brief high-frequency oscillations (ripples) in local field potentials (9). Disruption of SWRs during sleep (10, 11) and awake (12) states induces subsequent deficits in spatial-memory performance. This suggests that SWR-associated hippocampal replays contribute to hippocampal memory functions rather than representing a passive by-product of experience-induced network activity (9, 13, 14).

Although the functions of hippocampal replays are not clearly understood, they have been proposed to play roles in memory retrieval (8, 15), memory consolidation (4, 7, 10, 16), learning from experience (5, 17–19), planning future navigation (6, 20), linking recent and remote memories (8), and building cognitive maps (21). In this study, to better understand the function of hippocampal replays, we designed a spatial sequence memory task in which rats had to take 1 of 4 different trajectories to obtain a reward at a designated location. This allowed us to examine hippocampal replays related not only to a recently experienced trajectory, but also to other trajectories that lead to a rewarding location. Using this task, we asked how reward and navigation history affect hippocampal replays during the awake state. Because place-cell activation patterns are replayed during SWRs both in the order in which they fired along the experienced trajectory and in the reverse order (6, 22), we examined the effects of reward and navigation history on forward and reverse replays separately. We found that reward enhances both forward and reverse replays, but in different ways: it increases the rate of reverse replays and the fidelity of forward replays, for recently traveled as well as other trajectories heading toward a rewarding location. We also found that forward replays reactivate upcoming rewarding trajectories more faithfully than already rewarded trajectories. Based on these findings, we propose that hippocampal forward and reverse replays might contribute to building a map of potential navigation trajectories with associated values via distinct mechanisms.

Results

Behavior.

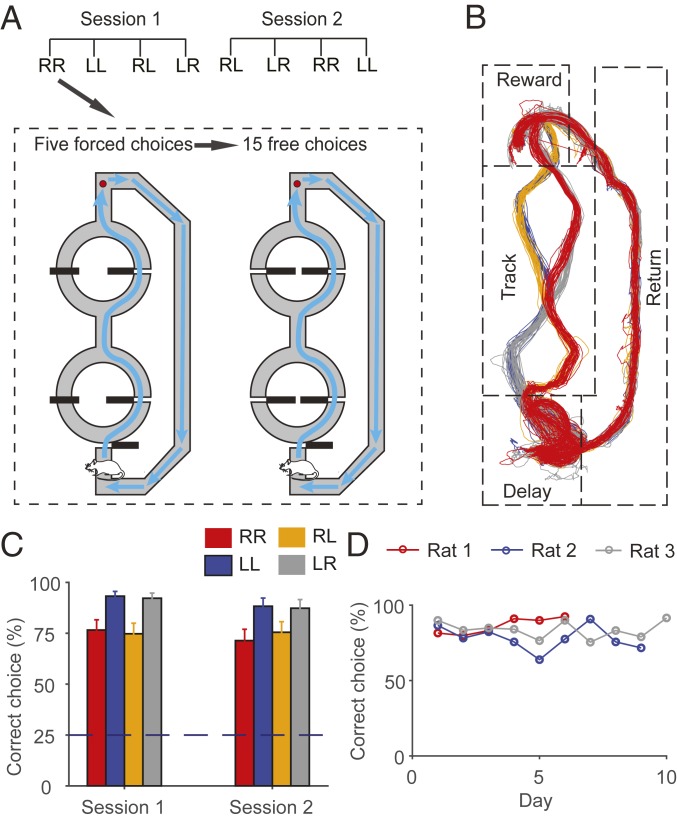

Three rats were trained in a spatial sequence memory task (Fig. 1A). The maze contained a figure-8-shaped section through which the rats could navigate in 1 of 4 different spatial sequences [left–left (LL), left–right (LR), right–left (RL), and right–right (RR)]. The rats performed 2 consecutive sessions each day, each comprising 4 blocks of trials associated with the 4 different correct sequences, presented in random order. Each block consisted of 5 forced-choice trials followed by 15 free-choice trials, and a short delay of 3 s was imposed in the delay zone on each trial. A fixed water reward (30 µL) was provided at a designated location in forced-choice trials and in free-choice trials in which the animal chose the same spatial sequence as in the forced-choice trials (correct trials), but not when it chose a different sequence (error trials). Fig. 1B shows one animal’s movement trajectory in a sample recording session. In well-trained rats, movement trajectories and head directions varied across blocks in the track zone and in the proximal part of the reward zone (between the track zone and the reward location), but not in the rest of the maze (SI Appendix, Fig. S1).
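The trial structure and reward rule described above can be summarized in a short simulation. This is a minimal illustrative sketch, not the authors' task-control code: `make_session` and `reward_for` are hypothetical names, and the sketch assumes that forced-choice trials constrain the animal to the block's sequence, so they are always rewarded.

```python
import random

SEQUENCES = ("LL", "LR", "RL", "RR")
N_FORCED, N_FREE = 5, 15   # trials per block (from the text)
REWARD_UL = 30             # water per rewarded trial, in microliters

def make_session(rng):
    """Return one session as a list of (block_sequence, trial_type) tuples.

    Each of the 4 sequences forms one block of 5 forced-choice trials
    followed by 15 free-choice trials; the block order is shuffled.
    """
    order = list(SEQUENCES)
    rng.shuffle(order)
    schedule = []
    for seq in order:
        schedule += [(seq, "forced")] * N_FORCED
        schedule += [(seq, "free")] * N_FREE
    return schedule

def reward_for(block_seq, trial_type, chosen_seq):
    """Reward rule: forced-choice trials are rewarded (assumed to follow the
    block sequence); free-choice trials only if the choice matches it."""
    if trial_type == "forced" or chosen_seq == block_seq:
        return REWARD_UL
    return 0

rng = random.Random(0)
day = [make_session(rng) for _ in range(2)]  # 2 consecutive sessions per day
```

Each simulated session has 80 trials (4 blocks × 20), of which 60 are free-choice trials that can be scored as correct or error.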

Fig. 1.

Behavior. (A) Spatial sequence memory task. Rats were allowed to navigate to a reward location (red circle) in 1 of 4 possible spatial sequences (RR, LL, RL, and LR). Rats performed 2 consecutive sessions each day. Each session consisted of 4 blocks of different correct navigation sequences (RR, LL, RL, and LR) that were randomly ordered, and each block consisted of 5 forced-choice and 15 free-choice trials. Black horizontal bars denote sliding doors. (B) The animal’s movement trajectory in one sample session. Trajectories in different blocks are color-coded: RR (red), LL (blue), RL (orange), and LR (gray). Trajectories in error trials were excluded. (C and D) Behavioral performance during unit recording. (C) The bar graphs show percentages of correct choices (mean ± SEM across sessions) for 4 different navigation sequences in sessions 1 and 2. (D) Daily performance of each animal during unit recording.

The rats were trained in the task until they chose correct sequences in >60% of trials for 2 consecutive days before electrode implantation (total of 30 to 60 d of training). They were further trained for 9 to 15 d after recovery from surgery before unit recording began. The rats’ performance during unit recording was well above chance (25% for random choice among the 4 sequences) in sessions 1 and 2 (mean ± SD, 84.2 ± 18.9% and 80.6 ± 23.7% correct choices, respectively; Fig. 1 C and D). All analyses were based on behavioral and neural data obtained from 25 sets of sessions (sessions 1 and 2; SI Appendix, Table S1).
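To make the training criterion and "above chance" concrete, the sketch below checks the pre-surgery criterion (>60% correct on 2 consecutive days) and computes a one-sided exact binomial probability of a given number of correct free-choice trials under random choice. It assumes a chance level of 25% (uniform choice among 4 sequences) and 60 free-choice trials per session (4 blocks × 15); the helper names are hypothetical, and the text does not state which test, if any, was used for this comparison.

```python
from math import comb

CHANCE = 0.25  # assumption: uniform random choice among the 4 sequences

def met_criterion(daily_pct_correct, threshold=60.0, n_days=2):
    """True if daily % correct exceeded `threshold` on `n_days` consecutive days."""
    run = 0
    for pct in daily_pct_correct:
        run = run + 1 if pct > threshold else 0
        if run >= n_days:
            return True
    return False

def binom_p_above_chance(k_correct, n_trials, p=CHANCE):
    """One-sided exact binomial P(X >= k_correct), X ~ Binomial(n_trials, p)."""
    return sum(comb(n_trials, i) * p**i * (1 - p) ** (n_trials - i)
               for i in range(k_correct, n_trials + 1))
```

For example, `binom_p_above_chance(48, 60)` (80% correct in one session) is vanishingly small, which illustrates why performance of 80% or more is far from the 25% chance level.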

Spatial Firing.

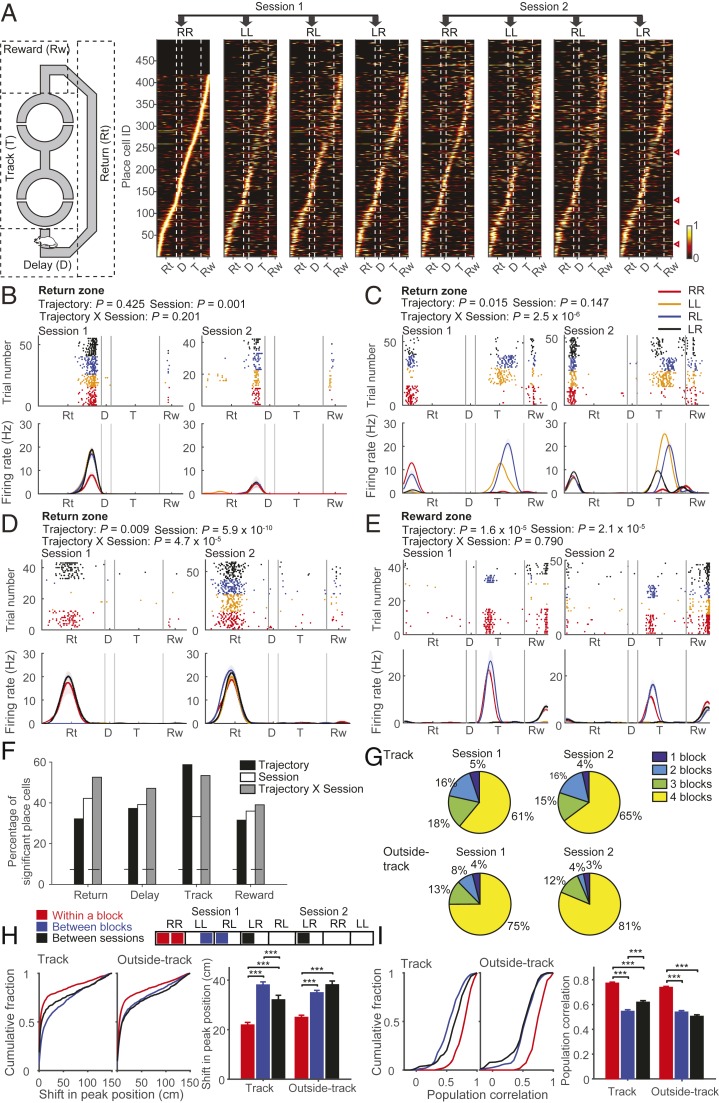

Throughout the study, we linearized the animal’s spatial position by projecting each position in the maze onto the mean spatial trajectory for each session (SI Appendix, Fig. S1A). We recorded 698 single units from the dorsal CA1 region, and 648 of them (92.8%) were classified as putative pyramidal cells (SI Appendix, Fig. S2). Because we were interested in how replay events relate to place-cell firing along different spatial trajectories, and because CA1 spatial firing varies across spatial trajectories (23–26) and over time (27–29), we identified place cells separately for each trial block and each session using forced-choice and correct free-choice trials. In total, 479 (73.9%) and 483 (74.5%) pyramidal cells were classified as place cells in at least one block of sessions 1 and 2, respectively (the number of place cells in each session set is shown in SI Appendix, Table S1). Fig. 2A shows the spatial firing of all place cells arranged according to the position of peak firing on the linearized maze. The spatial firing pattern differed between blocks not only in the track zone, but also in the rest of the maze. Variations in actual trajectories and head directions can explain block (i.e., trajectory)-dependent firing in the track zone, but not in the other zones (SI Appendix, Fig. S1). The spatial firing pattern also differed between sessions 1 and 2, even for the same spatial sequences. Thus, CA1 spatial firing varied as a function of both spatial trajectory and session.
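Linearization by projection onto a mean trajectory can be sketched as finding the closest point on a polyline and returning its distance along that polyline. This is a minimal sketch under stated assumptions: the mean trajectory is given as a list of (x, y) vertices in cm, and `linearize` is a hypothetical helper. On a figure-8 maze the mean path crosses itself, so a pure nearest-segment projection can be ambiguous near the crossing; a fuller implementation would restrict the search to the maze zone or trial phase the animal is in.

```python
import math

def linearize(point, path):
    """Project a 2-D position onto the polyline `path` and return the
    distance along the path (same units as the input) of the closest point."""
    px, py = point
    best_d2, best_s = math.inf, 0.0
    s0 = 0.0  # cumulative path length at the start of the current segment
    for (x0, y0), (x1, y1) in zip(path, path[1:]):
        dx, dy = x1 - x0, y1 - y0
        seg_len2 = dx * dx + dy * dy
        # Fraction along the segment of the orthogonal projection, clamped to [0, 1]
        t = 0.0 if seg_len2 == 0 else max(0.0, min(1.0, ((px - x0) * dx + (py - y0) * dy) / seg_len2))
        cx, cy = x0 + t * dx, y0 + t * dy
        d2 = (px - cx) ** 2 + (py - cy) ** 2
        if d2 < best_d2:
            best_d2, best_s = d2, s0 + t * math.sqrt(seg_len2)
        s0 += math.sqrt(seg_len2)
    return best_s
```

For an L-shaped path `[(0, 0), (10, 0), (10, 10)]`, the point `(11, 7)` projects onto the second segment at roughly 17 cm along the path.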

Fig. 2.

Spatial firing. (A, Left) Schematic showing different spatial zones of the maze. (A, Right) Normalized spatial firing rates of all place cells (determined based on forced-choice and correct free-choice trials) are shown as heat maps on the linearized maze for each sequence block. Each row shows spatial firing of one place cell. Place cells were aligned according to the position of the maximal firing rate during the RR block of the first session. Vertical dashed lines divide different spatial zones on the maze. Rt, return; D, delay; T, track; Rw, reward. Triangles indicate sample place cells shown in B–E. (B–E) Sample place cells that showed trajectory- and/or session-dependent firing outside the track zone. Shown is neural activity during correct free-choice trials. Spatial spike raster (Top) and spatial firing-rate plots (Bottom; smoothed by a Gaussian kernel with σ = 4 cm; shading, SEM) are shown along the linearized maze separately for sessions 1 (Left) and 2 (Right). Colors denote different sequence blocks. P values for the main effects of trajectory and session as well as trajectory × session interaction (ANCOVA) are indicated for each sample place cell. (F) Percentages of place cells in each zone that were significantly responsive to trajectory, session, and/or the trajectory × session interaction (ANCOVA, P < 0.05). The horizontal dashed line indicates the level of chance (binomial test, alpha = 0.05). (G) Pie charts showing percentages of place cells with peak firing inside (Top) or outside (Bottom) the track zone that had place fields in 1, 2, 3, or 4 blocks in each session (Left, session 1; Right, session 2). 
(H and I) Each block was divided in half (∼7 trials each), and the difference in place-field peak-firing locations (H; Left, cumulative plot; Right, mean ± SEM) and spatial correlation of place-cell population vectors (I; Left, cumulative plot; Right, mean ± SEM) were computed between the halves of each block (within block), between the adjacent halves of adjacent blocks (between blocks), and between the corresponding halves of identical blocks of the 2 sessions (between sessions) for the track zone and the rest of the maze (outside track). ***P < 0.0005 (Kruskal–Wallis tests followed by Bonferroni’s post hoc tests).
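The smoothed firing-rate plots (Gaussian kernel, σ = 4 cm) can be approximated with one common recipe: bin spikes and occupancy along the linearized maze, smooth both with the same Gaussian kernel, and divide. This is a hedged sketch; the 1-cm bin width, 3σ kernel truncation, and the name `smoothed_rate_map` are assumptions, not details from the text.

```python
import math

def smoothed_rate_map(spike_pos, occupancy_s, bin_cm=1.0, sigma_cm=4.0):
    """Gaussian-smoothed firing rate (Hz) per linear-position bin.

    spike_pos   : linearized positions (cm) at which the cell spiked
    occupancy_s : time (s) spent in each position bin
    Spike counts and occupancy are smoothed with the same kernel and then
    divided, so sparsely visited bins are not over-weighted.
    """
    n = len(occupancy_s)
    counts = [0.0] * n
    for x in spike_pos:
        i = int(x // bin_cm)
        if 0 <= i < n:
            counts[i] += 1
    half = int(math.ceil(3 * sigma_cm / bin_cm))  # truncate kernel at 3 sigma
    offsets = range(-half, half + 1)
    kernel = [math.exp(-0.5 * (k * bin_cm / sigma_cm) ** 2) for k in offsets]

    def smooth(v):
        out = []
        for i in range(n):
            acc = 0.0
            for k, w in zip(offsets, kernel):
                if 0 <= i + k < n:
                    acc += w * v[i + k]
            out.append(acc)
        return out

    sc, so = smooth(counts), smooth(occupancy_s)
    return [c / o if o > 0 else 0.0 for c, o in zip(sc, so)]
```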

Fig. 2 B–E shows 4 sample place cells with trajectory- and/or session-dependent firing that could not be explained by variations in spatial trajectory (additional examples are shown in SI Appendix, Fig. S3). The place cell in Fig. 2B showed significant session-dependent firing in the return zone (analysis of covariance [ANCOVA]; main factors: session and trajectory), firing more strongly in session 1 than in session 2. The place cell in Fig. 2C showed significant trajectory and trajectory × session interaction effects in the return zone: in session 1, but not in session 2, it fired more strongly during RR and RL than during the other sequences. The place cell in Fig. 2D showed significant trajectory, session, and trajectory × session interaction effects in the return zone: in session 1, but not in session 2, it fired more strongly during RR and LR than during the other sequences. The place cell in Fig. 2E showed significant trajectory and session effects in the reward zone. The majority of place cells with significant trajectory-dependent firing outside the track zone also showed significant trajectory × session interaction effects (134 of 185; 72.4%). Moreover, most session-sensitive place cells showed abrupt changes in activity between sessions 1 and 2 (Fig. 2 and SI Appendix, Fig. S3). This indicates that the session-dependent firing observed here more likely reflects nonspatial context (task structure)-dependent firing than gradual changes in firing over time (27, 28). As these examples show, both global remapping and rate remapping (30) were observed across blocks and/or sessions.

We performed additional analyses of trajectory- and/or session-dependent place-cell firing using correct free-choice trials. We first examined the proportions of place cells that showed trajectory- and/or session-dependent firing using ANCOVA (main factors: trajectory and session; covariates: lateral deviation of head position, head direction, and speed). The proportion of place cells with a significant main effect of trajectory was ∼60% in the track zone and >30% in the rest of the maze, indicating that spatial trajectory strongly modulates CA1 spatial firing. The proportion of place cells with a significant main effect of session was >30%, and that with a significant trajectory × session interaction was >40%, in all parts of the maze (Fig. 2F). Most trajectory- and/or session-sensitive neurons were sensitive to both factors. Only a small number of cells showed strictly trajectory- or session-dependent firing (ANCOVA; main effect of trajectory only, without a significant trajectory × session interaction: n = 27, 24, and 15 in the delay, reward, and return zones, respectively; main effect of session only, without a significant trajectory × session interaction: n = 32, 38, and 34 in the delay, reward, and return zones, respectively).
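The chance level for these proportions (the dashed line in Fig. 2F, binomial test, alpha = 0.05) can be sketched as follows: if each cell is "significant" with probability 0.05 under the null, the chance threshold is the smallest proportion whose upper binomial tail falls below alpha. This is one plausible reading of the legend, offered as an assumption; `chance_proportion` is a hypothetical name.

```python
from math import comb

def chance_proportion(n_cells, alpha=0.05, p_false=0.05):
    """Smallest proportion k/n_cells of significant cells exceeding chance.

    Under the null, each cell is significant with probability `p_false`
    (the per-cell test alpha). Returns k/n_cells for the smallest k with
    P(X >= k) < alpha, where X ~ Binomial(n_cells, p_false).
    """
    tail = 1.0  # P(X >= k), starting at k = 0
    for k in range(n_cells + 1):
        if tail < alpha:
            return k / n_cells
        tail -= comb(n_cells, k) * p_false**k * (1 - p_false) ** (n_cells - k)
    return 1.0
```

For 100 cells, the threshold is 10%, so observed proportions such as ∼60% or >30% lie far above chance.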

We also calculated the percentage of place cells that had place fields in 1, 2, 3, or all 4 blocks of a session using correct free-choice trials. Inside the track zone, 61% and 65% of place cells maintained their firing fields in all 4 blocks in sessions 1 and 2, respectively, whereas outside the track zone 75% and 81% did so (Fig. 2G). In addition, we quantified the change in place-field location for place cells that did not undergo global remapping. For this, we divided each block into halves (∼7 correct trials each) and compared the peak-firing positions of place fields between the 2 halves of the same block (within block), between adjacent halves of 2 adjacent blocks of the same session (between blocks, to examine the trajectory effect), and between corresponding halves of identical sequence blocks in the 2 sessions (between sessions, to examine the session effect; Fig. 2H). The shift in peak-firing position varied significantly across the 3 comparisons (Kruskal–Wallis test; track zone, χ2(2) = 208.686, P = 4.8 × 10−46, n = 1,242, 1,147, and 581 place-field pairs, respectively; outside-track zone, χ2(2) = 143.294, P = 7.7 × 10−32, n = 1,951, 1,886, and 919 place-field pairs, respectively). The shift was significantly greater between adjacent blocks than within the same block (Bonferroni’s post hoc test; track zone, P = 9.8 × 10−47; outside-track zone, P = 4.4 × 10−27; data from sessions 1 and 2 combined) and between sessions than within the same block (track zone, P = 1.3 × 10−6; outside-track zone, P = 1.7 × 10−18; Fig. 2H). Finally, to assess overall changes in place fields arising from both firing-rate changes and field shifts, we calculated population vector correlations for within-block, between-block, and between-session pairs. 
We found that the spatial correlation of place-cell population vectors (30) varied significantly across the 3 comparisons (Kruskal–Wallis test; track zone, χ2(2) = 149.788, P = 2.9 × 10−33, n = 205, 179, and 204 population vector pairs, respectively; outside-track zone, χ2(2) = 189.753, P = 6.2 × 10−42, n = 205, 179, and 204 population vector pairs, respectively). The spatial correlation was significantly higher within the same block than between adjacent blocks (Bonferroni’s post hoc test; track zone, P = 2.8 × 10−32; outside-track zone, P = 1.2 × 10−27) and than between identical blocks of the 2 sessions (track zone, P = 8.5 × 10−16; outside-track zone, P = 9.4 × 10−36; Fig. 2I). These results indicate strong effects of trajectory and session on the spatial firing of place cells in our task.
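The two measures compared in this paragraph can be sketched as (i) the absolute shift of a cell's peak-firing bin between two rate maps and (ii) a population-vector (PV) correlation between two sets of rate maps. The PV definition used here (Pearson correlation of the across-cell rate vector in each spatial bin, averaged over bins) is one common choice and an assumption; the text defers the exact definition to ref. 30. All function names are hypothetical.

```python
import math

def peak_shift_cm(rate_a, rate_b, bin_cm=1.0):
    """Absolute distance between the peak-firing bins of two rate maps."""
    ia = max(range(len(rate_a)), key=rate_a.__getitem__)
    ib = max(range(len(rate_b)), key=rate_b.__getitem__)
    return abs(ia - ib) * bin_cm

def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def pv_correlation(maps_a, maps_b):
    """Mean population-vector correlation between two conditions.

    maps_a, maps_b: one rate map per place cell (same cell order and the
    same spatial bins in both). For each spatial bin, the across-cell rate
    vector in condition A is correlated with that in condition B, and the
    per-bin correlations are averaged. Bins with zero variance are skipped.
    """
    rs = []
    for j in range(len(maps_a[0])):
        va = [m[j] for m in maps_a]
        vb = [m[j] for m in maps_b]
        if len(set(va)) > 1 and len(set(vb)) > 1:
            rs.append(pearson(va, vb))
    return sum(rs) / len(rs) if rs else float("nan")
```

Within-block pairs would compare the two halves of one block; between-block and between-session pairs would pass the corresponding halves of different blocks or sessions.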

Replay Events.

The majority of SWRs were observed in the reward zone (n = 76,609, 57.7%; delay zone, n = 7,603, 5.7%; rest of the maze, n = 48,453, 36.5%). Instead of waiting quietly, the rats moved their heads frequently during the delay period, and theta oscillations were stronger in the delay zone than in the reward zone (SI Appendix, Fig. S4), which likely explains why SWRs were so infrequent in the delay zone. We therefore focused our analysis on SWR-associated neural activity recorded in the reward zone.

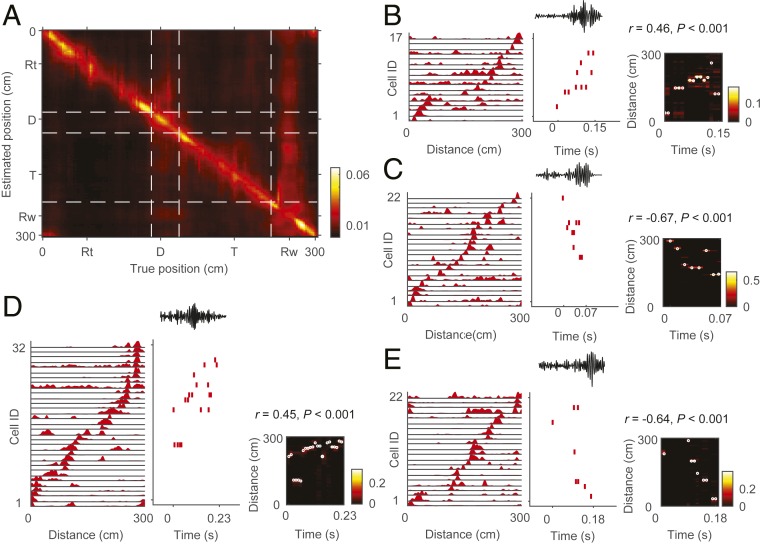

Fig. 3A shows the animal’s position on the linearized maze decoded from neuronal ensemble activity, averaged across all sessions (SI Appendix, Materials and Methods). The position decoded from ensemble activity in 50-ms time bins correlated well with the animal’s actual position in the track, return, and delay zones, where the animals moved relatively fast (track, 15.8 ± 0.05; return, 17.5 ± 0.06; delay, 12.2 ± 0.12 cm/s). In the reward zone, however, where the average movement velocity was low (7.3 ± 0.27 cm/s), the decoded position was broadly distributed throughout the maze (cf. ref. 31). This is consistent with the finding that awake hippocampal replays are observed mostly during immobility (6, 20).
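Position decoding of this kind is commonly done with a memoryless Bayesian decoder that assumes independent Poisson firing and a uniform spatial prior. The sketch below implements that standard approach for a single 50-ms bin; it illustrates those assumptions and is not necessarily the decoder described in the SI Appendix. `decode_position` is a hypothetical name.

```python
import math

def decode_position(spike_counts, rate_maps, dt=0.05):
    """Posterior over position bins for one time bin.

    spike_counts : spike count of each cell in the time bin
    rate_maps    : per-cell firing rate (Hz) in each position bin
    dt           : time-bin width in s (50 ms, as in the text)
    With independent Poisson firing and a uniform prior,
        log P(x | n) = sum_i [n_i * log f_i(x) - dt * f_i(x)] + const.
    """
    eps = 1e-9  # floor on rates to avoid log(0)
    log_post = []
    for x in range(len(rate_maps[0])):
        lp = 0.0
        for n_i, f in zip(spike_counts, rate_maps):
            rate = max(f[x], eps)
            lp += n_i * math.log(rate) - dt * rate
        log_post.append(lp)
    m = max(log_post)  # subtract the max before exponentiating, for stability
    post = [math.exp(v - m) for v in log_post]
    z = sum(post)
    return [p / z for p in post]
```

Applied bin by bin within a candidate event, the resulting posteriors form the time × position matrices shown as heat maps in Fig. 3 B–E.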

Fig. 3.

Candidate replay events. (A) Neural decoding of animal position. The heat map shows the posterior probability of estimated animal position (ordinate) ordered by the true animal position (abscissa) averaged across all sessions. Note the broad distributions of the estimated position spanning the entire maze in the reward (Rw) zone, but not in return (Rt), delay (D), or track (T) zones. (B–E) Four examples of candidate replay events for the current trajectory at the reward site. Left, a sequentially arranged neural firing-rate template for the current block; Middle, a spike raster plot during a candidate replay event along with simultaneously recorded local field potential (LFP) signals (filter, 100 to 250 Hz) on top; Right, a heat map of reconstructed position for each event. White circles show the reconstructed position with maximum probability. The numbers above each plot show the weighted correlation (r) and P value (SI Appendix, Materials and Methods) for each event.

We defined a candidate replay event as a set of spikes emitted by at least 5 different place cells of a given block, preceded by a >60-ms silent period and accompanied by at least one coincident SWR (Fig. 3 B–E). In this way, we restricted our analysis to replay events related to the spatial firing of a particular block of interest. We constructed place-cell templates using forced-choice and correct free-choice trials for each block and, unless otherwise noted, analyzed replay events during free-choice trials. We found 2,573 candidate replay events related to the current trajectory (the correct path in the current block), with a mean (±SD) duration of 151.9 ± 61.5 ms. To increase the sample size, we combined the results from sessions 1 and 2, and we included in the analysis only significant replay events, as determined with a permutation test (SI Appendix, Materials and Methods; n = 1,342 for the current trajectory). For each significant replay event, we then calculated a weighted correlation (r) (SI Appendix, Materials and Methods) (32–34), which measures how well the decoded positions match a linear trajectory corresponding to the correct path in the current block. We divided significant replay events for the current trajectory into forward and reverse replays based on the sign of their weighted correlation coefficients (forward replays, r > 0; reverse replays, r < 0). Forward replays were significantly more frequent than reverse replays (707 and 625, respectively; χ2-test, χ2 = 6.811, P = 0.009).
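The weighted correlation can be computed from a decoded posterior (time bins × position bins) by weighting every (time, position) pair by its posterior probability; the sign of r then labels the event as forward or reverse, as in the text. This sketch follows that common definition under two assumptions: the posterior is already restricted to the current block's linearized path, and the permutation test used to establish significance (described in the SI) is not reproduced. Function names are hypothetical.

```python
import math

def weighted_correlation(posterior):
    """Posterior-weighted correlation between time bin and decoded position.

    posterior[t][x] is the decoded probability of position bin x in time bin t.
    r > 0: decoded position advances along the path (forward replay);
    r < 0: it moves backward along the path (reverse replay).
    """
    w_sum = mt = mx = 0.0
    for t, row in enumerate(posterior):
        for x, w in enumerate(row):
            w_sum += w
            mt += w * t
            mx += w * x
    mt /= w_sum
    mx /= w_sum
    ctx = ctt = cxx = 0.0
    for t, row in enumerate(posterior):
        for x, w in enumerate(row):
            ctx += w * (t - mt) * (x - mx)
            ctt += w * (t - mt) ** 2
            cxx += w * (x - mx) ** 2
    return ctx / math.sqrt(ctt * cxx)

def classify(r):
    """Label a significant event by the sign of its weighted correlation."""
    return "forward" if r > 0 else "reverse" if r < 0 else "none"
```

The absolute value |r| from the same function serves as the fidelity measure compared between correct and error trials later in the Results.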

Effect of Reward on Replays of the Current Trajectory.

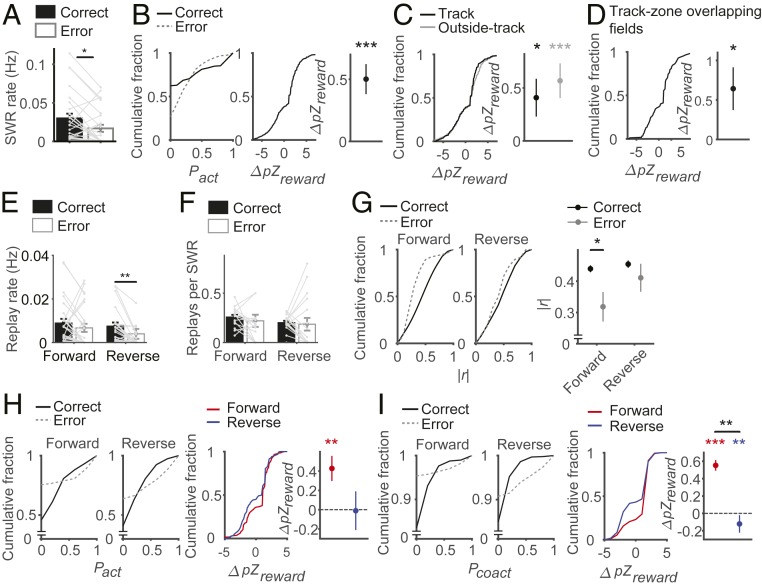

Place-cell reactivation during SWR.

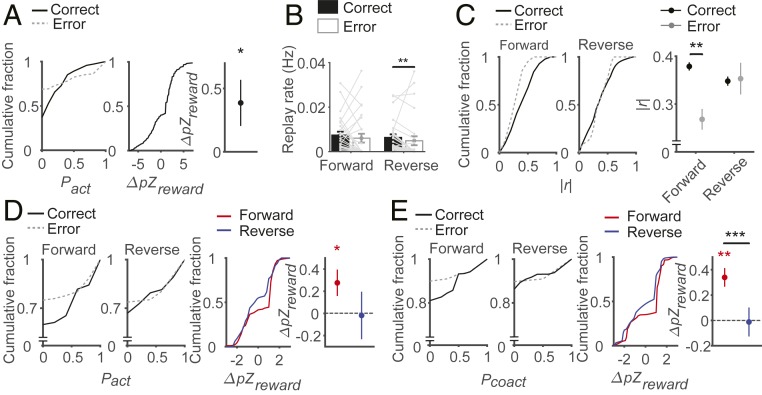

Before examining the effects of reward on replays, we first examined how reward affects the reactivation of place cells during SWRs in the reward zone. For each block, we identified place cells using forced-choice and correct trials and compared their reactivation during SWRs in correct versus error trials. The animals stayed significantly longer in the reward zone in correct than in error trials (time spent in the reward zone, mean ± SD, 16.53 ± 4.10 s and 6.18 ± 9.47 s, respectively; signed-rank test, z = 4.008, P = 6.1 × 10−5). As a consequence, the number of SWRs was significantly larger in correct than in error trials (0.61 ± 0.13 and 0.13 ± 0.04 per trial, respectively; signed-rank test, z = 4.084, P = 4.4 × 10−5; SI Appendix, Fig. S5). Even when normalized to the time spent in the reward zone, the SWR rate was higher in correct than in error trials (signed-rank test, z = 2.571, P = 0.010, n = 25 session sets; Fig. 4A). We also calculated the normalized difference in place-cell activation per SWR between correct and error trials. For each place cell in the current block, we calculated its activation probability in the SWRs of correct and error trials. We then normalized the difference in activation probabilities between correct and error trials by the SE of the difference, based on a binomial distribution, to obtain a z score (ΔpZreward). Place cells were activated with higher probability per SWR in correct than in error trials, so that ΔpZreward was significantly larger than zero (signed-rank test; z = 4.441, P = 8.9 × 10−6, n = 627 place cells; Fig. 4B).
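A z score of this kind can be sketched as a two-proportion comparison. The text specifies only that the SE is "based on a binomial distribution", so this sketch assumes the pooled estimate, SE = sqrt(p(1 − p)(1/n_c + 1/n_e)) with p the pooled activation probability; `delta_p_z` is a hypothetical name, and its inputs are counts of SWRs in which the cell fired.

```python
import math

def delta_p_z(k_correct, n_correct, k_error, n_error):
    """z-scored difference in activation probability (correct minus error).

    k_*: number of SWRs in which the cell fired, per trial type
    n_*: total number of SWRs in that trial type
    Uses the pooled binomial SE of a difference of two proportions
    (an assumption); returns 0 when the SE is zero.
    """
    p1, p2 = k_correct / n_correct, k_error / n_error
    p = (k_correct + k_error) / (n_correct + n_error)
    se = math.sqrt(p * (1 - p) * (1 / n_correct + 1 / n_error))
    return 0.0 if se == 0 else (p1 - p2) / se
```

A cell that fires in 30 of 100 correct-trial SWRs but only 10 of 100 error-trial SWRs gets a positive score of about 3.5; the per-cell scores are then tested against zero across cells.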

Fig. 4.

Effect of reward on replays of the current rewarding trajectory. (A–D) Effect of reward on place-cell reactivation during SWRs. (A) Mean ± SEM rates (Hz) of SWR in correct and error trials. *P < 0.05 (signed-rank test). (B, Left) Place-cell activation probabilities (Pact) per SWR in correct and error trials shown as cumulative plots. (B, Middle and Right) Normalized difference in Pact between correct-trial and error-trial SWRs (ΔpZreward) shown as a cumulative plot (Middle) or mean ± SEM (Right). ***P < 0.0005 (signed-rank test for difference from 0). (C and D) ΔpZreward (Left, cumulative plot; Right, mean ± SEM) is shown for place cells inside and outside the track zone separately (C) and for place cells inside the track zone whose fields were crossed by the animal in both correct and error trials (D). *P < 0.05, ***P < 0.0005 (signed-rank test for difference from 0). (E–I) Effect of reward on forward and reverse replays. (E) Mean ± SEM rates (Hz) of forward and reverse replays in correct and error trials. **P < 0.005 (signed-rank test). (F) Mean ± SEM numbers of forward and reverse replays per SWR in correct and error trials. (G) Absolute weighted correlations (|r|) of forward and reverse replays (Left, cumulative plot; Right, mean ± SEM). *P < 0.05 (rank-sum test). (H and I, Left) Activation (Pact, H) and coactivation (Pcoact, I) probabilities of place cells in forward and reverse replays in correct and error trials shown as cumulative plots. (H and I, Middle and Right) Normalized difference in Pact (H) or Pcoact (I) between correct-trial and error-trial replays (ΔpZreward) shown as a cumulative plot (Middle) or mean ± SEM (Right). **P < 0.005, ***P < 0.0005 (signed-rank test for difference from 0; rank-sum test for comparisons between forward and reverse replays).

We performed additional analyses to explore whether factors other than reward could explain the higher reactivation of place cells in correct than in error trials. One such factor is recent experience. If place cells activated during recent navigation are more likely to be reactivated during SWRs, place cells whose fields the animal crosses in correct, but not error, trials would be more likely to be reactivated in correct than in error trials. This is a potential confound in our analysis because we identified place cells using correct and forced-choice, but not error, trials. To test this possibility, we examined the reactivation of place cells inside and outside the track zone separately. We found similar reward effects for the 2 groups of place cells (signed-rank test; place cells inside track, ΔpZreward, z = 2.336, P = 0.019, n = 258; place cells outside track, ΔpZreward, z = 3.827, P = 1.3 × 10−4, n = 369; Fig. 4C). Because navigation trajectories outside the track zone were similar across blocks (SI Appendix, Fig. S1), the enhanced reactivation of outside-track place cells in correct trials cannot be attributed to an experience effect. In addition, among place cells inside the track zone, we selected those whose fields the animal crossed in both correct and error trials. These place cells were also reactivated with higher probability per SWR in correct than in error trials (signed-rank test, ΔpZreward, z = 1.994, P = 0.046, n = 102 place cells; Fig. 4D). Again, this difference cannot be attributed to an experience effect, because the analyzed place fields were traversed in both trial types. We then examined whether place-cell reactivation differs between forced-choice and error trials. 
We found that place-cell reactivation probability per SWR was significantly higher in forced-choice than in error trials, but similar between forced-choice and correct trials (SI Appendix, Fig. S6B). Because forced-choice and correct trials were both rewarded, reward itself, rather than whether the animal’s choice was correct, appears to be the determining factor in enhancing place-cell reactivation. Collectively, these results support the possibility that reward enhances not only the SWR rate but also place-cell reactivation per SWR, consistent with previous reports (18, 19).

Replay rate.

We then assessed the effects of reward on forward and reverse replays by comparing replays during correct and error trials. We first examined how reward affects replays of the current trajectory (i.e., sequential place-cell firing along the correct trajectory in the ongoing block). Replay rate (Hz) was significantly higher in correct than in error trials for reverse replays, but not for forward replays (signed-rank tests; forward replays, z = 1.429, P = 0.153, n = 24 session sets; reverse replays, z = 3.269, P = 0.001, n = 24 session sets; Fig. 4E). The numbers of forward and reverse replays per SWR did not differ significantly between correct and error trials (signed-rank tests; forward replay, z = 1.254, P = 0.210, n = 17 session sets; reverse replay, z = 0.414, P = 0.679, n = 17 session sets; Fig. 4F). These results indicate that reward increases the rate of reverse but not of forward replays, which is consistent with previous reports (19, 35).
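The session-level comparisons above use signed-rank tests reported as z scores. A minimal sketch of the Wilcoxon signed-rank statistic with the normal approximation is shown below; it drops zero differences and assigns average ranks to ties but, as a simplifying assumption, omits the tie correction to the variance. The function names are hypothetical, and the exact test variant used in the paper is not specified here.

```python
import math

def signed_rank_z(x, y):
    """Wilcoxon signed-rank statistic for paired samples, as a z score.

    x, y: paired values, e.g., per-session replay rates in correct and error
    trials. Zero differences are dropped, tied |differences| get average
    ranks, and the normal approximation is used without a tie correction.
    """
    d = [a - b for a, b in zip(x, y) if a != b]
    n = len(d)
    if n == 0:
        return 0.0
    order = sorted(range(n), key=lambda i: abs(d[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(d[order[j + 1]]) == abs(d[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # mean of 1-based ranks i+1..j+1
        i = j + 1
    w_plus = sum(r for r, di in zip(ranks, d) if di > 0)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    return (w_plus - mean) / sd

def two_sided_p(z):
    """Two-sided P value of a standard-normal z score."""
    return math.erfc(abs(z) / math.sqrt(2))
```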

Replay fidelity.

We then compared the absolute weighted correlation (|r|) (33, 34) between correct and error trials as a measure of replay fidelity. The absolute weighted correlation was significantly higher in correct than in error trials for forward replays (rank-sum test; z = 2.666, P = 0.008, n = 500 and 36, respectively), but not for reverse replays (z = 0.942, P = 0.346, n = 433 and 30, respectively; Fig. 4G).
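Because |r| values from correct and error trials come from different replay events, they are compared with an unpaired rank-sum test. The sketch below computes the Wilcoxon rank-sum statistic as a z score with the normal approximation, using average ranks for ties and no tie correction to the variance (a simplifying assumption); the names are hypothetical.

```python
import math

def _average_ranks(values):
    """1-based ranks; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks

def rank_sum_z(a, b):
    """Wilcoxon rank-sum statistic of sample `a` versus `b`, as a z score.

    a, b: independent samples, e.g., |r| of forward replays in correct
    versus error trials. Positive z means `a` tends to rank higher.
    """
    n1, n2 = len(a), len(b)
    ranks = _average_ranks(list(a) + list(b))
    r1 = sum(ranks[:n1])
    mean = n1 * (n1 + n2 + 1) / 2
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    return (r1 - mean) / sd
```

With unequal group sizes such as 500 versus 36 events, the normal approximation remains reasonable because the larger sample dominates the rank distribution.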

As additional measures, we compared, between correct and error trials, the probability that a place cell is activated in replays (Pact) and the probability that 2 place cells are activated together in replays (Pcoact). Specifically, for each place cell (or place-cell pair) in the current block, we calculated its probability of activation (or coactivation) in the replays of correct and error trials. We then normalized the difference in activation (or coactivation) probabilities between correct and error trials by the SE of the difference, based on a binomial distribution, to obtain a z score (ΔpZreward) (36). For both Pact and Pcoact, ΔpZreward was significantly larger than zero in forward replays (signed-rank test, Pact, z = 3.367, P = 7.6 × 10−4, n = 222 place cells; Pcoact, z = 7.915, P = 4.6 × 10−11, n = 731 place-cell pairs), but not in reverse replays (Pact, z = −0.219, P = 0.827, n = 102 place cells; Pcoact, z = −2.872, P = 0.004, n = 430 place-cell pairs; Fig. 4 H and I). ΔpZreward for Pcoact was also significantly larger in forward than in reverse replays, whereas the corresponding difference for Pact did not reach significance (rank-sum test; Pact, z = 1.918, P = 0.055; Pcoact, z = 2.837, P = 0.005; Fig. 4 H and I).
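Pact and Pcoact can be sketched by representing each replay event as the set of template place cells that fired at least once during it. This representation and the name `activation_probs` are assumptions for illustration; the counts behind these probabilities can then be z-scored between correct and error trials as described above.

```python
from itertools import combinations

def activation_probs(events, cells):
    """Per-cell activation and per-pair coactivation probabilities.

    events: list of sets, each holding the IDs of place cells that fired
            at least once in one replay event
    cells : place-cell IDs of the block template
    Returns (p_act, p_coact): dicts keyed by cell ID and by sorted cell pair.
    """
    n = len(events)
    p_act = {c: sum(c in e for e in events) / n for c in cells}
    p_coact = {
        pair: sum(pair[0] in e and pair[1] in e for e in events) / n
        for pair in combinations(sorted(cells), 2)
    }
    return p_act, p_coact
```

Computed separately for the replays of correct and error trials, these probabilities give the two quantities whose difference is normalized into ΔpZreward.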

Additional analyses indicated that the observed differences between correct and error trials are not because of a systematic change in the animal’s behavior over time [e.g., an ‘automation’ effect (37)] (SI Appendix, Fig. S7) or the difference in recent navigation experience between correct and error trials (SI Appendix, Fig. S8). We also found similar differences between forced-choice and error trials (SI Appendix, Fig. S6). These results consistently support the conclusion that reward increases the rate of reverse replays, while enhancing the fidelity of forward replays.

Effect of Reward on Replays of Alternative Trajectories.

Place-cell reactivation during SWR.

Hippocampal replays are not restricted to recently experienced navigation trajectories (8, 21). We therefore examined the effect of reward on replays related to the rewarding trajectories of blocks other than the current one. Before examining the effect of reward on replays, we examined its effect on the reactivation of other-block place cells during SWRs in the reward zone. We included only track-zone place cells (SI Appendix, Materials and Methods) in this analysis and examined whether reward affects the reactivation of place cells whose fields were located off the current correct trajectory. For example, if the correct trajectory in the current block was RR, we analyzed place fields located in the first or second L (determined on the basis of neural and behavioral data in the LL, LR, or RL block). These place cells were activated with higher probability per SWR in correct than in error trials (signed-rank test; ΔpZreward, z = 2.343, P = 0.019, n = 250 place cells; Fig. 5A). This result indicates that reward enhances SWR-associated activation of place cells whose fields lie off, as well as on, the recently taken trajectory.

Fig. 5.

Effect of reward on replays of alternative rewarding trajectories. Shown are reward effects on replays of rewarding trajectories other than the current one. (A) Effect of reward on reactivation of place cells located on alternative trajectories during SWRs. Place cells with firing fields located inside the track zone, but not on the correct trajectory of the current block, were analyzed. Same format as in Fig. 4B. *P < 0.05 (signed-rank test). (B–E) Effect of reward on forward and reverse replays for alternative rewarding trajectories. Replays were determined using place-cell activity in each of the blocks other than the current one, and those overlapping with current-block replays were excluded from the analysis. (B) Mean ± SEM rates (Hz) of forward and reverse replays for alternative rewarding trajectories. **P < 0.005 (signed-rank test). (C) Absolute weighted correlations (|r|) of forward and reverse replays for alternative rewarding trajectories. Same format as in Fig. 4G. **P < 0.005 (rank-sum test). (D and E) Activation (Pact, D) and coactivation (Pcoact, E) probabilities of place cells in forward and reverse replays for alternative rewarding trajectories. Same format as in Fig. 4 H and I. *P < 0.05, **P < 0.005, ***P < 0.0005 (signed-rank test for difference from 0; rank-sum test for comparisons between forward and reverse replays).

Replay rate and fidelity.

We then examined the effects of reward on forward and reverse replays of trajectories other than the current one (i.e., place-cell sequences of the other blocks in a given session). In this analysis, we used place cells in each of the blocks other than the current one to determine candidate replay events. If a candidate event significantly matched a correct path in a given block (a template of place cells determined from forced-choice and correct free-choice trials in that block), it was considered a replay of that specific trajectory. We also used place cells in each of the other blocks to calculate replay rates, absolute weighted correlations (|r|), Pact, and Pcoact. All other procedures were identical to those used in the analysis of current-trajectory replays. Note that place cells of the other blocks overlap substantially with those of the current block, so replays for the current block also overlap substantially with those for the other blocks (SI Appendix, Fig. S9). To alleviate this problem, we excluded from this analysis other-trajectory replays that were also current-trajectory replays (i.e., common replays; forward replays: 446 of 857 excluded; reverse replays: 372 of 760 excluded).
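
Excluding common replays amounts to a set difference over candidate events. The sketch below assumes that each candidate event carries a unique identifier (a hypothetical data layout, not described in the text) and also checks that the reported counts are internally consistent: the retained forward (857 − 446) and reverse (760 − 372) replays should equal the correct-plus-error sample sizes given for |r| in the next analysis.

```python
def exclude_common_replays(other_ids, current_ids):
    """Keep other-trajectory replay events that are not also current-trajectory replays."""
    current = set(current_ids)
    return [event for event in other_ids if event not in current]


# Consistency check using the counts reported in the text.
forward_kept = 857 - 446  # other-trajectory forward replays retained
reverse_kept = 760 - 372  # other-trajectory reverse replays retained

# These should equal the correct + error sample sizes reported for |r|.
assert forward_kept == 377 + 34
assert reverse_kept == 359 + 29
```

For example, `exclude_common_replays(["e1", "e2", "e3"], ["e2"])` returns `["e1", "e3"]`; the event labels here are illustrative only.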

The analysis of other-trajectory replays (with common replays excluded) yielded results largely similar to those of the current-trajectory analysis. The replay rate was significantly higher in correct than in error trials for reverse, but not forward, replays (signed-rank tests; forward replays: z = 1.410, P = 0.159, n = 24 session sets; reverse replays: z = 2.832, P = 0.005, n = 24 session sets; Fig. 5B). The absolute weighted correlation was significantly higher in correct than in error trials for forward, but not reverse, replays (rank-sum tests; forward replays: z = 3.325, P = 8.9 × 10⁻⁴, n = 377 and 34 replays in correct and error trials, respectively; reverse replays: z = −0.365, P = 0.715, n = 359 and 29, respectively; Fig. 5C). For both Pact and Pcoact, the normalized difference between correct and error trials (ΔpZreward) differed significantly from zero in forward replays (signed-rank tests; Pact, z = 2.237, P = 0.025, n = 130 place cells; Pcoact, z = 3.246, P = 0.001, n = 390 place-cell pairs; Fig. 5 D and E), but not in reverse replays (Pact, z = −0.172, P = 0.863, n = 49 place cells; Pcoact, z = −0.478, P = 0.633, n = 156 place-cell pairs). ΔpZreward for Pcoact, but not for Pact, was significantly larger in forward than in reverse replays (rank-sum tests; Pact, z = 1.726, P = 0.084; Pcoact, z = 4.803, P = 1.6 × 10⁻⁶; Fig. 5 D and E). These results indicate similar effects of reward on replays of the current trajectory and of other alternative rewarding trajectories.
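
Replay fidelity is quantified here as |r|, the absolute weighted correlation between time and decoded position within a candidate event. The exact procedure is given in SI Appendix; the sketch below assumes the widely used definition in which the decoded posterior over position bins serves as the weights, and in which the sign of r distinguishes forward (positive) from reverse (negative) replay when position bins are ordered along the running direction. Function names and the toy posteriors are hypothetical.

```python
import math


def weighted_correlation(posterior):
    """Weighted correlation between time bin and decoded position bin.

    posterior[t][x]: decoded probability of position bin x in time bin t.
    ASSUMPTION: position bins are ordered along the running direction, so a
    positive r means the event sweeps in the running direction (forward).
    """
    bins = [(t, x, w) for t, row in enumerate(posterior) for x, w in enumerate(row)]
    total = sum(w for _, _, w in bins)
    if total <= 0:
        raise ValueError("posterior carries no probability mass")
    mean_t = sum(w * t for t, _, w in bins) / total
    mean_x = sum(w * x for _, x, w in bins) / total
    cov_tx = sum(w * (t - mean_t) * (x - mean_x) for t, x, w in bins) / total
    var_t = sum(w * (t - mean_t) ** 2 for t, _, w in bins) / total
    var_x = sum(w * (x - mean_x) ** 2 for _, x, w in bins) / total
    if var_t == 0 or var_x == 0:
        raise ValueError("need spread in both time and position")
    return cov_tx / math.sqrt(var_t * var_x)


def classify_replay(posterior):
    """Return (direction, |r|); the sign of r gives the replay direction."""
    r = weighted_correlation(posterior)
    direction = "forward" if r > 0 else "reverse" if r < 0 else "undetermined"
    return direction, abs(r)


# Toy posteriors: a clean diagonal sweep and its mirror image.
forward_event = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
reverse_event = [row[::-1] for row in forward_event]
```

In practice, an event is usually accepted as a replay only after its fit is compared with a shuffle-based null distribution; that step is omitted here.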

Effect of Navigation History on Replay.

Next, we asked whether and how place-cell sequences of the previous and upcoming blocks in a given session (referred to as recent and upcoming trajectories, respectively) are reactivated differently in forward and reverse replays. In this analysis, if a candidate event significantly matched a correct path in an upcoming (or previous) block (a template of place cells determined from forced-choice and correct free-choice trials in that block), it was considered a replay of the upcoming (or recent) trajectory. All other procedures were identical to those used in the analysis of other-trajectory replays. We included only correct trials in this analysis. We also excluded replays whose direction was inconsistent across blocks (e.g., forward for one trajectory and reverse for another; 28 and 46 forward replays for recent and upcoming trajectories, respectively; 53 and 42 reverse replays for recent and upcoming trajectories, respectively). In addition, to minimize overlap between recent and upcoming trajectories, we excluded replays common to both (193 forward and 177 reverse replays; SI Appendix, Fig. S9).
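
The two exclusion rules can be expressed as a small filter applied to each candidate event after template matching. The data layout (a list of (trajectory, direction) pairs, one per block template the event matched) and the function name are hypothetical; only correct-trial events would be passed in, and the significance test that produces the matches is not shown.

```python
def classify_history_replay(matches):
    """Assign a replay to the recent or upcoming trajectory, or return None if excluded.

    matches: list of (trajectory_kind, direction) pairs, one per block template
    the event matched significantly, e.g. [("recent", "forward")].
    trajectory_kind is "recent" or "upcoming"; direction is "forward" or "reverse".
    """
    if not matches:
        return None  # not a replay of any recent or upcoming trajectory
    directions = {direction for _, direction in matches}
    if len(directions) > 1:
        return None  # direction inconsistent across blocks
    kinds = {kind for kind, _ in matches}
    if len(kinds) > 1:
        return None  # common to recent and upcoming trajectories
    return kinds.pop(), directions.pop()
```

For instance, an event matching a previous block in forward order and an upcoming block in reverse order is dropped by the first rule, whereas an event matching both in reverse order is dropped by the second.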

Replay frequency.

The numbers of replays for recent and upcoming trajectories did not differ significantly for either forward or reverse replays (χ² tests; forward replays: 271 and 259, χ² = 0.543, P = 0.461; reverse replays: 220 and 224, χ² = 0.072, P = 0.788; Fig. 6A).

Fig. 6.

Effect of navigation history on replays. Replays for recent (already rewarded) and upcoming rewarding trajectories in a given session were compared using only correct trials. Those replays matching significantly with both recent and upcoming trajectories were excluded from the analysis. (A and B) The number (A) and absolute weighted correlation (|r|) (B; Left, cumulative plot; Right, mean ± SEM) were compared between replays for upcoming and recent trajectories. *P < 0.05 (rank-sum test). (C and D) Activation (Pact, C) and coactivation (Pcoact, D) probabilities of place cells in replays for upcoming and recent trajectories were compared. (Left) Pact (C) and Pcoact (D) of place cells in forward and reverse replays for upcoming and recent trajectories are shown as cumulative plots. (Middle and Right) The normalized difference in Pact (C) or Pcoact (D) between replays for upcoming and recent trajectories (ΔpZhistory) is shown as a cumulative plot (Middle) or mean ± SEM (Right). *P < 0.05, **P < 0.005, ***P < 0.0005 (signed-rank test for difference from 0; rank-sum test for comparisons between forward and reverse replays).

Replay fidelity.

The absolute weighted correlation (|r|) was slightly, but significantly, higher for upcoming than for recent trajectories in forward replays (rank-sum test, z = 1.966, P = 0.049, n = 259 and 271 replays for upcoming and recent trajectories, respectively), but not in reverse replays (z = 0.219, P = 0.827, n = 224 and 220 replays for upcoming and recent trajectories, respectively; Fig. 6B). We also compared place-cell activation (Pact) and coactivation (Pcoact) probabilities between replays of upcoming and recent trajectories by calculating their normalized differences (ΔpZhistory). Both Pact and Pcoact were significantly higher for upcoming than for recent trajectories in forward replays (signed-rank tests; ΔpZhistory for Pact, z = 3.737, P = 1.9 × 10⁻⁴, n = 656 place cells; ΔpZhistory for Pcoact, z = 9.505, P = 2.0 × 10⁻²¹, n = 2220 place-cell pairs; Fig. 6 C and D). This was not the case in reverse replays, in which Pact did not differ significantly and Pcoact was significantly lower for upcoming than for recent trajectories (ΔpZhistory for Pact, z = −0.606, P = 0.544, n = 611 place cells; ΔpZhistory for Pcoact, z = −2.359, P = 0.018, n = 2128 place-cell pairs; Fig. 6 C and D). Moreover, ΔpZhistory was significantly larger in forward than in reverse replays for both Pact and Pcoact (rank-sum tests; ΔpZhistory for Pact, z = 2.946, P = 0.003; ΔpZhistory for Pcoact, z = 8.419, P = 3.8 × 10⁻¹⁷; Fig. 6 C and D). Similar results were obtained with place cells outside the track zone (SI Appendix, Fig. S10), indicating that the effects of navigation history on forward replays are not due to differences in recent navigation experience or in the amount of overlap with the current trajectory. An additional analysis further indicated that the effects of navigation history are not related to trajectory overlap (SI Appendix, Fig. S11). These results indicate more faithful reactivation of place-cell firing for upcoming rewarding than for already rewarded (recent) trajectories in forward replays.
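
Pact and Pcoact can be computed directly from which place cells fire in each replay event. The sketch below assumes that a cell counts as active when it emits at least one spike within the event and that pairs are formed among place cells recorded in the same session; the exact definitions, and the normalization that turns the upcoming-minus-recent difference into ΔpZhistory, are given in SI Appendix and are not reproduced. Function names and the toy events are hypothetical.

```python
from itertools import combinations


def p_act(events, cells):
    """Pact: fraction of replay events in which each cell was active.

    events: list of sets, each holding the IDs of cells active in one event.
    """
    if not events:
        raise ValueError("need at least one replay event")
    n = len(events)
    return {cell: sum(cell in event for event in events) / n for cell in cells}


def p_coact(events, cells):
    """Pcoact: fraction of replay events in which both cells of a pair were active."""
    if not events:
        raise ValueError("need at least one replay event")
    n = len(events)
    return {
        (a, b): sum(a in event and b in event for event in events) / n
        for a, b in combinations(sorted(cells), 2)
    }


def raw_history_difference(upcoming_events, recent_events, cells):
    """Unnormalized upcoming-minus-recent Pcoact difference for each cell pair.

    ASSUMPTION: the paper's ΔpZhistory additionally normalizes this difference
    (SI Appendix); that step is not reproduced here.
    """
    upcoming = p_coact(upcoming_events, cells)
    recent = p_coact(recent_events, cells)
    return {pair: upcoming[pair] - recent[pair] for pair in upcoming}


# Toy example: four replay events over three hypothetical cells.
toy_events = [{"a", "b"}, {"a"}, {"b", "c"}, {"a", "b", "c"}]
```

Per-cell or per-pair differences would then be tested against zero with a signed-rank test, and the forward and reverse distributions compared with a rank-sum test, as described in the text.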

Discussion

In this study, we identified differential effects of reward and navigation history on CA1 forward and reverse replays during the awake state in a spatial sequence memory task. Reward increased the rate of reverse replays and the fidelity of forward replays. In both forward and reverse replays, this enhancing effect of reward was observed not only for the current trajectory but also for other alternative trajectories heading toward a rewarding location. In addition, upcoming rewarding trajectories were reactivated more faithfully than already rewarded trajectories in forward replays.

Previous studies have shown positive effects of reward on SWRs and hippocampal replays. Reward enhances the rate of waking SWRs (wSWRs) and CA3 place-cell coactivity during wSWRs (18). Reward increases the rate of reverse replays during wSWRs (19, 35). Place-cell coactivity during wSWRs is stronger for CA1 neurons with place fields near reward locations than for those with place fields farther away (16). Also, trajectories reconstructed from replays of CA1 place cells are preferentially directed toward previously visited as well as unvisited (but observed) reward locations during wSWRs and sleep-associated SWRs (5, 20, 21, 38). In humans, hippocampal activity patterns for high-reward contexts are preferentially reactivated during postlearning rest, and this reactivation predicts memory retention (39). These studies consistently indicate facilitating effects of reward on hippocampal replays. Consistent with them, we found that reward facilitates both forward and reverse replays, but in different ways. Reward significantly increased the rate of reverse, but not forward, replays, in agreement with previous studies (19, 35). Although reward enhanced the forward replay rate in many sessions, it decreased the rate in others, so its overall effect on forward replay rate was not significant. Conversely, reward significantly enhanced the fidelity of trajectory reactivation in forward, but not reverse, replays. These findings indicate that replay rate and fidelity are differentially modulated by reward, suggesting differences in their underlying neural mechanisms. Changes in replay rate and fidelity may also have different functional consequences. Determining why the rate and fidelity of forward and reverse replays are differently modulated by reward, and how they affect subsequent hippocampal mnemonic processes, should provide important insights into the functions of forward and reverse replays and the neural mechanisms that generate them.

Another finding of our study is that reward enhances hippocampal replays of not only the most recently experienced trajectory but also other trajectories heading toward a rewarding location. Given that SWRs provide favorable conditions for synaptic plasticity (9), this suggests that hippocampal replays help reinforce representations of all possible, rather than only recently experienced, rewarding trajectories. Such reinforcement would support flexible planning of future navigation to a rewarding location. Although it is less obvious how place-cell sequences activated in reverse could strengthen forward trajectory representations, dopamine may allow reverse replays to strengthen forward associations (40, 41). In addition, we found that not all trajectories are reactivated equally in forward replays: upcoming rewarding trajectories are reactivated more faithfully than already rewarded ones. This difference is rather small, so its functional consequences are likely to be subtle, and it is currently unclear why upcoming rewarding trajectories are reactivated in preference to already rewarded ones. Nevertheless, this result may indicate preferential reactivation of more valuable trajectories in forward replays. In our task, the animals may increase the values associated with upcoming trajectories and decrease those associated with recent trajectories, because they must take trajectories other than those already experienced in a given session to obtain a reward. The reactivation of trajectories other than the most recently experienced one has been proposed as one of the mechanisms necessary for maintaining a stable representation of an environment (i.e., a cognitive map) (21). Our results raise the possibility that hippocampal replays contribute to building not simply a spatial map, but a map of potential navigation trajectories and their associated values (i.e., a “value map”). In the future, we hope to test this possibility using behavioral tasks designed to probe the value dependence of hippocampal replays more directly.

How might forward and reverse replays contribute to building a value map? Reverse replays may help reinforce representations of all rewarding trajectories indiscriminately, whereas forward replays may preferentially reinforce representations of high-value trajectories. Alternatively, as proposed previously (5), hippocampal reverse replays may help assign values to hippocampal place cells according to their distances from a rewarding location. We have proposed a model (42) in which value-dependent CA1 place-cell activity during navigation (43, 44) facilitates preferential replay of high-value trajectories during SWRs. Briefly, during navigation, value-dependent CA1 neuronal activity allows activity-dependent synaptic plasticity to preferentially strengthen synapses between CA3 and CA1 neurons that have overlapping place fields near a rewarding location. During subsequent SWR episodes, which occur when inhibitory tone is low and neuromodulatory signals differ from those during navigation (9), CA3 generates diverse sequences. Rewarding sequences are then preferentially replayed in CA1 because CA3-CA1 synapses for place cells near rewarding locations have been enhanced. In this scenario, reverse replays increase the reward values of CA1 place cells with firing fields near a rewarding location, thereby facilitating preferential forward replay of rewarding trajectories during SWRs. In other words, reverse replays would be used to construct a map of CA1 place-cell values, whereas forward replays would be used to construct a map of potential trajectories and their associated values. Further studies are needed to test this admittedly speculative hypothesis and to determine how forward and reverse replays affect hippocampal and extrahippocampal neural circuits to guide adaptive future navigation.

Materials and Methods

Experimental procedures for animal behavior, single-unit recording, and data analysis are described in detail in SI Appendix, Materials and Methods. The experimental protocol was approved by the Ethics Review Committee for Animal Experimentation at the Korea Advanced Institute of Science and Technology.

Data Availability.

The raw data is deposited in Figshare with identifier doi.org/10.6084/m9.figshare.10032866.v2 (45).


Acknowledgments

We thank A. D. Redish for his helpful comments on the initial manuscript. This work was supported by the Research Center Program of the Institute for Basic Science Grant IBS-R002-G1 (to M.W.J.).

Footnotes

The authors declare no competing interest.

This article is a PNAS Direct Submission.

Data deposition: The raw data is deposited in Figshare with identifier doi.org/10.6084/m9.figshare.10032866.v2.

This article contains supporting information online at https://www.pnas.org/lookup/suppl/doi:10.1073/pnas.1912533117/-/DCSupplemental.

References

- 1. O’Keefe J., Dostrovsky J., The hippocampus as a spatial map. Preliminary evidence from unit activity in the freely-moving rat. Brain Res. 34, 171–175 (1971).

- 2. O’Keefe J., Nadel L., The Hippocampus as a Cognitive Map (Clarendon Press, Oxford, 1978).

- 3. Skaggs W. E., McNaughton B. L., Replay of neuronal firing sequences in rat hippocampus during sleep following spatial experience. Science 271, 1870–1873 (1996).

- 4. Lee A. K., Wilson M. A., Memory of sequential experience in the hippocampus during slow wave sleep. Neuron 36, 1183–1194 (2002).

- 5. Foster D. J., Wilson M. A., Reverse replay of behavioural sequences in hippocampal place cells during the awake state. Nature 440, 680–683 (2006).

- 6. Diba K., Buzsáki G., Forward and reverse hippocampal place-cell sequences during ripples. Nat. Neurosci. 10, 1241–1242 (2007).

- 7. O’Neill J., Senior T. J., Allen K., Huxter J. R., Csicsvari J., Reactivation of experience-dependent cell assembly patterns in the hippocampus. Nat. Neurosci. 11, 209–215 (2008).

- 8. Karlsson M. P., Frank L. M., Awake replay of remote experiences in the hippocampus. Nat. Neurosci. 12, 913–918 (2009).

- 9. Buzsáki G., Hippocampal sharp wave-ripple: A cognitive biomarker for episodic memory and planning. Hippocampus 25, 1073–1188 (2015).

- 10. Girardeau G., Benchenane K., Wiener S. I., Buzsáki G., Zugaro M. B., Selective suppression of hippocampal ripples impairs spatial memory. Nat. Neurosci. 12, 1222–1223 (2009).

- 11. Ego-Stengel V., Wilson M. A., Disruption of ripple-associated hippocampal activity during rest impairs spatial learning in the rat. Hippocampus 20, 1–10 (2010).

- 12. Jadhav S. P., Kemere C., German P. W., Frank L. M., Awake hippocampal sharp-wave ripples support spatial memory. Science 336, 1454–1458 (2012).

- 13. Roumis D. K., Frank L. M., Hippocampal sharp-wave ripples in waking and sleeping states. Curr. Opin. Neurobiol. 35, 6–12 (2015).

- 14. Atherton L. A., Dupret D., Mellor J. R., Memory trace replay: The shaping of memory consolidation by neuromodulation. Trends Neurosci. 38, 560–570 (2015).

- 15. Wu C. T., Haggerty D., Kemere C., Ji D., Hippocampal awake replay in fear memory retrieval. Nat. Neurosci. 20, 571–580 (2017).

- 16. Dupret D., O’Neill J., Pleydell-Bouverie B., Csicsvari J., The reorganization and reactivation of hippocampal maps predict spatial memory performance. Nat. Neurosci. 13, 995–1002 (2010).

- 17. Cheng S., Frank L. M., New experiences enhance coordinated neural activity in the hippocampus. Neuron 57, 303–313 (2008).

- 18. Singer A. C., Frank L. M., Rewarded outcomes enhance reactivation of experience in the hippocampus. Neuron 64, 910–921 (2009).

- 19. Ambrose R. E., Pfeiffer B. E., Foster D. J., Reverse replay of hippocampal place cells is uniquely modulated by changing reward. Neuron 91, 1124–1136 (2016).

- 20. Pfeiffer B. E., Foster D. J., Hippocampal place-cell sequences depict future paths to remembered goals. Nature 497, 74–79 (2013).

- 21. Gupta A. S., van der Meer M. A., Touretzky D. S., Redish A. D., Hippocampal replay is not a simple function of experience. Neuron 65, 695–705 (2010).

- 22. Wikenheiser A. M., Redish A. D., The balance of forward and backward hippocampal sequences shifts across behavioral states. Hippocampus 23, 22–29 (2013).

- 23. Ainge J. A., Tamosiunaite M., Woergoetter F., Dudchenko P. A., Hippocampal CA1 place cells encode intended destination on a maze with multiple choice points. J. Neurosci. 27, 9769–9779 (2007).

- 24. Frank L. M., Brown E. N., Wilson M., Trajectory encoding in the hippocampus and entorhinal cortex. Neuron 27, 169–178 (2000).

- 25. Grieves R. M., Wood E. R., Dudchenko P. A., Place cells on a maze encode routes rather than destinations. eLife 5, e15986 (2016).

- 26. Wood E. R., Dudchenko P. A., Robitsek R. J., Eichenbaum H., Hippocampal neurons encode information about different types of memory episodes occurring in the same location. Neuron 27, 623–633 (2000).

- 27. Manns J. R., Howard M. W., Eichenbaum H., Gradual changes in hippocampal activity support remembering the order of events. Neuron 56, 530–540 (2007).

- 28. Mankin E. A., et al., Neuronal code for extended time in the hippocampus. Proc. Natl. Acad. Sci. U.S.A. 109, 19462–19467 (2012).

- 29. Kraus B. J., Robinson R. J. II, White J. A., Eichenbaum H., Hasselmo M. E., Hippocampal “time cells”: Time versus path integration. Neuron 78, 1090–1101 (2013).

- 30. Leutgeb S., et al., Independent codes for spatial and episodic memory in hippocampal neuronal ensembles. Science 309, 619–623 (2005).

- 31. Jensen O., Lisman J. E., Position reconstruction from an ensemble of hippocampal place cells: Contribution of theta phase coding. J. Neurophysiol. 83, 2602–2609 (2000).

- 32. Davidson T. J., Kloosterman F., Wilson M. A., Hippocampal replay of extended experience. Neuron 63, 497–507 (2009).

- 33. Wu X., Foster D. J., Hippocampal replay captures the unique topological structure of a novel environment. J. Neurosci. 34, 6459–6469 (2014).

- 34. Grosmark A. D., Buzsáki G., Diversity in neural firing dynamics supports both rigid and learned hippocampal sequences. Science 351, 1440–1443 (2016).

- 35. Michon F., Sun J. J., Kim C. Y., Ciliberti D., Kloosterman F., Post-learning hippocampal replay selectively reinforces spatial memory for highly rewarded locations. Curr. Biol. 29, 1436–1444.e5 (2019).

- 36. Singer A. C., Carr M. F., Karlsson M. P., Frank L. M., Hippocampal SWR activity predicts correct decisions during the initial learning of an alternation task. Neuron 77, 1163–1173 (2013).

- 37. Jackson J. C., Johnson A., Redish A. D., Hippocampal sharp waves and reactivation during awake states depend on repeated sequential experience. J. Neurosci. 26, 12415–12426 (2006).

- 38. Ólafsdóttir H. F., Barry C., Saleem A. B., Hassabis D., Spiers H. J., Hippocampal place cells construct reward related sequences through unexplored space. eLife 4, e06063 (2015).

- 39. Gruber M. J., Ritchey M., Wang S. F., Doss M. K., Ranganath C., Post-learning hippocampal dynamics promote preferential retention of rewarding events. Neuron 89, 1110–1120 (2016).

- 40. Zhang J. C., Lau P. M., Bi G. Q., Gain in sensitivity and loss in temporal contrast of STDP by dopaminergic modulation at hippocampal synapses. Proc. Natl. Acad. Sci. U.S.A. 106, 13028–13033 (2009).

- 41. Brzosko Z., Zannone S., Schultz W., Clopath C., Paulsen O., Sequential neuromodulation of Hebbian plasticity offers mechanism for effective reward-based navigation. eLife 6, e27756 (2017).

- 42. Jung M. W., Lee H., Jeong Y., Lee J. W., Lee I., Remembering rewarding futures: A simulation-selection model of the hippocampus. Hippocampus 28, 913–930 (2018).

- 43. Lee H., Ghim J. W., Kim H., Lee D., Jung M., Hippocampal neural correlates for values of experienced events. J. Neurosci. 32, 15053–15065 (2012).

- 44. Lee S. H., et al., Neural signals related to outcome evaluation are stronger in CA1 than CA3. Front. Neural Circuits 11, 40 (2017).

- 45. Bhattarai B., Lee J. W., Jung M. W., Supplementary data: Distinct effects of reward and navigation history on hippocampal forward and reverse replays. Figshare. 10.6084/m9.figshare.10032866.v2. Deposited 24 October 2019.
