Abstract

Objectives:

To evaluate the impact of movement and motion-artefact correction systems on CBCT image quality and interpretability of simulated diagnostic tasks for aligned and lateral-offset detectors.

Methods:

A human skull simulating three diagnostic tasks (implant planning in the anterior maxilla, implant planning in the left side of the mandible, and mandibular molar furcation assessment in the right side of the mandible) was mounted on a robot performing six movement types. Four CBCT units were used: Cranex 3Dx (CRA), Orthophos SL (ORT), ProMax 3D Mid (PRO), and X1. Protocols were tested with aligned (CRA, ORT, PRO, and X1) and lateral-offset (CRA and PRO) detectors and two motion-artefact correction systems (PRO and X1). Movements were performed at one moment-in-time (t1) for units with an aligned detector, and at three moments-in-time (t1: first half of the acquisition; t2: second half; t3: both) for units with a lateral-offset detector. In total, 98 volumes were acquired. Images were scored by three observers, blinded to the unit and presence of movement, for motion-related stripe artefacts, overall unsharpness, and interpretability. Fleiss’ κ was used to assess interobserver agreement.

Results:

Interobserver agreement was substantial for all parameters (κ = 0.66–0.68). For aligned detectors, a motion-artefact correction system influenced image interpretability in all diagnostic tasks. For lateral-offset detectors, interpretability varied according to the unit and the moment-in-time at which the movement was performed. The PRO motion-artefact correction system was less effective for the offset detector than for its aligned counterpart.

Conclusion:

Motion-artefact correction systems enhanced image quality and interpretability for units with aligned detectors but were less effective for those with lateral-offset detectors.

Keywords: cone beam CT; patient movement; motion artifacts; image quality

Introduction

Cone beam CT (CBCT) images in dental examinations are strongly affected by patient movement during acquisition, which can result in motion artefacts in the reconstructed volumes.1 Movement is more likely because of the long acquisition time of CBCT, especially in children and in patients with conditions that cause uncontrollable movement, such as Parkinson’s disease.2

The explanation for such artefacts lies in the CBCT reconstruction algorithm, which assumes a completely stationary geometry across all basis images.3 When the object of study (i.e. the patient) moves during the examination, the pixel intensities representing the same area are backprojected into different positions, resulting in artefacts in the reconstructed images.4,5 These are often visible as stripes, double contours, and overall unsharpness.6
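The inconsistency described above can be illustrated with a minimal sketch. It assumes a simplified 2D parallel-beam model (CBCT units use a cone-beam geometry), and the point position, angular sampling, and 3 mm shift are illustrative values, not acquisition settings from any of the tested units.

```python
import math

def detector_position(x_mm, y_mm, angle_deg):
    """Detector coordinate (mm) where a point projects in a 2D parallel-beam model."""
    theta = math.radians(angle_deg)
    return x_mm * math.cos(theta) + y_mm * math.sin(theta)

def sinogram_trace(point, angles_deg, shift=(0.0, 0.0), move_at_deg=None):
    """Detector positions of one point over a rotation.

    If move_at_deg is given, the point is displaced by `shift` (mm) for every
    projection at or after that angle and does not return to its start position.
    """
    trace = []
    for angle in angles_deg:
        x, y = point
        if move_at_deg is not None and angle >= move_at_deg:
            x, y = x + shift[0], y + shift[1]
        trace.append(detector_position(x, y, angle))
    return trace

angles = list(range(0, 360, 30))
still = sinogram_trace((20.0, 0.0), angles)
moved = sinogram_trace((20.0, 0.0), angles, shift=(3.0, 0.0), move_at_deg=180)

# Difference between where the point was recorded and where a reconstruction
# that assumes a stationary object expects it to be.
mismatch = [round(m - s, 2) for m, s in zip(moved, still)]
print(mismatch)
```

Projections taken before the movement agree with the stationary model, while those taken afterwards are offset by an amount that depends on the projection angle, so the same structure is backprojected to different positions.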

The method used for CBCT image acquisition (e.g. the detector position) may affect the formation of motion artefacts. Some manufacturers use a lateral-offset detector to reduce the unit’s production costs, since this allows the acquisition of a field-of-view (FOV) larger than the sensor.5,7,8 In this case, the centre of the volume is acquired in all projections, while the periphery is present in only some projections. When a lateral-offset detector is used and the patient moves, different regions of the FOV may be affected by artefacts, depending on which region was being acquired during the movement.8
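To make the coverage argument concrete, the sketch below estimates the fraction of a full rotation in which a point at a given distance from the rotation axis falls inside an offset detector. It assumes a simplified 2D parallel-beam model, and the detector extents (`overlap_mm`, `reach_mm`) are hypothetical values, not specifications of CRA or PRO.

```python
import math

def fraction_of_projections(radius_mm, overlap_mm, reach_mm):
    """Fraction of a 360-degree rotation in which a point is inside the detector.

    Simplified 2D parallel-beam model: the detector spans [-overlap_mm, reach_mm]
    around the projected rotation axis, with overlap_mm < reach_mm (offset detector).
    """
    if radius_mm > reach_mm:
        raise ValueError("point lies outside the reconstructed field-of-view")
    if radius_mm <= overlap_mm:
        return 1.0  # central region: visible in every projection
    # The projected coordinate is r*cos(a); the point is visible while
    # r*cos(a) >= -overlap_mm, i.e. for |a| <= pi - acos(overlap/r).
    return 1.0 - math.acos(overlap_mm / radius_mm) / math.pi

# Hypothetical detector: 10 mm across the axis on one side, 80 mm on the other.
for radius in (5.0, 10.0, 40.0, 80.0):
    share = fraction_of_projections(radius, overlap_mm=10.0, reach_mm=80.0)
    print(f"r = {radius:4.0f} mm -> seen in {share:.0%} of projections")
```

Under these assumptions, points near the axis are seen in every projection, while peripheral points are seen in little more than half of them, which is why a movement during one part of the rotation can affect some regions and spare others.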

Methods for movement detection and quantification during CBCT acquisition have been proposed, either directly, by using an actual head tracker,9,10 or indirectly, by using optical flow measurements (i.e. how pixel value patterns move in subsequent projection image sections) as the basis for motion tracking.11 Based on these data, for some units the volumes can be corrected using an iterative reconstruction algorithm that adjusts for the movements, reducing the motion artefacts visible in the final image.9 However, the available motion-artefact correction systems were tested only for acquisitions based on an aligned detector.9
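As a rough illustration of the indirect approach, the sketch below estimates how far an intensity pattern has moved between two one-dimensional profiles by exhaustive block matching. This is a simplified stand-in for optical flow, not the algorithm used by any commercial system (which the text does not describe), and the profiles are made-up values. In a real acquisition, consecutive projections also differ because the source rotates, which this sketch ignores.

```python
def estimate_shift(reference, current, max_shift=5):
    """Integer shift (in pixels) that best aligns `current` with `reference`.

    Exhaustive block matching: try each candidate shift and keep the one with
    the smallest mean squared difference over the overlapping samples.
    """
    best_shift, best_error = 0, float("inf")
    n = len(reference)
    for shift in range(-max_shift, max_shift + 1):
        pairs = [
            (reference[i], current[i + shift])
            for i in range(n)
            if 0 <= i + shift < n
        ]
        error = sum((a - b) ** 2 for a, b in pairs) / len(pairs)
        if error < best_error:
            best_shift, best_error = shift, error
    return best_shift

profile = [0, 0, 1, 4, 9, 4, 1, 0, 0, 0, 0, 0]
shifted = [0, 0, 0, 0, 1, 4, 9, 4, 1, 0, 0, 0]  # same pattern moved 2 pixels
print(estimate_shift(profile, shifted))  # prints 2
```

Because the estimate relies only on intensity differences, any intensity variation unrelated to movement would also influence the result.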

The aims of the present study were to evaluate the impact of head motion artefacts and two motion-artefact correction systems on CBCT image quality and interpretability, for three simulated diagnostic tasks, when using aligned and lateral-offset detectors.

Methods and materials

CBCT units

Four CBCT units were used: Cranex 3Dx (CRA, Soredex Oy, Finland), Orthophos SL 3D (ORT, Sirona Dental Systems GmbH, Germany), ProMax 3D Mid (PRO, Planmeca Oy, Finland) and X1 (3Shape, Denmark). In all these units, images are acquired with the patient standing up.

Eight image-acquisition protocols (Table 1) were used. The default mA and kVp of each CBCT unit were used, with the smallest voxel size available for the selected FOV. Two units (Cranex 3Dx and ProMax 3D Mid) had protocols based on a lateral-offset detector, while the other two used only an aligned detector. Both units with a lateral-offset detector follow a similar approach: smaller FOVs are acquired with an aligned detector (up to 8 × 8 cm in CRA and 10 × 10 cm in PRO), while larger FOVs are acquired with a lateral-offset detector. For the latter, CRA performs two partial rotations, with the detector offset to the right side in the first rotation and to the left side in the second. PRO performs a 360° rotation with the detector offset to the right side.

Table 1.

CBCT units and protocols used for volume acquisition

| Unit | Field-of-view (diameter × height, cm) | Detector position (offset type) | Motion-artefact correction | kVp | mA |
|---|---|---|---|---|---|
| Aligned detector | | | | | |
| Cranex 3Dx (CRA) | 8 × 8 | Aligned | No | 89.8 | 6 |
| Orthophos SL 3D (ORT) | 8 × 8 | Aligned | No | 85.0 | 6 |
| ProMax 3D Mid (PRO) | 10 × 10 | Aligned | No | 90.0 | 10 |
| ProMax 3D Mid (PRO) | 10 × 10 | Aligned | Yes (CALM) | 90.0 | 10 |
| X1 | 8 × 8 | Aligned | Yes (head tracker) | 90.0 | 12 |
| Lateral-offset detector | | | | | |
| Cranex 3Dx (CRA) | 15 × 8 | Offset (two rotations) | No | 89.8 | 5 |
| ProMax 3D Mid (PRO) | 16 × 10 | Offset (360°) | No | 90.0 | 10 |
| ProMax 3D Mid (PRO) | 16 × 10 | Offset (360°) | Yes (CALM) | 90.0 | 10 |

Two of the units (PRO and X1) have motion-artefact correction systems. In PRO, the correction system is named “CALM” and can be disabled; therefore, images were reconstructed and evaluated in both conditions (i.e. with motion-artefact correction turned ON and OFF). X1 requires the use of a head-tracking device and does not allow images to be acquired without it, so only volumes with the motion-artefact correction system were obtained.

Experimental setup and robot programming

A partially dentate human skull embedded in wax to simulate soft-tissues12 was used in this study. Three regions-of-interest were selected in this skull according to their position in the FOV: the anterior maxilla and the left and right sides of the posterior mandible.

The skull was mounted on a robot (UR10, Universal Robots, Odense, Denmark) programmed to execute pre-defined movements with precise control of angular position, velocity, and acceleration.9 Six head movement types (Table 2) were selected, based on a previous study.9 The movements can be considered of high intensity, chosen to better identify the affected regions: a movement pattern not returning to the initial position, a distance of 3 mm, and a speed of 5 mm/s. Two movement types were categorized as uniplanar or non-complex (head anteroposterior translation and head lifting) and four as multiplanar or complex (head anteroposterior translation + lifting, lateral rotation, 2 s tremor, and continuous tremor). In the image-acquisition protocols with an aligned detector, the movements were executed at one moment-in-time (t1), when the X-ray source was behind the skull. In the units with a lateral-offset detector, the movements were executed at one of three moments-in-time: t1, when the source was behind the skull; t2, when the source was in front of the skull for PRO (due to the 360° rotation) or behind the skull in the second rotation for CRA; and t3, at both t1 and t2, with the movement executed backwards at t2. To ensure consistency among the acquisitions, t1, t2, and t3 were adjusted for each unit individually and programmed in the robot. The basis images showing the moment the movements started in all units are shown in Figure 1. The continuous tremor was an exception: it ran during the full acquisition for aligned detectors and for lateral-offset detectors at t3, and during the entire first (t1) or second (t2) half of the acquisition for lateral-offset detector units.
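The stated distance and speed imply how long a single movement lasts, which helps when relating a movement to the share of projections it affects. The short sketch below works this out; the scan time used for the tremor example is a hypothetical value, since acquisition times are not listed for the protocols.

```python
import math

DISTANCE_MM = 3.0          # movement distance stated in the text
SPEED_MM_PER_S = 5.0       # movement speed stated in the text
TREMOR_FREQUENCY_HZ = 6.0  # continuous tremor frequency listed in Table 2

def movement_duration_s(distance_mm, speed_mm_per_s):
    """Time needed to cover the distance at constant speed."""
    return distance_mm / speed_mm_per_s

def tremor_cycles(exposure_time_s, frequency_hz=TREMOR_FREQUENCY_HZ):
    """Number of tremor cycles completed during an exposure."""
    return exposure_time_s * frequency_hz

duration = movement_duration_s(DISTANCE_MM, SPEED_MM_PER_S)
print(f"a single 3 mm movement lasts {duration:.1f} s")

assumed_scan_time_s = 15.0  # hypothetical scan time, for illustration only
print(f"continuous tremor: {tremor_cycles(assumed_scan_time_s):.0f} cycles in {assumed_scan_time_s:.0f} s")
```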

Table 2.

Movements executed by the robot

| No. | Movement type |
|---|---|
| 1 | Head anteroposterior translation |
| 2 | Head lifting |
| 3 | Head anteroposterior translation + head lifting (two separate movements) |
| 4 | Head lateral rotation |
| 5 | Tremor lasting 2 s |
| 6 | Continuous tremor (6 Hz) |
| 0 | Still: no movement (control) |

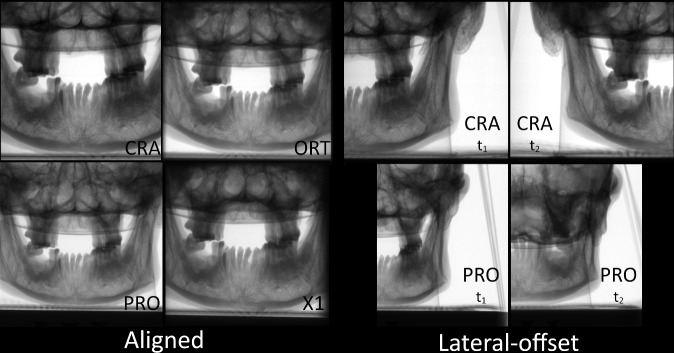

Figure 1.

Projection images showing the moment-in-time at which the movements started, for all movement types (except continuous tremor) and all units.

Image acquisition

In total, 98 volumes were obtained and evaluated: seven volumes for each protocol with an aligned detector (still + six movement types) and 21 volumes for each protocol with a lateral-offset detector (still + six movement types, for t1, t2, and t3). The FOV was chosen according to two criteria: (1) for units offering both aligned and lateral-offset acquisitions, the FOV height was kept the same for both; and (2) a comparable FOV was used for all units. PRO was an exception to the second criterion: its smallest lateral-offset FOV is 16 × 10 cm, so a 10 × 10 cm FOV was chosen for the aligned acquisitions, following criterion 1. PRO uses a motion-artefact correction system based on an iterative projection reconstruction and does not need an external apparatus to work. PRO images were acquired only once and reconstructed with and without the motion-artefact correction system, resulting in four sets of volumes (two for aligned, and two for lateral-offset).
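The total of 98 follows directly from the protocol list in Table 1 and the counts given above; the sketch below reproduces the arithmetic. The protocol labels are shorthand introduced here for illustration.

```python
# Protocols from Table 1; PRO with and without CALM are counted separately
# because each reconstruction yields its own set of volumes.
aligned_protocols = [
    "CRA 8x8", "ORT 8x8", "PRO 10x10 without CALM", "PRO 10x10 with CALM", "X1 8x8",
]
offset_protocols = ["CRA 15x8", "PRO 16x10 without CALM", "PRO 16x10 with CALM"]

MOVEMENT_TYPES = 6      # Table 2, excluding the still control
MOMENTS_IN_TIME = 3     # t1, t2, t3 (lateral-offset protocols only)

volumes_per_aligned = 1 + MOVEMENT_TYPES                      # still + six movements
volumes_per_offset = (1 + MOVEMENT_TYPES) * MOMENTS_IN_TIME   # repeated for t1, t2, t3

total_aligned = len(aligned_protocols) * volumes_per_aligned
total_offset = len(offset_protocols) * volumes_per_offset
total_volumes = total_aligned + total_offset

print(total_aligned, total_offset, total_volumes)  # prints 35 63 98
```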

Image evaluation

All image volumes were anonymized, and observers were blinded to the unit, the motion-artefact correction system, and the movement performed. Dedicated software (OnDemand3D, CyberMed, South Korea) was used to evaluate the images in a dimly lit room on a large (60-inch) Full HD (1920 × 1080 pixels) monitor.

Three diagnostic tasks of clinical relevance in dentistry were simulated. The first task, implant planning in the anterior region of the upper arch (IAU), was an edentulous region for implant and/or bone graft planning in the maxilla, located in the anterior part of the FOV. For this task, observers were to focus on the contours of the bone tissue and the visibility of the nasopalatine canal. The second task, implant planning on the left side of the lower arch (ILL), was an edentulous region in the mandible located on the left side of the FOV, in which the observers were to focus on the contours of the bone tissue and the visibility of the mandibular canal. The third task, molar furcation on the right side of the lower arch (MRL), was a region with interradicular bone loss (furcation involvement) in the lower first molar on the right side of the FOV. For this task, the observers were to focus on identifying both the radicular anatomy and the bone contours. Together, the three tasks formed a triangle, with one task at each vertex, and most movements took place at the exact time the rotation centre intersected the IAU region (X-ray source behind or in front of the skull).

Three observers with experience in assessing images with patient motion artefacts scored the images individually but in the same session. For each diagnostic task, observers scored three parameters: presence of stripes (0 = no stripes or enamel stripes; 1 = movement stripes), overall unsharpness (0 = none or mild, bony and dental contours are easily discernible; 1 = moderate to severe, bony and dental contours are not discernible, sometimes with double contours), and image interpretability (0 = interpretable; 1 = not interpretable). The regions-of-interest did not have any metal or other high-density materials that could generate other types of artefacts. After opening the volumes, images of the IAU region were observed in all three main reconstruction planes (axial, sagittal, and coronal), while the observers gave an overall score for the three evaluated parameters. Then, the planes were re-adjusted to evaluate ILL and then MRL. This was repeated for all image volumes, with a limit of 15 volumes per session to avoid observer fatigue.

Data treatment

The scores of the three observers were tabulated and evaluated using dedicated software SPSS (IBM Corp., New York, NY; formerly SPSS Inc., Chicago, IL) and Minitab (Minitab, LLC, State College, PA). Interobserver agreement, considering the three observers, was measured by Fleiss' κ. The findings for all observers were summarized and a cross-table was made showing the consensus of the findings between the presence of movement stripes/presence of unsharpness/not interpretable images and the CBCT unit/movement characteristics (i.e. movement type, detector offset, and moment) for each task. Data were reported as percentage and agreement values.
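For readers unfamiliar with the statistic, the sketch below shows one way to compute Fleiss' κ for binary scores from a fixed number of observers. It is a plain-Python illustration of the standard formula, not the SPSS or Minitab procedure used in the study, and the example ratings are invented.

```python
def fleiss_kappa(ratings, categories=(0, 1)):
    """Fleiss' kappa for items each rated by the same number of observers.

    `ratings` is a list of per-item lists, e.g. [[0, 1, 1], [0, 0, 0], ...].
    Undefined (division by zero) when every rating falls in a single category.
    """
    n_items = len(ratings)
    n_raters = len(ratings[0])
    if any(len(item) != n_raters for item in ratings):
        raise ValueError("every item needs the same number of ratings")

    # counts[i][j]: how many observers assigned item i to category j
    counts = [[item.count(c) for c in categories] for item in ratings]

    # Observed agreement: mean proportion of agreeing observer pairs per item.
    p_items = [
        (sum(n * n for n in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    p_observed = sum(p_items) / n_items

    # Chance agreement from the overall share of each category.
    total = n_items * n_raters
    p_categories = [
        sum(row[j] for row in counts) / total for j in range(len(categories))
    ]
    p_expected = sum(p * p for p in p_categories)

    return (p_observed - p_expected) / (1 - p_expected)

# Invented scores from three observers for four volumes (not study data).
example = [[1, 1, 1], [0, 0, 0], [1, 1, 0], [0, 0, 1]]
print(round(fleiss_kappa(example), 3))
```

A value of 1 indicates perfect agreement and 0 indicates agreement no better than chance.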

Results

Interobserver agreement was substantial13 for all evaluated parameters: presence of stripe artefacts (0.66), overall unsharpness (0.67), and image interpretability (0.68).

All images acquired without movement (“still”) were scored by all observers as interpretable and did not present movement stripes or unsharpness for any protocol. Therefore, only images acquired with movements are described further for those parameters. Table 3 summarizes the percentage of images scored with movement stripes, overall unsharpness, and as non-interpretable, according to the diagnostic task and imaging protocol. The score distribution (considering the consensus among the three observers) for the different diagnostic tasks and imaging protocols is presented in Tables 4 and 5. Figures 2–4 show examples of the images.
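The text does not state how the consensus among the three observers was derived. One simple possibility, shown here purely as an assumption, is a majority vote over the binary scores; the example scores are hypothetical.

```python
def consensus_score(observer_scores):
    """Majority value among an odd number of binary scores (0 or 1).

    Majority voting is an assumption made for this sketch; the study does not
    specify the consensus rule.
    """
    if len(observer_scores) % 2 == 0:
        raise ValueError("an odd number of observers avoids ties")
    if any(score not in (0, 1) for score in observer_scores):
        raise ValueError("scores must be 0 or 1")
    return int(sum(observer_scores) > len(observer_scores) / 2)

# Hypothetical scores from three observers for one volume and one task.
scores = {
    "stripes": [1, 1, 0],
    "unsharpness": [0, 1, 0],
    "non_interpretable": [0, 0, 0],
}
consensus = {parameter: consensus_score(values) for parameter, values in scores.items()}
print(consensus)
```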

Table 3.

Percentage of images, acquired with movement, scored with motion-related stripes, unsharpness, and as non-interpretable, according to the acquisition protocol and diagnostic task

| Unit | Moment-in-time | IAU: Stripes (%) | IAU: Unsharpness (%) | IAU: Non-interpretable (%) | ILL: Stripes (%) | ILL: Unsharpness (%) | ILL: Non-interpretable (%) | MRL: Stripes (%) | MRL: Unsharpness (%) | MRL: Non-interpretable (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| Aligned detector | | | | | | | | | | |
| CRA | t1 | 100 | 100 | 88.9 | 88.9 | 77.8 | 55.6 | 94.4 | 94.4 | 83.3 |
| ORT | t1 | 100 | 94.4 | 88.9 | 83.3 | 83.3 | 61.1 | 88.9 | 83.3 | 55.6 |
| PROwo | t1 | 94.4 | 88.9 | 83.3 | 94.4 | 72.2 | 55.6 | 100 | 94.4 | 66.7 |
| PROwi | t1 | 88.9 | 44.4 | 33.3 | 61.1 | 44.4 | 16.7 | 83.3 | 50.0 | 16.7 |
| X1wi | t1 | 5.6 | 0 | 0 | 11.1 | 0 | 0 | 11.1 | 0 | 0 |
| Lateral-offset detector | | | | | | | | | | |
| CRA | t1 | 100 | 94.4 | 94.4 | 66.7 | 22.2 | 5.6 | 100 | 100 | 77.8 |
| CRA | t2 | 66.7 | 33.3 | 16.7 | 77.8 | 50.0 | 22.2 | 5.6 | 11.1 | 0 |
| CRA | t3 | 100 | 94.4 | 88.9 | 100 | 83.3 | 72.2 | 100 | 94.4 | 72.2 |
| PROwo | t1 | 88.9 | 94.4 | 77.8 | 61.1 | 44.4 | 22.2 | 100 | 94.4 | 66.7 |
| PROwo | t2 | 66.7 | 61.1 | 61.1 | 83.3 | 77.8 | 77.8 | 83.3 | 77.8 | 55.6 |
| PROwo | t3 | 83.3 | 22.2 | 22.2 | 88.9 | 61.1 | 61.1 | 88.9 | 33.3 | 27.8 |
| PROwi | t1 | 100 | 94.4 | 61.1 | 72.2 | 38.9 | 11.1 | 100 | 83.3 | 61.1 |
| PROwi | t2 | 94.4 | 77.8 | 55.6 | 94.4 | 66.7 | 50.0 | 94.4 | 72.2 | 61.1 |
| PROwi | t3 | 77.8 | 38.9 | 33.3 | 83.3 | 55.6 | 16.7 | 61.1 | 38.9 | 22.2 |

IAU, implant planning in the anterior region of the upper arch; ILL, implant planning in the left side of the lower arch; MRL, molar furcation assessment in the right side of the lower arch; PROwo and PROwi, PRO without and with motion-artefact correction; X1wi, X1 with motion-artefact correction.
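Every percentage in Table 3 is a multiple of 1/18, which is consistent with each cell pooling 18 binary scores (six movement types × three observers). This denominator is an inference from the values, not something stated in the text. Under that assumption, the percentages can be reproduced as follows; the example score list is invented.

```python
def percent_positive(scores):
    """Percentage of binary scores equal to 1, rounded to one decimal."""
    return round(100 * sum(scores) / len(scores), 1)

SCORES_PER_CELL = 6 * 3  # assumed: six movement types x three observers

# Invented example: 16 of 18 scores positive.
example_scores = [1] * 16 + [0] * 2
print(percent_positive(example_scores))  # prints 88.9

# Distinct percentages that appear in Table 3.
table3_values = [0, 5.6, 11.1, 16.7, 22.2, 27.8, 33.3, 38.9, 44.4, 50.0,
                 55.6, 61.1, 66.7, 72.2, 77.8, 83.3, 88.9, 94.4, 100]
reachable = {round(100 * k / SCORES_PER_CELL, 1) for k in range(SCORES_PER_CELL + 1)}
print(all(value in reachable for value in table3_values))  # prints True
```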

Table 4.

The observers’ consensus scores regarding the presence of movement stripes (filled circle, present; empty circle, absent), overall unsharpness (filled circle, present; empty circle, absent), and image interpretability (filled circle, non-interpretable; empty circle, interpretable), according to movement pattern and regions evaluated for protocols with an aligned detector

| Type | Stripes: CRA | Stripes: ORT | Stripes: PROwo | Stripes: PROwi | Stripes: X1wi | Unsharpness: CRA | Unsharpness: ORT | Unsharpness: PROwo | Unsharpness: PROwi | Unsharpness: X1wi | Non-interpretable: CRA | Non-interpretable: ORT | Non-interpretable: PROwo | Non-interpretable: PROwi | Non-interpretable: X1wi |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| IAU: implant planning in the anterior region of the upper arch | | | | | | | | | | | | | | | |
| AP translation | ● | ● | ● | ● | ○ | ● | ● | ● | ○ | ○ | ● | ● | ● | ○ | ○ |
| Head lifting | ● | ● | ● | ● | ○ | ● | ● | ● | ○ | ○ | ● | ○ | ● | ○ | ○ |
| AP translation + Head lifting | ● | ● | ● | ● | ○ | ● | ● | ● | ● | ○ | ● | ● | ● | ○ | ○ |
| Lateral rotation | ● | ● | ● | ● | ○ | ● | ● | ● | ○ | ○ | ○ | ● | ○ | ○ | ○ |
| Tremor | ● | ● | ● | ● | ○ | ● | ● | ● | ○ | ○ | ● | ● | ● | ○ | ○ |
| Continuous tremor | ● | ● | ● | ● | ○ | ● | ● | ● | ● | ○ | ● | ● | ● | ● | ○ |
| Still | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ |
| ILL: implant planning in the left side of the lower arch | | | | | | | | | | | | | | | |
| AP translation | ● | ● | ● | ○ | ○ | ○ | ● | ● | ○ | ○ | ○ | ○ | ○ | ○ | ○ |
| Head lifting | ● | ● | ● | ○ | ○ | ● | ● | ● | ● | ○ | ○ | ○ | ○ | ○ | ○ |
| AP translation + Head lifting | ● | ● | ● | ● | ○ | ● | ● | ● | ● | ○ | ● | ● | ● | ○ | ○ |
| Lateral rotation | ● | ● | ● | ● | ○ | ● | ● | ● | ○ | ○ | ○ | ○ | ○ | ○ | ○ |
| Tremor | ● | ● | ● | ● | ○ | ● | ● | ○ | ○ | ○ | ● | ● | ○ | ○ | ○ |
| Continuous tremor | ● | ● | ● | ● | ● | ● | ● | ● | ● | ○ | ● | ● | ● | ○ | ○ |
| Still | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ |
| MRL: molar furcation assessment in the right side of the lower arch | | | | | | | | | | | | | | | |
| AP translation | ● | ● | ● | ● | ○ | ● | ● | ● | ○ | ○ | ○ | ○ | ○ | ○ | ○ |
| Head lifting | ● | ● | ● | ○ | ○ | ● | ○ | ● | ○ | ○ | ● | ○ | ● | ○ | ○ |
| AP translation + Head lifting | ● | ● | ● | ● | ○ | ● | ● | ● | ● | ○ | ● | ● | ● | ○ | ○ |
| Lateral rotation | ● | ● | ● | ● | ○ | ● | ● | ● | ○ | ○ | ● | ○ | ○ | ○ | ○ |
| Tremor | ● | ● | ● | ● | ○ | ● | ● | ● | ○ | ○ | ● | ● | ● | ○ | ○ |
| Continuous tremor | ● | ● | ● | ● | ● | ● | ● | ● | ● | ○ | ● | ● | ● | ○ | ○ |
| Still | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ |

Table 5.

The observers’ consensus scores regarding the presence of movement stripes (filled circle, present; empty circle, absent), overall unsharpness (filled circle, present; empty circle, absent), and image interpretability (filled circle, non-interpretable; empty circle, interpretable), according to movement pattern and regions evaluated for protocols with a lateral-offset detector

| Type | Moment-in-time | Stripes: CRA | Stripes: PROwo | Stripes: PROwi | Unsharpness: CRA | Unsharpness: PROwo | Unsharpness: PROwi | Non-interpretable: CRA | Non-interpretable: PROwo | Non-interpretable: PROwi |
|---|---|---|---|---|---|---|---|---|---|---|
| IAU: implant planning in the anterior region of the upper arch | | | | | | | | | | |
| AP translation | t1 | ● | ● | ● | ● | ● | ● | ● | ● | ● |
| AP translation | t2 | ○ | ○ | ● | ○ | ○ | ● | ○ | ○ | ○ |
| AP translation | t3 | ● | ● | ○ | ● | ○ | ○ | ● | ○ | ○ |
| Head lifting | t1 | ● | ● | ● | ● | ● | ● | ● | ● | ● |
| Head lifting | t2 | ○ | ○ | ● | ○ | ○ | ● | ○ | ○ | ● |
| Head lifting | t3 | ● | ● | ● | ● | ○ | ○ | ● | ○ | ○ |
| AP translation + Head lifting | t1 | ● | ● | ● | ● | ● | ● | ● | ● | ● |
| AP translation + Head lifting | t2 | ● | ● | ● | ○ | ● | ● | ○ | ● | ● |
| AP translation + Head lifting | t3 | ● | ● | ● | ● | ○ | ● | ● | ○ | ● |
| Lateral rotation | t1 | ● | ● | ● | ● | ● | ● | ● | ● | ○ |
| Lateral rotation | t2 | ● | ● | ● | ● | ● | ● | ○ | ● | ○ |
| Lateral rotation | t3 | ● | ● | ● | ● | ○ | ○ | ● | ○ | ○ |
| Tremor | t1 | ● | ● | ● | ● | ● | ● | ● | ● | ● |
| Tremor | t2 | ● | ● | ● | ○ | ● | ● | ○ | ● | ● |
| Tremor | t3 | ● | ● | ● | ● | ○ | ○ | ● | ○ | ○ |
| Continuous tremor | t1 | ● | ● | ● | ● | ● | ● | ● | ● | ○ |
| Continuous tremor | t2 | ● | ● | ● | ● | ● | ○ | ● | ● | ○ |
| Continuous tremor | t3 | ● | ● | ● | ● | ● | ● | ● | ● | ● |
| Still | t1 | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ |
| Still | t2 | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ |
| Still | t3 | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ |
| ILL: implant planning in the left side of the lower arch | | | | | | | | | | |
| AP translation | t1 | ● | ● | ● | ○ | ● | ● | ○ | ○ | ○ |
| AP translation | t2 | ○ | ○ | ● | ○ | ○ | ○ | ○ | ○ | ○ |
| AP translation | t3 | ● | ● | ● | ○ | ○ | ○ | ○ | ○ | ○ |
| Head lifting | t1 | ● | ● | ● | ○ | ● | ● | ○ | ● | ● |
| Head lifting | t2 | ● | ● | ● | ● | ● | ● | ○ | ● | ● |
| Head lifting | t3 | ● | ● | ● | ● | ● | ○ | ● | ● | ○ |
| AP translation + Head lifting | t1 | ● | ● | ● | ● | ● | ○ | ○ | ○ | ○ |
| AP translation + Head lifting | t2 | ● | ● | ● | ● | ● | ● | ○ | ● | ● |
| AP translation + Head lifting | t3 | ● | ● | ● | ● | ○ | ● | ● | ○ | ○ |
| Lateral rotation | t1 | ● | ● | ● | ● | ● | ○ | ○ | ○ | ○ |
| Lateral rotation | t2 | ● | ● | ● | ● | ● | ● | ○ | ● | ○ |
| Lateral rotation | t3 | ● | ● | ● | ● | ● | ● | ○ | ● | ○ |
| Tremor | t1 | ● | ● | ● | ○ | ○ | ○ | ○ | ○ | ○ |
| Tremor | t2 | ● | ● | ● | ○ | ● | ● | ○ | ● | ● |
| Tremor | t3 | ● | ● | ● | ● | ● | ● | ● | ● | ○ |
| Continuous tremor | t1 | ○ | ○ | ● | ○ | ○ | ● | ○ | ○ | ○ |
| Continuous tremor | t2 | ● | ● | ● | ● | ● | ○ | ● | ● | ○ |
| Continuous tremor | t3 | ● | ● | ● | ● | ● | ● | ● | ● | ● |
| Still | t1 | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ |
| Still | t2 | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ |
| Still | t3 | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ |
| MRL: molar furcation assessment in the right side of the lower arch | | | | | | | | | | |
| AP translation | t1 | ● | ● | ● | ● | ● | ● | ○ | ○ | ● |
| AP translation | t2 | ○ | ○ | ● | ○ | ○ | ● | ○ | ○ | ● |
| AP translation | t3 | ● | ● | ○ | ● | ○ | ○ | ○ | ○ | ○ |
| Head lifting | t1 | ● | ● | ● | ● | ● | ● | ● | ● | ● |
| Head lifting | t2 | ○ | ● | ● | ○ | ● | ● | ○ | ● | ● |
| Head lifting | t3 | ● | ● | ○ | ● | ● | ○ | ● | ● | ○ |
| AP translation + Head lifting | t1 | ● | ● | ● | ● | ● | ● | ● | ● | ● |
| AP translation + Head lifting | t2 | ○ | ● | ● | ○ | ● | ● | ○ | ● | ● |
| AP translation + Head lifting | t3 | ● | ● | ● | ● | ○ | ● | ● | ○ | ○ |
| Lateral rotation | t1 | ● | ● | ● | ● | ● | ○ | ● | ○ | ○ |
| Lateral rotation | t2 | ○ | ● | ● | ○ | ● | ● | ○ | ○ | ○ |
| Lateral rotation | t3 | ● | ● | ○ | ● | ○ | ○ | ○ | ○ | ○ |
| Tremor | t1 | ● | ● | ● | ● | ● | ● | ● | ● | ○ |
| Tremor | t2 | ○ | ● | ● | ○ | ● | ● | ○ | ● | ● |
| Tremor | t3 | ● | ● | ● | ● | ○ | ○ | ● | ○ | ○ |
| Continuous tremor | t1 | ● | ● | ● | ● | ● | ● | ● | ● | ● |
| Continuous tremor | t2 | ○ | ● | ● | ● | ● | ○ | ○ | ○ | ○ |
| Continuous tremor | t3 | ● | ● | ● | ● | ● | ● | ● | ● | ● |
| Still | t1 | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ |
| Still | t2 | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ |
| Still | t3 | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ | ○ |

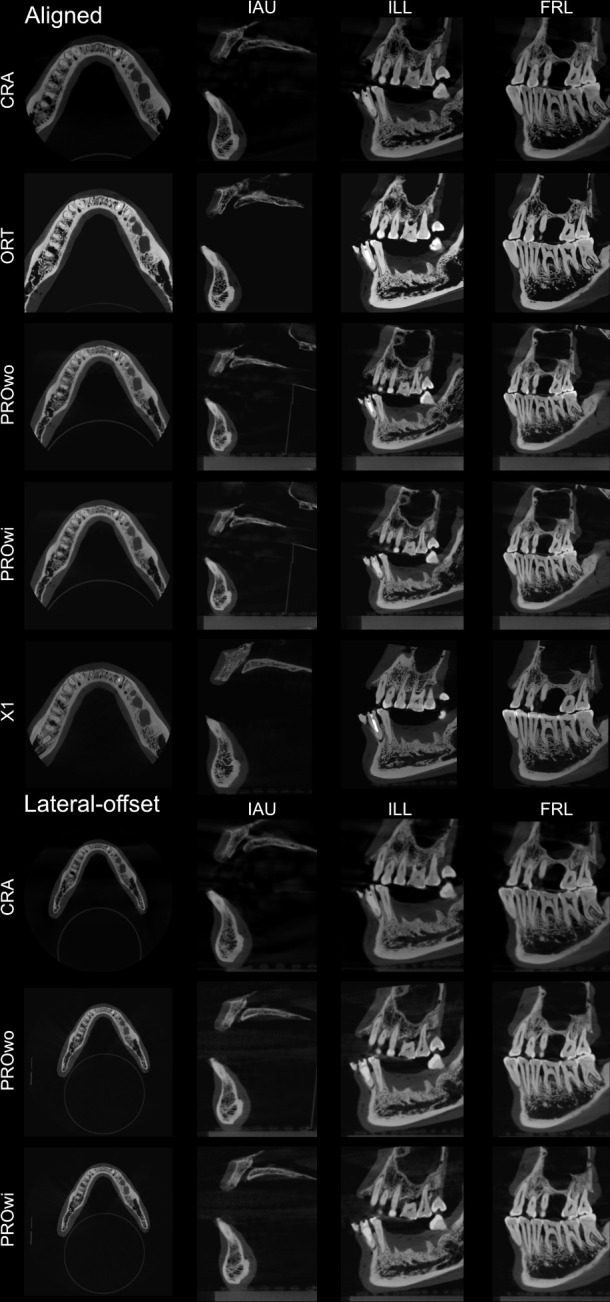

Figure 2.

Representative images of the “control” (still) group at t1 showing the entire FOV acquired and images of the evaluated tasks for all the protocols.

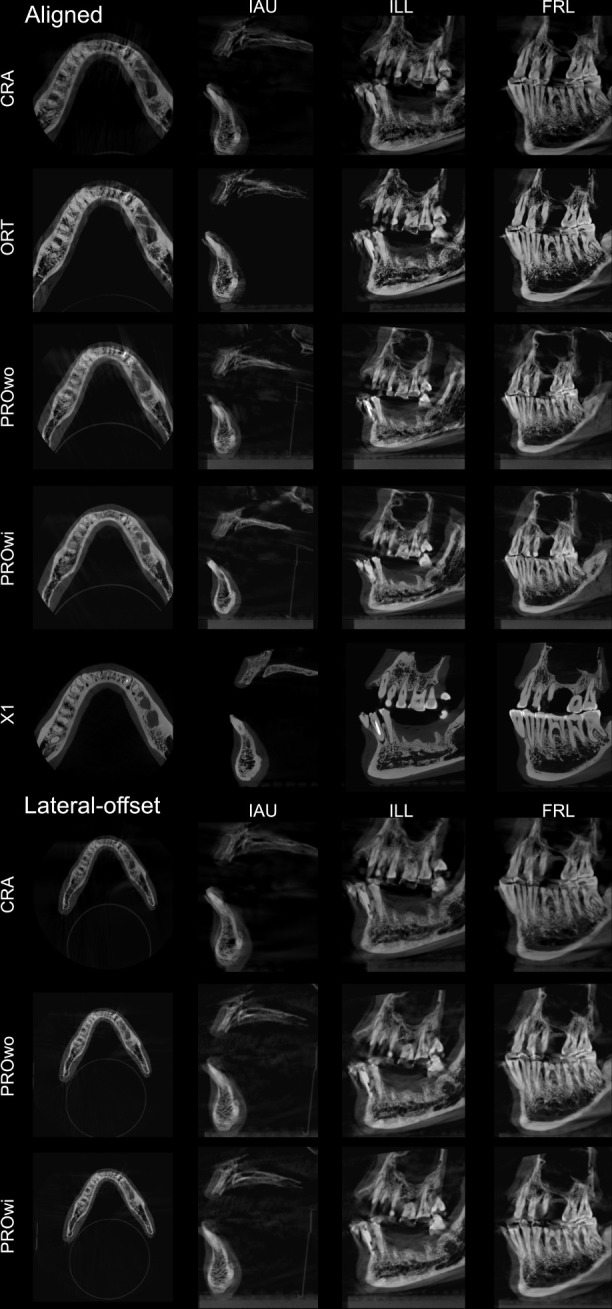

Figure 3.

Representative images of the “head anteroposterior translation + lifting” movement group at t1 showing the entire FOV acquired and images of the evaluated tasks for all the protocols.

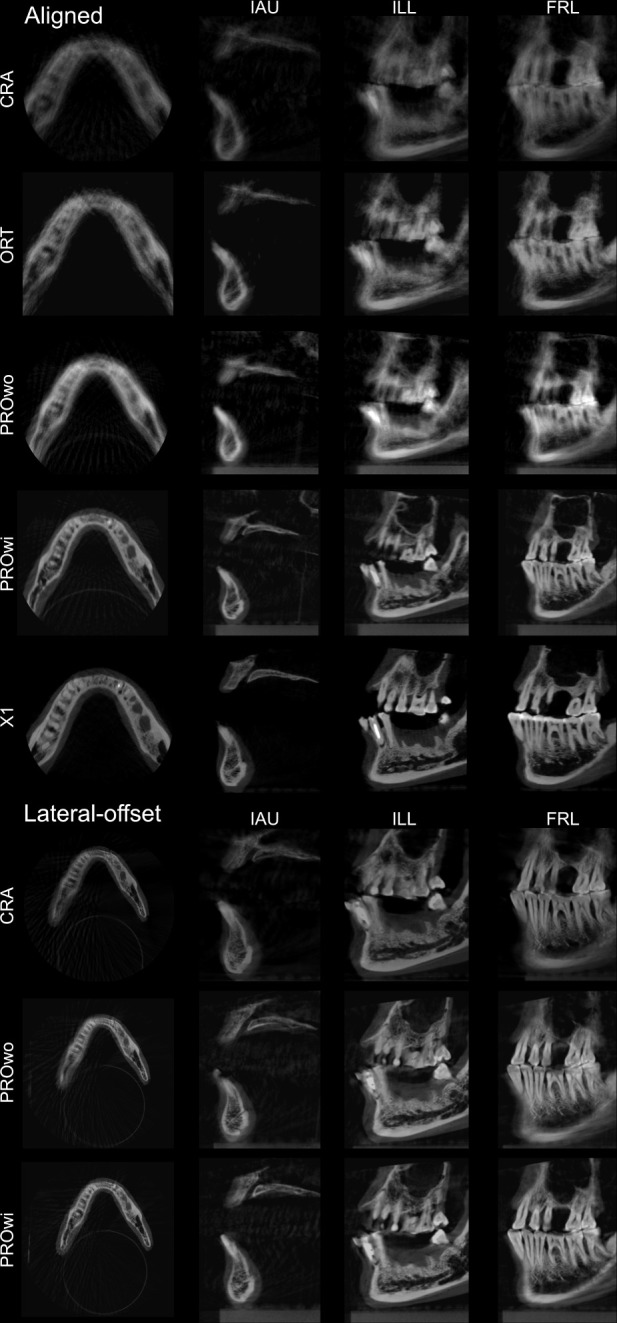

Figure 4.

Representative images of the “continuous tremor” movement group at t1 showing the entire FOV acquired and images of the evaluated tasks for all the protocols.

Discussion

The presence of motion artefacts in CBCT images is well documented in the literature as one of the main pitfalls of this diagnostic method.1,5,6,8,14,15 The cause is established: when the object of the investigation (i.e. the patient) moves during the examination, the acquired images become inconsistent and the image-reconstruction algorithm uses mismatched voxel intensities,5 which produces stripe-like artefacts, overall unsharpness, and double contours.1,16

To date, only one study has suggested a direct method to detect head movements during acquisition in dental CBCT units, which was later tested as part of a motion-artefact correction system.10 This method requires the patient to wear a head-band during the examination, carrying a dotted-pattern plate that is tracked by three cameras positioned above the patient to detect head movements in all axes.10 Such a system has been tested previously and showed excellent image quality compared with units without the correction system.9 The present study is the first to test different motion-artefact correction systems: one based on the aforementioned approach (X1), and the other (PRO), which is algorithm-based and relies on optical flow measurements to infer movements from the projection images. Given the nature of these methods, one could speculate that the latter compensates for, rather than corrects, movement artefacts. As optical flow measurements are directly related to brightness variation in the images, they may be affected by the innate voxel value variation present in CBCT data sets, leading to inaccuracy in patient movement tracking.17–20 In the present study, however, the authors chose to refer to both systems as motion-artefact correction systems.

The use of lateral-offset detectors by some CBCT units to acquire volumes with a diameter larger than the width of the detector has been described in previous studies,5,7,21,22 but without considering how this parameter might affect diagnostic tasks. A previous study7 described the two lateral-offset approaches tested in the present study: a 360° rotation with the detector offset to the right, as used by PRO, and a double partial rotation, with the detector offset to the right side in the first rotation and to the left side in the second, as used by CRA. In that study, image quality was assessed using a utility wax phantom, not related to clinical diagnosis.7

A previous study8 used a unit with lateral-offset acquisition (NewTom™ 5G; QR srl, Verona, Italy)21 to assess the prevalence of simulated patient motion artefacts in the images. In that study, the protocol corresponding to t1 in the present study showed an artefact distribution within the FOV that is not compatible with the one observed here for the tasks located in the posterior mandible. In the present study, a distribution like the one reported there was observed in the units with an aligned detector, while the units with a lateral-offset detector presented differences between the left and right sides for most protocols (Figure 5). One explanation could be that the unit in the previous study also uses an aligned-detector approach for the tested FOV; in other words, although available, the lateral-offset position of the detector was not actually used. This is plausible since the detector size listed (20 × 25 cm) is large enough to accommodate the FOV (12 × 8 cm).8 In the present study, we checked all projection images to be sure that the lateral-offset protocol was selected and that the movements happened at the planned moment.

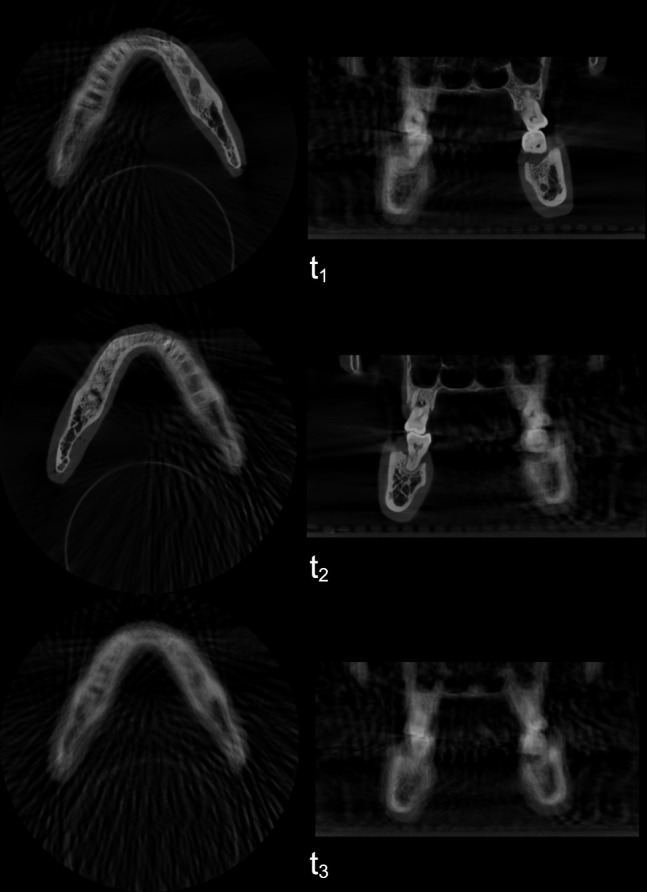

Figure 5.

Axial and coronal images of the “continuous tremor” group of the CRA protocol with the lateral-offset detector in t1, t2, and t3.

The movement types in the present study are considered intense, and most are multiplanar.6,9 The distance, speed, and pattern were chosen to increase the risk of obtaining images considered as “not interpretable”.9,15 The present results show that motion artefacts were directly related to the acquisition geometry. In the units with aligned detectors and without a motion-artefact correction system (CRA, ORT, and PROwo), most of the multiplanar movements resulted in non-interpretable images for the three evaluated tasks. A different behaviour was observed for units with lateral-offset detectors, in which the moment-in-time at which the movement took place affected image interpretability differently for the different diagnostic tasks.

When designing the study, we hypothesized that movements at t1 would mostly affect the anterior and right regions of the FOV, movements at t2 the anterior and left regions, and movements at t3 all regions. This held for most cases and was more evident for CRA than for PROwo, which might be due to the different lateral-offset detector positions and image-acquisition dynamics of the two units. Therefore, even though the movements were the same, in protocols with a lateral-offset detector there is a chance that intense movements will not affect the region of interest, depending on its location and the moment-in-time at which the movement took place during acquisition. In other words, the present results suggest that CBCT image acquisition geometry greatly influences which regions are affected by motion artefacts.
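One way to read the hypothesis is that the left mandible should be the least affected region at t1 and the right mandible the least affected at t2. That reading is an interpretation made for this sketch, which checks it against the non-interpretable percentages reported in Table 3 for the lateral-offset protocols without motion-artefact correction.

```python
# Non-interpretable scores (%) from Table 3, lateral-offset protocols,
# keyed by (unit, moment-in-time) and then by region of the task.
non_interpretable = {
    ("CRA", "t1"): {"anterior maxilla": 94.4, "left mandible": 5.6, "right mandible": 77.8},
    ("CRA", "t2"): {"anterior maxilla": 16.7, "left mandible": 22.2, "right mandible": 0.0},
    ("PROwo", "t1"): {"anterior maxilla": 77.8, "left mandible": 22.2, "right mandible": 66.7},
    ("PROwo", "t2"): {"anterior maxilla": 61.1, "left mandible": 77.8, "right mandible": 55.6},
}

# Region expected to be least affected under the stated hypothesis
# (an interpretation for this sketch; t3 is expected to affect all regions).
expected_least_affected = {"t1": "left mandible", "t2": "right mandible"}

def least_affected(percentages):
    """Region with the lowest percentage of non-interpretable scores."""
    return min(percentages, key=percentages.get)

results = {}
for (unit, moment), percentages in non_interpretable.items():
    observed = least_affected(percentages)
    results[(unit, moment)] = observed == expected_least_affected[moment]
    print(unit, moment, observed, results[(unit, moment)])
```

The ranking matches the expectation in all four cases, although the margin for PROwo at t2 is small (55.6% versus 61.1%), in line with the pattern being clearer for CRA.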

However, it is important to highlight that, in the tested units, choosing a lateral-offset protocol meant an increased acquisition time (sometimes even twice the time, as for CRA). A comparable increase in the acquisition time was observed for PRO, which required a partial rotation for the aligned protocol and a full rotation for the lateral-offset protocol. It is well documented that the chance of movement during a CBCT examination is directly related to the total time required for the acquisition.1 Also, even though the radiation dose was not measured in this study, it should be noted that the lateral-offset protocols (CRA and PRO) use a larger FOV and therefore expose a larger area than their aligned counterparts. In addition, there is a central area in the volume that is exposed during the full rotation for PRO and in both rotations for CRA, which might slightly increase the total absorbed dose compared with a protocol that has an aligned detector and a partial rotation.

Considering the automated motion-artefact correction systems in the aligned detector protocols, X1 provided interpretable images in all cases, as recently observed by Spin-Neto et al. (2018).9 PROwi had comparable results in the present study, except for the continuous tremor in IAU. However, this was the most intense type of movement, not commonly seen in patients, and was tested in this study mostly to accentuate the lateral-offset geometry effects. PROwi provided excellent image quality for protocols with aligned detectors. X1 showed no images with unsharpness and only one movement type that resulted in motion-related stripe artefacts, while PROwi was less effective at enhancing image quality, with most protocols showing motion-related stripe artefacts and overall unsharpness, even though the images were still interpretable.

For the lateral-offset detector protocols, the PROwi motion-artefact correction system was less effective than for its aligned counterpart. It sometimes resulted in non-interpretable images in cases that were considered interpretable with the motion-artefact correction system turned off.

Conclusion

Motion-artefact correction systems enhanced image quality and interpretability for units with aligned detectors but were less effective for those with lateral-offset detectors. Lateral-offset detectors resulted in motion artefacts in different regions of the FOV, depending on when the movements were executed, which interfered unevenly with image interpretability within the FOV (i.e. the region of interest was not necessarily affected when the opposite side was).

Footnotes

Funding: This study was financed in part by the Coordenação de Aperfeiçoamento de Pessoal de Nível Superior - Brasil (CAPES) - Finance Code 001

Contributor Information

Gustavo Machado Santaella, Email: gustavoms@live.com.

Ann Wenzel, Email: awenzel@dent.au.dk.

Francisco Haiter-Neto, Email: haiter@fop.unicamp.br.

Pedro Luiz Rosalen, Email: rosalen@fop.unicamp.br.

Rubens Spin-Neto, Email: rsn@dent.au.dk.

REFERENCES

- 1. Spin-Neto R, Wenzel A. Patient movement and motion artefacts in cone beam computed tomography of the dentomaxillofacial region: a systematic literature review. Oral Surg Oral Med Oral Pathol Oral Radiol 2016; 121: 425–33. doi: 10.1016/j.oooo.2015.11.019

- 2. Spin-Neto R, Matzen LH, Schropp L, Gotfredsen E, Wenzel A. Factors affecting patient movement and re-exposure in cone beam computed tomography examination. Oral Surg Oral Med Oral Pathol Oral Radiol 2015; 119: 572–8. doi: 10.1016/j.oooo.2015.01.011

- 3. Feldkamp LA, Davis LC, Kress JW. Practical cone-beam algorithm. J Opt Soc Am A 1984; 1: 612. doi: 10.1364/JOSAA.1.000612

- 4. Barrett JF, Keat N. Artifacts in CT: recognition and avoidance. Radiographics 2004; 24: 1679–91. doi: 10.1148/rg.246045065

- 5. Schulze R, Heil U, Gross D, Bruellmann DD, Dranischnikow E, Schwanecke U, et al. Artefacts in CBCT: a review. Dentomaxillofac Radiol 2011; 40: 265–73. doi: 10.1259/dmfr/30642039

- 6. Spin-Neto R, Mudrak J, Matzen LH, Christensen J, Gotfredsen E, Wenzel A. Cone beam CT image artefacts related to head motion simulated by a robot skull: visual characteristics and impact on image quality. Dentomaxillofac Radiol 2013; 42: 32310645. doi: 10.1259/dmfr/32310645

- 7. Santaella GM, Rosalen PL, Queiroz PM, Haiter-Neto F, Wenzel A, Spin-Neto R. Quantitative assessment of variation in CBCT image technical parameters related to CBCT detector lateral-offset position. Dentomaxillofac Radiol 2019; 20190077. doi: 10.1259/dmfr.20190077

- 8. Nardi C, Molteni R, Lorini C, Taliani GG, Matteuzzi B, Mazzoni E, et al. Motion artefacts in cone beam CT: an in vitro study about the effects on the images. Br J Radiol 2016; 89: 20150687. doi: 10.1259/bjr.20150687

- 9. Spin-Neto R, Matzen LH, Schropp LW, Sørensen TS, Wenzel A. An ex vivo study of automated motion artefact correction and the impact on cone beam CT image quality and interpretability. Dentomaxillofac Radiol 2018; 15: 20180013. doi: 10.1259/dmfr.20180013

- 10. Spin-Neto R, Matzen LH, Schropp L, Sørensen TS, Gotfredsen E, Wenzel A. Accuracy of video observation and a three-dimensional head tracking system for detecting and quantifying robot-simulated head movements in cone beam computed tomography. Oral Surg Oral Med Oral Pathol Oral Radiol 2017; 123: 721–8. doi: 10.1016/j.oooo.2017.02.010

- 11. Schulze RKW, Michel M, Schwanecke U. Automated detection of patient movement during a CBCT scan based on the projection data. Oral Surg Oral Med Oral Pathol Oral Radiol 2015; 119: 468–72. doi: 10.1016/j.oooo.2014.12.008

- 12. Lopes PA, Santaella GM, Lima CAS, Vasconcelos KdeF, Groppo FC. Evaluation of soft tissues simulant materials in cone beam computed tomography. Dentomaxillofac Radiol 2019; 48: 20180072. doi: 10.1259/dmfr.20180072

- 13. Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics 1977; 33: 159–74. doi: 10.2307/2529310

- 14. Spin-Neto R, Matzen LH, Schropp L, Gotfredsen E, Wenzel A. Detection of patient movement during CBCT examination using video observation compared with an accelerometer-gyroscope tracking system. Dentomaxillofac Radiol 2017; 46: 20160289. doi: 10.1259/dmfr.20160289

- 15. Spin-Neto R, Costa C, Salgado DM, Zambrana NR, Gotfredsen E, Wenzel A. Patient movement characteristics and the impact on CBCT image quality and interpretability. Dentomaxillofac Radiol 2018; 47: 20170216. doi: 10.1259/dmfr.20170216

- 16. Lee K-M, Song J-M, Cho J-H, Hwang H-S. Influence of head motion on the accuracy of 3D reconstruction with cone-beam CT: landmark identification errors in maxillofacial surface model. PLoS One 2016; 11: 11. doi: 10.1371/journal.pone.0153210

- 17. Barron JL, Khurana M. Determining optical flow for large motions using parametric models in a hierarchical framework. Vision Interface 1997; 2: 47–56.

- 18. Spin-Neto R, Gotfredsen E, Wenzel A. Variation in voxel value distribution and effect of time between exposures in six CBCT units. Dentomaxillofac Radiol 2014; 43: 20130376. doi: 10.1259/dmfr.20130376

- 19. Pauwels R, Jacobs R, Singer SR, Mupparapu M. CBCT-based bone quality assessment: are Hounsfield units applicable? Dentomaxillofac Radiol 2015; 44: 20140238. doi: 10.1259/dmfr.20140238

- 20. Spin-Neto R, Gotfredsen E, Wenzel A. Standardized method to quantify the variation in voxel value distribution in patient-simulated CBCT data sets. Dentomaxillofac Radiol 2015; 44: 20140283. doi: 10.1259/dmfr.20140283

- 21. Molteni R. Prospects and challenges of rendering tissue density in Hounsfield units for cone beam computed tomography. Oral Surg Oral Med Oral Pathol Oral Radiol 2013; 116: 105–19. doi: 10.1016/j.oooo.2013.04.013

- 22. Scarfe WC, Farman AG. What is cone-beam CT and how does it work? Dent Clin North Am 2008; 52: 707–30. doi: 10.1016/j.cden.2008.05.005