Abstract

Here we describe the public neuroimaging and behavioral dataset entitled “Cross-Sectional Multidomain Lexical Processing”, available through the OpenNeuro project (https://openneuro.org). This dataset explores the neural mechanisms and development of lexical processing through task-based functional magnetic resonance imaging (fMRI) of rhyming, spelling, and semantic judgment tasks in both the auditory and visual modalities. Each task employed varying degrees of trial difficulty, including conflicting versus non-conflicting orthography-phonology pairs (e.g., harm – warm, wall – tall) in the rhyming and spelling tasks as well as high versus low word pair association in the semantic tasks (e.g., dog – cat, dish – plate). In addition, this dataset contains scores from a battery of standardized psychoeducational assessments, allowing for future analyses of brain-behavior relations. Data were collected from a cross-sectional sample of 91 typically developing children aged 8.7 to 15.5 years. The cross-sectional design of this dataset, together with the inclusion of multiple measures of lexical processing at varying difficulties and in both modalities, allows for multiple avenues of future research on reading development.

Keywords: fMRI, Reading, Language, Development, Children

Specifications Table

| Subject | Developmental Cognitive Neuroscience |

| Specific subject area | Neuroimaging of Reading and Language Development |

| Type of data | Tables, Images |

| How data were acquired | 1.5 T General Electric (GE) Signa Excite scanner, quadrature birdcage head coil. E-prime software was used to display tasks and collect behavioral data. |

| Data format | Raw |

| Parameters for data collection | Participants were required to be right-handed, native English speakers, not diagnosed with neurological or psychiatric disorders or delays, not taking medication affecting the central nervous system, and have normal hearing and vision. |

| Description of data collection | 91 children completed a battery of standardized assessments as well as fMRI while performing auditory and visual rhyming, spelling, and meaning judgment tasks. |

| Data source location | Evanston Hospital and Northwestern University, Evanston, IL, USA |

| Data accessibility | Repository name: OpenNeuro; Data identification number: 10.18112/openneuro.ds002236.v1.0.0; Direct URL to data: https://openneuro.org/datasets/ds002236/versions/1.0.0 |

| Related research articles | This dataset has been used, in part, in 23 previous publications. See dataset [1] for the full list. The dataset has been extended in two separate publicly available datasets [2,3]. |

Value of the Data


1. Data

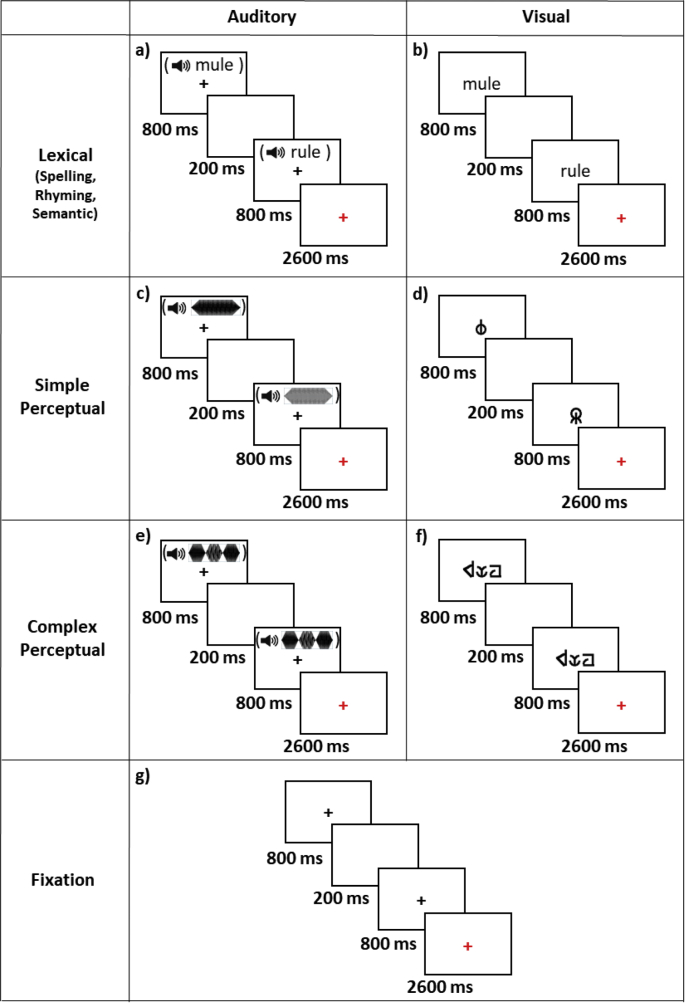

This dataset, entitled “Cross-Sectional Multidomain Lexical Processing” [1], is publicly available on OpenNeuro.org and is organized according to the Brain Imaging Data Structure (BIDS) specification, version 1.3.0 [4]. The dataset contains (1) raw T1-weighted SPGR anatomical images, (2) fMRI images acquired while participants completed six different lexical processing tasks, (3) behavioral data from in-scanner tasks, (4) scores from standardized assessments of reading and cognitive abilities, (5) participant demographics, and (6) all stimuli used for the lexical processing tasks. In this article, Table 1 gives the number of participants who completed each lexical task, Table 2 lists the standardized assessment measures collected, and Fig. 1 illustrates the task trial design. This dataset was extended in a separate publicly released dataset, not described herein, entitled “Longitudinal Brain Correlates of Multisensory Lexical Processing in Children”, which contains longitudinal data on auditory, visual, and audio-visual rhyming tasks and is hosted on OpenNeuro.org [2].

Table 1.

Number of participants completing each task. Number of participants having completed one or more runs of the experimental task and sex distribution.

| Task | Modality | Female (n) | Male (n) | Total (n) |
|---|---|---|---|---|
| Rhyming | Auditory | 28 | 34 | 62 |
| Rhyming | Visual | 37 | 50 | 87 |
| Spelling | Auditory | 28 | 32 | 60 |
| Spelling | Visual | 33 | 48 | 81 |
| Meaning | Auditory | 32 | 38 | 70 |
| Meaning | Visual | 32 | 38 | 70 |

Table 2.

Standardized psycho-educational assessment subtests. Subtests administered from each standardized assessment.

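| Measure | Test | Subtest |
|---|---|---|
| Achievement | Woodcock-Johnson III (WJ-III) | Letter-Word Identification |
| Achievement | Woodcock-Johnson III (WJ-III) | Oral Comprehension |
| Achievement | Woodcock-Johnson III (WJ-III) | Passage Comprehension |
| Achievement | Woodcock-Johnson III (WJ-III) | Word Attack |
| Achievement | Woodcock-Johnson III (WJ-III) | Picture Vocabulary |
| Achievement | Woodcock-Johnson III (WJ-III) | Reading Fluency |
| Achievement | Wide Range Achievement Test: Revision 3 (WRAT-3) | Arithmetic |
| Achievement | Wide Range Achievement Test: Revision 3 (WRAT-3) | Spelling |
| Intelligence | Wechsler Abbreviated Scale of Intelligence (WASI) | Vocabulary |
| Intelligence | Wechsler Abbreviated Scale of Intelligence (WASI) | Block Design |
| Intelligence | Wechsler Abbreviated Scale of Intelligence (WASI) | Similarities |
| Intelligence | Wechsler Abbreviated Scale of Intelligence (WASI) | Matrix Reasoning |
| Phonological Processing | Comprehensive Test of Phonological Processing (CTOPP) | Elision |
| Phonological Processing | Comprehensive Test of Phonological Processing (CTOPP) | Blending Words |
| Phonological Processing | Comprehensive Test of Phonological Processing (CTOPP) | Memory for Digits |
| Phonological Processing | Comprehensive Test of Phonological Processing (CTOPP) | Nonword Repetition |
| Phonological Processing | Comprehensive Test of Phonological Processing (CTOPP) | Rapid Digit Naming |
| Phonological Processing | Comprehensive Test of Phonological Processing (CTOPP) | Rapid Letter Naming |
| Reading | Test of Word Reading Efficiency (TOWRE) | Sight Word Efficiency |
| Reading | Test of Word Reading Efficiency (TOWRE) | Phonemic Decoding Efficiency |
| Vocabulary | Peabody Picture Vocabulary Test: Third Edition (PPVT-III) | n/a |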

Fig. 1.

Trial type stimuli and timing. Illustration of the stimuli and timing for (a) auditory lexical trials, (b) visual lexical trials, (c) auditory simple perceptual trials, (d) visual simple perceptual trials, (e) auditory complex perceptual trials, (f) visual complex perceptual trials, and (g) fixation control trials.

2. Experimental design, materials, and methods

2.1. Participants

Ninety-one children (mean age = 11.4 years, SD = 2.1, 39 female) were included in the described dataset. The age, sex, handedness, ethnicity, and race of each participant are detailed in participants.tsv at the root level of the dataset. Participants were recruited from the greater Chicago area through advertisements, community events/organizations, and brochures. The unequal sex distribution in this dataset may reflect greater concern about reading skill among the guardians of male participants. All participants met the following inclusionary criteria: (1) native English speakers; (2) right-handedness; (3) normal hearing and normal or corrected-to-normal vision; (4) free of neurological disease or psychiatric disorders; (5) not taking medication affecting the central nervous system; (6) no history of intelligence, reading, or oral-language deficits; and (7) no learning disability or Attention Deficit Hyperactivity Disorder (ADHD), as determined by parental report in an informal interview prior to enrollment. All procedures were approved by the Institutional Review Board at Northwestern University and Evanston Northwestern Healthcare Research Institute. Informed consent was obtained from parent/guardian(s), including permission for the future release of de-identified data.

2.2. Standardized psycho-educational assessments

During the first visit, participants were administered six standardized psycho-educational assessments to quantify their cognitive abilities. These assessments included the Comprehensive Test of Phonological Processing (CTOPP) [5], the Peabody Picture Vocabulary Test – Third Edition (PPVT-III) [6], the Test of Word Reading Efficiency (TOWRE) [7], the Wechsler Abbreviated Scale of Intelligence (WASI) [8], the Woodcock-Johnson III Tests of Achievement (WJ-III) [9], and the Wide Range Achievement Test – Revision 3 (WRAT-3) [10]. See Table 2 for a complete list of all subtests administered. Test order was counterbalanced across participants to account for fatigue effects during testing. Raw, standardized, and composite scores are separated by assessment and stored as tab-separated values in the phenotype directory of the dataset. Each data file is accompanied by a data dictionary describing the assessment and scores.

2.3. Practice imaging procedure

All participants completed a practice imaging session in a mock scanner within one week of their first imaging session. This practice session included explaining the task to the child and allowing them to practice the tasks in an MRI simulator. Participants were trained to reduce head movement in the practice scanner using feedback from an infrared tracking device placed in front of a computer screen. This session was included to increase task understanding and reduce movement and anxiety in the real MRI scanner. Practice tasks did not include any stimuli used in the experimental tasks.

2.4. Functional MRI tasks

Participants completed spelling, rhyming, and semantic relatedness lexical judgment tasks in both the visual and auditory modalities, resulting in six tasks overall. For all tasks, two stimuli were presented in sequential order. All tasks contained three condition types: lexical, perceptual control, and fixation control. Fig. 1 provides an illustration of trial types. Lexical trials are described by task below.

In addition to lexical trials, all tasks contained simple and complex perceptual control trials, which differed based on sensory modality. In visual tasks, participants were presented with a pair of single symbols (simple) or a pair of three-symbol stimuli (complex) and were asked whether the pair matched. In the complex perceptual condition, individual three-symbol stimuli did not contain repeating symbols, and non-matching pairs differed in only one symbol. In auditory tasks, participants were presented with a pair of pure tones (simple) or a pair of three-tone stimuli (complex) and were asked whether the pair matched. All tasks also contained a fixation condition to control for motor responses. In these trials, participants were presented with a black fixation cross during the first and second stimulus phases and a red fixation cross during the response phase. Participants were asked to press the button under their index finger when the black cross turned red.

In all trials, the first stimulus was presented for 800 ms, followed by an interstimulus interval of 200 ms and the second stimulus for 800 ms. The second stimulus was followed by a red fixation cross lasting 2600 ms, indicating that participants should respond. Participants could respond from the onset of the second stimulus until the start of the next trial. For visual tasks, the second stimulus was offset to the right or left of the first by half a letter/symbol to ensure that judgments could not be based on visual persistence. All auditory stimuli were presented with a black fixation cross, which remained on the screen for 800 ms regardless of stimulus duration. Stimuli were presented in the same order for all participants, with the order optimized for an event-related design using OptSeq [11]. Each task was divided into two runs to minimize individual scan time and reduce participant fatigue. Behavioral data from functional tasks are stored alongside imaging files in files named sub-<sub_ID>_task-<task_name>_run-<run_ID>_events.tsv and include trial onset, duration, type, accuracy, response time, stimulus 1 file, and stimulus 2 file. Task and task data file descriptions are also included at the root level of the dataset in task-<task_name>_bold.json and task-<task_name>_events.json, respectively.
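The trial timing described above can be laid out as a simple timeline. The following is a minimal sketch, not the presentation script used in the study: the phase names are hypothetical labels, and it assumes that trials follow one another without any additional gap, so that the next trial begins when the red response cue ends.

```python
# Phase durations in milliseconds, as stated in the text.
STIM1_MS = 800
GAP_MS = 200          # interval between the first and second stimulus
STIM2_MS = 800
RESPONSE_CUE_MS = 2600

PHASES = [
    ("stimulus_1", STIM1_MS),
    ("gap", GAP_MS),
    ("stimulus_2", STIM2_MS),
    ("response_cue", RESPONSE_CUE_MS),
]

# Total trial length, assuming back-to-back trials (an assumption).
TRIAL_MS = sum(duration for _, duration in PHASES)

# Responses are accepted from the onset of stimulus 2 until the next trial.
RESPONSE_WINDOW_MS = STIM2_MS + RESPONSE_CUE_MS


def trial_phases(trial_onset_ms=0):
    """Return (phase name, onset, offset) tuples for one trial."""
    timeline = []
    t = trial_onset_ms
    for name, duration in PHASES:
        timeline.append((name, t, t + duration))
        t += duration
    return timeline


for phase in trial_phases():
    print(phase)
print("trial length (ms):", TRIAL_MS)
print("response window (ms):", RESPONSE_WINDOW_MS)
```

Under these assumptions each trial lasts 4400 ms and the response window spans 3400 ms.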

2.4.1. Rhyming judgment task

In rhyming judgment tasks, participants were asked whether the pair of words rhymed. Word pairs were grouped into four lexical conditions: 24 pairs were orthographically similar and phonologically similar (O+P+, gate-hate), 24 pairs were orthographically different and phonologically similar (O−P+, has-jazz), 24 pairs were orthographically similar and phonologically different (O+P−, pint-mint), and 24 pairs were orthographically different and phonologically different (O−P−, press-list). The same words were used in the auditory and visual versions of the rhyming task because there were not enough monosyllabic English words to create balanced conflicting conditions (O+P−, O−P+). In addition to lexical trials, each task included 12 matching simple perceptual trials, 12 non-matching simple perceptual trials, 12 matching complex perceptual trials, 12 non-matching complex perceptual trials, and 72 fixation control trials. Trial order was optimized and divided into two 108-trial runs, each collected in 240 volumes.

2.4.2. Spelling judgment task

In spelling judgment tasks, participants were asked whether the pair of words had the same rime spelling, including all letters from the first vowel onwards. Stimuli were grouped into the same lexical conditions as those described for the rhyming tasks, and no stimulus was used in both tasks. As in the rhyming task, the same words were used in the auditory and visual versions of the spelling task because there are not enough monosyllabic words to create balanced conflicting conditions (O+P−, O−P+). In addition to lexical trials, each task included 12 matching simple perceptual trials, 12 non-matching simple perceptual trials, 12 matching complex perceptual trials, 12 non-matching complex perceptual trials, and 72 fixation control trials. Trial order was optimized and divided into two 108-trial runs, each collected in 240 volumes.

2.4.3. Semantic relatedness task

In semantic relatedness judgment tasks, participants were asked whether the pair of words was semantically associated, or related in meaning. Word pairs were grouped into three conditions based on free association values [12]: 24 pairs were strongly related (found-lost), 24 pairs were weakly related (dish-plate), and 24 pairs were unrelated (tank-snap). Although the pairs are grouped into three categories, association should be treated as a continuous variable because there was no clear separation between high and low association word pairs. In addition to lexical trials, each task included 12 matching simple perceptual trials, 12 non-matching simple perceptual trials, 12 matching complex perceptual trials, 12 non-matching complex perceptual trials, and 60 fixation control trials. Trial order was optimized and divided into one 91-trial run and one 89-trial run. Because the runs differed in length, run 1 of both the visual and auditory tasks was collected in 203 volumes and run 2 in 198 volumes.
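The trial counts and run lengths reported for the three task types can be cross-checked with a few lines of arithmetic. This is an illustrative sketch only: it takes the 4400 ms trial length implied by the timing given in Section 2.4 and the 2000 ms TR from Section 2.6, and it assumes that the rhyming and spelling volume counts apply to each run and that trials run back to back. The leftover scan time it reports per run is not itemized in this article.

```python
TR_MS = 2000      # repetition time reported in the acquisition protocol
TRIAL_MS = 4400   # 800 + 200 + 800 + 2600 ms per trial

# Trial counts and (trials, volumes) per run, copied from the task descriptions.
TASKS = {
    "rhyming": {"lexical": 4 * 24, "perceptual": 4 * 12, "fixation": 72,
                "runs": [(108, 240), (108, 240)]},
    "spelling": {"lexical": 4 * 24, "perceptual": 4 * 12, "fixation": 72,
                 "runs": [(108, 240), (108, 240)]},
    "semantic": {"lexical": 3 * 24, "perceptual": 4 * 12, "fixation": 60,
                 "runs": [(91, 203), (89, 198)]},
}


def check_task(spec):
    """Return total trials and, per run, scan time left after the last trial (ms)."""
    total = spec["lexical"] + spec["perceptual"] + spec["fixation"]
    if total != sum(trials for trials, _ in spec["runs"]):
        raise ValueError("trial counts do not add up to the run lengths")
    spare_ms = [vols * TR_MS - trials * TRIAL_MS for trials, vols in spec["runs"]]
    return total, spare_ms


for name, spec in TASKS.items():
    print(name, check_task(spec))
```

With these inputs the condition counts sum to the stated run lengths (216 trials for rhyming and spelling, 180 for the semantic task), and every run is a few seconds longer than its trials require.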

2.5. Stimuli

All stimuli are provided in the stimuli directory of the dataset. Lexical stimuli are organized by task, and perceptual stimuli are located in the symbols and tones directories for visual and auditory perceptual trials, respectively. Auditory words were recorded in a soundproof booth, and all words and tones were normalized to equal amplitude. Word duration varied from 400 to 800 ms. All words are non-homophonic, two syllables or fewer, and matched across modality, condition, and pair position for written word frequency in children [13]. Spelling and rhyming word pairs are also matched on two measures of word consistency: orthographic consistency, i.e. friends / (friends + orthographic enemies), and phonological consistency, i.e. friends / (friends + phonological enemies) [14]. We defined friends as the number of rhymes that are spelled the same or the number of rimes that are pronounced the same, orthographic enemies as the number of different spellings for the same rhyme, and phonological enemies as the number of different pronunciations for the same rime. Friends and enemies are matched across task and word; however, they could not be matched across conditions due to the limited number of available words in English and the specific structure of the O+P− and O−P+ conditions. Word characteristics are provided in Stimulus_Characteristics.tsv in the stimuli directory of the dataset.
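Both consistency measures share the same ratio form, friends divided by the sum of friends and enemies. A minimal sketch of that ratio follows; the function name and the example counts are hypothetical and are not taken from Stimulus_Characteristics.tsv.

```python
def consistency(friends, enemies):
    """Return friends / (friends + enemies), a value between 0 and 1.

    Pass orthographic enemies for orthographic consistency and
    phonological enemies for phonological consistency.
    """
    if friends < 0 or enemies < 0:
        raise ValueError("counts must be non-negative")
    total = friends + enemies
    if total == 0:
        raise ValueError("at least one friend or enemy is required")
    return friends / total


# Hypothetical counts: 3 friends and 1 enemy give a consistency of 0.75.
print(consistency(3, 1))
```

A value of 1 indicates a fully consistent mapping (no enemies), and lower values indicate more competing spellings or pronunciations.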

Symbols used for visual perceptual trials were created by rearranging parts of 24 Courier letters (not Q or X) to create false fonts. Tones used in auditory perceptual trials ranged from 325 to 875 Hz in 25 Hz increments and were 600 ms in duration with a 100 ms linear fade in and out. Complex three-tone stimuli contained three tones, each 200 ms long with a 50 ms linear fade in and out. Equal numbers of complex three-tone stimuli were ascending, descending, low frequency peak in the middle, and high frequency peak in the middle, with differences between successive tones of at least 75 Hz. For non-matching pairs of complex three-tone stimuli, half of the pairs shared the same contour and half had different contours.
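The tone parameters above lend themselves to a short illustration. The sketch below enumerates the stated frequency grid, evaluates a linear fade envelope, and labels the contour of a three-tone stimulus. It is not the stimulus-generation code used by the authors: the function names and contour labels are hypothetical, and the example frequencies are arbitrary points on the grid.

```python
# 325 to 875 Hz inclusive, in 25 Hz steps, as stated in the text.
TONE_FREQS_HZ = list(range(325, 876, 25))


def envelope(t_ms, duration_ms, fade_ms):
    """Amplitude (0 to 1) at time t_ms for a tone with linear fade in and out."""
    if not 0 <= t_ms <= duration_ms:
        return 0.0
    return min(1.0, t_ms / fade_ms, (duration_ms - t_ms) / fade_ms)


def contour(tones_hz):
    """Label a three-tone stimulus by the shape of its frequency contour."""
    a, b, c = tones_hz
    if a < b < c:
        return "ascending"
    if a > b > c:
        return "descending"
    if b > a and b > c:
        return "high_peak_middle"
    if b < a and b < c:
        return "low_peak_middle"
    raise ValueError("successive tones must differ in frequency")


def is_valid_complex(tones_hz, min_step_hz=75):
    """Check the frequency grid and the minimum difference between successive tones."""
    on_grid = all(f in TONE_FREQS_HZ for f in tones_hz)
    steps_ok = all(abs(y - x) >= min_step_hz for x, y in zip(tones_hz, tones_hz[1:]))
    return on_grid and steps_ok


print(len(TONE_FREQS_HZ), "candidate frequencies")
print(contour((400, 600, 450)), is_valid_complex((400, 600, 450)))
print(envelope(50, 600, 100))   # halfway through the fade-in of a simple tone
```

For a simple tone, `envelope` would be called with a 600 ms duration and a 100 ms fade; for each component of a three-tone stimulus, with 200 ms and 50 ms.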

2.6. MR acquisition protocol

MR data were acquired using a 1.5 T General Electric (GE) Signa Excite scanner at Evanston Hospital, using a quadrature birdcage head coil. Participants were placed supine in the scanner and their head position was secured using a vacuum pillow (Bionix, Toledo, OH). A response box was placed in the participant's right hand to allow them to respond to functional imaging tasks. Task stimuli were projected onto a screen, which the participants viewed through a mirror attached to the inside of the head coil. Auditory stimuli were presented through sound-attenuating headphones to minimize the effect of ambient scanner noise. Between tasks, participants were able to talk to the experimenter and were encouraged to remain still.

Structural MRI: T1-weighted SPGR images were collected using the following parameters: TR = 33.333 ms, TE = 8 ms, matrix size = 256 × 256, bandwidth = 114.922 Hz/Px, slice thickness = 1.2 mm, number of slices = 124, voxel size = 1 mm isotropic, flip angle = 30°.

Functional MRI: Blood oxygen level dependent (BOLD) signal was acquired using a T2-weighted, susceptibility-weighted single-shot echo planar imaging (EPI) sequence with the following parameters: TR = 2000 ms, TE = 25 ms, matrix size = 64 × 64, bandwidth = 7812.5 Hz/Px, slice thickness = 5 mm, number of slices = 24, voxel size = 3.75 × 3.75 × 5 mm, flip angle = 90°. Slices were acquired interleaved from bottom to top, odd first.
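For analyses that apply slice-timing correction, the stated acquisition scheme (24 slices, interleaved from bottom to top, odd slices first, TR = 2000 ms) implies a slice order that can be written out explicitly. The sketch below is illustrative: it assumes that slice 1 is the bottom-most slice and that slices are spaced evenly across the TR with no pause, neither of which is stated in this article. If the JSON sidecars include a SliceTiming field, those values should be preferred.

```python
def interleaved_slice_order(n_slices):
    """1-based slice indices in acquisition order: odd slices first, then even."""
    odd = list(range(1, n_slices + 1, 2))
    even = list(range(2, n_slices + 1, 2))
    return odd + even


def slice_onsets_ms(n_slices, tr_ms):
    """Map each slice index to its acquisition onset within the TR (ms).

    Assumes evenly spaced slices filling the whole TR (an assumption).
    """
    order = interleaved_slice_order(n_slices)
    return {slice_idx: position * tr_ms / n_slices
            for position, slice_idx in enumerate(order)}


order = interleaved_slice_order(24)
onsets = slice_onsets_ms(24, 2000)
print(order)
print({s: round(onsets[s], 1) for s in (1, 2, 3, 24)})
```

Under these assumptions the even-numbered slices are acquired in the second half of each TR, so adjacent slices are sampled roughly one second apart.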

2.7. De-identification and quality control

All data were converted from raw DICOM format to NIfTI format using dcm2niix v1.0.20180622. During conversion, dcm2niix extracted the necessary scanning parameters from the DICOM header and saved this information in a JSON sidecar file accompanying each NIfTI image. Facial features were scrubbed from all SPGR images using pydeface in order to de-identify the anatomical images.

Scanning took place over the course of three separate days to include all tasks and prevent fatigue. Shifted acquisition dates are provided so that the relative order of, and interval between, scan dates can be determined. For each participant, acquisition dates and birthdates were shifted by a random offset of −365 to 0 days to protect participant privacy while retaining relative dates within participants. The years of shifted dates were changed to years prior to 1900 to clearly indicate that the dates were de-identified. Shifted acquisition dates are saved in the JSON sidecar file for each image under the field “ShiftedAquisitionDate”, and shifted birthdates are saved in the participant demographic file, participants.tsv, at the root level of the dataset. Task order within a scanning session can be determined using the shifted date and the “SeriesNumber” field in the sidecar file.
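Recovering the order in which a participant's runs were acquired amounts to sorting the sidecar metadata by shifted date and then by series number. The following sketch works on already-loaded sidecar dictionaries. The "task" key, the date values, and the series numbers are hypothetical, and the code assumes that shifted dates are stored as ISO-style YYYY-MM-DD strings, which sort chronologically as text; the actual date format should be checked in the dataset before use.

```python
def acquisition_order(sidecars):
    """Sort sidecar metadata by shifted acquisition date, then series number."""
    return sorted(
        sidecars,
        key=lambda meta: (meta["ShiftedAquisitionDate"], int(meta["SeriesNumber"])),
    )


# Hypothetical metadata for one participant (values invented for illustration).
example = [
    {"task": "run-B", "ShiftedAquisitionDate": "1850-03-09", "SeriesNumber": 3},
    {"task": "run-C", "ShiftedAquisitionDate": "1850-03-02", "SeriesNumber": 5},
    {"task": "run-A", "ShiftedAquisitionDate": "1850-03-02", "SeriesNumber": 4},
]

for meta in acquisition_order(example):
    print(meta["ShiftedAquisitionDate"], meta["SeriesNumber"], meta["task"])
```

Because dates were shifted by a single offset per participant, intervals between sessions within a participant are preserved, but dates should not be compared across participants.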

Because movement is common in pediatric populations, all functional images were reviewed for movement using the ArtRepair toolbox [15]. Functional images in which more than 25% of volumes showed volume-to-volume movement greater than 2 mm were removed from the dataset.
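The exclusion rule can be expressed as a simple threshold check. This sketch does not reproduce ArtRepair's movement estimation; it assumes that a volume-to-volume displacement in millimeters is already available for every volume of a run (with the first volume set to 0), and the function name and example values are hypothetical.

```python
def exceeds_motion_criterion(displacements_mm, threshold_mm=2.0, max_fraction=0.25):
    """Return True if more than max_fraction of volumes moved more than threshold_mm."""
    if not displacements_mm:
        raise ValueError("at least one volume is required")
    n_bad = sum(1 for d in displacements_mm if d > threshold_mm)
    return n_bad / len(displacements_mm) > max_fraction


# Hypothetical runs of 100 volumes each.
mostly_still = [0.1] * 90 + [3.0] * 10   # 10% of volumes above threshold
high_motion = [0.1] * 70 + [3.0] * 30    # 30% of volumes above threshold

print(exceeds_motion_criterion(mostly_still))
print(exceeds_motion_criterion(high_motion))
```

Both comparisons are strict, matching the wording "more than 25%" and "greater than 2 mm", so a run with exactly 25% of volumes above threshold would be retained under this reading.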

After facial features were removed from anatomical images and movement was checked in functional images, the quality of all data was quantified using MRIQC version 0.11.0 [16]. Output from MRIQC is provided in the derivatives folder at the root level of the dataset.

Acknowledgments

This project was supported by the National Institute of Child Health and Human Development [grant numbers HD042049, HD093547] awarded to James R. Booth. We thank Joran Bigio, Donald Bolger, Douglas Burman, Fan Cao, Tai-Li Chau, Nadia Cone, Dong Lu, and Jennifer Minas for their help with data collection and disseminating research on this dataset. We thank Sweta Ghatti for her help with data curation.

Footnotes

Supplementary data to this article can be found online at https://doi.org/10.1016/j.dib.2019.105091.

Contributor Information

Marisa N. Lytle, Email: marisa.n.lytle@vanderbilt.edu.

James R. Booth, Email: james.booth@vanderbilt.edu.

Conflict of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.


References

- 1. Lytle M.N., Bitan T., Booth J.R. 2019. Cross-Sectional Multidomain Lexical Processing, OpenNeuro, v1.0.0.

- 2. Lytle M.N., McNorgan C., Booth J.R. A longitudinal neuroimaging dataset on multisensory lexical processing in school-aged children. Sci. Data. 2019;6:329. doi: 10.1038/s41597-019-0338-5.

- 3. Suárez-Pellicioni M., Lytle M., Younger J.W., Booth J.R. A longitudinal neuroimaging dataset on arithmetic processing in 8- to 16-year old children. Sci. Data. 2019;6:190040. doi: 10.1038/sdata.2019.40.

- 4. Gorgolewski K.J., Auer T., Calhoun V.D., Craddock R.C., Das S., Duff E.P., Flandin G., Ghosh S.S., Glatard T., Halchenko Y.O., Handwerker D.A., Hanke M., Keator D., Li X., Michael Z., Maumet C., Nichols B.N., Nichols T.E., Pellman J., Poline J.-B., Rokem A., Schaefer G., Sochat V., Triplett W., Turner J.A., Varoquaux G., Poldrack R.A. The brain imaging data structure, a format for organizing and describing outputs of neuroimaging experiments. Sci. Data. 2016;3:160044. doi: 10.1038/sdata.2016.44.

- 5. Bruno R.M., Walker S.C. Comprehensive test of phonological processing (CTOPP). Diagnostique. 1999;24:69–82.

- 6. McKinlay A. Peabody picture vocabulary test – Third edition (PPVT-III). In: Goldstein S., Naglieri J.A., editors. Encyclopedia of Child Behavior and Development. Springer; Massachusetts: 2011.

- 7. Torgesen J.K., Rashotte C.A., Wagner R.K. Psychological Corporation; 1999. TOWRE: Test of Word Reading Efficiency.

- 8. Wechsler D. Harcourt Assessment; 1999. Wechsler Abbreviated Scale of Intelligence.

- 9. Woodcock R.W., McGrew K.S., Mather N., Schrank F. Riverside Publishing; 2001. Woodcock-Johnson III.

- 10. Wilkinson G.S. Jastak Association; Delaware: 1993. Wide Range Achievement Test–Revision 3.

- 11. Greve D.N. Optseq2 computer software. 2002. http://surfer.nmr.mgh.harvard.edu/optseq

- 12. Nelson D.L., McEvoy C.L., Schreiber T.A. The University of South Florida word association, rhyme, and word fragment norms. 1998. http://www.usf.edu/freeassociation/

- 13. Zeno S., Ivens S.H., Millard R.T., Duvvuri R. Touchstone Applied Science Associates; 1995. The Educator's Word Frequency Guide.

- 14. Plaut D.C., McClelland J.L., Seidenberg M.S., Patterson K. Understanding normal and impaired word reading: computational principles in quasi-regular domains. Psychol. Rev. 1996;103:56–115. doi: 10.1037/0033-295x.103.1.56.

- 15. Mazaika P.K., Whitfield-Gabrieli S., Reiss A.L. Artifact repair of fMRI data from high motion clinical subjects. Neuroimage. 2007;36:S142.

- 16. Esteban O., Birman D., Schaer M., Koyejo O.O., Poldrack R.A., Gorgolewski K.J. MRIQC: advancing the automatic prediction of image quality in MRI from unseen sites. PLoS One. 2017;12(9):e0184661. doi: 10.1371/journal.pone.0184661.
