Abstract

From psychology to economics, there has been substantial interest in how costs (e.g., delay, risk) are represented asymmetrically during decision-making when attempting to gain reward or avoid punishment. For example, in decision-making under risk, individuals show a tendency to prefer to avoid punishment rather than to acquire the equivalent reward (loss aversion). Although the cost of physical effort has recently received significant attention, it remains unclear whether loss aversion exists during effort-based decision-making. On the one hand, loss aversion may be hardwired due to asymmetric evolutionary pressure on losses and gains and therefore exists across decision-making contexts. On the other hand, distinct brain regions are involved with different decision costs, making it questionable whether similar asymmetries exist. Here, we demonstrate that young healthy human participants (females, 16; males, 6) exhibit loss aversion during effort-based decision-making by exerting more physical effort to avoid punishment than to gain a same-size reward. Next, we show that medicated Parkinson's disease (PD) patients (females, 9; males, 9) show a reduction in loss aversion compared with age-matched control subjects (females, 11; males, 9). Behavioral and computational analysis revealed that people with PD exerted similar physical effort in return for a reward but were less willing to produce effort to avoid punishment. Therefore, loss aversion is present during effort-based decision-making and can be modulated by altered dopaminergic state. This finding could have important implications for our understanding of clinical disorders that show a reduced willingness to exert effort in the pursuit of reward.

SIGNIFICANCE STATEMENT Loss aversion—preferring to avoid punishment rather than to acquire equivalent reward—is an important concept in decision-making under risk. However, little is known about whether loss aversion also exists during decisions where the cost is physical effort. This is surprising given that motor cost shapes human behavior, and a reduced willingness to exert effort is a characteristic of many clinical disorders. Here, we show that healthy human individuals exert more effort to minimize punishment than to maximize reward (loss aversion). We also demonstrate that medicated Parkinson's disease patients exert similar effort to gain reward but less effort to avoid punishment when compared with healthy age-matched control subjects. This indicates that dopamine-dependent loss aversion is crucial for explaining effort-based decision-making.

Keywords: reward, punishment, motor control, Parkinson's disease

Introduction

There has been substantial interest in how a cost, such as delay or reward uncertainty, discounts the utility, or “value,” that an individual associates with the beneficial outcome of a decision (Rachlin and Green, 1972; Kahneman and Tversky, 1979; Stephens and Krebs, 1986; Bautista et al., 2001; Stephens, 2001; Green and Myerson, 2004; Stevens et al., 2005; Daw and Doya, 2006; Rachlin, 2006; Fehr and Rangel, 2011). One cost that has recently received significant attention is physical effort (effort-based decision-making; Chong et al., 2015; Klein-Flügge et al., 2016; Le Bouc et al., 2016; Shadmehr et al., 2016; Skvortsova et al., 2017). Previous work has investigated the computational, neural, and neurochemical mechanisms involved when individuals evaluate rewards that are associated with physical effort (Prévost et al., 2010; Kurniawan et al., 2011; Burke et al., 2013; Hauser et al., 2017); notably, a diminished willingness to exert effort is a prevalent characteristic of many clinical disorders, such as Parkinson's disease (PD; Baraduc et al., 2013; Chong et al., 2015).

Prior work has examined how other costs, such as delay and uncertainty, are represented differently when attempting to gain reward or avoid punishment. For example, in decision-making under risk, individuals show a tendency to prefer to avoid punishment rather than to acquire the equivalent reward, a phenomenon called loss aversion (Kahneman and Tversky, 1979; Tversky and Kahneman, 1992). Surprisingly, it remains unclear whether people also exhibit loss aversion during effort-based decision-making. On the one hand, loss aversion may be hardwired due to asymmetric evolutionary pressure on losses and gains (Kahneman and Tversky, 1979; Tversky and Kahneman, 1992; Tom et al., 2007) and thus should be observed in any cost-benefit decision-making context. On the other hand, distinct brain regions are involved in decision-making with different costs (Prévost et al., 2010; Bailey et al., 2016; Hauser et al., 2017; Galaro et al., 2019), making it questionable whether similar asymmetries should exist. For example, whereas the cingulate cortex is implicated in effort-based decision-making, other brain areas, such as the ventromedial prefrontal cortex, are thought to play a more important role in decision-making under risk (Klein-Flügge et al., 2016). Although several studies have attempted to address this question, they either do not directly examine loss aversion (Galaro et al., 2019), do not involve the execution of the effortful action (Nishiyama, 2016), or confound the cost of effort with the cost of temporal delay (Porat et al., 2014).

The neurotransmitter dopamine appears to be crucial for effort-based decision-making. For example, people with PD exhibit a reduced willingness to exert effort in the pursuit of reward when off dopaminergic medication, and medication restores this willingness (Chong et al., 2015; Le Bouc et al., 2016; Skvortsova et al., 2017). Interestingly, during decision-making under risk and reinforcement learning, PD patients on dopaminergic medication display an enhanced response to reward but a reduced sensitivity to punishment (Frank et al., 2004; Frank, 2005; Collins and Frank, 2014). Although this suggests that dopamine availability might shape loss aversion across contexts (Clark and Dagher, 2014; Timmer et al., 2017) and, in particular, that medicated PD patients should show reduced loss aversion, the role of dopamine during effort-based decision-making within a reward or punishment context has not been directly investigated.

In this article, we demonstrate that young healthy participants exhibit loss aversion during effort-based decision-making; individuals were willing to exert more physical effort to minimize punishment than to maximize reward. In addition, behavioral and computational analysis revealed that medicated PD patients showed a reduction in loss aversion compared with age-matched control subjects. Specifically, although patients exerted similar physical effort in return for reward, they were less willing to produce effort to avoid punishment. Therefore, loss aversion is present during effort-based decision-making, and this asymmetry is modulated by dopaminergic state.

Materials and Methods

Participants

Ethics statement.

The study was approved by the Ethical Review Committee of the University of Birmingham and was conducted in accordance with the Declaration of Helsinki. Written informed consent was obtained from all participants.

Young healthy participants.

Twenty-two young healthy participants (age: 23.1 ± 4.56 years; 16 females) were recruited via online advertising and received monetary compensation upon completion of the study. They were naive to the task, had normal or corrected-to-normal vision, and reported no history of neurological conditions.

PD patients and healthy age-matched control subjects.

Eighteen PD patients were recruited from a local participant pool through Parkinson's UK. They were on their normal schedule of medication during testing [levodopa-containing compound: n = 7, dopamine agonists (including pramipexole and ropinirole): n = 6, or a combination of both: n = 5]. Clinical severity was assessed with the Unified Parkinson's Disease Rating Scale (UPDRS; Table 1; Fahn and Elton, 1987). Twenty age-matched healthy control subjects (HCs) were also recruited via a local participant pool. All patients/participants had a Mini-Mental State Examination (Folstein et al., 1975) score >25 (Table 1). In addition, the BIS/BAS scales (Behavioral Inhibition System/Behavioral Activation System; Carver and White, 1994) were used to measure participants' motivation to avoid aversive outcomes and to approach goal-oriented outcomes. The DASS-21 (Depression, Anxiety and Stress Scale - 21 Items; Antony et al., 1998) was used to measure participants' emotional states of depression, anxiety, and stress. Table 1 summarizes the demographics of the patients and age-matched control subjects. Both groups received monetary compensation upon completion of the study.

Table 1.

Demographics for PD and HC groups

| | PD | HC | Group difference |
|---|---|---|---|
| N | 18 | 20 | |
| Age (years) | 66 ± 7.68 | 69 ± 4.54 | t(36) = 1.30, p = 0.20 |
| Gender (M:F) | 9:9 | 9:11 | χ²(1) = 0.001, p = 0.97 |
| MMSE | 28.9 ± 1.5 | 29.5 ± 0.85 | t(36) = 1.61, p = 0.12 |
| BIS/BAS | | | |
| BIS | 20.22 ± 2.75 | 20.18 ± 2.38 | t(36) = −0.05, p = 0.96 |
| Reward responsiveness | 9.11 ± 2.91 | 8.95 ± 1.58 | t(36) = −0.21, p = 0.83 |
| Drive | 9.77 ± 3.07 | 9.91 ± 2.22 | t(36) = 0.16, p = 0.88 |
| Fun seeking | 9.66 ± 2.45 | 8.72 ± 2.21 | t(36) = −1.27, p = 0.21 |
| DASS21ᵃ | | | |
| Depression | 3.45 ± 3.76 | 4.93 ± 4.94 | t(36) = −1.03, p = 0.30 |
| Anxiety | 1.81 ± 2.75 | 6.13 ± 4.03 | t(36) = −3.87, p < 0.001 |
| Stress | 5.90 ± 5.53 | 6.93 ± 5.00 | t(33) = −0.57, p = 0.46 |
| UPDRSᵇ | 23.61 ± 18.88 | N/A | |
| Hoehn and Yahr stage | 1.85 ± 0.60 | N/A | |
| Disease duration (months) | 39.22 ± 30.1 | N/A | |
| Duration since last dose (hours) | 2.08 ± 0.90 | N/A | |

Values are the mean ± SD. MMSE, Mini-Mental State Examination (30-point questionnaire used extensively in clinical and research settings to measure cognitive impairment; Folstein et al., 1975); BIS/BAS, behavioral inhibition system/behavioral activation system (Carver and White, 1994); DASS21, depression (normal: 0–9), anxiety (normal: 0–7), and stress (normal: 0–14) scale (Antony et al., 1998); N/A, not applicable.

ᵃThree PD patients chose not to finish this questionnaire.

Experimental design

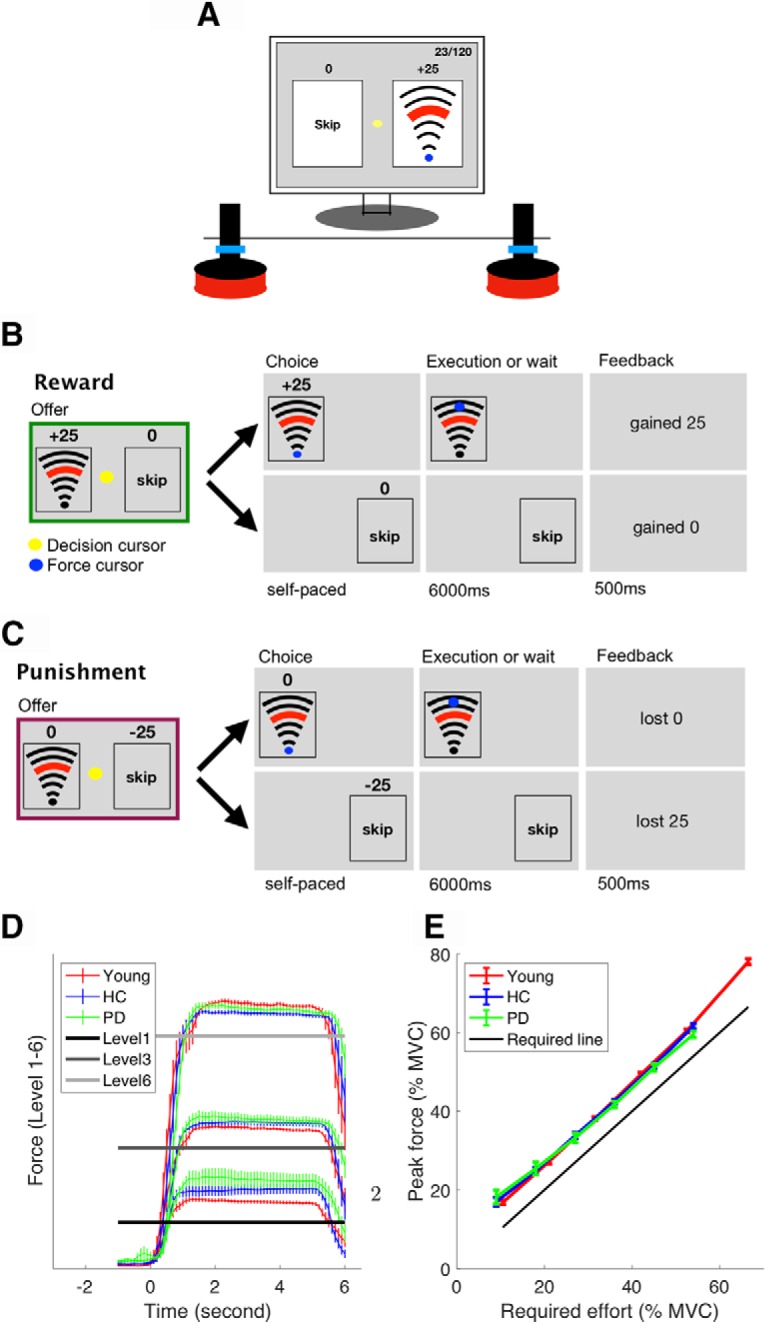

Experimental setup.

Participants were seated in front of a computer (Fig. 1A) running a task implemented in Psychtoolbox (http://Psychtoolbox.org) and MATLAB (MathWorks). Two custom-built vertical handles were positioned on a desk in front of the participants, each of which housed a force transducer sampled at 200 Hz (https://www.ati-ia.com). The force produced on each handle enabled participants to independently control two cursors on the computer screen (Fig. 1A). During the main experiment, one handle was assigned as the decision-making handle; participants grasped this handle and produced a left- or right-directed force to move the decision cursor into the appropriate option box to indicate their choice. The other handle was designated as the force execution handle; participants rested their index finger next to the bottom of the handle and produced a force by pressing their index finger inward on the handle (i.e., push left for the right index finger, push right for the left index finger). As the lateral force recorded by the transducers was sensitive to the height at which the force was applied to the handle, participants were asked to keep their index finger below a protective ring placed 1.5 cm above the bottom of the handle (Fig. 1A). This ensured that the finger position on the handle did not change across the experiment. In addition, to maintain a consistent arm position and minimize the use of alternative proximal muscles, participants' forearms were firmly strapped to the table at the wrist and elbow.

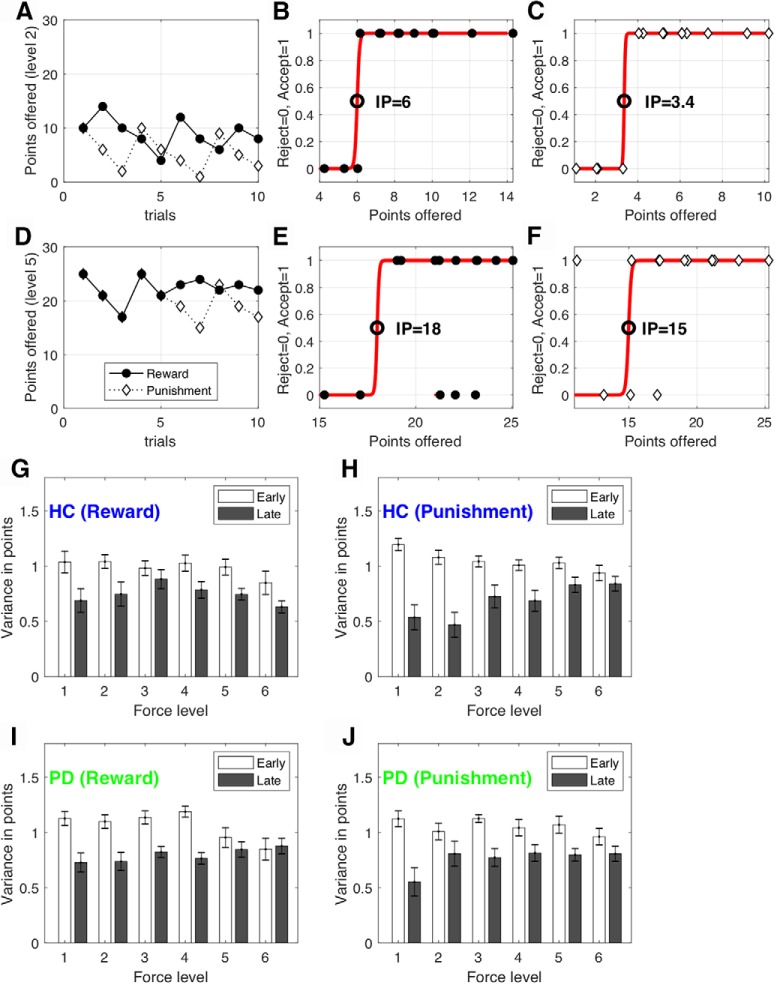

Figure 1.

Experimental setup. A, Experimental equipment. B, C, Typical reward (B) and punishment (C) trials. D, Average force trace across participants on Levels 1, 3, and 6. Zero seconds (x-axis) is the moment at which the participants indicated their choice and they were allowed to start exerting the force. Error bars represent SEM across participants. E, Young participants (red), PD patients (green), and healthy age-matched control subjects (blue) all modulated their force appropriately. The solid black line indicates the minimum force required. Error bars represent SEM across participants.

Procedure.

Before the main effort-based decision-making task, participants were asked to produce a maximal voluntary contraction (MVC) of their first dorsal interosseous muscle (isometric contraction of the index finger against the handle) for 3 s. This was repeated three times, and the average maximum force was taken as their MVC. For the young healthy participants, the index finger of the dominant hand was chosen to produce the force. For people with PD, the index finger of the most affected side was chosen to produce the force (dominant hand: n = 11; nondominant hand: n = 7). For the HCs, we chose a similar ratio of dominant hand and nondominant hand as their force producing hand (dominant hand: n = 12; nondominant hand: n = 8). Following the MVC, participants had 12 trials to practice the six force levels that were used in the main decision-making task (see the Effort-based decision-making task section for details). The force levels were shown to participants as a set of arcs (Fig. 1A).

The effort-based decision-making task consisted of two conditions (reward and punishment), the order of which was counterbalanced across participants. For both PD and HC groups, each condition (reward or punishment) consisted of 10 epochs of six trials (60 trials). Each epoch included one trial of each of the six force levels in a randomized order, ensuring an even distribution of force levels. At the beginning of each condition, the score started at 0. In the reward condition, the total score was positive and participants were asked to maximize the points they gained. In the punishment condition, the total score was negative and participants were asked to minimize the points they lost. Following the effort-based decision-making task, participants were again asked to produce three consecutive 3 s MVCs. They were instructed that these had to be >90% of the MVC they produced at the beginning of the experiment. Importantly, participants were made aware of this requirement at the beginning of the study (after the first MVC and before the main effort-based decision-making task). This requirement was intended to discourage participants from becoming overly fatigued by continually choosing the effortful (high reward, low punishment) option throughout. In addition to the fixed monetary compensation for participating in the study (£15; ∼90 min), participants were told at the beginning of the experiment that they could be entered into a lottery to win an extra £100 if their performance (total score) was among the top five of participants (one lottery per group) and they were able to maintain 90% of their MVC at the end of the experiment. Therefore, all participants were encouraged to accumulate as many points as possible (and lose as few points as possible) while avoiding unnecessary effort.
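The trial structure described above can be sketched in Python (the original task ran in MATLAB/Psychtoolbox, so this is only an illustration). It assumes force levels are coded 1 to 6 and that each epoch is an independent random permutation of those levels; the alternating rule in the hypothetical `counterbalanced_order` helper is an assumption, since the text states only that condition order was counterbalanced.

```python
import random

FORCE_LEVELS = [1, 2, 3, 4, 5, 6]  # codes for the six force levels
N_EPOCHS = 10                      # 10 epochs x 6 trials = 60 trials per condition


def make_condition_schedule(seed=None):
    """Return 60 force-level codes: one randomized epoch of all six levels at a time."""
    rng = random.Random(seed)
    schedule = []
    for _ in range(N_EPOCHS):
        epoch = FORCE_LEVELS[:]  # copy so the module-level list is never shuffled
        rng.shuffle(epoch)       # random order of levels within this epoch
        schedule.extend(epoch)
    return schedule


def counterbalanced_order(participant_index):
    """Hypothetical counterbalancing rule: alternate which condition comes first."""
    if participant_index % 2 == 0:
        return ["reward", "punishment"]
    return ["punishment", "reward"]
```

Because every epoch contains each level exactly once, each force level is offered 10 times per condition regardless of the random seed.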

Effort-based decision-making task.

The task was adapted from classic effort-based decision-making paradigms (Bonnelle et al., 2015, 2016; Chong et al., 2016; Skvortsova et al., 2017; Le Heron et al., 2018). There were two trial types, reward and punishment (Fig. 1B,C), and the task consisted of one block of each. On a reward trial (Fig. 1B), participants chose between executing a certain force level in return for reward (gaining points) and skipping the trial in return for 0 points. On a punishment trial (Fig. 1C), participants chose between executing a certain force level in return for 0 points and skipping the trial in return for being punished (losing points).

On each trial, participants were presented with a combination of points and a force level, expressed as a percentage of their MVC (offer phase). For the young group, the force was one of six levels: 11, 21, 32, 42, 53, and 67% of MVC. For both older age groups (PD and HC), these six levels were 9, 18, 27, 36, 45, and 54% of MVC. The force levels used for the older age groups were lower because a pilot study revealed that older participants fatigued significantly faster than younger participants. At the beginning of each condition (reward, punishment), these six force levels were paired with 5, 10, 15, 20, 25, and 30 points, respectively. The initial pairings were selected based on pilot experiments. Unbeknownst to participants, the points associated with each force level were then adjusted on a trial-by-trial basis using an adaptive staircase algorithm (see the Adaptive staircase algorithm section for details). Following the offer phase, participants indicated their choice by exerting a force on the decision handle, which moved the yellow decision cursor (Fig. 1A) from the middle of the screen into one of the option boxes (execute force or skip force). As soon as participants indicated their choice, the unchosen option disappeared. If the force option was chosen, participants were required to execute the force on the handle, which was represented by the blue force cursor moving from the start position toward a target line and staying above the target line for 4 s, at which point they heard a cash register sound (“ka-ching”) through headphones. If they failed to exert the required force, the trial was repeated. The trial was always terminated 6.5 s after their choice. This meant that participants had to wait for 6 s if they chose to skip the force, or they had to produce the required force within 6 s. We matched the duration of force-execution and skip trials so that effort discounting was not confounded with delay discounting (Loewenstein et al., 2002; Doyle, 2010).
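As a minimal sketch of the execution rule, the following Python code converts MVC proportions into force targets and checks whether a force trace sampled at 200 Hz stays above the target for 4 s within the 6 s window. Treating the hold as a single uninterrupted run, and using the 6 s window rather than the 6.5 s trial duration, are assumptions; `target_forces` and `hold_succeeded` are hypothetical helper names.

```python
SAMPLE_RATE_HZ = 200  # transducer sampling rate reported in the text
HOLD_S = 4.0          # time the force must stay above the target line
WINDOW_S = 6.0        # time available after the choice to complete the hold

YOUNG_LEVELS = [0.11, 0.21, 0.32, 0.42, 0.53, 0.67]  # proportion of MVC
OLDER_LEVELS = [0.09, 0.18, 0.27, 0.36, 0.45, 0.54]  # PD and HC groups


def target_forces(mvc_newtons, levels):
    """Convert proportions of MVC into absolute force targets (N)."""
    return [p * mvc_newtons for p in levels]


def hold_succeeded(force_trace, target):
    """True if the force stays above target for HOLD_S continuously within WINDOW_S.

    Assumption: any sample at or below the target resets the hold counter.
    """
    needed = int(round(HOLD_S * SAMPLE_RATE_HZ))   # 800 samples
    window = int(round(WINDOW_S * SAMPLE_RATE_HZ)) # 1200 samples
    run = 0
    for f in force_trace[:window]:
        run = run + 1 if f > target else 0
        if run >= needed:
            return True
    return False
```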

Adaptive staircase algorithm

A staircase procedure was performed independently for each of the six force levels (Fig. 2A,D). Specifically, for each force level, the points offered were increased or decreased using an initial step size of eight, depending on whether participants rejected (skipped) or accepted the opportunity to execute the force to receive (or avoid losing) those points, respectively. The step size was doubled if participants rejected or accepted the same force level three times in a row, and halved if participants reversed their decision on that force level (i.e., an acceptance followed by a rejection or vice versa; Taylor and Creelman, 2005). As the staircase procedure was performed independently for each of the six force levels, it allowed us to determine the point of subjective indifference at which participants assigned equal value to acceptance and rejection for each force level. Importantly, the points and force combinations offered in the reward and punishment conditions were governed by the same adaptive procedure, the only difference being whether the points were framed as rewards or punishments (Fig. 1B,C; Tversky and Kahneman, 1981).
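One reading of the staircase rule is sketched below in Python. Several details are assumptions not stated in the text: the step size is updated before the offer moves, the run counter restarts after each doubling, the step never falls below one point, and offers are floored at zero. The same update would apply in the punishment condition, where `points` is the magnitude of the potential loss; the `Staircase` class itself is hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Staircase:
    """Independent points staircase for one force level (illustrative sketch)."""
    points: float                       # current offer for this force level
    step: float = 8.0                   # initial step size from the text
    min_step: float = 1.0               # assumption: step never drops below 1 point
    last_choice: Optional[bool] = None  # previous decision on this force level
    run_length: int = 0                 # consecutive identical decisions

    def update(self, accepted: bool) -> float:
        """Apply one choice and return the next offer for this force level."""
        if self.last_choice is not None and accepted != self.last_choice:
            # Reversal (accept after reject, or vice versa): halve the step.
            self.step = max(self.min_step, self.step / 2)
            self.run_length = 1
        else:
            self.run_length += 1
            if self.run_length == 3:
                # Three identical decisions in a row: double the step.
                self.step *= 2
                self.run_length = 0  # assumption: count restarts after doubling
        self.last_choice = accepted
        # Accepting makes the next offer less generous; rejecting makes it more generous.
        delta = -self.step if accepted else self.step
        self.points = max(0.0, self.points + delta)  # assumption: offers never go negative
        return self.points
```

Each of the six force levels would get its own instance, initialized at 5, 10, 15, 20, 25, or 30 points.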

Figure 2.

Procedure for determining the effort indifference point. Exemplary choices and fits are shown for one participant and two effort levels. A, D, The points offered for each force level [Level 2 (A); Level 5 (D)]. Unbeknownst to participants, the points associated with each force level were adjusted on a trial-by-trial basis using an adaptive staircase algorithm. Specifically, the points offered were increased or decreased using an initial step size of eight, depending on whether participants rejected (skipped) or accepted the opportunity to execute the force to receive (or avoid losing) those points. B, C, E, F, A sigmoid function (red line) was fitted separately to the choices generated at each effort level (y-axis: 0 = reject force, 1 = accept force) given the points (reward or punishment) offered for this force level (x-axis). The subjective indifference point (IP; circle) was defined as the magnitude at which the sigmoid crossed y = 0.5. G–J, The variance of the points offered for each force level within the first and second halves of each condition for the HC group [reward (G), punishment (H)] and PD group [reward (I), punishment (J)]. Error bars represent SEM across participants.

One concern is that the adaptive staircase procedure might not stabilize because of fatigue (Meyniel et al., 2013; Massar et al., 2018; Müller and Apps, 2019). A successful staircase procedure would cause the points offered to fluctuate around a participant's indifference point (IP; see Data and statistical analysis) by the end of each condition (Fig. 2). For example, if the initial points offered are lower/higher than a participant's IP, then the participant should initially reject/accept the offer until the points offered approach their IP. The points offered should then remain stable around the IP, so that the variance of the points offered decreases from early to late trials (Fig. 2). However, if participants experience fatigue, they would likely begin to reject offers that they had accepted in earlier trials; this would keep the variance of the points offered high in later trials and lead to an unstable IP. To test for this possibility, we compared the variance in points offered (Fig. 2A,D) for each force level between the first and second halves of the trials within each condition. A four-way mixed ANOVA examined the effect of (1) time (first vs second half), (2) force level (six levels), (3) condition (reward vs punishment), and (4) group (HC vs PD) on the variance of points offered (Fig. 2G–J).
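The fatigue check compares offer variability between the two halves of each condition. A minimal Python sketch of the per-level variance computation follows; it assumes the sample variance (n − 1 denominator) and an even split of each force level's offer sequence, and it does not reproduce the four-way mixed ANOVA. The function names are hypothetical.

```python
from statistics import variance


def half_variances(offers):
    """Sample variance of the offers in the first and second halves of a sequence."""
    mid = len(offers) // 2
    return variance(offers[:mid]), variance(offers[mid:])


def variances_by_level(offers_by_level):
    """Map each force level to (first-half variance, second-half variance)."""
    return {level: half_variances(seq) for level, seq in offers_by_level.items()}


def n_levels_stabilized(offers_by_level):
    """Count force levels whose offer variance dropped from the first to the second half."""
    return sum(
        1 for first, second in variances_by_level(offers_by_level).values() if second < first
    )
```

A staircase that settles around the IP should show `second < first`; persistent late-trial variability would point to an unstable IP.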

Data and statistical analysis

Data were analyzed with MATLAB using custom scripts. The data and code are available at https://osf.io/hw4rk/. Our first question was whether young healthy participants expressed loss aversion during effort-based decision-making (i.e., a preference to exert more physical effort to minimize punishment than to maximize reward). For each of the six force levels, we estimated the number of points at which the probability of accepting the force option was 50% (effort IP). Specifically, for each force level, a logistic function was fitted to the points offered and the binary choices made by participants (Fig. 2). As shown in Figure 2B, the effort IP was then defined as the point magnitude (x-axis) at which the sigmoid crossed y = 0.5.
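The IP estimation can be sketched as a two-parameter logistic regression in plain Python. This is not the authors' MATLAB code: it fits by Newton-Raphson on standardized points and adds a small ridge penalty (an assumption) so that estimates stay finite if a participant's choices are perfectly separable. The IP is where b0 + b1·points = 0, that is, −b0/b1.

```python
import math


def _sigmoid(u):
    """Numerically stable logistic function."""
    if u >= 0:
        return 1.0 / (1.0 + math.exp(-u))
    e = math.exp(u)
    return e / (1.0 + e)


def fit_logistic(points, choices, ridge=1e-3, iters=100, tol=1e-10):
    """Fit P(accept) = sigmoid(a + b * z), z = standardized points, by Newton-Raphson.

    Returns (intercept, slope) on the original points scale.
    """
    n = len(points)
    mean = sum(points) / n
    sd = math.sqrt(sum((x - mean) ** 2 for x in points) / n) or 1.0
    z = [(x - mean) / sd for x in points]
    a = b = 0.0
    for _ in range(iters):
        ga, gb = -ridge * a, -ridge * b      # gradient of penalized log-likelihood
        haa, hab, hbb = ridge, 0.0, ridge    # negative Hessian entries
        for zi, yi in zip(z, choices):
            p = _sigmoid(a + b * zi)
            w = p * (1.0 - p)
            ga += yi - p
            gb += (yi - p) * zi
            haa += w
            hab += w * zi
            hbb += w * zi * zi
        det = haa * hbb - hab * hab
        da = (hbb * ga - hab * gb) / det     # Newton step: (negative Hessian)^-1 @ gradient
        db = (haa * gb - hab * ga) / det
        a, b = a + da, b + db
        if abs(da) + abs(db) < tol:
            break
    # a + b * (x - mean) / sd  ==  (a - b * mean / sd) + (b / sd) * x
    return a - b * mean / sd, b / sd


def indifference_point(points, choices):
    """Points value at which the fitted acceptance probability crosses 0.5."""
    b0, b1 = fit_logistic(points, choices)
    return -b0 / b1
```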

An average effort IP (across the six force levels) was then calculated for each participant in the reward and punishment conditions (referred to as reward IP and punishment IP, respectively), indicating an individual's tendency to produce force in each condition. Each participant's loss aversion index was then defined as the ratio of reward IP to punishment IP, with values greater than one indicating loss aversion. Because the data were not normally distributed, a Wilcoxon signed-rank test (signrank function in MATLAB) was used to test whether the loss aversion index for young healthy participants was significantly greater than one. To assess effort-based loss aversion in PD patients and HCs, we compared their loss aversion indices using nonparametric Mann–Whitney U tests for independent samples (ranksum function in MATLAB). To examine the loss aversion differences in more detail, a two-way mixed ANOVA compared the average effort IP across groups (PD vs HC) and conditions (reward vs punishment). To address nonlinearity and heteroscedasticity (unequal variance), the effort IP was log transformed.
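The loss aversion index and the one-sample signed-rank test against 1 can be sketched as follows. The `signed_rank_z` helper is a hypothetical normal-approximation implementation for illustration; it omits tie and continuity corrections, so its z may not match MATLAB's `signrank` output exactly.

```python
import math


def loss_aversion_index(reward_ips, punishment_ips):
    """Mean reward IP divided by mean punishment IP (each averaged over force levels).

    Values > 1 mean more effort is accepted to avoid losing points than to gain them.
    """
    reward_ip = sum(reward_ips) / len(reward_ips)
    punishment_ip = sum(punishment_ips) / len(punishment_ips)
    return reward_ip / punishment_ip


def signed_rank_z(values, mu=1.0):
    """Wilcoxon W+ and a normal-approximation z for H0: median(values) == mu.

    Zero differences are dropped; tied |differences| receive average ranks.
    """
    diffs = [v - mu for v in values if v != mu]
    n = len(diffs)
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean_w = n * (n + 1) / 4
    sd_w = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    return w_plus, (w_plus - mean_w) / sd_w
```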

Computational modeling of choice

Decision-making behavior was modeled using an effort-based discount model that quantifies how the utility of obtaining reward or avoiding punishment decreases as the physical effort associated with it becomes progressively more demanding. Such models have been extensively used to examine the behavioral and neural basis of effort-based decision-making (Botvinick et al., 2009; Prévost et al., 2010; Hartmann et al., 2013; Klein-Flügge et al., 2015; Białaszek et al., 2017; Lockwood et al., 2017). The key aim of the modeling analysis was to quantify each participant's willingness to invest effort for a beneficial outcome within a single parameter (i.e., the effort-discounting parameter). This enabled us to compare decision-making behavior between the HC and PD groups in the reward and punishment conditions in a relatively simple manner (Hartmann et al., 2013; Chong et al., 2017; Lockwood et al., 2017).

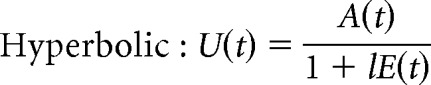

We fitted participant responses using linear, parabolic, and hyperbolic effort-discounting functions, which are often used to capture effort discounting (Hartmann et al., 2013; Klein-Flügge et al., 2015; Białaszek et al., 2017; Lockwood et al., 2017; McGuigan et al., 2019). The shape of these functions reflects how increasing costs (i.e., effort) discount or “devalue” the associated benefits (i.e., the number of points gained or avoided losing):

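$$
\begin{aligned}
\text{Linear:}\quad & U(t) = A(t) - l\,E(t)\\
\text{Parabolic:}\quad & U(t) = A(t) - l\,E(t)^{2}\\
\text{Hyperbolic:}\quad & U(t) = \frac{A(t)}{1 + l\,E(t)}
\end{aligned}
$$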

The total utility, U(t), of the offer on trial t is a function of (1) E(t), the physical effort required (expressed as a proportion of the MVC) to gain a reward or to avoid a punishment; (2) A(t), the reward/punishment amplitude (i.e., the number of points offered); and (3) l, the discounting parameter. The parameter l reflects the steepness of the effort-discounting function, with a higher value indicating that the participant required a greater reward to perform the same level of effort.
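Assuming the standard forms of the three discount functions used in this literature (U = A − lE, U = A − lE², and U = A/(1 + lE)), the utility of an offer can be sketched in Python as follows; the function name and argument order are illustrative only.

```python
def utility(amplitude, effort, l, form):
    """Subjective utility of an offer under one of three assumed discount forms.

    amplitude: points gained (reward) or avoided (punishment), A(t)
    effort:    required force as a proportion of MVC, E(t)
    l:         discounting parameter (larger = steeper discounting)
    """
    if form == "linear":
        return amplitude - l * effort
    if form == "parabolic":
        return amplitude - l * effort ** 2
    if form == "hyperbolic":
        return amplitude / (1.0 + l * effort)
    raise ValueError(f"unknown form: {form}")
```

For example, with A = 10 points, E = 0.5 of MVC, and l = 4, the parabolic form discounts less than the linear form at this effort level (9 vs 8 points), because squaring a proportion below 1 shrinks it.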

The probability of choosing the effort option at trial t is given by the softmax function:

$$
P(t) = \frac{1}{1 + e^{-\beta\,U(t)}}
$$

where U(t) is the total utility of the offer on trial t, and β accounts for stochasticity in participant choices. Let y(t) be the participant choice on trial t (skip = 0; accept effort = 1). The parameters (l and β) that maximize the likelihood function over N trials were found for each participant, as follows:

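$$
\log \mathcal{L}(l, \beta) = \sum_{t=1}^{N} \Big( y(t)\,\log P(t) + \big[1 - y(t)\big]\,\log\big[1 - P(t)\big] \Big)
$$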

where N is the number of trials for each participant (reward and punishment conditions combined; N = 120). The parameters that maximized this likelihood were found for each participant using the MATLAB function fmincon (minimizing the negative log likelihood). To avoid local minima, the MATLAB function MultiStart was used with 1,000 start points.
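A minimal Python sketch of the likelihood is shown below, assuming the skip option has zero utility so that the softmax reduces to a logistic function of βU(t). For brevity, it replaces fmincon with MultiStart by an exhaustive grid search over (l, β); this is a swapped-in technique for illustration, not the procedure used in the study, and all function names are hypothetical.

```python
import math


def p_accept(u, beta):
    """Softmax probability of the effort option when the skip option has utility 0."""
    x = beta * u
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def neg_log_likelihood(params, trials, form="parabolic"):
    """trials: iterable of (amplitude, effort, choice); choice 1 = accept, 0 = skip."""
    l, beta = params
    eps = 1e-12  # guards against log(0)
    nll = 0.0
    for amplitude, effort, choice in trials:
        if form == "parabolic":
            u = amplitude - l * effort ** 2
        elif form == "linear":
            u = amplitude - l * effort
        else:  # hyperbolic
            u = amplitude / (1.0 + l * effort)
        p = p_accept(u, beta)
        nll -= choice * math.log(p + eps) + (1 - choice) * math.log(1 - p + eps)
    return nll


def grid_fit(trials, l_grid, beta_grid, form="parabolic"):
    """Crude stand-in for a multistart optimizer: return (nll, l, beta) of the best grid point."""
    best = None
    for l in l_grid:
        for beta in beta_grid:
            nll = neg_log_likelihood((l, beta), trials, form)
            if best is None or nll < best[0]:
                best = (nll, l, beta)
    return best
```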

For each type of discount function (linear, hyperbolic, and parabolic), we explored both a single discounting parameter shared by the reward and punishment conditions and separate discounting parameters for reward and punishment, giving a total of six models. Models were compared using the Bayesian information criterion (BIC; Schwarz, 1978). Specifically, for each model, the BIC summed over all participants was compared (lower values indicate a better fit; Stephan et al., 2009; Rigoux et al., 2014). Such aggregation of BIC across participants corresponds to a fixed-effects analysis (Stephan et al., 2009). To account for the possibility that the best model differs between participants, as in a random-effects analysis (Stephan et al., 2009), we also compared individual BIC values using Friedman's test. To examine the effect of group (HC vs PD) and condition (reward vs punishment) on the discount parameter, a two-way mixed ANOVA was used. The normality assumption (the discount parameter in each cell) was not violated, as assessed by Shapiro–Wilk's test of normality (p > 0.05). In addition, variances (p > 0.05) and covariances (p > 0.001) were homogeneous, as assessed by Levene's test and Box's M test, respectively.
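The model comparison can be sketched in Python as below. It assumes BIC = 2·NLL + k·ln N with N = 120 trials per participant, and that the separate-parameter models have one extra parameter (l+ and l− plus a shared β). The hypothetical `mean_ranks` helper gives per-model mean ranks like those reported alongside the Friedman test, but ignores ties and does not compute the test statistic itself.

```python
import math


def bic(nll, n_params, n_trials):
    """Bayesian information criterion from a minimized negative log-likelihood."""
    return 2.0 * nll + n_params * math.log(n_trials)


def summed_bic(nll_by_participant, n_params, n_trials=120):
    """Fixed-effects comparison: BIC summed over participants (lower is better)."""
    return sum(bic(nll, n_params, n_trials) for nll in nll_by_participant)


def best_model(summed):
    """Label of the model with the lowest summed BIC."""
    return min(summed, key=summed.get)


def mean_ranks(bic_table):
    """bic_table: list of per-participant dicts {model: BIC}; returns {model: mean rank}."""
    models = list(bic_table[0])
    totals = {m: 0.0 for m in models}
    for row in bic_table:
        # Rank 1 = lowest BIC for this participant (ties broken by model order).
        for rank, m in enumerate(sorted(models, key=lambda m: row[m]), start=1):
            totals[m] += rank
    return {m: totals[m] / len(bic_table) for m in models}
```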

Results

Evidence for loss aversion in young healthy participants

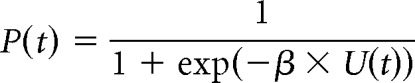

Our first question was whether young healthy participants expressed loss aversion during effort-based decision-making. To examine this, we first assessed how the effort IP (Fig. 2) was affected by the force level in the reward and punishment conditions. As expected, the effort IP became progressively larger as the force level became more demanding, indicating sensitivity to effort in both conditions (Fig. 3A). For each participant, an average effort IP was obtained across force levels for the reward (reward IP) and punishment (punishment IP) conditions, and the loss aversion index was defined as the ratio between these values (>1 = loss aversion; Fig. 3B). The loss aversion index was significantly greater than one (z = 3.65, p < 0.001, median = 1.369; Fig. 3B), indicating clear loss aversion in young healthy participants during effort-based decision-making.

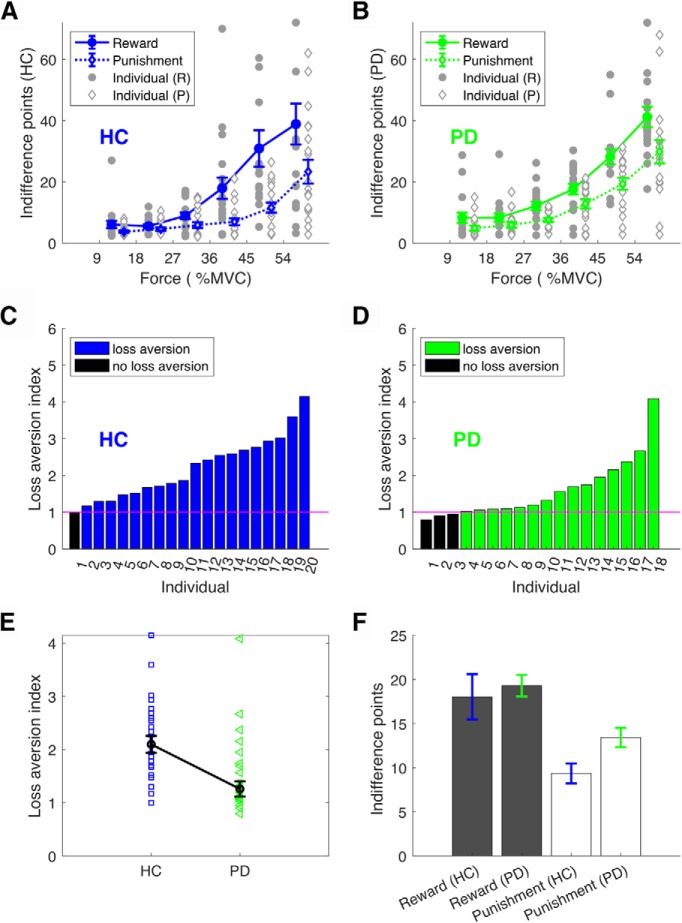

Figure 3.

Loss aversion in young healthy participants. A, Effort IP in reward (solid circles) and punishment (open diamonds). For each force level (x-axis), we estimated the number of points at which the probability of choosing to produce the force was 50% (effort IP, y-axis). Given a particular force level, a higher IP indicated less willingness to produce the force. Error bars represent SEM across participants. Gray circles/diamonds indicate individual data points. B, Loss aversion index for each individual. Loss aversion is reflected by participants being more willing to produce a force to avoid losses than to receive same-sized gains (higher reward IP than punishment IP at a given force level). Loss aversion was therefore quantified as the ratio between the reward IP and the punishment IP (loss aversion index; y-axis). A value >1 indicates loss aversion.

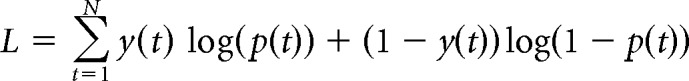

Reduced loss aversion in PD patients compared with HC

Similar to the young healthy participants, the effort IP for both the HC (Fig. 4A) and PD (Fig. 4B) groups increased progressively as the force level became more demanding, indicating sensitivity to effort in both the reward and punishment conditions. The loss aversion index was significantly greater than one for both HC (z = 3.823, p < 0.001, median = 2.09; Fig. 4C) and PD (z = 2.983, p = 0.003, median = 1.260; Fig. 4D), indicating that loss aversion was present in both groups. Importantly, PD patients displayed significantly less loss aversion than HC (z = 2.441, p = 0.015; Fig. 4E), an effect that appeared to be driven by reduced sensitivity to punishment in medicated PD patients (Fig. 4F). This was confirmed by a two-way mixed ANOVA on the average indifference point, which revealed a significant interaction between group (HC vs PD) and condition (reward vs punishment; F(1,36) = 6.412, p = 0.016). Specifically, Bonferroni-corrected independent t tests revealed that the PD and HC groups had a similar reward IP (p = 0.591; Fig. 4F), but the PD group displayed a higher punishment IP (p = 0.011; Fig. 4F).

Because the adaptive staircase procedure (i.e., the process of determining the IP for each participant) showed similar variability across conditions (reward, punishment) and groups (HC, PD), the results are unlikely to reflect differences in fatigue (Fig. 2). Specifically, although the variance in the points offered decreased from early to late trials (F(1,36) = 12.744, p = 0.001), there were no significant effects of condition (reward vs punishment: F(1,36) = 0.230, p = 0.634) or group (HC vs PD: F(1,36) = 3.780, p = 0.062). In addition, there were no significant differences between participants' MVC before and after the main effort-based decision-making task [HC: z = 0.635, p = 0.526; pre-MVC: 16.08 ± 14.04 N (median ± median absolute deviation); post-MVC: 12.00 ± 8.26 N; PD: z = 0.500, p = 0.617; pre-MVC: 12.66 ± 11.40 N; post-MVC: 12.70 ± 6.18 N]. Therefore, it is unlikely that PD patients' reduced loss aversion was due to fatigue.

Figure 4.

Loss aversion in HC and PD groups. A, B, Effort IP in reward (solid circle) and punishment (open diamond) conditions for the HC (A) and PD (B) groups. For each force level (x-axis), we estimated the number of points at which the probability of choosing to produce the force was 50% (effort IP, y-axis). Given a particular force level, a higher IP indicated less willingness to produce the force. Error bars represent SEM across participants. Gray indicates individual data points. C, D, Loss aversion across participants for the HC (C) and PD (D) groups. Loss aversion is reflected by participants being more willing to produce a force to avoid losses than to receive same-sized gains. Therefore, the loss aversion index was measured as the ratio between the reward IP and the punishment IP (y-axis). A value >1 indicates loss aversion. E, Loss aversion index. Error bars represent SEM across participants. F, Reward IP and punishment IP across groups.

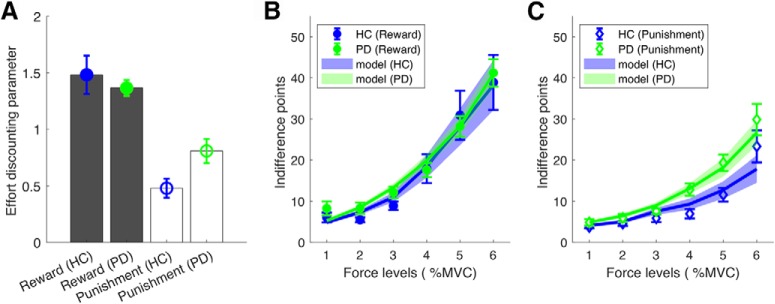

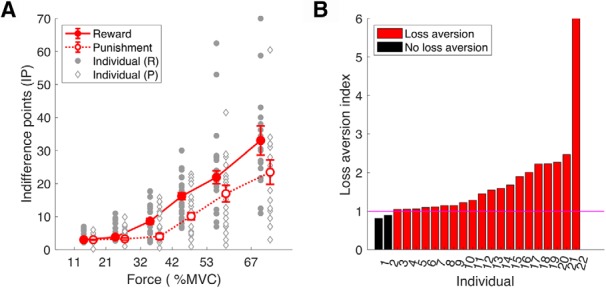
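The effort IP defined in the Figure 4 caption (the score at which a participant chooses to produce a given force with 50% probability) can be estimated from binary choices at one force level. The sketch below assumes a two-parameter logistic psychometric function fitted by maximum likelihood with Newton-Raphson; this section does not state how the IPs were derived from the adaptive staircase, so the fitting method, the function names, and the choices shown are hypothetical.

```python
import math


def fit_logistic(scores, choices, iters=50):
    """Maximum-likelihood fit of P(choose force) = 1 / (1 + exp(-(b0 + b1 * score)))
    by Newton-Raphson. Requires overlapping choices (no perfect separation)."""
    b0, b1 = 0.0, 0.0
    for _ in range(iters):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for x, y in zip(scores, choices):
            p = 1.0 / (1.0 + math.exp(-(b0 + b1 * x)))
            w = p * (1.0 - p)
            g0 += y - p            # gradient of the log-likelihood
            g1 += (y - p) * x
            h00 += w               # Fisher information (negative Hessian)
            h01 += w * x
            h11 += w * x * x
        det = h00 * h11 - h01 * h01
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (h00 * g1 - h01 * g0) / det
    return b0, b1


def effort_ip(scores, choices):
    """Score at which the fitted P(choose force) equals 0.5, i.e. -b0 / b1."""
    b0, b1 = fit_logistic(scores, choices)
    return -b0 / b1


# Hypothetical choices at one force level (1 = produce force, 0 = skip).
scores = [10, 20, 30, 40, 50, 60]
choices = [0, 0, 1, 0, 1, 1]
print(round(effort_ip(scores, choices), 3))  # 35.0 (toy data are symmetric about 35)
```

If a participant's choices are perfectly separated by score, the maximum-likelihood estimate does not exist and this simple unregularized fit is not appropriate; a bounded or regularized fit would then be needed.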

Decision-making behavior in our task was modeled using an effort-discounting model that quantifies how the utility of an outcome (gaining reward or avoiding punishment) decreases as the physical effort associated with it becomes progressively more demanding. We fitted participant choices with three discounting functions commonly used to capture effort discounting: linear, parabolic, and hyperbolic (Hartmann et al., 2013; Klein-Flügge et al., 2015; Białaszek et al., 2017; Lockwood et al., 2017; McGuigan et al., 2019). A parabolic effort-discounting function with separate discounting parameters for the reward and punishment conditions provided the best fit for both the PD and HC groups (Table 2): the summed BIC was lowest for this model (lower values indicate a better fit; Table 2). To examine model fit at the subject level, a Friedman's test on individual BIC values was performed (Stephan et al., 2009; Rigoux et al., 2014). This yielded similar results, with the parabolic functions having the lowest mean ranks, and the variant with separate discounting parameters ranking best, in both groups (Table 2). Consistent with this, R2 was highest for the parabolic function with separate discounting parameters in both groups (Table 2).
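The three discounting families and the BIC comparison can be sketched as follows. The forms below (linear V − lF, parabolic V − lF², hyperbolic V/(1 + lF)) are the ones commonly used in the cited literature, but this section does not give the exact parameterization, the choice rule that maps subjective value to choice probability, or the parameter counts, so all of these, together with the log-likelihood values in the usage example, are assumptions.

```python
import math

# Commonly used effort-discounting forms (assumed parameterization):
# V = points at stake, F = force level, l = discounting parameter.
DISCOUNT = {
    "linear": lambda V, F, l: V - l * F,
    "parabolic": lambda V, F, l: V - l * F ** 2,
    "hyperbolic": lambda V, F, l: V / (1 + l * F),
}


def subjective_value(form, V, F, condition, l_reward, l_punish=None):
    """Discounted value of an offer. Passing only l_reward gives the
    single-parameter model (l); passing l_punish as well gives (l+, l-)."""
    l = l_reward if condition == "reward" or l_punish is None else l_punish
    return DISCOUNT[form](V, F, l)


def bernoulli_log_lik(p_force, choices):
    """Log-likelihood of binary choices (1 = produce force) given model probabilities."""
    eps = 1e-12  # avoid log(0)
    return sum(math.log(max(p if c else 1 - p, eps)) for p, c in zip(p_force, choices))


def bic(log_lik, n_params, n_trials):
    """Schwarz (1978) criterion: k ln(n) - 2 ln L; lower is better."""
    return n_params * math.log(n_trials) - 2 * log_lik


# Hypothetical per-participant fits: (log-likelihood, n_params, n_trials).
fits = {
    "parabolic (l)": [(-60.0, 2, 120), (-70.0, 2, 120)],
    "parabolic (l+, l-)": [(-52.0, 3, 120), (-61.0, 3, 120)],
}
summed = {model: sum(bic(*f) for f in per_subj) for model, per_subj in fits.items()}
print(min(summed, key=summed.get))  # parabolic (l+, l-)
```

The extra discounting parameter is only favored when it improves the log-likelihood by more than the ln(n) penalty it adds, which is why BIC can prefer the separate-parameter model without simply rewarding complexity.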

Table 2.

Model comparison

| Model | Parameters | HC BIC | HC mean rank | HC R2 | PD BIC | PD mean rank | PD R2 |
|---|---|---|---|---|---|---|---|
| Linear | (l) | 3045 | 4.17 | 0.64 ± 0.21 | 2973 | 4.33 | 0.58 ± 0.22 |
| Linear | (l+, l−) | 3072 | 4.70 | 0.72 ± 0.18 | 2964 | 4.28 | 0.61 ± 0.22 |
| Parabolic | (l) | 2991 | 2.77 | 0.74 ± 0.24 | 2867 | 2.89 | 0.72 ± 0.25 |
| Parabolic | (l+, l−) | 2870 | 2.05 | 0.81 ± 0.22 | 2785 | 2.11 | 0.85 ± 0.26 |
| Hyperbolic | (l) | 3065 | 3.55 | 0.60 ± 0.18 | 2962 | 3.83 | 0.64 ± 0.22 |
| Hyperbolic | (l+, l−) | 3005 | 3.75 | 0.70 ± 0.21 | 2924 | 3.56 | 0.69 ± 0.25 |
| Friedman test | | | χ2 = 26.26, p < 0.001 | | | χ2 = 19.11, p = 0.002 | |

The parabolic effort-discounting function with separate discounting parameters (l+, l−) for the reward and punishment conditions provided the best fit to the choices of both the PD and HC groups. Summed BIC, mean ranks of individual BIC with Friedman's test (Stephan et al., 2009; Rigoux et al., 2014), and R2 (median ± median absolute deviation) are provided for each group (HC, PD). For each model, BIC values were summed over all participants and compared (lower values indicate a better fit).
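The subject-level comparison in Table 2 ranks the models within each participant by BIC and tests the mean ranks with Friedman's test. A minimal sketch, assuming the standard Friedman statistic without a tie correction (the study's exact implementation is not described here); plugging in the HC mean ranks from Table 2 approximately recovers the reported χ2.

```python
def within_subject_ranks(bics):
    """Rank one participant's models: 1 = lowest BIC; ties share the average rank."""
    order = sorted(range(len(bics)), key=lambda j: bics[j])
    ranks = [0.0] * len(bics)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and bics[order[j + 1]] == bics[order[i]]:
            j += 1
        for m in range(i, j + 1):
            ranks[order[m]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def mean_ranks(bic_table):
    """bic_table[s][j] is the BIC of model j for participant s."""
    per_subject = [within_subject_ranks(row) for row in bic_table]
    n_models = len(bic_table[0])
    return [sum(r[j] for r in per_subject) / len(per_subject) for j in range(n_models)]


def friedman_chi2(mean_rank, n_subjects):
    """Friedman statistic from mean ranks (no tie correction); df = k - 1."""
    k = len(mean_rank)
    centre = (k + 1) / 2
    return 12 * n_subjects / (k * (k + 1)) * sum((r - centre) ** 2 for r in mean_rank)


# Mean ranks for the HC group from Table 2 (n = 20), in table row order.
hc_mean_ranks = [4.17, 4.70, 2.77, 2.05, 3.55, 3.75]
print(round(friedman_chi2(hc_mean_ranks, 20), 2))  # 26.22
```

The small discrepancy from the reported 26.26 is expected because the tabulated mean ranks are rounded to two decimals; with six models the statistic has k − 1 = 5 degrees of freedom.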

Using the winning model (parabolic function with separate discounting parameters), we compared parameters across the PD and HC groups. In the reward condition, the effort-discounting parameter was similar between the HC and PD groups, suggesting that medicated PD patients were as motivated as HCs to exert effort in return for reward (Fig. 5A,B). However, in the punishment condition, the PD group had an increased effort-discounting parameter, suggesting they were less willing to exert effort to avoid punishment (Fig. 5A,C). This was confirmed by a two-way mixed ANOVA that showed a significant interaction between group (HC vs PD) and condition (reward vs punishment; F(1,36) = 5.22, p = 0.042). Bonferroni-corrected independent t tests revealed that although the discounting parameter (l) was similar between PD and HC for reward (p = 0.548), it was significantly higher for the PD group in the punishment condition (p = 0.018; Fig. 5A).
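For a design with two groups and two within-subject conditions, the group × condition interaction of a mixed ANOVA is equivalent to an independent-samples (pooled-variance) t test on each participant's condition difference score, with F = t² and degrees of freedom (1, n1 + n2 − 2); with 20 HC and 18 PD participants this gives (1, 36). The sketch below illustrates that identity on hypothetical parameter values; it is not the study's analysis code.

```python
from statistics import mean, variance


def interaction_F(group_a, group_b):
    """Group x condition interaction of a 2 x 2 mixed ANOVA.

    group_a, group_b: lists of (reward_value, punishment_value), one per participant.
    Computed as the squared pooled-variance t statistic on the
    punishment - reward difference scores; returns (F, df1, df2).
    """
    da = [p - r for r, p in group_a]
    db = [p - r for r, p in group_b]
    na, nb = len(da), len(db)
    pooled = ((na - 1) * variance(da) + (nb - 1) * variance(db)) / (na + nb - 2)
    t = (mean(da) - mean(db)) / (pooled * (1 / na + 1 / nb)) ** 0.5
    return t * t, 1, na + nb - 2


# Hypothetical discounting parameters (reward, punishment) for two small groups.
group_1 = [(0.0, 1.0), (0.0, 2.0), (0.0, 3.0)]
group_2 = [(1.0, 1.0), (1.0, 2.0), (1.0, 3.0)]
print(interaction_F(group_1, group_2))
```

Follow-up comparisons of each condition separately (the Bonferroni-corrected t tests) are a different question and are not covered by this identity.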

Figure 5.

Parabolic (winning model) discounting parameter (l) for the HC and PD groups. A, Effort-discounting parameter (l) for the HC and PD groups in the reward and punishment conditions. B, C, Parabolic model predictions for the effort IP across force options in the reward (B) and punishment (C) conditions. The model predictions were calculated by estimating the score at which the probability of the model choosing the force option was 50%. Error bars represent SEM across participants.
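The model-predicted IPs in Figure 5, B and C, can be illustrated under one explicit assumption about the choice rule: the force option is chosen with probability logistic(β × (score − l × F²)), where skipping is worth zero and, in the punishment condition, the score is the loss avoided. Under that assumed rule the 50% point is simply IP = l × F², independent of β, so the ratio of reward to punishment IPs equals l+/l−. The sketch finds the 50% point numerically by bisection, so the same approach would apply to any other choice rule in which P increases with score and crosses 0.5; parameter values are hypothetical.

```python
import math


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def p_choose_force(score, force, l, beta=1.0):
    """Assumed choice rule: skipping is worth 0 and producing the force is worth
    score - l * force**2 (parabolic discounting)."""
    return sigmoid(beta * (score - l * force ** 2))


def predicted_ip(force, l, beta=1.0, lo=0.0, hi=1e6, tol=1e-9):
    """Score at which the model chooses the force option with probability 0.5.

    P(choose force) increases with score, so bisect on the sign of P - 0.5.
    """
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if p_choose_force(mid, force, l, beta) < 0.5:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


# Hypothetical parameters: l_plus for reward, l_minus for punishment.
l_plus, l_minus = 0.6, 0.3
for force in (1, 2, 3):
    ip_r, ip_p = predicted_ip(force, l_plus), predicted_ip(force, l_minus)
    # Under the assumed rule IP = l * F**2, so the ratio is l_plus / l_minus.
    print(force, round(ip_r, 6), round(ip_p, 6), round(ip_r / ip_p, 6))
```

Under this assumption, a higher punishment discounting parameter translates directly into a higher punishment IP and therefore a lower loss aversion index.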

Discussion

In summary, we have shown that loss aversion is consistently present during effort-based decision-making in young healthy participants, in people with PD, and in healthy older adults (HCs). Although loss aversion is widely regarded as one of the most robust and ubiquitous findings in economic decision-making (Kahneman and Tversky, 1979), the surprisingly few studies that have directly examined loss aversion during physical effort-based decision-making have not found it. For instance, Porat et al. (2014) showed that whereas half of young healthy participants were willing to expend greater effort to avoid punishment than to gain an equivalent reward, the other half showed the opposite preference. Similarly, Nishiyama (2016) found a large degree of variability across participants in the preference for maximizing gains or minimizing losses during an effort-based decision-making task. Therefore, although both studies found differences between gains and losses at the individual level, neither found loss aversion during effort-based decision-making at the group level. However, we believe that several features of these previous studies may have limited their capacity to examine loss aversion during effort-based decision-making directly. First, in Porat et al. (2014), gaining reward or avoiding punishment required the participant to execute additional key presses. As a result, to obtain more reward (or avoid more punishment), participants had to both exert more effort and wait longer, so the additional effort cost was confounded with a delay cost. This is relevant because temporal discounting is generally less steep for losses than for gains (Estle et al., 2006), so a delay cost could affect gain and loss trials differently. Importantly, this confound was eliminated in our paradigm, as all trials, including the skip option trials, had identical durations.
Second, in the study by Nishiyama (2016), participants made a series of questionnaire-based choices about whether to engage in an effortful task (to obtain reward or to avoid punishment). That is, participants did not actually have to perform an effortful task. The absence of loss aversion could therefore reflect participants being less sensitive to the imagined effort described in a questionnaire. This possibility is consistent with our finding that loss aversion was more clearly expressed at higher effort levels.

The second key finding of the present study was that medicated PD patients showed reduced loss aversion compared with HCs. This reduction arose because people with PD invested similar physical effort in return for reward but were less willing to produce effort to avoid punishment. Although previous studies have demonstrated that medicated PD patients are as motivated to exert effort in return for reward as age-matched control subjects (Chong et al., 2015; Le Heron et al., 2018; McGuigan et al., 2019), this is the first study to show that medicated PD patients exhibit a reduced willingness to produce effort to avoid punishment.

To understand this reduced loss aversion in medicated PD patients, one key question is whether it is due to an altered sensitivity to the cost of effort, an altered sensitivity to the action outcomes (i.e., the reward or punishment associated with the action), or a combination of both. In effort-based decision-making, it has been repeatedly shown that PD patients exhibit a reduced willingness to expend effort in return for reward, and that dopaminergic medication can ameliorate this deficit (Chong et al., 2015; Skvortsova et al., 2017; Le Heron et al., 2018). Many earlier studies have also shown that manipulating dopamine can shift the effort/reward trade-off in healthy participants and animals (Salamone et al., 2007; Floresco et al., 2008; Bardgett et al., 2009; Chong et al., 2015). However, although dopamine is clearly central to effort-based decision-making, its precise role remains unclear, because an increased sensitivity to reward and a decreased sensitivity to effort could both produce a similar shift in preference. On the one hand, previous work has highlighted the effect of dopamine on effort expenditure. For example, hyperdopaminergic rats have been shown to be more willing to expend physical effort to obtain reward (Beeler et al., 2010), and in humans, Le Heron et al. (2018) showed that medicated PD patients exert more effort to obtain a similar level of reward than when off medication. On the other hand, other work has argued that although dopamine appears to promote energy expenditure, it does so only as a function of the upcoming action outcome (reward) and not of the upcoming energy cost itself (Le Bouc et al., 2016; Skvortsova et al., 2017; Walton and Bouret, 2019). Because the current study did not isolate effort from action outcomes, it cannot provide further insight into this debate.
In the future, it would be interesting to test people with PD on and off medication during our task, in addition to a task that selectively measures a participant's sensitivity to effort (Salimpour et al., 2015). Such an experiment could help determine whether the current results reflect dopaminergic medication altering sensitivity to effort or altering sensitivity to the action outcome associated with producing that effort.
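The identifiability problem raised above can be made concrete with a toy model. Assume, hypothetically, that the value of producing force F for an outcome V is ρ × V − l × F², where ρ scales outcome sensitivity and l scales effort sensitivity. The IP then depends only on l/ρ, so doubling outcome sensitivity produces exactly the same IPs as halving effort sensitivity; if the choice temperature β is also free, even the full choice probabilities coincide. The model and parameter values are illustrative assumptions, not the study's fitted model.

```python
import math


def p_accept(V, F, rho, l, beta):
    """Assumed rule: P(produce force) = logistic(beta * (rho * V - l * F**2)),
    where rho scales sensitivity to the outcome and l to the effort cost."""
    x = beta * (rho * V - l * F ** 2)
    return 1.0 / (1.0 + math.exp(-x))


def ip(F, rho, l):
    """Outcome size at which P = 0.5: rho * V = l * F**2, independent of beta."""
    return l * F ** 2 / rho


# Doubling outcome sensitivity vs halving effort sensitivity (hypothetical values).
outcome_driven = {"rho": 2.0, "l": 0.5}
effort_driven = {"rho": 1.0, "l": 0.25}
for F in (1, 2, 3):
    print(F, ip(F, **outcome_driven), ip(F, **effort_driven))
```

Separating the two accounts therefore requires an independent measure of one of the two sensitivities, which is what pairing the task with an effort-only task would provide.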

Interestingly, similar differences in sensitivity to reward and punishment have previously been observed in medicated PD patients during reinforcement learning. Specifically, Frank et al. (2004) showed that medicated PD patients learned normally from positive outcomes (reward) but were impaired at learning from negative outcomes (punishment). Conversely, unmedicated PD patients showed the opposite bias, learning better from punishment than from reward. The authors used biologically based computational modeling to explain these results: in medicated PD patients, sufficient dopamine supports learning from positive feedback through the direct, prokinetic (“GO”) pathway of the basal ganglia (Frank, 2005), whereas learning from negative feedback is impaired because the medication blocks or reduces the dips in dopamine associated with punishment that would otherwise drive learning via the indirect, antikinetic (“NoGo”) pathway. Such a dual opponent actor system, represented by distinct striatal (D1/D2) populations, can differentially specialize in discriminating positive and negative action values. As such, this model can explain the effects of dopamine on both learning and decision-making across a variety of tasks, including probabilistic reinforcement learning and effort-based choice (Shiner et al., 2012; Smittenaar et al., 2012; Collins and Frank, 2014). Therefore, although highly speculative, our current results could be explained by dopaminergic medication having a differential effect on the direct and indirect pathways of the basal ganglia, which have been associated with the processing of reward- and punishment-based action outcomes, respectively (Kravitz et al., 2012; Argyelan et al., 2018).
It would also be interesting to test whether unmedicated PD patients show a reduced sensitivity to reward but a normal sensitivity to punishment (which would manifest as enhanced loss aversion), as suggested by previous work (Frank, 2005; Collins and Frank, 2014).
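The opponent-actor idea can be sketched with a single action. Following a simplified reading of OpAL (Collins and Frank, 2014), a critic's prediction error strengthens a Go weight after better-than-expected outcomes and a NoGo weight after worse-than-expected ones, and dopamine is assumed to set how strongly each weight contributes to choice. This is an illustrative sketch with hypothetical parameter values and names, not a model fitted in the present study.

```python
from dataclasses import dataclass


@dataclass
class OpponentActor:
    """Minimal single-action sketch in the spirit of OpAL (Collins and Frank, 2014)."""

    alpha_c: float = 0.1  # critic learning rate
    alpha_g: float = 0.1  # Go (D1, direct pathway) learning rate
    alpha_n: float = 0.1  # NoGo (D2, indirect pathway) learning rate
    v: float = 0.0        # critic's expected outcome
    g: float = 1.0        # Go weight
    n: float = 1.0        # NoGo weight

    def update(self, outcome: float) -> None:
        delta = outcome - self.v                    # prediction error
        self.v += self.alpha_c * delta
        self.g += self.alpha_g * self.g * delta     # grows after better-than-expected outcomes
        self.n += self.alpha_n * self.n * -delta    # grows after worse-than-expected outcomes

    def propensity(self, beta_g: float, beta_n: float) -> float:
        """Net action propensity; dopamine is assumed to shift beta_g vs beta_n."""
        return beta_g * self.g - beta_n * self.n


learner = OpponentActor()
for _ in range(50):
    learner.update(-1.0)  # an action that is repeatedly punished

balanced = learner.propensity(beta_g=1.0, beta_n=1.0)
high_dopamine = learner.propensity(beta_g=1.5, beta_n=0.5)  # hypothetical "medicated" weighting
print(round(balanced, 3), round(high_dopamine, 3))
```

After repeated punishment the NoGo weight dominates, but weighting Go more heavily than NoGo (as assumed here for a high-dopamine state) makes the punished action look less aversive, which is the qualitative pattern the speculative account above would predict.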

In conclusion, loss aversion is clearly present during effort-based decision-making and is modulated by dopaminergic state. This raises interesting questions for clinical disorders associated with a reduced willingness to exert effort, such as depression and stroke. For example, disorders characterized by a reduced willingness to exert effort in the pursuit of reward could show a normal, or even enhanced, willingness to exert effort to avoid punishment.

Footnotes

This work was supported by a European Research Council starter grant to J.M.G. (MotMotLearn: 637488). Participants with Parkinson's disease were recruited via Parkinson's UK (www.parkinsons.org.uk). We thank Henry Marks and Lilian Berenyi for work on the pilot study for this project.

The authors declare no competing financial interests.

References

- Antony MM, Cox BJ, Enns MW, Bieling PJ, Swinson RP (1998) Psychometric properties of the 42-item and 21-item versions of the depression anxiety stress scales in clinical groups and a community sample. Psychol Assess 10:176–181. 10.1037/1040-3590.10.2.176

- Argyelan M, Herzallah M, Sako W, DeLucia I, Sarpal D, Vo A, Fitzpatrick T, Moustafa AA, Eidelberg D, Gluck M (2018) Dopamine modulates striatal response to reward and punishment in patients with Parkinson's disease: a pharmacological challenge fMRI study. Neuroreport 29:532–540. 10.1097/WNR.0000000000000970

- Bailey MR, Simpson EH, Balsam PD (2016) Neural substrates underlying effort, time, and risk-based decision making in motivated behavior. Neurobiol Learn Mem 133:233–256. 10.1016/j.nlm.2016.07.015

- Baraduc P, Thobois S, Gan J, Broussolle E, Desmurget M (2013) A common optimization principle for motor execution in healthy subjects and parkinsonian patients. J Neurosci 33:665–677. 10.1523/JNEUROSCI.1482-12.2013

- Bardgett ME, Depenbrock M, Downs N, Points M, Green L (2009) Dopamine modulates effort-based decision making in rats. Behav Neurosci 123:242–251. 10.1037/a0014625

- Bautista LM, Tinbergen J, Kacelnik A (2001) To walk or to fly? How birds choose among foraging modes. Proc Natl Acad Sci U S A 98:1089–1094. 10.1073/pnas.98.3.1089

- Beeler JA, Daw N, Frazier CR, Zhuang X (2010) Tonic dopamine modulates exploitation of reward learning. Front Behav Neurosci 4:170. 10.3389/fnbeh.2010.00170

- Białaszek W, Marcowski P, Ostaszewski P (2017) Physical and cognitive effort discounting across different reward magnitudes: tests of discounting models. PLoS One 12:e0182353. 10.1371/journal.pone.0182353

- Bonnelle V, Veromann KR, Burnett Heyes S, Lo Sterzo E, Manohar S, Husain M (2015) Characterization of reward and effort mechanisms in apathy. J Physiol Paris 109:16–26. 10.1016/j.jphysparis.2014.04.002

- Bonnelle V, Manohar S, Behrens T, Husain M (2016) Individual differences in premotor brain systems underlie behavioral apathy. Cereb Cortex 26:807–819. 10.1093/cercor/bhv247

- Botvinick MM, Huffstetler S, McGuire JT (2009) Effort discounting in human nucleus accumbens. Cogn Affect Behav Neurosci 9:16–27. 10.3758/CABN.9.1.16

- Burke CJ, Brünger C, Kahnt T, Park SQ, Tobler PN (2013) Neural integration of risk and effort costs by the frontal pole: only upon request. J Neurosci 33:1706–1713. 10.1523/JNEUROSCI.3662-12.2013

- Carver CS, White TL (1994) Behavioral inhibition, behavioral activation, and affective responses to impending reward and punishment: the BIS/BAS scales. J Pers Soc Psychol 67:319–333. 10.1037/0022-3514.67.2.319

- Chong TT, Bonnelle V, Manohar S, Veromann KR, Muhammed K, Tofaris GK, Hu M, Husain M (2015) Dopamine enhances willingness to exert effort for reward in Parkinson's disease. Cortex 69:40–46. 10.1016/j.cortex.2015.04.003

- Chong TT, Bonnelle V, Husain M (2016) Quantifying motivation with effort-based decision-making paradigms in health and disease. Prog Brain Res 229:71–100. 10.1016/bs.pbr.2016.05.002

- Chong TT, Apps M, Giehl K, Sillence A, Grima LL, Husain M (2017) Neurocomputational mechanisms underlying subjective valuation of effort costs. PLoS Biol 15:e1002598. 10.1371/journal.pbio.1002598

- Clark CA, Dagher A (2014) The role of dopamine in risk taking: a specific look at Parkinson's disease and gambling. Front Behav Neurosci 8:196. 10.3389/fnbeh.2014.00196

- Collins AG, Frank MJ (2014) Opponent actor learning (OpAL): modeling interactive effects of striatal dopamine on reinforcement learning and choice incentive. Psychol Rev 121:337–366. 10.1037/a0037015

- Daw ND, Doya K (2006) The computational neurobiology of learning and reward. Curr Opin Neurobiol 16:199–204. 10.1016/j.conb.2006.03.006

- Doyle JR (2010) Survey of time preference, delay discounting models. Judgm Decis Mak 8:116–135.

- Estle SJ, Green L, Myerson J, Holt DD (2006) Differential effects of amount on temporal and probability discounting of gains and losses. Mem Cogn 34:914–928. 10.3758/BF03193437

- Fahn S, Elton RL (1987) The unified Parkinson's disease rating scale. In: Recent developments in Parkinson's disease, Vol 2 (Fahn S, Marsden CD, Calne DB, Goldstein M, eds), pp 153–163, 293–304. Florham Park, NJ: Macmillan Health Care Information.

- Fehr E, Rangel A (2011) Neuroeconomic foundations of economic choice—recent advances. J Econ Perspect 25:3–30. 10.1257/jep.25.4.3

- Floresco SB, Tse MT, Ghods-Sharifi S (2008) Dopaminergic and glutamatergic regulation of effort- and delay-based decision making. Neuropsychopharmacology 33:1966–1979. 10.1038/sj.npp.1301565

- Folstein MF, Folstein SE, McHugh PR (1975) “Mini-mental state”. A practical method for grading the cognitive state of patients for the clinician. J Psychiatr Res 12:189–198. 10.1016/0022-3956(75)90026-6

- Frank MJ (2005) Dynamic dopamine modulation in the basal ganglia: a neurocomputational account of cognitive deficits in medicated and nonmedicated parkinsonism. J Cogn Neurosci 17:51–72. 10.1162/0898929052880093

- Frank MJ, Seeberger LC, O'Reilly RC (2004) By carrot or by stick: cognitive reinforcement learning in parkinsonism. Science 306:1940–1943. 10.1126/science.1102941

- Galaro JK, Celnik P, Chib VS (2019) Motor cortex excitability reflects the subjective value of reward and mediates its effects on incentive-motivated performance. J Neurosci 39:1236–1248. 10.1523/JNEUROSCI.1254-18.2018

- Green L, Myerson J (2004) A discounting framework for choice with delayed and probabilistic rewards. Psychol Bull 130:769–792. 10.1037/0033-2909.130.5.769

- Hartmann MN, Hager OM, Tobler PN, Kaiser S (2013) Parabolic discounting of monetary rewards by physical effort. Behav Processes 100:192–196. 10.1016/j.beproc.2013.09.014

- Hauser TU, Eldar E, Dolan RJ (2017) Separate mesocortical and mesolimbic pathways encode effort and reward learning signals. Proc Natl Acad Sci U S A 114:E7395–E7404. 10.1073/pnas.1705643114

- Kahneman D, Tversky A (1979) Prospect theory: an analysis of decision under risk. Econometrica 47:263–291. 10.2307/1914185

- Klein-Flügge MC, Kennerley SW, Saraiva AC, Penny WD, Bestmann S (2015) Behavioral modeling of human choices reveals dissociable effects of physical effort and temporal delay on reward devaluation. PLoS Comput Biol 11:e1004116. 10.1371/journal.pcbi.1004116

- Klein-Flügge MC, Kennerley SW, Friston K, Bestmann S (2016) Neural signatures of value comparison in human cingulate cortex during decisions requiring an effort-reward trade-off. J Neurosci 36:10002–10015. 10.1523/JNEUROSCI.0292-16.2016

- Kravitz AV, Tye LD, Kreitzer AC (2012) Distinct roles for direct and indirect pathway striatal neurons in reinforcement. Nat Neurosci 15:816–818. 10.1038/nn.3100

- Kurniawan IT, Guitart-Masip M, Dolan RJ (2011) Dopamine and effort-based decision making. Front Neurosci 5:81. 10.3389/fnins.2011.00081

- Le Bouc R, Rigoux L, Schmidt L, Degos B, Welter ML, Vidailhet M, Daunizeau J, Pessiglione M (2016) Computational dissection of dopamine motor and motivational functions in humans. J Neurosci 36:6623–6633. 10.1523/JNEUROSCI.3078-15.2016

- Le Heron C, Manohar S, Plant O, Muhammed K, Griffanti L, Nemeth A, Douaud G, Markus HS, Husain M (2018) Dysfunctional effort-based decision-making underlies apathy in genetic cerebral small vessel disease. Brain 141:3193–3210. 10.1093/brain/awy257

- Lockwood PL, Hamonet M, Zhang SH, Ratnavel A, Salmony FU, Husain M, Apps MAJ (2017) Prosocial apathy for helping others when effort is required. Nat Hum Behav 1:0131. 10.1038/s41562-017-0131

- Loewenstein G, Frederick S, O'Donoghue T (2002) Time discounting and time preference: a critical review. J Econ Lit 40:351–401. 10.1257/jel.40.2.351

- Massar SAA, Csathó Á, Van der Linden D (2018) Quantifying the motivational effects of cognitive fatigue through effort-based decision making. Front Psychol 9:843. 10.3389/fpsyg.2018.00843

- McGuigan S, Zhou SH, Brosnan MB, Thyagarajan D, Bellgrove MA, Chong TT (2019) Dopamine restores cognitive motivation in Parkinson's disease. Brain 142:719–732. 10.1093/brain/awy341

- Meyniel F, Sergent C, Rigoux L, Daunizeau J, Pessiglione M (2013) Neurocomputational account of how the human brain decides when to have a break. Proc Natl Acad Sci U S A 110:2641–2646. 10.1073/pnas.1211925110

- Müller T, Apps MAJ (2019) Motivational fatigue: a neurocognitive framework for the impact of effortful exertion on subsequent motivation. Neuropsychologia 123:141–151. 10.1016/j.neuropsychologia.2018.04.030

- Nishiyama R (2016) Physical, emotional, and cognitive effort discounting in gain and loss situations. Behav Processes 125:72–75. 10.1016/j.beproc.2016.02.004

- Porat O, Hassin-Baer S, Cohen OS, Markus A, Tomer R (2014) Asymmetric dopamine loss differentially affects effort to maximize gain or minimize loss. Cortex 51:82–91. 10.1016/j.cortex.2013.10.004

- Prévost C, Pessiglione M, Météreau E, Cléry-Melin ML, Dreher JC (2010) Separate valuation subsystems for delay and effort decision costs. J Neurosci 30:14080–14090. 10.1523/JNEUROSCI.2752-10.2010

- Rachlin H (2006) Notes on discounting. J Exp Anal Behav 85:425–435. 10.1901/jeab.2006.85-05

- Rachlin H, Green L (1972) Commitment, choice and self-control. J Exp Anal Behav 17:15–22. 10.1901/jeab.1972.17-15

- Rigoux L, Stephan KE, Friston KJ, Daunizeau J (2014) Bayesian model selection for group studies - revisited. Neuroimage 84:971–985. 10.1016/j.neuroimage.2013.08.065

- Salamone JD, Correa M, Farrar A, Mingote SM (2007) Effort-related functions of nucleus accumbens dopamine and associated forebrain circuits. Psychopharmacology (Berl) 191:461–482. 10.1007/s00213-006-0668-9

- Salimpour Y, Mari ZK, Shadmehr R (2015) Altering effort costs in Parkinson's disease with noninvasive cortical stimulation. J Neurosci 35:12287–12302. 10.1523/JNEUROSCI.1827-15.2015

- Schwarz G (1978) Estimating the dimension of a model. Ann Stat 6:461–464. 10.1214/aos/1176344136

- Shadmehr R, Huang HJ, Ahmed AA (2016) A representation of effort in decision-making and motor control. Curr Biol 14:1929–1934. 10.1016/j.cub.2016.05.065

- Shiner T, Seymour B, Wunderlich K, Hill C, Bhatia KP, Dayan P, Dolan RJ (2012) Dopamine and performance in a reinforcement learning task: evidence from Parkinson's disease. Brain 135:1871–1883. 10.1093/brain/aws083

- Skvortsova V, Degos B, Welter ML, Vidailhet M, Pessiglione M (2017) A selective role for dopamine in learning to maximize reward but not to minimize effort: evidence from patients with Parkinson's disease. J Neurosci 37:6087–6097. 10.1523/JNEUROSCI.2081-16.2017

- Smittenaar P, Chase HW, Aarts E, Nusselein B, Bloem BR, Cools R (2012) Decomposing effects of dopaminergic medication in Parkinson's disease on probabilistic action selection - learning or performance? Eur J Neurosci 35:1144–1151. 10.1111/j.1460-9568.2012.08043.x

- Stephan KE, Penny WD, Daunizeau J, Moran RJ, Friston KJ (2009) Bayesian model selection for group studies. Neuroimage 46:1004–1017. 10.1016/j.neuroimage.2009.03.025

- Stephens DW (2001) The adaptive value of preference for immediacy: when shortsighted rules have farsighted consequences. Behav Ecol 12:330–339. 10.1093/beheco/12.3.330

- Stephens DW, Krebs JR (1986) Foraging theory. Princeton, NJ: Princeton UP.

- Stevens JR, Rosati AG, Ross KR, Hauser MD (2005) Will travel for food: spatial discounting in two new world monkeys. Curr Biol 15:1855–1860. 10.1016/j.cub.2005.09.016

- Taylor MM, Creelman CD (2005) PEST: efficient estimates on probability functions. J Acoust Soc Am 41:782–787. 10.1121/1.1910407

- Timmer MHM, Sescousse G, Esselink RAJ, Piray P, Cools R (2017) Mechanisms underlying dopamine-induced risky choice in Parkinson's disease with and without depression (history). Comput Psychiatry 2:11–27. 10.1162/CPSY_a_00011

- Tom SM, Fox CR, Trepel C, Poldrack RA (2007) The neural basis of loss aversion in decision-making under risk. Science 315:515–518. 10.1126/science.1134239

- Tversky A, Kahneman D (1981) The framing of decisions and the psychology of choice. Science 211:453–458. 10.1126/science.7455683

- Tversky A, Kahneman D (1992) Advances in prospect theory: cumulative representation of uncertainty. J Risk Uncertain 5:297–323. 10.1007/BF00122574

- Walton ME, Bouret S (2019) What is the relationship between dopamine and effort? Trends Neurosci 42:79–91. 10.1016/j.tins.2018.10.001