Abstract

Background Increased adoption of electronic health records (EHR) with integrated clinical decision support (CDS) systems has reduced some sources of error but has led to unintended consequences including alert fatigue. The “pop-up” or interruptive alert is often employed as it requires providers to acknowledge receipt of an alert by taking an action despite the potential negative effects of workflow interruption. We noted a persistent upward trend of interruptive alerts at our institution and increasing requests for new interruptive alerts.

Objectives Using Institute for Healthcare Improvement (IHI) quality improvement (QI) methodology, the primary objective was to reduce the total volume of interruptive alerts received by providers.

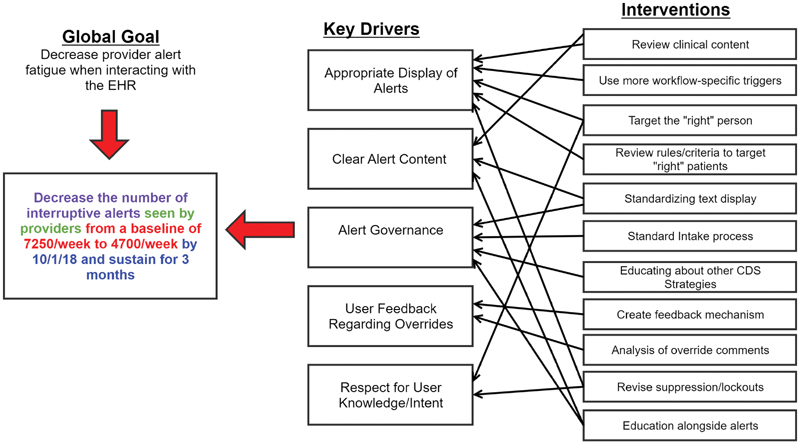

Methods We created an interactive dashboard for baseline alert data and to monitor frequency and outcomes of alerts as well as to prioritize interventions. A key driver diagram was developed with a specific aim to decrease the number of interruptive alerts from a baseline of 7,250 to 4,700 per week (35%) over 6 months. Interventions focused on the following key drivers: appropriate alert display within workflow, clear alert content, alert governance and standardization, user feedback regarding overrides, and respect for user knowledge.

Results A total of 25 unique alerts accounted for 90% of the total interruptive alert volume. By focusing on these 25 alerts, we reduced interruptive alerts from 7,250 to 4,400 per week.

Conclusion Systematic and structured improvements to interruptive alerts can lead to overall reduced interruptive alert burden. Using QI methods to prioritize our interventions allowed us to maximize our impact. Further evaluation should be done on the effects of reduced interruptive alerts on patient care outcomes, usability heuristics on cognitive burden, and direct feedback mechanisms on alert utility.

Keywords: clinical information systems, decision support systems, clinical alert fatigue, health personnel, quality improvement, electronic health records, human factors

Background and Significance

In the decade since the Health Information Technology for Economic and Clinical Health (HITECH) Act was passed, electronic health records (EHRs) have become nearly universal in the US healthcare system. 1 Though this digital revolution has brought with it many benefits both to providers and patients, it has also produced several unintended consequences including increased interruption of provider workflows, increased cognitive burden, and alert fatigue. 2

Alert fatigue, also known as alarm fatigue, is not a new phenomenon nor one exclusive to medicine; it has been described in various other fields including off-shore oil drilling and aviation. 3 4 Alert fatigue has often been defined as excessive alerting causing users to become less receptive to further alerts. An alternative definition proposed at a 2011 summit regarding medical device alarms can easily be applied to EHR alerts and strikes at many of the core issues: “alarm fatigue is when a true life-threatening event is lost in a cacophony of noise because of the multitude of devices with competing alarm signals, all trying to capture someone's attention, without clarity around what that someone is supposed to do.” 5

Prior work on alert fatigue in medicine has primarily focused on alerts related to computerized physician order entry (CPOE). Several recent studies of CPOE decision support have shown consistently low acceptance rates, ranging from 4 to 11%. 6 7 8 9 10 Low acceptance rates of alerts are concerning because when alerts are identified as inappropriate, clinicians have shown reduced responsiveness to future alerts. 11 In addition to reducing responsiveness to additional alarms, there are numerous deleterious effects of such interruptions on the user's primary task. In one study, emergency department physicians failed to return to 19% of interrupted tasks. 12 In other parts of the hospital, interruptions can occur 2 to 23 times per hour. 13 Additionally, interruptions have been associated with dispensing and administration medication errors, contributing to as much as 12% of medication dispensing errors by pharmacists 14 and a similar percentage increase in medication administration errors by nurses. 15 Excessive CPOE alerts considered inconsequential and ignored by users have become so commonplace that the Leapfrog Group now includes an “alert fatigue” category in their CPOE evaluation tool used by hospitals throughout the United States. 16 Reducing unhelpful or mistargeted alerts should be a priority for any well-functioning clinical decision support (CDS) system.

Increasingly, CDS is being created outside of CPOE. In our EHR system, Epic (Epic Systems Corporation, Verona, Wisconsin, United States), non-CPOE alerts primarily utilize an alert type called a Best Practice Advisory (BPA). This tool is widely versatile and can be triggered for display based upon a variety of patient or provider characteristics and at various times in the clinical workflow. For example, BPAs can remind providers to complete required documentation, to order overdue vaccinations, or to notify providers about high-risk conditions that may affect their care choices. However, because of this flexibility, BPAs are often the first CDS method requested for making any improvements in patient care, harkening back to a quote from the philosopher Abraham Kaplan: “Give a small boy a hammer, and he will find that everything he encounters needs pounding.” 17

Although there are studies in the literature focusing on reducing alert burden, there has been little work utilizing quality improvement (QI) tools and methodology. We aimed to use the Institute for Healthcare Improvement's (IHI) model for improvement to reduce the burden of interruptive alerts for providers in our institutions. 18 The IHI model for improvement focuses on rapid-cycle testing in the field to learn which interventions can predictably produce improvements and has been the primary QI model at Nationwide Children's Hospital. 19 The general steps in our application of the IHI model for improvement include using a cause and effect diagram (or Ishikawa diagram) to identify root causes of a problem, the creation of a key driver diagram to delineate the primary contributors to the goal and interventions to address each, and use of a Pareto chart to prioritize interventions to maximize impact and control charts to monitor performance.

Objectives

The primary objective was to utilize the IHI model for improvement methodology to reduce interruptive alert burden for the providers in the Nationwide Children's Hospital system. In the context of this paper, the term provider will be used to identify attending physicians, fellows, residents, nurse practitioners, and physician assistants. Our secondary objective was to increase physician feedback on alert utility and usability.

Methods

Context

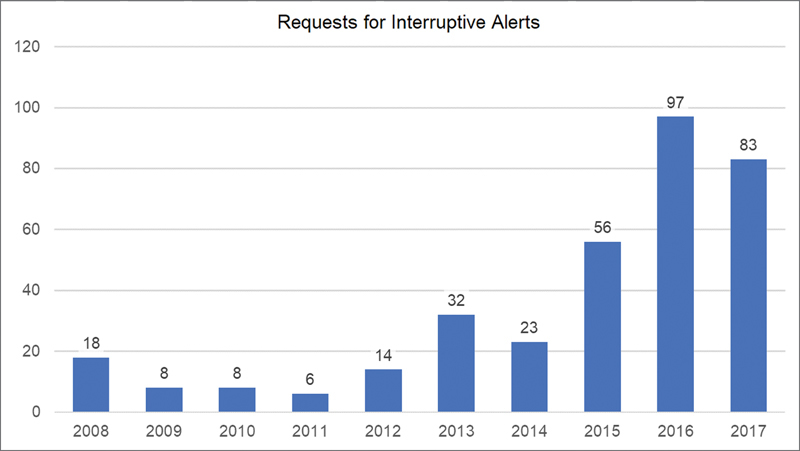

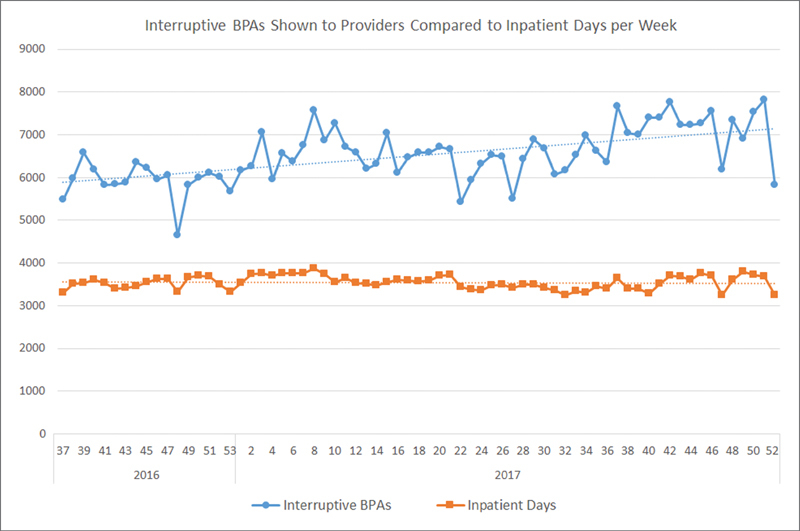

Nationwide Children's Hospital is an academic and free-standing children's hospital located in Columbus, Ohio; it implemented the Epic EHR system in 2005. Since 2008, the earliest year for which change request documentation is available, there has been an escalating number of requests from clinical and administrative groups within our system for CDS using BPAs. Although BPAs can be displayed in a noninterruptive manner such as an inline notification, many of the requests were for interruptive, “pop-up” versions ( Fig. 1 ). At the same time, the total volume of these interruptive alerts shown to providers had also been increasing year over year and, as of the end of 2017 when this project began, showed no signs of diminishing ( Fig. 2 ).

Fig. 1.

Requests for interruptive Best Practice Advisories per year.

Fig. 2.

Weekly volume of interruptive Best Practice Advisories shown to providers (defined as physicians, residents, fellows, nurse practitioners, and physician assistants) and total inpatient days as a marker of overall hospital volume with linear trendlines in the 16 months preceding our quality improvement project. This volume is not controlled per provider, but a global indicator of alert volume.

Planning the Intervention

In early 2018, we formed a multidisciplinary QI group to review and identify why we had increasing volumes of interruptive alerts. The group included attending physicians from several service areas, a resident physician, a nurse practitioner, and Epic analysts. As our first foray into reducing alerts, the group decided to focus specifically on reducing interruptive BPAs shown to providers. In this paper, we define interruptive alerts as BPAs displayed to providers that upon firing require an action (whether acceptance, acknowledgment, or dismissal) on the part of the provider to proceed with their workflow. Additionally, we excluded seasonal influenza vaccination alerts for this project as we felt they could potentially obscure the impact of our interventions. Because there is little existing data on establishing an appropriate or acceptable volume of alerts, the group chose an initial goal of reducing our total volume of alerts by 35% based on consensus. To identify failings in the current alert ecosystem, the group constructed an Ishikawa diagram of the potential reasons excess alerts were being shown to providers. The group then categorized these causes into four main themes: lack of clinical relevance, poorly built alerts, lack of governance, and increasing patient volume/acuity. Based upon these findings, a key driver diagram was developed to identify targets for interventions ( Fig. 3 ).

Fig. 3.

Key driver diagram. We established a focused goal for our QI project of reducing the number of interruptive alerts seen by providers by 35% within 6 months and sustaining this reduction for at least 3 months. Using the information from the Ishikawa exercise, we identified these five key drivers and designed several interventions to effect change in those areas. QI, quality improvement.

Interventions

Governance

A dedicated interruptive alert team (IAT), consisting of one physician informaticist and two Epic analysts, was established to act as the primary conduit for all interruptive alert build and changes for the Nationwide Children's Hospital system. All new interruptive alert requests require completion of a standard intake form focusing on several points:

- Current provider workflow description.
- Ideal future-state workflow with BPA description.
- Plans for monitoring the impact of desired alert.
- Data supporting the need for desired alert.

The IAT served as the intake point for all new alerts, deciding collaboratively on prioritization of requests as well as determining whether an interruptive alert or another form of CDS would be appropriate.

Monitoring

We implemented a QlikView (Qlik Technologies Inc., King of Prussia, Pennsylvania, United States) visualization tool that displays an interactive dashboard of all BPAs firing within our institution for a rolling 6-month window. Initial planning and strategy were performed using a static version of this report for the prior 18 months. Data included in this dashboard comprised encounter level information, basic patient demographic information, provider information, and provider-response information including actions taken and the unstructured information provided in the override reasons of alerts that allowed such entry.

Review of Top Alerts

Because many of our planned interventions were agnostic to the individual alert content, we prioritized interventions by addressing the highest volume interruptive alerts within the system to have the largest potential impact. In inspecting the top alerts, we used several different approaches to identify problems with the technical build. The first approach was to evaluate inclusion/exclusion criteria including applicable roles and provider locations (e.g., emergency department, ambulatory clinics, and inpatient).

The second approach utilized the monitoring dashboard to identify edge cases that happen infrequently and may not have been anticipated during the design phase to gain insight about potential build errors. In addition to monitoring alert activity from the perspective of the individual alert, we also sought to identify high-volume patients (patients for whom certain alerts were firing frequently) and high-volume providers (providers who received a significant proportion of specific alerts).
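
The per-patient and per-provider view described above can be sketched roughly as follows. This is a minimal illustration, not the dashboard's actual logic: the record fields (`alert_id`, `provider_id`, `patient_id`), the function name `top_share`, and the sample log are all hypothetical.

```python
from collections import Counter

def top_share(firings, key, alert_id):
    """Return (entity, share) for the patient or provider with the most firings of one alert.

    `key` is the field to group by, e.g. "provider_id" or "patient_id".
    """
    counts = Counter(f[key] for f in firings if f["alert_id"] == alert_id)
    total = sum(counts.values())
    if total == 0:
        return None, 0.0
    entity, n = counts.most_common(1)[0]
    return entity, n / total

# Hypothetical firing log; real data would come from the EHR reporting layer.
firings = [
    {"alert_id": "med_rec", "provider_id": "P1", "patient_id": "A"},
    {"alert_id": "med_rec", "provider_id": "P1", "patient_id": "A"},
    {"alert_id": "med_rec", "provider_id": "P1", "patient_id": "B"},
    {"alert_id": "med_rec", "provider_id": "P2", "patient_id": "A"},
    {"alert_id": "sepsis", "provider_id": "P3", "patient_id": "C"},
]

provider, provider_share = top_share(firings, "provider_id", "med_rec")
patient, patient_share = top_share(firings, "patient_id", "med_rec")
```

A large share concentrated in one patient or a few providers is only a prompt for manual review; it does not by itself indicate a build error.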

Additionally, acknowledgment reasons, free text entered by providers when overriding an alert, were reviewed manually to identify potential exceptions and problems with workflows. Lastly, we assessed each alert for clinical necessity and up-to-date content and alert logic, with subject matter experts contacted when necessary. We also validated with subject matter experts when changing alert logic or content significantly.

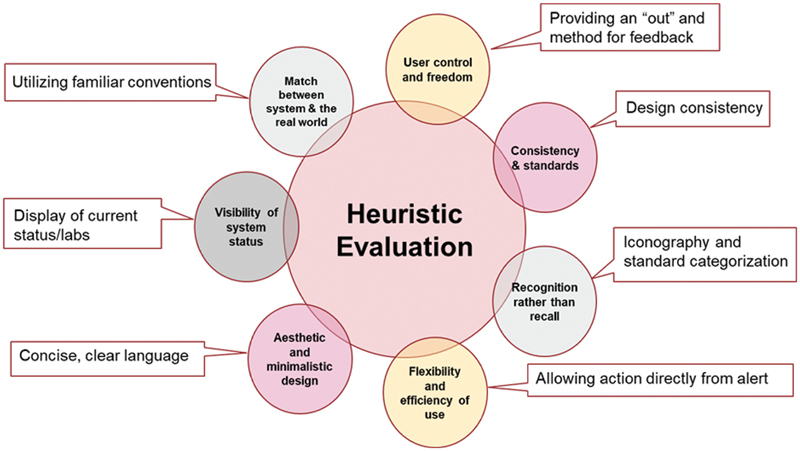

Usability

Drawing from Nielsen's heuristics for interface design, we redesigned the top alerts with the goal of being as easily interpretable and actionable as possible ( Fig. 4 ). 20 Alert visual content was simplified where possible, with concise yet clear language, use of iconography when appropriate, and displaying relevant information (e.g., laboratory results) when applicable. Because all new alerts were being funneled through the IAT, we were able to provide consistency in build style in those alerts as well.

Fig. 4.

Adaptation of Nielsen's web usability heuristics to alert design.

Feedback Mechanisms

Although some BPAs include a comment box for free-text feedback when overriding an alert, the objective of this box is not explicit and not all alerts provide this opportunity. To solicit more formal feedback, we designed a small badge in the corner of interruptive alerts asking the end user to provide positive or negative feedback, indicated by thumbs-up or thumbs-down icons. These icons open a REDCap survey with a single question to explain their satisfaction or dissatisfaction with the alert. REDCap is a secure and web-based software platform designed to support data capture for research studies. 21 22 This feedback was used by the IAT to further improve the alerts.

Timeline of Interventions

Alert governance including creating the IAT and intake form was initiated in October 2017. The monitoring system was initially stood up in November 2017. Initial alert changes first went into production in late March 2018. Because of the manifold nature of these changes, many alerts had changes implemented in a stepwise fashion; therefore, there are not specific implementation dates for alert-specific changes except in the cases of balancing metrics which are included in the related figures. The REDCap feedback survey was implemented during the sustain period of the project in early 2019.

Balancing Metrics

To assess whether our changes to reduce alert volume might affect the original goals of the BPAs, several alerts had balancing metrics followed during the intervention phase. One alert originally created for meaningful use focused on tobacco history review in patients aged ≥13 years. This alert was turned off completely given its historically low acceptance, and rates of marking tobacco history as reviewed were tracked subsequently. A second alert regarded cosignature of admission orders within 24 hours of admission (a regulatory requirement). Changes made to this alert included allowing permanent deferrals for consulting physicians, locking out the alert for 1 hour to prevent repeated firings, and changing the alert display as described above. We assessed the percentage of patients with their orders signed within 24 hours of admission before and after the interventions.
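
The deferral and lockout behavior described for the cosignature alert can be illustrated with a small sketch. It is not Epic configuration; the function `should_fire` and its parameters are hypothetical, and we assume here that the lockout is tracked per provider and patient.

```python
from datetime import datetime, timedelta

LOCKOUT = timedelta(hours=1)  # lockout window described in the text

def should_fire(last_shown, now, permanently_deferred=False, lockout=LOCKOUT):
    """Decide whether the alert may be shown again (illustrative logic only).

    last_shown: when this provider last saw the alert for this patient, or None.
    permanently_deferred: True if the provider (e.g., a consultant) deferred it for good.
    """
    if permanently_deferred:
        return False
    if last_shown is not None and now - last_shown < lockout:
        return False
    return True

first_seen = datetime(2018, 4, 2, 9, 0)
fires_after_30_min = should_fire(first_seen, first_seen + timedelta(minutes=30))
fires_after_2_hours = should_fire(first_seen, first_seen + timedelta(hours=2))
```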

Results

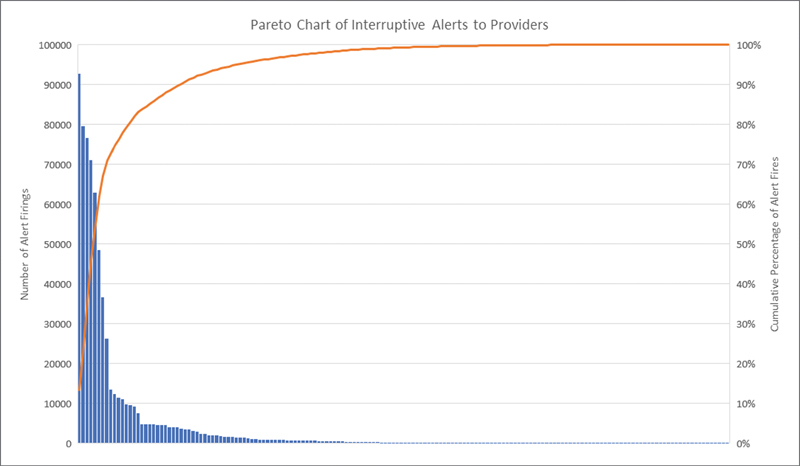

The Pareto chart in Fig. 5 demonstrates that the top 25 firing interruptive alerts accounted for 90% of the total volume of interruptive alerts ( Appendix A ), and the top 65 firing alerts accounted for 99%. This is despite having nearly 170 interruptive alerts targeting providers active in the production environment. The QI team decided to address these initial top 25 alerts as the first wave of improvements to reach our goal.

Fig. 5.

Pareto Chart. This chart, created using cumulative alert information from the 16 months preceding our QI project as seen in Fig. 2 , shows the individual number of firings for each interruptive alert as well as the cumulative total sorted from highest volume to lowest volume alerts. QI, quality improvement.
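
The prioritization behind a Pareto chart can be sketched in a few lines: sort alerts by volume, accumulate, and stop once a chosen share of the total is covered. The function name `pareto_cutoff` and the sample counts are hypothetical; only the 90% threshold comes from the text.

```python
def pareto_cutoff(counts, threshold=0.90):
    """Return the alerts, highest volume first, needed to reach `threshold` of all firings."""
    ranked = sorted(counts.items(), key=lambda kv: kv[1], reverse=True)
    total = sum(counts.values())
    selected, running = [], 0
    for name, n in ranked:
        # Stop once the alerts already selected cover the target share.
        if total == 0 or running / total >= threshold:
            break
        selected.append(name)
        running += n
    return selected

# Hypothetical firing counts per alert.
counts = {"A": 500, "B": 300, "C": 120, "D": 50, "E": 30}
priority_alerts = pareto_cutoff(counts)
```

With these illustrative counts, three of the five alerts cover at least 90% of firings, which mirrors how a small subset of alerts can dominate total volume.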

Appendix A. Description and categorization of the top 25 alerts by overall volume at initiation of project.

| Description | Category |
|---|---|
| Provider needs to review patient's tobacco history | Meaningful use |
| Provider needs to sign admission order | Compliance |
| Provider needs to reconcile home medications | Compliance |
| Patient is intraoperative, provider should not place orders for inpatient floor | Safety |
| Provider needs to document a hospital problem for an admitted patient | Meaningful use |
| Patient is admitted, provider should not place outpatient orders | Safety |
| Provider needs to reconcile orders on Medication Administration Record hold | Safety |
| Entered Hepatitis B vaccine is not due for this patient | Safety |
| NICU patient is eligible to start cue-based feeding | Quality of care |
| Reorder needed for restraints that are documented to be in place | Compliance |
| Patient is on antipsychotic medications and needs monitoring laboratory studies | Quality of care |
| Provider needs to complete discharge medication reconciliation | Compliance |
| Surgical patient needs to order perioperative antibiotics | Safety |
| Patient in ED is at high risk of sepsis and requires additional monitoring | Safety |
| Diabetes patient needs lipid monitoring laboratory studies | Quality of care |
| Entered rotavirus vaccine is not due for this patient | Safety |
| Patient has elevated creatinine while on nephrotoxic medications | Safety |
| Entered Prevnar vaccine is not due for this patient | Safety |
| Entered HiB vaccine is not due for this patient | Safety |
| Patient with congenital heart disease should receive additional health maintenance | Quality of care |
| Provider needs to discuss breastfeeding with parents of NICU patient | Quality of care |
| Patient in ED at risk for sepsis due for reassessment | Safety |
| Provider needs to notify pharmacy of total parenteral nutrition order placed after daily cutoff | Safety |
| Provider to place maintenance orders for patient awaiting admission for asthma | Safety |
| PICU patient is eligible to start enteral feeds | Quality of care |

Abbreviations: ED, emergency department; NICU, neonatal intensive care unit; PICU, pediatric intensive care unit.

Regarding targeting and inclusion criteria, we identified several changes to reduce inappropriate firings. We identified several alerts that targeted users because of their role/title, but not necessarily their actual workflow. For example, radiologists and pathologists have the role type of physician and frequently enter patient charts but may not be in the position to perform certain suggested actions. By reviewing alert specific data including actions taken based on specialty or department, we were able to identify clinical workflows that would likely benefit from exclusion from future alerts. We also identified several alerts where certain provider types had been inadvertently omitted (e.g., an alert intended for all providers, but the fellow role had not been included).
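
The two targeting problems described above (roles included without regard to workflow, and intended roles left out) can be expressed as simple set checks. This is an illustrative sketch only: the role and specialty labels, `is_targeted`, and `missing_roles` are hypothetical and do not reflect how alert criteria are stored in the EHR.

```python
# Roles counted as "providers" in this paper; the string labels are illustrative.
PROVIDER_ROLES = {
    "attending", "fellow", "resident", "nurse practitioner", "physician assistant",
}

# Hypothetical exclusion list: specialties whose workflow rarely allows the suggested action.
EXCLUDED_SPECIALTIES = {"radiology", "pathology"}

def is_targeted(role, specialty,
                included_roles=PROVIDER_ROLES,
                excluded_specialties=EXCLUDED_SPECIALTIES):
    """True if a user with this role and specialty should receive the alert."""
    return role in included_roles and specialty not in excluded_specialties

def missing_roles(included_roles, intended_roles=PROVIDER_ROLES):
    """Roles the alert was meant to reach but that its build leaves out."""
    return set(intended_roles) - set(included_roles)

# An alert intended for all providers whose build omitted the fellow role.
built_roles = {"attending", "resident", "nurse practitioner", "physician assistant"}
omitted = missing_roles(built_roles)
```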

Reviewing high-volume patients and high-volume providers also yielded several insights. We encountered one alert that had fired several hundred times on a single patient because the alert logic to stop further firings required nursing documentation that had not been performed. Because there was no transparency in the alert to let the provider know what was causing continued firings, the acknowledgment comments showed obvious frustration with the continued alerting. We found a second alert for which a handful of providers in a single specialty received nearly half of the alerts. We found that this particular alert directly conflicted with their intended workflow and was able to be amended to account for their process. Provider feedback also proved essential. In several of the alerts, the free-text acknowledgment reasons provided insights as to why providers found the alert unhelpful or not applicable, whether it was a clinical condition or situation that had simply not been accounted for in the original build.

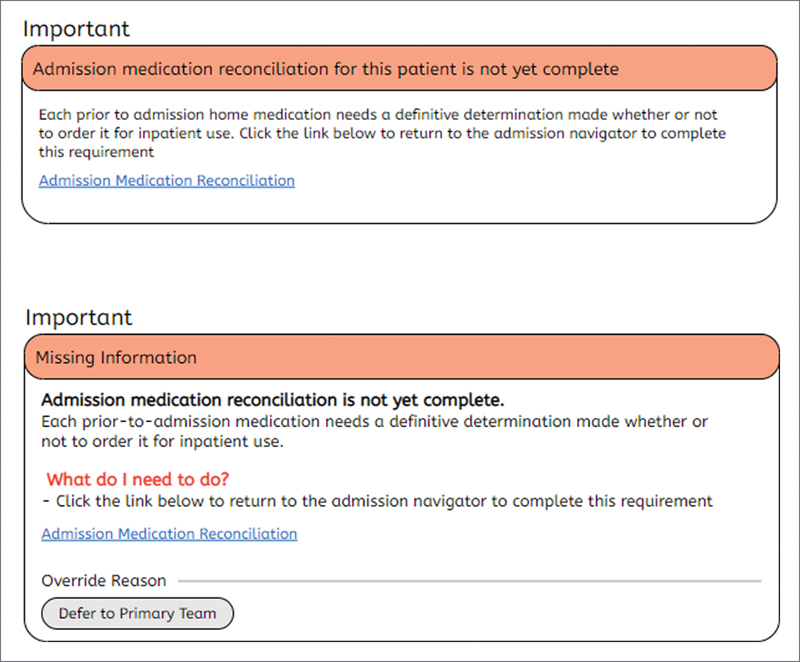

As an example of the usability revisions we made, we modified an existing alert recommending completion of the admission medication reconciliation ( Fig. 6 ). The initial alert had a relatively lengthy title and a short paragraph of plain text explaining the alert. The modifications included the following. The title was changed to a category of alert “missing information” to allow more rapid recognition of the content providers should expect (we utilized the same category for several alerts of similar content). We also emphasized why the provider was seeing the alert with bolded text to increase visibility of system status. Additionally, in all the alerts we revised, creation of a standard “what do I need to do?” section was included. If providers read nothing else, they would at least understand what steps are needed to be taken to complete the recommendation or to defer/acknowledge the alert.

Fig. 6.

Stylized depictions of initial version of alert before (above) and after (below) usability changes.

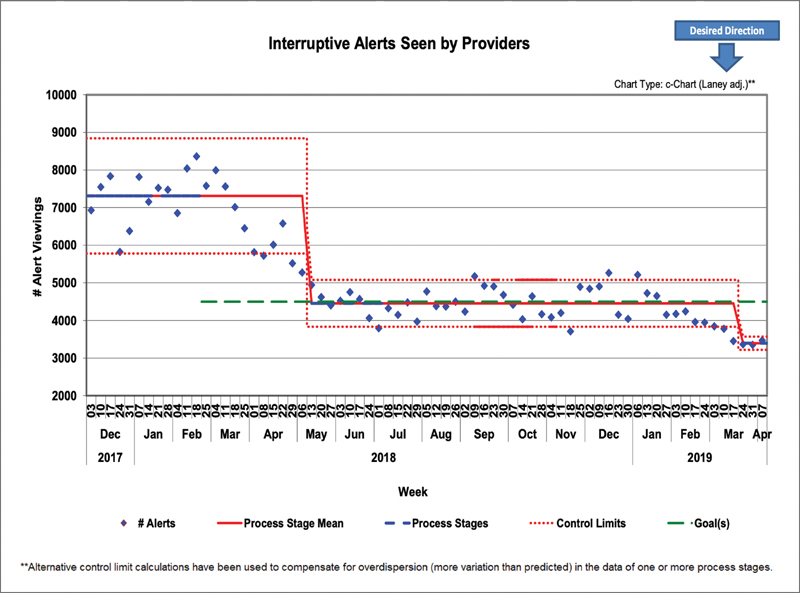

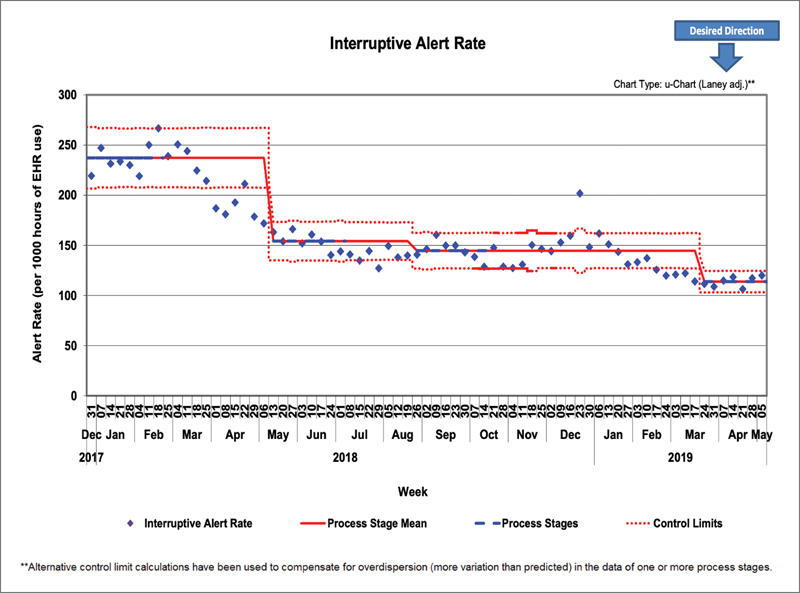

Our initial QI goal was to reduce the total volume of weekly interruptive alerts shown to providers by 35% from a baseline of 7,250 to 4,700 per week. We surpassed this goal, reaching a new baseline of 4,400 per week (a decrease of 39%) by October 2018 ( Fig. 7 ), which was followed by a further downward shift in the spring of 2019. This was despite the introduction of several new alerts during this period. During our project, we continued to look for a metric that would account for variations in patient volumes as well as a growing medical staff. Using Epic log-in records that document when provider sessions begin and end, we analyzed the alert volumes as a measure of alerts per 1,000 hours of time logged in ( Fig. 8 ). We found that even when controlling for time logged, we still achieved multiple baseline drops in our control chart, exceeding our initial goal of 35% reduction.

Fig. 7.

Control chart of total interruptive BPAs seen by providers per week. BPA, best practice advisory.

Fig. 8.

Control chart of interruptive BPAs seen by providers per 1,000 hours logged in as measured using log-in and log-out times. BPA, best practice advisory.
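
The arithmetic behind these measures can be sketched as follows. The percent reduction uses the weekly volumes reported in the text. The logged-in hours and the weekly series are hypothetical, and the individuals (XmR) chart is an assumption, since the type of control chart is not stated; the constant 2.66 is the standard XmR factor.

```python
def percent_reduction(baseline, current):
    """Percentage decrease from baseline to current."""
    return (baseline - current) / baseline * 100

def alerts_per_1000_hours(alerts, hours_logged_in):
    """Normalize alert volume by provider time logged into the EHR."""
    return alerts / hours_logged_in * 1000

def xmr_limits(values):
    """Lower limit, center line, and upper limit for an individuals (XmR) chart."""
    center = sum(values) / len(values)
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    mr_bar = sum(moving_ranges) / len(moving_ranges)
    return center - 2.66 * mr_bar, center, center + 2.66 * mr_bar

goal_pct = percent_reduction(7250, 4700)      # target stated in the text
achieved_pct = percent_reduction(7250, 4400)  # new baseline stated in the text

# Hypothetical: 20,000 logged-in provider hours in one week.
weekly_rate = alerts_per_1000_hours(4400, 20000)

# Hypothetical weekly rates used only to show the limit calculation.
lcl, center, ucl = xmr_limits([10, 12, 11, 13])
```

Points outside the limits, or sustained runs on one side of the center line, are what would justify recalculating the baseline as in the shifts described above.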

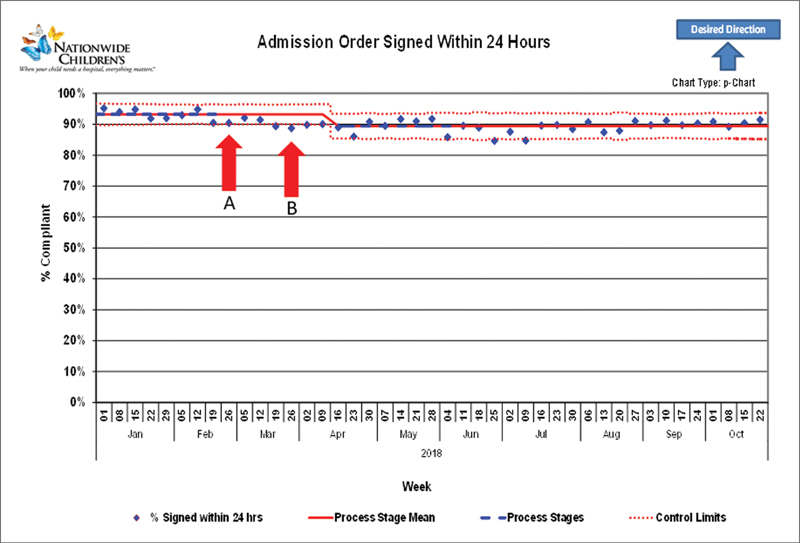

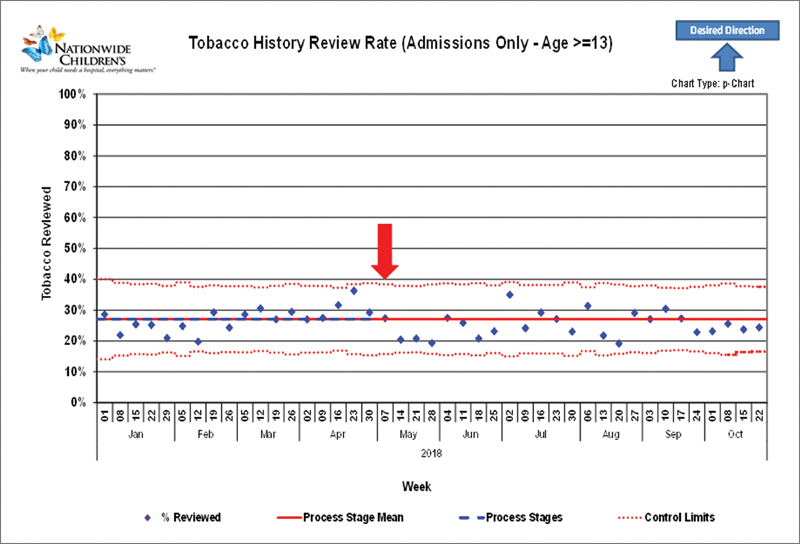

With regard to the balancing metrics, we did see a small downward shift in the percentage of admission orders signed within 24 hours after the initial changes ( Fig. 9 ). Interestingly, after turning off the tobacco history alert completely, there was no change in rates of tobacco history review ( Fig. 10 ).

Fig. 9.

Admission order balancing metric: Arrow A denotes the initial revision of the visual appearance of the alert. Arrow B denotes changes in alert restrictions.

Fig. 10.

Tobacco history alert balancing metric. This alert was turned completely off at week 18 of 2017 (arrow). There was no significant change in the percentage of patients 13 years or older with tobacco history reviewed.

Despite only being active for a short period, the REDCap feedback mechanism helped to identify multiple previously unrecognized build issues. For example, we found that emergency department providers were receiving an alert intended only for inpatient providers if they signed in after a patient was admitted because the alert was based on the patient's location only. This was quickly fixed. In a period of 6 months, we received a total of 127 feedback surveys, with 89 (70.1%) critical feedback responses and 38 (29.9%) positive responses regarding 14 of the alerts. Additionally, it has also provided a new avenue of communication between informaticists and front-line clinicians. More than one respondent has commented after being emailed for follow-up that they are glad to have confirmation that someone has heard their frustrations. This provider engagement is also evident in that several providers have left feedback on more than one alert, potentially signifying confidence in the feedback system.

Discussion

Improving patient care and safety has been one of the core missions of Nationwide Children's Hospital, and as such there is a strong emphasis on continuous QI. 23 24 25 Although interruptive alerts and other forms of CDS have frequently been used to support QI initiatives, there are limited instances of QI methodologies being used directly to improve the CDS itself. One study from researchers in Valencia, Spain used Lean Six Sigma and the Define-Measure-Analyze-Improve-Control (DMAIC) cycle methodology to reduce CPOE alerts by 28%. 26 In that study, they also prioritized high-volume alerts for review and made changes only involving 32 molecules to achieve their goals. The study by Simpao et al also used QI methods including a visual dashboard to review the most frequently triggered drug–drug interaction alerts, leading to deactivation of 63 alert rules. 27 In this study, we demonstrated that a systematic approach using QI tools can effect meaningful improvements in interruptive alert volume for providers. One of the factors most limiting to the improvement of CDS systems is the extensive labor often involved in manually reviewing alert and response appropriateness. 28 By using tools such as Pareto charts and live monitoring dashboards, we were able to better focus our limited resources toward changing alerts that would provide the most return. We were able to surpass our initial targeted reduction ahead of our initial deadline and sustain those interventions with a small focused team. This is consistent with previous studies demonstrating the importance of monitoring alert activities to identify abnormal behaviors and to strategize interventions. 27 29 30

One unique aspect to our use of the alert dashboard was to evaluate alerts from the perspective of the individual patient and provider to identify edge cases to guide our changes. Although we utilized the IHI's model for improvement methodology, these techniques of prioritizing interventions to maximize impact and reducing variability are common across multiple QI methodologies.

During our project, we made significant efforts to implement usability principles into our alert redesigns. Others have shown that usability factors affect the success of CDS systems. 31 32 33 34 35 Although we initially based our alert design changes upon Nielsen's usability heuristics, we found our changes aligned with many of the best practice guidelines published by other human factors research groups. 36 37 38 39 We received positive feedback responses from nearly one third of users who took the time to complete the feedback survey and we also received informal feedback from users that the alerts were clearer and more distinguishable. A recent publication regarding an embedded survey tool categorized only 2% of their feedback as positive. 40 One significant difference between our feedback tool and this previously published tool was using separate links to provide positive and negative feedback with associated iconography of the familiar thumbs-up and thumbs-down, respectively. We chose this design specifically so that users did not feel that the only feedback we were soliciting was negative.

Aaron et al described using natural language processing (NLP) to identify “cranky comments” provided in the free-text alert override comments. 41 Although manual review of these override comments was part of our process, this required significant resources without NLP. We found that using the embedded feedback survey provided a more focused venue for users to vent their frustration or to notify us when something had gone wrong. This not only allowed us to correct problems in the system but was a chance for outreach to our colleagues. The IAT reviews and responds to each feedback received, and in so doing, often opens a dialogue and appreciation for our efforts.

One of the most difficult questions encountered was how to balance reducing alert burden with the potential for negatively affecting patient care. In much of medicine, the benefits and risks of not doing a test or procedure are more concrete. By not showing an interruptive alert, we may reduce a provider's alert burden but could miss an opportunity to provide information the clinician needed to treat the patient correctly. This could lead to errors of either omission or commission. Multiple studies have shown EHR experience and specifically alert fatigue to be a risk factor or predictor of physician burnout. 42 However, it is not yet well established what specific aspect of alerting causes these cognitive and affective outcomes: total number of alerts, proportion of alerts that are incorrect or unhelpful, time density of alerts, or perhaps something else. Because there is not yet a well-established metric of alert burden, we initially used total alert volume as our measure. However, we quickly realized that this did not adequately reflect changes in patient volume or provider time in the system. For that reason, we decided to monitor alerts per provider time logged into the EHR. There is even the question of the term alert fatigue itself; is this truly alert fatigue or rather alert distrust based on prior experience? Bliss et al showed that humans exhibit probability matching behavior in response to alarms of varying reliability, quickly identifying those alerts that are of low reliability. 43 Perhaps it is not only the volume, but also the quality and reliability of these alerts that need to improve. There is much still to elucidate in the study of alert fatigue and in particular, how we measure and quantify it. As part of this, balancing metrics remain an important and necessary aspect of our pursuit to improve interruptive alerts.

Limitations

Although our initial aim was to reduce total alert volume seen by providers, we recognized early on that this was not an ideal metric. We therefore pursued tracking alerts per unit time logged into the Epic system as a more standardized measure. Although this does not account for idle time such as when a provider takes a phone call and leaves Epic open, we believe this could provide a more standard metric of total alert burden, a measure which has still not yet been well defined in the literature. We also recognize that alert fatigue is not simply a measure of number of alerts but also the usefulness of these alerts. Because of the inherent difficulty in measuring the usefulness of many individual alerts, our outcomes focused on the impact of alert volume as a measure of burden. Although some researchers have used alert override rate as a surrogate marker of an alert's effectiveness in previous studies, Baysari et al found that when users are in the state of alert overload, alerts are unlikely to even be read and considered and may be overridden simply as habit. 6 7 8 9 10 44

Another limitation inherent to many QI projects is that because of the nature of QI with multiple often simultaneous interventions, it is unclear how much effect these revisions had in improving provider acceptance individually. Because we were addressing aspects of 25 different alerts, for much of our intervention period we had multiple alerts undergoing multiple changes concurrently. While this expedited the process and allowed us to achieve our goal, it does limit our ability to know which changes were the most effective.

An additional limitation of our study is that, although we made significant efforts to ensure the alert logic for these alerts was correct, it is often difficult to assess the “correctness” of our alerts without manual review. Thus, a potential unintended consequence of our changes to increase provider receptiveness is that alerts that fire incorrectly could have an increased chance of being followed.

Lastly, much of our usability feedback was purely qualitative. We did not perform formal usability testing on revised alerts for this project, although we are exploring such testing for future work. While there exists a formal instrument for testing human-factors principles in medication-related alerts, I-MeDeSA, there is not currently an equivalent for custom alerts such as BPAs. 45

Conclusion

In summary, we found that QI methodologies can be successfully used to reduce interruptive alert volume for providers at a large, academic hospital system. QI methods allowed effective prioritization of efforts and guided our interventions. Further work is needed to define a more accurate measure of alert burden and to formally evaluate human-factors principles in custom alerts.

Clinical Relevance Statement

As CDS systems continue to expand, providers face an increasing number of alerts while performing clinical duties. Interventions such as usability improvements and better targeting of alerts, guided by QI methods, have the potential to optimize the value of these alerts.

Multiple Choice Questions

1. Reviewing the inclusion/exclusion criteria, alert logic, and other workings of interruptive alerts can be a time-intensive process. Which QI tool is best suited to help prioritize your approach to reviewing alerts?

a. Pareto chart.

b. Ishikawa exercise.

c. Control chart.

d. Swimlane diagram.

Correct Answer: The correct answer is option a. The Pareto chart helps to highlight the most important among a large set of factors that are contributing to your problem. By identifying the factors, in this case alerts, which account for the largest proportions, teams can more easily decide which alerts to address early on and make significant gains.
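The prioritization that a Pareto chart supports can be sketched in a few lines of Python: sort alerts by volume and keep the highest-volume alerts until their cumulative share reaches a chosen threshold. This is a minimal sketch only; the function name `pareto_cutoff`, the 90% default threshold, the alert names, and the counts are all hypothetical and do not represent the study's dashboard or data.

```python
from typing import Dict, List


def pareto_cutoff(alert_counts: Dict[str, int], threshold: float = 0.90) -> List[str]:
    """Return the highest-volume alerts whose cumulative share first reaches threshold."""
    total = sum(alert_counts.values())
    if total <= 0:
        return []
    selected: List[str] = []
    running = 0
    # Walk alerts from highest to lowest volume, accumulating their share of the total.
    for name, count in sorted(alert_counts.items(), key=lambda kv: kv[1], reverse=True):
        selected.append(name)
        running += count
        if running / total >= threshold:
            break
    return selected


# Invented weekly firing counts for five hypothetical alerts.
counts = {"alert_A": 500, "alert_B": 300, "alert_C": 120, "alert_D": 50, "alert_E": 30}
priority = pareto_cutoff(counts)
print(priority)  # ['alert_A', 'alert_B', 'alert_C']
```

Here three of the five invented alerts account for 92% of the volume, so a review team could start with those three before turning to the long tail.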

2. Clinically unhelpful or mistargeted interruptive alerts can lead to which of the following:

a. User frustration with the EHR system.

b. Decreased acceptance of future alerts.

c. Potential medical errors because of interruptions.

d. All of the above.

Correct Answer: The correct answer is option d. Unhelpful/mistargeted alerts are associated with physician burnout and frustration as well as decreased receptiveness to future alerts. When alerts are interruptive, they also pose the risk of contributing to medical errors by increasing cognitive load.

Conflict of Interest

None declared.

Protection of Human and Animal Subjects

Activities in this project were designed solely for evaluation of process and QI and did not require Institutional Review Board approval.

References

- 1.Henry J, Pylypchuk Y, Searcy T, Patel V. Adoption of Electronic Health Record Systems among U.S. Non-Federal Acute Care Hospital: 2008–2015. Washington, DC: The Office of the National Coordinator for Health Information Technology; 2016. Available at: https://dashboard.healthit.gov/evaluations/data-briefs/non-federal-acute-care-hospital-ehr-adoption-2008-2015.php [Google Scholar]

- 2.Ash J S, Sittig D F, Campbell E M, Guappone K P, Dykstra R H. Some unintended consequences of clinical decision support systems. AMIA Annu Symp Proc. 2007:26–30. PMCID: PMC2813668 [PMC free article] [PubMed] [Google Scholar]

- 3.Billings C E. Aviation automation: the search for a human-centered approach. Mahwah, NJ: Lawrence Erlbaum Associates; 1997:103, 105. [Google Scholar]

- 4.Walker G H, Waterfield S, Thompson P. All at sea: An ergonomic analysis of oil production platform control rooms. Int J Ind Ergon. 2014;44(05):723–731. [Google Scholar]

- 5.Association for the Advancement of Medical Instrumentation. A siren call to action: priority issues from the medical device alarms summit. Arlington, VA: Association for the Advancement of Medical Instrumentation; 2011. Available at: https://www.aami.org/productspublications/horizonsissue.aspx?ItemNumber=4223 [Google Scholar]

- 6.Ariosto D. Factors contributing to CPOE opiate allergy alert overrides. AMIA Annu Symp Proc. 2014;2014:256–265. [PMC free article] [PubMed] [Google Scholar]

- 7.Topaz M, Seger D L, Lai K et al. High override rate for opioid drug-allergy interaction alerts: current trends and recommendations for future. Stud Health Technol Inform. 2015;216:242–246. [PMC free article] [PubMed] [Google Scholar]

- 8.Humphrey K, Jorina M, Harper M, Dodson B, Kim S Y, Ozonoff A. An investigation of drug-drug interaction alert overrides at a pediatric hospital. Hosp Pediatr. 2018;8(05):293–299. doi: 10.1542/hpeds.2017-0124. [DOI] [PubMed] [Google Scholar]

- 9.Nanji K C, Seger D L, Slight S P et al. Medication-related clinical decision support alert overrides in inpatients. J Am Med Inform Assoc. 2018;25(05):476–481. doi: 10.1093/jamia/ocx115. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Zenziper Straichman Y, Kurnik D, Matok I et al. Prescriber response to computerized drug alerts for electronic prescriptions among hospitalized patients. Int J Med Inform. 2017;107:70–75. doi: 10.1016/j.ijmedinf.2017.08.008. [DOI] [PubMed] [Google Scholar]

- 11.Rayo M F, Kowalczyk N, Liston B W, Sanders E B, White S, Patterson E S. Comparing the effectiveness of alerts and dynamically annotated visualizations (DAVs) in improving clinical decision making. Hum Factors. 2015;57(06):1002–1014. doi: 10.1177/0018720815585666. [DOI] [PubMed] [Google Scholar]

- 12.Westbrook J I, Coiera E, Dunsmuir W T et al. The impact of interruptions on clinical task completion. Qual Saf Health Care. 2010;19(04):284–289. doi: 10.1136/qshc.2009.039255. [DOI] [PubMed] [Google Scholar]

- 13.Grundgeiger T, Sanderson P. Interruptions in healthcare: theoretical views. Int J Med Inform. 2009;78(05):293–307. doi: 10.1016/j.ijmedinf.2008.10.001. [DOI] [PubMed] [Google Scholar]

- 14.Ashcroft D M, Quinlan P, Blenkinsopp A. Prospective study of the incidence, nature and causes of dispensing errors in community pharmacies. Pharmacoepidemiol Drug Saf. 2005;14(05):327–332. doi: 10.1002/pds.1012. [DOI] [PubMed] [Google Scholar]

- 15.Westbrook J I, Woods A, Rob M I, Dunsmuir W T, Day R O. Association of interruptions with an increased risk and severity of medication administration errors. Arch Intern Med. 2010;170(08):683–690. doi: 10.1001/archinternmed.2010.65. [DOI] [PubMed] [Google Scholar]

- 16.The Leapfrog Group. Clinical Decision Support Related to the CPOE Evaluation Tool. [PDF] Available at: https://www.leapfroggroup.org/survey-materials/survey-and-cpoe-materials. Accessed July 29, 2019

- 17.Kaplan A. San Francisco, CA: Chandler Pub. Co.; 1964. The conduct of inquiry; methodology for behavioral science. [Google Scholar]

- 18.Institute for Healthcare Improvement. Quality Improvement Essentials Toolkit. 2017. Available at: http://www.ihi.org/resources/Pages/Tools/Quality-Improvement-Essentials-Toolkit.aspx

- 19.Scoville R, Little K. Comparing Lean and Quality Improvement. Cambridge, Massachusetts: Institute for Healthcare Improvement; 2014. Available at: http://www.ihi.org/resources/Pages/IHIWhitePapers/ComparingLeanandQualityImprovement.aspx [Google Scholar]

- 20.Nielsen J. Enhancing the explanatory power of usability heuristics. Human Factors in Computing Systems, CHI '94 Conference Proceedings - Celebrating Interdependence. 1994:152–158. Available at: https://dlnext.acm.org/doi/abs/10.1145/191666.191729

- 21.Harris P A, Taylor R, Thielke R, Payne J, Gonzalez N, Conde J G. Research electronic data capture (REDCap)--a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42(02):377–381. doi: 10.1016/j.jbi.2008.08.010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Harris P A, Taylor R, Minor B L et al. The REDCap consortium: Building an international community of software platform partners. J Biomed Inform. 2019;95:103208. doi: 10.1016/j.jbi.2019.103208. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Brilli R J, McClead R E, Jr, Davis T, Stoverock L, Rayburn A, Berry J C. The Preventable Harm Index: an effective motivator to facilitate the drive to zero. J Pediatr. 2010;157(04):681–683. doi: 10.1016/j.jpeds.2010.05.046. [DOI] [PubMed] [Google Scholar]

- 24.Minneci P C, Brilli R J. The power of “we”: successful quality improvement requires a team approach. Pediatr Crit Care Med. 2013;14(05):551–553. doi: 10.1097/PCC.0b013e318291737f. [DOI] [PubMed] [Google Scholar]

- 25.Brilli R J, Davis J T. Pediatric quality and safety come of age. J Healthc Qual. 2018;40(02):67–68. doi: 10.1097/JHQ.0000000000000136. [DOI] [PubMed] [Google Scholar]

- 26.Cuéllar Monreal M J, Reig Aguado J, Font Noguera I, Poveda Andrés J L. Reduction in alert fatigue in an assisted electronic prescribing system, through the Lean Six Sigma methodology. Farm Hosp. 2017;41(01):14–30. doi: 10.7399/fh.2017.41.1.10434. [DOI] [PubMed] [Google Scholar]

- 27.Simpao A F, Ahumada L M, Desai B R et al. Optimization of drug-drug interaction alert rules in a pediatric hospital's electronic health record system using a visual analytics dashboard. J Am Med Inform Assoc. 2015;22(02):361–369. doi: 10.1136/amiajnl-2013-002538. [DOI] [PubMed] [Google Scholar]

- 28.McCoy A B, Thomas E J, Krousel-Wood M, Sittig D F. Clinical decision support alert appropriateness: a review and proposal for improvement. Ochsner J. 2014;14(02):195–202. [PMC free article] [PubMed] [Google Scholar]

- 29.Wright A, Hickman T T, McEvoy D et al. Analysis of clinical decision support system malfunctions: a case series and survey. J Am Med Inform Assoc. 2016;23(06):1068–1076. doi: 10.1093/jamia/ocw005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Zimmerman C R, Jackson A, Chaffee B, O'Reilly M. A dashboard model for monitoring alert effectiveness and bandwidth. AMIA Annu Symp Proc. 2007:1176. [PubMed] [Google Scholar]

- 31.Czock D, Konias M, Seidling H M et al. Tailoring of alerts substantially reduces the alert burden in computerized clinical decision support for drugs that should be avoided in patients with renal disease. J Am Med Inform Assoc. 2015;22(04):881–887. doi: 10.1093/jamia/ocv027. [DOI] [PubMed] [Google Scholar]

- 32.Horsky J, Drucker E A, Ramelson H Z. Higher accuracy of complex medication reconciliation through improved design of electronic tools. J Am Med Inform Assoc. 2018;25(05):465–475. doi: 10.1093/jamia/ocx127. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Kawamoto K, Houlihan C A, Balas E A, Lobach D F. Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ. 2005;330(7494):765. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Melton B L, Zillich A J, Russell S A et al. Reducing prescribing errors through creatinine clearance alert redesign. Am J Med. 2015;128(10):1117–1125. doi: 10.1016/j.amjmed.2015.05.033. [DOI] [PubMed] [Google Scholar]

- 35.Miller K, Mosby D, Capan M et al. Interface, information, interaction: a narrative review of design and functional requirements for clinical decision support. J Am Med Inform Assoc. 2018;25(05):585–592. doi: 10.1093/jamia/ocx118. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Russ A L, Zillich A J, McManus M S, Doebbeling B N, Saleem J J. A human factors investigation of medication alerts: barriers to prescriber decision-making and clinical workflow. AMIA Annu Symp Proc. 2009;2009:548–552. [PMC free article] [PubMed] [Google Scholar]

- 37.Phansalkar S, Edworthy J, Hellier E et al. A review of human factors principles for the design and implementation of medication safety alerts in clinical information systems. J Am Med Inform Assoc. 2010;17(05):493–501. doi: 10.1136/jamia.2010.005264. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Horsky J, Schiff G D, Johnston D, Mercincavage L, Bell D, Middleton B. Interface design principles for usable decision support: a targeted review of best practices for clinical prescribing interventions. J Biomed Inform. 2012;45(06):1202–1216. doi: 10.1016/j.jbi.2012.09.002. [DOI] [PubMed] [Google Scholar]

- 39.Middleton B, Bloomrosen M, Dente M A et al. Enhancing patient safety and quality of care by improving the usability of electronic health record systems: recommendations from AMIA. J Am Med Inform Assoc. 2013;20(e1):e2–e8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Rubins D, Dutta S, Wright A, Zuccotti G. Continuous improvement of clinical decision support via an embedded survey tool. Stud Health Technol Inform. 2019;264:1763–1764. doi: 10.3233/SHTI190636. [DOI] [PubMed] [Google Scholar]

- 41.Aaron S, McEvoy D S, Ray S, Hickman T T, Wright A. Cranky comments: detecting clinical decision support malfunctions through free-text override reasons. J Am Med Inform Assoc. 2019;26(01):37–43. doi: 10.1093/jamia/ocy139. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Gregory M E, Russo E, Singh H. Electronic health record alert-related workload as a predictor of burnout in primary care providers. Appl Clin Inform. 2017;8(03):686–697. doi: 10.4338/ACI-2017-01-RA-0003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Bliss J P, Gilson R D, Deaton J E. Human probability matching behaviour in response to alarms of varying reliability. Ergonomics. 1995;38(11):2300–2312. doi: 10.1080/00140139508925269. [DOI] [PubMed] [Google Scholar]

- 44.Baysari M T, Tariq A, Day R O, Westbrook J I. Alert override as a habitual behavior - a new perspective on a persistent problem. J Am Med Inform Assoc. 2017;24(02):409–412. doi: 10.1093/jamia/ocw072. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Zachariah M, Phansalkar S, Seidling H M et al. Development and preliminary evidence for the validity of an instrument assessing implementation of human-factors principles in medication-related decision-support systems--I-MeDeSA. J Am Med Inform Assoc. 2011;18(Suppl 1):i62–i72. doi: 10.1136/amiajnl-2011-000362. [DOI] [PMC free article] [PubMed] [Google Scholar]