Abstract

Imaging biomarkers are being rapidly developed for early diagnosis and staging of disease. The development of these biomarkers requires advances in both image acquisition and analysis. Detecting and segmenting objects from images are often the first steps in quantitative measurement of these biomarkers. The challenges of detecting objects in images, particularly small objects known as blobs, include low image resolution, image noise and overlap between the blobs. The Difference of Gaussian (DoG) detector has been used to overcome these challenges in blob detection. However, the DoG detector is susceptible to over-detection and must be refined for robust, reproducible detection in a wide range of medical images. In this research, we propose a blob detector with joint constraints from U-Net, a deep learning model, and Hessian analysis, to overcome these problems and identify true blobs in noisy medical images. We evaluate this approach, UH-DoG, using a public 2D fluorescent dataset for cell nucleus detection and a 3D kidney magnetic resonance imaging dataset for glomerulus detection. We then compare this approach to methods in the literature. While comparable to the four comparison methods on recall, UH-DoG outperforms them on both precision and F-score.

Subject terms: Image processing, Diagnostic markers, Glomerular diseases

Introduction

There is great interest in tailoring diagnostic and therapeutic tools to individual patients. This concept reflects the growing recognition that there is significant variability between individuals. As therapies focus on molecular targets, diagnostic medical imaging tools must reveal focal pathologies and the effects of therapy in each patient. High-resolution object detection and image segmentation are thus critical to obtaining meaningful data in a heterogeneous image.

In image analysis, detection is used to identify objects such as organs and tumors, and segmentation is used to isolate the objects from an image. While large objects can often be automatically or semi-automatically isolated, small objects (blobs) are difficult to detect and segment. Blobs can vary in size and location in images. Examples of blobs include cells or cell nuclei in images from optical microscopy1, exudative lesions in images of the retina2, breast lesions in ultrasound images3, and glomeruli in magnetic resonance (MR) images of the kidney4–6. Major challenges to detecting these blobs include low image resolution and high image noise. The small blobs are often numerous and can overlap each other. Many approaches have been proposed for blob detection7–9, of which intensity thresholding is among the most common10. Intensity thresholding assumes that the blobs have consistently different intensities from the background. Global differences can be addressed with a fixed threshold and local differences can be addressed with an adaptive threshold11,12. However, the assumptions required for consistent thresholding are often violated, and thresholding alone can lead to erroneous detection or segmentation. To address this, researchers have proposed multi-step pipelines13,14 in which thresholding is only the first step. Intensity-based features are then derived using filters for improved detection. One popular class of filters is based on mathematical morphology15,16. Operators such as erosion, dilation, opening and closing allow geometrical and topological properties of objects to be analyzed. This approach often begins with selected seed points in the image and iteratively adds connected points to form labeled regions. Mathematical morphology is preferred when the blobs are relatively large in size and small in number. Weaknesses of this approach include the tendency to under-segment and diminished performance in the presence of noise. 
Under-segmentation occurs when multiple blobs within close proximity are detected as one, resulting in an erroneously low detected number. Another type of filter is based on space transformation. For example, Radial-Symmetry17, a point detector for small blobs, uses radially symmetric space as a transformation space to detect radially symmetric blobs. SIFT18, SURF19 and BRISK20 are region detectors. Each of the region detectors extracts scale invariant features to detect small objects but may suffer from poor performance in optical imaging21. Recently, the Laplacian of Gaussian (LoG) detector22,23, from scale space theory, has attracted attention in blob detection8,24. Similar to the radially symmetric detector, the LoG detector is unreliable in detecting rotationally asymmetric blobs. To solve this, LoG extensions have been proposed, including the Difference of Gaussian (DoG)18,25–27 and the Generalized Laplacian of Gaussian (gLoG)21. While each approach detects small blobs to some extent, non-blob objects are detected as false blob candidates resulting in over-detection. A post-pruning procedure can remove false blob candidates, but results have been inconsistent28.

Here we focus on detecting individual glomeruli in MR images of the kidney as a specific blob detection problem. To date, most biomarkers of kidney pathology have come from histology using destructive techniques that estimate glomerular number29–31. A non-destructive imaging approach to measuring nephron endowment provides a new marker for renal health and susceptibility to kidney disease. Cationic ferritin enhanced MRI (CFE-MRI) enables the detection of glomeruli in animals32,33 and in human kidneys5,32–34. Because each glomerulus is associated with a nephron, CFE-MRI may provide an important imaging marker to detect changes in the number of nephrons and susceptibility to renal and cardiovascular disease5. Glomerulus detection by CFE-MRI presents difficulties because glomeruli are small and have a spatial frequency similar to image noise. Zhang et al. developed the Hessian-based Laplacian of Gaussian (HLoG) detector1 and the Hessian-based Difference of Gaussian (HDoG) detector28 to automatically detect glomeruli in CFE-MR images. They employed the LoG or DoG to smooth the images, followed by Hessian analysis of each voxel for pre-segmentation. Since LoG and DoG suffer from over-detection, a Variational Bayesian Gaussian Mixture Model (VBGMM) was implemented as a final step. LoG and DoG were the first two detectors applied to MR images of the kidney to identify glomeruli. However, deriving Hessian-based features from each blob candidate is computationally expensive, limiting high-throughput studies. In addition, unsupervised learning using the VBGMM in the post-pruning procedure requires a number of carefully tuned parameters for optimal clustering. Here we propose a new approach, termed UH-DoG, which applies joint constraints from spatial probability maps derived from U-Net, a deep learning model, and Hessian convexity maps derived from Hessian analysis on the DoG detector. 
The theoretical foundation of Hessian analysis guarantees that pre-segmentation will recognize all true convex blobs and some non-blob convex objects, resulting in a blob superset. Joining probability maps allows us to distinguish true blobs from the superset. The joint-constraint extension of the detector requires no post-pruning and thus is robust, generalizable and computationally efficient.

Within the field of deep learning, the Convolutional Neural Network (CNN) has been successfully implemented in medical imaging applications ranging from object detection and segmentation to classification35–37. The first generation of CNN models was used to classify images through fully connected layers. Shelhamer et al.38 first proposed a Fully Convolutional Network (FCN) that converts the fully connected layers to deconvolutional layers and provides a dense class map for an input image of arbitrary size. The FCN changes “image-label” mapping to “pixel/voxel-label” mapping for object detection and image segmentation. One limitation of the FCN for medical imaging is the need for large training datasets. A lightweight FCN model, the U-Net39, employs a modified FCN architecture that requires fewer training images yet yields precise, fast segmentation. U-Net has been implemented in various medical segmentation tasks such as nucleus, cell, and breast lesion segmentation40–42, all drawn from limited datasets. The U-Net yields a probability map where each pixel or voxel indicates the likelihood of being within the imaged object. However, based on our previous study43, U-Net does not reliably separate glomeruli within close proximity. Therefore, we choose to adopt the probability map as part of UH-DoG in conjunction with Hessian analysis for glomerulus detection from CFE-MR images.

There are three main advantages of the UH-DoG method. First, a global blob likelihood constraint from the U-Net probability map reduces over-detection by DoG. Second, a local convex constraint from the Hessian convexity map reduces under-segmentation. Third, integrating the probability map constraint with the Hessian convexity map eliminates the need for post-pruning. To validate the performance of UH-DoG, four methods were chosen from the literature: HLoG1, gLoG21, LoG22, and Radial-Symmetry17. We tested these on a dataset of 2D fluorescent images (n = 200) where the locations of blobs were known. UH-DoG outperformed the other four methods in F-score and performed comparably to them in recall. Next, we compared UH-DoG against the HDoG method on a 3D kidney MR dataset. The differences between UH-DoG and HDoG were negligible, but the average computation time of UH-DoG was 35% shorter than that of HDoG.

Methods

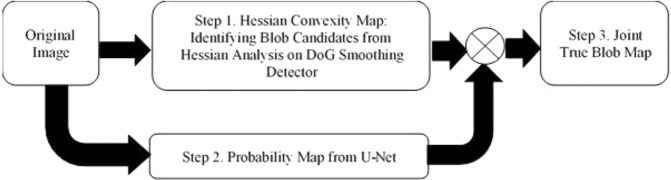

We propose UH-DoG, a joint constraint-based detector for glomeruli detection. UH-DoG consists of three steps (Fig. 1). Step 1 is to use the Difference of Gaussian (DoG) to smooth the images, followed by Hessian analysis to identify possible blob candidates based on local convexity. Step 2 is to use a trained U-Net to generate a probability map, which captures the most likely blob locations. Step 3 is to combine the probability map from Step 2 with blob candidates from Step 1 as joint constraints to identify true blobs. Each step is discussed in detail in the following sections.

Figure 1.

Proposed UH-DoG for glomerulus identification.

Hessian analysis and Hessian convexity map

Before implementing Hessian analysis, DoG is used to smooth the images. By employing a convolution operator, DoG can filter image noise and enhance objects at the selected scale24. DoG is a fast approximation of the LoG filter to highlight blob structure4 and is thus computationally efficient18.

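Let a 3D image be $f: \mathbb{R}^3 \to \mathbb{R}$. The scale-space representation $L(x, y, z; \sigma)$ at point (x, y, z), with scale parameter σ, is the convolution of image f(x, y, z) with the Gaussian kernel $G(x, y, z; \sigma)$:

$$L(x, y, z; \sigma) = G(x, y, z; \sigma) * f(x, y, z) \tag{1}$$

where * is the convolution operator and the Gaussian kernel is $G(x, y, z; \sigma) = \frac{1}{(\sqrt{2\pi}\,\sigma)^3} e^{-\frac{x^2 + y^2 + z^2}{2\sigma^2}}$. The Laplacian of $L(x, y, z; \sigma)$ is:

$$\nabla^2 L(x, y, z; \sigma) = \frac{\partial^2 L}{\partial x^2} + \frac{\partial^2 L}{\partial y^2} + \frac{\partial^2 L}{\partial z^2} \tag{2}$$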

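According to18, $\sigma \nabla^2 G = \partial G / \partial \sigma$. Approximating the partial derivative by a one-sided difference quotient, the DoG approximation of LoG is:

$$\nabla^2 L(x, y, z; \sigma) \approx \frac{1}{\sigma} \cdot \frac{G(x, y, z; \sigma + \Delta\sigma) - G(x, y, z; \sigma)}{\Delta\sigma} * f(x, y, z) \tag{3}$$

To locate an optimum scale for the blobs, similar to1, we add γ-normalization to form the normalized DoG detector $\mathrm{DoG}_{nor}(x, y, z; \sigma)$, which is:

$$\mathrm{DoG}_{nor}(x, y, z; \sigma) = \sigma^{\gamma - 1} \, \frac{G(x, y, z; \sigma + \Delta\sigma) - G(x, y, z; \sigma)}{\Delta\sigma} * f(x, y, z) \tag{4}$$

where γ is introduced to automatically determine the optimum scale for the blobs. We set γ to 2 here. For details on tuning γ, refer to1. The normalized DoG transformation underlies Hessian-based convexity analysis to detect blobs.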
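The normalized DoG transformation can be sketched in a few lines. This is a minimal illustration rather than the authors' implementation: it assumes SciPy's `gaussian_filter` for the Gaussian smoothing, the function name `normalized_dog` is hypothetical, and the default values of γ and Δσ follow the settings quoted in the text.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def normalized_dog(image, sigma, gamma=2.0, delta_sigma=0.001):
    """Gamma-normalized difference of Gaussians at a single scale.

    Computes sigma**(gamma - 1) * (L(sigma + delta_sigma) - L(sigma)) / delta_sigma,
    where L(s) is the image smoothed by a Gaussian of standard deviation s.
    Works for 2D and 3D arrays because gaussian_filter is N-dimensional.
    """
    image = np.asarray(image, dtype=np.float64)
    smooth_hi = gaussian_filter(image, sigma=sigma + delta_sigma)
    smooth_lo = gaussian_filter(image, sigma=sigma)
    return sigma ** (gamma - 1.0) * (smooth_hi - smooth_lo) / delta_sigma


# Toy example (assumption): one dark Gaussian blob on a bright background.
yy, xx = np.mgrid[-16:17, -16:17]
toy_image = 1.0 - np.exp(-(xx ** 2 + yy ** 2) / (2.0 * 2.0 ** 2))
toy_response = normalized_dog(toy_image, sigma=2.0)
```

In this toy case the dark blob becomes a bright peak in the transformed image, which is the situation the Hessian analysis in the next step is meant to pick up.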

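After the image is smoothed by the normalized DoG, for a voxel (x, y, z) in the normalized DoG image at scale σ, the Hessian matrix for this voxel is:

$$H(\mathrm{DoG}_{nor}(x, y, z; \sigma)) = \begin{bmatrix} \dfrac{\partial^2 \mathrm{DoG}_{nor}}{\partial x^2} & \dfrac{\partial^2 \mathrm{DoG}_{nor}}{\partial x \partial y} & \dfrac{\partial^2 \mathrm{DoG}_{nor}}{\partial x \partial z} \\ \dfrac{\partial^2 \mathrm{DoG}_{nor}}{\partial y \partial x} & \dfrac{\partial^2 \mathrm{DoG}_{nor}}{\partial y^2} & \dfrac{\partial^2 \mathrm{DoG}_{nor}}{\partial y \partial z} \\ \dfrac{\partial^2 \mathrm{DoG}_{nor}}{\partial z \partial x} & \dfrac{\partial^2 \mathrm{DoG}_{nor}}{\partial z \partial y} & \dfrac{\partial^2 \mathrm{DoG}_{nor}}{\partial z^2} \end{bmatrix} \tag{5}$$

In a normalized DoG-transformed 3D image, each voxel of a transformed bright blob has a negative definite Hessian matrix28. We define a binary indicator matrix, $I(x, y, z; \sigma)$, termed the Hessian convexity map: $I(x, y, z; \sigma) = 1$ when $H(\mathrm{DoG}_{nor}(x, y, z; \sigma))$ is negative definite; otherwise, $I(x, y, z; \sigma) = 0$.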
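As a rough illustration of the negative-definiteness test, the sketch below builds the Hessian with finite differences (`np.gradient`) and checks that the largest eigenvalue at each voxel is negative. The finite-difference scheme and the function name `hessian_convexity_map` are assumptions made for this example; the original work may compute the derivatives differently.

```python
import numpy as np


def hessian_convexity_map(response):
    """Return 1 where the finite-difference Hessian is negative definite, else 0.

    `response` is a 2D or 3D array, e.g. a normalized DoG-transformed image.
    """
    response = np.asarray(response, dtype=np.float64)
    ndim = response.ndim
    first = np.gradient(response)  # one first-derivative array per axis
    hessian = np.empty(response.shape + (ndim, ndim))
    for i in range(ndim):
        second = np.gradient(first[i])  # derivatives of d/dx_i along every axis
        for j in range(ndim):
            hessian[..., i, j] = second[j]
    # Symmetrize: mixed finite differences can differ slightly near the borders.
    hessian = 0.5 * (hessian + np.swapaxes(hessian, -1, -2))
    eigenvalues = np.linalg.eigvalsh(hessian)  # ascending order per voxel
    # Negative definite means that even the largest eigenvalue is below zero.
    return (eigenvalues[..., -1] < 0).astype(np.uint8)
```

Checking the largest eigenvalue is equivalent to testing negative definiteness of a symmetric matrix; Sylvester's criterion on the leading minors would be an alternative that avoids the eigen-decomposition.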

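To determine a single optimum scale σ*, the maximum value of the normalized DoG is used here28. Let the average DoG value per blob candidate voxel, $B_{DoG}$, be:

$$B_{DoG}(\sigma) = \frac{\sum_{(x, y, z)} I(x, y, z; \sigma) \, \mathrm{DoG}_{nor}(x, y, z; \sigma)}{\sum_{(x, y, z)} I(x, y, z; \sigma)} \tag{6}$$

We have $\sigma^* = \arg\max_{\sigma} B_{DoG}(\sigma)$. σ* is used to generate the optimum Hessian convexity map $I(x, y, z; \sigma^*)$. This map is the local convexity constraint for detecting the convex blob regions. The result is a set of convex objects including all true blobs and some non-blob convex objects.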
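The scale selection described above amounts to averaging the normalized DoG over the candidate voxels at each scale and keeping the scale with the largest average. The following sketch assumes that the per-scale DoG responses and convexity maps have already been computed; `select_optimum_scale` is a hypothetical helper name.

```python
import numpy as np


def select_optimum_scale(sigmas, dog_responses, convexity_maps):
    """Pick the scale whose blob-candidate voxels have the highest mean DoG value.

    sigmas:         sequence of candidate scales
    dog_responses:  sequence of normalized DoG arrays, one per scale
    convexity_maps: sequence of binary arrays (1 = candidate voxel), one per scale
    Returns (best_sigma, best_score); best_sigma is None if no scale has candidates.
    """
    best_sigma, best_score = None, -np.inf
    for sigma, dog, cmap in zip(sigmas, dog_responses, convexity_maps):
        mask = np.asarray(cmap).astype(bool)
        if not mask.any():
            continue  # no candidate voxels at this scale, so the average is undefined
        score = float(np.asarray(dog, dtype=np.float64)[mask].mean())
        if score > best_score:
            best_sigma, best_score = sigma, score
    return best_sigma, best_score
```

A typical call would pass the scale grid quoted in the experiments, for example `np.arange(0.5, 3.0 + 1e-9, 0.5)`.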

U-Net and Probability Map

A classical CNN usually consists of multiple convolutional layers followed by pooling layers, activation layers, and fully connected layers. Convolutional layers learn hierarchical and high-level feature representations. Pooling layers can reduce feature dimensions and capture spatial feature invariance. The final fully connected layers categorize the images into different groups. Compared to classical CNNs, FCNs replace all fully connected layers with fully convolutional layers. There are several advantages to this approach. First, the input image size can be arbitrary because the model consists entirely of convolutional layers, so the output size depends only on the input size. Second, the FCN can be trained from whole images without patch sampling, so the effects of patch-wise training need not be considered. However, FCNs require a large dataset for training. To address this issue, a modified FCN model, the U-Net, was proposed. Interested readers are referred to U-Net39 for details. The output of U-Net is a probability map in [0, 1].

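In U-Net, let the input images be $X$, and the output map be $U$, where $U \in [0, 1]$ at every voxel. A binary cross entropy loss function is used in the training process to obtain the output map:

$$\mathcal{L} = -\frac{1}{K} \sum_{k=1}^{K} \left[ Y_k \log \hat{Y}_k + (1 - Y_k) \log (1 - \hat{Y}_k) \right]$$

where $Y_k$ is the true label, $\hat{Y}_k$ is the predicted probability for voxel k, and K is the number of voxels.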
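For concreteness, a binary cross entropy of this kind can be written in NumPy as follows. This is a generic sketch, not the training code used in the study: averaging over voxels and clipping the predictions with a small `eps` to avoid log(0) are assumptions of the example.

```python
import numpy as np


def binary_cross_entropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross entropy between true labels and predicted probabilities."""
    y_true = np.asarray(y_true, dtype=np.float64).ravel()
    y_pred = np.asarray(y_pred, dtype=np.float64).ravel()
    y_pred = np.clip(y_pred, eps, 1.0 - eps)  # keep the logarithms finite
    per_voxel = y_true * np.log(y_pred) + (1.0 - y_true) * np.log(1.0 - y_pred)
    return float(-per_voxel.mean())
```

A prediction of 0.5 everywhere gives a loss of log 2 regardless of the labels, which is a convenient sanity check when a network appears not to be learning.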

In our U-Net output, we obtain a probability map $U \in [0, 1]^{x \times y \times z}$, where x and y are the dimensions of each 2D image slice and z is the slice number. The probability map is the global blob likelihood constraint in our joint constraint operation to detect the most likely blob regions. By setting a probability threshold, most noise is removed. However, some touching blobs could have a probability higher than the threshold at their shared boundary and might not be split up, resulting in reduced detection known as under-segmentation. Joining the Hessian convexity map with the U-Net probability map addresses these challenges of small blob detection.

Joint constraint operation for true blob identification

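Given a 3D image $f(x, y, z)$, Hessian analysis is applied to render a convexity map $I(x, y, z; \sigma^*)$, and U-Net is applied to render a probability map $U(x, y, z)$. We introduce a joint operator

$$B(x, y, z) = I(x, y, z; \sigma^*) \wedge I_U(x, y, z) \tag{7}$$

where $I_U(x, y, z)$ is a binary indicator matrix. Given a probability threshold $\delta$, $I_U(x, y, z) = 1$ when $U(x, y, z) > \delta$; otherwise, $I_U(x, y, z) = 0$. We define the true blob candidate as a 27-connected voxel44, and the blob set is represented as:

$$S_{blob} = \{ (x, y, z) \mid B(x, y, z) = 1 \} \tag{8}$$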
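The joint constraint operation can be sketched as a voxel-wise AND between the Hessian convexity map and the thresholded probability map, followed by connected-component labeling. The sketch below assumes SciPy's `scipy.ndimage.label` and interprets the connectivity as the full 3 × 3 × 3 neighbourhood; the function name and the strict `>` comparison against the threshold are assumptions of this example.

```python
import numpy as np
from scipy.ndimage import label


def joint_constraint_blobs(convexity_map, probability_map, threshold=0.5):
    """Join a binary convexity map with a thresholded probability map.

    Returns (labels, n_blobs): an integer array in which each connected blob
    has its own positive label, and the number of blobs found.
    """
    convex = np.asarray(convexity_map).astype(bool)
    likely = np.asarray(probability_map, dtype=np.float64) > threshold
    joint = convex & likely  # a voxel must satisfy both constraints
    # Full neighbourhood (3x3 in 2D, 3x3x3 in 3D), so diagonal neighbours connect.
    structure = np.ones((3,) * joint.ndim, dtype=int)
    labels, n_blobs = label(joint, structure=structure)
    return labels, n_blobs
```

With this formulation, convex noise outside the high-probability region is discarded by the probability constraint, while touching blobs that share one high-probability region are still separated wherever the convexity map separates them.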

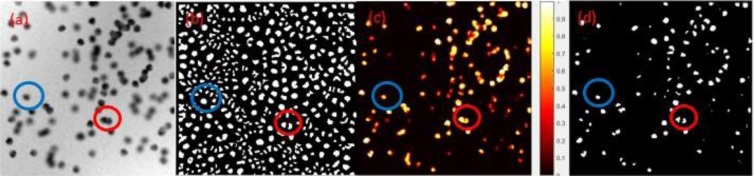

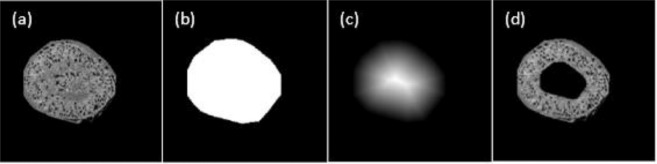

To illustrate, Fig. 2 shows images of blobs detected during the joint constraint operation of the U-Net probability map and the Hessian convexity map. The blue circle in Fig. 2a shows only one blob. The same blue circle on Fig. 2b, after application of the Hessian convexity map, shows there is one “bigger” blob in the middle and a number of smaller blobs around the boundary of the blue circle. Figure 2c shows the correct outcome, only one blob in the middle. This clearly illustrates the sensitivity of the Hessian matrix to noise. Even though the Hessian analysis guarantees the detection of the convex object, some non-blob convex objects (noise) will also be detected, resulting in over-detection. This noise can be readily filtered by the U-Net probability map (Fig. 2c). We conclude that U-Net may be useful for denoising, which alleviates the over-detection of Hessian analysis.

Figure 2.

(a) A 2D gray scale image preprocessed from experiment 1 fluorescent image (b) Binary Hessian convexity map of (a), the convex pixels are marked as the white color. (c) U-Net probability map of (a), pixel is illustrated with a color indicating a probability of the pixel belonging to a blob. (d) Blob identification map joined from Hessian convexity map and U-Net probability map with 0.5 threshold.

The red circle in Fig. 2a shows overlapped blobs. They are still overlapping in the U-Net probability map from Fig. 2c. But they are split up in the Hessian convexity map from Fig. 2b. By joining the Hessian convexity map and U-Net probability map with a single global threshold, the overlapped blobs in the red circle are visualized as distinct entities, as shown in Fig. 2d. We conclude Hessian analysis could alleviate the under-segmentation issue from U-Net.

Our proposed UH-DoG integrates the probability map from U-Net and convexity map from Hessian analysis to guarantee robustness to noise and effective blob detection. The detailed steps of UH-DoG are shown in Table 1.

Table 1.

Detailed steps of the proposed UH-DoG.

| Step | Operation |
|---|---|
| 1 | Use a pretrained model to generate a probability map of blobs from the original image. |
| 2 | Initialize the normalization factor γ and the range and step-size of the parameter σ, then transform the original image into normalized DoG space. |
| 3 | Calculate the Hessian matrix from the normalized DoG-smoothed image and generate the Hessian convexity map at each scale σ. |
| 4 | Calculate the average DoG intensity (Eq. 6) and select the optimum scale σ* as the scale that maximizes it. |
| 5 | Get the optimum Hessian convexity map under scale σ*. |
| 6 | Join the probability map with the Hessian convexity map to identify true blobs. |

Experiments

Two experiments were conducted to validate the performance of our proposed UH-DoG detector. The first experiment validated UH-DoG on 200 fluorescence 2D light microscopy images for cell detection45. The 2D cell images were of interest because (1) to the best of our knowledge, there are no 3D small blob datasets available for comparison; (2) the blobs in these images are small and each image could be used to test the performance of the algorithm in the presence of background noise; (3) this dataset has the ground truth locations of each blob. The detection accuracy measured by recall, precision, and F-score can be used to compare this approach with methods from the literature. The second experiment validated the performance of UH-DoG on CFE-MR images of mouse kidneys where each glomerulus was detected. All experiments were approved by the University of Virginia Institutional Care and Use Committee, in accordance with the NIH Guide for the Care and Use of Laboratory Animals.

Results

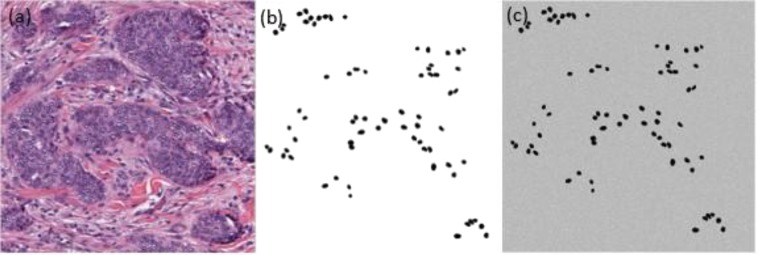

Training dataset and data augmentation

We used a public dataset46 of optical images of cell nuclei to train our deep learning model. This dataset has 141 optical microscopy pathology images (2,000 × 2,000 pixels), as shown in Fig. 3a. The 12,000 ground truth annotations are typically done by an expert, which involves delineating object boundaries over 40 hours46. Due to the large amount of time and effort required, the annotated nuclei in this dataset represent only a small fraction of the total number of nuclei present in all images. Since we aim to train U-Net to denoise our blob images based on the ground truth labeled images, as shown in Fig. 3b, we generated Gaussian-distributed noise of specified mean and variance and added it to the ground truth labeled images, resulting in 141 simulated training images, as shown in Fig. 3c. Data were augmented to increase the invariance and robustness properties of U-Net39. We generated the augmented data by a combination of rotation, width shift, height shift, shear, zoom, and horizontal flip.
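The simulation of noisy training images from ground truth labels can be illustrated as below. The noise mean and standard deviation used here (`noise_mean=0.0`, `noise_std=0.2`) are placeholder values chosen for the example, not the values used in the study, and the augmentation shows only a simplified subset (90-degree rotations and horizontal flips) of the transformations listed above. Both function names are hypothetical.

```python
import numpy as np


def simulate_training_image(label_image, noise_mean=0.0, noise_std=0.2, rng=None):
    """Add Gaussian noise to a binary ground truth label image.

    noise_mean and noise_std are illustrative placeholders, not published values.
    """
    rng = np.random.default_rng(rng)
    label_image = np.asarray(label_image, dtype=np.float64)
    noise = rng.normal(noise_mean, noise_std, size=label_image.shape)
    return np.clip(label_image + noise, 0.0, 1.0)  # keep intensities in [0, 1]


def augment_pair(image, mask, rng=None):
    """Apply the same random 90-degree rotation and optional flip to image and mask."""
    rng = np.random.default_rng(rng)
    k = int(rng.integers(0, 4))  # number of quarter turns
    image, mask = np.rot90(image, k), np.rot90(mask, k)
    if rng.random() < 0.5:
        image, mask = np.fliplr(image), np.fliplr(mask)
    return image.copy(), mask.copy()
```

Applying identical geometric transformations to the image and its label keeps the pair aligned, which is the property any augmentation for segmentation training needs to preserve.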

Figure 3.

Training images. (a) Original image. (b) Ground truth labeled image. (c) Simulated training image.

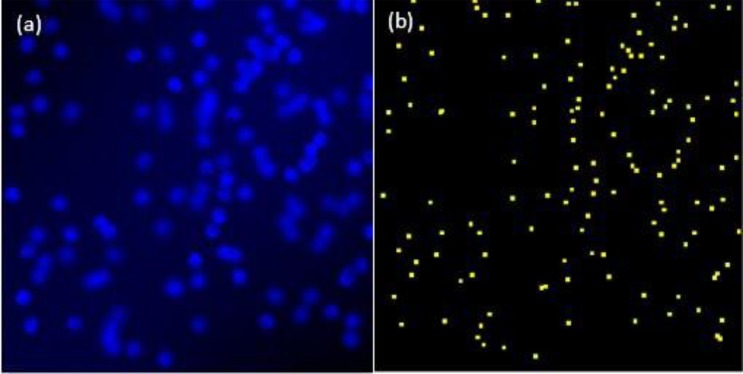

Experiment I: Validation experiments using 2D fluorescent images

Figure 4 illustrates an example fluorescent image (256 × 256 pixels). Since this was a 2D image, our proposed UH-DoG must incorporate a modified 2D DoG because comparison algorithms were from the 2D LoG and its extensions.

Figure 4.

(a) Sample 2D fluorescent image. (b) Ground truth dots of (a).

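To revise the DoG to a 2D version, for 2D images $f(x, y)$ with the Gaussian kernel $G(x, y; \sigma) = \frac{1}{2\pi\sigma^2} e^{-\frac{x^2 + y^2}{2\sigma^2}}$, we modified the normalized 3D DoG detector from Eq. 4 in a 2D format:

$$\mathrm{DoG}_{nor}(x, y; \sigma) = \sigma^{\gamma - 1} \, \frac{G(x, y; \sigma + \Delta\sigma) - G(x, y; \sigma)}{\Delta\sigma} * f(x, y) \tag{9}$$

Then the corresponding Hessian matrix was modified from Eq. 5 as follows:

$$H(\mathrm{DoG}_{nor}(x, y; \sigma)) = \begin{bmatrix} \dfrac{\partial^2 \mathrm{DoG}_{nor}}{\partial x^2} & \dfrac{\partial^2 \mathrm{DoG}_{nor}}{\partial x \partial y} \\ \dfrac{\partial^2 \mathrm{DoG}_{nor}}{\partial y \partial x} & \dfrac{\partial^2 \mathrm{DoG}_{nor}}{\partial y^2} \end{bmatrix} \tag{10}$$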

The parameter settings for Hessian analysis and DoG were as suggested in28. γ is set to 2. σ varies from 0.5 to 3 with step-size 0.5. Δσ is set to 0.001.

We used precision, recall and F-score to evaluate the performance of our proposed algorithm. Precision measures the fraction of retrieved candidates confirmed by the ground truth. Recall measures the fraction of ground-truth data retrieved. F-score measures overall performance. Since ground truth data were provided in the form of dots (the coordinates of the blob centers), as in the literature1,28, a candidate was considered a true positive if its intensity centroid was within a threshold d of the corresponding ground truth dot. Specifically, if the Euclidean distance $D_{ij}$ between dot i and blob candidate j was less than or equal to d, the blob was considered a true positive. To avoid duplicate counting, the number (#) of true positives TP was calculated by Eq. 11. Precision, recall, and F-score are calculated by Eqs. 12, 13 and 14, respectively:

$$TP = \min \left\{ \# \left\{ i : \min_{j = 1, \dots, n} D_{ij} \le d \right\}, \; \# \left\{ j : \min_{i = 1, \dots, m} D_{ij} \le d \right\} \right\} \tag{11}$$

$$\text{precision} = \frac{TP}{n} \tag{12}$$

$$\text{recall} = \frac{TP}{m} \tag{13}$$

$$\text{F-score} = \frac{2 \times \text{precision} \times \text{recall}}{\text{precision} + \text{recall}} \tag{14}$$

where m is the number of ground-truth dots and n is the number of blob candidates; d is a thresholding parameter set to a positive value, $d \in (0, +\infty)$. If d is small, fewer blob candidates are counted as true positives because the distance between the blob candidate centroid and the ground-truth dot must be small. If d is too large, more blob candidates are counted. Here, since local intensity extremes could be anywhere within a small blob with an irregular shape, we set d to the average diameter of the blobs.
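The evaluation described above can be sketched as follows. This is an illustrative reading of the true-positive rule (count the ground-truth dots that have a candidate within d, count the candidates that have a dot within d, and take the smaller count); the function name `detection_scores` and the return order are assumptions of this example.

```python
import numpy as np


def detection_scores(gt_points, candidate_points, d):
    """Return (precision, recall, f_score) for dot-annotated blob detection.

    gt_points:        array of shape (m, dims) with ground-truth blob centers
    candidate_points: array of shape (n, dims) with detected blob centroids
    d:                distance threshold for a match
    """
    gt = np.asarray(gt_points, dtype=np.float64)
    cand = np.asarray(candidate_points, dtype=np.float64)
    if gt.size == 0 or cand.size == 0:
        return 0.0, 0.0, 0.0
    m, n = len(gt), len(cand)
    # Pairwise Euclidean distances, shape (m, n).
    dist = np.linalg.norm(gt[:, None, :] - cand[None, :, :], axis=-1)
    gt_matched = int((dist.min(axis=1) <= d).sum())    # dots with a nearby candidate
    cand_matched = int((dist.min(axis=0) <= d).sum())  # candidates with a nearby dot
    tp = min(gt_matched, cand_matched)                 # avoids duplicate counting
    precision, recall = tp / n, tp / m
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)
```

For example, with three ground-truth dots and four candidates of which two fall on the same dot, one on a second dot, and one far from any dot, the true-positive count is 2, giving a precision of 2/4 and a recall of 2/3.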

Since the results of detection by the complete versions of HLoG, gLoG, Radial-Symmetry and LoG on 200 pathological images are available online1,17,21, the results were directly used from these papers for comparison.

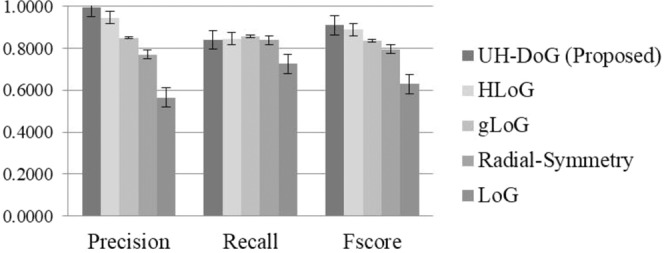

Figure 5 shows a comparison of UH-DoG to the HLoG, gLoG, LoG and Radial-Symmetry algorithms. While UH-DoG is comparable to the HLoG and Radial-Symmetry algorithms in recall, it significantly outperforms all four algorithms in both precision and F-score (Table 2). The standard deviation of the F-score was 0.025 for UH-DoG, compared to 0.0377 for HLoG, 0.1436 for gLoG, 0.0795 for Radial-Symmetry, and 0.0385 for LoG. We conclude that UH-DoG provides more accurate and robust detection of blobs in this dataset. The statistical analysis is summarized in Table 2: recall differs significantly only from gLoG and LoG, whereas precision and F-score differ significantly from all four algorithms.

Figure 5.

Comparison of full versions of UH-DoG, HLoG, gLoG, Radial-Symmetry and LoG on 200 fluorescence images. The error bars indicate the standard deviation of the corresponding measure across the 200 images. For precision and F-score, UH-DoG differs significantly from all other methods (see Table 2). For recall, UH-DoG differs significantly from gLoG and LoG.

Table 2.

ANOVA using Tukey’s HSD pairwise test on 200 Fluorescent Images (*significance p < 0.05).

| UH-DoG vs. | Precision | Recall | F-Score |
|---|---|---|---|
| HLoG | *<0.0001 | 0.207 | *<0.0001 |
| gLoG | *<0.0001 | *0.001 | *<0.0001 |
| Radial Symmetry | *<0.0001 | 0.963 | *<0.0001 |
| LoG | *<0.0001 | *<0.0001 | *<0.0001 |

Experiment II: Validation experiments using 3D Kidney MRI

In this section, we conducted experiments on CF-labeled glomeruli from a dataset of 3D magnetic resonance images (256 × 256 × 256 voxels) to measure the number (Nglom) and apparent size (aVglom) of glomeruli in diseased kidneys and healthy control kidneys. Acute kidney injury (AKI) was induced in adult male C57Bl/6 mice using an intraperitoneal injection of folic acid (125 mg). A subset of the mice receiving folic acid, the AKI group (n = 4), was euthanized 4 days after the folic acid was administered; the remaining mice that received folic acid were euthanized 4 weeks later and termed the chronic kidney disease (CKD) group (n = 3). The control groups for AKI (n = 5) and CKD (n = 6) were age-matched adult male C57Bl/6 mice that received intraperitoneal sodium bicarbonate.

For improved detection, we adopted a preprocessing step to segment the medulla from the image because no glomeruli are located there. Based on the segmented kidney image, shown in Fig. 6a, we converted it to a binary mask (Fig. 6b). Then we generated a distance mask, seen in Fig. 6c. With the map showing the distance between each kidney’s voxel and the kidney boundary, we set up a distance threshold to remove regions farther from the boundary than this threshold. Figure 6d shows the 2D image slice after removing the medulla.
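The medulla-removal preprocessing can be illustrated with a Euclidean distance transform. The sketch below assumes that the segmented kidney image is zero outside the kidney and uses SciPy's `distance_transform_edt`; the function name `remove_medulla` and the `max_depth` parameter (the distance threshold, whose value is not given in the text) are hypothetical.

```python
import numpy as np
from scipy.ndimage import distance_transform_edt


def remove_medulla(kidney_image, max_depth):
    """Keep only kidney voxels within max_depth of the kidney boundary.

    kidney_image: segmented kidney (2D or 3D), assumed to be zero outside the kidney
    max_depth:    distance threshold in voxels (illustrative parameter)
    Returns (cortex_image, cortex_mask).
    """
    image = np.asarray(kidney_image, dtype=np.float64)
    mask = image > 0                       # binary kidney mask
    depth = distance_transform_edt(mask)   # distance to the nearest background voxel
    cortex_mask = mask & (depth <= max_depth)
    cortex_image = np.where(cortex_mask, image, 0.0)
    return cortex_image, cortex_mask
```

Because the distance transform works on arrays of any dimension, the same function applies to a single slice or to the whole 3D volume; for anisotropic voxels the `sampling` argument of `distance_transform_edt` could be used to express the threshold in physical units.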

Figure 6.

(a) One slice of healthy mouse kidney (ID: 477) image. (b) Binary image of (a). (c) Distance mask of (b). (d) Remove medulla from (a).

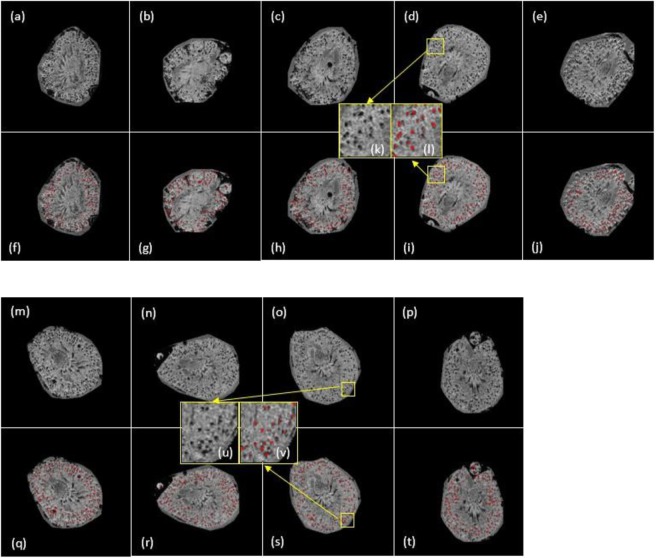

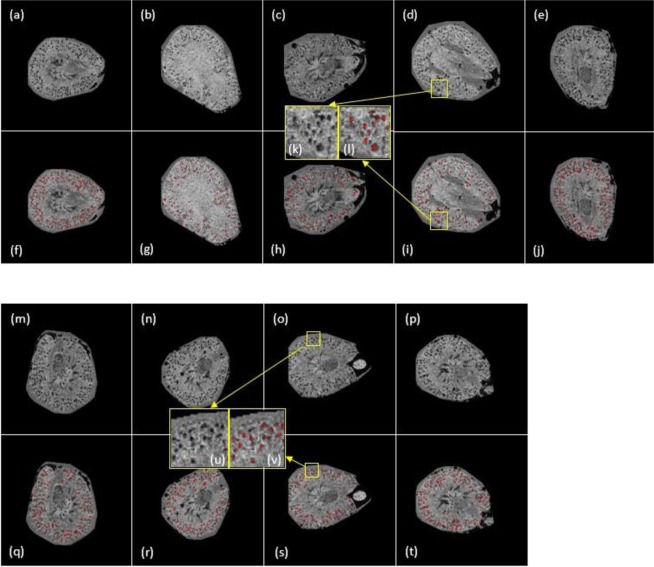

We then applied the proposed UH-DoG method to segment the kidney glomeruli in Fig. 6d. The parameter settings were as follows: γ was set to 2, σ varied from 0.5 to 1.8 with step-size 0.1, and Δσ was set to 0.001. Example segmentation results are shown in Figs. 7 and 8. The number of glomeruli (Nglom), mean apparent glomerular volume (aVglom) and median aVglom are reported in Table 3, where the UH-DoG method is compared to the HDoG method. Apparent glomerular volume was calculated using the method described in34. Similarly, Table 4 summarizes the results from the AKI and control groups.

Figure 7.

(a) Glomerular segmentation results from 3D MR images of mouse kidneys (selected slices presented). (a–e) One slice for the CKD group. (f–j) Identified glomeruli are marked in red. (k) is the zoom-in region of (d) while (l) is the segmentation result of (k). (b) Glomerular segmentation results from 3D MR images of mouse kidneys (selected slices presented). (m–p) One slice for the control group. (q–t) Identified glomeruli are marked in red. (u) is the zoom-in region of (o) while (v) is the segmentation results of (u).

Figure 8.

(a) Glomerular segmentation results from 3D MR images of mouse kidneys (selected slices presented). (a–e) One slice for the AKI group. (f–j) Identified glomeruli are marked in red. (k) is the zoom-in region of (d) while (l) is the segmentation result of (k). (b) Glomerular segmentation results from 3D MR images of mouse kidneys (selected slices presented). (m–p) One slice for the control group. (q–t) Identified glomeruli are marked in red. (u) is the zoom-in region of (o) while (v) is the segmentation results of (u).

Table 3.

Glomerular number (Nglom) and volume (aVglom) for the CKD and control mouse kidneys using the proposed UH-DoG method compared with the HDoG method (*aVglom unit: mm³ × 10⁻⁴).

| Group | Mouse | Nglom (UH-DoG) | Nglom (HDoG) | Nglom Difference Ratio (%) | Mean aVglom (UH-DoG) | Mean aVglom (HDoG) | Mean aVglom Difference Ratio (%) | Median aVglom (UH-DoG) | Median aVglom (HDoG) | Median aVglom Difference Ratio (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| CKD | ID 429 | 7,346 | 7,656 | 4.05 | 2.92 | 2.57 | 11.99 | 1.74 | 1.48 | 14.94 |
| | ID 466 | 8,138 | 8,665 | 6.08 | 2.06 | 2.01 | 2.43 | 1.15 | 0.94 | 18.26 |
| | ID 467 | 8,663 | 8,549 | 1.33 | 2.32 | 2.16 | 6.90 | 1.47 | 1.28 | 12.93 |
| | Avg | 8,049 | 8,290 | 2.91 | 2.43 | 2.25 | 7.67 | 1.45 | 1.23 | 15.14 |
| | Std | 663 | 552 | | 0.44 | 0.29 | | 0.30 | 0.27 | |
| Control | ID 427 | 12,701 | 12,724 | 0.18 | 1.61 | 1.49 | 7.45 | 1.26 | 1.15 | 8.73 |
| | ID 469 | 11,347 | 10,829 | 4.78 | 2.20 | 1.91 | 13.18 | 1.41 | 1.20 | 14.89 |
| | ID 470 | 11,309 | 10,704 | 5.65 | 2.04 | 1.98 | 2.94 | 1.50 | 1.37 | 8.67 |
| | ID 471 | 12,279 | 11,943 | 2.81 | 1.56 | 1.50 | 3.85 | 1.22 | 1.13 | 7.38 |
| | ID 472 | 12,526 | 12,569 | 0.34 | 1.49 | 1.35 | 9.40 | 1.16 | 1.06 | 8.62 |
| | ID 473 | 11,853 | 12,245 | 3.20 | 1.58 | 1.50 | 5.06 | 1.25 | 1.18 | 5.60 |
| | Avg | 12,003 | 11,836 | 1.41 | 1.75 | 1.62 | 7.16 | 1.30 | 1.18 | 9.10 |
| | Std | 595 | 872 | | 0.30 | 0.26 | | 0.13 | 0.10 | |

Table 4.

Glomerular number (Nglom) and volume (aVglom) for the AKI and control mouse kidneys using the proposed UH-DoG method compared with the HDoG method (*aVglom unit: mm³ × 10⁻⁴).

| Group | Mouse | Nglom (UH-DoG) | Nglom (HDoG) | Nglom Difference Ratio (%) | Mean aVglom (UH-DoG) | Mean aVglom (HDoG) | Mean aVglom Difference Ratio (%) | Median aVglom (UH-DoG) | Median aVglom (HDoG) | Median aVglom Difference Ratio (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| AKI | ID 433 | 11,033 | 11,046 | 0.12 | 1.63 | 1.53 | 6.13 | 1.27 | 1.17 | 7.87 |
| | ID 462 | 10,779 | 11,292 | 4.54 | 1.48 | 1.34 | 9.46 | 1.17 | 1.00 | 14.53 |
| | ID 463 | 10,873 | 11,542 | 5.80 | 2.61 | 2.35 | 9.96 | 1.60 | 1.25 | 21.88 |
| | ID 464 | 11,340 | 11,906 | 4.75 | 2.40 | 2.31 | 3.75 | 1.59 | 1.17 | 26.42 |
| | Avg | 11,006 | 11,447 | 3.85 | 2.03 | 1.88 | 7.27 | 1.41 | 1.15 | 18.47 |
| | Std | 246 | 367 | | 0.56 | 0.52 | | 0.22 | 0.11 | |
| Control | ID 465 | 10,115 | 10,336 | 2.14 | 2.40 | 2.30 | 4.17 | 1.66 | 1.42 | 14.46 |
| | ID 474 | 11,157 | 10,874 | 2.60 | 2.52 | 2.44 | 3.17 | 1.70 | 1.44 | 15.29 |
| | ID 475 | 10,132 | 10,292 | 1.55 | 1.70 | 1.74 | 2.35 | 1.26 | 1.16 | 7.94 |
| | ID 476 | 10,892 | 10,954 | 0.57 | 1.62 | 1.53 | 5.56 | 1.21 | 1.09 | 9.92 |
| | ID 477 | 11,335 | 10,885 | 4.13 | 1.70 | 1.67 | 1.76 | 1.27 | 1.19 | 6.30 |
| | Avg | 10,726 | 10,668 | 0.54 | 1.99 | 1.94 | 2.62 | 1.42 | 1.26 | 11.27 |
| | Std | 572 | 325 | | 0.43 | 0.41 | | 0.24 | 0.16 | |

We performed quality control by visually checking the identified glomeruli in kidney images. Figure 7 shows glomerular identification for CKD and control kidneys. Figure 8 shows glomerular identification for kidneys in the AKI and control groups.

Discussion: computation cost

UH-DoG significantly decreases computation time compared to the HDoG algorithm28, as shown in Tables 5 and 6. The training time of U-Net is not included in the estimates of computation time as it is trained beforehand and can be used to test on all images.

Table 5.

Computation time for CKD and Control kidneys using HDoG and the proposed method with scale = 1 (Intel Xeon 3.6 GHz CPU and 16 GB of memory, NVIDIA TITAN XP and 12 GB of memory).

| Group | Mouse | HDoG (seconds) | UH-DoG (seconds) |
|---|---|---|---|
| CKD | ID 429 | 9.3 | 7.3 |
| | ID 466 | 9.5 | 7.3 |
| | ID 467 | 11.4 | 7.6 |
| | Avg | 10.1 | 7.4 |
| | Std | 1.2 | 0.2 |
| Control | ID 427 | 11.7 | 8.2 |
| | ID 469 | 11.7 | 8.0 |
| | ID 470 | 12.0 | 8.0 |
| | ID 471 | 11.9 | 8.0 |
| | ID 472 | 12.0 | 8.1 |
| | ID 473 | 25.2 | 8.2 |
| | Avg | 14.1 | 8.1 |
| | Std | 5.5 | 0.1 |

Table 6.

Computation time for AKI and Control kidneys using HDoG and the proposed method with scale = 1 (Intel Xeon 3.6 GHz CPU and 16 GB of memory, NVIDIA TITAN XP and 12 GB of memory).

| Group | Mouse | HDoG (seconds) | UH-DoG (seconds) |
|---|---|---|---|
| AKI | ID 433 | 13.7 | 7.9 |
| | ID 462 | 13.4 | 8.0 |
| | ID 463 | 13.1 | 8.0 |
| | ID 464 | 14.3 | 8.3 |
| | Avg | 13.6 | 8.1 |
| | Std | 0.5 | 0.2 |
| Control | ID 465 | 11.0 | 7.8 |
| | ID 474 | 12.3 | 8.0 |
| | ID 475 | 11.4 | 7.8 |
| | ID 476 | 12.0 | 8.1 |
| | ID 477 | 11.6 | 7.9 |
| | Avg | 11.7 | 7.9 |
| | Std | 0.5 | 0.1 |

Discussion: Clinical translation

The use of imaging biomarkers in humans has increased for both early disease detection and assessment of disease severity. Additionally, imaging biomarkers can serve as surrogate endpoints in clinical trials, reducing the cost and burden associated with these studies. For example, total kidney volume has recently been accepted as a surrogate marker for disease progression in autosomal dominant polycystic kidney disease trials47. Although the importance of glomerular number has been universally accepted, the detection of glomerular number and size has been limited because the only methods to obtain these metrics were destructive stereological approaches that could only be performed post mortem. With the advent of CFE-MRI, the need for image analysis tools is paramount. However, it is critical to the success of any imaging biomarker that the marker be accurate and rapidly obtained. This study demonstrates some of the challenges in detecting small objects, such as glomeruli, particularly in the settings of low image resolution, image noise and overlap of objects. It also shows the promise of rapid analysis, where data can be used in a timeframe that influences patient care. Further work is necessary to validate the accuracy of the detection of diseased glomeruli and to apply this algorithm to a wider range of renal disease models.

Conclusion

Discovering imaging biomarkers is important to inform disease diagnosis, prognosis, therapy development and treatment assessment. Of particular interest in this research is the identification of quantitative glomerular biomarkers from CFE-MR images. This is a challenging problem because glomeruli are numerous and small. In addition, limitations in image acquisition, such as hardware constraints and variable acquisition parameters, often yield images with less than desirable resolution, which results in overlapping glomeruli. In this paper, we demonstrated a new small blob detector that joins the Hessian convexity map with the probability map from U-Net. This joint constraint-based approach overcomes under-segmentation by U-Net and over-detection by Hessian analysis. While it was successfully applied to segmenting kidney glomeruli, some limitations remain. First, the assumption that the blobs are convex and similar in size may not hold for non-convex objects of different sizes. A possible future improvement is to enhance the ability of U-Net to detect both convex and non-convex small objects. Second, the probability map is sensitive to the threshold. We plan to explore thresholding approaches to improve UH-DoG.
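
The joint constraint summarized above can be illustrated with a minimal 2D sketch. It is not the authors' implementation: the DoG smoothing step and the U-Net itself are omitted, the function names `hessian_convexity_map` and `joint_constraint` are hypothetical, the bright-blob convention (negative definite Hessian) and the 0.5 probability threshold are assumptions, and the image and probability map are synthetic. The sketch only shows how a convexity map and a thresholded probability map might be intersected so that a candidate survives only when both agree.

```python
import numpy as np


def hessian_convexity_map(image):
    """Boolean map that is True where the 2D Hessian is negative definite.

    Assumes bright blobs on a dark background; finite differences stand in
    for the derivatives of a smoothed image.
    """
    d_row, d_col = np.gradient(image)      # first derivatives along rows, columns
    d_rr, d_rc = np.gradient(d_row)        # second derivatives of the row gradient
    _, d_cc = np.gradient(d_col)           # second derivative along columns
    det = d_rr * d_cc - d_rc ** 2
    # A symmetric 2x2 matrix is negative definite when H11 < 0 and det > 0.
    return (d_rr < 0) & (det > 0)


def joint_constraint(convexity, prob_map, prob_threshold=0.5):
    """Keep pixels that are both locally convex and above the probability threshold."""
    return convexity & (prob_map > prob_threshold)


def gaussian_blob(shape, center, sigma):
    """Synthetic bright blob used only for this illustration."""
    rows, cols = np.indices(shape)
    dist2 = (rows - center[0]) ** 2 + (cols - center[1]) ** 2
    return np.exp(-dist2 / (2 * sigma ** 2))


shape = (21, 21)
# Two synthetic blobs; both look convex to the Hessian test.
image = gaussian_blob(shape, (10, 5), 2.0) + gaussian_blob(shape, (10, 15), 2.0)

# Hypothetical probability map: confident on the left half only.
prob_map = np.full(shape, 0.1)
prob_map[:, :10] = 0.9

convexity = hessian_convexity_map(image)
joint = joint_constraint(convexity, prob_map, prob_threshold=0.5)

print("left blob kept:", bool(joint[10, 5]))
print("right blob kept:", bool(joint[10, 15]))
```

In this toy case the Hessian test alone accepts both blob centres, while the joint mask keeps only the one supported by the probability map. Changing `prob_threshold` changes which candidates survive, which is the sensitivity noted in the second limitation.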

Acknowledgements

This research was supported by funds from the National Institutes of Health under grant numbers R01DK110622 and R01DK111861. This work used the Bruker ClinScan 7 T MRI in the Molecular Imaging Core, which was purchased with support from NIH grant 1S10RR019911-01 and is supported by the University of Virginia School of Medicine. The U.S. Government is authorized to reproduce and distribute for governmental purposes notwithstanding any copyright annotation of the work by the author(s). The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies or endorsements, either expressed or implied, of NIH or the U.S. Government.

Author contributions

Y.X., T.W., F.G., J.R.C. and K.M.B. wrote the main manuscript. Y.X., T.W. and F.G. developed the computational methods and conducted the experiments. J.R.C. and K.M.B. acquired and provided the imaging data. All authors reviewed and approved this manuscript.

Data availability

The datasets generated during and/or analyzed during the current study will be made available upon request.

Competing interests

T.W., K.M.B., and J.R.C. are co-owners of Sindri Technologies, LLC. K.B. has a research agreement with Johnson & Johnson. K.B. has a sponsored research agreement with Janssen Pharmaceuticals, Inc.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Zhang, M., Wu, T. & Bennett, K. M. Small Blob Identification in Medical Images Using Regional Features From Optimum Scale. IEEE Transactions on Biomedical Engineering (2015).

- 2. Sánchez, C. I. et al. Contextual computer-aided detection: Improving bright lesion detection in retinal images and coronary calcification identification in CT scans. Medical Image Analysis (2012).

- 3. Moon, W. K. et al. Computer-aided tumor detection based on multi-scale blob detection algorithm in automated breast ultrasound images. IEEE Transactions on Medical Imaging (2013).

- 4. Beeman, S. C. et al. Measuring glomerular number and size in perfused kidneys using MRI. AJP: Renal Physiology 300, F1454–F1457 (2011). doi: 10.1152/ajprenal.00044.2011

- 5. Beeman, S. C. et al. MRI-based glomerular morphology and pathology in whole human kidneys. AJP: Renal Physiology (2014).

- 6. Gao, F., Zhang, M., Wu, T. & Bennett, K. M. 3D small structure detection in medical image using texture analysis. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMBS (2016).

- 7. Meijering, E., Dzyubachyk, O., Smal, I. & van Cappellen, W. A. Tracking in cell and developmental biology. Seminars in Cell and Developmental Biology (2009).

- 8. Lindeberg, T. Detecting salient blob-like image structures and their scales with a scale-space primal sketch: A method for focus-of-attention. International Journal of Computer Vision (1993).

- 9. Crocker, J. C. & Grier, D. G. Methods of digital video microscopy for colloidal studies. Journal of Colloid and Interface Science (1996).

- 10. Wu, Q., Merchant, F. A. & Castleman, K. R. Microscope Image Processing. Microscope Image Processing (2007).

- 11. Phansalkar, N., More, S., Sabale, A. & Joshi, M. Adaptive local thresholding for detection of nuclei in diversity stained cytology images. In ICCSP 2011 - 2011 International Conference on Communications and Signal Processing (2011).

- 12. Li, G. et al. 3D cell nuclei segmentation based on gradient flow tracking. BMC Cell Biology (2007).

- 13. Bergmeir, C., García Silvente, M. & Benítez, J. M. Segmentation of cervical cell nuclei in high-resolution microscopic images: A new algorithm and a web-based software framework. Computer Methods and Programs in Biomedicine (2012).

- 14. Guo, Y. et al. An image processing pipeline to detect and segment nuclei in muscle fiber microscopic images. Microscopy Research and Technique (2014).

- 15. Dalle, J., Racoceanu, D. & Putti, T. C. Nuclear Pleomorphism Scoring by Selective Cell Nuclei Detection. In IEEE Workshop on Applications of Computer Vision (2009).

- 16. Malpica, N. et al. Applying watershed algorithms to the segmentation of clustered nuclei. Cytometry (1997).

- 17. Loy, G. & Zelinsky, A. Fast radial symmetry for detecting points of interest. IEEE Transactions on Pattern Analysis and Machine Intelligence (2003).

- 18. Lowe, D. G. Distinctive image features from scale-invariant keypoints. International Journal of Computer Vision (2004).

- 19. Bay, H., Ess, A., Tuytelaars, T. & Van Gool, L. Speeded-Up Robust Features (SURF). Computer Vision and Image Understanding (2008).

- 20. Leutenegger, S., Chli, M. & Siegwart, R. Y. BRISK: Binary Robust invariant scalable keypoints. In Proceedings of the IEEE International Conference on Computer Vision (2011).

- 21. Kong, H., Akakin, H. C. & Sarma, S. E. A generalized laplacian of gaussian filter for blob detection and its applications. IEEE Transactions on Cybernetics (2013).

- 22. Witkin, A. P. Scale-space Filtering. In Proceedings of the 8th International Joint Conference on Artificial Intelligence (1983).

- 23. Koenderink, J. J. The structure of images. Biological Cybernetics (1984).

- 24. Lindeberg, T. Feature Detection with Automatic Scale Selection. International Journal of Computer Vision (1998).

- 25. Mikolajczyk, K. & Schmid, C. Scale & affine invariant interest point detectors. International Journal of Computer Vision (2004).

- 26. Mikolajczyk, K. & Schmid, C. A performance evaluation of local descriptors. IEEE Transactions on Pattern Analysis and Machine Intelligence (2005).

- 27. Tuytelaars, T. & Mikolajczyk, K. Local Invariant Feature Detectors: A Survey. Foundations and Trends® in Computer Graphics and Vision (2007).

- 28. Zhang, M. et al. Efficient Small Blob Detection Based on Local Convexity, Intensity and Shape Information. IEEE Transactions on Medical Imaging (2016).

- 29. Bertram, J. F., Soosaipillai, M. C., Ricardo, S. D. & Ryan, G. B. Total numbers of glomeruli and individual glomerular cell types in the normal rat kidney. Cell & Tissue Research (1992).

- 30. Cullen-Mcewen, L. A., Armitage, J. A., Nyengaard, J. R. & Bertram, J. F. Estimating nephron number in the developing kidney using the physical disector/fractionator combination. Methods in Molecular Biology (2012).

- 31. Bertram, J. F. Analyzing Renal Glomeruli with the New Stereology. International Review of Cytology (1995).

- 32. Bennett, K. M. et al. MRI of the basement membrane using charged nanoparticles as contrast agents. Magnetic Resonance in Medicine (2008).

- 33. Baldelomar, E. J. et al. Phenotyping by magnetic resonance imaging nondestructively measures glomerular number and volume distribution in mice with and without nephron reduction. Kidney International (2016).

- 34. Baldelomar, E. J., Charlton, J. R., Beeman, S. C. & Bennett, K. M. Measuring rat kidney glomerular number and size in vivo with MRI. American Journal of Physiology-Renal Physiology 314, F399–F406 (2017). doi: 10.1152/ajprenal.00399.2017

- 35. Shin, H.-C. et al. Deep Convolutional Neural Networks for Computer-Aided Detection: CNN Architectures, Dataset Characteristics and Transfer Learning. IEEE Transactions on Medical Imaging 35, 1285–1298 (2016). doi: 10.1109/TMI.2016.2528162

- 36. Kamnitsas, K. et al. Efficient multi-scale 3D CNN with fully connected CRF for accurate brain lesion segmentation. Medical Image Analysis 36, 61–78 (2017). doi: 10.1016/j.media.2016.10.004

- 37. Gao, F. et al. SD-CNN: A shallow-deep CNN for improved breast cancer diagnosis. Computerized Medical Imaging and Graphics (2018).

- 38. Shelhamer, E., Long, J. & Darrell, T. Fully Convolutional Networks for Semantic Segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence (2017).

- 39. Ronneberger, O., Fischer, P. & Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics) (2015).

- 40. Raza, S. E. A. et al. MIMO-Net: A multi-input multi-output convolutional neural network for cell segmentation in fluorescence microscopy images. Proceedings - International Symposium on Biomedical Imaging 337–340 (2017).

- 41. Yap, M. H. et al. Automated Breast Ultrasound Lesions Detection Using Convolutional Neural Networks. IEEE Journal of Biomedical and Health Informatics 22, 1218–1226 (2018). doi: 10.1109/JBHI.2017.2731873

- 42. Gao, F. et al. Deep Residual Inception Encoder-Decoder Network for Medical Imaging Synthesis. IEEE Journal of Biomedical and Health Informatics (2019).

- 43. Xu, Y. et al. U-net with optimal thresholding for small blob detection in medical images. In IEEE International Conference on Automation Science and Engineering (2019).

- 44. Toolbox, I. P. Image Processing Toolbox. Image Processing (2004).

- 45. Lempitsky, V. & Zisserman, A. Learning To Count Objects in Images. Neural information processing systems (NIPS) 1–9 (2010).

- 46. Janowczyk, A. & Madabhushi, A. Deep learning for digital pathology image analysis: A comprehensive tutorial with selected use cases. Journal of Pathology Informatics (2016).

- 47. Tangri, N. et al. Total kidney volume as a biomarker of disease progression in autosomal dominant polycystic kidney disease. Canadian Journal of Kidney Health and Disease (2017).
