Abstract

Background

Despite increasing use of the flipped classroom (FC) technique in undergraduate medical education, the benefit in learning outcomes over lectures is inconsistent. Best‐practice design principles for preclass videos are rarely used, and it is unclear whether videos can replace lectures in contemporary medical education.

Methods

We conducted a prospective quasi‐experimental controlled educational study comparing theory‐based videos to traditional lectures in a medical student curriculum. Medical students enrolled in an emergency medicine clerkship were randomly assigned to either a lecture group (LG) or a video group (VG). The slide content was identical, and the videos aligned with cognitive load theory‐based multimedia design principles. Students underwent baseline (pretest), week 1 (posttest), and end‐of‐rotation (retention) written knowledge tests and an observed structured clinical examination (OSCE) assessment. We compared scores between both groups and surveyed student attitudes and satisfaction with respect to the two learning methods.

Results

There were 104 students who participated in OSCE assessments (49 LG, 55 VG) and 101 students who participated in knowledge tests (48 LG, 53 VG). The difference in mean OSCE scores of 1.29 was statistically significant (95% confidence interval = 0.23 to 2.35, t(102) = 2.43, p = 0.017), but the actual score difference was small from an educational standpoint (12.61 for LG, 11.32 for VG). None of the three knowledge test scores differed significantly between the two groups.

Conclusions

Videos based on cognitive load theory produced similar results and could replace traditional lectures for medical students. Educators contemplating a FC approach should devote their valuable classroom time to active learning methods.

Medical educators have increasingly proposed using a flipped classroom (FC) for medical student education.1, 2 In this model, the traditional lecture is a student's preclass homework, while in‐class time is spent on active, inquiry‐based learning facilitated by an instructor.1, 3, 4, 5 Most preclass lectures were recorded in the form of podcasts, screencasts, and videos.6 Despite the promise of FC to promote active learning, outcomes are inconsistent.6, 7, 8 By dissecting how each element (preclass and in‐class) contributes to learning, perhaps we can design a better medical student curriculum.

The FC incorporates active learning, with teachers explaining in‐depth knowledge instead of merely providing factual information and students spending time in discussion with peers.9 Apart from being interactive,8, 10 in‐class activities are conducted in highly heterogeneous ways, and little is known about how specific in‐class activities contribute to learning.7

When we focus on preclass videos, the literature comparing videos to lectures is inconclusive, with some studies favoring videos,1, 2, 11, 12, 13, 14 and some finding no difference in learning outcomes.12, 14, 15, 16, 17 In other studies, video groups showed worse learning outcomes,3, 12, 18, 19, 20, 21 with authors citing reasons including no peer interaction, low compliance with learning plans, and lack of accountability.1, 3, 4, 5 Studies reported suboptimal use of videos,7 with view rates ranging from around 60%22, 23, 24 to 83%.25 With no clear evidence to support videos, some authors have urged educators to stop replacing lectures with them.26, 27, 28

If we dissect these videos further, the variation in learning outcomes might be due to differences in design.29 Few curriculum developers subscribed to guidelines grounded in pedagogical principles.29, 30 Because configurations, methods, and presentations are diverse,31, 32 Mayer's multimedia design principles based on cognitive load theory have been espoused to guide future designs.33, 34, 35 In Mayer's approach, multimedia instruction risks cognitive overload when the learner's required cognitive processing exceeds the available capacity.34, 36 His principles reduce cognitive load to maximize learning.22, 36, 37, 38 However, with few exceptions, most studies did not explicitly reference any design principles.36, 39, 40 The scant descriptions of video production were typically limited to the software programs and hardware equipment used. Format, if mentioned, varied.27 Examples included interactive games,31 animations,33 or videotaped lectures.22, 37 Given the lack of detail in these preclass materials, study replication is difficult.

Therefore, while the in‐class components are important, it is possible that video design partly accounts for the variability in published FC outcomes. To compare and replicate studies, it would be useful for preclass videos to adhere to good practice for format and design. More specifically, we need to establish whether videos adhering to Mayer's principles could replace lectures in the FC model.

We set out to determine if educational videos adhering to Mayer's multimedia design principles resulted in any difference in learning outcomes for medical students when compared to traditional lectures in the setting of an emergency medicine undergraduate clerkship.

Methods

We conducted a quasi‐experimental controlled study to evaluate the effectiveness of videos compared with lecture for teaching medical students trauma assessment.

Study Setting and Population

All 113 third‐year English stream medical students entering their mandatory emergency medicine rotation from the university were invited to participate and enrolled in a continuous fashion from March 2013 to March 2014. We received approval from the research ethics board from the Ottawa Research Health Institute and the University of Ottawa. As per institutional review board–approved protocol, students were informed that their participation and results would not affect their rotation assessment.

Lecture and Video Development

An emergency medicine clerkship director created the content for an “approach to trauma” presentation with university curriculum objectives. The PowerPoint (Microsoft Corp.) slide design adhered closely to Mayer's principles38 (see Table 1). There was minimal text (coherence principle). Instead, there were relevant nonanimated graphics with a few words next to them (multimedia principle). Each section started with a highlighted introduction and summary (signaling principle). These slides formed the basis of the lecture and the videos. The planned content was written as a script.

Table 1.

Examples in Slides Using Mayer's Principles

| Principle | Examples |
|---|---|
| Coherence principle: eliminate extraneous material | One to two keywords only: “airway assessment,” “pneumothorax.” |
| Multimedia principle: present words and pictures rather than words alone | On “mechanism” of trauma, a single photo of a wrecked car is shown. |
| Signaling principle: highlight essential material | Included intro and summary. Slide heading emphasized key point. |
| Contiguity principle: place printed words near corresponding graphics | On slide with tension pneumothorax keywords “tension pneumothorax.” |
| Pretraining principle: provide pretraining in names and characteristics of key concepts | On primary survey slide included the list of “airway, breathing, circulation, disability and exposure.” |

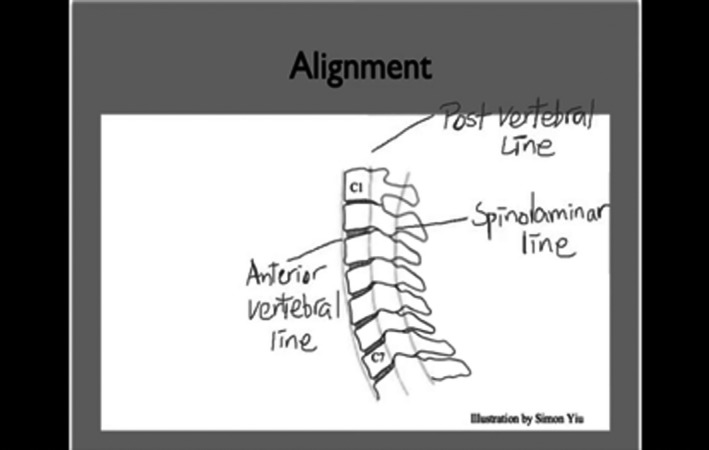

The same instructor used the slides and script to record five voice‐over videos (spanning 6–12 min). The videos applied several further Mayer principles. Content was segmented into five videos (segmenting principle). Each video contained the narrated script (modality principle), delivered in a conversational style (personalization principle) by a person (voice principle). The videos also used the contiguity principle by showing words near the corresponding graphics at the exact time of narration (see Figure 1).

Figure 1.

Still of cervical spine assessment video (https://flippedemclassroom.wordpress.com/2014/04/14/approach-to-trauma-by-stella-yiu/). Handwritten text and graphics were added in real time during narration (using contiguity principle).

The slides and videos were pilot‐tested by another clerkship director and students. After suggested modifications, the videos were uploaded to a password‐protected Web page. An embedded Google Analytics tracker logged visits anonymously.

In the lecture, the instructor presented the slides over a 2‐hour session with the planned script. The first lecture was recorded and audited by another instructor to ensure consistency with the planned script.

Study Protocol

Once consent was obtained, participants were enrolled in the study. The university had already randomly assigned students into groups (of either 15 or 16) prior to the start of clerkship. These groups were created to rotate students through the various disciplines during clerkship training. These groups were then assigned to either the lecture group (LG) or the video group (VG) in an alternating fashion.
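The alternating allocation of pre-formed groups can be illustrated with a short sketch. This is only an illustration: the function name `assign_alternating`, the group labels, the number of groups, and the choice of which arm starts the sequence are assumptions, not details reported in the study.

```python
def assign_alternating(group_ids, arms=("LG", "VG")):
    """Assign pre-formed student groups to study arms in alternating order.

    Assumption: the first group goes to the first arm listed; the source
    does not state which arm started the sequence.
    """
    return {group: arms[i % len(arms)] for i, group in enumerate(group_ids)}


# Hypothetical labels for eight rotation groups (illustrative only).
allocation = assign_alternating([f"group_{n}" for n in range(1, 9)])
lg_groups = [g for g, arm in allocation.items() if arm == "LG"]
vg_groups = [g for g, arm in allocation.items() if arm == "VG"]
```

Because allocation follows the order of the rotation schedule rather than a random draw, this is a quasi-experimental rather than an individually randomized design.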

On day 3, LG students attended the lecture and VG students were given access to the videos. On day 8, each participant performed alone in an observed structured clinical examination (OSCE) in a high‐fidelity simulation scenario in trauma assessment and management. Each performance was recorded. Participants also completed three written knowledge tests on day 1 (pretest), day 8 (posttest), and week 5/6 (retention test). After data collection, LG students were also given access to the videos.

Measurements

Our primary outcome measure was application of trauma management skills. Secondary measures included acquired knowledge and attitudes to the learning methods used.

OSCE

The OSCE assessment consisted of a checklist (total of 20) and an anchored global rating scale.41 We delineated three domains of trauma management (airway, breathing, and circulation) and identified specific objective elements for each domain. Each item on the checklist required an application of the cognitive domain of the objective. Three raters (staff emergency physicians), all with 5 or more years of experience rating OSCE performances, independently reviewed two pilot videos and were trained on the checklist. They then discussed and revised unclear items on the checklist through consensus. Two raters independently scored each recorded performance at a later date: rater 1 scored all recordings, and raters 2 and 3 each scored 50% of the recordings. Raters were blinded to group allocation and to each other's scores.

Knowledge Assessments

We created 60 multiple‐choice questions based on a representative sample of items of varying difficulty linked to the content domain. We followed previous guidelines for question construction.42, 43 The questions matched the learning objectives of the videos and the lecture. They were reviewed by three expert content reviewers (staff emergency physicians) and piloted on a separate group of students before the study. We refined ambiguous wording. The questions were randomly divided, based on the main content domains tested, into three 20‐item tests. No interitem correlation for the content domains was analyzed. The participants answered these three knowledge tests on day 0, day 8, and week 5 or 6 depending on the rotation schedule.
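One way to divide a question bank randomly into equal tests while balancing content domains is sketched below. It is a hypothetical illustration, not the authors' procedure: the function name `split_by_domain`, the domain labels, the even spread of questions across domains, and the fixed seed are all assumptions.

```python
import random
from collections import defaultdict


def split_by_domain(questions, n_tests=3, seed=0):
    """Shuffle questions within each domain, then deal them round-robin
    into n_tests forms so every form gets a similar domain mix."""
    rng = random.Random(seed)
    by_domain = defaultdict(list)
    for question_id, domain in questions:
        by_domain[domain].append(question_id)

    tests = [[] for _ in range(n_tests)]
    dealt = 0  # running counter keeps the total form lengths balanced
    for domain in sorted(by_domain):
        ids = by_domain[domain]
        rng.shuffle(ids)
        for question_id in ids:
            tests[dealt % n_tests].append(question_id)
            dealt += 1
    return tests


# Hypothetical bank: 60 question ids spread over three illustrative domains.
domains = ["airway", "breathing", "circulation"]
bank = [(i, domains[i % 3]) for i in range(60)]
pretest, posttest, retention = split_by_domain(bank)
```

Dealing within each domain keeps the number of questions per domain within one of each other across the three forms, which is what makes the pretest, posttest, and retention tests roughly comparable in content.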

Survey

The survey assessed the students’ experience and attitudes toward their assigned modality and learning style prior to the simulation. The question “Would you have preferred what you were assigned to?” was repeated after the OSCE simulation assessment. A research assistant extracted and collected the data. A random 10% of sample data was reviewed for accuracy.

Data Analysis

Study data were analyzed using SAS software. The experts agreed by consensus that a 10% difference in the OSCE score (an absolute value of 2) was educationally significant, in keeping with prior studies.44 Assuming a normal distribution, 100 participants (50 in each group) would provide a power of 0.80 to detect a 10% difference between the groups (two‐sided test at α = 0.050). Differences in examination scores between groups and their two‐sided 95% confidence intervals (CIs) were calculated using Student's t‐tests. Cohen's d was calculated for practical significance. Inter‐rater reliability was calculated between the independent raters. Categorical qualitative data were analyzed using Fisher's exact test. For ordinal data in the surveys (Likert scales), nonparametric statistics were used. Participants were analyzed in the groups they were assigned to.
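The sample size reasoning above can be illustrated with the usual normal-approximation formula for comparing two means. This is a sketch under stated assumptions: the function name `n_per_group` is hypothetical, and the standard deviation of 3.5 points is an illustrative value, since the assumed standard deviation is not given in the text.

```python
import math
from statistics import NormalDist


def n_per_group(delta, sd, alpha=0.05, power=0.80):
    """Approximate per-group sample size for a two-sided, two-sample
    comparison of means (normal approximation, equal group sizes)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_beta) * sd / delta) ** 2)


# delta = 2 points is the educationally significant difference from the text;
# sd = 3.5 is a hypothetical value chosen only for illustration.
n_example = n_per_group(delta=2.0, sd=3.5)
```

The required sample size grows with the square of the ratio of standard deviation to detectable difference, so a modest change in the assumed spread of OSCE scores changes the required enrollment considerably.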

Results

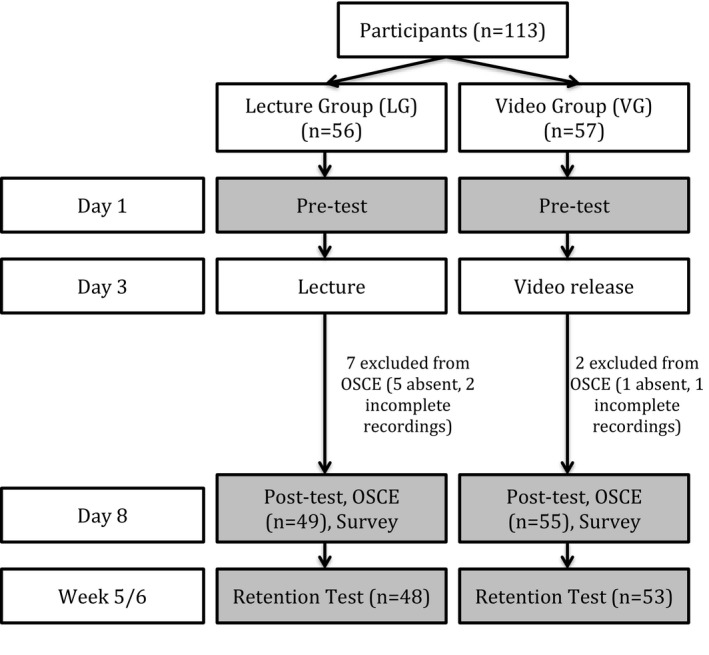

All 113 students entering the emergency medicine rotation provided consent. Half of the student groups (two VG, two LG) had completed prior surgery rotations, while the rest (two VG, two LG) had not. All students had previous OSCE experience. There were 104 recordings (49 LG, 55 VG) available for analysis (see Figure 2).

Figure 2.

Study flow diagram. OSCE = observed structured clinical examination.

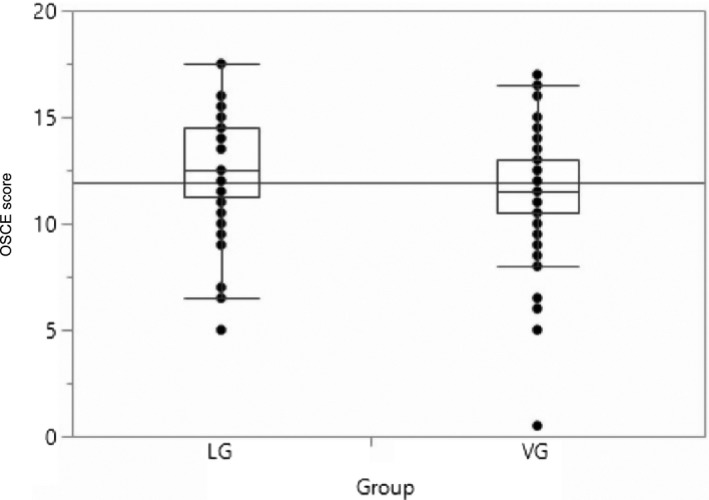

Primary Outcome

The mean OSCE scores were 12.61 and 11.32 for the LG and VG, respectively. The mean difference of 1.29 (95% CI = 0.23 to 2.35) was statistically significant on an independent t‐test (t(102) = 2.43, p = 0.017) but did not reach the threshold for educational significance defined a priori (a difference of > 2). Cohen's d was 0.481, suggesting a moderate practical significance (see Figure 3). Inter‐rater reliability was good between raters 1 and 2 (intraclass correlation [ICC] = 0.834, 95% CI = 0.685 to 0.909) and excellent between raters 1 and 3 (ICC = 0.953, 95% CI = 0.920 to 0.973).
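As a rough consistency check, the reported effect size can be approximated from the t statistic and the group sizes. This sketch assumes a pooled-variance independent t‐test; the function names are hypothetical and the inputs are the rounded values reported above, so small discrepancies from the published figures are expected.

```python
import math


def cohens_d_from_t(t_stat, n1, n2):
    """Approximate Cohen's d from an independent-samples t statistic
    (valid for the pooled-variance form of the test)."""
    return t_stat * math.sqrt(1 / n1 + 1 / n2)


def standard_error_from_t(mean_diff, t_stat):
    """Back out the standard error of the mean difference."""
    return mean_diff / t_stat


# Reported values: t(102) = 2.43, 49 LG and 55 VG recordings, difference 1.29.
d_approx = cohens_d_from_t(2.43, 49, 55)
se_approx = standard_error_from_t(1.29, 2.43)
```

With these rounded inputs the approximation gives a d of about 0.48, in line with the reported 0.481.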

Figure 3.

OSCE scores for both groups. LG = lecture group; OSCE = observed structured clinical examination; VG = video group.

Secondary Outcomes

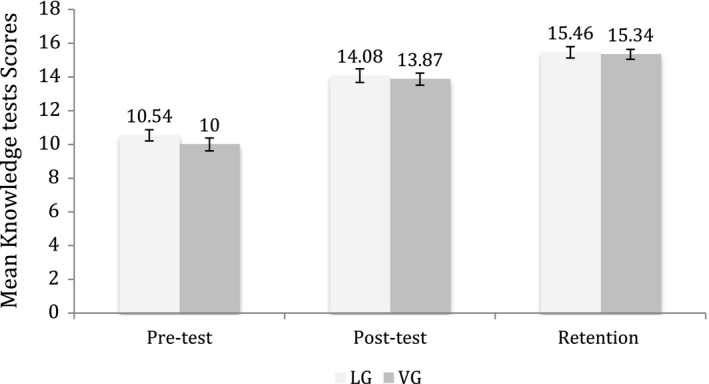

Knowledge Tests

Test scores from 101 participants were available for analysis (48 LG, 53 VG). There were no statistically significant differences between the two groups in pretest, posttest, or retention scores (see Figure 4). Cohen's d was 0.105 and 0.034, respectively, indicating no practical difference.

Figure 4.

Mean knowledge tests scores for both groups. LG = lecture group; VG = video group.

Video Use

The highest number of visits occurred on the day prior to simulation (mean = 11.75, 10–16). Of the VG, 90% (45 of 50) self‐reported that they had viewed all the videos. Repeated viewing was reported by 25% (13 of 52) of students. The number of page visits over the rotation totaled more than the number of students, also suggesting repeated visits. These visits may also have included LG students, who were given access to the videos after data collection.

Survey

There were 103 students who participated in the survey (51 LG, 52 VG). Most students agreed (somewhat or strongly) that there were clear learning goals (88% LG vs. 94% VG, p = 0.1562) and the correct level of complexity (90% LG vs. 94% VG, p = 0.4878) and organization (85% LG vs. 96% VG, p = 0.0923). The majority of students (73% LG vs. 65% VG) agreed (somewhat or strongly) that it was better than the average teaching session (p = 0.5754). Significantly more LG (80%) than VG (33%) students agreed (somewhat or strongly) that the session encouraged collaboration (p < 0.0001), was interactive (88% LG vs. 34% VG, p < 0.0001), and was more demanding (28% LG vs. 13% VG, p = 0.0109). Most students agreed (somewhat or strongly) that the session was flexible to meet their needs (82% LG vs. 85% VG, p = 0.5195) and answered their own questions (85% LG vs. 73% VG, p = 0.3348).

Learning Preferences

Significantly more LG (71%) than VG (37%) preferred learning with a lecturer (p = 0.002), while more VG (73% VG vs. 55% LG, p = 0.1216) stated that they preferred learning on their own time. A third of both groups (33% LG vs. 34% VG, p = 0.9659) stated that they were prompted to look up their own resources.

Application

Most students (75% LG vs. 81% VG, p = 0.4248) felt that the session would help them apply their knowledge in a case scenario. Prior to simulation, 81% of the LG and 73% of the VG preferred what they were assigned to (p = 0.3319). After simulation, 81% LG versus 67% VG preferred their assigned group (p = 0.0994).

Discussion

In our study, we set out to determine if educational videos resulted in any difference in knowledge application for medical students compared to traditional lecture in an emergency medicine undergraduate clerkship. We found no educationally significant differences in knowledge application, acquisition, and retention. Students were satisfied with the group they were assigned to, with no significant difference before or after the application test. Therefore, videos adhering to multimedia design principles could replace traditional lectures in producing similar knowledge acquisition and application in an OSCE setting.

Inconsistency in learning outcomes in the multimedia learning literature could be partly due to inconsistent adherence to design guidelines,30 such as Mayer's multimedia design principles based on cognitive load theory.33, 34, 35 Multimedia learning requires a substantial amount of cognitive processing in the verbal and visual channels.34 The capacity for this cognitive processing is limited.45 Mayer's guidelines decrease channel overload and increase cognitive processing capacity by removing extraneous and confusing material.34 In Mayer's controlled experiments, students did better in tests when these individual principles were used,46 leading him to conclude that “cognitive load is a central consideration in the design of multimedia instruction.” This echoes a previous study suggesting that sound pedagogy is more important than technology.47

The strength of our study is that we adhered strictly to Mayer's multimedia principles and attempted to keep curriculum confounders to a minimum. While a similar recent study with multimedia principle‐based videos focused on satisfaction and attitudes,48 we used skill application in an OSCE setting as our primary outcome.

Our study adds to previous work suggesting that videos adherent to multimedia design principles could replace traditional lectures. These videos could become reusable learning objects, freeing up valuable expert faculty time for interactive in‐class learning. Rather than comparing videos to lectures, future research should focus on which specific interactive elements are suitable for in‐class learning.

Limitations

There are a few limitations in our study. We did not randomize students individually, but used an alternating block sequence for the intervention (VG) and control group (LG) to allow for a contemporaneous control group with respect to clinical maturity and exposure to trauma cases. As the university assigned student groups before the study, it is possible that students with preferences for particular clinical topics might have requested to be in specific groups. This preference was not captured.

While half of VG and LG had exposure to trauma patients before our study through previous mandatory surgery rotations, we did not collect data on previous experience in assessing trauma patients. We also did not collect data on previous exposure to multimedia learning and simulation training.

These confounders could potentially affect our outcome, as those with previous exposure to trauma patients, simulation, or multimedia learning might have done better in the OSCE. As students were not blinded to the group they were assigned to, there is potential bias in their performances.

The lecture (1.75 hours) was longer than the combined length of the videos (0.76 hours). While we attempted to keep the material identical (same slides, annotations, and script and by auditing the first lecture), students asked questions during lectures. Those questions and subsequent answers therefore represented minor deviations from the planned script. Even though a tracker was used, we could not identify separate page visits to measure individual views.

The LG had a one‐time exposure to the lecture, whereas the VG could access the videos at any time. It is possible that VG students accessed the videos multiple times, as some self‐reported watching them repeatedly prior to the OSCE and posttest. This could have skewed the results toward the VG via spaced repetition.

Cook49 has suggested that media‐comparative studies such as this should not be done as there are confounders even if the content is identical. Instead, he suggested research is best done within rather than between levels of hierarchy (configuration, instructional method, and presentation) to answer the question of “how” and “when” to use e‐learning effectively. Other authors lamented that the scope of evaluation was often limited to satisfaction or knowledge acquisition.50, 51 Addressing the concerns of Cook49 about comparing different media, we attempted to keep potential confounders between the two methods (narrator, material, script, slides, vignettes, etc.) to a minimum.

Conclusions

Videos strictly adhering to Mayer's multimedia design principles could replace traditional lectures in medical student skill acquisition and application. Medical educators should use well‐designed videos to transmit content and devote classroom time to active learning activities.

We thank the Clerkship Program and the Dean for Undergraduate Medical Program at the University of Ottawa and the University of Ottawa Simulation and Skills Centre for participation in this study.

AEM Education and Training 2020;4:10–17

The authors have no relevant financial information or potential conflicts to disclose.

Author contributions: SY and JRF were responsible for the conception and design of this study, data acquisition and interpretation, and drafting and revising of the manuscript; SY, AS, PP, and MW were responsible for the design of the study, acquisition and interpretation of data, and drafting of the manuscript; and ACL was responsible for analysis and interpretation of data in this study and drafting of the manuscript.

References

- 1. Prober CG, Heath C. Lecture halls without lectures–a proposal for medical education. N Engl J Med 2012;366:1657–9.

- 2. Prober CG, Khan S. Medical education reimagined. Acad Med 2013;88:1407–10.

- 3. Bergmann J, Sams A. Remixing chemistry class: two Colorado teachers make vodcasts of their lectures to free up class time for hands‐on activities. Learn Lead Technol 2009;36:22–7.

- 4. Roehl A, Reddy SL. The flipped classroom: an opportunity to engage millennial students through active learning strategies. J Fam Consum Sci 2013;105:44–9.

- 5. McDonald K, Smith CM. The flipped classroom for professional development: part I. Benefits and strategies. J Contin Educ Nurs 2013;44:437–8.

- 6. Kraut A, Omron R, Caretta‐Weyer H, et al. The use of flipped classrooms in higher education: a scoping review. Internet High Educ 2019;25:527–36.

- 7. Chen F, Lui AM, Martinelli SM. A systematic review of the effectiveness of flipped classrooms in medical education. Med Educ 2017;51:585–97.

- 8. Hew KF, Lo CK. Flipped classroom improves student learning in health professions education: a meta‐analysis. BMC Med Educ 2018;18:38.

- 9. Bouwmeester RAM, de Kleijn RAM, van den Berg IET, ten Cate O.Th.J, van Rijen HVM, Westerveld HE. Flipping the medical classroom: Effect on workload, interactivity, motivation and retention of knowledge. Comput Educ 2019;139:118–28. doi:10.1016/j.compedu.2019.05.002

- 10. Jensen JL, Kummer TA, Godoy PD. Improvements from a flipped classroom may simply be the fruits of active learning. CBE Life Sci Educ 2015;14:1–12.

- 11. Means B, Toyama Y, Murphy R, Bakia M, Jones K; Center for Technology in Learning. Evaluation of Evidence‐based Practices in Online Learning: A Meta‐analysis and Review of Online Learning Studies. Washington, DC: U.S. Department of Education, Office of Planning, Evaluation, and Policy Development, 2010.

- 12. Fung K. Otolaryngology – head and neck surgery in undergraduate medical education: advances and innovations. Laryngoscope 2015;125(Suppl 2):1–14.

- 13. Garcia‐Rodriguez JA, Donnon T. Using comprehensive video‐module instruction as an alternative approach for teaching IUD insertion. Fam Med 2016;48:15–20.

- 14. Weber U, Constantinescu MA, Woermann U, Schmitz F, Schnabel K. Video‐based instructions for surgical hand disinfection as a replacement for conventional tuition? A randomised, blind comparative study. GMS J Med Educ 2016;33:Doc57.

- 15. Bishop JL, Verleger MA. The Flipped Classroom: A Survey of the Research. 120th ASEE National Conference & Exposition, 2013.

- 16. Saun TJ, Odorizzi S, Yeung C, Johnson M, Bandiera G, Dev SP. A peer‐reviewed instructional video is as effective as a standard recorded didactic lecture in medical trainees performing chest tube insertion: a randomized control trial. J Surg Educ 2017;74:437–42.

- 17. Saiboon IM, Jaafar MJ, Ahmad NS, et al. Emergency skills learning on video (ESLOV): a single‐blinded randomized control trial of teaching common emergency skills using self‐instruction video (SIV) versus traditional face‐to‐face (FTF) methods. Med Teach 2014;36:245–50.

- 18. Bergmann J, Sams A. Flip Your Classroom: Reach Every Student in Every Class Every Day. Washington, DC: International Society for Technology in Education, 2012.

- 19. Markova A, Weinstock MA, Risica P, et al. Effect of a web‐based curriculum on primary care practice: basic skin cancer triage trial. Fam Med 2013;45:558–68.

- 20. Platz E, Liteplo A, Hurwitz S, Hwang J. Are live instructors replaceable? Computer vs. classroom lectures for EFAST training. J Emerg Med 2011;40:534–8.

- 21. Ahmed HM. Hybrid E‐Learning Acceptance Model: Learner Perceptions [Internet]. Decision Sciences Journal of Innovative Education. 2010;8:313–46. Available from: https://login.proxy.bib.uottawa.ca/login?url=http://www.biblio.uottawa.ca/html/index.jsp?xml:lang=en. Accessed October 18, 2017.

- 22. Rose E, Claudius I, Tabatabai R, Kearl L, Behar S, Jhun P. The flipped classroom in emergency medicine using online videos with interpolated questions. J Emerg Med 2016;51:284–91.e1.

- 23. Liebert CA, Lin DT, Mazer LM, Bereknyei S, Lau JN. Effectiveness of the surgery core clerkship flipped classroom: a prospective cohort trial. Am J Surg 2016;211:451–1.

- 24. Young T, Bailey C, Guptill M, Thorp A, Thomas T. The flipped classroom: a modality for mixed asynchronous and synchronous learning in a residency program. West J Emerg Med 2014;15:938–44.

- 25. Sowan AK, Idhail JA. Evaluation of an interactive web‐based nursing course with streaming videos for medication administration skills. Int J Med Inform 2014;83:592–600.

- 26. Cook DA, Triola MM. What is the role of e‐learning? Looking past the hype. Med Educ 2014;48:930–7.

- 27. Green LS, Banas JR, Perkins RA. The Flipped College Classroom: Conceptualized and Re‐Conceptualized. New York: Springer International Publishing, 2016.

- 28. Robin BR, McNeil SG, Cook DA, Agarwal KL, Singhal GR. Preparing for the changing role of instructional technologies in medical education. Acad Med 2011;86:435–9.

- 29. Tang B, Coret A, Barron H, Qureshi A, Law M. Online lectures in undergraduate medical education: how can we do better? Can Med Educ J 2019;10:e137–9.

- 30. De Leeuw RA, Westerman M, Nelson E, Ket JC, Scheele F. Quality specifications in postgraduate medical e‐learning: an integrative literature review leading to a postgraduate medical e‐learning model. BMC Med Educ 2016;16:759.

- 31. Belfi LM, Bartolotta RJ, Giambrone AE, Davi C, Min RJ. “Flipping” the introductory clerkship in radiology. Acad Radiol 2015;22:794–801.

- 32. Cook DA, Garside S, Levinson AJ, Dupras DM, Montori VM. What do we mean by web‐based learning? A systematic review of the variability of interventions. Med Educ 2010;44:765–74.

- 33. Mata CA, Ota LH, Suzuki I, Telles A, Miotto A, Leão LE. Web‐based versus traditional lecture: are they equally effective as a flexible bronchoscopy teaching method? Interact Cardiovasc Thorac Surg 2012;14:38–40.

- 34. Mayer RE, Moreno R. Nine ways to reduce cognitive load in multimedia learning. Educ Psychol 2003;38:43–52.

- 35. Cook DA, Levinson AJ, Garside S, Dupras DM, Erwin PJ, Montori VM. Instructional design variations in internet‐based learning for health professions education: a systematic review and meta‐analysis. Acad Med 2010;85:909–22.

- 36. van Merriënboer JJ, Sweller J. Cognitive load theory in health professional education: design principles and strategies. Med Educ 2010;44:85–93.

- 37. McLaughlin JE, Griffin LM, Esserman DA, et al. Pharmacy student engagement, performance, and perception in a flipped satellite classroom. Am J Pharm Educ 2013;77:196.

- 38. Mayer RE. Applying the science of learning to medical education. Med Educ 2010;44:543–9.

- 39. Taveira‐Gomes T, Saffarzadeh A, Severo M, Guimarães MJ, Ferreira MA. A novel collaborative e‐learning platform for medical students ‐ ALERT STUDENT. BMC Med Educ 2014;14:143.

- 40. Garcia‐Rodriguez JA. Teaching medical procedures at your workplace. Can Fam Physician 2016;62:351–4, e2225.

- 41. Abdulghani HM, Ponnamperuma G, Amin Z. Value of the OSCE as an assessment tool. In: Abdulghani HM, Ponnamperuma G, Amin Z, editors. An Essential Guide to Developing, Implementing and Evaluating Objective Structured Clinical Examination (OSCE). Singapore: World Scientific, 2014. p. 23–35.

- 42. Lowe D. Set a multiple choice question (MCQ) examination. BMJ 1991;302:780–2.

- 43. Anderson J. Multiple‐choice questions revisited. Med Teach 2009;26:110–3.

- 44. Chenkin J, Lee S, Huynh T, Bandiera G. Procedures can be learned on the web: a randomized study of ultrasound‐guided vascular access training. Acad Emerg Med 2008;15:949–54.

- 45. Chandler P, Sweller J. Cognitive load theory and the format of instruction. Cogn Instruct 1991;8:293–332.

- 46. Mayer RE, Moreno R. A split‐attention effect in multimedia learning: evidence for dual processing systems in working memory. J Educ Psychol 1998;90:312.

- 47. DeBourgh GA. Technology Is the Tool, Teaching Is the Task: Student satisfaction in Distance Learning. San Antonio: ERIC Institute of Education Sciences, 1999.

- 48. Lew EK. Creating a contemporary clerkship curriculum: the flipped classroom model in emergency medicine. Int J Emerg Med 2016;9:25.

- 49. Cook DA. The research we still are not doing: an agenda for the study of computer‐based learning. Acad Med 2005;80:541.

- 50. Ruggeri K, Farrington C, Brayne C. A global model for effective use and evaluation of e‐learning in health. Telemed J E Health 2013;19:312–21.

- 51. Cook DA, Levinson AJ, Garside S, Dupras DM, Erwin PJ, Montori VM. Internet‐based learning in the health professions: a meta‐analysis. JAMA 2008;300:1181–96.