Abstract

Background

Clinical teaching faculty rely on schemas for diagnosis. When they attempt to teach medical students, a gap in interpretation may arise because the students do not yet have the same schemas. The aim of this analysis was to explore expert thinking processes through mind maps, to help identify the gaps between an expert's mind map of their diagnostic thinking and how students interpret this teaching artifact.

Methods

A novel mind‐mapping approach was used to examine how emergency physicians (EPs) explain their clinical reasoning schemas. Nine EPs were each shown two different videos of a student interviewing a patient with possible venous thromboembolism. EPs were then asked to explain their diagnostic approach using a mind map, as if they were thinking aloud to a student. Later, another medical student re‐interviewed the EPs to clarify their mind maps and revise them as needed. A coding framework was generated to characterize discrepancies between the EP‐generated mind maps and the novice's interpretation.

Results

Every mind map (18 mind maps from nine individuals) contained some discrepancy between the expert's mind map and the novice's interpretation. From a qualitative analysis of the changes between the original mind maps and their later revisions, the authors developed a conceptual framework describing the types of amendments that students might expect teachers to make to their mind maps: 1) substantive amendments, such as incomplete mapping; and 2) clarifications, such as the need to explain background for a mind map element.

Conclusion

Emergency physician teachers tend to make jumps in reasoning, which most commonly appear as incomplete mapping or as maps requiring clarification. Educating EPs about these tendencies may allow them to adapt their teaching to better suit learners.

Dual‐process theory postulates that decision making relies on two types of thinking, heuristic and analytical, otherwise known as fast and slow thinking.1, 2 Fast thinking (also called system 1) is automatic and intuitive and requires little effort (e.g., recognizing at a glance whether an animal is a zebra or a horse); slow thinking (system 2) is effortful and process‐driven and requires focus (e.g., explaining to a student how to arrive at an answer).2 Although trainees and faculty have been shown to engage similarly in both fast and slow thinking,3, 4 differences in experience can lead novices to perceive jumps in diagnostic reasoning when listening to the explanations of more seasoned clinicians.5

Within the medical field, fast thinking can allow physicians to make diagnoses quickly.6, 7, 8 Conversely, medical students are relatively inexperienced clinicians; as such, they are more likely to engage in “slow thinking” because their heuristic patterns have not yet fully developed.9 These novices must learn both implicitly, by observing experts make clinical judgments, and explicitly, through direct teaching. In the clinical teaching environment, expert fast thinking and novice slow thinking intersect: to teach novices effectively, the expert at times needs to deconstruct their reasoning process into analytic steps.

Expert physicians often use system 1 thinking to make diagnoses; medical students observe and infer diagnostic thinking from experts’ observable or stated reasoning. Unsurprisingly, students are often left confused when observing their expert teachers make diagnostic decisions. What appears obvious to an attending because of their previous experience and well‐developed heuristics seems like a leap of logic to the inexperienced apprentice. Teachers can use diagrams or drawings to explain their thinking to learners at the bedside.10, 11

Medical teachers use visual aids at the bedside to emphasize key teaching points to learners.10, 11, 12 Visual aids can take many forms, ranging from short notes (“Post It Pearls,” which are often captured at the bedside on sticky notes12) to full didactic whiteboard mini‐lectures. Visual aids such as mind maps can portray each discrete point that contributes to a diagnostic conclusion, thereby showing the slow‐thinking learning points entailed in the physician's final judgment. First developed in the 1970s, mind mapping is one such visual teaching resource; it relies on the visual portrayal of information.10 Mind mapping can help learners remember key points and quickly review information,13 and it makes teaching points more accessible by portraying them in visually interesting ways that emphasize only key words. Visual aids and mind maps may therefore provide an opportunity to augment traditional bedside teaching by forcing teachers to clarify their clinical reasoning. While faculty use mind maps, handwritten algorithms, and diagrams to teach, it is not known whether these are effective tools for novice learners.

The objective of this study was to characterize the gap between faculty teaching aims and student learning. To do so, we examined mind maps as a teaching aid: we asked participants to generate mind maps in response to simulated clinical videos they observed and then, at a later date, to explain their own mind maps to a trainee research assistant who sought clarification of elements within their diagrams.

Methods

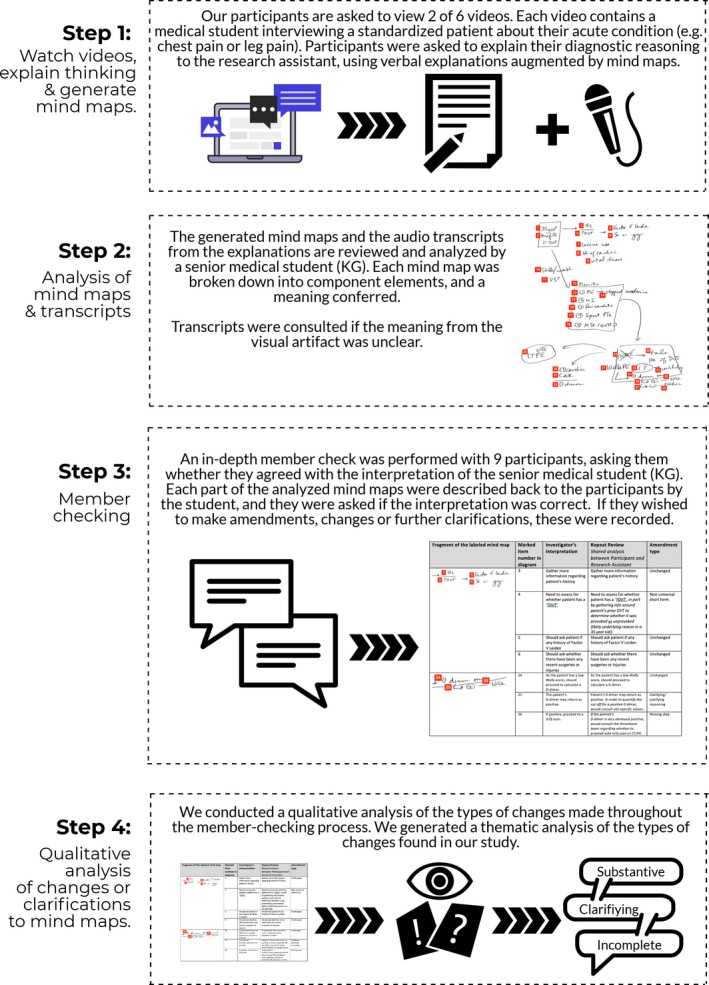

A novel mind‐mapping approach was used to examine how experienced emergency physicians (EPs) explain their diagnostic reasoning to learners, through a think‐aloud interview process14 using video‐prompted mind mapping.15 Figure 1 provides an overview of the entire study protocol. In this study, EPs were asked to explain their diagnostic reasoning to a medical student via a mind map; the intent of our design was to examine the explicit cognitive processes they use to explain a patient's diagnosis in a simulated teaching setting. Clinical cases suggestive of pulmonary embolism or deep vein thrombosis, with presentations including chest pain, breathlessness, and leg symptoms, were created to elicit this reasoning. We chose this knowledge elicitation technique because it goes beyond the usual think‐aloud interview protocol by leaving a teaching “artifact” that allowed us to revisit participants' initial thinking process.

Figure 1.

An overview of the study protocol.

This study contained analysis of the mind maps that were created during these think‐aloud interviews, as well as a planned, secondary analysis of the changes EPs made to their mind maps during a follow‐up interview (member check reinterpretation). The aim of this analysis was to determine the gaps between an expert's mind map of their diagnostic thinking (meant to portray the slow thinking underlying their reasoning) and a trainee's ability to interpret these maps.

Both the mind maps generated in the first interviews and the later amendments to them underwent qualitative analysis by two investigators, KG and TC. The investigators iteratively reviewed the original interpretation and the member check reinterpretation, generating a coding framework for the types of changes made to the mind map interpretations. Coding disagreements were resolved through consensus building; only three codes required this process. Each coding spreadsheet was then recoded by a single investigator (KG) to generate a tally of the changes that occurred through the member checking process.

Participants

Study participants were university‐affiliated staff emergency physicians at three academic hospitals in Hamilton, Ontario, Canada. We recruited nine physicians from these hospitals through convenience sampling. Participants had varying levels of clinical experience. Participant demographics are shown in Table 1.

Table 1.

Demographics of Physician Participants

| Characteristic | n (%) or median (IQR) |
|---|---|
| Sex | |
| Male | 7 (78) |
| Female | 2 (22) |
| Type of emergency medicine training | |
| College of Family Physicians of Canada, Emergency Medicine Program | 5 (56) |
| Royal College of Physicians and Surgeons of Canada Training Program | 4 (44) |
| Other descriptors | |
| Years in emergency medicine specialty training | 3 (1–5) |
| Years as a practicing physician | 18 (8–25) |

Data are reported as n (%) or median (interquartile range).

Procedures

A study protocol was written prior to the start of the study, and two research assistants (EG and MT) were trained to administer the protocol; EG and MT were both students at the time of administering the study protocol interviews (EG was a second‐year medical student, and MT was a premedical student). Ethical approval for this study was received from the Hamilton Integrated Research Ethics Board (HIREB #15‐246).

To ensure that they were familiar with the concept of mind mapping, EPs were first shown an example video demonstrating the construction of a mind map. A nonclinical example was used, involving a car that will not start (e.g., car will not start → check if proper key → check for visible damage …). This scenario was chosen as a practical, real‐life problem to which EPs could relate, to demonstrate how a mind map should present an approach to a problem. After watching this demonstration, EPs were shown a clinical video of a medical student taking a patient history. To capture a breadth of cases, a total of six patient interview videos were created (instructions for the participants and details about the cases are provided in Data Supplement S1, available as supporting information in the online version of this paper at http://onlinelibrary.wiley.com/doi/10.1111/acem.10379/full). EPs were randomized to view two of these six videos. The scenarios were created with venous thromboembolism (VTE) as a potential diagnosis, with varying degrees of clinical likelihood. VTE was selected because it is an area with many evidence‐based guidelines, which might drive diagnostic procedures and generate rich discussions.

After viewing each video, EPs were asked to explain their clinical approach to the scenario. They were instructed to explain how they would diagnose the cause of the patient's symptoms by thinking aloud through their decision‐making process while drawing a mind map.

Emergency physicians were explicitly advised to assume that the interviewer had no prior knowledge of VTE diagnosis, to encourage them to fully explain their diagnostic reasoning using slow thinking. After watching the video, EPs were given pen and paper to create their mind maps. To allow a true representation of their approach, EPs were not told which specific information to include in their mind maps. They drew a mind map and explained their approach to VTE to the research assistant. The explanation was audiotaped and transcribed for reference alongside the visual aids.

Interpretation of Mind Maps

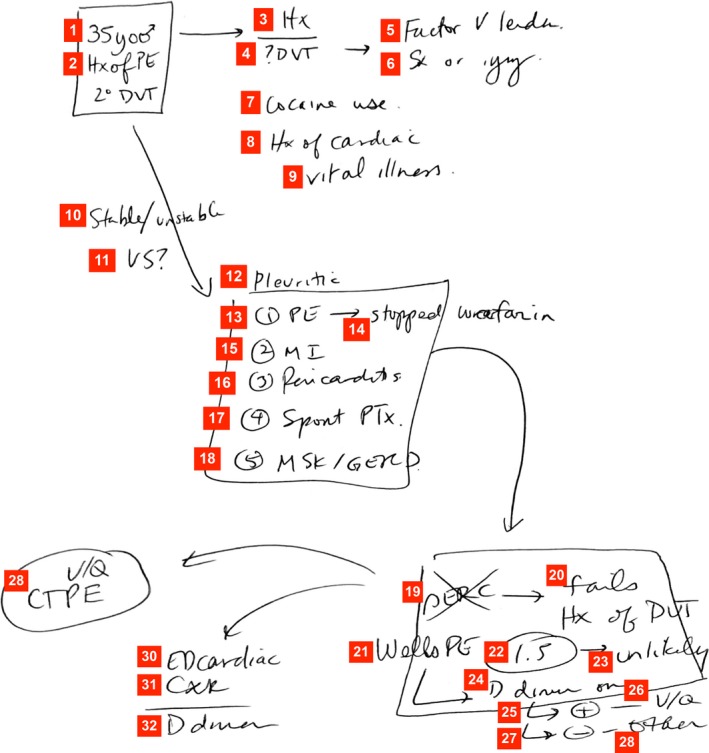

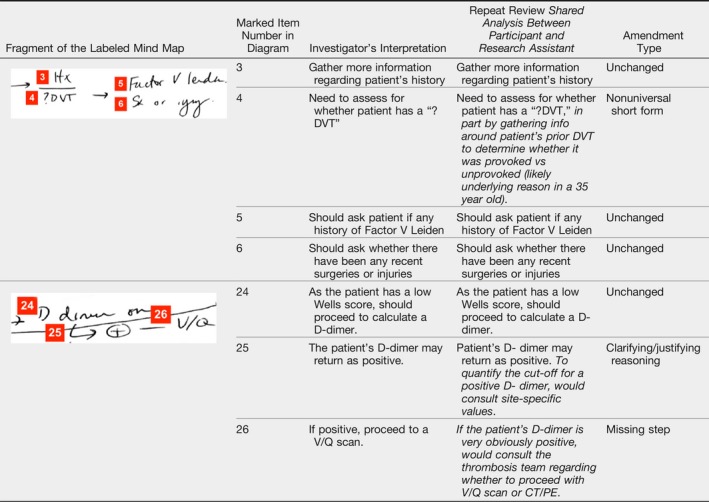

An investigator (a senior medical student [KG], not involved in the initial interviews) independently reviewed the mind maps and broke each map down into component steps (or individual teaching items); an example is shown in Figure 2. Interpretation and analysis followed the approach of Cristancho and colleagues,15 who used this method to analyze drawings by surgeons. To augment interpretation of the visual artifacts generated by our participants, the investigator also had access to the transcripts of the original sessions. Table 3 shows illustrative examples of mind map subcomponent analysis; each of the 18 mind maps was treated similarly.

Figure 2.

Example of a resulting mind map (redrawn by an author, to protect confidentiality, from the mind map of Participant 4, Scenario 6). This diagram shows a participant's thoughts on the case of a 35‐year‐old woman with a history of pulmonary embolism (PE) secondary to a deep vein thrombosis (DVT), highlighting the key elements of the case, the diagnostic reasoning, the application of clinical decision rules, diagnostic testing, and the overall thinking process. Legend: yo = year old; Hx = history; PE = pulmonary embolism; 2° = secondary; DVT = deep vein thrombosis; Sx = surgery; VS = vital signs; MI = myocardial infarction; PTx = pneumothorax; MSK = musculoskeletal; GERD = gastroesophageal reflux disease; PERC = PE rule‐out criteria; Wells PE = Wells’ pulmonary embolism criteria; V/Q = ventilation–perfusion study; CXR = chest x‐ray; CTPE = CT pulmonary embolism.

Member Check

Our medical student investigator (KG) then re‐interviewed each physician to confirm the inferred learning points, to ask about the meaning of items that were unclear, and to give EPs an opportunity to amend, supplement, or change their maps. Each interview was based on the mind map that the EP had generated and consisted of specific, targeted questions about elements of that map. Specifically, EPs were shown the research assistant's interpretation alongside their original mind map and asked whether they agreed with it. If they agreed, the research assistant moved on; if the query prompted a change, the adjusted interpretation was recorded verbatim. This more structured member‐checking process allowed us to determine which subcomponents were misinterpreted or required further clarification or additions. During the re‐interviews, the research assistant attempted to prompt EPs to engage in slow‐thinking teaching by asking key questions about unclear mind map points. These questions were intended to make logical jumps explicit through slow‐thinking explanations and thereby augment learners’ clinical understanding.

Results

We recruited nine physicians to participate in this phase of the study; their demographic data are shown in Table 1. Data from all nine EPs were included, yielding a total of 18 mind maps (two from each EP). Although there was no set time limit, EPs generally spent less than 10 minutes creating each map. The member checking interviews lasted 30 to 40 minutes.

Qualitative Analysis of Amendment Types

By examining the coding tables generated from the member‐check discussions, we qualitatively classified the amendments that EPs made when asked to clarify their mind maps. Tables 2 and 3 define the various types of changes, as pertaining to the redrawn diagram. The two major categories of changes were substantive deficits in the mind maps (e.g., incomplete, unclear, or complete change/revision) and changes that required only verbal clarification (e.g., adaptive techniques, clarifications of what was written).

Table 2.

Gaps in Understanding as Determined by the Qualitative Analysis of the Member Check Proceedings

| Main Category | Subcategory | Amendment Type | Definition of Amendment Type | Number in Data Set |
|---|---|---|---|---|
| Substantive deficits | Incomplete | Selective focus | On re‐interview, participants expanded more thoroughly on particular sections of the mind map, e.g., going into more depth on one part of the schema they had drawn to call attention to a certain aspect. | 4 |
| | Information missing from mind maps | Missing steps | Additional step added within the mind map, e.g., an additional diagnostic step such as ultrasound. | 41 |
| | | Missing rationale | Additional information needed to explain the rationale behind clinical steps, e.g., CXR done to rule out pneumothorax, trauma. | 15 |
| | | Missing data | Adding data to the map, e.g., additional factors to consider in the patient's likelihood of DVT. | 4 |
| | | Missing critical diagnosis | Adding a critical diagnosis to the differential diagnoses within the mind map, e.g., pneumothorax in the chest pain differential. | 1 |
| | | Missing diagnosis | Adding a noncritical diagnosis to the differential diagnoses within the mind map, e.g., musculoskeletal pain in the chest pain differential. | 8 |
| | Unclear | Vague | Mind map required additional information for clarity, e.g., the original map stated ‘1st and 2nd line imaging,’ requiring explanation that this meant CXR versus CT. | 3 |
| | | Nonuniversal short form | Using a nonuniversal abbreviation that is unclear to the reader, e.g., ?DVT to denote the question of whether or not the DVT was provoked. | 1 |
| | | Poorly constructed map | Map required modification because its construction was confusing to the reader, e.g., one differential diagnosis was circled even though it was as likely as all the others. | 1 |
| | | Poor placement of items | Changing the placement of existing items within the map, e.g., explaining that steps should be concurrent instead of sequential. | 3 |
| | Complete change/revision | Removing step | Mind map step removed as either extraneous or incorrect. | 2 |
| Total, substantive deficits | | | | 83 |
| Required verbal clarification | Adaptive techniques | Creating general rule | Applying a general rule to a clinical scenario, e.g., every patient with chest pain should receive an electrocardiogram. | 6 |
| | | “What if?” | Extrapolating the current clinical scenario to an imaginary patient, e.g., if this patient had been pregnant, I would have done ‘x’ instead. | 7 |
| | Clarifications required | Clarifications required | Required addition of background clarification, e.g., other risks in history of DVT would include swelling, prior history. | 1 |
| | | Clarifying/justifying reasoning | Adding a reason for clinical decisions, e.g., the decision to anticoagulate would be based on a local thrombosis study. | 21 |
| | | Expanding priorities | Additional information added to clarify which information is most relevant, e.g., ranking the differential diagnoses by likelihood. | 2 |
| | | Expanding differential diagnosis | Adding further differential diagnoses, e.g., PE and pneumothorax within respiratory causes of chest pain. | 7 |
| | | Expanding meaning of term | Adding information to an established term, e.g., “examine leg” to mean looking for swelling, redness, bruising. | 2 |
| | | Logistic clarification | Explanation added for site‐specific logistics, e.g., would anticoagulate the patient and have them return the next day because CT scans are unavailable overnight. | 4 |
| Total, required verbal clarification | | | | 50 |

CT = computed tomography; CXR = chest X‐ray; DVT = deep vein thrombosis; PE = pulmonary embolism.

Table 3.

Example of Mind Map Analysis from Participant 4, Scenario 6

Mind‐mapping Results

Box 1 summarizes one re‐interview in detail to illustrate the type of discussion that occurred. As the example in Box 1 shows, participant 13 required the research assistant to prompt them for further clarification.

Box 1. Detailed Summary of Re‐interview with Participant 13.

Participant 13 concluded his map (Scenario 3) by stating “need to rule out pulmonary embolism (PE) regardless of deep venous thrombosis (DVT). If no PE, still need to rule out DVT”. When given the opportunity to add further clarifying points to this statement, the EP chose not to do so. However, the observer then prompted the physician, asking how to rule out PE. With this prompt, the EP was able to break down the end goal into smaller teaching points (demonstrating a slow‐thinking approach). The EP stated that the patient would need to undergo a CT scan (with the exception of chronic renal failure cases, which would require a V/Q scan).

Subsequently, the EP was prompted again by the observer asking about steps following these investigations. With this prompt, the EP further explained that a positive scan would lead to treatment, while a negative scan would be followed up by a bilateral leg ultrasound.

Observations From Mind Map Member Checks

Certain EPs were able to shift to a slow‐thinking–based method of teaching upon review of their mind maps, while others remained unable to do so even with prompting. We inferred that “fast‐thinking” jumps manifest as gaps in the mind maps that are resistant to further prompting. An example of this phenomenon was participant 7, who declined to clarify their mind map after multiple prompts, stating: “All the info is in my map, I don't need to add anything.” This may reflect an inability to switch out of a fast‐thinking approach (i.e., skipping steps intuitively without being able to declare their thought processes), which would affect the way they teach learners. The resulting mind maps displayed considerable heterogeneity, both in learning points and in the extent to which EPs expanded on these points.

Discussion

In this exploratory mind‐mapping study, we found that experienced physicians tend to use fast thinking when diagnosing patients with symptoms of venous thromboembolism. Their teaching mind maps displayed fast‐thinking–type links rather than the slow‐thinking components we would expect when teaching inexperienced medical students. Experienced clinicians may generate incomplete depictions of decision making simply because they are unaware of the logical jumps (e.g., system 1–type heuristics) that they engage in during their normal clinical reasoning.

Herein lies the tension within the dual identity of the clinician–teacher: whereas an expert clinician develops and hones diagnostic acumen, a teacher needs to be declarative and specific in their descriptions. Sibbald and colleagues,8 for instance, have shown that experienced clinicians can determine the clinical trajectory of a patient within a few minutes of observing the patient. Experts develop and hone their system 1 processes so that they can come to a decision about a patient's illness,7 but when we ask these same individuals to concurrently teach junior trainees who need these processes unpacked and explained, our findings suggest that they stumble. Recognizing that expertise in diagnostic processes and declarative knowledge (i.e., what an expert is able to say aloud) are not the same thing may be a crucial point for faculty development, since teachers may find it difficult to explain what they see and think and may face other barriers to communicating their thoughts. These barriers may disproportionately affect inexperienced learners when teachers use mind maps, suggesting that even the most junior medical learners have an important role in prompting teachers to explain unclear “fast‐thinking” jumps.

Studies have shown mind maps to be a useful tool for improving knowledge retention among learners.16 Mind maps can help students recall central ideas, integrate critical thinking, and apply problem‐solving skills.17 They appear particularly effective when students have limited prior knowledge of the topic, as is the case with medical students.18 While the usefulness of mind maps as a study technique is well supported, less research has examined their use as a teaching technique.

Mind mapping may prove especially useful within the fast pace of the emergency department, which can be a challenging learning environment because bedside teaching sessions tend to be focused and brief. However, mind maps may leave learners with unanswered questions, which should ideally be addressed promptly because learners are unlikely to have further opportunities to ask for clarification. Our findings suggest that learners should ask probing questions to augment their learning, as understanding of the mind maps (and the underlying clinical reasoning) increased when there was an opportunity to ask questions. However, it is also important for teachers to be proactive in illustrating their thinking, because learners may not always have enough background knowledge to ask the right probing questions. The fast‐paced learning environment, as well as the learner's desire to be perceived as knowledgeable within a hierarchical system, can act as barriers to speaking up and asking clarifying questions.

During re‐interviews, multiple mind map amendments were made to each drawing. Some changes were prompted, while EPs made others independently after recognizing that their maps were missing key points or unclear explanations. These changes highlight gaps between a teacher and trainee's perspectives on the same material and offer an opportunity to educate teachers on their explicit teaching skills. Mind maps are helpful in making these unclear learning jumps explicit, because they illustrate each step of reasoning. For example, upon re‐interview one EP noted that the majority of their mind map reasoning relied on Wells’ pulmonary embolism criteria, but they never mentioned this aloud or noted it in their mind map. Reexamination of their mind map afterward was helpful in identifying this gap.

This study suggests that teaching with visual aids such as mind maps requires effective dialogue between teacher and student. Constructivist models of teaching and learning suggest that co‐construction with trainees allows them to link previously learned concepts to newer ones. As such, engaging with learners to identify learning gaps may reduce skipped steps and cognitive jumps. Furthermore, trainees must be empowered to prompt and ask questions that help EP teachers clarify and explain their thinking; notably, many of our participants readily explained and adjusted their thinking when asked.

Since learners engage in cognitive apprenticeship19 throughout their training, it is especially important for teachers to invite trainees into a discussion around visual artifacts (like mind maps) to ensure that the intended teaching points are communicated. Further faculty development research focused on training teachers to articulate their thinking or to develop new strategies for explaining their thinking may be prudent.

Limitations

Limitations of this study include the small number of participants, which makes it difficult to generalize to all teachers. This study used an ex vivo experimental design, so flaws in the EP mind maps may have been an artifact of this design. Furthermore, the mind maps were reinterpreted some time after the initial interviews, so participants' memory of their exact thinking may have been incomplete, which could account for some of the differences between the first‐round interview and the later member check. This study included physicians at three hospitals in one Canadian city, so the findings may not be generalizable to other centers with different teaching experiences and teacher training. A convenience sample of EPs was used, and participants' levels of experience varied; experience may influence the degree to which each EP engages in “fast” versus “slow” thinking. EPs were not shown the clinical scenario videos again during the re‐interview stage, which may have influenced the changes they made to their teaching points. Finally, although our participants frequently use mind mapping, none had formal training in this technique; some may have been more skilled at explaining difficult concepts and generating robust mind maps, while others may have found the process foreign, causing errors or misperceptions during the member check.

Conclusions

When clinical teachers express their diagnostic thinking via mind maps, they may not fully represent all of their cognitive processes within their diagrams. Mind maps provide a visual representation of expert thinking but may be fraught with errors if faculty are untrained in the technique. Meanwhile, trainees should be aware that they can use questioning to help elucidate gaps in their teachers' diagrams and engage teachers in amending or clarifying mind maps. The gaps we identified between a trainee's interpretation and a teacher's visual representation of their thinking may be a starting point for effective faculty and trainee development.

The primary authorship team thanks the students who contributed to this research.

Supporting information

Data Supplement S1. Instructions & Six Clinical Scenarios from Video Prompts.

AEM Education and Training 2020;4:54–63.

Presented at the Canadian Conference on Medical Education (CCME), Halifax, Nova Scotia, May 2018, and the Canadian Association of Emergency Physicians (CAEP) Conference, Calgary, Alberta, June 2018.

This work was funded by the McMaster Continuing Health Sciences Education Research Innovation Fund Grant.

The authors have no potential conflicts to disclose.

The Hamilton Integrated Research Ethics Board granted approval for this study (#15‐246).

References

- 1. Evans JS. Dual‐processing accounts of reasoning, judgment, and social cognition. Annu Rev Psychol 2008;59:255–78.

- 2. Kahneman D. Thinking, Fast and Slow. New York: Farrar, Straus and Giroux; 2011.

- 3. Monteiro SD, Sherbino JD, Ilgen JS, et al. Disrupting diagnostic reasoning. Acad Med 2015;90:511–7.

- 4. Sherbino J, Dore KL, Wood TJ, et al. The relationship between response time and diagnostic accuracy. Acad Med 2012;87:785–91.

- 5. Norman GR, Monteiro SD, Sherbino J, Ilgen JS, Schmidt HG, Mamede S. The causes of errors in clinical reasoning: cognitive biases, knowledge deficits, and dual process thinking. Acad Med 2017;92:23–30.

- 6. Croskerry P. A universal model of diagnostic reasoning. Acad Med 2009;84:1022–8.

- 7. Wiswell J, Tsao K, Bellolio MF, Hess EP, Cabrera D. “Sick” or “not‐sick”: accuracy of system 1 diagnostic reasoning for the prediction of disposition and acuity in patients presenting to an academic ED. Am J Emerg Med 2013;31:1448–52.

- 8. Sibbald M, Sherbino J, Preyra I, Coffin‐Simpson T, Norman G, Monteiro S. Eyeballing: the use of visual appearance to diagnose ‘sick’. Med Educ 2017;51:1138–45.

- 9. Croskerry P. Clinical cognition and diagnostic error: applications of a dual process model of reasoning. Adv Health Sci Educ Theory Pract 2009;14 Suppl 1:27–35.

- 10. Edwards S, Cooper N. Mind mapping as a teaching resource. Clin Teach 2010;7:236–9.

- 11. Stobart M. #onshiftteaching with our MS3 rotator and @JeffEMRes today on my least fave ccx: “dizzy.” Twitter. 2019. Available at: https://twitter.com/Megsahokie/status/1130485252596666368. Accessed June 8, 2019.

- 12. Swaminathan A. Post It Pearls 10.0. Core EM. 2017. Available at: https://coreem.net/blog/post-it-pearls/post-it-pearls-10-0/. Accessed August 17, 2018.

- 13. Chang KE, Sung YT, Chen ID. The effect of concept mapping to enhance text comprehension and summarization. J Exp Educ 2002;71:5–23.

- 14. Jaspers MW, Steen T, van den Bos C, Geenen M. The think aloud method: a guide to user interface design. Int J Med Inform 2004;73:781–95.

- 15. Cristancho S, Bidinosti S, Lingard L, Novick R, Ott M, Forbes T. Seeing in different ways: introducing “rich pictures” in the study of expert judgment. Qual Health Res 2015;25:713–25.

- 16. Adodo SO. Effect of mind‐mapping as a self‐regulated learning strategy on students’ achievement in basic science and technology. Mediterr J Soc Sci 2013;4:163–72.

- 17. Noonan M. Mind maps: enhancing midwifery education. Nurse Educ Today 2013;33:847–52.

- 18. O'Donnell AM, Dansereau DF, Hall RH. Knowledge maps as scaffolds for cognitive processing. Educ Psychol Rev 2002;14:71–86.

- 19. Stalmeijer RE, Dolmans DH, Wolfhagen IH, Scherpbier AJ. Cognitive apprenticeship in clinical practice: can it stimulate learning in the opinion of students? Adv Health Sci Educ Theory Pract 2009;14:535–46.
