Abstract

Diagnosis and treatment in ophthalmology depend on modern retinal imaging by optical coherence tomography (OCT). The recent staggering results of machine learning in medical imaging have inspired the development of automated segmentation methods to identify and quantify pathological features in OCT scans. These models need to be sensitive to image features defining patterns of interest, while remaining robust to differences in imaging protocols. A dominant factor for such image differences is the type of OCT acquisition device. In this paper, we analyze the ability of recently developed unsupervised unpaired image translations based on cycle consistency losses (cycleGANs) to deal with image variability across different OCT devices (Spectralis and Cirrus). This evaluation was performed on two clinically relevant segmentation tasks in retinal OCT imaging: fluid and photoreceptor layer segmentation. Additionally, a visual Turing test designed to assess the quality of the learned translation models was carried out by a group of 18 participants with different background expertise. Results show that the learned translation models improve the generalization ability of segmentation models to other OCT-vendors/domains not seen during training. Moreover, relationships between model hyper-parameters and the realism as well as the morphological consistency of the generated images could be identified.

1. Introduction

Optical coherence tomography (OCT) is a non-invasive technique that provides 3D volumes of the retina at a micrometric resolution [1]. Each OCT volume comprises multiple cross-sectional 2D images, or B-scans, each of them composed of 1D columns, or A-scans. OCT imaging enables clinicians to perform detailed ophthalmic examinations for disease diagnosis, assessment and treatment planning. Standard treatment and diagnosis protocols nowadays rely heavily on B-scan images to inform clinical decisions [2].

Several imaging tasks such as segmentation of anatomical structures or classification of pathological cases are successfully addressed by automated image analysis methods. These approaches are commonly based on machine learning (ML) models, which are trained on manually annotated datasets in a supervised setting. Among the existing ML tools, deep learning (DL) techniques based on convolutional neural networks have been remarkably successful in different domains [3], including automated OCT image analysis [4].

However, these models are usually prone to errors when deployed in real clinical scenarios. This is partially due to differences between the data distributions of the training sets and the real-world datasets. This phenomenon is known as covariate shift, as formally defined in [5]. An important example of this phenomenon is observed in practice when the training and deployment sets come from different acquisition devices. In the daily ophthalmological routine, several OCT devices from different vendors with varying acquisition protocols are used. As a consequence, regardless of the scanned area, the resulting OCT volumes present different image characteristics and patterns (Fig. 2). This covariate shift has been observed to cause remarkable drops in the performance of different DL models for retinal fluid and layer segmentation [6–8].

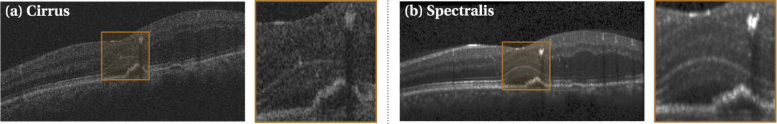

Fig. 2.

Cirrus (a) and Spectralis (b) B-scans with a corresponding close-up of the retinal layers. Both B-scans were acquired from the same patient at approximately the same time and retinal location, to illustrate their difference in appearance.

Related Work Image translation has been applied in cross-modality medical image analysis. Early approaches, which require paired training data across the datasets, are frequently based on patch-based learning [9] and convolutional neural networks [10]. More recently, strategies based on generative adversarial networks (GAN) [11,12] allow more flexible model training than the former, since the training examples across multiple modalities do not need to be paired.

Another application for image translation in medical imaging is reducing the image differences within a medical image modality. Lately, the covariate shift phenomenon due to different acquisition devices has received a lot of attention across several imaging modalities in medical image analysis. For instance, [13] proposed a Siamese neural network that generates device-independent features and improves the generalization ability of brain tissue segmentation models in magnetic resonance imaging (MRI). The whole process requires a limited amount of fully annotated MRI images describing the tissue class in the target device. A cycle-consistency based loss was used in [14] to learn a stain-translating model for histology images across two datasets from different centers. The authors report that the stain-translation model improved the segmentation model’s performance in renal tissue slides. Cheng et al. [15] proposed a domain adaptation method for chest X-ray imaging based on unsupervised cycle-consistency adversarial and semantic-aware losses, improving the performance of lung segmentation models on different public datasets. In general, several of these recently proposed techniques are based on cycle-consistency adversarial losses [12,14–20], which were first introduced in the cycleGAN unpaired unsupervised learning algorithm [21]. Unpaired unsupervised learning techniques for alleviating the performance drop of retinal and choroidal layer segmentation models across OCT acquisition devices have also been proposed in a non-peer-reviewed preprint [22].

Currently, the most straightforward approach to deal with image variability across OCT devices is to train vendor-specific models for each task. An example of this approach was shown in De Fauw et al. [8], where a two-stage DL approach was used to diagnose retinal disease. The first stage generates segmentation maps associated with retinal morphology and other OCT imaging related concepts. Then, the second stage generates referral predictions based on the segmented feature maps and is consequently device independent. However, time-consuming manual annotations needed to be collected for each vendor to adequately (re-)train the retinal segmentation models of the first stage, and hence assure device independence of the second stage. In contrast, using unpaired unsupervised algorithms to adjust the image properties of the B-scans would not require additional manual annotations. This remarkable advantage would likely have an important impact when OCT low-cost devices [23] become available, as they could benefit from the already pretrained state-of-the-art retinal segmentation DL models.

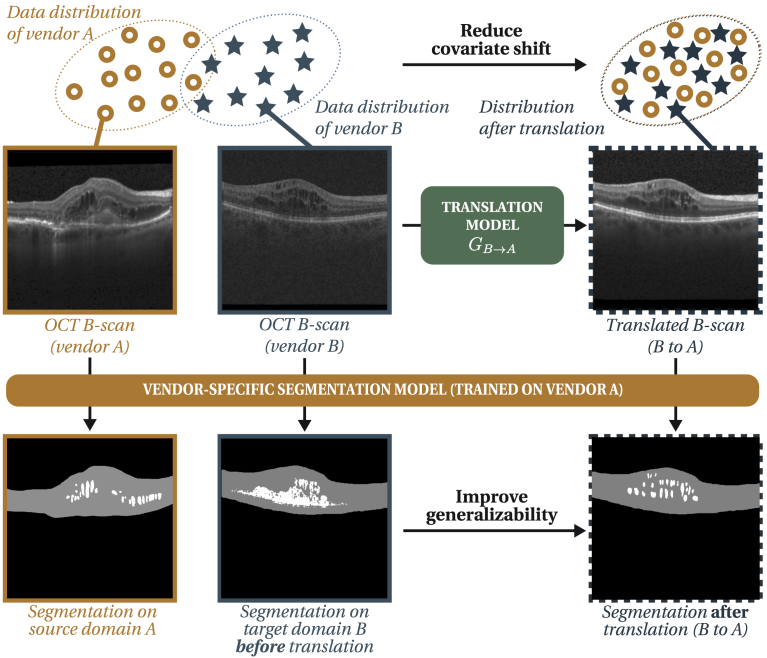

Contribution In this work, we evaluated the ability of the translations obtained by the cycleGAN algorithm [21] to reduce the covariate shift in DL segmentation models across different OCT acquisition devices. This paper is an extension of our previous work, where an unpaired unsupervised algorithm was evaluated on retinal fluid segmentation performance [24]. In that work, we observed that covariate shift in OCT imaging may be successfully alleviated for the fluid segmentation task by means of cycleGANs. Such an approach makes it possible to translate images acquired using one OCT device so that they resemble images produced by a different device (Fig. 1). As a result, the new image can then be processed using a segmentation model trained on images from that other device without a significant drop in performance. In this paper, we extended the evaluation by analyzing the applicability of cycleGANs also in the context of an anatomical layer segmentation task. Furthermore, a visual Turing test was performed by 18 participants with different background expertise to comprehensively study the appearance of the resulting synthetic images. To the best of our knowledge, this is the first work that comprehensively explores cycleGAN algorithms in OCT images to reduce image variability across OCT acquisition devices.

Fig. 1.

Schematic overview demonstrating how unpaired translation models reduce performance drop due to covariate shift in models trained with different OCT acquisition vendors.

The main contributions of this paper can be summarised by the following three points:

- We empirically demonstrated that unsupervised unpaired algorithms are able to reduce the covariate shift between different OCT acquisition devices. Segmentation performance in particular cases of retinal layer and fluid segmentation showed significant quantitative improvements. These tasks are clinically relevant, and possibly the most active research topics in automated OCT image segmentation.

- We performed an extensive analysis of the effect of the unsupervised unpaired algorithm training patch size in the cross-vendor segmentation tasks. Particularly, we found that optimal patch size might depend on each task, with larger patch sizes more suitable for tasks in which larger structures need to be segmented.

- We performed a visual Turing test with a large set of participants (n=18) with different background expertise. The results show that the image patch size at training stage is an important parameter, controlling the trade-off between “realism” and “morphological fidelity” of the images generated by the translation model. The test demonstrated that translation models trained with larger patch sizes induce a more realistic appearance but were prone to generate artifacts distorting morphological features in the retina. On the other hand, models trained with smaller patch sizes rarely present such distortions, but are easily identified as “fake” images.

This paper is organized as follows. First, Section 2 describes the methods used in this work: the deep learning segmentation model, the cycleGAN and the baseline translation algorithms. In Section 3 we describe the datasets used, the training details of the models and the experimental evaluation setup. In Section 4, qualitative and quantitative results of the segmentation tasks and the visual Turing test are given. Finally, a discussion of these results and the conclusions of the work are presented in Section 5 and Section 6.

2. Methods

This section presents a summary of the methodologies used in our study. In particular, Section 2.1 describes the unsupervised unpaired translation algorithm based on cycleGANs. Subsequently, Section 2.2 presents a brief description of alternative filtering-based translations that are used as baselines to compare with our proposed approach. Finally, Section 2.3 formalizes the segmentation tasks and describes the neural network architectures used for fluid and photoreceptor layer segmentation.

2.1. Unsupervised unpaired translation using cycleGANs

We propose to apply unsupervised unpaired generative adversarial networks to automatically translate OCT images from one vendor to another. Formally, let $A$ and $B$ be two different image domains, where any image $a \in A$ has different visual characteristics compared to any other image $b \in B$. Cycle generative adversarial networks (cycleGANs) make it possible to learn a suitable translation function between the image domains $A$ and $B$ without requiring paired samples, and thereby to tackle an ill-posed translation problem (i.e. unpaired translation) [21].

A cycleGAN uses two generator/discriminator pairs ($G_{A \to B}$/$D_B$, $G_{B \to A}$/$D_A$), which are implemented as deep neural networks. $G_{A \to B}$ is supplied with an image from the source domain $A$ and translates it into the target domain $B$. Analogously, $G_{B \to A}$ translates the image back from the target domain $B$ to the source domain $A$. $D_A$ ($D_B$) is trained to distinguish between real samples from the source (target) domain and the translated images, its output being associated with the likelihood that a certain image is sampled from domain $A$ ($B$). The objective for the mapping functions can be expressed as:

$$\mathcal{L}(G_{A \to B}, G_{B \to A}, D_A, D_B) = \mathcal{L}_{GAN}(G_{A \to B}, D_B) + \mathcal{L}_{GAN}(G_{B \to A}, D_A) + \lambda_{cyc} \mathcal{L}_{cyc} + \lambda_{id} \mathcal{L}_{id} \tag{1}$$

where the last two terms are the cycle consistency loss $\mathcal{L}_{cyc}$ and the identity mapping loss $\mathcal{L}_{id}$, as defined in [21], with weights $\lambda_{cyc}$ and $\lambda_{id}$ that control their relevance in the overall loss. Both $\mathcal{L}_{cyc}$ and $\mathcal{L}_{id}$ serve as useful regularization terms improving the obtained translation functions $G_{A \to B}$ and $G_{B \to A}$. Minimizing $\mathcal{L}_{cyc}$ constrains the mentioned translations to be reversible, so successive application of $G_{A \to B}$ and $G_{B \to A}$ ($G_{B \to A}$ and $G_{A \to B}$) to an image from domain $A$ ($B$) generates an image that matches the original source image. In contrast, minimizing $\mathcal{L}_{id}$ regularizes the resulting translation $G_{A \to B}$ ($G_{B \to A}$) to generate a target image that is close to the source image, if the source image already has a target $B$ ($A$) domain appearance. Intuitively speaking, this means that the translation $G_{A \to B}$ ($G_{B \to A}$) is constrained so that changes of source images that are not needed are avoided.

The first two terms correspond to the least square generative adversarial loss terms [25]:

$$\mathcal{L}_{GAN}(G_{A \to B}, D_B) = \mathbb{E}_{b}\left[(D_B(b) - 1)^2\right] + \mathbb{E}_{a}\left[D_B(G_{A \to B}(a))^2\right] \tag{2}$$

where $a$ and $b$ denote images sampled from domains $A$ and $B$, and $\mathcal{L}_{GAN}(G_{B \to A}, D_A)$ is defined analogously. Both the generator and discriminator were implemented as deep neural networks following the ResNet based architecture presented in [21].
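To make the structure of the objective concrete, the following NumPy sketch evaluates the four terms (two least-squares adversarial terms, cycle consistency and identity mapping) for one batch. It is an illustration under stated assumptions, not the training code used in this work: the function names, the use of an L1 distance for the cycle and identity terms (as in [21]), and the weight values `lam_cyc` and `lam_id` are placeholders, and generators and discriminators are passed in as plain callables.

```python
import numpy as np

def lsgan_term(d_real, d_fake):
    """Least-squares adversarial term: real outputs are pushed to 1, fake outputs to 0."""
    return np.mean((d_real - 1.0) ** 2) + np.mean(d_fake ** 2)

def l1(x, y):
    """Mean absolute difference between two image batches."""
    return np.mean(np.abs(x - y))

def cyclegan_objective(a, b, g_ab, g_ba, d_a, d_b, lam_cyc=10.0, lam_id=5.0):
    """Sum of the four loss terms for batches a (first domain) and b (second domain).

    g_ab, g_ba: callables mapping an image batch to the other domain.
    d_a, d_b:   callables returning discriminator outputs for a batch.
    lam_cyc, lam_id: hypothetical weights, not the values used in the paper.
    """
    fake_b, fake_a = g_ab(a), g_ba(b)
    # Adversarial terms, one per generator/discriminator pair.
    adv = lsgan_term(d_b(b), d_b(fake_b)) + lsgan_term(d_a(a), d_a(fake_a))
    # Cycle consistency: translating forth and back should recover the input.
    cyc = l1(g_ba(fake_b), a) + l1(g_ab(fake_a), b)
    # Identity mapping: an image already in the target domain should stay unchanged.
    idt = l1(g_ab(b), b) + l1(g_ba(a), a)
    return adv + lam_cyc * cyc + lam_id * idt
```

In an actual training loop the generators and discriminators are updated in alternation with opposing goals on the adversarial terms; the sketch only shows how the terms combine into a single value.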

2.2. Baseline translation algorithms

Domain translation across images of different acquisition devices is not a common operation in OCT imaging. As such, no baselines are currently available for comparison purposes. However, some preprocessing pipelines including denoising and histogram matching algorithms have been used to standardize the appearance of OCT B-scans [26]. In this work, we followed the methodology applied in [27] to define two suitable translations to approximate the appearance of Spectralis OCTs from Cirrus B-scans. The first translation strategy () consists of an initial B-scan level median filtering operation (with kernel size) followed by a second median filtering operation across neighboring B-scans, with a kernel size. The second translation () consists of an initial histogram matching step using a random Spectralis OCT volume as a template, followed by the same filtering operations as described for . These strategies were applied as baseline translations when converting images from the Cirrus to the Spectralis domain. Translations from Spectralis to Cirrus are not commonly employed in the literature, since Cirrus B-scans have a lower signal-to-noise ratio (SNR).
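The two baseline translations can be sketched with NumPy alone. This is a minimal illustration, assuming a volume stored as an array of shape (B-scans, height, width); the kernel sizes `k_in` and `k_across` are hypothetical placeholders (odd integers), and the histogram matching is a generic CDF-based implementation rather than the exact routine used in [27].

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def median_in_plane(volume, k):
    """Median filter each B-scan with a k x k window (k odd, edge padding)."""
    p = k // 2
    padded = np.pad(volume, ((0, 0), (p, p), (p, p)), mode="edge")
    windows = sliding_window_view(padded, (k, k), axis=(1, 2))
    return np.median(windows, axis=(-2, -1))

def median_across_bscans(volume, k):
    """Median filter along the B-scan axis with a window of k neighbors (k odd)."""
    p = k // 2
    padded = np.pad(volume, ((p, p), (0, 0), (0, 0)), mode="edge")
    windows = sliding_window_view(padded, k, axis=0)
    return np.median(windows, axis=-1)

def match_histogram(source, template):
    """Map source intensities so that their CDF follows the template CDF."""
    src_vals, src_idx, src_counts = np.unique(
        source.ravel(), return_inverse=True, return_counts=True)
    tpl_vals, tpl_counts = np.unique(template.ravel(), return_counts=True)
    src_cdf = np.cumsum(src_counts) / source.size
    tpl_cdf = np.cumsum(tpl_counts) / template.size
    mapped = np.interp(src_cdf, tpl_cdf, tpl_vals)
    return mapped[src_idx.ravel()].reshape(source.shape)

def filter_translation(volume, k_in=3, k_across=3):
    """First baseline: in-plane median filter, then median across B-scans."""
    return median_across_bscans(median_in_plane(volume, k_in), k_across)

def histogram_filter_translation(volume, template, k_in=3, k_across=3):
    """Second baseline: histogram matching followed by the same filtering."""
    return filter_translation(match_histogram(volume, template), k_in, k_across)
```

Edge padding is one of several possible boundary treatments and is chosen here only to keep the output the same shape as the input.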

2.3. Segmentation models

A segmentation model with parameters aims to output a label for each pixel in an input image (i.e. a B-scan), with being the maximum number of classes and and the height and width of the images in pixels. In general, is modeled using convolutional neural networks. In that case, the parameters are learned in a supervised way from a training set with pairs of training images and their corresponding manual annotations . This is done by minimizing a pixel-wise loss function that penalizes the differences between the prediction and its associated ground truth labeling .
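As an illustration of such a pixel-wise loss, the sketch below computes the mean negative log-likelihood of the annotated class at every pixel, together with the predicted label map. It assumes the network output is an array of unnormalized class scores of shape (classes, height, width); the function names are hypothetical.

```python
import numpy as np

def pixelwise_nll(scores, labels):
    """Mean negative log-likelihood over all pixels.

    scores: float array (K, H, W) of unnormalized class scores.
    labels: int array (H, W) with values in [0, K).
    """
    # Numerically stable log-softmax over the class axis.
    shifted = scores - scores.max(axis=0, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=0, keepdims=True))
    h, w = labels.shape
    rows = np.arange(h)[:, None]
    cols = np.arange(w)[None, :]
    # Pick the log-probability of the annotated class at each pixel.
    picked = log_probs[labels, rows, cols]
    return -picked.mean()

def predict_labels(scores):
    """Assign each pixel the class with the highest score."""
    return scores.argmax(axis=0)
```

Minimizing this quantity over the training pairs is what drives the parameters toward predictions that agree with the manual annotations.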

In the fluid and photoreceptor segmentation tasks analyzed in this paper, is a fully convolutional neural network with an encoder-decoder architecture, inspired by the U-Net [28] architecture. The encoder path consists of convolution blocks followed by max-pooling layers, which contracts the input and uses the context for segmentation. The decoder counterpart, on the other hand, performs up-sampling operations followed by convolution blocks, enabling precise localization in combination with skip-connections. For fluid segmentation, a standard U-Net architecture was applied. For photoreceptor segmentation, we took advantage of the recently proposed U2-Net [29], which is to the best of our knowledge the only existing deep learning approach for this task.

3. Experimental setup

We evaluated the ability of cycleGANs to translate OCT images from one vendor to another in two ways. First, we estimated the generalization ability of models for fluid (i.e. pathology) and photoreceptor layer (i.e. regular anatomy) segmentation on a new unseen domain when translating the input images to resemble those used for training. Secondly, we performed a visual Turing test in which different expert groups analyzed the images produced by the cycleGAN. We additionally asked the experts to identify morphological changes introduced by the cycleGAN-based translations.

The datasets (Section 3.1), deep learning training setups (Section 3.2) and the evaluation of the translation models (Section 3.3) are described next.

3.1. Materials

All the OCT volumes used in our experiments were acquired either with Cirrus HD-OCT 400/4000 (Carl Zeiss Meditec, Dublin, CA, USA) or Spectralis (Heidelberg Engineering, GER) devices. Spectralis (Cirrus) devices utilize a scanning superluminescence diode to emit a light beam with a center wavelength of (), an optical axial resolution of () in tissue, and an optical transversal resolution of () in tissue. All the scans were centered at the fovea and covered approximately the same physical volume of . Cirrus images had a voxel dimension of or , and Spectralis volumes had a voxel dimension of . All Cirrus volumes were resampled using nearest-neighbor interpolation to match the resolution of Spectralis volumes (). As observed in Fig. 2 , the B-scans produced by each device differ substantially from each other. In addition to the differences mentioned earlier with respect to the scanning light source, axial and transversal OCT resolution, the Spectralis devices apply a B-scan averaging procedure (by default frames per B-scan). This procedure leads to an improved signal-to-noise ratio (SNR) compared to Cirrus scans. Image data was anonymized and ethics approval was obtained for research use from the ethics committee at the Medical University of Vienna (Vienna, Austria).
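The nearest-neighbor resampling step can be sketched as follows. This is an assumed, generic implementation: each output voxel copies the input voxel whose center is closest along every axis, and the target shape passed in is a placeholder rather than the voxel grid used in the study.

```python
import numpy as np

def resample_nearest(volume, new_shape):
    """Resample an N-D volume to new_shape with nearest-neighbor interpolation."""
    index_per_axis = []
    for n_old, n_new in zip(volume.shape, new_shape):
        # Center of each output voxel expressed in input voxel coordinates.
        centers = (np.arange(n_new) + 0.5) * n_old / n_new
        index_per_axis.append(np.minimum(centers.astype(int), n_old - 1))
    # np.ix_ builds an open mesh so that all axes are indexed jointly.
    return volume[np.ix_(*index_per_axis)]
```

Because values are copied rather than averaged, this kind of resampling introduces no new intensity values, which keeps the noise statistics of the original scan intact.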

Four different datasets were used in our study: (1) to train the unpaired translation model, (2) to train and evaluate the fluid segmentation method, (3) to train and evaluate the photoreceptor layer segmentation method and (4) to run the visual Turing test. All the datasets and their corresponding partitions into training, validation and test sets are described below.

Unpaired Training Dataset This dataset comprises a total of OCT volumes ( B-scans after resampling Cirrus OCTs). () OCT volumes were acquired with the Spectralis (Cirrus) device. The Spectralis (Cirrus) OCT volumes correspond to pathological retinas of patients suffering from different diseases, with () samples with age-related macular degeneration (AMD), () with retinal vein occlusion (RVO) and () with diabetic macular edema (DME). For each vendor, the data was randomly split into training () and validation sets (), with no patient overlap between these two sets.

Fluid Segmentation Dataset A total amount of OCT volumes ( Spectralis, Cirrus) were selected for the fluid segmentation experiment, comprising a total number of B-scans. The Spectralis (Cirrus) set contained pathological retinas with AMD and with RVO. Experienced graders of the Vienna Reading Center performed manual pixel-wise annotations of intra-retinal cystoid fluid (IRC) and sub-retinal fluid (SRF) on those OCT volumes. The annotations were supervised by retinal expert ophthalmologists following a standardized annotation protocol. The datasets were randomly divided on patient distinct basis into (), (), and () B-scans used for training, validation and testing, respectively, on the Spectralis (Cirrus) set.

Photoreceptor Layer Segmentation Dataset Photoreceptor segmentation experiments were performed using two data sets of Cirrus and Spectralis scans, comprising and volumes, respectively. Each Cirrus (Spectralis) volume is composed of 128 (49) B-scans with a resolution of pixels. All the volumes were acquired from diseased patients. In particular, the Spectralis subset comprised images with DME, with RVO and with intermediate AMD. The distribution of diseases in the Cirrus subset was approximately uniform, with DME, RVO and early AMD cases. Each volume was manually delineated by trained readers and supervised by a retina expert that corrected the segmentations when needed. The datasets were randomly divided on patient-basis into (), () and () B-scans used for training, validation and test, respectively, on the Spectralis (Cirrus) set.

Visual Turing Test Dataset For the visual Turing test, we randomly selected a subset of B-scans from the fluid segmentation test set. In particular, B-scans were sampled from randomly selected OCT volumes following a Gaussian distribution, having a mean on the central B-scan (#) and a standard deviation of B-scans. A total of Spectralis and Cirrus B-scans were selected.
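A Gaussian sampling of B-scan positions around the central slice could look like the sketch below. The number of B-scans, the standard deviation and the seed are hypothetical arguments, and rounding plus clipping to the valid index range is an assumption about how out-of-range draws are handled.

```python
import numpy as np

def sample_bscan_indices(n_bscans, n_samples, std, seed=0):
    """Draw B-scan indices from a Gaussian centered on the central B-scan."""
    rng = np.random.default_rng(seed)
    center = n_bscans // 2
    draws = rng.normal(loc=center, scale=std, size=n_samples)
    # Round to the nearest slice and keep indices inside the volume.
    return np.clip(np.rint(draws), 0, n_bscans - 1).astype(int)
```

Sampling this way favors B-scans close to the fovea, where most of the clinically relevant structures are located, while still including some peripheral slices.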

3.2. Training setup

The network architectures, training setups and configurations of each deep learning model are summarized below for each stage of our evaluation pipeline.

Unsupervised Image Translation Model Four different cycleGAN models were trained on the unpaired training dataset using square patches of sizes 64×64, 128×128, 256×256 and 460×460, resulting in four different models (CycleGAN64, CycleGAN128, CycleGAN256, CycleGAN460). Each training phase consisted of epochs using a mini batch size of . At each epoch, the deep learning model processes a patch randomly extracted from each B-scan in the training set. Each pair of generator/discriminator was saved after each epoch to subsequently select the best performing model. To address the fact that adversarial losses are unstable during training, we applied the following model selection strategy based on the validation set. First, all generators were used to translate the corresponding (Cirrus or Spectralis) central B-scans of the validation set. Then, the average adversarial loss term in Eq. 2 was computed for all associated discriminators using the same image sampling order for the target and source image sets. The maximum adversarial loss over the discriminators was then used as the final selection score for each generator. Finally, the generator with the minimum score was selected for a specific patch size configuration. This procedure allowed us to select pairs of generators that were not necessarily paired at the training stage.
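The selection rule described above reduces to a min-max over a table of losses. The sketch assumes that the average adversarial loss of every saved generator against every saved discriminator has already been computed on the validation B-scans and stored in a matrix; the matrix layout and the function name are illustrative choices.

```python
import numpy as np

def select_generator(adv_loss):
    """Pick the generator checkpoint with the smallest worst-case adversarial loss.

    adv_loss[i, j]: average adversarial loss obtained with generator
    checkpoint i and discriminator checkpoint j on the validation set.
    Returns the selected generator index and the per-generator scores.
    """
    scores = adv_loss.max(axis=1)       # worst case over all discriminators
    return int(scores.argmin()), scores  # generator with the lowest score
```

For example, with three generator checkpoints and two discriminator checkpoints, the matrix `[[0.2, 0.9], [0.4, 0.5], [0.6, 0.7]]` yields scores `[0.9, 0.5, 0.7]`, so the second checkpoint is selected even though the first one has the lowest single loss.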

The generator is an encoder/decoder ResNet based architecture with the following structure. The first layer was a Convolution-InstanceNorm-ReLU block with filters and a stride of , followed by two Convolution-InstanceNorm-ReLU blocks with and filters, respectively. Afterwards, a sequence of residual blocks were stacked, each of them comprising two convolutional layers with filters. Subsequently, two Transposed-Convolution-InstanceNorm-ReLU blocks with and filters (stride ) were followed by a final Convolution-InstanceNorm-ReLU block with filters. On the other hand, the discriminator consisted of four Convolution-InstanceNorm-LeakyReLU blocks with , , and filters.

Fluid Segmentation Model The fluid segmentation model is an encoder/decoder network inspired by the U-Net architecture. We used five levels of depth, with the number of output channels going from in the first to in the bottleneck layer, in powers of 2. Each convolutional block consisted of two convolutions, each followed by a batch-normalization layer and a rectified linear unit (ReLU). While max-pooling was used for downsampling, upsampling was performed using nearest-neighbor interpolation.

We used the negative log-likelihood loss in all our segmentation experiments, Kaiming initialization [30], Adam optimization [31], and a learning rate of , which was decreased by half every epochs. We trained our networks for epochs and selected the model with the best average -score on the validation set.
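The step-wise learning rate decay can be written in one line. The base learning rate and the number of epochs between halvings are arguments here because their values are not reproduced in this text; the numbers in the usage example are purely illustrative.

```python
def learning_rate(epoch, base_lr, step_epochs):
    """Learning rate at a given epoch when it is halved every step_epochs epochs."""
    return base_lr * 0.5 ** (epoch // step_epochs)

# Illustrative values only: halve a rate of 1e-3 every 10 epochs.
schedule = [learning_rate(e, base_lr=1e-3, step_epochs=10) for e in range(30)]
```

With these illustrative values the rate stays constant for the first ten epochs, is halved for the next ten, and is halved again for the last ten.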

Photoreceptor Layer Segmentation Model Photoreceptor segmentation was performed by means of the U2-Net approach described in [29]. Such an architecture allows to retrieve probabilistic segmentations of the region of interest and uncertainty maps highlighting potential areas of pathological morphology and/or errors in the prediction. The core model is inspired by the U-Net, while incorporating dropout with a rate of after several convolutional layers. By using dropout in test time, Monte Carlo samples were obtained, retrieving the final segmentation as the pixel-wise average of the resulting samples.
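Monte Carlo sampling with dropout at test time can be sketched independently of the network. The example below assumes a stochastic forward function that applies a fresh dropout mask on every call; `toy_forward` is a stand-in for the real model, and using the pixel-wise standard deviation as the uncertainty map is an assumption made for illustration.

```python
import numpy as np

def toy_forward(image, rng, drop_rate=0.2):
    """Stand-in for a network with dropout: random mask, then a sigmoid."""
    keep = rng.random(image.shape) >= drop_rate
    activation = image * keep / (1.0 - drop_rate)
    return 1.0 / (1.0 + np.exp(-activation))

def mc_dropout_predict(stochastic_forward, image, n_samples, seed=0):
    """Average several stochastic forward passes of the same image.

    Returns the pixel-wise mean (final probabilistic segmentation) and the
    pixel-wise standard deviation (used here as an uncertainty map).
    """
    rng = np.random.default_rng(seed)
    samples = np.stack([stochastic_forward(image, rng) for _ in range(n_samples)])
    return samples.mean(axis=0), samples.std(axis=0)
```

Pixels where the individual samples disagree receive a high standard deviation, which is the kind of region the uncertainty map is meant to highlight.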

3.3. Evaluation of the translation model

We evaluated the quality of the translated images obtained by the cycleGAN translation models in two different scenarios. On one hand, we measured the ability of the cycleGAN algorithm to reduce the covariate shift between images from different OCT vendors in automated retinal segmentation tasks. On the other hand, we carried out a visual Turing test to evaluate both the “realism” of the generated images and to identify potential morphological artifacts introduced in the translated version of the scans.

Evaluation via Segmentation Tasks The performance of the segmentation models was assessed on several versions of the B-scans in the corresponding test set. These B-scan versions were obtained by applying one of the following processes: (1) no translation, or translation with one of four cycleGAN models trained with different image patch sizes: (2) CycleGAN64, (3) CycleGAN128, (4) CycleGAN256 and (5) CycleGAN460. Additionally, the (6) and (7) translations were only applied as baseline for the Cirrus-to-Spectralis translation (Section 2.2). We evaluated the performance of the segmentation models for the different image versions by computing the Dice score, precision and recall on the test set at voxel level. One-sided Wilcoxon signed-rank tests were performed to test for statistically significant differences.
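For reference, the three voxel-level metrics can be computed from the counts of true positives, false positives and false negatives. The sketch below works on binary masks of any shape; the values returned for empty masks are a convention chosen here, not one stated in the text.

```python
import numpy as np

def segmentation_scores(pred, target):
    """Return (precision, recall, dice) for two binary masks of equal shape."""
    pred = np.asarray(pred).astype(bool)
    target = np.asarray(target).astype(bool)
    tp = int(np.sum(pred & target))    # voxels correctly labeled as foreground
    fp = int(np.sum(pred & ~target))   # predicted foreground, annotated background
    fn = int(np.sum(~pred & target))   # annotated foreground that was missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # Convention: two empty masks count as perfect agreement.
    dice = 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 1.0
    return precision, recall, dice
```

Per-volume Dice values obtained with two translation strategies on the same test volumes form paired samples, which is the setting in which a signed-rank test such as `scipy.stats.wilcoxon` (with its `alternative` argument set for a one-sided comparison) applies.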

Evaluation via Visual Turing Test The perceptual evaluation was carried out by a group of 18 participants with different professional backgrounds ( computer scientists, OCT readers and ophthalmologists), all of them experienced in working with OCT images. The graphical user interface was implemented using the jsPsych library [32]. In the first task, the participants were asked to identify the “fake” translated image in a displayed pair of original/translated B-scans. For this part of the visual Turing test, one-sided Wilcoxon signed-rank tests were performed to check for statistically significant differences.

In the second task, the participants were asked to identify morphological changes in the retina introduced by the cycleGAN-based translations. The evaluation resulted in a morphology preservation score (MPS) for each original/translated image pair, ranging from (morphological changes detected) to (no morphological changes detected). In particular, the participants were asked to rate their level of agreement (from ’strong disagreement’ to ’strong agreement’) with the statement “There are no morphological differences between the original/translated image”, meaning that MPS . If the MPS was or , the participants were further asked to select the portions of the image in which they found differences. This second task was carried out only by the ophthalmologist group.

Each participant observed translated/original B-scans pairs ( original Spectralis and original Cirrus B-scans), randomly sampled from the Visual Turing Test Dataset (Section 3.1). For each sample, randomly either the or model was used to generate the translated version of the image. For the second task, one-sided Mann-Whitney-U-Tests were performed to determine statistical significance.

4. Results

While general qualitative results for the Cirrus-to-Spectralis translation are illustrated in Fig. 3, qualitative segmentation results are shown in Figs. 4–5 for both translation directions. Retinal fluid segmentation results are presented in Section 4.1 (Figs. 6–7) and results for retinal photoreceptor layer segmentation are provided in Section 4.2 (Fig. 8). Results of the Visual Turing test are covered in Section 4.3 (Figs. 9–10).

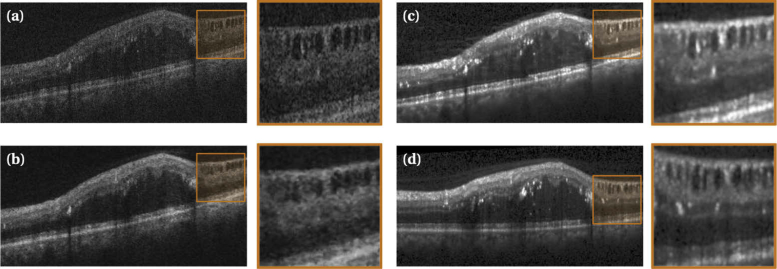

Fig. 3.

Qualitative results of the image translation algorithms. An original Cirrus OCT B-scan (a) was translated to the Spectralis domain using (b), and (c). The corresponding original Spectralis B-scan (d) acquired from the same patient at approximately the same time and retinal location is also shown for reference. The image generated in (c) has intensity values and an image noise level similar to those observed in the original Spectralis image (d).

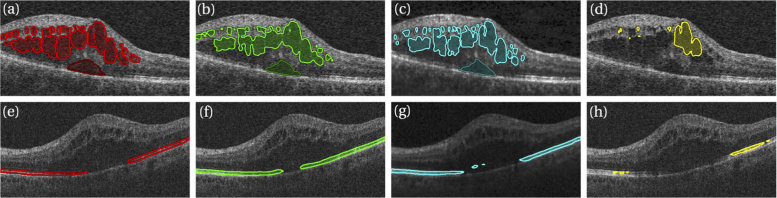

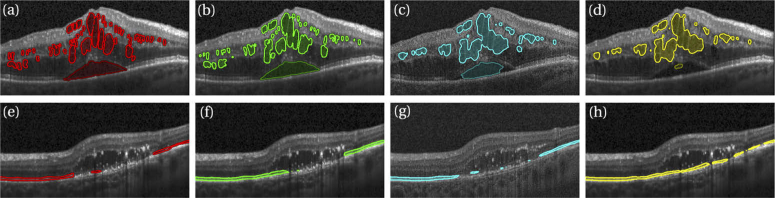

Fig. 4.

Qualitative results in the Cirrus test set, shown for fluid (top row: bright=IRC, dark=SRF) and photoreceptor layer segmentation tasks (bottom row). Manual annotations are shown in red (a,e). Segmentations of the upper-bound model (Cirrus-on-Cirrus) are highlighted in green (b,f). Results of the best cycleGAN-models are illustrated in cyan (c: CycleGAN256, g: CycleGAN128). Predictions of the Spectralis model on the original Cirrus-scans (no translation) are denoted in yellow (d,h).

Fig. 5.

Qualitative results in the Spectralis test set, shown for fluid (top row: bright=IRC, dark=SRF) and photoreceptor layer segmentation tasks (bottom row). Manual annotations are shown in red (a,e). Segmentations of the upper-bound model (Spectralis-on-Spectralis) are highlighted in green (b,f). Results of the best cycleGAN-models are illustrated in cyan (c: CycleGAN256, g: CycleGAN64). Predictions of the Cirrus model on the original Spectralis-scans (no translation) are denoted in yellow (d,h).

Fig. 6.

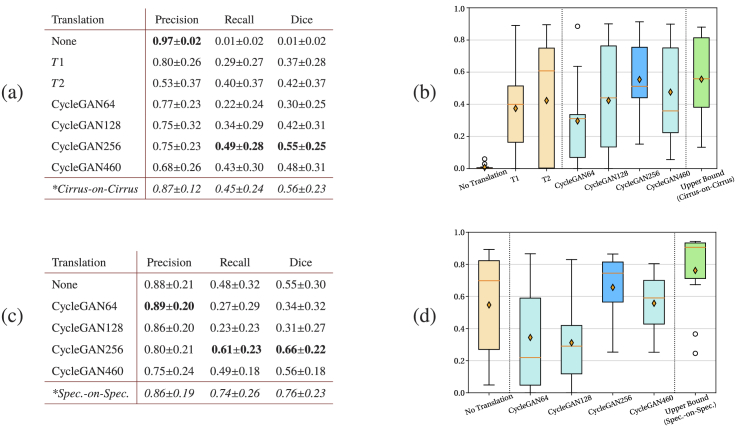

Quantitative results of intra-retinal cyst (IRC) segmentation, obtained on (a-b) Cirrus and (c-d) Spectralis scans. For different translation strategies Precision, Recall and Dice are shown together with the corresponding box-plots of Dice values. Native upper-bound models are indicated by * in (a-b). The CycleGAN model with highest mean Dice is highlighted in dark blue (b,d).

Fig. 7.

Quantitative results of sub-retinal fluid (SRF) segmentation, obtained on (a-b) Cirrus and (c-d) Spectralis scans. For different translation strategies Precision, Recall and Dice are shown together with the corresponding box-plots of Dice values. Native upper-bound models are indicated by * in (a-b). The CycleGAN model with highest mean Dice is highlighted in dark blue (b,d).

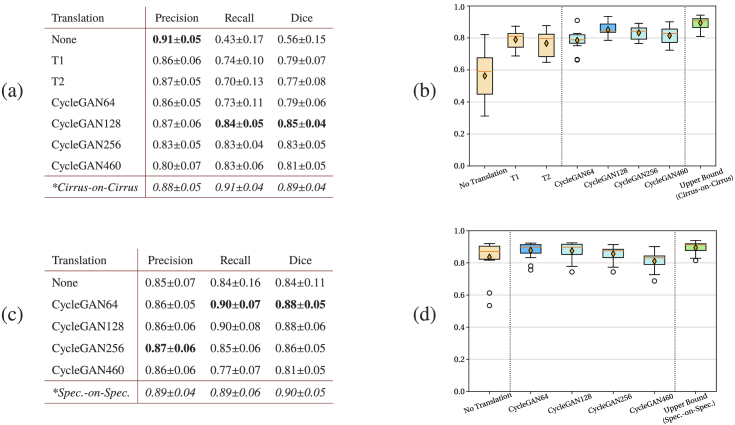

Fig. 8.

Quantitative results of layer segmentation, obtained on (a-b) Cirrus and (c-d) Spectralis scans. For different translation strategies Precision, Recall and Dice are shown together with the corresponding box-plots of Dice values. Native upper-bound models are indicated by * in (a-b). The CycleGAN model with highest mean Dice is highlighted in dark blue (b,d).

Fig. 9.

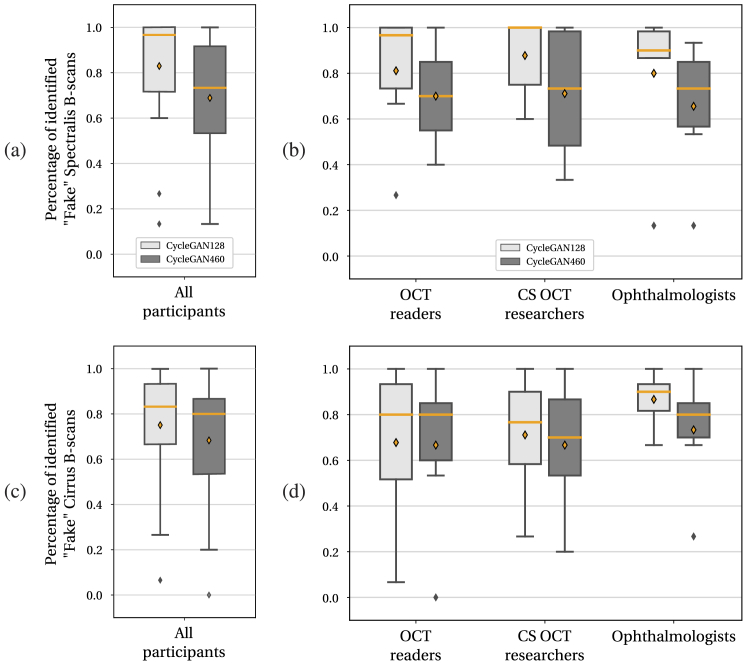

Box plots illustrating the amount of correctly identified “fake” B-scans, when showing the original/translated pairs to the participants (OCT readers (), computer scientists (), ophthalmologists ()). The amount of identified “fake” Spectralis B-scans for the Cirrus-To-Spectralis translation is shown in the top row for (a) all participants and (b) stratified by expertise background. The amount of identified “fake” Cirrus B-scans for the Spectralis-To-Cirrus translation is shown in the bottom row for (c) all participants and (d) stratified by expertise background.

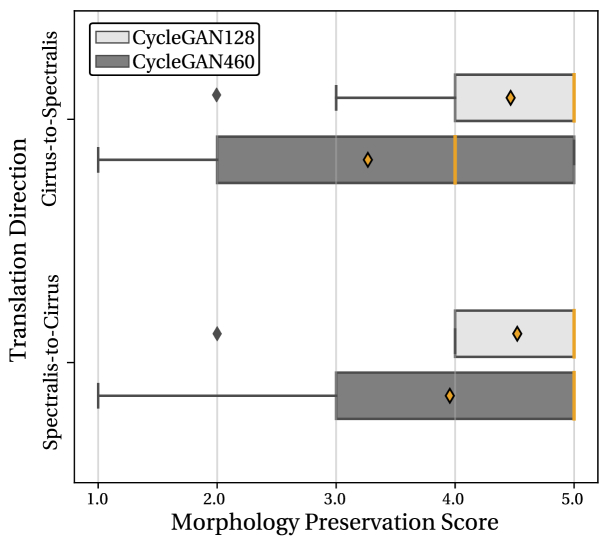

Fig. 10.

Boxplot of the morphology preservation scores (MPS) assigned by the ophthalmologist group to the original/translated B-scan pair. Top: Cirrus-to-Spectralis translation (). Bottom: Spectralis-to-Cirrus translation ().

4.1. Fluid segmentation results

Initial evaluation of the Spectralis segmentation model on the Spectralis test set showed a Precision, Recall and Dice for IRC (SRF) of (), () and (). The evaluation of the Cirrus segmentation model on the Cirrus test set yielded a Precision, Recall and Dice for IRC (SRF) of (), (), (). Notably, the performance of the native Cirrus models was lower than that of their Spectralis counterparts, with the larger difference for the SRF class.

For the IRC class, the cross-vendor evaluation of both segmentation models (Spectralis and Cirrus) on the non-translated datasets showed a clear performance drop (Fig. 6), especially on the Cirrus test set (Fig. 6(a-b)). There, all applied translation strategies significantly improved the cross-vendor segmentation model performance with respect to the “no translation” scenario (). The approach with the highest Dice was the cycleGAN model trained with image patches of 256×256 (CycleGAN256). This model showed a significantly better performance both compared to the and baseline approaches (). Finally, the tests did not yield a significant difference between the best translation model (CycleGAN460) and the “no translation” scenario in the Spectralis test set (Fig. 6(c-d)).

We also observed a performance drop in the cross-vendor evaluation of the SRF class when no translation was applied; this drop was more prominent in the Cirrus test set (Fig. 7(a-b)). There, all the translation models showed an improvement with respect to the scenario without translation (). The best performing model was trained with image patches of (CycleGAN256), showing a significantly better performance than the and baseline translation algorithms (). Notably, the model performed on a par with the upper-bound Cirrus-on-Cirrus model (). In the Spectralis test set (Fig. 7(c-d)), the CycleGAN256 model achieved a higher mean Dice and a lower variance compared to the “no translation” scenario. However, a significant difference between the distributions was not found ().

4.2. Photoreceptor layer segmentation results

Results for the photoreceptor layer segmentation task are summarized in Fig. 8. The upper-bound models obtained a Precision, Recall and Dice in the Cirrus (Spectralis) test set of (), () and (). A drop in Dice was observed for both segmentation models when they were evaluated cross-vendor without translation. The decrease was larger in the Cirrus test set ( to ). Moreover, the cross-vendor evaluation showed that all applied translation strategies significantly outperformed the “no translation” scenario in the Cirrus test set (, Fig. 8(a-b)). The best result in terms of Dice was obtained by , showing a significantly better performance than and (). In the Spectralis test set (Fig. 8(c-d)), the based translation achieved the highest Dice (), significantly outperforming the “no translation” scenario ().

4.3. Visual Turing test results

The results of the first visual Turing task involving an original/translated image pair (see Section 3.3) are summarized in Fig. 9, showing the percentage of identified “fake” Spectralis B-scans for the Cirrus-to-Spectralis translation in Fig. 9(a-b) and the percentage of identified “fake” Cirrus B-scans for the Spectralis-to-Cirrus translation in Fig. 9(c-d). Note that a value equal to would correspond to a scenario in which the participant randomly selects one of the two B-scans as fake, meaning that the transformed images could not be distinguished from the original scans by the participants.

When evaluating the median of the amount of identified “fake” images in the ( / ) models across all participants, a difference was found in both the median identification rate of the Cirrus-to-Spectralis ( / ) and the Spectralis-to-Cirrus ( / ) translations. This means that the images that were generated by the model were harder to identify than the images generated with the model. A paired one-sided Wilcoxon signed-rank significance test found that the difference in both directions was significant ( in the Cirrus-to-Spectralis and in the Spectralis-to-Cirrus direction). This is also reflected in Fig. 9(b), where for each expert group the generated “fake” Spectralis B-scans of the model were harder to identify than generated B-scans of the model. However, this effect was less pronounced in the other translation direction (Spectralis-to-Cirrus, Fig. 9(d)). Finally, we can observe that the ophthalmologists showed a lower variance compared to the other expert groups.
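The paired one-sided comparison described above can be illustrated with a small, self-contained sketch of an exact Wilcoxon signed-rank test. The function name, the labels `model_a` and `model_b`, and the toy identification rates are hypothetical and do not correspond to the study data; in practice an established statistics library would normally be used instead of this hand-written version.

```python
def wilcoxon_signed_rank_greater(x, y):
    """Exact one-sided Wilcoxon signed-rank test for paired samples.

    Tests H1: values in `x` tend to be larger than their partners in `y`.
    Returns (W+, p), where W+ is the sum of ranks of positive differences.
    """
    # Zero differences carry no sign information and are dropped.
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0

    # Rank |differences|, giving tied values their average rank.
    # Ranks are stored doubled so that half-integer ranks stay integers.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    doubled_ranks = [0] * n
    start = 0
    while start < n:
        end = start
        while end + 1 < n and abs(diffs[order[end + 1]]) == abs(diffs[order[start]]):
            end += 1
        for k in range(start, end + 1):
            doubled_ranks[order[k]] = start + end + 2  # 2 * average 1-based rank
        start = end + 1

    w_plus_doubled = sum(r for r, d in zip(doubled_ranks, diffs) if d > 0)

    # Exact null distribution: under H0 each rank is assigned a positive sign
    # with probability 1/2, so count sign patterns per rank sum.
    total = sum(doubled_ranks)
    counts = [0] * (total + 1)
    counts[0] = 1
    for r in doubled_ranks:
        for s in range(total, r - 1, -1):
            counts[s] += counts[s - r]

    p_value = sum(counts[w_plus_doubled:]) / 2 ** n
    return w_plus_doubled / 2, p_value


# Hypothetical per-participant identification rates (in percent) for two models.
model_a = [60, 70, 80, 75, 65, 90]
model_b = [50, 55, 60, 70, 66, 70]
w_plus, p_value = wilcoxon_signed_rank_greater(model_a, model_b)
```

A small p-value in this sketch would suggest that the "fake" images of `model_b` were identified less often than those of `model_a` by the same participants, which is the type of claim made in the paragraph above.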

Quantitative results of the second visual Turing task (Fig. 10) show the distribution of the MPS for both the and model as well as for both translation directions. For the Cirrus-to-Spectralis translation, the median MPS was significantly higher for (median MPS=) than for (median MPS=), with . The same trend can be observed for the Spectralis-to-Cirrus translation (), although the median MPS was the same for both cycleGAN models (median MPS=). In summary, these results clearly indicate that the model introduced fewer morphological changes during the translation compared with the model, for both translation directions. Exemplary qualitative results for introduced morphological changes are shown in Fig. 11.

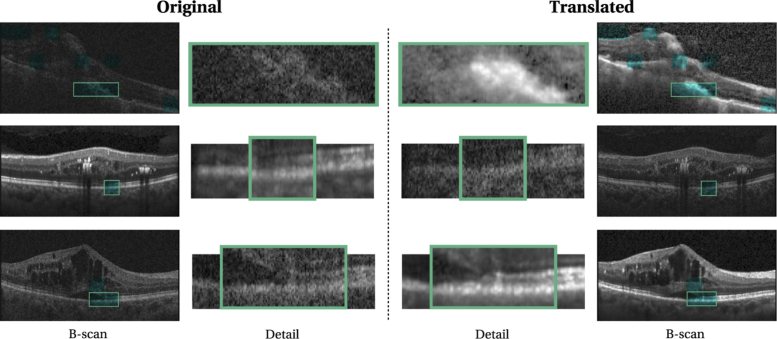

Fig. 11.

Left: Original B-scan and a zoomed-in region of interest ( original size). Right: Translated B-scan with the same zoomed-in region of interest. Regions that were identified as morphological differences by the experts are highlighted in cyan. First row: Spectralis-to-Cirrus translation. Second and third row: Cirrus-to-Spectralis translation.

5. Discussion

The main hypothesis of our work was that unsupervised cycleGAN-based translation algorithms would significantly reduce the covariate shift phenomenon in automated retinal segmentation models across different OCT acquisition devices. Our results show that these translation approaches indeed allowed deep learning models to improve their generalization ability on cross-vendor OCT images unseen at the training stage. In all the evaluated segmentation tasks, and without any translation, the effect of the covariate shift phenomenon was larger when using Spectralis models on the Cirrus test set than when using Cirrus models on the Spectralis test set (Figs. 6(a-b), 7(a-b) and 8(a-b)). A possible explanation may be that the Spectralis segmentation model could not deal with the lower SNR in Cirrus scans, e.g. due to the noise in the fluid regions appearing much brighter than in Spectralis images. Cirrus models seemed to be more resilient against the covariate shift phenomenon when applied on Spectralis scans, showing a smaller but still significant performance drop compared to the upper bound (Figs. 6(c-d), 7(c-d) and 8(c-d)).
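As a reminder of the term used above, covariate shift is commonly formalized (see, e.g., [5]) as a change in the input distribution between a source domain and a target domain, while the relation between inputs and labels is assumed to stay the same. The symbols below (S for the training vendor, T for the test vendor, x for a B-scan and y for its segmentation) are notation introduced here for illustration only:

```latex
p_{S}(x) \neq p_{T}(x), \qquad p_{S}(y \mid x) = p_{T}(y \mid x)
```

Under this view, an image translation model can be read as an attempt to map samples from the target distribution closer to the source distribution before the segmentation model is applied.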

In most of the segmentation tasks and translation directions (Cirrus-to-Spectralis or Spectralis-to-Cirrus), we found that the cycleGAN-based translation models trained with image patch size of improved the performance of the segmentation models, significantly or slightly depending on the translation direction and segmentation task, with respect to a scenario without any translation. However, each task and translation direction had a different optimal training image patch size. In the fluid segmentation task, in which the objective is to identify hypo-reflective black regions, the best performance was obtained with larger image patch sizes (, ). In contrast, for the photoreceptor layer segmentation task, in which the aim is to detect a thin layered region, the best performance was obtained with smaller image patch sizes (, ). One factor explaining these results may be the size of the structures that are segmented. Larger structures seem to require larger training image patches for the cycleGAN to capture the needed contextual information. Thus, the segmentation models may focus more on the global appearance (context) when segmenting larger structures, meaning that in this case it may be more important to reduce the covariate shift effect on a “global appearance level” rather than on a local one. Additionally, using larger patches in the training stage allowed the translation models to generate more realistic images at test time. However, those models were also more likely to introduce image artifacts in the translated B-scans. This was empirically demonstrated in the visual Turing test, as discussed in the next paragraphs.
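To make the role of the training patch size concrete, the sketch below crops a random square patch from a B-scan. This is only an illustration under stated assumptions: the function `random_patch`, the nested-list image representation and the uniform sampling of the patch location are hypothetical and are not taken from the authors' training pipeline.

```python
import random


def random_patch(bscan, patch_size, rng=random):
    """Crop a random square patch of side `patch_size` from a 2D B-scan.

    `bscan` is a list of rows (each a list of intensity values); `rng` is any
    object exposing `randint`, e.g. the `random` module or `random.Random`.
    """
    height, width = len(bscan), len(bscan[0])
    if patch_size > height or patch_size > width:
        raise ValueError("patch_size exceeds the B-scan dimensions")

    # Sample the top-left corner uniformly so the patch lies inside the image.
    top = rng.randint(0, height - patch_size)
    left = rng.randint(0, width - patch_size)
    return [row[left:left + patch_size] for row in bscan[top:top + patch_size]]


# Toy 8 x 10 "B-scan" whose pixel value encodes its (row, column) position.
toy_bscan = [[row * 10 + col for col in range(10)] for row in range(8)]
small_patch = random_patch(toy_bscan, 2, random.Random(0))   # little context
large_patch = random_patch(toy_bscan, 6, random.Random(0))   # more context
```

A larger `patch_size` exposes the translation model to more of the surrounding retinal context in each training sample, which is the property discussed above in relation to both realism and the risk of artifacts.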

The first part of the visual Turing test was conducted to evaluate the realism of the generated B-scans. This part of the visual Turing test was inspired by [33], but our set-up constituted a more stringent assessment, since it not only required the participants to judge the “realism” of the generated images but also enabled a direct comparison with the original image. The main finding of this test was that using larger image patch sizes during training of the cycleGAN-based models resulted in more “realistic” translated B-scans (Section 3.3). The results of the visual Turing test showed that it was harder to identify the generated “fake” images in both the Spectralis-to-Cirrus and Cirrus-to-Spectralis directions (Figs. 9(a,c)). This may be related to the above-mentioned hypothesis that larger patches during training allow the models to learn more complex/realistic translations and reduce the covariate shift effect also on a “global appearance level”. When observing the percentage of identified “fake” B-scans stratified by expertise background (Figs. 9(b,d)), we found that the ophthalmologist group seemed to perform consistently in identifying “fake” images generated by the . This result might indicate that their knowledge about retinal structures and B-scan appearance in general helped them to robustly identify “fake” images. However, this advantage was no longer evident for the model. Some of the reported cues the ophthalmologists and OCT readers used for identifying fake B-scans were the quality of choroid tissue, the vitreous border or the smoothness of retinal layer borders. Conversely, participants of the CS OCT researchers group reported relying more on visual cues based on differences in pre-processing operations used by the OCT acquisition devices (i.e., quality of the B-scan filtering, B-scan tilt correction).

The results of the second part of the visual Turing test illustrate that translation models trained with smaller image patch sizes generate fewer retinal morphological differences in the translated images. An explanation of this phenomenon might be that training translation models with a smaller patch size results in more conservative (simpler) translations that are less likely to introduce artifacts (but are also less realistic). In particular, the MPS distribution for the models (in both directions) had a significantly larger number of decisions rating the image pairs as having strong morphological differences () than that observed for the model. This indicates that models trained with larger patch sizes are prone to induce artifacts in the generated images. Additionally, the number of perceived retinal anatomical differences also depended on the direction of the translation. When translating from Cirrus to Spectralis, a slightly larger number of morphological differences was identified than in the B-scans translated from Spectralis to Cirrus.

We conjecture that translating from a low-SNR to a high-SNR B-scan required the translation models to “invent” information that was not present in the source image, meaning that the lower the signal-to-noise ratio, the more likely it is that image artifacts are introduced. Moreover, we empirically observed that the quality of the input images was also a factor for the quality of the translated images. For instance, an extremely low contrast B-scan from any of the OCT vendors would likely generate a low quality B-scan after the translation. An example of such a case is presented in the first row of Fig. 11. We also found qualitatively that a substantial number of the regions highlighted by the ophthalmologists as artifacts were related to the border of the layers delimiting the retina. For instance, changes in the appearance of the bottom layers were observed in the two lower rows of Fig. 11. In the second row, the generated Cirrus B-scan attenuates/removes one of the layers observed in the original Spectralis B-scan. In the third row, the appearance of the retinal pigment epithelial (RPE) layer seemed to be altered in the translated Spectralis B-scan compared to the Cirrus original counterpart.

6. Conclusion

In this work, we presented an unsupervised unpaired learning strategy using cycleGAN to reduce the image variability across OCT acquisition devices. The method was extensively evaluated in multiple retinal OCT image segmentation tasks (IRC, SRF, photoreceptor layer) and visual Turing tests. The results show that the translation algorithms improve the performance of the segmentation models on target datasets coming from a different vendor than the training set, thus effectively reducing the covariate shift (the difference between the target and source domain). This demonstrates the potential of the presented approach to overcome the limitation of existing methods, whose applicability is usually limited to samples that match the training data distribution. In other words, the proposed translation strategy improves the generalizability of segmentation models in OCT imaging. Since automated segmentation methods are expected to be part of routine diagnostic workflows [8] and could affect the therapy of millions of patients, this finding is of particular relevance. Specifically, the presented approach could help to reduce the device-specific dependency of DL algorithms and therefore facilitate their deployment on a larger set of OCT devices.

Furthermore, the results indicate that the training image patch size was an important factor for the performance of the cycleGAN-based translation model. Larger training image patch sizes usually resulted in models whose generated B-scans were more realistic, i.e., more difficult to identify as “fake” by human observers. However, such images were more likely to contain morphological differences in comparison with the source images. These results indicate that special care should be taken to reduce the likelihood of introducing morphological artifacts. Besides matching the pathological distributions across the domains in the cycleGAN training stage [34], a good practice may be to reduce the complexity of the learned translation by using smaller patch sizes, as there seems to be a trade-off between the “realism” and the quality of the translation models. In this context, future work should focus on evaluating different architectures and/or loss functions to address the problem of cross-vendor translation while preserving retinal anatomical features in the OCT image.

Acknowledgments

We thank the NVIDIA corporation for GPU donation.

Funding

Christian Doppler Research Association (10.13039/501100006012); Austrian Federal Ministry for Digital and Economic Affairs; National Foundation for Research, Technology and Development.

Disclosures

DRB, PS, JIO and HB declare no conflicts of interest. SMW: Bayer (C,F), Novartis (C) and Genentech (F). BSG: Roche (C), Novartis (C,F), Kinarus (F) and IDx (F). US-E: Böhringer Ingelheim (C), Genentech (C), Novartis (C) and Roche (C).

References

- 1. Huang D., Swanson E. A., Lin C. P., Schuman J. S., Stinson W. G., Chang W., Hee M. R., Flotte T., Gregory K., Puliafito C. A., Fujimoto J. G., “Optical coherence tomography,” Science 254(5035), 1178–1181 (1991). 10.1126/science.1957169

- 2. Fujimoto J., Swanson E., “The development, commercialization, and impact of optical coherence tomography,” Invest. Ophthalmol. Visual Sci. 57(9), OCT1–OCT13 (2016). 10.1167/iovs.16-19963

- 3. Litjens G., Kooi T., Bejnordi B. E., Setio A. A. A., Ciompi F., Ghafoorian M., Van Der Laak J. A., Van Ginneken B., Sánchez C. I., “A survey on deep learning in medical image analysis,” Med. Image Anal. 42, 60–88 (2017). 10.1016/j.media.2017.07.005

- 4. Schmidt-Erfurth U., Sadeghipour A., Gerendas B. S., Waldstein S. M., Bogunović H., “Artificial intelligence in retina,” Prog. Retin. Eye Res. (2018).

- 5. Storkey A. J., “When training and test sets are different: characterising learning transfer,” in Dataset Shift in Machine Learning (The MIT Press, 2009), pp. 3–28.

- 6. Schlegl T., Waldstein S. M., Bogunovic H., Endstraßer F., Sadeghipour A., Philip A.-M., Podkowinski D., Gerendas B. S., Langs G., Schmidt-Erfurth U., “Fully automated detection and quantification of macular fluid in OCT using deep learning,” Ophthalmology 125(4), 549–558 (2018). 10.1016/j.ophtha.2017.10.031

- 7. Terry L., Cassels N., Lu K., Acton J. H., Margrain T. H., North R. V., Fergusson J., White N., Wood A., “Automated retinal layer segmentation using spectral domain optical coherence tomography: evaluation of inter-session repeatability and agreement between devices,” PLoS One 11(9), e0162001 (2016). 10.1371/journal.pone.0162001

- 8. De Fauw J., Ledsam J. R., Romera-Paredes B., Nikolov S., Tomasev N., Blackwell S., Askham H., Glorot X., O’Donoghue B., Visentin D., Van den Driessche G., Lakshminarayanan B., Meyer C., Mackinder F., Bouton S., Ayoub K., Chopra R., King D., Karthikesalingam A., Hughes C. O., Raine R., Hughes J., Sim D. A., Egan C., Tufail A., Montgomery H., Hassabis D., Rees G., Back T., Khaw P. T., Suleyman M., Cornebise J., Keane P. A., Ronneberger O., “Clinically applicable deep learning for diagnosis and referral in retinal disease,” Nat. Med. 24(9), 1342–1350 (2018). 10.1038/s41591-018-0107-6

- 9. Torrado-Carvajal A., Herraiz J. L., Alcain E., Montemayor A. S., Garcia-Cañamaque L., Hernandez-Tamames J. A., Rozenholc Y., Malpica N., “Fast patch-based pseudo-CT synthesis from T1-weighted MR images for PET/MR attenuation correction in brain studies,” J. Nucl. Med. 57(1), 136–143 (2016). 10.2967/jnumed.115.156299

- 10. Zhao C., Carass A., Lee J., He Y., Prince J. L., “Whole brain segmentation and labeling from CT using synthetic MR images,” in International Workshop on Machine Learning in Medical Imaging, (Springer, 2017), pp. 291–298.

- 11. Kamnitsas K., Baumgartner C., Ledig C., Newcombe V., Simpson J., Kane A., Menon D., Nori A., Criminisi A., Rueckert D., Glocker B., “Unsupervised domain adaptation in brain lesion segmentation with adversarial networks,” in Proc. of IPMI, (Springer, 2017), pp. 597–609.

- 12. Hiasa Y., Otake Y., Takao M., Matsuoka T., Takashima K., Carass A., Prince J. L., Sugano N., Sato Y., “Cross-modality image synthesis from unpaired data using CycleGAN,” in International Workshop on Simulation and Synthesis in Medical Imaging, (Springer, 2018), pp. 31–41.

- 13. Kouw W. M., Loog M., Bartels W., Mendrik A. M., “Learning an MR acquisition-invariant representation using Siamese neural networks,” arXiv preprint arXiv:1810.07430 (2018).

- 14. de Bel T., Hermsen M., Kers J., van der Laak J., Litjens G., “Stain-transforming cycle-consistent generative adversarial networks for improved segmentation of renal histopathology,” in Proc. of MIDL, (2019), pp. 151–163.

- 15. Chen C., Dou Q., Chen H., Heng P.-A., “Semantic-aware generative adversarial nets for unsupervised domain adaptation in chest X-ray segmentation,” in International Workshop on Machine Learning in Medical Imaging, (Springer, 2018), pp. 143–151.

- 16. Huo Y., Xu Z., Moon H., Bao S., Assad A., Moyo T. K., Savona M. R., Abramson R. G., Landman B. A., “SynSeg-Net: Synthetic segmentation without target modality ground truth,” IEEE Trans. Med. Imaging 38(4), 1016–1025 (2019). 10.1109/TMI.2018.2876633

- 17. Jiang J., Hu Y.-C., Tyagi N., Zhang P., Rimner A., Mageras G. S., Deasy J. O., Veeraraghavan H., “Tumor-aware, adversarial domain adaptation from CT to MRI for lung cancer segmentation,” in Proc. of MICCAI, (Springer, 2018), pp. 777–785.

- 18. Wolterink J. M., Dinkla A. M., Savenije M. H., Seevinck P. R., van den Berg C. A., Išgum I., “Deep MR to CT synthesis using unpaired data,” in International Workshop on Simulation and Synthesis in Medical Imaging, (Springer, 2017), pp. 14–23.

- 19. Zhang Y., Miao S., Mansi T., Liao R., “Task driven generative modeling for unsupervised domain adaptation: Application to X-ray image segmentation,” in Proc. of MICCAI, (Springer, 2018), pp. 599–607.

- 20. Zhang Z., Yang L., Zheng Y., “Translating and segmenting multimodal medical volumes with cycle-and shape-consistency generative adversarial network,” in Proc. of IEEE CVPR, (2018), pp. 9242–9251.

- 21. Zhu J.-Y., Park T., Isola P., Efros A. A., “Unpaired image-to-image translation using cycle-consistent adversarial networks,” in Proc. of IEEE ICCV, (2017), pp. 2242–2251.

- 22. Wang J., Bian C., Li M., Yang X., Ma K., Ma W., Yuan J., Ding X., Zheng Y., “Uncertainty-guided domain alignment for layer segmentation in OCT images,” arXiv preprint arXiv:1908.08242 (2019).

- 23. Song G., Chu K. K., Kim S., Crose M., Cox B., Jelly E. T., Ulrich J. N., Wax A., “First Clinical Application of Low-Cost OCT,” Trans. Vis. Sci. Tech. 8(3), 61 (2019). 10.1167/tvst.8.3.61

- 24. Seeböck P., Romo-Bucheli D., Waldstein S., Bogunovic H., Orlando J. I., Gerendas B. S., Langs G., Schmidt-Erfurth U., “Using CycleGANs for effectively reducing image variability across OCT devices and improving retinal fluid segmentation,” in Proc. of IEEE ISBI, (2019), pp. 605–609.

- 25. Mao X., Li Q., Xie H., Lau R. Y., Wang Z., Paul Smolley S., “Least squares generative adversarial networks,” in Proc. of IEEE ICCV, (2017).

- 26. Xu X., Lee K., Zhang L., Sonka M., Abramoff M. D., “Stratified sampling voxel classification for segmentation of intraretinal and subretinal fluid in longitudinal clinical OCT data,” IEEE Trans. Med. Imaging 34(7), 1616–1623 (2015). 10.1109/TMI.2015.2408632

- 27. Tennakoon R., Gostar A. K., Hoseinnezhad R., Bab-Hadiashar A., “Retinal fluid segmentation in OCT images using adversarial loss based convolutional neural networks,” in Proc. of IEEE ISBI, (IEEE, 2018), pp. 1436–1440.

- 28. Ronneberger O., Fischer P., Brox T., “U-Net: Convolutional networks for biomedical image segmentation,” in Proc. of MICCAI, (Springer, 2015), pp. 234–241.

- 29. Orlando J. I., Seeböck P., Bogunović H., Klimscha S., Grechenig C., Waldstein S., Gerendas B. S., Schmidt-Erfurth U., “U2-Net: A Bayesian U-Net model with epistemic uncertainty feedback for photoreceptor layer segmentation in pathological OCT scans,” arXiv preprint arXiv:1901.07929 (2019).

- 30. He K., Zhang X., Ren S., Sun J., “Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification,” in Proc. of IEEE ICCV, (2015), pp. 1026–1034.

- 31. Kingma D. P., Ba J., “Adam: A method for stochastic optimization,” arXiv preprint arXiv:1412.6980 (2014).

- 32. De Leeuw J. R., “jsPsych: A JavaScript library for creating behavioral experiments in a web browser,” Behav. Res. 47(1), 1–12 (2015). 10.3758/s13428-014-0458-y

- 33. Chuquicusma M. J., Hussein S., Burt J., Bagci U., “How to fool radiologists with generative adversarial networks? A visual Turing test for lung cancer diagnosis,” in Proc. of IEEE ISBI, (IEEE, 2018), pp. 240–244.

- 34. Cohen J. P., Luck M., Honari S., “Distribution matching losses can hallucinate features in medical image translation,” in Proc. of MICCAI, (2018), pp. 529–536.