Highlights

- Passive viewing fMRI task using dynamic emotional bodies and non-human objects.
- Adults showed increased activation in the body-selective areas compared with children.
- Adults also showed more activation than adolescents, but only in the right hemisphere.
- Crucially, we found no age differences in the emotion modulation of these areas.

Keywords: Body perception, Emotion, fMRI, Children, Emotion modulation, Functional development

Abstract

Emotions are strongly conveyed by the human body, and the ability to recognize emotions from body posture or movement is still developing through childhood and adolescence. To date, very few studies have explored how these behavioural observations are paralleled by functional brain development. Furthermore, no studies have yet explored the development of emotion modulation in body-selective brain areas. In this study, we used functional magnetic resonance imaging (fMRI) to compare the brain activity of 25 children (age 6–11), 18 adolescents (age 12–17) and 26 adults while they passively viewed short videos of angry, happy or neutral body movements. We observed that when viewing dynamic bodies generally, adults showed higher activity than children bilaterally in the body-selective areas, namely the extra-striate body area (EBA), fusiform body area (FBA) and posterior superior temporal sulcus (pSTS), as well as in the amygdala (AMY). Adults also showed higher activity than adolescents, but only in the right hemisphere. Crucially, however, we found no age differences in the emotion modulation of activity in these areas. These results indicate, for the first time, that although activity selective to body perception increases across childhood and adolescence, emotion modulation of these areas is adult-like from 7 years of age.

1. Introduction

A vast literature indicates that the ability to correctly perceive emotions from other people does not reach adult levels until mid-adolescence (Chronaki et al., 2015, Herba et al., 2006). Moreover, the brain circuits specifically engaged when viewing a stimulus depicting an emotion also undergo functional and structural changes in the period encompassing late childhood and adolescence (Blakemore and Choudhury, 2006, Blakemore, 2012, Giedd, 2008, Lenroot and Giedd, 2006, Paus, 2005). For instance, diffusion tensor imaging (DTI) studies have shown that fiber pathways connecting medial and lateral temporal cortices are reorganized between childhood and adulthood (Loenneker et al., 2011). From a functional point of view, event-related potential (ERP) studies have shown that the signature of emotion processing when viewing faces does not show adult-like patterns before the age of 14 (Batty and Taylor, 2006). Functional magnetic resonance imaging (fMRI) data in adults also reveal evidence of emotion modulation in the face-selective areas of the visual cortex (fusiform face area, occipital face area, posterior superior temporal sulcus) (Kret et al., 2011, Morris et al., 1998, Rotshtein et al., 2001, van de Riet et al., 2009, Vuilleumier et al., 2001, Winston et al., 2003), but such modulation has not been found in children (Evans et al., 2010, Thomas et al., 2001, Todd et al., 2011) or in adolescents (Pavuluri et al., 2009, Pfeifer et al., 2011). Yet, using angry and happy facial expressions, Hoehl et al. (2010) found that young children (5–6 years old) displayed heightened amygdala activation in response to emotional faces relative to adults (see also Thomas et al. (2001)). Todd et al. (2011) expanded upon this by demonstrating that children (3.5–8.5 years old), but not adults (18+ years old), showed greater amygdala activation to happy than to angry faces, although amygdala activation for angry faces increased with age. Monk et al. (2003) reported that, when attention is unconstrained, adolescents exhibit greater amygdala activity to fearful faces than adults, a finding replicated by Guyer et al. (2008). For happy and angry faces, developmental differences were observed in the orbitofrontal cortex and anterior cingulate cortex. These data point to a developing emotion recognition system in which age does not simply have an additive effect driving increased activation. Rather, the system, including occipitotemporal, limbic and prefrontal regions, shows preferential activation for different emotional expressions, and these preferences change with age.

1.1. Body-selective areas

All the aforementioned research has been conducted in relation to the perception of emotion from faces. However, emotion is also strongly conveyed by other means, in particular body posture and movements (Clarke et al., 2005, de Gelder, 2006, de Gelder, 2009). Previous work has shown that the capacity to recognize basic emotions from body movements also improves throughout childhood and adolescence (Boone and Cunningham, 1998, Lagerlof and Djerf, 2009, Ross et al., 2012). The question of whether related brain processes also change during this time has not yet been addressed.

Several brain areas have been identified as being specialized for the recognition and interpretation of human form and human motion: namely the extra-striate body area (EBA) located bilaterally in the lateral occipitotemporal cortex (LOTC), the fusiform body area (FBA) and areas in the inferior parietal lobe (IPL) and posterior superior temporal sulcus (pSTS) (de Gelder, 2006, Downing et al., 2001, Grosbras et al., 2012, Peelen and Downing, 2005, Weiner and Grill-Spector, 2011, Basil et al., 2016). Research consistently reports modulation by emotion in the fusiform gyrus (de Gelder et al., 2004, de Gelder et al., 2010, Grosbras and Paus, 2006, Kret et al., 2011) and in the LOTC (Grèzes et al., 2007, Grosbras and Paus, 2006). Peelen et al. (2007) demonstrated that the strength of emotion modulation in these areas was related, on a voxel-by-voxel basis, to the degree of body selectivity, but showed no such relationship with the degree of BOLD response when viewing faces. In other words, emotional signals from the body might ‘modulate’ the populations of neurons that code for the viewed stimulus (Sugase et al., 1999), rather than providing an overall boost in activation for all visual processing in the extra-striate visual cortex. Furthermore, the same authors have shown that the modulation of body-selective areas in adults is also positively correlated with amygdala activation (Peelen et al., 2007). To our knowledge, the brain responses of children and adolescents to emotional body stimuli have not yet been tested.

1.2. Development of body-selective areas

To date, only three fMRI studies have explored the structural and functional development of the body-selective regions in children. While two of them reported that body-selective activity within the EBA and FBA was similar (in terms of location, intensity and extent) in children older than 7 and in adults (Peelen et al., 2009, Pelphrey et al., 2009), we found that it was not yet adult-like in pre-pubertal children (age 7–11; Ross et al., 2014). The latter study mirrors findings in the face-selective regions of the ventral stream, which take more than a decade to mature (Grill-Spector et al., 2008, Scherf et al., 2007). Of the other two studies, only Peelen et al. (2009) included adolescent participants, but they did not distinguish them from children in their group analysis, potentially masking any differences between the two age groups. Therefore, the question of how the brain responds to viewing body movements during adolescence remains open. Moreover, it is unknown how this brain activity is modulated by the emotion conveyed by these stimuli. Given that emotional abilities, including emotion perception from social signals, change significantly during adolescence, we might expect a different pattern of brain activity in adolescents compared with children and adults.

1.3. Current study

Here, building upon our previous data collected in pre-pubertal participants (Ross et al., 2014), our goal was to investigate, for the first time, the functional development of the EBA, pSTS, FBA and amygdala in relation to the perception of emotional human body movements across childhood and adolescence. Based on the developmental results reported for facial expression perception (Batty and Taylor, 2006, Peelen et al., 2009, Todd et al., 2011, Tonks et al., 2007), we hypothesised that the activity and emotion modulation of the body-selective areas in the visual cortex would develop along a similar protracted trajectory.

2. Materials and methods

2.1. Participants

Forty-six primary and secondary school pupils were recruited from local schools and after-school clubs in the West End of Glasgow. Three of the younger children were excluded because of excessive head motion in the scanner. Thus, data from 25 children (aged 6–11 years: M = 9.55 years, SD = 1.46, 13 females) and 18 adolescents (aged 12–17: M = 14.82, SD = 1.88, 9 females) were analysed. Children were all at Tanner stage 1–2, that is, pre-pubertal, as assessed using the Pubertal Development Scale (Petersen et al., 1988), a sex-specific, eight-item self-report measure of physical development (e.g. growth in stature, breast development, pubic hair), here completed by parents. Adolescents were all at Tanner stage 3–5, i.e., pubertal or post-pubertal but not yet adults. Grouping participants in this way avoids arbitrary adolescent age ranges and yields groups that are homogeneous in terms of pubertal status. Permission to promote the study was obtained from managers of after-school clubs and/or head teachers. Written consent was then obtained from each child's parent or guardian before testing began (adolescents aged 16 and over were able to provide their own consent). The study was in line with the Declaration of Helsinki and was approved by the local ethics board; all participants understood that participation was voluntary and signed a consent form. A sample of 26 adult volunteers recruited from the University of Glasgow also took part (aged 18–27 years: M = 21.28 years, SD = 2.11, 15 females). This gave us 69 participants in total.

2.2. Stimuli and procedure

Forty-five short video clips were taken from a larger set created and validated by Kret et al. (2011). Each clip depicted one actor, dressed in black against a green background, moving in an angry, happy or neutral manner. Six actors were male and nine female, and each actor was recorded three times for each of the three emotions. The videos were recorded using a digital video camera and edited into two-second clips (50 frames at 25 frames per second). The faces in the videos were masked with Gaussian filters so that only information from the body was perceived (for full details and validation of the stimuli, see Kret et al., 2011 and de Gelder and Van den Stock, 2011). As control stimuli, we selected videos depicting non-human moving objects (e.g. windscreen wipers, windmills, metronomes) from the internet. We edited these clips using Adobe Premiere so that they matched the body stimuli in size, resolution and luminance, and added a green border matching the colour of the human video background. In the fMRI experiment, stimuli were presented in blocks of five clips (10 s).

Furthermore, to control for potential effects of low-level visual parameters on fMRI activity, we computed a measure of low-level local visual motion in each clip, to be entered as a covariate in our fMRI regression analysis. In each clip, we first calculated the frame-to-frame change in luminance in the background as a surrogate measure of noise level. Then, for each pair of consecutive frames, we counted the number of pixels where the change in intensity was higher than the noise level. We averaged these counts, yielding one value per clip that represents the motion in that clip. This measure was significantly correlated with measures of perceived motion in the clips (r = .571, n = 61, p < .001). We then computed the cumulative motion for the five clips in each block. Overall, the blocks of non-human clips had slightly more motion than the blocks of body-movement clips, although this difference was not statistically significant (t(16) = 1.89, p = .076). Among the emotion clips, the only significant difference in movement was between the angry and neutral body expressions (t = 3.78, p < .005). To control for these effects, these measures were added as a covariate in our fMRI analysis.
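The motion measure described above can be sketched in Python with NumPy. This is a minimal sketch under stated assumptions, not the authors' code: we assume each clip is available as a grayscale luminance array of shape (frames, height, width) together with a boolean mask marking background-only pixels, and we take the noise level for each frame pair to be the mean plus `k` standard deviations of the absolute background change. The noise rule, the parameter `k` and the function names are hypothetical.

```python
import numpy as np


def clip_motion(frames, background_mask, k=2.0):
    """Mean number of supra-noise pixels per consecutive frame pair in one clip.

    frames: array of shape (n_frames, height, width) holding luminance values.
    background_mask: boolean array (height, width), True where only background is visible.
    k: hypothetical noise multiplier (an assumption, not taken from the paper).
    """
    frames = np.asarray(frames, dtype=float)
    # Absolute frame-to-frame luminance change: shape (n_frames - 1, height, width)
    diffs = np.abs(np.diff(frames, axis=0))
    counts = []
    for diff in diffs:
        background_change = diff[background_mask]
        # Noise level estimated from the background only (assumed rule: mean + k * SD)
        noise = background_change.mean() + k * background_change.std()
        # Count pixels whose change exceeds the noise level
        counts.append(np.count_nonzero(diff > noise))
    # One value per clip: the average count across frame pairs
    return float(np.mean(counts))


def block_motion(clip_values):
    """Cumulative motion of a block: the sum of its per-clip values."""
    return float(np.sum(clip_values))


# Synthetic clip: a 2 x 2 bright square moving right across a static background
frames = np.zeros((3, 10, 10))
for t in range(3):
    frames[t, 0:2, 2 * t:2 * t + 2] = 1.0
background = np.zeros((10, 10), dtype=bool)
background[5:, :] = True  # the bottom half never contains the moving square
print(clip_motion(frames, background))  # prints 8.0
```

Summing the five per-clip values of a block with `block_motion` gives one regressor value per block, which is the form needed for a covariate in a block-design model.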

Data acquisition: We acquired a series of 246 functional volumes using a 3 T MRI scanner (Tim Trio, Siemens, Erlangen, Germany) equipped with a 32-channel head coil and a standard EPI sequence (TR/TE: 2600 ms/40 ms; slice thickness = 3 mm; in-plane resolution = 3 × 3 mm). In addition, we acquired a high-resolution T1-weighted structural scan (1 mm³ 3D MPRAGE sequence) for anatomical localization.

Main experiment: The experiment was programmed in MATLAB using the Psychophysics Toolbox extensions (Brainard, 1997). An experimental run consisted of 48 10-s blocks: eighteen blocks of non-human stimuli (5 clips each), eighteen blocks of human stimuli (three blocks of each emotion) and twelve blank-screen baseline blocks, presented in a pseudo-randomized order based on an m-sequence to avoid correlation effects between blocks (Buracas and Boynton, 2002). Stimuli were back-projected onto a screen positioned at the back of the scanner bore, which participants viewed via a mirror attached to the head coil. They were instructed to maintain their gaze at the centre of the screen.
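Buracas and Boynton (2002) build block orders from maximum-length shift-register sequences (m-sequences). As a hedged illustration of the property that makes them useful, the sketch below generates only the simplest binary case with a linear feedback shift register and checks that its circular autocorrelation is flat at every non-zero lag, so that no lag is favoured. The primitive polynomial (x⁴ + x + 1), the seed and the function names are illustrative assumptions; this is not the sequence used in this experiment.

```python
def m_sequence(taps, degree, seed=None):
    """Binary m-sequence from the recurrence a[n + degree] = sum(a[n + i] for i in taps) mod 2.

    The taps must describe a primitive polynomial; taps=[0, 1] with degree=4 encodes
    x^4 + x + 1, which yields one full period of length 2**4 - 1 = 15.
    """
    state = list(seed) if seed is not None else [1] + [0] * (degree - 1)
    sequence = []
    for _ in range(2 ** degree - 1):
        sequence.append(state[0])
        new_bit = sum(state[i] for i in taps) % 2
        state = state[1:] + [new_bit]
    return sequence


def circular_autocorrelation(sequence, lag):
    """Circular autocorrelation after mapping 0 -> +1 and 1 -> -1."""
    signs = [1 - 2 * bit for bit in sequence]
    n = len(signs)
    return sum(signs[i] * signs[(i + lag) % n] for i in range(n))


seq = m_sequence(taps=[0, 1], degree=4)
print(seq)
print([circular_autocorrelation(seq, lag) for lag in range(1, len(seq))])
```

In a multi-condition design, the digits of an m-sequence over a larger finite field are mapped onto conditions; that generalization needs finite-field arithmetic and is not shown here.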

Procedure: Participants were positioned comfortably in the scanner. Head motion was restricted by comfortable but tight padding. Parents/guardians were allowed to sit next to their children in the scanning room if they or their child wished (this was the case for three participants). During the set-up and the structural scan, participants watched a cartoon or movie of their choice. They were first familiarized with the scanner environment and noise through a 3-min dummy scan (with the same parameters as the experimental scan), during which they watched a movie. After that, we gave them feedback on their head motion and, if head motion was an issue, repeated the dummy scan while encouraging them to keep still.

Before the main experiment started, participants were reminded to pay careful attention to the stimuli, to look at the central fixation cross and to keep their head still. Following the scan, they completed a short forced-choice emotion recognition task using the same stimuli to gauge their understanding of the emotional content of the stimuli. There was no difference between age groups in emotion recognition accuracy (mean accuracy: 89%, 85% and 89% for children, adolescents and adults, respectively; F(2,67) = .787, p = .46). Some of the participants (16 children, 15 adolescents and 18 adults) also took part in another, independent 8-min functional scan during the same scanning session, before completing the structural scans. This scan was a passive temporal voice area localizer, and its order was counterbalanced with the current experimental scans to avoid any influence or confound on the current study.

2.3. Pre-processing

We used SPM8 (Wellcome Department of Imaging Neuroscience; www.fil.ion.ucl.ac.uk/spm) to process and analyse the MRI data. The functional data were corrected for motion using a two-pass procedure. First, we estimated the rigid-body transformation necessary to register each image to the first image of the time series and applied this transformation with 4th-degree B-spline interpolation. Then we averaged all these transformed images and repeated the procedure to register all images to the mean image. Head motion of up to 2 mm translation or 2 degrees rotation was tolerated; the three participants with larger head motion were excluded from the analysis. The realigned functional data were then co-registered with each participant's 3D T1-weighted scan: we first identified the AC-PC landmarks manually and estimated the affine transformation from the mean functional image to the structural image, and then applied this transformation to the whole realigned time series.
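The exclusion rule can be expressed as a check on the six realignment parameters estimated for each volume. A minimal sketch, assuming the SPM layout of realignment parameter files (`rp_*.txt`: three translations in millimetres followed by three rotations in radians per row) and assuming, as our interpretation, that the 2 mm / 2 degree limits apply to the absolute parameter values relative to the reference image. The function name and the file name in the comment are hypothetical.

```python
import numpy as np


def exceeds_motion_limits(realignment_params, max_translation_mm=2.0, max_rotation_deg=2.0):
    """Return True if any volume exceeds the translation or rotation limit.

    realignment_params: array (n_volumes, 6); columns 0-2 are x/y/z translations in mm,
    columns 3-5 are pitch/roll/yaw rotations in radians (SPM rp_*.txt layout).
    """
    params = np.asarray(realignment_params, dtype=float)
    max_translation = np.abs(params[:, :3]).max()
    max_rotation = np.degrees(np.abs(params[:, 3:6])).max()
    return bool(max_translation > max_translation_mm or max_rotation > max_rotation_deg)


# params = np.loadtxt("rp_subject01.txt")  # hypothetical file name
still = np.zeros((246, 6))
drifting = still.copy()
drifting[100:, 0] = 2.5  # 2.5 mm translation in x from volume 100 onwards
print(exceeds_motion_limits(still), exceeds_motion_limits(drifting))  # prints False True
```

Converting rotations to degrees before comparison keeps both limits in the units used in the text.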

The anatomical scans were then segmented for different tissue types and transformed into MNI-space using non-linear registration. The parameters from this transformation were subsequently applied to the co-registered functional data.

Normalising the data from adults, adolescents and children to the same stereotactic template allowed us to directly compare the strength and extent of activation across age groups. Several studies examining the feasibility of this approach have found no significant differences in the locations of brain foci when the brains of children as young as 6 were transformed to an adult template (Burgund et al., 2002, Kang et al., 2003). These findings, together with careful inspection of the normalized images (looking for abnormalities in cortex/ventricle location, etc.), gave us confidence that differences in brain size are unlikely to confound our results.

Before performing the analyses, we smoothed the data using a Gaussian kernel (8 mm FWHM). High-pass temporal filtering with a cutoff of 128 s was applied to remove slow signal drifts.
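Two numbers in this paragraph translate directly into computations. The 8 mm FWHM corresponds to a Gaussian standard deviation of FWHM / (2√(2 ln 2)) ≈ 3.4 mm, and a 128 s high-pass cutoff can be implemented as regression onto a discrete cosine basis, the approach SPM uses for high-pass filtering. The sketch below is an illustration rather than SPM's own code: it assumes cosine regressors are kept when their period is at least the cutoff, and it uses the 246 volumes and 2.6 s TR reported above for the example.

```python
import math

import numpy as np


def fwhm_to_sigma(fwhm):
    """Convert a Gaussian kernel's full width at half maximum to its standard deviation."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


def dct_drift_basis(n_scans, tr, cutoff=128.0):
    """Cosine regressors with periods of at least `cutoff` seconds (constant term excluded).

    Column k (k = 1, 2, ...) has a period of 2 * n_scans * tr / k seconds.
    """
    n_regressors = int(math.floor(2.0 * n_scans * tr / cutoff))
    n = np.arange(n_scans)[:, None]
    k = np.arange(1, n_regressors + 1)[None, :]
    # Orthonormal DCT-II columns
    return math.sqrt(2.0 / n_scans) * np.cos(math.pi * (2 * n + 1) * k / (2 * n_scans))


def high_pass(timeseries, basis):
    """Remove the slow drifts spanned by `basis` (its columns are orthonormal)."""
    timeseries = np.asarray(timeseries, dtype=float)
    return timeseries - basis @ (basis.T @ timeseries)


basis = dct_drift_basis(n_scans=246, tr=2.6)
print(round(fwhm_to_sigma(8.0), 2), basis.shape)  # prints 3.4 (246, 9)
```

Because the basis columns are orthonormal, projecting them out is a single matrix product rather than a full least-squares fit.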

2.4. Whole brain analysis

A general linear model was created with one predictor of interest for each of the four conditions (Happy, Angry, Neutral, Non-Body). We added our measure of luminance change (video-clip motion) as a covariate, allowing us to control for the greater motion in the angry body movements. The six head-motion parameters were also added as regressors of no interest. The model was estimated for each participant, and we computed the following contrasts of interest between individual parameter estimates: Body > Non-Human, Angry > Neutral and Happy > Neutral. These contrast images were entered into second-level random-effects analyses of variance (ANOVA) to create group averages separately for children, adolescents and adults and to compare the three groups. For the main group comparison analyses (one-way ANOVA and subsequent post-hoc t-tests for each contrast), the resulting statistical maps are presented at a threshold of p < .05, family-wise error (FWE) corrected at the voxel level, with a minimum cluster extent of 10 voxels. Anatomical locations of the peak activations were determined with the help of the Harvard-Oxford cortical and subcortical structural atlases as implemented in FSLview (Jenkinson et al., 2012). Unthresholded statistical maps are available at http://neurovault.org/collections/4178/.
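The contrasts listed above can be written as weight vectors over the four condition regressors. The sketch below is a simplified, hypothetical first-level example: it uses unconvolved block indicators and simulated data rather than SPM's HRF-convolved design, omits the motion covariates, and computes an ordinary least-squares t-statistic for each contrast. The column order and the 1/3 weighting of the three body conditions in Body > Non-Human are our assumptions.

```python
import numpy as np

# Assumed column order: Happy, Angry, Neutral, Non-Human, constant
CONTRASTS = {
    "Body > Non-Human": np.array([1 / 3, 1 / 3, 1 / 3, -1.0, 0.0]),
    "Angry > Neutral": np.array([0.0, 1.0, -1.0, 0.0, 0.0]),
    "Happy > Neutral": np.array([1.0, 0.0, -1.0, 0.0, 0.0]),
}


def contrast_t(design, data, contrast):
    """OLS t-statistic for a contrast c: t = c'b / sqrt(sigma^2 * c'(X'X)^-1 c)."""
    beta, _, rank, _ = np.linalg.lstsq(design, data, rcond=None)
    residuals = data - design @ beta
    dof = design.shape[0] - rank
    sigma2 = residuals @ residuals / dof
    xtx_inv = np.linalg.pinv(design.T @ design)
    return float(contrast @ beta / np.sqrt(sigma2 * (contrast @ xtx_inv @ contrast)))


# Simulated run: conditions 0-3 plus a baseline state (4), each lasting 4 scans, repeated 10 times
states = np.tile(np.repeat(np.arange(5), 4), 10)
design = np.column_stack([(states == j).astype(float) for j in range(4)] + [np.ones(states.size)])
rng = np.random.default_rng(0)
true_beta = np.array([3.0, 2.0, 1.0, 1.0, 0.0])
data = design @ true_beta + 0.1 * rng.standard_normal(states.size)
for name, c in CONTRASTS.items():
    print(name, round(contrast_t(design, data, c), 1))
```

Each contrast's condition weights sum to zero, so the baseline level cancels and only differences between conditions are tested.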

2.5. Region of interest (ROI) definition and analysis

In order to directly address our hypotheses, we focused on six ‘body’ areas identified in Ross et al. (2014) (bilateral EBA, FBA and pSTS) and tested whether their activity was modulated by the emotional content of the body movement across ages, as is the case in adults (de Gelder et al., 2004, de Gelder et al., 2010, Grèzes et al., 2007, Grosbras and Paus, 2006, Kret et al., 2011, Peelen et al., 2007). ROIs encompassing these areas were created by taking the set of contiguous voxels within a sphere of radius 8 mm centred on the voxel, in each anatomical region, that showed the highest probability of activation in a meta-analysis of 20 studies contrasting moving bodies with control stimuli in adults (detailed in Grosbras et al., 2012). We also included ROIs covering the amygdala (AMY) bilaterally. These were defined using the WFU PickAtlas software within SPM (Maldjian et al., 2003).
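A spherical ROI of this kind can be built by converting every voxel index to millimetre coordinates with the image's affine and keeping voxels within 8 mm of the chosen peak. A minimal NumPy sketch, assuming a hypothetical 3 mm isotropic grid for the example; it omits the additional restriction to contiguous voxels within the anatomical region described above.

```python
import numpy as np


def sphere_mask(shape, affine, center_mm, radius_mm):
    """Boolean mask of voxels whose centres lie within radius_mm of center_mm."""
    ijk = np.indices(shape).reshape(3, -1).astype(float)  # voxel indices, shape (3, n_voxels)
    homogeneous = np.vstack([ijk, np.ones((1, ijk.shape[1]))])
    xyz = (affine @ homogeneous)[:3]  # millimetre coordinates of each voxel centre
    squared_distance = ((xyz - np.asarray(center_mm, dtype=float)[:, None]) ** 2).sum(axis=0)
    return (squared_distance <= radius_mm ** 2).reshape(shape)


# Hypothetical 3 mm isotropic grid whose voxel (10, 10, 10) sits at (0, 0, 0) mm
affine = np.array([
    [3.0, 0.0, 0.0, -30.0],
    [0.0, 3.0, 0.0, -30.0],
    [0.0, 0.0, 3.0, -30.0],
    [0.0, 0.0, 0.0, 1.0],
])
roi = sphere_mask((21, 21, 21), affine, center_mm=(0.0, 0.0, 0.0), radius_mm=8.0)
print(int(roi.sum()))  # prints 81
```

Comparing squared distances avoids a square root and keeps the boundary test exact on a regular grid.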

To explore differences in the strength of activity in these ROIs across age groups, we extracted the peak t-value from each ROI in each participant for the Body > Non-Human, Angry > Neutral and Happy > Neutral contrasts. We chose the peak t-value rather than the mean or median ROI response because the peak has been shown to correlate better with evoked scalp electrical potentials than averaged activity (Arthurs and Boniface, 2003). Furthermore, the peak is guaranteed to show the best effect of any voxel in the ROI and is unaffected by spatial smoothing and normalization. Lastly, a lower mean activity in the child and adolescent groups would be confounded by the fact that the extent of activation differs across groups (Peelen et al., 2009, Ross et al., 2014), and by the fact that the ROIs were defined from a meta-analysis of studies conducted in adults, so they may not capture exactly the same region in children. Individual peak t-values for each ROI were entered into 3 × 8 mixed-design ANOVAs, with Age Group as the between-subjects factor and ROI as the within-subject factor. To explore more subtle effects over childhood and adolescence, we also performed further peak t-value analyses using age as a continuous variable; these are reported in the Supplementary Material. All statistical analyses were performed using SPSS Version 22.
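Extracting the peak statistic then amounts to taking the maximum of each participant's t-map within each ROI mask, giving one value per participant and ROI for the mixed-design ANOVA. A sketch with hypothetical names and toy data, assuming t-maps and masks share the same voxel grid:

```python
import numpy as np


def peak_t_values(t_maps, roi_masks):
    """Peak (maximum) t-value per participant and ROI.

    t_maps: dict mapping participant ID -> 3-D array of t-values.
    roi_masks: dict mapping ROI name -> boolean 3-D array on the same grid.
    Returns a dict mapping (participant ID, ROI name) -> peak t-value.
    """
    return {
        (subject, roi): float(t_map[mask].max())
        for subject, t_map in t_maps.items()
        for roi, mask in roi_masks.items()
    }


# Toy example: two participants, two ROIs on a 4 x 4 x 4 grid
t_maps = {"s01": np.zeros((4, 4, 4)), "s02": np.zeros((4, 4, 4))}
t_maps["s01"][0, 0, 0] = 5.2
t_maps["s02"][3, 3, 3] = 4.1
left = np.zeros((4, 4, 4), dtype=bool)
left[:2] = True
right = ~left
peaks = peak_t_values(t_maps, {"left": left, "right": right})
print(peaks)
```

The resulting (participant, ROI) pairs map directly onto long-format rows for the ANOVA, with Age Group attached per participant.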

3. Results

3.1. Whole brain contrasts

3.1.1. Bodies > Non-Human

3.1.1.1. Within groups

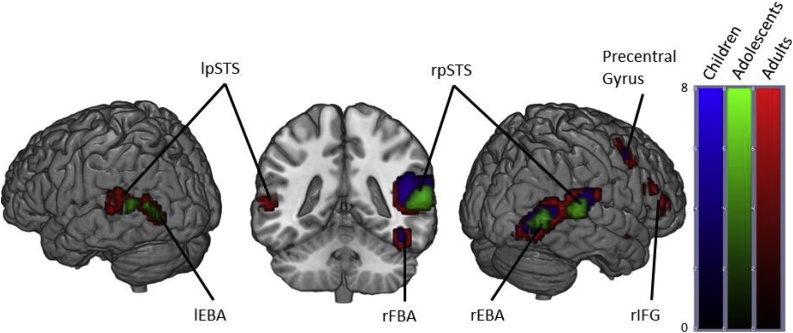

In adults, viewing dynamic bodies compared with non-human stimuli elicited activation in the right fusiform gyrus (including FBA), bilateral pSTS, bilateral occipitotemporal cortices (including EBA), bilateral amygdalae, right inferior frontal gyrus, right precuneus and right precentral gyrus.

Adolescents displayed activation in the bilateral occipitotemporal cortices and bilateral superior temporal sulci but, at our FWE-corrected threshold, showed no significant activation in the fusiform gyri or amygdalae.

Children showed activation in locations similar to those of adults in the right hemisphere, but showed no significant activation in the left occipitotemporal cortex, left fusiform gyrus, left posterior superior temporal sulcus or left amygdala (Fig. 1 and Table 1).

Fig. 1.

Brain activity when contrasting Bodies > Non-Human stimuli in Children, Adolescents and Adults (p < .05 FWE corrected, cluster extent threshold of 10 voxels). The colour bar indicates t-values. Unthresholded statistical maps were uploaded to the NeuroVault.org database and are available at http://neurovault.org/collections/4178/.

Table 1.

Regions activated in a whole-brain group-average random-effects analysis contrasting Bodies > Non-Bodies. (p < .05 FWE corrected, cluster extent threshold of 10 voxels, maximum cluster sphere 20 mm radius). Coordinates are in MNI space. (OTC = Occipitotemporal Cortex; FG = Fusiform Gyrus; STS = Superior Temporal Sulcus; IFG = Inferior Frontal Gyrus; AMY = Amygdala; Pre = Precuneus; PCG = Precentral Gyrus).

| Region | Adults: x | y | z | t | cm³ | Adolescents: x | y | z | t | cm³ | Children: x | y | z | t | cm³ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| rOTC | 51 | −73 | 4 | 12 | 7.9 | 51 | −70 | 4 | 7.6 | 3.0 | 48 | −76 | 1 | 8.8 | 4.0 |
| lOTC | −48 | −76 | 7 | 7.7 | 2.7 | −48 | −79 | 4 | 6.2 | .8 | | | | | |
| rFG | 42 | −46 | −17 | 10 | 2.3 | | | | | | 42 | −46 | −17 | 6.0 | .4 |
| rpSTS | 51 | −40 | 7 | 9.5 | 15 | 60 | −43 | 13 | 7.5 | 3.3 | 60 | −40 | 13 | 7.3 | 6.1 |
| lpSTS | −63 | −49 | 19 | 7.6 | 3.8 | −54 | −52 | 10 | 6.4 | 2.0 | | | | | |
| rIFG | 54 | 20 | 22 | 7.1 | 3.6 | | | | | | | | | | |
| rAMY | 18 | −7 | −14 | 8.6 | 2.3 | | | | | | 18 | −7 | −11 | 6.3 | .7 |
| lAMY | −18 | −10 | −14 | 6.3 | .4 | | | | | | | | | | |
| rPre | 3 | −55 | 34 | 5.4 | .4 | | | | | | | | | | |
| rPCG | 48 | 5 | 46 | 7.8 | 3.2 | | | | | | 45 | 2 | 46 | 6.4 | 1.2 |

3.1.1.2. Between groups

A one-way ANOVA of the parameter-estimate contrast maps, with Age Group as the between-groups factor, revealed a main effect of age in the bilateral lingual gyrus (LG) (Table 2). We then performed planned pairwise comparisons between each pair of groups, in both directions (Table 3).

Table 2.

Regions showing a main effect of age in the Bodies > Non-Human contrast. (p < .05 FWE corrected, cluster extent threshold of 10 voxels, maximum cluster sphere 20 mm radius). Coordinates are in MNI space.

| Region | x | y | z | t | cm³ |
|---|---|---|---|---|---|
| Left Lingual Gyrus | −9 | −73 | −2 | 19.2 | .57 |
| Right Lingual Gyrus | 9 | −73 | −2 | 17.70 | .27 |

Table 3.

Post-hoc t-tests comparing age groups on the Bodies > Non-Human contrast. (p < .05 FWE corrected, cluster extent threshold of 10 voxels, maximum cluster sphere 20 mm radius). Coordinates are in MNI space.

| Comparison / Region | x | y | z | t | cm³ |
|---|---|---|---|---|---|
| Adults > Children | | | | | |
| No regions active at given threshold | | | | | |
| Adults > Adolescents | | | | | |
| No regions active at given threshold | | | | | |
| Adolescents > Children | | | | | |
| No regions active at given threshold | | | | | |
| Adolescents > Adults | | | | | |
| Left Lingual Gyrus | −9 | −73 | −2 | 5.54 | .70 |
| Children > Adolescents | | | | | |
| No regions active at given threshold | | | | | |
| Children > Adults | | | | | |
| Right Lingual Gyrus | 6 | −73 | −2 | 5.59 | .86 |
| Left Lingual Gyrus | −9 | −73 | −2 | 5.32 | .57 |

This main effect was driven by children and adolescents showing higher LG activation than adults: bilaterally in children, and in the left hemisphere only in adolescents.

3.1.2. Angry Bodies > Neutral Bodies

3.1.2.1. Within groups

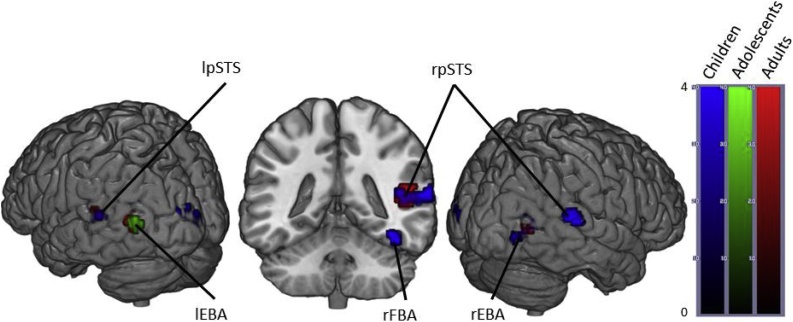

When contrasting Angry Bodies with Neutral Bodies, adults showed activation in the bilateral occipitotemporal cortices, bilateral occipital fusiform gyri, right middle STS, left posterior STS, right thalamus and right fusiform cortex.

Unlike adults, adolescents only displayed significant activation in the bilateral occipitotemporal cortices and the right occipital fusiform gyrus.

Children showed activation in the same regions as adults except for the bilateral occipital fusiform gyri. In addition, they showed activation in the left occipital pole, the left superior fusiform gyrus, right hippocampus, left temporal pole, left amygdala and left thalamus (Fig. 2 and Table 4).

Fig. 2.

Brain activity when contrasting Angry > Neutral Bodies in Children, Adolescents and Adults (p < .05 FWE corrected, cluster extent threshold of 10 voxels). The colour bar indicates t-values. Unthresholded statistical maps were uploaded to the NeuroVault.org database and are available at http://neurovault.org/collections/4178/.

Table 4.

Regions activated in a whole-brain group-average random-effects analysis contrasting Angry > Neutral. (p < .05 FWE corrected, cluster extent threshold of 10 voxels, maximum cluster sphere 20 mm radius). Coordinates are in MNI space. (OTC = Occipitotemporal Cortex; FG = Fusiform Gyrus; STS = Superior Temporal Sulcus; OFG = Occipital Fusiform Gyrus; OP = Occipital Pole; THA = Thalamus; prefixes r/l = right/left, m/p = middle/posterior).

| Region | Adults: x | y | z | t | cm³ | Adolescents: x | y | z | t | cm³ | Children: x | y | z | t | cm³ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| lOTC | −48 | −70 | 10 | 7.0 | 2.2 | −51 | −73 | 10 | 6.3 | .7 | | | | | |
| lpSTS | −51 | −52 | 10 | 4.8 | Sub-Peak | | | | | | −63 | −52 | 13 | 5.4 | .4 |
| rmSTS | 48 | −43 | 10 | 6.6 | 1.6 | | | | | | 63 | −37 | 10 | 6.0 | 2.2 |
| rOTC | 48 | −67 | 7 | 6.2 | .9 | 48 | −64 | 7 | 5.5 | .68 | 45 | −67 | 7 | 6.2 | 2.7 |
| rTHA | 18 | −31 | 1 | 6.2 | .7 | | | | | | | | | | |
| rFG | 39 | −49 | −17 | 4.5 | 2.19 | | | | | | 39 | −49 | −17 | 6.1 | .76 |
| rOFG | 27 | −76 | −8 | 5.6 | .4 | 18 | −85 | −8 | 6.6 | 1.4 | | | | | |
| lOFG | −30 | −76 | −8 | 5.9 | .5 | | | | | | | | | | |
| lOP | | | | | | | | | | | −9 | −100 | 7 | 5.7 | 1.0 |

3.1.2.2. Between groups

We observed no main effect of Age group when performing a One-Way ANOVA of Angry > Neutral brain maps with Age group as the between subjects factor.

3.1.3. Happy > Neutral

3.1.3.1. Within groups

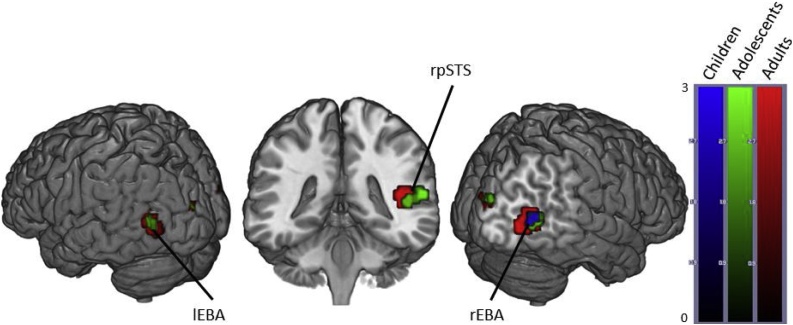

The Happy > Neutral contrast in adults revealed activation in the bilateral occipitotemporal cortices, right middle and posterior STS and bilateral occipital pole.

Adolescents showed the same pattern of activation except for the right posterior STS. In addition, they also showed activation in the bilateral LG and the left posterior STS.

Children only showed significant activation in the right occipitotemporal cortex (Fig. 3 and Table 5).

Fig. 3.

Brain activity when contrasting Happy > Neutral Bodies in Children, Adolescents and Adults (p < .05 FWE corrected, cluster extent threshold of 10 voxels). The colour bar indicates t-values. Unthresholded statistical maps were uploaded to the NeuroVault.org database and are available at http://neurovault.org/collections/4178/.

Table 5.

Regions activated in a whole-brain group-average random-effects analysis contrasting Happy > Neutral. (p < .05 FWE corrected, cluster extent threshold of 10 voxels, maximum cluster sphere 20 mm radius). Coordinates are in MNI space. (OTC = Occipitotemporal Cortex; STS = Superior Temporal Sulcus; OP = Occipital Pole; LG = Lingual Gyrus).

| Region | Adults: x | y | z | t | cm³ | Adolescents: x | y | z | t | cm³ | Children: x | y | z | t | cm³ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| rOTC | 48 | −70 | 4 | 6.8 | 3.7 | 45 | −64 | 7 | 6.4 | 1.2 | 45 | −64 | 7 | 5.1 | .5 |
| lOTC | −48 | −73 | 7 | 7.9 | 3.0 | −51 | −73 | 10 | 5.9 | .5 | | | | | |
| rmSTS | 45 | −40 | 7 | 7.3 | 1.5 | 48 | −37 | 7 | 5.8 | .8 | | | | | |
| rpSTS | 42 | −58 | 7 | 5.7 | Sub-Peak | | | | | | | | | | |
| lpSTS | | | | | | −54 | −49 | 13 | 5.8 | .3 | | | | | |
| rLG | | | | | | 18 | −85 | −8 | 7.2 | 1.1 | | | | | |
| lLG | | | | | | −12 | −85 | −11 | 6.0 | .8 | | | | | |
| rOP | 15 | −85 | −8 | 6.9 | 3.2 | 24 | −91 | 19 | 5.8 | .6 | | | | | |
| lOP | −12 | −85 | −8 | 8.7 | 3.6 | −18 | −91 | 16 | 5.4 | .5 | | | | | |

3.1.3.2. Between groups

We observed no main effect of age when performing a One-Way ANOVA of Happy > Neutral brain maps with Age Group as the between subjects factor.

3.2. Region of interest analysis

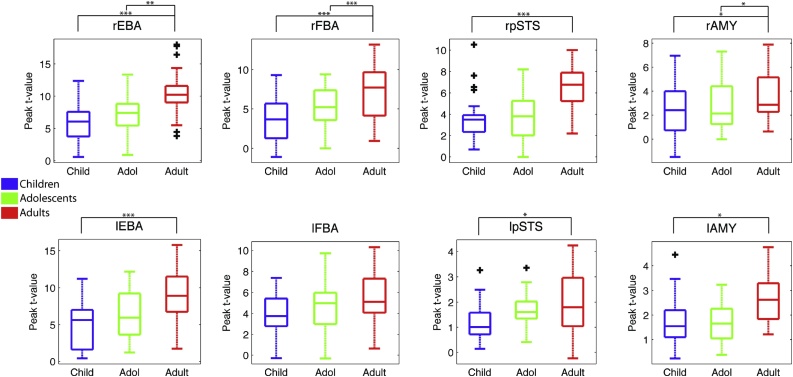

3.2.1. Bodies > Non-Human

The peak t-values for children, adolescents and adults in all eight ROIs under the Bodies > Non-Human contrast are presented in Fig. 4. A 3 × 2 × 4 Age Group × Hemisphere × ROI ANOVA revealed a main effect of Age Group (F(2,66) = 18.72, p < .001, η2p = .36), driven by adults showing significantly higher peak t-values than both children (p < .001) and adolescents (p = .002). We also found a main effect of ROI (F(3,198) = 124.41, p < .001, η2p = .65) and a main effect of hemisphere (F(1,66) = 105.82, p < .001, η2p = .62), driven by higher peak t-values in the right hemisphere (p < .001). Further, we observed an interaction between ROI and age group (F(6,198) = 4.24, p < .001, η2p = .11) and an interaction between hemisphere and age group (F(2,66) = 6.48, p = .003, η2p = .16), but no three-way interaction between hemisphere, ROI and age group (F(6,198) = 1.85, p = .09, η2p = .05).
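For F-tests, partial eta squared can be recovered from the reported statistic and its degrees of freedom with the standard identity η²p = F·df_effect / (F·df_effect + df_error). The short check below applies this identity to the values reported above and reproduces the reported effect sizes to two decimals, including 0.16 for the Hemisphere × Age Group interaction. The function name is our own.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F statistic and its degrees of freedom."""
    return f_value * df_effect / (f_value * df_effect + df_error)


reported = {
    "Age Group": (18.72, 2, 66),
    "ROI": (124.41, 3, 198),
    "Hemisphere": (105.82, 1, 66),
    "ROI x Age Group": (4.24, 6, 198),
    "Hemisphere x Age Group": (6.48, 2, 66),
    "Hemisphere x ROI x Age Group": (1.85, 6, 198),
}
for effect, (f_value, df1, df2) in reported.items():
    print(f"{effect}: {partial_eta_squared(f_value, df1, df2):.2f}")
```

This kind of check is a quick way to catch transcription errors in reported effect sizes.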

Fig. 4.

Peak t-values in each ROI for each Age Group for the Bodies > Non-Bodies contrast. (It should be noted that the y-axis scales are not homogeneous across ROIs). *p < .05; **p < .01; ***p < .001.

Bonferroni-corrected follow-up (post-hoc) analyses of the key ROI × Age Group interaction revealed a main effect of age in all ROIs except the lFBA (rEBA: F(2,66) = 13.83, p < .001; lEBA: F(2,66) = 10.42, p < .001; rFBA: F(2,66) = 12.14, p < .001; lFBA: F(2,66) = 2.06, p = .135; rpSTS: F(2,66) = 8.55, p < .001; lpSTS: F(2,66) = 4.00, p < .05; rAMY: F(2,66) = 5.69, p < .005; lAMY: F(2,66) = 5.92, p < .005).

These effects were driven by higher activity in adults than in children in all ROIs except the lFBA, which showed no main effect of age (rEBA: p < .001; lEBA: p < .001; rFBA: p < .001; lFBA: p = .145; rpSTS: p < .001; lpSTS: p < .05; rAMY: p < .05; lAMY: p < .05). Adults also showed higher activity than adolescents in the rEBA, rFBA and rAMY (rEBA: p < .005; rFBA: p < .001; rAMY: p < .05). No difference between adolescents and children reached significance.

Following up the significant Hemisphere × Age Group interaction, post-hoc tests of hemisphere differences within each age group revealed significantly greater right-hemisphere activity in all three groups (children: t(99) = 6.7, p < .001; adolescents: t(71) = 5.6, p < .001; adults: t(103) = 8.4, p < .001). In the right hemisphere, children and adolescents did not differ significantly (p = .516), whereas adults showed more activity than both children (p < .001) and adolescents (p < .001). In the left hemisphere, there was no significant difference between children and adolescents (p = .063) or between adults and adolescents (p > .99), but adults showed significantly more activation than children (p < .001).
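The hemisphere comparisons above are paired t-tests on right- versus left-hemisphere peak t-values. The reported degrees of freedom are consistent with pairing right and left values within each of the four ROIs and pooling those pairs across participants (25 × 4 − 1 = 99 for children, 18 × 4 − 1 = 71 for adolescents, 26 × 4 − 1 = 103 for adults). The text does not state this explicitly, so treat it as our reading. The following is a minimal sketch of a paired t-test using only the Python standard library, with toy numbers rather than study data. The function name is hypothetical.

```python
import math
import statistics


def paired_t(right: list[float], left: list[float]) -> tuple[float, int]:
    """Paired t statistic and degrees of freedom for right vs left values.

    t = mean(d) / (sd(d) / sqrt(n)), with d = right - left and df = n - 1.
    """
    if len(right) != len(left) or len(right) < 2:
        raise ValueError("need at least two matched pairs")
    diffs = [r_val - l_val for r_val, l_val in zip(right, left)]
    n = len(diffs)
    se = statistics.stdev(diffs) / math.sqrt(n)  # sample SD (n - 1 denominator)
    return statistics.mean(diffs) / se, n - 1


# Toy right/left peak t-values for four pairs (illustration only)
t_stat, df = paired_t([3.0, 4.0, 5.0, 6.0], [1.0, 3.0, 3.0, 4.0])
print(f"t({df}) = {t_stat:.1f}")  # prints "t(3) = 7.0"
```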

3.2.2. Emotional Bodies > Neutral Bodies

To explore the developmental trajectories of emotion modulation, we broke the ‘Bodies’ condition down into its three emotions (Angry, Happy and Neutral). A 3 × 2 × 3 × 4 Age Group × Hemisphere × Emotion × ROI ANOVA of the peak t-values revealed the following:

We found a main effect of Emotion (F(2,132) = 71.03, p < .001, η2p = .52), driven by significantly higher peak t-values for Angry and Happy than for Neutral bodies (p < .001). Angry and Happy did not differ significantly.

We found a main effect of Hemisphere (F(1,66) = 79.5, p < .001, η2p = .55), driven by significantly higher peak t-values in the right hemisphere overall.

We found a significant interaction between ROI and emotion (F(6,396) = 29.6, p < .001, η2p = .31) and a significant interaction between ROI and hemisphere (F(3,198) = 10.8, p < .001, η2p = .14).

Crucially, however, none of these effects showed any interaction with age (Emotion × Age F(4,132) = .37, p = .83, η2p = .01; Hemisphere × Age F(2,66) = 2.2, p = .123, η2p = .06; ROI × Emotion × Age F(12,396) = 1.06, p = .39, η2p = .03; ROI × Hemisphere × Age F(6,198) = 1.6, p = .136, η2p = .05).
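The degrees of freedom reported in this analysis follow from the design. Age Group is a between-subjects factor with three groups (25 + 18 + 26 = 69 participants, giving 69 − 3 = 66 error degrees of freedom), while Hemisphere, Emotion and ROI are within-subjects factors. For a within-subjects effect, both the effect and the error degrees of freedom are multiplied by the product of (levels − 1) over the within-subjects factors involved. The reported values correspond to the uncorrected (sphericity-assumed) degrees of freedom. The sketch below reproduces them; the function name is hypothetical.

```python
from math import prod


def mixed_anova_df(group_sizes: list[int], within_levels: list[int],
                   includes_group: bool) -> tuple[int, int]:
    """Effect and error df for one effect of a split-plot (mixed) ANOVA.

    group_sizes:    participants per level of the between-subjects factor
    within_levels:  number of levels of each within-subjects factor in the effect
    includes_group: whether the between-subjects factor is part of the effect
    Degrees of freedom are uncorrected (sphericity assumed).
    """
    n_total, k = sum(group_sizes), len(group_sizes)
    df_within = prod(levels - 1 for levels in within_levels)  # 1 if no within factor
    df_effect = df_within * ((k - 1) if includes_group else 1)
    df_error = df_within * (n_total - k)
    return df_effect, df_error


groups = [25, 18, 26]  # children, adolescents, adults
effects = {
    "Emotion": ([3], False),
    "Hemisphere": ([2], False),
    "ROI x Emotion": ([4, 3], False),
    "Emotion x Age": ([3], True),
    "ROI x Emotion x Age": ([4, 3], True),
    "ROI x Hemisphere x Age": ([4, 2], True),
}
for name, (levels, with_age) in effects.items():
    df1, df2 = mixed_anova_df(groups, levels, with_age)
    print(f"{name}: F({df1},{df2})")
```

This prints F(2,132), F(1,66), F(6,396), F(4,132), F(12,396) and F(6,198), matching the values reported above. With a sphericity correction such as Greenhouse-Geisser, the within-subjects degrees of freedom would instead be scaled by an estimated epsilon and would generally not be integers.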

Considering Age as a continuous variable, rather than a grouping factor, yielded the same results (presented as Supplementary Material).

4. Discussion

We investigated the development of the body-selective areas of the visual cortex, and their modulation by emotion, by comparing brain activity in adults, adolescents and children passively viewing angry, happy and neutral body movements, as well as moving objects. As expected, adults displayed bilateral activity in the main body-selective areas of the visual cortex (EBA, FBA and pSTS) when viewing dynamic bodies compared with non-human stimuli. For the same contrast, adolescents showed activity in the bilateral EBA and pSTS, and children in all three areas, but restricted to the right hemisphere. Whole-brain analyses revealed an additional main effect of age group in the bilateral lingual gyrus (LG), driven by higher activation in children and adolescents than in adults. Adults showed higher peak t-values than children in all ROIs except the lFBA, and higher peak t-values than adolescents in all right-hemisphere ROIs except the rpSTS (i.e., rEBA, rFBA and rAMY). This result complements our previous work (Ross et al., 2014) and shows that, in these regions, the magnitude of activity in adolescents aged 12–17 is still lower than in adults.

In terms of emotion modulation, and contrary to our hypothesis, we did not find any difference between age groups when contrasting Angry or Happy body movements with Neutral body movements: all three age groups showed a similar difference in activation in the body-selective areas and amygdala when viewing emotional compared with non-emotional stimuli.

4.1. Body-selective areas in adolescents and children

This is the first study to compare body-selective areas in children, adolescents and adults. When contrasting dynamic bodies with non-human movements, we found similar body-selective areas in children, adolescents and adults. Interestingly, both children and adolescents showed higher activation than adults in the bilateral LG. The LG has been implicated in various higher-level visual functions such as word processing (Mechelli et al., 2000), complex visual processing (Klaver et al., 2008) and, most relevant to this study, the perception of human biological motion (Ptito et al., 2003, Santi et al., 2003, Servos et al., 2002, Vaina et al., 2001). Using diffusion tensor imaging (DTI), Loenneker et al. (2011) found that fiber tracts in the ventral stream differed between adults (aged 20–30) and children (aged 5–7). They found no difference in fiber tract volume. Instead, adults had additional connections to posterior lateral areas (OTC), whereas children had additional connections to posterior medial areas (LG). In other words, the ventral visual system was ‘adult-like’ in terms of fiber tract volume, but the differences in connection trajectories between children and adults suggest a reorganisation of fiber pathways from medial to lateral-temporal cortex. Our data may thus illustrate developmental differences in processing pathways that could be linked to structural immaturities. Presumably this reorganisation is not yet complete by adolescence, as our adolescent group showed the same effect. Loenneker et al. (2011) also showed that ventral stream connections to the right fusiform gyrus (containing the FBA) did not seem to be fully established in their sample of 5–7-year-old children. They suggested that, in children, the fiber bundles to lingual visual areas may be pruned until adulthood through experience-based plasticity. This could explain our finding of increased LG activity in children and adolescents compared with adults when viewing body stimuli. Their study cannot shed light on the function of this reorganisation, but it remains plausible that, in children, these fiber tracts allow visual stimuli to reach cortex dedicated to visual memory and language. As the child gets older and cerebral architecture is shaped by experience, more fiber bundles develop that lead to the OTC, an area specialised in processing visual categories (Grill-Spector, 2003). This could be reflected in our uncorrected threshold maps, which seem to suggest that adults, but not children, show more activity in these areas when viewing object motion compared with body motion.

The ROI analyses revealed higher sensitivity (represented by higher peak t-values) in adults than children in all ROIs except the lFBA. The adolescents, however, only showed significantly lower peak t-values than the adults in the rEBA, rFBA and rAMY. Given that these three regions also showed a significant difference between adults and children, but no difference between children and adolescents, one could argue for a developmental trajectory that is either so gradual during childhood and adolescence that it is undetectable here, or is simply flat, with maturation occurring at the end of adolescence.

It is notable that these developmental differences occur in the right hemisphere. This may partially support the right lateralisation we previously reported in children viewing body stimuli (Ross et al., 2014), as well as that observed in children with other stimulus types, such as faces (Golarai et al., 2010), or in voice processing (Bonte et al., 2013, Rice et al., 2014). The literature, however, presents a mixed picture. Some authors report that the cortex becomes less lateralised with age (Golarai et al., 2010), others that lateralisation increases (Ross et al., 2014), and Pelphrey et al. (2009) report a full reversal of lateralisation. Here we find that right lateralisation in our ROIs is present in children and continues through adolescence into adulthood. Altogether, our lateralisation results point towards stronger activation and later maturation of body-related activity in the right than in the left hemisphere. This might be related to the change in relative specialization of the different sub-regions of the ventral visual-occipital cortex during the acquisition of literacy, as cortex sensitive to words develops in the left hemisphere (Dehaene et al., 2010), although this would need to be tested more specifically.

4.2. Emotion modulation of the body-selective areas

As in the Body > Non-Human contrast, the Angry > Neutral and Happy > Neutral contrasts produced activity in the body-selective areas. Interestingly, none of the significant main effects or interactions showed any interaction with age. So, unlike the increase in activation with age in the Body > Non-Human contrast, the whole-brain Angry > Neutral and Happy > Neutral contrasts and the ROI analyses revealed no age differences in emotion modulation of the body-selective areas, and no age differences in amygdala response. This rules out an attentional explanation for our Body > Non-Human age differences. If children showed significantly lower peak t-values because they paid less attention to the stimuli than adults or adolescents, one would expect that effect to be present in the emotion modulation analysis as well. Furthermore, Sinke et al. (2010) demonstrated that, when participants were presented with socially meaningful stimuli, the body-selective areas were most active when they were not attending to the stimulus. Thus, a lack of attention to the stimuli in children would manifest in the data as greater activation in the body-selective areas (in the context of socially meaningful stimuli). Neither prediction is borne out in our data: children did not show greater activation, and there were no age differences for our emotional contrasts. We therefore rule out an attentional explanation.

These findings suggest that, even though the recruitment of the body-selective areas for processing dynamic body stimuli increases between childhood and adulthood, the emotion modulation of these areas is already adult-like in children. This seems to accord with previous behavioural findings on emotion recognition from body movements (Lagerlof and Djerf, 2009, Ross et al., 2012). We previously described a sharp rise in emotion recognition accuracy from full-light human body movements between the ages of 4 and 8.5 years (Ross et al., 2012), followed by a much slower rate of improvement after 8.5 years. In the current study, our child participants ranged from 6 to 11 years of age, so as a group they might be indistinguishable from the adult group in terms of recognition accuracy. In that case, finding no age difference in the amygdala response and in the emotion modulation of the body-selective areas would not be surprising (indeed, there was no significant age difference in the brief post-scan behavioural emotion recognition task we performed here). It is also possible that age differences in behavioural performance are linked to differences in other functional brain circuits (e.g. those supporting executive function). Younger children might also differ: as Hoehl et al. (2010) showed with happy and angry faces, 5–6-year-old children could show a heightened amygdala response to emotional bodies compared with adults. Indeed, there is evidence that children younger than 6 years of age tend to analyse faces and bodies featurally, whereas older children (like the children in the current study) analyse expressions that include facial and postural cues holistically (Mondloch and Longfield, 2010). Exploring these questions in relation to emotional body perception would be a worthwhile extension of the current study, but it would require brain-imaging data from very young children (4–6 years old).

A question that our design cannot address is whether pubertal status modulates brain responses to body stimuli and their modulation by emotion. During adolescence, chronological age and sexual maturation interact in complex ways in their effects on brain structure and function (Pfeifer and Berkman, 2018, Scherf et al., 2012, Sisk and Foster, 2004). In particular, a few studies of the development of emotional face processing suggest an effect of puberty even after accounting for age (Forbes et al., 2011, Moore et al., 2012). This effect is expressed especially as a reduction in amygdala response to emotion as puberty progresses during mid-adolescence (reviewed in Pfeifer and Berkman, 2018). The effect is very small, however, and is not observed for all markers of puberty (Goddings et al., 2012), nor in many behavioural studies of basic emotion recognition (Motta-Mena and Scherf, 2017, Vetter et al., 2013). In light of the current evidence, we do not predict that pubertal status influences activity related to body perception. We therefore chose to equate this factor within each age group and focus on the effect of age. Nevertheless, studies adequately powered to address this question could investigate whether puberty and related hormonal changes have an effect similar to the one suggested for face perception.

4.3. Limitations and future directions

A key direction for future research using dynamic body stimuli is to explore the relative importance of kinematic and postural body features in the classification of affective body movements. Recent evidence using our stimuli suggests that body movements differentially activate brain regions depending on their postural and kinematic content (Solanas et al., 2019). This work specifies in more detail which features of the stimuli carry the critical information for emotion perception, and will allow further investigation of their developmental trajectories. Additionally, given the dynamic nature of our stimuli, an alternative explanation for our age-related increase in body-selective activity could be age differences in sensitivity to moving stimuli. However, recent evidence suggests no difference between children and adults in areas related to motion perception, namely the human motion complex (hMT+) (Taylor et al., 2018). Our study was not designed to test this possibility, but hMT+ is known to overlap with the EBA (Vangeneugden et al., 2014) while remaining dissociable from it (Ross, 2014). Future work could therefore perform motion-sensitivity control analyses on the dissociated occipital motion area (OMA) to explore any potential age-related differences in motion sensitivity.

One further potential limitation is that we defined our body-selective areas from a meta-analysis of adult studies (Grosbras et al., 2012) rather than with a standard static body-selective localizer scan. One could therefore argue that our results reflect age-related changes in the precise location of body-selective areas rather than age-related changes in activation for dynamic bodies. We do not believe this to be the case, however. We have previously shown that the average MNI coordinates of the highest positive t-value in each of the meta-analysis-defined body-selective ROIs were similar in children and adults, confirming that these regions occupy the same cortical space (Ross et al., 2014). We have also previously shown that the spatial extent of fMRI signal change in these regions is larger in adults than in pre-pubertal children. Therefore, at our stringent whole-brain threshold (p < .05 FWE-corrected, 10-voxel cluster extent), we might expect age-related functional differences to appear differently than in our peak t-value analyses. The peak by definition reflects the strongest effect of any voxel in the ROI and is less dependent on spatial smoothing, normalization and the extent of activation (or lack thereof). Future work could investigate this further by running localizer scans to identify static body-selective areas in each participant and then extracting peak t-values from each individual's body-selective areas. However, given our previous finding that the coordinates of the highest positive peak t-values occupy the same cortical space in children and adults, we would predict results very similar to our own.
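The peak t-value approach amounts to taking the voxel with the highest t-value within each ROI mask and converting its voxel indices to world (e.g. MNI) coordinates through the image affine. The NumPy sketch below illustrates this under our own assumptions. It assumes the t-map and mask are already-aligned 3-D arrays in the same space, for example as loaded with nibabel via `get_fdata()` and `.affine`. The function name is hypothetical and not part of the original pipeline.

```python
import numpy as np


def peak_t_in_roi(t_map: np.ndarray, roi_mask: np.ndarray, affine: np.ndarray):
    """Return the highest t-value inside an ROI and its world coordinate.

    t_map:    3-D array of voxel-wise t-values
    roi_mask: 3-D boolean array of the same shape (True inside the ROI)
    affine:   4 x 4 voxel-to-world matrix of the image
    """
    if t_map.shape != roi_mask.shape:
        raise ValueError("t-map and ROI mask must have the same shape")
    if not roi_mask.any():
        raise ValueError("ROI mask is empty")
    masked = np.where(roi_mask, t_map, -np.inf)  # ignore voxels outside the ROI
    ijk = np.unravel_index(np.argmax(masked), t_map.shape)
    xyz = affine @ np.array([*ijk, 1.0])  # homogeneous voxel -> world transform
    return float(t_map[ijk]), tuple(float(c) for c in xyz[:3])


# Toy example: a stronger voxel outside the ROI is correctly ignored
t_map = np.zeros((4, 4, 4))
t_map[1, 2, 3] = 5.0   # inside the ROI
t_map[0, 0, 0] = 9.0   # outside the ROI
roi = np.zeros_like(t_map, dtype=bool)
roi[1:3, 1:3, 1:4] = True
affine = np.diag([2.0, 2.0, 2.0, 1.0])
affine[:3, 3] = [-10.0, -20.0, -30.0]
print(peak_t_in_roi(t_map, roi, affine))  # (5.0, (-8.0, -16.0, -24.0))
```

Extracting peaks from individually localised ROIs would simply mean passing each participant's own mask instead of a common one.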

Finally, a passive viewing task such as the one employed here cannot address the function of the body-selective system. Using point-light displays, Atkinson et al. (2012) provided evidence that emotionally expressive movements do not solely modulate the neuronal populations that code the viewed stimulus category. This implies that a body-selective area responding more to emotional than to neutral stimuli may do so only because of top-down influence from a higher cortical area. Pichon et al. (2008) reported, in adults, amygdala activation as well as activation in the fusiform areas when participants viewed angry body actions. They attributed this to the brain's natural response to threat, a finding that has been replicated (van de Riet et al., 2009) and mirrored in primate studies (Amaral et al., 2003). So, if the stimuli do not modulate the body-selective areas directly, is there any influence from the amygdala? In other words, do the emotional cues contained in the stimuli produce amygdala activity that in turn activates category-specific populations of neurons in the visual cortex? Future work should be designed specifically to test these possibilities.

5. Conclusions

To summarise, when considering responses to dynamic stimuli, we found evidence that the body-selective areas of the visual cortex are not adult-like bilaterally in children, and not adult-like in the right hemisphere in adolescents. Further, we present evidence, for the first time, of emotion modulation of these areas in children and adolescents. Activity in the body-selective areas increased in response to body movements conveying an emotion, positive or negative, compared with neutral movements, to the same extent in children and adolescents as in adults. This mirrors various behavioural findings showing that, by the age of 8 years, children recognize emotion from bodily cues with the same proficiency as adults. These data provide new directions for developmental studies of emotion processing from the human body. They could also have clinical applications for individuals with deficits in socio-emotional abilities, in both typically and atypically developing populations.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgements

This study was partly funded by the UK ESRC DTC grant ES/J500136/1. MHG was also supported by the AMIDEX foundation (France; grant number A_M-AAC-EM-14-28-140110-16.50).

Footnotes

Supplementary data associated with this article can be found, in the online version, at https://doi.org/10.1016/j.dcn.2019.100660.

Appendix A. Supplementary data


References

- Amaral D.G., Behniea H., Kelly J.L. Topographic organization of projections from the amygdala to the visual cortex in the macaque monkey. Neuroscience. 2003;118:1099–1120. doi: 10.1016/s0306-4522(02)01001-1.

- Arthurs O.J., Boniface S.J. What aspect of the fMRI BOLD signal best reflects the underlying electrophysiology in human somatosensory cortex? Clin. Neurophysiol. 2003;114:1203–1209. doi: 10.1016/s1388-2457(03)00080-4.

- Atkinson A.P., Vuong Q.C., Smithson H.E. Modulation of the face- and body-selective visual regions by the motion and emotion of point-light face and body stimuli. NeuroImage. 2012;59:1700–1712. doi: 10.1016/j.neuroimage.2011.08.073.

- Basil R.A., Westwater M.L., Wiener M., Thompson J.C. A causal role of the right superior temporal sulcus in emotion recognition from biological motion. bioRxiv. 2016.

- Batty M., Taylor M.J. The development of emotional face processing during childhood. Dev. Sci. 2006;9:207–220. doi: 10.1111/j.1467-7687.2006.00480.x.

- Blakemore S.J. Imaging brain development: the adolescent brain. NeuroImage. 2012;61:397–406. doi: 10.1016/j.neuroimage.2011.11.080.

- Blakemore S.J., Choudhury S. Development of the adolescent brain: implications for executive function and social cognition. J. Child Psychol. Psychiatry Allied Disciplines. 2006;47:296–312. doi: 10.1111/j.1469-7610.2006.01611.x.

- Bonte M., Frost M.A., Rutten S., Ley A., Formisano E., Goebel R. Development from childhood to adulthood increases morphological and functional inter-individual variability in the right superior temporal cortex. NeuroImage. 2013;83:739–750. doi: 10.1016/j.neuroimage.2013.07.017.

- Boone R.T., Cunningham J.G. Children's decoding of emotion in expressive body movement: the development of cue attunement. Dev. Psychol. 1998;34:1007–1016. doi: 10.1037//0012-1649.34.5.1007.

- Brainard D.H. The psychophysics toolbox. Spatial Vision. 1997;10:433–436.

- Buracas G.T., Boynton G.M. Efficient design of event-related fMRI experiments using M-sequences. NeuroImage. 2002;16:801–813. doi: 10.1006/nimg.2002.1116.

- Burgund E.D., Kang H.C., Kelly J.E., Buckner R.L., Snyder A.Z., Petersen S.E., Schlaggar B.L. The feasibility of a common stereotactic space for children and adults in fMRI studies of development. NeuroImage. 2002;17:184–200. doi: 10.1006/nimg.2002.1174.

- Chronaki G., Hadwin J.A., Garner M., Maurage P., Sonuga-Barke E.J.S. The development of emotion recognition from facial expressions and non-linguistic vocalizations during childhood. Br. J. Dev. Psychol. 2015;33:218–236. doi: 10.1111/bjdp.12075.

- Clarke T.J., Bradshaw M.F., Field D.T., Hampson S.E., Rose D. The perception of emotion from body movement in point-light displays of interpersonal dialogue. Perception. 2005;34:1171–1180. doi: 10.1068/p5203.

- de Gelder B. Why bodies? Twelve reasons for including bodily expressions in affective neuroscience. Philos. Trans. R Soc. Lond. B Biol. Sci. 2009;364:3475–3484. doi: 10.1098/rstb.2009.0190.

- de Gelder B. Towards the neurobiology of emotional body language. Nat. Rev. Neurosci. 2006;7:242–249. doi: 10.1038/nrn1872.

- de Gelder B., Snyder J., Greve D., Gerard G., Hadjikhani N. Fear fosters flight: a mechanism for fear contagion when perceiving emotion expressed by a whole body. Proc. Natl. Acad. Sci. U.S.A. 2004;101:16701–16706. doi: 10.1073/pnas.0407042101.

- de Gelder B., Van den Stock J. The Bodily Expressive Action Stimulus Test (BEAST). Construction and validation of a stimulus basis for measuring perception of whole body expression of emotions. Front. Psychol. 2011;2:181. doi: 10.3389/fpsyg.2011.00181.

- de Gelder B., Van den Stock J., Meeren H.K.M., Sinke C.B.A., Kret M.E., Tamietto M. Standing up for the body. Recent progress in uncovering the networks involved in the perception of bodies and bodily expressions. Neurosci. Biobehav. Rev. 2010;34:513–527. doi: 10.1016/j.neubiorev.2009.10.008.

- Dehaene S., Pegado F., Braga L.W., Ventura P., Filho G.N., Jobert A., Dehaene-Lambertz G., Kolinsky R., Morais J., Cohen L. How learning to read changes the cortical networks for vision and language. Science. 2010;330:1359–1364. doi: 10.1126/science.1194140.

- Downing P.E., Jiang Y., Shuman M., Kanwisher N. A cortical area selective for visual processing of the human body. Science. 2001;293:2470–2473. doi: 10.1126/science.1063414.

- Evans J.W., Todd R.M., Taylor M.J., Strother S.C. Group specific optimisation of fMRI processing steps for child and adult data. NeuroImage. 2010;50:479–490. doi: 10.1016/j.neuroimage.2009.11.039.

- Forbes E.E., Phillips M.L., Ryan N.D., Dahl R.E. Neural systems of threat processing in adolescents: role of pubertal maturation and relation to measures of negative affect. Dev. Neuropsychol. 2011;36:429–452. doi: 10.1080/87565641.2010.550178.

- Giedd J.N. The teen brain: insights from neuroimaging. J. Adolescent Health. 2008;42:335–343. doi: 10.1016/j.jadohealth.2008.01.007.

- Goddings A.-L., Heyes S.B., Bird G., Viner R.M., Blakemore S.-J. The relationship between puberty and social emotion processing. Dev. Sci. 2012;15:801–811. doi: 10.1111/j.1467-7687.2012.01174.x.

- Golarai G., Liberman A., Yoon J.M.D., Grill-Spector K. Differential development of the ventral visual cortex extends through adolescence. Front. Hum. Neurosci. 2010;3. doi: 10.3389/neuro.09.080.2009.

- Grèzes J., Pichon S., de Gelder B. Perceiving fear in dynamic body expressions. NeuroImage. 2007;35:959–967. doi: 10.1016/j.neuroimage.2006.11.030.

- Grill-Spector K. The neural basis of object perception. Curr. Opin. Neurobiol. 2003;13:159–166. doi: 10.1016/s0959-4388(03)00040-0.

- Grill-Spector K., Golarai G., Gabrieli J. Developmental neuroimaging of the human ventral visual cortex. Trends Cogn. Sci. 2008;12:152–162. doi: 10.1016/j.tics.2008.01.009.

- Grosbras M.-H., Beaton S., Eickhoff S.B. Brain regions involved in human movement perception: a quantitative voxel-based meta-analysis. Hum. Brain Mapp. 2012;33:431–454. doi: 10.1002/hbm.21222.

- Grosbras M.-H., Paus T. Brain networks involved in viewing angry hands or faces. Cereb. Cortex. 2006;16:1087–1096. doi: 10.1093/cercor/bhj050.

- Guyer A.E., Monk C.S., McClure-Tone E.B., Nelson E.E., Roberson-Nay R., Adler A.D., Fromm S.J., Leibenluft E., Pine D.S., Ernst M. A developmental examination of amygdala response to facial expressions. J. Cogn. Neurosci. 2008;20:1565–1582. doi: 10.1162/jocn.2008.20114.

- Herba C.M., Landau S., Russell T., Ecker C., Phillips M.L. The development of emotion-processing in children: effects of age, emotion, and intensity. J. Child Psychol. Psychiatry Allied Disciplines. 2006;47:1098–1106. doi: 10.1111/j.1469-7610.2006.01652.x.

- Hoehl S., Brauer J., Brasse G., Striano T., Friederici A.D. Children's processing of emotions expressed by peers and adults: an fMRI study. Social Neurosci. 2010;5:543–559. doi: 10.1080/17470911003708206.

- Jenkinson M., Beckmann C.F., Behrens T.E., Woolrich M.W., Smith S.M. FSL. NeuroImage. 2012;62:782–790. doi: 10.1016/j.neuroimage.2011.09.015.

- Kang H.C., Burgund E.D., Lugar H.M., Petersen S.E., Schlaggar B.L. Comparison of functional activation foci in children and adults using a common stereotactic space. NeuroImage. 2003;19:16–28. doi: 10.1016/s1053-8119(03)00038-7.

- Klaver P., Lichtensteiger J., Bucher K., Dietrich T., Loenneker T., Martin E. Dorsal stream development in motion and structure-from-motion perception. NeuroImage. 2008;39:1815–1823. doi: 10.1016/j.neuroimage.2007.11.009.

- Kret M.E., Pichon S., Grèzes J., de Gelder B. Similarities and differences in perceiving threat from dynamic faces and bodies. An fMRI study. NeuroImage. 2011;54:1755–1762. doi: 10.1016/j.neuroimage.2010.08.012.

- Lagerlof I., Djerf M. Children's understanding of emotion in dance. Eur. J. Dev. Psychol. 2009;6:409–431.

- Lenroot R.K., Giedd J.N. Brain development in children and adolescents: Insights from anatomical magnetic resonance imaging. Neurosci. Biobehav. Rev. 2006;30:718–729. doi: 10.1016/j.neubiorev.2006.06.001.

- Loenneker T., Klaver P., Bucher K., Lichtensteiger J., Imfeld A., Martin E. Microstructural development: organizational differences of the fiber architecture between children and adults in dorsal and ventral visual streams. Hum. Brain Mapp. 2011;32:935–946. doi: 10.1002/hbm.21080.

- Maldjian J.A., Laurienti P.J., Kraft R.A., Burdette J.H. An automated method for neuroanatomic and cytoarchitectonic atlas-based interrogation of fMRI data sets. NeuroImage. 2003;19:1233–1239. doi: 10.1016/s1053-8119(03)00169-1.

- Mechelli A., Humphreys G.W., Mayall K., Olson A., Price C.J. Differential effects of word length and visual contrast in the fusiform and lingual gyri during reading. Proc. Biol. Sci. 2000;267:1909–1913. doi: 10.1098/rspb.2000.1229.

- Mondloch C., Longfield D. Sad or Afraid? Body posture influences children's and adults’ perception of emotional facial displays. J. Vision. 2010;10:579–679.

- Monk C.S. Adolescent immaturity in attention-related brain engagement to emotional facial expressions. NeuroImage. 2003;20:420–428. doi: 10.1016/s1053-8119(03)00355-0.

- Moore W.E., Pfeifer J.H., Masten C.L., Mazziotta J.C., Iacoboni M., Dapretto M. Facing puberty: associations between pubertal development and neural responses to affective facial displays. Social Cogn. Affective Neurosci. 2012;7:35–43. doi: 10.1093/scan/nsr066.

- Morris J.S., Friston K.J., Buchel C., Frith C.D., Young A.W., Calder A.J., Dolan R.J. A neuromodulatory role for the human amygdala in processing emotional facial expressions. Brain. 1998;121(Pt 1):47–57. doi: 10.1093/brain/121.1.47.

- Motta-Mena N.V., Scherf K.S. Pubertal development shapes perception of complex facial expressions. Dev. Sci. 2017;20:e12451. doi: 10.1111/desc.12451.

- Paus T. Mapping brain maturation and cognitive development during adolescence. Trends Cogn. Sci. 2005;9:60–68. doi: 10.1016/j.tics.2004.12.008.

- Pavuluri M.N., Passarotti A.M., Harral E.M., Sweeney J.A. An fMRI study of the neural correlates of incidental versus directed emotion processing in pediatric bipolar disorder. J. Am. Acad. Child Adolesc. Psychiatry. 2009;48:308–319. doi: 10.1097/CHI.0b013e3181948fc7.

- Peelen M.V., Downing P.E. Selectivity for the human body in the fusiform gyrus. J. Neurophysiol. 2005;93:603–608. doi: 10.1152/jn.00513.2004.

- Peelen M.V., Atkinson A.P., Andersson F., Vuilleumier P. Emotional modulation of body-selective visual areas. Social Cogn. Affective Neurosci. 2007;2:274–283. doi: 10.1093/scan/nsm023.

- Peelen M.V., Glaser B., Vuilleumier P., Eliez S. Differential development of selectivity for faces and bodies in the fusiform gyrus. Dev. Sci. 2009;12:F16–F25. doi: 10.1111/j.1467-7687.2009.00916.x.

- Pelphrey K.A., Lopez J., Morris J.P. Developmental continuity and change in responses to social and nonsocial categories in human extrastriate visual cortex. Front. Hum. Neurosci. 2009;3. doi: 10.3389/neuro.09.025.2009.

- Petersen A.C., Crockett L., Richards M., Boxer A. A self-report measure of pubertal status: reliability, validity, and initial norms. J. Youth Adolescence. 1988;17:117–133. doi: 10.1007/BF01537962.

- Pfeifer J.H., Masten C.L., Moore W.E., 3rd, Oswald T.M., Mazziotta J.C., Iacoboni M., Dapretto M. Entering adolescence: resistance to peer influence, risky behavior, and neural changes in emotion reactivity. Neuron. 2011;69:1029–1036. doi: 10.1016/j.neuron.2011.02.019.

- Pfeifer J.H., Berkman E.T. The development of self and identity in adolescence: neural evidence and implications for a value-based choice perspective on motivated behavior. Child Dev. Perspect. 2018;12(3):158–164. doi: 10.1111/cdep.12279.

- Pichon S., de Gelder B., Grezes J. Emotional modulation of visual and motor areas by dynamic body expressions of anger. Social Neurosci. 2008;3:199–212. doi: 10.1080/17470910701394368.

- Ptito M., Faubert J., Gjedde A., Kupers R. Separate neural pathways for contour and biological-motion cues in motion-defined animal shapes. NeuroImage. 2003;19:246–252. doi: 10.1016/s1053-8119(03)00082-x.

- Rice K., Viscomi B., Riggins T., Redcay E. Amygdala volume linked to individual differences in mental state inference in early childhood and adulthood. Dev. Cogn. Neurosci. 2014;8:153–163. doi: 10.1016/j.dcn.2013.09.003.

- Ross P.D. Body form and body motion processing are dissociable in the visual pathways. Front. Psychol. 2014;5:767. doi: 10.3389/fpsyg.2014.00767.

- Ross P.D., Polson L., Grosbras M.H. Developmental changes in emotion recognition from full-light and point-light displays of body movement. PLoS One. 2012;7:e44815. doi: 10.1371/journal.pone.0044815.

- Ross P., de Gelder B., Crabbe F., Grosbras M.-H. Body-selective areas in the visual cortex are less active in children than in adults. Front. Hum. Neurosci. 2014;8. doi: 10.3389/fnhum.2014.00941.

- Rotshtein P., Malach R., Hadar U., Graif M., Hendler T. Feeling or features: different sensitivity to emotion in high-order visual cortex and amygdala. Neuron. 2001;32:747–757. doi: 10.1016/s0896-6273(01)00513-x.

- Santi A., Servos P., Vatikiotis-Bateson E., Kuratate T., Munhall K. Perceiving biological motion: dissociating visible speech from walking. J. Cogn. Neurosci. 2003;15:800–809. doi: 10.1162/089892903322370726.

- Scherf K.S., Behrmann M., Dahl R.E. Facing changes and changing faces in adolescence: a new model for investigating adolescent-specific interactions between pubertal, brain and behavioral development. Dev. Cogn. Neurosci. 2012;2:199–219. doi: 10.1016/j.dcn.2011.07.016.

- Scherf K.S., Behrmann M., Humphreys K., Luna B. Visual category-selectivity for faces, places and objects emerges along different developmental trajectories. Dev. Sci. 2007;10:F15–F30. doi: 10.1111/j.1467-7687.2007.00595.x.

- Servos P., Osu R., Santi A., Kawato M. The neural substrates of biological motion perception: an fMRI study. Cereb. Cortex. 2002;12:772–782. doi: 10.1093/cercor/12.7.772.

- Sinke C.B., Sorger B., Goebel R., de Gelder B. Tease or threat? Judging social interactions from bodily expressions. NeuroImage. 2010;49:1717–1727. doi: 10.1016/j.neuroimage.2009.09.065.

- Sisk C.L., Foster D.L. The neural basis of puberty and adolescence. Nat. Neurosci. 2004;7:1040. doi: 10.1038/nn1326.

- Solanas M., Vaessen M., de Gelder B. Multivariate analysis of affective body perception using postural and kinematic features of movement. Presented at the Human Brain Mapping Conference; Rome; 2019.

- Sugase Y., Yamane S., Ueno S., Kawano K. Global and fine information coded by single neurons in the temporal visual cortex. Nature. 1999;400:869–873. doi: 10.1038/23703.

- Taylor C.M., Olulade O.A., Luetje M.M., Eden G.F. An fMRI study of coherent visual motion processing in children and adults. NeuroImage. 2018;173:223–239. doi: 10.1016/j.neuroimage.2018.02.001.

- Thomas K.M., Drevets W.C., Whalen P.J., Eccard C.H., Dahl R.E., Ryan N.D., Casey B.J. Amygdala response to facial expressions in children and adults. Biol. Psychiatry. 2001;49:309–316. doi: 10.1016/s0006-3223(00)01066-0.

- Todd R.M., Evans J.W., Morris D., Lewis M.D., Taylor M.J. The changing face of emotion: age-related patterns of amygdala activation to salient faces. Social Cogn. Affective Neurosci. 2011;6:12–23. doi: 10.1093/scan/nsq007.

- Tonks J., Williams W.H., Frampton I., Yates P., Slater A. Assessing emotion recognition in 9–15-years olds: preliminary analysis of abilities in reading emotion from faces, voices and eyes. Brain Injury. 2007;21:623–629. doi: 10.1080/02699050701426865.

- Vaina L.M., Solomon J., Chowdhury S., Sinha P., Belliveau J.W. Functional neuroanatomy of biological motion perception in humans. Proc. Natl. Acad. Sci. U. S. A. 2001;98:11656–11661. doi: 10.1073/pnas.191374198.

- van de Riet W.A., Grezes J., de Gelder B. Specific and common brain regions involved in the perception of faces and bodies and the representation of their emotional expressions. Social Neurosci. 2009;4:101–120. doi: 10.1080/17470910701865367.

- Vangeneugden J., Peelen M.V., Tadin D., Battelli L. Distinct neural mechanisms for body form and body motion discriminations. J. Neurosci. 2014;34:574–585. doi: 10.1523/JNEUROSCI.4032-13.2014.

- Vetter N.C., Leipold K., Kliegel M., Phillips L.H., Altgassen M. Ongoing development of social cognition in adolescence. Child Neuropsychol. 2013;19:615–629. doi: 10.1080/09297049.2012.718324.

- Vuilleumier P., Armony J.L., Driver J., Dolan R.J. Effects of attention and emotion on face processing in the human brain: an event-related fMRI study. Neuron. 2001;30:829–841. doi: 10.1016/s0896-6273(01)00328-2.

- Weiner K.S., Grill-Spector K. Not one extrastriate body area: using anatomical landmarks, hMT+, and visual field maps to parcellate limb-selective activations in human lateral occipitotemporal cortex. NeuroImage. 2011;56:2183–2199. doi: 10.1016/j.neuroimage.2011.03.041.

- Winston J.S., Vuilleumier P., Dolan R.J. Effects of low-spatial frequency components of fearful faces on fusiform cortex activity. Curr. Biol. 2003;13:1824–1829. doi: 10.1016/j.cub.2003.09.038.
