Abstract

Understanding others as intentional agents is critical in social interactions. We perceive others’ intentions through identification, a categorical judgment that others should work like oneself. The most primitive form of understanding others’ intentions is joint attention (JA). During JA, an initiator selects a shared object through gaze (initiative joint attention, IJA), and the responder follows the direction of the initiator’s gaze (reactive joint attention, RJA). Therefore, both participants share the intention of object selection. However, the neural underpinning of shared intention through JA remains unknown. In this study, we hypothesized that JA is represented by inter-individual neural synchronization of the intention-related activity. Additionally, JA requires eye contact that activates the limbic mirror system; therefore, we hypothesized that this system is involved in shared attention through JA. To test these hypotheses, participants underwent hyperscanning fMRI while performing JA tasks. We found that IJA-related activation of the right anterior insular cortex of participants was positively correlated with RJA-related activation of homologous regions in their partners. This area was activated by volitional selection of the target during IJA. Therefore, identification with others by JA is likely accomplished by the shared intentionality of target selection represented by inter-individual synchronization of the right anterior insular cortex.

Keywords: hyperscanning fMRI, joint attention, inter-brain synchronization, limbic mirror system, insular cortex

Introduction

An important question in cognitive neuroscience has been how we understand others as intentional agents in the sense that others have goals that are not necessarily associated with behavioral means (Tomasello, 1999). One hypothesis from developmental psychology is that we perceive other people’s functioning by identifying with them via an analogy to the self (Tomasello, 1999). During identification, an observer registers and assimilates another person’s bodily anchored psychological stance (in this case, intention) and uses this process as a possible way of relating to the world (Hobson & Hobson, 2007). Thus, identification is regarded as the categorical judgment that ‘others are like me and hence should work like me’ (Tomasello, 1999). One of the most primitive forms of understanding others’ intentions is joint attention (JA). JA is the ability to coordinate attention between interactive social partners on a third significant object (Mundy et al., 1986). Since attention is regarded as a kind of intentional perception (Tomasello, 1999), its coordination requires understanding of oneself as well as others as intentional agents. JA is composed of initiative JA (IJA) and reactive JA (RJA). During IJA, an initiator spontaneously creates a shared point of reference, and during RJA the responder follows the direction of the initiator’s gaze to share attention on the target. This phenomenon emerges around 10 months of age (Corkum & Moore, 1998) and is a critical behavioral milestone in the human development of social cognition because it is a pre-requisite for language acquisition (Tomasello, 2003) and a precursor of the theory of mind (Tomasello, 1999). Moreover, the lack of JA, particularly the lack of IJA, predicts the possibility of having autism spectrum disorder (ASD) (Mundy et al., 2009). Thus, understanding the neural mechanisms of IJA and RJA is critical for understanding social cognition, its development and associated pathologies.

JA is usually cued by eye contact (Farroni et al., 2002; Striano & Reid, 2006). After focusing on the same object, participants make eye contact with each other to check whether they are looking at the same thing (Emery, 2000) and confirm shared attention toward that object (Perrett & Emery, 1994). Thus, eye contact and JA are tightly coupled. This coupling is reflected by the relationship between shared attention and JA, which has been discussed in studies on the development of the theory of mind (Baron-Cohen, 1994; Emery, 2000). Shared attention is a sophisticated form of communication that requires individuals X and Y to know the direction of each other’s attention and thus requires a means for confirming shared attention. Mutual gaze is a particular case of shared attention because if X observes Y and Y observes X simultaneously, it can be said that X and Y share attention. In this case, each participant’s attention is on the partner; therefore, the relationship is dyadic.

Baron-Cohen (1994) proposed a modular system for the theory of mind that contained components of the gaze communication system. The four modules were an Eye Direction Detector (EDD), Intentionality Detector (ID), Shared Attention Mechanism (SAM) and Theory of Mind Mechanism (ToMM). The EDD module represented gaze following and JA. Perrett & Emery (1994) proposed a Direction of Attention Detector (DAD) module for processing all potential attention cues (eyes, head or body) and a Mutual Attention Mechanism (MAM) for detecting mutual gazes. Emery (2000) predicted that JA would only require activation of the EDD or DAD modules and that the SAM would require the activation of the EDD or DAD and MAM modules. Thus, the MAM functions to link JA with the ID to enable reading of eye direction in terms of volitional states. Although shared attention and JA are used interchangeably in the literature (Emery, 2000), the former is regarded as JA in a broader sense, and the latter is regarded as JA in a narrower sense.

Previous neuroimaging studies that focused on the responses of individual brains revealed that the neural substrates of JA were located in the medial prefrontal cortex (Williams et al., 2005; Redcay et al., 2010; Schilbach et al., 2010), middle temporal gyrus (MTG) and the posterior portion of the superior temporal sulcus (pSTS) (Schilbach et al., 2010; Redcay et al., 2010, 2012; Tanabe et al., 2012; Caruana et al., 2015a; Oberwelland et al., 2016), temporoparietal junction (TPJ) (Redcay et al., 2010, 2012; Pfeiffer et al., 2014; Caruana et al., 2015a; Oberwelland et al., 2016), subcortical areas (Williams et al., 2005; Schilbach et al., 2010; Oberwelland et al., 2016) and cerebellum (Redcay et al., 2012). In addition to these common JA neural substrates, several studies have attempted to reveal the neural regions that are explicitly recruited during IJA (Schilbach et al., 2010; Redcay et al., 2012; Caruana et al., 2015) and RJA behavior (Schilbach et al., 2010; Redcay et al., 2012; Caruana et al., 2015; Oberwelland et al., 2016). However, controversy persists regarding the functional segregation of IJA and RJA.

Even more critically, the mechanisms underlying shared attention through JA, as well as its neural underpinning, have not been determined. This is partly because the conventional observation paradigm is insufficient to understand the social gaze that involves the mutual and recurrent transfer of information (Pfeiffer et al., 2013; Schilbach et al., 2013; Koike et al., 2019); thus, an interactive experimental setting is necessary to identify these mechanisms. Recently, several studies have approached this issue using a hyperscanning fMRI setup that simultaneously recorded brain activation from two individuals during interaction (Montague et al., 2002; Konvalinka & Roepstorff, 2012; Koike et al., 2015; Redcay & Schilbach, 2019). They used the inter-brain correlation of activation time series as a measure of sharing perception, knowledge, memory and perspective (Hasson et al., 2004; Chen et al., 2016). Additionally, in a study conducted by Koike et al. (2016), participants underwent 2 days of testing; on the first day, they participated in a real-time mutual gaze task followed by a JA task, and on the second day they participated in a mutual gaze task again. Results from this study indicated that JA-induced shared attention, which was behaviorally measured by increased inter-individual eye-blink synchronization during the mutual gaze task, increased pair-specific synchronization of the right inferior frontal gyrus (IFG) (Koike et al., 2016). Since this study showed the after- or learning-effect of JA through the mutual-gaze condition before and after the JA tasks, the neural substrates responsible for sharing attention by the JA task were not determined. Two hyperscanning fMRI studies reported that the intrinsic background activity during the JA task, which was obtained by modeling out the task-related activity, showed inter-brain synchronization only in the right IFG (Saito et al., 2010; Tanabe et al., 2012). 
Interestingly, the inter-brain synchronization disappeared in participants with ASD (Tanabe et al., 2012) who have difficulties in JA (Charman, 2003). This suggests that the inter-brain synchronization is the basis of sharing attention through JA. However, as assumed in these studies, the residual time series represents background activation during JA, and this represents eye contact. Eye contact is a dyadic relationship that is the basis of JA, which is a triadic relation. Recently, hyperscanning fMRI studies explored the neural basis of JA in a narrower sense (Bilek et al., 2015; Goelman et al., 2019). In their task, participants shared information about the location of target objects through eye movements (Bilek et al., 2015). They reported the involvement of the right TPJ/pSTS in sharing information. However, in the hyperscanning setting, participants could not make eye contact that had an essential role in JA in a broader sense with MAM. Therefore, the inter-brain synchronization in the right TPJ may represent the coordination of self-behavior with that of their partner during JA in a narrower sense (i.e. gaze following toward the third object without MAM). Thus, their findings may represent the coordination of the eye gaze movement as reported in another hyperscanning study of cooperation that required the mutual coordination of the movement (Abe et al., 2019). Therefore, the neural substrates of the shared attention toward the third object (i.e. JA in a broader sense that includes the MAM) have not been explored.

Recently, Koike et al. (2019) reported that online mutual eye contact activated the limbic mirror system that consists of the anterior cingulate cortex and anterior insular cortex. Given that this network represents the MAM proposed by Perrett & Emery (1994), we hypothesized that identification during JA is established by sharing the action of the gaze toward the third object through MAM. To explore the neural substrates of identification with a partner, we reanalyzed the data from the study conducted by Koike et al. (2016), which was obtained through hyperscanning fMRI during a JA task, and hypothesized that shared intention is represented by inter-individual synchronization of the intention-related neural activity. During the JA task, the initiator was requested to choose and look at one of four targets, and the responder followed the initiator’s gaze (Koike et al., 2016). Thus, the intention to select the target object was shared with the partner. Therefore, the neural substrates of shared intention would show inter-individual synchronization of the IJA-related activity in the initiator with the RJA-related activity in the responder in a pair-specific manner. In the current study, we introduced a novel inter-brain analysis method based on beta-series correlation analysis, which is used to detect intra-brain functional connectivity, in order to depict the inter-brain correlation caused by task-related activation (Rissman et al., 2004).

Methods

Participants

A total of 66 volunteers participated in the previously reported hyperscanning fMRI study (Koike et al., 2016). All participants, except for one, were right-handed according to the Edinburgh Handedness Inventory (Oldfield, 1971). None of the participants had a history of neurological or psychiatric illnesses. The protocol was approved by the ethical committee of the National Institute for Physiological Sciences, Okazaki, Japan, and the experiments were conducted in compliance with national legislation and the Code of Ethical Principles for Medical Research Involving Human Subjects of the World Medical Association (the Declaration of Helsinki). All participants gave written informed consent to participate in the study, and the participants had never met before the experiment. Before the experiment, we assigned the participants to same-gender pairs. Due to technical difficulties, we could not obtain fMRI data from one participant. Therefore, we analyzed data from a total of 65 participants (27 men, 38 women; aged 22.4 ± 5.04 years, mean ± standard deviation) to identify the neural activation related to JA and data from 32 dyads (64 participants) to reveal the inter-brain correlation (26 men, 38 women; aged 22.4 ± 5.08 years).

Experimental procedures

Setup

To measure neural activation during the online exchange of eye signals between two paired participants, we used a hyperscanning paradigm with two MR scanners (Magnetom Verio 3T; Siemens, Erlangen, Germany) (Koike et al., 2016, 2019). The visual stimuli for the JA tasks were generated using the Presentation software package (Neurobehavioral Systems, Albany, CA, USA) (RRID: SCR_002521). Video images of the participants’ faces were captured using an online grayscale video camera system and combined with visual stimuli using a Picture-in-Picture system (NAC Image Technology and Panasonic System Solutions Japan, Tokyo, Japan). The participants’ faces were presented at the center of the screen while the visual stimuli were presented in the periphery (Figure 1A). The combined visual stimuli were projected using a liquid crystal display (LCD) projector (CP-SX12000J; Hitachi, Tokyo, Japan) onto a half-transparent screen that stood behind a scanner bed approximately 190.8 cm from the participants’ eyes and presented at a visual angle of 13.06° × 10.45°. Through this double-video system, participants could monitor each other’s faces in real time (Koike et al., 2016).

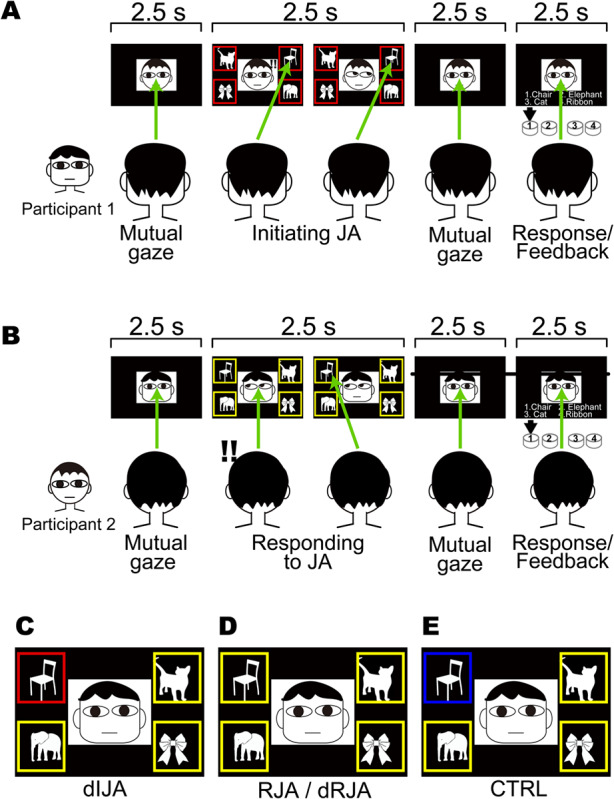

Fig. 1.

A. Time course of the free-choice IJA task epoch. The four target objects with red frames prompted Participant 1 to select one of the objects and to direct their gaze toward it (green arrows). B. At the same time, a mirror-configured set of four objects with yellow frames was displayed to Participant 2, who was required to follow their partner’s gaze to the object (free-choice RJA task). C. Color cue for the designated IJA (dIJA) task. The participant was prompted to direct their gaze toward the red-framed object. D. Color cues for the designated RJA (dRJA) and RJA tasks were presented to the partner of a participant presented with the IJA or dIJA cue. E. Color cue for the control task (CTRL), which was presented to both participants, prompting them to direct their gaze towards the blue-framed object without regard to their partner’s gaze direction.

JA tasks

Participants performed the JA task by exchanging eye-gaze information in real time through the double-video system. During MRI scanning, four target objects were displayed in the corners of the screen so that the target objects and the partners’ faces were presented to each participant in both scanners simultaneously (Figure 1). The target objects were standardized line drawings of five animals (rabbit, crab, turtle, elephant and cat) and five objects (chair, fan, clock, bus and ribbon). In each trial, four of the five images were randomly selected within each category (i.e. animal or object), and each run contained a single task.

Free-choice JA task

The free-choice JA task involved the initiation of JA by the initiator looking at one of the target objects spontaneously (IJA) and their partner looking at the indicated object (RJA). Paired participants maintained eye contact through the double-video system for 2.5 s (‘Mutual gaze’ in Figure 1A). After the initial eye contact, four objects appeared in the corners of the screen. Participant 1 saw all four objects in red for 2.5 s (Figure 1A) and was required to shift his/her gaze toward one of the objects. At the same time, Participant 2 received mirror-aligned cues that were boxed in yellow frames in the corners that requested him/her to shift his/her gaze toward the object Participant 1 was looking at (Figure 1D). Both participants had to keep their gaze on the object until all four objects disappeared. This 2.5-s event was designated as either IJA or RJA depending on whether the participant was the initiator or responder, respectively (Figure 1A and B). After the disappearance of stimuli, the participants returned to a mutual gaze for 2.5 s (‘Mutual gaze’ in Figure 1A and B). When a list of numbered names of objects appeared on the screen for 2.5 s, both participants had to select the object they had both looked at by pressing a button. A feedback sound informed them if they had made a concordant judgment (‘Response/Feedback’ in Figure 1A and B). After the Response/Feedback process, they maintained eye contact again for the next epoch. During a set of 40 trials of free-choice JA, each participant played the role of initiator and responder 20 times each. The roles were switched randomly across trials to confirm the orthogonality between the IJA and RJA events. Each run lasted 7 min and was performed twice.

Designated-choice JA task

In our daily JA behavior, an initiator intentionally selects one object in the environment, and the intention to select the object is a critical factor of JA. To highlight the neural basis of the intentional selection of objects, we prepared another IJA condition that did not contain an intentional selection process. The protocol for the designated-choice JA (dIJA/dRJA) task was identical to that of the free-choice JA; however, in the designated-choice JA, the initiator (Participant 1 in Figure 1A and C) saw one object with a red frame and the other three objects with yellow frames. This single red cue prompted Participant 1 to shift their gaze to the designated red-framed object (Figure 1C), and the responder (Participant 2 in Figure 1A) followed the initiator’s eye movement (Figure 1D). Each run contained a set of 40 trials, lasted 7 min and was performed twice.

Control task

The control task (CTRL) was identical to the dIJA/dRJA task, except that one blue-framed object and three yellow-framed objects were presented to both participants. This single blue cue prompted both participants to shift their gaze to the designated blue-framed object. We required participants to perform this task without referring to their partner (Figure 1E). Participants performed one run that contained a set of 40 trials.

JA performance definition

We defined the JA performance in each condition as follows. In the dIJA and CTRL conditions, a trial was considered successful when the paired participants selected the correct name of the cued object in the response phase (Figure 1). In the dRJA and RJA conditions, a trial was considered successful when the responder selected the object that the initiator had looked at. We conducted a repeated-measures analysis of variance (ANOVA) to evaluate differences in performance. Bonferroni post hoc tests were conducted to correct for multiple comparisons, and the analyses were performed using R scripts in RStudio.

Neuroimaging data acquisition

In order to acquire EPI images from two participants simultaneously, we drove the two scanners synchronously with an external trigger generated by an MS-DOS program. MRI time series data were acquired in ascending order using a T2*-weighted gradient-echo echo-planar imaging (EPI) sequence. Each volume consisted of 36 slices (thickness, 3.0 mm; gap, 0.5 mm) covering the entire cerebral cortex and cerebellum. The acquisition time was 2300 ms, followed by a delay of 200 ms; thus, the repetition time (TR), that is, the interval between the acquisition of two successive volumes, was 2500 ms. The flip angle (FA) was 80° and the echo time (TE) was 30 ms. The field of view (FOV) was 192 mm, and the in-plane matrix size was 64 × 64 pixels. During the JA experiments, we acquired 168 volumes per run. For anatomical reference, we obtained T1-weighted high-resolution images with a three-dimensional (3D) magnetization-prepared rapid-acquisition gradient-echo (MPRAGE) sequence (TR = 1800 ms; TE = 2.97 ms; FA = 9°; FOV = 256 mm; voxel dimensions = 1 × 1 × 1 mm).
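The acquisition parameters above can be cross-checked with a few lines of arithmetic. The following is only an illustrative sketch: the constant and function names are hypothetical, and the numbers are those quoted in the text.

```python
# Values quoted in the acquisition paragraph (names are illustrative).
ACQUISITION_MS = 2300    # time spent acquiring one volume
DELAY_MS = 200           # delay appended after each volume
VOLUMES_PER_RUN = 168
FOV_MM = 192
MATRIX_SIZE = 64


def effective_tr_ms(acquisition_ms, delay_ms):
    """Interval between the onsets of two successive volumes."""
    return acquisition_ms + delay_ms


def run_duration_min(tr_ms, n_volumes):
    """Total scan time of one run in minutes."""
    return tr_ms * n_volumes / 1000 / 60


def in_plane_voxel_mm(fov_mm, matrix_size):
    """In-plane voxel edge length implied by FOV and matrix size."""
    return fov_mm / matrix_size


tr_ms = effective_tr_ms(ACQUISITION_MS, DELAY_MS)
print(tr_ms)                                    # 2500
print(run_duration_min(tr_ms, VOLUMES_PER_RUN)) # 7.0
print(in_plane_voxel_mm(FOV_MM, MATRIX_SIZE))   # 3.0
```

The 7-min result agrees with the run length stated for the JA tasks, and the 2.5-s TR matches the 2.5-s duration of each task event.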

Neuroimaging data analysis

Pre-processing of functional and anatomical images

We performed conventional pre-processing of fMRI data that was collected during the JA tasks using statistical parametric mapping with SPM12 (Wellcome Centre for Human Neuroimaging, London, UK) implemented in MATLAB 2017b (MathWorks, Natick, MA, USA). After all of the volumes were realigned for motion correction, differences in slice-timing within each image volume were corrected. The whole-head 3D MPRAGE volume was co-registered with the EPI volumes, and the whole-head 3D MPRAGE volume was normalized to the Montréal Neurological Institute (MNI) T1 image template using a non-linear basis function. The normalization parameters were applied to all the EPI volumes. Then, the normalized EPI images were spatially smoothed in three dimensions using an 8-mm full-width at half-maximum Gaussian kernel.

Estimation of task-related activation

First-level analysis

In this univariate general linear model (GLM) analysis, each JA trial was modeled separately (see Figure 2). In total, we had 40 trials per session. We included the 2.5-s response phase within the model as a regressor of no interest. The box-car-type regressors were convolved with a canonical hemodynamic response function. We used a high-pass filter with a cutoff period of 128 s. No global scaling was performed. Serial autocorrelation, assuming a first-order autoregressive (AR(1)) model, was estimated from the pooled active voxels with a restricted maximum likelihood (ReML) procedure and was used to whiten the data (Friston, 2002), which is the default procedure in SPM12. The parameters were then estimated by least squares on the high-pass-filtered and whitened data and design matrix. Using this process, we generated 40 beta maps per session that were used for inter-brain correlation analysis. Each participant participated in five runs: two IJA/RJA runs, two dIJA/dRJA runs and one CTRL run. Therefore, we obtained a total of 200 beta maps per subject covering all five conditions. We also applied a set of contrast vectors to specify each task condition and generate five contrast images (con*.nii), namely IJA, RJA, dIJA, dRJA and CTRL, for each participant. Examples of the first-level design matrix are presented in Figure S5. These five categories of contrast images were used in the second-level random effect analysis.

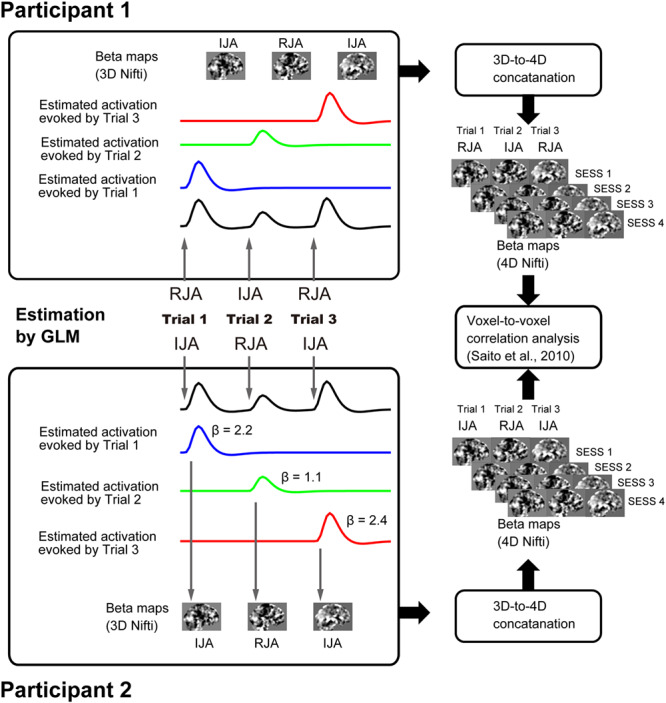

Fig. 2.

Procedure of inter-brain beta-series correlation analysis.

Second-level random effect analysis

The flexible factorial model implemented in SPM12 was applied to 325 contrast images (five contrast images per participant × 65 participants) to assess the within-subject effects at the group level. We used the following pre-defined contrasts. First, we evaluated the regions showing greater activation in the JA conditions than in the CTRL condition using the following contrast: [(IJA + dIJA + RJA + dRJA) > 4 × CTRL]. Second, we depicted brain regions that showed the initiator effect [(IJA + dIJA) > (RJA + dRJA)], the responder effect [(RJA + dRJA) > (IJA + dIJA)] and the volition effect [(IJA − RJA) > (dIJA − dRJA)]. Since we were only interested in the brain regions that showed greater activation during the JA conditions than during the CTRL condition, the result of the JA effect contrast mentioned above was used as an inclusive mask image to show task specificity within the JA region. We did not apply small-volume correction. Images without the inclusive mask are shown in the Supplementary Figures (see Figures S1, S2, S3 and S4). The resulting set of voxel values for each contrast constituted a statistical parametric map of the t statistic (SPM {t}). The threshold for SPM {t} was set at P < 0.001 for height level and P < 0.05 with a family-wise error (FWE) correction at the cluster level for the entire brain (Friston et al., 1996). This relatively stringent cluster-forming threshold is sufficient to control false positives in cluster-level statistical inference (Eklund et al., 2016; Flandin & Friston, 2019). For anatomical labeling, we used Automated Anatomical Labeling (Tzourio-Mazoyer et al., 2002) and the Anatomy toolbox v2.2b (Eickhoff et al., 2005). The final images were displayed on a standard template brain image (http://www.bic.mni.mcgill.ca/ServicesAtlases/Colin27) using MRIcron (https://www.nitrc.org/projects/mricron; Rorden & Brett, 2000).
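The pre-defined contrasts can be written as weight vectors over the five conditions. The sketch below is illustrative only: it lists the condition weights implied by the bracketed expressions, whereas an actual SPM12 flexible factorial design would also contain subject columns that are not shown here. The names `CONDITIONS`, `CONTRASTS`, `weight_vector` and `contrast_value`, and the toy condition means, are hypothetical.

```python
# Condition order assumed for illustration only.
CONDITIONS = ("IJA", "RJA", "dIJA", "dRJA", "CTRL")

# Weights implied by the bracketed contrasts in the text.
CONTRASTS = {
    "JA effect": {"IJA": 1, "RJA": 1, "dIJA": 1, "dRJA": 1, "CTRL": -4},
    "initiator": {"IJA": 1, "dIJA": 1, "RJA": -1, "dRJA": -1},
    "responder": {"RJA": 1, "dRJA": 1, "IJA": -1, "dIJA": -1},
    # (IJA - RJA) - (dIJA - dRJA)
    "volition": {"IJA": 1, "RJA": -1, "dIJA": -1, "dRJA": 1},
}


def weight_vector(weights):
    """Expand a sparse weight mapping into a vector over CONDITIONS."""
    return [weights.get(name, 0) for name in CONDITIONS]


def contrast_value(weights, condition_means):
    """Weighted sum of condition means for one contrast."""
    return sum(w * condition_means[name] for name, w in weights.items())


for label, weights in CONTRASTS.items():
    print(label, weight_vector(weights))

# Toy condition means (made-up numbers, not study data).
toy_means = {"IJA": 2.0, "RJA": 1.0, "dIJA": 1.2, "dRJA": 1.0, "CTRL": 0.5}
print(round(contrast_value(CONTRASTS["volition"], toy_means), 3))
```

Each weight vector sums to zero, so a contrast is positive only when the conditions on the left of the inequality exceed those on the right, and the responder contrast is the sign-flipped initiator contrast.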

Inter-brain correlation analysis

In our previous study, we attempted to determine the inter-brain neural correlation representing synchronization of intrinsic/spontaneous/background activation during social interaction (Saito et al., 2010; Tanabe et al., 2012). In this study, we were interested in the neural linkage between two individuals via a shared task in a pair-specific manner. Our basic idea was that the shared neural representation of intention during a JA task would fluctuate between the paired participants through real-time mutual interactions. Thus, the amplitude of activation in each participant during a trial (say, IJA) would correlate with that of the partner (RJA). Additionally, there would be no correlation between participants who were not involved in simultaneous JA. Therefore, we could show the brain regions involved by comparing the inter-brain correlation of task-related activation. To achieve this, we prepared a novel analysis technique based on the beta-series correlation analysis described by Rissman et al. (2004).

Beta-series 4D Nifti map preparation

There were 40 beta maps per run after the first-level univariate GLM analysis. We then converted the 3D files into a 4D file for each run (two IJA/RJA task runs and two dIJA/dRJA task runs). The trial order was maintained in the 4D Nifti map, which thus represented the trial-by-trial variation of brain activation caused by the JA event (see Figure 2).

Whole-brain beta-series correlation analysis

First-level analysis

The inter-brain beta-series correlation was calculated with in-house scripts in MATLAB 2017b. As in our previous studies (Saito et al., 2010; Tanabe et al., 2012; Koike et al., 2016), we evaluated the inter-brain correlation between voxels at homologous MNI coordinates (x, y, z). However, instead of the innovation time series (Saito et al., 2010; Tanabe et al., 2012) or spontaneous brain activation (Koike et al., 2016), we used the beta-series to estimate the inter-brain correlation between participants; each participant had four beta-series, one per JA run. We calculated the inter-brain correlation between the beta-series from each run using Pearson’s correlation coefficients (see Figure 2). Each correlation coefficient was transformed into a z-score using Fisher’s r-to-z transformation, yielding four inter-brain correlation maps per dyad. Finally, by averaging these four maps, we generated one beta-series correlation map per dyad that represented the degree of inter-brain correlation. This normalized correlation map represented the average correlated brain activation for each dyad during the JA tasks and was entered into the second-level analysis.
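For a single pair of homologous voxels, the computation described above (run-wise Pearson correlation, Fisher’s r-to-z transformation, averaging across runs) can be sketched as follows. This is a minimal illustration in Python rather than the in-house MATLAB scripts used in the study; the function names and the toy beta values are hypothetical, and the clipping inside `fisher_z` is an added safeguard that the text does not describe.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    dx = [v - mean_x for v in x]
    dy = [v - mean_y for v in y]
    cov = sum(a * b for a, b in zip(dx, dy))
    var_x = sum(a * a for a in dx)
    var_y = sum(b * b for b in dy)
    return cov / math.sqrt(var_x * var_y)


def fisher_z(r, eps=1e-12):
    """Fisher r-to-z transform; r is clipped so atanh stays finite."""
    r = max(-1.0 + eps, min(1.0 - eps, r))
    return math.atanh(r)


def dyad_voxel_z(runs_a, runs_b):
    """Average the Fisher-z values of the run-wise correlations."""
    zs = [fisher_z(pearson_r(a, b)) for a, b in zip(runs_a, runs_b)]
    return sum(zs) / len(zs)


# Toy beta-series at one voxel: two runs of five trials per participant
# (the study used four runs of 40 trials each).
runs_a = [[1.0, 2.0, 3.0, 4.0, 5.0], [0.5, 0.1, 0.9, 0.3, 0.7]]
runs_b = [[2.0, 1.0, 4.0, 3.0, 6.0], [0.4, 0.2, 0.8, 0.1, 0.9]]
print(round(dyad_voxel_z(runs_a, runs_b), 3))
```

Averaging is done on the z-scores rather than on the raw coefficients, following the order of operations given in the text.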

Second-level random effect analysis

In the second-level random-effect analysis, we tested whether the inter-brain correlation between dyads during a task (pair group) was significantly higher than that between pseudo-dyads (non-pair group). The inter-brain correlation map of the non-pair group was prepared by selecting two participants randomly and calculating the inter-brain correlation. In this analysis, we only considered pseudo-dyads who performed JA tasks in the same order. Therefore, a total of 32 maps were assigned to the pair group, and 176 combinations were assigned to the non-pair group. Using these two data sets, we performed a two-sample t-test to depict regions with an inter-brain correlation that was significantly higher in the pair group than that in the non-pair group. The resulting set of voxel values for each contrast constituted a statistical parametric map of the t statistic (SPM {t}). The statistical threshold for SPM {t} was set to P < 0.001 for height level and P < 0.05 with a family-wise error (FWE) correction at the cluster level for the entire brain (Friston et al., 1996; Eklund et al., 2016; Flandin & Friston, 2019). Final images were displayed on a standard template brain image.
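The construction of the non-pair group can be illustrated with a short sketch. The text states only that pseudo-dyads were formed from participants who were not real partners and who performed the JA tasks in the same order; the exact selection rule that yielded 176 combinations is not given, so the enumeration below is an assumption made for illustration. The function name, the tuple layout and the toy labels are hypothetical.

```python
from itertools import combinations


def pseudo_dyads(participants):
    """Enumerate candidate pseudo-dyads.

    participants: list of (participant_id, dyad_id, task_order) tuples.
    A pair qualifies when the two participants come from different real
    dyads but performed the tasks in the same order.
    """
    pairs = []
    for first, second in combinations(participants, 2):
        id_a, dyad_a, order_a = first
        id_b, dyad_b, order_b = second
        if dyad_a != dyad_b and order_a == order_b:
            pairs.append((id_a, id_b))
    return pairs


# Toy roster: three real dyads, two task orders (made-up labels).
toy = [
    ("p1", "d1", "order1"), ("p2", "d1", "order1"),
    ("p3", "d2", "order1"), ("p4", "d2", "order1"),
    ("p5", "d3", "order2"), ("p6", "d3", "order2"),
]
print(pseudo_dyads(toy))
# [('p1', 'p3'), ('p1', 'p4'), ('p2', 'p3'), ('p2', 'p4')]
```

Restricting pseudo-dyads to the same task order keeps the trial sequence comparable between the two groups, so that a higher correlation in real pairs can be attributed to the live interaction.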

All the graphs showing the distribution of the inter-brain correlation data were prepared using the RainCloudPlots R-script (Allen et al., 2019) (https://github.com/RainCloudPlots/RainCloudPlots), which provides a combination of box, violin and dataset plots. Each dot in the dataset plot represents one data point. The data from each dyad and pseudo-dyad were calculated by summarizing the z-values for all voxels within each cluster. The data were extracted using the MarsBaR toolbox (Brett et al., 2002).

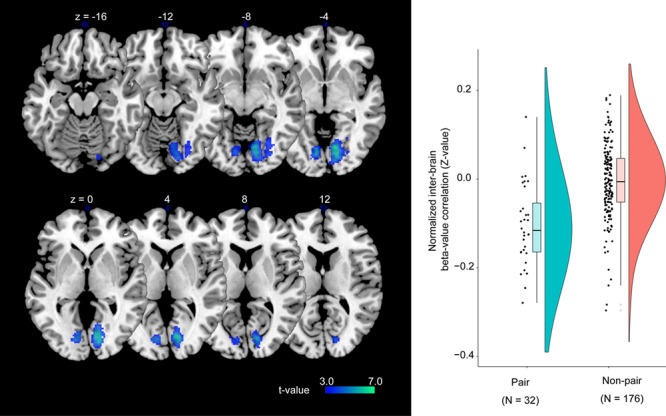

ROI-based beta-series correlation analysis

The whole-brain inter-brain correlation analysis revealed a significantly negative correlation in the bilateral primary visual cortex (V1) (Supplementary Figure S6 and Table S5). We interpreted this anticorrelation (r < 0) as being caused by the spatial allocation of visual attention, which was mirrored in the participants’ visual fields during JA. For example, when the initiator shifts his/her attention toward the right hemifield coded in the left V1, the responder has to shift his/her attention toward the left hemifield coded in the right V1. If our interpretation is valid, we should find a positive correlation between the contralateral V1s (i.e. left and right V1 in different brains) and negative correlations (anticorrelation) between the ipsilateral V1s (e.g. left V1s in different brains). To test this interpretation, we introduced the ROI-based analysis.

Using the MarsBaR toolbox (Brett et al., 2002), we extracted the mean beta-series for each subject in each ROI for four sessions (all sessions except the CTRL condition). Using these extracted beta-series, we calculated three types of beta-series correlation values across two ROIs in each dyad. One represented the inter-brain correlation between the contralateral V1 (Left-to-Right), and the other two represented the inter-brain correlation between the ipsilateral V1 (Left-to-Left and Right-to-Right). Next, we calculated and averaged Pearson’s correlation coefficients between each beta-series for the same run, and the total numbers of contralateral and ipsilateral correlation values were 64 and 32, respectively. These correlation values were normalized using Fisher’s r-to-z transformation. The statistical significance of the normalized correlation values was tested using R scripts in RStudio.
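The logic behind the expected signs can be shown with a deliberately idealized toy model. The assumptions, none of which come from the data, are that each V1 responds only when attention is directed to the contralateral hemifield and that the responder always attends the hemifield opposite to the initiator’s because of the mirrored display. All names and the trial sequence below are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    dx = [v - mean_x for v in x]
    dy = [v - mean_y for v in y]
    cov = sum(a * b for a, b in zip(dx, dy))
    return cov / math.sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))


def v1_series(attended_sides):
    """Idealized responses: each V1 codes the contralateral hemifield."""
    left_v1 = [1.0 if side == "R" else 0.0 for side in attended_sides]
    right_v1 = [1.0 if side == "L" else 0.0 for side in attended_sides]
    return left_v1, right_v1


def mirror(sides):
    """Hemifield attended by the partner under the mirrored layout."""
    return ["L" if side == "R" else "R" for side in sides]


# Hypothetical sequence of hemifields attended by the initiator.
initiator_sides = ["R", "L", "L", "R", "L", "R", "R", "L"]
responder_sides = mirror(initiator_sides)

init_left, init_right = v1_series(initiator_sides)
resp_left, resp_right = v1_series(responder_sides)

contralateral_r = pearson_r(init_left, resp_right)  # Left-to-Right
ipsilateral_r = pearson_r(init_left, resp_left)     # Left-to-Left
print(contralateral_r > 0, ipsilateral_r < 0)       # True True
```

In this noise-free model the contralateral correlation is close to +1 and the ipsilateral correlation close to -1; real beta-series would show the same signs with smaller magnitudes if the interpretation holds.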

Results

Behavior

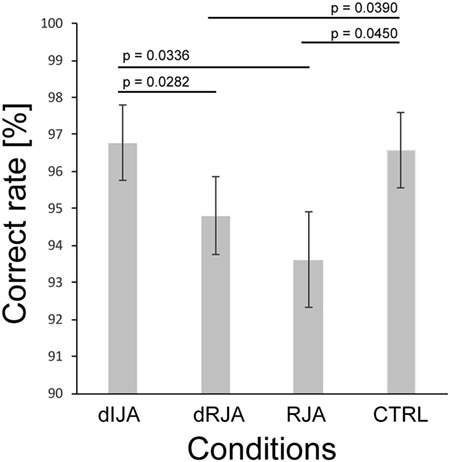

Figure 3 shows the JA performance in each condition except the IJA condition. Results from the ANOVA revealed that there was a significant effect of condition on JA performance (F (3, 189) = 7.3221, P = 0.0001). Additionally, there were significant differences between dIJA and dRJA (t (63) = 2.9314, P = 0.0282), dIJA and RJA (t (63) = 2.8664, P = 0.0336), CTRL and dRJA (t (63) = 2.8146, P = 0.0390) and CTRL and RJA (t (63) = 2.7622, P = 0.0450).

Fig. 3.

Behavioral performance.

Neuronal activation

The univariate GLM

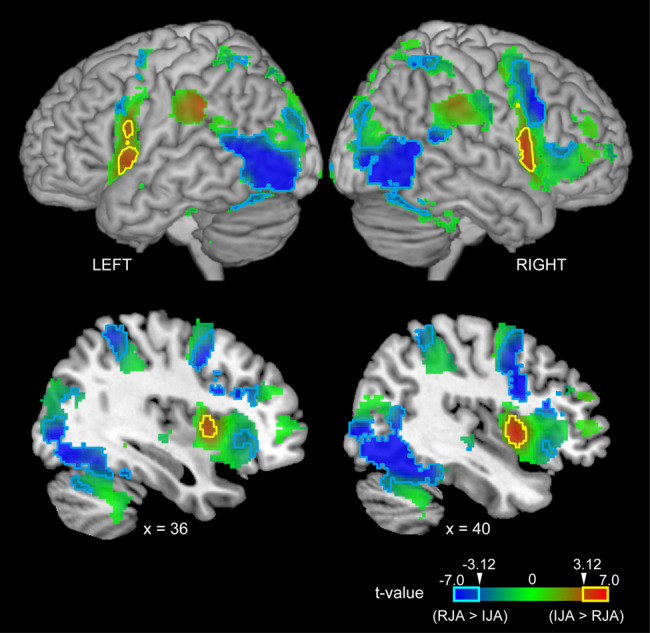

The main effect of JA ([IJA + dIJA + RJA + dRJA] > 4 × CTRL) was observed in regions in the bilateral cerebellum, occipital cortex, frontoparietal regions and frontoinsular cortex (Figure 4 and Table S1). Within these areas, initiating JA activated the bilateral anterior insular cortex (AIC)-IFG complex and left parietal cortex (yellow contour in Figure 5). Details are shown in Table S2. Images without the inclusive mask are presented in Supplementary Figure S2.

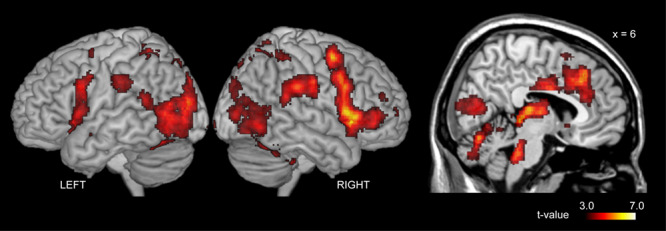

Fig. 4.

Effect of joint attention. These regions were depicted by the predefined contrast: [(IJA + RJA + dIJA + dRJA) − 4 × CTRL].

Fig. 5.

Task specificity within the JA region (see Figure 4). Red indicates IJA specificity ([IJA + dIJA] > [RJA + dRJA]), blue indicates RJA specificity ([RJA + dRJA] > [IJA + dIJA]) and green indicates intermediate specificity. The cyan and magenta lines indicate regions exhibiting statistically significant effects in terms of responding to and initiating JA, respectively (t > 3.12 corresponding to an FWE-corrected P < 0.05 at cluster level).

In contrast to IJA, RJA activated the bilateral cerebellum hemisphere, fusiform gyrus (FG), calcarine sulcus corresponding to V1, middle occipital gyrus (MOG), MTG, precentral gyrus (PreCG), opercular part of the IFG (IFGOp), anterior and middle cingulate cortex (ACC, MCC), thalamus and insula (INS) (cyan contour in Figure 5). Details are shown in Table S3. Images without the inclusive mask are presented in Supplementary Figure S3.

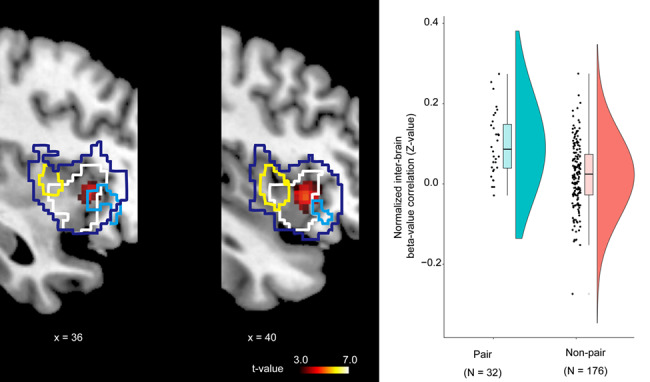

Using the ([IJA − RJA] > [dIJA − dRJA]) contrast, we depicted the activation associated with the intentional selection of the target stimulus within the JA region (Figure 4), and we observed significant activation in the ACC, MCC, bilateral AIC extending to the right IFG, precuneus (PCun), parietal cortex and left cerebellum (Figure 6 and Table S4). Images without the inclusive mask are shown in Supplementary Figure S4.

Fig. 6.

The region representing volitional selection in the IJA condition [(IJA − RJA) > (dIJA − dRJA)]. The dark-blue line represents the boundary of the JA-prominent regions in the right insular cortex (see Figure 4). The cyan and yellow lines represent the boundaries of the initiator- and responder-specific regions, respectively (see Figure 5).
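The contrasts used in the univariate analysis can be written out as weight vectors over the five conditions. The following Python sketch is illustrative only: the condition order, the contrast labels and the beta values are assumptions introduced here, and the original analysis was presumably specified in the authors' GLM software rather than in this form. It shows that each contrast sums to zero and that the volitional-selection contrast is an interaction, (IJA − RJA) − (dIJA − dRJA).

```python
# Condition order is an assumption made for this sketch.
CONDITIONS = ["IJA", "dIJA", "RJA", "dRJA", "CTRL"]

# Contrast weights as described in the text; unlisted conditions get weight 0.
CONTRASTS = {
    "JA main effect": {"IJA": 1, "dIJA": 1, "RJA": 1, "dRJA": 1, "CTRL": -4},
    "IJA specificity": {"IJA": 1, "dIJA": 1, "RJA": -1, "dRJA": -1},
    "RJA specificity": {"IJA": -1, "dIJA": -1, "RJA": 1, "dRJA": 1},
    # (IJA - RJA) - (dIJA - dRJA)
    "volitional selection": {"IJA": 1, "RJA": -1, "dIJA": -1, "dRJA": 1},
}


def weight_vector(contrast):
    """Return the contrast weights in the order given by CONDITIONS."""
    return [contrast.get(c, 0) for c in CONDITIONS]


def contrast_value(contrast, betas):
    """Weighted sum of condition-wise parameter estimates for one voxel."""
    return sum(w * betas[c] for c, w in zip(CONDITIONS, weight_vector(contrast)))


# Hypothetical parameter estimates for a single voxel; not data from the study.
betas = {"IJA": 3.0, "dIJA": 2.0, "RJA": 2.5, "dRJA": 2.0, "CTRL": 1.0}

for name, contrast in CONTRASTS.items():
    print(name, weight_vector(contrast), contrast_value(contrast, betas))
```

Because the weights of each contrast sum to zero, a voxel that responds equally in all conditions yields a contrast value of zero.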

Inter-brain beta-series correlation analysis

Whole-brain analysis

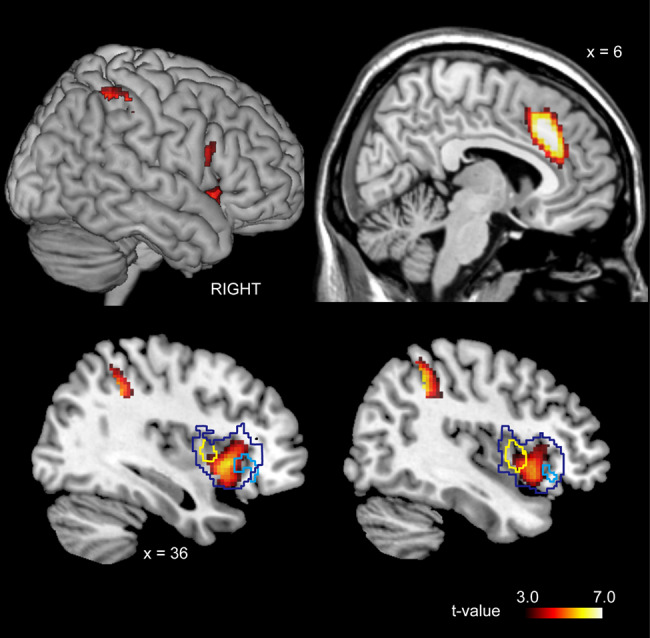

As shown in Figure 7, this analysis revealed a significant inter-brain correlation in the right anterior insular cortex (Table S5) that overlapped with the volitional selection area (see also Figure 6). We also found a significant inter-brain anticorrelation in the bilateral V1 (Figure 8 and Table S5).

Fig. 7.

Inter-brain beta-series correlation. The dark-blue line represents the boundary of the JA-prominent regions in the right insular cortex (see Figure 4). The cyan and yellow lines represent the boundaries of the initiator- and responder-specific regions, respectively (see Figure 5). The white line represents the region responsible for volitional selection in the IJA condition (see Figure 6).

Fig. 8.

Inter-brain beta-series correlation. These visual areas show negative inter-brain correlation.

ROI-based analysis

As shown in Supplementary Figure S6, the ROI-based analysis revealed that the inter-brain correlation between the contralateral V1 cortices was positive (0.1847 ± 0.1166, mean ± standard deviation). Conversely, the ipsilateral V1 cortices showed an anticorrelation (r < 0) that was consistent with the whole-brain analysis (i.e. the normalized correlations between the right V1 cortices and between the left V1 cortices were −0.1848 ± 0.1241 and −0.1185 ± 0.1492 (mean ± standard deviation), respectively).

Discussion

Pair-specific inter-brain correlation

V1

The primary visual cortex showed a negative inter-brain correlation between the ipsilateral V1 cortices and a positive correlation between the contralateral V1 cortices. This inter-brain correlation of the V1 was likely caused by the sharing of spatial attention through the shifting of visual attention to the identical object. During the JA task, paired participants directed their attention toward opposite visual hemifields. When the initiator shifted their attention toward the right hemifield, the responder had to shift their attention toward the left hemifield. This means that left hemispheric activation in one subject corresponded to right hemispheric activation in their partner, and this caused a positive inter-brain correlation between the contralateral V1 cortices. Since selective visual attention to a specific location retinotopically enhances the neural activity of the early visual cortices (Kastner & Ungerleider, 2000), the inter-brain correlation found in the V1 represents the shared attention toward the same location. This finding provides supporting evidence that our beta-series correlation method may depict the neural substrates of inter-individual sharing during social interaction.
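The reasoning above can be checked with a toy simulation. The Python sketch below is purely illustrative and rests on assumptions that are not taken from the study: trial-wise V1 responses are modeled as a fixed attentional gain in the hemisphere contralateral to the attended hemifield plus independent Gaussian noise, and all parameter values (number of trials, gain, noise level, seed) are arbitrary. Under these assumptions, mirrored attention between partners produces a positive contralateral and a negative ipsilateral inter-brain correlation.

```python
import math
import random


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def simulate_dyad(n_trials=200, gain=1.0, noise_sd=0.5, seed=0):
    """Simulate trial-wise V1 responses for an initiator and a responder.

    All parameter values are illustrative assumptions, not study estimates.
    """
    rng = random.Random(seed)
    initiator = {"left_v1": [], "right_v1": []}
    responder = {"left_v1": [], "right_v1": []}
    for _ in range(n_trials):
        # The target falls in the initiator's right or left hemifield.
        initiator_attends_right = rng.random() < 0.5
        # Attention to one hemifield enhances the contralateral V1.
        init_left = gain if initiator_attends_right else 0.0
        init_right = gain - init_left
        # The partners face each other, so the responder attends the
        # opposite hemifield and the enhanced hemisphere is swapped.
        resp_left, resp_right = init_right, init_left
        initiator["left_v1"].append(init_left + rng.gauss(0.0, noise_sd))
        initiator["right_v1"].append(init_right + rng.gauss(0.0, noise_sd))
        responder["left_v1"].append(resp_left + rng.gauss(0.0, noise_sd))
        responder["right_v1"].append(resp_right + rng.gauss(0.0, noise_sd))
    return initiator, responder


initiator, responder = simulate_dyad()
contralateral_r = pearson_r(initiator["left_v1"], responder["right_v1"])
ipsilateral_r = pearson_r(initiator["left_v1"], responder["left_v1"])
print(f"contralateral r = {contralateral_r:.2f}, ipsilateral r = {ipsilateral_r:.2f}")
```

The sign pattern, rather than the magnitude, is the point of the sketch; the magnitudes depend entirely on the assumed gain and noise level.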

The right AIC

The IJA-related activation of the right AIC in the initiator was positively correlated with the RJA-related activation of homologous regions in the responder in a pair-specific manner. Task-related activation of the right AIC and its inter-brain correlation further suggested its role in JA. First, the right AIC was previously shown to be part of the MAM (Koike et al., 2019). Second, the right AIC is related to intentional selection as shown by the IJA-dIJA contrast. Third, since RJA activates the right AIC, it is part of the DAD. Finally, previous studies have repeatedly reported dysfunction of the AIC in ASD (Uddin & Menon, 2009), which is associated with the lack of IJA (Charman, 2003). Thus, the finding that IJA-related activation coherently fluctuated with the corresponding RJA-related activation indicates that integration of ID and DAD by MAM occurs in the right AIC. Recently, Koike et al. (2019) investigated an unaddressed characteristic of mutual gaze (i.e. real-time mutual interaction as a form of automatic mimicry). They reported that cerebellar and ACC activation and the functional connectivity between the ACC and AIC were enhanced during real-time mutual eye contact as compared with the off-line condition. Thus, the AIC may be involved in the mutual interaction during eye contact for shared attention as part of the MAM.

Top-down volition in IJA

By comparing brain activation between free-choice IJA and dIJA, we depicted the ACC, AIC and right inferior parietal lobule (IPL) as the neural substrates for volition, which is unique to IJA as compared with RJA. Movement is initiated in the mesial areas, including the pre-supplementary motor area (preSMA) and cingulate motor area (CMA), and the sense of volition arises as a result of a corollary discharge to the parietal lobe and insular cortex (Hallett, 2007). The right IPL is related to both intention and motor awareness (Desmurget et al., 2009). The AIC is a core region for interoceptive information about one’s own body (Craig, 2002), which is related to self-awareness (Craig, 2002) and is pivotal in the selection of the reference point during IJA. Furthermore, the AIC is related to monitoring and evaluating outcomes of intentional action (Brass & Haggard, 2010). In the current study, the volition effect was found in the dorsal portion of the AIC (Chang et al., 2013), which is known as a central hub for different cognitive networks (Dosenbach et al., 2006). The ACC and AIC are part of the rostral limbic system and are classified as the limbic motor cortex and limbic sensory cortex, respectively (Singer et al., 2004). Additionally, the ACC and AIC play an essential role in initiation, motivation and goal-directed behaviors (Devinsky et al., 1995; Craig, 2002). According to the model established by Craig (2002), the AIC plays a role in ‘feeling of the urge’, while the ACC acts upon the ‘urge as motivation’. Therefore, they form core regions of the self because they jointly monitor and control volitional behavior (Lerner et al., 2009). Together with the ACC, the AIC is likely involved in the top-down direction of one’s gaze following the selection of a target during IJA. As part of the ‘salience network,’ they also function to segregate the most relevant of internal and extra-personal stimuli to guide behavior (Uddin & Menon, 2009; Menon & Uddin, 2010).
Further, the AIC serves as an integral hub for mediating dynamic interactions between other large-scale brain networks that are involved in externally oriented attention and internally oriented or self-related attention (Sridharan et al., 2008). The tight functional coupling between the AIC and the ACC facilitates rapid access to the motor system (Menon & Uddin, 2010), which appears to be critical in rapid and dynamic social interactions, such as JA. Thus, the right AIC is involved in creating a shared point of reference by intentionally directing the eye gaze toward the target and monitoring the partner’s response as the outcome.

Bottom-up RJA-specific activation

Similar to the visual areas that extend to the MTG and pSTS, the AIC is involved in RJA. Previous fMRI studies on humans demonstrated that a dynamic gaze shift toward the observer specifically activates the right pSTS (Ethofer et al., 2011) and that following a partner’s eye gaze activates the pSTS (Marquardt et al., 2017). Additionally, there is functional and anatomical connectivity between the right pSTS and the right AIC (Ethofer et al., 2011), which suggests that the right AIC plays a role in bottom-up gaze processing by receiving input from the MTG. As a member of the salience network, several essential functions have been ascribed to the AIC, including bottom-up salience detection, switching between large-scale networks for externally oriented attention, and self-related cognition (Menon & Uddin, 2010). These functions are critical in RJA, during which salient eye movement of others prompts shifting of attentional direction toward the external object. The findings of this study indicate that the AIC, as part of the salience network, is critical for detecting the attentional direction of the partner in RJA through eye gaze direction detection; thus, it is part of the DAD. Since the AIC is related to the intentional selection of the target in IJA, the synchronous activation of the right AIC by RJA indicates that it is part of the ID.

The gradient of task specificity in the frontoinsular cortex

The frontoinsular cortex showed a local gradient of task specificity. Specifically, the anterior portion of the frontoinsular cortex was RJA-prominent, and the posterior portion was IJA-prominent. Additionally, volition in IJA more prominently activated the ventral IFG extending to the AIC, which is a conjunct of the RJA- and IJA-prominent insular cortex, on the right side than it did on the left side. Thus, the right AIC-IFG complex was activated in both IJA and RJA and is linked to the volitional control system through the AIC. Since there was no activation during the CTRL condition, the areas mentioned above represent the coordinated shift of attention per se rather than eye movement control. The coordinated attention shift is regarded as a prediction/control relation or forward/inverse internal model (Wolpert & Kawato, 1998; Wolpert et al., 1998; Sasaki et al., 2012). The internal model is defined as a set of input–output relations between motor commands and their sensory consequences (Penhune & Steele, 2012). The inverse and forward models are tightly coupled during both their acquisition and use (Wolpert & Kawato, 1998). Considering the inter-individual causal relationship between IJA and RJA, IJA-prominent activity is probably related to the prediction of the partner’s shift of attention that follows one’s own attentional shift (forward model), while RJA-prominent activity reflects the control of one’s action following another person’s attentional shift (inverse model). Thus, the right AIC-IFG complex may represent the set of internal models of JA that constitute the shared representations of the action (de Vignemont & Haggard, 2008), thereby providing a first-person perspective by allowing an observer to internalize someone else’s actions (i.e. identification).
Hobson & Hobson (2007) made the following statement: ‘When, through identification, an individual shares experiences of the world with someone else in joint attention, he or she both resonate to the attitude of the other from the others’ bodily anchored stance and maintains enough of his or her own starting state to make the sharing “sharing”, not mere adjustment’. An important implication of the notion described by Hobson and Hobson is that identification is achieved through ‘resonance,’ which is a pair-specific, real-time and mutual interaction, and maintenance of the self-state, both of which are critical components of the internal models of the paired participants. We conclude that the right AIC-IFG complex is the core neural substrate of identification during JA.

JA-related regions non-specific to either IJA or RJA

The bilateral cerebellum, parietal cortices and fusiform gyri showed JA-related activation (Figure 4). The cerebellar hemispheres are associated with social cognition (Van Overwalle et al., 2014) and have shown similar activation across all JA conditions, which is compatible with their proposed role in shifting attention (Courchesne et al., 1994; Allen et al., 1997; Courchesne & Allen, 1997). This is probably due to a connection between the cerebellar hemispheres and the parietal attentional system (Posner & Petersen, 1990; Petersen & Posner, 2012). This activation is not related to the shifting of attention between the partner’s eyes and the target since the control condition requires the same attention shift. Instead, we attribute this activation to the shifting of attention between oneself and another person since participants rapidly switched roles between the initiator and responder during the JA runs, but role shifting was not required during the control run. Since recent resting-state functional MRI studies have revealed that the cerebellar hemispheric regions that showed greater activation during JA in this study are connected to the AIC and ACC (Buckner et al., 2011; Riedel et al., 2015), the cerebellum may support the self-other attention shifting function of the AIC and ACC. In our previous study, a double-video system showed that real-time mutual gaze activated the cerebellum and ACC and enhanced the functional connectivity between the ACC and AIC (Koike et al., 2019). The cerebellum is also crucial for non-motor time discrimination (Jueptner et al., 1995), error detection and processing of temporal contingency (Blakemore et al., 2003; Trillenberg et al., 2004; Matsuzawa et al., 2005), and real-time social communication (Gergely & Watson, 1999). 
Therefore, the cerebellum may have played a role in evaluating the temporal contingency between self-behavior and that of the partner; thus, the evaluated contingency may be used to link the self- and other-referenced processes in the AIC-ACC network (Koike et al., 2019).

Commonality and differences with previous studies

Several previous studies attempted to explore the neural basis of JA, especially RJA- and IJA-specific regions (Schilbach et al., 2010; Redcay et al., 2012; Caruana et al., 2015), using interactive experimental settings. While all of these studies used experiments based on JA behavior, the experimental settings were completely different across studies. Despite the differences in experimental details, these studies and our study reported similar JA-related regions: the occipito-temporal region, including the pSTS, TPJ, AIC and inferior and middle frontal cortex.

However, there were also differences in task-specific regions that showed IJA- or RJA-related activation between studies. This discrepancy may be caused by differences in task conditions. For example, in Redcay et al. (2012), the initiator was instructed to search for a mouse-tail on one of four pieces of cheese and shift his/her gaze toward the tail (see Figure 1 in Redcay et al., 2012). Their results demonstrated that the number of eye movements per block was increased in the IJA condition as compared with that in the RJA condition, and this suggests that the initiators performed a visual search (see Figure 2 in Redcay et al., 2012). Conversely, in our experiment, participants were not required to perform a visual search. In the dIJA condition, the designated target was clearly cued by a red rectangle; therefore, the initiator only had to shift his/her gaze toward it without visual search. In the IJA condition, initiators voluntarily selected one of the four objects. Similar to the dIJA condition, the IJA condition did not require participants to use the visual search process. Another factor that may explain the discrepancies in task-specific regions between the studies is the period analyzed as ‘joint attention’. In our analysis, we modeled the 2.5 s period after the appearance of a visual cue. During this 2.5 s, the initiator shifted his/her gaze toward the cue, and the responder perceived the initiator’s eye movement and shifted his/her gaze toward the same object. By returning to the eye-contact condition (i.e. mutual attention), the initiator perceived that the responder could follow his/her eye movements. Thus, brain activation represented shared attention (or JA in a broader sense) in the current study. In contrast, Redcay et al. (2012) modeled the time at which both the initiator and the responder directed their attention toward the object; thus, JA was represented in a narrower sense (see Figure 1 in Redcay et al., 2012).

Limitations and future directions

This study had multiple limitations. First, although we assumed that the AIC-IFG complex was an interface for sharing attention between the self and other, it is not clear if its role is limited within the framework of JA in which participants share their spatial visual attention. Further studies are necessary to identify whether the AIC-IFG complex universally merges self-other information regardless of the type of communication. Second, we did not evaluate the functional segregation within the AIC in detail. Therefore, further studies utilizing 7T-MRI with a higher spatial resolution are warranted.

Conclusion

In summary, we revealed the neural basis of JA using hyperscanning fMRI. The mirror property of the right AIC during JA is similar to that previously shown in emotions, such as pain or disgust (Wicker et al., 2003; Singer et al., 2004). Thus, the AIC is related to the sharing of intention that leads to identification with a partner through the category of intentional agents of self and others.

Funding

This work was supported, in part, by a Grant-in-Aid for Scientific Research A#15H01846 (N.S.), C#26350987 (H.C.T.), #16K16894 (E.N.), #15K12775 (T.K.) and #18H04207 (T.K.) from the Japan Society for the Promotion of Science; Scientific Research on Innovative Areas grants #16H01486 (H.C.T.) and #15H05875 (T.K.) from the Ministry of Education, Culture, Sports, Science and Technology of Japan (MEXT); and Japan Agency for Medical Research and Development under Grant Numbers JP18dm0107152 and JP18dm0307005.

Conflict of interest. None declared.

Supplementary Material

References

- Abe M.O., Koike T., Okazaki S., Sugawara S.K., Takahashi K., Watanabe K., et al. (2019). Neural correlates of online cooperation during joint force production. NeuroImage, 191, 150–61. doi: 10.1016/j.neuroimage.2019.02.003.

- Allen M., Poggiali D., Whitaker K., Marshall T.R., Kievit R.A. (2019). Raincloud plots: a multi-platform tool for robust data visualization. Wellcome Open Research, 4. doi: 10.12688/wellcomeopenres.15191.1.

- Baron-Cohen S. (1994). How to build a baby that can read minds: cognitive mechanisms in mindreading. Current Psychology of Cognition, 13, 513–52.

- Bilek E., Ruf M., Schäfer A., Akdeniz C., Calhoun V.D., Schmahl C., et al. (2015). Information flow between interacting human brains: identification, validation, and relationship to social expertise. Proceedings of the National Academy of Sciences of the United States of America, 112, 5207–12. doi: 10.1073/pnas.1421831112.

- Blakemore S.J., Boyer P., Pachot-Clouard M., Meltzoff A., Decety J. (2003). The detection of contingency and animacy from simple animations in the human brain. Cerebral Cortex, 13, 837–44.

- Brass M., Haggard P. (2010). The hidden side of intentional action: the role of the anterior insular cortex. Brain Structure and Function, 214, 603–10.

- Brett M., Anton J., Valabregue R., Poline J. (2002). Region of interest analysis using the MarsBar toolbox for SPM 99. NeuroImage, 16, 2.

- Buckner R.L., Krienen F.M., Castellanos A., Diaz J.C., Yeo B.T.T. (2011). The organization of the human cerebellum estimated by intrinsic functional connectivity. Journal of Neurophysiology, 106, 2322–45. doi: 10.1152/jn.00339.2011.

- Caruana N., Brock J., Woolgar A. (2015). A frontotemporoparietal network common to initiating and responding to joint attention bids. NeuroImage, 108, 34–46. doi: 10.1016/j.neuroimage.2014.12.041.

- Chang L.J., Yarkoni T., Khaw M.W., Sanfey A.G. (2013). Decoding the role of the insula in human cognition: functional parcellation and large-scale reverse inference. Cerebral Cortex, 23, 739–49. doi: 10.1093/cercor/bhs065.

- Charman T. (2003). Why is joint attention a pivotal skill in autism? Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences, 358, 315–24. doi: 10.1098/rstb.2002.1199.

- Chen J., Leong Y.C., Honey C.J., Yong C.H., Norman K.A., Hasson U. (2017). Shared memories reveal shared structure in neural activity across individuals. Nature Neuroscience, 20, 115–25. doi: 10.1038/nn.4450.

- Corkum V., Moore C. (1998). The origins of joint visual attention in infants. Developmental Psychology, 34, 28–38.

- Courchesne E., Allen G. (1997). Prediction and preparation, fundamental functions of the cerebellum. Learning & Memory, 4, 1–35.

- Courchesne E., Townsend J., Akshoomoff N.A., Saitoh O., Yeung-Courchesne R., Lincoln A.J., et al. (1994). Impairment in shifting attention in autistic and cerebellar patients. Behavioral Neuroscience, 108, 848–65. doi: 10.1037/0735-7044.108.5.848.

- Craig A.D. (2002). How do you feel? Interoception: the sense of the physiological condition of the body. Nature Reviews. Neuroscience, 3, 655–66.

- Desmurget M., Reilly K.T., Richard N., Szathmari A., Mottolese C., Sirigu A. (2009). Movement intention after parietal cortex stimulation in humans. Science, 324, 811–3. doi: 10.1126/science.1169896.

- Devinsky O., Morrell M.J., Vogt B.A. (1995). Contributions of anterior cingulate cortex to behaviour. Brain, 118, 279–306. doi: 10.1093/brain/118.1.279.

- Dosenbach N.U.F., Visscher K.M., Palmer E.D., Miezin F.M., Wenger K.K., Kang H.C., et al. (2006). A core system for the implementation of task sets. Neuron, 50, 799–812. doi: 10.1016/j.neuron.2006.04.031.

- Eickhoff S.B., Stephan K.E., Mohlberg H., Grefkes C., Fink G.R., Amunts K., et al. (2005). A new SPM toolbox for combining probabilistic cytoarchitectonic maps and functional imaging data. NeuroImage, 25, 1325–35.

- Eklund A., Nichols T.E., Knutsson H. (2016). Cluster failure: why fMRI inferences for spatial extent have inflated false-positive rates. Proceedings of the National Academy of Sciences of the United States of America, 113, 7900–5.

- Emery N. (2000). The eyes have it: the neuroethology, function and evolution of social gaze. Neuroscience and Biobehavioral Reviews, 24, 581–604.

- Ethofer T., Gschwind M., Vuilleumier P. (2011). Processing social aspects of human gaze: a combined fMRI-DTI study. NeuroImage, 55, 411–9. doi: 10.1016/j.neuroimage.2010.11.033.

- Farroni T., Csibra G., Simion F., Johnson M.H. (2002). Eye contact detection in humans from birth. Proceedings of the National Academy of Sciences of the United States of America, 99, 9602–5. doi: 10.1073/pnas.152159999.

- Flandin G., Friston K.J. (2019). Analysis of family-wise error rates in statistical parametric mapping using random field theory. Human Brain Mapping, 40, 2052–4. doi: 10.1002/hbm.23839.

- Friston K.J. (2002). Bayesian estimation of dynamical systems: an application to fMRI. NeuroImage, 16, 513–30. doi: 10.1006/nimg.2001.1044.

- Friston K.J., Holmes A., Poline J.B., Price C.J., Frith C.D. (1996). Detecting activations in PET and fMRI: levels of inference and power. NeuroImage, 4, 223–35. doi: 10.1006/nimg.1996.0074.

- Gergely G., Watson J.S. (1999). Early socio-emotional development: contingency perception and the social-biofeedback model. In: Rochat P., editor. Early Social Cognition: Understanding Others in the First Months of Life, Mahwah, New Jersey: Lawrence Erlbaum Associates Publishers, pp. 101–36.

- Goelman G., Dan R., Stößel G., Tost H., Meyer-Lindenberg A., Bilek E. (2019). Bidirectional signal exchanges and their mechanisms during joint attention interaction - a hyperscanning fMRI study. NeuroImage, 198, 242–54. doi: 10.1016/j.neuroimage.2019.05.028.

- Hallett M. (2007). Volitional control of movement: the physiology of free will. Clinical Neurophysiology, 118, 1179–92. doi: 10.1016/j.clinph.2007.03.019.

- Hobson J.A., Hobson R.P. (2007). Identification: the missing link between joint attention and imitation? Development and Psychopathology, 19, 411–31. doi: 10.1017/S0954579407070204.

- Jueptner M., Rijntjes M., Weiller C., Faiss J.H., Timmann D., Mueller S.P., et al. (1995). Localization of a cerebellar timing process using PET. Neurology, 45, 1540–5.

- Kastner S., Ungerleider L.G. (2000). Mechanisms of visual attention in the human cortex. Annual Review of Neuroscience, 23, 315–41.

- Koike T., Tanabe H.C., Okazaki S., Nakagawa E., Sasaki A.T., Shimada K., et al. (2016). Neural substrates of shared attention as social memory: a hyperscanning functional magnetic resonance imaging study. NeuroImage, 125, 401–12. doi: 10.1016/j.neuroimage.2015.09.076.

- Koike T., Sumiya M., Nakagawa E., Okazaki S., Sadato N. (2019). What makes eye contact special? Neural substrates of on-line mutual eye-gaze: a hyperscanning fMRI study. eNeuro, 6. doi: 10.1523/ENEURO.0284-18.2019.

- Konvalinka I., Roepstorff A. (2012). The two-brain approach: how can mutually interacting brains teach us something about social interaction? Frontiers in Human Neuroscience, 6, 215. doi: 10.3389/fnhum.2012.00215.

- Lerner A., Bagic A., Hanakawa T., Boudreau E.A., Pagan F., Mari Z., et al. (2009). Involvement of insula and cingulate cortices in control and suppression of natural urges. Cerebral Cortex, 19, 218–23. doi: 10.1093/cercor/bhn074.

- Marquardt K., Ramezanpour H., Dicke P.W., Thier P. (2017). Following eye gaze activates a patch in the posterior temporal cortex that is not part of the human “face patch” system. eNeuro, 4. doi: 10.1523/ENEURO.0317-16.2017.

- Matsuzawa M., Matsuo K., Sugio T., Kato C., Nakai T. (2005). Temporal relationship between action and visual outcome modulates brain activation: an fMRI study. Magnetic Resonance in Medical Sciences, 4, 115–21. doi: 10.2463/mrms.4.115.

- Menon V., Uddin L.Q. (2010). Saliency, switching, attention and control: a network model of insula function. Brain Structure & Function, 214, 655–67. doi: 10.1007/s00429-010-0262-0.

- Montague P., Berns G.S., Cohen J.D., McClure S.M., Pagnoni G., Dhamala M., et al. (2002). Hyperscanning: simultaneous fMRI during linked social interactions. NeuroImage, 16, 1159–64. doi: 10.1006/nimg.2002.1150.

- Mundy P., Sigman M., Ungerer J., Sherman T. (1986). Defining the social deficits of autism: the contribution of non-verbal communication measures. Journal of Child Psychology and Psychiatry, and Allied Disciplines, 27, 657–69.

- Mundy P., Sullivan L., Mastergeorge A. (2009). A parallel and distributed-processing model of joint attention, social cognition and autism. Autism Research, 2, 2–21. doi: 10.1002/aur.61.

- Oberwelland E., Schilbach L., Barisic I., Krall S., Vogeley K., Fink G., et al. (2016). Look into my eyes: investigating joint attention using interactive eye-tracking and fMRI in a developmental sample. NeuroImage, 130, 248–60. doi: 10.1016/j.neuroimage.2016.02.026.

- Oldfield R.C. (1971). The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia, 9, 97–113.

- Penhune V.B., Steele C.J. (2012). Parallel contributions of cerebellar, striatal and M1 mechanisms to motor sequence learning. Behavioural Brain Research, 226, 579–91. doi: 10.1016/j.bbr.2011.09.044.

- Perrett D.I., Emery N.J. (1994). Understanding the intentions of others from visual signals: neurophysiological evidence. Current Psychology of Cognition, 13, 683–94.

- Petersen S.E., Posner M.I. (2012). The attention system of the human brain: 20 years after. Annual Review of Neuroscience, 35, 73–89. doi: 10.1146/annurev-neuro-062111-150525.

- Pfeiffer U.J., Vogeley K., Schilbach L. (2013). From gaze cueing to dual eye-tracking: novel approaches to investigate the neural correlates of gaze in social interaction. Neuroscience and Biobehavioral Reviews, 37, 2516–28. doi: 10.1016/j.neubiorev.2013.07.017. [DOI] [PubMed] [Google Scholar]

- Pfeiffer U.J., Schilbach L., Timmermans B., Kuzmanovic B., Georgescu A.L., Bente, et al. (2014). Why we interact: on the functional role of the striatum in the subjective experience of social interaction. NeuroImage, 101, 124–37. doi: 10.1016/j.neuroimage.2014.06.061. [DOI] [PubMed] [Google Scholar]

- Posner M.I., Petersen S.E. (1990). The attention system of the human brain. Annual Review of Neuroscience, 13, 25–42. doi: 10.1146/annurev.ne.13.030190.000325. [DOI] [PubMed] [Google Scholar]

- (A1-02) Redcay E., Schilbach L. (2019). Using second-person neuroscience to elucidate the mechanisms of social interaction. Nature Review Neuroscience, 20, 495–505. doi: 10.1038/s41583-019-0179-4 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Redcay E., Dodell-Feder D., Pearrow M.J., Mavros P.L., Kleiner M., Gabrieli J.D.E., et al. (2010). Live face-to-face interaction during fMRI: a new tool for social cognitive neuroscience. NeuroImage, 50, 1639–47. doi: 10.1016/j.neuroimage.2010.01.052.

- Redcay E., Kleiner M., Saxe R. (2012). Look at this: the neural correlates of initiating and responding to bids for joint attention. Frontiers in Human Neuroscience, 6, 169. doi: 10.3389/fnhum.2012.00169.

- Riedel M.C., Ray K.L., Dick A.S., Sutherland M.T., Hernandez Z., Fox P.M., et al. (2015). Meta-analytic connectivity and behavioral parcellation of the human cerebellum. NeuroImage, 117, 327–42. doi: 10.1016/j.neuroimage.2015.05.008.

- Rissman J., Gazzaley A., D’Esposito M. (2004). Measuring functional connectivity during distinct stages of a cognitive task. NeuroImage, 23, 752–63. doi: 10.1016/j.neuroimage.2004.06.035.

- Rorden C., Brett M. (2000). Stereotaxic display of brain lesions. Behavioural Neurology, 12, 191–200.

- Saito D.N., Tanabe H.C., Izuma K., Hayashi M.J., Morito Y., Komeda H., et al. (2010). “Stay tuned”: inter-individual neural synchronization during mutual gaze and joint attention. Frontiers in Integrative Neuroscience, 4, 127. doi: 10.3389/fnint.2010.00127.

- Sasaki A.T., Kochiyama T., Sugiura M., Tanabe H.C., Sadato N. (2012). Neural networks for action representation: a functional magnetic-resonance imaging and dynamic causal modeling study. Frontiers in Human Neuroscience, 6, 236. doi: 10.3389/fnhum.2012.00236.

- Schilbach L., Wilms M., Eickhoff S.B., Romanzetti S., Tepest R., Bente G., et al. (2010). Minds made for sharing: initiating joint attention recruits reward-related neurocircuitry. Journal of Cognitive Neuroscience, 22, 2702–15. doi: 10.1162/jocn.2009.21401.

- Schilbach L., Timmermans B., Reddy V., Costall A., Bente G., Schlicht T., et al. (2013). Toward a second-person neuroscience. The Behavioral and Brain Sciences, 36, 393–414. doi: 10.1017/S0140525X12000660.

- Singer T., Seymour B., O’Doherty J., Kaube H., Dolan R.J., Frith C.D. (2004). Empathy for pain involves the affective but not sensory components of pain. Science, 303, 1157–62. doi: 10.1126/science.1093535.

- Sridharan D., Levitin D.J., Menon V. (2008). A critical role for the right fronto-insular cortex in switching between central-executive and default-mode networks. Proceedings of the National Academy of Sciences of the United States of America, 105, 12569–74. doi: 10.1073/pnas.0800005105.

- Striano T., Reid V.M. (2006). Social cognition in the first year. Trends in Cognitive Sciences, 10, 471–6. doi: 10.1016/j.tics.2006.08.006.

- Tanabe H.C., Kosaka H., Saito D.N., Koike T., Hayashi M.J., Izuma K., et al. (2012). Hard to “tune in”: neural mechanisms of live face-to-face interaction with high-functioning autistic spectrum disorder. Frontiers in Human Neuroscience, 6, 268. doi: 10.3389/fnhum.2012.00268.

- Tomasello M. (1999). The human adaptation for culture. Annual Review of Anthropology, 28, 509–29. doi: 10.1146/annurev.anthro.28.1.509.

- Tomasello M. (2003). Constructing a Language: A Usage-Based Theory of Language Acquisition, Cambridge, MA: Harvard University Press.

- Trillenberg P., Verleger R., Teetzmann A., Wascher E., Wessel K. (2004). On the role of the cerebellum in exploiting temporal contingencies: evidence from response times and preparatory EEG potentials in patients with cerebellar atrophy. Neuropsychologia, 42, 754–63. doi: 10.1016/j.neuropsychologia.2003.11.005.

- Tzourio-Mazoyer N., Landeau B., Papathanassiou D., Crivello F., Etard O., Delcroix N., et al. (2002). Automated anatomical labeling of activations in SPM using a macroscopic anatomical parcellation of the MNI MRI single-subject brain. NeuroImage, 15, 273–89. doi: 10.1006/nimg.2001.0978.

- Uddin L.Q., Menon V. (2009). The anterior insula in autism: under-connected and under-examined. Neuroscience and Biobehavioral Reviews, 33, 1198–203. doi: 10.1016/j.neubiorev.2009.06.002.

- Van Overwalle F., Baetens K., Mariën P., Vandekerckhove M. (2014). Social cognition and the cerebellum: a meta-analysis of over 350 fMRI studies. NeuroImage, 86, 554–72. doi: 10.1016/j.neuroimage.2013.09.033.

- de Vignemont F., Haggard P. (2008). Action observation and execution: what is shared? Social Neuroscience, 3, 421–33. doi: 10.1080/17470910802045109.

- Wicker B., Keysers C., Plailly J., Royet J., Gallese V., Rizzolatti G. (2003). Both of us disgusted in my insula: the common neural basis of seeing and feeling disgust. Neuron, 40, 655–64. doi: 10.1016/s0896-6273(03)00679-2.

- Williams J.H.G., Waiter G.D., Perra O., Perrett D.I., Whiten A. (2005). An fMRI study of joint attention experience. NeuroImage, 25, 133–40. doi: 10.1016/j.neuroimage.2004.10.047.

- Wolpert D.M., Kawato M. (1998). Multiple paired forward and inverse models for motor control. Neural Networks, 11, 1317–29. doi: 10.1016/S0893-6080(98)00066-5.

- Wolpert D.M., Miall R.C., Kawato M. (1998). Internal models in the cerebellum. Trends in Cognitive Sciences, 2, 338–47. doi: 10.1016/S1364-6613(98)01221-2.

Associated Data
