Abstract

This article aims to provide a synthesis of how brain structures cooperate to accomplish hierarchically organized behaviors, in which low‐level, habitual routines are nested in larger sequences of planned, goal‐directed behavior. The functioning of a connected set of brain structures (prefrontal cortex, hippocampus, striatum, and dopaminergic mesencephalon) is reviewed in relation to two important distinctions: (a) goal‐directed as opposed to habitual behavior and (b) model‐based and model‐free learning. Recent evidence indicates that the orbitomedial prefrontal cortices not only subserve goal‐directed behavior and model‐based learning, but also code the “landscape” (task space) of behaviorally relevant variables. While the hippocampus stands out for its role in coding and memorizing world state representations, it is argued to function in model‐based learning but is not required for coding of action–outcome contingencies, illustrating that goal‐directed behavior is not congruent with model‐based learning. While the dorsolateral and dorsomedial striatum largely conform to the dichotomy between habitual versus goal‐directed behavior, ventral striatal functions go beyond this distinction. Next, we contextualize findings on coding of reward‐prediction errors by ventral tegmental dopamine neurons to suggest a broader role of mesencephalic dopamine cells, viz. in behavioral reactivity and signaling unexpected sensory changes. We hypothesize that goal‐directed behavior is hierarchically organized in interconnected cortico‐basal ganglia loops, where a limbic‐affective prefrontal‐ventral striatal loop controls action selection in a dorsomedial prefrontal–striatal loop, which in turn regulates activity in sensorimotor‐dorsolateral striatal circuits. This structure for behavioral organization requires alignment with mechanisms for memory formation and consolidation.
We propose that frontal corticothalamic circuits form a high‐level loop for memory processing that initiates and temporally organizes nested activities in lower‐level loops, including the hippocampus and the ripple‐associated replay it generates. The evidence on hierarchically organized behavior converges with that on consolidation mechanisms in suggesting a frontal‐to‐caudal directionality in processing control.

Keywords: hippocampus, model‐based learning, nucleus accumbens, prefrontal cortex, striatum, ventral tegmental area

1. INTRODUCTION: WORLD MODELS OF OBSERVABLE AND NONOBSERVABLE VARIABLES

The idea that much of the neocortex is concerned with generating a world model, subserving decision‐making and action, has gained much prominence and support in recent years. Here, the concept of “world model” is taken widely, including not only the representation of objects and their spatiotemporal context but also their statistical and causal relationships. The construction of a world model depends on the inference of the causes of the sensory inputs the brain receives, culminating in conscious perception set in different sensory modalities (Figure 1; Friston, 2010; Lee & Mumford, 2003; Pennartz, 2018). Because the total influx of sensory information is limited and partially incomplete, and may contain conflicting elements, this process amounts to making a “best guess” representation of the agent's sensory world, which includes its own body (Friston, 2010; Gregory, 1980; Lee & Mumford, 2003; Marcel, 1983; Pennartz, 2015). Next to modeling the causes of environmental inputs, which reach the brain via sensory activation, a different component of world‐modeling addresses latent or nonobservable causes of events and situations. Unlike the causes of sensory inputs, this type of cause cannot be directly verified by acute sensory input or by motor behavior through which new inferential information about sensory sources can be gained. In this context, “verification” refers to the process by which a motor action, prompted by a particular sensory input (e.g., seeing a food item at a short distance and reaching out to it), results in sensory feedback through one or more other modalities (e.g., touching and tasting the object), which may or may not turn out to be aligned with the initial visual estimate. Nonobservable causes must instead be derived from long‐term exploration of the environment, including manipulation of its many state variables, resulting in the discovery of causal relationships between relevant elements.
This longer‐term exploration is thought to result in a model laying out how specific hidden causes are related to each other as well as to observable effects. Such an abstract model of nonobservable variables is as important for guiding behavior as perceptual, experiential representations are (Figure 1).

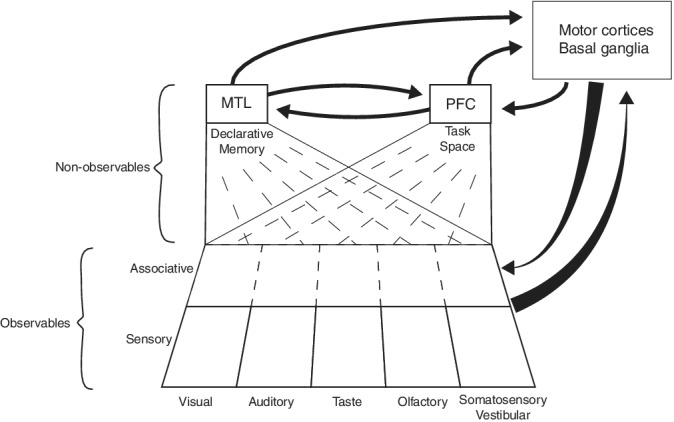

Figure 1.

Hierarchical organization of the mammalian brain defined by the progressive processing of observables and nonobservables and the interaction with brain structures for behavioral control and planning. Primarily unisensory areas are represented at the bottom row, feeding information to associative cortices for multisensory, higher‐order representations of observables such as visible and audible objects. This information is propagated into medial temporal lobe (MTL) and prefrontal (PFC) structures. The memory system of the MTL is involved in computing nonobservables, such as the subject's position in time and space, semantic meaning, name, and history of observed objects. The prefrontal cortex is proposed to encode a task space specifying relationships between task variables such as cues, actions, policies, outcomes, and motivational factors such as those related to hunger or thirst. The interactions between PFC and the motor cortices and basal ganglia are expanded upon in Figure 2. In addition, the motor structures maintain bidirectional interactions with sensory and associative cortical areas. Note that not all known anatomical projections are included (e.g., from motor cortices to MTL). This scheme differs from previous proposals on hierarchical brain organization, for instance that of Fuster (2001), who suggested two parallel hierarchies of sensory and motor processing streams converging upon the PFC as the highest center for integration. Note the following specific aspects of the current proposal: (a) the task space, encoding nonobservable relationships, has a rather abstract nature which is neither purely sensory nor motor; (b) the PFC and MTL, including the hippocampal formation, communicate intensively to plan and guide behavioral control; (c) the PFC and more caudally located motor‐related areas form a system of hierarchically organized loops with the basal ganglia, which are also fed by information from MTL areas such as HPC and amygdala.

A key issue in building world models of hidden causes is that organisms must learn how their goals can best be achieved. The general goal of satisfying the organism's homeostatic variables (in terms of survival and reproduction) can be translated into concrete situational needs such as food, water, sex, and avoidance of pain or stress. A classic paradigm for learning to optimize homeostatic conditions through behavior is reinforcement learning (RL), whereby the agent learns to couple stimuli to those actions resulting in maximal reward and minimal punishment (Barto, 1994; Pennartz, 1996; Sutton & Barto, 1998). During classic RL, stimuli and actions come to be associated with a cached value (i.e., a scalar value), accumulated over a long history of prior experiences, that predicts the value of the outcomes of situations (as defined by, for example, reward magnitude). Consequently, learning processes depend not only on absolute reward, but also on differences between expected and actual reward (Rescorla & Wagner, 1972; Sutton & Barto, 1998).
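As an illustration of how a cached value comes to depend on the difference between expected and actual reward, the following minimal Python sketch applies a Rescorla–Wagner‐style update to a single stimulus. The learning rate `alpha`, the reward sequences, and the function name are illustrative assumptions, not parameters taken from the studies cited above.

```python
from typing import List


def rescorla_wagner(rewards: List[float], alpha: float = 0.1, v0: float = 0.0) -> List[float]:
    """Return the cached value of one stimulus after each trial.

    alpha (learning rate) and v0 (initial value) are hypothetical choices.
    """
    v = v0
    history = []
    for r in rewards:
        delta = r - v        # prediction error: actual minus expected reward
        v += alpha * delta   # the single scalar value moves toward recent outcomes
        history.append(v)
    return history


# 20 rewarded trials followed by 20 unrewarded trials (hypothetical schedule).
values = rescorla_wagner([1.0] * 20 + [0.0] * 20)
print(round(values[19], 3), round(values[-1], 3))
```

Because the stored quantity is a single number, it carries no information about which outcome produced the reward, only how much reward has followed the stimulus on average.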

More recently, behavioral control based on cached value has been contrasted with “model‐based RL”, in which the agent builds an explicit, internal model of its state space, containing specific stimulus–outcome and action–outcome relationships. It is this latter type of sensory‐specific learning that is referred to in the characterization of world models given above. Whereas classic RL guides actions based on a single scalar value reflecting reward history, model‐based RL enables prospective cognition. It endows the agent with the capacity to predict specific future states, usually rendered as a decision tree with expanding ramifications (Daw & Dayan, 2014; Daw, Niv, & Dayan, 2005). Because an agent can rely on its internal model when facing a choice situation, it is able to improvise or respond on the fly, using its general knowledge of relationships between specific stimuli, actions, and outcomes. It can flexibly respond to novel situations based on general knowledge laid down in tree structures and can make specific predictions about the immediate outcomes of each action alternative by associatively “chaining” short‐term predictions. The internal model can be conceived as the core of a larger spatiotemporal model of causal structures of the world and the agent's position in it.

Model‐based learning (MBL) is intimately related to, although arguably different from, the concept of goal‐directed behavior (GDB). GDB does not refer just to any type of “goal‐directed movement” (such as saccades), but to actions which are initiated based on representational content to control behavior (e.g., the belief that an action A is causal in obtaining outcome O; Dickinson, 2012). Experimentally, GDB is assessed by testing whether a learned action is sensitive to outcome value, and whether animals learn that obtaining the outcome is contingent upon the action being performed (Balleine & Dickinson, 1998; Holland, 2004). In case neither of these criteria is met, actions are considered to result from stimulus–response learning followed by habit formation, which renders the execution of actions largely outcome‐insensitive.
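One common way to quantify whether an outcome is contingent on an action is the difference between the probability of the outcome given the action and its probability in the absence of the action (ΔP). The sketch below assumes that operationalization and uses hypothetical trial counts. In a contingency‐degradation procedure, delivering the outcome noncontingently raises the second probability and drives ΔP toward zero, so an animal whose responding is goal‐directed is expected to respond less.

```python
def delta_p(outcomes_with_action: int, trials_with_action: int,
            outcomes_without_action: int, trials_without_action: int) -> float:
    """Action-outcome contingency: P(O | A) - P(O | no A)."""
    p_o_given_a = outcomes_with_action / trials_with_action
    p_o_given_no_a = outcomes_without_action / trials_without_action
    return p_o_given_a - p_o_given_no_a


# Hypothetical counts, e.g., time bins with versus without a lever press.
print(delta_p(20, 100, 0, 100))   # contingent schedule
print(delta_p(20, 100, 20, 100))  # degraded: free outcomes are equally likely
```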

Because classic RL is devoid of a specific causal model, it has also been labeled “model‐free learning” (MFL; Daw et al., 2005; Doya, 1999). The reference to a tree structure (or tree search) in MBL reflects that the associative chains of short‐term predictions are represented by an architecture that unfolds over time as a branching set of possible outcome situations, in which an initial state S0 gives rise to multiple possible future states (e.g., S1 and S2; Daw et al., 2005).
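The tree structure can be made concrete with a small depth‐limited look‐ahead over an explicit internal model. In the hypothetical model below, each state lists the available actions together with the predicted next state and immediate reward. The state names follow S0, S1, and S2 in the text, while the rewards, the discount factor `gamma`, and the function names are assumptions for illustration only.

```python
from typing import Dict, List, Tuple

# Hypothetical internal model: state -> list of (action, predicted next state, immediate reward).
Model = Dict[str, List[Tuple[str, str, float]]]


def lookahead_value(model: Model, state: str, depth: int, gamma: float = 0.9) -> float:
    """Best discounted return reachable from `state` by expanding the tree `depth` steps."""
    branches = model.get(state, [])
    if depth == 0 or not branches:
        return 0.0
    return max(r + gamma * lookahead_value(model, s_next, depth - 1, gamma)
               for _, s_next, r in branches)


def plan(model: Model, state: str, depth: int, gamma: float = 0.9) -> str:
    """Choose the action whose branch has the highest look-ahead value."""
    def branch_value(branch: Tuple[str, str, float]) -> float:
        _, s_next, r = branch
        return r + gamma * lookahead_value(model, s_next, depth - 1, gamma)
    return max(model[state], key=branch_value)[0]


example: Model = {
    "S0": [("left", "S1", 0.0), ("right", "S2", 0.0)],
    "S1": [("press", "S3", 1.0)],   # larger predicted reward via S1
    "S2": [("press", "S4", 0.5)],
}
print(plan(example, "S0", depth=2))  # left
```

Changing a single entry of the internal model (for instance, setting the reward reached via S1 to zero) changes the plan at the next look‐ahead without any new trial‐and‐error learning, which is the kind of on‐the‐fly flexibility attributed to MBL above.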

Several operational indicators for characterizing neural systems involved in MBL versus MFL can be delineated. An MBL system will be sensitive to specific properties of the outcome of an action in a specific context (Daw et al., 2005; Jones et al., 2012). In contrast, MFL depends on a slow accumulation of reward value over time and results in habitual behavior (HB) that is less flexible and less susceptible to readjustment. In MBL, the outcome is not only specified in terms of motivational value, but also by the specific sensory features defining its identity (e.g., apple flavor of a reward; Daw et al., 2005). Whereas for MFL only the value of an outcome matters, internal models have space for coding of sensorily distinct outcomes, even if they have the same value. An animal may be equally motivated to pursue a banana or an apple reward, but can nonetheless distinguish which of these equally valued outcomes will be obtained given an action X in situation Y.
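The following sketch contrasts the two schemes in an outcome‐devaluation scenario with two equally valued but sensorily distinct outcomes. The action names, the outcome values, and the simplification of setting the devalued outcome's value to zero are assumptions. The point is only that a cached scalar per action cannot register which outcome was devalued, whereas values recomputed from outcome identity can.

```python
from typing import Dict, Tuple


def values_after_devaluation(action_to_outcome: Dict[str, str],
                             outcome_value: Dict[str, float],
                             devalued_outcome: str) -> Tuple[Dict[str, float], Dict[str, float]]:
    """Compare model-free and model-based action values after outcome devaluation.

    action_to_outcome: action -> identity of the outcome it produces (the internal model)
    outcome_value:     outcome -> motivational value during training
    """
    # Model-free: one scalar per action, cached during training. It stores how much
    # reward followed the action, not which outcome it was.
    cached = {a: outcome_value[o] for a, o in action_to_outcome.items()}
    # Devaluation (e.g., satiety on one food) changes the outcome's current value
    # without any further action-outcome training trials.
    current_value = {**outcome_value, devalued_outcome: 0.0}
    # Model-based: values are recomputed on the fly from outcome identity.
    model_based = {a: current_value[o] for a, o in action_to_outcome.items()}
    return cached, model_based


cached, model_based = values_after_devaluation(
    {"lever": "apple", "chain": "banana"},   # hypothetical action -> outcome mapping
    {"apple": 1.0, "banana": 1.0},           # equally valued, sensorily distinct outcomes
    devalued_outcome="apple",
)
print(cached)       # {'lever': 1.0, 'chain': 1.0}
print(model_based)  # {'lever': 0.0, 'chain': 1.0}
```

In a full MFL account, the cached value of `lever` would decline only after the action is experienced again together with the devalued outcome.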

Importantly, this does not imply that MFL systems do not distinguish between outcome‐predicting actions or stimuli. Reward (or Q‐) values will depend on the action chosen in a particular state S or context, even though only one scalar value is associated with a state‐action pair (Daw et al., 2005). A second hallmark of MBL is its prospective and on‐the‐fly nature, going beyond the single value‐prediction emitted in MFL (Daw et al., 2005; Doya, 1999). Neurophysiologically, this hallmark can be studied via the neural coding of potential—but specific—choices or paths an animal may undertake or consider for future action, either during ongoing behavior, resting, or sleep.

Replay is the experience‐dependent recurrence of temporally ordered sequences of neural activity characteristic of a preceding behavioral episode. In the hippocampus (HPC), this is expressed by firing patterns of neuronal ensembles coding sequences of sensory‐specific states and is therefore richer, and more compatible with tree search, than would be expected from an integration across reward history such as in MFL. Moreover, replay may subserve both retrospective and prospective cognition, as illustrated in a spatial memory task (Jadhav, Kemere, German, & Frank, 2012). However, in applying these indicators for MBL versus MFL, it should be noted that they may not be uniquely characteristic of MBL but may also be compatible with other computational functions, and therefore do not offer proof of MBL per se.

Despite the close relationships between MBL and GDB, we argue that there are some conceptual and operational differences. First, whereas MBL originated from computational modeling, GDB was conceptualized to explain experimental observations on behavioral phenomena (outcome devaluation and contingency). Second, MBL and MFL are primarily concerned with particular structures and contents of what is learned and stored in memory. Although both types of learning subserve optimal action control, they do not specify how exactly actions are selected based on various neural systems. For instance, whether MBL and MFL systems cooperate and/or compete with each other remains a matter of debate (cf. Pezzulo, van der Meer, Lansink, & Pennartz, 2014). The MBL–MFL categorization does not specify computational mechanisms of directed action control or the precise neural substrates that play a role in them. In this respect, MBL and MFL differ conceptually from GDB versus HB, which are operationally defined via behavioral actions and outcomes, not as types of learning based on a particular structural model (e.g., a tree; Daw & Dayan, 2014). GDB hinges on the immediate importance of outcome value in guiding subsequent behavior and the causal importance of action–outcome relationships. While the concept of GDB does recognize the importance of representational content to control behavior (Dickinson, 2012), it does not specify how sensory‐specific an outcome representation would have to be (regardless of value), whereas sensory specificity is a hallmark of MBL. Third, in contrast to MBL, GDB is not defined by capacities for retrospective or prospective cognition or on‐the‐fly improvisation, although it is certainly compatible with such processes.

In addition to learned behaviors, reflexes and innate behaviors need to be considered in the optimization of homeostatic variables. However, due to space constraints, these largely fall outside the scope of the current review. The same is the case for learning paradigms that do not inherently contain a definition of the outcome (such as associative object‐place learning or socially transmitted food preference) and hence are compatible with either MBL or MFL. The current focus on the MBL–MFL and GDB–HB distinctions does not preempt other, often more detailed proposals for decision‐making; our focus is rather global and involves multiple connected brain systems. As such, it is broadly compatible with more detailed schemes that often zoom in on particular brain structures or systems (e.g., basal ganglia, Pennartz, Groenewegen, & Lopes da Silva, 1994; Redgrave, Prescott, & Gurney, 1999a; Wei & Wang, 2016; lateral intraparietal area [LIP], Shushruth, Mazurek, & Shadlen, 2018; orbitofrontal cortex [OFC], Padoa‐Schioppa & Conen, 2017).

While much remains unknown about the neural substrates of MBL versus MFL, there has been a tendency in the literature to associate MFL with the dorsal and ventral basal ganglia (Houk, Adams, & Barto, 1995; Schultz, 1998) and MBL with prefrontal cortex (PFC; Daw & Dayan, 2014; Daw et al., 2005; Jones et al., 2012). Within neuroscience, temporal difference reinforcement learning (TDRL; Sutton & Barto, 1998) has gained much prominence as an effective and plausible type of MFL. In mapping an actor–critic architecture that implements TDRL onto the basal ganglia, the dorsal striatum would function as “actor,” mediating actions that impinge on the subject's environment to evoke sensory and reinforcing feedback. In contrast, the ventral striatum (VS) would be the “critic” that generates outcome‐predicting values based on the history of reinforcement obtained from these actions (Houk et al., 1995; Schultz, 1998). The ventral tegmental area (VTA) would compute errors between predicted and actual reward (reward prediction errors), and transmit these to the actor module to guide learning of stimulus and action values, resulting in the selection of the most valued action.
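The actor–critic mapping described above can be sketched in a few lines of tabular TDRL. In this illustrative Python example, a hypothetical trial consists of a cue state followed by a choice state in which the agent either presses a lever for reward or waits. The learning rates, discount factor, softmax action selection, and all names are assumptions. Comments indicate which component each variable would correspond to under the proposed mapping onto the basal ganglia, without implying that these structures compute exactly these quantities.

```python
import math
import random
from typing import Dict, Tuple


def softmax_choice(prefs: Dict[str, float], rng: random.Random) -> str:
    """Sample an action with probability proportional to exp(preference)."""
    actions = list(prefs)
    weights = [math.exp(prefs[a]) for a in actions]
    return rng.choices(actions, weights=weights, k=1)[0]


def actor_critic(n_trials: int = 500, alpha: float = 0.1, beta: float = 0.1,
                 gamma: float = 0.9, seed: int = 0) -> Tuple[Dict[str, float], Dict[str, float]]:
    rng = random.Random(seed)
    value = {"cue": 0.0, "choice": 0.0}   # critic: state values ("ventral striatum")
    prefs = {"press": 0.0, "wait": 0.0}   # actor: action preferences ("dorsal striatum")
    reward = {"press": 1.0, "wait": 0.0}  # hypothetical reward schedule
    for _ in range(n_trials):
        # Step 1: cue -> choice state, no reward; the TD error carries value back to the cue.
        delta = 0.0 + gamma * value["choice"] - value["cue"]
        value["cue"] += alpha * delta
        # Step 2: act, receive reward, episode ends (terminal state value = 0).
        action = softmax_choice(prefs, rng)
        delta = reward[action] - value["choice"]  # reward prediction error ("VTA")
        value["choice"] += alpha * delta          # critic update
        prefs[action] += beta * delta             # actor update with the same error signal
    return value, prefs


value, prefs = actor_critic()
print(value, prefs)
```

In this sketch the same prediction error trains both the critic's state values and the actor's preferences, as in standard actor–critic schemes. After training, the cue state acquires positive value even though it is never rewarded directly, because the TD error propagates value back from the choice state.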

Finally, the concept of hierarchical control needs some further introduction in the context of GDB and HB. GDB can usually be decomposed into a sequence of subroutines or skills carried out to reach an end goal, such as an animal locomoting to a lever, pressing the lever, and moving over to a magazine site to ingest and swallow a food pellet. Classically, the analysis of sequential, goal‐directed behavior has supported the tenet that it cannot be understood as a simple chain of stimulus–response associations, but is characterized by a hierarchical organization in which low‐level subroutines are organized to subserve attainment of the end goal (Lashley, 1951; Miller, Galanter, & Pribram, 1960). This analysis was later supported by the notion that actions are not only controlled by cues acutely available to subjects (e.g., a light prompting a rat to press a lever for reward) but also by the wider spatiotemporal context in which actions are performed (e.g., the reward can only be obtained in a particular environment or after a chain of events). Thus, a higher‐order system is needed to consider options for actions in the light of the superordinate context (Badre & Nee, 2018; Desrochers, Chatham, & Badre, 2015). A further, computational argument for hierarchical control, which arose from studies on RL, is that RL models become less effective when coping with many possible actions and world states. This “curse of dimensionality” can be mitigated by grouping small‐scale actions together into more abstract, temporally extended actions (e.g., “perform the lever press sequence” in the example above). This computational problem has been a driving force for developing hierarchical RL models, conforming to the idea that complex behavior comprises both elementary actions and overarching action patterns (Barto & Mahadevan, 2003; Botvinick, 2008; Chiang & Wallis, 2018; O'Reilly & Frank, 2006).
Thus, behavioral and computational arguments support the notion of hierarchical control over behavior, which however does not imply that the underlying neural substrates necessarily have an explicitly hierarchical structure (Cleeremans, Destrebecqz, & Boyer, 1998; Elman, 1990).
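The grouping of primitive actions into temporally extended actions can be sketched as follows. Here an option is simply a named, fixed sequence of primitive actions. This is a deliberate simplification: the option frameworks reviewed by Barto and Mahadevan (2003) also specify an internal policy, the states in which an option may be initiated, and when it terminates. The option names and primitives are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Option:
    """A temporally extended action: a named, fixed sequence of primitive actions."""
    name: str
    primitives: List[str]


# Hypothetical options built around the lever-press example in the text.
LEVER_PRESS = Option("perform lever-press sequence",
                     ["approach lever", "press lever", "go to magazine", "eat pellet"])
GROOM = Option("groom", ["lick paw", "wipe face"])


def execute(plan: List[Option]) -> List[str]:
    """Expand high-level choices into the primitive actions that carry them out."""
    return [step for option in plan for step in option.primitives]


def n_candidate_plans(n_choices: int, n_decisions: int) -> int:
    """Number of distinct choice sequences a controller would have to compare."""
    return n_choices ** n_decisions


# One high-level decision unfolds into four primitive steps.
print(execute([LEVER_PRESS]))
# Choosing each of 4 steps freely among the 6 primitives above: 6 ** 4 = 1296 sequences;
# choosing once between the 2 options: 2 candidates.
print(n_candidate_plans(6, 4), n_candidate_plans(2, 1))
```

The last line illustrates the dimensionality argument: a controller that commits to one option makes a single choice among a few candidates, whereas selecting each primitive step separately multiplies the number of candidate sequences.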

It is in this theoretical and empirical context that we will review the evidence for the variously proposed roles of the PFC, HPC, and connected basal ganglia structures in GDB versus HB and MBL versus MFL, and in hierarchically organized behavior. Besides lesion and other interventional studies, we will emphasize electrophysiological studies on neural coding in these structures in the rodent brain. The review is structured as follows. First, we discuss evidence for roles of particular brain areas (and their main subdivisions) in MBL versus MFL and GDB versus HB, in relation to other functions associated with the same areas. Next, we consider how these structures are jointly organized in interacting cortico‐basal ganglia loops to provide a plausible neural substrate for a hierarchical organization of behavior, in which systems for GDB and MBL assume a higher position than systems for HB and MFL. Finally, we review communication mechanisms in cortico‐basal ganglia systems and ask whether “offline” processing in corticothalamic and hippocampal circuits aligns with the proposed frontal‐to‐caudal direction in behavioral hierarchical control.

2. PREFRONTAL CORTEX: MODEL‐BASED LEARNING, GOAL‐DIRECTED BEHAVIOR, AND THE CODING OF TASK SPACE

2.1. Introductory remarks

The question of whether the PFC is involved in GDB, MBL, or their counterparts is situated within a rich literature providing evidence for its role in a wide range of cognitive functions, including working and long‐term declarative memory, categorization, decision‐making, cognitive flexibility, attentional shifting, outcome valuation, control of emotions, and self‐initiation of behavior (Bari & Robbins, 2013; Eichenbaum, 2017; Fuster, 2001; Goldman‐Rakic, 1996; Miller & Cohen, 2001; Pennartz, van Wingerden, & Vinck, 2011). This diversity of functions may to some extent be attributable to distinct PFC subregions playing different roles. For instance, the medial PFC (mPFC) has been implicated in flexible rule learning and working memory (Euston, Gruber, & McNaughton, 2012; Mulder, Nordquist, Orgut, & Pennartz, 2003; Rich & Shapiro, 2009; Rushworth, Noonan, Boorman, Walton, & Behrens, 2011; Wallis, Anderson, & Miller, 2001), the anterior cingulate cortex in detecting response conflict and decision‐making based on effort and temporal cost–benefit constraints (Cowen, Davis, & Nitz, 2012; Haddon & Killcross, 2006; Hosokawa, Kennerley, Sloan, & Wallis, 2013; Rudebeck, Walton, Smyth, Bannerman, & Rushworth, 2006, but see Walton, Croxson, Behrens, Kennerley, & Rushworth, 2007), and the OFC in flexibly learning stimulus–outcome value (Jones et al., 2012; Ostlund & Balleine, 2007; Schoenbaum, Roesch, Stalnaker, & Takahashi, 2009; van Duuren, Lankelma, & Pennartz, 2008; van Duuren et al., 2009; van Wingerden et al., 2012; but see Stalnaker, Cooch, & Schoenbaum, 2015). Considering the PFC solely in the light of GDB and MBL thus means viewing it through a restricted lens. Below we will treat the roles of OFC and mPFC separately.

2.2. Orbitofrontal cortex: model‐based versus model‐free learning and goal‐directed versus habitual behavior

While regional differences may account for part of the observed diversity in PFC functions, they also raise the question whether all or most of these functions can be subsumed under a common functional denominator, such as MBL as opposed to MFL. It could be argued that many results obtained from lesion and physiological studies, pertaining to decision‐making, cognitive flexibility, and outcome valuation, may all be captured under the common framework of MFL. Conforming to this, the OFC has been proposed to fulfill the role of “critic” based on results from lesion and electrophysiological studies showing that this structure plays a major role in coding and applying stimulus‐value associations, in conjunction with the amygdala (Ostlund & Balleine, 2007; Pennartz, Ito, Verschure, Battaglia, & Robbins, 2011; Schoenbaum, Setlow, Saddoris, & Gallagher, 2003). Single units and ensembles in OFC display learning‐dependent, predecisional responses to odors or other outcome‐predicting stimuli (Schoenbaum et al., 2003; van Wingerden et al., 2012), correlating with the magnitude or probability of upcoming reward (van Duuren et al., 2008; van Duuren et al., 2009). OFC neurons often do not code reward value in a generic manner, but rather in conjunction with a specific sensory stimulus, such as one of several odors that predict reward (Ramus & Eichenbaum, 2000; van Duuren et al., 2008). By itself, however, such sensory specificity is compatible with both MBL and MFL.

However, there is considerable evidence that an MFL‐based scheme does not capture the full complexity of OFC coding. In a sensory preconditioning paradigm using muscimol and baclofen to inactivate the OFC, Jones et al. (2012) found that the OFC is needed for behavior and learning based on inferred, model‐based values, but is not critically involved when cached value is sufficient for decision‐making. This conclusion could be drawn because, in this task, the value of a secondary cue, not paired with reward, had to be inferred from its pairing with a primary, reward‐paired cue (McDannald et al., 2012; Stalnaker et al., 2015). This result does not imply that the OFC is not involved in MFL, but it does allow us to conclude that its role goes further, drawing on inferential representations of environmental structure. This proposal gains further support from the relatively fast, plastic changes in OFC firing responses to olfactory stimuli during reversal learning (van Wingerden, Vinck, Lankelma, & Pennartz, 2010a; cf. Burke, Takahashi, Correll, Brown, & Schoenbaum, 2009). Such fast changes would not be expected if the OFC only supported MFL (cf. Daw et al., 2005). In addition, lesion studies have implicated the OFC in reversal learning (e.g., Rolls, 2000; but see Stalnaker et al., 2015).

Moreover, recent neurophysiological evidence supports a function of the OFC in MBL. When an animal has been trained to run a maze and make wait or skip choices for delayed delivery of differently flavored food pellets at distinct maze sites, a regret condition occurs when the animal commits to a high‐cost choice after having skipped a low‐cost option. In this situation, orbitofrontal (and ventral striatal) ensembles strongly represented the previous low‐cost option after the rat had entered the current, high‐cost zone (Steiner & Redish, 2014). Because this coding pertains to a previously encountered choice in the decision tree and indicates a form of retrospective cognition, this result is in line with the proposed role for OFC in MBL, while not refuting an additional role in MFL.

As concerns the role of OFC in GDB versus HB, evidence for its causal involvement in GDB has been mounting in recent years. In marmoset monkeys, Jackson, Horst, Pears, Robbins, and Roberts (2016) found that OFC lesions caused an insensitivity to degradation of action–outcome contingency, underpinning an important component of GDB (see Valentin, Dickinson, & O'Doherty, 2007, for fMRI results in humans). In mice subjected to a within‐subject lever‐pressing task using reinforcer devaluation, chemogenetic inhibition of OFC disrupted GDB, while optogenetic activation increased this behavior (Gremel & Costa, 2013). In rats trained on instrumental actions in an outcome devaluation paradigm, chemogenetic inhibition of the insular cortex impaired goal‐directed control, while inhibition of the ventrolateral OFC also impaired GDB, albeit only after a switch in instrumental contingencies (reversal training; Parkes et al., 2018).

2.3. Medial prefrontal cortex: model‐based versus model‐free learning and goal‐directed versus habitual behavior

Although some studies refer to the mPFC as a neural substrate for MBL based on arguments from action contingency or devaluation, it is important to determine whether the experimental evidence supports a role specifically in MBL or GDB. While the evidence for GDB is significant (see below), there are some studies pointing to a role in MBL, as opposed to MFL, as well. In a value‐based decision task in which forward planning was contrasted with cache‐based choices acquired through extensive training, Wunderlich, Dayan, and Dolan (2012) found that BOLD activity in the human dorsomedial PFC, along with other structures such as the medial frontal gyrus and precuneus, was enhanced during planning relative to cache‐based trials. Similar studies contrasting MBL versus MFL found BOLD correlates in additional brain structures well connected to mPFC, such as the intraparietal sulcus and lateral PFC (Gläscher, Daw, Dayan, & O'Doherty, 2010) or medial temporal lobe and striatum (Simon & Daw, 2011). These findings are generally in line with models implicating the mPFC in counterfactual reasoning and future planning (Barbey, Krueger, & Grafman, 2009).

Electrophysiological studies highlight that mPFC firing patterns are often highly specific for particular action sequences, run paths, goal sites, and memory strategies (Euston et al., 2012; Hok, Save, Lenck‐Santini, & Poucet, 2005; Ito, Zhang, Witter, Moser, & Moser, 2015; Kargo, Szatmary, & Nitz, 2007; Mulder et al., 2003; Rich & Shapiro, 2009). This sequence specificity is also shown in replay generated by mPFC assemblies during sleep or behavioral pausing (Euston, Tatsuno, & McNaughton, 2007). Evidence for a causal involvement of mPFC in prospective, MBL‐based cognition relates to its influence on vicarious trial and error (VTE) behavior and the associated process of “forward sweeps” (theta sequences) in hippocampal representations generated when animals are at a choice point in a maze. Schmidt, Duin, and Redish (2019) found that disruption of mPFC activity using DREADD manipulation diminished VTE behavior in a spatial foraging task and impaired theta‐sequence generation in area CA1. Altogether, the evidence supports a causal role of mPFC in MBL, although sensory‐specific aspects of outcome coding remain to be tested by single‐unit recordings.

A causal role of mPFC in GDB, as opposed to HB, has been suggested by Balleine and Dickinson (1998). Their paradigm tested for specific actions (lever pressing vs. chain pulling) being coupled to specific outcomes (food pellets vs. starch solution) and showed that the outcome sensitivity of action learning was dependent on mPFC integrity (Balleine & O'Doherty, 2010; Corbit & Balleine, 2003). Lesions of the mediodorsal thalamic nucleus, which is bidirectionally connected with the PFC, also degraded GDB as measured by action–outcome devaluation (Corbit, Muir, & Balleine, 2003). Coutureau and Killcross (2003) suggested that the prelimbic and infralimbic PFC subregions play differential roles in GDB versus HB, as muscimol inactivation of infralimbic cortex allowed animals to reinstate goal‐directed responding following extended training, whereas control‐infused animals continued to show HB (see, however, Shipman, Trask, Bouton, & Green, 2018).

2.4. Further analysis: Prefrontal coding of task space

Apart from the MBL–MFL distinction, additional observations suggest that mPFC function is more comprehensive than simply coding specific action–outcome relationships. Earlier studies noted that all elements relevant to task performance are represented in firing correlates of mPFC neurons, including trial‐initiation stimuli and stimuli coupled to contingent action and reward (e.g., Baeg et al., 2003; Kargo et al., 2007; Mulder et al., 2003). Because this sequential coding of task elements (“tessellation”; Pennartz, van Wingerden, & Vinck, 2011) includes a substantial component of stimulus and context information, the mPFC likely encodes task stages preceding the action–outcome phase. This tessellation has also been found in other cortical and subcortical areas (Allen et al., 2017; Bos et al., 2017; Harvey, Coen, & Tank, 2012; Lansink, Goltstein, Lankelma, & Pennartz, 2010). As regards mPFC, however, lesion and electrophysiological studies are consistent with a causally relevant role in coding behaviorally relevant stimuli and contexts (Birrell & Brown, 2000; Euston et al., 2012; Mulder et al., 2003; Takehara‐Nishiuchi & McNaughton, 2008).

Another indication of a broader repertoire of PFC functions came from studies showing coding of task rules and goals in this structure in macaques and rodents (notably, this coding is found in medial, but also lateral and ventral parts of PFC; Durstewitz, Vittoz, Floresco, & Seamans, 2010; Genovesio, Tsujimoto, & Wise, 2012; Wallis et al., 2001). These rules amount to if–then relationships applicable in a specific condition (e.g., “if stimulus X appears in situation Y, then perform action A; if X appears in situation Z, perform action B”). In a learning paradigm including multiple, serial reversals, De Bruin et al. (2000) found that lidocaine infusions in mPFC transiently impaired the first instance of reversal learning, suggesting that the mPFC is required for the rapid adoption of new task rules when expected outcomes are no longer obtained. These and other findings gave rise to the concept of PFC as coding a “task space” (Verschure, Pennartz, & Pezzulo, 2014). In contrast to geometric spaces, this concept holds that the PFC codes an abstract map of the nonobservable, causal relationships between task elements, as far as relevant for achieving end goals. The coding of task space may result from MBL and acts as an informational reservoir to drive GDB. A major outstanding question is whether the PFC stores task‐space information in its synaptic matrices, or mainly imports the information from other areas and utilizes it to compute its outputs on‐line, thus steering behavior (cf. Jones et al., 2012).
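The if–then rules in the example above can be written as a lookup from stimulus–context pairs to actions. This Python sketch is only a schematic of the rule content, not a model of how PFC ensembles represent it; the default response `withhold`, the function names, and the reversal operation are hypothetical.

```python
from typing import Dict, Tuple

# Hypothetical rule set: (stimulus, context) -> action, mirroring the example in the text.
Rules = Dict[Tuple[str, str], str]


def select_action(rules: Rules, stimulus: str, context: str, default: str = "withhold") -> str:
    """Apply the rule that matches this stimulus in this context, if any."""
    return rules.get((stimulus, context), default)


def reverse_rules(rules: Rules, a: str, b: str) -> Rules:
    """Swap actions a and b in every rule, as after a reversal of task contingencies."""
    swap = {a: b, b: a}
    return {key: swap.get(action, action) for key, action in rules.items()}


rules: Rules = {("X", "Y"): "A", ("X", "Z"): "B"}
print(select_action(rules, "X", "Y"))                           # A
print(select_action(reverse_rules(rules, "A", "B"), "X", "Y"))  # B
```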

In summary, current evidence indicates that coding in the orbitofrontal and medial prefrontal cortices goes well beyond MFL and may be more adequately captured by MBL, while not excluding value coding according to cache‐based schemes. Similarly, experimental evidence supports a role for mPFC, OFC, and adjacent areas (e.g., insular cortex) in GDB. In addition, the functions of these cortices are not restricted to action–outcome relationships, but rather comprise the full “landscape” (task space) of stimuli, contexts, rules, actions, and outcomes as far as relevant for reaching goals. Within this task space, additional functions going beyond the MBL–MFL distinction are expressed by PFC ensembles, such as working memory and attentional set‐shifting.

3. HIPPOCAMPUS: MODEL‐BASED LEARNING, GOAL‐DIRECTED BEHAVIOR, AND THE MAPPING OF WORLD‐STATE VARIABLES

3.1. Introductory remarks

Hippocampal function has been the subject of excellent recent reviews (e.g., Buzsáki & Moser, 2013; Eichenbaum, Sauvage, Fortin, Komorowski, & Lipton, 2012); therefore, we will restrict this section mainly to aspects relevant for the MBL–MFL and GDB–HB distinctions. First, we recall that hippocampal lesions primarily cause deficits in types of cognition not specifically associated with RL, viz. in spatial memory (Handelmann & Olton, 1981; Morris, Anderson, Lynch, & Baudry, 1986) and other aspects of episodic memory (Corkin, 2002; Milner, Squire, & Kandel, 1998; Tulving, 1983). In rodents, hippocampal lesions also affect working memory and time‐sensitive forms of conditioning (e.g., trace conditioning; Meck, Church, & Olton, 2013; Weiss & Disterhoft, 2015); working memory is defined here as a short‐term form of memory that holds information and allows its manipulation for subsequent behavioral decisions. There is little evidence implicating the HPC in processes at the core of RL, such as Pavlovian conditioning (without a delay component) and learning of singular stimulus–response associations (S–R learning), consistent with the notion that, in general, procedural memory and classical conditioning are not affected by hippocampal lesions.

Before reviewing hippocampal functions in MBL, GDB, and their counterparts, we will first examine the range of parameters that the HPC codes, such as a subject's spatial location in its environment (O'Keefe & Dostrovsky, 1971; O'Keefe & Nadel, 1978; Wilson & McNaughton, 1993). This range has recently been broadened to include the coding of time (Eichenbaum, 2014; Kraus, Robinson 2nd, White, Eichenbaum, & Hasselmo, 2013) and other behaviorally relevant, parametric variables. Depending on task design, hippocampal cells code the time elapsed between task‐relevant events such as odor and object presentations, or the time spent during treadmill running (MacDonald, Carrow, Place, & Eichenbaum, 2013; MacDonald, Lepage, Eden, & Eichenbaum, 2011; cf. Pastalkova, Itskov, Amarasingham, & Buzsáki, 2008). Although the HPC was already proposed to represent nonspatial parameters in the 1980s (e.g., Meck, 1988; Weiss & Disterhoft, 2015), this concept has recently gained strength from studies reporting hippocampal coding of temporal sequences during task execution. A causal role of the HPC in representing sequences of nonspatial events (i.e., odor choices) was indicated by a lesion study (Agster, Fortin, & Eichenbaum, 2002). Allen, Salz, McKenzie, and Fortin (2016) used an olfactory task in which rats were required to sample a sequence of odors and to identify each presented odor as either part of the sequence or “out of sequence.” This study showed that dorsal CA1 cells code for multiple stimulus parameters, including stimulus identity, stimulus rank, and rank–identity associations.

A recent study by Aronov, Nevers, and Tank (2017) showed that hippocampal coding is not limited to unimodal representation of one parametric variable such as spatial position. Rats were trained to manipulate a joystick that modulated tone frequency and to reach a target zone of frequencies to obtain reward; under these conditions, the authors observed the emergence of hippocampal “auditory maps.” In this task, the firing of CA1 neurons mapped onto the entire range of presented sound frequencies, similarly to place cells during spatial tasks, with subsets of cells showing preferred sound frequencies. In addition, a recent study showed that sequences of egocentric body movements can also be coded by the HPC. In a star‐shaped maze, where spatial navigation was based either on external landmark configurations (place memory) or on memorized sequences of body turns, mouse hippocampal CA1 ensembles showed correlates not only of place, but also of specific components in the sequence of body movements (Cabral et al., 2014). In mice in which NMDA receptors on CA1 pyramidal cells were genetically deleted, this body‐sequence‐based mapping was selectively degraded, paralleled by a behavioral deficit in memory for longer motor sequences. Thus, depending on task requirements, the HPC can code a broad range of parameters, which, however, does not preclude a role in MFL.

3.2. Role in model‐based versus model‐free learning and goal‐directed versus habitual behavior

In general, the rich episodic nature of hippocampal coding, as demonstrated by lesion studies and electrophysiological recordings, supports a role in MBL, while not excluding an additional function in MFL. More specific evidence for a hippocampal role in MBL has come from ensemble recording studies revealing a relationship between “forward sweeps” representing potential future trajectories and VTE behavior displayed by rats at decision points in a maze (see above; Johnson & Redish, 2007; Wikenheiser & Redish, 2015). Excitotoxic HPC lesions were shown to affect VTE behavior in a spatial memory task, whereas VTE behavior during a visual discrimination task was not affected. In the spatial memory task, sham‐lesioned animals showed more VTE behavior on trials before the reward location had been identified than on trials performed after it had been located, whereas this difference was not found in lesioned animals (Bett et al., 2012). These and other forms of prospective neural sequences (Pfeiffer & Foster, 2013) have been hypothesized to serve as an internal, sampling‐based mechanism for computing and evaluating potential paths toward goal sites, thereby optimizing decision‐making (Penny, Zeidman, & Burgess, 2013; Pezzulo et al., 2014; Redish, 2016; Stoianov, Pennartz, Lansink, & Pezzulo, 2018).
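The idea of sampling‐based evaluation of candidate paths can be illustrated with a toy model. The Python sketch below is a minimal illustration under stated assumptions, not the mechanism proposed in the cited work: it assumes a hypothetical internal model of a small maze (`MAZE`), hypothetical reward expectations at the arm ends (`REWARD`), and a uniform random‐walk rollout policy. For each option available at the decision point, the agent “sweeps” forward through imagined trajectories, averages the reward it encounters, and then chooses the option with the highest estimate.

```python
import random

# Hypothetical internal model of a maze: each state lists the states reachable in one step.
MAZE = {
    "choice": ["left_arm", "right_arm"],
    "left_arm": ["left_end"],
    "right_arm": ["right_a", "right_b"],
    "left_end": [],
    "right_a": [],
    "right_b": [],
}
# Hypothetical reward expected at each terminal site.
REWARD = {"left_end": 1.0, "right_a": 0.0, "right_b": 0.5}


def rollout(state, model, reward, rng, max_steps=10):
    """Simulate one imagined trajectory with a random-walk policy; return the reward collected."""
    total = 0.0
    for _ in range(max_steps):
        total += reward.get(state, 0.0)
        successors = model[state]
        if not successors:  # terminal site reached
            break
        state = rng.choice(successors)
    return total


def evaluate_options(start, model, reward, n_sweeps=200, seed=0):
    """Estimate the value of each first step from `start` by averaging sampled forward sweeps."""
    rng = random.Random(seed)
    values = {}
    for first_step in model[start]:
        samples = [rollout(first_step, model, reward, rng) for _ in range(n_sweeps)]
        values[first_step] = sum(samples) / n_sweeps
    return values


values = evaluate_options("choice", MAZE, REWARD)
best = max(values, key=values.get)
print(values, best)
```

Here the left arm always leads to the single best site, whereas the right arm branches into a poor and a mediocre site, so the sampled sweeps favor the left arm. The uniform rollout policy was chosen only for simplicity; a planner could instead bias its sweeps toward the current behavioral policy.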

As concerns hippocampal functions in GDB versus HB, Corbit and Balleine (2000) showed that electrolytic hippocampal lesions had no effect on the sensitivity of rat instrumental performance to outcome devaluation, but did impair sensitivity to degradation of action–outcome contingencies. At first, this suggested that the HPC is important for representing action–outcome causality, but in a follow‐up study using excitotoxic lesions, this effect was attributed to the entorhinal cortex and its efferents to the retrohippocampal area (Corbit, Ostlund, & Balleine, 2002).

Despite this lack of conclusive evidence, there are physiological indications that hippocampal processing is compatible with a role in GDB. In rats navigating a Y‐maze, guided by nine spatially distributed cue lights predicting reward, Lansink et al. (2016) investigated theta and beta (15–20 Hz) rhythmicity in firing patterns and local field potentials (LFPs) in area CA1. Importantly, rats were not only prompted by a light cue to approach these goal sites, but also visited them without a cue, by way of habitual “checking.” Theta and beta‐band rhythmicities were augmented during cue‐guided goal approaches, relative to habitual approaches.

3.3. Further analysis: Hippocampal representation of world states

Given the evidence for a richer role of HPC than is captured by spatial coding, the question arises how this structure is distinct from other areas coding a similarly varied repertoire of behaviorally relevant variables, such as PFC. While HPC lesions do not disrupt behavior in general, they specifically impair those behaviors depending on episodic memory, that is, memory for objects and events, their spatial locations, temporal order, and times of occurrence (Eichenbaum et al., 2012; Eichenbaum, Dudchenko, Wood, Shapiro, & Tanila, 1999; Fortin, Agster, & Eichenbaum, 2002; Kesner, Hunsaker, & Warthen, 2008; Meck et al., 2013; Ranganath, 2010; Scoville & Milner, 1957). A key proposition holds that the HPC does not encode a “task space” as proposed for PFC, but a representation of world states (including the subject's body state; cf. Verschure et al., 2014). At first glance, the HPC and PFC seem to code many of the same variables, but we argue that this similarity is superficial. For instance, a collection of hippocampal place cells codes for every location occupied by an animal in space, including relevant as well as irrelevant sites, whereas prefrontal ensembles more prominently code task‐relevant elements, such as goals and larger spatial or temporal task segments leading up to goals (Genovesio et al., 2012; Hok et al., 2005; Mulder et al., 2003; Rich & Shapiro, 2009). This evidence is corroborated by lesion and pharmacological studies more generally implicating the PFC in executive functions (Dalley, Cardinal, & Robbins, 2004; Gläscher et al., 2012; Miller & Cohen, 2001).

This concept anchors the HPC more firmly to the memory of sensory, motor, and spatiotemporal variables and derived nonobservable variables such as allocentric position in space, and anchors the PFC to mapping the latent structure of relationships between task elements (in particular their causal relationships such as their instrumental role in achieving goals). Support for these differential roles comes from studies using mazes or other spatial environments, where dorsal CA1 neurons show small place fields scattered across the environment (Bos et al., 2017; Davidson, Kloosterman, & Wilson, 2009), whereas OFC, mPFC (and the interconnected perirhinal cortex, Bos et al., 2017) predominantly code large, task‐bound stretches of space, demarcated by preceding and consecutive task stages requiring switches in behavior (Rich & Shapiro, 2009).

To conclude, hippocampal function is characterized not only by the coding of state variables, but also by its operation as a sequence generator and as a repository for pointers to store and retrieve elements of episodic memory (cf. Teyler & DiScenna, 1986). Through this retrieval, relational information can be quickly utilized to plan and organize GDB. The apparent contrast between hippocampal lesion studies—indicating no causal role in GDB—and neurophysiological studies may be explained by assuming that the HPC strongly supports GDB via its episodic memory capacities and its internal generation of prospective sequences, in particular by providing contextualized sensory and motor information to GDB systems. However, the HPC is not causally required to code action–outcome relationships themselves, which may be uniquely or redundantly coded by other brain areas such as mPFC. In this sense, a lack of evidence for a causal role in GDB does not imply a lack of involvement in MBL. This conclusion is in line with the rationale to maintain a conceptual distinction between GDB and MBL (see section 1).

4. STRIATUM: MODEL‐BASED LEARNING, GOAL‐DIRECTED BEHAVIOR, AND THE CONVERSION FROM STATE TO ACTION INFORMATION

4.1. Introductory remarks

There are several, mutually consistent grounds to argue that the notion of the dorsal and ventral striatum recalled above—as “Actor” and “Critic” in MFL—is not sufficient to capture the diversity and complexity of processing within this structure, if the concept is valid at all (Pennartz, Ito, et al., 2011; van der Meer & Redish, 2011b). Consistent with the anatomically similar structure of cortico‐basal ganglia–thalamic loops involving dorsoventral but also mediolateral gradients in the striatum (Voorn, Vanderschuren, Groenewegen, Robbins, & Pennartz, 2004), lesion studies suggested that striatal sectors differ in the domain of information processing and learning, rather than by an actor–critic type of division (Pennartz, Ito, et al., 2011). The ventromedial striatum (nucleus accumbens shell, receiving substantial ventral hippocampal CA1‐subicular inputs; Groenewegen, Vermeulen‐Van der Zee, te Kortschot, & Witter, 1987) has been implicated in space–outcome and context–outcome associations (Ito, Robbins, Pennartz, & Everitt, 2008), whereas the ventrolateral sector (nucleus accumbens core, receiving strong amygdala inputs) is more clearly involved in cue‐outcome learning and Pavlovian‐to‐instrumental transfer (PIT; Cardinal, Parkinson, Hall, & Everitt, 2002; Hall, Parkinson, Connor, Dickinson, & Everitt, 2001). Both shell and core receive converging afferents from mPFC and OFC, albeit in a subregion‐specific manner (Pennartz et al., 1994; Pennartz, Ito, et al., 2011; Voorn et al., 2004). Similarly, the dorsal striatum has been subdivided into dorsomedial and dorsolateral regions (DMS and DLS) based on different anatomic input–output relationships and distinct functionalities. A comparison of electrophysiological studies examining neural coding in different striatal regions suggests prominent representation of cue value in rat VS and primate caudate (e.g., Kim & Hikosaka, 2013; Lansink et al., 2012; Roitman, Wheeler, & Carelli, 2005). 
Furthermore, information on task conditions (e.g., fixed versus free‐choice trials) and state value is strongly represented in rat VS (Ito & Doya, 2015; Lansink et al., 2012; Lansink et al., 2016; Schultz, Apicella, Scarnati, & Ljungberg, 1992), whereas action value is more prominently coded in dorsal striatum (Ito & Doya, 2015; Samejima, Ueda, Doya, & Kimura, 2005). Within dorsal striatum, the lateral and medial sectors display different firing‐rate dynamics and task correlates during learning (Thorn, Atallah, Howe, & Graybiel, 2010).
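For readers less familiar with the scheme questioned here, the classic tabular actor–critic arrangement can be sketched in a few lines of Python. In this minimal, hypothetical version, the “critic” (the role once attributed to VS) learns the value V of a single start state, and its TD error also trains the “actor” (the role once attributed to dorsal striatum), which holds action preferences that a softmax converts into choice probabilities. The two‐option task, reward probabilities, learning rates, and inverse temperature `beta` are illustrative assumptions. The split between a learned state value and learned action preferences loosely parallels the contrast between state‐value coding in VS and action‐value coding in dorsal striatum described above.

```python
import math
import random


def softmax(prefs, beta):
    """Convert action preferences into choice probabilities (numerically stable)."""
    m = max(prefs)
    exps = [math.exp(beta * (p - m)) for p in prefs]
    z = sum(exps)
    return [e / z for e in exps]


def run_actor_critic(reward_probs=(0.8, 0.2), n_trials=2000,
                     alpha_critic=0.1, alpha_actor=0.1, beta=3.0, seed=1):
    """Hypothetical one-step choice task: each trial is a single action followed by reward or not."""
    rng = random.Random(seed)
    value = 0.0                          # critic: value of the start state
    prefs = [0.0] * len(reward_probs)    # actor: preference for each action
    for _ in range(n_trials):
        probs = softmax(prefs, beta)
        action = rng.choices(range(len(prefs)), weights=probs)[0]
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        # The trial ends after one action, so the TD error is r + gamma * 0 - V(s).
        delta = reward - value
        value += alpha_critic * delta        # critic update
        prefs[action] += alpha_actor * delta  # actor update for the chosen action only
    return value, prefs, softmax(prefs, beta)


value, prefs, probs = run_actor_critic()
print(round(value, 2), [round(p, 2) for p in probs])
```

The same TD error drives both components, which is precisely the coupling that makes the actor–critic mapping onto striatal sectors attractive and, as argued above, too narrow.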

4.2. Dorsal striatum: model‐based versus model‐free learning and goal‐directed versus habitual behavior

The involvement of DMS and DLS in MBL versus MFL has been addressed by various methods. In a human fMRI study, Wunderlich et al. (2012) found evidence for MBL‐related activity in the anterior caudate in the value‐based forward planning task referred to above, whereas the putamen was implicated in value representations acquired through extensive, MFL‐related training. Using a spatial planning task, Simon and Daw (2011) found BOLD correlates of plan‐based predicted values in VS and also in putamen, arguing for a more widespread role of MBL in the striatum than previously thought. In an ensemble recording study, prospective coding was tested in a multiple T‐maze task where rats navigated for reward, while recordings were made from HPC, dorsal, and ventral striatum. In contrast to the HPC and VS, the dorsal striatum did not show prospective representations of path options and reward, but displayed more pronounced coding of task‐related actions, consistent with a stronger role of dorsal striatum in MFL (van der Meer, Johnson, Schmitzer‐Torbert, & Redish, 2010). In this study, DMS and DLS were not separately assessed. In an odor discrimination task for rats, neural coding of outcome specificity (identity), as dissociated from generic value, was surprisingly found in both DMS and DLS (Stalnaker, Calhoon, Ogawa, Roesch, & Schoenbaum, 2010).

The causal involvement of the dorsal striatum in GDB versus HB has been addressed in lesion studies implicating the DMS in action–outcome learning (Hart, Bradfield, & Balleine, 2018; Hart, Leung, & Balleine, 2014; Yin, Ostlund, Knowlton, & Balleine, 2005), in contrast to the DLS, which has been implicated in stimulus–response coupling and habit formation (Gremel & Costa, 2013; Pennartz et al., 2009; Smith & Graybiel, 2013; Yin, Knowlton, & Balleine, 2004). This dichotomy is supported by electrophysiological recordings reporting greater engagement of the DMS than of the DLS during goal‐directed actions (Gremel & Costa, 2013).

4.3. Ventral striatum: model‐based versus model‐free learning and goal‐directed versus habitual behavior

Evidence for a role of the VS in MBL—in addition to MFL—has come from several lesion and electrophysiological studies. Excitotoxic VS lesions impaired both MBL and MFL in a Pavlovian blocking and unblocking paradigm, indicating the causal importance of VS in coding specific features of expected outcomes (McDannald, Lucantonio, Burke, Niv, & Schoenbaum, 2011). Moreover, VS neurons respond differentially to stimuli that predict sensorily distinct, but equally or similarly valued rewards (Cooch et al., 2015; Gmaz, Carmichael, & van der Meer, 2018). In a task where rats ran a triangular track with distinct outcomes at spatially separated reward sites, Lansink et al. (2008) found cells that were either generally responsive to every site and every type of reward, or responded to reward at only one specific site. Following the hypothesis of distributed ensembles within the VS exerting different functions (Pennartz et al., 1994), these results indicate the presence of both generally responsive and item‐specific reward‐predicting cell populations in VS. Further evidence for a VS function in MBL comes from the finding that VS cells emit spikes coding for expected reward along with look‐ahead sequences in HPC (Pezzulo et al., 2014; van der Meer & Redish, 2009).
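Blocking is classically described by the Rescorla–Wagner rule, in which all cues present on a trial share a single prediction error. The minimal Python sketch below (learning rate, trial numbers, and reward magnitudes are illustrative assumptions) shows that a cue X added to an already predictive cue A acquires almost no associative strength, whereas it does acquire strength when the reward is increased (value unblocking). Because this rule tracks one scalar quantity, it cannot by itself produce unblocking when only the sensory identity of an equally valued outcome changes; capturing that requires predictions about specific outcome features, which is why identity‐unblocking designs are used to probe model‐based learning.

```python
def rescorla_wagner(trials, alpha=0.3):
    """Rescorla-Wagner learning: all cues present on a trial share one prediction error.

    trials: list of (cues, outcome) pairs, where cues is a set of cue names and
    outcome is a scalar reward magnitude. Returns a dict of cue -> associative strength.
    """
    w = {}
    for cues, outcome in trials:
        prediction = sum(w.get(c, 0.0) for c in cues)  # summed prediction of all present cues
        delta = outcome - prediction                   # shared prediction error
        for c in cues:
            w[c] = w.get(c, 0.0) + alpha * delta
    return w


pretrain = [({"A"}, 1.0)] * 50  # phase 1: cue A alone predicts reward
w_block = rescorla_wagner(pretrain + [({"A", "X"}, 1.0)] * 50)    # same reward: X is blocked
w_control = rescorla_wagner([({"B", "X"}, 1.0)] * 50)            # no pretraining of B
w_upshift = rescorla_wagner(pretrain + [({"A", "X"}, 2.0)] * 50)  # larger reward: value unblocking
print(round(w_block["X"], 3), round(w_control["X"], 3), round(w_upshift["X"], 3))
```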

As regards GDB versus HB, the VS appears to be more involved with the motivational control of behavioral performance rather than with selecting goal‐directed or habitual actions themselves (Hart et al., 2014). This is supported by excitotoxic lesion studies using PIT paradigms, showing that VS lesions disrupted gain modulation of instrumental action by Pavlovian stimuli, whereas the sensitivity to outcome devaluation or degradation of instrumental contingency was not affected, in contrast to DMS lesions (de Borchgrave, Rawlins, Dickinson, & Balleine, 2002; Hart et al., 2014).

4.4. Further analysis: Ventral striatal transformation of spatial and cue information into action strength

The discussion on VS involvement in MBL versus MFL refines the original hypothesis of the VS as a “critic,” but does little to explain the role of the VS in regulating and invigorating motor activity (Mogenson, Jones, & Yim, 1980; Robbins & Everitt, 1996). Indeed, it would be too simple to regard the striatum as a structure receiving domain‐specific information from neocortical, hippocampal, and other sources, labeling this information with reward value, and passing it on to downstream structures for further action selection. If anything in the earlier literature on VS functions stands out, it is its role in gain modulation of specific behaviors, as mediated via VS‐to‐VTA output, ventral pallidal‐thalamic feedback loops and targets in the lateral hypothalamus, mesencephalon and pedunculopontine region (Groenewegen, Berendse, & Haber, 1993; Inglis & Winn, 1995; Kelley, 2004; Mogenson et al., 1980; Pennartz et al., 1994). In a Y‐maze where rats used path integration to identify which of three chambers was most often rewarded (Ito et al., 2008), Lansink et al. (2012) observed that VS cells, indeed, do not simply “copy” hippocampal place‐cell information and associate this with value information. Whereas CA1 neurons displayed regular place‐cell mapping in this environment, VS firing did not correlate with place, but rather with action phases of the task sequence, spanning from cue light onset to goal site approach and reward consumption. Thus, the VS incorporates spatial information to encode valued actions which are appropriate to the animal's current location and context. These and other findings (Roesch, Singh, Brown, Mullins, & Schoenbaum, 2009) indicate an integration of value and motor variables in the VS.

In conclusion, the functional roles of the VS and DMS go beyond those of the “actor” and “critic” in classic RL schemes. A role of the DMS in GDB is indicated by lesion studies of action–outcome learning, whereas the DLS may predominantly mediate habit formation, while not ruling out additional functions in MBL (cf. Stalnaker et al., 2010). Evidence on Pavlovian blocking and prospective firing activity indicates a function of the VS in MBL along with the DMS, raising the question in which functions the VS and DMS differ. Here we recall that neural substrates for GDB and MBL may not be identical, and whereas the DMS is implicated in GDB, the VS mediates motivational control and invigoration of behaviors—but not the selection of goal‐directed actions per se. Conversely, prospective coding of future reward, in support of MBL, has been found in the VS but not in the dorsal striatum (where DMS and DLS were not separately assessed). Thus, the DMS and VS are functionally dissociable in multiple ways.

5. DOPAMINERGIC MESENCEPHALON: REWARD PREDICTION ERROR, MODEL‐BASED LEARNING, AND BEHAVIORAL REACTIVITY

5.1. Introductory remarks

Schultz and colleagues famously demonstrated that ventral mesencephalic dopamine (DA) neurons in the macaque brain signal unexpected reward, as well as unexpected cues that predict subsequent reward (Mirenowicz & Schultz, 1994; Schultz, 2016; Schultz, Dayan, & Montague, 1997; Schultz, Stauffer, & Lak, 2017; Watabe‐Uchida, Eshel, & Uchida, 2017). The resemblance between this phenomenology and the operation of units coding reward prediction errors in TDRL models is so striking that this algorithm is often assumed to be implemented by circuits involving the dopaminergic mesencephalon. Here we briefly review to what extent classic TDRL (as a particular instantiation of MFL) is generally supported by experimental evidence and whether DA signaling may also convey MBL‐ and GDB‐related information. This is followed by a broader formulation of DA function as subserving behavioral reactivity.
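In TDRL, the reward prediction error at each time step is δ(t) = r(t+1) + γ·V(t+1) − V(t), where V is the learned value of a state and γ a discount factor. The Python sketch below simulates a hypothetical Pavlovian task (cue at step 2, reward at step 6) to show the phenomenology described above: on the first trial δ peaks at reward delivery, whereas after training it has moved to cue onset and the fully predicted reward evokes no error. The tapped‐delay‐line representation of time since cue onset, the fixed zero value of pre‐cue states (cue onset is treated as unpredictable), and all parameter values are modeling assumptions.

```python
def td_pavlovian(n_trials=300, n_steps=10, cue_step=2, reward_step=6,
                 alpha=0.2, gamma=1.0):
    """Return the per-step TD errors of every trial in a simulated cue-reward task."""
    # V[k] is the learned value of the state k steps after cue onset
    # (a tapped-delay-line representation of elapsed time since the cue).
    V = [0.0] * (n_steps - cue_step)
    history = []
    for _ in range(n_trials):
        deltas = [0.0] * n_steps  # deltas[t]: error signalled on arrival at step t
        for t in range(n_steps - 1):
            # Cue onset is unpredictable, so pre-cue states keep a value of zero.
            v_now = V[t - cue_step] if t >= cue_step else 0.0
            v_next = V[t + 1 - cue_step] if t + 1 >= cue_step else 0.0
            r_next = 1.0 if t + 1 == reward_step else 0.0
            delta = r_next + gamma * v_next - v_now
            deltas[t + 1] = delta
            if t >= cue_step:
                V[t - cue_step] += alpha * delta  # only post-cue states learn
        history.append(deltas)
    return history


history = td_pavlovian()
first, last = history[0], history[-1]
print("first trial:", [round(d, 2) for d in first])
print("last trial: ", [round(d, 2) for d in last])
```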

5.2. Dopaminergic mesencephalon: model‐based versus model‐free learning and goal‐directed versus habitual behavior

In addition to macaque studies, recordings in rodents have tended to validate the reward prediction error hypothesis of DA neurons, as implied by TDRL models (Eshel et al., 2015; Takahashi, Langdon, Niv, & Schoenbaum, 2016) and thus support a role for DA neurons in MFL. The architecture of neural circuits feeding inputs into the VTA and processing its outputs is at least globally compatible with the requirements TDRL models pose on anatomic implementations (Berendse, Groenewegen, & Lohman, 1992; Eshel et al., 2015; Geisler, Derst, Veh, & Zahm, 2007; Menegas et al., 2015; Pennartz, 1996; Sesack & Grace, 2010; Watabe‐Uchida et al., 2017). However, the “dopaminergic” model implementation of TDRL still faces a number of challenges before it can be accepted as an established fact.

First, the TDRL model assumes that dopamine acts on its presynaptic and postsynaptic targets so as to flip a molecular switch between synaptic strengthening (long‐term potentiation, LTP; upon positive reward prediction errors) and weakening (long‐term depression, LTD; upon negative reward prediction errors). Although several studies, mostly on dorsal striatum, have confirmed this assumption (Fisher et al., 2017; Pawlak & Kerr, 2008; Reynolds, Hyland, & Wickens, 2001; Shen, Flajolet, Greengard, & Surmeier, 2008), a multitude of other DA effects (or lack of effects) on striatal synaptic plasticity remains to be accounted for (e.g., Calabresi, Picconi, Tozzi, & Di Filippo, 2007; Hansen & Manahan‐Vaughan, 2014; Pennartz, Ameerun, Groenewegen, & Lopes da Silva, 1993; Thomas, Malenka, & Bonci, 2000).
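The “molecular switch” assumption is often formalized as a three‐factor learning rule, in which the sign of the reward prediction error δ determines whether coincident presynaptic and postsynaptic activity strengthens or weakens a synapse. The Python sketch below assumes a simple multiplicative rule with a hypothetical learning rate `eta` and hypothetical weight bounds; it deliberately ignores factors such as spike timing and receptor subtypes, which is part of why the experimental picture summarized above is more complex.

```python
def three_factor_update(w, pre, post, delta, eta=0.05, w_min=0.0, w_max=1.0):
    """Hypothetical dopamine-gated plasticity: weight change = eta * delta * pre * post.

    Positive delta (better than expected) -> potentiation (LTP-like);
    negative delta (worse than expected) -> depression (LTD-like);
    delta == 0 or no coincident pre/post activity -> no change.
    The result is clipped to [w_min, w_max].
    """
    dw = eta * delta * pre * post
    return min(w_max, max(w_min, w + dw))


ltp = three_factor_update(0.5, pre=1.0, post=1.0, delta=+1.0)
ltd = three_factor_update(0.5, pre=1.0, post=1.0, delta=-1.0)
no_error = three_factor_update(0.5, pre=1.0, post=1.0, delta=0.0)
no_coincidence = three_factor_update(0.5, pre=0.0, post=1.0, delta=+1.0)
print(ltp, ltd, no_error, no_coincidence)
```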

A second challenge to a dopaminergic implementation of TDRL is posed by the limited scope of reward‐dependent learning behaviors that are blocked or attenuated by DA receptor antagonists (Berridge, 2007; Hagan, Alpert, Morris, & Iversen, 1983; Pennartz, 1996). Some effects on behavior, initially attributed to learning impairments, may be due to sensory, motivational, motor, and/or planning deficiencies (Denenberg, Kim, & Palmiter, 2004; Hagan et al., 1983; Pennartz, 1996; Robbins, Cador, Taylor, & Everitt, 1989). Nonetheless, dopamine signaling in VS is at least required for acquisition of conditioned reward approach, even when controlling for motor deficits (Darvas, Wunsch, Gibbs, & Palmiter, 2014; Tsai et al., 2009).

Third, Redgrave, Prescott, and Gurney (1999b) noted that DA neurons may fire too early after stimulus onset to allow the subject to identify the stimulus as being reward‐predictive. Recently, Schultz and coworkers (Schultz, 2016; Schultz et al., 2017) distinguished an early and a late dopaminergic component in response to stimuli, reflecting the physical impact of stimulus detection and value prediction error, respectively. The early component raises two issues concerning its function and consequences. First, because this component is value‐independent, it is more compatible with a role of DA cells in early behavioral reactions to salient environmental changes, prompting, for example, saccades toward the object. Second, the physical impact of any salient stimulus should raise dopamine release via the early component and, according to TDRL, would thereby induce a synaptic modification as if a “positive surprise” signal had been present, with potentially dysfunctional consequences.
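The last concern can be illustrated with a toy calculation. Purely for illustration, the Python sketch below assumes that the early and late components add linearly and that their sum would gate plasticity through the same sign‐dependent multiplicative rule assumed in TDRL‐style models; the gain `early_gain` and learning rate `eta` are hypothetical. For a salient but neutral stimulus, the value prediction error alone produces no weight change, whereas the full response produces potentiation, as if a positive surprise had occurred.

```python
def dopamine_components(salience, stimulus_value, expected_value, early_gain=0.5):
    """Split a hypothetical phasic response into an early and a late component."""
    early = early_gain * salience            # value-independent: stimulus detection
    late = stimulus_value - expected_value   # value prediction error
    return early, late


def plasticity(signal, eta=0.1, pre=1.0, post=1.0):
    """Sign-dependent multiplicative rule, as assumed in TDRL-style models."""
    return eta * signal * pre * post


# A salient but neutral stimulus: clearly detected, predicts nothing, and nothing was expected.
early, late = dopamine_components(salience=1.0, stimulus_value=0.0, expected_value=0.0)
dw_late_only = plasticity(late)       # learning driven by the value error alone
dw_full = plasticity(early + late)    # learning driven by the whole response
print(dw_late_only, dw_full)
```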

Coming back to the MBL–MFL distinction, the above findings do not rule out a role for dopamine neurons in MBL. Indeed, DA neurons signal not only errors in value prediction but also errors in the prediction of sensory features of expected reward (Takahashi et al., 2017; cf. Bromberg‐Martin, Matsumoto, & Hikosaka, 2010). Assuming that these sensory prediction errors can guide adaptive behavior, this places the DA system in the domain of both MBL and MFL.
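The distinction between value and sensory prediction errors can be made explicit with a toy example in which an expected reward is replaced by one of equal value but different flavor. In the Python sketch below, the outcome names and the one‐hot identity code are hypothetical: a purely value‐based error is zero, whereas an identity‐based error vector is not, so only a learner that represents outcome features can register such a change.

```python
# Hypothetical outcome descriptions: scalar value plus a one-hot sensory identity code.
OUTCOMES = {
    "flavor_A_pellet": {"value": 1.0, "identity": (1.0, 0.0)},
    "flavor_B_pellet": {"value": 1.0, "identity": (0.0, 1.0)},
}


def value_prediction_error(expected, received):
    """Scalar error: received value minus expected value."""
    return OUTCOMES[received]["value"] - OUTCOMES[expected]["value"]


def identity_prediction_error(expected, received):
    """Vector error over sensory features: received features minus expected features."""
    exp_id = OUTCOMES[expected]["identity"]
    rec_id = OUTCOMES[received]["identity"]
    return tuple(r - e for r, e in zip(rec_id, exp_id))


vpe = value_prediction_error("flavor_A_pellet", "flavor_B_pellet")
ipe = identity_prediction_error("flavor_A_pellet", "flavor_B_pellet")
print(vpe, ipe)
```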

As regards GDB versus HB, different DA functions have been studied in relation to distinct target areas of the mesencephalic dopaminergic projections, because complete loss of DA function leads to severe motor incapacitation and starvation (Darvas et al., 2014). These studies reveal that DA can support both GDB and habit formation, depending on the function of the target area in each type of behavior. For instance, bilateral 6‐hydroxydopamine (6‐OHDA) lesions of the nigrostriatal pathway mainly targeting DLS preserved sensitivity to reward devaluation, that is, responding remained goal‐directed, indicating a DA function in habit formation (Faure, Haberland, Conde, & El Massioui, 2005; consistent with evidence that potentiating DA release by amphetamine accelerates habit formation; Nordquist et al., 2007). In contrast, pretraining 6‐OHDA lesions of prelimbic (but not infralimbic) cortex caused a deficit in adapting instrumental responses to changes in action–outcome contingency (Naneix, Marchand, Di Scala, Pape, & Coutureau, 2009), consistent with the role of prelimbic cortex in GDB. The same study also reported that instrumental responses remained sensitive to outcome devaluation under the same treatment, showing a dissociation between two hallmarks of GDB and thus suggesting that GDB is not mediated by a unitary mechanism. Yet a different study using DA receptor stimulation found a contrasting result: Whereas infralimbic infusions of DA amplified goal‐directed responding in an outcome‐devaluation paradigm, prelimbic manipulation had no such effect (Hitchcott, Quinn, & Taylor, 2007). Whether the differences from the Naneix et al. study are attributable to the overall balance of DA receptor functions affected by DA infusion, to differences in devaluation procedures, or to other factors remains to be investigated.

5.3. Further analysis: Dopamine and behavioral reactivity

In addition to these unresolved questions, another enigma still remains: The relationship between DA neurons as reward prediction error coding units vis‐à‐vis the well‐known role of dopamine in movement initiation, posture regulation and other aspects of motivated motor behavior, as affected in Parkinson's disease. This seemingly dual role has been explained by the hypothesis that healthy motor behaviors are maintained by low‐level tonic DA neuron firing activity, whereas learning effects would be mediated by burst activity, resulting in strong DA release (Schultz, 2016). However, phasic dopamine neuron firing has also been associated with motor action, at least in a general sense (Schultz et al., 2017). This activation is associated with global limb and head movements or with small‐scale movements such as licking and chewing (DeLong, Crutcher, & Georgopoulos, 1983; Schultz, Ruffieux, & Aebischer, 1983). Movement‐related changes in DA cell firing have been somewhat ignored recently because of an apparent lack of consistency, and their predominant absence during simpler tasks such as Pavlovian conditioning, but spontaneity in complex behaviors may be a significant aspect of DA function, compromised as it is in Parkinson's disease. While many aspects of DA function in the MBL–MFL and GDB–HB distinctions await further testing, another critical question thus remains, namely which properties of graded DA‐release mechanisms determine the putative boundary between motor‐related versus reward prediction error related effects on DA release, and whether in fact any “hard” boundary can be delineated.

An alternative hypothesis holds that the basic function of dopamine is to enable behavioral reactivity to salient, unexpected sensory input in general—visual, auditory, proprioceptive, or otherwise—which aligns better with the motor deficits observed in Parkinson's disease (cf. Pennartz, 1996; Redgrave et al., 1999b; Robbins & Everitt, 1982; Rodriguez‐Oroz et al., 2009; Salamone, Cousins, & Bucher, 1994). As in the reward prediction error hypothesis, this account holds that DA neurons signal prediction errors, but these are of a more generalized nature, as they include both motivational (value‐related) errors and errors in sensorimotor predictions. The underlying rationale is that, functionally, surprising sensory changes require further exploratory, proactive and reactive movements, such as saccades, grabbing movements, locomotion, and postural adjustments.

Evidence for the behavioral reactivity hypothesis of DA comes, first, from the “early” dopamine response component and the movement‐related DA firing responses already mentioned, and, second, from studies reporting that laterally located mesencephalic DA neurons, mostly in the Substantia Nigra pars compacta (SNPC), are sensitive to salient and unexpected, but neutral sensory stimuli, whereas VTA cells respond to unexpected reward (Bromberg‐Martin et al., 2010; Pennartz, Ito, et al., 2011). Indeed, the SNPC receives predominantly excitatory inputs from the somatosensory and motor cortices, whereas the VTA is heavily innervated by the lateral hypothalamus (Watabe‐Uchida, Zhu, Ogawa, Vamanrao, & Uchida, 2012). Third, studies monitoring extracellular DA levels in striatum using fast‐scan cyclic voltammetry have noted marked deviations in DA signaling from transient, reward prediction error related firing of DA cells, emphasizing correlates and causal functions in the biasing of action selection (Howard, Li, Geddes, & Jin, 2017), reward expectancy, response invigoration, and the estimation of value versus costs of actions and internal operations (Berke, 2018).

The behavioral reactivity hypothesis is somewhat akin to the incentive–salience hypothesis (Berridge, 2007) in its emphasis on the motivational (“wanting”) function of the DA system, although the latter holds that dopaminergic mechanisms attribute incentive salience specifically to reward‐related stimuli, not to salient or unexpected stimuli in general. The behavioral reactivity account fits well with another critical point touching upon the scope of dopamine in general brain function: The expression of reward prediction error signaling by DA cells may just be the tip of an iceberg. The processing of unexpected reward‐ and sensory‐related signals is so essential for the survival and reproduction of animals that a wealth of brain areas is equipped with mechanisms to react to unexpected cues, contexts, movement, and outcomes, interdigitating with the specialized functions of each area (cf. Pennartz, 1997). For instance, neurons in layers II–III of mouse visual cortex code sensory prediction errors (Keller, Bonhoeffer, & Hubener, 2012; cf. Bastos et al., 2012; Leinweber, Ward, Sobczak, Attinger, & Keller, 2017). Furthermore, brain‐wide fMRI studies suggest that reinforcement‐related signals may be ubiquitous throughout the cortex (Serences, 2008; Vickery, Chun, & Lee, 2011). Even neurons in primary sensory cortex show reward‐expectancy correlates and reward‐dependent learning effects on sensory tuning and retinotopic mapping (Goltstein, Coffey, Roelfsema, & Pennartz, 2013; Goltstein, Meijer, & Pennartz, 2018; Shuler & Bear, 2006; cf. Bao, Chan, & Merzenich, 2001). Thus, coding of prediction errors may be so ubiquitous across the brain that an exclusive attribution of this function to DA cells might be the result of “searching under the streetlight.”

In conclusion, the original evidence for reward prediction error coding by DA neurons in the VTA remains firmly established; this does not imply, however, that the mesolimbic DA system functions exclusively to mediate MFL through TDRL. Evidence for responses to sensory stimuli, area‐specific dopamine effects on GDB, and motivational correlates of extracellularly recorded dopamine levels suggests a broader role for DA neurons, pointing to a more general functional repertoire that subserves MBL, goal‐directed actions, and the overarching concept of behavioral reactivity.
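To make concrete what the canonical reward‐prediction‐error account claims, the following minimal Python sketch runs textbook TD(0) learning on a hypothetical trial consisting of a cue state followed by a delay state that ends in reward. It rests on simplifying assumptions (a deterministic chain, no eligibility traces, cue onset treated as unpredictable, and arbitrarily chosen learning rate `alpha` and discount `gamma`) and is not a model of DA neurons. Early in training the error δ = r + γV(s′) − V(s) occurs at reward delivery; after training it has moved to cue onset and has vanished at the now predicted reward.

```python
def run_trial(values, rewards, alpha=0.2, gamma=1.0):
    """Run one trial of TD(0) learning along a fixed chain of states.

    values[i]  -- current value estimate V(s_i), updated in place
    rewards[i] -- reward received on leaving state s_i
    Returns (cue_delta, deltas): the prediction error at cue onset and the
    prediction error on each subsequent transition.
    """
    # Cue onset is treated as unpredictable: the preceding inter-trial state
    # has a value fixed at 0, so the error at cue onset is gamma * V(cue).
    cue_delta = gamma * values[0] - 0.0
    deltas = []
    for i in range(len(values)):
        # The state after the last one is terminal, with value 0.
        v_next = values[i + 1] if i + 1 < len(values) else 0.0
        delta = rewards[i] + gamma * v_next - values[i]  # reward prediction error
        values[i] += alpha * delta                       # TD(0) value update
        deltas.append(delta)
    return cue_delta, deltas


values = [0.0, 0.0]   # V(cue), V(delay): nothing learned yet
rewards = [0.0, 1.0]  # reward arrives at the end of the delay
first = run_trial(values, rewards)
for _ in range(200):
    last = run_trial(values, rewards)

print("first trial:", first)  # error at reward, none at cue
print("last trial:", last)    # error at cue, (almost) none at reward
```

This transfer of the error signal from reward to cue is the MFL signature referred to above; the sketch says nothing about the additional functions attributed to DA cells.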

6. HIERARCHICAL CONTROL OF BEHAVIOR THROUGH TOPOGRAPHICALLY ORGANIZED CORTICO‐BASAL GANGLIA‐THALAMIC LOOPS

6.1. Introductory remarks

In this section, we discuss in more detail how the brain areas reviewed individually above interact to accomplish hierarchically organized GDB. How do brain systems for global action policies and long‐term planning of sequential behavior control subroutines that are carried out as short‐lasting, elemental sensorimotor skills (Barto & Mahadevan, 2003; Botvinick, 2008; Dezfouli, Lingawi, & Balleine, 2014; Pezzulo et al., 2014)? The evidence reviewed so far is consistent, first, with the engagement of HPC, PFC, and the ventromedial striatal region in behaviors requiring MBL (while not excluding MFL), whereas the DLS is more clearly linked to MFL (not excluding MBL); dopamine neurons may participate in both MBL and MFL. Second, the evidence suggests that GDB and MBL, despite their differences in provenance and conceptualization, share a substantial number of neural substrates, at least when these are defined at the coarse level of structures or regions. Therefore, below we will regularly refer jointly to neural substrates mediating both GDB and MBL.

6.2. Hierarchical organization of cortico‐basal ganglia loops

Previous proposals on hierarchically organized behavior mostly focused on the PFC, particularly its dorsolateral regions. A topographic organization was distinguished within the frontal cortex, with higher levels of behavioral control associated with rostral PFC areas and lower levels with caudal regions (Azuar et al., 2014; Botvinick, 2008; Koechlin & Hyafil, 2007; but see Badre & Nee, 2018). According to the concept of hierarchical RL (Barto & Mahadevan, 2003; Botvinick, 2008; O'Reilly & Frank, 2006), low‐level behaviors or subroutines are temporally organized by nesting them in higher‐level representations of more global behaviors, a process proposed to be mediated by the PFC (Botvinick, 2008; Botvinick, Niv, & Barto, 2009). Here, we emphasize that hierarchical behavioral control involves more than the hierarchical organization of classic RL alone, tied as the latter is to MFL. The question therefore arises how GDB and MBL can be fitted into this framework.
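The idea of subroutines nested in higher‐level behaviors can be sketched in the style of the “options” used in hierarchical RL: each option bundles a low‐level policy with a termination condition, and a higher level strings options together into a larger behavior. In this minimal Python sketch the task (a one‐dimensional track with a food cup and a lever), the option names, and the hard‐coded high‐level plan are all hypothetical. No learning takes place, so the sketch shows only the nesting of control, not how such a hierarchy is acquired.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Option:
    """A temporally extended subroutine: a low-level policy plus a stop rule."""
    name: str
    policy: Callable[[int], int]      # state -> primitive action (-1 or +1 step)
    terminate: Callable[[int], bool]  # True when the subroutine is finished


def run_option(option: Option, state: int, trace: List[Tuple[str, int]]) -> int:
    """Execute primitive actions until the option's termination condition holds.

    Assumes the termination condition is reachable under the option's policy.
    """
    while not option.terminate(state):
        state += option.policy(state)
        trace.append((option.name, state))
    return state


def run_plan(plan: List[Option], state: int):
    """Higher level: execute a sequence of options nested in one larger behavior."""
    trace: List[Tuple[str, int]] = []
    for option in plan:
        state = run_option(option, state, trace)
    return state, trace


# Hypothetical task: a 1-D track with a food cup at position 0 and a lever at 3.
go_to_lever = Option("go_to_lever", policy=lambda s: +1, terminate=lambda s: s == 3)
go_to_cup = Option("go_to_cup", policy=lambda s: -1, terminate=lambda s: s == 0)

final_state, trace = run_plan([go_to_lever, go_to_cup], state=0)
for step in trace:
    print(step)
```

The higher level only chooses which subroutine runs next; each step inside a subroutine is handled by that subroutine's own policy, which is the sense in which low‐level behaviors are nested in a more global one.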

While in hierarchical RL top‐down control may be implemented by a rostrocaudal direction of connectivity in the PFC, it is less clear how the basal ganglia, the HPC, and associated structures such as the amygdala can be incorporated, and how sensorimotor subroutines are integrated into hierarchically organized behavior. In both primates and rodents, multiple cortico‐basal ganglia–thalamic loops have been identified (Alexander, Crutcher, & DeLong, 1990; Groenewegen, Berendse, Wolters, & Lohman, 1990; Groenewegen & Uylings, 2000; Voorn et al., 2004). Classically, the loops distinguished in primates are (a) a “limbic”‐affective loop including the anterior cingulate cortex and medial OFC; (b) dorsolateral and lateral orbitofrontal loops subserving cognitive functions such as working memory and attentional control; (c) an oculomotor loop comprising the frontal eye field and supplementary eye field; and (d) a motor loop comprising the motor cortex, supplementary motor area, and premotor cortex (Alexander et al., 1990). In rodents, a similar set of loops is distinguished: (a) a limbic‐affective loop that originates primarily in orbitofrontal and ventral medial prefrontal areas (here abbreviated as omPFC), which mainly project to the VS (core and shell); (b) a more exteroceptively and cognitively oriented circuit originating in dorsal‐medial prefrontal areas (dmPFC; mainly dorsal prelimbic cortex, anterior cingulate cortex, and area Fr2), which project to the DMS; and (c) a motor loop involving sensorimotor cortical areas projecting to the DLS (Flaherty & Graybiel, 1995; Groenewegen et al., 1990; Voorn et al., 2004). Although these loops can be subdivided into finer sub‐loops, the partition most relevant here is that of the rodent PFC into omPFC and dmPFC (Groenewegen & Uylings, 2000; Voorn et al., 2004).

We propose that the limbic‐affective loop, including omPFC‐VS circuits, occupies the highest position in a hierarchy of loops (Figure 2). The next level is the loop comprising dmPFC and DMS, which mediates cognitive operations (e.g., working memory and attentional set‐shifting) and short‐term GDB. In defining these loops, we primarily follow rodent prefrontal organization, noting that rat dmPFC bears functional similarities to primate dorsolateral PFC (Uylings, Groenewegen, & Kolb, 2003) but also shares anatomical features with primate orbitomedial PFC (Heilbronner, Rodriguez‐Romaguera, Quirk, Groenewegen, & Haber, 2016; Preuss, 1995; Wise, 2008). In humans, the frontopolar cortex may contribute to omPFC‐like rather than to dmPFC‐like circuits (Gläscher et al., 2012).

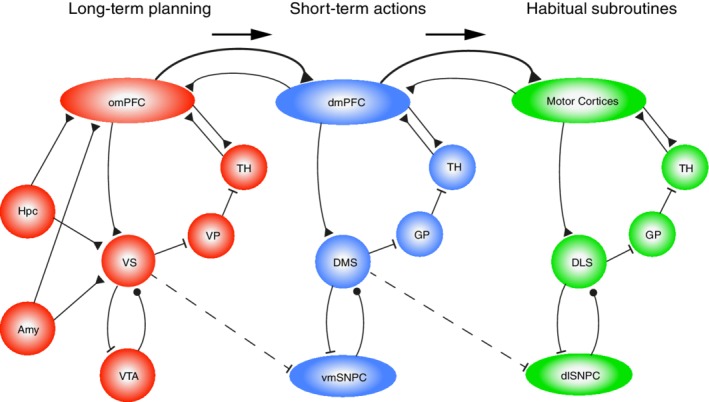

Figure 2.

Interactions between neural systems for (a) long‐term goal‐setting and planning, (b) short‐term actions, and (c) executing habitual subroutines. The leftmost system (in red) has orbitofrontal and ventromedial prefrontal structures (omPFC) and ventral striatum (VS) at its core, forming a limbic‐affective cortico‐basal ganglia loop that is proposed to mediate long‐term goal setting and planning to obtain outcomes desired in the long term. This loop is supported by episodic memory information retrieved via the hippocampus (Hpc) and positively or negatively valued information from the amygdaloid complex (Amy). OmPFC, dmPFC, and motor cortices are reciprocally connected, yet in terms of hierarchical control, it is proposed that omPFC exerts top‐down control over dmPFC, which in turn controls the motor cortices (symbolized by arrows and stronger projections going rightward in the scheme). The dmPFC forms a loop (in blue) with the dorsomedial striatum (DMS), whereas the motor cortices form more caudally located loops (in green) with the dorsolateral striatum (DLS; convergence from sensory cortices onto DLS is not shown here). The hierarchical control from omPFC to dmPFC and motor cortical loops is reinforced by inhibitory outputs from striatal structures to parts of the dopaminergic midbrain specifically involved in these respective loops (VS inhibits ventromedial substantia nigra pars compacta, vmSNPC; DMS inhibits the dorsolateral substantia nigra pars compacta, dlSNPC). Excitatory, glutamatergic connections are represented by black triangular terminals; inhibitory GABAergic connections by flat endings; modulatory dopaminergic projections by black circular terminals. Note that this scheme primarily follows rodent brain organization, but that it can be applied to primates with some modifications. VP, ventral pallidum; GP, globus pallidus; TH, thalamus; VTA, ventral tegmental area

These two high‐level loops may control lower‐level, sensorimotor loops (Figure 2, rightmost module) not only through direct cortico‐cortical top‐down connections but also via selection mechanisms in the basal ganglia. These mechanisms may comprise lateral (or recurrent) inhibition between striatal medium‐sized spiny neurons (Burke, Rotstein, & Alvarez, 2017; Plenz, 2003; Taverna, van Dongen, Groenewegen, & Pennartz, 2004; van Dongen et al., 2005) and other, interneuron‐dependent inhibitory operations in the striatal–pallidal “funnel” (Bar‐Gad, Morris, & Bergman, 2003; Taverna, Canciani, & Pennartz, 2007). By “funnel” we mean that the number of cells decreases dramatically when descending along the cortical–striatal–pallidal stages of each loop. This reduction is accompanied by an increased convergence of anatomical projections onto a small pallidal volume (Bar‐Gad et al., 2003; Pennartz et al., 1994). Thus, between‐loop interactions may also occur at the level of the globus pallidus and its interactions with the subthalamic nucleus and substantia nigra pars reticulata (Bugaysen, Bar‐Gad, & Korngreen, 2013; Sadek, Magill, & Bolam, 2007; Sato, Lavallee, Levesque, & Parent, 2000), or between thalamic subregions receiving basal ganglia outputs.
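How lateral inhibition can implement selection among competing channels can be illustrated with a generic firing‐rate model. The minimal Python sketch below deliberately abstracts away from real striatal anatomy and physiology: it integrates three linear‐threshold units that inhibit each other with a common weight `w`, and the inputs and weights are hypothetical. With strong inhibition (here w = 1.5) only the most strongly driven unit remains active, whereas weak inhibition (w = 0.2) leaves all units active at graded levels.

```python
def select(inputs, w, dt=0.1, steps=500):
    """Euler-integrate rate units coupled by all-to-all lateral inhibition.

    dr_i/dt = -r_i + max(0, I_i - w * sum_{j != i} r_j)
    All rates start at 0 and are updated synchronously.
    """
    r = [0.0] * len(inputs)
    for _ in range(steps):
        total = sum(r)
        # Rectified net drive: external input minus inhibition from the other units.
        drive = [max(0.0, inp - w * (total - ri)) for inp, ri in zip(inputs, r)]
        r = [ri + dt * (-ri + d) for ri, d in zip(r, drive)]
    return r


inputs = [1.0, 0.8, 0.5]  # hypothetical drive to three competing channels
strong = select(inputs, w=1.5)
weak = select(inputs, w=0.2)
print("strong inhibition:", [round(x, 3) for x in strong])
print("weak inhibition:  ", [round(x, 3) for x in weak])
```

Both outcomes follow from the fixed points of the equations: with w = 1.5 the winning unit settles at its own input (1.0) and silences the others, while with w = 0.2 all three units settle at positive rates ordered by their inputs.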

The outputs from the limbic‐affective loop are proposed to steer processing in more caudal and dorsal loops, viz. the dmPFC‐to‐DMS loop and the sensorimotor cortices‐to‐DLS loop. As a result, short‐term GDB and habitual subroutines come under the control of higher‐level mechanisms for long‐term goal setting and planning. The DLS conforms to this layout, even though its topographic location in the striatum is not strictly “caudal.”

Placing the limbic‐affective (omPFC) loop at a higher level of control than the more “cognitive”, exteroceptive and action–outcome‐oriented (dmPFC) loop may seem surprising, but is motivated by evidence that omPFC areas are heavily involved in achieving the organism's long‐term goals, associated as these are with homeostatic variables and basic motivational drives (i.e., to satisfy hunger, thirst, sex, to avoid pain, etc.; Carmichael & Price, 1996; Critchley & Rolls, 1996; Groenewegen & Uylings, 2000; Pennartz et al., 1994). In a strong functional‐evolutionary sense, cognitive operations such as working memory, short‐term action choices, and attention are subordinate to achieving long‐term motivational goals. This high‐level position is further supported by evidence from ventral and medial PFC lesions in humans, pointing to a general dysregulation of value‐based decision‐making (Gläscher et al., 2012), and by the key role of omPFC‐VS circuits in balancing behavioral policies on long‐term versus short‐term time scales (borne out by patterns of impulsivity and preference for delayed reward; Cardinal, Pennicott, Sugathapala, Robbins, & Everitt, 2001; Jimura, Chushak, & Braver, 2013; Mar, Walker, Theobald, Eagle, & Robbins, 2011). Moreover, omPFC areas have strong connections with the hypothalamus and various brain stem centers implicated in the regulation of basic homeostasis and autonomic functions (Carmichael & Price, 1996; Groenewegen & Uylings, 2000). This proposal aligns well with the role of omPFC and VS in MBL and GDB. Thus, the emotional connotations ascribed to orbitomedial prefrontal structures do not conflict with their high position in the behavioral control hierarchy; on the contrary, they support it. That said, reciprocal control relations between omPFC and dmPFC likely exist. Further arguments for this hierarchical arrangement are given below.
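The balance between long‐term and short‐term time scales is often quantified with a delay‐discounting function. The following Python sketch assumes the hyperbolic form V = A / (1 + kD), a common descriptive model in the delay‐discounting literature; it is not claimed to be the model used in the studies cited above, and the amounts, delays, and discount rates k are hypothetical. A higher k, corresponding to more impulsive choice, flips the preference from a large delayed reward to a small immediate one.

```python
def discounted_value(amount, delay, k):
    """Hyperbolic delay discounting: V = A / (1 + k * D)."""
    return amount / (1.0 + k * delay)


def choose(k, small=(1.0, 0.0), large=(4.0, 10.0)):
    """Compare a small immediate and a large delayed reward, each given as (amount, delay)."""
    v_small = discounted_value(small[0], small[1], k)
    v_large = discounted_value(large[0], large[1], k)
    return "large-delayed" if v_large > v_small else "small-immediate"


for k in (0.1, 0.5):  # hypothetical discount rates; larger k = steeper discounting
    print(k, choose(k))
```

With k = 0.1 the delayed reward is worth 4 / (1 + 1) = 2 and is preferred; with k = 0.5 it is worth only 4 / (1 + 5) ≈ 0.67, less than the immediate reward of 1.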

6.3. Hippocampal, amygdala, and dopaminergic outputs to cortico‐basal ganglia loops

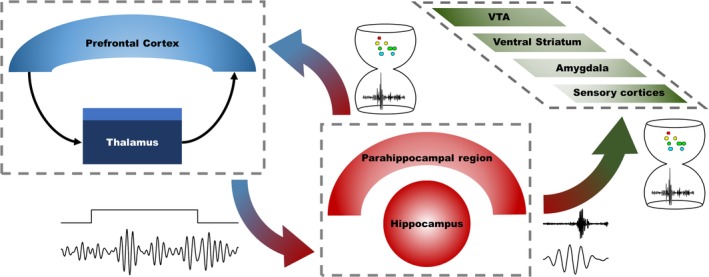

How may the HPC, amygdala, and dopaminergic mesencephalon fit into this proposal? With its capacities for mapping world‐state variables and MBL, the hippocampal formation projects to the omPFC and VS, whereas hippocampal–subicular output to the DLS and its associated sensorimotor loop is much scarcer (Groenewegen et al., 1987). In this way, task‐space information in PFC is enriched with hippocampal information on world states and relationships between state variables, helping to identify expected outcomes and to select which task rules and goals apply to the agent's current environmental context during planning and execution of GDB (see Wikenheiser, Marrero‐Garcia, & Schoenbaum, 2017, for a causal influence of ventral subiculum on OFC coding of expected outcome). This proposal may resolve the apparent discrepancy noted earlier, namely that the HPC is involved in MBL but not required for GDB per se (see section on Hippocampus): by supplying the PFC with world‐state information acquired through MBL, the HPC may facilitate prefrontal mechanisms for implementing GDB without being a neural substrate that is necessarily and causally required for GDB itself (as defined in the Introduction). That Corbit et al. (2002) failed to observe any effect of excitotoxic hippocampal lesions on instrumental performance may thus be explained by assuming that world‐state knowledge provided by the HPC is not causally required to solve their particular task (viz. pressing two levers, each coupled to delivery of a unique food outcome, followed by procedures for testing outcome devaluation and degradation of instrumental contingency).

Conversely, the PFC sends signals to the medial temporal lobe, including the HPC and parahippocampal regions, that query the stored database of world states and stimulate memory retrieval and internal simulations of potential future scenarios, as expressed by replay and theta look‐ahead sequences (Pezzulo et al., 2014; Redish, 2016). How this query‐and‐retrieval process is implemented is unknown, although optogenetic–electrophysiological studies (Ito et al., 2015; Schmidt et al., 2019) and the effects of PFC lesions on CA1 place field stability (Kyd & Bilkey, 2003) indicate that PFC output influences hippocampal spatial information processing.
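As an abstract illustration of what an internal simulation of potential future scenarios computes, the following Python sketch evaluates candidate actions by depth‐limited look‐ahead over a stored world model. The maze, state names, rewards, and discount factor are hypothetical, and the sketch makes no claim about how PFC queries or hippocampal replay and theta sequences would implement such a search.

```python
# Hypothetical learned world model: state -> {action: (next_state, reward)}.
MODEL = {
    "start": {"left": ("corridor_L", 0.0), "right": ("corridor_R", 0.0)},
    "corridor_L": {"forward": ("food", 1.0)},
    "corridor_R": {"forward": ("empty", 0.0)},
    "food": {},   # terminal states have no outgoing actions
    "empty": {},
}


def lookahead(model, state, depth, gamma=0.9):
    """Best discounted return reachable from `state` within `depth` simulated steps."""
    if depth == 0 or not model[state]:
        return 0.0
    return max(r + gamma * lookahead(model, s_next, depth - 1, gamma)
               for s_next, r in model[state].values())


def plan(model, state, depth=3, gamma=0.9):
    """Score each available action by simulating its consequences, then pick the best."""
    scores = {a: r + gamma * lookahead(model, s_next, depth - 1, gamma)
              for a, (s_next, r) in model[state].items()}
    return max(scores, key=scores.get), scores


best, scores = plan(MODEL, "start")
print(best, scores)
```

Here only the simulated path through `corridor_L` reaches food, so "left" receives the higher score (0.9 versus 0.0) without the agent having to act in the environment.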