Abstract

The Chieti Affective Action Videos (CAAV) is a new database designed for the experimental study of emotions in psychology. The main goal of the CAAV is to provide a wide range of standardized stimuli based on two emotional dimensions: valence and arousal. The CAAV is the first database to present emotional stimuli through videos of actions filmed and developed specifically for experimental research. A total of 444 young adults were recruited to evaluate this database, which consists of a subset of 90 actions filmed in four versions, for a total of 360 videos. The four versions differ in the gender of the main actor (male or female) and in the perspective from which each action was shot (first-person or third-person). The CAAV validation procedure showed that the stimuli are distributed across different levels of valence and arousal. The material provided by the CAAV can be used in future experimental studies investigating the role of emotions, perception, attention, and memory, as well as differences related to gender and perspective taking.

Subject terms: Human behaviour, Attention, Perception

| Metadata field | Value |
|---|---|
| Measurement(s) | emotion/affect behavior trait • valence • arousal domain measurement |
| Technology Type(s) | video |
| Factor Type(s) | age • sex |
| Sample Characteristic - Organism | Homo sapiens |

Machine-accessible metadata file describing the reported data: 10.6084/m9.figshare.11448795

Background & Summary

In psychology, a wide range of procedures and experimental materials has been used to elicit emotions in laboratory settings1–5. Studies have shown that emotional movie clips offer additional advantages over simpler stimuli6–8. Movie clips present more complex visual stimuli than words and images. Besides being highly capable of engaging and capturing the observer’s attention9, they increase ecological validity by simulating a real-life setting. Furthermore, meta-analyses of emotion induction have shown that movie clips appear to be among the most effective ways to elicit emotions10. Despite these advantages in ecological validity and emotional activation, it has been shown that variation in camera angle and light exposure within the same movie clip may lead the observer to perceive some real-life situations as unusual or unrealistic11. Schaefer et al.11 have also pointed out that most movie clips activate both the auditory and the visual systems, and that they depict multiple actions at different emotional levels. The multimodal features of most video clips could therefore mix different emotional experiences for the observer, confounding both the evaluation of the individual actions and their later recollection. Furthermore, the gender of the main actor and the point of view (POV) of the shot are not always strictly controlled. The first-person POV allows the observer to take on the perspective of the actor, while in the third-person POV the observer assumes an external role, watching the action happen. Based on this rationale, the CAAV (Chieti Affective Action Videos) was created with the aim of solving, or at least mitigating, some of the issues that affect currently used databases, most of which rely on pre-existing video clips. 
Specifically, the CAAV was developed to control for the following critical aspects: the camera angle, the number of stimuli included in the scene, the number of actions presented, the gender of the actors, and the POV with which the actions are carried out.

The most innovative aspect of the CAAV is that it is the first database to present emotional stimuli through videos of actions filmed and developed specifically for experimental research. With regard to emotion, the development of the CAAV database is founded on the Dimensional Model of Emotions. This model assumes that emotions can be classified along fundamental dimensions that go beyond the different types of emotional responses12,13. This dimensional approach to the study of emotions is characterized by the circumplex model14, which establishes valence and arousal as the two dimensions to be considered when evaluating an emotion. The valence dimension indicates whether the observer likes or dislikes an event, an object, or a situation. The arousal dimension refers to the level of psychological activation induced by an emotional stimulus. According to the circumplex model, different discrete emotions can be organized as linear combinations of different levels of valence and arousal (e.g. high/low). In the CAAV database, an example of an action with a high level of valence is “Finding cash”, while one with a low level of valence is “Poisoning a person”. Regarding arousal, an example of a CAAV action with a high level of arousal is “Being threatened with a knife”, while one with a low level of arousal is “Sharpening a pencil”. Research based on this theoretical framework has led to the development of databases that categorize different types of emotional stimuli, such as pictures or words, on the basis of these fundamental dimensions of emotions1,2. Following this approach, experimental subjects were asked to rate the CAAV videos on the valence and arousal dimensions. Regarding the CAAV characteristics, controlling for the gender of the actors allows this database to be used to investigate the role of gender identification. 
Research suggests that gender plays a crucial role in processes related to self-perception, face recognition, emotion, and memory15–19. Furthermore, the perspective dimension (POV) is a critical feature of the present database, since the research participant either watches the actions being executed by someone else (third-person) or watches them in first-person, as if he or she were performing them. The perspective from which an action is viewed plays a fundamental role in the imitation of a behavior, influencing how sensory information is transferred between the person performing an action and the imitator20. Several imitation studies have reported that the sensory information available from the first-person POV, in which the imitator observes the model as if from his or her own perspective, is greater than that available from the third-person perspective, in which the model faces the observer21–23. The first-person perspective model facilitates more accurate imitative behavior than the third-person perspective model24,25, and it induces greater activity in the mirror neuron system (MNS), which is implicated in the processing of visuomotor information26,27. In a different domain, studies comparing first- and third-person views in video games have shown that the playing view influences the presence of emotional responses28. The first-person playing view seems to generate a greater emotional response than the third-person playing view. Furthermore, participants are more immersed in a game when they view it through the eyes of the character (first-person condition), regardless of their preferred perspective29. Considering the possible advantages that the first-person POV can have for the observer, our movie clips were filmed using both the third-person and the first-person point of view. 
Staging these two perspectives makes it possible to compare and analyze the emotional aspects arising from each POV. Finally, in relation to identification processes and emotional responses, the perspective dimension may further interact with gender. Therefore, the CAAV also makes it possible to compare and analyze the combined emotional effects arising from the manipulation of these two variables.

Methods

Participants

A total of 444 healthy participants voluntarily took part in the CAAV validation procedure. The sample consisted of 217 young males and 227 young females between the ages of 18 and 30 years (mean = 22.58 years; SD = 3.71), most of whom were university students at G. d’Annunzio University of Chieti. They received no compensation and signed an informed consent form before starting the experiment. IRB approval was obtained from the G. d’Annunzio University ethical committee.

CAAV stimuli

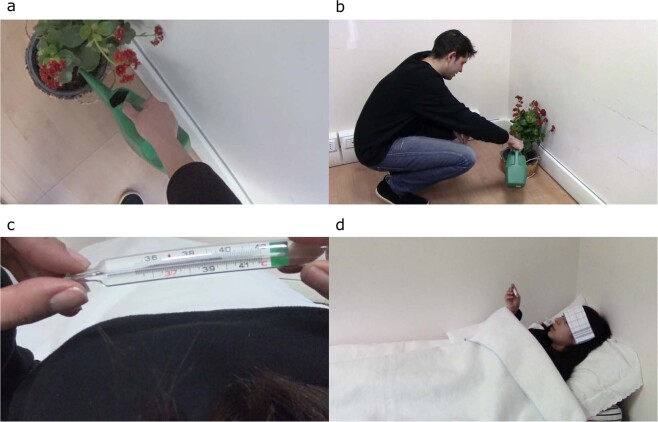

To select the actions to be included in the CAAV, we took several criteria into account. In particular, the actions had to be perceived as being as realistic as possible. Furthermore, the actions had to be reproducible within a laboratory setting kept as controlled as possible in order to: (1) avoid presenting too many stimuli simultaneously, (2) avoid variations in camera angle and light exposure, and (3) keep the same setting and background across the videos. Finally, the goal was to select different emotional actions so as to obtain a representative distribution of CAAV videos along the valence and arousal continua. All CAAV videos were filmed in an empty laboratory room, which was kept as controlled as possible. Objects such as a table, a chair, or a knife were added to the room only when the scene required them. The actions presented in the database were carried out by either a female or a male character. Two 24-year-old amateur actors (one male and one female) were involved in the recording, always wearing a black shirt and blue jeans. Videos were recorded with a Sony HDR-AS50 action camera. Each video lasted exactly 15 seconds. For actions that were carried out in less than 15 seconds, filler scenes (up to a maximum of 5 seconds) were added in which the actors waited to perform the action. These filler scenes gave all stimuli the same standard duration, even though different actions by their nature took the actors different amounts of time to perform. The CAAV’s emotional stimuli are presented only visually; all auditory stimuli have been muted in the movie clips. In addition, the CAAV presents just one main action in each video, avoiding a succession of actions that could carry different emotional valences. 
Furthermore, our videos have been created to represent a broad sample of contents across the entire affective space and to contain simple and easily understandable actions. The same conditions of brightness were ensured across filming sessions. For videos where differences were detected at a later stage, the brightness was modified using “VideoPad Video Editor”. Finally, the camera angle and the distance of the camera from the scene were controlled across all conditions. To get an overview of what has been described, frames extracted from some videos of the CAAV are shown in Fig. 1.

Fig. 1.

Frames extracted from CAAV videos. (a) Action description: “Watering a plant”, first-person POV, male actor. (b) Action description: “Watering a plant”, third-person POV, male actor. (c) Action description: “Measuring one’s fever”, first-person POV, female actor. (d) Action description: “Measuring one’s fever”, third-person POV, female actor.

Normative rating procedure for CAAV

In summary, our database consists of a subset of 90 actions filmed in 4 different conditions for a total of 360 videos: (1) first-person POV with male main actor; (2) first-person POV with female main actor; (3) third-person POV with male main actor; and (4) third-person POV with female main actor. The resulting 360 videos were divided into 4 different lists (A, B, C, D). Each list contained all 90 actions, but the gender of the main actor and the perspective (first-person/third-person POV) in which each action appeared varied across lists. The administration of the 4 lists was balanced across experimental subjects. This subdivision was needed to reduce the fatigue that an excessively long task would have caused the subjects1,2. To further control for fatigue, each participant was given the option to interrupt the task at any time if he or she felt tired. None of the subjects asked to interrupt the task. The presentation order of the videos was randomized within each list, and each video was rated for either arousal or valence after its presentation. Participants were divided into 2 groups (see Table 1). The first group, composed of 211 participants (101 M/110 F; mean = 22.99 years; SD = 3.81), evaluated the videos based on valence. The second group, composed of 233 participants (116 M/117 F; mean = 22.21 years; SD = 3.60), evaluated the videos based on arousal. The two dimensions of valence and arousal served as dependent measures and were measured with the Self-Assessment Manikin (SAM)30.

Table 1.

Study Characteristics.

| Subjects | Experiment | Protocol | Data |
|---|---|---|---|
| 211 | Rating CAAV videos | Measurement of Valence dimension | Online-only Table 1 |
| 233 | Rating CAAV videos | Measurement of Arousal dimension | Online-only Table 1 |
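The list structure described above can be sketched in code. The following is a minimal illustration, not the authors' procedure: the text states only that each list contained all 90 actions and that actor gender and perspective varied across lists, so the rotating (Latin-square style) assignment rule, the function names `build_lists` and `presentation_order`, and the integer action indices are all assumptions made for the example.

```python
import random

# The four filming conditions described in the text (perspective x actor gender).
CONDITIONS = [
    ("first-person", "male"),
    ("first-person", "female"),
    ("third-person", "male"),
    ("third-person", "female"),
]
LIST_NAMES = ["A", "B", "C", "D"]


def build_lists(n_actions=90):
    """Distribute the 4 versions of every action over lists A-D.

    Assumption: a rotating offset is used so that each list holds every
    action exactly once and each version of an action lands in exactly one
    list. The paper does not specify the actual assignment rule.
    """
    lists = {name: [] for name in LIST_NAMES}
    for action in range(n_actions):
        for list_index, name in enumerate(LIST_NAMES):
            perspective, gender = CONDITIONS[(action + list_index) % len(CONDITIONS)]
            lists[name].append((action, perspective, gender))
    return lists


def presentation_order(video_list, seed):
    """Return a shuffled copy of one list (order randomized within the list)."""
    order = list(video_list)
    random.Random(seed).shuffle(order)
    return order


lists = build_lists()
first_trials = presentation_order(lists["A"], seed=1)[:3]
```

Under this hypothetical rule, each participant sees 90 videos, and the four conditions are spread roughly evenly within a list (22 or 23 actions per condition, since 90 is not divisible by 4).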

Tool for rating: self-assessment manikin

According to Russell & Barrett14, each emotion arises from the linear combination of valence and arousal. The valence dimension indicates whether the observer likes or dislikes an event, an object, or a situation. Valence ranges along a continuum from negative to positive. Arousal, in contrast, is defined as the level of physiological activation. It contrasts states of low physiological activation, which usually occur in conditions such as sadness and relaxation, with states of high physiological activation, which are usually observed in conditions such as anger and happiness. To measure the emotional dimensions of valence and arousal for our action video clips, we used the Self-Assessment Manikin (SAM), a widely used tool in this research field30. The SAM is a non-verbal assessment technique that uses figures to measure the valence and arousal associated with a person’s emotional reaction to a variety of stimuli. To study the valence dimension, we used one version of the 9-point SAM scale30; to analyze the arousal dimension, we used another version of the 9-point SAM scale30. With these tools, the subject can select any of the 9 points on the continuum, with 1 corresponding to the lowest possible rating on each dimension (i.e., negative valence/low arousal) and 9 corresponding to the highest possible rating (i.e., positive valence/high arousal).
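As an illustration of how ratings on the two 9-point scales can be read together, the sketch below labels a pair of mean ratings relative to the scale midpoint. The cut-off of 5, the label wording, and the function name `describe_rating` are assumptions chosen for the example; the text does not define thresholds for "high" or "low" levels.

```python
def _level(value, midpoint, below, above):
    """Return the label for a rating relative to the midpoint."""
    if value > midpoint:
        return above
    if value < midpoint:
        return below
    return "neutral"


def describe_rating(valence, arousal, midpoint=5.0):
    """Describe a (valence, arousal) pair of ratings on the 1-9 SAM scales.

    Assumption: the scale midpoint (5) is used as the cut-off. This is an
    illustrative convention, not a criterion taken from the paper.
    """
    for name, value in (("valence", valence), ("arousal", arousal)):
        if not 1 <= value <= 9:
            raise ValueError(f"{name} must lie between 1 and 9, got {value}")
    valence_label = _level(valence, midpoint, "negative", "positive")
    arousal_label = _level(arousal, midpoint, "low", "high")
    return f"{valence_label} valence / {arousal_label} arousal"


print(describe_rating(8.2, 3.1))
```

A researcher selecting stimuli would normally choose cut-offs suited to the study design rather than rely on the midpoint alone.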

Data Records

All data and videos are available on the Figshare platform31. Specifically, all CAAV videos are provided in the .zip file named “CAAV_database”. Furthermore, eight sample videos of the CAAV are available for download on the Figshare platform as an overview of the CAAV stimuli. The eight sample videos comprise two different actions, “Losing hair” (low valence/medium-high arousal) and “Blowing candles” (high valence/medium arousal), each presented in four different conditions (defined by the gender and POV variables). All 360 videos are in .mpg format with a 1920 × 1080 resolution. Additionally, the results of the CAAV validation are reported in a separate Excel file. This file is called “CAAV_dataset” and contains a dataset with the average scores, for both the valence and arousal dimensions, for each video. The table is accompanied by a legend that provides a detailed description of all the variables. The table reports the code assigned to each video; each video file in the repository was renamed with its corresponding code. The table also reports a brief description of the action contained in each video, the type of perspective used (first-person POV vs. third-person POV), and the gender of the actor (male vs. female). The mean and standard deviation of the ratings for both the valence and arousal dimensions are reported, both overall and separately by the gender of the experimental subjects (male vs. female). Finally, a second Excel file named “CAAV_rawdata” reports the raw data for all experimental subjects. This table contains: subject ID, age, gender, list of stimuli administered, and the rating for each of the 360 videos.
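The relationship between the raw ratings and the per-video summary values can be sketched as follows. This is a minimal example under stated assumptions: the records, subject IDs, and video codes are invented for illustration and do not reflect the actual layout of “CAAV_rawdata”, and the use of the sample standard deviation is an assumption, since the text does not say which estimator was used.

```python
from collections import defaultdict
from statistics import mean, stdev

# Hypothetical raw ratings: (subject_id, video_code, rating on the 1-9 SAM scale).
# These values are made up for illustration; they are not CAAV data.
raw_ratings = [
    ("s01", "V001", 7), ("s02", "V001", 8), ("s03", "V001", 6),
    ("s01", "V002", 2), ("s02", "V002", 3), ("s03", "V002", 1),
]


def summarize(ratings):
    """Group ratings by video and compute n, mean, and sample SD per video."""
    by_video = defaultdict(list)
    for _subject, video, rating in ratings:
        by_video[video].append(rating)
    return {
        video: {"n": len(values), "mean": mean(values), "sd": stdev(values)}
        for video, values in by_video.items()
    }


summary = summarize(raw_ratings)
for video, stats in sorted(summary.items()):
    print(video, stats["n"], stats["mean"], round(stats["sd"], 2))
```

The same grouping could be repeated on the male and female subsets of subjects to obtain summaries split by participant gender.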

Technical Validation

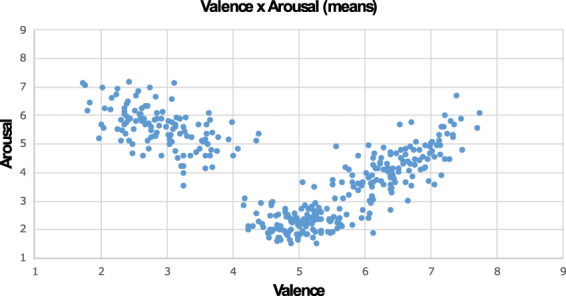

In the present study, participants could select any of the 9 points on the SAM rating scale by pressing the corresponding numeric key on a laptop keyboard. Experimental sessions were conducted individually in a laboratory room under similar lighting conditions. Each trial began with a preparatory slide (“Please rate the next video”) that was presented for 3 seconds. Then, the video was presented for its entire duration (15 seconds), and immediately after the video ended, a new slide with rating instructions was shown. For the first group, the instructions stated “Please rate the video based on valence”, while for the second group they stated “Please rate the video based on arousal”. After the video disappeared from the screen, subjects made their ratings of valence or arousal using the SAM. A standard 5-second rating period was used. In addition to the 90 CAAV videos, 3 practice videos (with randomized perspective and randomized gender of the actor) were shown prior to the experimental block (“playing with a balloon”, “waving a fan”, and “punching a wall”). The purpose of these preliminary videos was to train the subjects for the subsequent evaluations, informing them of the type of content they would be exposed to and familiarizing them with the rating scales. A laptop computer running E-Prime 2.0 software was used to control the randomization and timing of the stimulus presentation, as well as the collection of the rating responses. The entire task lasted around 35 minutes. The mean ratings are reported in “CAAV_dataset”. The relationship between the valence and arousal scores of each video is shown in Fig. 2.

Fig. 2.

Scatterplot of the relationship between the valence and arousal scores of each video. The average valence score is plotted on the X axis and the average arousal score on the Y axis.
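The trial timings reported in this section are consistent with the stated task length of around 35 minutes, as the following back-of-the-envelope check shows. It assumes that each trial consists only of the 3-second preparatory slide, the 15-second video, and the 5-second rating period, with no additional gaps or instruction screens; the constant and function names are chosen for this example.

```python
# Durations taken from the procedure described in the text (in seconds).
PREP_SLIDE_S = 3
VIDEO_S = 15
RATING_S = 5

N_EXPERIMENTAL_VIDEOS = 90
N_PRACTICE_VIDEOS = 3


def session_minutes(n_videos, prep=PREP_SLIDE_S, video=VIDEO_S, rating=RATING_S):
    """Total duration in minutes, assuming trials run back to back."""
    trial_seconds = prep + video + rating  # 3 + 15 + 5 = 23 seconds per trial
    return n_videos * trial_seconds / 60


total = session_minutes(N_EXPERIMENTAL_VIDEOS + N_PRACTICE_VIDEOS)
print(f"{total:.2f} minutes")  # 93 trials x 23 s = 2139 s
```

Ninety-three trials of 23 seconds each give 2139 seconds, or about 35.65 minutes, which agrees with the reported duration.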

Usage Notes

The present database has the potential to be applied in several fields of psychology. Specifically, since the CAAV stimuli are indexed on both the valence and arousal dimensions, they can be used in experimental studies to investigate the role of emotions. Moreover, the CAAV stimuli are suitable for cognitive studies investigating perception, visual attention, and emotional memory. In social psychology, this database and its stimuli can potentially be used to study morality, responsibility, and empathy. The CAAV can also be used with clinical populations. In this context, for example, video stimuli could be implemented in emotional and memory training or used to investigate reality monitoring and memory distortion. Also, given the additional features of the CAAV, this database can be used to investigate differences related to gender and perspective taking. Another possibility would be to manipulate these two variables to study different levels of self-identification and the consequent emotional response. With regard to limitations and possible future developments of the CAAV, the video stimuli could also be evaluated for other attributes of interest, such as the frequency and typicality of the action in daily life. Finally, since it has been shown that emotional stimuli are processed differently across the life span32, a further development could be to involve different age groups in the rating of the CAAV stimuli, making the database more appropriate for studies on emotions and memory in aging.

Acknowledgements

We thank Miriam Curti and Rocco Martella for their assistance in filming the scenes.

Author contributions

Data were collected by A. Di Crosta, P. La Malva, and C. Manna. The data descriptor was drafted by A. Di Crosta, A. Marin, and R. Palumbo. A. Di Domenico, R. Palumbo, M.C. Verrocchio, M. Cortini and N. Mammarella devised the experimental design and supported the data collection. All the authors contributed to and reviewed the manuscript.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Bradley MM, Lang PJ. The International Affective Picture System (IAPS) in the study of emotion and attention. In: Handbook of emotion elicitation and assessment. Oxford University Press; 2007. p. 29–46.

- 2. Soares AP, Comesaña M, Pinheiro AP, Simões A, Frade CS. The adaptation of the Affective Norms for English Words (ANEW) for European Portuguese. Behav. Res. Methods. 2012;44:256–269. doi: 10.3758/s13428-011-0131-7.

- 3. Ekman P. Facial expression and emotion. Am. Psychol. 1993;48:384–392. doi: 10.1037/0003-066X.48.4.384.

- 4. Fairfield B, Mammarella N, Palumbo R, Di Domenico A. Emotional Meta-Memories: A Review. Brain Sci. 2015;5:509–520. doi: 10.3390/brainsci5040509.

- 5. Di Domenico A, Palumbo R, Fairfield B, Mammarella N. Fighting apathy in Alzheimer’s dementia: A brief emotional-based intervention. Psychiatry Res. 2016;242:331–335. doi: 10.1016/j.psychres.2016.06.009.

- 6. Gross JJ, Levenson RW. Emotion elicitation using films. Cogn. Emot. 1995;9:87–108. doi: 10.1080/02699939508408966.

- 7. Baveye Y, Dellandréa E, Chamaret C, Chen L. LIRIS-ACCEDE: A Video Database for Affective Content Analysis. IEEE Trans. Affect. Comput. 2015;6:43–55. doi: 10.1109/TAFFC.2015.2396531.

- 8. Deng Y, Yang M, Zhou R. A New Standardized Emotional Film Database for Asian Culture. Front. Psychol. 2017;8:1941.

- 9. Rottenberg J, Ray RD, Gross JJ. Emotion elicitation using films. In: Handbook of emotion elicitation and assessment. Oxford University Press; 2007. p. 9–28.

- 10. Westermann R, Spies K, Stahl G, Hesse FW. Relative effectiveness and validity of mood induction procedures: A meta-analysis. Eur. J. Soc. Psychol. 1996;26:557–580. doi: 10.1002/(SICI)1099-0992(199607)26:4<557::AID-EJSP769>3.0.CO;2-4.

- 11. Schaefer A, Nils F, Sanchez X, Philippot P. Assessing the effectiveness of a large database of emotion-eliciting films: A new tool for emotion researchers. Cogn. Emot. 2010;24:1153–1172. doi: 10.1080/02699930903274322.

- 12. Russell J. Core Affect and the Psychological Construction of Emotion. Psychol. Rev. 2003;110:145–72. doi: 10.1037/0033-295X.110.1.145.

- 13. Posner J, Russell JA, Peterson BS. The circumplex model of affect: An integrative approach to affective neuroscience, cognitive development, and psychopathology. Dev. Psychopathol. 2005;17:715–734. doi: 10.1017/S0954579405050340.

- 14. Russell JA, Barrett LF. Core affect, prototypical emotional episodes, and other things called emotion: Dissecting the elephant. J. Pers. Soc. Psychol. 1999;76:805–819. doi: 10.1037/0022-3514.76.5.805.

- 15. Lockwood P. “Someone Like Me can be Successful”: Do College Students Need Same-Gender Role Models? Psychol. Women Q. 2006;30:36–46. doi: 10.1111/j.1471-6402.2006.00260.x.

- 16. Herlitz A, Lovén J. Sex differences and the own-gender bias in face recognition: A meta-analytic review. Vis. Cogn. 2013;21:1306–1336. doi: 10.1080/13506285.2013.823140.

- 17. Wright D, Sladden B. An own sex bias and the importance of hair in face recognition. Acta Psychol. (Amst.) 2003;114:101–14. doi: 10.1016/S0001-6918(03)00052-0.

- 18. Man TW, Hills PJ. Eye-tracking the own-gender bias in face recognition: Other-gender faces are viewed differently to own-gender faces. Vis. Cogn. 2016;24:447–458. doi: 10.1080/13506285.2017.1301614.

- 19. Wang B. Gender difference in recognition memory for neutral and emotional faces. Mem. Hove Engl. 2013;21:991–1003. doi: 10.1080/09658211.2013.771273.

- 20. Meltzoff AN. Imitation and Other Minds: The ‘Like Me’ Hypothesis. In: Perspectives on imitation: From neuroscience to social science: Vol. 2: Imitation, human development, and culture. MIT Press; 2005. p. 55–77.

- 21. Vogt S, Taylor P, Hopkins B. Visuomotor priming by pictures of hand postures: perspective matters. Neuropsychologia. 2003;41:941–951. doi: 10.1016/S0028-3932(02)00319-6.

- 22. Jackson PL, Meltzoff AN, Decety J. Neural circuits involved in imitation and perspective-taking. NeuroImage. 2006;31:429–439. doi: 10.1016/j.neuroimage.2005.11.026.

- 23. Oosterhof N, Tipper S, Downing P. Viewpoint (In)dependence of Action Representations: An MVPA Study. J. Cogn. Neurosci. 2012;24:975–89. doi: 10.1162/jocn_a_00195.

- 24. Nishizawa H, Kimura T, Goh A-C. The effect of different imitation models on the accuracy and speed of imitation of movement. J. Phys. Ther. Sci. 2015;27:3417–3420. doi: 10.1589/jpts.27.3417.

- 25. Ramenzoni VC, Sebanz N, Knoblich G. Synchronous imitation of continuous action sequences: The role of spatial and topological mapping. J. Exp. Psychol. Hum. Percept. Perform. 2015;41:1209–1222. doi: 10.1037/xhp0000093.

- 26. Watanabe R, Higuchi T, Kikuchi Y. Imitation behavior is sensitive to visual perspective of the model: an fMRI study. Exp. Brain Res. 2013;228:161–171. doi: 10.1007/s00221-013-3548-7.

- 27. Watanabe R, Higuchi T. Behavioral Advantages of the First-Person Perspective Model for Imitation. Front. Psychol. 2016;7:701.

- 28. Kallinen K, Salminen M, Kedzior R, Sääksjärvi M, Ravaja N. Presence and emotion in computer game players during 1st person vs. 3rd person playing view: Evidence from self-report, eye-tracking, and facial muscle activity data. In: Proceedings of the PRESENCE 2007. 2007. p. 187–190.

- 29. Denisova A, Cairns P. First Person vs. Third Person Perspective in Digital Games: Do Player Preferences Affect Immersion? In: Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems. ACM; 2015. p. 145–148.

- 30. Bradley MM, Lang PJ. Measuring emotion: The self-assessment manikin and the semantic differential. J. Behav. Ther. Exp. Psychiatry. 1994;25:49–59. doi: 10.1016/0005-7916(94)90063-9.

- 31. Di Crosta A, 2019. Chieti Affective Action Video - CAAV: Technical Manual and Affective Rating. figshare.

- 32. Carstensen LL, Mikels JA. At the Intersection of Emotion and Cognition: Aging and the Positivity Effect. Curr. Dir. Psychol. Sci. 2005;14:117–121. doi: 10.1111/j.0963-7214.2005.00348.x.
