Abstract

Background:

Reflective practice has been identified as one way to increase participation in self-directed lifelong learning so that physicians maintain a level of current and relevant medical knowledge for their practice. This study sought to determine if reflective practice affected the readiness for self-directed learning in a sample of anesthesiology residents in the United States.

Methods:

An experimental design with quantitative methods was used to investigate the effects of a self-guided 8-week reflective practice exercise on readiness for self-directed learning as measured by Guglielmino's Self-Directed Learning Readiness Scale/Learning Preference Assessment (SDLRS/LPA). Participants were randomly assigned to an experimental group or a control group.

Results:

Fifty-one anesthesiology residents in 3 residency programs completed this study. No significant difference was found between the posttest SDLRS/LPA scores of the control group (median = 227) and the experimental group (median = 225; U = 294; z = −.584; P = .559; r = 41.18), or between the pretest and posttest scores of the experimental group (z = −.65; P = .518; r = −.129).

Conclusions:

We should continue to explore ways to train physicians to engage in practices that promote self-directed lifelong learning.

Keywords: Reflective practice, life-long learning, residents

Introduction

In our rapidly changing health care environment, physicians cannot rely on skills and knowledge gained early in their medical training to sustain competency throughout their career.1 Reflective practice has emerged in the medical education literature as one way for physicians to identify gaps in their knowledge, attend to their learning needs, and update their skills.2–4 Usually triggered by an unexpected phenomenon, reflective practice is a process that helps the practitioner gather information, integrate relevant ideas, and evaluate results to help inform future practice.2,3

Many speculate that reflective practice fosters self-directed learning because it encourages practitioners to recognize gaps in their knowledge and attend to their own learning needs to gain expertise in their field.2,5,6 Reflection has been shown to help learners take more responsibility for their learning, and teaching residents how to reflect upon their experiences may increase their desire to learn.7–9 A study of 103 medical students found that students garnered a greater benefit from course materials and enjoyed their medical studies more if they reflected upon their learning than if they did not.10 Similarly, another study of adult learners found that students using guided reflection learned more independently than those who did not.11 Although reflective practice is seen as one way to help learners identify gaps in knowledge and foster self-directed learning, little is known about how to cultivate reflective practice skills3 and whether reflective practice leads to self-directed learning.12,13

Teaching reflective practice may also increase the capacity for physicians to recognize gaps in knowledge through self-assessment. For decades, credentialing bodies have required physicians to self-assess their performance to identify and remediate deficits.14 However, an analysis of the self-assessment literature revealed that physicians, particularly those who were confident in their performance but lacked skills, were poor at self-assessing.14,15 One study of self-assessment and peer assessment among practicing physicians found that physicians who were rated in the lowest performance quartile (<25th percentile) by their peers had rated themselves 30 to 40 percentile ranks higher than their peer ratings. Furthermore, those in the highest quartile (>75th percentile) rated themselves 30 to 40 percentile ranks lower. The authors concluded that self-assessment is flawed even in seasoned practicing physicians; by incorporating activities such as reflective practice into self-assessment, physicians could mitigate erroneous knowledge and skill deficits.16

Accrediting bodies such as the Accreditation Council for Graduate Medical Education are calling for physician training programs to develop reflective lifelong learners who can identify and mitigate gaps in knowledge.17 Although some evidence identifies reflective practice as a promising technique that may help physicians identify and mitigate gaps in knowledge, much of the literature is theoretical in nature and few empirical studies have been conducted to support the theories about reflective practice and its impact on learners.18,19 As such, the researchers for this study sought to investigate the effects of self-guided reflective practice on readiness for self-directed learning in a sample of anesthesiology residents training in the United States.

Materials and Methods

This study utilized an experimental design and employed quantitative methods. The Institutional Review Board at Oregon Health & Science University (OHSU) gave this study exempt status.

Sample and Design

A convenience sample was used to solicit participation from academic anesthesiology departments whose department chair had an established professional relationship with the OHSU department chair. Three department chairs agreed to have their residency programs participate in this study. Programs were housed in academic medical centers and included 1 on the West Coast, 1 on the East Coast, and 1 in the Midwest. One researcher (AMJ) contacted the administrative program coordinator for each participating program via email to facilitate the solicitation of resident participants. The program coordinators sent study details to their respective residents and collected names of those interested in participating. A total of 247 anesthesiology residents in postgraduate years (PGYs) 2 to 5 were solicited to participate in this study. Participant names and email addresses were forwarded from each program coordinator to researcher AMJ. Residents who signed up and agreed to participate in the 8-week study received a $20 Visa check card during week 1 of the study to improve study compliance and data collection.20,21

Theoretical Framework and Intervention Tool

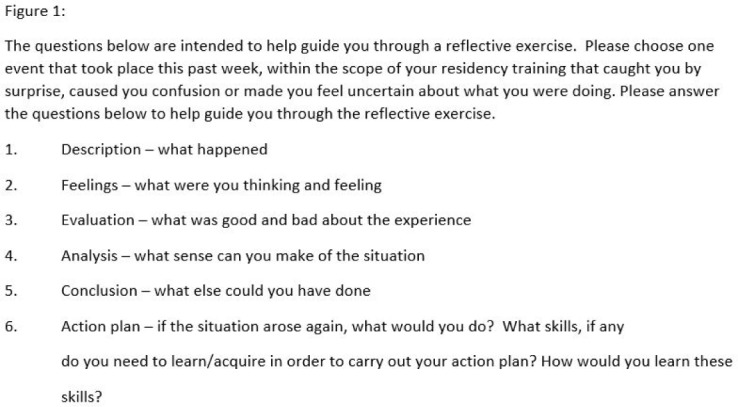

The researchers developed a 6-question intervention tool (Figure 1) based on the Gibbs22 model of reflection that led participants through a written reflective practice exercise. Gibbs contended that reflection takes place through following a sequence of 6 steps:

Description—What happened?

Feelings—What are you thinking and feeling?

Evaluation—What was good and bad about the experience?

Analysis—What sense can you make of the situation?

Conclusion—What else could you have done?

Action Plan—If it arose again what would you do?

Figure 1: Reflective practice exercise.

Two additional prompts were added to step 6 to address the criticism that the Gibbs model does not adequately guide the learner to reflect on what actions need to be taken to successfully follow through with their identified “action plan”: (1) what skills or information, if any, do you need to learn/acquire to carry out your action plan, and (2) how would you learn/acquire the skills/information previously mentioned?23

Reflective thought is brought on by an event in one's life that causes doubt, perplexity, or uncertainty.6,24 Therefore, the intervention tool instructs participants to reflect on an incident that occurred during the week and within the scope of their residency training that caused them to experience this doubt, perplexity, or uncertainty. Participants engaged in the reflective exercise in a self-directed manner with no instructions or guidance other than those included on the reflective exercise.

Assessment Instrument

The Self-Directed Learning Readiness Scale/Learning Preference Assessment (SDLRS/LPA) is the most commonly used tool to analyze residents' readiness for self-directed learning.25 The SDLRS/LPA is a validated tool that specifically measures attitudes, abilities, and characteristics deemed to be associated with self-directed learning.25

The possible range of scores on the SDLRS/LPA is between 58 and 290, with an adult average score of 214.00 ± 25.59.25 The SDLRS/LPA is scored by summing the Likert-type scale responses of 41 of the 58 questions, reverse scoring the remaining 17 questions, and adding the two sums together to derive the final score. The SDLRS/LPA designates score ranges to distinguish among below average (58–201), average (202–226), and above average (227–290) readiness for self-directed learning.
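The scoring rule described above can be sketched as follows. This is a minimal illustration, not the instrument's official scoring key: the 1-to-5 response scale is inferred from the stated 58–290 range over 58 items, and the set of reverse-scored item numbers (`REVERSE_ITEMS`) is a hypothetical placeholder, because the text does not list which 17 items are reversed.

```python
# Hypothetical placeholder: the real SDLRS/LPA key specifies which 17 of the
# 58 items are reverse scored; the source text does not list them.
REVERSE_ITEMS = frozenset(range(1, 18))

N_ITEMS = 58
SCALE_MIN, SCALE_MAX = 1, 5  # assumed 5-point Likert-type scale


def score_sdlrs(responses, reverse_items=REVERSE_ITEMS):
    """Sum 58 Likert responses, reversing the items in reverse_items.

    responses maps item number (1..58) to a response in 1..5.
    """
    if set(responses) != set(range(1, N_ITEMS + 1)):
        raise ValueError("expected responses for items 1..58")
    total = 0
    for item, value in responses.items():
        if not SCALE_MIN <= value <= SCALE_MAX:
            raise ValueError(f"item {item}: response {value} out of range")
        # Reverse scoring maps 1<->5 and 2<->4, and leaves 3 unchanged.
        if item in reverse_items:
            total += SCALE_MIN + SCALE_MAX - value
        else:
            total += value
    return total


def readiness_category(total):
    """Map a total score to the score bands quoted in the text."""
    if not 58 <= total <= 290:
        raise ValueError("total outside the possible 58-290 range")
    if total <= 201:
        return "below average"
    if total <= 226:
        return "average"
    return "above average"
```

Passing a participant's 58 responses to `score_sdlrs` and the result to `readiness_category` yields the total score and its band.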

The initial administration of the SDLRS/LPA contained 3 questions at the beginning of the survey for participants to answer regarding demographic information including ethnicity, medical school, and age range.

Procedures

The SDLRS/LPA was administered before the start of the 8-week intervention and administered again after the intervention period. The researchers chose an 8-week intervention period because educational assignments during anesthesiology training are typically 4 weeks in length. We hypothesized that engaging in reflective exercises over 2 educational assignments would allow participants to familiarize themselves with and integrate reflective practice into their training during the study period. In addition, an 8-week period during which learners reflect once per week has been demonstrated to be adequate to develop reflective thinking in health care professionals.20

SurveyMonkey was used to collect individual participant responses. Responses were linked to each participant's email address so that pre- and post-SDLRS/LPA responses could be paired and targeted reminders could be emailed to participants who did not complete a reflective exercise or SDLRS/LPA survey. Before the data were analyzed, participants were coded by a research assistant not involved with analyzing the data, thereby maintaining anonymity.

Participants were randomly assigned to either the control group (CG) or the intervention group (IG). Both groups were asked to take the pre- and post-SDLRS/LPA at week 1 and after week 8 of the study period. Only the IG received weekly reflective exercises. The reflective exercise was administered through SurveyMonkey. A link to the reflective exercise was emailed to all IG participants every Friday of the study period. Participants were asked to complete the reflective exercise within 3 days of the initial request. Participants who did not complete a reflective exercise were sent up to 3 email reminders, 2 days apart for 2 weeks.
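The text does not describe how the random assignment was carried out. One simple way to produce two equal-sized groups, shown here purely as an illustrative sketch, is to shuffle the participant list and split it in half. The function name, the participant codes, and the fixed seed are assumptions made for the example.

```python
import random


def assign_groups(participant_ids, seed=None):
    """Shuffle participants and split them into control (CG) and intervention (IG) halves."""
    rng = random.Random(seed)  # seeded generator so the allocation can be reproduced
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"CG": shuffled[:half], "IG": shuffled[half:]}


# Example with 52 hypothetical participant codes.
groups = assign_groups([f"P{i:02d}" for i in range(1, 53)], seed=2018)
```

With an even number of participants, each group receives exactly half of them.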

Data Analysis

This study used quantitative analysis to answer 1 main research question: Does reflective practice affect readiness for self-directed learning in anesthesiology residents? Two secondary questions were developed to answer the main research question: (1) do posttest SDLRS/LPA scores differ between the CG and IG, and (2) do residents' SDLRS/LPA scores prior to participating in reflective exercises differ from the scores after participation? Quantitative data from the SDLRS/LPA were analyzed using SPSS software version 24.26 Before the full analysis, a Shapiro-Wilk test for data normality was conducted.

Results

A total of 53 participants enrolled in the study. One participant dropped out of the study before it began. Of the remaining 52 participants, 26 were randomly assigned to the CG and 26 were randomly assigned to the IG. Participants completed the intervention if they submitted 6 of the 8 reflective exercises. One participant completed only 2 reflective exercises; therefore, those data were eliminated from the analysis. Table 1 presents the mean score, standard deviation, median score, and range of the pretest and posttest SDLRS/LPA scores for the control group and the IG. Demographics for both the CG and the IG were fairly evenly distributed (Table 2) with the exception of ethnicity and gender.

Table 1.

Pretest and Posttest Scores for the Control and Experimental Groups

| | Control Group: Mean | Control Group: SD | Control Group: Median | Control Group: Range | Experimental Group: Mean | Experimental Group: SD | Experimental Group: Median | Experimental Group: Range |
|---|---|---|---|---|---|---|---|---|
| Pretest SDLRS/LPA scores | 223.31 | 20.48 | 223 | 178–272 | 221 | 20.66 | 227 | 174–269 |
| Posttest SDLRS/LPA scores | 226.54 | 20.58 | 227 | 171–250 | 221 | 24.45 | 225 | 139–255 |

Table 2.

Demographic Information for Study Participants

| Characteristic | Control, Freq. (%)a | Experimental, Freq. (%)a | All Participants, Freq. (%)a |
|---|---|---|---|
| Ethnicity | | | |
| White | 18 (69) | 23 (92) | 41 (80) |
| Nonwhite | 8 (31) | 2 (8) | 10 (20) |
| Age range | | | |
| 25–30 | 15 (58) | 13 (52) | 28 (55) |
| 31–35 | 9 (35) | 11 (44) | 20 (39) |
| >35 | 2 (7) | 1 (4) | 3 (6) |
| Gender | | | |
| Male | 18 (69) | 11 (44) | 29 (57) |
| Female | 8 (31) | 14 (56) | 22 (43) |
| Year in training | | | |
| Year 1 | 9 (35) | 7 (28) | 16 (31) |
| Year 2 | 9 (35) | 9 (36) | 18 (35) |
| Year 3 | 8 (30) | 8 (32) | 16 (31) |
| Missing | 0 (0) | 1 (4) | 1 (2) |
| Degree | | | |
| MD | 25 (96) | 24 (96) | 49 (96) |
| DO | 1 (4) | 1 (4) | 2 (4) |

For all statistical tests, a 95% confidence level was used to determine statistical significance. The pretest SDLRS/LPA scores for the control group were normally distributed (W = .977, df = 26, P = .799), as were the pretest SDLRS/LPA scores for the experimental group (W = .928, df = 25, P = .076). The posttest SDLRS/LPA scores for the control group were normally distributed (W = .968, df = 26, P = .576), but the posttest SDLRS/LPA scores for the experimental group were not (W = .869, df = 25, P = .004).
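The choice between parametric and rank-based tests follows from these normality results. A minimal sketch of that decision rule, using the Shapiro-Wilk P values reported above and a hypothetical helper named `all_normal`, might look like this:

```python
ALPHA = 0.05  # significance threshold corresponding to the 95% confidence level


def all_normal(p_values, alpha=ALPHA):
    """Return True when no Shapiro-Wilk P value rejects normality at alpha."""
    return all(p >= alpha for p in p_values)


# Shapiro-Wilk P values as reported in the text.
pretest = {"control": 0.799, "experimental": 0.076}
posttest = {"control": 0.576, "experimental": 0.004}

pretest_test = "t test" if all_normal(pretest.values()) else "nonparametric test"
posttest_test = "t test" if all_normal(posttest.values()) else "nonparametric test"
```

Under this rule the pretest comparison can use a t test, whereas comparisons involving the experimental group's posttest scores call for rank-based tests such as the Mann-Whitney U and Wilcoxon signed-rank tests.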

Prior to analysis of our secondary questions, we sought to determine if a difference existed between the control and experimental groups in pretest SDLRS/LPA scores. An independent samples t test showed no significant difference between the 2 groups, t(49) = .401, P = .691.

We also conducted one-sample t tests to determine if the pretest SDLRS/LPA scores for the CG and the IG each differed from the national average adult score.25 The data showed no statistically significant difference between the pretest SDLRS/LPA scores of the IG (mean = 221, SD = 20.66) and the adult average (mean = 214), t(24) = 1.69, P = .103. However, the pretest score of the CG (mean = 223.31, SD = 20.48) was significantly higher than the average adult score (mean = 214), t(25) = 2.31, P = .029.
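The comparison of each group's pretest scores against the fixed adult average can be reconstructed from the summary statistics as a one-sample t statistic, t = (sample mean − reference mean) / (SD / √n), with n − 1 degrees of freedom. The sketch below uses the rounded values from Table 1; the function name is an assumption for illustration.

```python
import math


def one_sample_t(sample_mean, sample_sd, n, reference_mean):
    """t statistic for a sample mean against a fixed reference mean (df = n - 1)."""
    standard_error = sample_sd / math.sqrt(n)
    return (sample_mean - reference_mean) / standard_error


ADULT_AVERAGE = 214.0  # adult average SDLRS/LPA score quoted in the text

t_ig = one_sample_t(221.0, 20.66, 25, ADULT_AVERAGE)   # intervention group pretest
t_cg = one_sample_t(223.31, 20.48, 26, ADULT_AVERAGE)  # control group pretest
```

With these rounded inputs the statistics come out close to the reported t(24) = 1.69 and t(25) = 2.31; small differences reflect rounding of the summary statistics. The P values additionally require the t distribution, which is not computed here.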

Secondary Question 1

Whether the posttest SDLRS/LPA scores differed between the control group and the experimental group was addressed using a Mann-Whitney U test. There was a slight difference between the median posttest SDLRS/LPA score of the control group (227) and that of the experimental group (225); however, the difference was not significant (U = 294, z = −.584, P = .559, r = 41.18).
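The z score and P value for this test can be related to U through the usual large-sample normal approximation. The sketch below assumes no tie correction and uses the common convention r = z / √N for the effect size, where N is the total number of participants; the text does not state which effect size formula was used, so that convention is an assumption.

```python
import math
from statistics import NormalDist


def mann_whitney_z(u, n1, n2):
    """Normal approximation to the Mann-Whitney U statistic (no tie correction)."""
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    return (u - mean_u) / sd_u


def two_sided_p(z):
    """Two-sided P value from a standard normal z score."""
    return 2 * NormalDist().cdf(-abs(z))


def effect_size_r(z, n_total):
    """Effect size r = z / sqrt(N), a common convention for rank-based tests."""
    return z / math.sqrt(n_total)


z = mann_whitney_z(294, n1=26, n2=25)  # U and group sizes from the text
p = two_sided_p(z)
r = effect_size_r(z, n_total=51)
```

With U = 294 and group sizes of 26 and 25, this gives z ≈ −.584 and P ≈ .559, and under the z / √N convention the corresponding effect size is about −.08.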

Secondary Question 2

Do residents' SDLRS/LPA scores before participating in reflective exercises differ from their scores after participation? This question was addressed using a Wilcoxon signed-rank test. The median pretest SDLRS/LPA score (227) was higher than the median posttest SDLRS/LPA score (225). Furthermore, 12 of the 25 participants in the experimental group had higher pretest SDLRS/LPA scores than posttest scores, whereas 13 of the 25 had higher posttest SDLRS/LPA scores than pretest scores. However, there was no significant difference in the SDLRS/LPA scores (z = −.65, P = .518, r = −.129) before and after participating in the reflective exercise.

Discussion

Cox found that adult learners who engaged in self-guided reflective exercises were more self-directed in their learning than were learners who did not engage in reflective exercises.11 Studies have found that reflective exercises partnered with other learning strategies—such as education portfolios,27 developing a learning plan,28,29 and journal writing8—increased the readiness for self-directed learning.

In this study, no statistically significant difference was found between the pretest and posttest scores of participants who engaged in 8 weeks of self-guided reflective exercises. Additionally, no statistically significant difference was found in the posttest SDLRS/LPA scores between the control group and the experimental group. From these results, it can be concluded that involvement in self-guided reflective exercises did not increase the readiness for self-directed learning in these study participants.

There are several reasons the results of this study might differ from previous research. A majority of participants in both the control group and the experimental group (92% and 76%, respectively) scored in the average or above average category for readiness for self-directed learning on the pretest. This might mean that there was less opportunity for posttest SDLRS/LPA scores to increase to a level high enough to produce statistical significance between the IG's pretest and posttest scores. High pretest scores could also mean learners are taught self-directed learning skills in medical school or earlier.

The results of this study could have been affected by how long it took participants to guide themselves through the written reflective exercise. Since residents often work long hours with a priority on patient care, the participants in this study may have found the task of writing reflections burdensome or in conflict with their other educational priorities. Residents might not have had enough time to truly reflect on their practice because of these competing demands.

Self-directed learning is hypothesized to develop over time.30 Therefore, the length of the study period could have influenced the study results. This study asked participants to engage in reflective exercises over a short period, and the reflective exercises were not formally incorporated into each program's curriculum. A longer study period or incorporating reflective practice into the training curriculum might be needed for participants to understand and know how to reflect upon their practice.

As previously mentioned, other studies found that reflective exercises paired with other learning strategies increased the readiness for self-directed learning. Using the Gibbs model as a stand-alone reflective exercise, not paired with other learning strategies, may not be enough to affect readiness for self-directed learning.

Despite randomization, there were clear differences in the demographic distribution between the control group and the experimental group. More than two-thirds (69%) of the participants in the control group were male, whereas less than half of the experimental group (44%) were male. Additionally, the distribution of nonwhite participants between the 2 groups was skewed. It may be that there are inherent differences in the way males and females and whites and nonwhites reflect upon experiences or in their predisposition for readiness for self-directed learning.

Physician lifelong learning is vital to provide the best and safest care for patients. As medical technology and information proliferate, it is important for physicians to be self-directed in their learning so they can better care for their patients. Although previous studies have shown reflective practice to increase self-directed learning,6,21,23,24 this study did not find similar results. Additionally, despite the heightened discussion of reflection as a topic of importance in the medical education literature, there is little evidence to help educators understand how to cultivate the skill of reflection in their learners.28

Future directions for research include conducting a prospective power analysis and replicating this study with a larger sample size. However, in the absence of a power analysis, the researchers determined the confidence intervals of the IG's pretest and posttest scores to better understand the results. The mean scores of the pretest (221; 95% CI [212.5–229.5]) and posttest (221; 95% CI [210.9–231.1]) each fall within the other's confidence interval; therefore, it is not likely this intervention would increase readiness for self-directed learning in US anesthesiology residents.

Future studies should consider other or additional forms of reflective exercises to see if they have an impact on self-directed learning. Perhaps using the Gibbs model to guide reflective practice in a social context (such as a physician faculty member thoughtfully questioning a trainee about patient care or a specific case, small group discussions, group debriefings, team-based reflections, or critical incident analysis) could be used to elicit reflective thought.8,27 Additionally, some researchers assert that feedback on reflective writing is important to help learners attain a deep level of reflection.31 Research looking at both written and oral feedback from faculty on reflective writing would help us understand if feedback on reflection leads to higher posttest SDLRS/LPA scores than pretest scores.

As the literature clearly demonstrates, physicians need to participate in self-directed, lifelong learning. Teaching learners to engage in reflective practice should begin well before residency training. The skills needed to be reflective should be taught in undergraduate education and perhaps assessed before matriculation to medical school. It is our obligation to ensure that we produce lifelong learners and, as such, we should explore ways in which we can help further develop and instill this skill in our trainees.
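The 95% confidence intervals for the IG's pretest and posttest means can be checked from the summary statistics in Table 1 using mean ± t* × SD / √n. The sketch below hard-codes t* = 2.064, the standard two-sided 95% critical value of the t distribution with 24 degrees of freedom; the function name is an assumption for illustration.

```python
import math

T_CRIT_DF24 = 2.064  # two-sided 95% critical value of t with 24 degrees of freedom


def mean_ci(mean, sd, n, t_crit):
    """Confidence interval for a mean: mean +/- t_crit * sd / sqrt(n)."""
    margin = t_crit * sd / math.sqrt(n)
    return mean - margin, mean + margin


pre_ci = mean_ci(221.0, 20.66, 25, T_CRIT_DF24)   # IG pretest
post_ci = mean_ci(221.0, 24.45, 25, T_CRIT_DF24)  # IG posttest
```

Rounded to one decimal place, these intervals are 212.5 to 229.5 for the pretest and 210.9 to 231.1 for the posttest.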

Footnotes

Financial disclosure: The financial support for this work was provided by the Department of Anesthesiology and Perioperative Medicine, Oregon Health & Science University.

References

- 1. Horsley T, O'Neill J, McGowan J, Perrier L, Kane G, Campbell C. Interventions to improve question formulation in professional practice and self-directed learning. Cochrane Database Syst Rev. 2010;12(5):CD007335. doi: 10.1002/14651858.CD007335.pub2.

- 2. Westberg J, Jason H. Fostering Reflection and Providing Feedback: Helping Others Learn from Experience. Springer Publishing Company; New York, NY: 2001.

- 3. Mann K, Gordon J, MacLeod A. Reflection and reflective practice in health professions education: a systematic review. Adv Health Sci Educ Theory Pract. 2009;14(4):595. doi: 10.1007/s10459-007-9090-2.

- 4. Sandars J. The use of reflection in medical education: AMEE Guide No. 44. Med Teach. 2009;31(8):685–95. doi: 10.1080/01421590903050374.

- 5. Mamede S, Schmidt HG. The structure of reflective practice in medicine. Med Educ. 2004;38(12):1302–8. doi: 10.1111/j.1365-2929.2004.01917.x.

- 6. Bennett N, Casebeer L, Zheng S, Kristofco R. Information-seeking behaviors and reflective practice. J Contin Educ Health Prof. 2006;26(2):120–7. doi: 10.1002/chp.60.

- 7. Elwood J, Klenowski V. Creating communities of shared practice: The challenges of assessment use in learning and teaching. Assess Eval High Educ. 2002;27(3):243–56.

- 8. Riley-Douchet C, Wilson S. A three-step method of self-reflection using reflective journal writing. J Adv Nurs. 1997;25(5):964–8. doi: 10.1046/j.1365-2648.1997.1997025964.x.

- 9. Shepard LA. The role of assessment in a learning culture. Educ Res. 2000;29(7):4–14.

- 10. Sobral DT. An appraisal of medical students' reflection-in-learning. Med Educ. 2000;34(3):182–7. doi: 10.1046/j.1365-2923.2000.00473.x.

- 11. Cox E. Adult learners learning from experience: using a reflective practice model to support work-based learning. Reflective Practice. 2005;6(4):459–72.

- 12. Lockyer J, Gondocz S, Thivierge RL. Knowledge translation: the role and place of practice reflection. J Contin Educ Health Prof. 2004;24(1):50–6. doi: 10.1002/chp.1340240108.

- 13. Andrews K. Evaluating Professional Development in the Knowledge Era. TAFE NSW ICVET International Centre for VET Teaching and Learning; Sydney, Australia: 2005.

- 14. Davis D, Mazmanian P, Fordis M et al. Accuracy of physician self-assessment compared with observed measures of competence: a systematic review. JAMA. 2006;296(9):1094–102. doi: 10.1001/jama.296.9.1094.

- 15. Evans A, McKenna C, Oliver M. Self-assessment in medical practice. J R Soc Med. 2002;95(10):511–3. doi: 10.1258/jrsm.95.10.511.

- 16. Violato C, Lockyer J. Self and peer assessment of pediatricians, psychiatrists and medicine specialists: implications for self-directed learning. Adv Health Sci Educ. 2006;11(3):235–44. doi: 10.1007/s10459-005-5639-0.

- 17. ACGME Common Program Requirements. Chicago, IL: Accreditation Council for Graduate Medical Education; 2016. https://www.acgme.org/Portals/0/PFAssets/ProgramRequirements/CPRs_2017-07-01.pdf Accessed August 13, 2018.

- 18. Duke S, Appleton J. The use of reflection in a palliative care programme: a quantitative study of the development of reflective skills over an academic year. J Adv Nurs. 2000;32(6):1557–68. doi: 10.1046/j.1365-2648.2000.01604.x.

- 19. Ruth-Sahd LA. Reflective practice: a critical analysis of data-based studies and implications for nursing education. J Nurs Edu. 2003;42(11):488–97. doi: 10.3928/0148-4834-20031101-07.

- 20. Edwards P, Roberts I, Clarke M et al. Increasing response rates to postal questionnaires: systematic review. BMJ. 2002;324(7347):1183. doi: 10.1136/bmj.324.7347.1183.

- 21. Newby R, Watson J, Woodliff D. SME survey methodology: response rates, data quality, and cost effectiveness. Entrep Theory Pract. 2003;28(2):163–72.

- 22. Gibbs G. Learning by Doing: A Guide to Teaching and Learning Methods. Great Britain: FEU; 1988.

- 23. Jasper M. Beginning Reflective Practice. Nelson Thornes/Oxford Press; Northamptonshire, UK: 2003.

- 24. Dewey J. How We Think: A Restatement of the Relation of Reflective Thinking to the Educative Process. Vol. 8. DC Heath; Lexington, MA: 1933.

- 25. Guglielmino & Associates. Learning Preference Assessment (LPA). www.lpasdlrs.com/ Accessed August 20, 2018.

- 26. IBM Corp. Released 2016. IBM SPSS Statistics for Windows, Version 24.0. Armonk, NY: IBM Corp.

- 27. Fung MF, Walker M, Fung KF et al. An internet-based learning portfolio in resident education: the KOALA™ multicentre programme. Med Educ. 2000;34(6):474–9. doi: 10.1046/j.1365-2923.2000.00571.x.

- 28. Bravata D, Huot S, Abernathy H et al. The development and implementation of a curriculum to improve clinicians' self-directed learning skills: a pilot project. BMC Med Educ. 2003;3(1):7. doi: 10.1186/1472-6920-3-7.

- 29. Schilling L, Steiner J, Lundahl K, Anderson RJ. Residents' patient-specific clinical questions: opportunities for evidence-based learning. Acad Med. 2005;80(1):51–6. doi: 10.1097/00001888-200501000-00013.

- 30. Guglielmino P, Guglielmino L, Long HB. Self-directed learning readiness and performance in the workplace. Higher Educ. 1987;16(3):303–17.

- 31. Bain J, Mills C, Ballantyne R, Packer J. Developing reflection on practice through journal writing: impacts of variations in the focus and level of feedback. Teachers and Teaching. 2002;8(2):171–96.