Abstract

It is imperative that college and university faculty members continue to collaborate to develop and assess innovative teaching methods that effectively encourage learning for all undergraduates, particularly in STEM. Here we describe a simple student-led classroom technique, recap and retrieval practice (R&RP), that we, as two instructors at different institutions, collaboratively implemented in three upper-level STEM courses. R&RP sessions are short, student-led reviews of previous course material that feature student voices prominently at the start of every class period. R&RP sessions require a small team of students to prepare and deliver a review of prior course content via active retrieval practice formats, which are well known to be particularly effective learning tools. These R&RP assignments were also designed to emphasize additional evidence-based learning practices (concrete examples, dual coding, elaboration, interleaving, and spaced practice). Our analysis of undergraduate student experiences both in leading and participating in R&RPs indicates that overall R&RP sessions were well-received, active learning strategies that our students indicated fostered their learning. As instructors, we found R&RPs an effective and efficient strategy to encourage class participation, assess class participation, and emphasize student voices in our classrooms. Moreover, we found that collaboratively deploying a learning activity allowed us to observe the impact of a specific pedagogical activity in varied instructional settings and enhanced our professional development as educators.

Keywords: active learning, retrieval practice, testing effect, inclusive pedagogy, faculty development, pedagogy

INTRODUCTION

In this manuscript we describe our experiences as two instructors collaboratively deploying a simple student-led learning activity in three of our regularly taught upper-level STEM courses, an adapted strategy we call recap and retrieval practice (R&RP). We each used R&RP sessions as a simple mechanism to start our class periods in ways that feature student voices, welcome student perspectives, and highlight student responsibility for active learning, while at the same time emphasizing evidence-based practices for effective out-of-class studying and learning. We developed and implemented this strategy in response to emerging evidence that it is critical to engage students in more active learning, to support inclusive excellence, and to teach and encourage the use of evidence-based learning strategies that students often overlook.

Active Learning

Students and faculty members are advocating with increasing intensity that traditional, passive, lecture-based college class sessions are no longer appropriate or effective mechanisms to teach and engage undergraduates. Fading are the days of instructors functioning in “sage on the stage” roles, responsible for conveying information not easily accessible elsewhere. With increasing frequency, instructors view their roles as “guides on the side” who coach students to work actively with information that is now far more readily available. Such active, student-centered approaches are heralded particularly for their ability to enhance inclusivity and recognize that most, if not all, students have strong and innate abilities to engage and learn (Lujan & DiCarlo, 2006; Haak et al., 2011; Aragón et al., 2018; Ballen et al., 2018; Supiano, 2018).

Stakeholders throughout higher education recommend implementation of active learning strategies wherein students demonstrably participate in the learning process during class periods rather than passively listening without substantial engagement (Prince, 2004; Michael, 2006; American Association for the Advancement of Science [AAAS], 2011; National Research Council [NRC], 2012; Freeman et al., 2014; Bradforth et al., 2015). In response, some colleges and universities are remodeling classrooms and their furniture to move away from traditional fixed auditorium configurations in which all students face the instructor and board or screen at the front of the room in favor of much more flexible sandbox classrooms composed of moveable chairs and desks, clustered seating, numerous whiteboards and screens, connected technology, and no obvious front of the room (Cotner et al., 2013; Baepler et al., 2016). Similarly, many faculty members (with and without classroom renovations) are redesigning their class meetings in whole or in part to move away from one-sided, didactic lectures toward more interactive, student-centered class sessions wherein they coach their students to learn in class by doing, talking, questioning, reflecting, collaborating, problem-solving, etc. (Knight & Wood, 2005).

Not surprisingly, active learning strategies vary considerably in form and magnitude from short and simple lecture break activities (Faust & Paulson, 1998; Lom, 2012; Lang, 2016; Harrington & Zakrajsek, 2017) to dramatic course redesigns that rely substantially on peer instruction (Mazur, 1997; Beichner et al., 2007; Hoskins, 2008; Cahill & Bloch-Schulman, 2012). Given such considerable heterogeneity in application, active learning is often discussed in overly broad and vague ways (Eyler, 2018) that can be challenging to measure, quantify, or compare (Smith et al., 2013; Stains et al., 2018). Our intention was to implement a “small” change (Lang, 2016) that would bring about meaningful classroom changes and, perhaps more importantly, lead to student engagement with effective learning strategies.

It is also important to note that active learning strategies can face significant barriers to adoption because such strategies can require considerable faculty and student time, energy, and/or creativity (Brownell & Tanner, 2012; Miller & Metz, 2014; Halonen & Dunn, 2018). Moreover, some faculty members question the value of active learning (Aragón et al., 2018) and/or fear lowered course evaluations (Henderson et al., 2018).

At present, roughly half of North American STEM courses are taught in didactic instructional styles (Stains et al., 2018). Many studies, including a meta-analysis (Freeman et al., 2014), indicate that a variety of active learning strategies can significantly promote learning (Haak et al., 2011; Eddy & Hogan, 2014; Jensen et al., 2015; Barral et al., 2018). Recently a large study revealed that the adoption of a growth mindset (Dweck, 2007) was the single most important faculty factor that enhanced STEM student performance (Canning et al., 2019). Moreover, in another study faculty members adopting active learning strategies in STEM courses did not experience lowered evaluations from their students (Henderson et al., 2018). Thus, as educators we feel compelled and motivated to continue developing, refining, assessing, and adapting practical active learning strategies that recognize that all students have the potential to learn, in ways that both students and faculty members can experience as efficient and effective learning practices.

Recap and Retrieval Practice (R&RP)

Recaps are a very common teaching strategy wherein an instructor begins a class period by briefly recapping, situating, and/or summarizing salient information discussed in a previous class period (Wyse, 2014). A recap is a familiar orienting device also used widely in serial television; episodes often begin with a dulcet voiceover saying, “Previously on…” followed by short replays of key moments from earlier episodes to jog the viewers’ memory and set them up to connect to new information in the forthcoming episode.

Retrieval practice is another familiar and widely used teaching technique also known as practice testing (Roediger & Butler, 2011; Roediger et al., 2011; Agarwal, 2019). It describes any strategy in which a learner actively brings information to mind through deliberate recall. Retrieval practice sessions often resemble quizzes, though they are frequently delivered as learning activities with little or no penalty for incorrect responses. Powerfully supported by a large and growing body of research, retrieval practice is often the most effective learning strategy when compared to others, a benefit sometimes called “the testing effect” (Karpicke & Roediger, 2008; Brown et al., 2014; Weinstein et al., 2019a,b). Despite the remarkable efficacy of retrieval practice for learning in a wide variety of contexts, many learners do not implement self-quizzing strategies in their studying, electing more passive and less effective tactics such as rereading and highlighting notes and textbooks (Karpicke et al., 2009; Dunlosky et al., 2013). This may be a backlash to the increased standardized testing in K-12 education and is likely linked to student perception that tests are stressful and used mainly as summative assessment. Our R&RP technique implemented frequent practice testing but attempted to decrease the perceived stressors by having students administer the “tests” and by assessing participation as the only grade. Low-risk, weekly assessment, such as this, has been found to be disproportionately successful for underprepared students (Haak et al., 2011).

Additional Learning & Studying Strategies

Further important elements of learning that are well supported by scientific literature include interleaving, spaced practice, elaboration, dual coding, and concrete examples (Weinstein et al., 2019a,b). Briefly, interleaving is the intentional mixing of topics in a study session that may not have been originally introduced together (Rohrer, 2012). Spaced practice (a.k.a. distributed practice) is the opposite of cramming. It is regular, dispersed studying that occurs in multiple small sessions rather than one long session shortly before a high-stakes testing event (Benjamin & Tullis, 2010; Carpenter et al., 2012). Elaboration is a method of learning and studying that builds greater detail by incorporating new information and making connections between ideas, including existing knowledge (Wong, 1985; McDaniel & Donnelly, 1996). Dual coding is learning by combining words and visuals, representing information in multiple formats (Mayer & Anderson, 1992). Concrete examples are specific, relevant examples generated as ways to understand abstract concepts (Rawson et al., 2014). By intentionally introducing these learning strategies and then requiring that students use them for a R&RP, we were supporting the development of strong study skills that could extend beyond our particular courses. In addition, presenting students were forced to space their practice by being assigned a R&RP that was distant from the exam date, while all students were exposed to some retrieval practice each class meeting.

Research Question

We sought to use R&RP sessions as a simple student-centered class activity that emphasized evidence-based learning methods for our students. Specifically, we examined the experiences of students in three upper-level STEM courses regularly using R&RPs as a learning strategy. In addition, we reflected together on our experiences as instructors using R&RPs in our courses.

MATERIALS AND METHODS

Stimulus

This study was initiated after one of us (BL) shared preliminary experiences implementing recaps and retrieval practice sessions as separate activities in two upper-level Biology courses as a short presentation at the 2017 Faculty for Undergraduate Neuroscience (FUN) Summer Education Workshop. This presentation stimulated the other (AJS) to pilot R&RPs in fall 2017 in an upper-level Psychology course. Seeking to understand student experiences with R&RPs and to examine the implementation of R&RPs across instructors and courses, we then collaborated to generate similar R&RP descriptions, assignments, and assessments for implementation in our Spring and Fall 2018 courses as described here.

Assignment

All students in the College of Wooster Behavioral Neuroscience course (PSYC323) and Davidson College Developmental Biology course (BIO306) in the spring of 2018, as well as the College of Wooster Drugs and Behavior course (PSYC345) in the fall of 2018 led R&RP sessions as part of their regular, graded course work. PSYC323, which enrolled 20 students, was a standard lecture course that met for three hours/week, had a three-hour/week lab, and was also writing intensive. PSYC345, which enrolled 18, was a standard lecture course that met for three hours/week. BIO306 was a studio lab course (Round & Lom, 2015) that met for six hours/week and enrolled a total of 16 students. In all courses there was a mixture of class-years, with the majority in the junior year, though there were no first-year students.

Overall assessment in the courses, though variable across institutions, included in-class or take-home exams with short answer, problem-solving, and essay questions; brief written empirical reports or posters on mini-experiments; in-class discussions of empirical articles; and assignments related to research methodology and laboratory skills. To be clear, our graded assessments were designed to match, to some extent, the way we were asking students to process information in order to create a R&RP. Wooster classes also included weekly online multiple-choice quizzes as reading and knowledge checks. To indicate their importance, R&RP sessions accounted for 9% (Davidson) or 10% (Wooster) of the final course grade for the presenting students. Each R&RP was graded by the instructor according to a 100-point rubric aligned with assignment criteria (Appendix 1).

Each syllabus explained that each student would lead a R&RP with a partner at least twice throughout the semester, and that the R&RP should take approximately the first five minutes of the class. To receive full credit, the students were instructed that they must review information in a new way, demonstrating synthesis, prioritization, and understanding of the previous lesson’s content via strong oral and visual delivery. They should include an original aid for the class such as a slide, handout, poster, infographic, video, etc., and each R&RP session must also include active retrieval practice that challenges classmates to use, apply, and retrieve previous knowledge in a low-stakes way. They were told they should meet with their teaching assistant or faculty member in advance of their presentation, and provide any handouts, slides, questions, etc. for photocopying and/or uploading to the course management system. After a R&RP presentation, each member of the team had to independently complete a short on-line reflection survey (Appendix 2). For a full description as provided on the syllabus from each class, please see Appendix 3.

Prior to the first R&RP, each faculty member invested class or lab time discussing the importance of and evidence for retrieval practice as an effective learning strategy, as well as other empirically supported learning strategies and time management skills, using a variety of resources (Karpicke & Roediger, 2008; Brown et al., 2014; Agarwal, 2019; Weinstein et al., 2019a,b). Designed by cognitive scientists for students, the free posters (and other handy resources) at learningscientists.org describing and depicting retrieval practice, spaced practice, elaboration, interleaving, concrete examples, and dual coding were particularly emphasized to students (Weinstein et al., 2019a) along with an article on study strategies (Dunlosky, 2013).

Student Choices with R&RPs

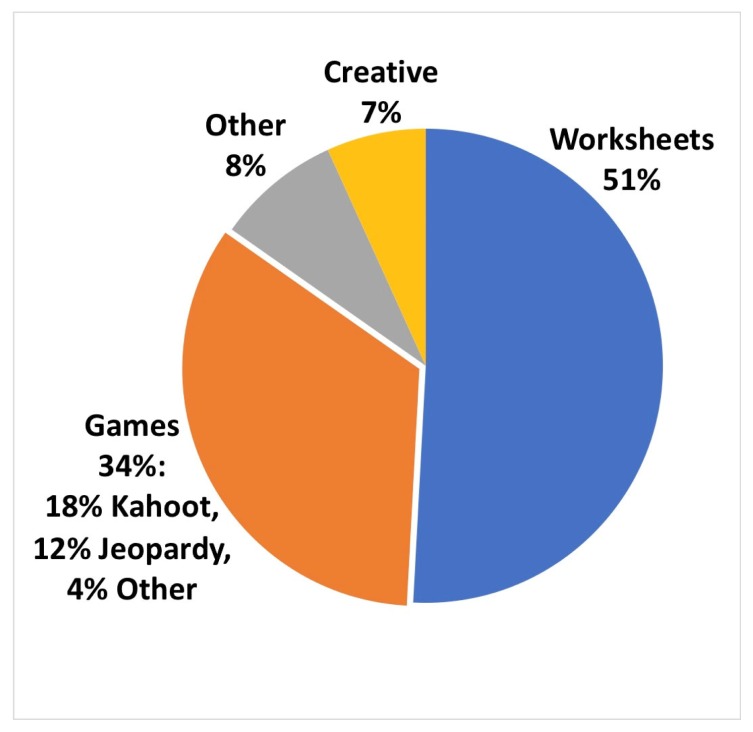

To determine the format of each of the 59 R&RP sessions designed and led by students, each instructor reviewed R&RP materials after presentation and assigned the format the students selected into one of four categories: worksheets, games, creative, or other (Figure 1).

Figure 1.

Percentage of activity types used in R&RP sessions. Analyzing the activities of 59 total R&RP sessions in three courses revealed that students most frequently (51%) developed worksheet formats with diagrams to label and/or quiz questions to answer. Students structured RP as games in 34% of R&RP sessions (Kahoot, Jeopardy, etc.). In 7% of R&RP sessions students created their own retrieval practice formats (e.g., acting out pathways) labeled here as creative. In the remaining 8% of R&RP sessions, labeled here as other, students created Bingo sheets or crossword puzzles.

R&RP Reflection Feedback

Each student was required to complete an online survey (Appendix 2) shortly after delivering a R&RP session in class. The reflection asked students what learning strategies they used, how they applied the learning strategies, what was the most challenging aspect of the R&RP, what was the most satisfying aspect of the R&RP, and how they would improve their R&RP. We collected 118 student reflections in the three different courses. Each R&RP session was presented by multiple students and each student presented multiple R&RPs in a semester; thus, the total number of reflections exceeds the total number of students enrolled in the three courses. The types of learning strategies students self-reported using in R&RPs were reviewed and, when necessary, recategorized by faculty members (Figure 2). In addition, narrative responses to open-ended questions 8, 9, 10, & 13 in Appendix 2 were anonymized and then examined by a blinded analyst, not part of the study, who identified the most frequent themes within these student experiences. Data from the reflection statements are presented as a percentage of total comments for each category.

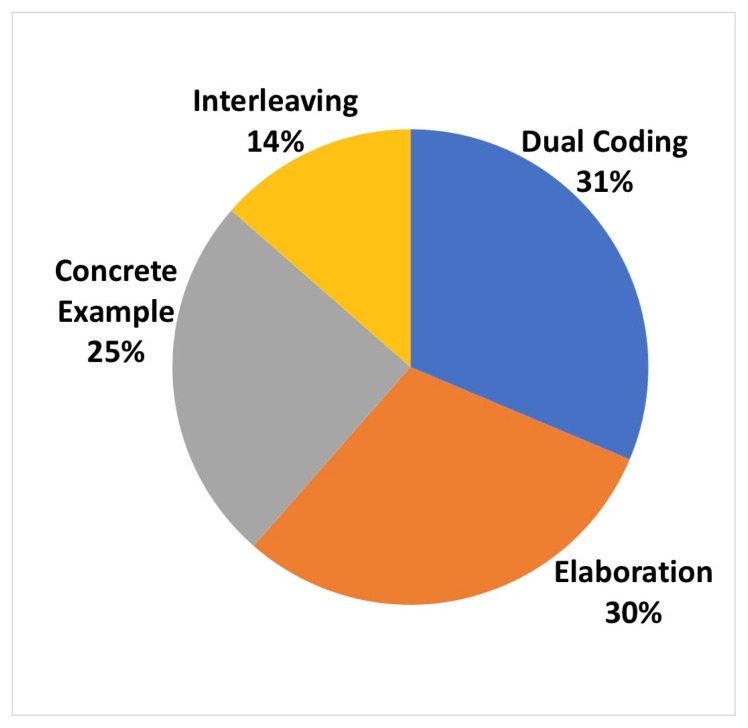

Figure 2.

Percentage of learning strategies students reported using in R&RPs. Students self-identified 236 learning strategies (from the four reviewed in class) in the 118 reflection statements. Dual coding was most frequently identified (31%), followed closely by elaboration (30%) and concrete examples (25%). Interleaving was least frequently identified (14%).

End-of-Semester Student Feedback

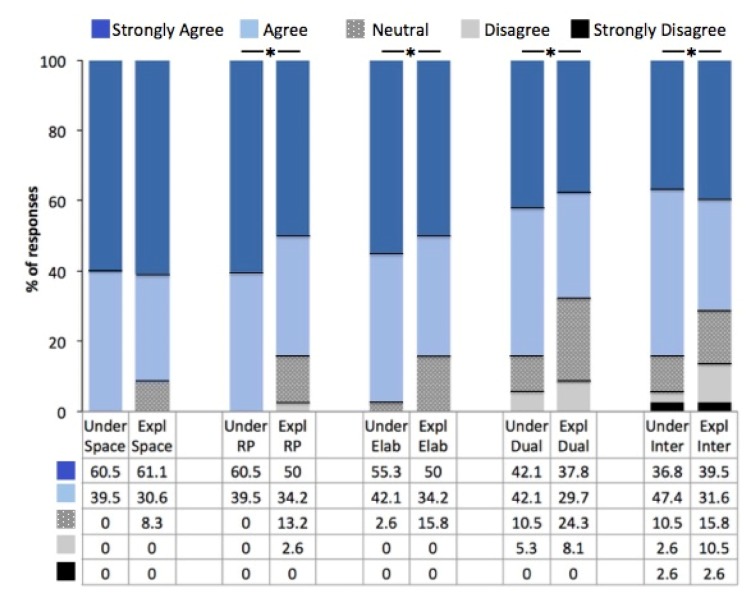

Using a voluntary and anonymous online survey (Appendix 4), we collected feedback data at the end of the course from 38 students in the three different upper-level courses, representing 70% of enrolled students (13/20 in PSYC323, 16/16 in BIO306, and 9/18 in PSYC345; Wooster N=22, Davidson N=16). Students were asked to respond on a five-point Likert scale (1=strongly disagree, 3=neutral, 5=strongly agree) to a set of questions related to the five emphasized learning strategies (retrieval practice, interleaving, spaced practice, elaboration, and dual coding) as well as their personal experiences with the R&RPs. Questions related to the learning strategies asked students to rate both their level of understanding of each strategy and their ability to explain each strategy (Figure 3). Questions about personal experiences with R&RPs asked students about their appreciation for and understanding of each pedagogical strategy, their experiences of working with partners, and whether they would have preferred faculty-led R&RPs (Figure 5). Likert scale data from the end-of-semester assessment were analyzed using non-parametric, rank-based statistics, as specified below, because the data on a Likert scale are ordinal and not interval data. That is, an ordinal scale recognizes the natural order in which 5 (strongly agree) indicates a stronger level of agreement than 4 (agree), but unlike an interval scale, it does not treat the difference between 4 and 5 as equivalent to one unit. An analysis of the data using t-tests and ANOVA resulted in nearly all of the same statistical outcomes, but our data violate some basic assumptions of those tests and are therefore best analyzed using the Mann-Whitney Wilcoxon and Friedman tests. In addition to the Likert questions, several open-ended questions asked students whether R&RPs led by classmates helped them learn and whether R&RPs they prepped helped them learn.

Narrative responses to open-ended questions 6 & 7 in Appendix 4 were anonymized and then examined by a blinded analyst, not part of the study, who identified the most frequent themes related to what students liked most and liked least about R&RP. Data from the open-ended statements are presented as a percentage of total comments for each category.

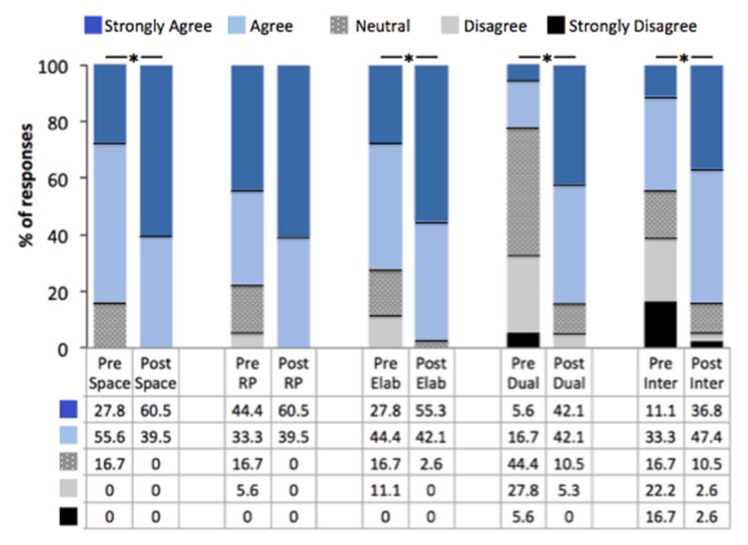

Figure 3.

Percentage of student rankings of understanding for each learning strategy from self-report Likert scale from before the semester began (Pre, N=18) and at the end of the semester (Post, N=38). At the end of the semester there was an increase in the percentage of students who reported a higher level of understanding for retrieval practice (RP), elaboration (Elab), dual coding (Dual), and interleaving (Inter). MWW U tests for those four learning strategies demonstrated significant increases in understanding; * represents p<0.02. When comparing across the learning strategies, there was also a significant difference, with spaced practice (Space), retrieval practice, and elaboration all having higher levels of understanding than dual coding and interleaving at both the pre- and post-semester time points (post-hoc Wilcoxon signed-rank tests, ps<0.02).

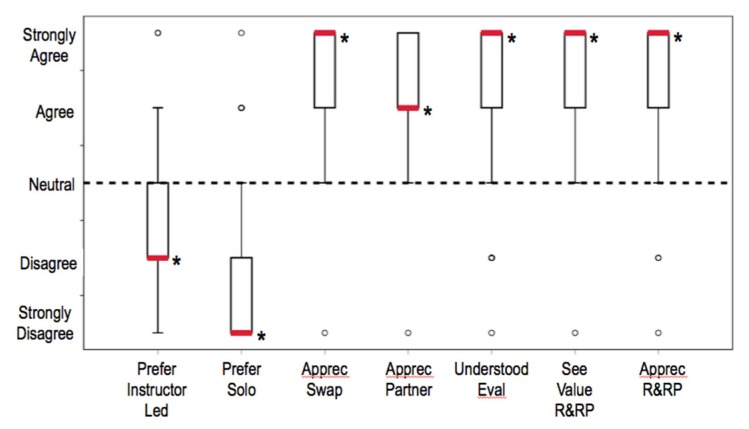

Figure 5.

A box and whisker plot representing student-reported experiences with R&RP sessions. The bold red line represents the median for each question, the box represents the interquartile range (25th to 75th percentiles) of responses, the whiskers show the minimum and maximum, and the circles represent outliers for Likert-scale questions (Appendix 4, question 2; N=38). At the end of the semester, student responses differed significantly from neutral for each of the seven experience questions asked on a Likert scale, which together suggest that students appreciated the R&RP experience and arrangements; * represents p<0.01.

Student Familiarity with Learning Strategies

An additional set of data on pre-instruction understanding of learning strategies (Appendix 4 questions 2 and 3) were collected from a Spring 2019 College of Wooster PSYC323 course to assess baseline understanding of each learning strategy (n=18; Figure 3). Both the Human Subjects Research Committee (HSRC) at Wooster and the Human Subjects Institutional Review Board (HSIRB) at Davidson approved all data collection. The main goal of data collection was to determine student interest in, response to and learning from the R&RP.

RESULTS

Types of Activities and Learning Strategies Used in the R&RP Sessions

The format of each of the 59 R&RP sessions as designed and led by students was categorized by each instructor (Figure 1). We observed that our students most frequently (51%) organized retrieval practices by preparing worksheets with quiz-like questions for their classmates to complete. Worksheet formats and implementation varied, however, as some worksheets involved dual coding by labeling or drawing diagrams, questions were posed in different formats (multiple choice, fill-in-the-blank, open-ended), and some student leaders instructed their classmates to work alone while others designed R&RPs for small teams. The students were also particularly inclined to use online tools to organize quiz games (34%) such as Kahoot, Jeopardy, and Family Feud. Occasionally (7%), students organized other creative interactive applications of knowledge, such as role-playing critical components in a signaling pathway. The remaining R&RP sessions (8%) were activities such as Bingo or crossword puzzles.

When students leading R&RPs completed their individual reflection for each session led, they were asked to identify all learning strategies employed during their R&RP session. The 59 R&RP sessions generated a total of 118 reflection statements because students worked in pairs or small groups. In the 118 reflections, students identified a total of 236 learning strategies, because groups were either required to (Davidson), or often chose to, use more than one strategy per session. Dual coding was the most frequently identified (31%) learning strategy, as the students regularly included diagrams to label or identify, or figures from empirical articles for description and interpretation (Figure 2). Use of elaboration closely followed (30%); students defined elaboration as the use of open-ended questions or application of knowledge during the retrieval practice and as the explanations that took place when going over correct answers. Use of concrete examples (25%) included factual knowledge checks and multiple-choice questions. Students defined interleaving (14%) as presenting the material in a different order from class or mixing different aspects of knowledge in one area; for example, students reported using interleaving when they asked questions on drug administration, distribution, and elimination intermixed on a worksheet.

Preliminary Analysis

Scores on end-of-semester Likert scaled questions related to learning strategies and personal experience (Appendix 4, questions 2–3) were first analyzed using a series of two-sample Mann-Whitney Wilcoxon (MWW) U-tests to compare both between courses and institutions. There were no statistically significant differences on any measure between the two classes taught at the College of Wooster (data not shown; p>0.14 for all comparisons). Therefore, the data from the two Wooster courses were aggregated to allow cross institutional comparisons.

There were no significant differences between institutions when comparing student self-assessment of their understanding of or ability to explain the five emphasized learning strategies (retrieval practice, spaced practice, elaboration, dual coding, and interleaving). Only one of the seven personal experience questions showed significantly higher ratings from Davidson students: “I appreciated having a different partner for each R&RP I led,” MWW U=87.00, p<0.02 (data not shown). We can offer no meaningful explanation for this difference. Student pairings were randomly assigned at both colleges, though Davidson students had three sets of partners, instead of only two at Wooster. Given the overall similarities in student responses between institutions, we aggregated the data across institutions to determine the level of student understanding of and ability to explain each learning strategy, and their personal experiences with R&RPs.

Learning Strategies

Following our initial data collection, we became interested in students’ initial pre-instruction understanding of each learning strategy. Given the lack of differences between courses and institutions in the reported end-of-semester scores, we assessed a new class of College of Wooster students (Spring 2019, PSYC323) on the same learning strategy questions before any instruction took place.

All pretest rankings were compared to end-of-semester aggregate rankings using a two-sample MWW U-test (because the participants differed across the two time points). The understanding scores for retrieval practice, elaboration, dual coding, and interleaving shifted significantly toward a higher level of understanding by the end of the semester (sample MWW for elaboration U=211.00, p<0.02; Figure 3). Scores for spaced practice did not differ before and after instruction (MWW U=257.00, p>0.10; Figure 3). This increase in scores at the end-of-semester assessment suggests improved understanding of these learning strategies following a semester of use and instruction.
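For readers less familiar with rank-based tests, the logic of the two-sample MWW comparison can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the ratings are invented rather than study data, the function names `midranks` and `mann_whitney_u` are hypothetical, and the sketch stops at the U statistic without computing a p-value.

```python
# Illustrative sketch only: the ratings below are invented, not study data.

def midranks(values):
    """1-based ranks; tied values share the average of the ranks they span."""
    return [
        sum(v < x for v in values) + (sum(v == x for v in values) + 1) / 2
        for x in values
    ]


def mann_whitney_u(group_a, group_b):
    """Return (U_a, U_b) for two independent samples of ordinal ratings."""
    n_a, n_b = len(group_a), len(group_b)
    ranks = midranks(list(group_a) + list(group_b))  # rank the pooled ratings
    rank_sum_a = sum(ranks[:n_a])
    # U_a counts the (a, b) pairs in which a is rated higher; ties count 0.5.
    u_a = rank_sum_a - n_a * (n_a + 1) / 2
    u_b = n_a * n_b - u_a
    return u_a, u_b


# Hypothetical 1-5 Likert ratings from two different groups of students.
pre_ratings = [2, 3, 3, 2, 4, 3, 1, 3]
post_ratings = [4, 3, 5, 4, 4, 5, 3, 4, 4, 5]

u_pre, u_post = mann_whitney_u(pre_ratings, post_ratings)
print(f"U (pre) = {u_pre}, U (post) = {u_post}")
```

Because only the ordering of the pooled ratings enters the calculation, the result does not depend on treating the distance between adjacent Likert categories as one unit.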

In order to determine if the students had different levels of understanding of each learning strategy, we compared the reported level of understanding across learning strategies for the pre- and post-semester separately using a Friedman test, with the assumption that knowledge of learning strategies would be correlated with one another. We observed significantly different mean ranks between the learning strategies for both the pre-instruction, χ²(4)=25.64, p<0.001, and the post-instruction scores, χ²(4)=30.47, p<0.001 (Figure 3). Post-hoc Wilcoxon signed-rank comparisons revealed the same statistical pattern of differences between learning strategies before and after a semester of instruction. The reported level of understanding was similar between retrieval practice, spaced practice, and elaboration (all ps>0.10). The reported level of understanding was also the same between dual coding and interleaving (p>0.30). Mean rankings for retrieval practice, spaced practice, and elaboration, however, were all higher than those for dual coding and interleaving (all ps<0.03). This observation would indicate that the students enter our classes with a greater level of understanding of these three strategies, and though their self-reported understanding of all strategies increases, it grows only in relation to what they knew coming into the course.
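The Friedman comparison across the five strategies can likewise be sketched briefly. The following Python example is an assumption-laden illustration, not the analysis pipeline used for the results above: the ratings are invented, the names `midranks` and `friedman_chi2` are hypothetical, and the statistic uses the standard correction for tied ranks, which Likert data produce often.

```python
# Illustrative sketch only: the ratings below are invented, not study data.
from collections import Counter


def midranks(values):
    """1-based ranks; tied values share the average of the ranks they span."""
    return [
        sum(v < x for v in values) + (sum(v == x for v in values) + 1) / 2
        for x in values
    ]


def friedman_chi2(rows):
    """Tie-corrected Friedman statistic; rows holds k ratings per student."""
    n, k = len(rows), len(rows[0])
    rank_sums = [0.0] * k
    tie_term = 0
    for row in rows:
        # Rank the k strategies within each student, then sum per strategy.
        for j, rank in enumerate(midranks(row)):
            rank_sums[j] += rank
        tie_term += sum(t * (t * t - 1) for t in Counter(row).values())
    chi2 = 12 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3 * n * (k + 1)
    correction = 1 - tie_term / (n * k * (k * k - 1))
    return chi2 / correction


# Hypothetical ratings; columns are RP, spaced, elaboration, dual, interleaving.
ratings = [
    [5, 5, 4, 3, 2],
    [4, 5, 4, 2, 3],
    [5, 4, 4, 3, 3],
    [4, 4, 5, 2, 2],
    [3, 5, 4, 3, 1],
    [5, 4, 3, 2, 2],
]
statistic = friedman_chi2(ratings)
print(f"chi-square = {statistic:.2f}, df = {len(ratings[0]) - 1}")
```

With five strategies the statistic has four degrees of freedom, which matches the χ²(4) values reported above.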

Students generally reported higher levels of understanding than ability to explain each learning strategy when assessing end-of-semester responses. Using Wilcoxon signed-rank comparisons, we observed a significantly higher rating for understanding than explaining for retrieval practice (Z=−2.65, p<0.01), elaboration (p<0.02), dual coding (p<0.01), and interleaving (p<0.04). The students, however, were equally confident in their understanding of and ability to explain spaced practice (Z=−0.82, p>0.40; Figure 4). This lower rating of their ability to explain some learning strategies may indicate that students have a metacognitive understanding of the intellectual shift needed to explain information to someone else.

Figure 4.

Percentage of student rankings from self-report Likert scale responding to how well they understand and how well they can explain each learning strategy (N=38). Wilcoxon signed-rank tests demonstrated significantly lower ranks for how well the students can explain retrieval practice (RP), elaboration (Elab), dual coding (Dual), and interleaving (Inter) than for how well they understand each. * represents p<0.04.

Student Experiences

To assess the personal experience questions, we compared all aggregated rankings using a one-sample Wilcoxon signed-rank test to a hypothesized median of 3.0, the Likert rating for “neutral”. If the distribution of scores differed significantly from 3.0, then the students were demonstrating a preference for, or against, the experience. Students rated “I would have preferred that the R&RP be led by the instructor” (Z=−2.86, p<0.01) and “I would have preferred to lead the R&RP solo” (p<0.001) as significantly lower than 3.0, indicating that they did not agree with those statements. They rated all other personal experience questions with a distribution significantly higher than 3.0, indicating that they agreed with those statements, all ps<0.001 (Figure 5). Taken together, these data indicate that students found R&RPs led by small teams of students useful and positive.
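The one-sample comparison against the neutral rating can be illustrated in the same spirit. The Python sketch below rests on assumptions: the ratings are invented, the function names are hypothetical, ratings equal to 3.0 are dropped, and Z comes from a normal approximation with a tie correction and no continuity correction. Statistical packages differ in these choices and in the sign convention for Z, so the output should not be expected to reproduce the values reported above.

```python
# Illustrative sketch only: the ratings below are invented, not study data.
import math
from collections import Counter


def midranks(values):
    """1-based ranks; tied values share the average of the ranks they span."""
    return [
        sum(v < x for v in values) + (sum(v == x for v in values) + 1) / 2
        for x in values
    ]


def wilcoxon_one_sample(ratings, hypothesized_median=3.0):
    """Return (W_plus, W_minus, z) for ratings tested against a fixed median."""
    diffs = [r - hypothesized_median for r in ratings if r != hypothesized_median]
    n = len(diffs)
    if n == 0:
        raise ValueError("all ratings equal the hypothesized median")
    abs_diffs = [abs(d) for d in diffs]
    ranks = midranks(abs_diffs)  # rank the sizes of the departures from neutral
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    mean = n * (n + 1) / 4
    tie_term = sum(t ** 3 - t for t in Counter(abs_diffs).values())
    variance = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean) / math.sqrt(variance)  # z > 0: ratings sit above neutral
    return w_plus, w_minus, z


# Hypothetical 1-5 agreement ratings for a single survey statement.
responses = [4, 5, 4, 3, 5, 4, 2, 4, 5, 3, 4, 5]
w_plus, w_minus, z = wilcoxon_one_sample(responses)
print(f"W+ = {w_plus}, W- = {w_minus}, z = {z:.2f}")
```

In this sketch a positive z corresponds to agreement with a statement and a negative z to disagreement.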

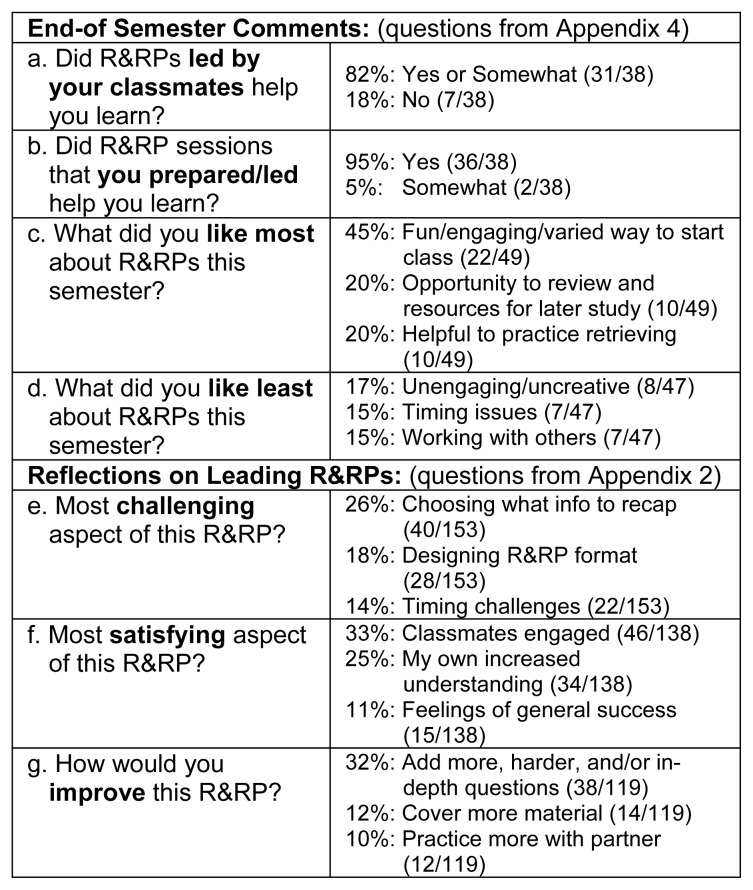

As a more qualitative assessment of student experiences with R&RPs, we coded the open-ended responses on the R&RP reflections that students completed shortly after leading a R&RP in class (Appendix 2) and on the end-of-semester assessments (Appendix 4). The combination of questions allowed us to determine which elements helped the students learn best, which aspects they liked most and least, and what they found most challenging and most satisfying, so that we could update the assignment for future semesters (Figure 6).

Figure 6.

Analysis of narrative student comments on R&RP experiences. The table above presents the most common themes identified by a blinded analyst reviewing anonymous student comments on open-ended narrative questions asked by a voluntary end-of-semester survey (a–d) and by required reflections after presenting R&RPs in class (e–g) regarding specific aspects of R&RP experiences. Sample sizes (N) differ slightly because we coded all themes for each question from the open-ended responses, including multiple themes raised by the same person.

In response to open-ended questions on the end-of-semester survey, 82% of students reported that listening to R&RPs presented by classmates helped them learn (Figure 6a), while 95% reported that the R&RP sessions that they prepped helped them learn the material (Figure 6b). In fact, one student commented, “I’m not sure that listening to the presentations helped as much as making them.” For all students to have the chance to make and present their R&RP, however, they must also be audience members. Another student reported that “the R&RPs gave us the opportunity to see the material again soon after learning it, in order to solidify confusing concepts as well as review previously taught information before going over it again in the next class.” This high percentage of students reporting increased learning when they presented was supported by the open-ended reflection comments, in which 25% identified their own increased understanding of the material as the most satisfying aspect of their R&RP. One student commented, “another satisfying aspect was feeling like I understood the material much better because I was taking the time to understand the material and find the best way to relay it to the class” (Figure 6f). Student reflections also revealed that 33% reported seeing their classmates engage in their activity and demonstrate understanding of the material as a satisfying aspect of R&RPs, and 11% reported a feeling of general success in implementing their own R&RP. As an example, another student stated, “the most satisfying was seeing how well it went and how some questions did have people thinking a little more than others, making them really think and challenging them.”

During the end-of-semester assessment, 45% of students reported that they most liked that R&RPs were fun, engaging, and/or varied ways to start each class; “the different strategies used to present material kept it interesting and enjoyable.” Twenty percent mentioned that they appreciated the opportunity to review the material and have resources for later study; “it was good to have something additional to review off of for exams.” Similarly, 20% mentioned that the act of retrieval practice was helpful; “I definitely felt like I was helped in my learning because it forced me to study along the way” (Figure 6c). Given the similarity in these categories, it is clear that the metacognitive aspects of learning as a presenter, seeing others learn, and feeling engaged with the activity were noted by students in both the immediate and end-of-semester responses to open-ended prompts.

In response to open-ended questions on reflections completed soon after presenting a R&RP, 26% of students noted one of the most challenging aspects was selecting what to recap because they felt overwhelmed with distilling and simplifying the relevant information; “choosing what parts of material from the previous class were central enough to be included in the recap” (Figure 6e). As there was no direct guidance from faculty members unless students chose to meet with us, and only general instructions in the syllabus, this challenge is an example of generative learning, which is certainly more difficult, but also results in better outcomes (Brown et al., 2014). This challenge is strongly related to the other most common challenges cited, with 18% reporting it was hard to decide how to design the R&RP, and 14% struggling with time constraints; “coming up with meaningful questions that would not take a long time”. On the end-of-semester assessment, the students reported their most common dislikes regarding R&RPs (Figure 6d). These responses were more general and holistic in comparison to the challenges reported immediately following the recaps. Seventeen percent reported that the R&RPs were unengaging or lacked creativity, 15% commented that the R&RPs took longer than the allotted time or felt rushed; “time it took and they often got repetitive”, and 15% reported they did not enjoy working with classmates. Unlike the high degree of similarity observed with elements students reported as satisfying, the responses to what was challenging following each R&RP were very different from what students reported disliking overall at the end of the semester. The challenges were more specific to the preparation for each R&RP, while the overall dislikes were more varied, less frequent in number and more individualistic toward preferred learning styles.

Lastly, the students were given an opportunity to reflect on their own experiences following R&RP sessions they led and think about what they would do differently if given the chance. As each student would complete two or three R&RP sessions throughout a semester, this metacognitive question was, in part, intended to allow them to make improvements for their next presentation (Figure 6g). The most common response related to changing, in some way, the questions that were presented to their classmates: 32% of students wanted to include more questions, harder questions, and/or more in-depth questions with more images; “I would improve it by making the questions more challenging and involved, to ensure students truly understood and felt comfortable with the material.” Twelve percent of responses mentioned wanting to cover more material, and 10% mentioned wishing they had practiced more with their partner(s) before the presentation.

DISCUSSION

Student Experiences with R&RPs

Overall, students reported very positive experiences with R&RP sessions in three upper-level STEM classrooms. On anonymous, end-of-semester surveys, students strongly agreed that retrieval practice was valuable (median 5/5) and that they appreciated (median 5/5) the R&RP sessions they experienced (Figure 5). This high level of student regard provides encouraging evidence that students can and will engage well with an active pedagogical strategy known to produce strong learning gains in a wide variety of contexts (Agarwal, 2019; Weinstein et al., 2019a,b).

Importantly, at the end of a semester in a class that featured daily R&RPs, most students rated R&RPs as helpful to their learning (Figure 6) both when they were responsible for preparing and delivering R&RPs (95%) and when R&RPs were delivered by classmates (82%). This experience of reporting more value as the presenter than as a participant is not surprising given considerable evidence for the benefits of learning by teaching (Cohen et al., 1982; Duran, 2017), which intriguingly may be related to benefits of interactivity (Kobayashi, 2019) and/or enhanced retrieval (Koh et al., 2018). As this metacognitive student reports, “I have always found that teaching information to a group of people forces you to understand the information in a better way, which is in turn consolidated”, a sentiment strongly supported in the literature, as teaching is one of the most effective forms of elaboration (Springer et al., 1999). This study did not measure student performance relative to R&RP delivery or reception, though this interesting student perception could be checked via a future study that examines performance on exam questions for which students prepared R&RPs.

Interestingly, students strongly rejected propositions of R&RP sessions led instead by the instructor (median 2/5) or by a solo student (median 1/5; Figure 5), suggesting that the R&RP format we describe here, wherein student duos or trios are responsible for both designing and leading recaps, is preferred. R&RP sessions as a small part of the course grade were exceptionally well-received by students as a group activity for which they preferred responsibility. Given that some faculty members cite student unwillingness to engage actively in class as a barrier to adopting student-centered class activities (Michael, 2007), our experiences with R&RPs suggest this particular in-class activity is strongly welcomed and embraced by our students. Perhaps even more importantly, this student sentiment was consistent across three separate classes at two different institutions. Furthermore, students reported appreciating having different R&RP partners throughout the semester (median 4/5) as well as the ability to swap dates of session leading responsibilities with classmates if needed (median 5/5; Figure 5), suggesting that introducing some flexibility into the logistics was appreciated. Providing moderate assignment flexibility is a practice that aligns well with inclusive pedagogies (Lombardi et al., 2011). Although these logistical considerations were appreciated, in practice our students very infrequently arranged changes to the randomly assigned R&RP partners or dates established at the start of the semester.

Working in teams on graded assignments and non-graded activities can be both challenging and rewarding for students and/or their instructors in many learning situations. It was, therefore, not surprising to learn that some students expressed frustrations working with a partner to develop and deliver R&RPs (Figure 6e). Though some students reported that “sometimes you get matched up with someone you don’t connect with particularly well due to personality and knowledge differences”, or, “I would have liked to be able to choose my partner. Quality of the partner really influenced my quality of experience,” others indicated that “doing it with another student made it easier to review the information if it was confusing.”

Contemporary STEM research is predominantly conducted in teams (Wuchty et al., 2007), thus it is very appropriate for STEM courses preparing the next generation of scientists to incorporate opportunities for students to develop their collaboration skills. In fact, group work aligns with recommendations from scientific organizations for best practices in undergraduate STEM education to prepare the scientific workforce for an increasingly collaborative scientific enterprise (Springer et al., 1999; NRC, 2003; AAAS, 2011). Emphasizing to students this important rationale for team projects, assigning roles, rotating partners, and/or sharing previous student opinions that discouraged solo R&RPs may reduce some student frustrations with R&RP partners (Springer et al., 1999; Chang & Brickman, 2018).

In executing their R&RPs students predominantly relied upon worksheet and game formats for organizing retrieval practice sessions for their classmates (Figure 1). Comments indicated that even without consequences for incorrect responses, some students found the inherently competitive nature of game formats engaging, such as, “I like how excited/competitive our class got about retrieval practices - it made it much more fun to learn.” Others found the same games unappealing because of the competitive nature or described fast paced R&RPs as “extremely stressful at times.” In addition, some found worksheets to be boring or repetitive, while others found that resource helpful while preparing for an exam. Thus, encouraging students to consider a wide variety of formats for R&RP sessions is warranted.

Although students in all three courses were required to provide the instructor with the retrieval practice questions or prompts that could be archived on the course management website for all students to access later, some students indicated a preference for having immediately tangible handouts or other takeaways from the R&RPs. Some formats, particularly ephemeral online game platforms such as Kahoot, can be engaging in the moment yet make it challenging to archive the questions for sharing later with students. Consequently, we recommend that students be required to submit R&RP materials to the instructor in formats that can be readily archived for later access by their classmates, even if in a format different from the presentation format (such as a simple document listing the questions and responses entered into the Kahoot platform).

Learning Strategies

Embedded in R&RPs were expectations that students would consider additional practices with strong evidence for fostering effective learning (elaboration, interleaving, dual coding, concrete examples). When asked to reflect on which of these additional strategies they deployed in their R&RP sessions (Figure 2), presenters self-reported roughly equal use of dual coding and elaboration, with slightly less use of concrete examples, and least use of interleaving. This study did not explore participant or instructor definitions of learning strategies deployed in R&RPs, which could provide interesting contrast or confirmation of self-assessments. Interestingly, when asked to rate their understanding of and ability to explain these learning strategies, students reported the least confidence with the concept of interleaving and most confidence with the concepts of retrieval practice and spaced practice (Figure 4). These observations align; all students necessarily designed retrieval practice sessions for their classmates and heard the term frequently in class, whereas interleaving was only explicitly mentioned when introducing learning strategies early in the semester. Interleaving was more difficult to implement as most R&RP sessions were confined to the specific boundaries of the previous class period’s content, not permitting the inclusion of information via spaced practice (Linderholm et al., 2016). As with interleaving, students reported less understanding of or ability to explain dual coding (Figure 4), but unlike interleaving, students often reported using dual coding as a R&RP strategy (Figure 2); thus, frequency of reported use does not correlate fully with student understanding of a learning strategy at the end of the semester.

To estimate incoming knowledge of learning strategies, naïve students in a similar fourth course (PSYC323 in Spring 2019) self-reported their confidence on the strategies. Given the strong similarity in responses between the three courses and two institutions, we felt confident that the data collected from this new class would be representative of incoming learning strategy knowledge. These pre-test results were significantly lower than the end-of-semester results from students in three courses using R&RPs, suggesting that students can increase their understanding of these best practices in learning throughout the semester. In line with the other data, the naïve students reported a lower understanding of interleaving and dual coding than retrieval practice, elaboration, and spaced practice (Figure 3). These differences in understanding between the learning strategies remained, however, even after a semester of use, which might indicate an area for future instructional emphasis.

In all cases where students were asked about their understanding versus their ability to explain a specific learning strategy, they rated their level of understanding as higher than their ability to explain (Figure 3). This interesting result is consistent with common overconfidence effects that reveal subjective confidence biases in understanding that exceed ability (Pallier et al., 2002; Moore & Healey, 2008; Fisher et al., 2015). In addition, understanding any concept will fall at a lower and easier category along the continuum of Bloom’s taxonomy of learning than does the more active form of explanation that requires students use stricter decision criteria (Anderson & Krathwohl, 2001). This study did not explicitly assess student understandings of the highlighted learning strategies, require students to explain each of the learning strategies to assess their knowledge more directly, or explore metacognitive dimensions such as why students felt they learned more by leading R&RPs. In addition, we did not assess the students’ application of those learning strategies in other courses. These are all potential avenues for future investigation.

Instructor Experiences with R&RPs

An important feature of this study was that R&RPs were initially piloted by two instructors separately. The instructors then came together to study student experiences. Implementation parameters such as R&RP descriptions, logistics, grading, and assessments were intentionally designed to be as similar as possible for the two instructors, two institutions, and three upper-level STEM courses that did not overlap in content and varied in delivery format (BIO306 was a studio lab course that met for six hours/week (Round & Lom, 2015); PSYC345 was a lecture course that met for three hours/week; and PSYC323 had three hours of lecture per week as well as a weekly lab session). Our collaboration indicates that student experiences with R&RPs were not specific to one instructor, institution, or course. Although both institutions are categorized as liberal arts colleges with small class enrollments, the results presented here suggest the benefits of R&RPs are likely broadly transferable to a wide variety of students, courses, instructors, and institutions. We note that we deployed R&RPs exclusively in upper-level STEM courses that did not enroll first-year students. Thus, additional considerations and modifications may be needed if R&RPs are implemented in introductory courses where students may have less experience, confidence, and/or identity with the discipline and/or with college generally.

One instructor (AJS) had an assigned student teaching assistant (TA) for her courses deploying R&RPs, which permitted a required meeting with the TA in advance of the R&RP presentation, an otherwise unsustainable schedule without a TA. TA expertise, investment, and guidance can therefore be a variable that may have affected the quality of student-led R&RPs. The other instructor (BL), at an institution that does not use TAs, openly offered (but did not require) students to meet with the instructor in advance. Very few students sought feedback on their R&RPs in advance. This study did not attempt to measure quality of student-led R&RP sessions, though both instructors describe R&RP quality as high and R&RP grades as very strong. Moreover, that students rated R&RP sessions as valuable and helpful to their learning and disliked the suggestion of instructor-led R&RPs also suggests, indirectly, that R&RP quality was strong both with and without the involvement of a TA.

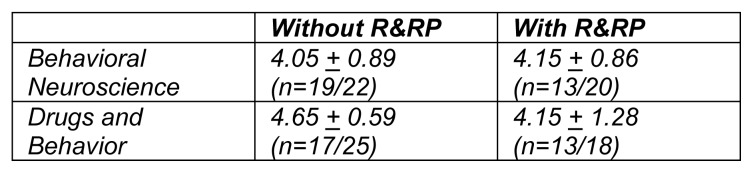

Importantly, and in support of the adoption of active learning in more classrooms, course evaluations were not negatively impacted when we implemented R&RP. In the College of Wooster campus-wide student evaluation system, students respond to the prompt, “I would rate the course overall as,” on a Likert scale from 1 (poor) to 5 (excellent) (Figure 7). Very few open-ended comments mentioned R&RP; however, we believe that is a consequence of asking students to complete a specific R&RP assessment (Appendix 4) in parallel. Evaluations from the Drugs and Behavior course do show a slight drop, but this is paired with an increase in the standard deviation, indicating that a small number of students rated the course more negatively when R&RP was present; the median and mode for that semester, however, were both five. Davidson College course evaluation forms solicited exclusively narrative feedback, preventing quantitative comparisons.

Figure 7.

R&RP implementation did not negatively impact student course evaluation metrics. Course evaluation averages and SDs were taken from the standard campus-wide evaluation question, “I would rate the course overall as,” which rates the course on a Likert scale of 1 (poor) to 5 (excellent), in the course offering immediately before implementing R&RP sessions and then during their use. n represents the number of student responses.

During the R&RP sessions both instructors took simple notes using the shared grading rubric (Appendix 1), which allowed grading R&RPs to be an efficient endeavor that could be completed rapidly and often in class. Moreover, the ability to measure student participation during R&RPs objectively was another benefit one instructor (AJS) experienced. Class participation, a frequently subjective metric that can be challenging to collect when the instructor is leading class, can be more accurately recorded when the instructor is focused on observing class dynamics. The other instructor (BL) found that participating in R&RP activities along with her students was an enjoyable experience and potentially contributed to strong class comradery. She also found that students creatively used supplies provided in the classroom for R&RPs such as inexpensive hand-held whiteboards, battery-operated game-show buzzers, markers, sticky notes, etc.

The instructors enjoyed the collaborative benefits of having a pedagogical partner off-campus with whom to discuss strategies for both R&RP implementation and assessment, a situation that is rare in most of our efforts to improve our teaching. Such partnerships are often beneficial for faculty members’ ongoing professional development as effective educators (Martela, 2014; Seltzer, 2015; Berg & Seeber, 2016).

A small disadvantage of student-led R&RP sessions we experienced was that the short, no-stakes, formative retrieval practice formats produced prompts that sometimes did not reflect the types of summative assessment opportunities that we as instructors designed and factored into course grades. For example, student-generated quiz questions sometimes focused on ancillary details rather than central concepts and/or relied on lower levels of understanding such as remembering and recognizing. Less frequently, student-generated questions on short R&RP exercises rose to levels of application, analysis, or evaluation. We did not specifically prompt or expect our students to push their peers to high levels of Bloom’s taxonomy but noted informally that questions we each wrote for our exams were often pitched at higher levels. To mitigate such discrepancies, an instructor could certainly implement R&RPs in ways that expect and encourage students to design R&RP questions at higher levels. Submitting R&RP drafts to, or meeting with, an instructor or TA in advance could also align diagnostic or formative low-stakes R&RP questions more directly with questions used on summative, higher-stakes exams and assessments.

Inclusivity Considerations

We were very pleased that our students took strong ownership of R&RP sessions as presenters and participated actively to support their classmates. Many effective inclusive pedagogies support the incorporation of opportunities for students to exercise active choices in course content and/or dynamics, and in fact some suggest requiring active participation from all participants (Nilson, 2013; Tanner, 2013). We believe that creating an opportunity for each student to lead R&RP sessions signals that we, as instructors, have trust and confidence in their intelligence and abilities, signals that can be critically important for students least likely to persist in STEM (Tsui, 2007; Tanner, 2013). Moreover, we intended R&RPs to help facilitate a shared sense of collaboration and community, with built-in peer engagement, another critically important aspect of inclusive college teaching (Tsui, 2007; Palmer et al., 2011; McGuire, 2015). Recognition that the assignment and evaluation expectations were clear is a practice that aligns well with inclusive practices that emphasize making course structure and evaluation criteria explicit for all students (Allen & Tanner, 2006; Eddy & Hogan, 2015; Penner, 2018). Thus, it is imperative that welcoming and inclusive teaching methods be fostered in contemporary undergraduate STEM classrooms to ensure we as educators are reducing bias and are preparing a diverse next generation of scientists who will tackle complex scientific challenges (Dewsbury, 2017; Asplund & Welle, 2018; Asai, 2019; Grogan, 2019).

Conclusion

Overall, both students and instructors found R&RPs to be effective additions to our active classroom learning strategies. Students were required to engage with evidence-based learning strategies every time it was their turn to present a R&RP. They had to retrieve the relevant information in a spaced format (rather than the night before an exam), they had to choose what was most relevant, and then decide how to best present it to their classmates (elaborative rehearsal, dual coding, interleaving). One student commented, “the stress of an additional assignment is never something a student actively wants, but [R&RP] actually helps so it’s worth it.” Benefits were not limited to the presenters because during each R&RP session audience members experienced regular practice testing to gauge their understanding, in a no-stakes fashion. As one student summarized, R&RPs “were like learning checks (without the penalties) to help understand what I needed to work harder on.” Together the small groups of presenters, and the class as a whole, created a shared experience with peer support. The faculty members enjoyed giving the power of the first 5–10 minutes of each class to the students to allow them an opportunity to shine, which very effectively set a strong tone for collaboration, fun, and engagement in the learning process throughout the semester.

Appendix 1. R&RP Grading Rubric

| Criterion | Rating* | Multiplier | Max Points |
|---|---|---|---|
| Format & Content | | | |
| Original, synthetic, thoughtful work | 0–5 | 2.5 | 12.5 |
| Scientific information was accurate | 0–5 | 2.5 | 12.5 |
| Significance, take home message(s), big ideas, and/or general principles highlighted | 0–5 | 2 | 10 |
| Information was appropriate/relevant | 0–5 | 2 | 10 |
| Activity encouraged participants to retrieve information in a low-stakes fashion | 0–5 | 2 | 10 |
| Two or more deployed: interleaving, elaboration, dual coding, concrete examples | 0–5 | 2 | 10 |
| Reflection | | | |
| Reflection (Appendix 2) completed within 48 hours of presentation | 0–5 | 2.5 | 12.5 |
| Reflection (Appendix 2) completed with thought/care/specifics | 0–5 | 2.5 | 12.5 |
| Logistics | | | |
| Shareable resource created | 0–5 | 1 | 5 |
| Shareable resource provided to instructor before class | 0–5 | 1 | 5 |
| Total | | | 100 |

5 = Excellent (complete and exceptional or flawless work)

4 = Very Good (strong, complete work; minor improvements possible)

3 = Good (acceptable, complete work; minor/moderate improvements needed)

2 = Fair (work that meets minimal requirements; moderate improvements needed)

1 = Poor (weak and/or incomplete work that does not meet minimal requirements; significant improvements needed)

0 = Absent (work that was not attempted/completed/submitted)
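The rubric arithmetic above (each 0–5 rating multiplied by its row's multiplier, then summed to a maximum of 100 points) can be illustrated with a minimal Python sketch. This is only an illustration of the calculation implied by the table; the names `RUBRIC`, `score_rrp`, and `max_points` are hypothetical and are not part of the course materials, and the criterion labels are abbreviated from the table.

```python
# Rubric rows from Appendix 1 as (abbreviated criterion, multiplier).
# Each criterion is rated 0-5; points = rating * multiplier.
# All names here are hypothetical, chosen for illustration only.
RUBRIC = [
    ("Original, synthetic, thoughtful work", 2.5),
    ("Scientific information was accurate", 2.5),
    ("Significance / big ideas highlighted", 2.0),
    ("Information was appropriate/relevant", 2.0),
    ("Low-stakes retrieval activity", 2.0),
    ("Two or more learning strategies deployed", 2.0),
    ("Reflection completed within 48 hours", 2.5),
    ("Reflection completed with thought/care/specifics", 2.5),
    ("Shareable resource created", 1.0),
    ("Shareable resource provided before class", 1.0),
]

MAX_RATING = 5


def max_points():
    """Maximum achievable total: every criterion rated 5."""
    return sum(multiplier * MAX_RATING for _, multiplier in RUBRIC)


def score_rrp(ratings):
    """Return the weighted total for ten 0-5 ratings given in rubric order."""
    if len(ratings) != len(RUBRIC):
        raise ValueError("expected one rating per rubric row")
    total = 0.0
    for (criterion, multiplier), rating in zip(RUBRIC, ratings):
        if not 0 <= rating <= MAX_RATING:
            raise ValueError(f"rating out of range for: {criterion}")
        total += rating * multiplier
    return total
```

For instance, under this sketch a presentation rated 4 (Very Good) on every row would total 80 of the 100 available points.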

Appendix 2. R&RP Reflection Questions

Your name.


When are you submitting this reflection?

On time – within 48 hours of my presentation.

Late – using one of my 24-hour “life happens” extensions (submitting 48–72 hours after my presentation)

Late – using both of my 24-hour “life happens” extensions (submitting 72–96 hours after my presentation).

Late – not using a free “life happens” extension (penalty described in syllabus)


Our R&R used the following techniques:

interleaving

elaboration

dual coding

concrete examples

If you used interleaving briefly explain how/where.

If you used elaboration briefly explain how/where.

If you used dual coding briefly explain how/where.

If you used concrete examples briefly explain how/where.

The most challenging aspect of preparing and/or executing this R&RP was _____.

The most satisfying aspect of preparing and/or executing this R&RP was _____.

If we had a free “redo” and plenty of time I would improve this R&RP by _____.

My partner’s contributions to this R&RP were _____.

My contributions to this R&RP were _____.

Please share at least one additional thought or reflection regarding your R&RP that was not elicited or captured in the questions above.

Appendix 3. Syllabus Descriptions of R&RP Assignments

Behavioral Neuroscience (PSYC323) & Drugs and Behavior (PSYC345) courses (2 × 5% each = 10% of course grade):

Good pedagogical practice says that we should spend a few minutes each class reviewing and retrieving what we covered the class before – it gets us all up to speed, ready to move forward, and strengthens the neuronal connections. But, it’s much more engaging and exciting for you to recap the information, than it is for you all to listen to me for even five more minutes. So, two times throughout the semester, you and a partner or two will spend the first five minutes recapping the previous class. R&RPs are short reviews of the most important concepts discussed in the previous class session. To receive full credit, students must review information in a new way (simply condensing the instructor’s slides is insufficient). A successful recap demonstrates synthesis, prioritization, and understanding of the previous lesson’s content via strong oral and visual delivery that includes an original aid for the class such as a slide, handout, poster, infographic, video, etc. Each R&RP session must include some retrieval practice that challenges classmates to use, apply, and retrieve previous knowledge in a low-stakes way – you should refer to our first lab readings for empirically supported successful learning strategies (i.e., elaboration, dual coding, concrete examples, practice testing). You must meet with your TA before your recap and provide her with any handout that requires photocopying or uploading to the Moodle page. After the R&RP each member of the team must independently complete a short online reflection survey – link is on the Moodle site.

Developmental Biology (BIO306) course (3 × 3% each = 9% of course grade):

R&RPs are short (8–10 min) student-led reviews of the most important concepts discussed in the previous class session (including reading and/or flipped lecture) presented by a pair of students. A successful recap demonstrates synthesis, prioritization, and understanding of the previous lesson’s content via strong oral and visual delivery that includes a new aid for the class such as a handout, poster, infographic, video, etc. (simply condensing the instructor’s slides is insufficient). Each R&RP must also include active retrieval practice elements that encourage classmates to use, apply, and retrieve previous knowledge in a low-stakes fashion. After a R&RP, each leader must also independently complete a short on-line reflection.

Appendix 4. End-of-Semester R&RP Survey Questions


Which course are you enrolled in?

Davidson College - BIO306 – Developmental Biology (Dr. Lom)

College of Wooster – PSYC323 - Behavioral Neuroscience (Dr. Stavnezer)

College of Wooster – PSYC345 – Drugs & Behavior (Dr. Stavnezer)


Please rate the following: (strongly agree, agree, neutral, disagree, strongly disagree):

I would have preferred R&RPs led by the instructor.

I would have preferred solo led R&RPs.

I appreciated the ability to swap R&RP dates/partners as needed.

I appreciated being scheduled with a different partner for each of the R&RPs I led.

I understood how I was being evaluated on R&RPs.

I see value in retrieval practice.

I appreciated the R&RP sessions.


Please rate the following: (strongly agree, agree, neutral, disagree, strongly disagree):

I understand retrieval practice.

I understand interleaving.

I understand spaced practice.

I understand elaboration.

I understand dual coding.

I could explain retrieval practice.

I could explain interleaving.

I could explain spaced practice.

I could explain elaboration.

I could explain dual coding.


Did R&RPs led by your classmates help you learn?

Briefly explain your experience.


Did R&RP sessions that you prepared/led help you learn?

Briefly explain your experiences.

What did you like most about R&RPs this semester?

What did you like least about R&RPs this semester?

What other information would you like to share related to R&RPs this semester?

Footnotes

The authors thank their BIO251, BIO306, PSYC323, and PSYC345 students for creating and presenting engaging R&RP sessions and for providing candid feedback on their experiences, Olivia Rostagni for careful assistance with data analysis, and colleagues Kristi Multhaup, Laura Sockol, Lauren Stutts, Katie Boes, Marian Frazier, and Missy Schen for helpful consultation.

REFERENCES

- Agarwal PK. Retrieval Practice. 2019. Available at http://www.retrievalpractice.org/retrievalpractice.

- Allen D, Tanner K. Rubrics: Tools for making learning goals and evaluation criteria explicit for both teachers and learners. CBE Life Sci Educ. 2006;5:197–203. doi: 10.1187/cbe.06-06-0168. [DOI] [PMC free article] [PubMed] [Google Scholar]

- American Association for the Advancement of Science. Vision and change in undergraduate biology education: A call to action. Washington, DC: American Association for the Advancement of Science; 2011. [Google Scholar]

- Anderson LW, Krathwohl DR. A taxonomy of learning, teaching, and assessing: A revision of Bloom’s taxonomy of educational objectives. New York, NY: Longman; 2001. [Google Scholar]

- Aragón OR, Eddy SL, Graham MJ. Faculty beliefs about intelligence are related to the adoption of active-learning practices. CBE Life Sci Educ. 2018;17:ar47. doi: 10.1187/cbe.17-05-0084. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Asai D. To learn inclusion skills, make it personal. Nature. 2019;565:537. doi: 10.1038/d41586-019-00282-y. [DOI] [PubMed] [Google Scholar]

- Asplund M, Welle CG. Advancing science: How bias holds us back. Neuron. 2018;99:635–39. doi: 10.1016/j.neuron.2018.07.045. [DOI] [PubMed] [Google Scholar]

- Baepler P, Walker JD, Brooks DC, Saichaie K, Petersen CI. A guide to teaching in the active learning classroom: History, Research and practice. Sterling, VA: Stylus; 2016. [Google Scholar]

- Ballen CJ, Wieman C, Salehi S, Searle JB, Zamudio KR. Enhancing diversity in undergraduate science: Self-efficacy drives performance gains with active learning. CBE Life Sci Educ. 2018;16:ar56. doi: 10.1187/cbe.16-12-0344.

- Barral AM, Ardi-Pastores VC, Simmons RE. Student learning in an accelerated introductory biology course is significantly enhanced by a flipped learning environment. CBE Life Sci Educ. 2018;17:ar38. doi: 10.1187/cbe.17-07-0129.

- Beichner RJ, Saul JM, Abbott DS, Morse JJ, Deardorff DL, Allain RJ, Bonham SW, Dancy MH, Risley JS. Redish EF, Cooney P, editors. The student-centered activities for large enrollment undergraduate programs (SCALE-UP) project. Research-Based Reform of University Physics. 2007. Available at https://www.compadre.org/PER/per_reviews/volume1.cfm.

- Benjamin AS, Tullis J. What Makes Distributed Practice Effective? Cog Psych. 2010;61:228–247. doi: 10.1016/j.cogpsych.2010.05.004.

- Berg M, Seeber BK. The Slow Professor: Challenging the Culture of Speed in the Academy. Toronto, ON: University of Toronto Press; 2016.

- Bradforth SE, Miller ER, Dichtel WR, Leibovich AK, Feig AL, Martin D, Bjorkman KS, Shultz ZD, Smith TL. Improve Undergraduate Science Education. Nature. 2015;523:282–285. doi: 10.1038/523282a.

- Brown PC, Roediger HL, McDaniel MA. Make it stick: The science of successful learning. Cambridge, MA: The Belknap Press of Harvard University; 2014.

- Brownell SE, Tanner KD. Barriers to faculty pedagogical change: Lack of training, time, incentives, and…tensions with professional identity? CBE Life Sci Educ. 2012;11:339–46. doi: 10.1187/cbe.12-09-0163.

- Cahill A, Bloch-Schulman S. Argumentation step by step: Learning critical thinking through deliberative practice. Teach Philo. 2012;35:41–62.

- Canning EA, Muenks K, Green DJ, Murphy MC. STEM faculty who believe ability is fixed have larger racial achievement gaps and inspire less student motivation in their classes. Sci Adv. 2019;5:eaau4734. doi: 10.1126/sciadv.aau4734.

- Carpenter SK, Cepeda NJ, Rohrer D, Kang SHK, Pashler H. Using spacing to enhance diverse forms of learning: Review of recent research and implications for instruction. Educ Psych Rev. 2012;24:369–78.

- Chang Y, Brickman P. When group work doesn’t work: Insights from students. CBE Life Sci Educ. 2018;17:ar52. doi: 10.1187/cbe.17-09-0199.

- Cohen PA, Kulik JA, Kulik CLC. Educational outcomes of tutoring: A meta-analysis of findings. Am Educ Res J. 1982;19:237–48.

- Cotner S, Loper J, Walker JD, Brooks DC. It’s not you, it’s the room – are high-tech, active learning classrooms worth it? J Coll Sci Teach. 2013;42:82–88.

- Dewsbury B. On faculty development of STEM inclusive teaching practices. FEMS Microbio Lett. 2017;364:fnx179. doi: 10.1093/femsle/fnx179.

- Dunlosky J. Strengthening the student toolbox: Study strategies to boost learning. Am Educ. 2013;37:12–21.

- Dunlosky J, Rawson KA, Marsh EJ, Nathan MJ, Willingham DT. What works, what doesn’t. Sci Am Mind. 2013;24:47–53.

- Duran D. Learning-by-teaching: Evidence and implications as a pedagogical mechanism. Innov Educ Teach Int. 2017;54:476–84.

- Dweck CS. Mindset: The new psychology of success. New York, NY: Ballantine Books; 2007.

- Eddy SL, Hogan KA. Getting under the hood: How and for whom does increasing course structure work? CBE Life Sci Educ. 2014;13:453–68. doi: 10.1187/cbe.14-03-0050.

- Eyler J. “Active learning” has become a buzzword (and why that matters). Rice Center for Teaching Excellence; 2018. Available at https://cte.rice.edu/blogarchive/2018/7/16/active-learning-has-become-a-buzz-word.

- Faust JL, Paulson DR. Active learning in the college classroom. J Excel Coll Teach. 1998;9:3–24.

- Fisher M, Goddu MK, Keil FC. Searching for explanations: How the internet inflates estimates of internal knowledge. J Exp Psych. 2015;144:674–87. doi: 10.1037/xge0000070.

- Freeman S, Eddy SL, McDonough M, Smith MK, Okoroafor N, Jordt H, Wenderoth MP. Active learning increases student performance in science, engineering, and mathematics. PNAS. 2014;111:8410–15. doi: 10.1073/pnas.1319030111.

- Grogan KE. How the entire scientific community can confront gender bias in the workplace. Nat Ecol Evol. 2019;3:3–6. doi: 10.1038/s41559-018-0747-4.

- Haak DC, HilleRisLambers L, Pitre E, Freeman S. Increased structure and active learning reduce the achievement gap in introductory biology. Science. 2011;332:1213–16. doi: 10.1126/science.1204820.

- Halonen JS, Dunn DS. Does ‘high-impact’ teaching cause high-impact fatigue? Chron Higher Educ. 2018. Available at https://www.chronicle.com/article/Does-High_impact-/245159.

- Harrington C, Zakrajsek TD. Dynamic lecturing: Research-based strategies to enhance lecture effectiveness. Sterling, VA: Stylus; 2017.

- Hoffman R, McGuire SY. Teaching and learning strategies that work. Science. 2009;325:1203–04. doi: 10.1126/science.325_1203.

- Henderson C, Khan R, Dancy M. Will my student evaluations decrease if I adopt an active learning instructional strategy? Am J Phys. 2018;86:934.

- Hoskins S. Using a paradigm shift to teach neurobiology and the nature of science – a C.R.E.A.T.E.-based approach. J Undergrad Neurosci Educ. 2008;6:A40–52.

- Jensen JL, Kummer TA, Godoy PDdM. Improvements from a flipped classroom may simply be the fruits of active learning. CBE Life Sci Educ. 2015;14:ar5. doi: 10.1187/cbe.14-08-0129.

- Karpicke JD, Roediger HL. The critical importance of retrieval for learning. Science. 2008;319:966–68. doi: 10.1126/science.1152408.

- Karpicke JD, Butler AC, Roediger HL III. Metacognitive strategies in student learning: Do students practice retrieval when they study on their own? Memory. 2009;17:471–79. doi: 10.1080/09658210802647009.

- Kobayashi K. Interactivity: A potential determinant of learning by preparing to teach and teaching. Front Psych. 2019;9:2755. doi: 10.3389/fpsyg.2018.02755.

- Koh AWL, Lee SZ, Lim SWH. The learning benefits of teaching: A retrieval practice hypothesis. Appl Cog Psych. 2018;32:401–10.

- Knight JK, Wood WB. Teaching More by Lecturing Less. Cell Biol Educ. 2005;4:298–310. doi: 10.1187/05-06-0082.

- Lang JM. Small teaching: Everyday lessons from the science of learning. San Francisco, CA: Jossey-Bass; 2016.

- Linderholm T, Dobson J, Yarbrough MB. The benefit of self-testing and interleaving for synthesizing concepts across multiple physiology texts. Adv Physiol Educ. 2016;40:329–34. doi: 10.1152/advan.00157.2015.

- Lom B. Classroom activities: Simple strategies to incorporate student-centered activities within undergraduate science lectures. J Undergrad Neurosci Educ. 2012;11:A64–71.

- Lombardi AR, Murray C, Gerdes H. College faculty and inclusive instruction: Self-reported attitudes and actions pertaining to universal design. J Diver High Educ. 2011;4:250–61.

- Lujan HL, DiCarlo SE. Too much teaching, not enough learning: What is the solution? Adv Physiol Educ. 2006;30:17–22. doi: 10.1152/advan.00061.2005.

- Martela F. Sharing well-being in a work community – Exploring well-being-generating relational systems. Res Emotion Org. 2014;10:79–110.

- Mayer RE, Anderson RB. The instructive animation: Helping students build connections between words and pictures in multimedia learning. J Educ Psych. 1992;4:444–52.

- Mazur E. Peer Instruction: A User’s Manual. Upper Saddle River, NJ: Prentice-Hall; 1997.

- McDaniel MA, Donnelly CM. Learning with Analogy and Elaborative Interrogation. J Educ Psych. 1996;88:508–519.

- McGuire S. Teach Students How to Learn: Strategies You Can Incorporate into Any Course to Improve Student Metacognition, Study Skills, and Motivation. Sterling, VA: Stylus; 2015.

- Michael J. Where’s the Evidence that Active Learning Works? Adv Physiol Educ. 2006;30:159–167. doi: 10.1152/advan.00053.2006.

- Michael J. Faculty perceptions about barriers to active learning. J Coll Teach. 2007;55:42–47.

- Miller CJ, Metz MJ. A comparison of professional-level faculty and student perceptions of active learning: Its current use, effectiveness, and barriers. Adv Physiol Educ. 2014;38:246–52. doi: 10.1152/advan.00014.2014.

- Moore DA, Healy PJ. The trouble with overconfidence. Psych Rev. 2008;115:502–17. doi: 10.1037/0033-295X.115.2.502.

- National Research Council. BIO2010: Transforming undergraduate education for future research biologists. Washington, DC: The National Academies Press; 2003.

- National Research Council. Discipline-based education research: Understanding and improving learning in undergraduate science and engineering. Washington, DC: The National Academies Press; 2012.

- Nilson L. Creating Self-Regulated Learners: Strategies to Strengthen Students’ Self-Awareness and Learning Skills. Sterling, VA: Stylus; 2013.

- Pallier G, Wilkinson R, Danthiir V, Kleitman S, Knezevic G, Stankov L, Roberts RD. The Role of Individual Differences in the Accuracy of Confidence Judgements. J Gen Psych. 2002;129:257–299. doi: 10.1080/00221300209602099.

- Palmer RT, Maramba DC, Dancy TW II. A Qualitative Investigation of Factors Promoting the Retention and Persistence of Students of Color in STEM. J Negro Educ. 2011;80:491–504.

- Penner M. Building an Inclusive Classroom. J Undergrad Neurosci Educ. 2018;16:A268–A272.

- Prince M. Does Active Learning Work? A Review of the Research. J Eng Educ. 2004;93:223–231.

- Rawson KA, Thomas RC, Jacoby LL. The power of examples: Illustrative examples enhance conceptual learning of declarative concepts. Educ Psych Rev. 2014;27:483–504.

- Roediger HL III, Butler AC. The critical role of retrieval practice in long-term retention. Trends Cog Sci. 2011;15:20–27. doi: 10.1016/j.tics.2010.09.003.

- Roediger HL, Putnam AL, Smith MA. Ten benefits of testing and their applications to educational practice. In: Mestre J, Ross B, editors. Psychology of learning and motivation. Oxford, UK: Elsevier; 2011. pp. 1–36.

- Rohrer D. Interleaving helps students distinguish among similar concepts. Educ Psych Rev. 2012;24:355–57.

- Round J, Lom B. In situ teaching: Fusing labs and lectures in undergraduate courses to enhance immersion in scientific research. J Undergrad Neurosci Educ. 2015;13:A206–14.

- Seltzer R. The coach’s guide for women professors who want a successful career and a well-balanced life. Sterling, VA: Stylus; 2015.

- Smith MK, Jones FH, Gilbert S, Wieman CE. The classroom observation protocol for undergraduate STEM (COPUS): A new instrument to characterize university STEM classroom practices. CBE Life Sci Educ. 2013;12:618–27. doi: 10.1187/cbe.13-08-0154.

- Springer L, Stanne ME, Donovan SS. Effects of small-group learning on undergraduates in science, mathematics, engineering, and technology: A meta-analysis. Rev Educ Res. 1999;69:21–51.

- Stains M, et al. Anatomy of STEM teaching in North American universities. Science. 2018;359:1468–70. doi: 10.1126/science.aap8892.

- Supiano B. Traditional teaching may deepen inequality. Can a different approach fix it? Chron High Educ. 2018. Available at https://www.chronicle.com/article/Traditional-Teaching-May/243339.

- Tanner KD. Structure matters: Twenty-one teaching strategies to promote student engagement and cultivate classroom equity. CBE Life Sci Educ. 2013;12:322–331. doi: 10.1187/cbe.13-06-0115.

- Tsui L. Effective strategies to increase diversity in STEM fields: A review of the research literature. J Negro Educ. 2007;76:555–581.