Abstract

Background

Decision-making is the process of choosing and performing actions in response to sensory cues to achieve behavioral goals. Many mathematical models have been developed to describe the choice behavior and response time (RT) distributions of observers performing decision-making tasks. However, relatively few researchers use these models because it demands expertise in various numerical, statistical, and software techniques.

New method

We present a toolbox — Choices and Response Times in R, or ChaRTr — that provides the user the ability to implement and test a wide variety of decision-making models ranging from classic through to modern versions of the diffusion decision model, to models with urgency signals, or collapsing boundaries.

Results

In three different case studies, we demonstrate how ChaRTr can be used to effortlessly discriminate between multiple models of decision-making behavior. We also provide guidance on how to extend the toolbox to incorporate future developments in decision-making models.

Comparison with existing method(s)

Existing software packages surmounted some of the numerical issues but have often focused on the classical decision-making model, the diffusion decision model. Recent models that posit roles for urgency, time-varying decision thresholds, noise in various aspects of the decision-formation process or low pass filtering of sensory evidence have proven to be challenging to incorporate in a coherent software framework that permits quantitative evaluation among these competing classes of decision-making models.

Conclusion

ChaRTr can be used to make insightful statements about the cognitive processes underlying observed decision-making behavior and ultimately for deeper insights into decision mechanisms.

Keywords: Decision making, Choice, Response time (RT), Diffusion decision model, DDM, Urgency gating, AIC, BIC, Model selection

1. Introduction

Perceptual decision-making is the process of choosing and performing appropriate actions in response to sensory cues to achieve behavioral goals (Freedman and Assad, 2011; Hoshi, 2013; Shadlen and Newsome, 2001; Gold and Shadlen, 2007; Shadlen and Kiani, 2013; O’Connell et al., 2018a). A sophisticated research effort in multiple fields has led to the formulation of cognitive process models to describe decision-making behavior (Donkin and Brown, 2018; Ratcliff et al., 2016). The majority of these models are grounded in the “sequential sampling” framework, which posits that decision-making involves the gradual accumulation of noisy sensory evidence over time until a bound (or criterion/threshold) is reached (Forstmann et al., 2016; Ratcliff et al., 2016; Shadlen and Kiani, 2013; Brunton et al., 2013; Ratcliff and Rouder, 1998; Ratcliff and McKoon, 2008; Gold and Shadlen, 2007; Hanks et al., 2014). Models derived from the sequential sampling framework are typically elaborated with various systematic and random components so as to implement assumptions and hypotheses about the underlying cognitive processes involved in perceptual decision-making (Ratcliff et al., 2016; Diederich, 1997).

The most prominent sequential sampling model of decision-making is the diffusion decision model (DDM), which has an impressive history of success in explaining the behavior of human and animal observers (e.g., Forstmann et al., 2016; Ratcliff et al., 2016; Palmer et al., 2005; Tsunada et al., 2016; Ding and Gold, 2012a,b). However, recent studies propose alternative sequential sampling models that do not involve the integration of sensory evidence over time. Instead, novel sensory evidence is multiplied by an urgency signal that increases with elapsed decision time, and a decision is made when the signal exceeds the criterion (Ditterich, 2006a; Thura et al., 2012; Cisek et al., 2009). Another line of research proposes that observers aim to maximize their reward rate and suggests that the decision boundary dynamically decreases as the time spent making a decision grows longer. Such a framework has been argued to provide a better explanation for decision-making behavior in the face of sensory uncertainty (Drugowitsch et al., 2012).

One approach to distinguish between these different models is to systematically manipulate the stimulus statistics and/or the task structure and then test whether behavior is qualitatively consistent with one or another sequential sampling model (Cisek et al., 2009; Thura and Cisek, 2014; Carland et al., 2015; Brunton et al., 2013; Scott et al., 2015). An alternative approach is to quantitatively analyze the choice and RT behavior with a large set of candidate models, and then carefully use model selection techniques to understand the candidate models that best describe the data (e.g., Hawkins et al., 2015b; Chandrasekaran et al., 2017; Purcell and Kiani, 2016; Evans et al., 2017). The quantitative modeling and model selection approach allows the researcher to determine whether a particular model component (e.g., an urgency signal, or variability in the rate of information accumulation) is important for generating the observed behavioral data. It also provides a holistic method for testing model adequacy because the proposed model is judged on its ability to account for all available data (e.g., Evans et al., 2017), rather than focusing on a specific subset of the data.

Despite the apparent benefits of model selection, there are technical and computational challenges in the application of decision-making models to behavioral data. Some researchers have surmounted these issues by simplifying the process: using analytical solutions for the predicted mean RT and accuracy from the simplest form of the DDM, applied to participant-averaged behavioral data (Palmer et al., 2005; Tsunada et al., 2016). However, the complete distribution of RTs is highly informative, and often necessary, to reliably discriminate between the latent cognitive processes that influence decision-making (Forstmann et al., 2016; Ratcliff and McKoon, 2008; Ratcliff et al., 2016; Luce, 1986). Until recently, applying sequential sampling models like the DDM to the joint distribution over choices and RT required bespoke domain knowledge and computational expertise. This has hindered the widespread adoption of quantitative model selection methods to study decision-making behavior.

Some recent attempts have demystified the application of cognitive models of decision-making to behavioral data, providing a path for researchers to apply these methods to their own research questions. For instance, Vandekerckhove and Tuerlinckx (2008) developed the Diffusion Modeling and Analysis Toolbox (DMAT), and Voss and Voss (2007) developed the diffusion model toolbox (fast-dm; for an updated version see fast-dm-30, Voss et al., 2015). Other modern toolboxes have improved the parameter estimation algorithms and can leverage multiple observers to perform hierarchical Bayesian inference (Wiecki et al., 2013). In hBayesDM (Ahn et al., 2017) and Dynamic Models of Choice (Heathcote et al., 2018) researchers can apply a range of models to behavior from a wide variety of decision-making paradigms ranging from choice tasks to reversal learning and inhibition tasks.

A common feature across all of the excellent toolboxes currently available is that they provide code to apply the DDM to data, or the DDM in addition to a few alternative models. As a consequence, the toolboxes provide no pathway for a researcher to rigorously compare the quantitative account of the DDM to alternative theories of the decision making process including models with an urgency signal (Ditterich, 2006a), urgency-gating (Cisek et al., 2009), or collapsing bounds (Hawkins et al., 2015b). Simply put, we currently have no openly available and extensible toolbox for understanding choice and RT behavior using the many hypothesized models of decision-making. We believe there is a critical need for examining how these different models perform in terms of explaining decision-making behavior.

The objective of this study was to address this need and provide a straightforward framework to analyze a range of existing sequential sampling models of decision-making behavior. Specifically, we aimed to provide an open-source and extensible framework that permits quantitative implementation and testing of novel candidate models of decision-making. The outcome of this study is ChaRTr, a novel toolbox in the R programming environment that can be used to analyze choice and RT data of humans and animals performing two-alternative forced choice tasks that involve perceptual or other types of decision-making. R is an open source language that enjoys widespread use and is maintained by a large community of researchers. ChaRTr can be used to analyze choice and RT behavior from the perspective of a (potentially large) range of decision-making models and can be readily extended when new models are developed. These new models can be incorporated into the toolbox with minimal effort and require only basic working knowledge of R and C programming; we explain the required skills in this manuscript. Similarly, new optimization routines that are readily available as R packages can be implemented if desired.

2. Methods and materials

The methods are focused on the specification of various mathematical models of decision-making, and the parameter estimation and model selection processes. For reference, the symbols we use to describe the models are shown in Table 1. The naming convention for the models we have developed in ChaRTr is to take the main architectural feature of the model and use it as a prefix to the model.

Table 1.

List of symbols used in the decision-making models implemented in ChaRTr.

| Parameter | Description |

|---|---|

| x(t) | State of the decision variable at time t. |

| Δt | Time step of the decision variable. |

| z, sz | Starting state of the decision variable (i.e., x(0) = z), and decision-to-decision variability in starting state. sz is the range of a uniform distribution with mean (midpoint) z. |

| vi, sv | Rate at which the decision variable accumulates decision-relevant information (drift rate, v) in condition i, and decision-to-decision variability in drift rate. sv is the standard deviation of a normal distribution with mean vi. |

| γ(t) | Urgency signal that dynamically modulates the decision variable as a function of t. Can take different functional forms in different models. |

| aupper, alower | Upper and lower response boundaries that terminate the decision process. |

| aupper(t), alower(t) | Upper and lower response boundaries that vary as a function of t. |

| Ter, st | Time required for stimulus encoding and motor preparation/execution (non-decision time), and decision-to-decision variability in non-decision time. st is the range of a uniform distribution with mean (midpoint) Ter. |

| s | Within-decision variability in the diffusion process. Represents the standard deviation of a normal distribution. By convention, set to a fixed value to satisfy a scaling property of the model. |

| E(t) | Momentary sensory evidence at time t. |

| b, sb | Intercept and variability of the intercept in urgency based models with linear urgency signals. |

| Normal distribution with zero mean and unit variance. | |

| Uniform distribution over the interval l1 and l2. |

The diffusion decision model, henceforth DDM, refers to the simplest sequential sampling model.

cDDM refers to a DDM with collapsing boundaries (Hawkins et al., 2015b).

cfkDDM refers to a DDM with collapsing boundaries and a fixed parameter for the function defining the collapsing boundary (Hawkins et al., 2015b).

uDDM refers to a DDM with a linear urgency signal with a slope and an intercept.

dDDM refers to a DDM with urgency signal defined by Ditterich (2006a).

UGM refers to an Urgency Gating Model (Cisek et al., 2009; Thura et al., 2012).

bUGM refers to a UGM with a linear urgency signal composed of a slope and an intercept (Chandrasekaran et al., 2017).

For reference, the models being considered, the parameters for the models and the number of parameters in each model are shown in Table 2.

Table 2.

List of the 37 models available in ChaRTr along with the individual parameters in each model and the total number of parameters. n refers to the number of stimulus conditions used. aU is the short form of aupper.

| Abbreviation | Parameters | N |

|---|---|---|

| Diffusion decision model (DDM) | ||

| References: Ratcliff (1978), Ratcliff and Rouder (1998),Ratcliff, 1978,Ratcliff (1978), Ratcliff and Rouder (1998) | ||

| DDM | v1...n, aU, Ter | n + 2 |

| DDMSv | v1...n, aU, Ter, Sv | n + 3 |

| DDMSt | v1...n, aU, Ter, St | n + 3 |

| DDMSvSz | v1...n, aU, Ter, Sv, Sz | n + 4 |

| DDMSvSt | v1...n, aU, Ter, Sv, St | n + 4 |

| DDMSvSzSt | v1...n, aU, Ter, Sv, Sz, St | n + 5 |

| Collapsing diffusion decision model (cDDM) | ||

| References: Drugowitsch et al. (2012), Hawkins et al. (2015b),Drugowitsch et al., 2012,Drugowitsch et al. (2012), Hawkins et al. (2015b) | ||

| cDDM | v1...n, aU, Ter, a′, k | n + 4 |

| cDDMSv | v1...n, aU, Ter, a′, k, Sv | n + 5 |

| cDDMSt | v1...n, aU, Ter, a′, k, St | n + 5 |

| cDDMSvSz | v1...n, aU, Ter, a′, k, Sv, Sz | n + 6 |

| cDDMSvSt | v1...n, aU, Ter, a′, k, Sv, St | n + 6 |

| cDDMSvSzSt | v1...n, aU, Ter, a′, k, Sv, Sz, St | n + 7 |

| Collapsing diffusion decision model with fixed k (cfkDDM) | ||

| References: Hawkins et al. (2015b) | ||

| cfkDDM | v1...n, aU, Ter, a′ | n + 3 |

| cfkDDMSv | v1...n, aU, Ter, a′, Sv | n + 4 |

| cfkDDMSt | v1...n, aU, Ter, a′, St | n + 4 |

| cfkDDMSvSt | v1...n, aU, Ter, a′, Sv, St | n + 5 |

| cfkDDMSvSzSt | v1...n, aU, Ter, a′, Sv, Sz, St | n + 6 |

| Linear urgency diffusion decision model (uDDM) | ||

| References: Ditterich (2006a), O’Connell et al. (2018b) | ||

| uDDM | v1...n, aU, Ter, b, m | n + 4 |

| uDDMSv | v1...n, aU, Ter, b, m, Sv | n + 5 |

| uDDMSt | v1...n, aU, Ter, b, m, St | n + 5 |

| uDDMSvSt | v1...n, aU, Ter, b, m, Sv, St | n + 6 |

| uDDMSvSb | v1...n, aU, Ter, b, m, Sv, Sb | n + 6 |

| uDDMSvSbSt | v1...n, aU, Ter, b, m, Sv, Sb, St | n + 7 |

| Ditterich urgency diffusion decision model (dDDM) | ||

| References: Ditterich (2006a) | ||

| dDDM | v1...n, aU, Ter, sx, sy, d | n + 5 |

| dDDMSv | v1...n, aU, Ter, sx, sy, d, Sv | n + 6 |

| dDDMSt | v1...n, aU, Ter, sx, sy, d, St | n + 6 |

| dDDMSvSt | v1...n, aU, Ter, sx, sy, d, Sv, St | n + 7 |

| dDDMSvSzSt | v1...n, aU, Ter, sx, sy, d, Sv, Sz, St | n + 8 |

| Urgency gating model (UGM) | ||

| References: Cisek et al. (2009), Thura et al. (2012),Cisek et al., 2009,Cisek et al. (2009), Thura et al. (2012) | ||

| UGM | v1...n, aU, Ter | n + 2 |

| UGMSv | v1...n, aU, Ter, Sv | n + 3 |

| UGMSt | v1...n, aU, Ter, St | n + 3 |

| UGMSvSt | v1...n, aU, Ter, Sv, St | n + 4 |

| Urgency gating model with intercept (bUGM) | ||

| (Chandrasekaran et al., 2017) | ||

| bUGM | v1...n, aU, Ter, b | n + 3 |

| bUGMSv | v1...n, aU, Ter, b, Sv | n + 4 |

| bUGMSvSt | v1...n, aU, Ter, b, Sv, St | n + 5 |

| bUGMSvSb | v1...n, aU, Ter, b, Sv, Sb | n + 5 |

| bUGMSvSbSt | v1...n, aU, Ter, b, Sv, Sb, St | n + 6 |

2.1. Mathematical models of decision-making

Sequential sampling models of decision-making assume that RT comprises two components (Ratcliff and McKoon, 2008; Ratcliff et al., 2016). The first component is the decision time, which encompasses processes such as the accumulation of sensory evidence and additional decision-related factors such as urgency. The second component is the non-decision time (or residual time), which involves the time required for processes that must occur to produce a response but fall outside of the decision-formation process, such as stimulus encoding, motor preparation and motor execution time.

We introduce various models of the decision-making process in approximately increasing level of complexity, beginning with the simple DDM.

2.1.1. Simple diffusion decision model (DDM)

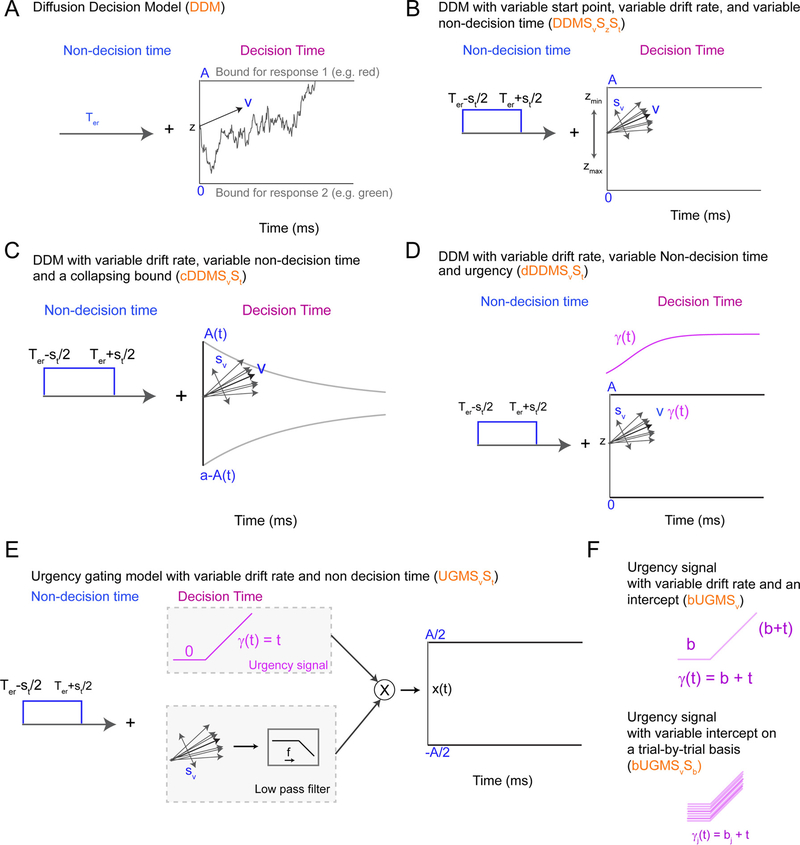

The diffusion decision model (or DDM) is derived from one of the oldest interpretations of a statistical test – the sequential probability ratio test (Wald and Wolfowitz, 1948) – as a model of a cognitive process – how decisions are formed over time (Stone, 1960). The DDM provides the foundation for the decision-making models implemented in ChaRTr and assumes that decision-formation is described by a one-dimensional diffusion process (Fig. 1A) with the stochastic differential equation

| (1) |

where x(t) is the state of the decision-formation process, known as the decision variable, at time t; v is the rate of accumulation of sensory evidence, known as the drift rate; Δt is the step size of the process; s is the standard deviation of the moment-to-moment (Brownian) noise of the decision-formation process; refers to a random sample from the standard normal distribution. A response is made when x (t + Δt) ≥ aupper or x(t + Δt) ≤ alower. Whether a response is correct or incorrect is determined from the boundary that was crossed and the valence of the drift rate (i.e., v > 0 implies the upper boundary corresponds to the correct response, v < 0 implies the lower boundary corresponds to the correct response). In Fig. 1A, and in all DDM models in ChaRTr, we specify alower = 0 and aupper = A, without loss of generality. z represents the starting state of the evidence accumulation process (i.e., the position of the decision variable at x(0)) and can be estimated between alower and aupper. When we assume there is no a priori response bias, z is fixed to the midpoint between alower and aupper (i.e., A/2). The decision time is the first time step t at which the decision variable crosses one of the two decision boundaries. The predicted RT is given as a sum of the decision time and the non-decision time Ter.

Fig. 1.

Schematic of some sequential sampling models of decision-making incorporated in ChaRTr. (A) The DDM model is the simplest example of a diffusion model of decision-making. (B) A variant of the DDM with variable non-decision time (St), variable drift-rate (Sv) and a variable start point (Sz). (C) A DDM with collapsing bounds and variability in the non-decision time and drift rate. The function A(t) takes the form of a Weibull function as defined in Eq. (6). (D) A variant of the DDM with variable non-decision time and drift rate, and an “urgency signal”. This urgency signal grows with elapsed decision time, which is implemented by multiplying the decision variable by the increasing function of time γ(t) (Eq. (10), following Ditterich, 2006a). (E) UGM with variable drift rate (Sv) and variable non decision time (St). In the standard UGM, the urgency signal is only thought to depend on time and thus starts at 0. The sensory evidence is passed through a low pass filter (typically a 100–250 ms time constant, Carland et al., 2015; Thura et al., 2012). The sensory evidence is then multiplied by the urgency signal to produce a decision variable that is compared to the decision boundaries. (F) Schematic of urgency signals with an intercept (top panel) and a variable intercept (bottom panel).

2.1.2. DDM with variable starting state, variable drift rate, and variable non-decision time

The (simple) DDM assumes a level of constancy from one decision to the next in various components of the decision-formation process: it always commences with the same level of response bias (z), the drift rate takes a single value (vi, for trials in experimental condition i), and the non-decision time never varies (Ter).

None of these simplifying assumptions are likely to hold in experimental contexts. For example, the relative speed of correct and erroneous responses can differ, and participants’ arousal may exhibit random fluctuations over time, possibly due to a level of irreducible neural noise. Decades of research into decision-making models suggests that these effects, and others, are often well explained by combining systematic and random components in each of the starting state, drift rate, and non-decision time (Fig. 1B). In ChaRTr, we provide variants of the DDM where all of these parameters can be randomly drawn from their typically assumed distributions over different trials,

| (2) |

| (3) |

| (4) |

| (5) |

where i denotes an experimental condition; j denotes an exemplar trial; denotes the uniform distribution. ChaRTr provides flexibility to the user such that they can assume the decision-formation process involves none, some or all of these random components. Furthermore, it provides flexibility to assume distributions for the random components beyond those that have been typically assumed and studied in the literature. For example, one could hypothesize that non-decision times are exponentially distributed rather than uniformly distributed (Ratcliff, 2013).

2.1.3. DDM with collapsing decision boundaries (cDDM)

The DDM with collapsing boundaries generalizes the classic DDM by assuming that the sensory evidence required to commit to a decision is not constant as a function of elapsed decision time. Instead, it assumes that the decision boundaries gradually decrease as the decision-formation process grows longer (e.g., Bowman et al., 2012; Drugowitsch et al., 2012; Hawkins et al., 2015a; Milosavljevic et al., 2010; Tajima et al., 2016). Collapsing boundaries terminate trials with weak sensory signals (i.e., lower drift rates) at earlier time points than models with ‘fixed’ boundaries (i.e., simple DDM) and otherwise equivalent parameter settings. The net result of collapsing boundaries is a reduction in the positive skew (right tail) of the predicted RT distribution relative to the fixed boundaries DDM. This signature in the predicted RT distribution holds whether there is variability in parameters across trials (Section 2.1.2) or not (Section 2.1.1).

Collapsing boundaries allow the observer to implement a decision strategy where they do not commit an inordinate amount of time to decisions that are unlikely to be correct (i.e., decision processes with weak sensory signals). This allows the observer to sacrifice accuracy for a shorter decision time, so they can engage in new decisions that might contain stronger sensory signals and hence a higher chance of a correct response. When a sequence of decisions varies in signal-to-noise ratio from one trial to the next, like a typical difficulty manipulation in decision-making studies, collapsing boundaries are provably more optimal than fixed boundaries in the sense that they lead to greater predicted reward across the entirety of the decision sequence (Drugowitsch et al., 2012; Tajima et al., 2016). In this type of decision environment, collapsing boundaries have provided a better quantitative account of animal behavior, including monkeys, who might be motivated to obtain rewards to a greater extent than humans, possibly due to the operant conditioning and fluid/food restriction procedures used to motivate these animals (Hawkins et al., 2015a). Whether humans also aim to maximize reward via collapsing boundaries is less clear (e.g., Evans et al., 2019).

Fig. 1C shows a schematic of a collapsing boundaries model. In ChaRTr we assume the collapsing boundary follows the cumulative distribution function of the Weibull distribution, following Hawkins et al. (2015a). The Weibull function is quite flexible and can approximate many different functions that one might wish to investigate, including the exponential and hyperbolic functions. We assume the lower and upper boundaries follow the form

| (6) |

| (7) |

where alower(t) and aupper(t) denote the position of the lower and upper boundaries at time t; a denotes the position of upper boundary at t = 0 (initial boundary setting, prior to any collapse); a′ denotes the asymptotic boundary setting, or the extent to which the boundaries collapsed (the maximal possible collapse – where the upper and lower boundaries meet – can occur when a′ = 1/2); λ and k denote the scale and shape parameters of the Weibull distribution.

The collapsing boundaries are denoted in ChaRTr as cDDM. When the k parameter is fixed to a particular value to aid stronger identifiability in parameter estimation (Hawkins et al., 2015a), we refer to the architecture as cfk to denote a fixed k value (cfkDDM), here chosen to be 3 but can be modified in user implementations.

The collapsing boundaries, as implemented here, are symmetric, though they need not be; ChaRTr provides flexibility to modify all features of the boundaries, including symmetry for each response option, and the functional form. For instance, one might hypothesize that linear collapsing boundaries are a better description of the decision-formation process than nonlinear boundaries (O’Connell et al., 2018a; Murphy et al., 2016). ChaRTr also permits DDMs with collapsing boundaries to incorporate any combination of variability in starting state, drift rate, and non-decision time (e.g., models of the form cDDMSvSzSt and cfkDDMSvSzSt).

2.1.4. DDM with an urgency signal (uDDM)

The DDM with an urgency signal assumes that the input evidence – consisting of the sensory signal and noise – is modulated by an “urgency signal”. This urgency-modulated sensory evidence is accumulated into the decision variable throughout the decision-formation process. As the process takes longer, the urgency signal grows in magnitude, implying that sensory evidence arriving later in the decision-formation process has a more profound impact on the decision-variable than information arriving earlier (Fig. 1D). To make the distinction between an urgency signal and collapsing boundaries clear, the DDM with an urgency signal assumes a dynamically modulated input signal combined with boundaries that mirror those in the classic DDM; the DDM with collapsing boundaries assumes a decision variable that mirrors the classic DDM combined with dynamically modulated decision boundaries.

As with the collapsing boundaries, the urgency signal can take many functional forms; we have implemented two such forms in ChaRTr. The general implementation of the urgency signal is

| (8) |

| (9) |

where E(t) denotes the momentary sensory evidence at time t; γ(t) denotes the magnitude of the urgency signal at time t. Note that with increasing decision time the urgency signal magnifies the effect of the sensory signal (vΔt) and the sensory noise .

The first urgency signal implemented in ChaRTr follows a 3 parameter logistic function with two scaling factors (sx, sy) and a delay (d), originally proposed by Ditterich (2006a, dDDM)Ditterich, 2006a,Ditterich (2006a, dDDM):

| (10) |

| (11) |

| (12) |

The second form of urgency signal implemented in ChaRTr follows a simple, linearly increasing function (uDDM)

| (13) |

where b is the intercept of the urgency signal and m is the slope.

As with the DDMs described above, urgency signal models can incorporate any combination of variability in starting state, drift rate and non-decision time, giving rise to a family of different decision-making models. We also allow for the possibility of variability across decisions in the intercept term of the linear urgency signal,

| (14) |

| (15) |

where j denotes an exemplar trial, and b and sb denote the mean (i.e., midpoint) and range of the uniform distribution assumed for the urgency signal respectively.

In ChaRTr, we have assumed that the urgency signal exerts a multiplicative effect on the sensory evidence (Eq. (9)). One variation of urgency signal models proposed in the literature posits that urgency is added to the sensory evidence, rather than multiplied by it (Hanks et al., 2011, 2014). In the one-dimensional diffusion models considered here, additive urgency signals make predictions that cannot be discriminated from a DDM with collapsing boundaries (Boehm et al., 2016). That is, for any functional form of an additive urgency signal, there is a function for the collapsing boundaries that will generate identical predictions. For this reason we do not provide an avenue for simulating and estimating additive urgency signal models in ChaRTr, and instead recommend the use of the DDM with collapsing boundaries.

2.1.5. Urgency gating model (UGM)

In a departure from the classic DDM framework, the urgency gating model (UGM) proposes there is no integration of evidence, at least not in the same form as the DDM (Cisek et al., 2009; Thura et al., 2012; Thura and Cisek, 2014). Rather, the UGM assumes that incoming sensory evidence is low-pass filtered, which prioritizes recent over temporally distant sensory evidence, and this low-pass filtered signal is modulated by an urgency signal that increases linearly with time (Eq. (13)).

Implementation of the UGM in ChaRTr uses the exponential average approach for discrete low-pass filters (smoothing). The momentary evidence for a decision is a weighted sum of past and present evidence, which gives rise to the UGM’s pair of governing equations

| (16) |

| (17) |

where τ is the time constant of the low-pass filter, which has typically been set to relatively small values of 100 or 200 ms in previous applications of the UGM, and α controls the amount of evidence from previous time points that influences the momentary evidence at time t. For instance, when α = 0 there is no low-pass filtering, and when τ = 100 ms (and Δt is 1 ms) the previous evidence is weighted by 0.99 and new evidence by 0.01.

The decision variable at time t is now given as

| (18) |

| (19) |

The intercept and slope of the urgency signal are set to particular values in standard applications of the UGM (b = 0, m = 1), reducing Eq. (19) to

| (20) |

In ChaRTr, we allow for variants of the UGM where the parameters of the urgency signal are not fixed. For instance, similar to the DDM with an urgency signal, we can test a UGM where the intercept (b) is freely estimated from data (bUGM), and even an intercept that varies on a trial-by-trial basis (Eq. (14)).

2.2. Fitting models to data

2.2.1. Parameter estimation

In ChaRTr, we estimate parameters for each model and participant independently, using Quantile Maximum Products Estimation (QMPE; Heathcote et al., 2002; Heathcote and Brown, 2004). QMPE uses the QMP statistic, which is similar to χ2 or multinomial maximum likelihood estimation, and produces estimates that are asymptotically unbiased and normally distributed with asymptotically correct standard errors (Brown and Heathcote, 2003). QMPE quantifies agreement between model predictions and data by comparing the observed and predicted proportions of data falling into each of a set of inter-quantile bins. These bins are calculated separately for the correct and error RT data. In all examples that follow, we use 9 quantiles calculated from the data (i.e., split the RT data into 10 bins), though the user can specify as many quantiles as they wish. Generally speaking, we recommend no fewer than 5 quantiles, to prevent loss of distributional information, and no more than approximately 10 quantiles, to prevent noisy observations in observed data especially at the tails of the distribution potentially bearing undue influence on the parameter estimation routine.

Many of the models considered in ChaRTr have no closed-form analytic solution for their predicted distribution. To evaluate the predictions of each model, we typically simulate 10,000 Monte Carlo replicates per experimental condition during parameter estimation. Once the parameter search has terminated, we use 50,000 replicates per experimental condition to precisely evaluate the model predictions and perform model selection. In ChaRTr, the user can vary the number of replicates used for parameter estimation and model selection; in previous applications, we have found these default values provide an appropriate balance between precision of the model predictions and computational efficiency. To simulate the models, we use Euler’s method, which approximates the models’ representation as stochastic differential equations.

Alternatives to our simulation-based approach exist, such as the integral equation methods of Smith (2000) or others that use analytical techniques to calculate first passage times (Gondan et al., 2014; Navarro and Fuss, 2009), to generate exact distributions. We do not pursue those methods in ChaRTr owing to the model-specific implementation required, which is inconsistent with ChaRTr’s core philosophy of allowing the user to rapidly implement a variety of model architectures.

We estimate the model parameters using differential evolution to optimize the goodness of fit (DEoptim package in R, Mullen et al., 2011). For the type of non-linear models considered in ChaRTr, we have previously found that differential evolution more reliably recovers the true data generating model than particle swarm and simplex optimization algorithms (Hawkins et al., 2015a). DEoptim also allows easy parallelization and can be used readily in clusters and the cloud with large number of cores to speed the process of model estimation. However, we again provide flexibility in this respect; the user can change this default setting and specify their preferred optimization algorithm (s).

2.2.2. Model selection

ChaRTr provides two metrics for quantitative comparison between models. Each metric is based on the maximized value of the QMP statistic, which is a goodness-of-fit term that approximates the continuous maximum likelihood of the data given the model.

The DDM is a special case of most of the model variants considered and will almost always fit more poorly than any of the other variants. We provide model selection methods that determine if the incorporation of additional components such as urgency or collapsing bounds provide an improvement in fit that justifies the increase in model complexity.

The raw QMP statistic, as an approximation to the likelihood, can be used to calculate the Akaike Information Criterion (AIC Akaike, 1974) and the Bayesian Information Criterion (BIC; Schwarz, 1978). We provide methods to compute AIC and BIC owing to the differing assumptions underlying the two information criteria (Aho et al., 2014), and differing performance with respect to the modeling goal (Evans, 2019b).

ChaRTr also provides functionality to transform the model selection metrics into model weights, which account for uncertainty in the model selection procedure and aid interpretation by transformation to the probability scale. The weight w for model i, w(Mi), relative to a set of m models, is given by

| (21) |

where Z is AIC, BIC, or the deviance (−2× log-likelihood; that is, −2× QMP statistic). The model weight is interpreted differently depending on the metric Z:

Where Z is the log-likelihood, the model weights are relative likelihoods. The log-likelihood should only be used in the model weight transformation when all models under consideration have the same number of freely estimated parameters.

Where Z is the AIC, the model weights become Akaike weights (Wagenmakers and Farrell, 2004).

Where Z is the BIC, and the prior probability over the m models under consideration is uniform (i.e., each model is assumed to be equally likely before observing the data), the model weights approximate posterior model probabilities (p(M|Data), Wasserman, 2000).

Although AIC and BIC are provided and easily computed in ChaRTr, their use for discriminating between models requires careful consideration from the researcher. Our perspective is influenced by an excellent paper that describes the worldviews for the two metrics (Aho et al., 2014). Here, we provide a succinct summary of the recommendations from Aho et al. (2014). Ultimately, whether AIC or BIC are used depends on the goals of the researcher.

If a researcher believes that all of the models implemented in ChaRTr or novel models they develop are all wrong but provide useful descriptions of choice and RT data, then AIC is more appropriate for model selection. In this scenario, the goal of model selection is to assess which model will provide the best predictions for new data. In this sense, AIC is closely linked to cross validation. As more and more data are collected, the assumption under AIC is that the model that produces the best predictions will become more and more complex.

In contrast, if a researcher believes that the true model is implemented in ChaRTr or in the set of novel models they develop, then BIC is likely to be the better tool. In this scenario, the goal of model selection is to address the question “Which of these models is correct?”. As more and more data are collected, the assumption under BIC is that the correct model will be identified. BIC is thus ideally suited to answer questions about identifying which model was most likely to have generated the data.

The only difference between AIC and BIC is the size of the penalty term correcting for model complexity. AIC considers false negatives (“Type II” errors) worse than false positives and errs on the side of selecting more complex models, and thus can be perceived as favoring “overfitting” models. In contrast, BIC is more conservative and considers false positives (“Type I” errors) worse than false negatives and errs on the side of the selecting simpler models, and thus could be perceived as favoring “underfitting” models. Both are valid perspectives and our opinion is that claiming one is better than the other is not a particularly fruitful endeavor.

Thus, our position is that both metrics have utility when a researcher applies ChaRTr to real data. Practically, we recommend using both AIC and BIC for model comparison as a method for identifying a set of likely models. We take this approach in the case studies described below, which leads us to some nuanced conclusions. Throughout this paper, and in other papers (Chandrasekaran et al., 2018), we argue that using model selection techniques such as AIC and BIC to identify a single best model might not be the best approach. Rather, we suggest researchers use these metrics judiciously to guide their analyses and ultimately new experiments.

2.2.3. Visualization: quantile probability plots

Visualization of choice and RT data is critical to understanding observed and predicted behavior. Such visualization can prove challenging in studies of rapid decision-making because each cell of the experimental design (e.g., a particular stimulus difficulty) yields a joint distribution over the probability of a correct response (accuracy) and separate RT distributions for correct and error responses. Since most decision-making tasks manipulate at least one experimental factor across multiple levels, such as stimulus difficulty, each data set is comprised of a family of joint distributions over choice probabilities and pairs of RT distributions (correct, error). Following convention and recommendation (Ratcliff et al., 2016; Ratcliff and McKoon, 2008), we visualize these joint distributions with quantile probability (QP) plots. QP plots are a compact form to display choice probabilities and RT distributions across multiple conditions.

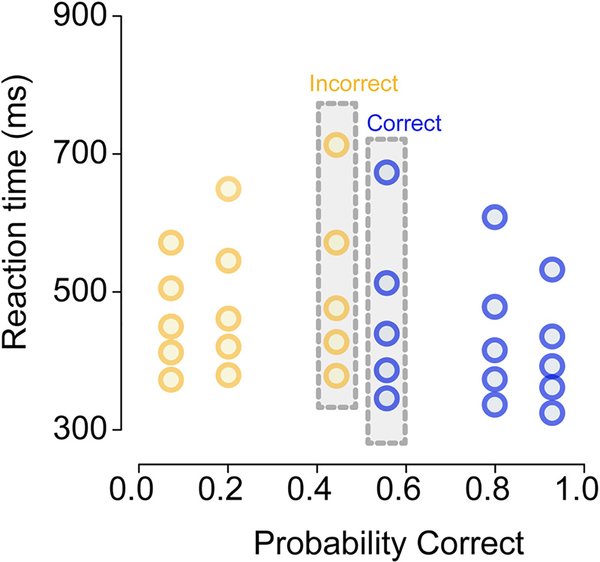

In a typical QP plot, quantiles of the RT distribution of a particular type (e.g., correct responses) are plotted as a function of the proportion of responses of that type. Consider a hypothetical decision-making experiment with three different levels of stimulus difficulty; Fig. 2 provides a plausible example of the data from such an experiment. Now assume that for one of the experimental conditions, the accuracy of the observer was 55%. To display the choice probabilities, correct RTs and error RTs for this condition, the QP plot shows a vertical column of N markers above the x-axis position ~0.55, where the N markers correspond to the N quantiles of the RT distribution of correct responses (rightmost gray bar in Fig. 2). The QP plot also shows a vertical columns of N markers at the position 1 − 0.55 = 0.45, where this set of N markers correspond to the N quantiles of the distribution of error RTs (leftmost gray bar in Fig. 2). This means that RT distributions shown to the right of 0.5 on the x-axis reflect correct responses, and those to the left of 0.5 on the x-axis reflect error responses.

Fig. 2.

A quantile probability (QP) plot of choice and RT data from a hypothetical decision-making experiment with three levels of stimulus difficulty. The three difficulty levels are represented as vertical columns mirrored around the midpoint of the x-axis (0.5). In this example, the lowest accuracy condition had ~55% correct responses, so the RTs for correct responses in this condition are located at 0.55 on the x-axis and the corresponding RTs for error responses are located at 1 − 0.55 = 0.45 on the x-axis; these two RT distributions are highlighted in gray bars. For each RT distribution we plot along the y-axis the 10th, 30th, 50th, 70th, 90th percentiles (i.e., 0.1, 0.3, 0.5, 0.7, 0.9 quantiles), separately for correct and error responses in each of the three difficulty levels. For clarity, correct responses are shown in blue and error responses are shown in yellow. (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

The default ChaRTr QP plot displays 5 quantiles of the RT distribution: 0.1, 0.3, 0.5, 0.7 and 0.9 (sometimes also referred to as five percentiles: 10th, 30th, 50th, 70th, 90th). The .1 quantile summarizes the leading edge of the RT distribution, the 0.5 quantile (median) summarizes the central tendency of the RT distribution, and the 0.9 quantile summarizes the tail of the RT distribution. The goal of visualization with QP plots, or other forms of visualization, is to enable comparison of the descriptive adequacy of a model’s predictions relative to the observed data.

3. Results

The results section first provides guidance on the use of ChaRTr and how to apply the various models of the decision-making process to data. The second part of the results section illustrates the use of ChaRTr to analyze choice and RT data from hypothetical observers, followed by a case study modeling data from two non-human primates (Roitman and Shadlen, 2002). Code for the ChaRTr toolbox is available at Chartr.chandlab.org/ or directly from github at https://github.com/mailchand/ChaRTr and will eventually be released as an R library.

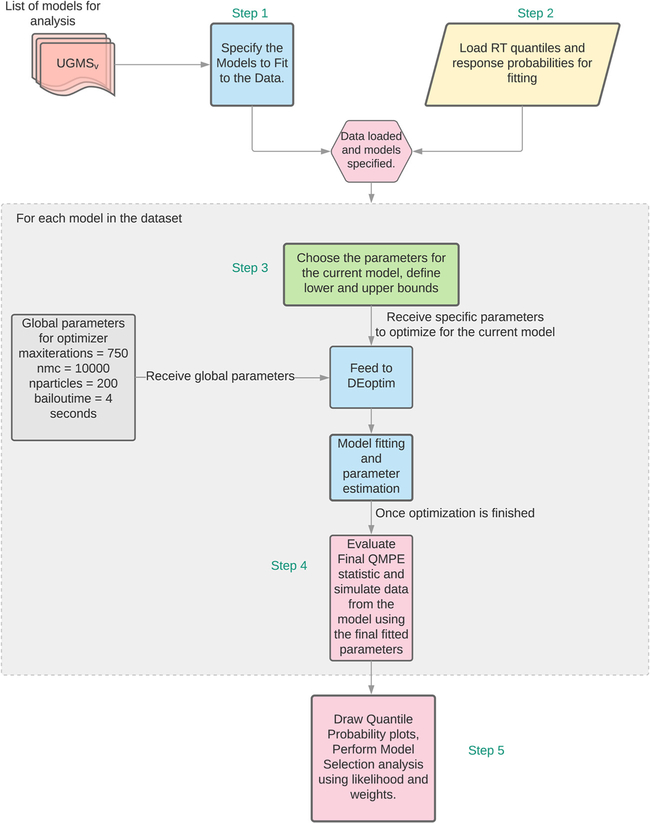

3.1. Toolbox flow

Figs. 3 and 4 provide flowcharts for ChaRTr. Fig. 3 provides an overview of the five main steps involved in the cognitive modeling process. Fig. 4 provides a schematic overview of the steps involved in the parameter estimation component of the process, which uses the differential evolution optimization algorithm (Mullen et al., 2011).

Fig. 3.

ChaRTr flow chart. Models are specified and once data is available, the parameters are estimated through the optimization procedure. Once parameter estimation is complete, the final goodness of fit statistic is calculated for every model under consideration, which is used for subsequent model selection analyses.

Fig. 4.

Flow chart for the parameter estimation component of ChaRTr, which uses the differential evolution optimization algorithm (Mullen et al., 2011).

The typical steps in ChaRTr for estimating the parameters of a decision-making model from data are as follows:

Model Specification: Specify models in the C programming language, and compile the C code to create the shared object, Chartr-ModelSpec.so, that is dynamically loaded into the R workspace. Future versions of ChaRTr will use the Rcpp framework and will not require the compilation and loading of shared objects (Eddelbuettel and François, 2011).

Formatting and Loading Data: Convert raw data into an appropriate format (choice probabilities, quantiles of RT distributions for correct and error trials). Save this data object for each unit of analysis (e.g., a participant, different experimental conditions for the same participant). Load this data object into the R workspace.

Parameter Specification: Choose the parameters of the desired model that need to be estimated along with lower and upper boundaries on those parameters (i.e., the minimum and maximum value that each parameter can feasibly take).

Parameter Estimation: Pass the parameters, model and data to the optimization algorithm (differential evolution). The algorithm iteratively selects candidate parameter values and evaluates their goodness of fit to data. This process is repeated until the goodness of fit no longer improves (Fig. 4).

Model Selection: The parameter estimates from the search termination point (i.e., the point where goodness of fit no longer improves), the corresponding goodness of fit statistics and model predictions are saved for subsequent model selection and visualization.

These 5 steps are repeated for each model and each participant under consideration. In the next few sections, we elaborate on each of the steps with examples to illustrate their implementation in ChaRTr. We note that use of ChaRTr requires a basic knowledge of R programming, and if one wishes to design and test a new decision-making model then also C programming. Owing to the many excellent online resources for both languages (a simple search of “R program tutorial” will return many helpful results), we do not provide a tutorial for either language here.

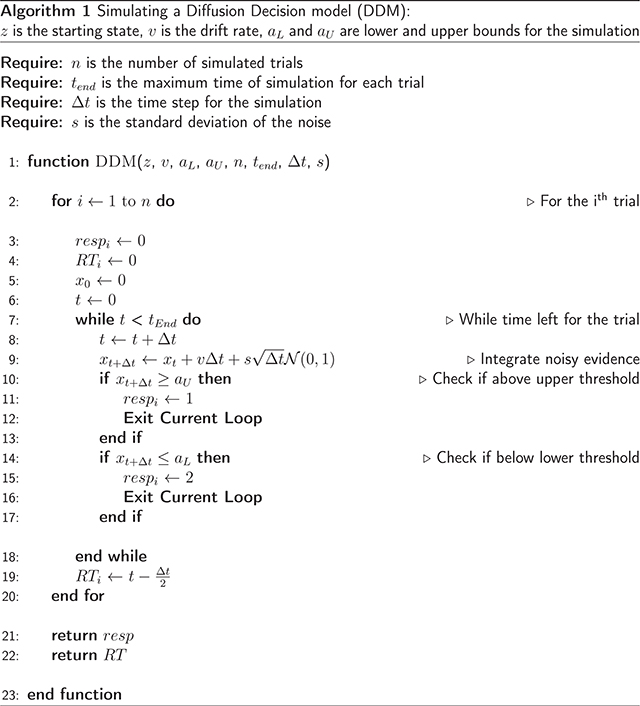

3.1.1. Model specification

The difference equation for the model variants implemented in ChaRTr is specified in C code in the file “Chartr-ModelSpec.c”. An example algorithm for the DDM (Section 2.1.1) is shown in Algorithm 1. The functions take as input the various parameters that are to be optimized along with various constants such as the maximum number of time points to simulate as well as the time step.

Once the C code has been specified for the model, the code is compiled using the following command that uses the SHLIB framework (R Core Team, 2019) at the terminal (usually ITERM in mac, Terminal Emulator in linux). The command shown in Listing 1 calls the appropriate compiler (clang on mac, gcc on linux), identifies the appropriate compiler to run, and loads the appropriate libraries and ensures the correct options are applied during compilation to create the architecture-specific shared object.

Listing 1.

Creating a shared library for loading the specified models into R.

| $ R CMD SHLIB chartr-ModelSpec.c |

The output of the compilation is a shared object called Chartr-ModelSpec.so that is dynamically loaded into R for use with the differential evolution optimizer. We anticipate that future versions of ChaRTr will use the Rcpp framework (Eddelbuettel and François, 2011), which will obviate the need for compiling and loading shared object libraries.

3.1.2. Formatting and loading data

To estimate the parameters of decision-making models in ChaRTr, the data need to be organized in a separate comma separated values (CSV) file for each participant in a simple three column format: “condition, response, RT”. “condition” is typically a stimulus difficulty parameter, “response” is correct (1) or incorrect (0), and RT is the response time (or reaction time when response time and movement can be separated). For example, in a typical file, data for a single stimulus difficulty (e.g., one level of motion coherence in a random dot motion task) would look like Listing 2.

Listing 2.

The required raw data format for parameter estimation in ChaRTr.

| condition, response, RT |

| 90,1,0.573 |

| 90,1,0.472 |

| 90,1,0.556 |

| . |

| . |

| . |

| 90,0,0.406 |

| 90,0,0.429 |

| 90,0,0.57 |

The raw data are converted in “chartr-processRawData.r” to generate 9 quantiles (10 bins) of correct and error RTs to be used in the parameter estimation process. It also stores the data as a R list named dat, which includes four fields: n, p, q, pb.

n is the number of correct and error responses in each condition.

p is the proportion of correct responses in each condition (derived from n).

q is the quantiles of the correct and error RT distributions in each condition.

pb is the number of responses in each bin of the correct and error RT distributions in each condition (derived from n).

dat is saved to disk as a new file. The dat file is loaded into the R workspace as required for the model estimation procedure.

3.1.3. Parameter specification

The next step in model estimation is, for each model, to specify a list of parameters that can be freely estimated from data along with each parameter’s lower and upper bound; we provide default suggestions for the lower and upper boundaries in ChaRTr. Model parameters can be generated by calling the function paramsandlims with two arguments: model name and the number of stimulus difficulty levels in the experiment. The number of stimulus difficulties is internally converted into drift rate parameters; for example, if there are n stimulus difficulties, then paramsandlims will estimate n independent drift rate parameters. There is also functionality in ChaRTr to specify fixed (non-estimated) values of some parameters, such as a drift rate of 0 for conditions with non-informative sensory information (e.g., 0% coherence in a random dot motion experiment). paramsandlims returns a named list with the following fields: lowers, uppers, parnames, fitUGM. These variables are used internally in the parameter estimation routines.

3.1.4. Parameter estimation

Steps 1–3 loaded the required data, identified the desired model to fit and specified the parameters of the model to be estimated. This information is now passed to the optimization algorithm (differential evolution). Parameter optimization is an iterative process of proposing candidate parameter values, accepting or rejecting candidate parameter values based on their goodness of fit, and repeating. This process continues until the proposed parameter values no longer improve the model’s goodness of fit. These are assumed to be the best-fitting parameter values, or the (approximate) maximum likelihood estimates. Fig. 4 provides an overview of the steps involved in parameter estimation when using the differential evolution optimization algorithm (Mullen et al., 2011).

The accompanying file “Chartr-DemoFit.r” provides a complete code example for estimating the parameters of a model with urgency.

3.1.5. Model selection

Once the best-fitting parameters have been estimated from a set of candidate models, the final step is to use this information to guide inference about the relative plausibility of each of the models given the data. Many different levels of questions can be asked of these models. The best practices for model selection are described generally in Aho et al. (2014) and for the specific problem of behavioral modeling in Heathcote et al. (2015).

In ChaRTr, we provide functions for converting the raw QMP statistic that approximates the likelihood. The likelihood is a goodness-of-fit statistic that can be combined with penalized model comparison metrics. This could entail comparison between two models at multiple levels of granularity. For instance, the question could be “which of the models considered provides the better description of the data”, or “is a DDM with variable baseline better than a DDM without a variable baseline”. It could also be used to compare between a model with collapsing boundaries and a model with drift-rate variability (O’Connell et al., 2018a) or between models with different forms of collapsing boundaries (Hawkins et al., 2015a). All of these questions can be answered using ChaRTr. As a guide, we provide illustrations of model selection analyses using ChaRTr in two case studies presented in Section 3.4. We also apply the model selection analyses to the behavior of monkeys performing a decision-making task (Roitman and Shadlen, 2002).

3.2. Extending ChaRTr

ChaRTr is designed with the goal of being readily extensible, to allow the user to specify new models with minimal development time. This frees the user to focus on the models of scientific interest while ChaRTr takes care of the model estimation and selection details behind the scenes. Here, we provide an overview of the steps required to add new models to ChaRTr.

Add a new function to “Chartr-ModelSpec.c” with the parameters needed to be estimated for the model. Specify the model in C code, following the structure of the pseudo-code example given in Algorithm 1. Provide the new model with a unique name (i.e., not shared with any other models in the toolbox), preferably using the convention defined in Table 2.

Add any new parameters of the model to the function makeparamlist, and to the paramsandlims function in script “Chartr-HelperFunctions.r”.

Add the name of the model to the function returnListOfModels, in script “Chartr-HelperFunctions.r”.

Make sure additional parameters are passed to the functions diffusionC and getpreds, in scripts “ChaRTr-HelperFunctions.r” and “ChaRTr-FitRoutines.r”, respectively.

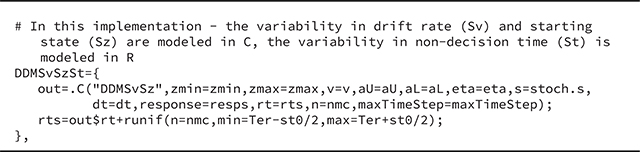

Finally, specify in function diffusionC the code for generating choices and RTs to use for model fitting. For example, the code for generating the choices and RTs for DDMSvSzSt is shown in Listing 3.

Listing 3. R Code for simulating choices and RTs for the model DDMSvSzSt.

3.3. Simulating data from models in ChaRTr

Once models are specified, they can be used to generate simulated RTs and discrimination accuracy for each condition. Simulated data help refine quantitative hypotheses. They also provide much greater insight into the dynamics of different decision-making models and how different variables in these models modulate the predicted RT distributions for correct and error trials (Ratcliff and McKoon, 2008).

ChaRTr provides straightforward methods to simulate data from decision-making models and generate quantile probability plots to compactly summarize and visualize RT distributions and accuracy. The function paramsandlims, used above in the parameter estimation routine, can also be used to generate hypothetical parameters to be passed to the function simulateRTs, which generates a set of simulated RTs and choice responses. By hypothetical parameters, we mean a set of reasonable starting values. An example is shown in Listing 4. These parameters can be changed by the user.

Listing 4.

R code for simulating RT and choice responses from the simple diffusion decision model (DDM).

| source(“chartr-HelperFunctions.r”) |

| nCoh = 5 |

| nmc = 50000 |

| model = “DDM” |

| fP = paramsandlims(model, nCoh, hypoPars = TRUE) |

| currParams = fP$hypoParams |

| R= simulateRTs(model, currParams, n=nmc, nds=nCoh) |

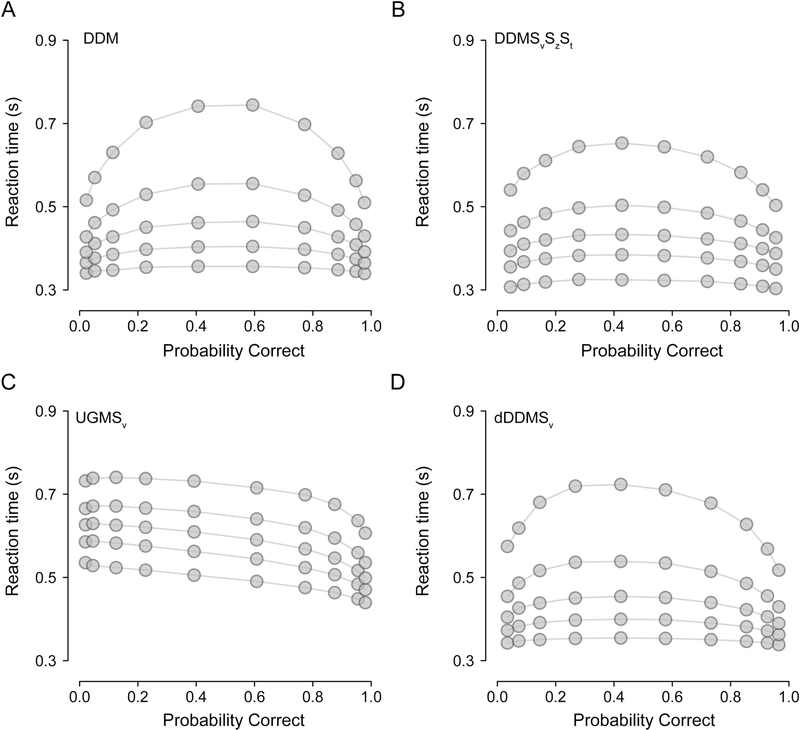

Fig. 5 shows the output of “Chartr-Demo.r”, which simulates and visualizes choice and RT data from four models in ChaRTr: DDM, DDMSvSzSt, UGMSv, and dDDMSv. Fig. 5A shows predictions of the simple DDM (see Section 2.1.1), a symmetric, inverted-U shaped QP plot (Ratcliff and McKoon, 2008); the symmetry implies that correct and error RTs are identically distributed. As variability is introduced to the DDM’s starting state (Sz) and/or drift-rate (Sv; see Section 2.1.2), the QP plot loses its symmetry (Fig. 5B); relative to correct RTs, error RTs can be faster (due to Sz) or slower (due to Sv). Fig. 5B also introduced variability in non-decision time (St), which increases the variance of the fastest responses.

Fig. 5.

Quantile probability plots of data simulated from four models in ChaRTr. (A) DDM, (B) DDM with variable drift rates, starting state and non-decision time (DDMSvSzSt), (C) urgency gating model with variable drift rates (UGMSv), and (D) DDM with an urgency signal and a variable drift rate defined as per Ditterich (2006a, dDDMS)Ditterich, 2006a,Ditterich, 2006a,Ditterich (2006a, dDDMS)Ditterich, 2006a,Ditterich, 2006a,Ditterich (2006a, dDDMS)Ditterich, 2006a,Ditterich (2006a, dDDMS),Ditterich, 2006a,Ditterich (2006a, dDDMS),Ditterich, 2006a,Ditterich (2006a, dDDMS),Ditterich, 2006a,Ditterich (2006a, dDDMS). Gray points denote data. Lines are drawn for visualization purposes.

Fig. 5C shows predictions of a standard variant of the UGM model (UGMSv) that assumes variable drift rate, zero intercept, a slope (β) of 1 and a time constant of 100 ms (see Section 2.1.5). The urgency gating mechanism in this model reduces the positive skew of the RT distributions, and leads to the prediction that error RTs are always slower than correct RTs (Fig. 5C; Hawkins et al., 2015b). Like the UGM, the dDDMSv model, another model of urgency (see Section 2.1.4), also predicts reduced positive skew of the RT distributions. Unlike the standard UGM, however, it can also predict error RTs that are faster or slower than correct RTs (Fig. 5D).

It is clear from Fig. 5 that various features in data discriminate between various features of the decision-making models: the relative speed of correct and error RTs, and critically the shape of complete RT distributions. We now provide three illustrative case studies that take advantage of the differential predictions of the models, demonstrating the use of ChaRTr for parameter estimation and selection amongst sets of competing models.

3.4. Case studies

To illustrate the utility of the toolbox, we provide three case studies where we simulated data from decision-making models in ChaRTr (case studies 1 and 2) or use ChaRTr to model data collected from monkeys performing a decision-making task (case study 3). We use the case studies to demonstrate the typical model estimation and selection analyses. The case studies also provide a modest test of model and parameter recovery. That is, whether ChaRTr reliably suggests that the true data-generating model is in the set of candidate models, and whether it reliably estimates the parameters of the true data-generating model.

3.4.1. Case study 1: hypothetical data generated from a DDM with variable drift rate and non-decision time (DDMSvSt)

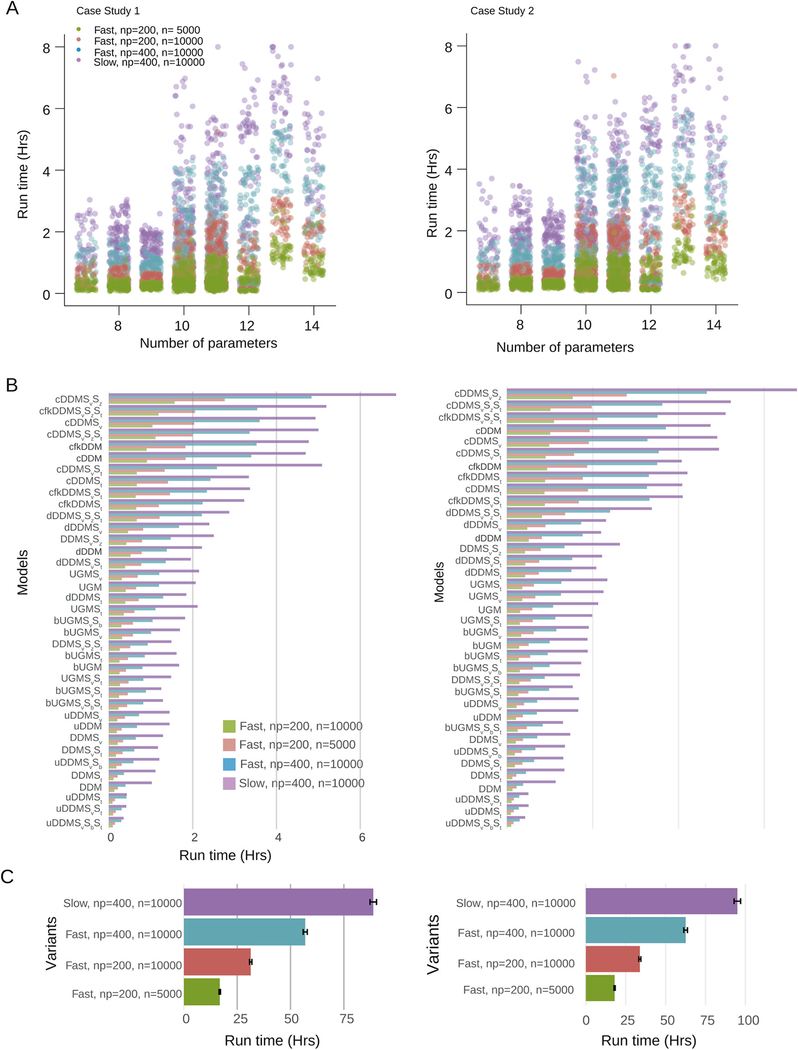

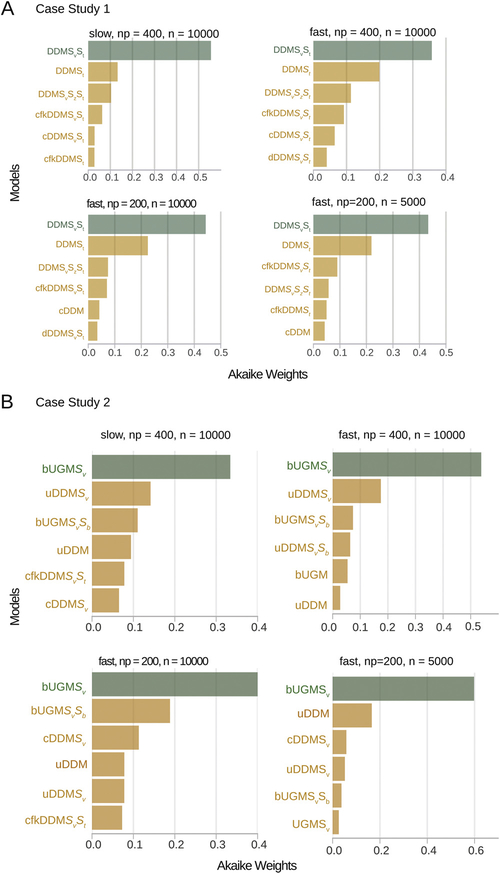

For our first case study, we assumed the data came from hypothetical observers who made decisions in a manner consistent with a DDM with variable drift rate (Sv) and variable start times (St). In ChaRTr, this corresponds to simulating data from the model DDMSvSt, where an observer’s RTs exhibit variability due to both the decision-formation process and the non-decision components. We simulated 300 trials for each of 5 stimulus difficulties, for 5 hypothetical participants.

For each model and hypothetical participant, we repeated the parameter estimation procedure 5 times, independently. We strongly recommend this redundant-estimation approach as it greatly reduces the likelihood of terminating the optimization algorithm in local minima, which can arise in simulation-based models like those implemented in ChaRTr. Variability occurs due to randomness in simulating predictions of the model at each iteration of the optimization algorithm, and randomness in the optimization algorithm itself (for a similar approach see Hawkins et al., 2015a,b). We then select the best of the 5 independent parameter estimation procedures (or ‘runs’) for each model and participant (i.e., the ‘run’ with the highest value of the QMP statistic). If computational constraints are not an issue, then we encourage as many repetitions as possible of the parameter estimation procedure.

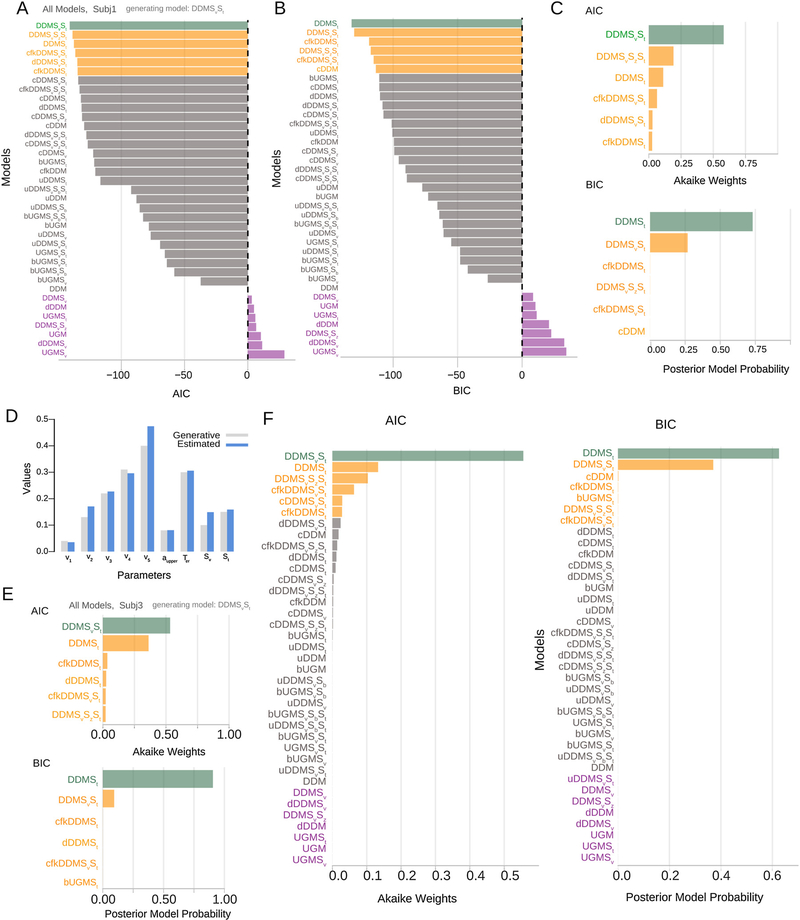

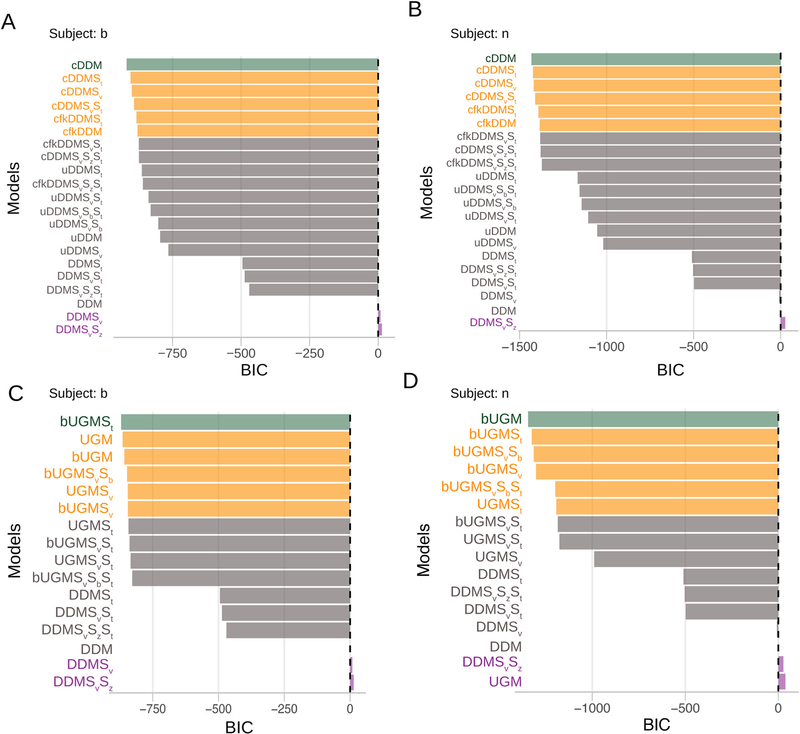

Fig. 6A and B shows the AICs and BICs for a set of models, obtained after using ChaRTr to fit the choice and RT data from one of the hypothetical observers. Both information criteria (ICs) are reported with reference to the DDM (i.e., as difference scores relative to the DDM). Thus, negative values suggest a more parsimonious account of the data than the DDM, and positive values suggest the opposite. Fig. 6C shows the Akaike weights and BIC-based approximate posterior model probabilities (Eq. (21)) for the top six models.

Fig. 6.

Model selection and parameter estimation outcomes from applying a range of cognitive models of decision-making to choice and RT data from five hypothetical observers (case study 1). A–C shows outcomes from one hypothetical observer and E shows outcomes from a second hypothetical observer. Data were generated using the model DDMSvSt. (A) AIC values for each model with the DDM model as the reference. To guide the eye and ease readability, bars are colored based on whether they are better or worse than the DDM in fitting the data. The best model is shown in green, and the next five best models are shown in orange. The remaining models better than the DDM are shown in gray and models worse than the DDM are shown in purple. (B) Same as A but using BIC as the model comparison metric. (C) Akaike weights and BIC-based approximate posterior model probabilities for the top six models that provided the best account of the data. ChaRTr correctly identifies the true data-generating model (DDMSvSt) as the one of the most likely candidates for describing the data. (D) Data-generative and estimated parameter values for the DDMSvSt model shown in A. Close alignment indicates ChaRTr recovered the true parameter values. (E) Akaike weights and posterior model probabilities from another hypothetical observer. Color conventions as in C. (F) Average akaike weights and posterior model probabilities across all five hypothetical observers, assuming the observers are independent. Reassuringly, DDMSvSt is identified as one of the most plausible models for the data. (For interpretation of the references to color in the print version for this figure legend, the reader is referred to the web version of this article.).

The AIC scores/weights suggest that DDMSvSt provides the best account of the data; by ‘best account’, we mean the model that provided the most appropriate tradeoff between model fit and model complexity among the specific set of models under consideration, according to AIC. This suggests that ChaRTr reliably recovers the generating model as one of the candidate models – a necessary test for any parameter estimation and model selection analysis. We strongly recommend this form of model recovery analysis when developing and testing any proposed cognitive model; if a data-generating model cannot be successfully identified as a set of candidate models in simulated data, where the true model is known, it is not a useful measurement model for real data.

The BIC scores/weights also suggest that DDMSvSt and DDMSt are the best models for describing the data. However, interestingly, BIC ranks DDMSt higher than DDMSvSt. This result does not suggest that ChaRTr is failing to recover the data generating model. Instead, our interpretation of the results is that both DDMSvSt and DDMSt should be considered candidate explanations for the data and that they are very close in terms of explanations for the choice and response time data. That is, the most likely explanation for the data is a DDM with variable non-decision time. There might also be a contribution from drift rate variability. As we explained in the methods, AIC is more focused on false negatives and thus places a lower penalty on complexity. BIC is more focused on false positives and thus places a higher penalty on complexity.

The models ChaRTr ranked 3rd to 6th using both AIC and BIC were sensibly related to the data-generating model. These models all assumed that observed RTs were influenced by factors other than sensory evidence (such as growing impatience), which might mimic the data-generating model’s RT variability that arose due to factors external to the decision-formation process (variable non-decision time). The results serve as an important reminder that model selection should not be used to argue for the “best” model in an absolute sense. Rather, when considering the collection of the highest ranked models (e.g., models in green and orange in Fig. 6A and B) it can be most constructive to rank useful hypotheses/explanations of the data that can then guide further study (Burnham et al., 2011), which is the approach we have used here. For instance, considering this set of highly-ranked models provides strong evidence that the true decision process involves perfect information integration (as opposed to low-pass filtering of sensory evidence, as in the UGM) and includes variability in non-decision time components, which were both components of the data-generating model.

Fig. 6D shows the estimated parameter values for the DDMSvSt model. The parameter estimates were very similar to the data-generating values, with some minor over- or under-estimation of the drift rate parameters. This suggests that ChaRTr can reasonably recover the data-generating model and parameters. As above, we also strongly recommend this form of parameter recovery analysis when developing and testing any proposed cognitive model.

Fig. 6E shows the model selection outcomes from another hypothetical observer. When using AIC, ChaRTr again identifies the best fitting model as DDMSvSt and the next best model as DDMSt. BIC again prefers DDMSt over DDMSvSt. A few other models also provided good accounts of the data. As was the case for observer 1, these models predict variability in RTs due to mechanisms outside the decision-formation process.

In the three other hypothetical observers that we simulated, the pattern of results returned by ChaRTr was consistent with the results shown for the two hypothetical observers in Fig. 6: DDMSvSt was chosen as the best fitting model for all observers by AIC. If we assume the set of observers are independent, which is true in the case of our hypothetical example and usually in experiments, we can average the individual-participant posterior model probabilities to obtain a group-level estimate. As shown in Fig. 6F, across the set of observers DDMSvSt is identified as the most plausible model for the data, indicating reasonably good model recovery; the next-best models are the same as those described earlier. The results from BIC were again consistent, preferring the DDMSt model over DDMSvSt for this group of hypothetical observers.

Fig. 7 shows QP plots of the data from two hypothetical observers overlaid on the predictions from a range of models. The simple DDM predicted greater variance than was observed in data, and therefore provided a poor account of the data. When the DDM is augmented with St and both Sv and St, it provided a much improved account of the data, capturing most of the RT quantiles and the accuracy patterns. Three other models provided an almost-equivalent account of the data in terms of log-likelihoods (DDMSvSzSt, cfkDDMSvSt, dDDMSvSt), but they did so with the use of more model parameters than DDMSvSt and DDMSt. This led to a larger complexity penalty for those models and thus larger AICs and BICs in comparison to the DDMSvSt model, as shown in the model selection analysis in Fig. 6.

Fig. 7.

Quantile probability (QP) plots showing correct RTs (blue) and error RTs (orange) for two hypothetical observers (case study 1), along with the model predictions (gray dots). Predictions from the four best-fitting models are shown along with the simplest model the DDM. The best fitting models DDMSvSt, DDMSt, DDMSvSzSt, cfkDDMSvSt, and dDDMSvSt are shown. Numbers at the top of each plot show the log likelihood, AIC, and BIC for the model under consideration. AIC and BIC are computed with respect to the DDM. Higher values of log-likelihood are better. When assuming the DDM as the base (reference) model and AIC as the penalized model selection metric, the model DDMSvSt provides the best account of the data. When using BIC as the penalized model selection metric, the model DDMSt provides a better description of the data. (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

Together, this case study highlights the power of ChaRTr in discriminating between 37 albeit overlapping models of decision-making and ranking the most likely models. As we have emphasized, the models selected by AIC and BIC will differ slightly because of the different penalties assumed for the two methods which underlie their different philosophies. If we obtained this result in real data, our interpretation would be that for this population of subjects, the data are consistent with a model that involves a DDM and variable non-decision time and that there is also the possibility of variability in the drift rate parameter. We would also conclude that the most likely models are DDMs without a dynamic component such as an urgency signal, since the DDMs performed better than models with collapsing boundaries or urgency.

3.4.2. Case study 2: hypothetical data generated from a UGM with variable intercept (bUGMSv)

In a second case study we simulated data from hypothetical observers whose decision-formation process was controlled by an urgency gating model (Cisek et al., 2009; Thura et al., 2012) with a variable drift rate and an intercept (Chandrasekaran et al., 2017), termed bUGMSv in ChaRTr. We again assumed five hypothetical subjects, five stimulus difficulties and simulated 500 trials for each of them. We then fit the data with the redundant-estimation approach as in case study 1 and evaluated the results of the model selection analysis, all using routines contained in ChaRTr.

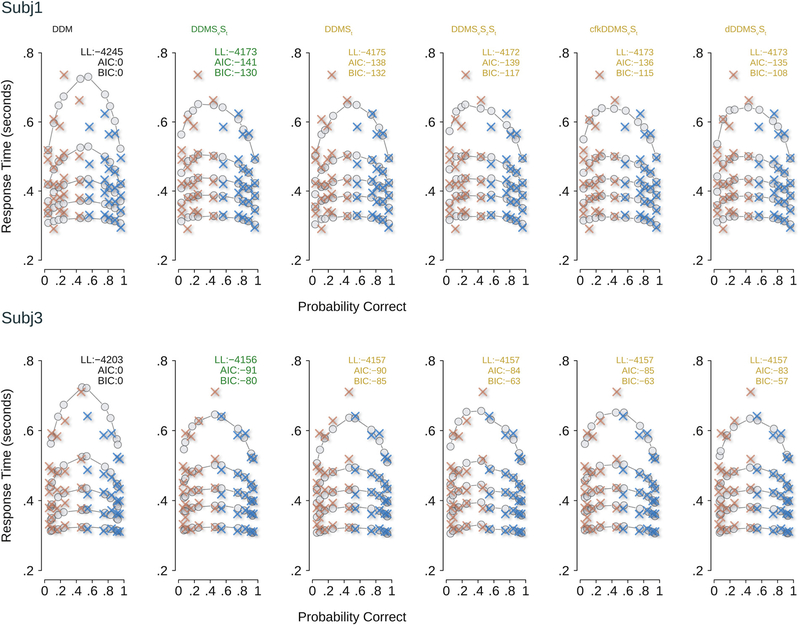

Fig. 8A and B shows the AICs and BICs for the set of models considered for one hypothetical observer’s data, again referenced to the DDM (i.e., as difference scores relative to the DDM). Negative values suggest a more parsimonious account of the data than the DDM, and positive values suggest the opposite. Fig. 8C shows the Akaike weights and posterior model probabilities for the top six models. bUGMSv provides the best account of the data for this hypothetical observer according to both AIC and BIC.

Fig. 8.

Model selection and parameter estimation outcomes from applying a range of cognitive models of decision-making to data from hypothetical observers (case study 2). Decision-making in these hypothetical observers is controlled by the model bUGMSv. (A) AIC values as a function of model with the DDM model as the reference for one hypothetical observer, Subj 3. Color conventions as in Fig. 6A. (B) BIC values as a function of model with the DDM model as the reference for the same subject shown in A. (C) Akaike Weights and Posterior model probabilities for the top six models that provided the best account of Subj 3’s behavior. (D) Results for another hypothetical subject. (E) Results for the population of hypothetical subjects. The most probable model for this set of hypothetical observers is the generative model, bUGMSv. However, we note that other models such as bUGM, UGMSv, and uDDMSv provide quite good descriptions of the behavior. This result is in keeping with the general notion that model selection ought to be used as a guide to the most likely models and not necessarily to argue for a “best” model. (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

The models ChaRTr ranked 2nd to 6th were also sensibly related to the data-generating model; they all assumed the decision-formation process was influenced by factors other than sensory evidence, such as growing impatience or other variants of the urgency gating model. The second case study reaffirms our conclusion from the first case study that model selection may not be put to best use when arguing for a single “best” model in an absolute sense. This is especially true when the data-generating model is not decisively recovered from data.

For example, Fig. 8D shows the top six models identified by ChaRTr using AIC and BIC as providing the best account of another of the hypothetical observers’ data. For this particular hypothetical dataset, many other models provided a better account than the generative model bUGMSv. This result highlights two important points. First, some models under some circumstances can mimic each other (i.e., generate similar predictions), which makes their identification in data difficult. Second, some models may not be mimicked, but they may require very many data points to reliably recover. We note that these points are not specific to ChaRTr – they are properties of quantitative model selection in general and are an important reminder of the necessary careful steps needed when aiming to select between models (Chandrasekaran et al., 2018).

Fig. 8E shows the Akaike weights (left panel) and posterior model probabilities (right panel) for the different models averaged over all five observers considered. Reassuringly, the most plausible model across the set of observers is the generative model bUGMSv for both AIC and BIC. For AIC, the next five best models are all conceptually related to the data generating model. For instance, the next best model was uDDMSv which is a DDM with urgency but no gating. The third best model was bUGMSvSb which is an urgency gating model with variable intercept.

Similarly, when using posterior model probabilities, the most plausible model across the set of observers is the generative model bUGMSv. Again the next five best models are all conceptually related to the data generating model. For instance, the next best model was bUGM which is an urgency gating model with an intercept and no drift rate variability. The third best model was uDDM which is a DDM with an urgency signal but no gating.

Together these results serve as another reminder of the utility of ChaRTr in the analysis of decision-making models, including the ability to quantitatively assess a large set of conceptually similar and dissimilar models. If we were to obtain results like the case study in a hypothetical experiment, we would reject a simple DDM as an explanation for our data and suggest that a model with an urgency signal containing an intercept is a more likely model to explain the data. We would also likely suggest the presence of a gating component in the data but qualify our conclusions by saying that additional subjects and larger number of trials per subject would be needed for more confidence in the result.

3.4.3. Case study 3: behavioral data from monkeys reported in Roitman and Shadlen (2002)

To demonstrate the utility of ChaRTr in understanding experimental data, we model the freely available choice and RT data from two monkeys performing a random dot motion decision-making task (Roitman and Shadlen, 2002). In this classic variant of the random-dot motion task, the monkeys were trained to report the direction of coherent motion with eye movements. The percentage of coherently moving dots was randomized from trial to trial across six levels (0%, 3.2%, 6.4%, 12.8%, 25.6% and 51.2%). Monkey b completed 2614 trials and Monkey n completed 3534 trials.

We demonstrate that ChaRTr replicates key findings from past analyses of these behavioral data. Roitman and Shadlen (2002)’s behavioral (and neural) data were originally interpreted as a neural correlate of the DDM. Later studies suggested a stronger role for impatience/urgency in these data (Ditterich, 2006a; Hawkins et al., 2015b). This is the first result we wish to reaffirm using ChaRTr. The second result we aim to reaffirm is that Hawkins et al. (2015a) showed the urgency gating model provides a better description of the data than the DDM. We note that recent work suggests the evidence for impatience/urgency in Roitman and Shadlen (2002)’s data might be the result of the particular training regime their monkeys experienced that is not shared by other monkey training protocols (Evans and Hawkins, 2019).

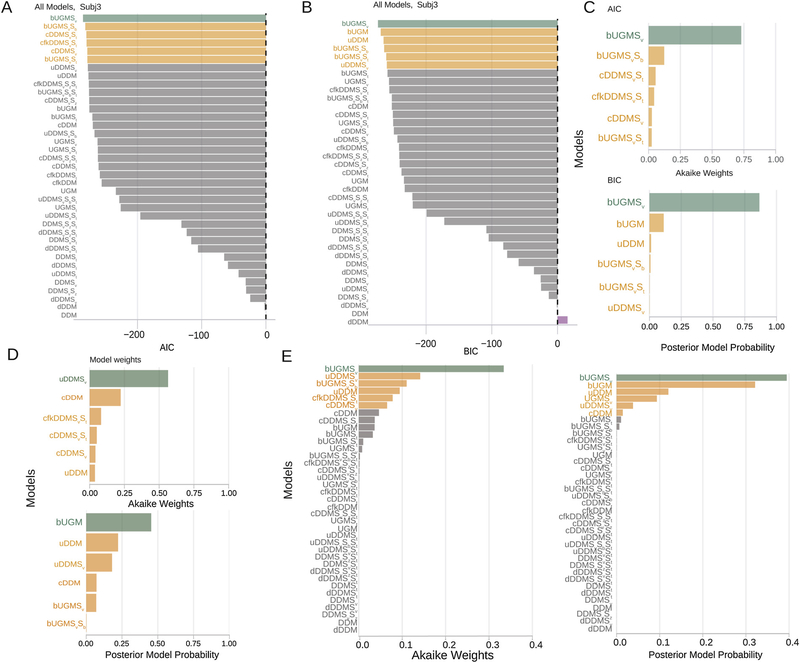

Fig. 9A and B shows the results from ChaRTr. For both monkeys, the four best-performing models all included a DDM with collapsing bounds, and the worst performing models were largely DDMs without any form of urgency. As mentioned above, for any functional form of a collapsing boundary there is a form of additive urgency signal that can generate identical predictions. So finding that collapsing bound models describe the data better is consistent with prior observations that (additive) urgency is an important factor. Together, the results are broadly consistent with those of Ditterich (2006a) and Hawkins et al. (2015b) who reported that models with forms of impatience are systematically better than models without it, for Roitman and Shadlen (2002)’s data. Fig. 9C and D shows that when the comparison is restricted to a subset of the ChaRTr models – UGMs and DDMs – variants of the UGM better explain the behavior of the monkeys than variants of the DDM, which is consistent with the findings of Hawkins et al. (2015a).

Fig. 9.

Model selection outcomes from applying a range of cognitive models of decision-making to data from two monkeys (Roitman and Shadlen, 2002). (A) and (B) show outcomes from monkeys b and n to compare models with various forms of urgency vs. simple diffusion decision models without urgency. For both monkeys, ChaRTr suggests models with urgency are better candidates for describing the data than DDMs without urgency. (C) and (D) show outcomes from monkeys b and n when comparing UGM vs. DDM models. For both monkeys, UGM based models substantially outperform the DDM based models.

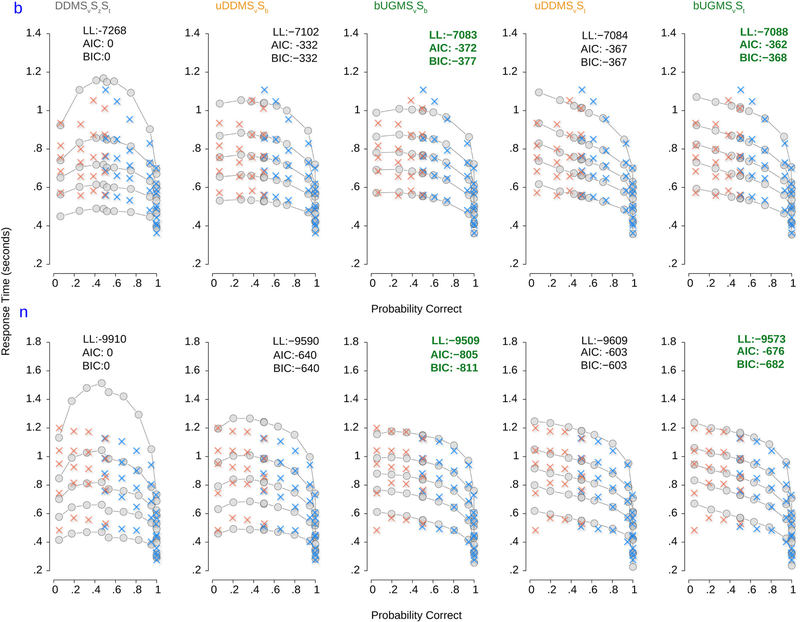

We can use ChaRTr to derive more insights into the behavior of the monkeys in this decision-making task, by examining whether urgency or the time constant of integration is a more important factor in explaining their behavior. Fig. 10 shows quantile probability plots for five models: DDMSvSzSt, a model from the DDM class without urgency but elaborated with variability in various parameters (Sv, Sz, St), two models with urgency and variability in some parameters (uDDMSvSt, uDDMSvSb), and two UGM models with variability in parameters (bUGMSvSb, bUGMSvSt). As was shown in the model selection outcomes in Fig. 9, the addition of urgency dramatically improved the ability of the models to account for the decision-making behavior of the two monkeys.

Fig. 10.