Highlights

* ERP and behavior: heterogeneity in emotion processing (EP) in faces and voices in ASD.
* Despite some group-level impairment, a subgroup displayed intact EP.
* Early ERP latencies (N170 to faces, N100 to voices) predicted within-modality EP.
* ERP-indexed social information processing speed (SIPS): a source of multimodal EP.
* Faster SIPS predicted membership in the intact EP subgroup: a novel intervention target.

Keywords: Autism spectrum disorder, ERP, N170, Emotion processing, Emotion recognition, Social cognition

Abstract

This study sought to describe heterogeneity in emotion processing in autism spectrum disorders (ASD) via electrophysiological markers of the perceptual and cognitive processes that underpin emotion recognition across perceptual modalities. Behavioral and neural indicators of emotion processing were collected: event-related potentials (ERPs) were recorded while youth with ASD completed a standardized facial and vocal emotion identification task. Youth with ASD exhibited impaired emotion recognition performance for adult faces and child voices, with a subgroup displaying intact recognition. Latencies of early perceptual ERP components, marking social information processing speed, and amplitudes of subsequent components reflecting emotion evaluation each correlated across modalities. Social information processing speed correlated with emotion recognition performance and predicted membership in a subgroup with intact adult vocal emotion recognition. Results indicate that the essential multimodality of emotion recognition in individuals with ASD may derive from early social information processing speed, despite heterogeneous behavioral performance; this process represents a novel social-emotional intervention target for ASD.

Accurate recognition of affect is a requisite skill for adaptive social functioning and a noted domain of impairment in individuals with autism spectrum disorder (ASD; Baker et al., 2010, Philip et al., 2010, Rump et al., 2009). Deficits in identification of emotional content in faces (Rump et al., 2009) and voices (e.g. prosody; Baker et al., 2010) are well-documented in this population and are posited to represent a core deficit (Philip et al., 2010). Nevertheless, some research reveals subgroups of individuals with ASD who exhibit relatively preserved emotion recognition ability (Bal et al., 2010, Harms et al., 2010, O’Connor et al., 2005), highlighting the under-studied topic of heterogeneity within ASD. Despite variable presentation and a limited understanding of its nature and course, emotion decoding represents a common intervention target (Beauchamp and Anderson, 2010, Lerner et al., 2011, Solomon et al., 2004). A critical goal for research is elucidating sensory and cognitive processes (i.e. mechanisms) underlying emotion perception (i.e. attending to, noticing, decoding, and distinguishing nonverbal emotional inputs) in ASD; this will aid in identifying meaningful subgroups and concretizing appropriate treatment targets.

1. Multimodality of emotion perception

Emotion recognition in humans is often hypothesized to emerge from a unified, multimodal emotion processing ability (Borod et al., 2000), that is, a latent capacity to process emotions in multiple domains. The vast majority of studies of emotion perception examine faces, often asserting that “basic to processing emotions is the processing of faces themselves” (Batty et al., 2011, p. 432). Crucially, this claim rests on the assumption of multimodal emotion processing: facial emotion processing informs our understanding of emotion perception in general only if it is an emergent process stemming from a more generalized, latent emotion processing ability. Assessing the multimodality of emotion processing therefore fills an essential gap in the literature linking emotional face processing to emotion processing generally.

In ASD populations, it is not known whether individuals exhibit multimodal deficits in emotion perception, and recent behavioral studies assessing the presence of a unified multimodal emotion recognition deficit have yielded inconsistent results. Emotion perception deficits in ASD have often been hypothesized to reflect a generalized, rather than sensory modality-specific, deficit in social perception (Beauchamp and Anderson, 2010, Jones et al., 2010, Philip et al., 2010). This account characterizes emotion perception deficits in different domains as a common developmental consequence of impairments in more basic social perceptual abilities (Batty et al., 2011). Under this account, difficulties with emotion perception should be evident across multiple sensory domains; that is, they should be multimodal. Consistent with this model, Philip et al. (2010) found substantial emotion recognition deficits in faces, voices, and bodies among adults with ASD.

Other research, however, contradicts this account. Jones et al. (2010) found no fundamental difficulty with facial or vocal (multimodal) emotion recognition among adolescents with ASD, and Rump et al. (2009) found intact basic emotion recognition in this age group. These results suggest an alternative account in which putative “deficits” may simply reflect discrete, modality-specific perceptual abnormalities, or instances of heterogeneity in social perception among youth with ASD. However, studies addressing multimodal emotion perception deficits in ASD have primarily employed standardized and unstandardized behavioral tasks, which limit generalizability and do not address differences in underlying processes (Harms et al., 2010, Jones et al., 2010). Overall, it remains unresolved whether observed deficits in emotion perception in ASD are modality-specific or reflect a generalized emotion processing difficulty.

In this study, we addressed this question directly by examining lower-level perceptual and cognitive processes across sensory modalities during emotion decoding within a sample of youth with ASD. Event-related potentials (ERPs), stimulus-locked peaks in the continuous scalp-recorded electroencephalogram (EEG), are effective for assessing basic processing in ASD (Jeste and Nelson, 2009); using them, we measured multimodal perception in a fashion less vulnerable to the variability (e.g. attention, task demands, motor skills) associated with behavioral measurement (see Rump et al., 2009). Indeed, the literature on facial emotion perception in ASD has revealed highly variable behavioral performance across studies and tasks (see Harms et al., 2010), indicating the need for more sophisticated methodologies such as eye-tracking (Rutherford and Towns, 2008), functional magnetic resonance imaging (fMRI; Wicker et al., 2008), and EEG/ERP (Wong et al., 2008) to address processing of emotional information even when behavioral performance appears unimpaired. While ERP measurement has its own limitations (e.g. movement artifacts, the need for a relatively large number of trials, a highly controlled environment), it provides a powerful method for isolating and quantifying the cognitive processes that may underlie perception (Nelson and McCleery, 2008). Notably, its high temporal resolution allows description of both the speed/efficiency (latency) and the salience/intensity (amplitude) of such processes at discrete stages representing distinct cognitive events. We recorded ERPs during a normed facial and vocal emotion identification task to assess the presence and heterogeneity of a multimodal emotion processing abnormality in youth with ASD and, if present, to specify its impact on emotion recognition.

2. Neural correlates of visual (facial) emotion perception

ERPs have frequently been used to examine facial emotion processing in typically developing (TD) populations, with two components yielding highly consistent evidence. The N170 has been shown to mark early perceptual processes involved in recognition and identification of configural information in faces (Bentin et al., 1996, Nelson and McCleery, 2008) and has been shown to respond to emotional expressions (Blau et al., 2007); however, see Eimer et al. (2003) for a contrasting account. Neural generators of the N170 have been localized to occipitotemporal sites critical for social perception, including fusiform gyrus (Shibata et al., 2002) and superior temporal sulcus (Itier and Taylor, 2004).

Meanwhile, components subsequent to face structural encoding have consistently been shown to reflect emotional valence. Specifically, the fronto-central N250 (Luo et al., 2010) is evoked by observation of an emotionally expressive face (Balconi and Pozzoli, 2008, Carretie et al., 2001, Streit et al., 2001, Wynn et al., 2008). The N250 is considered to mark higher-order face processing such as affect decoding, and is presumed to reflect the modulatory influence of subcortical structures, including the amygdala (Streit et al., 1999). Overall, while other components have sometimes been shown to respond to the presence of faces (e.g. the P1; Hileman et al., 2011), these two ERP components can be seen to reflect the earliest structural (N170) and evaluative (N250) processes typically involved in facial emotion perception.

In ASD populations, ERPs collected during presentation of facial stimuli have been shown to reveal important information about processing of such stimuli (Dawson et al., 2005). In particular, the N170 has received much attention. Several studies in youth and adults with ASD reveal slowed processing of facial information as measured by N170 latency (e.g. Hileman et al., 2011, McPartland et al., 2004, McPartland et al., 2011, O’Connor et al., 2005, O’Connor et al., 2007, Webb et al., 2006); however, see Webb et al. (2010) for a contrary account. Likewise, some research suggests smaller N170 amplitudes in response to facial emotions in ASD (O’Connor et al., 2005).

The degree to which cognitive processes marked by the fronto-central N250 are affected in ASD is poorly understood. No ASD research to date has examined the relationship between facial emotion recognition ability and this ERP component. However, given consistent findings for the N170, as well as for some subsequent components (e.g. N300; Jeste and Nelson, 2009), we predicted that the N250 would show a similar pattern of response.

3. Neural correlates of auditory (vocal) emotion perception

Recent work in TD adults has revealed that early emotion processing can be indexed from the onset of emotional vocal stimuli, even sentence-length stimuli, in naturalistic speech. This research suggests that a series of three ERP components reflects this process: a central N100 (early sensory processing), a frontal P200 (integration of prosodic acoustic cues), and a frontal N300 (early emotional evaluation; Paulmann et al., 2009, Paulmann and Kotz, 2008a, Paulmann and Kotz, 2008b). This sequence is thought to involve a pathway projecting from the superior temporal gyrus, through the anterior superior temporal sulcus, to the right inferior frontal gyrus and orbito-frontal cortex (Paulmann et al., 2010).

Individuals with ASD also show ERP evidence of abnormal processing of auditory speech information (Nelson and McCleery, 2008). Reduced amplitudes during perception of speech sounds have been reported for auditory ERP components such as the P300, P1, N2, P3, and N4 (Dawson et al., 1988, Whitehouse and Bishop, 2008); however, little is known about neural correlates of emotion recognition during perception of vocal prosody in naturalistic speech in ASD. That said, the aforementioned component series (N100, P200, N300) has been effective in indexing abnormal vocal emotion processing in Williams Syndrome (WS), a disorder marked by heightened sensitivity to emotional information (Pinheiro et al., 2010). That study found that individuals with WS exhibited higher amplitudes for each of these components, as well as slightly earlier N100 and P200 latencies, relative to a TD group. Notably, previous research suggests that heightened attention to social stimuli in WS contrasts with inattention to social stimuli in ASD (e.g. Riby and Hancock, 2009). Thus, although this series of ERP components has not yet been explored in ASD, we predicted that longer latencies and smaller amplitudes of these components would relate to poorer emotion recognition performance in youth with ASD.

4. Aims and hypotheses

We sought to clarify issues of multimodality and heterogeneity of emotion perception in ASD by integrating age-normed behavioral measures and ERPs to explore whether ERP-indexed patterns of emotion processing (rather than behavioral task responses) are related across modalities. Crucially, rather than continuing to highlight group differences between TD and ASD individuals, we aimed to better explore the oft-neglected topic of individual differences within ASD populations. This work has vital implications for understanding extent and domain-specificity of emotion recognition deficits in ASD, as well as for identifying appropriate intervention targets and subpopulations defined by intact emotion recognition ability.

Based on the extant literature, we specified three primary hypotheses and one applied aim. First, we predicted that youth with ASD would show deficits in facial and vocal emotion recognition on age-normed measures, but that a subset of the population would show intact emotion recognition ability. Second, we hypothesized that within-modality ERP latencies and amplitudes would correlate with performance on the associated emotion recognition task. Third, consistent with a unified multimodal emotion processing deficit, we predicted correlations across auditory and visual modalities for both behavioral measures and ERPs, before and after relevant controls (age, IQ, and reaction time). Finally, we aimed to test whether clinically meaningful effects could be detected among the obtained results, since ERP components may significantly predict performance while still yielding only small effects. Thus, we hypothesized that, after relevant controls, within-modality ERP latencies and amplitudes would identify whether participants were likely to belong to distinct subgroups with intact versus impaired emotion recognition ability.

5. Methods

5.1. Participants

Participants included 40 consenting youth with ASD enrolled in a larger study of social functioning. All participants had pre-existing diagnoses of ASD, which were confirmed with the Autism Diagnostic Observation Schedule (Lord et al., 1999), the “gold standard” measure of the presence of ASD in research settings, administered by a research-reliable examiner (ML). All participants met ADOS cutoffs for ASD. A reliable and valid abridged version of the Wechsler Intelligence Scale for Children-IV (Vocabulary and Matrix Reasoning; Ryan et al., 2007, Wechsler, 2003) was administered to confirm normative cognitive ability (IQ>70). After EEG processing (six participants were excluded due to excessive artifact), the final sample included 34 individuals (26 male; mean age=13.07 years, SD=2.07). Independent-samples t-tests revealed no difference between excluded and included participants in terms of age (t=−.84, p=.41), IQ (t=−.29, p=.78), or ADOS score (t=−.12, p=.90), and a χ² test revealed no association between exclusion status and sex (χ²=1.76, p=.18).

5.2. Behavioral measure

Diagnostic Analysis of Nonverbal Accuracy-2 (DANVA-2; Nowicki, 2004). The DANVA-2 is a widely used, age-normed, standardized measure of emotion recognition in adult and child faces (Nowicki and Carton, 1993) and voices (Baum and Nowicki, 1996, Demertzis and Nowicki, 1998). Participants rate visual stimuli (24 child and 24 adult faces expressing high- or low-intensity happiness, sadness, anger, or fear) by pressing a button indicating the selected emotion. They rate auditory stimuli in the same manner: 24 child and 24 adult voices saying the phrase, “I'm leaving the room now, but I'll be back later,” with high- or low-intensity happy, sad, angry, or fearful emotion. The DANVA-2 yields the raw number of emotion recognition errors, so lower scores indicate better performance. Both the facial (Solomon et al., 2004) and vocal (Lerner et al., 2011) modules have been used with youth with ASD. In this study, stimuli were presented in a 2×2 (adult/child × faces/voices) block design, randomized within and between blocks. Adult/child and high/low-intensity scales were derived (scales were not derived for individual emotions due to limited statistical power). Scale reliability (Cronbach's α) was high for both the facial (.913) and vocal (.889) scales.
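Cronbach's α compares the summed variance of individual items with the variance of the total score. The sketch below is a minimal, dependency-free illustration and not the scoring procedure used in the study; it assumes item-level scores are available as one list per item (hypothetically coded 1 = error, 0 = correct), and the function name `cronbach_alpha` is our own.

```python
from statistics import pvariance


def cronbach_alpha(items):
    """Cronbach's alpha for a scale.

    items: one list per item, each holding one score per participant
    (hypothetically 1 = error, 0 = correct for a DANVA-2-style item).
    """
    k = len(items)
    n_participants = len(items[0])
    # Each participant's total score summed across all k items
    totals = [sum(item[p] for item in items) for p in range(n_participants)]
    # Sum of item variances vs. variance of the total score
    # (population variances throughout; the ratio is unchanged with sample variances)
    sum_item_var = sum(pvariance(item) for item in items)
    total_var = pvariance(totals)
    return k / (k - 1) * (1 - sum_item_var / total_var)


# Toy data: three items answered identically by four participants
consistent = [[1, 0, 1, 0], [1, 0, 1, 0], [1, 0, 1, 0]]
print(round(cronbach_alpha(consistent), 3))  # 1.0
```

Perfectly redundant items give α = 1, while items whose totals vary no more than the items themselves give α = 0; values such as .913 and .889 fall near the upper end of that range.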

5.3. ERP measures

5.3.1. Stimuli and procedures

ERP data were collected during administration of the DANVA-2 stimuli. All stimuli were presented in a sound-attenuated room with low ambient light using MATLAB 7.9.0. Participants were seated before a 24in. flat-screen color monitor (60Hz, 1024×768 resolution) at a viewing distance of approximately 75cm, with a keyboard immediately in front of them. Each stimulus was accompanied by an on-screen listing of the four emotion choices, each corresponding to a key press. Participants were permitted to take as long as needed to choose an emotion (even after stimulus presentation had ended), and a 1000ms blank-screen inter-stimulus interval followed each choice. Participants received on-screen and verbal instructions before the procedures began, and experimenters ensured that participants could comfortably reach the keyboard. Facial stimuli were presented on the monitor for up to 3000ms each against a black background (if a participant made a selection before 3000ms, the stimulus disappeared once the selection was made). Vocal stimuli were presented via screen-mounted speakers at approximately 65dB.

5.3.2. EEG acquisition

EEG was recorded continuously at ∼2000Hz using ActiView software and active referencing. A 32-channel BioSemi Active 2 cap, with Ag/AgCl-tipped electrodes arranged according to the international 10/20 system, was fitted to each participant's head according to manufacturer specifications. A small amount of electrolyte gel was applied to each electrode to keep electrode offsets low. Additional electrodes were placed at supra- and infra-orbital sites of each eye to monitor vertical and horizontal eye movements. As specified by BioSemi's hardware setup (BioSemi, Amsterdam, The Netherlands), the ground electrode was formed by the Common Mode Sense active electrode and the Driven Right Leg passive electrode; this configuration is designed to make the signal insensitive to interference and precludes the need to specify impedance thresholds. For all stimuli, ERPs were time-locked to stimulus onset.

5.3.3. ERP processing

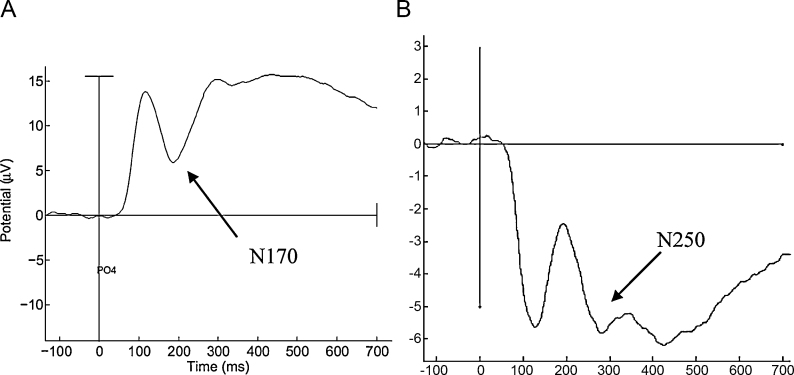

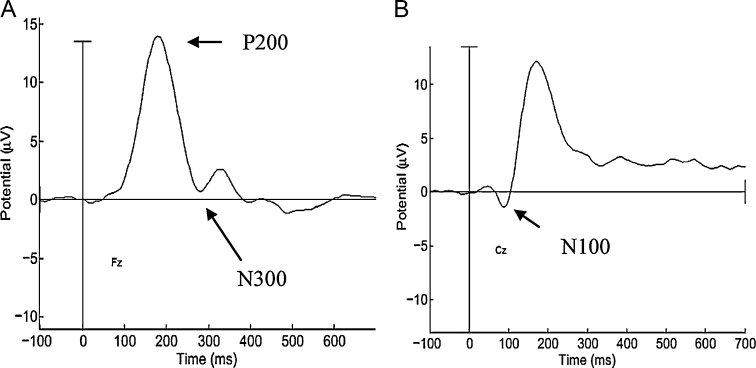

ERP processing was completed with the EEGLAB (version 9.0.3.4b) and ERPLAB (version 1.0.0.42) MATLAB packages. Data were re-sampled to 500Hz, re-referenced to the whole-head average, and digitally low-pass filtered at 30Hz using a Butterworth filter. Artifact rejection was conducted visually on continuous waveforms, with the researcher blind to condition and participant; epochs with ocular or clear movement artifacts were excluded from analyses. All participants retained for final analyses had both >16 trials and >50% of trials marked “good” for both face and voice stimuli. The number of “good” trials did not differ significantly by condition. Epochs extending from 150ms pre-stimulus to 700ms post-stimulus were created (limiting the possibility that ocular artifacts would emerge due to lengthy epochs), and each epoch was corrected against a 100ms baseline. Finally, after confirming component morphology in the grand-average waveform and in each individual waveform, ERPs were extracted from electrode sites corresponding to those used in the previous literature. For face-responsive ERPs, the N170 (peak between 160 and 220ms) was extracted from electrode PO4, and the N250 (peak between 220 and 320ms) was extracted from a C4, F4, and FC2 composite (see Fig. 1; Jeste and Nelson, 2009, McPartland et al., 2004, Nelson and McCleery, 2008). For voice-responsive ERPs, the N100 (peak between 90 and 130ms) was extracted from Cz, and the P200 (peak between 180 and 220ms) and N300 (peak between 280 and 320ms) were extracted from Fz (see Fig. 2; Paulmann et al., 2009, Pinheiro et al., 2010). Peak amplitudes and latencies for each component were averaged for each participant and exported for analysis.
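The baseline-correction and peak-extraction steps described above can be sketched as follows. This is a minimal illustration, not the EEGLAB/ERPLAB pipeline used in the study. It assumes one participant-averaged waveform stored as a Python list sampled at 500Hz from −150ms, that the 100ms baseline spans −100 to 0ms (the text does not state which 100ms was used), and that a "peak" is the most extreme point of the expected polarity within each component's window. All names (`baseline_correct`, `composite`, `peak`, `COMPONENTS`) are hypothetical.

```python
SRATE_HZ = 500                # sampling rate after re-sampling (from the text)
EPOCH_START_MS = -150         # epoch onset relative to stimulus (from the text)
STEP_MS = 1000 / SRATE_HZ     # 2 ms between samples at 500 Hz
BASELINE_MS = (-100, 0)       # ASSUMPTION: baseline = last 100 ms before onset

# Search windows (ms) and expected polarity per component, taken from the text
COMPONENTS = {
    "N170": ((160, 220), -1),
    "N250": ((220, 320), -1),
    "N100": ((90, 130), -1),
    "P200": ((180, 220), +1),
    "N300": ((280, 320), -1),
}


def sample_times(n_samples):
    """Latency (ms) of each sample in an epoch starting at EPOCH_START_MS."""
    return [EPOCH_START_MS + i * STEP_MS for i in range(n_samples)]


def baseline_correct(epoch):
    """Subtract the mean voltage of the pre-stimulus baseline window."""
    times = sample_times(len(epoch))
    base = [v for t, v in zip(times, epoch) if BASELINE_MS[0] <= t < BASELINE_MS[1]]
    offset = sum(base) / len(base)
    return [v - offset for v in epoch]


def composite(*channels):
    """Point-by-point mean of several channels (e.g. C4, F4, FC2 for the N250)."""
    return [sum(vals) / len(vals) for vals in zip(*channels)]


def peak(erp, component):
    """Return (latency_ms, amplitude) of the component's peak within its window."""
    (start, end), polarity = COMPONENTS[component]
    in_window = [(t, v) for t, v in zip(sample_times(len(erp)), erp) if start <= t <= end]
    # polarity -1 selects the most negative point, +1 the most positive point
    return max(in_window, key=lambda tv: polarity * tv[1])


# Toy averaged waveform: 426 samples span -150..700 ms in 2 ms steps
erp = [0.0] * 426
erp[170] = -5.0                  # sample 170 -> -150 + 170 * 2 = 190 ms
print(peak(erp, "N170"))         # (190.0, -5.0)
```

Picking the extreme point inside a fixed window is the simplest peak definition; toolboxes also offer local-peak variants that require the point to exceed its neighbours, which can matter when a window edge cuts into an adjacent component.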

Fig. 1.

Grand averaged ERP waveforms to faces: (A) N170 (peak between 160 and 220ms) extracted from electrode PO4; (B) N250 (peak between 220 and 320ms) extracted from a composite of C4, F4, and FC2.

Fig. 2.

Grand averaged ERP waveforms to voices: (A) P200 (peak between 180 and 220ms) and N300 (peak between 280 and 320ms) extracted from electrode Fz; (B) N100 (peak between 90 and 130ms) extracted from Cz.

5.4. Data analytic plan

To test our first hypothesis, that youth with ASD would show deficits on the emotion recognition behavioral task, paired-sample one-tailed t-tests (testing effects in the direction of the ASD group showing greater impairments than TD norms) were conducted, comparing observed values to each participant's age-normed means. We also examined the distributions of scores to see whether a subset of the population performed at or above the norm-referenced mean.
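The comparison against each participant's age-normed mean amounts to a one-sample t-test on the differences. A minimal, dependency-free sketch follows; the inputs are hypothetical, the function name `paired_t_vs_norms` is our own, and the one-tailed p-value would then be the lower-tail probability of a t distribution with n − 1 degrees of freedom (for example via `scipy.stats.t.cdf`, omitted here to keep the sketch self-contained).

```python
from math import sqrt
from statistics import mean, stdev


def paired_t_vs_norms(observed_errors, normed_means):
    """Paired-sample t statistic comparing each participant's error count
    with the age-normed mean for that participant.

    Differences are taken as norm - observed, so making more errors than the
    norm yields a negative t, matching the sign convention of the reported t values.
    """
    diffs = [norm - obs for obs, norm in zip(observed_errors, normed_means)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / sqrt(n))  # mean difference over its standard error
    return t, n - 1                             # t statistic and degrees of freedom


# Hypothetical data: three participants, each compared with a norm of 4 errors
t, df = paired_t_vs_norms([5, 6, 7], [4, 4, 4])
print(round(t, 3), df)  # -3.464 2
```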

To test our second hypothesis, that amplitudes and latencies of modality-specific ERPs (recorded during the emotion recognition tasks) would correlate with concurrent emotion recognition performance, we computed one-tailed Spearman's rho correlations between within-modality performance (i.e. overall face or overall voice measures) and within-modality ERP latencies and amplitudes (4 and 6 familywise tests, respectively). Spearman's rho was chosen because the stated aim was to describe within-sample individual differences, and tests were one-tailed in the predicted direction of shorter latencies and larger amplitudes relating to better performance. If significant effects were found, post hoc probing was conducted to examine their source (child vs. adult; high vs. low intensity).
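Spearman's rho is the Pearson correlation computed on ranks, with tied values sharing their average rank. The stdlib sketch below illustrates the statistic only; the data are hypothetical, and the function names are our own. For the one-tailed p-value, recent SciPy versions accept an `alternative=` argument on `scipy.stats.spearmanr`, which is not used here.

```python
from math import sqrt
from statistics import mean


def average_ranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]]
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def pearson(x, y):
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def spearman_rho(x, y):
    """Spearman's rho as the Pearson correlation of average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical N170 latencies (ms) and DANVA-2 error counts for four participants
print(spearman_rho([180, 190, 200, 210], [3, 5, 4, 8]))  # 0.8
```

A positive rho here means longer latencies go with more errors, which is the direction tested for latency in the one-tailed analyses.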

To test our third hypothesis, that behavioral performance and ERP responses would correlate across modalities (indicating a unified multimodal emotion perception deficit), we first computed a one-tailed Spearman's rho correlation between performance on DANVA-2 Faces and DANVA-2 Voices. If this effect was significant, post hoc probing was conducted to examine its source (child vs. adult; high vs. low intensity). Second, we computed one-tailed Spearman's rho correlations between face-specific and voice-specific ERP amplitudes and latencies (two sets of 6 familywise tests), testing effects in the direction of latencies and amplitudes each correlating positively across modalities. As ERP amplitudes and latencies shift over ontogenetic time and may be influenced by IQ in both ASD and TD populations (Jeste and Nelson, 2009, Nelson and McCleery, 2008, Webb et al., 2006), we re-ran all significant correlations as partial correlations, controlling for age and IQ.

Next, since behavioral performance on emotion recognition tasks can also be influenced by reaction time (Harms et al., 2010), we considered its influence on our results. First, we conducted one-tailed Spearman's rho correlations between reaction time and ERP components, hypothesizing that longer latencies and smaller amplitudes would relate to longer reaction times. Second, we re-ran all significant partial correlations from hypothesis 3, adding in concurrent reaction time as a covariate.

Finally, we examined whether ERP predictors of performance that remained significant after controls could predict whether a given individual was likely to belong to a subgroup without deficits relative to age norms. To do so, we first established a threshold at the halfway point between the means of the normative sample and the ASD sample. Jacobson and Truax (1991) describe this as the “least arbitrary” (p. 13) indicator of clinical significance, as it represents a likelihood threshold above which (better performance, fewer errors) a participant is likely to be drawn from an unimpaired population, and below which (poorer performance, more errors) they are not. We then determined whether each individual performed at or above this threshold (i.e. made no more errors than it) on each DANVA-2 subscale and dummy coded the result (1=intact ability, 0=impaired ability). Next, we ran a binary logistic regression predicting category membership from each remaining ERP predictor, controlling for age, IQ, and reaction time. If the predictor was significant, we exponentiated its coefficient, exp(β), to obtain the odds ratio for an individual's likelihood of having intact emotion recognition.
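The cutoff and dummy-coding steps can be sketched in a few lines. This is an illustration only: it uses the Table 1 group means for DANVA-2-Adult Voices as inputs, whereas the study may have applied participant-specific age norms, and the error counts passed to the coding function are hypothetical. The function names are our own.

```python
def midpoint_cutoff(norm_mean, clinical_mean):
    """Cutoff halfway between the normative mean and the clinical-sample mean."""
    return (norm_mean + clinical_mean) / 2


def code_intact(error_scores, cutoff):
    """Dummy-code subgroups: 1 = intact (errors at or below the cutoff), 0 = impaired."""
    return [1 if errors <= cutoff else 0 for errors in error_scores]


# Group means for DANVA-2-Adult Voices, taken from Table 1
cutoff = midpoint_cutoff(7.91, 8.71)
print(round(cutoff, 2))                    # 8.31
print(code_intact([5, 8, 9, 12], cutoff))  # [1, 1, 0, 0]
```

Because lower DANVA-2 scores mean better performance, "at or above threshold" in performance corresponds to an error count at or below the cutoff, which is why the comparison is `<=`.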

6. Results

6.1. Hypothesis 1: deficits in emotion recognition

Table 1 presents sample demographics and descriptive statistics. For adult stimuli, participants with ASD made significantly more emotion recognition errors for faces (t(33)=−1.98, p=.028) and marginally more errors for voices (t(33)=−1.63, p=.057), averaging .63 and .80 more errors than age-group norms, respectively. For child stimuli, participants made significantly more emotion recognition errors for voices (t(33)=−3.47, p<.001), averaging 1.43 more errors than age-group norms; however, the number of errors for faces did not differ from these norms (t(33)=−1.24, p=.11). Notably, for all DANVA-2 scales, performance varied considerably across participants, with the top quartile performing at least one standard deviation above the mean of the standardization sample and the bottom quartile performing at least 3 standard deviations below it.

Table 1.

Descriptive characteristics of ASD participants.

| Measure | ASD sample: Mean (SD) | ASD sample: Range | DANVA-2 age-normed TD standardization sample: Mean (SD) |
|---|---|---|---|
| Age (years) | 13.07 (2.07) | 9.80–16.67 | |
| Full-Scale IQ | 111.06 (15.58) | 74–138 | N/A |
| ADOS | 11.03 (3.62) | 7–20 | N/A |
| DANVA-2-Adult Faces, # of errors | 5.53 (2.15) | 2–10 | 4.90 (.70) |
| DANVA-2-Adult Faces, % correct | 77% (9%) | 92–58% | 80% (3%) |
| DANVA-2-Adult Faces, mean RT (SD) | 3.23 (1.15) | 1.79–6.50 | |
| DANVA-2-Child Faces, # of errors | 3.68 (2.37) | 0–9 | 3.22 (.27) |
| DANVA-2-Child Faces, % correct | 85% (10%) | 100–63% | 87% (1%) |
| DANVA-2-Child Faces, mean RT (SD) | 2.39 (1.01) | 1.25–5.50 | |
| DANVA-2-Adult Voices, # of errors | 8.71 (3.21) | 4–18 | 7.91 (.96) |
| DANVA-2-Adult Voices, % correct | 64% (13%) | 83–25% | 67% (4%) |
| DANVA-2-Adult Voices, mean RT (SD) | 6.23 (1.80) | 3.87–11.33 | |
| DANVA-2-Child Voices, # of errors | 6.24 (2.71) | 1–13 | 4.81 (.47) |
| DANVA-2-Child Voices, % correct | 74% (11%) | 96–46% | 80% (2%) |
| DANVA-2-Child Voices, mean RT (SD) | 4.30 (.82) | 3.09–6.14 | |

RT=reaction time in seconds. ADOS=Autism Diagnostic Observation Schedule. DANVA-2=Diagnostic Analysis of Nonverbal Accuracy-2. Q1=top of 1st quartile. Q3=top of 3rd quartile.

6.2. Hypothesis 2: ERP correlates of emotion recognition

6.2.1. Faces

A significant correlation was found between number of errors on DANVA-2 Faces and N170 latency, indicating that those with longer latencies made more errors (see Table 2). No relation was found between the N250 amplitude or latency and DANVA-2 performance. Post hoc probing suggested that the observed effect was driven by N170 response to adult (rho=.40, p<.01) and low-intensity faces (rho=.34, p=.02).

Table 2.

Correlation matrix showing correlation between number of errors and reaction time on DANVA-2-Faces and DANVA-2-Voices and within-modality ERP component latencies and amplitudes.

| | DANVA-2-Faces # of errors | DANVA-2-Faces reaction time | DANVA-2-Voices # of errors | DANVA-2-Voices reaction time |
|---|---|---|---|---|
| N170 latency | .33* | .17 | | |
| N250 latency | .21 | .13 | | |
| N170 amplitude | .04 | −.39* | | |
| N250 amplitude | −.12 | .25 | | |
| N100 latency | | | .44**,a | .17 |
| P200 latency | | | .04 | .09 |
| N300 latency | | | .17 | .30* |
| N100 amplitude | | | .04 | .04 |
| P200 amplitude | | | .04 | .03 |
| N300 amplitude | | | .17 | −.05 |

Note. Spearman's rho correlations. All are 1-tailed.

a Correlation remained significant after controlling for age, IQ, and reaction time.

*p<.05.

**p<.01.

6.2.2. Voices

A significant correlation was found between errors on DANVA-2 Voices and N100 latency, indicating that those with longer latencies made more errors. No relation was found between amplitudes or latencies of the P200 or N300 and DANVA-2 performance. Post hoc probing suggested this effect to be driven by N100 response to adult (rho=.36, p=.02) and low-intensity voices (rho=.32, p=.03).

6.3. Hypothesis 3: multimodality of emotion processing

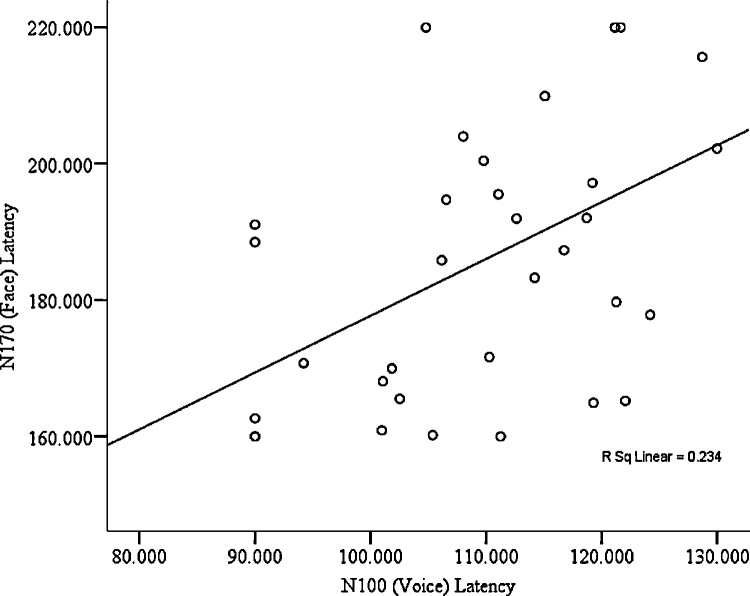

Performance on DANVA-2 Faces and Voices was not significantly correlated (p=.065). However, N170 latency to faces correlated positively with both N100 latency (see Fig. 3) and P200 latency to voices (see Table 3). N250 amplitude to faces also correlated positively with N300 amplitude to voices; no other ERP components were correlated across modalities.

Fig. 3.

Correlation between central N100 latency to voices and posterior N170 latency to faces.

Table 3.

Correlation matrix showing cross-modal correlations between ERP component latencies and amplitudes.

| | N100 Lat. | P200 Lat. | N300 Lat. | N100 Amp. | P200 Amp. | N300 Amp. |
|---|---|---|---|---|---|---|
| 1. N170 Lat. | .48**,a | .31* | .14 | | | |
| 2. N250 Lat. | .03 | .17 | .08 | | | |
| 3. N170 Amp. | | | | −.00 | .03 | −.54 |
| 4. N250 Amp. | | | | −.05 | .00 | .36*,a |

Note. Lat.=latency; Amp.=amplitude.

N170 and N250 are visual ERPs. N100, P200, and N300 are auditory ERPs. Spearman's rho correlations. All are 1-tailed.

a Correlation remained significant after controlling for age, IQ, and reaction time.

*p<.05.

**p<.01.

6.4. Influence of age and IQ

After controlling for age and IQ, the relationship between N170 latency and DANVA-2 Faces was marginally significant (rho=.26, p=.07), while the relationships with adult (rho=.04, p=.42) and low intensity faces (rho=.21, p=.12) were no longer significant.

After controls, the relationship between N100 latency and DANVA-2 Voices remained significant (rho=.34, p<.05), as did the relationship with adult voices (rho=.43, p<.01); the relationship with low-intensity voices was marginally significant (rho=.27, p<.07).

After controls, the correlations between N170 latency to faces and N100 latency to voices (rho=.45, p<.01) and between N250 amplitude to faces and N300 amplitude to voices (rho=.46, p<.01) remained significant; the correlation between N170 latency to faces and P200 latency to voices (rho=.23, p=.10) was no longer significant.

6.5. Influence of reaction time

Table 2 includes correlations between ERP components and concurrent DANVA-2 reaction time. Neither N250 latency, N250 amplitude, nor N170 latency was associated with concurrent reaction time. However, N170 amplitude correlated significantly with reaction time, indicating that those with larger amplitudes responded faster. This relationship was present for both adult (rho=−.40, p=.01) and child (rho=−.35, p=.02) faces. Voice-responsive ERP amplitudes, N100 latency, and P200 latency were not associated with reaction time. However, N300 latency correlated significantly with reaction time, indicating that those with shorter latencies responded faster. This relationship was present only for adult voices (rho=.30, p=.04).

The relationships between N100 latency and DANVA-2 total and adult Voices, between N170 and N100 latency, and between N250 and N300 amplitude remained significant in the previous analyses; these partial correlations were therefore re-run, additionally controlling for reaction time. Each of these relationships remained significant after this control (all rho>.37, all p<.05).

6.6. Predicting intact and impaired subgroups

Only the relationships between N100 latency and DANVA-2 total and adult Voices remained significant in the previous analyses, so these were used to predict subgroup membership. After controlling for age, IQ, and reaction time, N100 latency did not predict the odds of having intact vocal emotion recognition overall (exp(β)=.954, p=.18). However, after the same controls, N100 latency did predict the odds of having intact adult vocal emotion recognition (exp(β)=.917, p<.05): each 1ms increase in N100 latency was associated with an 8.3% decrease in the odds of intact emotion recognition for adult voices or, equivalently, each 1ms decrease multiplied those odds by about 1.09 (an increase of roughly 9%).
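In a binary logistic regression, exp(β) is the factor by which the odds change for a one-unit increase in the predictor. Assuming N100 latency entered the model in milliseconds, as the reported result implies, the percent change in odds for a shift of Δ ms is (exp(β)^Δ − 1) × 100. The helper below is a hypothetical illustration of that arithmetic applied to the reported exp(β)=.917.

```python
def pct_change_in_odds(exp_beta, delta=1.0):
    """Percent change in the odds for a change of `delta` units in the predictor,
    given exp_beta, the odds ratio for a one-unit increase."""
    return (exp_beta ** delta - 1) * 100


print(round(pct_change_in_odds(0.917, +1), 1))  # -8.3  (N100 latency 1 ms longer)
print(round(pct_change_in_odds(0.917, -1), 1))  # 9.1   (N100 latency 1 ms shorter)
```

Because odds ratios are multiplicative, a 1ms decrease raises the odds by 1/.917 ≈ 1.09 rather than by exactly 8.3%, and the effect compounds over larger latency differences.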

7. Discussion

This study sought to elucidate heterogeneity in emotion processing and assess the presence of multimodal deficits in emotion perception among youth with ASD using an emotion recognition task and ERP measurements of emotion processing. Participants showed statistically significant (though behaviorally minor) impairment in identification of adult faces and child voices. Across all indices, there was a wide range of performance, with the top quartile performing above age norms and the bottom quartile performing well below. Thus, results suggest variability in emotion recognition ability in youth with ASD, with a considerable portion of the population evincing some deficits in recognizing emotion in either faces or voices. Latencies of early perceptual ERP markers of face and voice processing were related to behavioral measures such that shorter latencies were associated with somewhat better performance. Subsequent ERP components commonly associated with emotion decoding were not related to behavioral performance. Thus, ERPs revealed systematic patterns in emotion processing, even in the presence of highly variable behavioral performance (Harms et al., 2010). This pattern of results suggests that emotion recognition deficits in ASD populations may stem not from higher-order processes (Rump et al., 2009) but from atypicalities at the earliest stages of social perception (McPartland et al., 2011).

The relationship between ERP indices and behavioral performance was relatively strongest for subtler emotions and for emotions displayed by adult stimuli, regardless of modality. We see two interpretations of the effect for subtler emotions. First, it may reflect a tendency of individuals with ASD to use more “cognitive” than “automatic” strategies to interpret emotions (Dissanayake et al., 2003). For high-intensity emotions, salient visual and auditory cues are clear, so cognitive strategies may be readily employed. For low-intensity emotions, however, cues are less clear, so only those youth who can quickly encode configural information may be able to use it to make subsequent judgments of emotional content. Second, given the correlations evident at early processing stages, stronger visual or auditory signals (i.e. more intense emotions) may be more accessible as sensory inputs, thereby facilitating emotion processing. Regarding the finding for adult stimuli, we speculate that it may reflect greater familiarity and increased facility (reflected by fewer total errors) with child stimuli. Less-familiar adult stimuli may, like low-intensity stimuli, contain fewer familiar cues, so those who more quickly encode configural information may be better able to use it for subsequent emotional evaluation.

Several effects were related to developmental and cognitive factors; however, the effect of N100 on emotion recognition in overall voices, adult voices and low-intensity voices, and the effect of N170 on facial emotion recognition, remained evident. Thus, as in typical development (Batty and Taylor, 2006), developmental differences in age (and IQ) may partially account for the relationship between early configural processing of faces and ability to accurately identify facial emotions. Meanwhile, the relationship of early sensory information processing to vocal emotion decoding ability was not significantly attenuated by age or IQ. However, given our limited age and IQ range, as well as the well-established patterns of change in ERP components over ontogenetic time, it is important for future research to examine these effects specifically and in broader samples to better elucidate the impact of development and IQ on this relationship.

Some ERP findings were related to reaction time. Specifically, faster reaction times were related to larger N170 amplitudes to faces and shorter N300 latencies to voices. This suggests that, in the visual modality, greater salience of configural facial information allowed for faster behavioral responses, while in the auditory modality, faster cognitive evaluation of emotional content permitted faster responses. However, reaction times did not appreciably alter any relations between ERP components and emotion recognition performance or between the components themselves, suggesting that the observed emotion processing deficits may be specific to social inputs rather than simply the result of generalized processing speed delays.

Contrary to our hypothesis, emotion recognition performance did not correlate across modalities. This suggests within-individual variability in emotion recognition ability, as found previously in facial emotion perception research in ASD (Harms et al., 2010). Thus, behaviorally, emotion recognition deficits in ASD do not appear multimodal (Jones et al., 2010), suggesting either that recognition in each modality reflects a discrete process or that cross-modal variability in cognitive processing may affect behavioral performance. Some ERP components did, however, associate across modalities, even after controlling for age, IQ, and reaction time. Specifically, latencies of the early components (N170 and N100) and amplitudes of the later components (N250 and N300) were positively correlated. The former finding suggests that difficulty with the speed of sensory processing of social information (social information processing speed) in ASD populations is not limited to a specific modality, and may indicate a pervasive deficit in basic social perception emanating from the fusiform/superior temporal regions. This is consistent with fMRI research on affect perception in ASD (Pelphrey et al., 2007), and supports findings of selectively slowed early social information processing speed (as indexed by N170) in response to facial stimuli in ASD compared to TD individuals (McPartland et al., 2011). Indeed, these effects suggest that efficiency of social information processing, rather than quality of mental representation of emotions (Rump et al., 2009) or indifference to social stimuli (Joseph et al., 2008, Whitehouse and Bishop, 2008), underlies general emotion perception deficits in ASD. Thus, there does appear to be a multimodal deficit in the basic stages of emotion processing speed in this population.

However, the latter finding indicates that the intensity (if not the speed) of some cognitive emotional evaluation may be consistent within individuals across modalities. This consistency may allow some youth to employ compensatory cognitive processes to mask or overcome early deficits in social perception. This is consistent with the “hacking hypothesis” (Bowler, 1992, Dissanayake et al., 2003) of ASD social cognition, which suggests that behaviorally intact social cognition in ASD may result from effortfully “hacked out,” cognitively mediated processing of social information rather than from intuitive processing. It also accords with past work revealing electrophysiological differences in social information processing in ASD in the presence of intact behavioral ability (Wong et al., 2008).

Finally, we found that N100 latency to adult voices, after controlling for age, IQ, and reaction time, predicted whether an individual was likely to belong to a subgroup with intact or impaired ability to identify emotions in those voices. Although this effect was not robust across stimuli, it adds clinically meaningful information to the statistically significant results above, and suggests that ERP analysis of vocal emotion processing may be an especially fertile area for study. It also reveals not only that a substantial proportion of youth with ASD evince intact emotion recognition abilities, but that ERP-indexed early sensory processing of social stimuli may differentiate those who do, thereby better describing the complex endophenotype of social cognition within ASD. This finding may also provide a window into an effective intervention mechanism, as adult voice emotion recognition has been shown to be amenable to intervention (Lerner et al., 2011).

It is also important to consider alternate interpretations of these findings. First, the range of obtained IQ and neuropsychological measures was limited. Thus, while we were able to rule out the possible confound of reaction time, we cannot rule out the influence of unmeasured third variables. For instance, given the frequent discrepancies between verbal and performance IQ in ASDs (Charman et al., 2011), it may be that those with higher verbal (but not performance) IQ did better on the verbally mediated task of identifying emotions, while those with higher performance (but not verbal) IQ exhibited faster social information processing speed. Second, it is possible that the observed slower ERP latencies were not due to slowed social information processing, but rather selective inattention to social stimuli (e.g. Klin et al., 2009). Finally, especially in a fairly lengthy, intensive task such as the full DANVA-2, we cannot rule out the possibility that individual differences in reward processing or systematic deficits in capacity for motivation by social stimuli (Chevallier et al., 2012) influenced these findings.

7.1. Limitations

There were several limitations to the present study. First, the DANVA-2 presents static faces, a single vocal sentence, and four basic emotions. Future studies should use dynamic faces (Rump et al., 2009), varying sentential content (Paulmann and Kotz, 2008b), and a broader range of emotions (Bal et al., 2010) during ERP data collection to assess the generalizability of the multimodal emotion processing deficit observed here. Second, while this study assessed the relationship between emotion processing and emotion recognition, it did not assess how these abilities relate to on-line social competence. Since social deficits are theorized to be the real-world consequences of emotion perception deficits (Beauchamp and Anderson, 2010), future work should include such measurement.

Third, our sample was limited in age and diagnostic severity. Future work should assess the presence of a multimodal emotion processing deficit in younger (Webb et al., 2006), older (O’Connor et al., 2007), and lower-functioning individuals with ASD. Indeed, given changes in social perception in ASD associated with adolescence (see Lerner et al., 2011), as well as shifts in socially salient ERP components during childhood in ASD and TD (Batty and Taylor, 2006, Webb et al., 2011), the restricted age range may considerably affect interpretation of results, even after controlling for age. Fourth, this study did not assess the influence of past intervention experience on participants’ emotion recognition or processing abilities. Such work could provide more direct clues as to the malleability of these processes for future interventions.

Fifth, most of our analyses were conducted (and several of our results were only significant) using one-tailed tests, which some readers may reasonably see as a concern. However, it is well established that shorter latencies and larger amplitudes of well-defined ERP components tend to reflect relatively “better” functioning as assessed by that component (Key et al., 2005). Moreover, in studies of ERP correlates of emotion processing in ASD as well as other populations, the majority of research indicates the same pattern: shorter latencies and larger amplitudes of well-defined components tend to reflect more “intact” functioning (Hileman et al., 2011, Jeste and Nelson, 2009, McPartland et al., 2004, McPartland et al., 2011, Nelson and McCleery, 2008, O’Connor et al., 2005, O’Connor et al., 2007, Streit et al., 2003, Webb et al., 2006). Thus, theoretical and past empirical work indicate effects in the hypothesized direction, and suggest that any effects found in the opposite direction may be spurious.
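
The directional logic of a one-tailed test can be made concrete with a small sketch. Assuming a test statistic with a symmetric null distribution (as with the t approximation for a correlation coefficient), the one-tailed p-value is half the two-tailed value when the observed effect lies in the predicted direction, and one minus that half otherwise. The function and the example numbers below are hypothetical illustrations, not values or code from this study.

```python
def one_tailed_p(two_tailed_p, observed_statistic, predicted_direction):
    """Convert a two-tailed p-value to a one-tailed p-value.

    Assumes a test statistic with a symmetric null distribution.
    `predicted_direction` is "positive" or "negative" (e.g. a negative
    correlation predicted between ERP latency and recognition accuracy).
    """
    if not 0.0 <= two_tailed_p <= 1.0:
        raise ValueError("two_tailed_p must lie in [0, 1]")
    if predicted_direction not in ("positive", "negative"):
        raise ValueError('predicted_direction must be "positive" or "negative"')
    if predicted_direction == "positive":
        in_predicted_direction = observed_statistic > 0
    else:
        in_predicted_direction = observed_statistic < 0
    if in_predicted_direction:
        return two_tailed_p / 2.0
    # Effects opposite to the prediction receive a large one-tailed p-value.
    return 1.0 - two_tailed_p / 2.0


if __name__ == "__main__":
    # Hypothetical: longer latency predicted to go with lower accuracy (rho < 0).
    print(one_tailed_p(0.08, -0.30, "negative"))  # 0.04
    print(one_tailed_p(0.08, 0.30, "negative"))   # 0.96
```

This is why the directional rationale matters: a one-tailed test gains power only for effects in the predicted direction, and an effect in the opposite direction receives a one-tailed p-value of at least .5, so it can never reach conventional significance.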

Sixth, this study lacked TD controls. Thus, while our results achieve our stated aim of elucidating the nature and variability in emotion processing within the ASD population, they shed little light on the degree of abnormality of this processing. Finally, the ERP methodology cannot provide precise information about spatial localization of the neural source of emotion processing. However, current high spatial resolution techniques (e.g. fMRI) do not have the temporal resolution to differentiate the observed early perceptual component processes revealed via electrophysiology. Thus, while future research should assess emotion processing in ASD populations using high spatial resolution techniques, using present technology our results could only be obtained through ERPs.

7.2. Future research and implications

This was among the first studies to obtain ERP measurement during an on-line emotion recognition task, to obtain ERP correlates of emotion recognition in vocal prosody, to use electrophysiology to assess multimodal emotion recognition ability, and to employ such methodology to differentiate the presence or absence of emotion recognition impairment in youth with ASD. Overall, these results suggest that early sensory processing of multimodal social information (social information processing speed) may be especially implicated in emotion recognition difficulties among youth with ASD, and that these difficulties may be consistent within individuals across modes of emotion perception. Such findings reinforce previous literature suggesting that, even in the presence of apparently intact behavior (Wong et al., 2008), some individuals with ASD may have difficulty employing otherwise “automatic” neural mechanisms of emotion processing (Jeste and Nelson, 2009, McPartland et al., 2011, Whitehouse and Bishop, 2008), and may thus rely on less efficient, explicit cognitive appraisals to render judgments of emotional content (Dissanayake et al., 2003). It also suggests that some individuals with ASD possess more intact social perception capabilities for evaluating emotions.

Future research should further explore relations between multimodal emotion processing and on-line behavioral outcomes, and the potential malleability of early ERP components in this population. Such work would be valuable in augmenting the effectiveness of interventions designed to improve social-emotional functioning. Additionally, research should explore whether these deficits are evident in young children with ASD, as they may be an outgrowth of already-identified ontogenetically early social perceptual abnormalities (Klin et al., 2009).

This study suggests that many youth with ASD do possess multimodal deficits in emotion recognition, and that these deficits are reflected in a construct of social information processing speed. This indicates that interventions that focus on cognitive appraisals of emotional information may fail to address the core deficit underlying emotion recognition impairment in this population (Lerner et al., 2012). Since recent research suggests that ERPs are sensitive and amenable to intervention in this population (Faja et al., 2012), treatments aiming to increase social information processing speed to improve social competence should be explored.

Conflict of interest

All authors report no biomedical financial interests or potential conflicts of interest.

Acknowledgments

This research was supported by Fellowships from the American Psychological Foundation, Jefferson Scholars Foundation, and International Max Planck Research School, and grants from the American Psychological Association and Association for Psychological Science to Matthew D. Lerner, and NIMH grant R00MH079617-03 to James P. Morris. James C. McPartland was supported by NIMH K23MH086785, NIMH R21MH091309 and a NARSAD Atherton Young Investigator Award. Portions of this article were presented at the 2011 American Academy of Child and Adolescent Psychiatry/Canadian Academy of Child and Adolescent Psychiatry Joint Annual Meeting. We would like to thank the children and parents who participated, as well as the research assistants who aided with data collection.

Contributor Information

Matthew D. Lerner, Email: mlerner@virginia.edu, mdl6e@virginia.edu.

James C. McPartland, Email: james.mcpartland@yale.edu.

James P. Morris, Email: jpmorris@virginia.edu.

References

- Baker K.F., Montgomery A.A., Abramson R. Brief report: perception and lateralization of spoken emotion by youths with high-functioning forms of autism. Journal of Autism and Developmental Disorders. 2010;40:123–129. doi: 10.1007/s10803-009-0841-1.

- Bal E., Harden E., Lamb D., Van Hecke A.V., Denver J.W., Porges S.W. Emotion recognition in children with autism spectrum disorders: relations to eye gaze and autonomic state. Journal of Autism and Developmental Disorders. 2010;40:358–370. doi: 10.1007/s10803-009-0884-3.

- Balconi M., Pozzoli U. Event-related oscillations (ERO) and event-related potentials (ERP) in emotional face recognition. International Journal of Neuroscience. 2008;118:1412–1424. doi: 10.1080/00207450601047119.

- Batty M., Meaux E., Wittemeyer K., Roge B., Taylor M.J. Early processing of emotional faces in children with autism: an event-related potential study. Journal of Experimental Child Psychology. 2011;109:430–444. doi: 10.1016/j.jecp.2011.02.001.

- Batty M., Taylor M.J. The development of emotional face processing during childhood. Developmental Science. 2006;9:207–220. doi: 10.1111/j.1467-7687.2006.00480.x.

- Baum K., Nowicki S. A measure of receptive prosody for adults: The Diagnostic Analysis of Nonverbal Accuracy-Adult Voices. Meeting of the Society for Research in Personality; Atlanta, GA; 1996.

- Beauchamp M.H., Anderson V. SOCIAL: an integrative framework for the development of social skills. Psychological Bulletin. 2010;136:39–64. doi: 10.1037/a0017768.

- Bentin S., Allison T., Puce A., Perez E. Electrophysiological studies of face perception in humans. Journal of Cognitive Neuroscience. 1996;8:551–565. doi: 10.1162/jocn.1996.8.6.551.

- Blau V.C., Maurer U., Tottenham N., McCandliss B.D. The face-specific N170 component is modulated by emotional facial expression. Behavioral and Brain Functions. 2007;3:1–13. doi: 10.1186/1744-9081-3-7.

- Borod J.C., Pick L.H., Hall S., Sliwinski M., Madigan N., Obler L.K., Welkowitz J., Canino E., Erhan H.M., Goral M., Morrison C., Tabert M. Relationships among facial, prosodic, and lexical channels of emotional perceptual processing. Cognition and Emotion. 2000;14:193–211.

- Bowler D.M. ‘Theory of mind’ in Asperger's syndrome. Journal of Child Psychology and Psychiatry. 1992;33:877–893. doi: 10.1111/j.1469-7610.1992.tb01962.x.

- Carretie L., Martin-Loeches M., Hinojosa J.A., Mercado F. Emotion and attention interaction studied through event-related potentials. Journal of Cognitive Neuroscience. 2001;13:1109–1128. doi: 10.1162/089892901753294400.

- Charman T., Pickles A., Simonoff E., Chandler S., Loucas T., Baird G. IQ in children with autism spectrum disorders: Data from the Special Needs and Autism Project (SNAP). Psychological Medicine. 2011;41:619–627. doi: 10.1017/S0033291710000991.

- Chevallier C., Kohls G., Troiani V., Brodkin E.S., Schultz R.T. The social motivation theory of autism. Trends in Cognitive Sciences. 2012. doi: 10.1016/j.tics.2012.02.007.

- Dawson G., Finley C., Phillips S., Galpert L. Reduced P3 amplitude of the event-related brain potential: its relationship to language ability in autism. Journal of Autism and Developmental Disorders. 1988;18:493–504. doi: 10.1007/BF02211869.

- Dawson G., Webb S.J., McPartland J.C. Understanding the nature of face processing impairment in autism: insights from behavioral and electrophysiological studies. Developmental Neuropsychology. 2005;27:403–424. doi: 10.1207/s15326942dn2703_6.

- Demertzis A., Nowicki S. Correlates of the ability to read emotion in the voices of children. Meeting of the Southeastern Psychological Association; Mobile, AL; 1998.

- Dissanayake C., Macintosh K., Repacholi B., Slaughter V. Mind reading and social functioning in children with autistic disorder and Asperger's disorder. In: Individual Differences in Theory of Mind: Implications for Typical and Atypical Development. Psychology Press; New York, NY, USA: 2003. pp. 213–239.

- Eimer M., Holmes A., McGlone F.P. The role of spatial attention in the processing of facial expression: an ERP study of rapid brain responses to six basic emotions. Cognitive, Affective & Behavioral Neuroscience. 2003;3:97–110. doi: 10.3758/cabn.3.2.97.

- Faja S., Webb S.J., Jones E., Merkle K., Kamara D., Bavaro J., Aylward E., Dawson G. The effects of face expertise training on the behavioral performance and brain activity of adults with high functioning autism spectrum disorders. Journal of Autism and Developmental Disorders. 2012;42:278–293. doi: 10.1007/s10803-011-1243-8.

- Harms M.B., Martin A., Wallace G.L. Facial emotion recognition in autism spectrum disorders: a review of behavioral and neuroimaging studies. Neuropsychology Review. 2010;20:290–322. doi: 10.1007/s11065-010-9138-6.

- Hileman C.M., Henderson H., Mundy P., Newell L., Jaime M. Developmental and individual differences on the P1 and N170 ERP components in children with and without autism. Developmental Neuropsychology. 2011;36:214–236. doi: 10.1080/87565641.2010.549870.

- Itier R.J., Taylor M.J. Source analysis of the N170 to faces and objects. Neuroreport. 2004;15:1261–1265. doi: 10.1097/01.wnr.0000127827.73576.d8.

- Jacobson N.S., Truax P. Clinical significance: a statistical approach to defining meaningful change in psychotherapy research. Journal of Consulting and Clinical Psychology. 1991;59:12–19. doi: 10.1037//0022-006x.59.1.12.

- Jeste S.S., Nelson C.A., III. Event related potentials in the understanding of autism spectrum disorders: an analytical review. Journal of Autism and Developmental Disorders. 2009;39:495–510. doi: 10.1007/s10803-008-0652-9.

- Jones C.R.G., Pickles A., Falcaro M., Marsden A.J.S., Happé F., Scott S.K., Sauter D., Tregay J., Phillips R.J., Baird G., Simonoff E., Charman T. A multimodal approach to emotion recognition ability in autism spectrum disorders. Journal of Child Psychology and Psychiatry. 2010;52:275–285. doi: 10.1111/j.1469-7610.2010.02328.x.

- Joseph R.M., Ehrman K., McNally R., Keehn B. Affective response to eye contact and face recognition ability in children with ASD. Journal of the International Neuropsychological Society. 2008;14:947–955. doi: 10.1017/S1355617708081344.

- Key A.P.F., Dove G.O., Maguire M.J. Linking brainwaves to the brain: an ERP primer. Developmental Neuropsychology. 2005;27:183–215. doi: 10.1207/s15326942dn2702_1.

- Klin A., Lin D., Gorrindo P., Ramsay G., Jones W. Two-year-olds with autism orient to non-social contingencies rather than biological motion. Nature. 2009;459:257–261. doi: 10.1038/nature07868.

- Lerner M.D., Mikami A.Y., Levine K. Socio-dramatic affective-relational intervention for adolescents with Asperger syndrome & high functioning autism: pilot study. Autism. 2011;15:21–42. doi: 10.1177/1362361309353613.

- Lerner M.D., White S.W., McPartland J.C. Mechanisms of change in psychosocial interventions for autism spectrum disorders. Dialogues in Clinical Neuroscience. 2012;14:307–318. doi: 10.31887/DCNS.2012.14.3/mlerner.

- Lord C., Rutter M., DiLavore P., Risi S. Manual for the Autism Diagnostic Observation Schedule. Western Psychological Services; Los Angeles, CA: 1999.

- Luo W., Feng W., He W., Wang N., Luo Y. Three stages of facial expression processing: ERP study with rapid serial visual presentation. Neuroimage. 2010;49:1857–1867. doi: 10.1016/j.neuroimage.2009.09.018.

- McPartland J.C., Dawson G., Webb S.J., Panagiotides H., Carver L.J. Event-related brain potentials reveal anomalies in temporal processing of faces in autism spectrum disorder. Journal of Child Psychology and Psychiatry. 2004;45:1235–1245. doi: 10.1111/j.1469-7610.2004.00318.x.

- McPartland J.C., Wu J., Bailey C.A., Mayes L.C., Schultz R.T., Klin A. Atypical neural specialization for social percepts in autism spectrum disorder. Social Neuroscience. 2011;6:436–451. doi: 10.1080/17470919.2011.586880.

- Nelson C.A., III, McCleery J.P. Use of event-related potentials in the study of typical and atypical development. Journal of the American Academy of Child & Adolescent Psychiatry. 2008;47:1252–1261. doi: 10.1097/CHI.0b013e318185a6d8.

- Nowicki S. Manual for the Receptive Tests of the Diagnostic Analysis of Nonverbal Accuracy 2. Emory University; Atlanta, GA: 2004.

- Nowicki S., Carton J. The measurement of emotional intensity from facial expressions. Journal of Social Psychology. 1993;133:749–750. doi: 10.1080/00224545.1993.9713934.

- O’Connor K., Hamm J.P., Kirk I.J. The neurophysiological correlates of face processing in adults and children with Asperger's syndrome. Brain and Cognition. 2005;59:82–95. doi: 10.1016/j.bandc.2005.05.004.

- O’Connor K., Hamm J.P., Kirk I.J. Neurophysiological responses to face, facial regions and objects in adults with Asperger's syndrome: an ERP investigation. International Journal of Psychophysiology. 2007;63:283–293. doi: 10.1016/j.ijpsycho.2006.12.001.

- Paulmann S., Jessen S., Kotz S.A. Investigating the multimodal nature of human communication: insights from ERPs. Journal of Psychophysiology. 2009;23:63–76.

- Paulmann S., Kotz S.A. Early emotional prosody perception based on different speaker voices. Neuroreport. 2008;19:209–213. doi: 10.1097/WNR.0b013e3282f454db.

- Paulmann S., Kotz S.A. An ERP investigation on the temporal dynamics of emotional prosody and emotional semantics in pseudo- and lexical-sentence context. Brain and Language. 2008;105:59–69. doi: 10.1016/j.bandl.2007.11.005.

- Paulmann S., Seifert S., Kotz S.A. Orbito-frontal lesions cause impairment during late but not early emotional prosodic processing. Social Neuroscience. 2010;5:59–75. doi: 10.1080/17470910903135668.

- Pelphrey K.A., Morris J.P., McCarthy G., LaBar K.S. Perception of dynamic changes in facial affect and identity in autism. Social Cognitive and Affective Neuroscience. 2007;2:140–149. doi: 10.1093/scan/nsm010.

- Philip R.C.M., Whalley H.C., Stanfield A.C., Sprengelmeyer R., Santos I.M., Young A.W., Atkinson A.P., Calder A.J., Johnstone E.C., Lawrie S.M., Hall J. Deficits in facial, body movement and vocal emotional processing in autism spectrum disorders. Psychological Medicine: A Journal of Research in Psychiatry and the Allied Sciences. 2010;40:1919–1929. doi: 10.1017/S0033291709992364.

- Pinheiro A.P., Galdo-Álvarez S., Rauber A., Sampaio A., Niznikiewicz M., Goncalves O.F. Abnormal processing of emotional prosody in Williams syndrome: an event-related potentials study. Research in Developmental Disabilities. 2010;32:133–147. doi: 10.1016/j.ridd.2010.09.011.

- Riby D.M., Hancock P.J.B. Do faces capture the attention of individuals with Williams syndrome or autism? Evidence from tracking eye movements. Journal of Autism and Developmental Disorders. 2009;39:421–431. doi: 10.1007/s10803-008-0641-z.

- Rump K.M., Giovannelli J.L., Minshew N.J., Strauss M.S. The development of emotion recognition in individuals with autism. Child Development. 2009;80:1434–1447. doi: 10.1111/j.1467-8624.2009.01343.x.

- Rutherford M.D., Towns A.M. Scan path differences and similarities during emotion perception in those with and without autism spectrum disorders. Journal of Autism and Developmental Disorders. 2008;38:1371–1381. doi: 10.1007/s10803-007-0525-7.

- Ryan J.J., Glass L.A., Brown C.N. Administration time estimates for Wechsler Intelligence Scale for Children-IV subtests, composites, and short forms. Journal of Clinical Psychology. 2007;63:309–318. doi: 10.1002/jclp.20343.

- Shibata T., Nishijo H., Tamura R., Miyamoto K., Eifuku S., Endo S., Ono T. Generators of visual evoked potentials for faces and eyes in the human brain as determined by dipole localization. Brain Topography. 2002;15:51–63. doi: 10.1023/a:1019944607316.

- Solomon M., Goodlin-Jones B.L., Anders T.F. A social adjustment enhancement intervention for high functioning autism, Asperger's syndrome, and pervasive developmental disorder NOS. Journal of Autism and Developmental Disorders. 2004;34:649–668. doi: 10.1007/s10803-004-5286-y.

- Streit M., Brinkmeyer J., Wölwer W. EEG brain mapping in schizophrenic patients and healthy subjects during facial emotion recognition. Schizophrenia Research. 2003;61:121–122. doi: 10.1016/s0920-9964(02)00301-8.

- Streit M., Ioannides A.A., Liu L., Wölwer W., Dammers J., Gross J., Gaebel W., Muller-Gartner H.W. Neurophysiological correlates of the recognition of facial expressions of emotion as revealed by magnetoencephalography. Cognitive Brain Research. 1999;7:481–491. doi: 10.1016/s0926-6410(98)00048-2.

- Streit M., Wölwer W., Brinkmeyer J., Ihl R., Gaebel W. EEG-correlates of facial affect recognition and categorisation of blurred faces in schizophrenic patients and healthy volunteers. Schizophrenia Research. 2001;49:145–155. doi: 10.1016/s0920-9964(00)00041-4.

- Webb S.J., Dawson G., Bernier R., Panagiotides H. ERP evidence of atypical face processing in young children with autism. Journal of Autism and Developmental Disorders. 2006;36:881–890. doi: 10.1007/s10803-006-0126-x.

- Webb S.J., Jones E.J.H., Merkle K., Murias M., Greenson J., Richards T., Aylward E., Dawson G. Response to familiar faces, newly familiar faces, and novel faces as assessed by ERPs is intact in adults with autism spectrum disorders. International Journal of Psychophysiology. 2010;77:106–117. doi: 10.1016/j.ijpsycho.2010.04.011.

- Webb S.J., Jones E.J.H., Merkle K., Venema K., Greenson J., Murias M., Dawson G. Developmental change in the ERP responses to familiar faces in toddlers with autism spectrum disorders versus typical development. Child Development. 2011;82:1868–1886. doi: 10.1111/j.1467-8624.2011.01656.x.

- Wechsler D. Manual for the Wechsler Intelligence Scale for Children-Fourth Edition (WISC-IV). The Psychological Corporation/Harcourt Brace; New York: 2003.

- Whitehouse A.J.O., Bishop D.V.M. Do children with autism ‘switch off’ to speech sounds? An investigation using event-related potentials. Developmental Science. 2008;11:516–524. doi: 10.1111/j.1467-7687.2008.00697.x.

- Wicker B., Fonlupt P., Hubert B., Tardif C., Gepner B., Deruelle C. Abnormal cerebral effective connectivity during explicit emotional processing in adults with autism spectrum disorder. Social Cognitive and Affective Neuroscience. 2008;3:135–143. doi: 10.1093/scan/nsn007.

- Wong T.K.W., Fung P.C.W., Chua S.E., McAlonan G.M. Abnormal spatiotemporal processing of emotional facial expressions in childhood autism: dipole source analysis of event-related potentials. European Journal of Neuroscience. 2008;28:407–416. doi: 10.1111/j.1460-9568.2008.06328.x.

- Wynn J.K., Lee J., Horan W.P., Green M.F. Using event related potentials to explore stages of facial affect recognition deficits in schizophrenia. Schizophrenia Bulletin. 2008;34:679–687. doi: 10.1093/schbul/sbn047.