Highlights

- Distinct N100 and N400 components could be seen at the central, parietal and occipital regions.
- There was a consistent pattern of effects of emotional prosody perception on the N400 across scalp regions.
- The N400 was attenuated to angry compared to happy and neutral voices.

Keywords: Vocal, ERP, N400, Children

Abstract

Introduction

Vocal anger is a salient social signal serving adaptive functions in typical child development. Despite recent advances in the developmental neuroscience of emotion processing with regard to visual stimuli, little is known about the neural correlates of vocal anger processing in childhood. This study represents the first attempt to isolate a neural marker of vocal anger processing in children using electrophysiological methods.

Methods

We compared ERP waveforms during the processing of non-word emotional vocal stimuli in a population sample of 55 typically developing children aged 6–11 years. Children listened to three types of stimuli expressing angry, happy, and neutral prosody and completed an emotion identification task with three response options (angry, happy and neutral/‘ok’).

Results

A distinctive N400 component, modulated by the emotional content of the vocal stimulus, was observed in children over parietal and occipital scalp regions: amplitudes were significantly attenuated to angry compared to happy and neutral voices.

Discussion

Findings of the present study regarding the N400 are compatible with adult studies showing reduced N400 amplitudes to negative compared to neutral emotional stimuli. Implications for studies of the neural basis of vocal anger processing in children are discussed.

1. Introduction

Human voices are auditory stimuli processed in specialised brain regions in healthy adults (Belin et al., 2000), as well as infants (Grossmann et al., 2010) and 4–5-year-old children (Rogier et al., 2010). Further research suggests that the human brain begins to become sensitive to emotional information expressed in vocal tone at an early stage in development (Grossmann et al., 2010, Grossmann et al., 2005). The ability to read others’ non-verbal social signals is argued to allow humans to navigate their social environment successfully and modify their behaviour according to others’ feelings and intentions (Frith and Frith, 2007). Furthermore, sensitivity to others’ vocal emotional expressions has been associated with social competence in childhood and adolescence (Goodfellow and Nowicki, 2009, Rothman and Nowicki, 2004, Trentacosta and Fine, 2010).

Emotional prosody (or speech melody) refers to changes in the intonation of the voice according to the speaker's emotional state (Banse and Scherer, 1996, Hargrove, 1997). According to recent research, words with an angry prosody, for example, elicited more negative ERP responses (latency 450 ms) over fronto-central sites compared to words with happy or neutral prosody in 7-month-old infants (Grossmann et al., 2005). Further studies showed increased brain activation patterns in the posterior temporal cortex to angry compared to neutral and happy prosody in 7-month-old infants (Grossmann et al., 2010). Similarly, functional Magnetic Resonance Imaging (fMRI) studies in adults have shown that angry relative to neutral prosody elicited enhanced responses in middle superior temporal sulcus (Grandjean et al., 2005, Sander et al., 2005). In contrast, happy voices (and not angry or neutral voices) have been found to evoke an increased response in right inferior frontal cortex in 7-month-old infants (Grossmann et al., 2010) and adults (Johnstone et al., 2006).

Several studies have shown that children can reliably recognise anger on the basis of vocal tone (Baum and Nowicki, 1998, Nowicki and Mitchell, 1998, Tonks et al., 2007); however, little is known about how this process changes with development. The N100 is a negative component occurring at about 100 ms post-stimulus and is thought to reflect sound detection and early attentional orienting (Bruneau et al., 1997, Näätänen and Picton, 1987). The N100 is sensitive to differences in the intensity or frequency of acoustic stimuli (Ceponiene et al., 2002, Näätänen and Picton, 1987). In 4–8-year-olds, for example, the N100 peaks around 170 ms at temporal sites and is thought to reflect auditory behavioural orienting (Bruneau et al., 1997). A recent study showed no effect of vocal emotion (angry, happy) on the amplitude and latency of an early (i.e., 100–200 ms) negative component during a passive listening task in 13 healthy 9–11-year-old boys (Korpilahti et al., 2007). However, previous research has typically employed small samples and speech stimuli (e.g., ‘give it to me’; see Korpilahti et al., 2007), making it difficult to disentangle sensitivity to emotional prosody from sensitivity to language content. In the current study we isolated the N100, which has been suggested to reflect early auditory attention orienting (Bruneau et al., 1997).

In addition, we explored the N400, a centro-parietal negative component occurring at about 400 ms after stimulus onset. The N400 is typically larger for words presented in incongruous than congruous sentence contexts (e.g., coffee with socks/sugar) (Kutas and Hillyard, 1980). Schirmer et al. (2005) presented adult listeners with prime sentences (e.g., ‘she had her exam’) spoken with happy or sad prosody, followed by visual target words. Half the target words matched the prosody of the preceding sentence (e.g., ‘success’ following happy prosody) and half did not (e.g., ‘failure’ following happy prosody). Participants indicated whether the target word matched the prosody of the preceding sentence. Results showed that target words (e.g., ‘success’) elicited a larger N400 when preceded by a prime with incongruous (i.e., sad) as compared to congruous (i.e., happy) emotional prosody (Schirmer et al., 2005). This demonstrates sensitivity of the N400 component to an emotional prosodic context. In a similar study, Bostanov and Kotchoubey (2004) presented word-exclamation pairs using spoken emotion words as primes (e.g., ‘joy’) and corresponding emotional exclamations as targets (e.g., grief) in consistent (e.g., positive–positive) and inconsistent (e.g., positive–negative) combinations. Results showed that N400 amplitude was significantly larger for the inconsistent condition. Thus, the N400 is thought to reflect cognitive processes related to context violation during emotion recognition and may prove a useful electrophysiological marker of emotional prosody comprehension.

In summary, previous research has focused on the N400 to vocal emotion in adults and on incongruity effects following a prime; the time course (ERPs) of vocal anger processing in middle childhood remains unexplored.

1.1. The current study

The goal of the current study was to isolate the neural signature of anger prosody processing in children. To this end, we focused on the N100, a putative marker of early auditory processing, and the N400, a late emotion-specific processing component. First, following previous research (Bostanov and Kotchoubey, 2004, Schirmer et al., 2005, Toivonen and Rämä, 2009), we predicted that the N400 component would be modulated by emotional prosody (i.e., anger vs. neutral stimuli). Second, following previous findings (e.g., Korpilahti et al., 2007), we predicted that the N100 would be insensitive to the emotional content of the sounds and so be insensitive to anger-related stimuli in children. We included the N100 to rule out the possibility that the predicted N400 effects were driven by pervasive problems in auditory orienting.

2. Methods

2.1. Participants

Of the 105 children initially approached via primary schools, 80 were recruited to the study. Children and their mothers gave informed written consent for participation. Of those, pilot data from 5 children (mean age = 7.30 years, SD = .73, age range 6.08–8 years, 2 boys) were excluded from analyses due to incomplete data and ERP artifacts. One boy (5.42 years) with a hearing threshold in the atypical range (46 dB; see below) in the right ear was excluded. In the end, complete ERP and behavioural data were available from 55 children (mean age = 8.90 years, SD = 1.60 years, age range 6.08–11.83, 39 boys). The study was approved by the University of Southampton, School of Psychology Ethics Committee.

2.2. Vocal expression stimuli

Three types of vocal stimuli expressed emotional prosody (interjection ‘ah’). One stimulus expressed anger and one happiness, both at a high intensity; a third was a neutral expression (Maurage et al., 2007; see Footnotes). All stimuli derived from an adult female model and were standardised for acoustic parameters including mean intensity (76 dB, corresponding to a sound pressure of 0.13 Pa), duration (700 ms), recording frequency (16,000 Hz), and rise and fall ramp times (20 ms). Acoustic analyses were conducted using Praat sound-analysis software (Boersma and Weenink, 2009). Acoustic properties of the vocal stimuli are presented in Table 1. Vocal stimuli were presented binaurally via headphones. These stimuli are well validated in adults (Maurage et al., 2007). The battery has high internal consistency for each emotion set and high levels of specificity (independence between the ratings in the different emotion sets) (Maurage et al., 2007).
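The correspondence between the 76 dB mean intensity and the 0.13 Pa pressure quoted above can be checked with the standard decibel formula. The sketch below assumes the level is expressed in dB SPL relative to the conventional 20 µPa reference; the function names are illustrative and are not taken from the study.

```python
import math

# Conventional reference pressure for dB SPL in air (20 micropascals).
# Assumption: the 76 dB quoted in the text is a dB SPL value.
P_REF_PA = 20e-6


def db_spl_to_pascal(level_db):
    """Convert a sound pressure level in dB SPL to RMS pressure in pascals."""
    return P_REF_PA * 10 ** (level_db / 20)


def pascal_to_db_spl(pressure_pa):
    """Convert an RMS pressure in pascals back to dB SPL."""
    return 20 * math.log10(pressure_pa / P_REF_PA)


pressure = db_spl_to_pascal(76.0)
print(f"{pressure:.2f} Pa")  # 0.13 Pa
```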

Table 1.

Duration, fundamental frequency (f0, in Hz) and intensity (in dB) of the vocal stimuli.

| Emotion | Mean f0 | Min f0 | Max f0 | Mean dB | Min dB | Max dB |
|---|---|---|---|---|---|---|
| Angry | 294.85 | 79.07 | 355.74 | 76.85 | 63.43 | 81.92 |
| Happy | 350.31 | 221.59 | 525.85 | 76.50 | 68.36 | 83.42 |
| Neutral | 191.30 | 181.21 | 194.54 | 76.34 | 70.30 | 78.31 |

We conducted a behavioural validation study of the stimuli in a separate community sample of 65 6–11-year-old children (mean age = 8.31 years, SD = 1.55, age range 6.00–10.75 years, 31 boys). These children gave informed written consent to participate out of a total of 97 children initially approached via local primary schools. After listening to one vocal stimulus at a time (Angry, Happy and Neutral, 12 trials per emotion type), children were asked to identify the emotion in the voice and press one of three keyboard buttons labelled ‘angry’, ‘happy’ or ‘okay’ to indicate their response. The mean percentage of trials classified correctly was as follows: Angry: M = 82.43, SD = 28.34, Happy: M = 71.28, SD = 33.17, Neutral: M = 40.14, SD = 21.99. Accuracy was above chance for all emotion types, with chance defined as 33.3% given the three response options (data available from the authors). There were no significant associations between age and recognition accuracy for happy (r = .19, p > .05) and neutral (r = −.09, p > .05) voices and a marginally significant association between age and accuracy for angry voices (r = .26, p < .05).

2.3. Experimental paradigm and procedure

At the beginning of the experimental session, pure tone audiometric testing was conducted with a standard clinical audiometer to establish whether participants’ hearing threshold was within the average range defined as 25 dB (see British Society of Audiology Recommended Procedures, 2004). Children were instructed to indicate with a button press when a test tone was present and when it ceased to be present. This procedure was repeated for each ear (Right/Left) and for three frequency levels (1000, 1500, 500 Hz) separately. Each participant's hearing threshold was defined as the lowest level of sound they could hear. An average of the thresholds from the three frequencies was derived in each ear. An average of the thresholds from both ears was further created.
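The averaging steps described above can be sketched as follows. This is a minimal illustration, not the authors' screening procedure: the data layout and the function name are hypothetical, and treating 25 dB as an upper bound applied to each ear separately is an assumption.

```python
from statistics import mean

# Value quoted in the text; using it as a per-ear upper bound is an assumption.
CUTOFF_DB = 25.0


def summarise_hearing(thresholds_db):
    """thresholds_db: {"left": {500: dB, 1000: dB, 1500: dB}, "right": {...}}.

    Returns the mean threshold per ear, the mean across ears, and whether
    both ears fall at or below the cut-off.
    """
    per_ear = {ear: mean(list(levels.values())) for ear, levels in thresholds_db.items()}
    overall = mean(list(per_ear.values()))
    within_range = all(level <= CUTOFF_DB for level in per_ear.values())
    return per_ear, overall, within_range


# Hypothetical child, not a participant from the study.
child = {
    "left": {500: 15.0, 1000: 10.0, 1500: 20.0},
    "right": {500: 20.0, 1000: 15.0, 1500: 10.0},
}
per_ear, overall, within_range = summarise_hearing(child)
print(per_ear, overall, within_range)
```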

Subsequently, children participated in an emotion identification task with three response options (angry, happy and neutral/‘ok’). After listening to one vocal stimulus at a time, children were instructed to identify the emotion in the voice and press one of the three keyboard buttons with the labels ‘angry’, ‘happy’ or ‘okay’ to indicate their response. There were 180 experimental trials (60 trials per emotion type) presented in two blocks of 90 trials each. There was a 5-min rest break in between the two blocks. Children participated in 12 practice trials (four presentations of each emotion) at the beginning of the task. Button press responses were logged on the computer via Presentation software. Each trial began with the presentation of a central fixation cross (500 ms) followed by the presentation of the stimulus, followed by a blank screen until the participants gave a response and a 1000 ms inter-trial interval (ITI). Emotion stimulus presentation was randomised across participants. The overall testing time, including audiometric assessment, cap fitting and experimental testing, was one and a half hours.

2.4. Electrophysiological recording and processing

EEG data were recorded from an electrode cap (Easycap, Herrsching, Germany) containing 66 equidistant silver/silver chloride (Ag/AgCl) electrodes using a Neuroscan Synamps2 70-channel EEG system. Cap electrodes were referenced to the nose. The EEG data were sampled at 250 Hz with a band-pass filter at 0.1–70 Hz using an AC procedure and recorded from 19 sites (see Fig. 1). Analyses focused on 15 sites at central (sites 1, 2, 4, 6, 10, 16), parietal (sites 12, 13, 14, 24, 26) and occipital (sites 37, 38, 39, 40) areas. Selection of these sites was literature informed (Schirmer and Kotz, 2006) and aimed at maximising the number of artifact-free epochs. A ground electrode was fitted midway between the electrode at the vertex and frontal site 32. Vertical electro-oculogram (vEOG) was recorded from four electrodes: two bipolar electrodes were placed directly beneath the left and right eyes and affixed with tape, while the two electrodes placed above the right and left eyes were included within the electrode cap. Impedances for vEOG, reference and cap electrodes were kept below 5 kΩ. The ERP epoch was defined as 100 ms pre-stimulus to 1000 ms post-stimulus. Each epoch had a baseline of 100 ms of pre-stimulus activity and was filtered with a low-pass filter down 48 dB at 32 Hz. An ocular artifact reduction procedure (Semlitsch et al., 1986) based on vEOG activity was used to remove the influence of blinks and other eye movements; epochs were rejected if amplitudes exceeded ±150 μV in any EOG or scalp site included in analyses or if participants responded incorrectly. Average ERPs were calculated for each emotion type (Angry, Happy, Neutral). A minimum of 20 artifact-free epochs out of a total of 60 epochs for each emotion type were used for calculating ERP averages.
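The baseline correction, epoch rejection and averaging rules described above can be sketched in plain Python. This is an illustrative outline under stated assumptions, not the Neuroscan pipeline used in the study: filtering and ocular artifact reduction are omitted, the amplitude check is applied after baseline correction (the text does not state the order), and the data layout and function names are hypothetical.

```python
SAMPLING_RATE_HZ = 250   # from the text
BASELINE_MS = 100        # pre-stimulus interval, from the text
REJECT_UV = 150.0        # rejection threshold in microvolts, from the text
MIN_EPOCHS = 20          # minimum usable epochs per emotion, from the text


def baseline_correct(samples):
    """Subtract the mean of the pre-stimulus samples from one channel's epoch."""
    n_base = BASELINE_MS * SAMPLING_RATE_HZ // 1000  # 25 samples at 250 Hz
    baseline = sum(samples[:n_base]) / n_base
    return [value - baseline for value in samples]


def is_clean(epoch):
    """True if no sample on any channel exceeds +/-150 microvolts."""
    return all(abs(v) <= REJECT_UV for samples in epoch.values() for v in samples)


def average_erp(epochs, answered_correctly):
    """Average the usable epochs of one emotion condition.

    epochs: list of {site_name: [samples]} dicts, one per trial.
    answered_correctly: list of bools, one per trial.
    Returns {site_name: [averaged samples]}, or None if too few epochs survive.
    """
    kept = []
    for epoch, correct in zip(epochs, answered_correctly):
        corrected = {site: baseline_correct(s) for site, s in epoch.items()}
        # Keep only correctly answered trials without large deflections.
        if correct and is_clean(corrected):
            kept.append(corrected)
    if len(kept) < MIN_EPOCHS:
        return None
    n_samples = len(next(iter(kept[0].values())))
    return {
        site: [sum(e[site][i] for e in kept) / len(kept) for i in range(n_samples)]
        for site in kept[0]
    }
```

At 250 Hz, the epoch from 100 ms pre-stimulus to 1000 ms post-stimulus spans 275 samples, the first 25 of which form the baseline.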

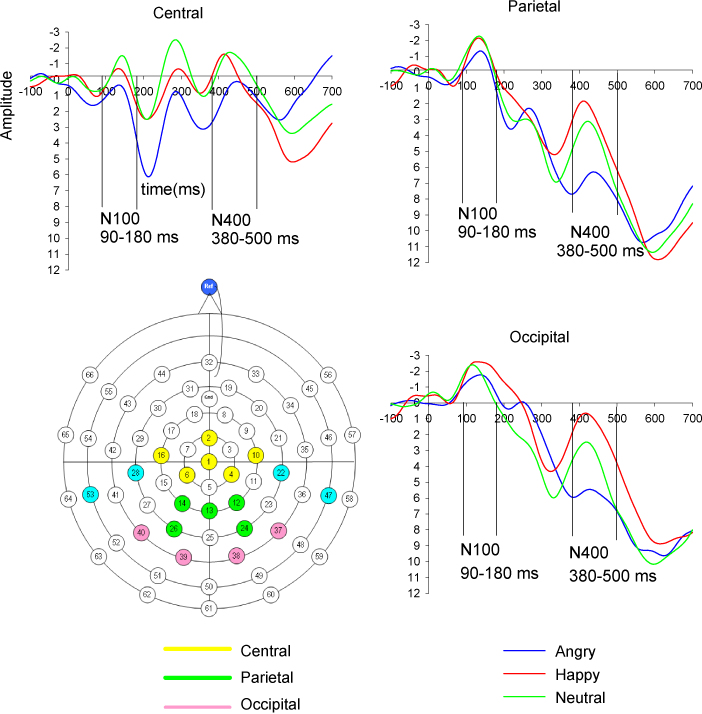

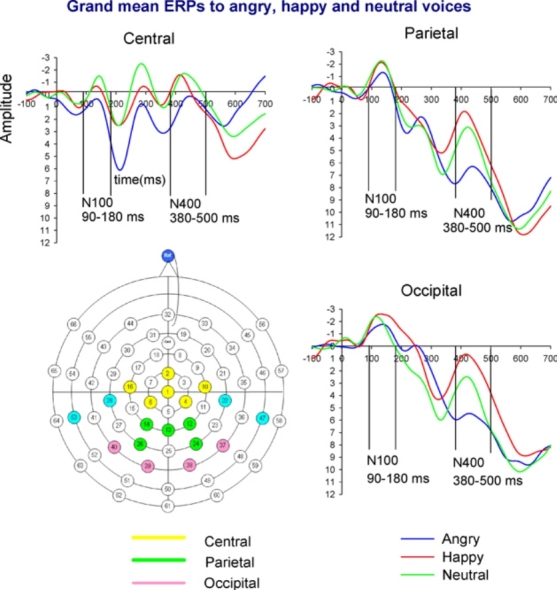

Fig. 1.

Grand mean ERPs to angry, happy and neutral voices per scalp region. Amplitude (μV) and time (ms) are marked at all regions with a pre-stimulus baseline of −100 ms. Scale is −3 to +12 μV. On the left, below, the montage with the sites used in EEG recording (in blue the sites used in EEG recording but not in analyses).

The mean and SD of the number of epochs included for each emotion condition in the overall sample were as follows: Angry: M = 45.74, SD = 9.32, Happy: M = 45.40, SD = 9.50, Neutral: M = 45.99, SD = 8.91. Given the young age of the children, we examined potential effects of age on artifact and trial rejection by dividing our sample into younger (6.08–8.75 years) and older (8.83–11.83 years) children using a median split. There was a significant effect of age on the number of correct and artifact free trials included in analyses for happy (F (1, 54) = 6.84, p < .05) and neutral (F (1, 54) = 6.98, p < .05) voices but not for angry (F (1, 54) = 3.41, p > .05), with fewer trials for younger children (Angry: M = 43.35, SD = 9.40, Happy: M = 42.04, SD = 9.60, Neutral: M = 42.80, SD = 8.90) compared to older children (Angry: M = 47.90, SD = 8.87, Happy: M = 48.41, SD = 8.47, Neutral: M = 48.82, SD = 8.00). Age effects in our study are similar to age effects reported in other developmental ERP studies with children of similar ages (Vlamings et al., 2010).

A baseline-to-peak mean amplitude method was followed for the N100 (90–180 ms) and N400 (380–500 ms). These time windows were selected because they best captured each ERP component identified by visual inspection. Mean amplitude was initially calculated for each individual site; subsequently, the mean amplitude for each ERP component was calculated as a combined score for defined groups of electrode sites (‘scalp regions’; see Fig. 1) to increase the reliability of measurement (Dien and Santuzzi, 2005). Selection of electrode groups was based on the strong statistical similarity of the grand average ERPs for each electrode. Correlations between ERP waveforms within each region were stronger (Pearson's r from .63 to .95, p < .001) than correlations between amplitudes of electrode sites belonging to different regions (Pearson's r from .25 to .64, p < .01).
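The two-step averaging described above (a mean over the time window at each site, then a mean over the sites of a region) can be sketched as follows. The windows and site groupings come from the text; the 4 ms sample step follows from the 250 Hz sampling rate, while the data layout, the inclusive window bounds and the function names are assumptions made for illustration.

```python
SAMPLE_STEP_MS = 4       # 250 Hz sampling rate, from the text
EPOCH_START_MS = -100    # epoch begins 100 ms before stimulus onset

WINDOWS_MS = {"N100": (90, 180), "N400": (380, 500)}
REGIONS = {
    "central": [1, 2, 4, 6, 10, 16],
    "parietal": [12, 13, 14, 24, 26],
    "occipital": [37, 38, 39, 40],
}


def window_mean(samples, window):
    """Mean amplitude of the samples whose time stamp lies inside the window."""
    lo, hi = window
    values = [
        v for i, v in enumerate(samples)
        if lo <= EPOCH_START_MS + i * SAMPLE_STEP_MS <= hi
    ]
    return sum(values) / len(values)


def region_amplitudes(erp_by_site, component):
    """Average the per-site window means within each scalp region.

    erp_by_site: {site_number: [baseline-corrected averaged samples]}.
    """
    result = {}
    for region, sites in REGIONS.items():
        site_means = [window_mean(erp_by_site[s], WINDOWS_MS[component]) for s in sites]
        result[region] = sum(site_means) / len(site_means)
    return result
```

Averaging per site first and per region second gives every site equal weight within its region, regardless of how many samples fall in the window.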

3. Data analysis

3.1. Performance data

Raw data were transformed into measures of accuracy according to the two-high-threshold model. Discrimination accuracy (Pr) was computed for each target emotion using ‘hits’ (i.e., number of happy, angry or neutral expressions classified correctly) (Corwin, 1994). Values of discrimination accuracy (Pr) were entered in repeated measures non-parametric Friedman's ANOVA with emotion (Angry, Happy and Neutral) as within-subject factor and paired Wilcoxon follow up tests.
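For illustration, the discrimination index of the two-high-threshold model is conventionally computed as the hit rate minus the false-alarm rate. The sketch below assumes that, for a given target emotion, a false alarm is a trial on which a different emotion was presented but the target label was chosen; the text mentions only hits, so this definition and the function name are assumptions rather than the authors' exact scoring.

```python
def discrimination_accuracy(trials, target):
    """Pr = hit rate - false-alarm rate for one target emotion.

    trials: list of (presented_emotion, chosen_emotion) pairs.
    """
    chosen_on_target = [chosen for shown, chosen in trials if shown == target]
    chosen_on_other = [chosen for shown, chosen in trials if shown != target]
    hit_rate = chosen_on_target.count(target) / len(chosen_on_target)
    false_alarm_rate = chosen_on_other.count(target) / len(chosen_on_other)
    return hit_rate - false_alarm_rate


# Hypothetical responses, not data from the study.
trials = (
    [("angry", "angry")] * 9 + [("angry", "happy")]
    + [("happy", "happy")] * 9 + [("happy", "angry")]
    + [("neutral", "neutral")] * 9 + [("neutral", "angry")]
)
print(discrimination_accuracy(trials, "angry"))
```

Unlike raw percent correct, this index penalises a child who tends to answer ‘angry’ regardless of the stimulus.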

3.2. ERP data

Preliminary correlational analyses examined associations between child age and N100 and N400 mean amplitudes at the three scalp regions. Also, independent-samples t-tests examined differences in N100 and N400 mean amplitude values between males and females, given gender effects on emotional prosody perception reported in the literature (Schirmer et al., 2002). The main analyses examined the main effect of emotion type on amplitude of N100 and N400 component in each scalp region. N100 and N400 ERP data were entered into a repeated measures Analysis of Variance (ANOVA) with emotion type as the within-subject factor. Because the focus of the present study was on the ERP correlates of vocal anger processing, simple planned contrasts compared the angry voice condition with the neutral and happy voice condition.
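The one-way repeated measures ANOVA described above can be written out from its sums of squares. The sketch below is a generic textbook computation with hypothetical data, not the statistical software used in the study; it returns the F ratio and its degrees of freedom but no p value and no sphericity correction.

```python
def rm_anova_one_way(scores):
    """One-way repeated measures ANOVA.

    scores: list of rows, one per participant, each holding one value per
    condition (for example [angry, happy, neutral] mean amplitudes).
    Returns (F, df_condition, df_error).
    """
    n = len(scores)       # participants
    k = len(scores[0])    # conditions
    grand = sum(sum(row) for row in scores) / (n * k)
    cond_means = [sum(row[j] for row in scores) / n for j in range(k)]
    subj_means = [sum(row) / k for row in scores]

    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    # Error term: variability left after removing condition and participant effects.
    ss_error = ss_total - ss_cond - ss_subj

    df_cond = k - 1
    df_error = (k - 1) * (n - 1)
    f_value = (ss_cond / df_cond) / (ss_error / df_error)
    return f_value, df_cond, df_error


# Hypothetical amplitudes for three participants, not data from the study.
toy = [[1.0, 2.0, 3.0], [2.0, 4.0, 3.0], [3.0, 3.0, 6.0]]
f_value, df_cond, df_error = rm_anova_one_way(toy)
print(f_value, df_cond, df_error)
```

With 55 children and three emotion types, the same formulas give degrees of freedom of 2 and 108 for the omnibus test.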

4. Results

4.1. Performance

In order to optimise the chances of emotional modulation of ERP components, the vocal stimuli were designed to be easy to classify. As predicted, therefore, mean accuracy for all three emotions was high (M = 91.60%, SD = 8.68%, see Table 2). There was a significant effect of emotion type on accuracy (χ² (2) = 12.91, p < .001). Accuracy was significantly higher for angry compared to neutral voices (T = 364.50, p < .001, r = −.51). Non-parametric Spearman's correlations showed that children's mean hearing threshold was not significantly associated with accuracy for angry or happy voices (p > .05) but was significantly associated with accuracy for neutral voices (rs = −.28, p = .036). Child age was not significantly associated with accuracy for angry, happy or neutral voices (ps > .05).

Table 2.

Mean percentage (SD) of trials classified correctly (in bold) and misattributions.

| Vocal expression | Child response: Angry | Child response: Happy | Child response: Neutral |
|---|---|---|---|
| Angry | **92.30 (9.87)** | 2.24 (3.72) | 3.21 (3.65) |
| Happy | 2.72 (4.16) | **91.33 (9.98)** | 3.42 (6.72) |
| Neutral | 3.12 (5.68) | 5.81 (7.92) | **91.12 (10.37)** |

4.2. ERP data

Fig. 1 plots the grand mean averages of the waveforms to each vocal emotion stimulus for the three regions of interest. Fig. 2 plots the grand mean averages to each vocal emotion per individual site (see Appendix B). Distinct N100 and N400 components could be seen at the central, parietal and occipital regions. On the whole, neither age nor gender was associated with ERP amplitude at any region for any condition (Pearson's r in the range from .02 to −.22, p > .05). The exception was the occipital N400 amplitude to neutral voices, which was positively associated with child age (r = .28, p = .034). Thus, analyses were repeated for this region and component with child age included in the statistical model as a covariate.

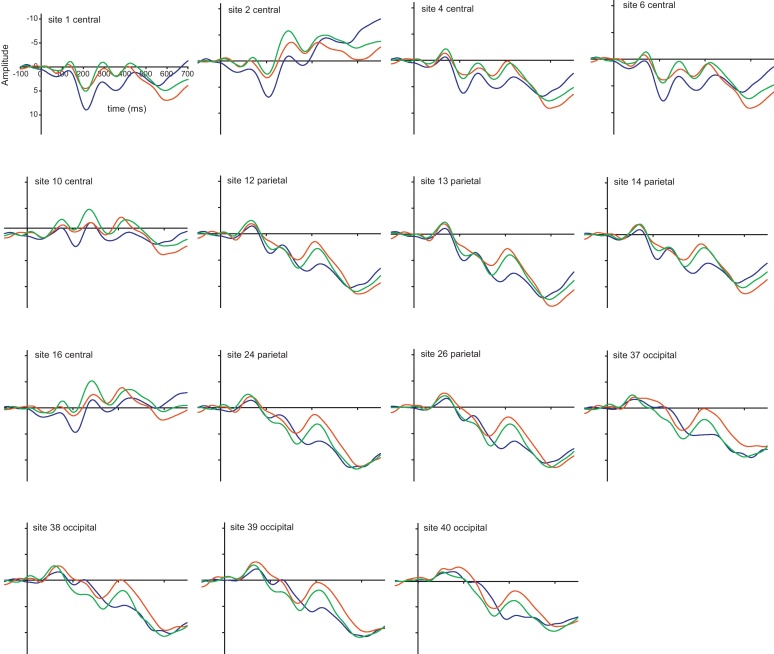

Fig. 2.

ERP grand averages to angry, happy and neutral voices at the 15 individual sites used in the EEG analyses. Scale is −11 to +14 μV.

The main analyses showed little evidence of modulation of the N100 by the emotional tone of the stimuli. Results are reported with a Bonferroni correction for multiple comparisons with an accepted alpha of .05/12 = .004. There was no effect of emotion type on N100 amplitudes for the parietal and the occipital regions (F (2, 54) < 4.04, p > .05). There was an effect of emotion on central N100 amplitude (F (2, 108) = 5.41, p < .01), with angry voices having smaller amplitudes than happy (F (1, 54) = 9.60, p < .01, Angry: M = 1.60, SE = .44, Happy: M = −.27, SE = .62) and neutral (F (1, 54) = 9.97, p < .01, Neutral: M = −.26, SE = .55) voices. This effect did not survive correction for multiple testing. No other difference reached significance (p > .05).
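The corrected threshold quoted above follows from dividing the nominal alpha by the number of comparisons. A minimal sketch, with a hypothetical helper name:

```python
ALPHA = 0.05
N_TESTS = 12  # number of comparisons stated in the text

threshold = ALPHA / N_TESTS


def survives_bonferroni(p_value):
    """True if a p value stays significant at the corrected threshold."""
    return p_value < threshold


print(round(threshold, 4))  # 0.0042
```

Under this rule, an effect reported only as p < .01 is not guaranteed to pass, because its p value may lie between roughly .004 and .01.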

In contrast, there was a consistent pattern of effects of emotional prosody on the N400 across scalp regions, which was most obvious in the occipital (F (2, 108) = 10.62, p < .001) and parietal (F (2, 108) = 11.83, p < .001) regions (see Fig. 1), although still significant in the central region (F (2, 108) = 6.70, p < .01). In particular, the N400 was attenuated to angry compared to happy and neutral voices in all regions (p < .001), although the angry-neutral contrast was marginally significant (p = .040) in the occipital region. After including child age in the statistical model as a covariate, the difference in occipital N400 amplitude between angry and happy voices was rendered non-significant, while the difference between angry and neutral voices became marginally significant (p = .055) and did not survive Bonferroni correction. All other emotion effects on the N400 reported above survived Bonferroni correction for multiple comparisons. We also examined the effect of age on ERPs (N100 and N400) by dividing our sample of 6–11-year-olds into younger (6.08–8.75 years) and older (8.83–11.83 years) children using a median split. When the analyses were run separately for older and younger children, the effects of emotion on the N400 remained significant in both age groups (p < .005) and results regarding the N100 did not change.

5. Discussion

The aim of the present study was to isolate the neural correlates of vocal anger processing in children. Based on previous research in adults (Bostanov and Kotchoubey, 2004, Schirmer et al., 2005, Toivonen and Rämä, 2009), we identified the N400 as implicated in vocal emotion processing. In line with previous research, the results showed that the N400 component was easily distinguished on the basis of grand mean averages, especially in the occipital and parietal regions (Schirmer and Kotz, 2006). Furthermore, this component was modulated by the emotional tone of the prosody; it was attenuated for angry compared to happy and neutral voices across regions. In contrast to the N400, the N100 did not show a strong pattern of emotion effects. The N100 is thought to reflect early attentional orienting to auditory stimuli (Bruneau et al., 1997, Näätänen and Picton, 1987), rather than being an ‘emotion-specific’ component. Findings of the present study suggest that later components (N400) rather than earlier sensory processing mechanisms (N100) underlie emotional voice processing in 6–11-year-olds. Findings of this study regarding the N400 are compatible with adult research showing reduced N400 amplitudes to negative compared to neutral emotional stimuli (Kanske and Kotz, 2007). The finding of reduced N400 amplitudes to negative stimuli has been replicated in a number of recent studies in healthy adults (De Pascalis et al., 2009, Gootjes et al., 2011, Stewart et al., 2010). Reduced N400 amplitude to negative stimuli has been suggested to reflect facilitated processing of negative compared to neutral words in healthy adults (Kanske and Kotz, 2007, Stewart et al., 2010).

The current study had a number of strengths compared with previous studies. First, it used a larger sample (N = 55), in contrast to previous studies with small samples and narrow age ranges. Second, in contrast to previous investigations with word stimuli, this study adopted non-word stimuli, which allowed us to examine the neural correlates of prosody processing independently of language processing. Third, this study used an explicit emotion identification task, while previous studies with children employed simple passive listening (Korpilahti et al., 2007) or complex multimodal tasks (Shackman et al., 2007). Fourth, it included neutral voice as a control condition for comparison with emotional stimuli. Finally, we targeted both early (N100) and late (N400) components of voice processing. The anger modulation of the N400 in the present age group is therefore a novel finding in a vocal emotion recognition task. These findings extend the literature on the N400 as an electrophysiological marker of emotional prosody comprehension by demonstrating emotion modulation of the N400 in children. Previous research on the N400 has mainly focused on adults (Bostanov and Kotchoubey, 2004, Kotchoubey et al., 2009, Schirmer et al., 2005) and infants (Grossmann et al., 2005). This is the first study to isolate the N400 as a neural marker of vocal anger processing in 6–11-year-old children. In contrast, the N100 was not sensitive to the emotional content of anger-related vocal stimuli in children, consistent with Korpilahti et al. (2007).

The study also had some limitations. First, this study adopted a baseline-to-peak amplitude method, recommended when the component under analysis does not have a definite peak, as was the case in this study (Fabiani et al., 2007). A disadvantage of this method, however, is that it may be sensitive to noise or nonlinear fluctuations in the baseline time window, relative to other (e.g., peak-to-trough) measures (Picton et al., 2000). In addition, one should acknowledge the limitations of the ERP signal in cases in which the component of interest appears to be superimposed on a slow drift or low-frequency (i.e., delta) oscillation (see parietal N400, Fig. 1). Recent research suggests that emotion processing may be related to delta frequency activity. For example, unpleasant pictures provoked greater delta responses than pleasant pictures in healthy adults (Klados et al., 2009). Second, the study did not include ‘control’ sound categories beyond human voices to establish the selectivity of neural responses to vocal signals. Such a control condition would clarify whether the effects observed reflected vocal anger processing or acoustical differences between sound categories. Third, the findings cannot be generalised to all anger stimuli, for example higher intensity anger stimuli. Such conclusions can only be drawn if the study design includes anger stimuli of varying intensity levels.

Future research should replicate the present findings in a larger sample of typically developing children. In addition, an important avenue for future research would be to explore associations between neural markers of vocal anger processing and childhood behaviour problems. Children who display externalising symptoms (e.g., conduct problems) are less accurate at recognising negative vocal expressions (Stevens et al., 2001) and display a selective perceptual bias to vocal anger in particular (Manassis et al., 2007). These children are especially susceptible to anger and frustration (Silk et al., 2003, Morris et al., 2002, Neumann et al., 2011) and tend to display hostile behaviours following threat interpretations of ambiguous stimuli (Barrett et al., 1996). Neurobiological models view impairments in brain systems that function to regulate anger as a key mechanism for impulsive aggressive behaviour (Davidson et al., 2000). Despite recent evidence on atypical brain reactions to affective speech prosody in children with Asperger Syndrome (Korpilahti et al., 2007), research on the time course (ERPs) of vocal anger processing in children with externalising problems is limited and would be a promising avenue for future research.

Conflict of interest

The authors declare the following conflicts of interest. Professor Edmund Sonuga-Barke: Recent speaker board: Shire, UCB Pharma; Current & recent consultancy: UCB Pharma, Shire; Current & recent research support: Janssen Cilag, Shire, Qbtech, Flynn Pharma; Advisory Board: Shire, Flynn Pharma, UCB Pharma, Astra Zeneca; Conference support: Shire. Dr. Margaret Thompson: Recent speaker board: Janssen Cilag; Current & recent consultancy: Shire; Current & recent research support: Janssen Cilag, Shire; Advisory Board: Shire.

Acknowledgements

The authors wish to thank the parents and children who took part in this research and Dr Pierre Maurage for the helpful advice on the vocal stimuli. The research was supported by Psychology, University of Southampton.

Footnotes

Further information about the vocal stimuli is provided in supplementary material (see Appendix B).

Supplementary data associated with this article can be found, in the online version, at doi:10.1016/j.dcn.2011.11.007.

Contributor Information

Georgia Chronaki, Email: gc4@soton.ac.uk.

Edmund J.S. Sonuga-Barke, Email: ejb3@soton.ac.uk.

Appendix A. Supplementary data

References

- Banse R., Scherer K.R. Acoustic profiles in vocal emotion expression. Journal of Personality and Social Psychology. 1996;70(3):614–636. doi: 10.1037//0022-3514.70.3.614. [DOI] [PubMed] [Google Scholar]

- Barrett P.M., Rapee R.M., Dadds M.R., Sharon M. Family enhancement of cognitive style in anxious and aggressive children. Journal of Abnormal Child Psychology. 1996;24(2):187–203. doi: 10.1007/BF01441484. [DOI] [PubMed] [Google Scholar]

- Baum K., Nowicki S. Perception of emotion: measuring decoding accuracy of adult prosodic cues varying in intensity. Journal of Nonverbal Behavior. 1998;22(2):89–107. [Google Scholar]

- Belin P., Zatorre R.J., Lafaille P., Ahad P., Pike B. Voice-selective areas in human auditory cortex. Nature. 2000;403(6767):309–312. doi: 10.1038/35002078. [DOI] [PubMed] [Google Scholar]

- Boersma P., Weenink D. 2009. Praat: Doing Phonetics by Computer (Version 5.1.04) [Computer Program]http://www.praat.org/ Retrieved December 2009. [Google Scholar]

- Bostanov V., Kotchoubey B. Recognition of affective prosody: continuous wavelet measures of event-related brain potentials to emotional exclamations. Psychophysiology. 2004;41(2):259–268. doi: 10.1111/j.1469-8986.2003.00142.x. [DOI] [PubMed] [Google Scholar]

- British Society of Audiology Recommended Procedure (2004). Pure tone air and bone conduction threshold audiometry with and without masking and determination of uncomfortable loudness levels. BSA.

- Bruneau N., Roux S., Guerin P., Barthelemy C., Lelord G. Temporal prominence of auditory evoked potentials (N1 wave) in 4–8-year-old children. Psychophysiology. 1997;34(1):32–38. doi: 10.1111/j.1469-8986.1997.tb02413.x. [DOI] [PubMed] [Google Scholar]

- Ceponiene R., Rinne T., Näätänen R. Maturation of cortical sound processing as indexed by event-related potentials. Clinical Neurophysiology. 2002;113(6):870–882. doi: 10.1016/s1388-2457(02)00078-0. [DOI] [PubMed] [Google Scholar]

- Corwin J. On measuring discrimination and response bias: unequal numbers of targets and distractors and two classes of distractors. Neuropsychology. 1994;8(1):110–117. [Google Scholar]

- Davidson R.J., Putnam K.M., Larson C.L. Dysfunction in the neural circuitry of emotion regulation—a possible prelude to violence. Science. 2000;289(5479):591–594. doi: 10.1126/science.289.5479.591. [DOI] [PubMed] [Google Scholar]

- De Pascalis V., Arwari B., D’Antuono L., Cacace I. Impulsivity and semantic/emotional processing: an examination of the N400 wave. Clinical Neurophysiology. 2009;120(1):85–92. doi: 10.1016/j.clinph.2008.10.008.

- Dien J., Santuzzi A.M. Application of repeated measures ANOVA to high-density ERP datasets: a review and tutorial. In: Handy T.C., editor. Event-related Potentials. A Methods Handbook. MIT Press; Cambridge, MA: 2005.

- Fabiani M., Gratton G., Federmeier K.D. Event-related-potentials: methods, theory and applications. In: Cacioppo J.T., Tassinary L.G., Berntson G.G., editors. Handbook of Psychophysiology. 3rd ed. Cambridge University Press; 2007. pp. 85–119.

- Frith C.D., Frith U. Social cognition in humans. Current Biology. 2007;17:724–732. doi: 10.1016/j.cub.2007.05.068.

- Goodfellow S., Nowicki S. Social adjustment, academic adjustment, and the ability to identify emotion in facial expressions of 7-year-old children. Journal of Genetic Psychology. 2009;170(3):234–243. doi: 10.1080/00221320903218281.

- Gootjes L.L.C., Coppens L.C., Zwaan R.A., Franken I.H.A., Van Strien J.W. Effects of recent word exposure on emotion–word Stroop interference: an ERP study. International Journal of Psychophysiology. 2011;79(3):356–363. doi: 10.1016/j.ijpsycho.2010.12.003.

- Grandjean D., Sander D., Pourtois G., Schwartz S., Seghier M.L., Scherer K.R., Vuilleumier P. The voices of wrath: brain responses to angry prosody in meaningless speech. Nature Neuroscience. 2005;8(2):145–146. doi: 10.1038/nn1392.

- Grossmann T., Oberecker R., Koch S.P., Friederici A.D. The developmental origins of voice processing in the human brain. Neuron. 2010;65(6):852–858. doi: 10.1016/j.neuron.2010.03.001.

- Grossmann T., Striano T., Friederici A.D. Infants’ electric brain responses to emotional prosody. Neuroreport. 2005;16(16):1825–1828. doi: 10.1097/01.wnr.0000185964.34336.b1.

- Hargrove P.M. Prosodic aspects of language impairment in children. Topics in Language Disorders. 1997;17(4):76–83.

- Johnstone T., van Reekum C.M., Oakes T.R., Davidson R.J. The voice of emotion: an fMRI study of neural responses to angry and happy vocal expressions. Social Cognitive and Affective Neuroscience. 2006;1(3):242–249. doi: 10.1093/scan/nsl027.

- Kanske P., Kotz S.A. Concreteness in emotional words: ERP evidence from a hemifield study. Brain Research. 2007;1148:138–148. doi: 10.1016/j.brainres.2007.02.044.

- Klados M.A., Frantzidis C., Vivas A.B., Papadelis C., Lithari C., Pappas C., Bamidis P.D. A framework combining delta event-related oscillations (EROs) and synchronisation effects (ERD/ERS) to study emotional processing. Computational Intelligence and Neuroscience. 2009:1–16. doi: 10.1155/2009/549419.

- Korpilahti P., Jansson-Verkasalo E., Mattila M.-L., Kuusikko S., Suominen K., Rytky S., Pauls D., Moilanen I. Processing of affective speech prosody is impaired in Asperger syndrome. Journal of Autism and Developmental Disorders. 2007;37(8):1539–1549. doi: 10.1007/s10803-006-0271-2.

- Kotchoubey B., Kaiser J., Bostanov V., Lutzenberger W., Birbaumer N. Recognition of affective prosody in brain-damaged patients and healthy controls: a neurophysiological study using EEG and whole-head MEG. Cognitive, Affective, & Behavioral Neuroscience. 2009;9(2):153–167. doi: 10.3758/CABN.9.2.153.

- Kutas M., Hillyard S.A. Event-related brain potentials to semantically inappropriate and surprisingly large words. Biological Psychology. 1980;11:99–116. doi: 10.1016/0301-0511(80)90046-0.

- Manassis K., Tannock R., Young A., Francis-John S. Cognition in anxious children with attention deficit hyperactivity disorder: a comparison with clinical and normal children. Behavioral and Brain Functions. 2007;3. doi: 10.1186/1744-9081-3-4.

- Maurage P., Joassin F., Philippot P., Campanella S. A validated battery of vocal emotional expressions. Neuropsychological Trends. 2007;2:63–74.

- Morris A.S., Silk J.S., Steinberg L., Sessa F.M., Avenevoli S., Essex M.J. Temperamental vulnerability and negative parenting as interacting predictors of child adjustment. Journal of Marriage and Family. 2002;64:461–471.

- Näätänen R., Picton T. The N1 wave of the human electric and magnetic response to sound: a review and an analysis of the component structure. Psychophysiology. 1987;24(4):375–425. doi: 10.1111/j.1469-8986.1987.tb00311.x.

- Neumann A., van Lier P.A.C., Frijns T., Meeus W., Koot H.M. Emotional dynamics in the development of early adolescent psychopathology: a one-year longitudinal study. Journal of Abnormal Child Psychology. 2011;39(5):657–669. doi: 10.1007/s10802-011-9509-3.

- Nowicki S., Mitchell J. Accuracy in identifying affect in child and adult faces and voices and social competence in preschool children. Genetic Social and General Psychology Monographs. 1998;124(1):39–59.

- Picton T.W., Bentin S., Berg P., Donchin E., Hillyard S.A., Johnson R., Miller G.A., Ritter W., Ruchkin D.S., Rugg M.D., Taylor M.J. Guidelines for using human event-related potentials to study cognition: Recording standards and publication criteria. Psychophysiology. 2000;37(2):127–152.

- Rogier O., Roux S., Belin P., Bonnet-Brilhault F., Bruneau N. An electrophysiological correlate of voice processing in 4- to 5-year-old children. International Journal of Psychophysiology. 2010;75(1):44–47. doi: 10.1016/j.ijpsycho.2009.10.013.

- Rothman A.D., Nowicki S. A measure of the ability to identify emotion in children's tone of voice. Journal of Nonverbal Behavior. 2004;28(2):67–92.

- Sander D., Grandjean D., Pourtois G., Schwartz S., Seghier M.L., Scherer K.R., Vuilleumier P. Emotion and attention interactions in social cognition: brain regions involved in processing anger prosody. Neuroimage. 2005;28(4):848–858. doi: 10.1016/j.neuroimage.2005.06.023.

- Schirmer A., Kotz S.A. Beyond the right hemisphere: brain mechanisms mediating vocal emotional processing. Trends in Cognitive Sciences. 2006;10(1):24–30. doi: 10.1016/j.tics.2005.11.009.

- Schirmer A., Kotz S.A., Friederici A.D. Sex differentiates the role of emotional prosody during word processing. Cognitive Brain Research. 2002;14(2):228–233. doi: 10.1016/s0926-6410(02)00108-8.

- Schirmer A., Kotz S.A., Friederici A.D. On the role of attention for the processing of emotions in speech: sex differences revisited. Cognitive Brain Research. 2005;24(3):442–452. doi: 10.1016/j.cogbrainres.2005.02.022.

- Semlitsch H.V., Anderer P., Schuster P., Presslich O. A solution for reliable and valid reduction of ocular artifacts, applied to the P300 ERP. Psychophysiology. 1986;23(6):695–703. doi: 10.1111/j.1469-8986.1986.tb00696.x.

- Shackman J.E., Shackman A.J., Pollak S.D. Physical abuse amplifies attention to threat and increases anxiety in children. Emotion. 2007;7(4):838–852. doi: 10.1037/1528-3542.7.4.838.

- Silk J.S., Steinberg L., Morris A.S. Adolescents’ emotion regulation in daily life: links to depressive symptoms and problem behavior. Child Development. 2003;74(6):1869–1880. doi: 10.1046/j.1467-8624.2003.00643.x.

- Stevens D., Charman T., Blair R.J.R. Recognition of emotion in facial expressions and vocal tones in children with psychopathic tendencies. Journal of Genetic Psychology. 2001;162(2):201–211. doi: 10.1080/00221320109597961.

- Stewart J.L., Silton R.L., Sass S.M., Fisher J.E., Edgar J.C., Heller W., Miller G.A. Attentional bias to negative emotion as a function of approach and withdrawal anger styles: an ERP investigation. International Journal of Psychophysiology. 2010;76(1):9–18. doi: 10.1016/j.ijpsycho.2010.01.008.

- Toivonen M., Rämä P. N400 during recognition of voice identity and vocal affect. Neuroreport. 2009;20(14):1245–1249. doi: 10.1097/WNR.0b013e32832ff26f.

- Tonks J., Williams W.H., Frampton I., Yates P., Slater A. Assessing emotion recognition in 9–15-years olds: preliminary analysis of abilities in reading emotion from faces, voices and eyes. Brain Injury. 2007;21(6):623–629. doi: 10.1080/02699050701426865.

- Trentacosta C.J., Fine S.E. Emotion knowledge, social competence, and behavior problems in childhood and adolescence: a meta-analytic review. Social Development. 2010;19(1):1–29. doi: 10.1111/j.1467-9507.2009.00543.x.

- Vlamings P.H.J.M., Jonkman L.M., Kemner C. An eye for detail: an event-related potential study of the rapid processing of fearful facial expressions in children. Child Development. 2010;81(4):1304–1319. doi: 10.1111/j.1467-8624.2010.01470.x.
