Highlights

► We compared object and action identification in adults and children using ERPs.

► Adults exhibited similar N300 and N400 responses for objects and actions.

► Children exhibited an N400 for objects and actions, but an N300 only to objects.

► Neuronal markers of object identification are similar to adults by ages 8–9.

► Markers of rapid, flexible action identification develop into middle childhood.

Keywords: Event related potentials, Action identification, Object identification, N300, N400, Development

Abstract

Semantic mastery includes quickly identifying object and action referents in the environment. Given the relational nature of action verbs compared to object nouns, how do these processes differ in children and adults? To address this question, the Event Related Potentials (ERPs) of 8–9 year olds and adults were recorded as they performed a picture-matching task in which a noun (chair) or verb (sit) was followed by a picture of an object and action (a man sitting in a chair). Adults and children displayed similar central N400 congruency effects in response to objects and actions. Developmental differences were revealed in the N300. Adults displayed N300 differences between congruent and incongruent items for both objects and actions. Children, however, exhibited an N300 congruency effect only for objects, indicating that although object noun representations may be adult-like, action verb representations continue to solidify through middle childhood. Surprisingly, adults also exhibited a posterior congruency effect that was not found in children. This is similar to the late positive component (LPC) reported by other studies of semantic picture processing, but the lack of such a response in children raises important questions about the development of semantic integration.

1. Introduction

In acquiring and comprehending language, children must quickly and easily identify objects and actions in their environment. Typically, objects are labeled by nouns and actions by verbs. In picture naming tasks, children struggle disproportionally with action verb identification compared to object noun identification through at least age 7 (Sheng and McGregor, 2010). We argue that this is because children's verb representations are not as abstract or flexible as those of adults (Clark, 2003, Gentner, 2003, Maguire and Dove, 2008). Drawing on differences between the broad classifications of nouns and verbs, we use ERPs to investigate the development of these processes.

One core conceptual difference between object nouns and action verbs is that object nouns are concrete, referring to perceptually coherent entities, while verbs are relational, referring to relationships between objects or relationships between objects and their environment (Bird et al., 2003, Gentner, 1982, Maguire et al., 2006). For example, a simple action verb like falling is defined by the relationship between the figure and ground, regardless of the specific figure or context. Thus, potential referents can be as perceptually diverse as a leaf falling from a tree, a polar bear falling on ice, or the perception of oneself falling. Identifying each of these as falling requires abstracting the relationship between the agent and the ground. Object nouns such as tree do not vary as much across instances as verbs (Maguire et al., 2006, Gentner, 2003) and thus require less abstraction in identification.

In language development, this relational property of verbs is cited as one reason why verb acquisition trails behind noun acquisition (Gentner and Boroditsky, 2001, Gillette et al., 1999). Specifically, children's verb representations may be more specific and concrete than those of adults (Clark, 2003, Gentner, 2003, Smiley and Huttenlocher, 1995) resulting in a less flexible representation that may hinder rapid identification (Maguire and Dove, 2008, Mandler, 2001). For example, if a child's primary representation of falling is a person falling on the ground, this representation would be concrete and specific. Extending that representation to identify a leaf falling out of a tree as “falling” would require additional processing. Adults, in contrast, have more abstract, potentially schematic, representations of action verbs that can be quickly matched to a range of distinct actions (Jackendoff and Landau, 1991, Mandler, 1998, Talmy, 2000). Thus, differences in how abstractly children and adults represent action verbs could result in differences in how quickly action identification occurs.

By studying the N300 and N400 ERP responses, we can uncover processing differences between object and action identification that might otherwise go unnoticed. Although related, the N300 and N400 index different aspects of semantic integration. The N300 is more frontally distributed and occurs only in response to picture stimuli. For example, a picture of a dog following the word cat would elicit both an early, frontal N300 response and a later, central N400 response when compared to the same picture following the word dog (Barrett and Rugg, 1990, Mazerolle et al., 2007, Sitnikova et al., 2008). In response to a semantically unrelated word only the later N400 response would occur. Thus, the N300 is thought to index rapid matching of visual input to stored semantic knowledge (Schendan and Kutas, 2007, Sitnikova et al., 2008, Yum et al., 2011).

The N300 is of particular interest because the size of the effect indexes the ease with which a picture is matched to a word (Mazerolle et al., 2007, Tanaka et al., 1999). This was reported in studies of basic level (dog), superordinate level (animal) or subordinate level (beagle) word primes during picture identification. When the word and the picture are congruent, at any level of classification, the N300 response is attenuated relative to an incongruent pairing. The amplitude of the N300 to incongruent word–picture pairs, however, differs across levels of classification in ways that provide insights about semantic integration. Specifically, when a basic level word (dog) precedes an incongruent picture (car), the picture elicits a larger N300 amplitude than when a superordinate level word (animal) precedes an incongruent picture. This is because the congruency difference is larger when the preceding semantic context is highly constrained and thus quickly matched, or not matched, to a picture. Thus, because the word dog provides a relatively detailed mental image, it is easier to quickly identify whether a given picture matches the stored semantic representation. Similarly, if object nouns evoke perceptually coherent and consistent semantic representations as compared to action verbs, then the N300 response to the object noun–incongruent picture pair should be more robust than the response to the action verb–incongruent picture pair.

The N400 is a more general measure of semantic processing found in children and adults in response to words or pictures (see Kutas and Federmeier, 2011 for review). In adults, the N400 amplitude varies inversely with a word's relationship to the semantic context, such that the less related the items, the larger the amplitude (e.g., Kutas, 1993). Federmeier and Kutas (2001) found that pictures revealed similar ease of integration N400 differences.

The goal of this paper is to better understand the nature of children's verb representations by identifying developmental changes in the amplitude of N300 and N400 in response to pictures that depict a clear action with known objects presented after an object noun or an action verb. Specifically, the ability to quickly match a semantic representation to the environment should elicit a large N300 Congruency response (i.e., the difference between the amplitude of the congruent and incongruent items). We predict that adults have robust, flexible representations for object nouns and action verbs that will elicit such N300 responses. Children, in contrast, have robust object noun representations like adults, but are predicted to have less flexible verb representations, making it difficult to quickly map an action verb to the environment. This will result in a significant N300 Congruency response for objects, but unlike adults, they will not exhibit that effect for actions. If our predictions are incorrect and children's verb representations are as flexible and abstract as those of adults, the N300 response should be robust for actions in both populations. Adults and children are predicted to exhibit the N400 response for both objects and actions, though it may be larger in response to objects. Importantly, although the N400 has been studied extensively in children in response to words (see Kuhl and Rivera-Gaxiola, 2008 for review), to our knowledge, this is the first developmental study of the N300 response to pictures as a measure of semantic integration.

In this study, we focus on 8–9 year olds because the neuronal areas recruited during semantic processing develop throughout childhood (Karunanayaka et al., 2010). In addition, Sheng and McGregor (2010) note that action naming difficulties in children, through at least age 7, are related to semantic immaturity, but the nature of that immaturity is unclear. Here we test the hypothesis that the difference between verb representations in school-aged children and adults is related to the flexibility of their action verb representations.

2. Methods

2.1. Participants

Twenty right-handed, monolingual English-speaking adults ages 18–29 (9 male, 11 female; M = 21.51 years, SD = 50.20 months) and 20 right-handed monolingual English-speaking children ages 8–9 years (11 male, 9 female; M = 9.06 years, SD = 7.60 months) completed the task. Participants had no history of significant neurological issues (traumatic brain injury, CVA, seizure disorders, history of high fevers, tumors, or learning disabilities). Exclusion criteria included left-handedness, use of alcohol or controlled substances within 24 h of testing, and medications other than over-the-counter analgesics and birth-control pills.

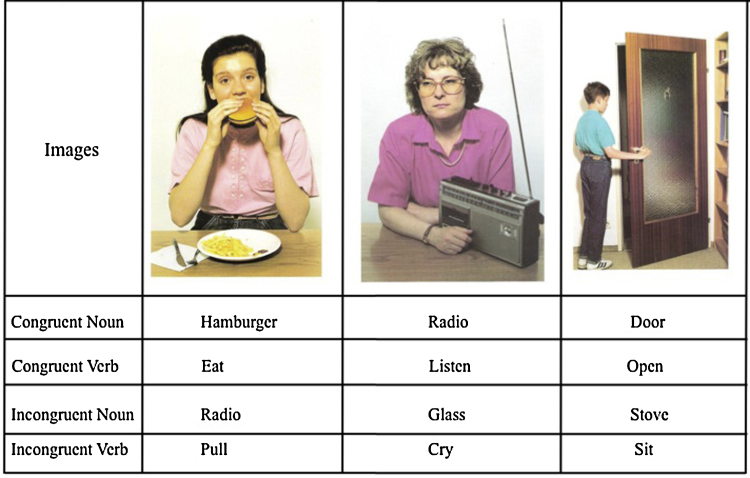

2.2. Stimuli

Pictures were selected from Set 1 of the “Everyday Life Activities Photo Series” (Stark, 1994; http://www.ela-photoseries.com/index.html), which are normed photos of typical daily actions (see Fig. 1 for examples). These images were created specifically for language training, teaching and testing of verb concepts using common activities such as getting dressed, shopping, and eating. We selected 33 images that clearly depict an object and an action with noun and verb labels. Videos were not used because video is generally not conducive to time-locked EEG studies. EEG requires millisecond accuracy in pinpointing the onset of a cognitive process. In video, most objects maintain their form, but actions unfold over time. Thus the onset time differences make it impossible to accurately identify temporal differences between object and action identification. Pictures are used successfully in verb–action identification tasks with children as young as 34 months (e.g. Golinkoff et al., 1996) and are commonly used as a measure of verb comprehension in developmental standardized tests, such as the PPVT-4 (Dunn and Dunn, 2007). Thus, there is substantial evidence that even very young children can accurately identify action verbs in pictures.

Fig. 1.

Example of the word-picture pairs. In the study, one word was presented from a centralized speaker then the picture was displayed on a monitor in front of the participant.

All words used in this study are in the average child's vocabulary by 30 months (Fenson et al., 1994). They include common objects and actions such as “eat”, “carry”, “sandwich” and “table”. To ensure that the stimuli elicited the target nouns and verbs at similar rates in adults and children, 27 college students and six 7–8 year olds described each picture using a word, phrase or sentence. Adults named the target verbs 64.7% of the time and target nouns 55.6% of the time. Children correctly named the target verbs 72.0% of the time and target nouns 61.1% of the time, with no statistical difference between the age groups or word types. Furthermore, the incongruent words were never used to describe their respective non-target pictures. Although the percentage of target responses may seem low, variability is often high in free response. Incorrect responses from adults and children were reasonable synonyms for the items. For example, for a picture of a child carrying a school bag the target words carry and bag were used 24% and 3% of the time, respectively. However, carry was often labeled as put on and bag was often labeled as backpack. Thus, in the recognition paradigm used for the ERP task, it is reasonable to believe the target objects and actions would be correctly identified with comparable consistency.

2.3. Procedure

Participants, wearing a 64-electrode Neuroscan cap, sat in a chair one meter in front of a computer monitor and one meter below a centralized speaker. EEG data were acquired with a Synamps II system (Compumedics, Inc.), sampled at 1 kHz, hardware filtered at 200 Hz, and high-pass filtered at 0.15 Hz. Electrode impedances were typically below 5 kΩ.

Participants were told that they would hear a word followed by a picture. They were instructed to press one button if the picture matched the word and another if it did not. The handedness of the button responses was counterbalanced across participants to remove motor related laterality differences. The sequence for each word–picture pair was: a fixation point (+) for 1 second, followed by a word played at 70 dB at the ear, followed by a colored photo centered on the screen until the participant responded.

There were 132 word–picture pairs created by pairing each of the 33 pictures with a word from each condition: congruent object, incongruent object, congruent action, incongruent action. Therefore, each picture acted as its own control assuring that differences between conditions were due to the processes associated with matching a particular word to a picture and not the picture itself. Incongruent items were created by randomly matching a word that is congruent to one picture with a different picture then changing any pairings that might be unclear (e.g. the verb “pull” was not used as an incongruent verb for a boy opening a door). Although individual words and pictures repeat, specific word–picture pairs do not. Some researchers argue that the repetition of specific semantic pairs may attenuate the N400 (Young and Rugg, 1992); however, recent systematic studies of this repetition effect report no such relationship (Renoult and Debruille, 2011, Renoult et al., 2012). Here, to offset the possibility of a confound, no word–picture pairs are repeated, picture repetition is equal across conditions, and each participant was randomly assigned to one of eight randomized stimulus presentation orders.
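The pairing scheme described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' actual procedure: the picture records, their keys (`id`, `noun`, `verb`), the placeholder words, and the helper names `derange` and `build_pairs` are all hypothetical, every picture is assumed to have a unique noun and verb, and the manual screening of unclear incongruent pairings is not modeled.

```python
import random


def derange(n, rng):
    """Return a shuffled index list in which no index stays in its own place."""
    while True:
        perm = list(range(n))
        rng.shuffle(perm)
        if all(i != p for i, p in enumerate(perm)):
            return perm


def build_pairs(pictures, rng):
    """Pair every picture with one word from each of the four conditions.

    pictures: list of dicts with hypothetical keys 'id', 'noun', 'verb'.
    Returns a list of (word, picture_id, condition) tuples.
    """
    n = len(pictures)
    noun_src = derange(n, rng)  # which picture lends its noun as the incongruent noun
    verb_src = derange(n, rng)  # which picture lends its verb as the incongruent verb
    pairs = []
    for i, pic in enumerate(pictures):
        pairs.append((pic["noun"], pic["id"], "congruent object"))
        pairs.append((pictures[noun_src[i]]["noun"], pic["id"], "incongruent object"))
        pairs.append((pic["verb"], pic["id"], "congruent action"))
        pairs.append((pictures[verb_src[i]]["verb"], pic["id"], "incongruent action"))
    return pairs


rng = random.Random(0)
# Placeholder stimuli: 33 pictures, each with one target noun and one target verb.
pictures = [{"id": k, "noun": f"noun{k}", "verb": f"verb{k}"} for k in range(33)]
pairs = build_pairs(pictures, rng)
print(len(pairs))  # 33 pictures x 4 conditions = 132
```

Because each picture appears once in every condition, any difference between conditions in this design comes from the word that preceded the picture rather than from the picture itself.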

2.4. ERP calculations

Using Neuroscan's Edit program each continuous EEG was high-pass filtered at 0.15 Hz. Areas of muscle activity or electrode drift were excluded. Eye-blink artifacts were removed using a spatial filter in the Edit software. Online data were referenced to an electrode located near the vertex and, using the EEGLab toolbox in Matlab, the data were re-referenced offline to the average over the entire head. A spline-based estimate of the average scalp potential (Ferree, 2006) was computed using spherical splines (Perrin et al., 1989) from a standardized set of electrode positions to interpolate bad electrodes, yielding 62 data channels in each subject.

Using the EEGLab toolbox in Matlab, the average referenced data were low-pass filtered at 30 Hz and epoched at −100 to 1000 ms in relation to the picture onset. For each trial and electrode, the mean amplitude of the pre-stimulus interval (−100 to 0 ms) was subtracted from each time point in the post-stimulus interval to correct for baseline differences. Single trials were averaged together to obtain a stable waveform ERP for each condition and each electrode for every subject.
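As a rough illustration of the baseline correction and trial averaging described above, the following Python sketch works on plain lists for a single electrode. It is not the EEGLab code used in the study: the function names and the toy amplitudes are hypothetical, and for simplicity the baseline mean is subtracted from every sample in the epoch, including the pre-stimulus samples.

```python
def baseline_correct(epoch, n_baseline):
    """Subtract the mean of the first n_baseline (pre-stimulus) samples."""
    baseline = sum(epoch[:n_baseline]) / n_baseline
    return [v - baseline for v in epoch]


def average_trials(epochs):
    """Point-by-point mean across equally long single-trial epochs."""
    n = len(epochs)
    return [sum(samples) / n for samples in zip(*epochs)]


# Toy data: 2 pre-stimulus samples followed by 3 post-stimulus samples per trial.
trials = [
    [1.0, 3.0, 4.0, 6.0, 2.0],  # pre-stimulus mean is 2.0
    [0.0, 2.0, 5.0, 3.0, 1.0],  # pre-stimulus mean is 1.0
]
corrected = [baseline_correct(t, n_baseline=2) for t in trials]
erp = average_trials(corrected)
print(erp)  # [-1.0, 1.0, 3.0, 3.0, 0.0]
```

With the 1 kHz sampling rate and the −100 to 0 ms baseline reported here, `n_baseline` would correspond to roughly the first 100 samples of each epoch, assuming no downsampling.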

3. Results

3.1. Behavioral responses

Reaction times were calculated from picture onset to the participant's response. The experiment did not proceed until a response occurred and participants were given no information about the speed at which they should respond. Overall, this resulted in long reaction times in children as well as instances in which they failed to respond to an item because they stopped performing the task to ask the experimenter a question or take a short break. To account for this problem, reaction times that were more than two standard deviations from a participant's mean time were removed from the analysis as outliers. Following their removal, a 2 (word class) × 2 (congruency) × 2 (age group) mixed factors ANOVA revealed a significant effect of age, F(1, 38) = 43.88, p < 0.00001, with adults responding faster than children (see Table 1). As a result, we investigated the pattern of responses in each age group. In adults, a 2 (word class) × 2 (congruency) repeated-measures ANOVA revealed a main effect of word class, F(1, 29) = 38.4, p < 0.001, in which verbs had longer reaction times than nouns, and a main effect of congruency, F(1,29) = 6.20, p = 0.019, in which incongruent items had longer reaction times than congruent items. For children, a 2 (word class) × 2 (congruency) repeated-measures ANOVA revealed no significant main effects or interactions (M = 2.034 s, SD = 720 ms).
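The outlier rule can be illustrated with a short Python sketch. It assumes that "two standard deviations from a participant's mean" means more than two sample standard deviations above or below that participant's own mean; the text does not say whether the sample or population formula was used, and the function name and reaction times below are made up for illustration.

```python
from statistics import mean, stdev


def trim_rts(rts, n_sd=2.0):
    """Keep reaction times within n_sd sample SDs of this participant's mean."""
    m = mean(rts)
    sd = stdev(rts)  # sample standard deviation (an assumption)
    return [rt for rt in rts if abs(rt - m) <= n_sd * sd]


# Hypothetical reaction times (s) for one participant, with one long pause.
rts = [0.70, 0.75, 0.80, 0.72, 0.78, 0.74, 0.76, 0.79, 0.73, 5.00]
kept = trim_rts(rts)
print(len(rts) - len(kept))  # 1 trial removed (the 5.00 s response)
```

A single pass is shown here; whether trimming was repeated after recomputing the mean is not stated in the text.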

Table 1.

Reaction times and percentage of correct responses with standard deviations for children and adults across conditions.

| | Noun congruent | Noun incongruent | Verb congruent | Verb incongruent |
|---|---|---|---|---|
| Reaction times (s) | | | | |
| Children | 1.95 (0.63) | 2.00 (0.87) | 2.10 (0.92) | 2.00 (0.72) |
| Adults | 0.76 (0.17) | 0.77 (0.15) | 0.78 (0.17) | 0.84 (0.17) |
| Percent correct | | | | |
| Children | 92.6% (2.3) | 97.8% (3.8) | 94.9% (4.9) | 95.2% (4.0) |
| Adults | 95.3% (3.8) | 98.2% (2.7) | 96.0% (5.1) | 97.6% (2.3) |

Regarding error rates, a 2 (word class) × 2 (congruency) × 2 (age group) mixed factors ANOVA revealed no effects of age, but a significant main effect of word class, F(1, 38) = 12.50, p = 0.001 and an interaction between word class and congruency, F(1, 38) = 5.653, p = 0.023. Focused analyses of simple effects uncovered that this interaction was driven by participants responding more accurately for incongruent nouns than congruent nouns, congruent verbs or incongruent verbs.

3.2. Event related potentials

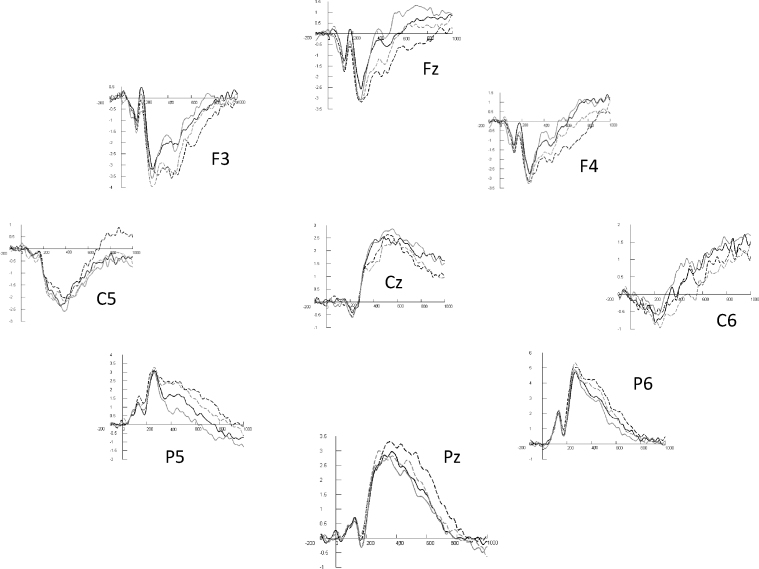

ERPs were calculated in response to the picture for correct responses only. For each age group we conducted a 3 (location: frontal, central, parietal) × 2 (congruency) × 2 (word class: object noun, action verb) ANOVA on midline sites (Frontal: Fz, Fpz; Central: Cz, CPz, C1, and C2; Parietal: Pz, P1, P2, POz) for the time periods of the N300 (125–300 ms) and the N400 (300–500 ms). Although this N300 time period is slightly earlier than used in some previous studies, visual inspection of the waveforms indicates that this earlier time period includes the beginning of the N300 effect (see Fig. 2 and Fig. 3). Important interactions were followed by more specific analyses. Originally we included age as a variable, but the main effect of age between the adults and children was so large that it overpowered the more subtle cognitive differences (all p's < 0.0001). This is not surprising given that ERPs are larger in children than adults. As a result, in addition to the within group analyses, we performed analyses using difference scores with age included as a factor for N300 and N400 responses.
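To make the time windows concrete, the sketch below converts a latency window into sample indices and averages the amplitude inside it. Several details are assumptions rather than statements from the text: that each window was summarized by its mean amplitude, that the window is half-open (start included, end excluded), and that the epoch starts at −100 ms with one sample per millisecond (1 kHz, no downsampling). The function name and the ramp waveform are hypothetical.

```python
def window_mean(erp, start_ms, end_ms, epoch_start_ms=-100, srate_hz=1000):
    """Mean amplitude of erp between start_ms (inclusive) and end_ms (exclusive)."""
    def to_index(ms):
        # Convert a latency relative to picture onset into a sample index.
        return round((ms - epoch_start_ms) * srate_hz / 1000)

    segment = erp[to_index(start_ms):to_index(end_ms)]
    return sum(segment) / len(segment)


# Toy waveform whose amplitude equals its own latency in ms (-100 ... 999),
# which makes the window arithmetic easy to check by hand.
erp = [float(t) for t in range(-100, 1000)]

n300 = window_mean(erp, 125, 300)  # samples at 125 ... 299 ms
n400 = window_mean(erp, 300, 500)  # samples at 300 ... 499 ms
print(n300, n400)  # 212.0 399.5
```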

Fig. 2.

Grand Average ERPs for children from −100 ms to 1000 ms. Positive is plotted up.

Fig. 3.

Grand Average ERPs for adults from −100 ms to 1000 ms. Positive is plotted up.

3.2.1. Adults

The 3-way ANOVA on the N300 revealed a significant interaction between location and congruency F(2,38) = 8.561, p < 0.0001 and a main effect of location F(2, 38) = 20.831, p < 0.0001. Following the interaction, focused analyses of simple effects uncovered main effects of congruency over the frontal areas in which incongruent responses were more negative than congruent, F(1, 19) = 9.75, p < 0.006, and over parietal areas where the opposite pattern was found, F(1,19) = 9.80, p < 0.006. No other main effects or interactions were found.

The 3-way ANOVA on the N400 time window revealed significant main effects of location, F(1, 38) = 33.624, p < 0.0001 and congruency, F(1,19) = 13.90, p < 0.002 as well as interactions between location and word class, F(2, 38) = 9.75, p < 0.0005, and location and congruency, F(2,38) = 16.457, p < 0.0001. For frontal areas, follow up analyses revealed main effects of word class, F(1,19) = 6.96, p < 0.001 and congruency, F(1,19) = 14.68, p < 0.0001. For both nouns and verbs, as expected, incongruent items were more negative than congruent. However, verbs were overall more negative than nouns. Central areas revealed only a significant main effect of congruency, F(1,19) = 17.16, p < 0.00005, with incongruent items more negative than congruent. Parietal areas show a main effect of word class, F(1, 19) = 10.68, p < 0.005 and a main effect of congruency F(1,19) = 14.588, p < 0.002. The effects were opposite of those found over the frontal areas. Incongruent items were more positive than congruent items and nouns were more positive than verbs.

3.2.2. Children

The 3-way ANOVA on the N300 revealed a significant 3-way interaction, F(2, 38) = 4.71, p < 0.02 and a main effect of location, F(2, 19) = 43.075, p < 0.00001. In focused analyses of simple effects, only frontal areas revealed a significant result, which was an interaction between word class and congruency, F(1,19) = 5.61, p < 0.03. Post hoc analyses revealed that the congruency effect was significant for objects, t(19) = 2.24, p < 0.02, but not for actions.

The 3-way ANOVA on the N400 revealed a significant effect of congruency, F(1,19) = 11.29, p < 0.004, and of location, F(2, 38) = 58.778, p < 0.00001 with no other interactions or main effects. Incongruent responses were more negative than congruent ones.

3.3. Comparing ERP responses of adults and children

To identify differences in the magnitude of the difference between congruent and incongruent effects for children and adults we calculated a measure of the congruency effect by subtracting the amplitude of the congruent from the incongruent waveform for each subject for each time point in the N300 and N400 responses. Analyses focused on those locations that were significant for both age groups, which, as expected, included frontal areas for the N300 and central areas for the N400.
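The difference score described above amounts to a point-by-point subtraction of the congruent waveform from the incongruent waveform. The Python sketch below uses made-up amplitudes for one subject and one electrode; the function name is hypothetical, and collapsing the difference wave to a single mean value per window is an assumption about how the scores entered the ANOVA.

```python
def congruency_effect(incongruent, congruent):
    """Point-by-point difference wave: incongruent minus congruent."""
    if len(incongruent) != len(congruent):
        raise ValueError("waveforms must cover the same time points")
    return [i - c for i, c in zip(incongruent, congruent)]


# Hypothetical amplitudes (microvolts) at three time points in one window.
congruent = [-1.0, -2.0, -1.5]
incongruent = [-2.0, -4.0, -3.5]

diff = congruency_effect(incongruent, congruent)
effect = sum(diff) / len(diff)
print(diff)  # [-1.0, -2.0, -2.0]
```

A negative score means the incongruent waveform was more negative than the congruent one, which is the direction of the frontal N300 and central N400 effects reported above.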

A 2 (age group) × 2 (word class) ANOVA on the N300 congruency effect revealed a significant interaction, F(1,76) = 4.4637, p < 0.04, and a main effect of word class, F(1,76) = 9.00, p = 0.0036. Focused analyses of simple effects revealed age group differences in the congruency effect, wherein adults displayed a larger congruency effect than children for verbs, t(38) = 2.1427, p < 0.02, but not nouns. The main effect of word class was driven by nouns providing a larger congruency effect overall than verbs, which is not surprising given the lack of a verb effect in children.

Because the N300 includes a component of speed of processing, we also performed a 2 (age group) × 2 (word class) × 2 (congruency) ANOVA on the N300 latency which revealed no significant findings.

The 2 (age group) × 2 (word class) ANOVA for the N400 congruency effect revealed no significant effects. In conjunction with the findings above, this indicates that both children and adults revealed similar N400 responses.

4. Discussion

By examining differences in the N300 and N400 components between adults and children in object and action identification, our findings yield several new insights regarding the development of action verb representations. First, although the N400 response to words is well documented in children, this is the first study to show that, like adults, children display earlier, frontal N300 responses when integrating pictures with semantic contexts. This indicates a well-developed ability to quickly match stored semantic knowledge to visual perceptual input for known, object nouns (Schendan and Kutas, 2007, Sitnikova et al., 2006, Sitnikova et al., 2008, Yum et al., 2011). Importantly, these responses were found only in relation to pictures following object nouns, but not pictures following action verbs, despite the fact that the picture stimuli were the same for both conditions. Adults, in contrast, displayed N300 responses to pictures following object nouns and action verbs indicating that semantic integration related to object noun identification in 8–9 year olds is similar to that of adults but that their semantic integration related to action verb identification is not yet fully developed.

The lack of an adult-like N300 congruity effect in the action verb condition appears to reflect an inability of children to quickly map action referents to the environment. These findings in children are similar to previous findings in adults, wherein congruency differences were larger for pictures following basic level words than pictures following superordinate words (Mazerolle et al., 2007). This smaller effect for superordinate items was attributed to difficulties quickly mapping one's semantic representation of a more abstract word with the environment compared with the more robust semantic representation provided by basic level words. We argue that children's lack of an N300 congruency response to actions is related to a similar difficulty: quickly mapping their action verb representation to even a prototypical instance of that action. As such, these findings shed new light on current debates about verb learning and development.

There are two dominant views about the nature of children's verb representations. One, based on studies of verb acquisition, is that children's earliest representations are concrete and not readily extended to new exemplars (Gentner and Boroditsky, 2001, Maguire et al., 2010, Smiley and Huttenlocher, 1995). The other, based on early production, is that even young children use action verbs quite flexibly (Naigles et al., 2009). We took a different approach to address this question by using ERPs to study fast, early semantic integration abilities. While children performed well behaviorally in both object and action identification and showed robust N400 responses for each, the N300 differences between adults and children suggest important differences in how they represent and identify action verbs. The lack of an N300 difference in action verb response is consistent with the hypothesis that children in this age range possess action verb representations that are concrete, thus lacking the flexibility needed for rapid word-to-world identification.

Another possible reason for the lack of an N300 response in children compared to adults is that still images could be more abstract representations of actions than they are of objects. While this could be the case, it did not influence adults; hence the conclusion that adult representations are more flexible still holds true. Whether identification of an action occurring over time would result in similar findings is an important question for future work.

Interestingly, children did not exhibit differences between object and action identification in the later N400 response or their reaction times. The lack of differences between nouns and verbs for these later effects highlights the importance of the N300 as a measure of rapid semantic integration of visual stimuli to stored semantic representations because only this early measure of processing revealed important developmental differences.

In addition to the developmental findings surrounding the N300, the fact that adults displayed an N300 congruency effect for actions is unique. To date, much of the writing about the N300 specifies it as an index of “object recognition” (Schendan and Kutas, 2007, Sitnikova et al., 2008, Yum et al., 2011, West and Holcomb, 2002). This provides important information about how adults process action verbs, which are more concrete and conceptually more coherent than other verb types, such as mental state or state of being verbs (Bird et al., 2001). By adulthood these early learned, high frequency action verbs evoke representations that are quickly matched to the environment, similar to basic-level object nouns.

Adults displayed two other differences not revealed in children. First, adults demonstrated a main effect for word class in which the N400 to pictures following action verbs was larger than to pictures following object nouns. This most likely relates to the level of abstraction differences reported by Mazerolle et al. (2007) in comparing picture-matching to superordinate compared to basic level words. They reported that responses were more negative overall to pictures following superordinate object nouns (‘animal’) than basic level words (‘dog’) between 350 and 450 ms. They concluded that matching a picture to a more abstract word requires additional processes compared to words that refer to less perceptually diverse categories. This same process may explain these differences as well. Because verbs reference relationships between objects, they require additional processes in identification compared to object nouns.

Secondly, for adults, incongruent pictures elicited a positive peak over posterior areas through about 800–1000 ms after stimulus onset. We believe this is similar to the late positive component (LPC) that has been reported in response to integrating pictures with preceding semantic contexts (Barrett and Rugg, 1990, Lüdtke et al., 2008, Sitnikova et al., 2003, Sitnikova et al., 2008). Given that this LPC is often attributed to a reevaluation of a response (Lüdtke et al., 2008), it is surprising that adults exhibit such robust LPC congruency differences in such a simple task, but children do not. Although more research is necessary to fully understand the developmental reasons for this, we suspect that the auditory presentation of words in isolation may make them more prone to reevaluation in the incongruent condition. Specifically, when a picture does not match a word, adults could be trying to access their working memory to identify if the picture might match a similar sounding word.

Adult reaction times also hinted at some type of reanalysis: specifically, incongruent actions resulted in the longest reaction times. This additional time likely relates to actions having many more potential labels than objects. For example, a man sitting on a chair probably best matches the word sit, or potentially rest or relax; however, a word like carry or hold could apply to the chair's relationship to the man. Thus, if a verb does not fit a scene initially, it is worth a reevaluation to be sure that the relationship is not depicted in some other, less obvious, way. Interestingly, such reaction time differences were not found in this group of children.

In conclusion, these data shed new light on the development of action verb and object noun representations and how they influence object and action identification. By using ERPs to study early processes in semantic integration, we have found new evidence suggesting that children's representations of action verbs remain more concrete and feature-based than adults’ representations through at least 8–9 years of age.

Conflict of interest statement

The authors of the paper have no financial or other relationship with people or organizations that may inappropriately influence this work within the last three years.

Funding

This work was funded in part by a University of Texas at Dallas Faculty Initiative grant awarded to the first author.

References

- Barrett S.E., Rugg M.D. Event-related potentials and the semantic matching of pictures. Brain and Cognition. 1990;14:201–212. doi: 10.1016/0278-2626(90)90029-n.

- Bird H., Franklin S., Howard D. Age of acquisition and imageability ratings for a large set of words, including verbs and function words. Behavior Research Methods, Instruments and Computers. 2001;33:73–79. doi: 10.3758/bf03195349.

- Bird H., Howard D., Franklin S. Verbs and nouns: the importance of being imageable. Journal of Neurolinguistics. 2003;16:113–149.

- Clark E.V. Language and representations. In: Gentner D., Goldin-Meadow S., editors. Language in Mind. The MIT Press; Cambridge, MA: 2003. pp. 17–24.

- Dunn L.M., Dunn D.M. Peabody Picture Vocabulary Test. 4th ed. NCS Pearson, Inc; Minneapolis: 2007.

- Federmeier K.D., Kutas M. Meaning and modality: influences of context, semantic memory organization and perceptual predictability on picture processing. Journal of Experimental Psychology: Learning, Memory and Cognition. 2001;27:202–224.

- Fenson L., Dale P.S., Reznick J.S., Bates E., Thal D., Pethick S.J. Variability in early communicative development. Monographs of the Society for Research in Child Development. 1994;59(5, Serial No. 242).

- Ferree T.C. Spherical splines and average referencing in scalp EEG. Brain Topography. 2006;19(1/2):43–52. doi: 10.1007/s10548-006-0011-0.

- Gentner D. Why nouns are learned before verbs: linguistic relativity versus natural partitioning. In: Kuczaj II S.A., editor. Language Development, vol. 2: Language, Thought, and Culture. Lawrence Erlbaum; Hillsdale, NJ: 1982. pp. 301–334.

- Gentner D., Boroditsky L. Individuation, relativity, and early word learning. In: Bowerman M., Levinson S.C., editors. Language Acquisition and Conceptual Development. Cambridge University Press; Cambridge: 2001. pp. 215–256.

- Gentner D. Why we’re so smart. In: Gentner D., Goldin-Meadow S., editors. Language in Mind. The MIT Press; Cambridge, MA: 2003. pp. 195–236.

- Gillette J., Gleitman H., Gleitman L., Lederer A. Human simulations of vocabulary learning. Cognition. 1999;73:135–176. doi: 10.1016/s0010-0277(99)00036-0.

- Golinkoff R.M., Jacquet R.C., Hirsh-Pasek K., Nandakumar R. Lexical principles may underlie the learning of verbs. Child Development. 1996;67:3101–3119.

- Jackendoff R.S., Landau B. Spatial language and spatial cognition. In: Napoli D.J., editor. Bridges between psychology and linguistics: a Swarthmore festschrift for Lila Gleitman. Lawrence Erlbaum Associates; Hillsdale, NJ: 1991. pp. 145–169.

- Karunanayaka P., Schmithorst V.J., Vannest J., Szaflarski J.P., Plante E., Holland S.K. A group independent components analysis of covert verb generation in children: a functional magnetic resonance imaging study. NeuroImage. 2010;51(1):472–487. doi: 10.1016/j.neuroimage.2009.12.108.

- Kuhl P., Rivera-Gaxiola M. Neural substrates of language acquisition. Annual Review of Neuroscience. 2008;31:511–534. doi: 10.1146/annurev.neuro.30.051606.094321.

- Kutas M. In the company of other words: electrophysiological evidence for single-word and sentence context effects. Language and Cognitive Processes. 1993;8:533–572.

- Kutas M., Federmeier K.D. Thirty years and counting: finding meaning in the N400 component of the event-related potential (ERP). Annual Review of Psychology. 2011;62:621–647. doi: 10.1146/annurev.psych.093008.131123.

- Lüdtke J., Friedrich C.K., De Filippis M., Kaup B. Event-related potential correlates of negation in a sentence-picture verification paradigm. Journal of Cognitive Neuroscience. 2008;20(8):1355–1370. doi: 10.1162/jocn.2008.20093.

- Maguire M.J., Dove G.O. Speaking of events: what event language can tell us about event representations and their development. In: Shipley T.F., Zacks J., editors. Understanding Events: From Perception to Action. Oxford University Press; New York: 2008. pp. 193–220.

- Maguire M.J., Hirsh-Pasek K., Golinkoff R.M. A unified theory of verb learning: putting verbs in context. In: Hirsh-Pasek K., Golinkoff R.M., editors. Action Meets Word: How Children Learn Verbs. Oxford University Press; New York: 2006. pp. 364–391.

- Maguire M.J., Hirsh-Pasek K., Golinkoff R.M., Imai M., Haryu E., Vanegas S., Okada H., Pulverman R., Sanchez-Davis B. A developmental shift from similar to language-specific strategies in verb acquisition: a comparison of English, Spanish, and Japanese. Cognition. 2010;114:299–319. doi: 10.1016/j.cognition.2009.10.002.

- Mandler J.M. Representation. In: Damon W., editor. Handbook of Child Psychology: vol. 2: Cognition, Perception, and Language. Wiley; New York: 1998. pp. 255–308.

- Mandler J.M. Early concept learning in children. In: Smelser N.J., Baltes P.B., editors. International Encyclopedia of the Social and Behavioral Sciences. Elsevier; New York: 2001.

- Mazerolle E.L., D’Arcy R.C.N., Marchand Y., Bolster R.B. ERP assessment of functional status in the temporal lobe: examining spatiotemporal correlates of object recognition. International Journal of Psychophysiology. 2007;66:81–92. doi: 10.1016/j.ijpsycho.2007.06.003.

- Naigles L.R., Hoff E., Vear D., Tomasello M., Brandt S., Waxman S.R., Childers J.B. Flexibility in early verb use: evidence from a multiple-N diary study. Monographs of the Society for Research in Child Development. 2009;74(2):22–31. doi: 10.1111/j.1540-5834.2009.00513.x.

- Perrin F., Pernier J., Bertrand O., Echallier J.F. Spherical splines for scalp potential and current density mapping. Electroencephalography and Clinical Neurophysiology. 1989;72:184–187. doi: 10.1016/0013-4694(89)90180-6.

- Renoult L., Debruille J.B. N400-like potentials index semantic relations between highly repeated individual words. Journal of Cognitive Neuroscience. 2011;23(4):905–922. doi: 10.1162/jocn.2009.21410.

- Renoult L., Wang X., Calcagno V., Prevost M., Debruille J.B. From N400 to N300: variations in the timing of semantic processing with repetition. NeuroImage. 2012;61(1):206–215. doi: 10.1016/j.neuroimage.2012.02.069.

- Sheng L., McGregor K.K. Object and action naming in children with specific language impairment. Journal of Speech, Language, and Hearing Research. 2010;53(6):1704–1719. doi: 10.1044/1092-4388(2010/09-0180).

- Schendan H.E., Kutas M. Neurophysiological evidence for the time course of activation of global shape, part, and local contour representations during visual object categorization and memory. Journal of Cognitive Neuroscience. 2007;19(5):734–749. doi: 10.1162/jocn.2007.19.5.734.

- Sitnikova T., Kuperberg G., Holcomb P.J. Semantic integration in videos of real-world events: an electrophysiological investigation. Psychophysiology. 2003;40:160–164. doi: 10.1111/1469-8986.00016.

- Sitnikova T., West W.C., Kuperberg G.R., Holcomb P.J. The neural organization of semantic memory: electrophysiological activity suggests feature-based segregation. Biological Psychology. 2006;71(3):326–340. doi: 10.1016/j.biopsycho.2005.07.003.

- Sitnikova T., Holcomb P.J., Kiyonaga K.A., Kuperberg G.R. Two neurocognitive mechanisms of semantic integration during the comprehension of visual real-world events. Journal of Cognitive Neuroscience. 2008;20(11):2037–2057. doi: 10.1162/jocn.2008.20143.

- Smiley P., Huttenlocher J. Conceptual development and the child's early words for events, objects, and persons. In: Tomasello M., Merriman W.E., editors. Beyond the Names for Things: Young Children's Acquisition of Verbs. Lawrence Erlbaum; Hillsdale, NJ: 1995. pp. 21–61.

- Stark J. Everyday Life Activities: Photo Series. Psychology Press; New York, NY: 1994.

- Talmy L. Toward a Cognitive Semantics, vol. 1: Concept Structuring Systems. M.I.T. Press; Cambridge, MA: 2000.

- Tanaka J., Luu P., Weisbrod M., Kiefer M. Tracking the time course of object categorization using event-related potentials. NeuroReport: For Rapid Communication of Neuroscience Research. 1999;10(4):829–835. doi: 10.1097/00001756-199903170-00030.

- West W.C., Holcomb P.J. Event-related potentials during discourse-level semantic integration of complex pictures. Cognitive Brain Research. 2002;13:363–375. doi: 10.1016/s0926-6410(01)00129-x.

- Young M.P., Rugg M.D. Word frequency and multiple repetitions as determinants of the modulation of event-related potentials in a semantic classification task. Psychophysiology. 1992;29:664–676. doi: 10.1111/j.1469-8986.1992.tb02044.x.

- Yum Y.N., Holcomb P.J., Grainger J. Words and pictures: an electrophysiological investigation of domain specific processing in native Chinese and English speakers. Neuropsychologia. 2011;49(7):1910–1922. doi: 10.1016/j.neuropsychologia.2011.03.018.