Highlights

- Brain responses were measured by presenting emotional faces in the context of emotional bodies.
- ERP data showed that 8-month-old infants discriminate between facial expressions only when presented in the context of congruent body expressions.
- Neural evidence for the existence of context-sensitive facial emotion perception in infants.

Keywords: Emotion, Infants, Body expressions, Priming, ERP

Abstract

Body expressions exert strong contextual effects on facial emotion perception in adults. Specifically, conflicting body cues hamper the recognition of emotion from faces, as evident at both the behavioral and the neural level. We examined the developmental origins of the neural processes involved in emotion perception across body and face in 8-month-old infants by measuring event-related brain potentials (ERPs). We primed infants with body postures (fearful, happy) that were followed by either congruent or incongruent facial expressions. Our results revealed that body expressions impact facial emotion processing and that incongruent body cues impair the neural discrimination of emotional facial expressions. Priming effects were associated with attentional and recognition memory processes, as reflected in a modulation of the Nc and Pc evoked at anterior electrodes. These findings demonstrate that 8-month-old infants possess neural mechanisms that allow for the integration of emotion across body and face, providing evidence for the early developmental emergence of context-sensitive facial emotion perception.

1. Introduction

Responding to others’ emotional expressions is a vital skill that helps us predict others’ actions and guide our own behavior during social interactions (Frijda and Mesquita, 1994, Frith, 2009, Izard, 2007). The bulk of research investigating emotion perception has focused on facial expressions presented in isolation and has been based on the standard view that specific facial patterns directly code for a set of basic emotions (Barrett et al., 2011). However, emotional face perception naturally occurs in context, because during social interactions emotional information can be gleaned from multiple sources, including the body expression of a person (de Gelder, 2006, Heberlein and Atkinson, 2009, Van den Stock et al., 2008). Body expressions have been argued to be the most evolutionarily preserved and immediate means of conveying emotional information (de Gelder, 2006) and may provide potent contextual cues when viewing facial expressions during social interactions. For example, when body and face convey conflicting information, emotion recognition from the face is biased in the direction of the body expression (Aviezer et al., 2012, de Gelder, 2006, Meeren et al., 2005). These findings indicate that emotional faces are interpreted in the context of body expressions. More generally, the notion that context plays an important role in the interpretation of facial expressions adds to the growing body of research that challenges predominant views emphasizing the encapsulated and independent nature of facial expression processing (for discussion, see Barrett et al., 2011).

With respect to the neural processes that underlie the impact of body expression on emotional face processing in adults, Meeren et al. (2005), using face-body compound stimuli, presented evidence for a very rapid influence of emotional incongruence between face and body. Specifically, in this ERP study, incongruent compared to congruent body-face pairings evoked an enhanced P1 at occipital electrodes, suggesting that emotional incongruence between body and face affected the earliest stages of visual processing. The P1 is enhanced when attention is directed towards a certain stimulus location and has been shown to be generated in extrastriate visual areas (Clark and Hillyard, 1996, Di Russo et al., 2002, Hillyard and Anllo-Vento, 1998). This P1 finding is in line with an increasing body of evidence suggesting that context influences early stages of visual processing. Meeren et al. (2005) also found that brain processes reflected in the N170 at electrodes over posterior temporal regions were not modulated by congruency between faces and bodies. The absence of an N170 effect in adults was taken to indicate that the structural encoding of the face is not affected by conflicting emotional information from the body.

Critically, the existing work with adults leaves unclear whether the neural processes involved in emotion integration across body and face emerge early in human ontogeny and can therefore be considered a key feature of human social perceptual functioning. In the current study, we therefore examined the neural processing of emotional information across bodies and faces in infancy using ERPs. As in adults, the main focus of emotion perception research in infancy has been on facial expressions (Leppänen and Nelson, 2009). This work has shown that (a) beginning around 7 months of age, infants discriminate between positive and negative emotions (especially fear), and (b) at that age, emotional facial expressions modulate neural processes associated with the perceptual encoding of faces (N290 and P400), the allocation of attentional resources (Nc) and stimulus recognition (Pc) (Leppänen et al., 2007, Nelson and De Haan, 1996, Nelson et al., 1979, Peltola et al., 2009a, Peltola et al., 2009b, Webb et al., 2005). Only recently has research begun to examine how the neural correlates of the ability to perceive and respond to others’ emotional body expressions develop during infancy. In a first study using dynamic point-light displays of emotional body expressions, Missana et al. (2015) reported that 8-month-olds, but not 4-month-olds, showed brain responses that distinguished between fearful and happy body expressions, suggesting that the ability to discriminate emotional body expressions develops during the first year of life. Furthermore, Missana et al. (2014) extended this line of work by showing that infants’ ERP responses also distinguished between fearful and happy static emotional body postures at the age of 8 months.

Another line of work has used ERPs to examine the neural correlates of infants’ ability to integrate emotional information across modalities. This work has shown that infants are able to match emotional information across face and voice (Grossmann et al., 2006, Vogel et al., 2012). Specifically, in 7-month-old infants, incongruent face-voice pairings elicited an enhanced Nc component, indexing a greater allocation of attention, whereas congruent face-voice pairings elicited an enhanced Pc, reflecting recognition of the common emotion across face and voice (Grossmann et al., 2006). While this work has provided insights into the early development of cross-modal emotional integration, the neural processes of integrating emotional information across body and face have, to date, not been studied in infancy.

The main goal of the current study was to examine whether and how emotional information conveyed through the body influences the neural processing of facial emotions. To investigate this question, we used a priming design in which we presented 8-month-old infants with emotional body expressions (fearful and happy) that were followed by either a matching or a mismatching facial expression. Priming has been shown to be a powerful method for elucidating implicit influences of context on social information processing in adults and children (see Stupica and Cassidy, 2014). However, despite its considerable potential for studying how social information is represented in the brain of preverbal infants, only relatively few studies to date have used priming designs to investigate the neural correlates of social information processing in infancy (Gliga and Dehaene-Lambertz, 2007, Peykarjou et al., 2014). We chose 8-month-old infants because at this age infants have been shown to reliably detect and discriminate between fearful and happy expressions not only from faces (Nelson et al., 1979, Peltola et al., 2009a, Peltola et al., 2009b) but also from bodies (Missana et al., 2014, Missana et al., 2015), which is an important prerequisite for detecting congruency across body and face. We predicted that if infants are indeed sensitive to the congruency between body and facial expressions, their ERPs would show priming effects on components reflecting early visual processes, similar to those identified in prior work with adults (Meeren et al., 2005). In addition, we expected priming effects on later attentional and recognition memory processes (Nc and Pc), which might resemble the effects identified in prior infant work on face-voice emotion matching (Grossmann et al., 2006), in which incongruent face-voice pairings resulted in an enhanced Nc and congruent face-voice pairings elicited a greater Pc.
However, based on prior work with adults showing more specific interaction (hampering) effects between emotional faces and bodies (Aviezer et al., 2012, Meeren et al., 2005), viewing mismatching body expressions might impede emotion discrimination from facial expressions in infants. We therefore examined whether infants’ neural discrimination between emotional facial expressions (happy and fearful) is impaired when they have previously seen a mismatching body expression. Specifically, based on this adult work, we hypothesized that ERPs (especially the Nc and Pc) would differ between facial expressions of emotion only when presented in the context of a congruent body expression, but not when presented in the context of an incongruent body expression. Finally, we also examined face-sensitive ERP components (N290 and P400), but, in line with prior work with adults (Meeren et al., 2005), we did not expect congruency effects on ERP responses associated with the structural encoding of faces.

2. Methods and materials

2.1. Participants

In the present study, infants were recruited via the database of the Max Planck Institute for Human Cognitive and Brain Sciences, Leipzig, Germany. The final sample consisted of 32 eight-month-old infants aged between 229 and 258 days (16 females; median age = 245 days, range = 29 days). An additional 18 eight-month-old infants were tested but excluded from the final sample due to fussiness (n = 12) or too many artifacts (n = 6). An attrition rate at this level is within the normal range for infant ERP studies (DeBoer et al., 2007). All infants were born full-term (between 38 and 42 weeks of gestation) and had a normal birth weight (>2800 g). All parents provided informed consent prior to the study and were compensated financially for participation. The children received a toy as a present after the session.

2.2. Stimuli

For the emotional body stimuli, we used full-light static body displays taken from a previously validated stimulus set (Atkinson et al., 2004; see Fig. 1). For each emotion, we presented body postures from 4 different actors, selected on the basis of the high recognition rates shown for these stimuli by a group of adults (Atkinson et al., 2004). The facial expressions that followed the emotional body postures were color photographs of happy and fearful facial expressions taken from the previously validated FACES database (Ebner et al., 2010). We selected photographs of four actresses (aged 19 to 30 years; ID numbers 54, 63, 85, 134), chosen on the basis of the high recognition rates shown by a group of adult raters (Ebner et al., 2010). To keep the stimulus presentation protocol comparable with previous infant studies, we included only pictures of women (Grossmann et al., 2007, Kobiella et al., 2008, Leppänen et al., 2007). The photographs were cropped so that only the face was visible within an oval shape. The body stimuli had a mean height of 12.52 cm and a mean width of 5.37 cm, and the face stimuli had a mean height of 12 cm and a mean width of 9.3 cm.

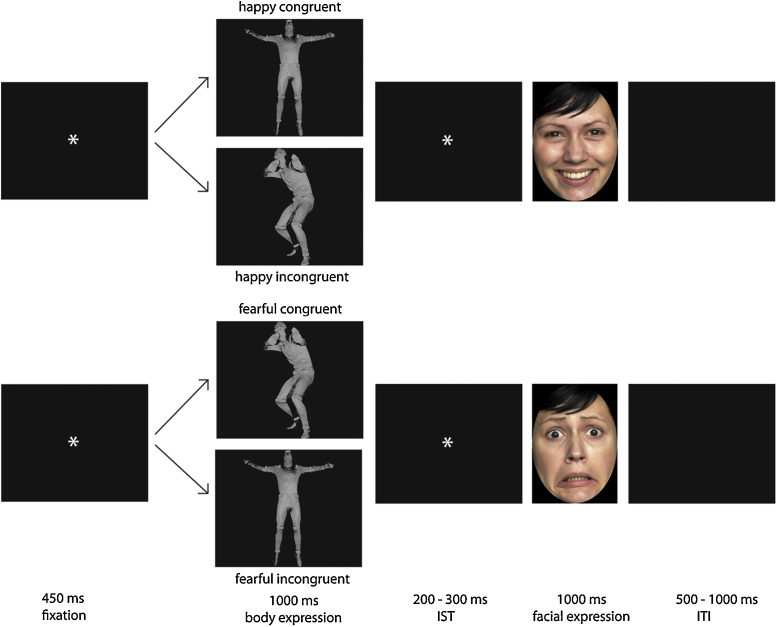

Fig. 1.

This figure shows an example of the stimuli and the design of the priming paradigm used in the current study.

2.3. Design

We employed a priming design in which we presented infants with emotional body expressions (fearful and happy) that were followed by either a matching (congruent) or a mismatching (incongruent) facial expression (see Fig. 1). All possible pairings between body and face stimuli (from 4 actors each) were used, yielding 16 combinations per condition (e.g., 16 combinations for a happy face preceded by a happy body) and 64 combinations in total across the four conditions. Each combination was included in the presentation list four times, yielding a maximum of 256 trials. Each trial began with a fixation star on a black background (450 ms), followed by the full-light static body expression, which was presented for 1000 ms. This was followed by an inter-stimulus interval that varied randomly between 200 and 300 ms, during which a fixation star was presented on a black background. After the inter-stimulus interval, the facial expression was presented for 1000 ms. This was followed by an inter-trial interval during which a black screen was presented for a duration that varied randomly between 500 and 1000 ms. Stimuli were presented in a pseudo-randomized order, and a new randomized order was generated for each infant. We ensured that trials from the same condition did not occur twice in a row.
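The counterbalancing arithmetic above (4 body actors × 4 face actors = 16 pairings per condition, × 4 conditions = 64 combinations, × 4 repetitions = 256 trials) and the rule that no condition occurs on two consecutive trials can be sketched as follows. This is a minimal Python illustration under stated assumptions, not the authors' presentation software: the trial representation, the weighted-draw-with-restart strategy, and the function names `build_trial_list` and `pseudo_randomize` are all hypothetical.

```python
import itertools
import random

EMOTIONS = ("happy", "fearful")
N_ACTORS = 4   # actors per stimulus category (bodies and faces)
N_REPEATS = 4  # each body-face combination appears four times


def build_trial_list():
    """Enumerate all body-face pairings; a trial's condition is (face emotion, congruency)."""
    trials = []
    for body_emo, face_emo in itertools.product(EMOTIONS, repeat=2):
        congruency = "congruent" if body_emo == face_emo else "incongruent"
        for body_id, face_id in itertools.product(range(N_ACTORS), repeat=2):
            for _ in range(N_REPEATS):
                trials.append({
                    "condition": (face_emo, congruency),
                    "body": (body_emo, body_id),
                    "face": (face_emo, face_id),
                })
    return trials


def pseudo_randomize(trials, rng, max_attempts=1000):
    """Order trials so that no condition occurs twice in a row.

    Hypothetical strategy: draw the next condition at random, weighted by how
    many of its trials remain, excluding the previous condition; restart if
    only the previous condition is left.
    """
    by_condition = {}
    for trial in trials:
        by_condition.setdefault(trial["condition"], []).append(trial)
    for _ in range(max_attempts):
        # Shuffled copy of each condition's trials for this attempt
        pools = {c: rng.sample(ts, len(ts)) for c, ts in by_condition.items()}
        order, previous = [], None
        while any(pools.values()):
            allowed = [c for c, pool in pools.items() if pool and c != previous]
            if not allowed:
                break  # dead end: only the previous condition remains
            weights = [len(pools[c]) for c in allowed]
            previous = rng.choices(allowed, weights=weights)[0]
            order.append(pools[previous].pop())
        else:
            return order  # loop finished without a dead end
    raise RuntimeError("no valid order found; increase max_attempts")


order = pseudo_randomize(build_trial_list(), random.Random(42))
print(len(order), order[0]["condition"])
```

Weighting the draw by the number of remaining trials keeps the four conditions roughly balanced as the list is consumed, which makes dead ends near the end of the list rare; the restart loop handles the occasional one.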

2.4. Procedure

The infants were seated on their parent's lap in a dimly lit, sound-attenuated and electrically shielded room during testing. The stimuli were presented in the center of the screen on a black background, using a 17 in. computer screen with a 70 Hz refresh rate at a viewing distance of 70 cm. Stimulus presentation was continuous but monitored by the experimenter, who could stop the presentation if required and play attention-getting videos with sounds to reorient the infant to the screen.
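The stimulus sizes reported in Section 2.2 are given in centimetres; combined with the 70 cm viewing distance, they can be converted to visual angle. The sketch below is a derivation rather than a value reported by the authors: it assumes each stimulus is centred on the line of sight of a flat screen and applies the standard formula θ = 2·arctan(s / 2d). The helper name `visual_angle_deg` is hypothetical.

```python
import math

VIEWING_DISTANCE_CM = 70.0  # screen distance reported in Section 2.4


def visual_angle_deg(size_cm, distance_cm=VIEWING_DISTANCE_CM):
    """Full visual angle (degrees) of an object centred on the line of sight."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


# Mean stimulus dimensions from Section 2.2: (height, width) in cm
STIMULI = {"body": (12.52, 5.37), "face": (12.0, 9.3)}

for name, (height, width) in STIMULI.items():
    print(f"{name}: {visual_angle_deg(height):.1f} x {visual_angle_deg(width):.1f} deg")
```

Under these assumptions, the bodies subtend roughly 10.2° × 4.4° and the faces roughly 9.8° × 7.6° (height × width).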

2.5. EEG measurement

The EEG was recorded from 27 Ag/AgCl electrodes mounted in an elastic cap (EasyCap GmbH, Germany) according to the 10–20 system of electrode placement. The data were referenced online to Cz. The horizontal electrooculogram (EOG) was recorded from two cap electrodes (F9, F10) located at the outer canthi of both eyes. The vertical EOG was recorded from a cap electrode on the supraorbital ridge (FP2) and an additional single electrode on the infraorbital ridge of the right eye. The EEG was amplified using a Porti-32/M-REFA amplifier (Twente Medical Systems International) and digitized at a rate of 500 Hz. Electrode impedances were kept between 5 and 20 kΩ. In addition, the sessions were video-recorded so that infants’ attention to the screen could be coded offline. The EEG session ended when the infant became fussy or inattentive.

2.6. ERP analysis

Data processing for ERP analysis was performed using the in-house software package EEP, commercially available as EEProbe™ (Advanced Neuro Technology, Enschede). The raw EEG data were re-referenced to the algebraic mean of the left and right mastoid electrodes and filtered with a 0.3–20 Hz bandpass finite impulse response filter (length: 1501 points; Hamming window; half-power [−3 dB] points of 0.37 Hz and 19.93 Hz). The recordings were segmented into epochs time-locked to target (face) onset, lasting from 100 ms before onset until stimulus offset (total duration 1100 ms). The epochs were baseline-corrected by subtracting the average voltage in the 100 ms pre-stimulus baseline period from each post-stimulus data point. During the baseline period, a black screen with a white fixation star was presented. Data epochs were rejected offline whenever the standard deviation within a gliding window of 200 ms exceeded 80 μV in either of the two bipolar EOG channels or 60 μV at EEG electrodes. Following this automated rejection procedure, the EEG data were also visually inspected offline for remaining artifacts. Trials in which the infant did not attend to the screen during either prime or target presentation were excluded from further analysis. At each electrode, artifact-free epochs were averaged separately for the happy-congruent, happy-incongruent, fearful-congruent and fearful-incongruent conditions to compute the ERPs. The mean number of trials included in the ERP average was 19.75 (SD = 7.85) for the happy congruent condition, 19.22 (SD = 7.15) for the happy incongruent condition, 19.41 (SD = 6.77) for the fear congruent condition, and 19.25 (SD = 8.67) for the fear incongruent condition.
The mean number of trials watched by the infants was: M = 40.59 (SD = 10.27) for the happy congruent condition, M = 40.66 (SD = 10.47) for the happy incongruent condition, M = 40.75 (SD = 10.33) for the fear congruent condition, and M = 40.59 (SD = 10.46) for the fear incongruent condition.
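The re-referencing, baseline correction and gliding-window artifact rejection described above can be illustrated with a small pure-Python sketch. It is not EEProbe's implementation and omits the FIR filter: it treats each channel as a list of μV values sampled at 500 Hz (so the 100 ms baseline is 50 samples and the 200 ms window is 100 samples), and it assumes a one-sample step between windows and the population standard deviation, neither of which is specified in the text. All function names are hypothetical.

```python
import statistics

BASELINE_SAMPLES = 50  # 100 ms pre-stimulus baseline at 500 Hz
WINDOW_SAMPLES = 100   # 200 ms gliding window at 500 Hz


def rereference(channel, left_mastoid, right_mastoid):
    """Re-reference one channel to the algebraic mean of the two mastoids."""
    return [x - (left + right) / 2
            for x, left, right in zip(channel, left_mastoid, right_mastoid)]


def baseline_correct(epoch, n_baseline=BASELINE_SAMPLES):
    """Subtract the mean of the pre-stimulus samples from every sample."""
    offset = statistics.fmean(epoch[:n_baseline])
    return [x - offset for x in epoch]


def exceeds_gliding_sd(signal, threshold_uv, window=WINDOW_SAMPLES, step=1):
    """True if the SD within any window of `window` samples exceeds the threshold.

    Assumption: population SD and a one-sample step between windows.
    """
    for start in range(0, len(signal) - window + 1, step):
        if statistics.pstdev(signal[start:start + window]) > threshold_uv:
            return True
    return False


def reject_epoch(eeg_channels, eog_channels, eeg_threshold=60.0, eog_threshold=80.0):
    """Reject if any EOG channel exceeds 80 μV or any EEG channel exceeds 60 μV."""
    return (any(exceeds_gliding_sd(ch, eog_threshold) for ch in eog_channels)
            or any(exceeds_gliding_sd(ch, eeg_threshold) for ch in eeg_channels))
```

Applying the stricter 60 μV criterion to EEG channels and the looser 80 μV criterion to EOG channels mirrors the text; in practice the visual inspection step described above would still follow.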

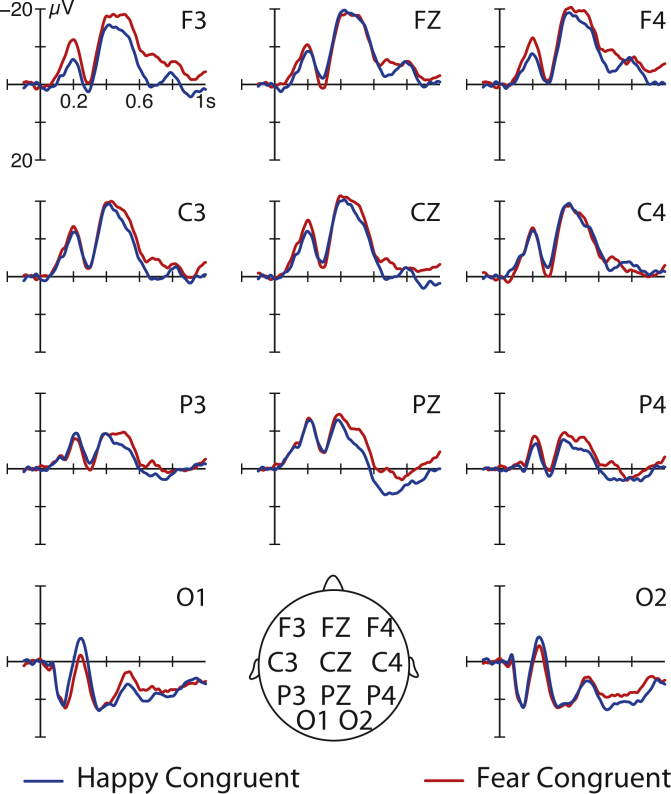

Based on prior ERP work (de Haan et al., 2003, Kobiella et al., 2008, Leppänen et al., 2007, Meeren et al., 2005, Missana et al., 2015, Grossmann et al., 2006, Pourtois et al., 2004, Webb et al., 2005) and visual inspection of the data (see Supplementary Fig. 1), our ERP analysis focused on the following ERP components: (A) early visual processing over posterior (occipital) regions (O1, O2) (P1 [100–200 ms]); (B) face-sensitive processing over posterior (occipital) regions (O1, O2) (N290 [200–300 ms], P400 [300–500 ms]); (C) later processes related to attention allocation (Nc [400–600 ms]) and recognition memory (Pc [600–800 ms]) over anterior regions (F3, F4, C3, C4, T7, T8). For these time windows and regions mean amplitude ERP effects were assessed in repeated measures ANOVAs with the within-subject factors emotion (happy versus fear), congruency (congruent versus incongruent), and hemisphere (left [F3, C3, T7] versus right [F4, C4, T8]).
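Mean-amplitude measures for the component windows listed above reduce to averaging each ERP over a slice of samples and then across the electrodes of a region. The sketch below assumes epochs start 100 ms before face onset and are sampled at 500 Hz, uses the windows given in this section (including 300–500 ms for the P400), and treats each window as a half-open interval; these conventions and the function names are illustrative assumptions, not the authors' pipeline.

```python
import statistics

SAMPLING_RATE_HZ = 500
EPOCH_START_MS = -100  # epochs begin 100 ms before face onset

WINDOWS_MS = {
    "P1": (100, 200),
    "N290": (200, 300),
    "P400": (300, 500),
    "Nc": (400, 600),
    "Pc": (600, 800),
}
LEFT_ANTERIOR = ("F3", "C3", "T7")
RIGHT_ANTERIOR = ("F4", "C4", "T8")


def ms_to_index(t_ms, fs=SAMPLING_RATE_HZ, start_ms=EPOCH_START_MS):
    """Sample index of a latency (ms) relative to face onset."""
    return round((t_ms - start_ms) * fs / 1000)


def mean_amplitude(erp, window_ms):
    """Mean voltage over the half-open window [start, stop)."""
    start, stop = (ms_to_index(t) for t in window_ms)
    return statistics.fmean(erp[start:stop])


def roi_mean(erps_by_electrode, electrodes, window_ms):
    """Average the window mean across the electrodes of a region of interest."""
    return statistics.fmean(
        mean_amplitude(erps_by_electrode[e], window_ms) for e in electrodes
    )
```

For example, `roi_mean(erps, LEFT_ANTERIOR, WINDOWS_MS["Nc"])` would give one infant's left-hemisphere Nc value for one condition, which is the kind of cell entering the repeated measures ANOVA.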

3. Results

3.1. Early visual processing at posterior electrodes

3.1.1. P1

Our analysis did not reveal any congruency effects on the P1 from 100 to 200 ms at O1 and O2, F(1, 31) = 0.393, p = 0.535. Additionally, there was no significant main effect of emotion, F(1, 31) = 0.898, p = 0.351, and there was no significant interaction between congruency and emotion, F(1, 31) = 2.83, p = 0.102, during this time window at occipital electrodes.

3.2. Face-sensitive processing at posterior electrodes

3.2.1. N290

Our analysis did not reveal any congruency effects on the N290 from 200 to 300 ms at O1 and O2, F(1, 31) = 0.10, p = 0.920. Furthermore, there was no significant main effect of emotion, F(1, 31) = 1.902, p = 0.178, and there was no significant interaction between congruency and emotion, F(1, 31) = 1.764, p = 0.194, during this time window at occipital electrodes.

3.2.2. P400

Our analysis did not reveal any congruency effects on the P400 from 400 to 600 ms at O1 and O2, F(1, 31) = 0.008, p = 0.930. Additionally, there was no significant main effect of emotion, F(1, 31) = 1.225, p = 0.277, and there was no significant interaction between congruency and emotion, F(1, 31) = 0.003, p = 0.959, during this time window at occipital electrodes.

3.3. Later processes related to attention allocation (Nc) and recognition memory (Pc)

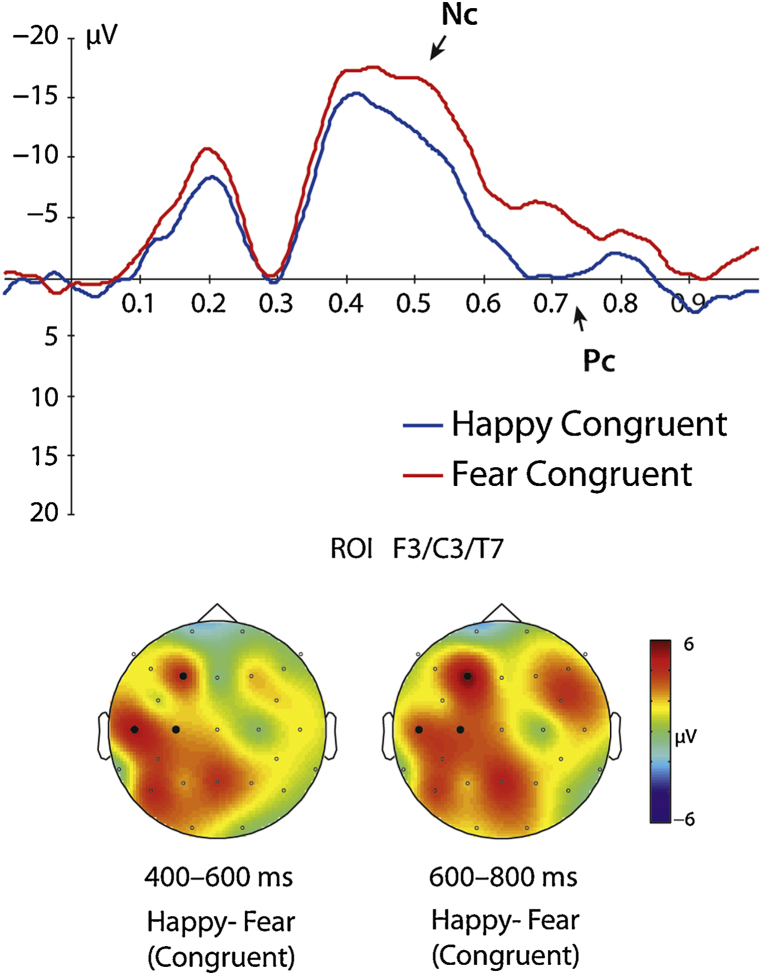

3.3.1. Nc

Our analysis revealed a significant interaction between the factors congruency, emotion and hemisphere at anterior electrodes (F3, F4, C3, C4, T7, T8) between 400 and 600 ms, F(1, 31) = 4.16, p = 0.05 (see Fig. 2, Fig. 3). Further analysis showed that the Nc differed between emotions only over the left hemisphere in the congruent condition, t(31) = 2.81, p = 0.009, with fearful facial expressions primed by congruent body expressions eliciting a significantly more negative Nc (M = −15.61 μV, SE = 1.35) than happy facial expressions primed by congruent body expressions (M = −11.70 μV, SE = 1.58) (see Fig. 2, Fig. 3 and Table 1). No significant difference between happy and fearful facial expressions was observed over the left hemisphere in the incongruent condition, t(31) = 0.18, p = 0.856. Furthermore, over the right hemisphere there were no significant differences between emotions in either the congruent condition, t(31) = 1.00, p = 0.324, or the incongruent condition, t(31) = 0.88, p = 0.383. Note that we report uncorrected statistics for these paired comparisons (t-tests). However, the significant difference between emotions in the congruent condition survives a conservative Bonferroni correction for multiple comparisons: dividing α = 0.05 by the four comparisons gives a corrected threshold of 0.0125, and the obtained p-value (p = 0.009) lies below this threshold.
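The paired comparison and the Bonferroni check described above can be sketched as follows. The t statistic is the standard paired-samples formula; computing an exact p-value requires the t distribution, which is outside the Python standard library, so these hypothetical helpers only check already-reported p-values against the corrected threshold α/4 = 0.0125. The p-values below are copied from the paragraph above.

```python
import math
import statistics


def paired_t(x, y):
    """Paired-samples t statistic and its degrees of freedom (n - 1)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equally long samples with at least two pairs")
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    t = statistics.fmean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t, n - 1


def bonferroni_alpha(alpha=0.05, n_comparisons=4):
    """Per-comparison threshold: family-wise alpha divided by the number of tests."""
    return alpha / n_comparisons


def survives_bonferroni(p, alpha=0.05, n_comparisons=4):
    """True if a reported p-value is below the Bonferroni-corrected threshold."""
    return p < bonferroni_alpha(alpha, n_comparisons)


# Reported p-values for the four Nc paired comparisons (Section 3.3.1)
NC_COMPARISONS = {
    "left, congruent": 0.009,
    "left, incongruent": 0.856,
    "right, congruent": 0.324,
    "right, incongruent": 0.383,
}
for label, p in NC_COMPARISONS.items():
    print(label, survives_bonferroni(p))
```

Only the left-hemisphere congruent comparison passes the corrected threshold, consistent with the text.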

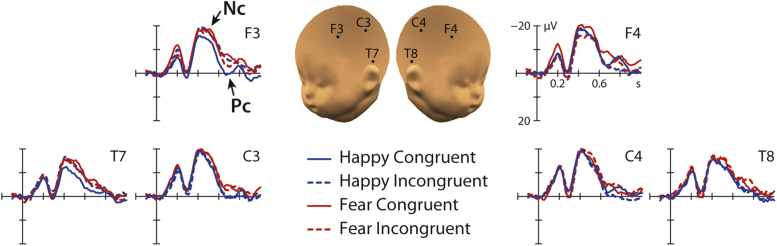

Fig. 2.

This figure shows 8-month-old infants’ ERP responses at anterior electrodes to fearful and happy facial expressions primed congruently and incongruently by body expressions.

Fig. 3.

This figure shows the ERP response to fearful and happy facial expressions congruently primed by body expressions at the anterior region of interest and the scalp topographies of ERP components Nc (400–600 ms) and Pc (600–800 ms).

Table 1.

This table provides an overview of the ERP findings of the current study: mean amplitudes (SE) in μV for attention allocation (Nc) and recognition memory (Pc) at anterior electrodes.

| Condition | Nc (400–600 ms), F3/C3/T7 | Nc (400–600 ms), F4/C4/T8 | Pc (600–800 ms), F3/C3/T7 | Pc (600–800 ms), F4/C4/T8 |
|---|---|---|---|---|
| Happy congruent | −11.70 (1.58)** | −14.28 (1.77) | −1.37 (1.12)** | −4.14 (1.54) |
| Fearful congruent | −15.61 (1.35) | −15.49 (1.60) | −5.61 (1.15) | −5.70 (1.19) |
| Happy incongruent | −14.54 (1.67) | −14.10 (1.50) | −3.78 (1.63) | −2.60 (1.29) |
| Fearful incongruent | −14.81 (1.44) | −15.42 (1.50) | −4.40 (1.57) | −5.61 (1.75) |

** p < 0.01 (happy versus fearful faces in the congruent condition, same column).

3.3.2. Pc

Our analysis revealed a significant interaction between the factors congruency, emotion and hemisphere at anterior electrodes (F3, F4, C3, C4, T7, T8) between 600 and 800 ms, F(1, 31) = 6.06, p = 0.02 (see Fig. 2, Fig. 3). Further analysis showed that the Pc differed between emotions only at left-hemisphere electrodes in the congruent condition, t(31) = 3.67, p = 0.001, with fearful facial expressions primed by congruent body expressions eliciting a significantly more negative response (M = −5.61 μV, SE = 1.15) than happy facial expressions primed by congruent body expressions (M = −1.37 μV, SE = 1.12) (see Fig. 2, Fig. 3 and Table 1). No significant difference between happy and fearful facial expressions was observed over the left hemisphere when they were primed by incongruent body expressions, t(31) = 0.40, p = 0.692. Furthermore, over the right hemisphere there were no significant differences between emotions in either the congruent condition, t(31) = 1.35, p = 0.186, or the incongruent condition, t(31) = 1.90, p = 0.066. As for the Nc, we report uncorrected statistics for the paired comparisons for the Pc, and the significant difference between emotions in the congruent condition survives a conservative Bonferroni correction (corrected threshold 0.05/4 = 0.0125; obtained p = 0.001).

In addition, we observed a marginally significant main effect of emotion at anterior electrodes over both hemispheres for the Nc (400–600 ms), F(1, 31) = 3.65, p = 0.066, and a significant main effect of emotion at anterior electrodes over both hemispheres for the Pc (600–800 ms), F(1, 31) = 9.45, p = 0.004. However, these main effects are difficult to interpret because they are qualified by the three-way interactions reported above.
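Because every factor in the 2 × 2 × 2 within-subject design has two levels, each ANOVA effect has one numerator degree of freedom, and its F(1, n − 1) equals the squared one-sample t of a per-infant contrast score (hence df = (1, 31) for 32 infants). The sketch below illustrates this equivalence for main effects and interactions, such as the emotion main effect and the emotion × congruency × hemisphere interaction discussed above. The cell layout and function names are hypothetical, and the code is offered as a cross-check of the logic, not as the software the authors used.

```python
import math
import statistics
from itertools import product

# Two levels per within-subject factor; the first level gets code +1, the second -1.
LEVELS = {
    "emotion": ("happy", "fearful"),
    "congruency": ("congruent", "incongruent"),
    "hemisphere": ("left", "right"),
}


def contrast_score(cells, factors):
    """Per-infant contrast for the effect of `factors` (a main effect or interaction).

    `cells` maps (emotion, congruency, hemisphere) tuples to mean amplitudes (μV).
    Each cell's weight is the product of the +1/-1 codes of the tested factors.
    """
    total = 0.0
    for key in product(*LEVELS.values()):
        weight = 1
        for name, level in zip(LEVELS, key):
            if name in factors:
                weight *= 1 if level == LEVELS[name][0] else -1
        total += weight * cells[key]
    return total


def single_df_F(subjects, factors):
    """F(1, n - 1) for a single-df within-subject effect, computed as t squared."""
    scores = [contrast_score(cells, factors) for cells in subjects]
    n = len(scores)
    t = statistics.fmean(scores) / (statistics.stdev(scores) / math.sqrt(n))
    return t * t, (1, n - 1)
```

For example, `single_df_F(subjects, {"emotion"})` tests the emotion main effect, whereas passing all three factor names tests the three-way interaction that qualifies it.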

4. Discussion

The current study examined the impact that body expressions have on the processing of facial expressions in infancy by using an ERP priming paradigm. Our results show that body expressions affect the neural processing of facial expressions in 8-month-old infants. In particular, the ERP data demonstrate that viewing conflicting body expressions hampers the neural discrimination of facial expressions in infants. This finding suggests that, early in development, body expressions provide an important context in which facial expressions are processed. Furthermore, our results present developmental evidence for the view that emotion perception should be considered a context-sensitive and multi-determined process (Barrett et al., 2011). The finding that body cues play an important role in the perception of emotional facial expressions early in human ontogeny suggests that context sensitivity during facial emotion perception can be considered a key feature of human social perceptual functioning.

With respect to early visual processing at occipital electrodes, the current study did not reveal any effects on the P1 (100–200 ms). Prior work with adults reported early ERP effects for the P1 at occipital electrodes related to detecting the congruency of emotional information between body and face (Meeren et al., 2005). One possible reason why we did not observe such effects in our infant sample is that infants and adults may differ in how they integrate emotions across body and face. It is plausible that the early perceptual integration processes reflected in the P1 are established only with development and more experience of seeing matching bodies and faces. Another possible explanation relates to methodological differences between prior work with adults and the current study with infants. Namely, we employed a priming design with infants, whereas Meeren et al. (2005) used body-face compound stimuli in their study with adults. It is thus possible that these early P1 effects can only be observed when body and face are visible simultaneously, as was the case for the compound stimuli in the adult work but not for the priming stimuli in the current infant study. Future work is needed to distinguish explicitly between these alternatives. Similarly, the absence of a general Nc enhancement to incongruent trials, as reported by Grossmann et al. (2006), might also be explained by methodological differences across studies. More specifically, whereas in Grossmann et al.’s (2006) study face and voice were presented simultaneously, the current study used a priming design in which the body served as the prime and the face as the target. Therefore, the information from the body was not available to the infant while processing the face, which may reduce any incongruence effects reflected in infant ERPs.

Critically, our results show that body context matters: effects of body context on the processing of facial emotions were observed for later ERP components related to attention allocation (Nc, 400–600 ms) and recognition memory (Pc, 600–800 ms) at anterior electrodes. In particular, infants’ Nc and Pc discriminated between happy and fearful faces only when the faces were preceded by congruent, but not incongruent, body expressions. This shows that conflicting body cues hamper the discrimination between facial expressions during later stages of neural processing. Specifically, infants failed to dedicate differential attentional resources to fearful versus happy facial expressions, as reflected in the absence of a relative enhancement of the Nc to fearful compared to happy faces. This finding suggests that incongruent body cues impair what has been referred to as a fear bias in infants’ attentional responding to facial expressions, which has been described from 7 months of age (Leppänen and Nelson, 2009). In the context of conflicting body cues, infants also showed an impairment of processes related to the differential employment of recognition memory, as reflected in the Pc. In particular, happy facial expressions did not elicit a greater Pc than fearful facial expressions in the incongruent context. This indicates that incongruent body cues impair the recognition of highly familiar (happy) facial expressions, because in the congruent body context happy facial expressions evoked an enhanced Pc, which is thought to be a neural marker of recognition memory (Nelson et al., 1998). However, with respect to the Pc, it is important to mention that, in addition to the interaction effect, we also observed a main effect of emotion on this ERP component. This indicates that conflicting body cues, while having a detrimental effect on brain processes associated with recognition memory, do not completely abolish the differential employment of recognition memory processes triggered by viewing emotional facial expressions. Nevertheless, post hoc paired t-tests revealed that the Pc amplitude differed between emotions only in the congruent, but not in the incongruent, body context. Taken together, these findings regarding the Nc and Pc support the notion that context plays a critical role in triggering attentional and memory-related processes associated with the perception of emotional facial expressions.

With respect to the exact nature of the observed context effects on the Nc and Pc, the current data cannot tell us whether an incongruent emotional body context impedes the discrimination of emotional faces or whether a congruent emotional body context facilitates it. Directly testing whether congruent body context bolsters, incongruent body context hampers, or both, would require an unprimed facial emotion condition or an irrelevant-prime control condition in addition to the emotional prime conditions. Moreover, it seems critical to extend the current work by employing faces as primes and bodies as targets, in order to find out whether these contextual effects occur independently of which stimulus serves as the prime, or whether bodies are more potent than faces in shaping the interpretation of emotional information, as suggested by prior work with adults (Aviezer et al., 2012).

Another point for discussion is that the context effects on the Nc and Pc observed in 8-month-old infants in the current study were lateralized to the left hemisphere. At first glance, this might appear counterintuitive given previous work showing that both emotion perception in general and facial and body perception in particular (Grèzes et al., 2007, Heberlein and Saxe, 2005, Missana et al., 2015) are lateralized to the right hemisphere. However, recent fMRI work in adults shows that regions that categorically represent emotions regardless of modality (body or face) are lateralized to the left hemisphere, specifically to the left superior temporal sulcus (Peelen et al., 2010). The existence of such modality-independent representations of emotional information in the left hemisphere might also explain the lateralization of the ERP effects in the current infant sample. However, it should be stressed that the infant ERP data do not provide any information regarding the cortical sources that generate the observed effects. It is therefore not possible to draw direct comparisons between fMRI findings from adults and ERP findings from infants. Future work is needed to address this issue, and functional near-infrared spectroscopy (fNIRS) (Lloyd-Fox et al., 2010), which is particularly well suited to localizing brain responses in infants, might provide a promising method for examining this question.

Finally, it is important to mention that no effects were observed on the ERP components thought to reflect face encoding in infants, such as the N290 and the P400. This pattern is in line with prior work in adults, which also did not find such effects (Meeren et al., 2005). However, prior work with 7-month-old infants shows that fearful faces elicit a larger P400 than happy faces (Leppänen et al., 2007). Therefore, the absence of any effects on face-sensitive ERP components in the current data may suggest that facial emotion does not impact face encoding when faces are primed by bodies, regardless of whether those bodies provide congruent or incongruent emotional information.

Taken together, the current findings demonstrate that infants put facial expressions into context. Our ERP data show that (a) body expressions affect how infants attend to facial expressions and retrieve information about them from memory, and (b) body expressions exert contextual effects on facial emotion processing, with conflicting body cues hampering the neural discrimination of facial expressions. These results contribute developmental evidence to an emerging body of work that challenges the standard view of emotion perception by demonstrating that emotion perception from faces depends strongly on context.

Footnotes

Supplementary data associated with this article can be found, in the online version, at http://dx.doi.org/10.1016/j.dcn.2016.01.004.

Contributor Information

Purva Rajhans, Email: rajhans@cbs.mpg.de.

Tobias Grossmann, Email: grossman@cbs.mpg.de.

Appendix A. Supplementary data


References

- Atkinson A.P., Dittrich W.H., Gemmell A.J., Young A.W. Emotion perception from dynamic and static body expressions in point-light and full-light displays. Perception. 2004;33(6):717–746. doi: 10.1068/p5096.

- Aviezer H., Trope Y., Todorov A. Body cues, not facial expressions, discriminate between intense positive and negative emotions. Science. 2012;338(6111):1225–1229. doi: 10.1126/science.1224313.

- Barrett L.F., Mesquita B., Gendron M. Context in emotion perception. Curr. Dir. Psychol. Sci. 2011;20(5):286–290.

- Clark V.P., Hillyard S.A. Spatial selective attention affects early extrastriate but not striate components of the visual evoked potential. J. Cogn. Neurosci. 1996;8(5):387–402. doi: 10.1162/jocn.1996.8.5.387.

- de Gelder B. Towards the neurobiology of emotional body language. Nat. Rev. Neurosci. 2006;7(3):242–249. doi: 10.1038/nrn1872.

- de Haan M., Johnson M.H., Halit H. Development of face-sensitive event-related potentials during infancy: a review. Int. J. Psychophysiol. 2003;51(1):45–58. doi: 10.1016/s0167-8760(03)00152-1.

- DeBoer T., Scott L.S., Nelson C.A. Methods for acquiring and analyzing infant event-related potentials. In: de Haan M., editor. Infant EEG and Event-Related Potentials. Psychology Press; New York, NY, USA: 2007. pp. 5–37.

- Di Russo F., Martínez A., Sereno M.I., Pitzalis S., Hillyard S.A. Cortical sources of the early components of the visual evoked potential. Hum. Brain Mapp. 2002;15(2):95–111. doi: 10.1002/hbm.10010.

- Ebner N.C., Riediger M., Lindenberger U. FACES—a database of facial expressions in young, middle-aged, and older women and men: development and validation. Behav. Res. Methods. 2010;42(1):351–362. doi: 10.3758/BRM.42.1.351.

- Frijda N.H., Mesquita B. The social roles and functions of emotions. American Psychological Association; Washington, DC, USA: 1994.

- Frith C. Role of facial expressions in social interactions. Philos. Trans. R. Soc. London, Ser. B: Biol. Sci. 2009;364(1535):3453–3458. doi: 10.1098/rstb.2009.0142.

- Gliga T., Dehaene-Lambertz G. Development of a view-invariant representation of the human head. Cognition. 2007;102(2):261–288. doi: 10.1016/j.cognition.2006.01.004.

- Grèzes J., Pichon S., de Gelder B. Perceiving fear in dynamic body expressions. NeuroImage. 2007;35(2):959–967. doi: 10.1016/j.neuroimage.2006.11.030.

- Grossmann T., Striano T., Friederici A.D. Crossmodal integration of emotional information from face and voice in the infant brain. Dev. Sci. 2006;9(3):309–315. doi: 10.1111/j.1467-7687.2006.00494.x.

- Grossmann T., Striano T., Friederici A.D. Developmental changes in infants’ processing of happy and angry facial expressions: a neurobehavioral study. Brain Cogn. 2007;64(1):30–41. doi: 10.1016/j.bandc.2006.10.002.

- Heberlein A.S., Atkinson A.P. Neuroscientific evidence for simulation and shared substrates in emotion recognition: beyond faces. Emotion Rev. 2009;1(2):162–177.

- Heberlein A.S., Saxe R.R. Dissociation between emotion and personality judgments: convergent evidence from functional neuroimaging. NeuroImage. 2005;28(4):770–777. doi: 10.1016/j.neuroimage.2005.06.064.

- Hillyard S.A., Anllo-Vento L. Event-related brain potentials in the study of visual selective attention. Proc. Natl. Acad. Sci. U.S.A. 1998;95(3):781–787. doi: 10.1073/pnas.95.3.781.

- Izard C.E. Basic emotions, natural kinds, emotion schemas, and a new paradigm. Perspect. Psychol. Sci. 2007;2(3):260–280. doi: 10.1111/j.1745-6916.2007.00044.x.

- Kobiella A., Grossmann T., Reid V.M., Striano T. The discrimination of angry and fearful facial expressions in 7-month-old infants: an event-related potential study. Cogn. Emotion. 2008;22(1):134–146.

- Leppänen J.M., Moulson M.C., Vogel-Farley V.K., Nelson C.A. An ERP study of emotional face processing in the adult and infant brain. Child Dev. 2007;78(1):232–245. doi: 10.1111/j.1467-8624.2007.00994.x.

- Leppänen J.M., Nelson C.A. Tuning the developing brain to social signals of emotions. Nat. Rev. Neurosci. 2009;10(1):37–47. doi: 10.1038/nrn2554.

- Lloyd-Fox S., Blasi A., Elwell C.E. Illuminating the developing brain: the past, present and future of functional near infrared spectroscopy. Neurosci. Biobehav. Rev. 2010;34(3):269–284. doi: 10.1016/j.neubiorev.2009.07.008.

- Meeren H.K., van Heijnsbergen C.C., De Gelder B. Rapid perceptual integration of facial expression and emotional body language. Proc. Natl. Acad. Sci. U.S.A. 2005;102(45):16518–16523. doi: 10.1073/pnas.0507650102.

- Missana M., Rajhans P., Atkinson A.P., Grossmann T. Discrimination of fearful and happy body postures in 8-month-old infants: an event-related potential study. Front. Hum. Neurosci. 2014;8:531. doi: 10.3389/fnhum.2014.00531.

- Missana M., Atkinson A.P., Grossmann T. Tuning the developing brain to emotional body expressions. Dev. Sci. 2015;18(2):243–253. doi: 10.1111/desc.12209.

- Nelson C.A., De Haan M. Neural correlates of infants’ visual responsiveness to facial expressions of emotion. Dev. Psychobiol. 1996;29(7):577–595. doi: 10.1002/(SICI)1098-2302(199611)29:7<577::AID-DEV3>3.0.CO;2-R.

- Nelson C.A., Morse P.A., Leavitt L.A. Recognition of facial expressions by seven-month-old infants. Child Dev. 1979;50(4):1239–1242.

- Nelson C.A., Thomas K., de Haan M.M., Wewerka S.S. Delayed recognition memory in infants and adults as revealed by event-related potentials. Int. J. Psychophysiol. 1998;29(2):145–165. doi: 10.1016/s0167-8760(98)00014-2.

- Peelen M.V., Atkinson A.P., Vuilleumier P. Supramodal representations of perceived emotions in the human brain. J. Neurosci. 2010;30(30):10127–10134. doi: 10.1523/JNEUROSCI.2161-10.2010.

- Peltola M.J., Leppänen J.M., Mäki S., Hietanen J.K. Emergence of enhanced attention to fearful faces between 5 and 7 months of age. Soc. Cogn. Affect. Neurosci. 2009;4(2):134–142. doi: 10.1093/scan/nsn046.

- Peykarjou S., Pauen S., Hoehl S. How do 9-month-old infants categorize human and ape faces? A rapid repetition ERP study. Psychophysiology. 2014;51(9):866–878. doi: 10.1111/psyp.12238.

- Pourtois G., Grandjean D., Sander D., Vuilleumier P. Electrophysiological correlates of rapid spatial orienting towards fearful faces. Cereb. Cortex. 2004;14(6):619–633. doi: 10.1093/cercor/bhh023.

- Stupica B., Cassidy J. Priming as a way of understanding children's mental representations of the social world. Dev. Rev. 2014;34(1):77–91.

- Van den Stock J., van de Riet W.A., Righart R., De Gelder B. Neural correlates of perceiving emotional faces and bodies in developmental prosopagnosia: an event-related fMRI-study. PLoS ONE. 2008;3(9):e3195. doi: 10.1371/journal.pone.0003195.

- Vogel M., Monesson A., Scott L.S. Building biases in infancy: the influence of race on face and voice emotion matching. Dev. Sci. 2012;15(3):359–372. doi: 10.1111/j.1467-7687.2012.01138.x.

- Webb S.J., Long J.D., Nelson C.A. A longitudinal investigation of visual event-related potentials in the first year of life. Dev. Sci. 2005;8(6):605–616. doi: 10.1111/j.1467-7687.2005.00452.x.