Abstract

Background Despite progress in patient safety, misidentification errors in radiology, such as ordering imaging on the wrong anatomic side, persist. If undetected, these errors can harm patients in multiple ways and can also produce erroneous electronic health record (EHR) data.

Objectives We describe the pilot testing of a quality improvement methodology using electronic trigger tools and preimaging checklists to detect “wrong-side” misidentification errors in radiology examination ordering, and to measure staff adherence to departmental policy in error remediation.

Methods We retrospectively applied and compared two methods for the detection of “wrong-side” misidentification errors among a cohort of all imaging studies ordered during a 1-year period (June 1, 2015–May 31, 2016) at our tertiary care hospital. Our methods included: (1) manual review of internal quality improvement spreadsheet records arising from the prospective performance of preimaging safety checklists, and (2) automated error detection via the development and validation of an electronic trigger tool which identified discrepant side indications within EHR imaging orders.

Results Our combined methods detected misidentification errors in 6.5 per 1,000 study cohort imaging orders. Our trigger tool retrospectively identified substantially more misidentification errors than were detected prospectively during preimaging checklist performance, with a high positive predictive value (PPV: 88.4%; 95% confidence interval: 85.4–91.4). However, more than two-thirds of errors detected during checklist performance were not detected by the trigger tool, and checklist-detected errors were more often appropriately resolved (odds ratio: 3.6; 95% confidence interval: 2.0–6.9; p < 0.00001).

Conclusion Our trigger tool enabled the detection of substantially more imaging ordering misidentification errors than preimaging safety checklists alone, with a high PPV. However, many errors were detected only by the preimaging checklist, suggesting that additional trigger tools may need to be developed and used in conjunction with checklist-based methods to ensure patient safety.

Keywords: radiology, domain, clinical care, error management and prevention, process improvement, system improvement, checklist, trigger tool, safety

Background and Significance

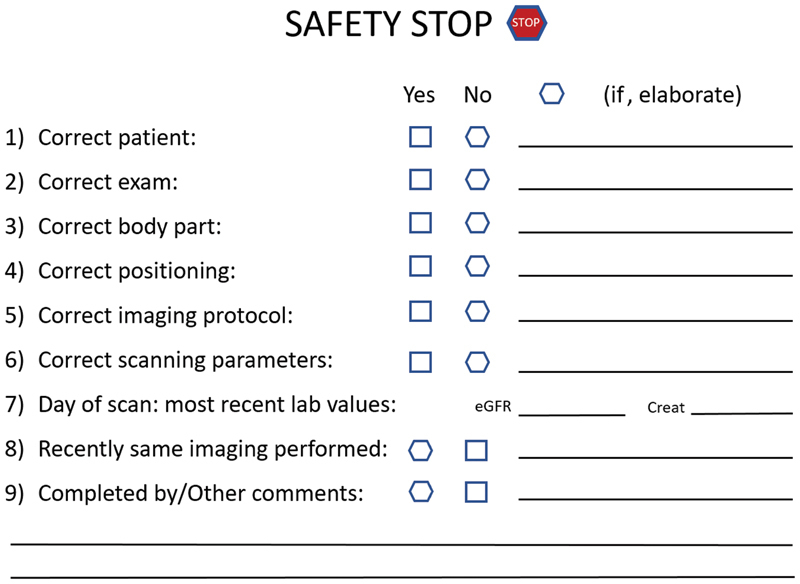

Medical errors relating to “wrong anatomic site/side” imaging remain a problem in radiology practice. 1 2 3 4 5 If undetected, these errors can harm patients directly, through exposure to unnecessary radiation, or indirectly, for example through misdiagnosis or delayed diagnosis and treatment. 1 6 These errors can also decrease the efficiency of radiology departmental workflow, 3 7 with associated potential cost increases and a commensurate decrease in the availability of imaging resources. The Joint Commission guideline “Universal Protocol for Preventing Wrong Site, Wrong Procedure, Wrong Person Surgery” describes a preprocedural verification process aimed at preventing such misidentification errors. 8 Given the potentially severe health consequences to the patient, 9 many studies recommend adapting similar preprocedural verification processes to nonsurgical settings such as radiology. 1 2 4 6 9 10 11 In June 2015, our radiology department implemented a quality improvement effort that included a preprocedural verification process called the “Safety-STOP.” Modeled after the Universal Protocol, the Safety-STOP required radiology staff to complete a manual checklist to identify multiple potential ordering errors, notably including misidentification errors, at key phases of the imaging workflow ( Fig. 1 ). Completion of the Safety-STOP process, and of the relevant portions of the associated checklist form, was mandated primarily for the technologist at the patient imaging encounter, but also for radiology staff during preencounter examination scheduling, registration, and protocolling.

Fig. 1.

Safety-STOP checklist form to be completed by radiology staff.

Potential “wrong anatomic side” misidentification errors revealed during the Safety-STOP warrant correction. At our institution, electronic health record (EHR)-based imaging orders contain an anatomic side modifier with one of three structured values (LEFT/RIGHT/NONE), and a clinical history field with free text entered by the ordering provider (e.g., “left knee pain”). Anatomic side discrepancies between the modifier and clinical history field are expected to be resolved via direct communication with the ordering provider before imaging can proceed. If the modifier is erroneous, staff are expected to correct the modifier in the EHR order requisition before proceeding. If the history field is erroneous, staff must instruct the ordering provider to cancel and reorder the examination with the correct history field information before proceeding. Inappropriate corrective actions may compromise EHR data integrity even when the correct body part is imaged. For each potential problem or discrepancy revealed during the Safety-STOP, such as a misidentification error, staff are expected to record details of the discrepancy and subsequent corrective actions on the checklist form, and to enter these details along with a descriptive error categorization (e.g., “wrong side”) into a secured electronic spreadsheet for ongoing quality improvement purposes. Only a single discrepancy or descriptive error categorization is required, but there is no limit to the number of entries that can be recorded for any given incident. During the initial checklist implementation year, departmental supervisors audited data entry on a biweekly basis to provide feedback and reinforce staff training on correct checklist performance.

Preprocedural verification processes are important but insufficient safeguards, as they may not identify errors occurring “upstream” or “downstream” from the time-out. 9 12 13 14 For instance, a preprocedural time-out may not identify an order requesting imaging of the wrong side of a noncommunicative or impaired patient if the available information is consistently incorrect within the requisition and/or available clinical notes (“upstream error”). Similarly, if the wrong laterality marker is used during the imaging procedure after the time-out, the resulting radiology dictation may misreport the exam as pertaining to the contralateral side (“downstream error”). Error-prevention efforts should therefore emphasize identifying and addressing latent contributory conditions to prevent adverse events. 14 15 In the above examples, such efforts might include a standardized physician-to-physician handoff before imaging or intervention in noncommunicative patients, or standardized steps for applying laterality markers during the time-out itself, though the appropriate interventions would depend on the nature of the latent conditions. “Trigger tools” can facilitate the detection of such safety risks by using specialized algorithms to identify characteristic occurrences, prompts, or flags within the EHR that suggest medical error. 16 17 18 19 20 21 22 23 24 25 26 27 These tools have been used to detect misidentification errors such as “wrong patient” clinical notes, 24 “wrong patient” treatment orders, 16 25 or the performance of “wrong patient” procedures, 28 but their utility in detecting “wrong-side” ordering misidentification errors has not been demonstrated.

Objectives

We developed and validated a trigger tool-based methodology to detect discrepancies in EHR imaging orders suggestive of “wrong anatomic side” misidentification error. Our goal was to use this tool in conjunction with the Safety-STOP checklist to enable more comprehensive assessment of the incidence of “wrong-side” ordering misidentification errors within our radiology practice. This information could also provide important insight into departmental staff performance in error detection and remediation practices. While initially deployed retrospectively, our trigger tool could ultimately be deployed in prospective fashion to facilitate ongoing error-reduction efforts. This quality improvement study received exemption from the University of Wisconsin Health Sciences Institutional Review Board.

Methods

Preprocedural Checklist Analysis

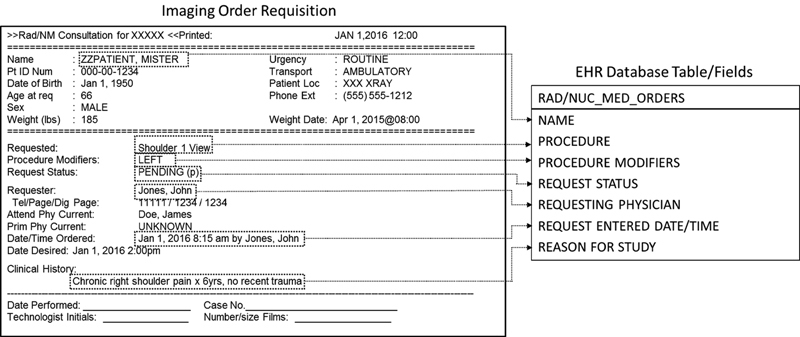

We reviewed Safety-STOP quality improvement records of all reported instances of “wrong anatomic side” misidentification for imaging orders placed during a 1-year period (June 1, 2015–May 31, 2016) at our tertiary care Department of Veterans Affairs hospital, in collaboration with local healthcare informatics specialists, to: (1) quantify the incidence of “wrong-side” imaging ordering detected during checklist performance, (2) categorize each error based on how the anatomic side was specified in the associated requisition, (3) establish a lexicon of the most common terminology and abbreviations for anatomic side specification used by providers in examination ordering, and (4) identify the EHR database table locations where the relevant imaging order record data reside ( Fig. 2 ). This analysis was aimed at facilitating the development of electronic trigger tools that would automate misidentification error detection and enable investigation of the subsequent corrective actions taken.

Fig. 2.

EHR field mapping of order requisition data (all patient data are fictional). EHR, electronic health records.

Data Extraction

Building upon the checklist analysis, we developed an EHR trigger tool to detect potential instances of “wrong anatomic side” misidentification among radiology exam orders. The tool was designed to detect order records containing either discrepant specifications of the anatomic side to be imaged (e.g., “left” vs. “right”) between the side modifier and clinical history field, or order records for unilateral exams for which no side was specified. In the interest of focusing on the types of errors which pose higher risk of committed wrong-side patient imaging, we conservatively excluded consideration of bilateral examination orders where the ordering intent was clear, as risks of wrong-side imaging in such instances would be very low ( Table 1 ).
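The flagging logic described above can be sketched as follows. This is a minimal Python illustration, not the authors' SQL routine: the regular-expression lexicon contains only the example terms listed in Table 1, the function name `flag_order` and the modifier strings `"LEFT"`, `"RIGHT"`, and `"NONE"` are assumptions, and the caller is assumed to have already restricted input to examination types that require a side modifier.

```python
import re
from typing import Optional

# Hypothetical lexicon limited to the example terms in Table 1. Full words are
# matched case-insensitively; abbreviations only as uppercase standalone tokens,
# to reduce false matches inside ordinary words.
SIDE_PATTERNS = {
    "LEFT": [re.compile(r"\bleft\b", re.IGNORECASE), re.compile(r"\bLT\b"), re.compile(r"\bL\b")],
    "RIGHT": [re.compile(r"\bright\b", re.IGNORECASE), re.compile(r"\bRT\b"), re.compile(r"\bR\b")],
}
BILATERAL_PATTERNS = [
    re.compile(r"\bbilateral\b", re.IGNORECASE),
    re.compile(r"\bBIL\b"),
    re.compile(r"\bB/L\b"),
]
OPPOSITE = {"LEFT": "RIGHT", "RIGHT": "LEFT"}


def sides_mentioned(history: str) -> set:
    """Return the set of sides ("LEFT"/"RIGHT") named in the clinical history text."""
    return {side for side, patterns in SIDE_PATTERNS.items()
            if any(p.search(history) for p in patterns)}


def flag_order(modifier: str, history: str) -> Optional[str]:
    """Classify one side-requiring order as 'discrepant', 'missing', or None (not flagged)."""
    # Exclusion: history text indicating clear bilateral intent (Table 1).
    if any(p.search(history) for p in BILATERAL_PATTERNS):
        return None
    mentioned = sides_mentioned(history)
    if modifier in OPPOSITE:
        # Modifier names one side while the history names the contralateral side.
        return "discrepant" if OPPOSITE[modifier] in mentioned else None
    # Modifier is NONE: flag only if the history names no side either.
    return "missing" if not mentioned else None


print(flag_order("LEFT", "Right knee pain after fall"))   # discrepant
print(flag_order("NONE", "Knee pain, rule out effusion"))  # missing
print(flag_order("RIGHT", "B/L knee pain"))                # None
```

One consequence of simple term matching is that a history naming both sides (e.g., "left knee pain, prior right knee replacement") is flagged as discrepant for a LEFT modifier; the chart-review validation step is what separates such cases from true errors.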

Table 1. Trigger algorithm development criteria.

| Criteria | Description | |
|---|---|---|
| Inclusion criteria | | |
| Examination type | Imaging order which requires a “side” modifier (e.g., knee radiograph, shoulder MRI, etc.) | |
| Demographic | All patients with imaging orders placed within the local radiology department during the pilot year | |
| Ordering data discrepancy | Modifier: | Clinical history (text “contains”): |
| | Left | “Right”, “ RT ”, “ R. ”, etc. |
| | Right | “Left”, “ LT ”, “ L ”, etc. |
| | None | No anatomic side indicated |
| Exclusion criteria | | |
| Bilateral examination orders | Presence of two orders of the same examination type (e.g., knee radiograph, shoulder MRI, etc.) with differing side indicators, placed within 15 minutes of each other by the same provider, and ultimately completed. a | |
| | OR | |
| | Clinical history (text “contains”): “bilateral”, “ BIL”, “B/L”, etc. | |

Abbreviation: MRI, magnetic resonance imaging.

a Internal quality improvement work suggests that these data characteristics most closely reflect provider ordering behavior associated with an initial intent to order bilateral examinations. By contrast, provider self-correction (retract and reorder) would involve order cancellation, and the ordering time of the follow-up order for an erroneous order would differ by more than 15 minutes.
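The bilateral-pair exclusion in Table 1 could be implemented along these lines. This Python sketch is illustrative only: the `Order` record and its field names are hypothetical, the 15-minute window is treated as inclusive, and it additionally assumes both orders must belong to the same patient, which Table 1 implies but does not state.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta
from itertools import combinations

WINDOW = timedelta(minutes=15)  # Table 1; treating "within" as inclusive is an assumption


@dataclass(frozen=True)
class Order:
    """Hypothetical minimal order record."""
    order_id: str
    patient_id: str   # assumption: pairs must share a patient
    provider_id: str
    exam_type: str
    side: str         # "LEFT", "RIGHT", or "NONE"
    ordered_at: datetime
    completed: bool


def bilateral_pair_ids(orders):
    """Return IDs of completed orders that form an apparent bilateral pair."""
    groups = defaultdict(list)
    for o in orders:
        if o.completed:
            groups[(o.patient_id, o.provider_id, o.exam_type)].append(o)
    excluded = set()
    for group in groups.values():
        for a, b in combinations(group, 2):
            if a.side != b.side and abs(a.ordered_at - b.ordered_at) <= WINDOW:
                excluded.update({a.order_id, b.order_id})
    return excluded


t0 = datetime(2015, 6, 1, 9, 0)
orders = [
    Order("A", "p1", "dr1", "knee radiograph", "LEFT", t0, True),
    Order("B", "p1", "dr1", "knee radiograph", "RIGHT", t0 + timedelta(minutes=10), True),
    Order("C", "p2", "dr1", "knee radiograph", "RIGHT", t0 + timedelta(minutes=40), True),
    Order("D", "p2", "dr1", "knee radiograph", "LEFT", t0 + timedelta(minutes=70), True),
]
print(sorted(bilateral_pair_ids(orders)))  # ['A', 'B']
```

Because only completed orders are paired, a cancelled-and-reordered exam (the self-correction pattern described in the footnote) is not mistaken for a bilateral pair, and grouping before pairing keeps the comparison cheap across a full year of orders.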

Our trigger tool was coded as a structured query language routine and applied retrospectively to a secured departmental electronic database containing extracted EHR data for all imaging order records placed in our department during the 1-year study period.
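A comparable SQL filter is sketched below, run against an in-memory SQLite database from Python so the example is self-contained. The one-table schema, its column names, and the sample rows are hypothetical stand-ins for the departmental EHR extract. The query uses simple space-delimited `LIKE` matching on a subset of the Table 1 terms and, like any substring rule, will miss misspellings and punctuation-adjacent tokens (e.g., "rt,").

```python
import sqlite3

# Hypothetical one-table stand-in for the extracted order records; assumes all
# rows are examination types that require a side modifier.
SCHEMA = """
CREATE TABLE orders (
    order_id TEXT PRIMARY KEY,
    side     TEXT NOT NULL CHECK (side IN ('LEFT', 'RIGHT', 'NONE')),
    history  TEXT NOT NULL
);
"""

# History text is upper-cased and padded with spaces so that whole tokens such as
# ' RT ' also match at the start or end of the field.
TRIGGER_SQL = """
SELECT order_id,
       CASE WHEN side = 'NONE' THEN 'missing' ELSE 'discrepant' END AS flag
FROM (SELECT order_id, side, ' ' || UPPER(history) || ' ' AS h FROM orders) AS o
WHERE h NOT LIKE '%BILATERAL%' AND h NOT LIKE '% BIL %' AND h NOT LIKE '%B/L%'
  AND (   (side = 'LEFT'  AND (h LIKE '% RIGHT %' OR h LIKE '% RT %'))
       OR (side = 'RIGHT' AND (h LIKE '% LEFT %'  OR h LIKE '% LT %'))
       OR (side = 'NONE'  AND h NOT LIKE '% LEFT %' AND h NOT LIKE '% RIGHT %'
                          AND h NOT LIKE '% LT %'   AND h NOT LIKE '% RT %'))
ORDER BY order_id;
"""


def run_trigger(rows):
    """Load (order_id, side, history) rows into SQLite and return flagged orders."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.executescript(SCHEMA)
        conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
        return conn.execute(TRIGGER_SQL).fetchall()
    finally:
        conn.close()


sample = [
    ("1", "LEFT", "Right knee pain"),
    ("2", "RIGHT", "rt shoulder pain"),
    ("3", "NONE", "knee pain"),
    ("4", "LEFT", "B/L knee pain, worse on right"),
    ("5", "RIGHT", "Pain in LT hip"),
]
print(run_trigger(sample))  # [('1', 'discrepant'), ('3', 'missing'), ('5', 'discrepant')]
```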

Data Analysis and Validation

Each potential ordering error revealed by the trigger tool underwent retrospective EHR chart review validation by the departmental lead in quality and safety (SES), the radiology supervisor of diagnostic radiology technicians (KK), and an experienced imaging technologist (SK). Study personnel performed this review jointly in an effort to minimize interrater disagreement and the risk that any individual reviewer would overlook relevant information in the EHR. 25 Orders were deemed misidentification errors, that is, true positives, if any of the following scenarios applied: (1) a side modifier was specified, the history field indicated the contralateral side, clinical notes indicated unilateral symptoms, and no simultaneous or follow-up contralateral side imaging order was placed; (2) no side was specified in the order, clinical notes indicated unilateral symptoms only, and only a single exam was ordered; (3) the ordering provider acknowledged the error in the EHR; (4) the error was confirmed with providers prospectively, as documented in the Safety-STOP spreadsheet. Orders not satisfying any of these scenarios were conservatively deemed false positives. Potential discrepancies among reviewers were to be resolved via consensus discussion, with the study design dictating that the absence of unanimous agreement conservatively warranted a false-positive designation; however, no such disagreement occurred. Each true-positive order was further investigated via EHR and Safety-STOP spreadsheet review to identify: (1) the staff member involved in the imaging encounter; (2) where in the imaging workflow the error was detected (“before” or “during” the patient imaging encounter); (3) the nature of the error in the Safety-STOP spreadsheet; and (4) the appropriateness of the error correction method. 
Corrective action was deemed “appropriate” if the erroneous or nonspecified modifier was edited by the technologist, or the erroneous history field data was corrected via examination cancellation and reordering. Failure to complete these corrective actions was deemed “inappropriate.” Finally, all completed imaging examinations were reviewed to determine which anatomic side was imaged to categorize instances as ordering near misses versus committed imaging errors.
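The validation and appropriateness rules above are Boolean in structure, so they can be written down compactly once reviewers have recorded their chart-review judgments. The sketch below is a hypothetical encoding: the `ReviewFindings` fields and the error-source labels `"modifier"`, `"missing"`, and `"history"` are names chosen for illustration, and each field still requires human review of the EHR.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewFindings:
    """Hypothetical record of reviewer judgments for one flagged order."""
    side_modifier_specified: bool
    history_names_contralateral_side: bool
    no_side_specified_in_order: bool
    notes_indicate_unilateral_symptoms: bool
    contralateral_order_placed: bool      # simultaneous or follow-up order
    single_exam_ordered: bool
    provider_acknowledged_error: bool     # documented in the EHR
    confirmed_in_safety_stop: bool        # documented in the Safety-STOP spreadsheet


def is_true_positive(f: ReviewFindings) -> bool:
    """Apply the four true-positive scenarios; anything else is a false positive."""
    scenario_1 = (f.side_modifier_specified and f.history_names_contralateral_side
                  and f.notes_indicate_unilateral_symptoms and not f.contralateral_order_placed)
    scenario_2 = (f.no_side_specified_in_order and f.notes_indicate_unilateral_symptoms
                  and f.single_exam_ordered)
    return scenario_1 or scenario_2 or f.provider_acknowledged_error or f.confirmed_in_safety_stop


def consensus_label(reviewer_votes) -> bool:
    """Only a unanimous, non-empty true-positive vote counts; otherwise false positive."""
    return bool(reviewer_votes) and all(reviewer_votes)


def correction_is_appropriate(error_source: str, modifier_edited: bool,
                              cancelled_and_reordered: bool) -> bool:
    """Erroneous or missing modifiers need a modifier edit; erroneous histories need a new order."""
    if error_source in ("modifier", "missing"):
        return modifier_edited
    if error_source == "history":
        return cancelled_and_reordered
    raise ValueError(f"unknown error source: {error_source!r}")
```

Note that editing the modifier does not count as an appropriate correction for an erroneous history field, which mirrors the cancel-and-reorder policy described earlier.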

As in similarly designed prior studies, trigger tool performance was measured by calculating the positive predictive value (PPV). 21 26 27 29 Pearson's chi-squared testing was used to evaluate the effect of the type of error, the involved staff member, and the presence of a corresponding Safety-STOP entry on the appropriateness of subsequent corrective actions, as well as differences in Safety-STOP error categorization based on whether the entry was made before or during the imaging encounter. Statistical analysis was performed with R v3.2.0. 30
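The text does not state how the PPV confidence interval was computed. Assuming a normal-approximation (Wald) interval for a proportion, a short Python check using the counts reported in the Results (389 validated true positives and 51 false positives among 440 flagged orders) reproduces the reported 88.4% (95% CI: 85.4–91.4); the function name is illustrative.

```python
import math


def ppv_with_wald_ci(true_pos: int, false_pos: int, z: float = 1.96):
    """PPV = TP / (TP + FP) with a normal-approximation (Wald) confidence interval."""
    n = true_pos + false_pos
    ppv = true_pos / n
    half_width = z * math.sqrt(ppv * (1 - ppv) / n)
    return ppv, ppv - half_width, ppv + half_width


ppv, lower, upper = ppv_with_wald_ci(389, 51)
print(f"PPV {ppv:.1%} (95% CI {lower:.1%}-{upper:.1%})")  # PPV 88.4% (95% CI 85.4%-91.4%)
```

Wilson or exact (Clopper-Pearson) intervals would give slightly different bounds; the Wald form is used here only because it matches the published figures.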

Results

A total of 76,468 imaging exams were ordered at our facility during the pilot year, attributable to 16,529 unique patients. Initial Safety-STOP spreadsheet analysis revealed entries for 11.1% (8,518/76,468) of these orders, with each entry representing a potential problem in the ordering workflow requiring further investigation. A total of 154 entries were prospectively categorized as “wrong-side” misidentification errors by the involved radiology staff, suggesting a 0.2% (154/76,468) detected “wrong-side” error rate. Of these, 42% (65/154) had discrepant or nonspecified side indicators in the associated requisition, which the trigger tool was designed to detect, but the majority contained nondiscrepant side indicators ( Table 2 ). Of note, 18 of these 65 requisitions contained incidental misspellings or atypical ordering template-related text in the history field, or had multiple cancelled companion orders in close temporal proximity, any of which would have made trigger tool detection problematic.

Table 2. Classification of errors by detection method.

| Error classification | Trigger tool validated errors, n (%) | Safety-STOP detected “wrong-side” errors, n (%) |
|---|---|---|
| Discrepant info (requisition clinical history and side modifier indicate opposite anatomic sides) | 88 (22.6) | 24 (15.6) |
| Missing info (no side specified anywhere on the requisition) | 301 (77.4) | 41 (26.6) |
| Nondiscrepant info (side modifier and requisition clinical history are not discrepant, but wrong side indicated) | 0 (0) a | 89 (57.8) |
| Total | 389 (100) | 154 (100) |

a Trigger tool design criteria necessarily preclude detection of these errors.

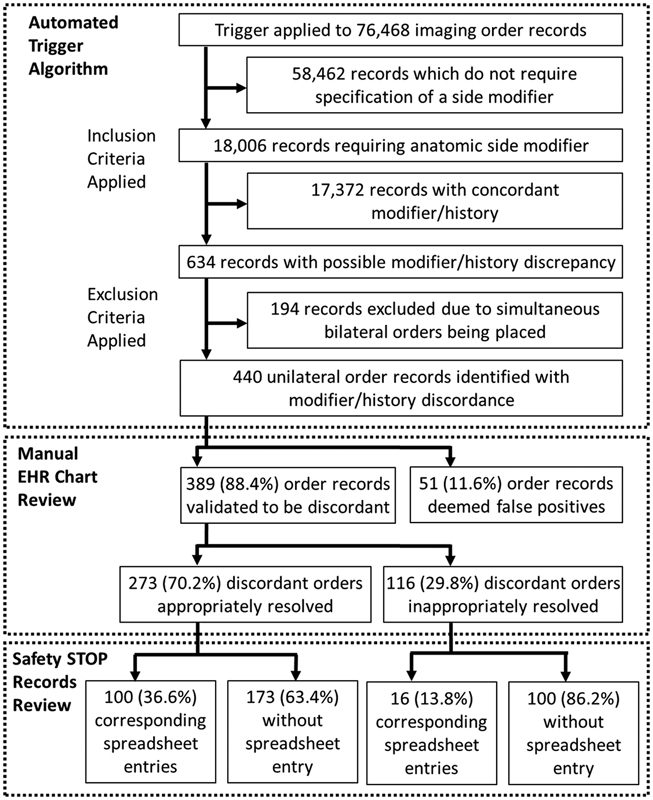

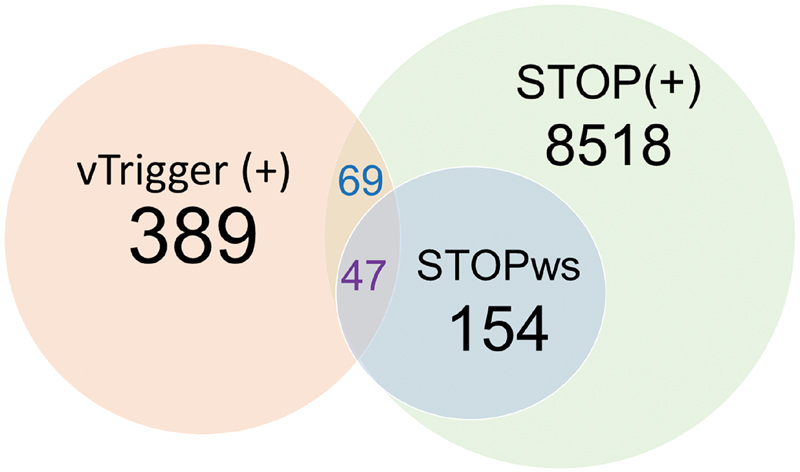

The trigger tool subsequently analyzed these 76,468 order records ( Fig. 3 ). Of the 440 order records that satisfied the inclusion and exclusion criteria and underwent chart-review validation, 389 were confirmed to have discrepant or missing anatomic side specifications and 51 were false positives (PPV: 88.4%; 95% confidence interval [CI]: 85.4–91.4), suggesting a 0.5% (389/76,468) detected “wrong-side” error rate ( Table 2 ). Correlating the results of both detection methods and accounting for overlap ( Fig. 4 ) reveals an overall detected “wrong-side” error rate of 0.65% (496/76,468), or 6.5 errors for every 1,000 orders placed. All of the reviewed imaging examinations were completed on the correct side, indicating that all detected ordering errors were near-miss events.
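The overall rate follows from inclusion-exclusion on the two detection methods. In this worked check, the overlap of 47 is taken from the trigger-detected orders whose Safety-STOP entries acknowledged a “wrong-side” error (reported in the following paragraphs); the variable names are illustrative.

```python
total_orders = 76_468
trigger_errors = 389     # validated trigger-tool detections
checklist_errors = 154   # Safety-STOP entries categorized as "wrong side"
overlap = 47             # detected by both methods

combined = trigger_errors + checklist_errors - overlap  # size of the union of both sets
per_1000 = 1000 * combined / total_orders
checklist_only = checklist_errors - overlap

print(combined, round(per_1000, 1))                                   # 496 6.5
print(checklist_only, round(checklist_only / checklist_errors, 3))    # 107 0.695
```

The same arithmetic shows that 107 of the 154 checklist-detected errors (69.5%) were not captured by the trigger tool, which is the basis for the "more than two-thirds" figure.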

Fig. 3.

Flow chart demonstrating trigger tool operation and EHR/Safety-STOP records review process. EHR, electronic health records.

Fig. 4.

Venn diagram demonstrating the distribution of all cases of “wrong-side” misidentification identified in the study cohort by the trigger tool and checklist detection processes. (vTrigger (+) = validated cases of “wrong-side” ordering misidentification errors identified by the trigger tool; STOP (+) = Safety-STOP spreadsheet entries for all instances of potential ordering error during the study year; STOPws = prospectively validated instances of ordering misidentification descriptively categorized as “wrong side” in the Safety-STOP spreadsheet).

Errors detected by the trigger tool were more commonly found to be nonspecified anatomic side (77.4%, 301/389) or incorrect (17.7%, 69/389) side modifiers rather than erroneous clinical histories (4.9%, 19/389). Appropriate resolution steps were employed for 70.2% (273/389) of the erroneous orders revealed by the trigger tool, but the remaining 29.8% (116/389) were not appropriately corrected and/or documented per policy ( Fig. 3 ). Corrective actions were more appropriate when a simple modifier change was required ( p < 0.0001), as erroneous and nonspecified modifiers were appropriately corrected 84.1% (58/69) and 69.8% (210/301) of the time respectively, whereas only 26.3% (5/19) were appropriately corrected when placement of a new order was required.

Of the discrepant orders detected by the trigger tool, 29.8% (116/389) had a corresponding entry in the Safety-STOP spreadsheet ( Fig. 3 ), though only 12.1% (47/389) were acknowledged as “wrong-side” misidentification errors, with the remaining 17.7% (69/389) reporting unrelated errors ( Fig. 4 ). Discrepant orders with a corresponding Safety-STOP spreadsheet entry were more often appropriately resolved (86.2%; 100/116) than those without (63.4%; 173/273), regardless of the error category or required corrective steps (odds ratio [OR]: 3.6; 95% CI: 2.0–6.9; p < 0.00001). The spreadsheet entry notes for these orders ( Table 3 ) were more likely to acknowledge the anatomic side discrepancy when generated during the patient imaging encounter (51.9%; 28/54) than when generated before the imaging encounter (30.6%; 19/62), when patient input would not be immediately available (OR: 2.4; 95% CI: 1.1–5.6; p = 0.02). A total of 49 individual support staff processed at least one discrepant order (mean: 7.9, median: 5, min: 1, max: 59), and staff member identity had a significant effect on the appropriateness of corrective action ( p = 0.0005).
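The odds ratios and p values above can be checked from the underlying 2 × 2 counts. This sketch computes the sample odds ratio and an uncorrected Pearson chi-squared statistic with 1 degree of freedom using only the standard library (for 1 df, the upper-tail probability equals erfc(√(χ²/2))). It does not attempt to reproduce the reported confidence intervals, whose method the text does not specify, and the function name is illustrative.

```python
import math


def odds_ratio_and_pearson(a: int, b: int, c: int, d: int):
    """2 x 2 table [[a, b], [c, d]]: rows = groups, columns = outcome yes / no.

    Returns the sample odds ratio (a*d)/(b*c), the Pearson chi-squared statistic
    without continuity correction, and its 1-df upper-tail p value.
    """
    n = a + b + c + d
    odds_ratio = (a * d) / (b * c)
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return odds_ratio, chi2, p_value


# Appropriate vs. inappropriate resolution, with vs. without a Safety-STOP entry
print(odds_ratio_and_pearson(100, 16, 173, 100))
# "Wrong-side" acknowledged vs. not, entry made at vs. before the imaging encounter
print(odds_ratio_and_pearson(28, 26, 19, 43))
```

With these counts the sketch gives odds ratios of about 3.6 and 2.4 and p values consistent with the reported p < 0.00001 and p = 0.02. R's default `chisq.test` applies Yates' continuity correction to 2 × 2 tables and would give somewhat larger p values.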

Table 3. Safety-STOP order resolution reason.

| Reason for correction | At imaging encounter, n (% of total) | Prior to imaging encounter, n (% of total) |
|---|---|---|
| “Wrong-side ordered” | 28 (24.1) | 19 (16.4) |
| “Duplicate order placed” | 3 (2.6) | 9 (7.8) |
| “Provider canceled due to self-reported nonspecified error” | 0 (0) | 6 (5.2) |
| “Provider canceled order without providing a reason” | 0 (0) | 13 (11.2) |
| “Imaging technique modified (unrelated to sidedness)” | 19 (16.4) | 4 (3.4) |
| Other reasons | 4 (3.4) | 11 (9.5) |
| Total | 54 (46.5) | 62 (53.5) |

Discussion

We demonstrate the utility of EHR triggers for the detection of imaging ordering misidentification errors. Our trigger tool identified substantially more validated “wrong-side” errors relating to modifier/history field discrepancy than were acknowledged during performance of the Safety-STOP checklist, suggesting that checklist-based methods alone are insufficient. However, the trigger tool failed to capture more than two-thirds of the “wrong-side” errors recorded in the checklist ( Fig. 4 ). The vast majority of these failures were due to the absence of discrepant information within the requisition ( Table 2 ), which made these errors effectively undetectable by our trigger methodology. Incidental misspellings, nonstandardized text entry, and disorganized or unpredictable ordering behavior posed additional challenges. These findings suggest that further refinement of our trigger tool algorithm, and the development of additional trigger tools, may be needed to maximize error detection rates.

While all detected misidentification errors were near misses (i.e., all patients received the correct exam), many were inappropriately resolved. Corrective actions were more often appropriate when a simple modifier correction was indicated, a process that radiology staff could complete quickly. When the history field was erroneous, staff may have had to significantly prolong the imaging encounter while waiting for the provider to place a new order. Corrective actions were less often appropriate in these cases, suggesting that staff may be employing workarounds, 31 ensuring only that the correct exam was performed without making the mandated order correction. These workarounds would need to be directly investigated and confirmed, but this performance difference represents a potential target for process improvement to facilitate staff compliance.

Many of the wrong-side errors captured by the algorithm were either not captured by the Safety-STOP ( Fig. 3 ) or were miscategorized by the responsible staff as another form of error ( Table 3 ). These findings raise questions about the reliability of staff adherence to departmental guidelines for checklist performance and spreadsheet completion, and again raise suspicion of potential workarounds. The observed miscategorization suggests either that these orders contained multiple errors, some of which were unrecognized or underreported, or that the spreadsheet entries were simply erroneous. Further, most miscategorized Safety-STOP entries were generated before the imaging encounter, when patient or provider input may not be readily available for clarification. These findings illustrate some fundamental weaknesses of exclusively checklist-based error detection methods, including vulnerability to “upstream” errors or order modifications, and may suggest areas for process improvement. Variability in individual staff performance is an important problem, and suggests that ongoing targeted feedback and compliance training may be necessary. It would be impractical to expect routine auditing alone to remediate these issues, as this would likely require staff to formally investigate each of the approximately 600 monthly STOP entries for accuracy relative to the EHR, or the more than 6,000 monthly orders placed in our department to capture otherwise undetected errors. Despite these limitations, the Safety-STOP process appears to benefit the detection and remediation of misidentification errors: many errors were detected only by the checklist process, and the odds of appropriate corrective action were approximately 3.6 times higher when the Safety-STOP process was faithfully followed. 
Ensuring adherence to the Safety-STOP process will likely be an ongoing challenge requiring continued training and the accrual of feedback toward process improvement.

Several limitations of our trigger tool methodology warrant discussion. Foremost, because trigger tool design was driven in part by analysis of near-miss events within our Safety-STOP records and relied on intraorder side discrepancies, our trigger methodology may not detect actual wrong-side imaging events; misidentification errors where the order modifier and clinical history field agree (i.e., both fields indicate the incorrect side); errors where unusual abbreviations or misspelled words are present; or errors where no anatomic side is specified in the history field. Our trigger tool implementation also restricted our investigation to cases with a higher likelihood of wrong-side error, conservatively excluding apparent bilateral exam orders. Pairs of bilateral order requisitions may still contain errors (e.g., a “RIGHT” modifier with a “left shoulder pain” history, and a “LEFT” modifier with a “right shoulder pain” history), and their latent conditions may warrant investigation in future efforts. The development of additional trigger tools would likely improve detection rates and may reduce the vulnerabilities of overreliance on checklist-based methods. Tools such as the “wrong patient retract-and-reorder” methodology, a National Quality Forum-endorsed patient safety performance measure, 16 32 could potentially be applied toward the detection of “wrong-side” ordering errors. Our methodology could also be improved through more robust EHR data mining techniques such as natural language processing, artificial intelligence, and machine learning. 20
If deployed prospectively, potentially as part of a clinical decision support (CDS) tool developed in the context of a robust sociotechnical model analysis, 33 34 these tools could facilitate further error reduction by providing point-of-care feedback and ordering training to providers, and could supply critical data for auditing and improving Safety-STOP checklist performance within the department. Additionally, our study design does not provide sufficient information to calculate the sensitivity, specificity, or negative predictive value of our trigger tool. Given the large number of annual imaging orders and the likely low error incidence, it would be infeasible to perform the exhaustive manual review required to identify enough true and false negative records for these calculations. Finally, this pilot study did not explore the latent conditions contributing to erroneous ordering or to suboptimal staff compliance with error remediation, but ongoing use of our methodology will enable prospective quality improvement efforts to identify and address these factors.

Conclusion

We demonstrate the utility of a combined approach toward the detection and remediation of radiology examination ordering “wrong-side” misidentification errors. We developed an EHR trigger tool capable of detecting substantially more of these errors than were detected with checklist-based methods alone, achieving a PPV of 88.4%. However, more than two-thirds of the errors detected by the checklist were not captured by the trigger tool, suggesting that preimaging checklists remain an important safeguard and that additional trigger tools may need to be developed to maximize error detection rates.

Clinical Relevance Statement

Patient misidentification errors, such as the ordering of medical imaging on the wrong anatomic side, remain a problem and may be underappreciated. We have shown that the use of trigger tools can reveal substantially more errors than would be detected by checklist-based methods alone, though preimaging checklists remain an important safeguard. Radiology departmental safety and quality improvement efforts would likely benefit from the development of additional trigger tools to further improve error detection rates.

Multiple Choice Questions

1. Examples of misidentification errors would include:

a. Inadvertently ordering a medication on the correct patient but with the wrong administration frequency.

b. Misdiagnosing a patient with the incorrect disease process due to misinterpretation of symptoms and clinical history.

c. Ordering a diagnostic imaging study on the wrong patient, or wrong anatomic site.

d. Committing a typographical error in a patient's EHR note.

Correct Answer: The correct answer is option c. The remaining options describe errors in which the patient identity and/or anatomic site are not in question.

2. Weaknesses of point-of-care checklist-based methods for the detection of ordering misidentification errors include:

a. Vulnerability to errors occurring “upstream” or “downstream” from the time of checklist performance.

b. Reliability of the checklist may be compromised by variability in staff performance and/or training.

c. Patients may not be able to provide complete answers to checklist verification questions.

d. All of the above.

Correct Answer: The correct answer is option d, as all of the options are weaknesses of checklist-based methods. Option a refers to situations where the error is committed before or after checklist performance, when systematic safeguards may not be in place to detect misidentification errors (e.g., the order is placed on the wrong patient because two patients have similar symptoms or clinical histories, but only one warrants the imaging study in question). Option b refers to situations where technologist or staff inexperience or workarounds may result in suboptimal checklist performance. Option c refers to checklist failure stemming from poor patient insight or impaired communication.

Funding Statement

Funding None.

Conflict of Interest No conflicts of interest to disclose. No specific funding support was provided toward the work reported in this manuscript. Nasia Safdar receives funding support from the VA Patient Safety Center, and from AHRQ R18 and R03 grants for unrelated work. Dr. Singh's research is funded by the Veterans Affairs (VA) Health Services Research and Development Service (HSR&D) (CRE-12–033) and the Presidential Early Career Award for Scientists and Engineers (USA 14–274), the Agency for Healthcare Research and Quality (R01HS022087 and R18HS017820), the VA National Center for Patient Safety, and the Houston VA HSR&D Center for Innovations in Quality, Effectiveness, and Safety (CIN 13–413).

Protection of Human and Animal Subjects

This quality improvement study was performed in compliance with the World Medical Association Declaration of Helsinki on Ethical Principles for Medical Research Involving Human Subjects, and received exemption from the University of Wisconsin Health Sciences Institutional Review Board.

References

- 1. Applying the universal protocol to improve patient safety in radiology. Pennsylvania Patient Safety Advisory. 2011;8(02):63–70.

- 2. Danaher L A, Howells J, Holmes P, Scally P. Is it possible to eliminate patient identification errors in medical imaging? J Am Coll Radiol. 2011;8(08):568–574. doi: 10.1016/j.jacr.2011.02.021.

- 3. Duman B D, Chyung S YY, Villachica S W, Winiecki D. Root causes of errant ordered radiology exams: results of a needs assessment. Perform Improv. 2011;50(01):17–24.

- 4. Field C. Adapting verification process to prevent wrong radiology events. Pennsylvania Patient Safety Advisory. 2018;15(03):1–13.

- 5. Schultz S R, Watson R E, Jr, Prescott S L et al. Patient safety event reporting in a large radiology department. AJR Am J Roentgenol. 2011;197(03):684–688. doi: 10.2214/AJR.11.6718.

- 6. Rubio E I, Hogan L. Time-out: it's radiology's turn--incidence of wrong-patient or wrong-study errors. AJR Am J Roentgenol. 2015;205(05):941–946. doi: 10.2214/AJR.15.14720.

- 7. Duman B, Martin P. Reducing errant ordered radiology exams. Radiol Manage. 2012;34(01):18–22, quiz 23–24.

- 8. Universal protocol for preventing wrong site, wrong procedure, wrong person surgery. Available at: http://www.jointcommission.org/NR/rdonlyres/E3C600EB-043B-4e86-B04E-CA4a89AD5433/0/universal_protocol.pdf. Accessed September 2007.

- 9. Stahel P F, Sabel A L, Victoroff M S et al. Wrong-site and wrong-patient procedures in the universal protocol era: analysis of a prospective database of physician self-reported occurrences. Arch Surg. 2010;145(10):978–984. doi: 10.1001/archsurg.2010.185.

- 10. Angle J F, Nemcek A A, Jr, Cohen A M et al. Quality improvement guidelines for preventing wrong site, wrong procedure, and wrong person errors: application of the joint commission “Universal Protocol for Preventing Wrong Site, Wrong Procedure, Wrong Person Surgery” to the practice of interventional radiology. J Vasc Interv Radiol. 2008;19(08):1145–1151. doi: 10.1016/j.jvir.2008.03.027.

- 11. Brook O R, O'Connell A M, Thornton E, Eisenberg R L, Mendiratta-Lala M, Kruskal J B. Quality initiatives: anatomy and pathophysiology of errors occurring in clinical radiology practice. Radiographics. 2010;30(05):1401–1410. doi: 10.1148/rg.305105013.

- 12. Aspden P; Institute of Medicine (U.S.) Committee on Data Standards for Patient Safety. Patient safety: achieving a new standard for care. Washington, D.C.: National Academies Press; 2004. PMID: 25009854.

- 13. Paull D E, Mazzia L M, Neily J et al. Errors upstream and downstream to the Universal Protocol associated with wrong surgery events in the Veterans Health Administration. Am J Surg. 2015;210(01):6–13. doi: 10.1016/j.amjsurg.2014.10.030.

- 14. Reason J. Human error: models and management. BMJ. 2000;320(7237):768–770.

- 15. Chassin M R, Loeb J M. High-reliability health care: getting there from here. Milbank Q. 2013;91(03):459–490. doi: 10.1111/1468-0009.12023.

- 16. Adelman J S, Kalkut G E, Schechter C B et al. Understanding and preventing wrong-patient electronic orders: a randomized controlled trial. J Am Med Inform Assoc. 2013;20(02):305–310. doi: 10.1136/amiajnl-2012-001055.

- 17. Classen D C, Resar R, Griffin F et al. ‘Global trigger tool’ shows that adverse events in hospitals may be ten times greater than previously measured. Health Aff (Millwood). 2011;30(04):581–589. doi: 10.1377/hlthaff.2011.0190.

- 18. Galanter W, Falck S, Burns M, Laragh M, Lambert B L. Indication-based prescribing prevents wrong-patient medication errors in computerized provider order entry (CPOE). J Am Med Inform Assoc. 2013;20(03):477–481. doi: 10.1136/amiajnl-2012-001555.

- 19. Murphy D R, Laxmisan A, Reis B A et al. Electronic health record-based triggers to detect potential delays in cancer diagnosis. BMJ Qual Saf. 2014;23(01):8–16. doi: 10.1136/bmjqs-2013-001874.

- 20. Murphy D R, Meyer A N, Sittig D F, Meeks D W, Thomas E J, Singh H. Application of electronic trigger tools to identify targets for improving diagnostic safety. BMJ Qual Saf. 2018.

- 21. Murphy D R, Thomas E J, Meyer A N, Singh H. Development and validation of electronic health record-based triggers to detect delays in follow-up of abnormal lung imaging findings. Radiology. 2015;277(01):81–87. doi: 10.1148/radiol.2015142530.

- 22. Resar R K, Rozich J D, Classen D. Methodology and rationale for the measurement of harm with trigger tools. Qual Saf Health Care. 2003;12(Suppl 2):ii39–ii45. doi: 10.1136/qhc.12.suppl_2.ii39.

- 23. Sharek P J. The emergence of the trigger tool as the premier measurement strategy for patient safety. AHRQ WebM&M. 2012;2012(05):120.

- 24. Wilcox A B, Chen Y H, Hripcsak G. Minimizing electronic health record patient-note mismatches. J Am Med Inform Assoc. 2011;18(04):511–514. doi: 10.1136/amiajnl-2010-000068.

- 25. Burlison J D, McDaniel R B, Baker D K et al. Using EHR data to detect prescribing errors in rapidly discontinued medication orders. Appl Clin Inform. 2018;9(01):82–88. doi: 10.1055/s-0037-1621703.

- 26. Kalenderian E, Obadan-Udoh E, Yansane A et al. Feasibility of electronic health record-based triggers in detecting dental adverse events. Appl Clin Inform. 2018;9(03):646–653. doi: 10.1055/s-0038-1668088.

- 27. Kane-Gill S L, MacLasco A M, Saul M I et al. Use of text searching for trigger words in medical records to identify adverse drug reactions within an intensive care unit discharge summary. Appl Clin Inform. 2016;7(03):660–671. doi: 10.4338/ACI-2016-03-RA-0031.

- 28. Rutberg H, Borgstedt-Risberg M, Gustafson P, Unbeck M. Adverse events in orthopedic care identified via the Global Trigger Tool in Sweden - implications on preventable prolonged hospitalizations. Patient Saf Surg. 2016;10(01):23. doi: 10.1186/s13037-016-0112-y.

- 29. Koppel R, Leonard C E, Localio A R, Cohen A, Auten R, Strom B L. Identifying and quantifying medication errors: evaluation of rapidly discontinued medication orders submitted to a computerized physician order entry system. J Am Med Inform Assoc. 2008;15(04):461–465. doi: 10.1197/jamia.M2549.

- 30. R Core Team. R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing; 2015. Available at: http://www.R-project.org/

- 31. van der Veen W, van den Bemt P MLA, Wouters H et al. Association between workarounds and medication administration errors in bar-code-assisted medication administration in hospitals. J Am Med Inform Assoc. 2018;25(04):385–392. doi: 10.1093/jamia/ocx077.

- 32. Patient Safety 2015: final technical report. National Quality Forum; Feb 12, 2016. Available at: https://www.qualityforum.org/Publications/2016/02/Patient_Safety_2015_Final_Report.aspx

- 33. Sittig D F, Singh H. A new sociotechnical model for studying health information technology in complex adaptive healthcare systems. Qual Saf Health Care. 2010;19(Suppl 3):i68–i74. doi: 10.1136/qshc.2010.042085.

- 34. Wang J, Liang H, Kang H, Gong Y. Understanding health information technology induced medication safety events by two conceptual frameworks. Appl Clin Inform. 2019;10(01):158–167. doi: 10.1055/s-0039-1678693.