Abstract

Hybrid kinetic and kinematic intracortical brain-computer interfaces (iBCIs) have the potential to restore functional grasping and object interaction capabilities in individuals with tetraplegia. This requires an understanding of how kinetic information is represented in neural activity, and how this representation is affected by non-motor parameters such as volitional state (VoS), namely, whether one observes, imagines, or attempts an action. To this end, this work investigates how motor cortical neural activity changes when three human participants with tetraplegia observe, imagine, and attempt to produce three discrete hand grasping forces with the dominant hand. We show that force representation follows the same VoS-related trends as previously shown for directional arm movements; namely, that attempted force production recruits more neural activity compared to observed or imagined force production. Additionally, VoS-modulated neural activity to a greater extent than grasping force. Neural representation of forces was lower than expected, possibly due to compromised somatosensory pathways in individuals with tetraplegia, which have been shown to influence motor cortical activity. Nevertheless, attempted forces (but not always observed or imagined forces) could be decoded significantly above chance, thereby potentially providing relevant information towards the development of a hybrid kinetic and kinematic iBCI.

Subject terms: Brain-machine interface, Neurology, Biomedical engineering

Introduction

Intracortical brain-computer interfaces (iBCIs) that command neuroprosthetics have the potential to restore lost or compromised function to individuals with tetraplegia. iBCIs typically detect neural activity from motor cortex, which encodes kinetic and kinematic information in rhesus macaques1–14. iBCIs that extract kinematic parameters have allowed individuals to command one- and two-dimensional computer cursors15–26, prosthetics27–29, and functional electrical stimulation of paralyzed muscles30,31. Additional work has characterized closed-loop kinetic control in nonhuman primates32–34 and open-loop force modulation in human participants35,36. These studies could potentially move iBCI technology towards restoring functional tasks requiring both kinetic and kinematic control.

Motor cortex can exhibit activity in the absence of motor output, such as during mental rehearsal or movement observation37,38. Furthermore, rather than representing fixed motor parameters, M1 may adapt to achieve the task at hand39. A body of work has investigated how motor cortex modulates to different volitional states, including passive observation, imagined action, and attempted or executed action, mostly in the context of kinematic outputs. For example, kinematic imagery produces similar neural activity patterns as movement execution – or attempted movement, in persons with tetraplegia – but more weakly and in a smaller subset of motor areas40–43. Furthermore, in individuals with tetraplegia, observed, imagined, and attempted arm reaches recruit shared neural populations, but nonetheless yield unique patterns of activity44. This supports the existence of a “core” network that modulates to all volitional states, and the recruitment of additional neural circuitry during the progression from passive observation to attempted movement44–48.

The effects of volitional state on non-kinematic representation, including that of grasping forces, remains uncertain. In able-bodied participants with implanted with sEEG electrodes, executed forces produce stronger neural signals than imagined forces49. This supports fMRI findings in able-bodied individuals and participants with spinal cord injury, who exhibited weaker, less widespread BOLD activations when imagining (vs. attempting) forces50. However, while this trend was readily apparent during a standard two-tailed analysis of all able-bodied subjects, it only appeared in the SCI group when within-subject comparisons were implemented.

To our knowledge, neural modulation to observed, imagined, and attempted forces has not been evaluated at the resolution of intracortical activity in humans. Furthermore, while open-loop force decoding was achieved in a single individual with tetraplegia36, the extent to which force is neurally represented in this patient population, or how volitional state may affect this representation, is unresolved. Therefore, the present study evaluates how volitional state (observe, imagine, and attempt) during kinetic behavior (grasp force production) influences neural activity in motor cortex. Specifically, we characterize the topography of the neural space representing force and volitional state in three individuals with tetraplegia, both at the level of single neural features extracted from multiunit intracortical activity, and at the level of the neural population. We show that 1) volitional state affects how neural activity modulates to force; namely, that attempted forces generate stronger cortical modulation than observed and imagined forces; 2) grasping forces are reliably decoded when attempted, but not always when observed or imagined, and 3) volitional state is represented to a greater degree than grasping forces in the motor cortex.

Results

Characterization of individual features

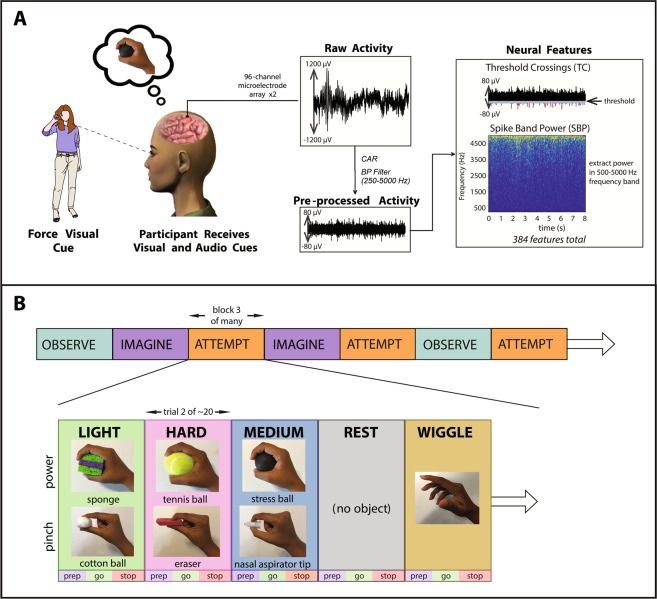

A major goal of this study was to determine whether force-related tuning was present at the level of single neural features extracted from multiunit intracortical activity (Fig. 1A), and to assess the extent to which volitional state affected this tuning. These neural features were extracted during a force-matching behavioral task, as illustrated in Fig. 1B, in which participants T8, T5, and T9 observed, imagined, and attempted producing three discrete forces using either a power or a pincer grasp. Supplementary Table S1 shows a full list of sessions and their associated parameters. In all participants, two neural features were extracted from each of the 192 recording electrodes implanted in motor cortex. Threshold crossing (TC) features, defined as the number of times the neural activity crossed a pre-defined, channel-specific noise threshold, are numbered from 1–192 according to the recording electrode from which they originate. Corresponding spike band power (SBP) features, defined as the root mean square of the signal in the spike band (250–5000 Hz) of each channel, are numbered from 193–384.

Figure 1.

(A) Experimental setup. Prior to the current study, participants were implanted with two 96-channel microelectrode arrays in motor cortex as a part of the BrainGate2 Pilot Clinical Trial. The microelectrode arrays recorded neural activity while participants completed a force task. Two neural features (threshold crossings, spike band powers) were extracted from each channel in the arrays. (B) Experimental session architecture. Each session consisted of 12–21 blocks, each of which contained ~20 trials (see Supplementary Table S1). In each trial, participants observed, imagined, or attempted to generate one of three cued forces with either a power grasp or a closed pincer grasp; to perform a wiggling finger movement; or to rest. Trial types were presented in a pseudorandom order. Each trial contained a preparatory (prep) phase, a go phase where forces were actively embodied, and a stop phase where neural activity was allowed to return to baseline. Participants were prompted with both audio and visual cues, in which a researcher squeezed an object associated with each force level. Visual cues were presented with a third person, frontal view, in which the researcher faced the participants while squeezing the objects. Lateral views are shown here for visual clarity, but were not displayed as such to the participants.

Figure 2 shows four representative TC and SBP features in participant T8 that are tuned to one of four marginalizations of parameters as evaluated with 2-way Welch-ANOVA: force only, volitional state only, neither force nor volitional state, both force and volitional state independently, and an interaction between force and volitional state. Supplementary Figs. S6 and S7 show corresponding feature traces for participants T5 and T9, respectively. Additionally, Supplementary Figs. S8–S11 contain rasters for exemplary single sorted units that are tuned to these factors in participant T8.

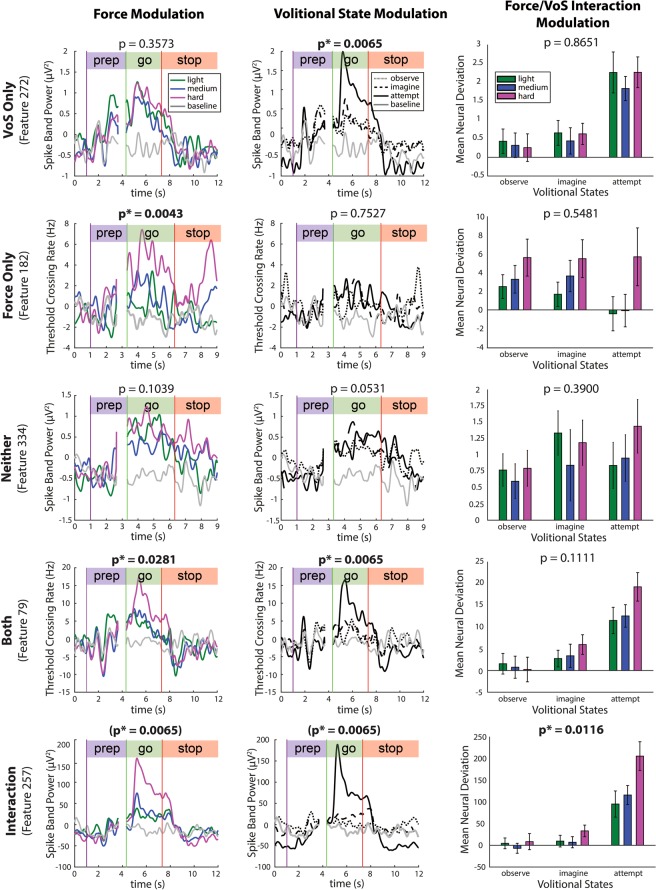

Figure 2.

Single features are tuned to force and volitional state. Rows: Average per-condition activity (PSTH) of five exemplary TC and SBP features tuned to force only (session 1), volitional state (VoS) only (session 4), neither factor (session 1), both factors (session 4), and an interaction between both factors (session 4) in participant T8 (2-way Welch ANOVA, corrected p < 0.05, Benjamini-Hochberg method). Neural activity was smoothed with a 100-ms Gaussian kernel prior to trial averaging to aid in visualization. Statistically significant p-values for force modulation, VoS modulation, and interaction are indicated with asterisks. Neural activity in Column 1 is averaged over all volitional states, such that observable differences in modulation are due to force alone (~50–90 trials per force level, depending on session number). Similarly, Column 2 depicts the activity of individual features during distinct volitional states, averaged over all force levels (~50–90 trials per volitional state, depending on session number). Simple main effects are represented graphically in Column 3 via normalized mean neural deviations from baseline activity during force trials within each of the three volitional states. Modulation depths were computed over the go phase of each trial, and then averaged within each force-VoS pair. Error bars indicate 95% confidence intervals.

For each neural feature, peristimulus time histograms (PSTHs) averaged within individual forces (Column 1) and within individual volitional states (Column 2) are illustrated in Fig. 2. SBP Feature 272 was tuned to force only (row 2); as such, it showed go-phase differentiation across multiple force levels that were statistically discriminable (corrected p < 0.05, 2-way Welch-ANOVA, Benjamini-Hochberg method). In contrast, TC feature 182 was tuned to volitional state only (row 1), and, therefore, did not exhibit force discriminability but were discriminable across multiple volitional states (corrected p < 0.05, 2-way Welch-ANOVA). TC Feature 79, which was tuned independently to both force and volitional state, was statistically discriminable for both parameters.

Column 3 of Fig. 2 graphically represents the simple main effects of the 2-way Welch-ANOVA analysis, as represented by mean go-phase neural deviations from baseline activity for each force level within each volitional state. In these panels, features that are tuned independently to force exhibit a similar pattern of modulation to light, medium, and hard grasping forces, regardless of the volitional state used in the trial. These include TC Feature 182 (row 2), which is tuned to force only, and TC Feature 79 (row 4), which is tuned independently to both force and volitional state. In contrast, SBP Feature 257 (row 5) exhibits a statistically significant interaction between force and volitional state (p < 0.05). For this feature, the mean neural deviations attributed to each force level are affected by the volitional states used to emulate them. This suggests that volitional state could affect the degree to which interacting features are tuned to force in participant T8.

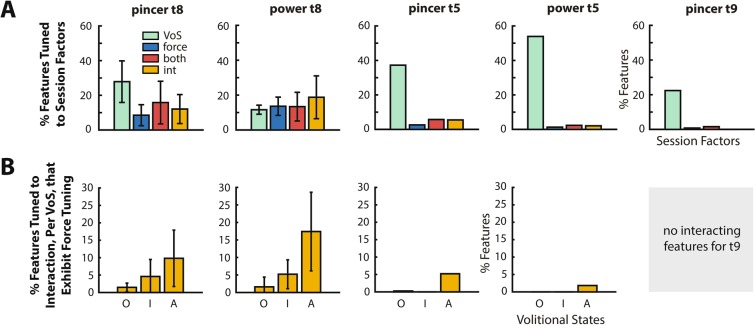

Figure 3 summarizes the tuning of all 384 TC and SBP features in each participant across both power and pincer grasps, during the active “go” phase of the behavioral task. In participant T8, these data are averaged over multiple experimental sessions. Figure 3A shows the average fraction of features in the neural population that were tuned to the four marginalizations of interest (corrected p < 0.05, 2-way Welch-ANOVA). In all participants, a substantial proportion of modulating features are tuned to volitional state only. A second population of features – denoted by blue and red bars – exhibit independent go-phase tuning to force (27.0% in T8 power, 24.3% in T8 pincer, 3.6% in T5 power, 8.3% in T5 pincer, and 2.3% in T9 pincer). A majority of these force-tuned features exhibit “mixed selectivity”, in that they are also independently tuned to volitional state. A final subset of features exhibits a statistically significant interaction (corrected p < 0.05, 2-way Welch-ANOVA) between force-related and volitional-state-related modulation. Figure 3B further subdivides these interacting features into those that are tuned to force within each individual volitional state (corrected p < 0.05, 1-way Welch ANOVA). Note that in participant T9, no interacting features were detected. In participants T8 and T5, a larger proportion of interacting features are recruited by attempted forces than by observed and imagined forces. Therefore, in these two participants, an overall greater proportion of neural features are tuned to force during the attempted volitional state.

Figure 3.

Overall Tuning of Neural Features. (A) Fraction of neural features significantly tuned (2-way Welch-ANOVA, corrected p < 0.05) to force and/or volitional state during the go phase of force production. For participant T8, results are averaged across multiple sessions. Error bars indicate standard deviation. (B) Fraction of total features exhibiting a statistically significant interaction (2-way Welch-ANOVA, p < 0.05) between force and volitional state, subdivided into force-tuned features during observation (O), imagination (I), and attempt (A). Force tuning within each volitional state was determined via one-way Welch-ANOVA (corrected p < 0.05). Error bars indicate standard deviation. Results show that features with an interaction between force and VoS are more likely to have force tuning in the attempt condition than in the observed or imagined conditions.

Neural population analyses

The next goal was to examine how volitional state affected force-related tuning at the neural population level. To this end, feature vectors representing individual trials were projected into low dimensional “similarity spaces” using continuous similarity (CSIM) analysis, adapted from Spike-Train Similarity (SSIM) analysis51, as described in the Methods. Briefly, CSIM makes pair-wise comparisons between patterns in neural features and then projects these pair-wise comparisons into a low-dimensional representation using t-distributed Stochastic Neighbor Embedding (t-SNE)52. In the low-dimensional representation, feature activity during an individual trial is represented as a single point, and the distance between points denotes the degree of similarity between trials.

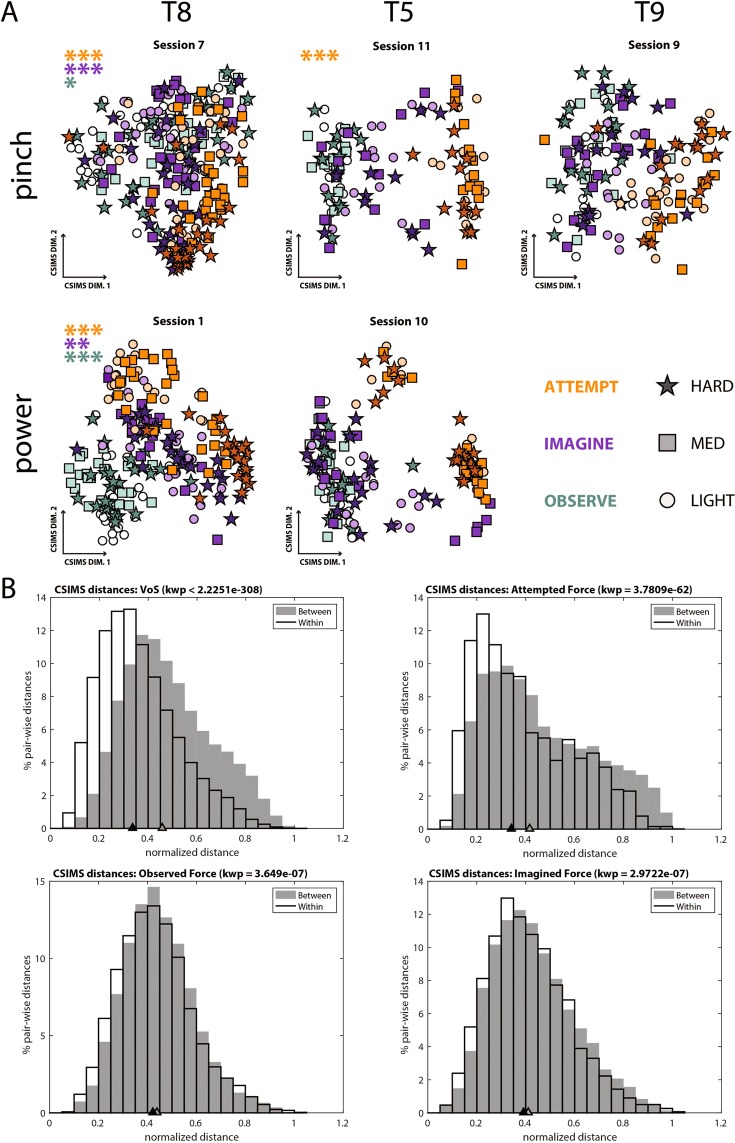

Figure 4A shows the relationship between single-trial activity patterns using two-dimensional CSIM plots for a representative session from each participant-grasp pair. In concordance with the single feature data, clustering of trials according to volitional state is evident in all participants during both power and pincer grasping (Kruskall-Wallis p < 0.00001). In contrast, force-related clustering of trials occurred only during sessions 1, 7, and 11 and was most apparent during attempted force production (Kruskal-Wallis, p < 0.0001). These trends are further illustrated in Fig. 4B, which shows the distribution of pairwise distances between trials within and between volitional states in the upper left panel, as well as within and between forces within each volitional state. The medians corresponding to the within-condition and between-condition distributions are farthest apart for volitional state, are closest together for observed and imagined forces, and are separated by an intermediate amount for attempted forces. This indicates that volitional state has a stronger influence on trial similarity than even attempted forces. In other words, trials cluster more readily according to volitional state than to force in the CSIM space.

Figure 4.

Feature population activity patterns. (A) Two-dimensional CSIM plots for a representative session from each participant-grasp pair. Each point represents the activity of the entire population of simultaneously-extracted features during a single trial. The distance between points indicates the degree of similarity between single trials. Clustering of similar symbols denotes similarity between trials with the same intended force level, while clustering of similar colors indicates similarity of trials within the same volitional state. In all panels, the distribution of distances for pairs of trials within the same VoS displayed a significantly smaller median than distances between trials in different VoS categories (Kruskall-Wallis p < 0.00001). Analyzing pair-wise distances for trials within and between force conditions produced different results across sessions. Asterisks in the top left corner denote sessions with significantly smaller within-force than across-force distances (*p < 0.05 **p < 0.01, and ***p < 0.0001) within each VoS, as indicated by the color of the asterisks. (B) Distribution of pairwise distances within and between categories for VoS (upper left) and and observed, imagined, and attempted force. Distances were normalized and pooled across all sessions shown. Triangles on the X-axis denote medians for each distribution. Overall, VoS had a stronger effect on trial similarity than even the attempted force condition.

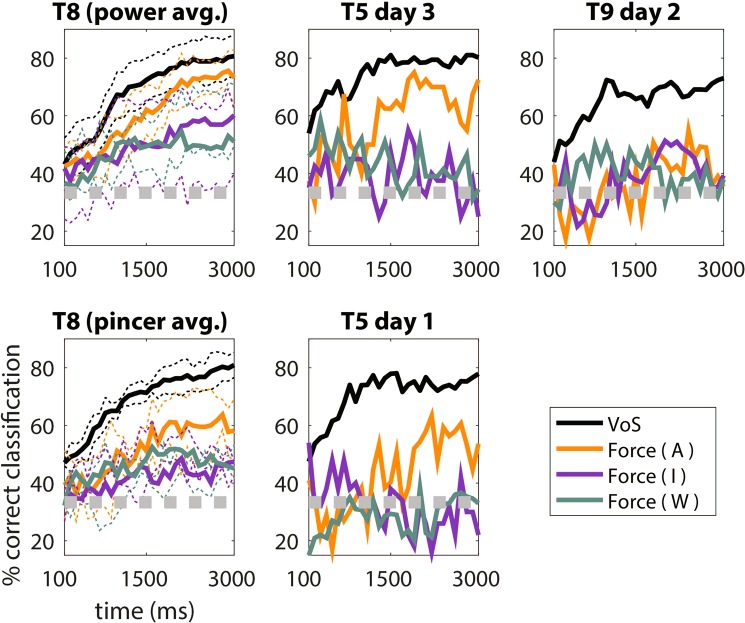

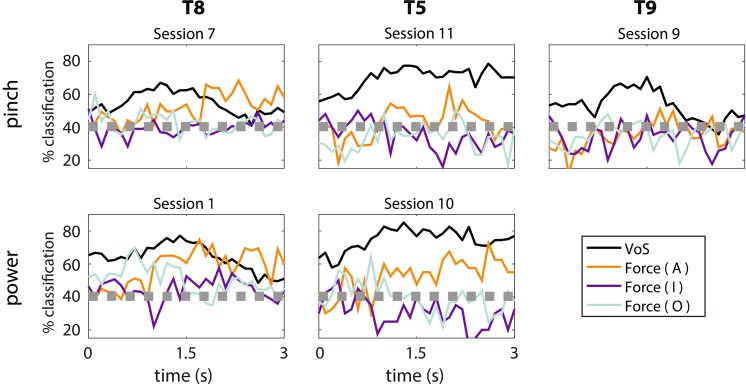

In order to further quantify the degree to which volitional state and force were separable at the population level, VoS and force classification was performed using an LDA classifier operating on 10-dimensional CSIM space projections. To determine the time resolution at which force- and VoS-related information could be decoded, the LDA was applied to 10-dimensional CSIM data of varying window lengths, beginning at the start of the go phase, as described in the Methods and as shown in Fig. 5. Further, to determine how force and volitional state were represented in the neural space as a function of time, time-dependent classification accuracies were determined by applying the LDA to CSIM data with a 400 millisecond sliding window stepped in 100 millisecond increments (Fig. 6). For both of these analyses, chance performance was estimated by applying the LDA to the neural data during 10,000 random shuffles of the trial labels. The mean of the empirical chance distribution (averaged across participants) was 33.9%, with 95% of samples between 26.6 and 40.3%. Both Figs. 5 and 6 show that, in agreement with the structure observed in the CSIM neural space in Fig. 4, VoS is decoded with greater accuracy than force for all participants and grasps. However, force-related information also appears to be present, and is decoded above chance throughout the go phase of attempted force production in all participants (but not always across other VoS conditions). In other words, volitional state appears to affect the degree to which force is represented at the level of the neural population.

Figure 5.

Feature ensemble CSIM force and volitional state decoding accuracies as a function of window length. Offline decoding accuracies were computed using an LDA classifier implemented within a 10-dimensional CSIM representation of the neural feature data, using 10-fold cross-validation. 10-dimensional CSIM data of window lengths ranging from 100 ms to 3000 ms were passed to the LDA, as described in the Methods. Each window began at the start of the go phase and ended at the time point indicated on the x-axis. For participant T8, each panel shows session-averaged decoding performances from each participant-grasp pair. The T8 power and pincer panels were averaged over 5 and 3 sessions, respectively. Standard deviations across T8 sessions are indicated by the dotted lines. Gray line indicates the upper boundary of the 95% empirical confidence interval of the chance distribution, estimated using 10,000 random shuffles of the trial labels.

Figure 6.

Time-dependent feature ensemble CSIM force and volitional state decoding accuracies. Offline decoding accuracies were computed using an LDA classifier implemented within a 10-dimensional CSIM representation of the neural feature data, using 10-fold cross-validation. The LDA was applied to a 400 ms sliding window, stepped in 100 ms increments. Each panel shows decoding performance for a representative session from each participant-grasp pair, where time = 0 indicates the start of the active “go” phase of the trial. The gray line indicates the upper boundary of the 95% empirical confidence interval of the chance distribution, estimated using 10,000 random shuffles of the trial labels.

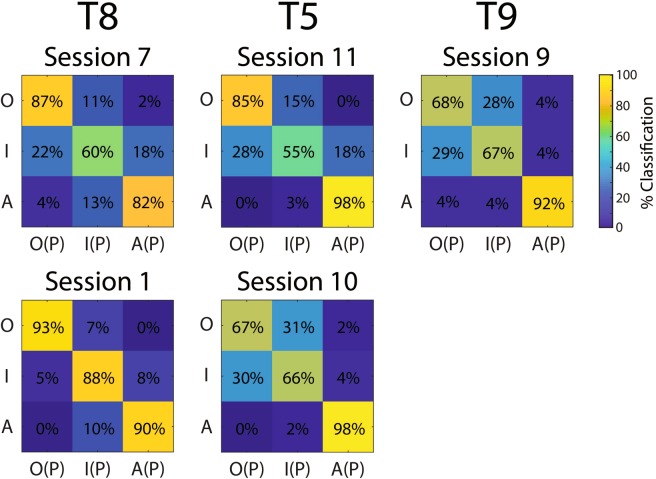

To elaborate the extent to which individual volitional states were represented in the neural space, classification accuracies of individual volitional states were computed, averaged over the go phase of the behavioral task, and then compiled into confusion matrices. Figure 7 shows that, while all volitional states are classified at above-chance accuracy, observation and attempt appear to be classified with greater accuracy than imagery in multiple datasets. In particular, attempt is classified with high accuracy across all sessions, while observation is classified with high accuracy during Sessions 1, 7, and 11. During Sessions 9 and 10, observation and imagery are classified with similar accuracy and tend to be confused with each other more often than they are confused with attempt. These results suggest that attempt (and possibly observation) drives volitional state representation to a greater degree than imagery.

Figure 7.

Feature ensemble volitional state go-phase confusion matrices. Offline decoding accuracies were computed using an LDA classifier implemented within a 10-dimensional CSIM representation of the neural feature data, using 10-fold cross-validation, over a 400 ms sliding window stepped down in 100 ms increments. Classification accuracies for individual volitional states were averaged over all time points within the go phase of the trial, resulting in a confusion matrices of true vs. predicted (P) observed (O), imagined (I), and attempted (A) volitional states. Note that the attempted volitional state is classified with a high accuracy rate across all sessions, while observed trials are classified with high accuracy during sessions 1, 7, and 11.

Discussion

Force representation persists in motor cortex after tetraplegia

The primary study goal was to characterize how volitional state affects neural representation of force in human motor cortex at the feature-ensemble level. We found that force-related activity persists in motor cortex, even after tetraplegia. This validates fMRI findings in individuals with spinal cord injury, who exhibited BOLD activity that modulated to imagined force50; as well as intracortical findings in an individual with tetraplegia36. The present work expands upon these studies by demonstrating force-related activity in multiple people with paralysis within single features (Figs. 2–3) and the neural population (Figs. 4–6).

The present work also shows quantitative, electrophysiological evidence that force modulation depends on volitional state. Briefly, attempted forces recruit more single features (Fig. 3), separate more distinctly in the CSIMS neural space (Fig. 4), and yield higher classification accuracies (Figs. 5–6) than observed and imagined forces. This validates previous kinematic40–44 and kinetic49,50 volitional state studies, in which attempted actions were more readily decoded and yielded stronger BOLD activations than other volitional states.

Volitional state modulates neural activity to a greater extent than force

The present work demonstrates that volitional state is represented robustly within the neural space in individuals with tetraplegia (Figs. 4–7). In particular, individual volitional states recruit an overlapping population of neural features (Fig. 3), but nonetheless result in unique activity patterns (Fig. 4). This supports the theory of overlapping yet distinct neural populations corresponding to each volitional state44. Additionally, while individual volitional states are represented at above-chance levels in all participants, the attempted state recruits the most neural features (Fig. 3) and is classified with high accuracy (Fig. 7), compared to the other volitional states. In contrast, the imagined volitional state was classified least accurately and was often confused with observation. These results appear to be consistent with previous intracortical volitional state investigations in humans, in which the attempted state was shown to recruit more single units and result in higher firing rates, while the imagined state recruited the fewest number of single units in a human participant with the highest neural volitional state representation44. Taken together, the current study results suggest that volitional states are represented similarly in multiple individuals with tetraplegia, regardless of whether these states were used to emulate kinematic vs. kinetic tasks.

Here, however, force representation was weaker than volitional state representation. Specifically, while many features modulated primarily to volitional state, few exhibited force tuning; and most of these were also tuned to volitional state (Fig. 3). Additionally, volitional state was discriminated more reliably from feature ensemble activity than even attempted forces (Figs. 4–6). This relative deficiency of force information, which contrasts with previous work in nonhuman primates1–3,12,53 and able-bodied humans35,49,50,54–57, could have potentially resulted from several factors. Here, we discuss the effects of two such factors, which include the visual cues used to prompt the force task and the effects of deafferentation in individuals with tetraplegia.

Effects of visual cues on neural activity

During most experimental sessions presented in this study, visual cues were used to prompt the observation, imagery, and attempt of forces. Briefly, a researcher lifted one of six graspable objects associated corresponding to light, medium, and hard power and pincer grasping forces to indicate the preparatory phase; squeezed the object during the active “go” phase; and ultimately released the object at the beginning of the stop phase of the trial. In response to these visual cues, participants were instructed to observe, imagine, or attempt emulating sufficient force to crush the object squeezed by the researcher.

As discussed, this visually-cued behavioral task yielded three separate yet overlapping populations of the neural features corresponding to each volitional state. Previous studies in non-human primates have identified a class of cells within motor cortex, termed “mirror neurons”, that exhibit changes in activity in response to the observed and executed volitional states58–61. The presence of these neurons may account for the degree of overlap between feature populations tuned to observation, imagery, and attempt. Additional studies have demonstrated that the activity of neurons responding to observed and executed actions depends on characteristics of how the motor actions are observed. For example, neural activity has been shown to depend on whether the observed action is static versus dynamic62, whether it occurs within versus beyond the participant’s reach63,64, and whether it is presented within an allocentric versus an egocentric reference frame65,66.

The visual cues in this study were static during the active “go” phase of the task, occurred within the participants’ extrapersonal space, and were presented using a third-person perspective. Since some of these characteristics are associated with weaker neural activity during action observation62,63,65, the visual cues included in this study may partially account for the weak force representation demonstrated here. However, many of these same non-human primate studies suggest that static, third-person, and extrapersonal visual cues can still elicit robust neural responses to observed actions in able-bodied individuals. For example, one study demonstrated force encoding during the reach phase of an observed motor task–before contact had been made with the object, such that the only force-related information available to the monkey was from previous knowledge of the object’s weight67. In light of these findings, it is likely that robust force-related representation can be elicited even in response to relatively “weak” visual stimuli, such as the static squeezing of graspable objects. Furthermore, while separate yet overlapping populations of neurons respond to first-person versus third-person views of motor acts, the number of neurons within each of these populations is relatively similar, albeit larger for first-person views65. Similar results were found for neurons recruited during the observation of motor acts within the peripersonal versus the extrapersonal space63. Therefore, while it is still possible that the current study’s visual cues contributed to the weak force representation observed here, this contribution was likely minor.

Effects of deafferentation in individuals with tetraplegia

An additional explanation for the deficiency in neural force representation could include the effects of deafferentation-induced cortical reorganization in tetraplegia68,69. Notably, these effects were absent in the previously cited non-human primate literature, which predicts robust representation of observed and executed motor actions in able-bodied individuals63,65,67. In individuals with spinal cord injury, force-related BOLD activity has been shown to overlap minimally with able-bodied force activity50, suggesting that altered cortical networks in tetraplegia could affect the extent of force representation in motor cortex. However, kinematic representation is well preserved in tetraplegia, as evidenced by high decoding accuracies in kinematic iBCIs16,18,19,23,27–30,44. Therefore, a discrepancy exists between kinematic and kinetic representation in tetraplegia.

This discrepancy may result from sensory feedback differences between the able-bodied and paralyzed states – and indeed, between kinematic and kinetic tasks attempted by individuals with tetraplegia. Without sensory feedback, motor performance is significantly compromised70–72; while reintroducing visual56,70, auditory57, tactile73, or proprioceptive74 feedback enhances motor-related neural modulation and BCI control. Moreover, in an individual with tetraplegia who had intact sensation, motor cortical neurons modulated to both passive joint manipulation and attempted arm actions75. Taken together, these studies suggest that multiple sensory inputs influence motor cortical activity, and that diminished sensory feedback compromises modulation to motor parameters.

Of all sensory modalities, tactile and proprioceptive feedback are the most relevant to fine control of grasping forces73,76,77. This is because kinetic tasks are largely controlled through feedback via somatosensory pathways78,79, which are profoundly altered in tetraplegia80. Participants T8, T5, and T9 were deafferented and received no feedback regarding their own force output. In contrast, kinematic studies in people with paralysis were performed in the context of directional movements that rely on visual feedback, which remains intact after tetraplegia. After complete sensorimotor deafferentation, parameters relying on visual pathways could be preserved in motor cortex, while those relying on somatosensory feedback could diminish. This could explain the relatively weak force tuning observed here, as opposed to the robust force modulation seen in able-bodied literature.

Limitations of open-loop task

In this study, participants observed, imagined, and attempted discrete grasping forces, with the understanding that they could not execute these forces. This open-loop investigation is a key first step towards elucidating neural force representation; however, it came with inherent limitations regarding how participants engaged in the task. For example, volitional states may be challenging for some individuals with paralysis to differentiate cognitively, though previous work suggests this is generally achievable44. Additionally, since participants received no sensory feedback, the study findings depended on each participant’s understanding of the task and their ability to kinesthetically observe/imagine/attempt discrete force levels despite their chronic tetraplegia. Furthermore, while the use of visual cues enhanced this understanding by increasing the applicability of the force task to participants, the various objects and the hand shapes used to squeeze them may have been reflected in the neural data along with forces and volitional states. Finally, while participants were instructed to vary only the amount of force needed to crush these objects, there was no way to measure their intended kinematic and kinetic outputs, which could have differed somewhat between force levels.

To address and mitigate these challenges, participants completed a Kinesthetic Force Imagery Questionnaire (KFIQ), adapted from the Kinesthetic and Visual Imagery Questionnaire81, in order to qualitatively assess the degree to which they emulated various force levels during each volitional state. In addition, participants reported in their own words how they differentiated between observed, imagined, and attempted forces. For example, participant T8 reported conceiving of a virtual arm performing the task during force imagery, whereas he emulated forces with his own arm during the attempt state. In all participants, KFIQ scores rose with volitional state in concordance with the neural data, as shown in Supplementary Fig. S5.

Furthermore, prior to completing the sessions presented in this main text, participant T8 completed a series of additional sessions, summarized in Supplementary Table S2, in which he was asked to observe, imagine, and attempt producing forces both when visual cues were included and when visual cues were omitted. Supplementary Figs. S1 and S2 show that the visual cues do introduce extraneous preparatory and stop phase neural activity within force trials, which is in agreement with previous non-human primate studies of neural activity occurring immediately before and after observed force tasks60,82. However, Supplementary Figs. S2 and S3 suggest that the visual cues do not influence neural activity during the active “go” phase of the trial.

Finally, participants performed kinematic control trials where they embodied finger wiggling movements. Supplementary Fig. S4 shows that force trials were more correlated with each other than with kinematic trials, especially during attempted forces. Additionally, the participants’ KFIQ scores, which reflect embodiment of forces as opposed to kinematics, were often lower for finger wiggling than for force (Supplementary Fig. S5). Though only a closed-loop study could guarantee that participants were emulating forces, the KFIQ scores, and their correlation with the neural data, indicate that this was likely the case.

Force representation differences across participants

Relative to other participants, T8’s motor cortical activity exhibited the most force-tuned features, the clearest separation between attempted forces in CSIM space, and the highest force decoding accuracy. In contrast, T5’s force modulation was more robust during pincer grasping (Session 11) than during power grasping (Session 10), and T9’s force modulation appeared to be relatively weak overall. The study’s open-loop nature partially explains these discrepancies: T5 had difficulty distinguishing light, medium, and hard forces during Session 10, but improved during Session 11. Additionally, differences in pathology may account for T9’s relatively weak force representation. While T8 and T5 were otherwise neurologically healthy participants with cervical spinal cord injury, T9 had advanced ALS, which may have comparatively affected his motor-related cortical activity. Future work with a greater number of participants, with different paralysis etiologies, will be needed to more comprehensively investigate these inter-participant differences.

Implications for iBCI development

A long-term motivation behind this study is to investigate the feasibility of incorporating force control into closed-loop iBCIs. This work validates the presence of kinetic information in motor cortex and shows that volitional state influences force modulation. Therefore, while decoding intended forces in real time appears feasible, future closed-loop force iBCIs will need to take non-motor parameters into account. For example, the data suggest that iBCI force decoders should be trained on neural activity generated during attempted (as opposed to imagined) force production.

More broadly, accurate force decoding will likely depend on increasing neural force representation in individuals with tetraplegia. Since closed-loop decoding performance often exceeds open-loop performance83–85, simply providing visual feedback about intended forces could improve neural force representation. In addition, somatosensory feedback has been shown to elicit motor cortical activity in a tetraplegic individual with intact sensation75. This supports the possibility of enhancing force-related neural representation with somatosensory feedback. In completely deafferented individuals, graded tactile percepts have been evoked on the hand via microstimulation of somatosensory cortex77,86, indicating that it is possible to provide closed-loop sensory feedback about intended grasping forces in this population. More work is needed to determine the extent to which sensory feedback affects motor cortical force representation, and how this representation translates into closed-loop iBCI force decoding performance. Nevertheless, this study shows promise for informing future closed loop iBCI design and provides further insight into to the complexity of motor cortex.

Methods

Study permissions and participants

Study procedures were approved by the US Food and Drug Administration (Investigational Device Exemption #G090003) and the Institutional Review Boards of University Hospitals Case Medical Center (protocol #04-12-17), Massachusetts General Hospital (2011P001036), the Providence VA Medical Center (2011-009), Brown University (0809992560), and Stanford University (protocol #20804). Participants were enrolled in the BrainGate2 Pilot Clinical Trial (ClinicalTrials.gov number NCT00912041). All research was performed in accordance with relevant guidelines and regulations. Informed consent, including consent to publish, was obtained from the participants prior to their enrollment in the study.

This study includes data from three participants with chronic tetraplegia. All participants were implanted in the hand and arm area on the precentral gyrus87 of dominant motor cortex with two, 96-channel microelectrode intracortical arrays (1.5 mm electrode length, Blackrock Microsystems, Salt Lake City, UT). Participant T8 was a 53-year-old right-handed male with C4-level AIS-A spinal cord injury that occurred 8 years prior to implant; T5 was a 63-year-old right-handed male with C4-level AIS-C spinal cord injury; and T9 was a 52-year-old right-handed male with tetraplegia due to ALS. Surgical details can be found at25,30,88 for each respective participant.

Neural recordings and feature extraction

In all participants, broad-band neural recordings were sampled at 30 kHz and pre-processed in Simulink using the xPC real-time operating system (The Mathworks Inc., Natick, MA, US; RRID: SCR_014744). From each pre-processed channel, extraction of two neural features from non-overlapping 20 millisecond time bins was performed in real time, as illustrated in Fig. 1A. These included 192 unsorted threshold crossing (TC) and 192 spike band power (SBP) features. Here, we evaluated the neural space by characterizing TC and SBP features as opposed to sorted single units, in order for our results to be directly applicable to iBCI systems, and because iBCI performance has been shown to be comparable when derived from thresholded data as opposed to spike-sorted data89–92. Supplementary Figs. S8–S11 illustrate a visual comparison of neural activity from threshold crossings and sorted single units extracted from identical channels. Unless otherwise stated, all offline analyses were performed using MATLAB software (The Mathworks Inc., Natick, MA, US; RRID: SCR_001622). Neural recordings, multiunit feature extraction methods, and single unit sorting methods for this study are described in more detail within the Supplementary Information.

Behavioral task

During data collection, all participants observed, imagined, and attempted producing three discrete forces (light < medium < hard) with the dominant hand, using either a power or a pincer grasp, over multiple data collection sessions. T8 completed eight sessions between trial days 511–963 relative to the date of his microelectrode array implant surgery; T9 completed one session on trial day 736; and T5 completed two sessions on trial days 365 and 396. Supplementary Table S1 lists all relevant sessions and their associated task parameters.

Each session consisted of multiple 4-minute data collection blocks, as illustrated in Fig. 1B. During each block, one volitional state (observe, imagine, or attempt) and one hand grasp type (power or pincer) were presented. Blocks were arranged in a pseudorandom order, in which volitional states were assigned randomly to each set of three blocks chronologically. This ensured an approximately equal number of blocks per volitional state, distributed evenly across the entire session. All blocks consisted of approximately 20 trials. Participants were encouraged to take an optional break between experimental blocks.

During each trial, participants were prompted to use kinesthetic imagery93,94 to internally emulate a) a “light” squeezing force, b) “medium” force, c) “hard” force, d) a finger wiggling movement, or e) rest, with the dominant hand. Finger wiggling trials served as a kinematic control for the force trials. Trials were presented in a pseudorandom order by repeatedly cycling through a complete, randomized set of five trial types until the end of the block.

Participants received audio cues indicating which force to produce (prep phase), when to produce it (go phase), and when to relax (stop phase). Participants also observed a researcher squeezing one of six graspable objects corresponding to light, medium, and hard forces (no object was squeezed during “rest” trials). These objects were grouped into two sets of three, corresponding to forces embodied using a power grasp (sponge = light, stress ball = medium, tennis ball = hard) and a pincer grasp (cotton ball = light, nasal aspirator tip = medium, eraser = hard), as shown in Fig. 1B. At the start of each trial, the researcher presented an object indicating the force level to be observed, imagined, or attempted. At the end of the prep phase (at the beginning of the go phase), the researcher squeezed the object. The prep phase lasted between 2.7 and 3.3 seconds. The variability in the prep phase time reduced confounding effects from anticipatory activity. The researcher squeezed the object during the go phase (3–5 seconds), and then released the object at the beginning of the stop phase (3–5 seconds). These visual cues were presented during the majority of experimental trials in a third-person, frontal perspective, in which the researcher faced the participants while squeezing the objects. Visual cues were presented during the majority of force trials; however, during two experimental sessions, visual cues were omitted during some trials to determine whether force-related information resulted from the presence of objects. Supplementary Figs. S1 and S2 visually compare neural feature activity recorded during these trials with feature activity recorded when both audio and visual cues were presented.

During force observation blocks, participants simply observed these actions without engaging in any activity. During force imagery and attempt blocks, participants imagined generating or attempted to generate sufficient force to “crush” the object squeezed by the researcher. For force imagery blocks, participants were instructed to mentally rehearse the forces needed to crush the various objects, without actually attempting to generate these forces.

To assess the extent to which participants embodied discrete forces during each volitional state, participants completed a Kinesthetic Force Imagery Questionnaire (KFIQ), adapted from the Kinesthetic and Visual Imagery Questionnaire (KVIQ)81 after each experimental block. Briefly, participants rated on a scale of 0–10 how vividly they kinesthetically emulated various force levels during light, medium, hard, and wiggling force trials, where 0 = no force embodiment, and 10 = embodiment as intense as able-bodied force execution. The KFIQ is described in more detail within the Supplementary Information.

Effects of audio vs. audiovisual cues

During all experimental sessions presented in Figs. 2–7, participants received audio cues indicating which forces to produce and when to embody these forces. Participants also received visual cues during most sessions, in which they observed a researcher squeezing objects corresponding to the forces they were asked to observe, imagine, or attempt to produce. These visual cues were presented in order to encourage participants to emulate light, medium, and hard forces within the framework of a real-world setting. The goal was to elicit appropriate neural responses to the force task by providing participants with meaningful visual information about which forces they were emulating. More specifically, the visual cues were implemented to make the physical concept of light, medium, and hard forces seem less abstract to the participants after several years of deafferentation.

To determine the extent of any resulting confounds of object size, hand grasp shape, and other factors within the neuronal responses to the force task, additional supplemental data was collected from participant T8, who was cued to observe, imagine, or attempt producing forces using either only audio cues or both audio and visual cues. These additional data, which are summarized in Supplementary Table S2, are solely presented within the Supplementary Information and do not appear in the main text. Within each supplemental session, correlation coefficients were computed between pairs of trial-averaged feature time courses that resulted from audio-only cues (a) and audiovisual cues (av). These correlations were computed within each volitional state, and within each phase of the task (prep, go, stop), in order to discern whether neuronal responses to the visual cues varied by volitional state or trial phase. For each individual session, this correlational analysis was performed on 120 neural features with the highest signal to noise ratio. Additional methods can be found in the Supplementary Materials.

Assessment of kinetic vs. kinematic activity

Prior to determining the effects of volitional state on neural force representation in the main dataset, we performed an initial analysis to determine whether force-related modulation of neural feature activity was distinct from modulation to kinematic activity. Briefly, correlation coefficients were computed between pairs of trial-averaged go-phase feature modulation time courses during force (light, medium, hard) and finger-wiggling trials, within each volitional state. For each participant, this analysis was performed on 120 neural features with the highest signal to noise ratio (SNR), as described and presented in the Supplementary Information.

Characterization of individual features

The first goal of this study was to characterize the tuning properties of individual features. Neural activity resulting from the three volitional states (observe, imagine, attempt) and the three discrete forces (light, medium, hard) resulted in nine conditions of interest. For each condition, each neural feature’s peristimulus time histogram (PSTH) was computed by averaging the neural activity over go-phase-aligned trials, which were temporally smoothed with a Gaussian kernel (100-ms standard deviation) to aid in visualization.

Additionally, the effect of discrete force levels and volitional states on the activity of individual features was assessed. This analysis consisted of feature pre-processing performed in MATLAB, as well as statistical analysis implemented within the WRS2 library in the R programming language95. Features were pre-processed to calculate each neural feature’s mean deviation from baseline during the go phase of each trial. For each feature, baseline activity was computed by averaging neural activity across multiple “rest” trials. Next, trial-averaged baseline activity was subtracted from neural activity that occurred during individual experimental trials. Finally, the resulting activity traces were averaged across multiple time points spanning each trial’s go phase, which yielded a collection of go-phase neural deviations from baseline.

In R, it was determined that the distribution of go-phase neural deviations passed normality tests (analysis of Q-Q plots and Shapiro-Wilk test, p < 0.05) but was heteroskedastic (Levene’s test, p < 0.05). Thus, a robust 2-way Welch ANOVA on the untrimmed means was implemented to evaluate the main effects (force and VoS), as well as their interaction (p < 0.05). Features that demonstrated a significant interaction between force and VoS were further separated into individual VoS conditions (observe, imagine, attempt). Within each VoS condition, a 1-way Welch ANOVA was implemented on go-phase neural deviations to find features that had significant force tuning (p < 0.05). All p-values were corrected for multiple comparisons using the Benjamini–Hochberg procedure96. Taken together, the results of the 1-way and 2-way Welch ANOVA constituted the tuning properties for each individual feature during a given experimental session.

Neural population analysis and decoding

In addition to characterizing individual features, this work sought to elucidate how much volitional state affects the neural representation of force at the population level. To this end, similarity analysis was used to examine the intrinsic relationship between the activity patterns observed across conditions51,97,98. This approach is based on pair-wise comparisons between neural activity patterns and makes no a priori assumptions about the structure of the data. T-distributed Stochastic Neighbor Embedding (t-SNE)52 was used to project these pair-wise measurements into a low-dimensional representation, which facilitated statistical analysis as well as data visualization while still capturing the relationship between individual trials. This dimensionality reduction technique is well suited to similarity analysis, because it explicitly attempts to preserve nearest-neighbor relationships in the data by minimizing KL-divergence between local neighborhood probability functions in the high and low dimensional spaces. That is, it explicitly attempts to preserve relationships between data points that are close together in the high-dimensional space, which makes it ideal for analyzing neural datasets that lie on or close to a nonlinear manifold. In the low-dimensional “similarity space”, a neural activity pattern is represented by a single point. The distance between points denotes the degree of similarity between the activity patterns they represent. Two identical activity patterns correspond to the same point in this space; the more different they are, the farther apart they lie in the similarity space projection.

We have previously used this method to compare spike trains using point process distance metrics51. In the present study, the algorithm was adjusted to operate on continuous (binned) data, in order to include threshold crossing (TC) and spike band power (SPB) extracted features. This modified technique is referred to as continuous similarity (CSIM) analysis. Similarity between feature vectors was evaluated as one minus the cosine of the angle between them. Note that this metric is not affected by the magnitude of the vectors; this property made the analysis more robust to non-stationarities in the data. All features were binned using non-overlapping 20-ms windows, and then smoothed with a 20-bin (400 ms) Gaussian kernel. Two-dimensional CSIM projections were derived over the entire duration of the go-phase. After CSIM was applied, all trials were labeled post-hoc with their associated forces and volitional states, in order to visualize how these experimental parameters affected the degree of similarity between neural activity patterns. Here, the goal was to visualize how force and volitional state affected the activity of the neural population, as represented by the low-dimensional CSIM manifold.

The force- and VoS-related information content within this manifold was quantified in two ways. First, force- and VoS-related clustering of trials was evaluated by comparing the distributions of CSIM distances within and between conditions using a Kruskal-Wallis test. The neural space was identified as representing volitional state when the median CSIM distances between trials with the same volitional states were smaller than the distances between trials with different volitional states. Similarly, when the median CSIM distances between trials with the same force were smaller than the distances between trials with different forces, the neural space was identified as representing force.

Second, CSIM was also used to generate discrete state force and volitional state classifiers. TC and SBP features were projected onto a 10 dimensional CSIM representation using data from a 400 ms sliding window, stepped in 100 ms increments. For each window, an LDA classifier was used to generate time-dependent force and volitional state decoding accuracies, using 10-fold cross-validation. Additionally, the effect of the analysis window size on classification accuracy was examined, starting with 100 ms following the go cue and increasing window length in 100 ms increments up to three seconds. For both sets of analyses, force decoding accuracies were determined within each volitional state, in order to determine how volitional state affected the degree to which force-related information was represented within the neural space. These decoding accuracies were compared to the empirical chance distribution of decoding accuracies, estimated using 10,000 random shuffles of trial labels.

Supplementary information

Acknowledgements

The authors would like to thank the BrainGate participants and their families for their contributions to this research. They also thank Glynis Schumacher for her artistic expertise during the creation of Fig. 1. Support provided by NICHD-NCMRR (R01HD077220, F30HD090890); NIDCD (R01DC009899, R01DC014034); NIH NINDS (UH2NS095548, 5U01NS098968-02); NIH Institutional Training Grants (5 TL1 TR 441-7, 5T32EB004314-15); Department of Veteran Affairs Office of Research and Development Rehabilitation R&D Service (N2864C, N9228C, A2295R, B6453R, A6779I, A2654R); Howard Hughes Medical Institute; MGH-Deane Institute; Katie Samson Foundation; the Executive Committee on Research (ECOR) of Massachusetts General Hospital; the Wu Tsai Neurosciences Institute; the ALS Association Milton Safenowitz Postdoctoral Fellowship; the A.P. Giannini Foundation Postdoctoral Fellowship; the Wu Tsai Neurosciences Institute Interdisciplinary Scholars Fellowship; the Larry and Pamela Garlick Foundation; the Simons Foundation Collaboration on the Global Brain (543045); and Samuel and Betsy Reeves. The contents do not represent the views of the Dept. of Veterans Affairs or the US Government. CAUTION: Investigational Device. Limited by Federal Law to investigational Use. Since the dates of their initial contributions to the study, B. Murphy, B. Franco, and J. Saab are no longer affiliated with Case Western and Brown Universities, respectively. Additionally, B. Walter has moved to the Department of Neurology & Neurosurgery at the Cleveland Clinic in Cleveland, OH.

Author contributions

A.R. conceived the study, performed the experiments, analyzed the data, wrote the main manuscript text, and compiled the Supplementary Information. C.V. designed and implemented the CSIM algorithm, assisted in writing the CSIM portions of the manuscript, and prepared Figs. 4–7. F.W. assisted in conceiving the study and performing data analysis. J.A. and D.C.C. implemented normality, heteroskedacity, and 2-way Welch-ANOVA analysis on the neural feature data and assisted in preparing Fig. 3. F.W., B.M., W.M., P.R., B.F., J.S., and S.S. assisted in data collection. K.S., J.H., L.H., R.K. and A.B.A. supervised data collection. J.M., J.S., B.W., and J.H. planned and performed the microelectrode array surgeries. R.K., A.B.A., and S.C. supervised and guided the project. L.H. is the sponsor-investigator of the BrainGate2 Pilot trial and oversaw the clinical trial, along with B.W., and J.H. at their respective sites. All authors reviewed and edited the manuscript.

Data availability

The data can be made available upon reasonable request by contacting the lead or senior authors.

Code availability

Source code for the CSIM algorithm is publicly available at https://donoghuelab.github.io/SSIMS-Analysis-Toolbox/.

Competing interests

The MGH Translational Research Center has a clinical research support agreement with Neuralink, Paradromics, and Synchron, for which L.H. provides consultative input. K.S. is a consultant to Neuralink Corp. and on the Scientific Advisory Boards of CTRL-Labs, Inc., MIND-X Inc., Inscopix Inc., and Heal, Inc. J.H. is a consultant for Neuralink, Proteus Biomedical and Boston Scientific, and serves on the Medical Advisory Board of Enspire DBS. This work was independent of and in no way influenced or supported by these commercial entities. All other authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

is available for this paper at 10.1038/s41598-020-58097-1.

References

- 1.Carmena JM, et al. Learning to control a brain-machine interface for reaching and grasping by primates. PLoS Biol. 2003;1:E42. doi: 10.1371/journal.pbio.0000042. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Evarts EV. Relation of pyramidal tract activity to force exerted during voluntary movement. J. Neurophysiol. 1968;31:14–27. doi: 10.1152/jn.1968.31.1.14. [DOI] [PubMed] [Google Scholar]

- 3.Evarts EV, Fromm C, Kroller J, Jennings VA. Motor Cortex control of finely graded forces. J. Neurophysiol. 1983;49:1199–1215. doi: 10.1152/jn.1983.49.5.1199. [DOI] [PubMed] [Google Scholar]

- 4.Fetz EE, Cheney PD. Postspike facilitation of forelimb muscle activity by primate corticomotoneuronal cells. J. Neurophysiol. 1980;44:751–772. doi: 10.1152/jn.1980.44.4.751. [DOI] [PubMed] [Google Scholar]

- 5.Georgopoulos AP, Kalaska JF, Caminiti R, Massey JT. On the relations between the direction of two-dimensional arm movements and cell discharge in primate motor cortex. J. Neurosci. 1982;2:1527–1537. doi: 10.1523/JNEUROSCI.02-11-01527.1982. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Georgopoulos AP, Schwartz AB, Kettner RE. Neuronal population coding of movement direction. Sci. 1986;233:1416–1419. doi: 10.1126/science.3749885. [DOI] [PubMed] [Google Scholar]

- 7.Humphrey DR. A chronically implantable multiple micro-electrode system with independent control of electrode positions. Electroencephalogr. Clin. Neurophysiol. 1970;29:616–620. doi: 10.1016/0013-4694(70)90105-7. [DOI] [PubMed] [Google Scholar]

- 8.Kakei S, Hoffman DS, Strick PL. Muscle and movement representations in the primary motor cortex. Sci. 1999;285:2136–2139. doi: 10.1126/science.285.5436.2136. [DOI] [PubMed] [Google Scholar]

- 9.Morrow MM, Miller LE. Prediction of muscle activity by populations of sequentially recorded primary motor cortex neurons. J. Neurophysiol. 2003;89:2279–2288. doi: 10.1152/jn.00632.2002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Oby, E. R. et al. In Statistical Signal Processing for Neuroscience and Neurotechnology 369-406 (Elsevier Inc., 2010).

- 11.Pohlmeyer EA, Solla SA, Perreault EJ, Miller LE. Prediction of upper limb muscle activity from motor cortical discharge during reaching. J. Neural Eng. 2007;4:369–379. doi: 10.1088/1741-2560/4/4/003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Sergio LE, Kalaska JF. Systematic changes in motor cortex cell activity with arm posture during directional isometric force generation. J. Neurophysiol. 2003;89:212–228. doi: 10.1152/jn.00016.2002. [DOI] [PubMed] [Google Scholar]

- 13.Vargas-Irwin CE, et al. Decoding complete reach and grasp actions from local primary motor cortex populations. J. Neurosci. 2010;30:9659–9669. doi: 10.1523/JNEUROSCI.5443-09.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Zhuang J, Truccolo W, Vargas-Irwin C, Donoghue JP. Decoding 3-D reach and grasp kinematics from high-frequency local field potentials in primate primary motor cortex. IEEE Trans. Biomed. Eng. 2010;57:1774–1784. doi: 10.1109/TBME.2010.2047015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Hermes D, et al. Functional MRI-based identification of brain areas involved in motor imagery for implantable brain-computer interfaces. J. Neural Eng. 2011;8:025007. doi: 10.1088/1741-2560/8/2/025007. [DOI] [PubMed] [Google Scholar]

- 16.Hochberg LR, et al. Neuronal ensemble control of prosthetic devices by a human with tetraplegia. Nat. 2006;442:164–171. doi: 10.1038/nature04970. [DOI] [PubMed] [Google Scholar]

- 17.Jarosiewicz B, et al. Virtual typing by people with tetraplegia using a self-calibrating intracortical brain-computer interface. Sci. Transl. Med. 2015;7:313ra179. doi: 10.1126/scitranslmed.aac7328. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Kim SP, Simeral JD, Hochberg LR, Donoghue JP, Black MJ. Neural control of computer cursor velocity by decoding motor cortical spiking activity in humans with tetraplegia. J. Neural Eng. 2008;5:455–476. doi: 10.1088/1741-2560/5/4/010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Kim SP, et al. Point-and-click cursor control with an intracortical neural interface system by humans with tetraplegia. IEEE Trans. Neural Syst. Rehabil. Eng. 2011;19:193–203. doi: 10.1109/TNSRE.2011.2107750. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Kubler A, et al. Patients with ALS can use sensorimotor rhythms to operate a brain-computer interface. Neurol. 2005;64:1775–1777. doi: 10.1212/01.WNL.0000158616.43002.6D. [DOI] [PubMed] [Google Scholar]

- 21.Leuthardt EC, Schalk G, Wolpaw JR, Ojemann JG, Moran DW. A brain-computer interface using electrocorticographic signals in humans. J. Neural Eng. 2004;1:63–71. doi: 10.1088/1741-2560/1/2/001. [DOI] [PubMed] [Google Scholar]

- 22.Schalk G, et al. Two-dimensional movement control using electrocorticographic signals in humans. J. Neural Eng. 2008;5:75–84. doi: 10.1088/1741-2560/5/1/008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Simeral JD, Kim SP, Black MJ, Donoghue JP, Hochberg LR. Neural control of cursor trajectory and click by a human with tetraplegia 1000 days after implant of an intracortical microelectrode array. J. Neural Eng. 2011;8:025027. doi: 10.1088/1741-2560/8/2/025027. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Wolpaw JR, Birbaumer N, McFarland DJ, Pfurtscheller G, Vaughan TM. Brain-computer interfaces for communication and control. Clin. Neurophysiol. 2002;113:767–791. doi: 10.1016/S1388-2457(02)00057-3. [DOI] [PubMed] [Google Scholar]

- 25.Gilja V, et al. Clinical translation of a high-performance neural prosthesis. Nat. Med. 2015;21:1142–1145. doi: 10.1038/nm.3953. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Pandarinath, C. et al. High performance communication by people with paralysis using an intracortical brain-computer interface. Elife, 6, 10.7554/eLife.18554 (2017). [DOI] [PMC free article] [PubMed]

- 27.Collinger JL, et al. High-performance neuroprosthetic control by an individual with tetraplegia. Lancet. 2013;381:557–564. doi: 10.1016/S0140-6736(12)61816-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Hochberg LR, et al. Reach and grasp by people with tetraplegia using a neurally controlled robotic arm. Nat. 2012;485:372–375. doi: 10.1038/nature11076. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Wodlinger B, et al. Ten-dimensional anthropomorphic arm control in a human brain-machine interface: difficulties, solutions, and limitations. J. Neural Eng. 2015;12:016011. doi: 10.1088/1741-2560/12/1/016011. [DOI] [PubMed] [Google Scholar]

- 30.Ajiboye A Bolu, Willett Francis R, Young Daniel R, Memberg William D, Murphy Brian A, Miller Jonathan P, Walter Benjamin L, Sweet Jennifer A, Hoyen Harry A, Keith Michael W, Peckham P Hunter, Simeral John D, Donoghue John P, Hochberg Leigh R, Kirsch Robert F. Restoration of reaching and grasping movements through brain-controlled muscle stimulation in a person with tetraplegia: a proof-of-concept demonstration. The Lancet. 2017;389(10081):1821–1830. doi: 10.1016/S0140-6736(17)30601-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Bouton CE, et al. Restoring cortical control of functional movement in a human with quadriplegia. Nat. 2016;533:247–250. doi: 10.1038/nature17435. [DOI] [PubMed] [Google Scholar]

- 32.Ethier C, Oby ER, Bauman MJ, Miller LE. Restoration of grasp following paralysis through brain-controlled stimulation of muscles. Nat. 2012;485:368–371. doi: 10.1038/nature10987. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Moritz CT, Perlmutter SI, Fetz EE. Direct control of paralysed muscles by cortical neurons. Nat. 2008;456:639–642. doi: 10.1038/nature07418. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Pohlmeyer EA, et al. Toward the restoration of hand use to a paralyzed monkey: brain-controlled functional electrical stimulation of forearm muscles. PLoS One. 2009;4:e5924. doi: 10.1371/journal.pone.0005924. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Flint RD, et al. Extracting kinetic information from human motor cortical signals. Neuroimage. 2014;101:695–703. doi: 10.1016/j.neuroimage.2014.07.049. [DOI] [PubMed] [Google Scholar]

- 36.Downey JE, et al. Implicit Grasp Force Representation in Human Motor Cortical Recordings. Front. Neurosci. 2018;12:801. doi: 10.3389/fnins.2018.00801. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Hatsopoulos NG, Suminski AJ. Sensing with the motor cortex. Neuron. 2011;72:477–487. doi: 10.1016/j.neuron.2011.10.020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Sanes JN, Donoghue JP. Plasticity and primary motor cortex. Annu. Rev. Neurosci. 2000;23:393–415. doi: 10.1146/annurev.neuro.23.1.393. [DOI] [PubMed] [Google Scholar]

- 39.Kalaska JF. From intention to action: motor cortex and the control of reaching movements. Adv. Exp. Med. Biol. 2009;629:139–178. doi: 10.1007/978-0-387-77064-2_8. [DOI] [PubMed] [Google Scholar]

- 40.Filimon F, Nelson JD, Hagler DJ, Sereno MI. Human cortical representations for reaching: mirror neurons for execution, observation, and imagery. Neuroimage. 2007;37:1315–1328. doi: 10.1016/j.neuroimage.2007.06.008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Grezes J, Decety J. Functional anatomy of execution, mental simulation, observation, and verb generation of actions: a meta-analysis. Hum. Brain Mapp. 2001;12:1–19. doi: 10.1002/1097-0193(200101)12:1<1::AID-HBM10>3.0.CO;2-V. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Miller KJ, et al. Cortical activity during motor execution, motor imagery, and imagery-based online feedback. Proc. Natl Acad. Sci. USA. 2010;107:4430–4435. doi: 10.1073/pnas.0913697107. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Porro CA, et al. Primary motor and sensory cortex activation during motor performance and motor imagery: a functional magnetic resonance imaging study. J. Neurosci. 1996;16:7688–7698. doi: 10.1523/JNEUROSCI.16-23-07688.1996. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Vargas-Irwin, C. E. et al. Watch, Imagine, Attempt: Motor Cortex Single-Unit Activity Reveals Context-Dependent Movement Encoding in Humans With Tetraplegia. Frontiers in Human Neuroscience, 12, 10.3389/fnhum.2018.00450 (2018). [DOI] [PMC free article] [PubMed]

- 45.Jeannerod M. Neural simulation of action: a unifying mechanism for motor cognition. Neuroimage. 2001;14:S103–109. doi: 10.1006/nimg.2001.0832. [DOI] [PubMed] [Google Scholar]

- 46.Mukamel R, Ekstrom AD, Kaplan J, Iacoboni M, Fried I. Single-neuron responses in humans during execution and observation of actions. Curr. Biol. 2010;20:750–756. doi: 10.1016/j.cub.2010.02.045. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Page SJ, Levine P, Leonard A. Mental practice in chronic stroke: results of a randomized, placebo-controlled trial. Stroke. 2007;38:1293–1297. doi: 10.1161/01.STR.0000260205.67348.2b. [DOI] [PubMed] [Google Scholar]

- 48.Page SJ, Levine P, Sisto S, Johnston MV. A randomized efficacy and feasibility study of imagery in acute stroke. Clin. Rehabil. 2001;15:233–240. doi: 10.1191/026921501672063235. [DOI] [PubMed] [Google Scholar]

- 49.Murphy BA, Miller JP, Gunalan K, Ajiboye AB. Contributions of Subsurface Cortical Modulations to Discrimination of Executed and Imagined Grasp Forces through Stereoelectroencephalography. PLoS One. 2016;11:e0150359. doi: 10.1371/journal.pone.0150359. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Cramer SC, Lastra L, Lacourse MG, Cohen MJ. Brain motor system function after chronic, complete spinal cord injury. Brain. 2005;128:2941–2950. doi: 10.1093/brain/awh648. [DOI] [PubMed] [Google Scholar]

- 51.Vargas-Irwin CE, Brandman DM, Zimmermann JB, Donoghue JP, Black MJ. Spike train SIMilarity Space (SSIMS): a framework for single neuron and ensemble data analysis. Neural Comput. 2015;27:1–31. doi: 10.1162/NECO_a_00684. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.van der Maaten L, Hinton G. Visualizing Data using t-SNE. J. Mach. Learn. Res. 2008;9:2579–2605. [Google Scholar]

- 53.Kalaska JF, Cohen DA, Hyde ML, Prud’homme M. A comparison of movement direction-related versus load direction-related activity in primate motor cortex, using a two-dimensional reaching task. J. Neurosci. 1989;9:2080–2102. doi: 10.1523/JNEUROSCI.09-06-02080.1989. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 54.Keisker B, Hepp-Reymond MC, Blickenstorfer A, Meyer M, Kollias SS. Differential force scaling of fine-graded power grip force in the sensorimotor network. Hum. Brain Mapp. 2009;30:2453–2465. doi: 10.1002/hbm.20676. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55.Neely KA, Coombes SA, Planetta PJ, Vaillancourt DE. Segregated and overlapping neural circuits exist for the production of static and dynamic precision grip force. Hum. Brain Mapp. 2013;34:698–712. doi: 10.1002/hbm.21467. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56.Rearick MP, Johnston JA, Slobounov SM. Feedback-dependent modulation of isometric force control: an EEG study in visuomotor integration. Brain Res. Cogn. Brain Res. 2001;12:117–130. doi: 10.1016/S0926-6410(01)00040-4. [DOI] [PubMed] [Google Scholar]

- 57.Wang K, et al. A brain-computer interface driven by imagining different force loads on a single hand: an online feasibility study. J. Neuroeng. Rehabil. 2017;14:93. doi: 10.1186/s12984-017-0307-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58.Dushanova J, Donoghue J. Neurons in primary motor cortex engaged during action observation. Eur. J. Neurosci. 2010;31:386–398. doi: 10.1111/j.1460-9568.2009.07067.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 59.Kraskov A, et al. Corticospinal mirror neurons. Philos. Trans. R. Soc. Lond. B Biol. Sci. 2014;369:20130174. doi: 10.1098/rstb.2013.0174. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 60.Mazurek KA, Rouse AG, Schieber MH. Mirror Neuron Populations Represent Sequences of Behavioral Epochs During Both Execution and Observation. J. Neurosci. 2018;38:4441–4455. doi: 10.1523/JNEUROSCI.3481-17.2018. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 61.Vigneswaran G, Philipp R, Lemon RN, Kraskov A. M1 corticospinal mirror neurons and their role in movement suppression during action observation. Curr. Biol. 2013;23:236–243. doi: 10.1016/j.cub.2012.12.006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Lanzilotto M, et al. Anterior Intraparietal Area: A Hub in the Observed Manipulative Action Network. Cereb. Cortex. 2019;29:1816–1833. doi: 10.1093/cercor/bhz011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 63.Caggiano V, Fogassi L, Rizzolatti G, Thier P, Casile A. Mirror neurons differentially encode the peripersonal and extrapersonal space of monkeys. Sci. 2009;324:403–406. doi: 10.1126/science.1166818. [DOI] [PubMed] [Google Scholar]

- 64.Umilta MA, et al. I know what you are doing. a neurophysiological study. Neuron. 2001;31:155–165. doi: 10.1016/s0896-6273(01)00337-3. [DOI] [PubMed] [Google Scholar]

- 65.Caggiano V, et al. View-based encoding of actions in mirror neurons of area f5 in macaque premotor cortex. Curr. Biol. 2011;21:144–148. doi: 10.1016/j.cub.2010.12.022. [DOI] [PubMed] [Google Scholar]

- 66.Maranesi M, Livi A, Bonini L. Spatial and viewpoint selectivity for others’ observed actions in monkey ventral premotor mirror neurons. Sci. Rep. 2017;7:8231. doi: 10.1038/s41598-017-08956-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 67.Alaerts K, de Beukelaar TT, Swinnen SP, Wenderoth N. Observing how others lift light or heavy objects: time-dependent encoding of grip force in the primary motor cortex. Psychol. Res. 2012;76:503–513. doi: 10.1007/s00426-011-0380-1. [DOI] [PubMed] [Google Scholar]

- 68.Green JB, Sora E, Bialy Y, Ricamato A, Thatcher RW. Cortical motor reorganization after paraplegia: an EEG study. Neurol. 1999;53:736–743. doi: 10.1212/WNL.53.4.736. [DOI] [PubMed] [Google Scholar]

- 69.Lacourse MG, Cohen MJ, Lawrence KE, Romero DH. Cortical potentials during imagined movements in individuals with chronic spinal cord injuries. Behav. Brain Res. 1999;104:73–88. doi: 10.1016/S0166-4328(99)00052-2. [DOI] [PubMed] [Google Scholar]

- 70.Ghez C, Gordon J, Ghilardi MF. Impairments of reaching movements in patients without proprioception. II. Effects of visual information on accuracy. J. Neurophysiol. 1995;73:361–372. doi: 10.1152/jn.1995.73.1.361. [DOI] [PubMed] [Google Scholar]

- 71.Gordon J, Ghilardi MF, Ghez C. Impairments of reaching movements in patients without proprioception. I. Spatial errors. J. Neurophysiol. 1995;73:347–360. doi: 10.1152/jn.1995.73.1.347. [DOI] [PubMed] [Google Scholar]

- 72.Sainburg RL, Poizner H, Ghez C. Loss of proprioception produces deficits in interjoint coordination. J. Neurophysiol. 1993;70:2136–2147. doi: 10.1152/jn.1993.70.5.2136. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 73.Tan DW, et al. A neural interface provides long-term stable natural touch perception. Sci. Transl. Med. 2014;6:257ra138. doi: 10.1126/scitranslmed.3008669. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 74.Ramos-Murguialday A, et al. Proprioceptive feedback and brain computer interface (BCI) based neuroprostheses. PLoS One. 2012;7:e47048. doi: 10.1371/journal.pone.0047048. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 75.Shaikhouni A, Donoghue JP, Hochberg LR. Somatosensory responses in a human motor cortex. J. Neurophysiol. 2013;109:2192–2204. doi: 10.1152/jn.00368.2012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 76.Schiefer MA, Graczyk EL, Sidik SM, Tan DW, Tyler DJ. Artificial tactile and proprioceptive feedback improves performance and confidence on object identification tasks. PLoS One. 2018;13:e0207659. doi: 10.1371/journal.pone.0207659. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 77.Tabot GA, Kim SS, Winberry JE, Bensmaia SJ. Restoring tactile and proprioceptive sensation through a brain interface. Neurobiol. Dis. 2015;83:191–198. doi: 10.1016/j.nbd.2014.08.029. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 78.Brochier T, Boudreau MJ, Pare M, Smith AM. The effects of muscimol inactivation of small regions of motor and somatosensory cortex on independent finger movements and force control in the precision grip. Exp. Brain Res. 1999;128:31–40. doi: 10.1007/s002210050814. [DOI] [PubMed] [Google Scholar]

- 79.Carteron A, et al. Temporary Nerve Block at Selected Digits Revealed Hand Motor Deficits in Grasping Tasks. Front. Hum. Neurosci. 2016;10:596. doi: 10.3389/fnhum.2016.00596. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 80.Solstrand Dahlberg L, Becerra L, Borsook D, Linnman C. Brain changes after spinal cord injury, a quantitative meta-analysis and review. Neurosci. Biobehav. Rev. 2018;90:272–293. doi: 10.1016/j.neubiorev.2018.04.018. [DOI] [PubMed] [Google Scholar]

- 81.Malouin F, et al. The Kinesthetic and Visual Imagery Questionnaire (KVIQ) for assessing motor imagery in persons with physical disabilities: a reliability and construct validity study. J. Neurol. Phys. Ther. 2007;31:20–29. doi: 10.1097/01.NPT.0000260567.24122.64. [DOI] [PubMed] [Google Scholar]

- 82.Fabbri-Destro M, Rizzolatti G. Mirror neurons and mirror systems in monkeys and humans. Physiol. 2008;23:171–179. doi: 10.1152/physiol.00004.2008. [DOI] [PubMed] [Google Scholar]

- 83.Chase SM, Schwartz AB, Kass RE. Bias, optimal linear estimation, and the differences between open-loop simulation and closed-loop performance of spiking-based brain-computer interface algorithms. Neural Netw. 2009;22:1203–1213. doi: 10.1016/j.neunet.2009.05.005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 84.Koyama S, et al. Comparison of brain-computer interface decoding algorithms in open-loop and closed-loop control. J. Comput. Neurosci. 2010;29:73–87. doi: 10.1007/s10827-009-0196-9. [DOI] [PubMed] [Google Scholar]

- 85.Jarosiewicz B, et al. Advantages of closed-loop calibration in intracortical brain-computer interfaces for people with tetraplegia. J. Neural Eng. 2013;10:046012. doi: 10.1088/1741-2560/10/4/046012. [DOI] [PMC free article] [PubMed] [Google Scholar]