Highlights

* Coarticulatory differences elicited a PMN in typical children.
* Incongruent coarticulation modulated N100 in SLI but not control groups.
* No group differences were found for lexical processing of single words.
* Relative insensitivity to subphonemic features in SLI.

Keywords: Specific Language Impairment, Coarticulation, Phonological processing, Event-related potentials, N100, N280, PMN, N400

Abstract

Specific Language Impairment (SLI) is a developmental disorder affecting language learning across a number of domains. These difficulties are thought to be related to problems processing auditory speech, given findings of imperfect auditory processing across nonspeech tones, individual speech sounds and syllables. However, the relationship of auditory difficulties to language development remains unclear. Perceiving connected speech involves resolving coarticulation, the imperceptible blending of speech movements across adjacent sounds, which gives rise to subtle variations in speech sounds. The present study used event-related potentials (ERPs) to examine neural responses to coarticulation in school-age children with and without SLI. Atypical neural responses were observed for the SLI group in ERP indices of prelexical-phonological but not lexical stages of processing. Specifically, incongruent coarticulatory information resulted in a modulation of the N100 in the SLI but not the typically developing group, while a Phonological Mapping Negativity was elicited in the typically developing but not the SLI group, unless additional cues were present. Neural responses to unexpected lexical mismatches indexed by the N400 ERP component were the same for both groups. The results demonstrate a relative insensitivity to important subphonemic features in SLI.

1. Introduction

Children exhibiting a Specific Language Impairment (SLI) fail to learn their native language as expected despite otherwise typical development and sociocultural opportunities (Leonard, 1998). Studies demonstrating imperfect auditory processing in SLI (e.g., McArthur and Bishop, 2004, Shafer et al., 2005) suggest that this group may miss valuable information available in the speech stream. Each sound in a spoken utterance contains subtle clues about its neighboring sounds, an acoustic quality not present when the same sound is produced in isolation. Although these effects tend to be imperceptible, both infants (Johnson and Jusczyk, 2001) and adults (Marslen-Wilson and Warren, 1994) appear to use this information during speech processing. For example, adults (Archibald et al., 2009) and typically developing children (Archibald and Gathercole, 2007) repeat unfamiliar words more accurately when these overlapping phonetic cues are present. In the present study, we examined the neural correlates of this sensitivity to the subtle redundancies available in the speech signal in children with either typical-development or language-impairment.

Speech production requires the rapid coordination of articulators, which provides opportunities for economization (e.g., /n/ may be realized as /ŋ/ in the phrase ‘one game’). This process, known as coarticulation, results in modifications to the speech stream that create an acoustic signature of the context. These subtle sound differences are not usually perceptible to the listener despite findings that they influence behavioral (McQueen et al., 1999) and neural responses (Mitterer and Blomert, 2003). Their presence provides opportunities for fine-grained qualitative differences in encoding language. Indeed, recent research suggests that typical learners are highly attuned to the acoustic differences in the speech stream arising from coarticulatory information, which in turn allows the rapid acquisition and generalization of these subtle cues to speech (and language) perception (Connine and Darnieder, 2009).

The role of poor auditory processing in the language learning difficulties experienced by children with SLI has received considerable research attention (see Rosen, 2003). In a series of studies, Tallal and colleagues (Tallal and Piercy, 1973a, Tallal and Piercy, 1973b, Tallal and Piercy, 1974, Tallal and Piercy, 1975, Tallal and Stark, 1981) reported that children with SLI were unable to detect rapid changes in auditorily presented tones and synthesized speech sounds. Several subsequent studies demonstrated SLI deficits in a variety of auditory tasks including detecting a tone that precedes masking (Wright et al., 1997), brief gaps in sound bursts (Ludlow et al., 1983), and amplitude modulations (Menell et al., 1999). However, null findings for SLI groups on temporal processing tasks have also been reported (e.g., Bishop et al., 2005, Sussman, 1993). It has been suggested that differences in the attentional or cognitive load imposed by respective auditory processing tasks may account for some of the contradictory findings (Rosen, 2003).

Processing of coarticulatory information provides a means of investigating subtle differences in speech perception. The present study takes a novel approach to examining responses to these subtle coarticulatory differences without imposing a cognitive load by measuring event-related potentials (ERPs). ERPs are generated by neural processes time-locked to sensory and cognitive events. ERPs have a high degree of temporal resolution (Woldorff and Hillyard, 1992), thereby providing a glimpse into the cognitive processing of a stimulus as it unfolds. With ERPs, we can examine the characteristics of different stages of neural responses to a word, even prior to the individual registering any overt behavioral response. Averaged over many trials, patterns of brain responses become visible as ERP components, marked by positive or negative deflections in scalp voltages.

Of interest are three components related to the processing of spoken words, the N100, N280/PMN and N400. One of the earliest ERP components following an auditory stimulus is the N100, a negativity occurring around 100 ms post-stimulus onset. The N100 is considered to reflect early sensory processes responsive to steep changes in a level of physical energy sustained for at least a short time (Näätänen and Picton, 1987); for example, it is modulated by loudness (Picton et al., 1974, Picton et al., 1977), syllable stress (Sanders and Neville, 2003), and voicing onset (Martin and Boothroyd, 1999, Steinschneider et al., 1999). The N280 is a negative-going component occurring 250–300 ms post-stimulus onset sensitive to differences in the expected vs. perceived phonological form of a word.1 Of particular interest is the PMN, or ‘Phonological Mapping Negativity’, which is a modulation to the N280 component due to a mismatch between an auditory word form and a previously-established expectation (Steinhauer and Connolly, 2008; the component has also been previously described by Connolly and Phillips, 1994 as the Phonological Mismatch Negativity; it should not be confused with the Mismatch Negativity or MMN, which indexes a different set of processes and is elicited under different circumstances). The N400 is a negative-going component occurring approximately 400 ms post-stimulus onset and is sensitive to semantic or lexical information in words or sentences (Holcomb and Neville, 1991). Although still a matter of debate (Van den Brink and Hagoort, 2004, Van Petten et al., 1999), the N100, PMN and N400 are widely considered to reflect distinct prelexical-phonological and lexical stages of spoken word processing (Connolly and Phillips, 1994, Newman and Connolly, 2009, Steinhauer and Connolly, 2008). In any case, modulations to these ERP components can be differentially evoked by manipulating lexical or sublexical matches, and as a consequence provide complementary information about spoken word processing as it unfolds.

Speech variations due to coarticulation provide fine-grained information related to the phonological features of a sound but do not change the word itself. Coarticulatory violations, then, should be detected during prelexical rather than lexical stages of spoken word processing. It follows from this that responses to unexpected violations in coarticulation could result in modulation of the N100 reflecting early processing of acoustic variation not required for later phonemic recognition, or a PMN suggesting integrated processing of subphonemic and phonemic information. The question of how coarticulatory information contributes to speech processing has been considered only recently (Gaskell, 2001, Gow and McMurray, 2007). According to one view, subphonemic and phonemic effects are processed by the system in an indistinguishable manner such that they influence word recognition at whatever point they become available (Gow and McMurray, 2007). A growing number of studies provide support for this hypothesis. For example, sensitivity to coarticulatory differences has been found to influence lexical access (e.g., Dahan et al., 2001, Fowler and Brown, 2000), lexical choices (McQueen et al., 1999), and the temporal sequence of looks to a target (McMurray et al., 2008).

We have recently used ERP to examine neural responses to coarticulatory cues in healthy adults (Archibald and Joanisse, in press). We employed the visual-picture/spoken word matching paradigm of Desroches et al. (2009) in which the picture stimulates a phonological and lexical expectation and the spoken word matches or mismatches the expectation phonemically and/or lexically. Studies employing such picture-word matching paradigms have reported N400 effects in lexical manipulations (Stelmack and Miles, 1990) and differentiated PMN and N400 effects in phonemic/lexical manipulations (Desroches et al., 2009, Connolly et al., 1995). Our study manipulated both phonemic and coarticulatory information, creating stimuli that matched/mismatched expectations lexically as in these earlier studies, or else in the coarticulatory information inherent in the initial phoneme of the word. Findings revealed a PMN to mismatching coarticulatory information regardless of lexical status. In contrast, an N400 was not observed to invalid coarticulation provided the word matched lexical expectations. These results provided strong evidence that subphonemic coarticulatory cues and phonemic information are processed similarly in spoken word recognition in healthy adults.

The purpose of the present study was to investigate neural responses to coarticulation in a typically developing group of children, and in children with Specific Language Impairment (SLI). The children completed a picture-word matching task where words matched or mismatched lexically or in coarticulation, or both. One aim was to extend our previous findings (Archibald and Joanisse, in press) to a typically developing developmental sample. We hypothesized that typically developing children would show a similar pattern to what we previously established in adults, with coarticulatory mismatches eliciting a PMN and lexical mismatches eliciting an N400. A second goal was to compare this pattern of neural responses to coarticulatory information in children with SLI. The picture-word matching task presented minimal cognitive load and the auditory word presentation resulted in automatic processing measured by the ERP response. As a result, the paradigm is ideally suited to examining auditory processing in SLI providing a relatively clean measure of on-line auditory word processing. Given the low language demands of the task, we hypothesized that the groups would show similar N400 modulations to picture-word mismatches. In contrast, we anticipated group differences in response to coarticulatory mismatches either in the N100 or PMN. An absence of differentiated neural responses to coarticulatory mismatches in the SLI group would reflect a failure to process meaningful, subtle subphonemic information available in the speech stream. Differences in neural patterns to coarticulatory cues in SLI would point to the subtle nature of auditory processing deficits in language impairment.

2. Method

2.1. Participants

Fifteen children with SLI and 15 typically developing (TD) chronological age-matched control children were recruited from our existing databases (Table 1). The parents of eligible children were contacted by phone. Written consent was provided by the parents upon arrival at our laboratory, and children gave verbal assent throughout the process. All participants achieved a standard score of 80 or above on the Test of Nonverbal Intelligence (TONI-3, Brown et al., 1997; n = 25) or scaled scores of 7 or greater on both the Block Design and Matrix Reasoning subtests of the Wechsler Intelligence Scale for Children (WISC-4, Wechsler, 2003; SLI: n = 1; TD: n = 3), except one child in the SLI group who scored 75 on the TONI-3 and one child in the TD group who did not complete this testing. By parent report, none of the children were diagnosed with ADD/ADHD, Autism Spectrum Disorder, or hearing impairment, and all spoke English as their primary language. The methods and procedures were approved by the University of Western Ontario Health Science Research Ethics Board.

Table 1.

Participant demographics.

| Measure | SLI (n = 15): M | SLI (n = 15): SD | TD (n = 15): M | TD (n = 15): SD |
|---|---|---|---|---|
| Number male | 7 | | 7 | |
| Number right-handed | 12 | | 12 | |
| Age (years) | 8.33 | 0.83 | 8.33 | 0.80 |
| Composite Language Score (CLS)ᵃ | 72.64 | 10.47 | 107.92 | 8.88 |
| Test of Nonverbal Intelligence (TONI-3)ᵃ | 91.57 | 9.97 | 105.64 | 13.48 |

ᵃ Standard scores with mean of 100 and standard deviation of 15.

SLI Group. Fourteen of the children were classified into the SLI group based on scoring at least 1.0 SD below the mean on the Composite Language Score (CLS) of the Clinical Evaluation of Language Fundamentals, 4th edition (CELF-4; Semel et al., 2003). The CLS is based on performance on four subtests, Concepts and Following Directions, Recalling Sentences, Formulating Sentences, and either Word Structure (under 9 years; n = 13) or Word Classes. One child was classified into the SLI group based on a score below 85 on the Test for Reception of Grammar (TROG; Bishop, 1982) and below the 10th percentile (Archibald and Joanisse, 2009) on the Nonword Repetition Test (NRT; Dollaghan and Campbell, 1998).

TD Group. None of the children in the TD group had any history of speech or language problems, or any type of exceptional educational needs. All of the children scored within 1.0 SD of the mean for their age on either the CELF-4 (n = 11) or the TROG and NRT (n = 3). Participants in the TD group were matched to the SLI group on handedness, age (mean age difference in months = 1.47, SD = 1.25), and sex.

2.2. Stimuli

Procedures for stimulus preparation are identical to those employed in our previous study (Archibald and Joanisse, in press). Briefly, the stimuli consisted of 30 CV or CVC word pairs (60 words) starting with a continuant (/f, s, ʃ, tʃ, dʒ, m, n, h/) and differing in the subsequent vowel such that one member of the pair (hereafter, cohort) had a back vowel (i.e., /u, ɑʊ, ɑ, ʌ/) and the other, a front vowel (i.e., /i, æ, eɪ/). Digitized recordings (16 bits; 22,050 Hz) were made of three adult female English speakers producing each word four times. The use of multiple speakers increased the robustness of the results by guarding against effects due to individual nuance in speech production.

Stimulus items were re-spliced in order to create a set of words containing either valid or invalid coarticulation cues. The word-initial sound was spliced using Sound Forge 6.0 (Sonic Foundry Inc., 2002) either to other productions of the same word to create spliced words with valid coarticulation, or to productions of the source word's cohort to create spliced words with invalid coarticulation. For example, /h/ was spliced from one production of hat onto /æt/ from another production of hat, creating a coarticulatorily valid token of hᵃat (the superscript denotes the vowel following the consonant in the source production), whereas an invalid coarticulatory token was created by splicing together /h/ from hat and /ɑt/ from hot, creating hᵃot. Initial sounds were 147.74 ms on average (SD = 55.09), and ranged in duration from 38 to 299 ms. For each speaker and each word, two randomly chosen productions were used to create words with valid coarticulation, and two for words with invalid coarticulation. The set of 30 word pairs (60 words) was randomly presented in each of four conditions, yielding 240 randomly presented trials. The speaker for each word was randomly selected such that one third of the words were spoken by each speaker within a condition and the speaker varied in repetitions of the word across all conditions.

2.3. Procedures

Auditory stimuli were presented to the right ear using ER-3A insert earphones (Etymotic Research, Elk Grove Village, IL). Visual stimuli were color stock photographs corresponding to each word, presented on a white background using a 19-in. CRT monitor. Stimulus presentation was controlled by E-Prime (Psychology Software Tools, v. 1.1). On each trial, a fixation cross appeared for 250 ms, followed by a picture stimulus. After a 1500 ms delay, a spoken word was played while the picture remained on-screen. Participants were asked to indicate whether the picture and word matched by pressing one of two keys on a handheld keypad. Participants performed six practice trials prior to the experimental task in order to familiarize them with the procedure.

The four experimental conditions are outlined in Table 2. The first two conditions were lexical matches to the picture but the coarticulatory information contained in the initial phoneme was manipulated. In the valid target condition (e.g., HAT-hᵃat), the auditory word matched the picture as did the coarticulatory information inherent in the initial sound; in the invalid target condition (e.g., HAT-hᵒat), words containing invalid coarticulation were presented with the correct picture; consequently, the initial sound contained inaccurate coarticulatory information despite being a lexical match for the picture. The remaining two conditions were lexical mismatches to the picture and again manipulated coarticulatory information in the initial phoneme. In the valid cohort condition (e.g., HAT-hᵒot), the picture and auditory word were cohorts (i.e., lexical mismatches) and the coarticulation of the initial phoneme was not consistent with the pictured word (i.e., coarticulatory mismatches). In the invalid cohort condition (e.g., HAT-hᵃot), the picture and auditory word were cohorts but the coarticulatory information contained in the initial phoneme of the auditory word matched the pictured item even though the whole word ultimately did not match the picture.

Table 2.

Summary of conditions.

| Condition | Picture | Auditory word | Coarticulation valid for word? | Coarticulation matches initial sound of pictured word? | Lexical match? | Hypothesized N100 modulation | Hypothesized PMN modulation | Hypothesized N400 modulation |
|---|---|---|---|---|---|---|---|---|
| Valid target | HAT | hᵃat | ✓ | ✓ | ✓ | | | |
| Invalid target | HAT | hᵒat | X | X | ✓ | X | ✓ | X |
| Valid cohort | HAT | hᵒot | ✓ | X | X | X | ✓ | ✓ |
| Invalid cohort | HAT | hᵃot | X | ✓ | X | X | X | ✓ |

Hypothesized modulations are relative to the valid target condition.
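The logic of the four conditions can be summarized as a small sketch. It assumes each trial is described by three hypothetical fields (the pictured word, the word the initial consonant was spliced from, and the word the remainder was spliced from); these names are illustrative and are not part of the original stimulus scripts.

```python
from typing import NamedTuple


class Trial(NamedTuple):
    picture: str       # word depicted in the photograph, e.g. "hat"
    onset_source: str  # word the initial consonant was spliced from
    rime_source: str   # word the remainder (vowel onward) was spliced from


def classify(trial: Trial) -> str:
    """Return the condition label for a spliced token paired with a picture."""
    # Coarticulation is valid when the consonant and the remainder
    # come from productions of the same word.
    valid = trial.onset_source == trial.rime_source
    # The word that is heard is determined by the remainder; it is a target
    # (lexical match) when it is the pictured word, otherwise a cohort.
    target = trial.rime_source == trial.picture
    return f"{'valid' if valid else 'invalid'} {'target' if target else 'cohort'}"


if __name__ == "__main__":
    for t in [Trial("hat", "hat", "hat"), Trial("hat", "hot", "hat"),
              Trial("hat", "hot", "hot"), Trial("hat", "hat", "hot")]:
        print(t, "->", classify(t))
```

Under this description, the invalid cohort is the only mismatching word whose initial consonant still carries coarticulation consistent with the pictured word.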

2.4. Electrophysiological recordings

EEG was recorded at 500 Hz sample rate using a 32-channel cap (Quik-Caps; Neuroscan Labs, El Paso, TX) embedded with Ag/AgCl sintered electrodes, referenced to the nose tip. Impedances were kept below 7 kΩ. Scalp electrodes were placed according to the international 10-20 system; linked pairs of electrooculogram (EOG) electrodes recorded horizontal (electrodes on the outer canthi) and vertical (electrodes above and below the left eye) eye movements. Electrophysiological data were filtered on-line at 0.1–100 Hz with a 60 Hz notch filter and off-line using a zero phase shift digital filter (24 dB, band-pass frequency: 0.1–20 Hz). ERPs were calculated from −100 to 800 ms, time-locked to the onset of the auditory word (i.e., initial consonant). Each trial was baseline corrected to the average voltage of the 100-ms prestimulus interval. Trials containing physiological artifacts were removed, determined by a maximum voltage criterion of ±75 μV relative to baseline on any scalp electrode (rejected trials ranged from 20 to 28% for all conditions and groups). Analyses were completed on accurate trials only. Two participants in the SLI group and one participant in the TD group had at least one condition with fewer than three accepted and accurate trials (out of 60) while all remaining participants had no fewer than 18 correct trials in a single condition. All ERP analyses were completed with data from these remaining 27 participants. The mean number of trials retained for analysis did not differ across conditions, F(3,78) = 1.897, p > .05 (valid target: M = 42.3, SD = 10.8, range = 19–58; valid cohort: M = 42.4, SD = 9.6, range = 18–55; invalid target: M = 43.3, SD = 9.0, range = 22–57; invalid cohort: M = 44.8, SD = 8.7, range = 24–58).
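The baseline correction and artifact rejection steps can be illustrated with a minimal sketch. It assumes each epoch is a plain list of voltages in μV sampled at 500 Hz from −100 ms onward, and that a trial is rejected when any baseline-corrected sample exceeds 75 μV in absolute value; the function names and the data layout are hypothetical and do not reproduce the acquisition software's processing.

```python
SAMPLE_RATE_HZ = 500   # sampling rate reported in the text
EPOCH_START_MS = -100  # epochs run from -100 to 800 ms
REJECT_UV = 75.0       # rejection criterion in microvolts


def baseline_correct(epoch):
    """Subtract the mean of the 100-ms prestimulus interval from every sample."""
    # 100 ms at 500 Hz corresponds to the first 50 samples of the epoch.
    n_baseline = (-EPOCH_START_MS) * SAMPLE_RATE_HZ // 1000
    baseline = sum(epoch[:n_baseline]) / n_baseline
    return [v - baseline for v in epoch]


def has_artifact(epochs_by_electrode):
    """True if any scalp electrode exceeds the criterion relative to baseline."""
    return any(
        abs(v) > REJECT_UV
        for epoch in epochs_by_electrode.values()
        for v in baseline_correct(epoch)
    )


if __name__ == "__main__":
    clean = [2.0] * 50 + [5.0] * 401          # 451 samples: -100 to 800 ms
    spike = [0.0] * 50 + [80.0] + [0.0] * 400
    print(has_artifact({"Cz": clean}))                # no sample beyond 75 uV
    print(has_artifact({"Cz": clean, "Pz": spike}))   # Pz exceeds the criterion
```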

2.5. ERP analyses

Analyses focused on three negative-going components commonly associated with auditory word recognition: the N100, PMN, and N400. The amplitude of each was quantified by averaging voltage within three distinct time intervals as follows: N100: 70–130 ms; PMN: 230–340 ms; N400: 340–500 ms.
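As an illustration of how mean amplitude within each interval could be computed, the sketch below converts the reported windows to sample indices for a 500 Hz epoch beginning at −100 ms. Treating both window endpoints as inclusive is an assumption (the PMN and N400 windows then share the 340 ms sample), and the function names are hypothetical.

```python
SAMPLE_RATE_HZ = 500
EPOCH_START_MS = -100
WINDOWS_MS = {"N100": (70, 130), "PMN": (230, 340), "N400": (340, 500)}


def ms_to_index(t_ms):
    """Map a latency in ms to a sample index within the epoch."""
    return round((t_ms - EPOCH_START_MS) * SAMPLE_RATE_HZ / 1000)


def mean_amplitude(erp, component):
    """Average voltage of an ERP (list of samples) within a component window."""
    start, end = (ms_to_index(t) for t in WINDOWS_MS[component])
    samples = erp[start:end + 1]  # assumption: both endpoints are included
    return sum(samples) / len(samples)


if __name__ == "__main__":
    erp = [float(i) for i in range(451)]  # dummy waveform, -100 to 800 ms
    for name in WINDOWS_MS:
        print(name, mean_amplitude(erp, name))
```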

Statistical analyses were performed at 15 scalp sites (Fz, F3, F4, F7, F8, Cz, C3, C4, T7, T8, Pz, P3, P4, P7, P8) that provided appropriate scalp coverage and have been found to differentiate the components of interest (Archibald and Joanisse, in press, Desroches et al., 2009). A 4 (match type) × 15 (site) repeated measures ANOVA with group (SLI vs. TD) as a between groups factor was performed on mean voltages at each time interval. Conservative degrees of freedom (Greenhouse and Geisser, 1959) were used to guard against sphericity violations common to multi-electrode ERP data. In the case of significant effects involving match type and group, separate secondary analyses comparing the baseline valid target condition to each of the remaining match type conditions were conducted. In these secondary analyses, the site factor was divided into two factors to allow examination of scalp topography (Newman and Connolly, 2009, Archibald and Joanisse, in press): region (frontal, central, parietal) and hemisphere (left, right). Each region by hemisphere combination was linearly derived from a combination of two sites: left frontal (F3, F7), right frontal (F4, F8), left central (C3, T7), right central (C4, T8), left parietal (P3, P7), and right parietal (P4, P8). The midline sites were excluded from this analysis in the interest of disambiguating effects and interactions involving electrode laterality, although a parallel analysis of midline sites was performed where needed to disambiguate results. In cases of significant interactions with group in the secondary analyses, group patterns were further investigated by completing corresponding 2-way within-subjects ANOVAs for each group separately.
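The region by hemisphere cells used in the secondary analyses can be sketched as follows. The text states only that each cell was linearly derived from two sites; the equal-weight mean used here is an assumption, and `region_means` is a hypothetical helper name.

```python
# Site pairs for each region x hemisphere cell, as listed in the text.
REGION_SITES = {
    ("frontal", "left"): ("F3", "F7"),
    ("frontal", "right"): ("F4", "F8"),
    ("central", "left"): ("C3", "T7"),
    ("central", "right"): ("C4", "T8"),
    ("parietal", "left"): ("P3", "P7"),
    ("parietal", "right"): ("P4", "P8"),
}


def region_means(site_amplitudes):
    """Collapse per-site mean amplitudes into six region x hemisphere cells."""
    # Assumption: each cell is the unweighted mean of its two sites.
    return {
        cell: sum(site_amplitudes[site] for site in sites) / len(sites)
        for cell, sites in REGION_SITES.items()
    }


if __name__ == "__main__":
    amplitudes = {"F3": 1.0, "F7": 3.0, "F4": 0.0, "F8": 2.0,
                  "C3": -1.0, "T7": -3.0, "C4": 0.5, "T8": 1.5,
                  "P3": -2.0, "P7": -4.0, "P4": 0.0, "P8": 0.0}
    for cell, value in region_means(amplitudes).items():
        print(cell, value)
```

Midline sites (Fz, Cz, Pz) are deliberately absent from the mapping, mirroring their exclusion from the laterality analysis.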

3. Results

3.1. Behavioral results

Accuracy and reaction time are listed in Table 3 for each group and match type. Two group × match type ANOVAs revealed no significant differences between the four conditions for accuracy, F(3,75) = 1.216, p > .05. A significant main effect of match type on reaction time, F(3,75) = 24.293, p < .001, resulted from significantly faster reaction times to the valid target and invalid target compared to both the valid cohort and invalid cohort conditions (p ≤ .001, all cases). The main effect of group was not significant (accuracy: F(1,25) = 3.740, p > .05; reaction time: F(1,25) = 3.555, p > .05), and group did not interact with match type (accuracy: F(3,75) = 1.177, p > .05; reaction time: F(3,75) = 0.556, p > .05). The data suggest that the match type conditions were well-balanced with respect to difficulty for both groups.

Table 3.

Mean (SD) accuracy and reaction time for each match type and group.

| Match type | Accuracy (%): SLI | Accuracy (%): TD | RT (ms): SLI | RT (ms): TD |
|---|---|---|---|---|
| Valid target (e.g., HAT-hᵃat) | 80.77 (15.73) | 85.29 (14.21) | 891.44 (117.77) | 954.17 (109.83) |
| Valid cohort (e.g., HAT-hᵒot) | 73.92 (16.40) | 86.14 (9.71) | 965.52 (146.66) | 1043.85 (87.47) |
| Invalid target (e.g., HAT-hᵒat) | 78.92 (15.79) | 85.93 (10.30) | 891.55 (148.88) | 955.87 (891.55) |
| Invalid cohort (e.g., HAT-hᵃot) | 78.85 (18.33) | 89.93 (7.44) | 983.92 (137.23) | 1083.68 (90.48) |

3.2. Electrophysiological results

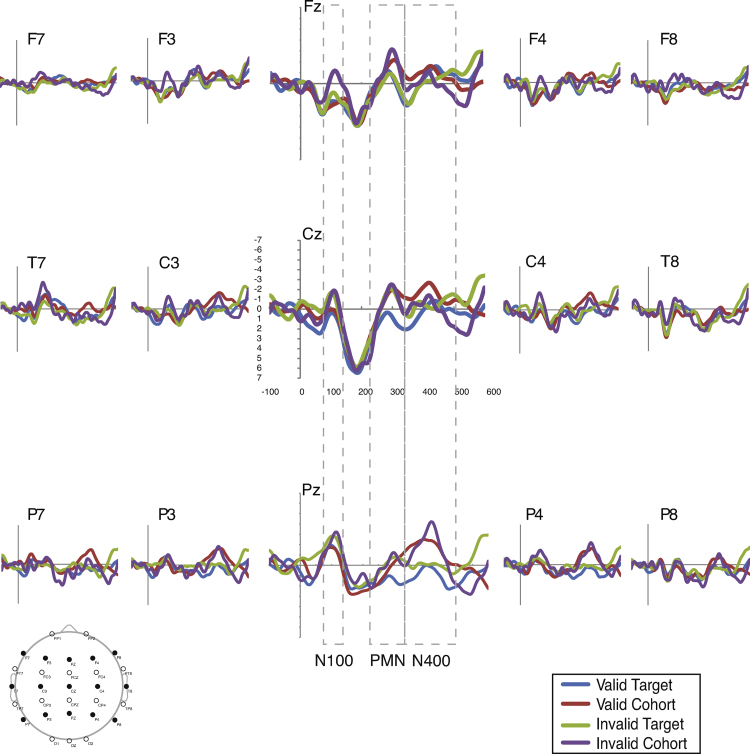

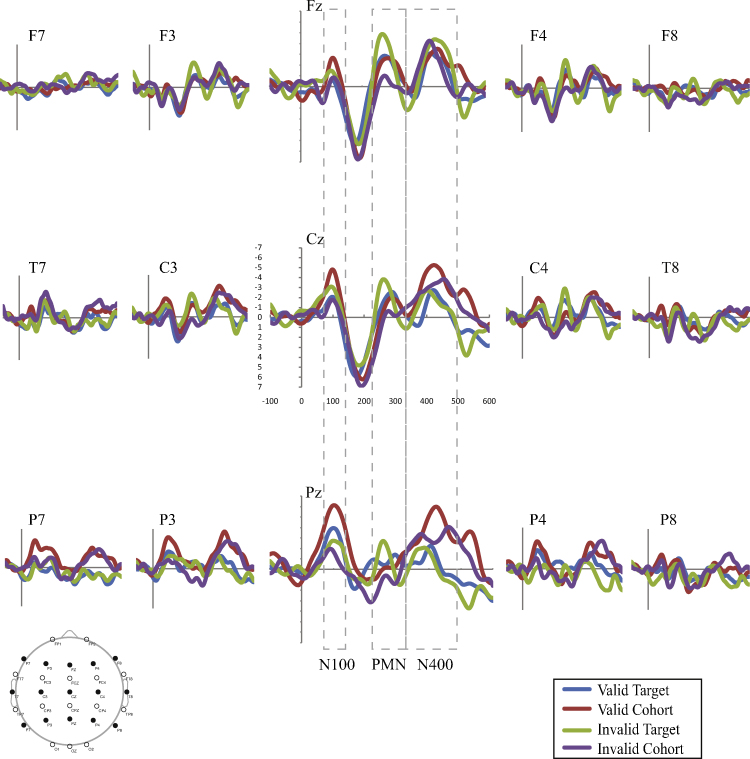

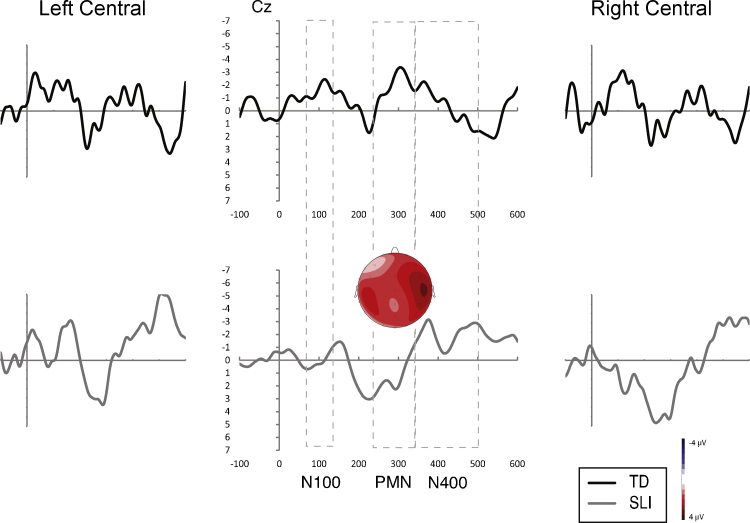

Figs. 1 and 2 present the average waveforms for all match type conditions for the 15 electrodes of interest for both participant groups. Omnibus match type (4) × site (15) × group (2) ANOVAs were completed for each component of interest. Significant effects with group were found for the N100 (group: F(1,25) = 4.869, p = .037) and PMN (group × match type: F(3,75) = 2.553, p = .066) but not the N400 (F < 1.95, all remaining effects involving group for all analyses).

Fig. 1.

Average waveforms for the typically developing group across conditions. Results indicate PMN effects to the invalid target and valid cohort, and N400 effects to the valid and invalid cohorts.

Fig. 2.

Average waveforms for the SLI group across conditions. Results indicate N100 effects to the invalid target and valid cohort, PMN effects to the valid cohort only, and N400 effects to the valid and invalid cohorts.

The group and match type differences were explored further by comparing the valid target condition to all other conditions in separate ANOVAs. Region (frontal, central, parietal) and hemisphere (left, right) factors were included in these secondary ANOVAs to examine scalp topography. The results are presented below for each component and summarized in Table 4. A summary of significant component modulations is presented in Table 5. Group comparisons of difference waves, created by calculating the difference between each group's average waveform for the baseline valid target condition (e.g., HAT-hᵃat) and each respective remaining condition, are presented in Figs. 3–5 for the invalid target (e.g., HAT-hᵒat; Fig. 3), valid cohort (e.g., HAT-hᵒot; Fig. 4), and invalid cohort conditions (e.g., HAT-hᵃot; Fig. 5). In these figures, significant positive or negative deflections from zero reflect differences between the conditions.
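A difference wave of the kind described above amounts to a pointwise subtraction of two average waveforms. The sketch below assumes the baseline (valid target) average is subtracted from the comparison condition, so that greater negativity to the comparison condition appears as a negative deflection; the direction of subtraction is an assumption, as it is not stated explicitly in the text.

```python
def difference_wave(condition_avg, baseline_avg):
    """Pointwise difference between a condition average and the baseline average."""
    if len(condition_avg) != len(baseline_avg):
        raise ValueError("waveforms must have the same number of samples")
    # Assumption: comparison condition minus baseline valid target.
    return [c - b for c, b in zip(condition_avg, baseline_avg)]


if __name__ == "__main__":
    valid_target = [1.0, 1.0, 1.0]     # dummy group-average samples (uV)
    invalid_target = [1.0, -2.0, 0.5]
    print(difference_wave(invalid_target, valid_target))
```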

Table 4.

Summary of ANOVAs with factors of region (3), hemisphere (2), and group (2) for comparisons of the valid target condition with each of the remaining match type conditions, for all components.

Comparing baseline valid target (HAT-hᵃat) to invalid target (HAT-hᵒat), valid cohort (HAT-hᵒot), and invalid cohort (HAT-hᵃot).

| Effect | df | Invalid target N100 | Invalid target PMN | Invalid target N400 | Valid cohort N100 | Valid cohort PMN | Valid cohort N400 | Invalid cohort N100 | Invalid cohort PMN | Invalid cohort N400 |
|---|---|---|---|---|---|---|---|---|---|---|
| Group F | 1,25 | 2.468 | 0.313 | 1.565 | 5.103 | 0.358 | 1.209 | 0.198 | 0.270 | 1.766 |
| | | NS/0.09 | NS/0.01 | NS/0.06 | .033/0.17 | NS/0.01 | NS/0.05 | NS/0.01 | NS/0.01 | NS/0.07 |
| Match type | 1,25 | 0.091 | 0.017 | 0.077 | 1.460 | 0.063 | 6.727 | 0.018 | 2.022 | 12.282 |
| | | NS/0.004 | NS/0.001 | NS/0.003 | NS/0.06 | NS/0.003 | .016/0.21 | NS/0.001 | NS/0.08 | .002/0.33 |
| Hemisphere | 1,25 | 4.522 | 11.632 | 1.577 | 4.487 | 8.173 | 5.040 | 3.417 | 9.706 | 9.651 |
| | | .044/0.15 | .002/0.32 | NS/0.06 | .044/0.15 | .008/0.25 | .034/0.17 | NS/0.12 | .005/0.28 | .005/0.28 |
| Anterior-posterior region | 2,50 | 11.304 | 7.120 | 2.887 | 16.138 | 9.718 | 0.334 | 14.142 | 6.475 | 0.532 |
| | | .001/0.31 | .007/0.22 | NS/0.10 | <.001/0.4 | .001/0.28 | NS/0.01 | <.001/0.4 | .006/0.21 | NS/0.02 |
| Match type × group | 1,25 | 0.146 | 0.116 | 0.061 | 4.729 | 0.088 | 0.004 | 0.955 | 5.138 | 0.134 |
| | | NS/0.006 | NS/0.01 | NS/0.002 | .039/0.16 | NS/0.004 | NS/<.001 | NS/0.037 | .032/0.17 | NS/0.01 |
| Region × group | 2,50 | 0.143 | 0.193 | 0.204 | 0.269 | 0.248 | 0.184 | 0.272 | 0.077 | 0.388 |
| | | NS/0.006 | NS/0.01 | NS/0.01 | NS/0.01 | NS/0.01 | NS/0.01 | NS/0.01 | NS/0.003 | NS/0.02 |
| Hemi × group | 1,25 | 0.248 | 1.202 | 0.096 | 0.974 | 0.540 | 0.072 | 0.131 | 0.003 | 0.200 |
| | | NS/0.01 | NS/0.05 | NS/0.004 | NS/0.04 | NS/0.02 | NS/0.003 | NS/0.005 | NS/<.001 | NS/0.01 |
| Region × match type | 2,50 | 2.558 | 0.350 | 0.047 | 0.002 | 0.056 | 24.535 | 0.930 | 2.091 | 9.698 |
| | | NS/0.09 | NS/0.01 | NS/0.002 | NS/<.001 | NS/0.002 | <.001/0.5 | NS/0.04 | NS/0.08 | .003/0.28 |
| Type × region × group | 2,50 | 3.279 | 5.940 | 3.052 | 1.220 | 0.430 | 0.604 | 0.782 | 1.330 | 0.066 |
| | | .069/0.12 | .014/0.19 | NS/0.11 | NS/0.05 | NS/0.02 | NS/0.02 | NS/0.03 | NS/0.05 | NS/0.003 |
| Hemi × match type | 1,25 | 0.360 | 1.757 | 0.071 | 0.663 | 0.260 | 2.211 | 1.204 | 0.618 | 3.060 |
| | | NS/0.01 | NS/0.07 | NS/0.003 | NS/0.03 | NS/0.01 | NS/0.08 | NS/0.05 | NS/0.01 | NS/0.11 |
| Type × hemi × group | 1,25 | 1.889 | 1.692 | 1.115 | 0.190 | 0.429 | 0.626 | 0.102 | 0.120 | 0.027 |
| | | NS/0.07 | NS/0.06 | NS/0.04 | NS/0.01 | NS/0.02 | NS/0.02 | NS/0.004 | NS/0.01 | NS/0.001 |
| Region × hemi | 2,50 | 0.626 | 0.114 | 0.290 | 2.253 | 2.327 | 1.145 | 0.289 | 0.623 | 0.262 |
| | | NS/0.02 | NS/0.01 | NS/0.01 | NS/0.08 | NS/0.09 | NS/0.04 | NS/0.01 | NS/0.02 | NS/0.01 |
| Group × hemi × region | 2,50 | 0.425 | 0.343 | 0.180 | 1.754 | 1.871 | 2.022 | 0.368 | 0.274 | 0.440 |
| | | NS/0.02 | NS/0.01 | NS/0.01 | NS/0.07 | NS/0.07 | NS/0.08 | NS/0.01 | NS/0.01 | NS/0.02 |
| Type × region × hemi | 2,50 | 0.717 | 0.407 | 0.71 | 0.403 | 4.917 | 1.522 | 0.910 | 0.001 | 0.937 |
| | | NS/0.03 | NS/0.02 | NS/0.003 | NS/0.02 | .022/0.16 | NS/0.06 | NS/0.04 | NS/<.001 | NS/0.04 |
| Type × region × hemi × group | 2,50 | 0.722 | 3.488 | 0.464 | 1.060 | 1.250 | 1.134 | 0.870 | 0.052 | 0.157 |
| | | NS/0.03 | 0.045/0.1 | NS/0.02 | NS/0.04 | NS/0.05 | NS/0.04 | NS/0.03 | NS/0.002 | NS/0.01 |

Table 5.

Summary of ERP modulations and group effects for each component.

| Component | Valid cohort (HAT-hᵒot): SLI | Valid cohort (HAT-hᵒot): TD | Invalid target (HAT-hᵒat): SLI | Invalid target (HAT-hᵒat): TD | Invalid cohort (HAT-hᵃot): SLI | Invalid cohort (HAT-hᵃot): TD |
|---|---|---|---|---|---|---|
| N100 | Increased negativity | – | Frontal negativity/parietal positivity | – | – | – |
| PMN | Left parietal negativity | Left parietal negativity | – | Left parietal negativity | Positivity | – |
| N400 | Parietal negativity | Parietal negativity | – | – | Parietal negativity | Parietal negativity |

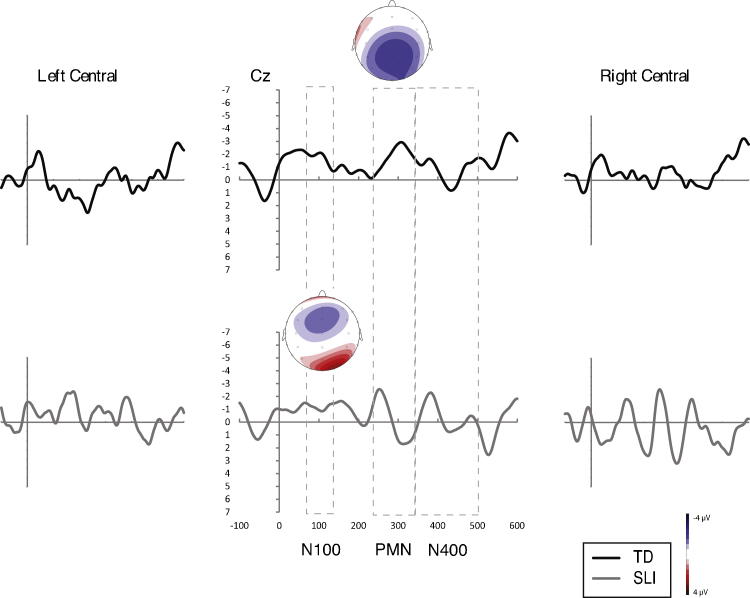

Fig. 3.

Average difference waveforms relative to the baseline valid target condition for the invalid target condition for both groups. Voltage maps illustrate topographic distribution of within group effects.

Fig. 4.

Average difference waveforms relative to the baseline valid target condition for the valid cohort condition for both groups. Voltage maps illustrate topographic distribution of within group effects.

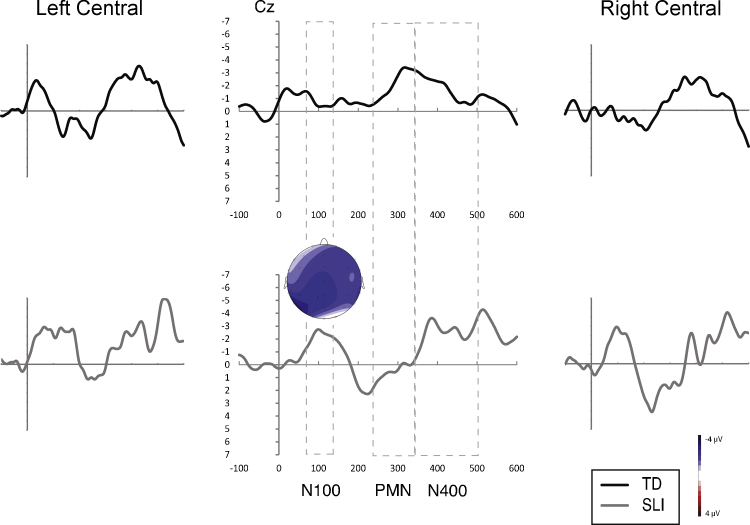

Fig. 5.

Average difference waveforms relative to the baseline valid target condition for the invalid cohort condition for both groups. Voltage maps illustrate topographic distribution of within group effects.

N100. In the match type (2) × region (3) × hemisphere (2) × group (2) ANOVA of the N100 amplitudes for the invalid target condition (e.g., HAT-hᵒat), the interaction between match type, region, and group approached significance (p = .069). To corroborate this finding, we completed a parallel ANOVA of the midline sites (6) and found a significant group effect, F(1,25) = 5.288, p = .030, modified by a significant interaction between match type, site, and group, F(5,125) = 3.169, p = .048. In corresponding ANOVAs completed for each group, there were no significant effects with match type for the TD group, whereas the interaction between match type and region was significant for the SLI group. The latter was due to increased negativity in the frontal and positivity in the parietal regions for the SLI group in response to the invalid target (see Fig. 3, bottom). For the valid cohort condition (e.g., HAT-hᵒot), there were significant effects of group (p = .033) and group × match type (p = .039). Follow-up ANOVAs conducted separately for each group revealed increased negativity in the SLI group to the valid cohort (see Fig. 4, bottom) but no significant match type difference in the TD group. There were no significant effects with group (F < 0.96, all cases) or match type (F < 1.21, all cases) in the analysis of the invalid cohort condition (e.g., HAT-hᵃot).

Results for the N100 (see Table 5) indicate atypical neural responses on the part of the SLI group to the two conditions in which the initial sound of the word contained mismatching coarticulatory information despite being a phonemic match to expectations. SLI group responses were characterized by increased negativity to the valid cohort (e.g., HAT-hoot) and increased frontal negativity and parietal positivity to the invalid target (e.g., HAT-hoat) relative to the baseline condition. The N100 responses for the TD group, on the other hand, were not differentiated by match type.
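The comparisons above rest on difference waveforms, that is, each condition's averaged waveform minus the baseline valid target waveform, summarized over a time window. The following is a minimal sketch of that arithmetic, not the analysis pipeline used in the study. The voltages, the 50 ms sampling step, and the 50–150 ms window are hypothetical values chosen only for illustration, and the function names `difference_wave` and `mean_amplitude` are our own.

```python
from statistics import fmean


def difference_wave(condition, baseline):
    """Pointwise condition-minus-baseline difference of two averaged waveforms."""
    if len(condition) != len(baseline):
        raise ValueError("waveforms must have the same number of samples")
    return [c - b for c, b in zip(condition, baseline)]


def mean_amplitude(wave, times_ms, start_ms, end_ms):
    """Mean voltage over the samples whose time lies in [start_ms, end_ms]."""
    window = [v for v, t in zip(wave, times_ms) if start_ms <= t <= end_ms]
    if not window:
        raise ValueError("no samples fall inside the requested window")
    return fmean(window)


# Hypothetical toy data in microvolts, one sample every 50 ms.
times_ms = [0, 50, 100, 150, 200]
valid_target = [0.0, -1.0, -2.0, -1.0, 0.0]    # baseline condition
invalid_target = [0.0, -1.5, -3.0, -1.5, 0.0]  # comparison condition

diff = difference_wave(invalid_target, valid_target)
# Hypothetical 50-150 ms window; a negative value means the comparison
# condition was more negative than baseline in that window.
n100_effect = mean_amplitude(diff, times_ms, 50, 150)
```

A negative `n100_effect` in this sketch corresponds to what the text describes as increased negativity relative to baseline; a positive value would correspond to increased positivity.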

PMN. Analysis of the PMN for the invalid target condition (e.g., HAT-hoat) revealed a significant three-way interaction between match type, region, and group (p = .012, ), modified by a four-way interaction between match type, region, hemisphere, and group (p = .045, ). Remaining effects involving group were nonsignificant (F < 1.202, , all cases). One clue to understanding this complex interaction came from the ANOVAs completed separately for each group, comparing the invalid target (e.g., HAT-hoat) to the baseline valid target condition. One significant match type effect was found: the interaction between match type and region in the analysis of the TD group, F(2,26) = 6.972, p = .012, , due to increased negativity at parietal sites in response to the invalid target (e.g., HAT-hoat). Closer inspection of these results in comparison with the SLI group showed that this effect was greater at left hemisphere sites (see Fig. 3, top). All remaining effects with match type were not significant in these analyses (F < 2.251, , all cases).

For the valid cohort condition (e.g., HAT-hoot), there were no significant effects involving group although there was one significant effect involving match type. The significant interaction between match type, region, and hemisphere (p = .022, ) was due to increased negativity at left parietal sites to the valid cohort condition. Finally, the analysis of the invalid cohort condition (e.g., HAT-haot) revealed a significant interaction between match type and group (p = .032, ). All remaining effects (except main effects of region and hemisphere) were not significant. In separate group analyses, the SLI group had significant positivity (see Fig. 5, bottom), F(1,12) = 7.383, p = .019, , whereas the TD group showed no significant differences, F(1,13) = 0.336, p > .05, , in response to the invalid cohort condition (e.g., HAT-haot) in this temporal region.
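As a consistency check on the reported F ratios, the degrees of freedom can be reproduced from the factor structure of the mixed ANOVAs. The sketch below is illustrative only. It assumes uncorrected degrees of freedom and complete data, and the group sizes of 13 (SLI) and 14 (TD) are inferred here from the reported F(1,12) and F(1,13) rather than taken from the Method section. The helper `effect_df` is a hypothetical name.

```python
from math import prod


def effect_df(within_levels, n_subjects, n_groups=1, involves_group=False):
    """Return (df_effect, df_error) for one term of a mixed ANOVA.

    within_levels: number of levels of each within-subject factor in the
        term (an empty list for a pure between-subjects effect).
    n_subjects: total number of participants entering the analysis.
    n_groups: number of between-subjects groups in the analysis.
    involves_group: True if the term includes the group factor.
    """
    within_df = prod(k - 1 for k in within_levels)  # empty product is 1
    group_df = (n_groups - 1) if involves_group else 1
    error_df = (n_subjects - n_groups) * within_df
    return group_df * within_df, error_df


N_SLI, N_TD = 13, 14  # inferred from the reported df, an assumption

# Midline analysis across both groups: group main effect.
group_main = effect_df([], N_SLI + N_TD, n_groups=2, involves_group=True)
# Match type (2) x site (6) x group interaction.
type_site_group = effect_df([2, 6], N_SLI + N_TD, n_groups=2, involves_group=True)
# TD-only analysis: match type (2) x region (3).
td_type_region = effect_df([2, 3], N_TD)
# Single-group match type effects.
sli_type = effect_df([2], N_SLI)
td_type = effect_df([2], N_TD)
```

Under these assumptions the sketch yields (1, 25), (5, 125), (2, 26), (1, 12), and (1, 13), matching the degrees of freedom reported for the corresponding tests.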

To summarize the results of the PMN analyses (see Table 5), a PMN at left parietal sites was present for both groups in response to the valid cohort (e.g., HAT-hoot), which contained an initial coarticulatory mismatch (and lexical mismatch) to expectations. A corresponding PMN was observed for the TD but not the SLI group to the invalid target (e.g., HAT-hoat). Thus, a PMN was noted in the TD group to both conditions presenting an initial coarticulatory mismatch to expectations, while the SLI group showed a PMN only when a lexical mismatch also occurred. Increased positivity was noted in this temporal region for the SLI but not the TD group in response to the invalid cohort condition (e.g., HAT-haot) with matching initial coarticulatory information (but mismatching lexically).

N400. The final set of analyses examined the N400. Recall that no significant group effects were found in either the omnibus ANOVA or the secondary analyses of the N400. In separate ANOVAs comparing each match type condition to the baseline valid target condition (e.g., HAT-haat), there were significant effects involving match type to the valid cohort (e.g., HAT-hoot) (type: p = .016, ; type × region: p < .001, ) and the invalid cohort (e.g., HAT-haot) (type: p = .002, ; type × region: p < .002, ). The interactions were due to increased negativity at parietal sites. Remaining effects (except hemisphere) in these analyses were not significant. As well, there were no significant effects in the invalid target (e.g., HAT-hoat) analysis. Thus, both groups showed N400 modulations to the two conditions presenting lexical mismatches (i.e., valid cohort; invalid cohort) regardless of the coarticulatory match but not to the condition presenting a lexical match (i.e., invalid target).

4. Discussion

The present study used ERP to examine the temporal nature of neural responses to unexpected coarticulatory or lexical information in school age children with either typical development or Specific Language Impairment (SLI). The congruity between presented pictures and subsequently presented auditory words was manipulated across four conditions varying the coarticulatory and/or lexical match to expectations. As summarized in Table 5, group- and condition-wise differences in the modulations of the N100, PMN, and N400 were observed. The neural response pattern of the typically developing group replicated that of our previous study with adults (Archibald and Joanisse, in press). In both cases, a PMN was elicited in response to mismatching coarticulatory information regardless of lexical match whereas N400 modulations occurred in response to mismatching words regardless of coarticulatory cues. The SLI group pattern was different for the processing of coarticulatory but not lexical information. N100 modulations were found in the SLI group to mismatching coarticulatory information while a PMN was observed only when additional cues to the incongruity were present. Lexical mismatches, on the other hand, elicited N400 modulations in the SLI group as they did for the typically developing group.
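The pattern summarized in this paragraph can be restated compactly as a mapping from the two manipulated properties of each condition (coarticulatory match and lexical match) to the components that were modulated relative to baseline. The sketch below is our own simplified restatement, not part of the original analysis. It ignores the direction and topography of each effect, and it omits the increased positivity found for the SLI group in the PMN window for the invalid cohort condition. The names `Condition` and `modulated_components` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Condition:
    name: str
    example: str
    coarticulation_matches: bool  # initial coarticulatory cue matches expectation
    word_matches: bool            # heard word matches the pictured word


CONDITIONS = {
    "valid target": Condition("valid target", "HAT-haat", True, True),
    "invalid target": Condition("invalid target", "HAT-hoat", False, True),
    "valid cohort": Condition("valid cohort", "HAT-hoot", False, False),
    "invalid cohort": Condition("invalid cohort", "HAT-haot", True, False),
}


def modulated_components(condition, group):
    """Components modulated relative to baseline, as summarized in the text.

    group is "TD" or "SLI". Direction and scalp distribution are ignored.
    """
    coart_mismatch = not condition.coarticulation_matches
    word_mismatch = not condition.word_matches
    effects = set()
    # N100: modulated by coarticulatory mismatch in the SLI group only.
    if group == "SLI" and coart_mismatch:
        effects.add("N100")
    # PMN: coarticulatory mismatch; the SLI group also needs a lexical mismatch.
    if coart_mismatch and (group == "TD" or word_mismatch):
        effects.add("PMN")
    # N400: lexical mismatch in both groups.
    if word_mismatch:
        effects.add("N400")
    return effects


td_invalid_target = modulated_components(CONDITIONS["invalid target"], "TD")
sli_invalid_target = modulated_components(CONDITIONS["invalid target"], "SLI")
```

The invalid target condition is the one that separates the groups in this restatement: it yields a PMN without an N100 effect for the TD group, and an N100 effect without a PMN for the SLI group.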

These results add to the growing evidence that coarticulatory differences in productions of the same phoneme contribute to spoken word recognition (e.g., McMurray et al., 2008, McMurray et al., 2002), at least for typical groups. In the typically developing children observed here, and in an adult group in our previous work (Archibald and Joanisse, in press), subphonemic coarticulatory information elicited a PMN – a response previously established in response to mismatching phonological expectations (Desroches et al., 2009, Newman et al., 2003, D’Arcy et al., 2000, Connolly and Phillips, 1994). Taken together, these findings strongly suggest that subphonemic and phonemic information are processed similarly resulting in a PMN to both within- and between-category phonetic variation. It follows from this that subphonemic coarticulatory information may play an important role in facilitating speech segmentation and recognition (Gow and McMurray, 2007). The results also provide some information about the typical development of these processes in the sense that our control group of 8–10-year-old children showed the same sensitivity to subphonemic coarticulatory cues as our adult group in our previous study. The findings indicate that typical speech perception processes have developed sufficiently to discriminate subtle phonemic differences by this age. Such a suggestion is supported by reports that infants are sensitive to subtle phonological information (McMurray and Aslin, 2005).

Of potentially greater importance are our SLI findings. We demonstrated atypical sensitivity to phonetic detail in children with SLI in the present study, and most notably not at the whole sound (or phoneme) level but instead at a subphonemic level. Neural responses of interest were differentiated based on the subtle coarticulatory cues present in our stimuli, indicating that children in the SLI group did perceive/process these differences, albeit in a different way from the control group. Unlike typical language users, the SLI group consistently showed an N100 modulation to unexpected coarticulatory information. The N100 modulation could reflect greater sensitivity to the acoustic variation associated with coarticulatory information at this early time point on the part of the SLI group. The group differences in the N100 may also reflect more top-down modulations such as the influence of expectations (Zhang et al., 2005) or attention (Vogel and Luck, 2000). It may be that early neural processing is less attuned to relevant acoustic and phonemic distinctions in children with SLI than in children with typical development. This suggestion is supported by findings that greater language experience leads to decreased within-category discrimination such as in 12- vs. 6-month-old infants (Stager and Werker, 1997) or first vs. second language speakers (Zhang et al., 2005).

While further studies are needed in order to more fully understand the group differences in the N100, there are several potential consequences to the early processing of irrelevant phonetic detail observed in the SLI group. For one, this may amount to unnecessary processing tapping resources that are consequently unavailable for other cognitive activities. Secondly, the temporal integration of information available at different stages of spoken word recognition may be interrupted by processing that occurs too early. And thirdly, errors in early processing may interfere with critical processing at later stages of recognizing spoken words.

Results for the PMN provided some evidence that later critical processing of coarticulatory information was impaired in the SLI group. PMNs were noted consistently in response to mismatching coarticulatory information in both the typically developing children in the present study and adults in our previous work (Archibald and Joanisse, in press) suggesting that this reflects a crucial stage in phonological processing related to mapping acoustic inputs onto phonological categories (Desroches et al., 2009). Unlike the typically developing groups, the children with SLI did not show a consistent pattern of PMNs to coarticulatory mismatches. When words were presented that mismatched expectations in both coarticulatory and lexical information, a PMN was observed as expected. However, a PMN was not elicited when only coarticulatory incongruities were present. These results suggest the influence of top-down processing such that lexicality influenced phonological processing. When lexicality provided additional cues to the mismatch, processing of the mismatching coarticulatory information was facilitated. However, when lexicality matched expectations, fine-grained phonological processing was not prompted. Consistent with this, some researchers have argued that the PMN reflects early lexical processing (Van den Brink and Hagoort, 2004, Van Petten et al., 1999).

How might the N100 and PMN findings for our SLI group be integrated? Coarticulatory mismatches yielded N100 modulations, but they did not also induce PMNs unless a mismatching lexical cue was also present. There are three possible explanations of these findings. One is that the entire spoken word recognition process is shifted to an earlier temporal period in SLI. However, this seems unlikely given that the SLI group exhibited N400 modulations in response to word-level information as expected indicating normal lexical processing at the typical temporal window. A second suggestion is that only phonological processing is shifted leading to a temporal desynchronization in phonological and lexical processing in SLI. The phonological information may be available too early reducing the impact of phonemic (or subphonemic) information and forcing the system to rely more heavily on later lexical processing. One observation makes this explanation also unlikely: the presence of a PMN at least in cases of coarticulatory and lexical mismatches in the SLI group demonstrates phonological processing at the expected time window.

A third, and in our view the most likely, possibility is that coarticulatory cues are not recognized as important information contributing to phonological processing in SLI. Additional early processing occurred, but this early processing prompted no further phonological processing. Lexical violations, however, can trigger a rechecking of the signal resulting in processing of subphonemic variations. The failure to use subphonemic information available in the speech stream in a meaningful way could have important implications for rapid language processing. As a result, language learning may require more repetitions than expected and proceed more slowly overall, two characteristics known to be typical of children with SLI. It is clear that further investigation of the processing of coarticulatory information in SLI is warranted. One approach addressing the possible role of lexical violations in prompting rechecking would be to examine SLI responses to coarticulatory mismatches in words and nonwords. As well, group differences in behavioral responses to coarticulatory mismatches would provide further evidence of a reduced SLI sensitivity to subphonemic information.

We found no group differences in N400 modulations in the present study. It must be acknowledged, however, that we only assessed the presence or absence of modulations in comparisons between lexical matches and mismatches involving highly familiar words. In contrast to these findings, qualitative differences in this temporal region have been reported: For example, Neville et al. (1993) reported attenuation of the N400 in some children with SLI in response to a sentence-level task while Helenius et al. (2009) found a weaker lexicality effect in their SLI group for the equivalent component in magnetoencephalography studies, the N400m. These findings illustrate an important point concerning the use of ERP in language disorders, namely, that modulations of a specific component are influenced by the task being completed. Thus, findings of atypical timing or shape of ERP responses must be considered within the context of the cognitive processes engaged during their elicitation. As well, exploration of these questions with a larger subject sample involving both ERP measures of peak values around a short time window and corresponding behavioral tests may provide a better understanding of individual and possible subgroup differences.

Increased positivity reflecting attenuation was noted for the SLI group for two components: the N100 in response to a coarticulatory mismatch and lexical match, and the PMN in response to a coarticulatory match and lexical mismatch. The attenuation may reflect negativity at an earlier time point, or more variability in the timing of a peak from one trial to the next. It may be that there is reduced synchronization of the N100 response to the onset of the word in the SLI group, resulting in a smaller average response. Clearly, further investigation (including replication) is warranted.
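The suggestion that trial-to-trial variability in peak timing would yield a smaller averaged response can be illustrated with a simple simulation. The sketch below is a toy model and not an analysis of the present data. Every single-trial response is an identical Gaussian-shaped negative deflection, and only its latency differs across trials. The amplitude, width, latencies, and number of trials are hypothetical values.

```python
import math


def single_trial(t_ms, latency_ms, amplitude_uv=-5.0, width_ms=20.0):
    """Gaussian-shaped negative deflection peaking at latency_ms (toy model)."""
    return amplitude_uv * math.exp(-((t_ms - latency_ms) ** 2) / (2 * width_ms ** 2))


def average_waveform(latencies_ms, times_ms):
    """Average the single-trial responses sample by sample."""
    n_trials = len(latencies_ms)
    return [
        sum(single_trial(t, latency) for latency in latencies_ms) / n_trials
        for t in times_ms
    ]


times_ms = list(range(0, 201, 2))

# Same single-trial amplitude in both cases; only the peak timing differs.
synchronized = [100, 100, 100, 100, 100]  # every trial peaks at 100 ms
jittered = [60, 80, 100, 120, 140]        # peak latency varies across trials

# The most negative sample of each averaged waveform.
peak_synchronized = min(average_waveform(synchronized, times_ms))
peak_jittered = min(average_waveform(jittered, times_ms))
```

In this toy model the averaged peak for the jittered trials is smaller in magnitude than for the synchronized trials even though every single-trial response has the same amplitude. For this reason attenuation in an average cannot by itself distinguish weaker responses from less consistently timed ones.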

5. Conclusions

The present findings provide evidence of a Phonological Mapping Negativity (PMN) to subphonemic coarticulatory information in typically developing children replicating our previous findings with adults (Archibald and Joanisse, in press). Children with SLI, on the other hand, did not show the same pattern of neural responses. We capitalized on the temporal sensitivity of ERPs to investigate the subtle nature of the auditory processing deficit in SLI. The SLI group in the present study completed our task as accurately and rapidly as the typically developing group. As well, N400 responses to lexical information did not differ between the two groups. Group differences arose solely in response to subphonemic coarticulatory cues, and were only evident when no other cues were available. These findings may aid in explaining some of the contradictory findings pertaining to auditory processing deficits in SLI (e.g., Tallal and Piercy, 1973a, Tallal and Piercy, 1973b, Bishop et al., 2005). Children with SLI may be able to use redundant information inherent to language to overcome subtle auditory processing deficits resulting in better performance on tasks in which such redundancies are available.

The suggestion that children with SLI have phonological processing deficits is not a new one (Chiat and Hunt, 2001). Indeed, past ERP studies have demonstrated abnormal SLI responses to oddball auditory stimuli (the MMN; Shafer et al., 2005) and individual speech and nonspeech sounds (McArthur and Bishop, 2004). Results of the current study, however, shed new light on this issue by extending findings to word-level linguistic stimuli within a more naturalistic language processing paradigm. The present findings are also the first to isolate subphonemic speech deficits in SLI. It is clear that the processing of subtle but meaningful acoustic variation in the speech stream is a challenge for children with SLI that may impact language learning.

Acknowledgments

This work was supported by a Natural Sciences and Engineering Research Council of Canada postdoctoral fellowship to the first author, and a Canadian Institutes of Health Research Operating Grant to the second author. Infrastructure support was provided by the Canada Foundation for Innovation and the Ontario Innovation Trust.

Footnotes

Although the N280 has also been studied in the context of the visual processing of open and closed lexical sets (Neville et al., 1992), it is the modulation of this component to auditorily presented words that is of interest to the present study. It should be noted that the neural response to acoustic/phonemic changes has also been referred to as the acoustic change complex response (Martin and Boothroyd, 1999).

References

- Archibald L.M.D., Gathercole S.E. Nonword repetition in specific language impairment: more than a phonological short-term memory deficit. Psychon. B. Rev. 2007;14:919–924. doi: 10.3758/bf03194122.

- Archibald L.M.D., Gathercole S.E., Joanisse M.F. Multisyllabic nonwords: more than a string of syllables. JASA. 2009;125:1712–1722. doi: 10.1121/1.3076200.

- Archibald L.M.D., Joanisse M.F. Electrophysiological responses to coarticulatory and word level miscues. J. Exp. Psychol. Human. in press.

- Archibald L.M.D., Joanisse M.F. On the sensitivity and specificity of nonword repetition and sentence recall to language and memory impairments in children. J. Speech Lang. Hear. R. 2009;52:899–914. doi: 10.1044/1092-4388(2009/08-0099).

- Bishop D.V.M. Chapel Press; Oxford, UK: 1982. Test for Reception of Grammar. Medical Research Council.

- Bishop D.V.M., Adams C.V., Nation K., Rosen S. Perception of transient non-speech stimuli is normal in specific language impairment: evidence from glide discrimination. Appl. Psycholinguist. 2005;26:175–194.

- Brown L., Sherbenou R.J., Johnsen S.K. 3rd ed. Pearson Assessments; 1997. Test of Nonverbal Intelligence.

- Chiat S., Hunt J. Connections between phonology and semantics: an exploration of lexical processing in a language-impaired child. CLTT. 2001;9:200–213.

- Connine C.M., Darnieder L.M. Perceptual learning of co-articulation in speech. J. Mem. Lang. 2009;61:412–422. doi: 10.1016/j.jml.2009.07.003.

- Connolly J.F., Phillips N.A. Event-related potential components reflect phonological and semantic processing of the terminal word of spoken sentences. J. Cognitive Neurosci. 1994;6(3):256–266. doi: 10.1162/jocn.1994.6.3.256.

- Connolly J.F., Phillips N.A., Forbes K.A.K. The effects of phonological and semantic features of sentence-ending words on visual event-related brain potentials. Electroen. Clin. Neuro. 1995;94:276–287. doi: 10.1016/0013-4694(95)98479-r.

- Dahan D., Magnuson J.S., Tanenhaus M.K., Hogan E.M. Subcategorical mismatches and the time course of lexical access: Evidence for lexical competition. Lang. Cognitive Proc. 2001;16(5–6):507–534.

- D’Arcy R.C.N., Connolly J.F., Eskes G.A. Evaluation of reading comprehension with neuropsychological and event-related brain potential (ERP) methods. J. Int. Neuropsychol. Soc. 2000;6:556–567. doi: 10.1017/s1355617700655054.

- Desroches A.S., Newman R.L., Joanisse M.F. Investigating the time course of spoken word recognition: electrophysiological evidence for the influences of phonological similarity. J. Cognitive Neurosci. 2009 doi: 10.1162/jocn.2008.21142.

- Dollaghan C., Campbell T.F. Nonword repetition and child language impairment. J. Speech Lang. Hear. R. 1998;41:1136–1146. doi: 10.1044/jslhr.4105.1136.

- Fowler C.A., Brown J.M. Perceptual parsing of acoustic consequences of velum lowering from information for vowels. Percept. Psychophys. 2000;62(1):21–32. doi: 10.3758/bf03212058.

- Gaskell M.G. Phonological variation and its consequences for the word recognition system. Lang. Cognitive Proc. 2001;16(5–6):723–729.

- Gow D.W., McMurray B. Word recognition and phonology: The case of English coronal place assimilation. In: Cole J.S., Hualde J., editors. Papers in … 2007.

- Greenhouse S.W., Geisser S. On methods in the analysis of profile data. Psychometrika. 1959;24:95–112.

- Helenius P., Parviainen T., Paetau R., Salmelin R. Neural processing of spoken words in specific language impairment and dyslexia. Brain. 2009;132:1918–1927. doi: 10.1093/brain/awp134.

- Holcomb P.J., Neville H.J. Natural speech processing: an analysis using event-related brain potentials. Psychobiology. 1991;19(4):286–300.

- Johnson E.K., Jusczyk P.W. Word segmentation by 8-month-olds: when speech cues count more than statistics. J. Mem. Lang. 2001;44:548–567.

- Leonard L.B. MIT Press; Massachusetts: 1998. Children with Specific Language Impairments.

- Ludlow C., Cudahy E., Bassich C., Brown G. The auditory processing skills of hyperactive, language impaired, and reading disabled boys. In: Katz J., Lasky E., editors. Central auditory processing disorders: Problems of speech, language, and learning. University Park Press; Baltimore: 1983. pp. 163–185.

- Marslen-Wilson W., Warren P. Levels of perceptual representation and process in lexical access: words, phonemes, and features. Psychol. Rev. 1994;101(4):653–675. doi: 10.1037/0033-295x.101.4.653.

- Martin B.A., Boothroyd A. Cortical, auditory, event-related potentials in response to periodic and aperiodic stimuli with the same spectral envelope. Ear Hear. 1999;20:33–44. doi: 10.1097/00003446-199902000-00004.

- McArthur G.M., Bishop D.V.M. Frequency discrimination deficits in people with specific language impairment: reliability, validity, and linguistic correlates. J. Speech Lang. Hear. R. 2004;47:527–541. doi: 10.1044/1092-4388(2004/041).

- McMurray B., Aslin R.N. Infants are sensitive to within-category variation in speech perception. Cognition. 2005;95:B15–B26. doi: 10.1016/j.cognition.2004.07.005.

- McMurray B., Clayards M., Tanenhaus M., Aslin R. Tracking the timecourse of phonetic cue integration during spoken word recognition. Psychon. B. Rev. 2008;15(6):1064–1071. doi: 10.3758/PBR.15.6.1064.

- McMurray B., Tanenhaus M.K., Aslin R.N. Gradient effects of within-category phonetic variation on lexical access. Cognition. 2002;86(2):B33–B42. doi: 10.1016/s0010-0277(02)00157-9.

- McQueen J.M., Norris D., Cutler A. Lexical influence in phonetic decision making: Evidence from subcategorical mismatches. J. Exp. Psychol. Human. 1999;25(5):1363–1389.

- Menell P., McAnally K., Stein J. Psychophysical sensitivity and physiological response to amplitude modulation in adult dyslexic listeners. J. Speech Hear. Res. 1999;42:797–803. doi: 10.1044/jslhr.4204.797.

- Mitterer H., Blomert L. Coping with phonological assimilation in speech perception: evidence for early compensation. Percept. Psychophys. 2003;65(6):956–969. doi: 10.3758/bf03194826.

- Neville H.J., Coffey S.A., Holcomb P.J., Tallal P. The neurobiology of sensory and language processing in language-impaired children. J. Cognitive Neurosci. 1993;5:235–253. doi: 10.1162/jocn.1993.5.2.235.

- Neville H.J., Mills D.L., Lawson D.S. Fractionating language: different neural subsystems with different sensitive periods. Cereb. Cortex. 1992;2:244–258. doi: 10.1093/cercor/2.3.244.

- Newman R.L., Connolly J.F. Electrophysiological markers of pre-lexical speech processing: evidence for bottom-up and top-down effects on word processing. Biol. Psychol. 2009;80:114–121. doi: 10.1016/j.biopsycho.2008.04.008.

- Newman R.L., Connolly J.F., Service E., McIvor K. Influence of phonological expectations during a phoneme deletion task: Evidence from event-related brain potentials. Psychophysiology. 2003;40(4):640–647. doi: 10.1111/1469-8986.00065.

- Picton T.W., Hillyard S.A., Krausz H.I., Galambos R. Human auditory evoked potentials: I. evaluation of components. Electroen. Clin. Neuro. 1974;36(2):179–190. doi: 10.1016/0013-4694(74)90155-2.

- Picton T.W., Woods D.L., Baribeau-Braun J., Healey T.M.G. Evoked potential audiometry. J. Otolaryngol. 1977;6:90–119.

- Rosen S. Auditory processing in dyslexia and specific language impairment: is there a deficit? What is its nature? Does it explain anything? J. Phonetics. 2003;31:509–527.

- Sanders L.D., Neville H.J. An ERP study of continuous speech processing. I. Segmentation, semantics, and syntax in native speakers. Brain Res. Cogn. Brain Res. 2003;15:228–240. doi: 10.1016/s0926-6410(02)00195-7.

- Semel E.M., Wiig E.H., Secord W.A. Psych Corp/Harcourt; San Antonio, TX: 2003. Clinical evaluation of language fundamentals – 4.

- Shafer V.L., Morr M., Datta H., Kurtzberg D., Schwartz R. Neurophysiological indexes of speech processing deficits in children with specific language impairment. J. Cognitive Neurosci. 2005;17:1168–1180. doi: 10.1162/0898929054475217.

- Stager C.L., Werker J.F. Infants listen for more phonetic detail in speech perception than in word-learning tasks. Nature. 1997;388:381–382. doi: 10.1038/41102.

- Steinhauer K., Connolly J.F. Event-related potentials in the study of language. In: Stemmer B., Whitaker H., editors. Handbook of the Neuroscience of Language. Elsevier; Amsterdam: 2008. pp. 91–104.

- Steinschneider M., Volkov I.W., Noh M.D., Garell P.C., Howard M.A., III Temporal encoding of the voice onset time (VOT) phonetic parameter by field potentials recorded directly from human auditory cortex. J. Neurophysiol. 1999;82:2346–2357. doi: 10.1152/jn.1999.82.5.2346.

- Stelmack R.M., Miles J. The effect of picture priming on event-related potentials of normal and disabled readers during a word recognition memory task. J. Clin. Exp. Neuropsychol. 1990;12:887–903. doi: 10.1080/01688639008401029.

- Sussman J. Perception of formant transition cues to place of articulation in children with language impairments. J. Speech Hear. Res. 1993;36:1286–1299. doi: 10.1044/jshr.3606.1286.

- Tallal P., Piercy M. Deficits of nonverbal auditory perception in children with developmental aphasia. Nature. 1973;241:468–469. doi: 10.1038/241468a0.

- Tallal P., Piercy M. Developmental aphasia: Impaired rate of non-verbal processing as a function of sensory modality. Neuropsychologia. 1973;11:389–398. doi: 10.1016/0028-3932(73)90025-0.

- Tallal P., Piercy M. Developmental aphasia: Rate of auditory processing and selective impairment of consonant perception. Neuropsychologia. 1974;12:83–93. doi: 10.1016/0028-3932(74)90030-x.

- Tallal P., Piercy M. Developmental aphasia: The perception of brief vowels and extended stop consonants. Neuropsychologia. 1975;12:83–94. doi: 10.1016/0028-3932(75)90049-4.

- Tallal P., Stark R. Speech acoustic cue discrimination abilities of normally developing and language impaired children. JASA. 1981;69:568–574. doi: 10.1121/1.385431.

- Van den Brink D., Hagoort P. The influence of semantic and syntactic context constraints on lexical selection and integration in spoken-word comprehension as revealed by ERPs. J. Cognitive Neurosci. 2004;16(6):1068–1084. doi: 10.1162/0898929041502670.

- Van Petten C., Coulson S., Rubin S., Plante E., Parks M. Time course of word identification and semantic integration in spoken language. J. Exp. Psychol. Learn. 1999;25(2):394–417. doi: 10.1037//0278-7393.25.2.394.

- Vogel E.K., Luck S.J. The visual N1 component as an index of a discrimination process. Psychophysiology. 2000;37:190–203.

- Wechsler D. Psychological Corporation; San Antonio, TX: 2003. Wechsler Intelligence Scale for Children – IV.

- Woldorff M.G., Hillyard S.A. Modulation of early auditory processing during selective listening to rapidly presented tones. Electroen. Clin. Neuro. 1992;79(3):170–191. doi: 10.1016/0013-4694(91)90136-r.

- Wright B.A., Lombardino L.D., King W.M., Puranik C.S., Leonard C.M., Merzenich M.M. Deficits in auditory temporal spectral resolution in language-impaired children. Nature. 1997;387:176–178. doi: 10.1038/387176a0.

- Zhang Y., Kuhl P.K., Imada T., Kotani M., Tohkura Y. Effects of language experience: neural commitment to language-specific auditory patterns. NeuroImage. 2005;26:703–720. doi: 10.1016/j.neuroimage.2005.02.040.