Highlights

- We observed neurophysiological correlates of music-syntactic processing in 30-month-olds.
- This indicates that children of that age process harmonic sequences according to complex syntactic regularities.
- These representations of music-syntactic regularities must have been acquired before and stored in long-term memory.
- Similar to syntax processing in language, these processes are highly automatic and do not require attention.

Keywords: Music perception, Musical syntax, Neurophysiology, ERPs, EEG, Infants (30-month-olds)

Abstract

Music is a basic and ubiquitous socio-cognitive domain. However, our understanding of the time course of the development of music perception, particularly regarding implicit knowledge of music-syntactic regularities, remains contradictory and incomplete. Some authors assume that the acquisition of knowledge about these regularities lasts until late childhood, but there is also evidence for the presence of such knowledge in four- and five-year-olds. To explore whether such knowledge is already present in younger children, we tested whether 30-month-olds (N = 62) show neurophysiological responses to music-syntactically irregular harmonies. We observed an early right anterior negativity in response to both irregular in-key and out-of-key chords. The N5, a brain response usually present in older children and adults, was not observed, indicating that processes of harmonic integration (as reflected in the N5) are still in development in this age group. In conclusion, our results indicate that 30-month-olds already have acquired implicit knowledge of complex harmonic music-syntactic regularities and process musical information according to this knowledge.

1. Introduction

Music is one of the oldest, most basic, and ubiquitous socio-cognitive domains of the human species (cf., e.g., Fitch, 2006, Huron, 2001, Koelsch, 2012). In early childhood, musical communication (such as maternal singing) has been proposed to play a major role in the emotional, cognitive, and social development of children (cf., e.g., Gerry et al., 2012, Trehub, 2003). Responsiveness to infant-directed singing appears to be inborn (Masataka, 1999), and infants demonstrate more sustained attentiveness and engagement during maternal singing than maternal speaking (Nakata and Trehub, 2004, Trehub, 2003). Music also has an arousal-modulating effect, leading to changes in cortisol levels in 6-month-olds (Shenfield et al., 2003) and promoting reciprocal emotional ties (for a review, see, e.g., Trehub and Nakata, 2001).

Musical sequences contain perceptually discrete elements (tones and chords) that unfold in time and that are concatenated according to music-syntactic regularities into sequences to form melodic and harmonic structures. In order to fully appreciate harmonic sequences, knowledge about such regularities must be acquired (for reviews, see, e.g., Stalinski and Schellenberg, 2012, Trainor and Corrigall, 2010). It has been hypothesised that the order of knowledge acquisition about different aspects of music is related to the degree of universality (Hannon and Trainor, 2007). Sensitivity to consonance or dissonance is regarded universal and emerges earliest, suggesting processing constraints in the auditory system (cf., e.g., Chiandetti and Vallortigara, 2011, Fishman et al., 2001, Fritz et al., 2009, Trainor et al., 2002). Knowledge about which pitches belong to a particular scale (i.e., key membership) develops next: in Western tonal music, an octave is divided into 12 pitches, which constitute the so-called chromatic scale. Smaller subsets of these 12 pitches form the diatonic scales (e.g., the major scale). Behavioural studies with melodies as stimuli indicate that knowledge of key membership is established in 5-year-olds (Trainor and Trehub, 1994). Musical chords refer to several pitches sounding simultaneously. The succession of chords follows regularities that refer to local as well as non-local dependencies. Regularities regarding local dependencies have been described by the theory of harmony, and can be demonstrated using statistical analyses of transition probabilities in music corpora (Rohrmeier and Cross, 2008). Regularities regarding non-local dependencies have been described by generative theories of (tonal) music (cf. Lerdahl and Jackendoff, 1996, Rohrmeier, 2011). 
The acquisition of these music-syntactic regularities by exposure to music represents a complex learning problem which is solved by employing statistical learning in concert with other learning mechanisms (cf. McMullen and Saffran, 2004, McMullen Jonaitis and Saffran, 2009, Saffran et al., 1999, Rohrmeier and Rebuschat, 2012).

It was previously assumed that “sensitivity to culture-specific details of tonal and harmonic structure seems to emerge between 5 and 7 years of age” (Trehub, 2003, p. 670). This assumption was built upon results from several behavioural studies exploring how this knowledge is acquired during development: most of these experiments (Krumhansl and Keil, 1982, Trainor and Trehub, 1992, Trainor and Trehub, 1994) used short melodies to explore knowledge about tonal and harmonic music-syntactic regularities. In Krumhansl and Keil (1982), sequences of six tones were presented: the first four tones (C – E – C – G) established a tonal context and were followed by two probe tones. These two tones either belonged to the diatonic scale or were two non-diatonic (out-of-key) tones. Tones from the diatonic scale were further categorised into tonics (C), belonging to the tonic triad (C – E – G), or not belonging to the tonic triad (D – F – A – B). Six possible combinations of the last two tones were evaluated: (a) both tonics, (b) both belonging to the triad, (c) first belonging to the triad, second not belonging to the triad, (d) first not belonging to the triad, second belonging to the triad, (e) both not belonging to the triad, and (f) both non-diatonic. Children judged whether these tones sounded “good” or “bad”. Krumhansl and Keil (1982) demonstrated that, with increasing age, children become capable of differentiating (i.e., giving different ratings to) the six conditions listed above. Whereas 6–7-year-olds gave different preference ratings only to diatonic vs. non-diatonic probe tones, 8–9-year-olds additionally gave different preference ratings to diatonic continuations depending on whether or not they belonged to the tonic triad. Adults gave different ratings to all six conditions.
This was taken as evidence that the 6–7-year-olds had acquired knowledge about scale membership, whereas 8–9-year-olds had additional knowledge of which tones belonged to the tonic triad (and are therefore most stable within the tonal system). Trainor and Trehub (1992, 1994) used short melodies of 10 tones. In some, the 6th tone was replaced by another tone. The replacement tones either belonged to the same scale (diatonic change) or not (non-diatonic change); in Trainor and Trehub (1994) the diatonic change was further divided into a within-harmony and an out-of-harmony change (the out-of-harmony condition represents a change to another syntactically correct in-key harmony, and the within-harmony condition a change to another tone of the same syntactically correct in-key harmony). Participants were trained to signal if they detected a change in comparison to the standard melody, using a go/no-go head turn procedure (Trainor and Trehub, 1992), or by raising their hand (Trainor and Trehub, 1994). In one of these studies (Trainor and Trehub, 1992), 8- to 9-month-old infants and adults were tested. Whereas adults detected non-diatonic (out-of-key) changes more readily than diatonic (within-key) changes, infants detected both changes equally well, indicating that the performance of infants was not affected by knowledge about key membership. The other study (Trainor and Trehub, 1994) compared 5- and 7-year-old children to adults. All age groups detected replacement tones that did not belong to the established key (non-diatonic change) more easily than those that belonged to the same key, indicating that all age groups had acquired sufficient knowledge about which tones constitute a scale. Furthermore, adults and 7-year-olds also performed better on the out-of-harmony than on the within-harmony change, suggesting that 5-year-olds could not differentiate which tones belong to a chord function as well as 7-year-olds could.

More recent behavioural and neurophysiological evidence indicates that 4–5-year-old children already possess some implicit knowledge about harmonic regularities. Corrigall and Trainor (2010) demonstrated knowledge of both key membership and harmonic regularity in this age group, using a short well-known melody (Twinkle, Twinkle Little Star), its harmonised version, and short sequences of chords (which were derived from the harmonisation of the melody but unknown to the children). The last tone of the melody, the last chord in the harmonised version, or the last chord of the chord sequences was modified. Children compared the modified to the unmodified sequences and gave preference ratings. We focus on the chord sequences, which are most comparable to the stimuli of the present experiment (cf. Fig. 4 in Corrigall and Trainor, 2010). In response to these chord sequences, the highest preference ratings were given to the standard (unmodified) version, which ended on a (syntactically correct) tonic chord in root position (following a dominant without a fifth). The second highest ratings were given to versions that ended on a tonic presented as a six–four chord (“within-harmony”, which is harmonically less regular at the end of a chord progression than a tonic in root position). The third highest ratings were given to versions that ended on a supertonic without a fifth (“out-of-harmony”), which is less likely to occur after a dominant than a tonic, and which is syntactically incorrect at the end of a chord progression. The lowest ratings were given to the sequences that ended on a chord that left the established key (“out-of-key”).
These findings indicate that the 4–5-year-olds had acquired not only knowledge about key membership (as reflected in the lowest preference for chords that did not belong to the established key) but also some knowledge of harmonic regularities (as indicated by the different preference ratings to the chord sequences ending on a tonic, compared to sequences ending on a supertonic).

Electrophysiologically, violations of music-syntactic regularities usually elicit two brain responses, denoted as early right anterior negativity (ERAN) and N5. In adults, the ERAN evoked by irregular chords is maximal around 150–200 ms after stimulus onset, and has a frontal scalp distribution, often with right-hemispheric weighting (Kim et al., 2011, Koelsch et al., 2000, Leino et al., 2007, Loui et al., 2005, Miranda and Ullman, 2007). The neural mechanisms underlying the generation of the ERAN operate in the absence of attention (Koelsch et al., 2002b, Loui et al., 2005), but can be modulated by different attentional demands (Loui et al., 2005, Maidhof and Koelsch, 2010). The elicitation of an ERAN requires representations of music-syntactic regularities stored in long-term memory (cf. Koelsch, 2009). The ERAN amplitude is correlated with the degree of harmonic irregularity, such as the probability of local chord transitions (Kim et al., 2011). Both the ERAN and the N5 can already be observed in 4–5-year-olds (Jentschke et al., 2008, Koelsch et al., 2003), demonstrating knowledge of music-syntactic regularities of harmony in children of that age group. Compared to adults, in children the ERAN latencies are usually longer, namely around 300–350 ms in 5-year-old children (Jentschke et al., 2008, Koelsch et al., 2003) and around 200–250 ms in 11-year-olds (Jentschke and Koelsch, 2009). A recent study by Corrigall and Trainor (2014) also observed an early ERP response to music-syntactic violations; however, this ERP response had a positive polarity instead of the negativity that is usually elicited (interestingly, in adults, reported as another experiment of the same study, an ERAN was obtained using the same stimulus material).

The N5, peaking around 500 ms, is also most prominent over frontal electrodes. The N5 amplitude is enlarged with increasing difficulty to integrate an incoming chord into the previous harmonic context (Koelsch et al., 2000). The N5 is reminiscent of the N400 component elicited by language stimuli that require semantic integration of a word into the working context: N5 and N400 are similar with regard to latency, morphology and scalp distribution and both components were shown to interact (i.e., the N5 amplitude was diminished when a semantic violation in language was presented concurrently; Steinbeis and Koelsch, 2008). These features gave rise to the notion that the N5 is related to the processing of musical meaning, given that processing of musical structure requires integration of both expected and unexpected events into a larger, meaningful musical context, and that harmonic tension-resolution patterns appear to be meaningful to listeners familiar with Western music (cf. Koelsch, 2005, Steinbeis and Koelsch, 2008).

So far, it is unclear whether the cognitive processes reflected in the ERAN and N5 brain responses are already present in children younger than 4–5 years. In the present study, we explored whether music-syntactic processing occurs in children as young as 30-month-olds. We used event-related brain potentials (ERPs), which are very suitable to assess such processing in infants and very young children, and tested whether an ERAN and an N5 can be observed in response to music-syntactic violations.

2. Methods

2.1. Participants

Thirty-month-old children (N = 62; 38 boys; 29–31 months old, M = 30 months) participated in an ERP experiment investigating the processing of musical syntax. All children were healthy, had no hearing problems (i.e., they passed a screening for oto-acoustic emissions), and were born to native German-speaking parents.1 The children were randomly assigned to one of two subgroups with a slightly different set of stimuli (described below): in one subgroup (N = 33; 20 boys), the irregular chord at the end of a sequence was a supertonic; in the other subgroup (N = 29; 18 boys), it was a so-called Neapolitan sixth chord (see also below). Parents gave written informed consent to the participation of their children in the experiment and received compensation for expenses. The study was conducted according to the Declaration of Helsinki and approved by the Ethics Committee of the Charité University Medicine Berlin.

2.2. Stimuli and paradigm

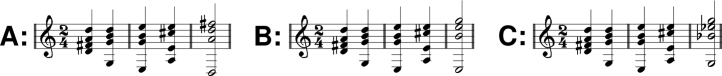

To investigate the processing of musical syntax in 30-month-old children we used a well-established paradigm and ERP methodology, previously used in studies with older children (Jentschke et al., 2008, Koelsch et al., 2003) and adults (e.g., Koelsch et al., 2000, Koelsch et al., 2007). EEG data were recorded while the children listened to chord sequences that ended either on a regular or an irregular chord function. These sequences had a duration of 4800 ms and consisted of five chords (see Fig. 1), four chords based on quarter notes (each 600 ms) and a final chord based on half notes (1200 ms), followed by a pause (1200 ms). All chords had the same attack and the same decay of loudness and were played with a piano sound (generated using Steinberg Cubase SX and The Grand; Steinberg Media Technologies, Hamburg, Germany). For each of the two subgroups of participants, a slightly different paradigm was employed. These paradigms differed with regard to the irregular final chord function of the chord sequences. In both subgroups, the first four chord functions were the same in all sequences: the first chord function was a tonic, the second a subdominant, the third a supertonic and the fourth a dominant. These chords were arranged according to the classical rules of harmony (Hindemith, 1940) and established a harmonic context toward the end of the sequence. The last chord was either a regular tonic (Fig. 1A), or a syntactically irregular chord (Fig. 1B and 1C).2
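The timing of a single sequence follows directly from the durations given above. As a minimal sketch (the constant and function names are illustrative, not taken from the study), the chord onsets and the total sequence length can be computed as follows:

```python
# Durations in milliseconds, as stated in the text.
QUARTER_CHORD_MS = 600   # chords 1-4
FINAL_CHORD_MS = 1200    # chord 5
PAUSE_MS = 1200          # silence after the final chord


def sequence_timing():
    """Return the onset time of each chord (ms) and the total sequence duration (ms)."""
    durations = [QUARTER_CHORD_MS] * 4 + [FINAL_CHORD_MS]
    onsets = []
    t = 0
    for d in durations:
        onsets.append(t)
        t += d
    return onsets, t + PAUSE_MS


onsets, total_ms = sequence_timing()
print(onsets)    # [0, 600, 1200, 1800, 2400]
print(total_ms)  # 4800
```

The final chord, to which the ERPs are time-locked, therefore starts 2400 ms after sequence onset.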

Fig. 1.

(A–C) Examples of the musical stimuli that were used in the experiment. (A) Chord sequence ending with a regular tonic. (B) Chord sequence ending with an irregular supertonic. (C) Chord sequence ending with an irregular Neapolitan sixth chord.

Thus, the regular sequences were so-called cadences, in which towards the end of the harmonic progression a dominant was followed by a tonic (Fig. 1A). A tonic perceived after a dominant creates a sense of repose or resolution.3 Any progression other than the dominant-tonic progression is less probable, and thus less regular, invoking a feeling of inconclusiveness. The irregular final chords differed between the two subgroups: in one subgroup, the irregular final chord function was a supertonic (Fig. 1B; previously used in, e.g., Jentschke and Koelsch, 2009, Koelsch et al., 2007). In the other subgroup, it was a so-called Neapolitan sixth chord (Fig. 1C; previously used in, e.g., Koelsch et al., 2003, Koelsch et al., 2000). The Neapolitan sixth chords represented stronger irregularities than the final supertonics: (a) the Neapolitan sixth chords contained two out-of-key notes (with regard to the harmonic context established by the first four chords), whereas the supertonics did not contain out-of-key notes; (b) the Neapolitan sixth chords introduced three new pitches whereas the supertonics introduced only one (notably, the regular final tonics introduced two new pitches); and (c) whereas final supertonics and final tonics were presented in root position (i.e., with the root tone of the chord in the bass voice), the Neapolitan sixth chords were not in root position. By using these two different chord functions as irregular final chords we aimed at comparing the deviance-related brain responses of 30-month-olds. If supertonics elicit an ERP effect, then this would indicate that the children were sensitive to music-syntactic irregularities (even if they do not violate key membership). If such a response is elicited only by the Neapolitan sixth chords, then this would indicate that the children were sensitive to irregular chords containing several irregularities (as described above), but not to the diatonic (in-key) music-syntactic irregularity used in our study.
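The out-of-key property in (a) can be checked with simple pitch-class arithmetic. The sketch below assumes the standard textbook spellings of the three final chords (tonic on scale degree 1, supertonic on degree 2, Neapolitan as a major triad on the lowered second degree) and ignores voicing and inversion, so it does not address properties (b) and (c). All names are illustrative.

```python
# Pitch classes as semitones above the tonic of a major key (0 = tonic).
MAJOR_SCALE = {0, 2, 4, 5, 7, 9, 11}

# Assumed textbook chord spellings; voicing and inversion are ignored.
CHORDS = {
    "tonic": {0, 4, 7},
    "supertonic": {2, 5, 9},
    "neapolitan_sixth": {1, 5, 8},  # major triad on the lowered second degree
}


def out_of_key(chord):
    """Return the sorted pitch classes of `chord` that lie outside the major scale."""
    return sorted(chord - MAJOR_SCALE)


counts = {name: len(out_of_key(pcs)) for name, pcs in CHORDS.items()}
print(counts)  # {'tonic': 0, 'supertonic': 0, 'neapolitan_sixth': 2}
```

In C major, for example, the two out-of-key pitch classes of the Neapolitan chord correspond to D flat and A flat.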

In each paradigm, the sequences were transposed to the 12 major keys, resulting in 24 different sequences. Across the experiment, each sequence type was presented eight times, resulting in 192 sequences for the entire experiment. The sequences were presented pseudo-randomly in direct succession with a probability of 0.5 for each sequence type (regular vs. irregular) and with each sequence being presented in a tonal key that was different from the key of the preceding sequence. While listening to the sequences (approx. 17 min) children sat on the lap of their parents (who were acoustically isolated by listening to a CD via headphones) and watched a silent movie.
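One possible way to build such a presentation order is sketched below. This is not the authors' procedure, which the text does not specify beyond the constraints; it is a simple greedy draw with restarts that satisfies the stated counts (12 keys × 2 sequence types × 8 repetitions = 192) and the rule that consecutive sequences differ in key. The function name, the seed, and the restart limit are assumptions.

```python
import random
from collections import Counter

KEYS = range(12)                    # the 12 major keys
TYPES = ("regular", "irregular")    # final chord: tonic vs. irregular chord
REPEATS = 8                         # presentations per (key, type) combination


def make_order(seed=0, max_attempts=1000):
    """Draw a random order in which no two consecutive sequences share a key."""
    rng = random.Random(seed)
    for _ in range(max_attempts):
        pool = [(k, t) for k in KEYS for t in TYPES] * REPEATS
        order = []
        while pool:
            prev_key = order[-1][0] if order else None
            candidates = [i for i, (k, _) in enumerate(pool) if k != prev_key]
            if not candidates:
                break  # dead end: only same-key items remain, so restart
            order.append(pool.pop(rng.choice(candidates)))
        if not pool:
            return order
    raise RuntimeError("no valid order found within max_attempts")


order = make_order()
print(len(order))                           # 192
print(Counter(t for _, t in order))         # 96 regular, 96 irregular
```

Because every (key, type) combination occurs equally often, the overall probability of each sequence type is 0.5, as stated above.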

2.3. EEG recording and processing

EEG data were recorded with Ag–AgCl electrodes from 21 scalp locations – F7, F3, FZ, F4, F8, FC3, FC4, T7, C3, CZ, C4, T8, CP5, CP6, P7, P3, PZ, P4, P8, O1, O2 according to the Extended International 10–20 System (American Electroencephalographic Society, 1994) – and 6 further locations on the head – outer canthi of both eyes (H+ and H−), supraorbital and infraorbital on the right eye (V+ and V−), and left (M1) and right mastoid (M2). Data were sampled at 250 Hz with a reference at the left mastoid and without online filtering using a PORTI-32/MREFA amplifier (TMS International B.V., Enschede, NL). Impedances of the scalp electrodes were kept below 5 kΩ, those of the other electrodes below 10 kΩ. Data were processed offline using EEGLAB (Delorme and Makeig, 2004): they were re-referenced to linked mastoids (mean of M1 and M2), filtered with a 0.25 Hz high-pass filter (finite impulse response (FIR), 1311 points; to remove drifts) and a 49–51 Hz band-stop filter (FIR, 437 points; to remove line noise). Then an independent component analysis (ICA) was conducted and artefact components (e.g., eye blinks, eye movements, or muscle artefacts) were removed. Thereafter, data were rejected with five rejection procedures provided by EEGLAB.4 Finally, non-rejected epochs (29–91 trials, M = 70) were averaged for a period of 200 ms before (baseline) to 1200 ms after stimulus onset (length of the final chord).5
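The re-referencing and epoching steps can be illustrated with a few lines of plain Python. This is a schematic sketch and not the EEGLAB pipeline used in the study: filtering, ICA, and trial rejection are omitted, the function names are hypothetical, and subtracting the mean of the baseline interval is an assumption about how the 200 ms baseline was applied.

```python
SFREQ_HZ = 250  # sampling rate stated in the text (one sample every 4 ms)


def ms_to_samples(ms):
    """Convert a duration in milliseconds to a number of samples."""
    return round(ms * SFREQ_HZ / 1000)


def rereference(channel, m1, m2):
    """Re-reference one channel to the mean of the two mastoid channels."""
    return [x - (a + b) / 2 for x, a, b in zip(channel, m1, m2)]


def epoch(signal, onset_idx, pre_ms=200, post_ms=1200):
    """Cut an epoch around a stimulus onset and subtract the baseline mean (assumed)."""
    pre, post = ms_to_samples(pre_ms), ms_to_samples(post_ms)
    segment = signal[onset_idx - pre:onset_idx + post]
    baseline = sum(segment[:pre]) / pre
    return [x - baseline for x in segment]


# Toy data: a constant signal yields an all-zero epoch after baseline correction.
toy_signal = [3.0] * 1000
toy_epoch = epoch(toy_signal, onset_idx=500)
print(len(toy_epoch))  # 350 samples: 50 baseline + 300 post-stimulus
```

With the left mastoid as the recording reference, the recorded M1 channel is zero by definition, so `rereference` effectively subtracts half of the M2 signal from each channel.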

2.4. Statistical evaluation

Variables used in the analyses did not deviate from a standard normal distribution (tested with Kolmogorov–Smirnov tests; 0.07 ≤ p ≤ 1.00; Md = 0.76). For the analysis of the ERPs, four regions of interest (ROIs) were computed: left-anterior (F3, F7, FC3), right-anterior (F4, F8, FC4), left-posterior (P3, T7, CP5), and right-posterior (P4, T8, CP6). Two time windows were chosen in accordance with our hypotheses and centred around the peak of the ERP components: (1) 240–320 ms (ERAN), and (2) 650–850 ms (N5) after stimulus onset. A third time window (120–200 ms), covering an early positivity suggested by visual inspection, was also analysed but did not reveal any significant effects. ERPs were evaluated statistically by mixed-model ANOVAs for repeated measures, with the within-subject factors regularity (regular vs. irregular chord function), anterior–posterior distribution, and hemisphere (left vs. right), and the between-subjects factor subgroup (supertonic vs. Neapolitan sixth chord as irregular chord). For the ERAN, where a main effect of regularity was observed, follow-up ANOVAs (with the same within-subject factors) were calculated for each subgroup.
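The dependent variable entering these ANOVAs is a mean amplitude per ROI and time window. A minimal sketch of that reduction is given below; it assumes epochs sampled at 250 Hz that start 200 ms before stimulus onset, treats each window as half-open, and uses hypothetical function names and toy data rather than the recorded ERPs.

```python
SFREQ_HZ = 250      # sampling rate stated in the text
BASELINE_MS = 200   # the epoch starts 200 ms before stimulus onset

ROIS = {
    "left_anterior": ("F3", "F7", "FC3"),
    "right_anterior": ("F4", "F8", "FC4"),
    "left_posterior": ("P3", "T7", "CP5"),
    "right_posterior": ("P4", "T8", "CP6"),
}
WINDOWS_MS = {"ERAN": (240, 320), "N5": (650, 850), "early_positivity": (120, 200)}


def window_to_slice(start_ms, end_ms):
    """Map a post-stimulus time window to sample indices within the epoch."""
    def to_idx(ms):
        return (ms + BASELINE_MS) * SFREQ_HZ // 1000
    return slice(to_idx(start_ms), to_idx(end_ms))


def roi_mean(erp, roi, window):
    """Average amplitude over all channels of an ROI and all samples of a window."""
    sl = window_to_slice(*WINDOWS_MS[window])
    values = [v for ch in ROIS[roi] for v in erp[ch][sl]]
    return sum(values) / len(values)


# Toy difference wave: -1 µV inside the ERAN window, 0 elsewhere.
toy = [0.0] * 350
toy[110:130] = [-1.0] * 20
erp = {ch: list(toy) for ch in ROIS["right_anterior"]}
eran_mean = roi_mean(erp, "right_anterior", "ERAN")
n5_mean = roi_mean(erp, "right_anterior", "N5")
print(eran_mean, n5_mean)  # -1.0 0.0
```

Applied to regular and irregular epochs separately, this yields one value per participant, ROI, and condition, which is the layout a repeated-measures ANOVA expects.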

3. Results

3.1. ERAN

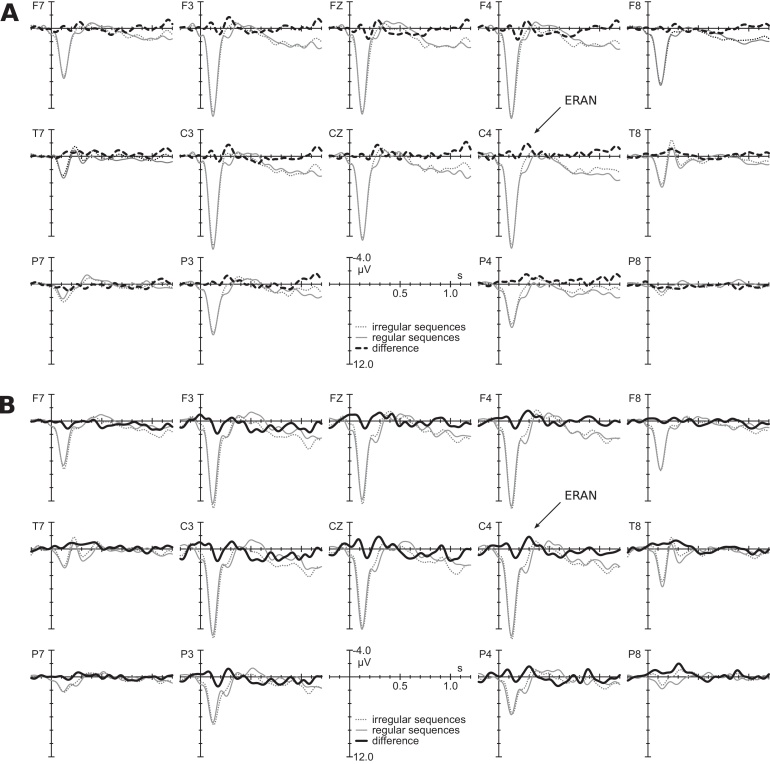

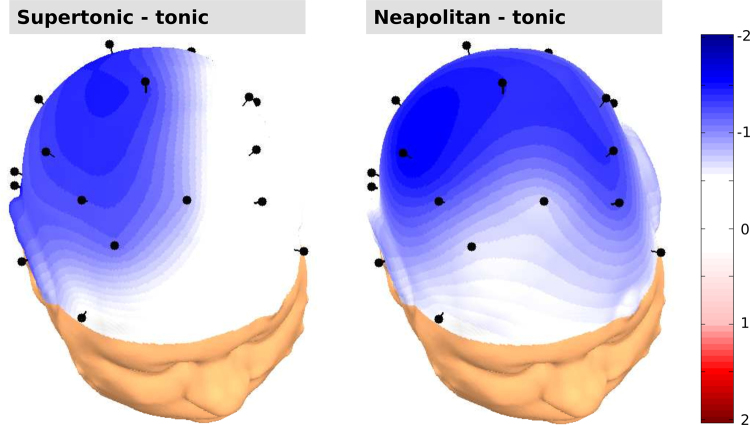

Fig. 2 presents the ERP responses to the regular and the irregular final chords of the sequences. These responses show that an ERAN, peaking around 300 ms, was elicited by both the music-syntactically irregular supertonics (Fig. 2A) and the irregular Neapolitan sixth chords (Fig. 2B). Unlike in older children and adults, where the ERAN is strongest at frontal leads (e.g., Jentschke and Koelsch, 2009, Jentschke et al., 2008, Koelsch et al., 2000, Koelsch and Jentschke, 2008), the ERAN was more broadly distributed over the scalp (see Fig. 3). The lateralisation of the ERAN differed between subgroups: in response to supertonics, it had a bilateral (slightly leftward) fronto-central distribution (see Table 1, Fig. 2, Fig. 3). The ERAN elicited by the Neapolitan sixth chords was most prominent at right-centro-temporal electrodes (see Table 1, Fig. 2, Fig. 3).

Fig. 2.

Grand-average ERP waveforms elicited by the final chords, (A) in the subgroup in which irregular chord functions were supertonics and (B) in the subgroup in which irregular chord functions were Neapolitan sixth chords. The solid grey line indicates potentials elicited by regular (tonic) chords, the dotted grey line responses to irregular chords (supertonic or Neapolitan sixth chord). The thick black lines represent the difference wave (regular subtracted from irregular chords) – dashed in the subgroup where supertonics served as irregular chords, solid in the subgroup where Neapolitan sixth chords served as irregular chords. The ERAN is indicated by an arrow.

Fig. 3.

Scalp topographies of the ERAN in the subgroup in which irregular chord functions were supertonics (left panel), and the subgroup in which irregular chord functions were Neapolitan sixth chords (right panel). The head plots represent spherical spline interpolations of the amplitude difference between irregular and regular chords (regular subtracted from irregular chords) in the ERAN time window (240–320 ms).

Table 1.

Overview of the amplitude differences between the regular and irregular final chords in the time windows 240–320 ms (ERAN), 650–850 ms (N5), and 120–200 ms (early positivity).

| | Anterior, left | Anterior, right | Posterior, left | Posterior, right |
|---|---|---|---|---|
| ERAN – supertonics (μV) | −1.48 | −1.00 | −0.63 | −0.35 |
| ERAN – Neapolitan sixth chords (μV) | −0.27 | −1.31 | −0.51 | −1.28 |
| Late negativity (N5) (μV) | 0.40 | 0.35 | 0.41 | 0.08 |
| Early positivity (μV) | 0.57 | 0.51 | 0.48 | −0.06 |

The difference in the responses to irregular vs. regular chords was reflected by a main effect of regularity (F(1,60) = 10.91, p = 0.002), and the different lateralisation of the ERAN in response to the two different irregular chord types by an interaction of regularity × hemisphere × subgroup (F(1,60) = 5.16, p = 0.027).6 No other significant effects involving regularity or subgroup were observed. Separate ANOVAs for each subgroup confirmed a significant main effect of regularity in both subgroups (with supertonics as irregular chords: F(1,32) = 5.21, p = 0.029; with the Neapolitan sixth chords: F(1,28) = 6.01, p = 0.021), indicating that an ERAN was elicited in response to the irregular chords in both subgroups. In each of these two ANOVAs the main effect of regularity was the only significant result.
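The direction of the regularity × hemisphere × subgroup interaction can be read off Table 1 by averaging the ERAN difference amplitudes within each hemisphere. The sketch below is purely descriptive arithmetic on the published ROI means; it is not a re-analysis and does not reproduce the ANOVA. The dictionary and function names are illustrative.

```python
# ERAN difference amplitudes (irregular minus regular, in µV) from Table 1.
TABLE_1_ERAN = {
    "supertonic": {
        "anterior_left": -1.48, "anterior_right": -1.00,
        "posterior_left": -0.63, "posterior_right": -0.35,
    },
    "neapolitan": {
        "anterior_left": -0.27, "anterior_right": -1.31,
        "posterior_left": -0.51, "posterior_right": -1.28,
    },
}


def hemisphere_means(rois):
    """Average the anterior and posterior ROI values within each hemisphere."""
    left = (rois["anterior_left"] + rois["posterior_left"]) / 2
    right = (rois["anterior_right"] + rois["posterior_right"]) / 2
    return left, right


hemi = {name: hemisphere_means(rois) for name, rois in TABLE_1_ERAN.items()}
for name, (left, right) in hemi.items():
    print(name, round(left, 3), round(right, 3))
```

For the supertonics the left-hemisphere mean is the more negative one, whereas for the Neapolitan sixth chords the right-hemisphere mean is, which matches the opposite lateralisation described above.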

As mentioned in the Introduction, the ERAN is usually followed by a later negativity (N5) in older children and in adults. No such effect was observed in the 30-month-olds. In the N5 time window, ERPs elicited by irregular chords were slightly more positive than those elicited by regular chords (see Table 1), but this effect was not statistically significant (regularity, p = 0.244).

Waveforms also differed slightly between 120 and 200 ms after stimulus onset (with positive polarity, strongest at the left-anterior ROI; see Table 1). However, this effect was not statistically significant (regularity, p = 0.068).

4. Discussion

The present data show that an ERAN elicited by music-syntactically irregular harmonies can be observed in children as young as 30 months of age. The ERAN observed in the present study resembles the neurophysiological response to irregular chord progressions in older children and adults. Thus, our results provide the first empirical evidence demonstrating knowledge of music-syntactic regularities in children at this age. Importantly, an ERAN response was observed in response to the final supertonics, i.e., chords that were music-syntactically irregular but belonged to the same key as the regular chords. Using the same sequences as in the present study, we have demonstrated previously (particularly Fig. 3 in Koelsch et al., 2007) that the final supertonics are acoustically even more congruent with the preceding harmonic context than regular tonics. This rules out the possibility that the ERAN observed in the present study was simply due to acoustical irregularity, and indicates that the 30-month-old children of our study were sensitive to purely music-syntactic irregularity (without confounding acoustical deviance). Given the significant difference in lateralisation in response to the Neapolitan sixth chords compared to the supertonics, the present results also indicate that there were additional contributions of (one or more of) the properties that differed between the two irregular chord types. These properties included (a) two out-of-key notes in the Neapolitan sixth chords but none in the supertonics (i.e., Neapolitans were out-of-key chords, while supertonics were in-key chords), (b) three new pitches in the Neapolitan sixth chords but only one in the supertonics (i.e., Neapolitans were acoustically less similar to the preceding harmonic context than supertonics), and (c) the chord inversion (i.e., Neapolitan sixth chords were not presented in root position, unlike the supertonics and the tonics).

Notably, the current data demonstrate that neurophysiological indicators of music-syntactic processing can be observed much earlier in development than previously assumed (see Section 1 for an overview). A number of factors might account for the divergent findings when comparing those previous studies with the present study. Firstly, there are differences in methodology. Most previous studies required an overt response. Either participants had to judge how appropriately a probe tone continued a sequence of tones (Corrigall and Trainor, 2010, Krumhansl and Keil, 1982) or chords (Corrigall and Trainor, 2010), or they had to detect a change in a standard sequence using a go/no-go conditioned head-turn (Trainor and Trehub, 1992) or hand-sign procedure (Trainor and Trehub, 1994). Methods that do not require such an overt response (e.g., ERPs) are presumably capable of detecting finer nuances of children's implicit knowledge regarding harmonic regularities. This assumption is substantiated by evidence from a recent study by Corrigall and Trainor (2014), where children showed different ERP responses to regular and irregular chords, but could not differentiate them in a behavioural experiment.

A second factor might be differences in the stimulus material. Most previous studies have explored the development of knowledge of harmony using sequences of tones (e.g., Krumhansl and Keil, 1982, Trainor and Trehub, 1992, Trainor and Trehub, 1994). More recent behavioural studies and all neurophysiological studies used either chord sequences (Jentschke et al., 2008, Koelsch et al., 2003) or both chord and tone sequences (Corrigall and Trainor, 2010, Corrigall and Trainor, 2014). These latter studies revealed results similar to those of the present study (see Section 1). Although chords appear to be more complex stimuli, implicit knowledge about rules guiding their progression within chord sequences seems to be present earlier in development than proposed by the former three studies (Krumhansl and Keil, 1982, Trainor and Trehub, 1992, Trainor and Trehub, 1994). This is reflected by the fact that music-syntactic knowledge was observed in 4-year-olds in the study by Corrigall and Trainor (2010), but only in older children when studies used tone sequences (Krumhansl and Keil, 1982, Trainor and Trehub, 1992, Trainor and Trehub, 1994). Perhaps a stronger harmonic expectancy is established by a chord such as the dominant (three pitch classes) or dominant seventh chord (four pitch classes) than by a single tone. Accordingly, in an ERP study exploring processing of music-syntactic irregularities in melodies compared to chord sequences, a larger ERAN amplitude was elicited by irregular chords than by irregular single tones (Koelsch and Jentschke, 2010).
Differences in the stimulus material most likely also account for the unexpected result of the study by Corrigall and Trainor (2014), where an ERP response with positive polarity was observed, whereas in most other studies an early negativity (ERAN) was elicited in response to music-syntactic violations: music-syntactic irregularities in the study by Corrigall and Trainor (2014) might have been more difficult to detect than those of other studies (e.g., due to tonic implication, or mixolydian key of some stimuli).

The ERAN in 30-month-old children differs from those in older children and adults in latency and scalp distribution: as in other studies exploring music-syntactic processing in children (Jentschke et al., 2008, Koelsch et al., 2003), the latency of the ERAN was longer than in adults (around 300 ms in 30-month- and 5-year-olds, but around 200 ms in adults). This difference is in agreement with the general observation that ERP components in very young children have smaller amplitudes and longer latencies than in adults, e.g., Thierry (2005). In addition, the ERAN was distributed more broadly in our participants compared to adults and older children. It appears that the ERAN becomes increasingly more prominent at frontal electrodes until the age of five years (cf. Jentschke et al., 2008, Koelsch et al., 2003). Possible reasons for this difference are manifold, including a thinner skull, incompletely closed fontanels (Flemming et al., 2005, Trainor, 2012), and cortical thickening with maturation (with the inferior frontal gyrus, a core neural substrate of syntax processing in music and language, being one of the brain regions with the most significant change; Sowell et al., 2004).

A music-syntactic violation usually elicits an ERAN-N5 brain response in older children and adults, while no N5 brain response was observed in 30-month-old children. The N5 is taken to reflect processes of harmonic integration of the actual chord into a mental representation of the harmonic context (built up by the preceding chords; cf. Koelsch et al., 2000) and has been hypothesised to be an indicator of the processing of intra-musical meaning (cf. Koelsch, 2005, Steinbeis and Koelsch, 2008), e.g., meaning resulting from harmonic tension-resolution patterns (for a more thorough discussion of different kinds of musical semantics, see Koelsch, 2012). The present data suggest that 30-month-old children had acquired sufficient knowledge about music-syntactic regularities to detect their violation (as reflected by the ERAN), but had not yet acquired enough knowledge concerning the significance of these violations and how to incorporate such unexpected events into a given context: the irregular final chords raise the expectation of a continuation, which requires updating the harmonic context. Although ERAN and N5 appear as a pattern of successive brain responses, they were differentially modulated by linguistic material containing either a semantic or a syntactic violation: Steinbeis and Koelsch (2008) showed that the ERAN was reduced when presented concurrently with a linguistic syntax violation but not with a semantic incongruency; the reverse pattern was observed for the N5. This indicates that, whereas the N5 might build upon the ERAN, these responses are distinct in terms of their function and their neural generators.

Interestingly, the time course of acquisition of syntactic regularities is similar between music and language. In the language domain, a violation of syntactic phrase structure rules elicited an early left anterior negativity (ELAN) in children of the same age (namely as young as 24–30 months old), indicating a sensitivity to linguistic–syntactic regularities (Oberecker et al., 2005, Oberecker and Friederici, 2006).7 The ELAN is similar to the ERAN in latency and in the frontal scalp distribution (although it is usually more left lateralised than the ERAN, cf., e.g., Hahne and Friederici, 1999, Herrmann et al., 2011). A growing body of research indicates a considerable amount of overlap between these two cognitive domains. This includes evidence that shared neural resources underlie syntactic processing in both music and language (e.g., Koelsch et al., 2002a; for reviews, see Besson and Schön, 2001, Koelsch, 2012, Patel, 2008), theoretical accounts proposing similar mechanisms in acquisition (e.g., McMullen and Saffran, 2004, Ettlinger et al., 2011), and studies demonstrating that musical training can facilitate the learning of both linguistic and musical structures (e.g., Jentschke and Koelsch, 2009, Schön et al., 2010).

Music-syntactic processing of local dependencies is based upon (usually implicit) knowledge about regularities that are stored in a long-term memory format (for a review, see Koelsch, 2009). Therefore, the acquisition of such regularities must have happened before the age at which the ERAN can be observed. Koelsch and Jentschke (2008) reported that the ERAN amplitude significantly declined when chord sequences similar to those in the present experiment were presented to participants for about two hours. This demonstrates that the cognitive representations of musical regularities are influenced by the repeated presentation of unexpected, irregular harmonies, which is consistent with the assumption that music-structural regularities are acquired by implicit learning mechanisms (such as statistical learning) without intentional attempts to acquire information (cf., e.g., Perruchet, 2008).

5. Conclusion

The present data provide evidence that first neurophysiological indicators of syntactic processing of harmonies can be observed in 30-month-old children. This was revealed by a brain-electric response (the ERAN) that was elicited by in-key chords (as well as by out-of-key chords) which did not follow the regularities of chord progressions in major-minor tonal music. Unlike older children and adults, who usually show an ERAN-N5 pattern in response to a violation of musical syntax, the 30-month-olds showed no N5, and their ERAN had a relatively small amplitude and a longer latency. This demonstrates that neural mechanisms underlying the (automatic) detection of music-syntactic violations are already established, while processes of harmonic integration are still developing.

Conflict of interest

None declared.

Acknowledgements

We thank our participants, their parents, Christina Rügen and Jördis Haselow (who helped to acquire the data), Volker Hesse (who took care of the somatic and neurological screening of the children), as well as Daniela Ordonez Acuna, Laura Hahn, and John de Priest (for valuable comments on the manuscript). This work was supported by the Max Planck Society and carried out in the Junior Research Group “Neurocognition of Music” at the Max Planck Institute for Human Cognitive and Brain Sciences, as well as by the German Research Foundation (DFG) with grants awarded to A.F. (FR-519/18-1), and to S.K. (KO 2266/2-1/2).

Footnotes

Children were participants of the German Language Development study (supported by the German Research Foundation, DFG Grant FR-519/18-1, awarded to A.F.). They underwent regular somatic and neurological screening during participation. Data of 34 further children were acquired, but had to be excluded because they did not finish the experiment (N = 12), or the EEG measurement could not be evaluated (due to artifacts, i.e., drifts, chewing, or excessive movement; N = 22).

Examples of the chord sequences can be found in the supplementary material and at http://www.stefan-koelsch.de.

The majority of musical pieces from the baroque, classical, and romantic periods end with a dominant-tonic progression, and the transition probability for the dominant-tonic progression was the highest among all transition probabilities in a corpus of Bach chorales (cf. Table 2 in Rohrmeier and Cross, 2008).

The following parameters were used: (1) for threshold (if amplitudes exceeded ±120 μV), (2) for linear trends (if linear trends exceeded 160 μV in a 400 ms gliding time window), (3) for improbable data (if the trial was lying outside a ±6 SD range (for a single channel) or ±3 SD range (for all channels) of the mean probability distribution), (4) for abnormally distributed data (if the data were lying outside a ±6 SD range (for a single channel) or a ±3 SD range (for all channels) of the mean distribution of kurtosis values), and (5) for improbable spectra (spectra should not deviate from the baseline spectrum by ±30 dB in the 0–2 Hz frequency window (to reject eye movements) and +15/−30 dB in the 8–12 Hz frequency window (to reject alpha activity)).
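As an illustration only, the first two criteria can be sketched in a few lines of Python. This is not the toolbox routine used for the analysis: the function names, the single-channel input, the 250 Hz sampling rate in the usage note, and the reading of "linear trend" as the fitted amplitude change across each 400 ms window are all assumptions made for this sketch. The statistical criteria (3) to (5) are omitted.

```python
from statistics import mean

AMPLITUDE_LIMIT_UV = 120.0  # criterion (1): amplitudes beyond +/-120 µV
TREND_LIMIT_UV = 160.0      # criterion (2): linear trend beyond 160 µV ...
TREND_WINDOW_MS = 400.0     # ... within a 400 ms gliding window


def exceeds_threshold(epoch_uv, limit_uv=AMPLITUDE_LIMIT_UV):
    """True if any sample of the epoch lies outside +/-limit_uv."""
    return any(abs(v) > limit_uv for v in epoch_uv)


def fitted_change(window_uv):
    """Amplitude change (µV) of a least-squares line across the window."""
    n = len(window_uv)
    x_mean = (n - 1) / 2
    y_mean = mean(window_uv)
    sxy = sum((i - x_mean) * (v - y_mean) for i, v in enumerate(window_uv))
    sxx = sum((i - x_mean) ** 2 for i in range(n))
    return sxy / sxx * (n - 1)  # slope per sample times window span


def exceeds_trend(epoch_uv, srate_hz,
                  window_ms=TREND_WINDOW_MS, limit_uv=TREND_LIMIT_UV):
    """True if any gliding window shows a fitted change beyond limit_uv."""
    width = int(round(window_ms * srate_hz / 1000.0))
    if width < 2 or width > len(epoch_uv):
        return False  # assumption: epochs shorter than the window are not tested
    last_start = len(epoch_uv) - width
    return any(abs(fitted_change(epoch_uv[s:s + width])) > limit_uv
               for s in range(last_start + 1))


def reject_epoch(epoch_uv, srate_hz):
    """Combine criteria (1) and (2) for one channel of one epoch."""
    return exceeds_threshold(epoch_uv) or exceeds_trend(epoch_uv, srate_hz)
```

With a hypothetical sampling rate of 250 Hz, `reject_epoch(samples, 250)` would flag an epoch that contains a 150 μV spike or a drift of 200 μV over 400 ms, and would keep a flat epoch or one with a 100 μV drift.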

Epoch length and baseline values were chosen in accordance with earlier experiments using the same paradigm (e.g., Jentschke and Koelsch, 2009, Jentschke et al., 2008, Koelsch et al., 2000, Koelsch et al., 2003).

Motivated by the higher proportion of boys in both groups, we also calculated an ANOVA controlling for gender, which revealed similar results, namely a main effect of regularity (F(1,58) = 8.77, p = 0.004), an interaction of regularity × hemisphere × subgroup (F(1,58) = 4.64, p = 0.035), but also an interaction of regularity × anterior–posterior distribution × gender (F(1,58) = 7.93, p = 0.007) due to a more anterior distribution in boys.

In these experiments, usually (a) sentences without a prepositional phrase (syntactically correct) are compared with (b) sentences with an incomplete prepositional phrase (syntactically incorrect) and (c) sentences with a complete prepositional phrase (filler, to prevent participants from anticipating the violation when encountering the preposition). Examples of such sentences are: (a) Der Löwe brüllt. (The lion roars/is roaring. – syntactically correct); (b) Der Löwe im brüllt. (The lion in the roars/is roaring. – syntactically incorrect); (c) Der Löwe im Zoo brüllt. (The lion in the zoo roars/is roaring. – filler).

Supplementary data associated with this article can be found, in the online version, at http://dx.doi.org/10.1016/j.dcn.2014.04.005.

Contributor Information

Sebastian Jentschke, Email: Sebastian.Jentschke@fu-berlin.de, Sebastian.Jentschke@gmail.com.

Angela D. Friederici, Email: angelafr@cbs.mpg.de.

Stefan Koelsch, Email: koelsch@cbs.mpg.de.

References

- American Electroencephalographic Society Guideline thirteen: guidelines for standard electrode position nomenclature. J. Clin. Neurophysiol. 1994;11:111–113.

- Besson M., Schön D. Comparison between language and music. Ann. N. Y. Acad. Sci. 2001;930:232–258. doi: 10.1111/j.1749-6632.2001.tb05736.x.

- Chiandetti C., Vallortigara G. Chicks like consonant music. Psychol. Sci. 2011;22:1270–1273. doi: 10.1177/0956797611418244.

- Corrigall K.A., Trainor L.J. Musical enculturation in preschool children: acquisition of key and harmonic knowledge. Music Percept. 2010;28:195–200.

- Corrigall K.A., Trainor L.J. Enculturation to musical pitch structure in young children: evidence from behavioral and electrophysiological methods. Dev. Sci. 2014;17:142–158. doi: 10.1111/desc.12100.

- Delorme A., Makeig S. EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods. 2004;134:9–21. doi: 10.1016/j.jneumeth.2003.10.009.

- Ettlinger M., Margulis E.H., Wong P.C.M. Implicit memory in music and language. Front. Psychol. 2011;2:211. doi: 10.3389/fpsyg.2011.00211.

- Fishman Y.I., Volkov I.O., Noh M.D., Garell P.C., Bakken H., Arezzo J.C., Howard M.A., Steinschneider M. Consonance and dissonance of musical chords: neural correlates in auditory cortex of monkeys and humans. J. Neurophysiol. 2001;86:2761–2788. doi: 10.1152/jn.2001.86.6.2761.

- Fitch W.T. The biology and evolution of music: a comparative perspective. Cognition. 2006;100:173–215. doi: 10.1016/j.cognition.2005.11.009.

- Flemming L., Wang Y., Caprihan A., Eiselt M., Haueisen J., Okada Y. Evaluation of the distortion of EEG signals caused by a hole in the skull mimicking the fontanel in the skull of human neonates. Clin. Neurophysiol. 2005;116:1141–1152. doi: 10.1016/j.clinph.2005.01.007.

- Fritz T.H., Jentschke S., Gosselin N., Sammler D., Peretz I., Turner R., Friederici A.D., Koelsch S. Universal recognition of three basic emotions in music. Curr. Biol. 2009;19:573–576. doi: 10.1016/j.cub.2009.02.058.

- Gerry D., Unrau A., Trainor L.J. Active music classes in infancy enhance musical, communicative and social development. Dev. Sci. 2012;15:398–407. doi: 10.1111/j.1467-7687.2012.01142.x.

- Hahne A., Friederici A.D. Electrophysiological evidence for two steps in syntactic analysis: early automatic and late controlled processes. J. Cogn. Neurosci. 1999;11:194–205. doi: 10.1162/089892999563328.

- Hannon E.E., Trainor L.J. Music acquisition: effects of enculturation and formal training on development. Trends Cogn. Sci. 2007;11:466–472. doi: 10.1016/j.tics.2007.08.008.

- Herrmann B., Maess B., Friederici A.D. Violation of syntax and prosody – disentangling their contributions to the early left anterior negativity (ELAN). Neurosci. Lett. 2011;490:116–120. doi: 10.1016/j.neulet.2010.12.039.

- Hindemith P. 1940. Unterweisung im Tonsatz. Theoret. Mainz: Schott, vol. 1.

- Huron D. Is music an evolutionary adaptation? Ann. N. Y. Acad. Sci. 2001;930:43–61. doi: 10.1111/j.1749-6632.2001.tb05724.x.

- Jentschke S., Koelsch S. Musical training modulates the development of syntax processing in children. Neuroimage. 2009;47:735–744. doi: 10.1016/j.neuroimage.2009.04.090.

- Jentschke S., Koelsch S., Sallat S., Friederici A.D. Children with specific language impairment also show impairment of music-syntactic processing. J. Cogn. Neurosci. 2008;20:1940–1951. doi: 10.1162/jocn.2008.20135.

- Kim S.-G., Kim J.S., Chung C.K. The effect of conditional probability of chord progression on brain response: an MEG study. PLoS ONE. 2011;6:e17337. doi: 10.1371/journal.pone.0017337.

- Koelsch S. Neural substrates of processing syntax and semantics in music. Curr. Opin. Neurobiol. 2005;15:207–212. doi: 10.1016/j.conb.2005.03.005.

- Koelsch S. Music-syntactic processing and auditory memory: similarities and differences between ERAN and MMN. Psychophysiology. 2009;46:179–190. doi: 10.1111/j.1469-8986.2008.00752.x.

- Koelsch S. Wiley-Blackwell; Oxford, UK: 2012. Brain and Music.

- Koelsch S., Grossmann T., Gunter T.C., Hahne A., Schröger E., Friederici A.D. Children processing music: electric brain responses reveal musical competence and gender differences. J. Cogn. Neurosci. 2003;15:683–693. doi: 10.1162/089892903322307401.

- Koelsch S., Gunter T.C., von Cramon D.Y., Zysset S., Lohmann G., Friederici A.D. Bach speaks: a cortical “language-network” serves the processing of music. Neuroimage. 2002;17:956–966.

- Koelsch S., Gunter T.C., Friederici A.D., Schröger E. Brain indices of music processing: “nonmusicians” are musical. J. Cogn. Neurosci. 2000;12:520–541. doi: 10.1162/089892900562183.

- Koelsch S., Jentschke S. Short-term effects of processing musical syntax: an ERP study. Brain Res. 2008;1212:55–62. doi: 10.1016/j.brainres.2007.10.078.

- Koelsch S., Jentschke S. Differences in electric brain responses to melodies and chords. J. Cogn. Neurosci. 2010;22:2251–2262. doi: 10.1162/jocn.2009.21338.

- Koelsch S., Jentschke S., Sammler D., Mietchen D. Untangling syntactic and sensory processing: an ERP study of music perception. Psychophysiology. 2007;44:476–490. doi: 10.1111/j.1469-8986.2007.00517.x.

- Koelsch S., Schröger E., Gunter T.C. Music matters: preattentive musicality of the human brain. Psychophysiology. 2002;39:38–48. doi: 10.1017/S0048577202000185.

- Krumhansl C.L., Keil F.C. Acquisition of the hierarchy of tonal functions in music. Memory Cogn. 1982;10:243–251. doi: 10.3758/bf03197636.

- Leino S., Brattico E., Tervaniemi M., Vuust P. Representation of harmony rules in the human brain: further evidence from event-related potentials. Brain Res. 2007;1142:169–177. doi: 10.1016/j.brainres.2007.01.049.

- Lerdahl F., Jackendoff R. MIT Press; Cambridge, MA: 1996. A Generative Theory of Tonal Music.

- Loui P., Grent-’t Jong T., Torpey D., Woldorff M.G. Effects of attention on the neural processing of harmonic syntax in Western music. Brain Res. Cogn. Brain Res. 2005;25:678–687. doi: 10.1016/j.cogbrainres.2005.08.019.

- Maidhof C., Koelsch S. Effects of selective attention on syntax processing in music and language. J. Cogn. Neurosci. 2010;23:2232–2247. doi: 10.1162/jocn.2010.21542.

- Masataka N. Preference for infant-directed singing in 2-day-old hearing infants of deaf parents. Dev. Psychol. 1999;35:1001–1005. doi: 10.1037//0012-1649.35.4.1001.

- McMullen E., Saffran J.R. Music and language: a developmental comparison. Music Percept. Interdiscip. J. 2004;21:289–311.

- McMullen Jonaitis E., Saffran J.R. Learning harmony: the role of serial statistics. Cogn. Sci. 2009;33:951–968. doi: 10.1111/j.1551-6709.2009.01036.x.

- Miranda R.A., Ullman M.T. Double dissociation between rules and memory in music: an event-related potential study. Neuroimage. 2007;38:331–345. doi: 10.1016/j.neuroimage.2007.07.034.

- Nakata T., Trehub S.E. Infants’ responsiveness to maternal speech and singing. Infant Behav. Dev. 2004;27:455–464.

- Oberecker R., Friederici A.D. Syntactic event-related potential components in 24-month-olds’ sentence comprehension. Neuroreport. 2006;17:1017–1021. doi: 10.1097/01.wnr.0000223397.12694.9a.

- Oberecker R., Friedrich M., Friederici A.D. Neural correlates of syntactic processing in two-year-olds. J. Cogn. Neurosci. 2005;17:1667–1678. doi: 10.1162/089892905774597236.

- Patel A.D. Oxford University Press; New York, NY: 2008. Music, Language, and the Brain.

- Perruchet P. Implicit learning. In: Byrne J.H., editor. Learning and Memory: A Comprehensive Reference, Cognitive Psychology of Memory, vol. 2. 1st ed. Elsevier Science Publishers B.V.; Amsterdam, NL: 2008. pp. 597–621. (Chapter 2.23.1)

- Rohrmeier M.A. Towards a generative syntax of tonal harmony. J. Math. Music. 2011;5:35–53.

- Rohrmeier M.A., Cross I. Statistical properties of tonal harmony in Bach's chorales. In: Adachi M., Nakajima Y., Hiraga Y., editors. Proceedings of the 10th International Conference on Music Perception and Cognition. Hokkaido University; Sapporo, Japan: 2008. pp. 619–627.

- Rohrmeier M.A., Rebuschat P. Implicit learning and acquisition of music. Top. Cogn. Sci. 2012;4:525–553. doi: 10.1111/j.1756-8765.2012.01223.x.

- Saffran J.R., Johnson E.K., Aslin R.N., Newport E.L. Statistical learning of tone sequences by human infants and adults. Cognition. 1999;70:27–52. doi: 10.1016/s0010-0277(98)00075-4.

- Schön D., Gordon R.L., Campagne A., Magne C.L., Astésano C., Anton J.-L., Besson M. Similar cerebral networks in language, music and song perception. Neuroimage. 2010;51:450–461. doi: 10.1016/j.neuroimage.2010.02.023.

- Shenfield T., Trehub S.E., Nakata T. Maternal singing modulates infant arousal. Psychol. Music. 2003;31:365–375.

- Sowell E.R., Thompson P.M., Leonard C.M., Welcome S.E., Kan E., Toga A.W. Longitudinal mapping of cortical thickness and brain growth in normal children. J. Neurosci. 2004;24:8223–8231. doi: 10.1523/JNEUROSCI.1798-04.2004.

- Stalinski S.M., Schellenberg E.G. Music cognition: a developmental perspective. Top. Cogn. Sci. 2012;4:485–497. doi: 10.1111/j.1756-8765.2012.01217.x.

- Steinbeis N., Koelsch S. Shared neural resources between music and language indicate semantic processing of musical tension-resolution patterns. Cereb Cortex (New York, NY: 1991) 2008;18:1169–1178. doi: 10.1093/cercor/bhm149.

- Thierry G. The use of event-related potentials in the study of early cognitive development. Infant Child Dev. 2005;14:85–94.

- Trainor L.J. Musical experience, plasticity, and maturation: issues in measuring developmental change using EEG and MEG. Ann. N. Y. Acad. Sci. 2012;1252:25–36. doi: 10.1111/j.1749-6632.2012.06444.x.

- Trainor L.J., Corrigall K.A. Music acquisition and effects of musical experience. In: Riess Jones M., Fay R.R., Popper A.N., editors. Music Perception, Springer Handbook of Auditory Research, vol. 36. Springer; New York, NY: 2010. pp. 89–127. (Chapter 4)

- Trainor L.J., Trehub S.E. A comparison of infants’ and adults’ sensitivity to western musical structure. J. Exp. Psychol. Hum. Percept. Perform. 1992;18:394–402. doi: 10.1037//0096-1523.18.2.394.

- Trainor L.J., Trehub S.E. Key membership and implied harmony in Western tonal music: developmental perspectives. Percept. Psychophys. 1994;56:125–132. doi: 10.3758/bf03213891.

- Trainor L.J., Tsang C.D., Cheung V.H.W. Preference for sensory consonance in 2- and 4-month-old infants. Music Percept. Interdiscip. J. 2002;20:187–194.

- Trehub S.E. The developmental origins of musicality. Nat. Neurosci. 2003;6:669–673. doi: 10.1038/nn1084.

- Trehub S.E., Nakata T. Emotion and music in infancy. Musicae Sci. 2001;6:37–61.
