Key Points

Question

How accurately does smartphone video–based diagnosis by epileptologists and residents predict final video electroencephalogram diagnosis of paroxysmal neurological events?

Findings

This diagnostic study conducted at 8 tertiary care epilepsy centers found that video reviewed by experts predicted a final diagnosis with an accuracy of 89% for epileptic seizures and 86% for psychogenic nonepileptic attacks. The findings also confirmed the ability to perform a secure exchange of smartphone videos among multiple institutions.

Meaning

This study provides class II evidence demonstrating the high accuracy of smartphone videography and validates its value as an adjunct to routine history and physical examination.

Abstract

Importance

Misdiagnosis of epilepsy is common. Video electroencephalogram provides a definitive diagnosis but is impractical for many patients referred for evaluation of epilepsy.

Objective

To evaluate the accuracy of outpatient smartphone videos in epilepsy.

Design, Setting, and Participants

This prospective, masked, diagnostic accuracy study (the OSmartViE study) took place between August 31, 2015, and August 31, 2018, at 8 academic epilepsy centers in the United States and included a convenience sample of 44 nonconsecutive outpatients who volunteered a smartphone video during evaluation and subsequently underwent video electroencephalogram monitoring. Three epileptologists uploaded videos for physicians from the 8 epilepsy centers to review.

Main Outcomes and Measures

Measures of performance (accuracy, sensitivity, specificity, positive predictive value, and negative predictive value) for smartphone video–based diagnosis by experts and trainees (the index test) were compared with those for history and physical examination and video electroencephalogram monitoring (the reference standard).

Results

Forty-four eligible epilepsy clinic outpatients (31 women [70.5%]; mean [range] age, 45.1 [20-82] years) submitted smartphone videos (530 total physician reviews). Final video electroencephalogram diagnoses included 11 epileptic seizures, 30 psychogenic nonepileptic attacks, and 3 physiologic nonepileptic events. Expert interpretation of a smartphone video was accurate in predicting a video electroencephalogram monitoring diagnosis of epileptic seizures 89.1% (95% CI, 84.2%-92.9%) of the time, with a specificity of 93.3% (95% CI, 88.3%-96.6%). Resident responses were less accurate for all metrics involving epileptic seizures and psychogenic nonepileptic attacks, despite greater confidence. Motor signs during events increased accuracy. One-fourth of the smartphone videos were correctly diagnosed by 100% of the reviewing physicians, composed solely of psychogenic attacks. When histories and physical examination results were combined with smartphone videos, correct diagnoses rose from 78.6% to 95.2%. The odds of receiving a correct diagnosis were 5.45 times greater using smartphone video alongside patient history and physical examination results than with history and physical examination alone (95% CI, 1.01-54.3; P = .02).

Conclusions and Relevance

Outpatient smartphone video review by experts has predictive and additive value for diagnosing epileptic seizures. Smartphone videos may reliably aid psychogenic nonepileptic attacks diagnosis for some people.

This study assesses the value of smartphone videos for diagnosis of epileptic seizures in adult outpatients with epilepsy.

Introduction

Epilepsy has a substantial global burden of disease.1 Diagnosis is made clinically based on a historical recount of witnessed events and a laboratory assessment. Differential diagnosis for seizures is broad. Even seasoned clinicians can be misled when individuals lack medical knowledge or pertinent terminology to accurately represent witnessed seizure behavior.2 Video electroencephalogram (EEG) monitoring (VEM) is recommended when there is diagnostic uncertainty in classifying seizure type or epilepsy syndrome.3 Video EEG monitoring provides objective evidence for definitive diagnosis4; however, 20% to 30% of patients undergoing inpatient VEM have nonepileptic conditions.5,6,7,8 Additionally, VEM may not be practical for some patients because of relative infrequency of events or occurrence only during certain activities or in unique settings.3,4 Geographic limitations, transportation constraints, and insurance coverage may also restrict access.2,4 Therefore, better methods to achieve accurate diagnoses are essential.

Applications and online communities have recently explored smartphones as potential health care tools to manage chronic medical conditions.9,10 While VEM is the criterion standard for seizure diagnosis, web-based smartphone use has become a popular means to augment clinical practice involving seizure reporting by people with epilepsy.10 Seizure Tracker (Seizure Tracker LLC), My Seizure Diary (Epilepsy Foundation), and EpiDiary (Irody Inc) are examples of available smartphone applications that allow self-collection of information, in turn aiding physicians in clinical decision-making and optimal management.11,12 We hypothesize that outpatient smartphone videos have good predictive value in adults referred for evaluation of epilepsy. We aimed to assess the accuracy of a smartphone video to predict a final VEM diagnosis of epilepsy.

Methods

Study Overview

A prospective, multicenter, masked clinical trial at 8 academic epilepsy centers (all certified as level IV by the National Association of Epilepsy Centers) evaluated adult outpatient smartphone videos between August 31, 2015, and August 31, 2018. Centers were selected based on diverse geography and excellence in epilepsy specialization. Study participants were outpatients referred for elective evaluation of events that may or may not have been epileptic seizures. Diagnostic VEM was recommended based on clinical necessity at the treating institution and was repeated after prior monitoring only when further delineation was needed. A final diagnosis was rendered after VEM by an expert who was board certified in epilepsy and clinical neurophysiology. A convenience sample included self-selected patients who volunteered a smartphone video following initial consultation and prior to VEM. Three surveys were completed at different times: following a standard history and physical examination (HP) (treating physician), following smartphone video review (all physicians), and on reaching a final diagnosis after VEM (treating physician). History and physical examination results and VEM were used as a reference standard to assess the index test (smartphone video–based diagnosis). Reviewing physicians independently interpreted web-based videos and completed a diagnostic survey (eAppendix in the Supplement). A separate survey recording the final diagnosis, duration of VEM, and video quality was completed using VEM as the criterion standard. The primary goal was to identify and stratify important features of outpatient smartphone videography that apply to diagnostic interpretation in patients suspected of having epilepsy. Reviewers made a forced choice for the smartphone video diagnosis of (1) epileptic seizures (ES), (2) psychogenic nonepileptic attack, (3) physiologic nonepileptic events, or (4) unknown diagnosis, with a corresponding level of certainty (0-10 scale).
Video interpretation and survey completion were performed by 10 epilepsy experts and 9 senior neurology residents without plans for epilepsy or sleep medicine fellowship at the same center who were masked to the video-EEG monitoring final diagnosis. The decision to include an individual resident was made by the leadership at each program. Multiple responses were permitted for specific questions. Data sharing was performed through a Health Insurance Portability and Accountability Act (HIPAA)–protected data transfer platform using a free web-based software application (CaptureProof). Computer-based surveys using proprietary software were accessed, completed, transferred, and securely stored after completion. The protocol was approved by the institutional review board of each participating center. All patients provided oral informed consent. Video uploading was performed by patients (or someone of their choice assisting them) using either Android (Open Handset Alliance) or iOS (Apple) formats. This study is reported in accordance with the Standards for Reporting of Diagnostic Accuracy guideline criteria.13

Study Aims

The primary study aim was to assess the diagnostic accuracy of smartphone videos reviewed by masked interpreters to correctly identify VEM diagnoses of epileptic seizures. Secondary aims involved determining the diagnostic accuracy of smartphone videos to correctly identify VEM diagnoses of psychogenic nonepileptic attacks and the association of experts vs trainees with video interpretation.

Inclusion and Exclusion Criteria

Patients were included in the study if they were 18 years or older, provided voluntary consent, completed an HP, submitted an outpatient smartphone video of their primary ictal event, underwent inpatient VEM, had more than 95% of each survey completed by reviewers, and had a final diagnosis. Patients were excluded from the study if they were younger than 18 years, pregnant, had an incomplete/absent HP, had no smartphone video, did not undergo VEM, had a confirmed history of mixed epileptic and nonepileptic events (based on prior VEM), declined study participation, or did not provide informed consent.

Smartphone Videos

Patients voluntarily supplied a witness-generated outpatient smartphone video during initial evaluation. Each recorded video captured an observable event, and those chosen represented the disabling or most common episode that resulted in epilepsy clinic evaluation and prompted VEM. Instructions for acquiring and uploading smartphone video were provided to optimize recovery of information, requesting a recording of a single typical event, encompassing the entire body, lasting approximately 2 minutes, and demonstrating interactivity with the patient. Most patients submitted a single representative video as instructed; when several were submitted, the most informative and representative video was chosen based on the duration and historical depiction of ictal phenomenology. Data sharing was performed via HIPAA-compliant, password-protected data storage and transfer using a web-based or mobile software application (CaptureProof).

Video EEG Monitoring

Eligible patients completed a single diagnostic VEM session in a hospital-based, academic, tertiary care epilepsy monitoring unit and received a final diagnosis. Video EEG monitoring was obtained at a National Association of Epilepsy Centers level IV epilepsy center.14 Final diagnosis following VEM was rendered by prominent epilepsy experts who were board certified in epilepsy and clinical neurophysiology. Reviewing physicians from different institutions were masked to VEM results.

Statistical Analysis

Statistical analyses were performed using Stata, version 15.1 (StataCorp). Diagnostic accuracy was assessed using the sensitivity, specificity, accuracy, and positive and negative predictive values of a smartphone video. Because the number of videos varied per reviewer, additional sensitivity analyses were performed to limit the effect of bias. Quantities were calculated by pooling all relevant assessments across reviewers and smartphone videos. Paired comparison of correct diagnoses with HP vs smartphone video used the McNemar test. For odds and likelihood ratios, significance was assessed using the χ2 test, and differences between experts and residents were assessed using the Mantel-Haenszel χ2 test of homogeneity. Two-sided P values less than .05 were considered statistically significant, and 95% CIs are reported. Differences in levels of confidence were assessed using the Mann-Whitney test for experts and residents, the Kruskal-Wallis test for the 3 paroxysmal event types, and the Wilcoxon signed rank test for treating physicians. The significance for area under the curve used a 1-sample test of proportions. Diagnostic accuracy for convulsive vs nonconvulsive events used the Pearson χ2 test or the Fisher exact test when expected cell values were low. Interrater reliability was analyzed via the Scott/Fleiss κ coefficient.
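As a minimal sketch of the pooled calculation described above, the following Python snippet collapses individual reviews into a single 2 × 2 table for one target diagnosis and derives the 5 performance measures. The review records, the "UNK" label, and the function name `pooled_metrics` are hypothetical illustrations, not study data or the Stata code used in the study; reviews with an unknown diagnosis are dropped here, mirroring the primary analysis.

```python
from collections import Counter

def pooled_metrics(reviews, target):
    """Pool (smartphone diagnosis, final VEM diagnosis) pairs across reviewers and videos.

    Assumes at least one review falls in each margin of the 2 x 2 table;
    otherwise a division by zero occurs.
    """
    counts = Counter()
    for predicted, final in reviews:
        if predicted == "UNK":  # unknown responses are excluded from the pooled table
            continue
        if final == target:
            counts["tp" if predicted == target else "fn"] += 1
        else:
            counts["fp" if predicted == target else "tn"] += 1
    tp, fn, fp, tn = (counts[k] for k in ("tp", "fn", "fp", "tn"))
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / (tp + fn + fp + tn),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
    }

# Hypothetical toy reviews (not study data): (smartphone diagnosis, final VEM diagnosis)
toy_reviews = [
    ("ES", "ES"), ("ES", "ES"), ("PNEA", "ES"), ("UNK", "ES"),
    ("PNEA", "PNEA"), ("PNEA", "PNEA"), ("PNEA", "PNEA"), ("ES", "PNEA"),
    ("PhysNEE", "PhysNEE"), ("UNK", "PNEA"),
]
m = pooled_metrics(toy_reviews, "ES")
print(m)
```

Pooling in this way treats every review as an independent observation, so reviewers who assessed more videos contribute more to each estimate.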

Results

Patients

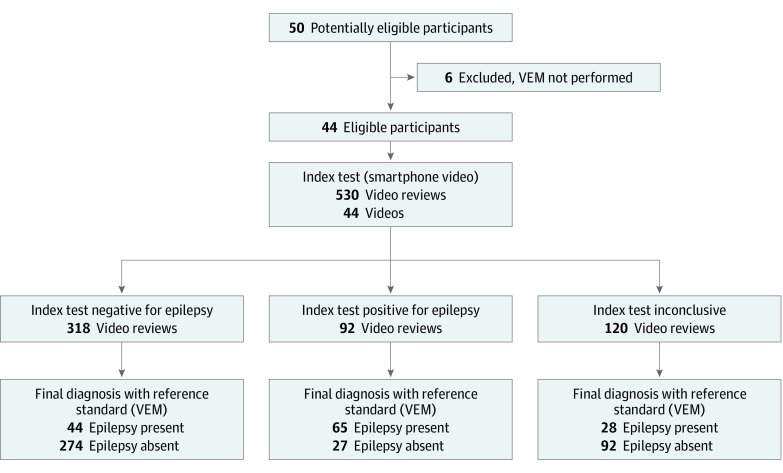

A mean of 330 annual hospital admissions for VEM occur in a level IV epilepsy center for evaluation and management recommendations of uncontrolled seizures or “spells.”14 As shown in Figure 1, 50 patients were recruited to the study and 44 met inclusion criteria, representing fewer than 1% of those admitted for VEM. Six patients were excluded because VEM was not performed after enrollment. Demographics are summarized in Table 1. Between performance of the index test and the reference standard, patients continued to receive the best medical treatment. No adverse events were encountered during the acquisition of a smartphone video or VEM in any patient. Patients identified events on smartphone videos and VEM to ensure targeted events were matched.

Figure 1. Standards for Reporting of Diagnostic Accuracy Flow Diagram for Epilepsy Diagnosis.

VEM indicates video electroencephalogram monitoring.

Table 1. Participant and Smartphone Video Characteristics by VEM Final Diagnoses.

| Features | ES (n = 11) | PNEA (n = 30) | PhysNEE (n = 3) | Overall (N = 44) |
|---|---|---|---|---|
| Participants | | | | |
| Women, No. (%) | 8 (72) | 23 (76) | 0 (0) | 31 (70) |
| Age, mean (range), y | 46.3 (23-79) | 43.8 (20-80) | 54.3 (28-82) | 45.1 (20-82) |
| Length of VEM, mean (range), d | 3.2 (2-5) | 3.1 (1-9) | 2.7 (2-4) | 3.1 (1-9) |
| Smartphone video | | | | |
| Length of smartphone videos, mean (range), s | 138.5 (9-399) | 136.7 (10-543) | 88.3 (10-227) | 133.8 (9-543) |
| Adequacy of technical recording (scale, 0-10), median (IQR) | 7 (5-8) | 7 (5-8) | 4 (1-6) | 7 (4-8) |
| Reviews per smartphone video, mean (range) | 12.5 (8-15) | 11.7 (2-15) | 13.7 (13-15) | 12.0 (2-15) |
| By experts, mean (range) | 7.0 (4-9) | 6.4 (1-9) | 7.3 (7-8) | 6.6 (1-9) |
| By residents, mean (range) | 5.5 (4-6) | 5.4 (1-8) | 6.3 (5-8) | 5.5 (1-8) |
| Total smartphone video reviews per diagnosis, No. | 137 | 352 | 41 | 530 |

Abbreviations: ES, epileptic seizure; IQR, interquartile range; PNEA, psychogenic nonepileptic attack; PhysNEE, physiologic nonepileptic events; VEM, video electroencephalogram monitoring.

History and Physical Examination

Standard HP was performed by 3 experts (L.J.H., M.G., and W.O.T.). Study surveys for HP and smartphone video review were available for analysis for 42 of 44 patients (95.5%). The predictive value for correct diagnoses from HP is listed in Table 2. For all events, 33 of 42 patients (78.6%) had a correct diagnosis derived from HP. When HP and smartphone video were combined, 40 of 42 (95.2%; 95% CI, 83.8%-99.4%) were correctly identified. Wrong diagnoses from HP (5 unknown, 4 incorrect) and smartphone video (4 unknown and 6 incorrect) compromised accuracy. Correct diagnosis after HP was no more likely than after smartphone video review when diagnoses disagreed. Overall, the diagnostic level of confidence after viewing a smartphone video (9/10) was higher than the diagnosis obtained with HP alone (8/10; P = .04).

Table 2. Measures of Diagnostic Utility for Smartphone Videos vs HP for ES and PNEAa,b.

| Characteristic | Diagnosis | HP, % (95% CI) | Smartphone videos, % (95% CI) |
|---|---|---|---|
| Accuracy | ES | 91.9 (78.1-98.3) | 89.5 (75.2-97.1) |
| | PNEA | 91.9 (78.1-98.3) | 86.8 (71.9-95.6) |
| Sensitivity | ES | 100 (69.2-100) | 70.0 (34.8-93.3) |
| | PNEA | 87.5 (67.6-97.3) | 92.0 (74.0-99.0) |
| Specificity | ES | 88.9 (70.8-97.6) | 96.4 (81.7-99.9) |
| | PNEA | 100 (75.3-100) | 76.9 (46.2-95.0) |
| PPV | ES | 76.9 (46.2-95.0) | 87.5 (47.3-99.7) |
| | PNEA | 100 (83.9-100) | 88.5 (69.8-97.6) |
| NPV | ES | 100 (85.8-100) | 90.0 (73.5-97.9) |
| | PNEA | 81.3 (54.4-96.0) | 83.3 (51.6-97.9) |

Abbreviations: ES, epileptic seizure; HP, history and physical examination; NPV, negative predictive value; PNEA, psychogenic nonepileptic attack; PPV, positive predictive value.

Unknown diagnoses on smartphone videos and HP surveys were excluded from the diagnostic utility analysis.

Only the treating physician (expert) performed an HP.
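The intervals in Table 2 can be illustrated with an exact binomial (Clopper-Pearson) calculation. The sketch below is an assumption-laden illustration: the 2 × 2 counts are not reported in the article and were back-calculated from the percentages in the smartphone video column for ES (for example, 7 of 10 for sensitivity and 27 of 28 for specificity), and the helper names are hypothetical. Under those assumed counts, the sensitivity interval works out to roughly 34.8% to 93.3%, in line with the table.

```python
from math import comb

def binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))

def root_of_decreasing(f, iters=60):
    """Bisection on [0, 1] for a function that decreases from positive to negative."""
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = (lo + hi) / 2
        if f(mid) > 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

def exact_ci(k, n, alpha=0.05):
    """Clopper-Pearson interval for k successes in n trials."""
    # Lower limit: the p at which P(X >= k) equals alpha / 2.
    lower = 0.0 if k == 0 else root_of_decreasing(
        lambda p: alpha / 2 - (1 - binom_cdf(k - 1, n, p)))
    # Upper limit: the p at which P(X <= k) equals alpha / 2.
    upper = 1.0 if k == n else root_of_decreasing(
        lambda p: binom_cdf(k, n, p) - alpha / 2)
    return lower, upper

# Assumed counts (back-calculated from Table 2 percentages, not reported in the article)
tp, fn, fp, tn = 7, 3, 1, 27
measures = {
    "sensitivity": (tp, tp + fn),
    "specificity": (tn, tn + fp),
    "PPV": (tp, tp + fp),
    "NPV": (tn, tn + fn),
    "accuracy": (tp + tn, tp + fn + fp + tn),
}
for name, (k, n) in measures.items():
    low, high = exact_ci(k, n)
    print(f"{name}: {100 * k / n:.1f} ({100 * low:.1f}-{100 * high:.1f})")
```

With only 10 patients with ES in this comparison, a single reclassified case shifts sensitivity by 10 percentage points, which is why the interval is so wide.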

Video Electroencephalogram Monitoring

Final clinical diagnosis followed a mean (SD) of 3.1 (1.9) days of VEM and included 11 patients with epileptic seizures (25.0%), 30 (68.2%) with psychogenic nonepileptic attacks, and 3 (6.8%) with physiologic nonepileptic events (Table 1). One of the patients with a psychogenic nonepileptic attack received a dual diagnosis at discharge (also received a diagnosis of physiologic nonepileptic events), but these secondary events (physiologic nonepileptic events/somnolence) were not the targeted events before VEM, were not paroxysmal, and could not be confused with the psychogenic nonepileptic attacks in question before admission. This incidence of dual diagnosed physiologic nonepileptic events and psychogenic nonepileptic attacks was 2.3% (1/44). This finding is substantially lower than prior estimates, likely because of the strict pre-VEM selection criteria to exclude patients with a confirmed history of mixed events.15

Overall, 34 patients (77.3%) had at least 1 typical outpatient event during VEM. Of the remaining 10 patients, 3 (6.8% of the cohort) had atypical events (eg, a patient with atypical tremors; a patient with “minor spells,” sleepiness, and hypnagogic jerks; and a patient with brief body jerks and subjective “jerklike feeling with internal warmth” with final diagnoses of physiologic nonepileptic events, psychogenic nonepileptic attacks/physiologic nonepileptic events, and psychogenic nonepileptic attacks, respectively); 2 (4.5% of the cohort) had epileptiform discharges on EEG (both generalized: one with generalized polyspike-and-wave with Jeavons syndrome and the other with 5-Hz generalized spike-and-wave and a final diagnosis of psychogenic nonepileptic attacks). Five patients (11.4% of the cohort) did not have events or EEG findings recorded during VEM (including 3 final diagnoses of psychogenic nonepileptic attacks and 2 final diagnoses of epileptic seizures), in line with prior studies reporting no event capture rates of approximately 20% during VEM.16,17

Smartphone Video Interpretation

Five hundred thirty smartphone video reviews were completed by 19 reviewers (10 epilepsy experts and 9 senior neurology residents), with a mean of 6.6 experts/video and 5.5 residents/video (range, 1-9 experts/video and 1-8 residents/video). Because the number of the 44 smartphone videos assessed varied between reviewers, a sensitivity analysis was performed to prevent biasing toward reviewers who assessed more videos. For the 19 reviewers, the median number of smartphone videos reviewed was 34 (range, 3-44), and 11 of the 19 reviewers (57.9%) interpreted more than 30 smartphone videos (eTables 1 and 2 in the Supplement). Overall sensitivity for correct interpretation of epileptic seizures by residents was less than that of experts (41.5% vs 76.8%) despite similar specificity (88.3% vs 93.3%). For experts predicting psychogenic nonepileptic attacks, sensitivity (89.9% vs 86.6%) and specificity (77.5% vs 52.4%) were greater than for residents. Smartphone videos averaged 2.23 minutes (range, 9 seconds-9 minutes and 3 seconds) compared with a typical 60-minute HP (P < .001).

Diagnostic Accuracy

Expert interpretation of a smartphone video was accurate in predicting a VEM-confirmed diagnosis of epileptic seizures 89.1% of the time, with a specificity of 93.3% (Table 3). Diagnostic accuracy was reduced when 120 surveys (70 [58.3%] from experts, 50 [41.7%] from residents) with an unknown diagnosis were included (eTable 3 in the Supplement). Among event types, 28 of 137 reviews of epileptic seizures (20.4%), 76 of 352 reviews of psychogenic nonepileptic attacks (21.6%), and 16 of 41 reviews of physiologic nonepileptic events (39.0%) were unknown. Eleven videos had 100% diagnostic accuracy by reviewing physicians; 8 were correctly predicted and 2 were unknowns by HP (1 excluded for duplicate responses). The odds of receiving a correct diagnosis were 5.45 times greater using smartphone videos alongside HP than with HP alone (P = .02; 95% CI, 1.01-54.3). Overall, patients were 6.65 times (95% CI, 4.49-9.83) more likely to receive a correct diagnosis of epileptic seizures and 2.58 times (95% CI, 2.03-3.27) more likely to receive a diagnosis of psychogenic nonepileptic attacks from a smartphone video when they actually had the condition (P < .001) (eTable 4 in the Supplement). Resident responses were less accurate for all metrics involving epileptic seizures and psychogenic nonepileptic attacks, including accuracy, sensitivity, specificity, and positive and negative predictive values (Table 3). However, residents reported higher levels of confidence compared with experts (eFigure 1 in the Supplement). Overall, the median level of confidence was 8 of 10 (interquartile range [IQR], 6-9) for residents and 7 of 10 for experts (IQR, 6-9; P = .02). Levels of confidence also differed between epileptic seizures (median, 7; IQR, 5-8), psychogenic nonepileptic attacks (median, 8; IQR, 7-9), and physiologic nonepileptic events (median, 5; IQR, 4-8; P < .001). Interrater reliability was fair for residents (κ = 0.30; 95% CI, 0.17-0.42) and moderate for experts (κ = 0.44; 95% CI, 0.32-0.56).18
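For readers unfamiliar with the κ statistic, the following Python sketch computes a Fleiss κ for a small, entirely hypothetical rating table and attaches a descriptive label. Two assumptions are worth flagging: this simple version requires the same number of ratings for every video, whereas the study had between 2 and 15 reviews per video, and the label bands follow the widely used Landis and Koch convention, which is assumed here because it matches the descriptors fair and moderate used in the text.

```python
def fleiss_kappa(table):
    """table[i][j] = number of raters assigning video i to category j.

    Assumes every video has the same number of ratings (at least 2).
    """
    n_items = len(table)
    n_raters = sum(table[0])
    if any(sum(row) != n_raters for row in table):
        raise ValueError("every video needs the same number of ratings")
    # Observed agreement: share of agreeing rater pairs per video, averaged.
    p_obs = sum(
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in table
    ) / n_items
    # Chance agreement from the overall category proportions.
    n_cats = len(table[0])
    props = [sum(row[j] for row in table) / (n_items * n_raters) for j in range(n_cats)]
    p_exp = sum(p * p for p in props)
    return (p_obs - p_exp) / (1 - p_exp)

def landis_koch(kappa):
    """Descriptive label for a kappa value (Landis and Koch bands, assumed)."""
    if kappa < 0:
        return "poor"
    for upper, label in [(0.20, "slight"), (0.40, "fair"),
                         (0.60, "moderate"), (0.80, "substantial")]:
        if kappa <= upper:
            return label
    return "almost perfect"

# Hypothetical ratings: 4 videos, 3 raters each, columns = (ES, PNEA)
toy_table = [[3, 0], [0, 3], [2, 1], [1, 2]]
k = fleiss_kappa(toy_table)
print(round(k, 3), landis_koch(k))
print(landis_koch(0.30), landis_koch(0.44))  # labels for the reported resident and expert values
```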

Table 3. Measures of Diagnostic Utility of Smartphone Video for ES and PNEA.

| Characteristic | Diagnosis | All reviewers, % (95% CI) | Experts only, % (95% CI) | Residents only, % (95% CI) |
|---|---|---|---|---|
| Accuracy | ES | 82.7 (78.7-86.2) | 89.1 (84.2-92.9) | 75.3 (68.5-81.2) |
| | PNEA | 81.0 (76.8-84.7) | 85.9 (80.6-90.2) | 75.3 (68.5-81.2) |
| Sensitivity | ES | 59.6 (49.8-68.9) | 76.8 (63.6-87.0) | 41.5 (28.1-55.9) |
| | PNEA | 88.4 (84.0-91.9) | 89.9 (83.9-94.3) | 86.6 (79.4-92.0) |
| Specificity | ES | 91.0 (87.2-94.0) | 93.3 (88.3-96.6) | 88.3 (81.7-93.2) |
| | PNEA | 65.7 (57.0-73.7) | 77.5 (66.0-86.5) | 52.4 (39.4-65.1) |
| PPV | ES | 70.7 (60.2-79.7) | 79.6 (66.5-89.4) | 57.9 (40.8-73.7) |
| | PNEA | 84.1 (79.4-88.1) | 89.3 (83.3-93.8) | 78.6 (70.8-85.1) |
| NPV | ES | 86.2 (81.9-89.8) | 92.2 (87.0-95.8) | 79.6 (72.3-85.7) |
| | PNEA | 73.3 (64.5-81.0) | 78.6 (67.1-87.5) | 66.0 (51.2-78.8) |

Abbreviations: ES, epileptic seizure; NPV, negative predictive value; PNEA, psychogenic nonepileptic attack; PPV, positive predictive value.
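The statements that patients were 6.65 and 2.58 times more likely to receive a diagnosis when they actually had the condition read as positive likelihood ratios, that is, sensitivity divided by 1 minus specificity; this interpretation is an assumption based on the title of eTable 4. The short Python sketch below recomputes the ratios from the rounded all-reviewer values in Table 3. The function name is hypothetical, and because the inputs are rounded percentages rather than raw counts, the ES value comes out slightly below the reported 6.65.

```python
def positive_likelihood_ratio(sensitivity, specificity):
    """LR+ = P(test positive | condition) / P(test positive | no condition)."""
    return sensitivity / (1.0 - specificity)

# Rounded all-reviewer values from Table 3: (sensitivity, specificity)
all_reviewers = {"ES": (0.596, 0.910), "PNEA": (0.884, 0.657)}

lr = {dx: positive_likelihood_ratio(se, sp) for dx, (se, sp) in all_reviewers.items()}
for dx, value in lr.items():
    print(f"{dx}: LR+ = {value:.2f}")
```

A larger ratio for ES than for PNEA follows from the higher specificity for ES: a video read as an epileptic seizure was rarely a false positive, even though many seizures were missed.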

Smartphone Video Quality

The video quality was adequate for interpretation in 10 of 11 patients with epileptic seizures (90.9%) and 25 of 30 with psychogenic nonepileptic attacks (83.3%) (eFigure 2 in the Supplement). Physiologic nonepileptic events lacked similar acceptability (1/3 [33.3%]), probably because of the low sample size. Variability in smartphone video interpretation was due to technique as opposed to technical limitations (eFigure 3 in the Supplement) and varied by clinician type.

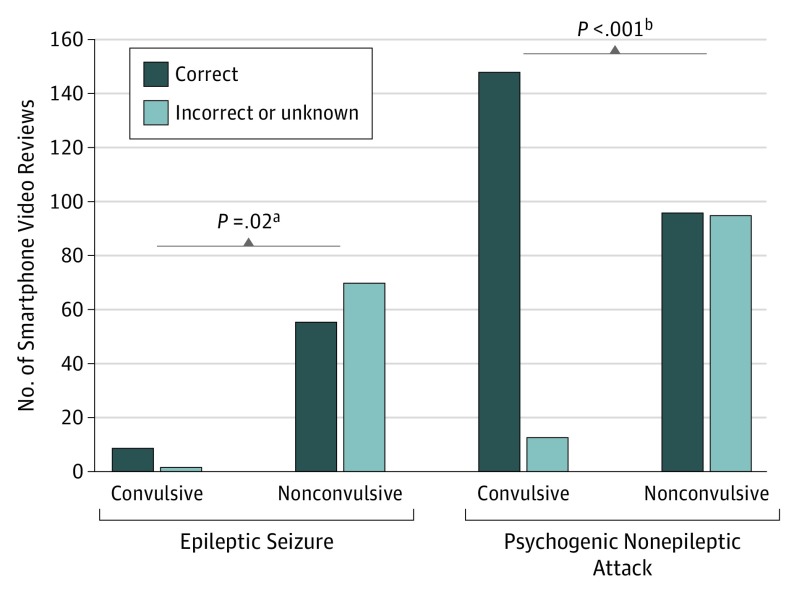

Diagnostic Value

Eleven of 44 smartphone videos (25.0%) had high diagnostic value, with 100% of reviewing physicians correctly predicting a final diagnosis from the video; all of these videos depicted psychogenic nonepileptic attacks. Epileptic seizures and physiologic nonepileptic events were never correctly diagnosed by 100% of the physicians reviewing the smartphone videos. When examined by event type, 10 of 11 smartphone videos with epileptic seizures (90.9%) and 16 of 30 with psychogenic nonepileptic attacks (53.3%) were nonconvulsive. Semiology was classified as motor and nonmotor.19 Overall, convulsive episodes (9 correct vs 2 incorrect/unknown reviews; P < .03) and psychogenic nonepileptic attacks (148 correct vs 13 incorrect/unknown; P < .001) were significantly more likely to be correctly diagnosed from video review (Figure 2). Events that were convulsive had a higher diagnostic accuracy than events classified as nonconvulsive for epileptic seizures (98.2%; 95% CI, 94.7%-99.6% vs 72.4%; 95% CI, 66.3%-77.8%) and psychogenic nonepileptic attacks (95.7%; 95% CI, 91.4%-98.3% vs 71.1%; 95% CI, 65.0%-76.7%).

Figure 2. Diagnostic Accuracy With Convulsive vs Nonconvulsive Smartphone Video-Recorded Events.

ES indicates epileptic seizure; PNEA, psychogenic nonepileptic attack.

aCalculated using the Fisher exact test (because of the low cell numbers in the convulsive group for ES).

bCalculated using the Pearson χ2 test.

Discussion

This study provides class II evidence for the diagnostic value of smartphone videos in adult outpatients with epilepsy. An epilepsy diagnosis by experts is sensitive with an adequate HP; however, HP alone lacks specificity for some semiologies.2,20 We found that smartphone video diagnostic accuracy was comparable with HP when diagnostic unknowns/incorrect responses were excluded. An HP had approximately 1 in 5 patients with unknown/incorrect diagnoses, but combined with a smartphone video, the yield rose to 95.2%. A prior VEM study of 5 epileptologists analyzing 41 events (34 epileptic seizures) in 30 patients had an overall video diagnostic accuracy of 65% using charted description and 88% with video, similar to this study.21 In another study of 43 patients with video and EEG that relied on video recordings alone, the authors correctly identified 27 epileptic seizures with a sensitivity of 93% and specificity of 94%.22 The lower sensitivity of 76.8% in this study, despite a similar specificity of 93.3% by expert reviewers, likely reflects unbiased patient-derived video preselection. The odds of receiving a correct diagnosis were 5.45 times greater using smartphone video alongside HP than with HP alone (95% CI, 1.01-54.3; P = .02). Compared with a typical 60-minute HP, the time saved was significant.

Smartphone videos added diagnostic value to HP, especially for patients with psychogenic nonepileptic attacks. Almost 70% of patients who volunteered a smartphone video had psychogenic nonepileptic attacks, serving as a potential “red flag” for a seizure mimic. We speculate that the patient’s need to validate the attacks as real may be a partial explanation. Semiology is a key clinical tool when evaluating individuals presenting with seizures.19 Behavioral description between caregivers and neurologists has only fair to moderate interrater reliability.23,24 In this real-world study, expert interrater reliability for a correct smartphone video diagnosis was moderate compared with other studies with excellent interrater reliability21 but similar to others involving psychogenic nonepileptic attacks.20 We found a greater predictability when motor signs were present. This aligns with one study involving video review22 but contrasts with another including nonmotor symptoms. In a pediatric cohort using HP, consistent predictability was present for motor seizures but only fair agreement for nonconvulsive seizures.25 The sensitivity of smartphone videos to predict psychogenic nonepileptic attacks was greater than for epileptic seizures. The 25% of videos for which 100% of reviewing physicians correctly predicted the final diagnosis consisted entirely of psychogenic nonepileptic attacks. A prospective VEM study found a similar proportion of cases (7/23 [30%]) in which a confident diagnosis was established by all 5 epilepsy expert raters; by contrast, however, diagnosis in that study was split between epileptic seizures and psychogenic nonepileptic attacks based on video data alone.24

In clinical practice, some nonmotor semiologies (eg, eye closure, visual hallucinations, and ictal speech) have diagnostic, localizing, or lateralizing value. A large prospective observational study from India using 29 targeted signs in 312 patients with 624 home videos compared with 282 patients recording 572 seizures identified 3.3 features per video vs 2.1 from medical history.26 One video study found a diagnostic difference for the area under the curve comparing neurologists with neurology trainees.27 We also saw similar differences using smartphone videos for the area under the curve, although our prospective, multiexpert, multicenter US study was all-inclusive without video selection and did not use a leave-out analysis. Addressing diagnostic confidence, we found that experts scored higher than residents on virtually every accuracy metric. However, despite the relative lack of predictability, the resident level of confidence was consistently greater. This reflects the effect of experience for certain disciplines and activities28 but contrasts with other procedures.29 Greater exposure to VEM during a neurology residency could help underscore how often one is incorrect about a seizure diagnosis.2,4,5,21 Hence, less predictive value was seen when nonexperts viewed smartphone videos; therefore, pooled results that include nonexpert reviews likely underestimate the clinical effect of expert review.

To make an accurate diagnosis of epilepsy, neurologic assessment takes up to 60 minutes to complete and years of training to master.30 This raises issues for use in developing countries, which often lack neurologists and skilled EEG technologists.26 “Smart” telemedicine includes other neurologic conditions, such as stroke,30,31 multiple sclerosis,32 Parkinson disease,33,34 and sleep.35 The rise in demand for electronic consulting to resolve testing inconsistencies for EEG emphasizes the need for additional information.36 Patients with paroxysmal neurologic events and limited access to care26 may now obtain a semiology-based, time-efficient, expert opinion at low or no cost via HIPAA-secured video transfer. For a subset of patients (ie, psychogenic nonepileptic attacks), the potential to triage or eliminate VEM could provide substantial resource reallocation and cost savings. Despite the move toward advancing mobile health, legal barriers, including state licensure and practice laws, credentialing, and liability concerns, may limit the interstate performance of telehealth.37

Limitations

This study has limitations. Small sample sizes of epileptic seizures and physiologic nonepileptic events are a major limitation and do not incorporate the array of semiology during VEM.38 Several factors, including physician time for review, patients’ ability to transfer videos, strict inclusion criteria, and the lack of on-site study coordinators, hampered enrollment and retention. Still, multiple video views add strength to the conclusions. Temporal assessment was restricted to a single video at 1 point. Assessing longitudinal care with serial videos could reveal even greater benefits.35 Study clinicians are notable epilepsy experts; therefore, findings from experts and residents at comprehensive epilepsy centers may not be generalizable to community clinicians. Moreover, smartphone videos were from patients identified at tertiary care referral centers and may therefore represent atypical events presenting diagnostic challenges. Also, while our survey was not a validated tool, surveys are often used to capture the qualitative aspects of semiology.2,20,26,27 We recognize that there could be inherent statistical bias by treating smartphone video reviews as independent events. The pragmatic, real-world design of this study resulted in findings derived from pooled data composed of multiple video views. Aggregating measures of accuracy across many different reviewers without weighting the results is a limiting factor. Furthermore, we recognize that omitting reviews when a diagnosis could not be determined from smartphone video could distort statistical results (as demonstrated in eTable 3 in the Supplement). However, we felt this exclusion was warranted, as even in the presence of a stated unknown diagnosis smartphone video may still provide additive clues.

Several practical limitations require comment. More generally, using smartphone videos as a diagnostic tool may be further limited by (1) the degree of digital sophistication, (2) the need for preserved patient consciousness and motor abilities or an observer in proximity who is able to conduct video recording, and (3) costs to maintain electronic platforms and wireless connection with established video transfer privacy.39 Emphasizing recording the ictus (vs postictal), event duration, whole body focus, and the interaction to demonstrate impaired awareness are helpful tips to enhance diagnostic reliability. Overall, the inconsistencies associated with the witnessed description of an event40 are offset by accuracy and time-efficiency using adjunctive smartphone videos. Furthermore, videos obtained by unknown and inexperienced personnel may have led to an underestimation of the true potential of smartphone videos to improve diagnostic accuracy.

Conclusions

Secure big data transfer of outpatient smartphone videos among multiple epilepsy centers for diagnostic interpretation is feasible. Smartphone videos have added value in predicting the final VEM diagnosis of epileptic seizures. Smartphone videos are most useful for patients with psychogenic nonepileptic attacks, especially when motor signs are present. Expert evaluation of smartphone videos is a modern mobile health tool and a useful adjunct to HP. The ability to identify patients without epilepsy before inpatient VEM could help triage and realign resources toward patients for whom the need is high (ie, surgery). The adjunctive use of adult outpatient smartphone videos is expected to change the practice of epilepsy diagnosis and management, which is currently based solely on HP.

eAppendix. Survey for Video Semiology and Quality Review

eTable 1. Measures of Diagnostic Utility of SV for Convulsive vs Non-Convulsive Events Among the 11 Reviewers Who Assessed >30 Videos

eTable 2. Measure of Diagnostic Utility of SV for ES (A) and PNEA (B)

eTable 3. Measure of Diagnostic Accuracy of Smartphone Videos for ES and PNEA with “Unknowns” Excluded Versus Included

eTable 4. Likelihood and Odds Ratios as Measures of Diagnostic Utility of Smartphone Video for ES and PNEA

eFigure 1. Differences in LOC in Diagnosis from Smartphone Videos for Residents Versus Experts by Diagnostic Accuracy

eFigure 2. Reviewer-Designated Hindrances to Diagnosis from Smartphone Video

eFigure 3. Radar Plot Including Listed Reason for Difficulty with Diagnosis from Smartphone Video by Clinician Type

References

- 1. Murray CJL, Barber RM, Foreman KJ, et al; GBD 2013 DALYs and HALE Collaborators. Global, regional, and national disability-adjusted life years (DALYs) for 306 diseases and injuries and healthy life expectancy (HALE) for 188 countries, 1990-2013: quantifying the epidemiological transition. Lancet. 2015;386(10009):2145-2191. doi: 10.1016/S0140-6736(15)61340-X

- 2. Deacon C, Wiebe S, Blume WT, McLachlan RS, Young GB, Matijevic S. Seizure identification by clinical description in temporal lobe epilepsy: how accurate are we? Neurology. 2003;61(12):1686-1689. doi: 10.1212/01.WNL.0000090566.40544.04

- 3. Velis D, Plouin P, Gotman J, da Silva FL; ILAE DMC Subcommittee on Neurophysiology. Recommendations regarding the requirements and applications for long-term recordings in epilepsy. Epilepsia. 2007;48(2):379-384. doi: 10.1111/j.1528-1167.2007.00920.x

- 4. Cascino GD. Clinical indications and diagnostic yield of video-electroencephalographic monitoring in patients with seizures and spells. Mayo Clin Proc. 2002;77(10):1111-1120. doi: 10.4065/77.10.1111

- 5. Benbadis SR. Nonepileptic behavioral disorders: diagnosis and treatment. Continuum (Minneap Minn). 2013;19(3 Epilepsy):715-729. doi: 10.1212/01.CON.0000431399.69594.de

- 6. Seneviratne U, Reutens D, D’Souza W. Stereotypy of psychogenic nonepileptic seizures: insights from video-EEG monitoring. Epilepsia. 2010;51(7):1159-1168. doi: 10.1111/j.1528-1167.2010.02560.x

- 7. Syed TU, LaFrance WC Jr, Kahriman ES, et al. Can semiology predict psychogenic nonepileptic seizures? A prospective study. Ann Neurol. 2011;69(6):997-1004. doi: 10.1002/ana.22345

- 8. LaFrance WC Jr, Baker GA, Duncan R, Goldstein LH, Reuber M. Minimum requirements for the diagnosis of psychogenic nonepileptic seizures: a staged approach: a report from the International League Against Epilepsy Nonepileptic Seizures Task Force. Epilepsia. 2013;54(11):2005-2018. doi: 10.1111/epi.12356

- 9. Kumar N, Khunger M, Gupta A, Garg N. A content analysis of smartphone-based applications for hypertension management. J Am Soc Hypertens. 2015;9(2):130-136. doi: 10.1016/j.jash.2014.12.001

- 10. Lorig KR, Holman H. Self-management education: history, definition, outcomes, and mechanisms. Ann Behav Med. 2003;26(1):1-7. doi: 10.1207/S15324796ABM2601_01

- 11. Casassa C, Rathbun Levit E, Goldenholz DM. Opinion and special articles: self-management in epilepsy: web-based seizure tracking applications. Neurology. 2018;91(21):e2027-e2030. doi: 10.1212/WNL.0000000000006547

- 12. Goldenholz DM, Moss R, Jost DA, et al. Common data elements for epilepsy mobile health systems. Epilepsia. 2018;59(5):1020-1026. doi: 10.1111/epi.14066

- 13. Bossuyt PM, Reitsma JB, Bruns DE, et al; STARD Group. STARD 2015: an updated list of essential items for reporting diagnostic accuracy studies. Radiology. 2015;277(3):826-832. doi: 10.1148/radiol.2015151516

- 14. Gumnit RJ, Labiner DM, Fountain NB, et al; Prepared by the National Association of Epilepsy Centers. Data on specialized epilepsy centers: report to the Institute of Medicine's Committee on the Public Health Dimensions of the Epilepsies. https://www.ncbi.nlm.nih.gov/books/NBK100603/?report=classic. Accessed January 6, 2019.

- 15. Vossler DG. Nonepileptic seizures of physiologic origin. J Epilepsy. 1995;8(1):1-10. doi: 10.1016/0896-6974(94)00014-Q

- 16. Benbadis SR, O’Neill E, Tatum WO, Heriaud L. Outcome of prolonged video-EEG monitoring at a typical referral epilepsy center. Epilepsia. 2004;45(9):1150-1153. doi: 10.1111/j.0013-9580.2004.14504.x

- 17. Dobesberger J, Walser G, Unterberger I, et al. Video-EEG monitoring: safety and adverse events in 507 consecutive patients. Epilepsia. 2011;52(3):443-452. doi: 10.1111/j.1528-1167.2010.02782.x

- 18. Altman DG. Practical Statistics for Medical Research. New York, NY: Chapman and Hall/CRC; 1990.

- 19. Scheffer IE, Berkovic S, Capovilla G, et al. ILAE classification of the epilepsies: position paper of the ILAE Commission for Classification and Terminology. Epilepsia. 2017;58(4):512-521. doi: 10.1111/epi.13709

- 20. Shih JJ, Fountain NB, Herman ST, et al. Indications and methodology for video-electroencephalographic studies in the epilepsy monitoring unit. Epilepsia. 2018;59(1):27-36. doi: 10.1111/epi.13938

- 21. Beniczky SA, Fogarasi A, Neufeld M, et al. Seizure semiology inferred from clinical descriptions and from video recordings. How accurate are they? Epilepsy Behav. 2012;24(2):213-215. doi: 10.1016/j.yebeh.2012.03.036

- 22. Chen DK, Graber KD, Anderson CT, Fisher RS. Sensitivity and specificity of video alone versus electroencephalography alone for the diagnosis of partial seizures. Epilepsy Behav. 2008;13(1):115-118. doi: 10.1016/j.yebeh.2008.02.018

- 23. Benbir G, Demiray DY, Delil S, Yeni N. Interobserver variability of seizure semiology between two neurologist and caregivers. Seizure. 2013;22(7):548-552. doi: 10.1016/j.seizure.2013.04.001

- 24. Erba G, Giussani G, Juersivich A, et al. The semiology of psychogenic nonepileptic seizures revisited: can video alone predict the diagnosis? preliminary data from a prospective feasibility study. Epilepsia. 2016;57(5):777-785. doi: 10.1111/epi.13351

- 25. Hirfanoglu T, Serdaroglu A, Cansu A, Bilir E, Gucuyener K. Semiological seizure classification: before and after video-EEG monitoring of seizures. Pediatr Neurol. 2007;36(4):231-235. doi: 10.1016/j.pediatrneurol.2006.12.002

- 26. Dash D, Sharma A, Yuvraj K, et al. Can home video facilitate diagnosis of epilepsy type in a developing country? Epilepsy Res. 2016;125:19-23. doi: 10.1016/j.eplepsyres.2016.04.004

- 27. Seneviratne U, Rajendran D, Brusco M, Phan TG. How good are we at diagnosing seizures based on semiology? Epilepsia. 2012;53(4):e63-e66. doi: 10.1111/j.1528-1167.2011.03382.x

- 28. Hu Y-Y, Peyre SE, Arriaga AF, et al. Postgame analysis: using video-based coaching for continuous professional development. J Am Coll Surg. 2012;214(1):115-124. doi: 10.1016/j.jamcollsurg.2011.10.009

- 29. Nathan BR, Kincaid O. Does experience doing lumbar punctures result in expertise? a medical maxim bites the dust. Neurology. 2012;79(2):115-116. doi: 10.1212/WNL.0b013e31825dd3b0

- 30. Boukhvalova AK, Kowalczyk E, Harris T, et al. Identifying and quantifying neurological disability via smartphone. Front Neurol. 2018;9:740. doi: 10.3389/fneur.2018.00740

- 31. MDedge. Smartphone versus Holter monitoring for poststroke atrial fibrillation detection. https://www.mdedge.com/neurology/article/178669/stroke/smartphone-versus-holter-monitoring-poststroke-atrial-fibrillation. Accessed July 27, 2019.

- 32. Bove R, White CC, Giovannoni G, et al. Evaluating more naturalistic outcome measures: a 1-year smartphone study in multiple sclerosis. Neurol Neuroimmunol Neuroinflamm. 2015;2(6):e162. doi: 10.1212/NXI.0000000000000162

- 33. Varghese J, Niewöhner S, Soto-Rey I, et al. A smart device system to identify new phenotypical characteristics in movement disorders. Front Neurol. 2019;10:48. doi: 10.3389/fneur.2019.00048

- 34. Zhan A, Mohan S, Tarolli C, et al. Using smartphones and machine learning to quantify Parkinson disease severity: the Mobile Parkinson Disease Score. JAMA Neurol. 2018;75(7):876-880. doi: 10.1001/jamaneurol.2018.0809

- 35. Choi YK, Demiris G, Lin S-Y, et al. Smartphone applications to support sleep self-management: review and evaluation. J Clin Sleep Med. 2018;14(10):1783-1790. doi: 10.5664/jcsm.7396

- 36. Bradi AC, Sitwell L, Liddy C, Afkham A, Keely E. Ask a neurologist: what primary care providers ask, and reducing referrals through eConsults. Neurol Clin Pract. 2018;8(3):186-191. doi: 10.1212/CPJ.0000000000000458

- 37. Dorsey ER, Topol EJ. State of telehealth. N Engl J Med. 2016;375(2):154-161. doi: 10.1056/NEJMra1601705

- 38. Jobst BC, Siegel AM, Thadani VM, Roberts DW, Rhodes HC, Williamson PD. Intractable seizures of frontal lobe origin: clinical characteristics, localizing signs, and results of surgery. Epilepsia. 2000;41(9):1139-1152. doi: 10.1111/j.1528-1157.2000.tb00319.x

- 39. Fisher RS, Blum DE, DiVentura B, et al. Seizure diaries for clinical research and practice: limitations and future prospects. Epilepsy Behav. 2012;24(3):304-310. doi: 10.1016/j.yebeh.2012.04.128

- 40. Ristić AJ, Drašković M, Bukumirić Z, Sokić D. Reliability of the witness descriptions of epileptic seizures and psychogenic non-epileptic attacks: a comparative analysis. Neurol Res. 2015;37(6):560-562. doi: 10.1179/1743132815Y.0000000009
