Abstract

Background/Aims:

Cost-efficient methods are essential for successful participant recruitment in clinical trials. Patient portal messages are an emerging means of recruiting potentially eligible patients into trials. We assessed the response rate and complaint rate from direct-to-patient, targeted recruitment through patient portals of an electronic medical record for a clinical trial and compared response rates by differences in message content.

Methods:

The Study to Understand Fall Reduction and Vitamin D in You (STURDY) trial is a National Institutes of Health-sponsored, community-based study of vitamin D supplementation for fall prevention in older adults conducted at Johns Hopkins. Potential participants were identified using the Epic electronic medical record at the Johns Hopkins Health System based on age (≥70 years), ZIP code (30-mile radius of study site), and prior activation of a patient portal account. We prepared a shorter message and a longer message. Both had basic information about study participation, but the longer message also contained information about the significance of the study and a personal invitation from the STURDY principal investigator. The Hopkins IRB did not require prior consent from the patient or their providers. We calculated the response rate and tracked the number of complaints and requests for removal from future messages. We also determined response rate according to message content.

Results:

Of the 5.5 million individuals receiving care at the Johns Hopkins Health System, a sample of 6,896 met our inclusion criteria and were sent 1 patient portal recruitment message between April 6, 2017 and August 3, 2017. Assessment of enrollment by this method ended December 1, 2017. There were 116 patients who expressed interest in the study (response rate: 1.7%). Twelve (0.2%) of recipients were randomized. There were 2 complaints (0.03%) and 1 request to unsubscribe from future recruitment messages (0.01%). Response rate was higher with the longer message than the shorter message (2.1% vs. 1.2%; P=0.005).

Conclusions:

Patient portal messages inviting seniors to participate in an RCT resulted in a response rate similar to commercial email marketing and resulted in very few complaints or opt-out requests. Furthermore, a longer message with more content enhanced response rate. Recruitment through patient portals might be an effective strategy to enroll trial participants.

Keywords: Clinical trial, randomized controlled trial, recruitment methods, electronic medical records, patient portal messages

Background

Attaining adequate statistical power in randomized controlled trials (RCTs) requires the timely enrollment of sufficient numbers of participants.1-3 Traditional, community-based RCT recruitment strategies may employ costly advertisement methods, such as mailing brochures and placing advertisements on television, radio, and in periodicals. Modern marketing strategies leverage direct email communications.4 Email marketing strategies can be perceived as obtrusive and typically involve an option to unsubscribe from future communications.5 Electronic medical records contain structured demographic and medical history information that facilitates targeted recruitment strategies using electronic communication, but several practical considerations prevent common use of this approach. For example, the inherent insecurity of email communication may not be concordant with privacy standards required by institutional review boards. Furthermore, contacting patients may require obtaining their physicians’ consent, which can be a major barrier to recruitment efforts.

Patient portal extensions on electronic medical records allow patients direct access to their health information and allow staff to communicate with patients regarding their care in a secure environment. Direct-to-patient, targeted recruitment messages through patient portals comply with patient privacy standards,6 but little is known regarding the feasibility, acceptability, and effectiveness of this approach.7 Also, whether changes in wording of these messages could affect response has not been studied. The objective of this paper is to describe the design, implementation, response rate, and complaints generated from a patient portal recruitment campaign targeting older adults without involving their physicians at a single academic medical center. We also examine the effect of two different messages on participant response rate.

Methods

Study to Understand Fall Reduction and Vitamin D in You (STURDY) study design

STURDY (ClinicalTrials.gov registration number , principal investigator LJA) is an ongoing RCT evaluating the effect of 4 doses of vitamin D on falls among elderly adults who are vitamin D deficient and concerned about falling. Details of the trial design were published.8 The trial began enrollment in 2015 using conventional recruitment methods including mass mailing of brochures, radio advertisements, newspaper advertisements, and recruitment at health fairs. The trial and all recruitment strategies were approved by the Johns Hopkins University School of Medicine Institutional Review Board.

Electronic medical record implementation

The Johns Hopkins Health System uses the fully integrated Epic electronic medical record (Epic Systems, Verona, WI) and integrated MyChart patient portal across the entire health system including community hospitals and outpatient practices. Access to the patient portal is available through a website and mobile application.

Patient portal recruitment policy development

Many of the details related to patient portal recruitment institutional policy development were published elsewhere and an overview is available in Supplemental Figure S1.7 In brief, a working group involving the Johns Hopkins University Institute for Clinical and Translational Research, Institutional Review Board (IRB), and Epic support teams developed specific policies for the use of the patient portal to recruit patients for IRB-approved clinical protocols. Importantly, prior consent for receipt of these messages was not required from any clinical staff or patients receiving clinical care at the Johns Hopkins Health System. IRB-approved recruitment messages were delivered to patients meeting pre-specified demographic and clinical characteristics by the Institute for Clinical and Translational Research team on behalf of the investigators. The investigators were permitted to receive aggregate demographic information about recipients but did not have access to individual patient records. Patients who went on to contact STURDY staff for more information about the study (either by phone or through our online form) were asked about their demographic information and how they heard about the study as part of the standard STURDY trial screening protocol. The STURDY IRB allowed for analytic use of these screening data. We assessed participants' completion of study visits and, ultimately, their randomization status.

STURDY investigators received IRB approval for the patient portal recruitment messages that briefly described the study design, basic eligibility criteria, and information on how to contact the study staff, including the study phone number and the website (sturdystudy.org),9 where visitors could use a secure form to share their own contact information to speak with trial staff. The message also included instructions for opting out of future recruitment messages and a link to a page detailing patient portal recruitment message use, the core content of which can be found in Supplemental Figure S2.10 Two versions of recruitment messages were developed and appear in Supplemental Figure S3. The core content of the two messages was identical and comprised (1) an invitation to participate in the trial, (2) brief eligibility criteria, (3) contact information, (4) the signature of the Vice Dean for Clinical Investigation and the STURDY principal investigator, (5) information about the voluntary nature of the study, (6) links to the patient portal recruitment message frequently asked questions page, (7) information on how to unsubscribe from future messages, and (8) information on the IRB approval of the recruitment message. The ‘short’ variant was 129 words in length and the ‘long’ variant was 219 words in length. The long variant differed from the short variant in that it included a personal invitation to participate in the study, facts describing the implications of falls among seniors, and details about the importance of clinical trials to advance medical knowledge.

Search criteria to identify recruitment message recipients

We defined patients as a unique medical record number with ≥1 diagnosis, ≥1 medication order, ≥1 procedure, or ≥1 laboratory result at any inpatient or outpatient affiliate of the Johns Hopkins Health System since September 1, 2016. This date represents the approximate timing of cross-network deployment of the electronic medical record.

Patients were identified via a query conducted by the Johns Hopkins Center for Clinical Data Analytics. This data analytic group has been granted access to patient records for the purpose of conducting investigator-initiated queries from research staff who are not directly involved with patient care. This process prevents research staff from directly accessing personal health information. The query contained 3 criteria: age ≥70 years, residence in a ZIP Code within a 30-mile radius of the ProHealth research clinic in Woodlawn, MD, and an activated patient portal account. Planning for the query was conducted using the “SlicerDicer” function of Epic, which tabulates the number of potential participants with specific characteristics (demographic, labs, medications, and ICD codes). The query was inclusive by intention and did not include key elements of the STURDY trial, namely, vitamin D deficiency status, supplement use, or fall history. The principal reason for this inclusive approach is that data on key eligibility criteria, that is, low serum level of vitamin D, use of vitamin D supplements, and history of falls, were thought to be more likely incomplete and/or outdated in the medical record. Notably, this protocol did not require permission from clinicians or patients prior to the receipt of these messages, as these messages were deemed to be of minimal potential harm to recipients.
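The three search criteria can be illustrated with a minimal sketch. The actual query ran inside the Epic record system and its implementation is not described here, so the `PatientRecord` fields, the `meets_search_criteria` helper, the ZIP codes, and the sample records below are all hypothetical placeholders rather than study data.

```python
from dataclasses import dataclass


@dataclass
class PatientRecord:
    # Hypothetical fields for illustration; not the Epic data model.
    mrn: str
    age_years: int
    zip_code: str
    portal_activated: bool


def meets_search_criteria(patient, zips_within_radius, min_age=70):
    """Return True when all three search criteria are satisfied."""
    return (
        patient.age_years >= min_age
        and patient.zip_code in zips_within_radius
        and patient.portal_activated
    )


# Placeholder ZIP list standing in for "within a 30-mile radius of the clinic".
zips_within_radius = {"21207", "21244"}

# Made-up records, one per way of passing or failing the criteria.
patients = [
    PatientRecord("A1", 74, "21207", True),
    PatientRecord("A2", 69, "21207", True),   # younger than 70
    PatientRecord("A3", 81, "10001", True),   # ZIP outside the list
    PatientRecord("A4", 77, "21244", False),  # portal not activated
]

recipients = [p.mrn for p in patients if meets_search_criteria(p, zips_within_radius)]
print(recipients)
```

Trial-specific criteria such as vitamin D level or fall history are deliberately absent from the filter, mirroring the inclusive approach described above.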

Recruitment message distribution

Messages were sent to 250 to 1000 patients at a time in 9 batches of a random sub-selection of patients, on Thursdays approximately every 2 weeks over a 17-week span, until one message was sent to all patients matching the search criteria. Patients only received one message during this timeframe. The first and final batches of messages were sent on April 6, 2017 and August 3, 2017, respectively. The Epic team and the STURDY team coordinated on the exact timing of each batch and altered the date and number of messages based upon monthly monitoring of recruitment goals and STURDY staff availability to respond to the recruitment requests.
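One way to picture the batching is the sketch below: shuffle the recipient list once, then cut it into consecutive batches so that every patient falls into exactly one batch. The `make_batches` helper, the seed, and the individual batch sizes are assumptions for illustration; only the total (6,896), the number of batches (9), and the 250 to 1000 range come from the text.

```python
import random


def make_batches(recipient_ids, batch_sizes, seed=0):
    """Shuffle recipients once, then slice into consecutive batches.

    Each recipient lands in exactly one batch, so nobody is messaged twice.
    """
    recipient_ids = list(recipient_ids)
    if sum(batch_sizes) != len(recipient_ids):
        raise ValueError("batch sizes must add up to the number of recipients")
    random.Random(seed).shuffle(recipient_ids)
    batches, start = [], 0
    for size in batch_sizes:
        batches.append(recipient_ids[start:start + size])
        start += size
    return batches


# Hypothetical sizes: nine batches, each between 250 and 1000, summing to 6,896.
batch_sizes = [250, 500, 750, 1000, 1000, 1000, 1000, 896, 500]
batches = make_batches(range(6896), batch_sizes)
print([len(b) for b in batches])
```

Fixing the sizes up front, rather than splitting evenly, leaves room to shrink or grow a batch according to staff availability, which is the adjustment described above.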

Response rate & randomization rate

The response rate was defined as the number of interested participants who contacted the study team to learn about their eligibility who also reported hearing about the trial through the patient portal (numerator) divided by the total number of participants who received a recruitment message (denominator). Randomization rate was calculated as the number of randomized participants divided by the number who reported receiving a recruitment message. Any potential participants who contacted the study team between April 6, 2017 and December 1, 2017 (inclusive) were included in this analysis.
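As a worked example of these definitions, the sketch below plugs in the counts reported in the Results (6,896 recipients, 116 responders, 12 randomized). The `rate_percent` helper is a hypothetical name, and the randomization rate is computed here with all message recipients as the denominator, which is an assumption chosen to match the reported 0.17% (12/6,896).

```python
def rate_percent(numerator, denominator):
    """Express numerator/denominator as a percentage."""
    return 100.0 * numerator / denominator


recipients = 6896   # patients who were sent a recruitment message
responders = 116    # contacted the study and cited the patient portal
randomized = 12     # responders who were ultimately randomized

response_rate = rate_percent(responders, recipients)
randomization_rate = rate_percent(randomized, recipients)

print(f"Response rate: {response_rate:.1f}%")
print(f"Randomization rate: {randomization_rate:.2f}%")
```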

Complaints about patient portal messages and requests to unsubscribe from future recruitment messages

The Johns Hopkins School of Medicine academic community (faculty, staff, and trainees) received a general email detailing the initiation of patient portal recruitment methods (not specific to the STURDY trial; see Supplemental Figure S4) and were instructed to direct any concerns or complaints to the chair of the Institute for Clinical and Translational Research. The STURDY PI (LJA), the chair of the Institute for Clinical and Translational Research (DEF), and the Epic team compiled all complaints from patients about the recruitment message. We tabulated and described the complaints. We also tabulated the requests to unsubscribe from future patient portal recruitment messages.

Statistical analysis plan

We tabulated demographics for patient portal use versus no patient portal use among those aged ≥70 years. We determined population characteristics of patients receiving a portal message, contacting the study staff for more information, and ultimately undergoing randomization. We were unable to derive demographics of all recipients stratified by message length. Demographics were not compiled for recipients who complained or requested to unsubscribe due to privacy concerns related to the small numbers in these groups. We calculated median time to response overall and by message variant, defining the day of message receipt as day 1. We characterized time to response using a cumulative incidence Kaplan-Meier plot. We compared responses by message variant with Cox proportional hazards models that were (1) unadjusted and (2) adjusted for month (a categorical variable based on batches sent in April-May, June, or July-August) in order to assess for temporal trends. We confirmed that each model did not violate the proportional hazards assumption by calculating Schoenfeld residuals. We also compared responses by message variant using an unadjusted log-rank test and a log-rank test adjusted for month. We considered a P-value <0.05 to indicate statistical significance. Analyses were performed with Stata MP 15.1 (StataCorp, College Station, TX). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Results

Patient populations

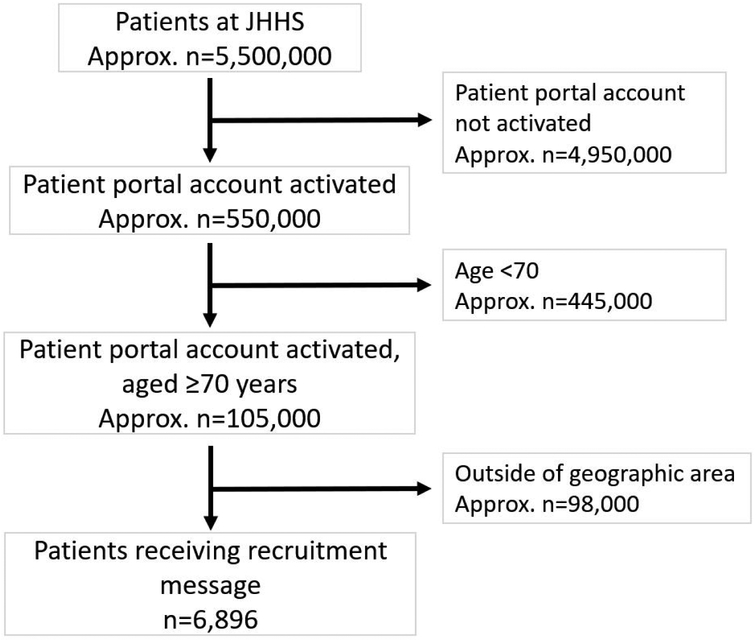

Among the approximately 1.3 million patients at the Johns Hopkins Health System between April 6, 2017 and August 3, 2017, approximately 550,000 (43%) had patient portal activation (Figure 1). This proportion was 40% among adults ≥70 years old. Those aged ≥70 years with active patient portal accounts differed from the 60% of adults aged ≥70 who had not activated their patient portal accounts in several ways. First, those with activated patient portal accounts were younger as 73% were between 70 and 80 years old, whereas only 55% of those without patient portal activation were between 70 and 80 years old. Men comprised a greater proportion of patient portal users (49%) than non-users (40%). Finally, white adults comprised a greater proportion of patient portal users (80%) than non-users (67%).

Figure 1. Message receipt flow diagram.

*Defined as an active, unique medical record with ≥1 diagnosis, ≥1 medication order, ≥1 procedure, or ≥1 lab result since September 1, 2016 at any inpatient or outpatient JHHS affiliate.

Among the approximately 105,000 adults aged ≥70 years with activated patient portal accounts, 93% were excluded for living outside of the geographic area (Figure 1). A total of 6,896 patients met our search criteria and received a patient portal recruitment message.

Among the 6,896 patients who received a message through the patient portal, the mean (SD) age of recipients was 77 (6) years (Table 1). About half were female, most were white (80%), and the majority were married (67%). Of the 116 respondents (1.7% response rate), 20% were not interested in trial participation, 58% were ineligible, and 2% dropped out for other reasons. Of those who were ineligible, 19% were neither afraid of falling nor at risk for falling, 19% were taking a high dose of vitamin D supplements (eligibility ≤1000 IU/day), 37% had a screening vitamin D25 level that was out of range for study participation (eligibility range 10-29 ng/mL), and 24% were ineligible for other reasons. Of those who responded, 10% were ultimately randomized.

Table 1. Patient characteristics

| | Received patient portal message* | Responded | Randomized |
|---|---|---|---|
| N (% of recipients) | 6,896 (100) | 116 (1.7) | 12 (0.2) |
| Age, mean (SD), y | 77 (6) | 76 (5) | 76 (3) |
| Female, % | 3,586/6,896 (52) | 48/116 (41) | 5/12 (42) |
| Race/ethnicity | | | |
| White, % | 5,517/6,896 (80) | 103/116 (89) | 11/12 (92) |
| Black, % | 896/6,896 (13) | 7/116 (6) | 1/12 (8) |
| Asian, % | 276/6,896 (4) | 2/116 (2) | 0/12 (0) |
| Hispanic, % | 69/6,896 (1) | 0/114 (0) | 0/12 (0) |
| Other, % | 207/6,896 (3) | 4/116 (3) | 0/12 (0) |
| Marital status | | | |
| Single, % | 552/6,896 (8) | 9/104 (9) | 1/9 (11) |
| Married, % | 4,620/6,896 (67) | 74/104 (71) | 7/9 (78) |
| Divorced, % | 483/6,896 (7) | 9/104 (9) | 0/9 (0) |
| Widowed, % | 1,103/6,896 (16) | 10/104 (10) | 0/9 (0) |
| Other, % | 138/6,896 (2) | 2/104 (2) | 1/9 (11) |

*Numerators in this column are approximated as only percentages were available for all recipients.

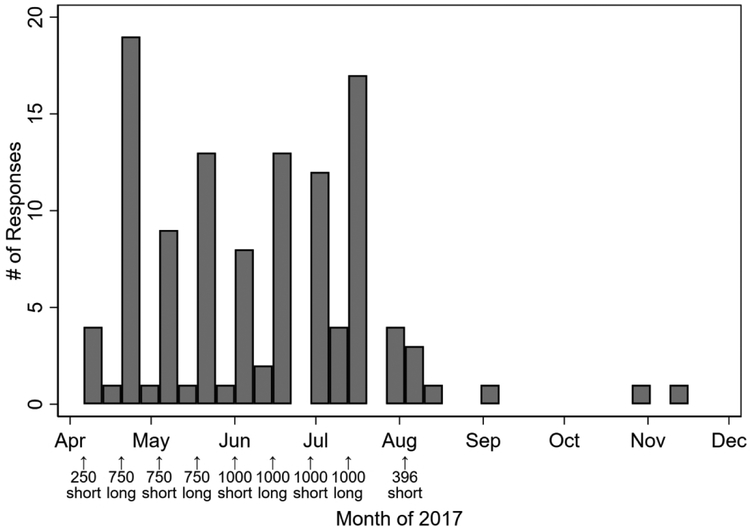

The schedule of the messaging, number of messages sent, and responses by calendar time are shown in Figure 2. The first response occurred on April 6, 2017, which coincided with the date of the first message being sent. The final response occurred on November 11, 2017. The median (IQR) duration until response was 2 (1-7) days. Among those who eventually responded, 77% responded within 1 week, 84% within 2 weeks, and 91% within 4 weeks.

Figure 2. Histogram of responses by week of follow-up.

*Bar width is Thursday through Wednesday (7 days) throughout the follow-up period, beginning on the day that the first message was sent. Messages were sent out on April 6, April 20, May 4, May 18, June 1, June 15, June 29, July 13, and August 3, 2017.

Complaints and requests to unsubscribe from future recruitment messages

Of the 6,896 message recipients, there were two reported complaints (0.03%). One patient was already enrolled in STURDY and was not pleased that they received a recruitment message despite already being enrolled. They did not request to unsubscribe from future recruitment messages. The second patient was not enrolled in STURDY and complained to their physician about receiving the message. This individual also requested to unsubscribe from future recruitment messages. This was the only request to unsubscribe (0.01%).

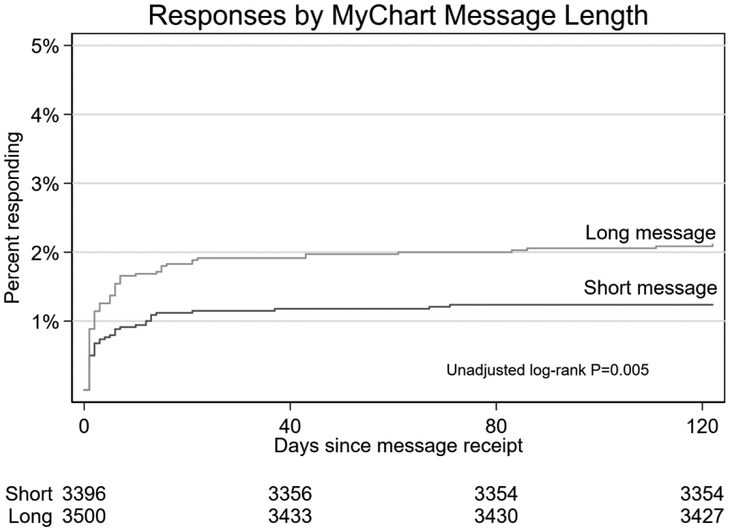

Variation in response rate by message length

Five of the batches contained the short variant and 4 contained the long variant. The short variant was received by 3,396 patients (49%) and the long variant by 3,500 patients (51%). The overall response rate was 1.7% (116/6,896). The response rate was significantly lower for the shorter variant (42/3,396; 1.2%) than for the longer variant (74/3,500; 2.1%; HR 1.71; 95% CI 1.17, 2.50; P=0.005; Table 2). After adjustment for month, this comparison remained significant (HR 1.51; 95% CI 1.04, 2.30; P=0.03). The cumulative response rate is shown in Figure 3.
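As a rough cross-check on the size of this difference, the sketch below computes a crude risk ratio with a Wald confidence interval on the log scale from the reported counts. This is a simpler technique than the Cox proportional hazards model used in the analysis: it ignores time to response and month, so it only approximates the reported hazard ratio. The `risk_ratio_ci` helper is a hypothetical name.

```python
import math


def risk_ratio_ci(events_a, n_a, events_b, n_b, z=1.96):
    """Crude risk ratio (group A vs. group B) with a Wald CI on the log scale."""
    rr = (events_a / n_a) / (events_b / n_b)
    # Standard error of log(RR) for two independent binomial proportions.
    se_log_rr = math.sqrt(1 / events_a - 1 / n_a + 1 / events_b - 1 / n_b)
    lower = math.exp(math.log(rr) - z * se_log_rr)
    upper = math.exp(math.log(rr) + z * se_log_rr)
    return rr, lower, upper


# Long variant: 74 responders of 3,500; short variant: 42 of 3,396.
rr, lower, upper = risk_ratio_ci(74, 3500, 42, 3396)
print(f"Crude RR {rr:.2f} (95% CI {lower:.2f}, {upper:.2f})")
```

With so few responders relative to recipients, the crude risk ratio and the unadjusted hazard ratio would be expected to land close together, which is what the counts show.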

Table 2. Characteristics of responding patients (n=116) by message length

| | Short | Long |
|---|---|---|
| Response rate, %* | 42/3,396 (1.24) | 74/3,500 (2.11) |
| Age, mean (SD), y | 76 (6) | 76 (4) |
| Female, % | 20/42 (48) | 28/74 (38) |
| Race/ethnicity | | |
| White, % | 36/42 (86) | 67/74 (91) |
| Black, % | 3/42 (7) | 4/74 (5) |
| Asian, % | 1/42 (2) | 1/74 (1) |
| Hispanic, % | 0/42 (0) | 0/72 (0) |
| Other, % | 2/42 (5) | 2/74 (3) |
| Marital status | | |
| Single, % | 3/39 (8) | 6/65 (9) |
| Married, % | 29/39 (74) | 45/65 (69) |
| Divorced, % | 3/39 (8) | 6/65 (9) |
| Widowed, % | 2/39 (5) | 8/65 (12) |
| Other, % | 2/39 (5) | 0/65 (0) |

*Adjusted P=0.008

Figure 3. Cumulative incidence curve for response by message length.

*Log-rank adjusted for month, P=0.008.

Overall enrollment was low, with 3/42 (7%) and 9/74 (12%) of the short and long message responders being randomized, respectively. This corresponded to an enrollment rate of 0.17% (12/6,896) overall, 0.09% (3/3,396) among short message recipients, and 0.26% (9/3,500) among long message recipients. There was no significant difference in enrollment between the groups using recipients as the denominator (χ2 P=0.09) or responders as the denominator (χ2 P=0.19).
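The recipient-denominator comparison can be sketched with a Pearson chi-square test on the 2x2 table of randomized versus not randomized by message variant. The sketch assumes no continuity correction, which the text does not specify, and `chi_square_2x2` is a hypothetical helper written with the standard library only.

```python
import math


def chi_square_2x2(events_a, n_a, events_b, n_b):
    """Pearson chi-square (no continuity correction) for a 2x2 table, 1 df."""
    a, b = events_a, n_a - events_a
    c, d = events_b, n_b - events_b
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # For 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p_value


# Randomized: 3 of 3,396 short message recipients vs. 9 of 3,500 long message recipients.
chi2, p_value = chi_square_2x2(3, 3396, 9, 3500)
print(f"chi-square = {chi2:.2f}, P = {p_value:.2f}")
```

With only 12 randomized participants in total, the expected counts in the event cells are small, so an exact test might be preferred in practice; that choice is outside what the text reports.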

Discussion

In this study of direct-to-patient, targeted recruitment through patient portals of a commercial electronic medical record, we documented a response rate of 1.7% and an enrollment rate of 0.17%. Furthermore, half of responses occurred within 2 days of message receipt, with 91% occurring within 4 weeks. This intervention reassuringly generated only 2 complaints and 1 request for removal from future recruitment messages. Finally, variation in message content appeared to affect response rate.

Few studies have reported on the acceptability of the use of patient portal messages as a recruitment modality. One prior study at Johns Hopkins enrolled patients with atrial fibrillation using patient portal recruitment messages. Of 1,303 recipients who completed a survey about the appropriateness of the patient portal for this use, >90% reported it being a good use of the patient portal and 99% reported that it did not change their satisfaction as a patient at the Johns Hopkins Health System.7 While we did not explicitly survey the recipients of the STURDY recruitment message, we only received 2 complaints and 1 opt-out request. The unsubscribing request rate (0.01%) was lower than commercial strategies (0.1 to 0.4%).5 The overall response rate of 1.7% was on par with conventional email marketing campaigns, which typically achieve a 1% response rate.4

As there is a paucity of research on the use of patient portal messages for recruiting patients into clinical trials, it is unclear how this might compare at other institutions. It is possible that the response rate may be higher for patient portal messages than commercial email marketing campaigns as patient portal messages may be perceived as of greater importance than an email. Investigation at other institutions and in multicenter studies is needed to delineate this further.

One of the principal advantages to portal messaging is timing. Half of our responses were received within 2 days of the message and 91% by 4 weeks of the message being sent out. In contrast, non-electronic, traditional modes of recruitment (e.g. direct mailing of brochures, advertisements in periodicals) may require more time for receipt and response to a study solicitation. This should be an important consideration when using this modality. While having an immediate response may be advantageous for shortening recruitment periods and achieving enrollment goals, recruitment staff could be overwhelmed by an immediate bolus of replies with patient portal messaging or other digital recruitment strategies (e.g., email-based recruitment). As a result, batching messages may be a more practical option for managing workflow.

Variation in message content appeared to alter response rate. Specifically, the response rate was higher among those receiving the longer (vs. shorter) message. While we were unable to control for recipient characteristics in this analysis, the batches comprised random subgroups of eligible patients. Thus, it is unlikely that recipient characteristics differed in a meaningful way between groups. It was not possible to identify the specific differences in message content that affected response rate. Future studies should implement a stepwise approach in message development and distribution, focusing on one specific change in message content at a time and following the response rate. Such stepwise message crafting can result in optimization of the message content, which can also inform the selection of content of traditional recruitment modalities. Message presentation and content represent potentially cost-neutral interventions that might profoundly impact recruitment yields. Research into message effectiveness represents an important area of inquiry for future studies.

Only 10% of respondents enrolled in our study. Unfortunately, we are unable to compare this rate to other recruitment modalities. However, ineligibility is one of the primary reasons that people ultimately do not proceed to enrollment. It is possible that enrollment rates might be improved with greater specificity of the targeting strategy. For example, we might have messaged only patients with a known vitamin D deficiency or fall history. This increased specificity could also miss eligible people. On the other hand, non-specific messaging could contribute to patient fatigue with regard to subsequent messages for other studies. For studies with costly screening modalities, it may be advantageous to maximize specificity to reduce screen failures. This highlights an important area for further research, focused on optimizing the specificity and sensitivity of electronic medical record-based strategies to maintain patient goodwill toward research, while maximizing recruitment yields. Such a strategy could identify a pool of potential trial participants and assess efficacy of traditional recruitment methods versus targeted recruitment messages, like those employed in this pilot study. The relative efficacy of these means can be compared in a prospective manner to identify which potential participants are reached best by traditional recruitment methods, by electronic medical record-enabled patient portal messaging techniques, or by other novel methods (e.g., social media advertisements). Additionally, the development of recruitment cohorts from clinical trials is needed to assess optimal recruitment strategies by demographic groupings.

The Johns Hopkins Health System electronic medical record and its patient portal have not been optimized for this communication method and several enhancements may improve future patient portal recruitment campaigns. First, one patient who received the recruitment message was already enrolled in the study and complained about receiving a recruitment message. STURDY participants are not identified as such in the electronic medical record. Documenting specific trial enrollments within the electronic medical record can guide recruitment campaigns and exclude these patients from messaging. Such practices can then identify cohorts of patients who have previously participated in trials. Anecdotally, we have found patients with prior trial enrollment to be more enthusiastic in responding to recruitment efforts. Continual documentation of RCT enrollment may identify a subgroup of patients more likely to participate in an RCT. Second, there was no automated method to unsubscribe from future recruitment messages and documenting this status was cumbersome. Fortunately, there was only one request so it did not adversely affect workflow among the staff. Developing an automated method to request a future opt-out would improve workflow efficiency. Third, the messages were not sent by the RCT staff and there was no opportunity to respond to investigators directly using the patient portal. The response rate may have been improved if patients could ask questions or perform the pre-screening through the portal as well, rather than over the phone.

The majority of study participants were white, male, and married, reflecting characteristics of patient portal users among our target population. We expect this may be different for studies targeting younger patient populations. Prior research has documented a desire from older adults to participate in clinical research,11 though strategies for engaging them differ from those for younger populations.12 The overall level of patient portal activation among patients at the Johns Hopkins Health System was 43%, and it was 40% among adults aged ≥70 years. Patient portals may therefore be one method to engage seniors in clinical research. The lower proportion of non-white patients with portal activation may limit the ability of this method to promote racial diversity in clinical research.

This study has limitations. We do not have direct patient satisfaction assessments upon message receipt and cannot say that the messages were overall well-received. Future investigation is required to assess user experience and best practices for future recruitment campaigns leveraging patient portals. Collection of complaint data was passive, so it is likely that there were complaints made to clinical staff (e.g., a patient complaining to their primary care doctor) that were not shared with us. However, requests to unsubscribe from future communications are a reasonable secondary metric to assess for unreported complaints. We note that in our project, only one opt-out was requested in the timeframe, and it was from the same recipient who registered a complaint. Recruitment messages were only sent to older adults, and it is not clear if response would be different among younger adults. As this was a pilot study, we are unable to estimate the costs of this recruitment modality and compare its cost-effectiveness against traditional marketing methods. This pilot was run at a large academic medical center with a focus on trial recruitment, so generalizability may be limited. We only sent one message to each patient during this pilot, so it is unclear whether multiple messages would have augmented response. We were limited in our ability to determine patient-level information about message recipients due to privacy protections. Furthermore, we were unable to assess habits of patient portal use among the recipients and whether the messages were opened in their patient portal inbox. It is unclear how well this strategy would work for diseases with negative stereotypes (e.g., alcoholism) or diseases that are documented in the patients' records but that the patients may not be aware of as a medical issue (e.g., lower-stage chronic kidney disease). We did not perform recruitment using conventional email, which represents an important future step to understanding the cost-effectiveness of this approach.

This study has several strengths. First, to our knowledge, our study is the first demonstration of patient portal recruitment without physician consent among older adults. Second, our tracking of recruitment rates, timing and volume of response, and tolerability of messaging is directly pertinent to principal investigators and study coordinators executing recruitment strategies. Third, our comparison of message content demonstrates an opportunity to optimize recruitment yields. Finally, our study provides data on the demographic utilization of the patient portal at a large institution, which is essential for implementing generalizable studies. Importantly, we demonstrate an ability to recruit older adults, a population thought to underutilize technology, through an electronic patient portal. Future efforts should attempt to improve the response from non-white patients.

There are several key implications from this study. Patients were successfully messaged without physician involvement, using a patient opt-out model. Recruitment through clinic environments conventionally involves physician consent, the rationale being that physicians best know their patients’ needs and are best positioned to advise a patient on whether trial participation would be ideal for them. It has also been argued that soliciting patients through an electronic record is intrusive, with a third party coming between doctor and patient in their relationship of trust. However, these perspectives have served as barriers to research.13-16 First, physicians carry substantial clinical burdens, such that learning the nuances of a trial and taking time out of their limited patient sessions to discuss a topic ancillary to direct care is not practical. Second, the physician-consent model removes patient autonomy, as the physician serves as a gatekeeper to information, rather than affording patients direct access to evaluate and learn about options on their own. Third, some patients feel pressured to participate in studies when the invitation comes from their physician. The minimal complaints and a response rate comparable to industry benchmarks among a population known for lower technology utilization are meaningful and imply that direct messaging of older patients is an efficient and potentially acceptable use of this technology. On a final note, this recruitment approach should be viewed in terms of the overall recruitment goal of the trial, specifically, up to 1,200 randomized participants. Hence, this strategy alone is insufficient; as in most trials, multiple recruitment strategies need to be implemented.

In conclusion, direct-to-patient, targeted recruitment of 6,896 patients through the patient portal of a commercial electronic medical record yielded a 1.7% response rate and only 2 complaints. Variations in message content were associated with differing response rates. Further research is needed to understand how best to optimize the use of patient portal messaging for recruitment to clinical trials.
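For readers who wish to check the summary percentages, the following minimal sketch recomputes them from the counts reported in the Results (6,896 messages sent; 116 responses; 12 randomized; 2 complaints; 1 unsubscribe request) and relates the portal yield to the recruitment goal of up to 1,200 randomized participants. The variable and function names are illustrative only and are not part of the trial's analysis code.

```python
# Illustrative recomputation of the reported recruitment metrics.
# Counts come from the Results; all names here are hypothetical.

MESSAGED = 6896          # patients sent one portal recruitment message
RECRUITMENT_GOAL = 1200  # upper bound of the trial's randomization goal

counts = {
    "responded": 116,    # expressed interest in the study
    "randomized": 12,
    "complaints": 2,
    "unsubscribe": 1,    # requests for removal from future messages
}


def pct(count, total):
    """Return count as a percentage of total."""
    return 100.0 * count / total


# Each outcome as a percentage of all messaged patients.
rates = {name: pct(n, MESSAGED) for name, n in counts.items()}

# Share of the overall recruitment goal met by portal messaging alone.
share_of_goal = pct(counts["randomized"], RECRUITMENT_GOAL)

# Average number of messages sent per randomized participant.
messages_per_randomized = MESSAGED / counts["randomized"]

for name, rate in rates.items():
    print(f"{name}: {rate:.2f}% of messaged patients")
print(f"share of recruitment goal: {share_of_goal:.1f}%")
print(f"messages per randomized participant: {messages_per_randomized:.0f}")
```

Under these counts, portal messaging alone accounts for about 1% of the recruitment goal, which is consistent with the point that multiple recruitment strategies are needed.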

Supplementary Material

Acknowledgments

The authors thank the participants and the field center staff for their invaluable contributions to the STURDY trial. We would like to thank the National Institutes of Health and its Institutes for supporting multiple aspects of this work. STURDY is supported by the National Institute on Aging (U01AG047837). Members of the STURDY Collaborative Research Group include Lawrence J. Appel, MD, MPH (chair); Nicole Cronin; Stephen P. Juraschek, MD, PhD; Scott McClure, MS; Christine M. Mitchell, ScM (Johns Hopkins Welch Center for Prevention, Epidemiology and Clinical Research); Rita R. Kalyani, MD, MHS; David L. Roth, PhD; Jennifer A. Schrack, PhD; Sarah L. Szanton, PhD; Jacek Urbanek, PhD; Jeremy Walston, MD; Amal Wanigatunga, PhD, MPH (Johns Hopkins Center on Aging and Health); Sheriza Baksh, MPH; Amanda L. Blackford, ScM; Cathleen Ewing; Sana Haider; Rosetta Jackson; Andrea Lears, BS; Curtis Meinert, PhD; David Shade, JD; Michael Smith, BS; Alice L. Sternberg, ScM; James Tonascia, PhD; Mark L. Van Natta, MHS; Annette Wagoner (STURDY Data Coordinating Center); Erin D. Michos, MD, MHS (Site PI); J. Denise Bennett; Pamela Bowers; Josef Coresh, MD, PhD; Tammy Crunkleton; Briana Dick; Rebecca Evans, RN; Mary Godwin; Lynne Hammann; Deborah Hawks; Karen Horning; Brandi Mills; Melissa Minotti, MPH; Melissa Myers; Leann Raley; Rhonda Reeder; Adria Spikes; Rhonda Stouffer; Kelly Weicht (George W. Comstock Center for Public Health Research and Prevention Clinical Field Center); Edgar R. Miller III, MD, PhD (Site PI); Bernellyn Carey; Jeanne Charleston, BSN, RN; Syree Davis; Naomi DeRoche-Brown; Debra Gayles; Ina Glenn-Smith; Duane Johnson; Mia Johnson; Eva Keyes; Kristen McArthur; Ruth Osheyack; Danielle Santiago; Chanchai Sapun; Valerie Sneed; Letitia Thomas; Jarod White (ProHealth Clinical Research Unit Field Center); Robert H. Christenson, PhD; Show-Hong Duh, PhD; Heather Rebuck (Laboratory, University of Maryland School of Medicine); Clifford Rosen, MD (chair, Maine Medical Center Research Institute); Tom Cook, PhD (University of Wisconsin); Pamela Duncan, PhD (Wake Forest Baptist Health); Karen Hansen, MD, MS (2016-present, University of Wisconsin); Anne Kenny, MD (2014-2016, University of Connecticut); Sue Shapses, PhD, RD (Rutgers University) (Data and Safety Monitoring Board); Judy Hannah, PhD; Sergei Romashkan, MD (National Institute on Aging); Cindy D. Davis, PhD (Office of Dietary Supplements). Consultants: Jack M. Guralnik, MD, PhD (University of Maryland School of Medicine); J.C. Gallagher, MD (Creighton University School of Medicine).

Funding

The Johns Hopkins Institute for Clinical and Translational Research (ICTR) is supported by the National Center for Advancing Translational Sciences Award (UL1TR001079). TBP was sponsored by grants from the Health Resources and Services Administration (T32HP10025B0) and the National Heart, Lung, and Blood Institute (NHLBI, 2T32HL007180-41A1). SPJ was supported by the National Institute of Diabetes and Digestive and Kidney Diseases (T32DK007732) and the NHLBI (K23HL135273). KTG and HNM were supported by a predoctoral fellowship in Interdisciplinary Training in Cardiovascular Health Research (T32NR012704). KTG was also supported by a Predoctoral Clinical Research Training Program (TL1TR001078). ML is supported by grants from the National Institute of Diabetes and Digestive and Kidney Diseases (R01DK089174, R01DK108784, U01DK061730), the National Heart, Lung, and Blood Institute (UH3HL130688-02), and the National Institute on Alcohol Abuse and Alcoholism (U10AA025286).

Grant funding:

Research reported in this publication was supported by the National Institute on Aging of the National Institutes of Health (NIH/NIA) under Award Number U01AG047837. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. The Office of Dietary Supplements (ODS) also supported this research.

Footnotes

Trial registration: ClinicalTrials.gov .

Disclosures

The authors report no conflicts of interest.

References

1. Hunninghake DB, Darby CA and Probstfield JL. Recruitment experience in clinical trials: literature summary and annotated bibliography. Control Clin Trials 1987; 8: 6S–30S.

2. Easterbrook PJ and Matthews DR. Fate of research studies. J R Soc Med 1992; 85: 71–76.

3. Holden G, Rosenberg G, Barker K, et al. The recruitment of research participants: a review. Soc Work Health Care 1993; 19: 1–44.

4. Katsarelas M. eCommerce Email Marketing Benchmarks for 2016. Remarkety, https://www.remarkety.com/ecommerce-email-marketing-benchmarks-2016 (2016, accessed 2 November 2018).

5. Email Marketing Benchmarks. Mailchimp, https://mailchimp.com/resources/email-marketing-benchmarks/ (accessed 12 November 2018).

6. ONC Health IT Certification Details: Including Cost and Limitations Disclosures. Information for Meaningful Use and other regulatory programs, https://www.epic.com/docs/mucertification.pdf (2018, accessed 15 January 2019).

7. Gleason KT, Ford DE, Gumas D, et al. Development and preliminary evaluation of a patient portal messaging for research recruitment service. J Clin Transl Sci 2018; 2: 53–56.

8. Michos ED, Mitchell CM, Miller ER, et al. Rationale and design of the Study To Understand Fall Reduction and Vitamin D in You (STURDY): A randomized clinical trial of Vitamin D supplement doses for the prevention of falls in older adults. Contemp Clin Trials 2018; 73: 111–122.

9. June 26, 2017 Archive.org snapshot of sturdystudy.org. Sturdy Trial - Home, https://web.archive.org/web/20170626221931/http://www.sturdystudy.org/ (2017, accessed 29 November 2018).

10. August 2, 2017 Archive.org snapshot of the MyChart Recruitment Messages Frequently Asked Questions page at the Johns Hopkins ICTR website. MyChart Recruitment Messages, https://web.archive.org/web/20170802143440/https://ictr.johnshopkins.edu/community/community-involvement/mychart-recruitment-messages/ (2017, accessed 29 November 2018).

11. Witham MD and McMurdo MET. How to get older people included in clinical studies. Drugs Aging 2007; 24: 187–196.

12. Forster SE, Jones L, Saxton JM, et al. Recruiting older people to a randomised controlled dietary intervention trial--how hard can it be? BMC Med Res Methodol 2010; 10: 17.

13. Nelson K, Garcia RE, Brown J, et al. Do patient consent procedures affect participation rates in health services research? Med Care 2002; 40: 283–288.

14. Patterson S, Kramo K, Soteriou T, et al. The great divide: a qualitative investigation of factors influencing researcher access to potential randomised controlled trial participants in mental health settings. J Ment Health Abingdon Engl 2010; 19: 532–541.

15. Gurwitz JH, Guadagnoli E, Landrum MB, et al. The treating physician as active gatekeeper in the recruitment of research subjects. Med Care 2001; 39: 1339–1344.

16. Sugarman J, Regan K, Parker B, et al. Ethical ramifications of alternative means of recruiting research participants from cancer registries. Cancer 1999; 86: 647–651.
