Abstract.

Diffuse optical tomography (DOT) is a promising noninvasive imaging modality capable of providing functional characteristics of biological tissue by quantifying optical parameters. DOT image reconstruction is ill-posed and ill-conditioned because of the highly diffusive nature of light propagation in biological tissues and the limited number of boundary measurements. The most widely used regularization technique for DOT image reconstruction is Tikhonov regularization, which tends to yield oversmoothed, low-quality images containing severe artifacts and requires an accurately chosen regularization parameter. To overcome these limitations, we develop a noniterative reconstruction method in which optical properties are recovered with a back-propagation neural network (BPNN). The parameters of the BPNN are trained on a set of training data before DOT image reconstruction, and reconstruction is then achieved by a single evaluation of the trained network. To demonstrate the performance of the proposed algorithm, we compare it with the conventional Tikhonov regularization-based reconstruction method. The experimental results demonstrate that the image quality and quantitative accuracy of the reconstructed optical properties are significantly improved with the proposed algorithm.

Keywords: diffuse optical tomography; back-propagation neural network; image reconstruction; inverse problem

1. Introduction

Diffuse optical tomography (DOT) has shown great potential for breast imaging1–9 and functional brain imaging;10–12 it uses near-infrared light in the spectral range of 600 to 950 nm to quantify tissue optical (absorption and scattering) coefficients. There is a critical need for efficient image reconstruction algorithms for DOT. Recovering the internal distribution of optical properties is a severely ill-posed and underdetermined inverse problem, owing to light propagation in highly scattering biological tissues and the limited number of measurements,13,14 which makes image reconstruction challenging.

Although both linear and nonlinear reconstruction algorithms for DOT are available,14 considerable effort has gone into developing reconstruction algorithms that improve quantitative accuracy and image quality.14–22 To date, the ill-posedness of the inverse problem in DOT is typically alleviated with a regularization technique, which combines a data-fitting term with a regularizer (e.g., an $\ell_2$ or $\ell_1$ norm) to suppress the effect of measurement noise and modeling errors.23

When the regularizer is an $\ell_2$ norm, the reconstruction algorithm becomes the well-known Tikhonov regularization method, which constrains the $\ell_2$ norm of the optical properties23 and is optimal when the optical properties follow a Gaussian distribution.24 Tikhonov regularization is simple and easy to implement. However, the $\ell_2$ norm oversmooths reconstructed images and yields low-quality images because it penalizes large values.23 An alternative regularizer is the total variation (TV) norm, which is the ideal choice when the distribution of optical properties is known to be piecewise constant.24 Another possible regularizer is the $\ell_p$ norm ($0 < p \le 1$), which imposes a sparsity constraint on the optical properties. The quality of reconstructed images can be improved with sparsity regularization.23,25
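For reference, the regularized reconstructions described above can be summarized by one generic objective. This is a standard textbook form rather than an equation taken from the cited references; it reuses $\mathbf{x}$, $\mathbf{y}$, and $F$ from Eq. (3) and $\lambda$ for the regularization parameter, and it leaves the weighting of the data term and the discretization of the TV term unspecified.

```latex
\hat{\mathbf{x}} = \arg\min_{\mathbf{x}} \; \|\mathbf{y} - F(\mathbf{x})\|_2^2 + \lambda\, R(\mathbf{x}),
\qquad
R(\mathbf{x}) =
\begin{cases}
\|\mathbf{x}\|_2^2 & \text{Tikhonov } (\ell_2)\\
\|\mathbf{x}\|_p^p,\; 0 < p \le 1 & \text{sparsity } (\ell_p)\\
\|\nabla \mathbf{x}\|_1 & \text{total variation (TV)}
\end{cases}
```

Larger $\lambda$ trades data fidelity for a stronger prior, which is why the choice of $\lambda$ matters for all three regularizers.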

The abovementioned algorithms are generally iterative.14 They require heavy computation and large memory because the forward problem must be solved repeatedly and an updated distribution of optical properties must be found at each iteration.14 Moreover, iterative reconstruction algorithms have limited capability in terms of reconstruction accuracy and image quality,26 which are important for accurate diagnosis of breast cancer. In addition, how to choose their parameters accurately, particularly the regularization parameter, needs further consideration.27

Recently, artificial neural networks with various network architectures, including deep convolutional neural network,28,29 generative adversarial networks,30 and multilayer perceptron,31 have achieved significant improvements over existing iterative reconstruction methods in the quality of reconstructed images. It is likely that image recovery in DOT benefits from these important developments.

In this work, we investigated the feasibility and effectiveness of a back-propagation (BP) neural network (BPNN) for recovering the distribution of optical properties in DOT. BPNNs are widely used because they are simple, compute the gradients needed for gradient descent efficiently, and are straightforward to implement. The basic BPNN procedure consists of the forward propagation of input data and the backward propagation of the output error.32 More detailed introductions to BPNNs can be found in Refs. 33–39. In forward propagation, input data are transmitted from the input layer through a series of hidden layers to the output layer, which builds the relationship between input and output data. In backward propagation, the error between the calculated output and the ground-truth output is propagated from the output layer back through the hidden layers to adjust the connection weights and biases of the neurons. By repeatedly applying this procedure, the output error is reduced to within an expected range. We validate the proposed method with simulation experiments and compare BPNN with the popular Tikhonov regularization. Our results demonstrate that our method provides higher accuracy and superior image quality compared with Tikhonov regularization in recovering a single inclusion or two closely spaced inclusions.

The remainder of the paper is organized as follows. Section 2 describes the light propagation model, BPNN, and evaluation metrics. Experimental results and comparisons are presented in Sec. 3. Finally, we present a discussion of results with our conclusions and future work in Sec. 4.

2. Methods

2.1. Forward Model

The light propagation in biological tissues can be modeled by the steady diffusion equation,13,14 which can be described as follows:

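$$-\nabla\cdot\left[D(\mathbf{r})\nabla\Phi(\mathbf{r})\right] + \mu_a(\mathbf{r})\Phi(\mathbf{r}) = q_0(\mathbf{r}), \quad \mathbf{r}\in\Omega \tag{1}$$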

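where $\Omega$ is the imaged object, $\Phi(\mathbf{r})$ is the photon fluence rate at position $\mathbf{r}$, $D(\mathbf{r}) = 1/\{3[\mu_a(\mathbf{r}) + \mu_s'(\mathbf{r})]\}$ is the diffusion coefficient (mm), $\mu_a(\mathbf{r})$ is the absorption coefficient (mm⁻¹), $\mu_s'(\mathbf{r})$ is the reduced scattering coefficient (mm⁻¹), and $q_0(\mathbf{r})$ is the source term.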

Here, Eq. (1) is subject to a Robin-type boundary condition, which can be expressed as follows:13,14

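$$\Phi(\mathbf{r}) + 2A\,D(\mathbf{r})\,\hat{\mathbf{n}}\cdot\nabla\Phi(\mathbf{r}) = 0, \quad \mathbf{r}\in\partial\Omega \tag{2}$$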

where $\partial\Omega$ is the boundary surface of the imaged object $\Omega$, $A$ is the boundary mismatch parameter, and $\hat{\mathbf{n}}$ is the outward normal on $\partial\Omega$.

When the distributions of $\mu_a$ and $\mu_s'$ are known, the light measurements at the detectors can be calculated by solving Eqs. (1) and (2) with the finite-element method,40 which can be written as

$$\mathbf{y} = F(\mathbf{x}), \quad \mathbf{x}\in\mathbb{R}^{N},\ \mathbf{y}\in\mathbb{R}^{M} \tag{3}$$

where $\mathbf{x}$ and $\mathbf{y}$ represent the optical properties ($\mu_a$ and $\mu_s'$) and the measurements at the detectors, respectively; $N$ and $M$ are the number of finite-element nodes and the number of boundary measurements, respectively; and $F$ is the forward operator that relates the unknown distribution of optical properties to the boundary measurements.
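To make the forward operator $F$ of Eq. (3) concrete, the following is a toy 1-D analogue in Python with NumPy, not the 2-D Nirfast model used in this work. It assembles linear finite elements for Eq. (1) on an 80 mm slab, applies the Robin condition of Eq. (2) at both ends, and returns the fluence at the far end for a unit source placed near each end. The function name `forward_1d`, the mesh, and the source and detector placement are all hypothetical.

```python
import numpy as np


def forward_1d(mua, musp, length_mm=80.0, A=1.0):
    """Toy 1-D analogue of y = F(x) in Eq. (3); hypothetical helper, not Nirfast.

    mua, musp: nodal absorption / reduced scattering coefficients (mm^-1).
    Returns the fluence at the far boundary node for a unit source placed one
    node inside each end of the slab (two "measurements").
    """
    mua = np.asarray(mua, dtype=float)
    musp = np.asarray(musp, dtype=float)
    n = mua.size
    h = length_mm / (n - 1)                    # element length (mm)
    D = 1.0 / (3.0 * (mua + musp))             # diffusion coefficient (mm)

    K = np.zeros((n, n))
    for e in range(n - 1):                     # assemble linear elements
        idx = np.array([e, e + 1])
        De = 0.5 * (D[e] + D[e + 1])           # element-averaged coefficients
        mue = 0.5 * (mua[e] + mua[e + 1])
        stiff = De / h * np.array([[1.0, -1.0], [-1.0, 1.0]])
        mass = mue * h / 6.0 * np.array([[2.0, 1.0], [1.0, 2.0]])
        K[np.ix_(idx, idx)] += stiff + mass

    # Robin condition Phi + 2 A D dPhi/dn = 0 contributes 1/(2A) at each end node
    K[0, 0] += 1.0 / (2.0 * A)
    K[-1, -1] += 1.0 / (2.0 * A)

    readings = []
    for src, det in [(1, n - 1), (n - 2, 0)]:  # source near one end, detector at the other
        q = np.zeros(n)
        q[src] = 1.0                           # unit point source
        phi = np.linalg.solve(K, q)
        readings.append(phi[det])
    return np.array(readings)
```

Raising $\mu_a$ everywhere lowers both readings; this dependence of $\mathbf{y}$ on $\mathbf{x}$ is what a learned reconstruction has to invert.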

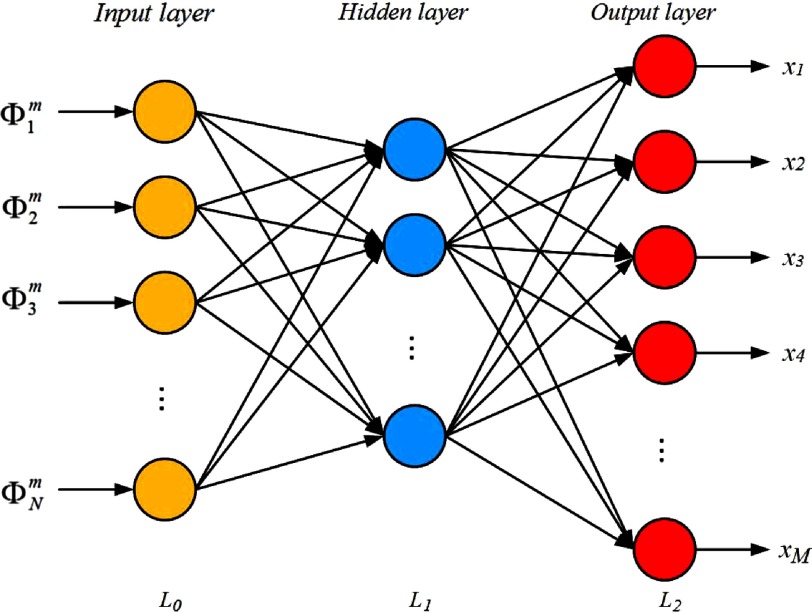

2.2. Back-Propagation Neural Network-Based Reconstruction

To improve on the performance of iterative reconstruction algorithms in DOT, we develop a reconstruction algorithm based on a BPNN. The BPNN consists of three types of layers: the input layer ($l = 1$), the fully connected hidden layer ($l = 2$), and the output layer ($l = 3$). The architecture of the three-layer BPNN used for DOT image reconstruction is shown in Fig. 1. The network learns a DOT reconstruction from the boundary measurements. In this work, the reduced scattering coefficient ($\mu_s'$) is assumed to be spatially constant and known, and only the absorption coefficient ($\mu_a$) is recovered. Therefore, for network training, the boundary measurements (i.e., amplitudes), generated by solving the forward model with the open-source software Nirfast,40 serve as the input vector, and the ground-truth distribution of the absorption coefficient serves as the expected output.

Fig. 1.

The architecture of the three-layer BPNN used in DOT image reconstruction.

During training, the error between the predicted output and the true output is propagated backward from the output layer to the hidden layer, and each weight and bias is adjusted against the direction of the error gradient with respect to that parameter.32 By repeatedly applying this procedure for each sample in the training set, the learning process converges.

The BPNN-based reconstruction algorithm can be described as follows:36

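- Step 1: Randomly initialize the weights $w_{ij}^{(l)}$ and the biases $b_j^{(l)}$ of the $l$th layer, and set the stop threshold $\varepsilon$ and the maximum number of iterations $K$.

- Step 2: Compute the output of the $j$th neuron in the $l$th layer ($l = 2, 3$) using Eq. (4):

  $$o_j^{(l)} = f\left(\sum_{i=1}^{n_{l-1}} w_{ij}^{(l)}\, o_i^{(l-1)} + b_j^{(l)}\right) \tag{4}$$

  where $f(\cdot)$ is the neuron activation function, $o^{(l-1)}$ is the input of the $l$th layer, and $n_{l-1}$ is the number of neurons in the $(l-1)$th layer. Here, $o^{(1)}$ is the input of the network, i.e., $o^{(1)} = \mathbf{y}$.

- Step 3: Calculate the error term of the output layer ($l = 3$), derived from the mean square error between the ground-truth output $t_j$ and the predicted output $o_j^{(3)}$, according to Eq. (5):

  $$\delta_j^{(3)} = \left(t_j - o_j^{(3)}\right) f'\!\left(\mathrm{net}_j^{(3)}\right) \tag{5}$$

  and back-propagate it to the hidden layer ($l = 2$) using Eq. (6):

  $$\delta_i^{(2)} = f'\!\left(\mathrm{net}_i^{(2)}\right) \sum_{j=1}^{n_3} w_{ij}^{(3)}\, \delta_j^{(3)} \tag{6}$$

  where $\mathrm{net}_j^{(l)}$ is the argument of $f$ in Eq. (4) and $f'(\cdot)$ is the derivative of the neuron activation function.

- Step 4: Adjust the connection weights and biases between layers at the $k$th iteration based on Eqs. (7) and (8):

  $$w_{ij}^{(l)}(k+1) = w_{ij}^{(l)}(k) + \eta\, \delta_j^{(l)}\, o_i^{(l-1)} \tag{7}$$

  $$b_j^{(l)}(k+1) = b_j^{(l)}(k) + \eta\, \delta_j^{(l)} \tag{8}$$

  where $\eta$ is the learning rate, which controls the step size of the gradient-descent weight updates.

- Step 5: Return to Step 2 as long as no stopping criterion is met, i.e., the mean squared error of the network output is still larger than the stop threshold $\varepsilon$, the iteration number $k$ is still smaller than $K$, and the loss-function threshold has not been reached; otherwise, terminate training and output the weights and biases.

- Step 6: Reconstruct the distribution of the absorption coefficient directly by evaluating the trained network.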
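Steps 1–6 can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the names `train_bpnn` and `predict` are hypothetical, both layers use the Tansig activation of Eq. (9) as in Eqs. (4)–(6), weights are updated sample by sample, and only the MSE threshold $\varepsilon$ and the iteration limit $K$ (`max_iter`) are used as stopping criteria. The learning rate and layer sizes suit a toy problem and are not the values used in Sec. 3.

```python
import numpy as np


def tansig(z):
    # Eq. (9); algebraically identical to np.tanh(z)
    return 2.0 / (1.0 + np.exp(-2.0 * z)) - 1.0


def predict(Y, params):
    """Step 6: a reconstruction is one forward pass (Eq. (4) for l = 2, 3)."""
    W2, b2, W3, b3 = params
    return tansig(tansig(Y @ W2 + b2) @ W3 + b3)


def train_bpnn(Y, T, n_hidden, eta=0.05, eps=1e-4, max_iter=1000, seed=0):
    """Sample-by-sample back-propagation for a 3-layer network (Steps 1-5).

    Y: (n_samples, n_in) inputs, e.g. boundary measurements.
    T: (n_samples, n_out) targets, e.g. nodal absorption coefficients.
    Returns (params, final training MSE, number of epochs run).
    """
    rng = np.random.default_rng(seed)
    n_in, n_out = Y.shape[1], T.shape[1]
    # Step 1: random initial weights; W[i, j] links neuron i (layer l-1) to j (layer l)
    W2 = rng.normal(0.0, 1.0 / np.sqrt(n_in), (n_in, n_hidden))
    b2 = np.zeros(n_hidden)
    W3 = rng.normal(0.0, 1.0 / np.sqrt(n_hidden), (n_hidden, n_out))
    b3 = np.zeros(n_out)
    params = (W2, b2, W3, b3)  # the arrays are updated in place below

    mse = np.mean((T - predict(Y, params)) ** 2)
    epochs = 0
    # Step 5: iterate while MSE > eps and fewer than max_iter epochs have run
    while mse > eps and epochs < max_iter:
        for y, t in zip(Y, T):
            # Step 2: forward propagation, Eq. (4)
            o2 = tansig(y @ W2 + b2)
            o3 = tansig(o2 @ W3 + b3)
            # Step 3: error terms, Eqs. (5)-(6), using f'(net) = 1 - f(net)^2
            d3 = (t - o3) * (1.0 - o3 ** 2)
            d2 = (1.0 - o2 ** 2) * (W3 @ d3)
            # Step 4: gradient-descent updates, Eqs. (7)-(8)
            W3 += eta * np.outer(o2, d3)
            b3 += eta * d3
            W2 += eta * np.outer(y, d2)
            b2 += eta * d2
        epochs += 1
        mse = np.mean((T - predict(Y, params)) ** 2)
    return params, mse, epochs
```

The hidden-layer error term is computed before `W3` is updated, so both layers use the same forward pass, as Eqs. (5)–(8) assume.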

In our algorithm, the Tansig function is adopted as the activation function. Its formula is given in Eq. (9):

$$f(z) = \frac{2}{1 + e^{-2z}} - 1 \tag{9}$$

and its derivative is shown in Eq. (10):

$$f'(z) = 1 - f(z)^2 \tag{10}$$
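Eqs. (9) and (10) translate directly into code. The sketch below (Python with NumPy; the function names are hypothetical) also shows that Tansig is algebraically identical to the hyperbolic tangent and that its derivative can be computed from the activation value itself, which keeps the error terms in Step 3 cheap to evaluate.

```python
import numpy as np


def tansig(z):
    """Eq. (9): f(z) = 2 / (1 + exp(-2z)) - 1, equal to tanh(z)."""
    return 2.0 / (1.0 + np.exp(-2.0 * z)) - 1.0


def tansig_deriv(z):
    """Eq. (10): f'(z) = 1 - f(z)^2, reusing the activation value."""
    f = tansig(z)
    return 1.0 - f * f
```

In practice `np.tanh` avoids the overflow warning that `np.exp(-2z)` raises for large negative inputs; the explicit form is kept here only to mirror Eq. (9).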

2.3. Evaluation Metrics

The performance of the proposed algorithm is assessed with four evaluation metrics: the absolute bias error (ABE), mean square error (MSE),41,42 peak signal-to-noise ratio (PSNR),43 and structural similarity index (SSIM).44 They are defined as follows:

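$$\mathrm{ABE} = \frac{1}{N}\sum_{i=1}^{N}\left|x_i - \hat{x}_i\right| \tag{11}$$

$$\mathrm{MSE} = \frac{1}{N}\sum_{i=1}^{N}\left(x_i - \hat{x}_i\right)^2 \tag{12}$$

$$\mathrm{PSNR} = 10\log_{10}\frac{\left(\max_i \hat{x}_i\right)^2}{\mathrm{MSE}} \tag{13}$$

$$\mathrm{SSIM} = \frac{\left(2\mu_x\mu_{\hat{x}} + C_1\right)\left(2\sigma_{x\hat{x}} + C_2\right)}{\left(\mu_x^2 + \mu_{\hat{x}}^2 + C_1\right)\left(\sigma_x^2 + \sigma_{\hat{x}}^2 + C_2\right)} \tag{14}$$

$$\sigma_{x\hat{x}} = \frac{1}{N-1}\sum_{i=1}^{N}\left(x_i - \mu_x\right)\left(\hat{x}_i - \mu_{\hat{x}}\right) \tag{15}$$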

where $x_i$ and $\hat{x}_i$ are the true and reconstructed absorption coefficients at finite-element node $i$, respectively; $\mu_x$ ($\mu_{\hat{x}}$) and $\sigma_x$ ($\sigma_{\hat{x}}$) are the mean and standard deviation of the ground-truth (reconstructed) absorption coefficients, respectively; $\sigma_{x\hat{x}}$ is the covariance between the ground-truth and reconstructed absorption coefficients; $C_1$ and $C_2$ are stabilization constants used to prevent division by a small denominator;44 and $N$ is the number of finite-element nodes defined above. The ABE and MSE are used to compare the accuracy of reconstructed images. The PSNR (unit: dB) is used to compare the restoration of the images without depending strongly on the image intensity scaling.15 The SSIM measures the similarity between the true and reconstructed images; an SSIM value of 1.0 corresponds to identical images. Lower ABE and MSE and higher PSNR and SSIM indicate better performance.
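A minimal NumPy sketch of Eqs. (11)–(15) follows. Several details are assumptions: the name `dot_metrics` is hypothetical, the constants `c1` and `c2` are arbitrary small values, the PSNR peak is taken as the maximum reconstructed value, and SSIM is computed once over all nodes (a single global window) rather than with the sliding local windows of the usual image-domain SSIM.

```python
import numpy as np


def dot_metrics(x_true, x_rec, c1=1e-8, c2=1e-8):
    """ABE, MSE, PSNR, and SSIM for nodal absorption coefficients (length N).

    c1, c2: hypothetical small stabilization constants.
    PSNR peak: maximum reconstructed value (an assumption).
    """
    x = np.asarray(x_true, dtype=float)
    xh = np.asarray(x_rec, dtype=float)
    err = x - xh
    abe = np.mean(np.abs(err))                                   # Eq. (11)
    mse = np.mean(err ** 2)                                      # Eq. (12)
    psnr = 10.0 * np.log10(xh.max() ** 2 / mse) if mse > 0 else np.inf  # Eq. (13)
    mu_x, mu_h = x.mean(), xh.mean()
    var_x, var_h = x.var(ddof=1), xh.var(ddof=1)
    cov = np.sum((x - mu_x) * (xh - mu_h)) / (x.size - 1)       # Eq. (15)
    ssim = ((2 * mu_x * mu_h + c1) * (2 * cov + c2)) / (
        (mu_x ** 2 + mu_h ** 2 + c1) * (var_x + var_h + c2))     # Eq. (14)
    return {"abe": abe, "mse": mse, "psnr": psnr, "ssim": ssim}
```

For identical inputs the sketch gives ABE = MSE = 0, an infinite PSNR, and SSIM = 1, matching the interpretation given above.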

3. Results

3.1. Data Preparation

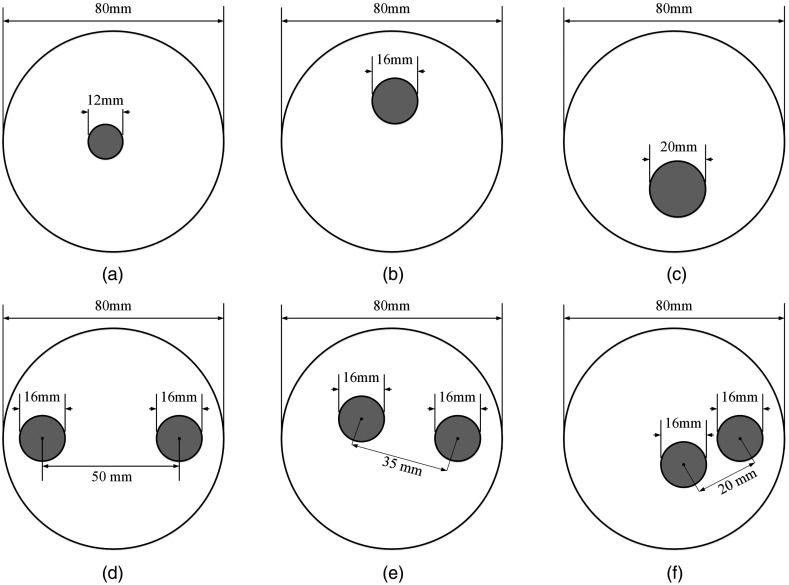

A 2-D circular phantom with a diameter of 80 mm was used to generate the dataset. It was discretized into 2001 finite-element nodes and 3867 triangular elements. The absorption coefficient ($\mu_a$) and the reduced scattering coefficient ($\mu_s'$) of the phantom were and , respectively. A total of 16 sources and 16 detectors were uniformly arranged along the circumference of the phantom. For each source illumination, data were collected at the remaining 15 detector locations, leading to a total of 240 ($16 \times 15$) measurements. To generate the simulation datasets, circular inclusions of varied sizes, locations, and absorption coefficients were embedded in the phantom. First, a single inclusion with a diameter of 6, 8, or 10 mm was randomly placed at different locations in the phantom, with its absorption coefficient varied from 0.015 to , leading to 17,075 geometries. Next, two inclusions with the same radius of 8 mm were placed at different edge-to-edge distances in the phantom, and each inclusion was assigned an absorption coefficient of 0.015, 0.02, 0.04, 0.06, or , leading to 5015 geometries. In all cases, the reduced scattering coefficient of the inclusions was assumed to be , the same as that of the background. Example geometries are shown in Fig. 2. The simulation data were generated with Nirfast,40 and 2% random Gaussian noise was added to the measurements. This yielded a total of 22,090 samples, each a pair of input data and desired output data, which were separated into 20,000 training, 1045 validation, and 1045 testing samples.
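The bookkeeping of this subsection can be checked with a short script. In the sketch below, sources and detectors are assumed to be co-located so that each source is read by the 15 other optodes, the 2% Gaussian noise is assumed to be multiplicative, and `add_noise` and `split_indices` are hypothetical helpers; the random split stands in for however the 22,090 samples were actually partitioned.

```python
import numpy as np

N_SRC = N_DET = 16
# Each source is read at the 15 remaining detector locations (co-located optodes assumed)
pairs = [(s, d) for s in range(N_SRC) for d in range(N_DET) if d != s]


def add_noise(y, level=0.02, rng=None):
    """Hypothetical noise model: multiplicative Gaussian, y * (1 + level * N(0, 1))."""
    rng = np.random.default_rng() if rng is None else rng
    return y * (1.0 + level * rng.standard_normal(np.shape(y)))


def split_indices(n_total, n_val, n_test, seed=0):
    """Random, disjoint train/validation/test index sets."""
    perm = np.random.default_rng(seed).permutation(n_total)
    n_train = n_total - n_val - n_test
    return perm[:n_train], perm[n_train:n_train + n_val], perm[n_train + n_val:]


n_total = 17075 + 5015          # single-inclusion + two-inclusion geometries
train, val, test = split_indices(n_total, 1045, 1045)
print(len(pairs), n_total, len(train), len(val), len(test))  # 240 22090 20000 1045 1045
```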

Fig. 2.

Example geometries used to generate the datasets. (a)–(c) Geometries with a single inclusion at different locations and with different sizes. (d)–(f) Geometries with two inclusions at different edge-to-edge distances and locations. The absorption coefficients of the inclusions in each geometry vary from 0.015 to .

In the following experiments, the neural network has three layers: an input layer (240 neurons), a fully connected hidden layer, and an output layer (2001 neurons). To determine the number of neurons in the hidden layer, an empirical formula that has been introduced in Ref. 45 was used. The formula is given by

| (16) |

where $n_h$ is the number of hidden-layer units, and $n_i$ and $n_o$ are the numbers of input and output neurons, respectively. In our experiments, $n_i$ and $n_o$ are 240 and 2001, respectively. According to Eq. (16), the calculated number of hidden neurons is 694.3, so the hidden layer was set to 695 neurons. The learning rate $\eta$, the stopping threshold $\varepsilon$, the maximum number of iterations $K$, and the threshold were set to 10, , 50,000, and , respectively. The BPNN was trained for 16,000 epochs to minimize the MSE between the true and the recovered images, which took about 26 h on a personal computer with an Intel Core i7 CPU at 2.8 GHz and 8 GB of RAM.
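For a sense of scale, the 240-695-2001 network above has the following number of trainable weights and biases. This is a simple count of a fully connected network; the helper name `n_trainable` is hypothetical.

```python
def n_trainable(layer_sizes):
    """Weights plus biases of a fully connected feed-forward network."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]))


sizes = [240, 695, 2001]       # input, hidden, and output neurons from the text
print(n_trainable(sizes))      # 1560191
```

Almost 90% of these parameters sit between the hidden and output layers, because the output layer has one neuron per finite-element node.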

For comparison, we also performed Tikhonov regularization-based DOT reconstruction with the Nirfast software.40 This reconstruction solves a least squares (LS) minimization problem with the Levenberg–Marquardt procedure.40 Its objective function typically consists of a weighted LS data-fidelity term and an $\ell_2$-norm regularization term, balanced by a regularization parameter $\lambda$. At the $k$th iteration, $\lambda$ was set as a function of $\mathbf{J}_k$, the Jacobian matrix at the $k$th iteration. The algorithm stops when the change in the difference between the forward data and the reconstructed data between two successive iterations is less than 2% or when the maximum number of iterations (50) is reached. The initial regularization parameter was set to 10. More details on Tikhonov regularization-based DOT reconstruction can be found in Ref. 40.
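A generic Tikhonov-regularized Levenberg–Marquardt loop with the stopping rule described above can be sketched as follows. This is not Nirfast's code: the name `tikhonov_lm`, the damping $\lambda \max(\mathrm{diag}(\mathbf{J}^T\mathbf{J}))$, and the halving of $\lambda$ after each iteration are hypothetical choices (see Ref. 40 for the schedule actually used), and the data-fidelity term is unweighted. As in Levenberg–Marquardt, the penalty acts on the update `dx`.

```python
import numpy as np


def tikhonov_lm(F, jac, y, x0, lam0=10.0, max_iter=50, tol=0.02):
    """Tikhonov-regularized Gauss-Newton / Levenberg-Marquardt iterations.

    Hypothetical sketch: damping = lam * max(diag(J^T J)), lam halved each
    iteration. Stops when the data misfit changes by less than `tol` (2%)
    between successive iterations or after `max_iter` (50) iterations.
    """
    x = np.asarray(x0, dtype=float).copy()
    misfit = np.sum((y - F(x)) ** 2)
    lam, k = lam0, 0
    for k in range(1, max_iter + 1):
        J = jac(x)
        H = J.T @ J
        damping = lam * np.max(np.diag(H))
        # Regularized normal equations: (J^T J + damping I) dx = J^T (y - F(x))
        dx = np.linalg.solve(H + damping * np.eye(x.size), J.T @ (y - F(x)))
        x = x + dx
        new_misfit = np.sum((y - F(x)) ** 2)
        rel_change = abs(misfit - new_misfit) / max(misfit, np.finfo(float).tiny)
        misfit = new_misfit
        if rel_change < tol:
            break
        lam *= 0.5
    return x, k
```

Each iteration needs a fresh Jacobian and a linear solve, which is the per-iteration cost that the noniterative BPNN avoids at reconstruction time.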

3.2. Experimental Results

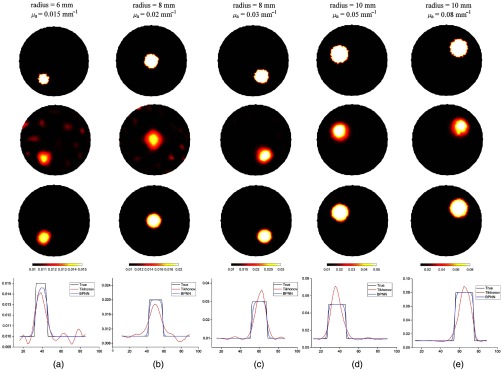

In this subsection, we present numerical simulations to illustrate the results recovered with BPNN and compare them with those of the widely used Tikhonov regularization-based reconstruction method. Figure 3 shows examples of the absorption coefficient recovered with the two algorithms in the single-inclusion case, in which the size, location, and absorption coefficient of the inclusion are varied. The ground-truth images are shown in the top row of Fig. 3, and the images reconstructed with Tikhonov regularization and BPNN are shown in the second and third rows, respectively. The corresponding cross-section profiles through the centers of the inclusions and along the axis are plotted in the last row. The last row shows that the maximum values of $\mu_a$ recovered with Tikhonov regularization are much higher than their ground truths, whereas the values recovered with BPNN match the true values. The quantitative comparisons for the five cases in Fig. 3 are listed in Table 1. Compared with Tikhonov regularization, BPNN substantially reduces ABE and MSE and greatly improves PSNR and SSIM. For example, in Fig. 3(a), ABE and MSE are reduced by 80% and 61%, respectively, indicating the higher reconstruction accuracy of BPNN. BPNN also provides high-quality images with fewer background artifacts than Tikhonov regularization; correspondingly, it achieves a PSNR gain of about 4.4 dB over Tikhonov regularization together with a higher SSIM. The SSIM is improved by 424%, indicating that the recovered image is nearly identical to the ground-truth image. Similar results are observed for the other images in Fig. 3. These results show that BPNN outperforms Tikhonov regularization in both accuracy and image quality.
In addition, the image quality obtained by Tikhonov regularization improves as the size and absorption coefficient of the inclusions increase. By contrast, BPNN consistently yields robust reconstructions.

Fig. 3.

(a)–(e) Reconstructed images of a single inclusion with different sizes and absorption coefficients. The first row shows the true images; the second and third rows show the images recovered with Tikhonov regularization and BPNN, respectively. The last row shows the corresponding profiles through the centers of the inclusions along the axis. The size and the true absorption coefficient of each case are shown at the top of the figure. The reconstructed images in each column are shown at the same scale.

Table 1.

Quantitative comparisons for the reconstructions in Fig. 3.

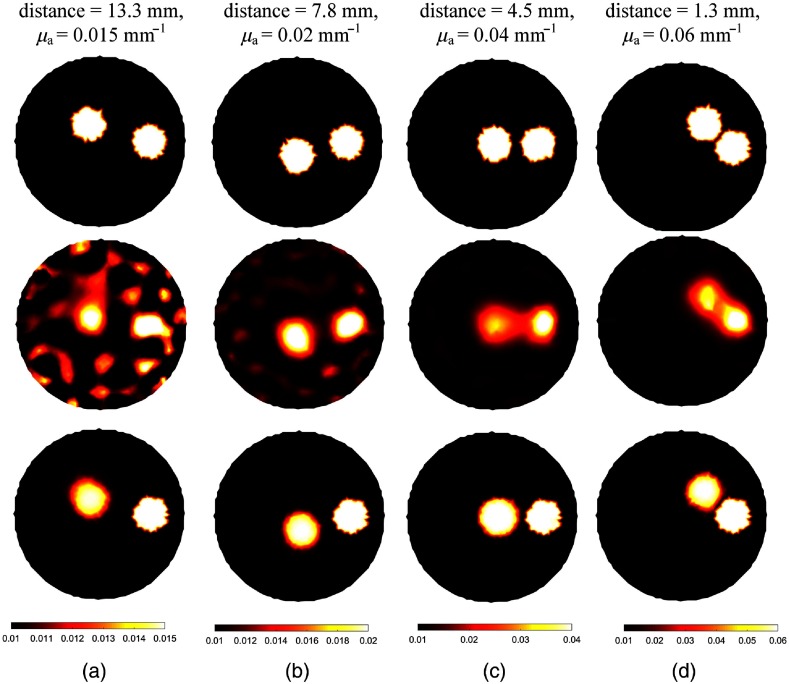

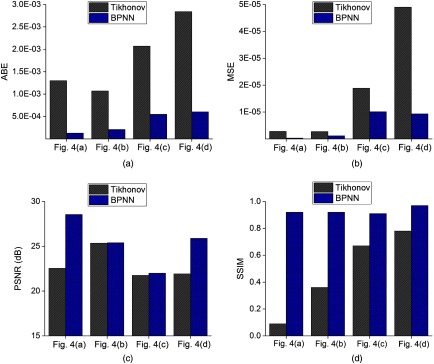

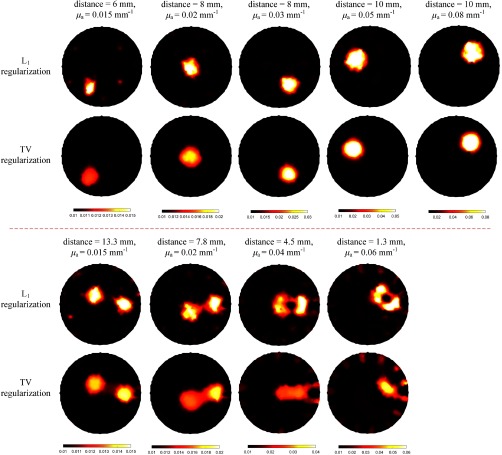

Figure 4 shows the capability of BPNN to recover images with two inclusions, and the corresponding quantitative results are compiled in Table 2. With both algorithms, the two inclusions are visible and reconstructed with their centers at the correct positions. However, BPNN clearly resolves the shapes and edges of the inclusions even when the edge-to-edge distance is 1.3 mm, whereas with Tikhonov regularization the absorption coefficients of the inclusions far from the boundary are underestimated and the images are somewhat distorted. A bar plot of each evaluation metric for the test images is shown in Fig. 5. Because the higher accuracy of BPNN is clearly visible in Fig. 5 and Table 2, we do not compare cross-sections here. As in the single-inclusion simulations, BPNN reduces artifacts and offers more than 55.0%, 46.6%, and 16.7% improvement in ABE, MSE, and SSIM, respectively, compared with the Tikhonov regularization-based reconstruction algorithm. In Figs. 4(b) and 4(c), the PSNRs of BPNN are only slightly higher than those of Tikhonov regularization, because Tikhonov regularization overestimates the absorption coefficients, with peak values of 0.03 and , respectively. By contrast, the peak values obtained by BPNN are 0.02 and , matching the true values. Over the cases in Fig. 4, BPNN achieves an average PSNR gain of about 2.56 dB over Tikhonov regularization. Overall, BPNN performs better.

Fig. 4.

(a)–(d) Examples of reconstructed images of two inclusions with increasing absorption coefficients and decreasing edge-to-edge distances. The first row shows the ground-truth images; the second and last rows show the images reconstructed with Tikhonov regularization and BPNN, respectively. The edge-to-edge distance and the true absorption coefficients for each case are shown at the top of the figure. The reconstructed images in each column are shown at the same scale.

Table 2.

The quantitative comparisons in the case of two inclusions presented in Fig. 4.

Fig. 5.

Comparisons of evaluation metrics for reconstructed images of two inclusions using Tikhonov regularization and BPNN. (a) ABE; (b) MSE; (c) PSNR; and (d) SSIM.

Here, we report the results for 1045 samples randomly selected from the dataset to further evaluate the performance of our algorithm. The mean and standard deviation (SD) of ABE, MSE, PSNR, and SSIM are used to evaluate the performance of the two algorithms. Boxplots of the statistical results are presented in Fig. 6, and the corresponding quantitative comparisons are given in Table 3.

Fig. 6.

Boxplots of the statistical results ($n = 1045$). (a) ABE; (b) MSE; (c) PSNR; and (d) SSIM.

Table 3.

Mean ± SD of ABE, MSE, PSNR, and SSIM for $n = 1045$.

| | ABE | MSE | PSNR (dB) | SSIM |
|---|---|---|---|---|
| Tikhonov | | | | |
| BPNN | | | | |
| $p$-value | * | * | * | * |

*Significant values are marked.

For BPNN, the ABE had a mean value (SD) of (); for Tikhonov regularization, the ABE had a mean value (SD) of (). This corresponds to a 77.3% improvement in accuracy over Tikhonov regularization. The MSE of BPNN has an average of , while that of Tikhonov regularization is , an improvement of 74.0% in the average MSE. Furthermore, BPNN improves the average PSNR from 24.34 dB (Tikhonov regularization) to 27.79 dB. The average SSIM obtained by BPNN (0.91) is substantially higher than that produced by Tikhonov regularization (0.46). BPNN outperforms Tikhonov regularization on every one of these metrics.
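The relative improvements quoted in Sec. 4 follow from the means reported here. For a higher-is-better metric the improvement is (BPNN − Tikhonov)/Tikhonov, and for a lower-is-better metric it is (Tikhonov − BPNN)/Tikhonov. The sketch below (the helper name is hypothetical) reproduces the PSNR and SSIM figures; the ABE and MSE percentages follow in the same way from their means.

```python
def relative_improvement(tikhonov, bpnn, higher_is_better):
    """Percentage improvement of BPNN over Tikhonov regularization."""
    if higher_is_better:                           # PSNR, SSIM
        return 100.0 * (bpnn - tikhonov) / tikhonov
    return 100.0 * (tikhonov - bpnn) / tikhonov    # ABE, MSE


print(round(relative_improvement(24.34, 27.79, True), 1))  # 14.2
print(round(relative_improvement(0.46, 0.91, True), 1))    # 97.8
```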

A two-tailed Student's $t$-test is used to determine whether the differences in the evaluation metrics between Tikhonov regularization and BPNN are statistically significant, with significance defined at the 95% confidence level. The corresponding $p$-values are listed in Table 3. Statistically significant differences ($p < 0.05$) were found in all four evaluation metrics for BPNN versus Tikhonov regularization, which further supports that BPNN performs better than Tikhonov regularization.

4. Discussion and Conclusion

Iterative reconstruction algorithms with regularization have been the dominant approach to the DOT inverse problem over the past few decades, but it remains difficult for them to provide high-quality images. In this study, we explored using a BPNN to recover optical properties in order to improve the reconstruction accuracy and image quality of DOT. This was motivated by the fact that popular Tikhonov regularization-based reconstruction algorithms tend to produce oversmoothed images, which leads to poor reconstruction accuracy and image quality. The performance of the proposed algorithm was demonstrated with numerical simulation experiments. Our results indicate that the proposed algorithm achieves significant improvements in accuracy and image quality compared with the Tikhonov regularization-based algorithm. Quantitative analysis showed that, on average, our method outperforms Tikhonov regularization by 77.3%, 74.0%, 14.2%, and 97.8% in terms of ABE, MSE, PSNR, and SSIM, respectively.

Furthermore, we compared the results of the proposed method with those of the $\ell_1$- and TV-regularized reconstruction algorithms. Examples of reconstructed images are shown in Fig. 7. The $\ell_1$-regularized problem was solved with the GPSR algorithm,46 and the TV-regularized problem with the Split Bregman algorithm.47 The regularization parameter was the same for both algorithms and was set to 0.01, a value tuned manually for the best performance. In the single-inclusion case, the $\ell_1$- and TV-based reconstruction algorithms produce images with fewer artifacts than the Tikhonov regularization-based algorithm. In the two-inclusion case, decreasing the edge-to-edge distance between the inclusions degrades the image quality of the $\ell_1$- and TV-based algorithms; when the distance decreases to 4.5 mm, the two inclusions can no longer be distinguished with the TV-based algorithm. Compared with these regularization-based reconstruction algorithms ($\ell_2$, $\ell_1$, and TV), our algorithm performs best in reconstructing DOT images.

Fig. 7.

Reconstructed results of the $\ell_1$ and TV regularization algorithms, along with the corresponding reconstructions in Figs. 3 and 4. Top images: reconstructed images with a single inclusion. Bottom images: reconstructed images with two inclusions.

It is possible to obtain high-quality DOT images by training a neural network, even when iterative reconstruction algorithms underperform. Compared to iterative reconstruction algorithms, BPNN-based reconstruction algorithm is capable of: (1) improving the reconstruction accuracy with relatively stable performance; (2) enhancing the image quality with fewer image artifacts in the background; and (3) improving the spatial resolution.

Computational speed remains an active research area in DOT. The widely used iterative reconstruction algorithms in DOT require solving the forward model and calculating the Jacobian matrix at each iteration,40 so they are relatively slow; for example, each Tikhonov regularization-based reconstruction in this study takes about 1 to 2 min. An algorithm that can reconstruct the distribution of optical properties quickly is therefore preferable. Although training the neural network for the proposed algorithm takes considerably longer, the training is performed offline. Once training is finished, each reconstruction takes a few seconds, which is practical for in vivo data.

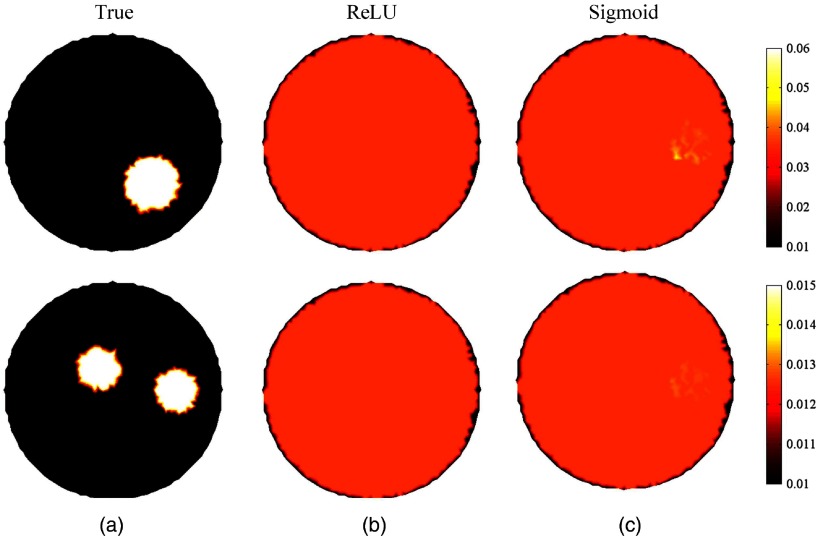

The effects of different activation functions, including ReLU, sigmoid, and Tansig, were also examined; representative results are shown in Fig. 8. With either ReLU or sigmoid as the activation function, the distribution of absorption coefficients cannot be accurately reconstructed: the mean SSIM values were 0.02 and 0.03, respectively, and the reconstructed images differ markedly from the true images. By contrast, Tansig yields better images, with a mean SSIM of 0.91. However, it remains unclear why the Tansig activation function works well for DOT reconstruction.

Fig. 8.

The reconstructed images using different activation functions. (a) True images; (b) and (c) reconstructed images with activation functions of ReLU and sigmoid, respectively.

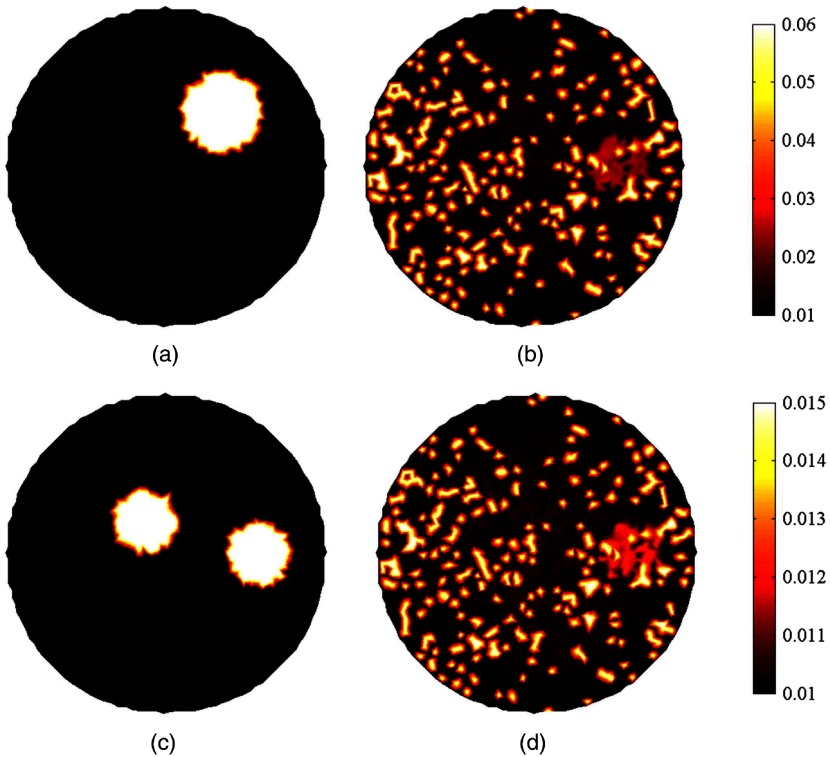

How to determine the number of hidden-layer neurons still needs to be investigated. Besides Eq. (16), a typical rule for determining the number of hidden neurons is $n_h = \log_2 n_i$,48 where $n_i$ is the number of input neurons. In our experiments, $n_i$ is 240, so $n_h$ is about 8. We trained our network with eight hidden neurons and used it for DOT image reconstruction; examples of reconstructed images are shown in Fig. 9. Figure 9 shows that the distribution of the absorption coefficient cannot be accurately reconstructed, because too few neurons in the hidden layer result in underfitting.
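The arithmetic behind the eight-neuron choice is shown below; it reproduces only the $\log_2$ rule attributed to Ref. 48, not Eq. (16).

```python
import math

n_i = 240                          # input neurons (boundary measurements)
n_h = math.log2(n_i)               # rule n_h = log2(n_i)
print(round(n_h, 2), round(n_h))   # 7.91 8
```

Eight hidden neurons is almost two orders of magnitude fewer than the 695 used in the main experiments, which is consistent with the underfitting seen in Fig. 9.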

Fig. 9.

The examples of reconstructed images using the neural network with eight hidden neurons. The first and second rows are the results of single inclusion and two inclusions, respectively. (a) and (c) The true images; (b) and (d) the reconstructed images.

The effects of the learning rate on the performance of BPNN were also analyzed. The learning rate was set to 0.01, 0.1, 1, 10, or 50. The optimal learning rate was the one with the lowest validation loss. In the experiments, the activation function was fixed as Tansig. When the learning rate was set to 50, the validation loss fluctuated over epochs. Therefore, the learning rate of 50 was discarded. The validation losses were 0.15, 0.09, 0.04, and 0.02, corresponding to the learning rates, 0.01, 0.1, 1, and 10, respectively. When learning rate was 10, the lowest validation loss was obtained. We also note that a higher learning rate effectively speeds up the convergence for the training procedure. Therefore, the learning rate was set to 10 in our experiments.

Several recent publications have applied various deep learning architectures to inverse problems.49–51 For example, Sun et al. recently showed that a convolutional neural network can perform image reconstruction under multiple scattering in diffraction tomography;49 our focus, however, is on diffuse optical tomography. An artificial neural network-based approach was also recently developed to estimate the inclusion location, which is then used as a priori knowledge in DOT reconstruction;50 that approach therefore does not reconstruct DOT images directly with a neural network. Deep learning has also recently been applied to reconstruct DOT images, and its superiority was shown by comparison with an analytic technique.51 The average SSIM reported in that work is 0.46, which is relatively low, whereas our algorithm obtains an average SSIM of 0.91. A future study will compare the performance of the two algorithms on the same datasets.

Although the proposed BPNN method has achieved promising results, our work should be considered a preliminary attempt at applying neural networks to DOT, and this application remains challenging. The performance of BPNN depends on the training data, yet it would be difficult to acquire enough real data for training in patient studies. One possible strategy is to create training data pairs from breast geometries with known optical properties and to reserve real data for evaluation. Breast geometries can be obtained from breast MRI images. Because breast geometries differ in size and shape, the finite-element meshes generated from them differ, which would lead to different BPNN architectures. A pixel basis offers a solution to this problem:40 each finite-element mesh is mapped onto the same pixel basis for network training, so the trained BPNN can serve as a universal network. Certain challenges remain for further study, including the effect of heterogeneity in the background region and performance evaluation with clinical patient data.
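One way to picture the mesh-to-pixel mapping is the nearest-node sketch below. It is a hypothetical stand-in, not Nirfast's pixel basis, which uses its own interpolation: the function `nodes_to_pixels` gives every pixel center the value of the closest finite-element node, so meshes with different node counts all produce arrays of the same shape. The grid size and the 40 mm half-width (matching the 80 mm phantom) are assumptions, and for a circular geometry the pixels outside the boundary would also need to be masked.

```python
import numpy as np


def nodes_to_pixels(node_xy, node_vals, grid_n=32, half_width_mm=40.0):
    """Map nodal values from any FE mesh onto a fixed grid_n x grid_n pixel grid.

    Hypothetical nearest-node mapping: node_xy is (n_nodes, 2) in mm and
    node_vals is (n_nodes,). Columns of the result run along x, rows along y.
    """
    g = np.linspace(-half_width_mm, half_width_mm, grid_n)
    gx, gy = np.meshgrid(g, g)
    pix = np.column_stack([gx.ravel(), gy.ravel()])          # (grid_n**2, 2)
    # Squared distances via |p|^2 - 2 p.q + |q|^2 to avoid a 3-D temporary array
    d2 = ((pix ** 2).sum(axis=1)[:, None]
          - 2.0 * pix @ node_xy.T
          + (node_xy ** 2).sum(axis=1)[None, :])
    nearest = np.argmin(d2, axis=1)                           # closest node per pixel
    return np.asarray(node_vals)[nearest].reshape(grid_n, grid_n)
```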

Acknowledgments

This work has been sponsored by the National Science Foundation of China under Grant No. 81871394, the Beijing Municipal Education Committee Science Foundation under Grant No. KM201810005030, and Beijing Laboratory of Advanced Information Networks under Grant No. 040000546618017.


Disclosures

The authors have no relevant financial interests in the manuscript.

References

- 1. Feng J., et al., “Addition of T2-guided optical tomography improves noncontrast breast magnetic resonance imaging diagnosis,” Breast Cancer Res. 19, 117 (2017). 10.1186/s13058-017-0902-x

- 2. Zhu Q., et al., “Assessment of functional differences in malignant and benign breast lesions and improvement of diagnostic accuracy by using US-guided diffuse optical tomography in conjunction with conventional US,” Radiology 280(2), 387–397 (2016). 10.1148/radiol.2016151097

- 3. Xu C., et al., “Ultrasound-guided diffuse optical tomography for predicting and monitoring neoadjuvant chemotherapy of breast cancers: recent progress,” Ultrason. Imaging 38(1), 5–18 (2016). 10.1177/0161734615580280

- 4. Ntziachristos V., et al., “Concurrent MRI and diffuse optical tomography of breast after indocyanine green enhancement,” Proc. Natl. Acad. Sci. U. S. A. 97(6), 2767–2772 (2000). 10.1073/pnas.040570597

- 5. Brooksby B., et al., “Imaging breast adipose and fibroglandular tissue molecular signatures by using hybrid MRI-guided near-infrared spectral tomography,” Proc. Natl. Acad. Sci. U. S. A. 103(23), 8828–8833 (2006). 10.1073/pnas.0509636103

- 6. Mastanduno M. A., et al., “Sensitivity of MRI-guided near-infrared spectroscopy clinical breast exam data and its impact on diagnostic performance,” Biomed. Opt. Express 5, 3103–3115 (2014). 10.1364/BOE.5.003103

- 7. Jiang S., et al., “Predicting breast tumor response to neoadjuvant chemotherapy with diffuse optical spectroscopic tomography prior to treatment,” Clin. Cancer Res. 20(23), 6006–6015 (2014). 10.1158/1078-0432.CCR-14-1415

- 8. Mastanduno M., et al., “MR-guided near-infrared spectral tomography increases diagnostic performance of breast MRI,” Clin. Cancer Res. 21(17), 3906–3912 (2015). 10.1158/1078-0432.CCR-14-2546

- 9. Ntziachristos V., et al., “MRI-guided diffuse optical spectroscopy of malignant and benign breast lesions,” Neoplasia 4, 347–354 (2002). 10.1038/sj.neo.7900244

- 10. Chen C., et al., “Diffuse optical tomography enhanced by clustered sparsity for functional brain imaging,” IEEE Trans. Med. Imaging 33(12), 2323–2331 (2014). 10.1109/TMI.2014.2338214

- 11. Franceschini M. A., Boas D. A., “Noninvasive measurement of neuronal activity with near-infrared optical imaging,” Neuroimage 21(1), 372–386 (2004). 10.1016/j.neuroimage.2003.09.040

- 12. Lee C. W., Cooper R. J., Austin T., “Diffuse optical tomography to investigate the newborn brain,” Pediatr. Res. 82, 376–386 (2017). 10.1038/pr.2017.107

- 13. Arridge S. R., “Optical tomography in medical imaging,” Inverse Probl. 15(2), R41–R93 (1999). 10.1088/0266-5611/15/2/022

- 14. Arridge S. R., Schotland J. C., “Optical tomography: forward and inverse problems,” Inverse Probl. 25(12), 123010 (2009). 10.1088/0266-5611/25/12/123010

- 15. Feng J., et al., “Impact of weighting function on reconstructed breast images using direct regularization imaging method for near-infrared spectral tomography,” Biomed. Opt. Express 9(7), 3266–3283 (2018). 10.1364/BOE.9.003266

- 16. Baikejiang R., Zhang W., Li C., “Diffuse optical tomography for breast cancer imaging guided by computed tomography: a feasibility study,” J. X-Ray Sci. Technol. 25, 341–355 (2017). 10.3233/XST-16183

- 17. Feng J., et al., “Multiobjective guided priors improve the accuracy of near-infrared spectral tomography for breast imaging,” J. Biomed. Opt. 21(9), 090506 (2016). 10.1117/1.JBO.21.9.090506

- 18. Zhang L., et al., “Direct regularization from co-registered anatomical images for MRI-guided near-infrared spectral tomographic image reconstruction,” Biomed. Opt. Express 6, 3618–3630 (2015). 10.1364/BOE.6.003618

- 19. Fang Q., et al., “Combined optical and X-ray tomosynthesis breast imaging,” Radiology 258(1), 89–97 (2011). 10.1148/radiol.10082176

- 20. Yalavarthy P. K., et al., “Structural information within regularization matrices improves near infrared diffuse optical tomography,” Opt. Express 15, 8043–8058 (2007). 10.1364/OE.15.008043

- 21. Yuan Z., Jiang H., “Image reconstruction scheme that combines modified Newton method and efficient initial guess estimation for optical tomography of finger joints,” Appl. Opt. 46(14), 2757–2768 (2007). 10.1364/AO.46.002757

- 22. Yalavarthy P. K., et al., “Weight-matrix structured regularization provides optimal generalized least-squares estimate in diffuse optical tomography,” Med. Phys. 34(6), 2085–2098 (2007). 10.1118/1.2733803

- 23. Cao N., Nehorai A., Jacobs M., “Image reconstruction for diffuse optical tomography using sparsity regularization and expectation-maximization algorithm,” Opt. Express 15(21), 13695–13708 (2007). 10.1364/OE.15.013695

- 24. Gibson A. P., Hebden J. C., Arridge S. R., “Recent advances in diffuse optical imaging,” Phys. Med. Biol. 50, R1–R43 (2005). 10.1088/0031-9155/50/4/R01

- 25. Prakash J., et al., “Sparse recovery methods hold promise for diffuse optical tomographic image reconstruction,” IEEE J. Sel. Top. Quantum Electron. 20(2), 74–82 (2014). 10.1109/JSTQE.2013.2278218

- 26. Prakash J., et al., “Model-resolution based basis pursuit deconvolution improves diffuse optical tomographic imaging,” IEEE Trans. Med. Imaging 33(4), 891–901 (2014). 10.1109/TMI.2013.2297691

- 27. Zhao Y., et al., “Optimization of image reconstruction for magnetic resonance imaging-guided near-infrared diffuse optical spectroscopy in breast,” J. Biomed. Opt. 20(5), 056009 (2015). 10.1117/1.JBO.20.5.056009

- 28. Yang X., et al., “Low-dose x-ray tomography through a deep convolutional neural network,” Sci. Rep. 8, 2575 (2018). 10.1038/s41598-018-19426-7

- 29. Pelt D. M., Batenburg K. J., “Fast tomographic reconstruction from limited data using artificial neural networks,” IEEE Trans. Image Process. 22(12), 5238–5251 (2013). 10.1109/TIP.2013.2283142

- 30. Yang G., et al., “DAGAN: deep de-aliasing generative adversarial networks for fast compressed sensing MRI reconstruction,” IEEE Trans. Med. Imaging 37(6), 1310–1321 (2018). 10.1109/TMI.2017.2785879

- 31. Yang B., Ying L., Tang J., “Artificial neural network enhanced Bayesian PET image reconstruction,” IEEE Trans. Med. Imaging 37(6), 1297–1309 (2018). 10.1109/TMI.2018.2803681

- 32. Lv C., et al., “Levenberg-Marquardt backpropagation training of multilayer neural networks for state estimation of a safety critical cyber-physical system,” IEEE Trans. Ind. Inf. 14(8), 3436–3446 (2018). 10.1109/TII.2017.2777460

- 33. Rumelhart D. E., Hinton G. E., Williams R. J., “Learning representations by back-propagating errors,” Nature 323(6088), 533–536 (1986). 10.1038/323533a0

- 34. Wasserman P. D., Neural Computing: Theory and Practice, Van Nostrand Reinhold, New York (1989).

- 35. Hecht-Nielsen R., “Theory of the backpropagation neural network,” in Int. Joint Conf. on Neural Networks, Vol. 1, pp. 593–605 (1989). 10.1109/IJCNN.1989.118638

- 36. Haykin S., Neural Networks, Macmillan College Publishing Company, Inc., New York (1994).

- 37. Basheer I. A., Hajmeer M., “Artificial neural networks: fundamentals, computing, design, and application,” J. Microbiol. Methods 43(1), 3–31 (2000). 10.1016/S0167-7012(00)00201-3

- 38. Amato F., et al., “Artificial neural networks in medical diagnosis,” J. Appl. Biomed. 11, 47–58 (2013). 10.2478/v10136-012-0031-x

- 39. Kuriscak E., et al., “Biological context of Hebb learning in artificial neural networks, a review,” Neurocomputing 152, 27–35 (2015). 10.1016/j.neucom.2014.11.022

- 40. Dehghani H., et al., “Near infrared optical tomography using NIRFAST: algorithm for numerical model and image reconstruction,” Commun. Numer. Methods Eng. 25(6), 711–732 (2009). 10.1002/cnm.v25:6

- 41. Pogue B. W., et al., “Statistical analysis of nonlinearly reconstructed near-infrared tomographic images: part I-theory and simulations,” IEEE Trans. Med. Imaging 21(7), 755–763 (2002). 10.1109/TMI.2002.801155

- 42. Song X., et al., “Statistical analysis of nonlinearly reconstructed near-infrared tomographic images: part II-experimental interpretation,” IEEE Trans. Med. Imaging 21(7), 764–772 (2002). 10.1109/TMI.2002.801158

- 43. Cuadros A. P., Arce G. R., “Coded aperture optimization in compressive X-ray tomography: a gradient descent approach,” Opt. Express 25(20), 23833–23849 (2017). 10.1364/OE.25.023833

- 44. Wang Z., et al., “Image quality assessment: from error visibility to structural similarity,” IEEE Trans. Image Process. 13(4), 600–612 (2004). 10.1109/TIP.2003.819861

- 45. Gao D., Wu S., “An optimization method for the topological structures of feed-forward multi-layer neural networks,” Pattern Recognit. 31(9), 1337–1342 (1998). 10.1016/S0031-3203(97)00160-X

- 46. Figueiredo M., Nowak R., Wright S., “Gradient projection for sparse reconstruction: application to compressed sensing and other inverse problems,” IEEE J. Sel. Top. Signal Process. 1(4), 586–597 (2007). 10.1109/JSTSP.2007.910281

- 47. Feng J., et al., “Total variation regularization for bioluminescence tomography with the split Bregman method,” Appl. Opt. 51(19), 4501–4512 (2012). 10.1364/AO.51.004501

- 48. Gorman R. P., Sejnowski T. J., “Analysis of hidden units in a layered network trained to classify sonar targets,” Neural Networks 1, 75–89 (1988). 10.1016/0893-6080(88)90023-8

- 49. Sun Y., Xia Z., Kamilov U. S., “Efficient and accurate inversion of multiple scattering with deep learning,” Opt. Express 26(11), 14678–14688 (2018). 10.1364/OE.26.014678

- 50. Patra R., Dutta P. K., “Improved DOT reconstruction by estimating the inclusion location using artificial neural network,” Proc. SPIE 8668, 86684C (2013). 10.1117/12.2007905

- 51. Yedder H. B., et al., “Deep learning based image reconstruction for diffuse optical tomography,” in Machine Learning for Medical Image Reconstruction, Knoll F., Maier A., Rueckert D., Eds., Int. Workshop on Machine Learning for Medical Image Reconstruction, pp. 112–119 (2018).